1. Introduction

Over the past decade, computational thinking (CT) has increasingly received attention across education settings and levels. While research on CT and related areas has been conducted for decades, President Obama’s Computer Science for All initiative, which sought to “empower all American students…to learn computer science and be equipped with the computational thinking skills they need to be creators in the digital economy, not just consumers, and to be active citizens in our technology-driven world”, spurred researchers, educators, and policymakers across the country to design CT curricula and assessments and conduct research on how best to introduce CT to children at various ages and grade levels.

A decade before the Computer Science for All initiative was launched, Wing (2006) described CT as “a universally applicable attitude and skill set” oriented towards solving problems and designing solutions in ways that make them amenable to being solved with computational systems. Earlier notions of CT that focused mostly on programming and procedural thinking (Papert, 1980) have since been expanded to include a broader set of skills and practices. While multiple emergent frameworks describe the various components of CT, there is not yet consensus on a definition, nor a clear articulation of how the different CT skills and practices evolve across age and grade levels. Across frameworks, however, CT is viewed as an umbrella term that includes multiple skills and practices, such as problem decomposition, systematic processing of information, abstraction and pattern generalization, logical and algorithmic thinking, and systematic error detection and debugging (Grover & Pea, 2013). Problem decomposition involves breaking complex problems into smaller, more manageable subproblems; systematic processing of information involves organizing and analyzing data in structured and logical ways; abstraction and pattern generalization involve identifying concepts and principles while ignoring irrelevant information; algorithmic thinking involves understanding and providing sequences of instructions; and debugging involves locating and fixing errors in systematic ways.

While a large portion of CT work and research to date has focused on how upper-grade students learn these various skills, CT activities and resources designed for early learners have proliferated in recent years with the availability of coding environments (e.g., ScratchJr, Kodable, and Hopscotch), digital activities (e.g., Blockly-based activities from Code.org), apps (e.g., Daisy the Dinosaur), board games (e.g., Robot Turtles), and tangible computing tools using programmable robots (e.g., KIBO, Bee-Bot, and LEGO WeDo). Research on CT curricula and assessment for young learners has also grown, but the vast majority of it has focused on early elementary students; efforts to better understand how CT can be promoted in preschool are still needed.

1.1. CT in Early Childhood

Initially, most early childhood studies on CT focused on curricular activities involving robotics in early elementary school, addressing skills such as sequencing, loops, and conditional logic (Bers, 2010; Martinez et al., 2015). Kazakoff et al. (2013) reported that children as young as four can build simple trash-collecting robots. However, although they succeeded in getting children to build simple robots, they raised concerns about the suitability of a robotics curriculum for younger learners. In their observations of older preschool children (i.e., children aged five and above), students learned fewer robotics and programming concepts than kindergarteners did and required significant one-on-one help from adults to build the robots. They concluded that using a robotics curriculum to help very young children master these new concepts required a level of scaffolding and teacher attention that may not be practical to achieve in preschool classrooms.

More recently, efforts to promote CT learning with preschoolers have taken a broader approach to CT than those focused on programming and robotics, in ways that align with the playful and socially rich learning that is key in early childhood. A recent review of CT in early childhood identified various specific CT components and practices that are meaningful to early learning processes; these include abstraction, decomposition, testing and debugging, algorithmic thinking, and pattern recognition (Su et al., 2023). Evidence evaluating children’s learning from emerging innovations addressing these CT components and practices, however, is still limited. As more CT innovations continue to emerge, studies of how children come to understand these components and learn these skills are warranted.

1.2. Why Focus on Preschool CT?

As has been the case in discussions about computer science in grades K–12 (Vogel et al., 2017), several arguments can be offered to support a focus on CT in early childhood. One of these arguments involves responding to increased societal focus on, and policy recommendations for, promoting CT. These recommendations suggest that students across grade bands be prepared to be active participants in a technology-rich world and, as pointed out by Guzdial (2015), often place emphasis on job preparation. Promoting CT early may help ensure that children develop interest in CS and STEM fields and acquire requisite skills that can prepare them for more complex STEM and computing endeavors later (Lei et al., 2020; Zeng & Yang, 2023).

School systems, while also invested in facilitating learning trajectories that ultimately prepare students for employment, often frame the rationale for introducing CT in terms of more proximal outcomes. For instance, school systems are increasingly giving attention to CT as a way to improve students’ learning more broadly and/or to facilitate learning in other disciplines or content domains (Smith, 2018).

When linking CT to learning more broadly, researchers and educators argue that CT may help students develop problem-solving strategies and critical thinking skills that are crucial for learning across content domains (Grover & Pea, 2013; Henderson et al., 2007; Lee et al., 2020; Lei et al., 2020; Weintrop et al., 2016). In preschool, there are clear connections to current early learning frameworks; for instance, the Reasoning and Problem Solving subdomain of the Head Start Early Learning Outcomes Framework indicates that young children need to learn to use a variety of problem-solving strategies and to reason and plan ahead to solve problems. Our work examines whether and how CT can support these goals in early learning.

The potential for CT integration with other domains is worth further exploration. Harel and Papert (1990) argued that learning programming in concert with concepts from another domain can be easier than learning each separately. Disciplinary learning has been found to provide an authentic and meaningful context for engaging in CT (Cooper & Cunningham, 2010), and many believe that embedding CT into existing core subjects may make it more feasible to promote CT in equitable ways. Student learning in STEM subjects has also been reported to benefit from integration with coding and CT. For example, modeling with computational tools has been shown to be an effective vehicle for learning science and math concepts, such as when students learn how objects move (physical science) by building models that describe the motion of objects (diSessa, 2001; Hambrusch et al., 2009; Hutchins et al., 2020). Weintrop et al. (2016) suggest that learning CT and learning other disciplines may, in fact, be mutually reinforcing.

While these rationales (argued largely in the context of secondary education) may also be relevant in early childhood, they have yet to be rigorously evaluated empirically. Early childhood educators, and preschool teachers specifically, are generalists by training. They are expected to promote young children’s learning in many areas (e.g., literacy, mathematics, science, social-emotional development, social studies, and physical development). Adding CT to this list may not be feasible unless CT is integrated meaningfully into existing areas of focus; such integration would make it more plausible to enact and would thus allow CT to reach underserved populations that otherwise may not have access to high-quality CT learning experiences. However, integration is not an easy endeavor, and which content areas provide more meaningful and authentic contexts for CT learning, and which synergies may be mutually supportive, are still open questions. Our broader study aimed to (1) identify which CT skills can be promoted in preschool and (2) explore the productive and mutually supportive integration of CT with early mathematics and science (Grover et al., 2022). To address these questions, we explored the development of assessment tasks from which to draw inferences about children’s CT learning; this work and its findings are the focus of the present study.

1.3. Need for Early CT Assessment

Assessment tools that allow us to draw reliable and valid inferences about preschoolers’ CT understanding are needed to evaluate the impact of emerging CT resources (Angeli & Giannakos, 2020). A recent review of CT in early childhood education specifically calls attention to the lack of valid and reliable CT assessments for young children; findings from the review indicate that most early childhood CT studies to date “did not report the scientific evidence of the psychometric properties of the instruments used” (Su et al., 2023). Developing assessments for young learners is not easy. In addition to the usual assessment challenges, assessing young learners requires tasks that are developmentally appropriate (playful, hands-on, and brief) and that account for the fact that preschoolers are learning multiple skills simultaneously. For instance, task design must carefully consider that preschoolers are acquiring language at a rapid and varied pace; items that require verbal responses therefore pose challenges for making reliable and valid inferences about children’s learning. Are verbal items measuring the intended construct, or are they a reflection of children’s language ability? How can items that require a verbal response be designed to minimize language load?

Recent efforts to design CT assessments for early learners have shown promise. Clarke-Midura et al. (2023), for example, developed an algorithmic thinking assessment within the context of spatial navigation. Similarly, Lavigne et al. (2020) and Zapata-Cáceres et al. (2021) developed and psychometrically evaluated assessments that measure multiple CT skills and practices with samples of elementary-age children in the United States and Spain, respectively. While these studies have all made important contributions to the early childhood field, studies that focus on preschool-age children are needed to evaluate emerging preschool CT innovations.

2. Research Context

The goal of this study was to explore the design of CT assessment tasks for preschool children. The assessment work was part of a larger project that aimed to study CT in preschool. This overarching project involved learning scientists working in close partnership with preschool educators, families, curriculum developers, and media designers to investigate whether CT skills resonate with young children, which ones do, and whether they could be authentically promoted in children’s homes and preschool classrooms (Grover et al., 2022). Part of this work involved exploring the integration of CT with early mathematics and science and co-designing (Penuel et al., 2007) hands-on activities and digital apps for preschool children.

Early in our co-design work with preschool educators, we identified the following target skills to examine with preschoolers: (1) algorithmic thinking (sequences and loops), (2) problem decomposition (breaking down a problem or task into smaller, more manageable subtasks), (3) abstraction (observing and highlighting key characteristics and labeling classes of objects based on them), and (4) debugging (systematic error detection and resolution). These skills were initially selected because of existing research evidence and their alignment with current early childhood standards, and because the co-design team found them to be promising entry points for exploring integration with other STEM areas. Some skills (e.g., algorithmic thinking) were selected to extend the current evidence base. While some aspects of plugged (i.e., computer-based) programming activities may be difficult for young learners, related CT skills such as sequences and loops may be easily integrated with mathematics in ways that strengthen children’s understanding of numbers while also preparing them to engage later in more sophisticated coding and programming activities.

Other skills (e.g., abstraction) were selected to build evidence and investigate whether young children could productively engage in learning activities that promoted them. To achieve the goals of the overarching study, our work needed to explore the design of developmentally appropriate assessment tasks that could eventually be used to gather evidence of children’s CT learning. This paper details the process of designing the assessment, and documents both successes and challenges that can inform future early CT assessment efforts.

3. Method

3.1. Participants

A total of seven classrooms (and their teachers) from public subsidized preschool programs in the San Francisco Bay Area (n = 3) and the Greater DC area (n = 4) were recruited to participate in the overarching study, with five of these classrooms implementing the CT activities for the duration of the study. All children enrolled in the classrooms were invited to participate (i.e., parental permission was sought); parental permission was obtained for a total of 78 children. Approximately eight children per classroom were then selected to participate in the assessment study using a stratified random sampling procedure, aiming for equal numbers of 3- and 4-year-old girls and 3- and 4-year-old boys in each participating classroom. This resulted in a total sample of 57 children. Children’s ages ranged from 35 to 60 months at the time of parental permission. Children aged 48 months or less were placed in the younger assessment age group, and children aged 49 months or more in the older group. See Table 1 for sample demographics. Attrition during the assessment study was low: of the 57 children who completed the assessment at the beginning of the study, 55 (96%) completed it at the end of the study.
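The sampling step can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's actual procedure: it assumes a roster of records with hypothetical keys (`id`, `classroom`, `age_months`, `gender`) and randomly draws up to two children from each classroom × age group × gender cell, which yields at most eight children per classroom. Only the 48-month age cutoff comes from the text.

```python
import random
from collections import defaultdict
from typing import Dict, List

AGE_CUTOFF_MONTHS = 48  # 48 months or less -> younger group (cutoff from the text)


def age_group(age_months: int) -> str:
    """Assign the younger/older assessment age grouping."""
    return "younger" if age_months <= AGE_CUTOFF_MONTHS else "older"


def stratified_sample(children: List[Dict], per_stratum: int = 2, seed: int = 0) -> List[Dict]:
    """Randomly draw up to `per_stratum` children from each
    classroom x age group x gender stratum.

    `children` uses hypothetical keys: 'id', 'classroom', 'age_months', 'gender'.
    """
    rng = random.Random(seed)  # seeded so the draw can be reproduced
    strata = defaultdict(list)
    for child in children:
        key = (child["classroom"], age_group(child["age_months"]), child["gender"])
        strata[key].append(child)

    sample = []
    for key in sorted(strata):  # sorted keys give a deterministic order
        members = strata[key]
        # Small strata contribute everyone they have.
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample
```

When every cell in a classroom holds at least two children, the function returns eight children for that classroom; cells with fewer children contribute all of them, which is one way a sample can end up slightly short of eight per classroom.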

3.2. Assessment Development Process

The design and development of the CT assessment drew on Evidence-Centered Design principles and began with a content domain analysis (Mislevy et al., 2003). This involved a literature and resource review, followed by co-design meetings where participants discussed and identified target CT skills and practices to be included in the learning blueprint (Vahey, 2018). The following four CT components/skills were selected as the first set to be explored in the overarching study: problem decomposition, algorithmic thinking, abstraction, and testing and debugging. At co-design meetings, the research team discussed findings from the literature review, shared CT definitions emerging from the field, and provided initial examples of different CT skills and practices that could be promoted in preschool, possibly integrated (conceptually and operationally) with preschool science and mathematics. A comprehensive list of math concepts and science practices was also generated based on prior work so that integration could be explored organically as activity ideas were drafted later in the co-design process. Together, the co-design team discussed which CT components were likely to align with the abilities and interests of young children and had the potential to strengthen children’s early learning in authentic ways.

In the learning blueprint, we included detailed descriptions of the targeted CT skills and practices, descriptions of the evidence that could be collected to support claims, and ways to vary difficulty. This information was then used to start the item generation process. The research team conducted a series of item generation meetings where item ideas were discussed and various item formats suitable for children aged 3–5 years were brainstormed. These included open-ended verbal responses to a verbal prompt; open-ended verbal responses to a picture prompt; selecting a response by pointing, indicating, or embodying it; and using hands-on manipulatives to demonstrate a response. As items were drafted and pilot tested, the team iteratively refined them with the aim of producing brief items that used materials relatively familiar to young children (e.g., pictures and photos, building manipulatives, and cards).

At the end of this design process, our assessment comprised a total of 17 items, each of which targeted a specific CT skill or practice (see Table 2). Problem decomposition included both verbal response items and items using manipulatives. One of the verbal response items involved a birthday party scene in which children were asked to decompose the large task of planning a party into smaller, more manageable tasks. The manipulative item required children to decompose a block-building activity by assigning one subtask to the assessor and another to himself or herself. Abstraction items involved sorting and labeling a set of materials to make a given task more efficient, such as sorting colored blocks to build a tower or grouping toy vehicles based on observable characteristics. These items required children to attend to certain details and ignore others while sorting and labeling groups, and they gave children an opportunity to engage with and respond to the task by placing manipulatives. Some algorithmic thinking items focused on sequences and invited children to navigate a small map: children were asked to follow a sequence of instructions, provide a sequence of instructions, and debug a mistake in navigation. These items explored both verbal response and manipulative placement options. Other algorithmic thinking items focused on repetition; children were again asked to follow as well as provide a set of instructions, this time involving repeated common movements such as clapping or jumping. This type of item allowed children to show their understanding through pointing and embodied responses (e.g., clapping).
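To make the sequence and debugging items concrete, the sketch below models a map as a grid and a route as a list of arrow instructions. It is a hypothetical simplification, not the items' actual materials: the map has no obstacles, a route counts as correct if it ends at the goal, and the names `run` and `find_fix` are illustrative. `run` follows a sequence of instructions, and `find_fix` locates and repairs a single faulty instruction by systematically trying alternatives at each step.

```python
from typing import Dict, List, Optional, Tuple

Pos = Tuple[int, int]

# Arrow instructions and the grid step each one produces (hypothetical encoding).
MOVES: Dict[str, Pos] = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}


def run(start: Pos, program: List[str]) -> List[Pos]:
    """Follow a sequence of arrow instructions and return every square visited."""
    x, y = start
    path = [(x, y)]
    for step in program:
        dx, dy = MOVES[step]
        x, y = x + dx, y + dy
        path.append((x, y))
    return path


def find_fix(start: Pos, program: List[str], goal: Pos) -> Optional[Tuple[int, str]]:
    """Locate and fix a single faulty instruction.

    Scans the route from the start, swapping in every other arrow at each
    position, and returns (index, replacement) for the first change that makes
    the route end at `goal`. Returns None if the route already reaches the goal
    or if no one-step fix exists.
    """
    if run(start, program)[-1] == goal:
        return None
    for i, step in enumerate(program):
        for alt in MOVES:
            if alt == step:
                continue
            candidate = program[:i] + [alt] + program[i + 1:]
            if run(start, candidate)[-1] == goal:
                return i, alt
    return None
```

For example, `find_fix((0, 0), ["right", "right", "down"], (2, 1))` reports that the third instruction should be `"up"`. Because only the end point is checked, more than one fix can sometimes work; the function reports the first one it finds.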

Appendix A presents an example item for each CT skill/practice along with children’s responses.

3.3. Procedures

Prior to data collection, assessors (all early childhood specialists with assessment experience) participated in a half-day assessment training. The training included a review of appropriate assessment practices (e.g., the importance of assent and processes for establishing rapport), a detailed overview and demonstration of each assessment item (including the placement of the flipbook and manipulatives), and a discussion of how to complete the assessment scoring sheet. After the training, each assessor was given the opportunity to practice and then underwent a reliability assessment with a research team member. The reliability assessment evaluated the following criteria for each item: adequate prompt delivery (the prompt was read accurately and as intended), manipulative placement (photos were pointed to or materials were provided as intended), and scoring (accuracy of scored responses). Assessors were required to achieve at least 85% reliability before conducting the assessment in the field.
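The 85% requirement can be illustrated with a simple checklist score. The text does not specify how reliability was computed, so this sketch assumes that the reviewing team member marked each item × criterion check as met or not met and that reliability is the proportion of checks met. The criterion names are hypothetical labels for the three criteria listed above.

```python
from typing import Dict

# Hypothetical labels for the three criteria checked for each item.
CRITERIA = ("prompt_delivery", "manipulative_placement", "scoring")
THRESHOLD = 0.85  # minimum reliability required before assessing in the field


def reliability(checklist: Dict[str, Dict[str, bool]]) -> float:
    """Proportion of item x criterion checks the reviewer marked as met."""
    checks = [checklist[item][c] for item in checklist for c in CRITERIA]
    return sum(checks) / len(checks)


def cleared_for_field(checklist: Dict[str, Dict[str, bool]]) -> bool:
    """True if the assessor reached the 85% reliability requirement."""
    return reliability(checklist) >= THRESHOLD
```

Under this assumption, 17 items and 3 criteria give 51 checks, so an assessor could miss 7 checks (44/51 ≈ 0.86) and still qualify, whereas 8 misses (43/51 ≈ 0.84) would fall short.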

A total of six assessors administered the assessment one-on-one to participating children at both the beginning and end of the intervention. Assessors were required to obtain child assent at the beginning of the session and to discontinue the session if the child no longer wanted to participate. Administration time varied, but the assessment typically took 20 to 30 min per child. After each administration, assessors reviewed the scoring sheet and entered the data into an online database form.

3.4. Data Analytic Approach

An initial analysis was conducted for both the pre- and post-assessment to examine the psychometric properties of each item. For each item, the following indices were examined: (a) the proportion of responses scored as “correct,” as an index of item difficulty, and (b) the corrected item–total correlation (CITC), as an index of item discrimination (i.e., how well the scored responses to the item differentiate between children with different levels of understanding of the construct being measured).
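A minimal sketch of the two item indices follows. It assumes each item is scored dichotomously (1 = correct, 0 = incorrect) in a matrix whose rows are children and whose columns are items; the function names are illustrative. The corrected item–total correlation correlates each item with the total of the remaining items, so that an item is not correlated with itself.

```python
from math import sqrt
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns NaN if either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")  # e.g., every child answered the item correctly
    return sxy / sqrt(sxx * syy)


def item_difficulty(scores: List[List[int]]) -> List[float]:
    """Proportion correct for each item (rows = children, columns = items)."""
    n = len(scores)
    return [sum(row[j] for row in scores) / n for j in range(len(scores[0]))]


def corrected_item_total(scores: List[List[int]]) -> List[float]:
    """Correlate each item with the total of the other items (CITC)."""
    result = []
    for j in range(len(scores[0])):
        item = [row[j] for row in scores]
        rest = [sum(row) - row[j] for row in scores]  # total score minus this item
        result.append(pearson(item, rest))
    return result
```

Note that a higher proportion correct means an easier item, so an item with a proportion correct below 0.5 is answered correctly by fewer than half of the children.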

Item difficulty and discrimination were examined along with qualitative information gathered during assessment sessions to decide whether to include or exclude items. A second set of analyses was then conducted to examine the psychometric properties of the retained items and to obtain preliminary reliability estimates. The reliability of the total summated score (across all scored components of all items) was estimated for the pre- and post-assessment using Cronbach’s alpha.
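Cronbach’s alpha compares the summed variances of the scored components with the variance of the total score: alpha = k / (k - 1) × (1 - sum of component variances / total-score variance), where k is the number of scored components. The sketch below implements this standard formula on a hypothetical children × scored-components matrix; it is an illustration, not the study's analysis code.

```python
from statistics import pvariance
from typing import List


def cronbach_alpha(scores: List[List[float]]) -> float:
    """Cronbach's alpha for a children x scored-components matrix.

    alpha = k / (k - 1) * (1 - sum(component variances) / variance(total score))
    Population variances are used throughout; the ratio is unchanged with
    sample variances as long as one kind is used consistently.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two scored components")
    component_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(component_vars) / total_var)
```

Alpha rises as the components covary more strongly relative to their individual variability, which is why removing items that correlate weakly with the rest of the assessment tends to improve it.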

4. Results

4.1. Initial Psychometric Analyses

Findings from the initial set of analyses indicated that the assessment items were relatively difficult overall at both pre- and post-assessment, with most items having a proportion correct below 0.5. Most items, however, demonstrated acceptable levels of discrimination (CITC higher than 0.20). See Table 3 for a list of items’ psychometric properties at pre- and post-assessment.
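The screening logic described in this section can be sketched as a small rule set. The 0.5 difficulty and 0.20 discrimination thresholds come from the text; the 0.10 cutoff for a “very low” proportion correct is a hypothetical value, because the text does not define one. In the study, such flags only prompted review: as the discussion of Item 5 shows, final decisions also weighed assessors’ qualitative observations.

```python
from typing import Dict, List, Tuple

DIFFICULT_P = 0.50  # proportion correct below this = relatively difficult (from the text)
MIN_CITC = 0.20     # discrimination must exceed this to be acceptable (from the text)
VERY_LOW_P = 0.10   # HYPOTHETICAL cutoff for a "very low" proportion correct


def screen_items(stats: Dict[str, Tuple[float, float]]) -> Dict[str, List[str]]:
    """Group items into review categories from (proportion correct, CITC) pairs."""
    flags: Dict[str, List[str]] = {
        "difficult": [],
        "weak_discrimination": [],
        "review_for_removal": [],
    }
    for item, (p, citc) in sorted(stats.items()):
        if p < DIFFICULT_P:
            flags["difficult"].append(item)
        if citc <= MIN_CITC:
            flags["weak_discrimination"].append(item)
        # Very hard AND weakly discriminating: a removal candidate, pending
        # review of the assessors' qualitative notes.
        if p < VERY_LOW_P and citc <= MIN_CITC:
            flags["review_for_removal"].append(item)
    return flags
```

With invented values such as `{"A": (0.05, 0.08), "B": (0.07, 0.31), "C": (0.62, 0.45)}` (not data from this study), items A and B are flagged as difficult but only A is flagged for removal; B is very hard yet still discriminates, the same pattern that led to retaining Item 5.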

Findings from this initial set of analyses identified three items with very low proportion correct values: two problem decomposition items (Items 1 and 15) and one algorithmic thinking (repetition) item (Item 5). The two problem decomposition items (Items 1 and 15) were ultimately removed; not only were they too difficult, but their discrimination values were also quite low. In essence, these items contributed very little information about the construct they were intended to measure. Additionally, qualitative data recorded by assessors suggested that the item formats posed challenges. In Item 1, children were presented with a picture of a birthday party scene and asked to identify the subtasks, or smaller tasks, involved in planning a birthday party. This item required a verbal response, which generally poses challenges for young learners. When designing problem decomposition items, the team found that most authentic scenarios that were brief and did not reveal the answer required children to provide verbal responses; providing response options to select from often meant leading the child to the correct response. When designing this item, the team attempted to reduce demands on verbal skill by including an image to provide context. However, some children attended to the objects in the picture of the birthday party and simply named them, which made it unclear whether they were decomposing the task or listing observable objects. For some children, the birthday party image also seemed to elicit descriptions of their own celebrations rather than decomposition of the task. Together, these observations suggest that the image may have been a distraction in this task.

In Item 15, children were presented with a previously built structure of red and blue blocks and a set of red and blue blocks in a random arrangement. The child was told that the goal was to replicate the tower and was asked to identify which subtask he or she could do and which subtask the assessor could do to build the tower together. The child was allowed to verbally describe each subtask (e.g., “You build the blue part, and I will build the red part”), indicate by pointing, or distribute blocks to each person. While the set of blocks allowed for varied response options, it may have confused children: many started building with the blocks, likely focusing on the initial part of the prompt. The building task itself was intentionally easy, to avoid measuring skills that were not the target of the item. However, the easy structure seemed to invite children to build it all themselves. Children may not have felt a real need to decompose the task into subtasks, or seen any benefit in doing so.

Item 5 was designed to measure children’s understanding of algorithmic thinking (looping, or repetition, in particular) and was ultimately retained. While the item was very difficult, its discrimination index was not as low as those of the two removed items. Furthermore, discrimination was higher at post-test, suggesting possible sensitivity to the intervention, which is a desirable quality in any assessment. In this item, children were introduced to cards that indicated a given action (e.g., jump). They were then told that placing three jump cards would indicate jumping three times. Children were then invited to use the action card along with a number card, in essence generating a codified representation of the same instruction (one indicating an instruction to jump three times). Children were given various number cards to select from. Many children repeated the same code (which did not involve a loop) or used multiple or incorrect numerals. Among the items that targeted repetition, this was the only one that required children to create an instruction (i.e., generate code) rather than follow a set of instructions (i.e., interpret or enact code), which may indicate that the former is a more difficult skill. Additionally, assessors’ qualitative observations indicated that, unlike Items 1 and 15, this item’s materials and approach did not appear to cause significant confusion or distraction, providing further support for retaining the item.
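The two representations used in Item 5 can be illustrated in code. In this hypothetical encoding (not the item's actual materials), a row of cards is a list in which an action card is a string and a number card is an integer placed right after the action it repeats. `expand` turns either representation into the same list of actions, showing that the number card works as a compact loop.

```python
from typing import List, Union

Card = Union[str, int]  # an action card such as "jump", or a number card such as 3


def expand(cards: List[Card]) -> List[str]:
    """Translate a row of cards into the actions to perform.

    An action card on its own means "do it once"; an action card followed by a
    number card means "repeat that action that many times" (a simple loop).
    """
    actions: List[str] = []
    i = 0
    while i < len(cards):
        action = cards[i]
        if not isinstance(action, str):
            raise ValueError(f"expected an action card at position {i}, got {action!r}")
        if i + 1 < len(cards) and isinstance(cards[i + 1], int):
            actions.extend([action] * cards[i + 1])  # number card = repeat count
            i += 2
        else:
            actions.append(action)
            i += 1
    return actions
```

Both `["jump", "jump", "jump"]` and `["jump", 3]` expand to three jumps; generating the second, shorter form is the step that Item 5 asked children to take.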

4.2. Final Psychometric Analyses

The psychometric properties of the items were calculated again after removing Items 1 and 15 (see Table 4 for the final list of items’ psychometric properties). Consistent with the initial analysis, analysis of the final set indicated that the assessment items were relatively difficult overall at both pre- and post-assessment, with many items having a proportion correct below 0.5. Items demonstrated acceptable levels of discrimination (higher than 0.20). The reliability of the final set was reasonably good: Cronbach’s alphas were 0.72 and 0.78 at pre- and post-assessment, respectively.

Overall, abstraction items were easier than algorithmic thinking items. Algorithmic thinking items ranged from very difficult to moderately difficult; those focused on repetition seemed to be less difficult than those focused on sequences, and items that required children to generate code (rather than enact code) were the most difficult. Testing and debugging items were also less difficult than algorithmic thinking items focused on sequences. Notably, only one of the two testing and debugging items showed improvement from pre- to post-assessment. While both items required children to identify and fix a navigation error made by the assessor, the item that improved (Item 4) presented the code as pictures that matched the map, whereas the other (Item 12) presented arrows indicating which direction to travel.

5. Discussion

Overall, the findings indicate that preschool children can develop understanding of CT components and engage in a variety of CT practices. Item analyses indicate that the items targeting algorithmic thinking, which measured skills often considered prerequisites for coding, were more difficult than items targeting other CT practices such as abstraction. This is in line with recent findings indicating that while young children may be able to engage in programming and coding, other CT skills may be better entry points for young learners and more naturally aligned with the hands-on activities young children often experience at home and in preschool classrooms (Yadav et al., 2019). The findings also indicate that specific subskills may be good entry points to larger CT content and better aligned with preschool science and mathematics. Abstraction items were less difficult than items measuring sequences, possibly because they involved science practices, such as sorting, with which children may already have been familiar. Similarly, items that involved repetition or looping were often less difficult than those involving sequences. Classroom and home activities designed to promote repetition naturally provided opportunities to practice important mathematical skills such as counting and cardinality. Recent studies report similar synergies; Lavigne et al. (2020) explored how young children and their teachers engage with activities focused on sequencing, modularity, and debugging in the context of math activities. These findings provide useful information that designers and educators can consider as they design CT learning activities for preschoolers. Future research and design efforts can further explore the impact of learning activities that integrate CT with other learning domains such as science and math.

One of the most interesting findings from our assessment work is that while problem decomposition was found to be one of the most feasible CT skills to integrate in authentic ways (as described in findings from our overarching study) and a good entry skill for introducing CT to preschoolers, assessing problem decomposition posed challenges. Many of the ideas our team generated to measure problem decomposition involved verbal response formats. These formats pose reliability challenges because preschoolers are undergoing substantial language development, making it hard to judge whether responses reflect the construct being measured or language ability. Our team intentionally selected contexts children were likely familiar with (e.g., a birthday party) and explored including photographs to elicit children’s ideas. These photographs, however, invited children to react to them verbally, making it hard for coders to determine whether children were decomposing the task or simply listing observable objects. Our team also explored providing manipulatives so that children could demonstrate or embody responses. We designed an item involving blocks, in which children were asked to replicate a block structure by decomposing the task, assigning one subtask to themselves and another to the assessor. We intentionally selected a task that was not complex and could be done briefly. This, however, may have made it harder for children to engage in decomposition. Children often built the entire tower themselves, possibly because the building task was too easy and they viewed decomposition as unnecessary or inefficient rather than helpful. This interpretation is supported by research in early mathematics finding that children successfully use decomposition strategies when learning to add if they understand how these strategies help them arrive at accurate answers more efficiently (Cheng, 2012). Future assessment work to tackle this challenge is warranted. Specifically, problem decomposition items that present more complex tasks but do not require manipulation or complex verbal responses would be an important revision to this battery, or to any other assessment effort that aims to include decomposition as a subskill.
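As an illustration of the kind of decomposition strategy for addition referred to here (a common “make ten” example, not one taken from Cheng, 2012), a child can split one addend so that part of it completes a ten, turning a harder sum into an easier one:

```latex
8 + 5 = 8 + (2 + 3) = (8 + 2) + 3 = 10 + 3 = 13
```

The strategy pays off only when the child sees that adding to 10 is easier than adding 5 to 8 directly, which parallels the observation that children decompose a task when doing so visibly helps.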

For a long time, drawing on classical Piagetian theory, early childhood researchers and educators believed that preschool children were not capable of abstract thought. Research in multiple disciplines, however, has demonstrated that young children can indeed reason critically and abstractly. Gopnik et al. (2015), for instance, found that young children can use patterns of data to infer more abstract, general causal principles (at times, even more so than older children). Our findings suggest that preschoolers can engage in abstraction: they were able to identify and highlight necessary detail and disregard unnecessary detail when sorting objects and labeling sorted groups. This suggests abstraction may be a good entry point to CT. It also appeared to be relatively easy to measure; the item formats we developed often involved hands-on activities such as sorting and labeling, which could easily be modified to also measure mathematics (e.g., sorting by shape and size) and science core ideas (e.g., sorting by observable characteristics or into groups of animals, plants, physical objects, etc.). Yadav et al. (2019) similarly identified abstraction as one of the CT skills that should be promoted in the early elementary grades, as it would integrate with common activities and could be feasibly adopted and scaffolded by teachers.
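To make the sorting logic behind Items 6 and 7 concrete, the sketch below treats abstraction as grouping objects by only the attributes that matter for a stated purpose while ignoring the rest. The `Toy` attributes (`color`, `kind`, `size`) and the function `sort_by` are hypothetical illustrations, not the assessment’s materials or scoring rules.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Toy:
    # Hypothetical attributes; "size" stands in for a detail that is
    # irrelevant to the sorting purpose.
    color: str
    kind: str
    size: str


def sort_by(toys, *attributes):
    """Group toys using only the purpose-relevant attributes.

    Attributes not listed are ignored, which mirrors the "disregard
    unnecessary detail" step of abstraction.
    """
    groups = defaultdict(list)
    for toy in toys:
        key = tuple(getattr(toy, name) for name in attributes)
        groups[key].append(toy)
    return dict(groups)


toys = [
    Toy("red", "car", "small"),
    Toy("red", "truck", "large"),
    Toy("blue", "car", "large"),
    Toy("blue", "car", "small"),
]

# One relevant variable (analogous to Item 6): group by color alone.
by_color = sort_by(toys, "color")
# Two relevant variables (analogous to Item 7): group by color and type.
by_color_and_kind = sort_by(toys, "color", "kind")

for key, members in by_color_and_kind.items():
    print(key, len(members))
```

Adding the second attribute splits the red toys into a car group and a truck group, while `size` never affects any grouping.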

Algorithmic thinking items ranged from very difficult to moderately difficult, and items focused on repetition were less difficult than those focused on sequences. This may indicate that looping is a good entry point for introducing algorithms, perhaps because it integrates easily with common mathematical skills such as numeral recognition, counting, and cardinality, or because it often allows children to embody their responses. However, this finding may also reflect the fact that the sequence items involved navigating maps and other visual-spatial presentations, which may have imposed a higher cognitive load and thus increased item difficulty. Future efforts should develop sequence items that do not involve visual-spatial navigation and compare the results with those of this study. Such an examination could shed light on how children progressively learn this CT skill.
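The difficulty contrast described above can be checked against the pre- and post-test proportions correct reported in Table 3. The sketch below averages the dichotomous sequence items (Items 2, 3, 10, 11) and the repetition items (Items 5, 9, 16, 17); taking an unweighted mean across items is a simplifying choice made here for illustration, not an analysis reported in the study.

```python
from statistics import mean

# Proportion correct (pre, post) copied from Table 3.
SEQUENCE_ITEMS = {2: (0.05, 0.22), 3: (0.16, 0.29), 10: (0.12, 0.24), 11: (0.14, 0.25)}
REPETITION_ITEMS = {5: (0.07, 0.07), 9: (0.46, 0.44), 16: (0.33, 0.49), 17: (0.26, 0.36)}


def mean_difficulty(items):
    """Return the unweighted mean proportion correct at pre- and post-test."""
    pre = mean(p for p, _ in items.values())
    post = mean(q for _, q in items.values())
    return round(pre, 4), round(post, 4)


seq_pre, seq_post = mean_difficulty(SEQUENCE_ITEMS)
rep_pre, rep_post = mean_difficulty(REPETITION_ITEMS)
print(f"sequence:   pre={seq_pre}, post={seq_post}")
print(f"repetition: pre={rep_pre}, post={rep_post}")
```

Under this simple average, repetition items were easier than sequence items at both time points (about 0.28 versus 0.12 at pre-test and 0.34 versus 0.25 at post-test), consistent with the item-level pattern.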

When examining the repetition items, we also noted that the more difficult items required children to generate code rather than follow it. This aligns with research finding that elementary-aged children tend to perform poorly on assessment tasks that require them to generate a correct algorithm (Chen et al., 2017), suggesting that this skill may also be harder for young learners. In our study, another reason for this finding may be that most of the activities co-designed by teachers and families in our overarching study involved following rather than generating code, likely because they observed that children required significantly more scaffolding to generate code. This explanation does not fully account for the finding, however, because items that involved generating code were more difficult for children not only at post-test but also at pre-test, before children had taken part in the co-designed activities.
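One way to see why generating looped code may be harder than following it is to write both directions out explicitly. Following a loop such as “Clap two (2) times” only requires expanding an (action, count) pair, whereas generating one requires noticing the repetition in a target sequence and counting it, here written as a run-length style compression. The functions `follow` and `generate` are a hypothetical illustration of that asymmetry, not a model of how children actually solve the items.

```python
from itertools import groupby


def follow(program):
    """Expand looped instructions, e.g. [("clap", 2)] -> ["clap", "clap"]."""
    actions = []
    for action, times in program:
        actions.extend([action] * times)
    return actions


def generate(actions):
    """Compress a target sequence of actions into looped instructions.

    This direction must detect runs of repeated actions and count them,
    steps that following a given program does not require.
    """
    return [(action, len(list(run))) for action, run in groupby(actions)]


# Following: a loop like the block tower in Item 16 (2 orange, 2 blue).
tower = follow([("orange", 2), ("blue", 2)])
print(tower)  # ['orange', 'orange', 'blue', 'blue']

# Generating: turn a demonstrated sequence back into a loop.
program = generate(["jump", "jump", "jump"])
print(program)  # [('jump', 3)]
assert follow(program) == ["jump", "jump", "jump"]
```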

Finally, our study found that children were able to engage in testing and debugging, and one of the testing and debugging items was quite sensitive to change from pre- to post-assessment. Data collectors also observed that children often debugged without explicitly identifying the error (as the assessment items asked them to do). This may be related to language (children may find it easier to demonstrate a fix than to explain it verbally), but it could also be that this skill is not often practiced formally in preschool classrooms and homes. Research that further examines these nuances is needed to inform early CT teaching practices. Interestingly, one testing and debugging item was less difficult at post-test, whereas the other was slightly harder (though not significantly). The difference between the two items is that one involved code that matched locations on a map, whereas the other used directional arrows as code to navigate a map. The added visual-spatial directions may have increased the cognitive demands of the item and broadened the skills it measured, and hence increased item difficulty. The connection between CT and visual-spatial skills could be explored further to inform future item formats and potential integrated learning activities.
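The arrow-based debugging item can be thought of as tracing a program step by step and stopping at the first step that departs from the intended route. The sketch below assumes a hypothetical grid map, direction codes, and intended path made of adjacent cells; it illustrates the “locate, then fix” structure of debugging rather than reproducing the actual item.

```python
# Hypothetical direction codes mapped to (column, row) moves on a grid map.
MOVES = {"right": (1, 0), "left": (-1, 0), "up": (0, -1), "down": (0, 1)}


def trace(start, arrows):
    """Return every grid position visited while executing the arrow code."""
    x, y = start
    path = [(x, y)]
    for arrow in arrows:
        dx, dy = MOVES[arrow]
        x, y = x + dx, y + dy
        path.append((x, y))
    return path


def first_bug(start, arrows, intended_path):
    """Locate: index of the first arrow that leaves the intended path, or None.

    Assumes one arrow per step of `intended_path`.
    """
    visited = trace(start, arrows)[1:]
    for i, (actual, expected) in enumerate(zip(visited, intended_path[1:])):
        if actual != expected:
            return i
    return None


def fix_first_bug(start, arrows, intended_path):
    """Fix: replace the first faulty arrow with the move the path requires."""
    i = first_bug(start, arrows, intended_path)
    if i is None:
        return list(arrows)
    (x0, y0), (x1, y1) = intended_path[i], intended_path[i + 1]
    arrow_for = {move: arrow for arrow, move in MOVES.items()}
    return arrows[:i] + [arrow_for[(x1 - x0, y1 - y0)]] + arrows[i + 1:]


intended = [(0, 0), (1, 0), (2, 0), (2, 1)]  # right, right, down
buggy = ["right", "up", "down"]
print(first_bug((0, 0), buggy, intended))  # 1
print(fix_first_bug((0, 0), buggy, intended))  # ['right', 'right', 'down']
```

Locating and fixing are kept as separate functions: the observation that children often corrected a route without naming the error corresponds to applying something like `fix_first_bug` without ever reporting the index that `first_bug` returns.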

6. Conclusions

Our study extends research on CT in early childhood by focusing on preschool learners. While several CT assessments have recently been designed to evaluate CT innovations in early childhood, none has been evaluated for use specifically with preschool children. Our assessment provides researchers and practitioners with a tool to better understand preschoolers’ learning of CT skills and to evaluate the promise of emerging CT innovations for our youngest learners.

While our assessment work can play a key role in helping build the evidence base, our study has several limitations that must be acknowledged. First, our research involved a relatively small sample of children from urban locations. While the participating public preschool programs served mostly families from low-income communities and culturally and linguistically diverse backgrounds, the sample included only children who were fluent in English and able to complete the assessments in English. Studies with populations from other geographic areas that include dual language learners are essential, especially as we work to ensure that early CT practices are relevant to diverse groups of learners and that assessments are not biased. Furthermore, our sample size precluded the use of more sophisticated analytic techniques. For instance, our analyses could not account for the nested structure of the data (children nested within classrooms), and we were unable to conduct Item Response Theory analyses, which would have allowed us to reduce measurement error and evaluate test and item bias. Nevertheless, our assessment and the findings from our study are promising. We believe they will inform future early CT research and design efforts, both by providing ideas for item generation and by sharing lessons about issues that should be considered and further evaluated when creating CT learning resources.

Author Contributions

Conceptualization, X.D., D.K. and S.G.; methodology, X.D.; formal analysis, X.D. and D.K.; data curation, X.D., D.K. and T.L.; writing—original draft preparation, X.D., D.K., T.L., S.G. and P.V.; writing—review and editing, X.D., D.K., T.L., S.G. and P.V.; visualization, T.L., D.K. and X.D.; project administration, X.D., D.K. and T.L.; funding acquisition, X.D. and S.G. All authors have read and agreed to the published version of the manuscript.

Funding

This material is based upon work supported by the National Science Foundation under Grant No. 1827293. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of Ethical & Independent Review Services (17190; 11 October 2019) for studies involving humans.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We would like to thank the preschool partners, including the teachers, families, and children, involved in the co-design and pilot testing efforts.

Conflicts of Interest

Author Shuchi Grover was employed by the company Looking Glass Ventures. Author Phil Vahey was employed by the company Applied Learning Insights. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Appendix A

Table A1.

Sample assessment items.


References

- Angeli, C., & Giannakos, M. (2020). Computational thinking education: Issues and challenges. Computers in Human Behavior, 105, 106185.

- Bers, M. U. (2010). The TangibleK Robotics Program: Applied computational thinking for young children. Early Childhood Research & Practice, 12(2), n2.

- Chen, G., Shen, J., Barth-Cohen, L., Jiang, S., Huang, X., & Eltoukhy, M. (2017). Assessing elementary students’ computational thinking in everyday reasoning and robotics programming. Computers & Education, 109, 162–175.

- Cheng, Z. J. (2012). Teaching young children decomposition strategies to solve addition problems: An experimental study. The Journal of Mathematical Behavior, 31(1), 29–47.

- Clarke-Midura, J., Silvis, D., Shumway, J. F., & Lee, V. R. (2023). Developing a kindergarten computational thinking assessment using evidence-centered design: The case of algorithmic thinking. In Assessing computational thinking (pp. 5–28). Routledge.

- Cooper, S., & Cunningham, S. (2010). Teaching computer science in context. ACM Inroads, 1(1), 5–8.

- DiSessa, A. A. (2001). Changing minds: Computers, learning, and literacy. MIT Press.

- Gopnik, A., Griffiths, T. L., & Lucas, C. G. (2015). When younger learners can be better (or at least more open-minded) than older ones. Current Directions in Psychological Science, 24(2), 87–92.

- Grover, S., Dominguez, X., Leones, T., Kamdar, D., Vahey, P., & Gracely, S. (2022). Strengthening early STEM learning by integrating CT into science and math activities at home. In Computational thinking in preK-5: Empirical evidence for integration and future directions (pp. 72–84). Association for Computing Machinery.

- Grover, S., & Pea, R. (2013). Computational thinking in K–12: A review of the state of the field. Educational Researcher, 42(1), 38–43.

- Guzdial, M. (2015). Learner-centered design of computing education: Research on computing for everyone. Synthesis Lectures on Human-Centered Informatics, 8(6), 1–165.

- Hambrusch, S., Hoffmann, C., Korb, J. T., Haugan, M., & Hosking, A. L. (2009). A multidisciplinary approach towards computational thinking for science majors. ACM SIGCSE Bulletin, 41(1), 183–187.

- Harel, I., & Papert, S. (1990). Software design as a learning environment. Interactive Learning Environments, 1(1), 1–32.

- Henderson, P. B., Cortina, T. J., & Wing, J. M. (2007, March 7–11). Computational thinking. 38th SIGCSE Technical Symposium on Computer Science Education (pp. 195–196), Covington, KY, USA.

- Hutchins, N. M., Biswas, G., Maróti, M., Lédeczi, Á., Grover, S., Wolf, R., & McElhaney, K. (2020). C2STEM: A system for synergistic learning of physics and computational thinking. Journal of Science Education and Technology, 29(1), 83–100.

- Kazakoff, E. R., Sullivan, A., & Bers, M. U. (2013). The effect of a classroom-based intensive robotics and programming workshop on sequencing ability in early childhood. Early Childhood Education Journal, 41(4), 245–255.

- Lavigne, H. J., Lewis-Presser, A., & Rosenfeld, D. (2020). An exploratory approach for investigating the integration of computational thinking and mathematics for preschool children. Journal of Digital Learning in Teacher Education, 36(1), 63–77.

- Lee, I., Grover, S., Martin, F., Pillai, S., & Malyn-Smith, J. (2020). Computational thinking from a disciplinary perspective: Integrating computational thinking in K-12 science, technology, engineering, and mathematics education. Journal of Science Education and Technology, 29(1), 1–8.

- Lei, H., Chiu, M. M., Li, F., Wang, X., & Geng, Y. J. (2020). Computational thinking and academic achievement: A meta-analysis among students. Children and Youth Services Review, 118, 105439.

- Martinez, C., Gomez, M. J., & Benotti, L. (2015, July 4–8). A comparison of preschool and elementary school children learning computer science concepts through a multilanguage robot programming platform. 2015 ACM Conference on Innovation and Technology in Computer Science Education (pp. 159–164), Vilnius, Lithuania.

- Mislevy, R. J., Almond, R. G., & Lukas, J. F. (2003). A brief introduction to evidence-centered design. ETS Research Report Series, 1, i–29.

- Papert, S. A. (1980). Mindstorms: Children, computers, and powerful ideas. Basic Books.

- Penuel, W. R., Roschelle, J., & Shechtman, N. (2007). Designing formative assessment software with teachers: An analysis of the co-design process. Research and Practice in Technology Enhanced Learning, 2(1), 51–74.

- Smith, K. (2018, April 18). Challenge collaboratives: School districts and researchers partner to solve high-priority challenges. Digital Promise. Available online: https://digitalpromise.org/2018/04/18/challenge-collaboratives-school-districts-researchers-partner-solve-high-priority-challenges/ (accessed on 17 April 2025).

- Su, J., Yang, W., & Bautista, A. (2023). Computational thinking in early childhood education: Reviewing the literature and redeveloping the three-dimensional framework. Educational Research Review, 39, 100520.

- Vahey, P. J., Reider, D., Orr, J., Presser, A. L., & Dominguez, X. (2018). The Evidence Based Curriculum Design Framework: Leveraging Diverse Perspectives in the Design Process. International Journal of Designs for Learning, 9(1), 135–148.

- Vogel, S., Santo, R., & Ching, D. (2017, March 8–11). Visions of computer science education: Unpacking arguments for and projected impacts of CS4All initiatives. 2017 ACM SIGCSE Technical Symposium on Computer Science Education (pp. 609–614), Seattle, WA, USA.

- Weintrop, D., Beheshti, E., Horn, M., Orton, K., Jona, K., Trouille, L., & Wilensky, U. (2016). Defining computational thinking for mathematics and science classrooms. Journal of Science Education and Technology, 25(1), 127–147.

- Wing, J. M. (2006). Computational thinking. Communications of the ACM, 49(3), 33–35.

- Yadav, A., Larimore, R., Rich, K., & Schwarz, C. (2019). Integrating computational thinking in elementary classrooms: Introducing a toolkit to support teachers. In Proceedings of society for information technology & teacher education international conference. AACE.

- Zapata-Cáceres, M., Martín-Barroso, E., & Román-González, M. (2021). Collaborative game-based environment and assessment tool for learning computational thinking in primary school: A case study. IEEE Transactions on Learning Technologies, 14(5), 576–589.

- Zeng, Y., & Yang, W. (2023). A systematic review of integrating computational thinking in early childhood education. Computers and Education Open, 4, 100122.

Table 1.

Demographics of the sample.


| Sex | n | Percentage of Sample | Age in Months, M (SD) | n of Younger Age Assessment Group | Age in Months of Younger Age Assessment Group, M (SD) | n of Older Age Assessment Group | Age in Months of Older Age Assessment Group, M (SD) |
|---|---|---|---|---|---|---|---|
| Female | 28 | 49.12% | 50.36 (6.15) | 12 | 44.33 (4.33) | 17 | 54.41 (3.84) |
| Male | 29 | 50.88% | 51.28 (6.53) | 7 | 41.71 (4.46) | 21 | 54.81 (2.73) |
| Total | 57 | | 50.82 (6.31) | 19 | 43.37 (4.61) | 38 | 54.63 (3.23) |

Table 2.

Assessment items developed.


| Item | CT Skill/Practice | Description | Response Format | Item Type |

|---|

| 1 | Problem

Decomposition | Decompose subtasks to plan a birthday party. | Open-ended verbal response | Dichotomous |

| 2 | Algorithmic

Thinking (sequence) | Generate code to navigate a small map. Code involves pictures. | Manipulative placement | Dichotomous |

| 3 | Algorithmic

Thinking (sequence) | Interpret (or follow) code to navigate a small map. Code involves pictures. | Verbal response, point or indicate | Dichotomous |

| 4 | Testing and

Debugging | Debug a mistake (made by an assessor) in navigation of a small map. | Verbal response, point or indicate | Polytomous |

| 5 | Algorithmic

Thinking

(repetition/looping) | Generate code that includes a loop. Jump three (3) times. | Manipulative placement (open ended) | Dichotomous |

| 6 | Abstraction (sorting) | Sort colored blocks for a given purpose (one variable—color). | Manipulative placement | Dichotomous |

| 7 | Abstraction (sorting) | Sort toy vehicles for a given purpose (two variables—color and vehicle type). | Manipulative placement | Polytomous |

| 8 | Problem

Decomposition | Decompose subtasks to help three friends set up a lemonade stand. | Verbal response, Manipulative placement | Dichotomous |

| 9 | Algorithmic

Thinking

(repetition/looping) | Interpret (or follow) code including a loop. Clap two (2) times. | Verbal response, point or indicate | Dichotomous |

| 10 | Algorithmic

Thinking (sequence) | Generate code to navigate a small map. Code involves arrows to indicate direction. | Manipulative placement | Dichotomous |

| 11 | Algorithmic

Thinking (sequence) | Interpret (or follow) code to navigate a small map. Code involves arrows to indicate direction. | Verbal response, point or indicate | Dichotomous |

| 12 | Testing and

Debugging | Debug a mistake (made by assessor) in navigation of a small map. | Verbal response, point or indicate | Polytomous |

| 13 | Abstraction

(labeling) | Label sorted groups of blocks for a given purpose (one variable—color). | Manipulative placement | Dichotomous |

| 14 | Abstraction

(labeling) | Label sorted groups of toy animals for a given purpose (two variables—color and animal type). | Manipulative placement | Polytomous |

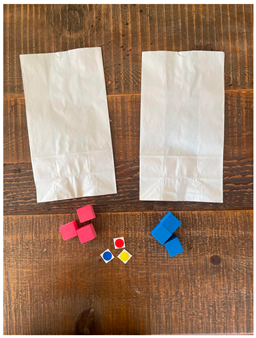

| 15 | Problem

Decomposition | Decompose steps to build a simple structure with blocks. | Verbal response, point or indicate | Dichotomous |

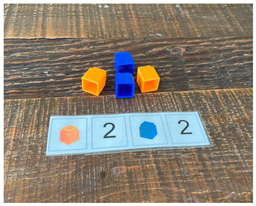

| 16 | Algorithmic

Thinking

(repetition/looping) | Interpret (or follow) code including a loop to build a simple block tower (2 orange, 2 blue). | Manipulative placement | Dichotomous |

| 17 | Algorithmic

Thinking

(repetition/looping) | Choose (or match) code with looping instructions. Wink three (3) times. | Point or indicate | Dichotomous |

Table 3.

Initial psychometric properties of items.


| Item | Pre Proportion Correct | Post Proportion Correct | Pre Discrimination Index | Post Discrimination Index |
|---|---|---|---|---|
| Problem Decomposition Items | | | | |
| Item 1 * | 0.05 | 0.09 | 0.099 | 0.234 |
| Item 8 | 0.23 | 0.16 | 0.352 | 0.364 |
| Item 15 * | 0.00 | 0.02 | 0.000 | 0.097 |
| Abstraction Items | | | | |
| Item 6 | 0.44 | 0.56 | 0.277 | 0.339 |
| Item 7 (Polytomous) | 0.77 | 1.13 | 0.175 | 0.499 |
| Item 13 | 0.42 | 0.56 | 0.442 | 0.534 |
| Item 14 (Polytomous) | 0.54 | 0.85 | 0.346 | 0.288 |
| Algorithmic Thinking (sequence) Items | | | | |
| Item 2 | 0.05 | 0.22 | 0.407 | 0.450 |
| Item 3 | 0.16 | 0.29 | 0.337 | 0.516 |
| Item 4 (Polytomous) | 0.54 | 0.73 | 0.471 | 0.374 |
| Item 10 | 0.12 | 0.24 | 0.247 | 0.508 |
| Item 11 | 0.14 | 0.25 | 0.206 | 0.460 |
| Item 12 (Polytomous) | 0.58 | 0.42 | 0.416 | 0.529 |
| Algorithmic Thinking (repetition/looping) Items | | | | |
| Item 5 | 0.07 | 0.07 | 0.162 | 0.321 |
| Item 9 | 0.46 | 0.44 | 0.421 | 0.423 |
| Item 16 | 0.33 | 0.49 | 0.593 | 0.224 |
| Item 17 | 0.26 | 0.36 | 0.297 | 0.336 |
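Tables 3 and 4 report a proportion correct and a discrimination index for each item, but this section does not state how the index was computed. Because the discrimination values shift slightly between Table 3 and Table 4 (after Items 1 and 15 were dropped) while the proportions correct stay the same, an index based on the total score, such as a corrected item-total correlation, is one plausible reading. The sketch below computes that statistic on a small made-up response matrix; both the choice of statistic and the data are assumptions for illustration. For polytomous items (e.g., Item 7, whose post-test value exceeds 1), the same column mean yields a mean item score rather than a proportion.

```python
from math import sqrt
from statistics import mean


def proportion_correct(scores):
    """Mean item score; for 0/1 items this equals the proportion correct."""
    return mean(scores)


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def corrected_item_total(responses, item):
    """Correlate one item with the total of the other items.

    `responses` holds one list of item scores per child. Leaving the item
    out of the total keeps it from correlating with itself.
    """
    item_scores = [row[item] for row in responses]
    rest_totals = [sum(row) - row[item] for row in responses]
    return pearson(item_scores, rest_totals)


# Made-up scores for five children on three dichotomous items.
responses = [
    [1, 1, 0],
    [1, 0, 1],
    [0, 0, 0],
    [1, 1, 1],
    [0, 1, 0],
]

for item in range(3):
    p = proportion_correct([row[item] for row in responses])
    r = corrected_item_total(responses, item)
    print(f"item {item}: p = {p:.2f}, r = {r:.3f}")
```

Because every other item’s score enters `rest_totals`, removing items from the battery would change each remaining item’s index while leaving its proportion correct unchanged, which is the pattern seen between Tables 3 and 4 if the study used a statistic of this kind.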

Table 4.

Final psychometric properties of items.


| Item | Pre Proportion Correct | Post Proportion Correct | Pre Discrimination Index | Post Discrimination Index |
|---|---|---|---|---|
| Problem Decomposition Items | | | | |
| Item 8 | 0.23 | 0.16 | 0.339 | 0.335 |
| Abstraction Items | | | | |
| Item 6 | 0.44 | 0.56 | 0.293 | 0.350 |
| Item 7 (Polytomous) | 0.77 | 1.13 | 0.171 | 0.499 |
| Item 13 | 0.42 | 0.56 | 0.449 | 0.531 |
| Item 14 (Polytomous) | 0.54 | 0.85 | 0.354 | 0.273 |
| Algorithmic Thinking (sequence) Items | | | | |
| Item 2 | 0.05 | 0.22 | 0.414 | 0.431 |
| Item 3 | 0.16 | 0.29 | 0.332 | 0.525 |
| Item 4 (Polytomous) | 0.54 | 0.73 | 0.473 | 0.375 |
| Item 10 | 0.12 | 0.24 | 0.240 | 0.503 |
| Item 11 | 0.14 | 0.25 | 0.214 | 0.455 |
| Item 12 (Polytomous) | 0.58 | 0.42 | 0.404 | 0.539 |
| Algorithmic Thinking (repetition/looping) Items | | | | |
| Item 5 | 0.07 | 0.07 | 0.167 | 0.318 |
| Item 9 | 0.46 | 0.44 | 0.418 | 0.420 |
| Item 16 | 0.33 | 0.49 | 0.586 | 0.246 |
| Item 17 | 0.26 | 0.36 | 0.297 | 0.336 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).