Pre-Service EFL Primary Teachers Adopting GenAI-Powered Game-Based Instruction: A Practicum Intervention

Abstract

1. Introduction

1.1. Problem Statement

1.2. Aim and Potential Contribution of This Study

- To what extent do GenAI-powered EFL games enhance English grammar skills in non-native primary pupils relative to non-digital games?

- To what extent do GenAI-powered EFL games enhance English listening comprehension in non-native primary pupils relative to non-digital games?

- To what extent do GenAI-powered EFL games enhance English pronunciation in non-native primary pupils relative to non-digital games?

- How do pre-service teachers perceive and experience the integration of GenAI-powered game-based instruction during their practicum, and what professional development outcomes emerge from this experience?

- What barriers and facilitators influence the implementation of GenAI-powered games in EFL primary classrooms, as reported by pre-service teachers?

2. Literature Review

2.1. Theoretical Underpinning

2.2. Gamification in Education

2.3. GenAI in Educational Contexts

2.4. GenAI in Language Learning

3. Materials and Methods

3.1. Participants

3.2. Experimental Procedures

3.3. Data Collection

3.4. Quantitative Analysis

3.5. Qualitative Analysis

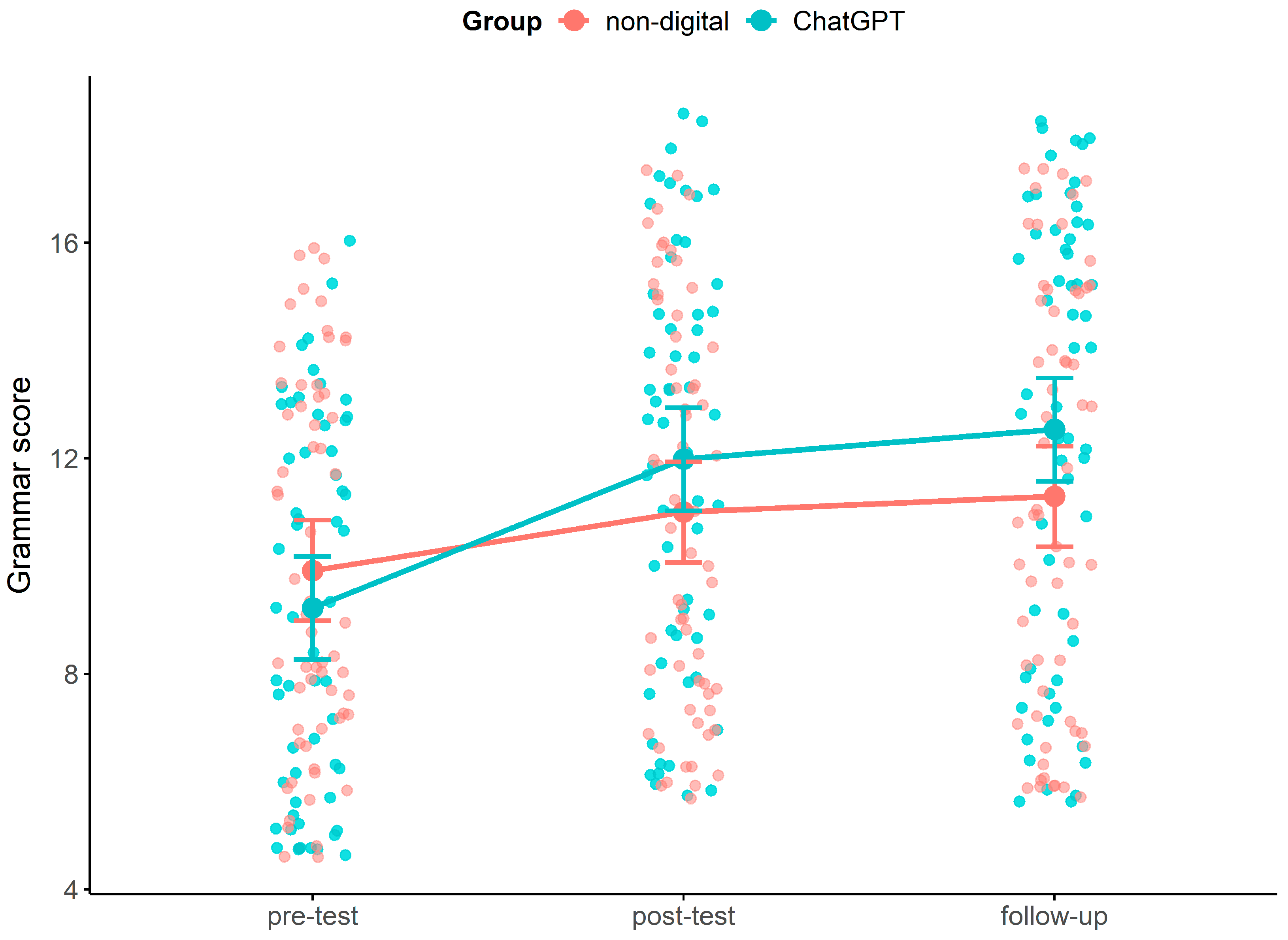

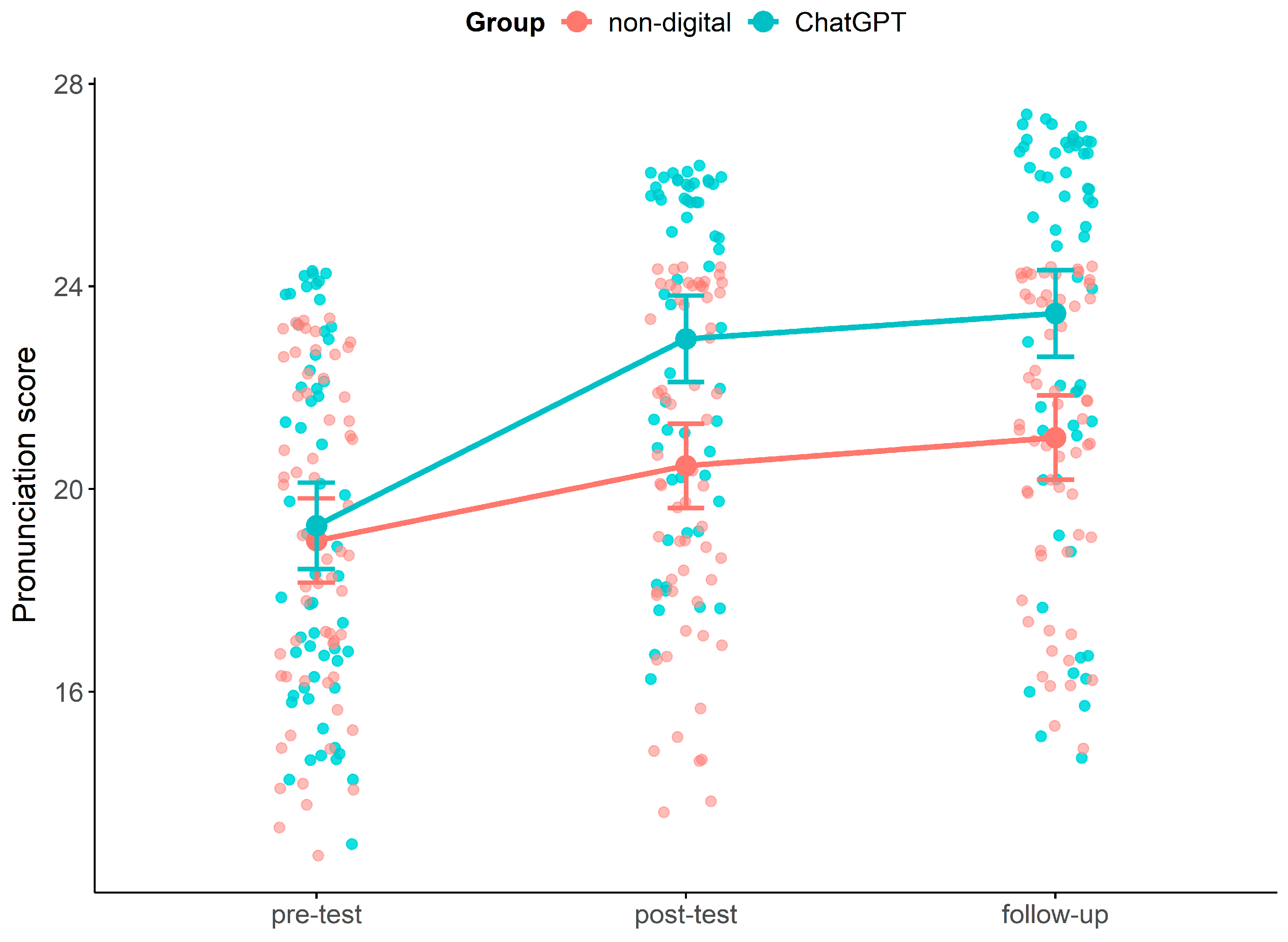

4. Quantitative Results

5. Qualitative Results

5.1. Perceptions and Professional Development (RQ4)

5.1.1. Initial Motivations and Expectations

5.1.2. Learning About GenAI as Learners

5.1.3. Professional Growth and Skill Development

5.1.4. Evolving Understanding of AI Role

5.2. Barriers and Facilitators in Implementation (RQ5)

5.2.1. Technical and Logistical Challenges

5.2.2. Pedagogical Facilitators and Obstacles

5.2.3. Classroom Dynamics and Management

5.2.4. Future Implementation

6. Discussion

6.1. Limitations

6.2. Suggestions for Practice and Further Research

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Sample ChatGPT Activity

- Spy Training Game

Я третьеклассник, изучаю английский; давай сыграем в шпионскую тренировку: говори мне фразу на английском, проси меня повторить её и поправляй меня, если говорю неправильно; потом давай другую фразу; повторяй цикл.

I am a third-grader studying English; let’s play spy training: tell me a phrase in English, ask me to repeat it and correct me if I say it wrong; then give another phrase; repeat the cycle.
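In the study, ChatGPT ran this cycle conversationally from the prompt above. The following is a minimal, hypothetical Python sketch of the loop's logic: present a phrase, compare the pupil's repetition, correct, and move on. It is not the tool used in the study. The phrase bank, the function names, and the word-level comparison with the standard-library `difflib` module are all assumptions made for illustration. The sketch only checks typed text and cannot assess pronunciation.

```python
from __future__ import annotations

import difflib

# Hypothetical phrase bank: the study does not list the phrases ChatGPT produced.
PHRASES = [
    "My name is Alex.",
    "I have a red pen.",
    "She likes apples.",
]


def normalize(text: str) -> list[str]:
    """Lower-case the text, drop punctuation (keeping apostrophes) and split into words."""
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace() or ch == "'")
    return kept.split()


def give_feedback(target: str, attempt: str) -> str:
    """Compare a typed repetition with the target phrase, word by word."""
    expected, said = normalize(target), normalize(attempt)
    if expected == said:
        return "Correct! Next phrase."
    notes = []
    matcher = difflib.SequenceMatcher(a=expected, b=said)
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        want, got = " ".join(expected[i1:i2]), " ".join(said[j1:j2])
        if op == "replace":
            notes.append(f"say '{want}' instead of '{got}'")
        elif op == "delete":
            notes.append(f"you missed '{want}'")
        elif op == "insert":
            notes.append(f"leave out '{got}'")
    return "Try again: " + "; ".join(notes) + f". Repeat: {target}"


def spy_training(attempts: dict[str, list[str]]) -> list[str]:
    """Run the say / repeat / correct cycle over the phrase bank.

    `attempts` maps each phrase to the pupil's successive tries (simulated input).
    The agent moves on once a try is correct or the tries run out.
    """
    log = []
    for phrase in PHRASES:
        log.append(f"Agent: Repeat after me: {phrase}")
        for attempt in attempts.get(phrase, []):
            log.append(f"Pupil: {attempt}")
            feedback = give_feedback(phrase, attempt)
            log.append(f"Agent: {feedback}")
            if feedback.startswith("Correct"):
                break
    return log


for line in spy_training({"My name is Alex.": ["My name Alex.", "My name is Alex."]}):
    print(line)
```

With the simulated tries shown, the first phrase is corrected once ("you missed 'is'") before the second try is accepted. The remaining phrases are only presented, because no tries were supplied for them.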

Appendix B. Reflective Prompts

- What are the main AI-based gamification ideas I want to incorporate in my English lesson? Why?

- Open reflection on the AI-based gamification task carried out in class. What did I learn about generative AI as a learner?

- Open reflection on the upcoming AI-based gamification task: key considerations, hopes, and concerns.

- Identify key changes in your knowledge, understanding, skills or dispositions. Describe the changes and identify experiences or key points in the intervention that influenced your teaching.

- Three things I learned, two things I want to know more about, one question I still have.

- Open post-teaching reflection on educational AI experience.

References

- Ai, Y., Hu, Y., & Zhao, W. (2025). Interactive learning: Harnessing technology to alleviate foreign language anxiety in foreign literature education. Interactive Learning Environments, 33(3), 2440–2459.

- Almelhes, S. A. (2024). Gamification for teaching the Arabic language to non-native speakers: A systematic literature review. Frontiers in Education, 9, 1371955.

- Alpysbayeva, N., Zholtaeva, G., Tazhinova, G., Syrlybayeva, G., & Assylova, R. (2025). Fostering pre-service primary teachers’ capacity to employ an interactive learning tool. Qubahan Academic Journal, 5(1), 662–673.

- Al-Rousan, A. H., Ayasrah, M. N., Khasawneh, M. A. S., Obeidat, L. M., & Obeidat, S. S. (2025). AI-enhanced gamification in education: Developing and validating a scale for measuring engagement and motivation among secondary school students: Insights from the network analysis perspective. European Journal of Education, 60(3), e70153.

- Alturaiki, S. M., Gaballah, M. K., & El Arab, R. A. (2025). Enhancing nursing students’ engagement and critical thinking in anatomy and physiology through gamified teaching: A non-equivalent quasi-experimental study. Nursing Reports, 15(9), 333.

- Arkoumanis, G., Sofos, A., Ventista, O. M., Ventistas, G., & Tsani, P. (2025). The impact of artificial intelligence on elementary school students’ learning: A meta-analysis. Computers in the Schools. Advance online publication.

- Babu, S. S., & Moorthy, A. D. (2024). Application of artificial intelligence in adaptation of gamification in education: A literature review. Computer Applications in Engineering Education, 32(1), e22683.

- Belkina, M., Daniel, S., Nikolic, S., Haque, R., Lyden, S., Neal, P., Grundy, S., & Hassan, G. M. (2025). Implementing generative AI (GenAI) in higher education: A systematic review of case studies. Computers and Education: Artificial Intelligence, 8, 100407.

- Busse, V., Hennies, C., Kreutz, G., & Roden, I. (2021). Learning grammar through singing? An intervention with EFL primary school learners. Learning and Instruction, 71, 101372.

- Cambridge. (2018). English Qualifications. Pre A1 Starters, A1 Movers and A2 Flyers. In Sample papers for young learners. For exams from 2018, 2. Cambridge Assessment English.

- Chan, S., & Lo, N. (2024). Enhancing EFL/ESL instruction through gamification: A comprehensive review of empirical evidence. Frontiers in Education, 9, 1395155.

- Chang, A. C. S. (2024). The effect of listening instruction on the development of L2 learners’ listening competence: A meta-analysis. International Journal of Listening, 38(2), 131–149.

- Chapelle, C. A. (2025). Generative AI as game changer: Implications for language education. System, 132, 103672.

- Chen, H. H. J., Yang, C. T. Y., & Lai, K. K. W. (2023). Investigating college EFL learners’ perceptions toward the use of Google Assistant for foreign language learning. Interactive Learning Environments, 31(3), 1335–1350.

- Chen, J., Huang, K., Lai, C., & Jin, T. (2025a). The impact of GenAI-based collaborative inquiry on critical thinking in argumentation: A case study of blended argumentative writing pedagogy. TESOL Quarterly. Advance online publication.

- Chen, Y., Ke, N., Huang, L., & Luo, R. (2025b). The role of GenAI in EFL speaking: Effects on oral proficiency, anxiety and risk-taking. RELC Journal. Advance online publication.

- Cheng, J., Lu, C., & Xiao, Q. (2025). Effects of gamification on EFL learning: A quasi-experimental study of reading proficiency and language enjoyment among Chinese undergraduates. Frontiers in Psychology, 16, 1448916.

- Chia, A., & Xavier, C. A. (2025). Grammar as a meaning-making resource: Fostering meaningful, situated literacy development. TESOL Journal, 16, e70015.

- Chiu, T. K. F. (2024). The impact of Generative AI (GenAI) on practices, policies and research direction in education: A case of ChatGPT and Midjourney. Interactive Learning Environments, 32(10), 6187–6203.

- Chung, Y., & Révész, A. (2021). Investigating the effect of textual enhancement in post-reading tasks on grammatical development by child language learners. Language Teaching Research, 28(2), 632–653.

- Cong-Lem, N., Soyoof, A., & Tsering, D. (2025). A systematic review of the limitations and associated opportunities of ChatGPT. International Journal of Human–Computer Interaction, 41(7), 3851–3866.

- Dahri, N. A., Yahaya, N. B., Al-rahmi, W. M., Almuqren, L., Almgren, A. S., Alshimai, A., & Al-Adwan, A. S. (2025). The effect of AI gamification on students’ engagement and academic achievement in Malaysia: SEM analysis perspectives. IEEE Access, 13, 70791–70810.

- Dehghanzadeh, H., Fardanesh, H., Hatami, J., Talaee, E., & Noroozi, O. (2021). Using gamification to support learning English as a second language: A systematic review. Computer Assisted Language Learning, 34(7), 934–957.

- Delgado-Garza, P., & Mayo, M. P. G. (2025). Can we train young EFL learners to ‘notice the gap’? Exploring the relationship between metalinguistic awareness, grammar learning and the use of metalinguistic explanations in a dictogloss task. International Review of Applied Linguistics in Language Teaching, 63(3), 1573–1597.

- Ding, D., & Yusof, A. M. B. (2025). Investigating the role of AI-powered conversation bots in enhancing L2 speaking skills and reducing speaking anxiety: A mixed methods study. Humanities and Social Sciences Communications, 12, 1223.

- Elmaadaway, M. A. N., El-Naggar, M. E., & Abouhashesh, M. R. I. (2025). Improving primary school students’ oral reading fluency through voice chatbot-based AI. Journal of Computer Assisted Learning, 41(2), e70019.

- Evmenova, A. S., Regan, K., Mergen, R., & Hrisseh, R. (2025). Educational games and the potential of AI to transform writing across the curriculum. Education Sciences, 15(5), 567.

- Far, F. F., & Taghizadeh, M. (2024). Comparing the effects of digital and non-digital gamification on EFL learners’ collocation knowledge, perceptions, and sense of flow. Computer Assisted Language Learning, 37(7), 2083–2115.

- Fathi, J., Rahimi, M., & Teo, T. (2025). Applying intelligent personal assistants to develop fluency and comprehensibility, and reduce accentedness in EFL learners: An empirical study of Google Assistant. Language Teaching Research. Advance online publication.

- Fitzpatrick, M., & Leavy, A. (2025). Reciprocal interplays in becoming STEM learners and teachers: Preservice teachers’ evolving understandings of integrated STEM education. International Journal of Mathematical Education in Science and Technology. Advance online publication.

- Gamlem, S. M., McGrane, J., Brandmo, C., Moltudal, S., Sun, S. Z., & Hopfenbeck, T. N. (2025). Exploring pre-service teachers’ attitudes and experiences with generative AI: A mixed methods study in Norwegian teacher education. Educational Psychology. Advance online publication.

- Gao, Y., & Pan, L. (2023). Learning English vocabulary through playing games: The gamification design of vocabulary learning applications and learner evaluations. Language Learning Journal, 51(4), 451–471.

- Gao, Y., Zhang, J., He, Z., & Zhou, Z. (2025). Feasibility and usability of an artificial intelligence-powered gamification intervention for enhancing physical activity among college students: Quasi-experimental study. JMIR Serious Games, 13, e65498.

- Gaurina, M., Alajbeg, A., & Weber, I. (2025). The power of play: Investigating the effects of gamification on motivation and engagement in physics classroom. Education Sciences, 15(1), 104.

- Giannakos, M., Azevedo, R., Brusilovsky, P., Cukurova, M., Dimitriadis, Y., Hernandez-Leo, D., Järvelä, S., Mavrikis, M., & Rienties, B. (2025). The promise and challenges of generative AI in education. Behaviour and Information Technology, 44(11), 2518–2544.

- Goh, C. C. M., & Aryadoust, V. (2025). Developing and assessing second language listening and speaking: Does AI make it better? Annual Review of Applied Linguistics, 45, 179–199.

- Gu, J., & Yan, Z. (2025). Effects of GenAI interventions on student academic performance: A meta-analysis. Journal of Educational Computing Research, 63(6), 1460–1492.

- Guan, L., Zhang, E. Y., & Gu, M. M. (2025). Examining generative AI–mediated informal digital learning of English practices with social cognitive theory: A mixed-methods study. ReCALL, 37(3), 315–331.

- Gunnarsson, K. U., Collier, E. S., & Bendtsen, M. (2025). Research participation effects and where to find them: A systematic review of studies on alcohol. Journal of Clinical Epidemiology, 179, 111668.

- Honig, C. D., Desu, A., & Franklin, J. (2024). GenAI in the classroom: Customized GPT roleplay for process safety education. Education for Chemical Engineers, 49, 55–66.

- Hori, R., Fujii, M., Toguchi, T., Wong, S., & Endo, M. (2025). Impact of an EFL digital application on learning, satisfaction, and persistence in elementary school children. Early Childhood Education Journal, 53(5), 1851–1862.

- Hsieh, W. M., Yeh, H. C., & Chen, N. S. (2023). Impact of a robot and tangible object (R&T) integrated learning system on elementary EFL learners’ English pronunciation and willingness to communicate. Computer Assisted Language Learning, 38(4), 773–798.

- Hsu, H. L., Chen, H. H. J., & Todd, A. G. (2023). Investigating the impact of the Amazon Alexa on the development of L2 listening and speaking skills. Interactive Learning Environments, 31(9), 5732–5745.

- Hsu, T., & Hsu, T. (2025). Teaching AI with games: The impact of generative AI drawing on computational thinking skills. Education and Information Technologies. Advance online publication.

- Huang, T., Wu, C., & Wu, M. (2025). Developing pre-service language teachers’ GenAI literacy: An interventional study in an English language teacher education course. Discover Artificial Intelligence, 5, 163.

- Hung, H. T., Yang, J. C., & Chung, C. J. (2025). Effects of performance goal orientations on English translation techniques in digital game-based learning. International Journal of Human–Computer Interaction, 41(9), 5575–5590.

- Ishaq, K., Zin, N. A. M., Rosdi, F., Jehanghir, M., Ishaq, S., & Abid, A. (2021). Mobile-assisted and gamification-based language learning: A systematic literature review. PeerJ Computer Science, 7, e496.

- Jiang, X., Wang, R., Hoang, T., Ranaweera, C., Dong, C., & Myers, T. (2025). AI-powered gamified scaffolding: Transforming learning in virtual learning environment. Electronics, 14(13), 2732.

- Kalota, F. (2024). A primer on generative artificial intelligence. Education Sciences, 14(2), 172.

- Kessler, M., Valle, J. M. R., Çekmegeli, K., & Farrell, S. (2025). Generative AI for learning languages other than English: L2 writers’ current uses and perceptions of ethics. Foreign Language Annals, 58, 508–531.

- Koç, F. Ş., & Savaş, P. (2025). The use of artificially intelligent chatbots in English language learning: A systematic meta-synthesis study of articles published between 2010 and 2024. ReCALL, 37(1), 4–21.

- Kohnke, L., Zou, D., & Su, F. (2025). Exploring the potential of GenAI for personalised English teaching: Learners’ experiences and perceptions. Computers and Education: Artificial Intelligence, 8, 100371.

- Korseberg, L., & Stalheim, O. R. (2025). The role of digital technology in facilitating epistemic fluency in professional education. Professional Development in Education. Advance online publication.

- Law, L. (2024). Application of generative artificial intelligence (GenAI) in language teaching and learning: A scoping literature review. Computers and Education Open, 6, 100174.

- Lee, S., Choe, H., Zou, D., & Jeon, J. (2025). Generative AI (GenAI) in the language classroom: A systematic review. Interactive Learning Environments. Advance online publication.

- Lee, S., & Jeon, J. (2024). Visualizing a disembodied agent: Young EFL learners’ perceptions of voice-controlled conversational agents as language partners. Computer Assisted Language Learning, 37(5–6), 1048–1073.

- Lengersdorff, L. L., & Lamm, C. (2025). With low power comes low credibility? Toward a principled critique of results from underpowered tests. Advances in Methods and Practices in Psychological Science, 8(1).

- Li, M., Wang, Y., & Yang, X. (2025). Can generative AI chatbots promote second language acquisition? A meta-analysis. Journal of Computer Assisted Learning, 41(4), e70060.

- Li, X., Liu, J., Gao, W., & Cohen, G. L. (2024). Challenging the N-Heuristic: Effect size, not sample size, predicts the replicability of psychological science. PLoS ONE, 19(8), e0306911.

- Liu, G., Fathi, J., & Rahimi, M. (2024). Using digital gamification to improve language achievement, foreign language enjoyment, and ideal L2 self: A case of English as a foreign language learners. Journal of Computer Assisted Learning, 40(4), 1347–1364.

- Liu, X., Guo, B., He, W., & Hu, X. (2025). Effects of generative artificial intelligence on K-12 and higher education students’ learning outcomes: A meta-analysis. Journal of Educational Computing Research, 63(5), 1249–1291.

- Mahmoudi-Dehaki, M., & Nasr-Esfahani, N. (2025). Utilising GenAI to create a culturally responsive EFL curriculum for pre-teen learners in the MENA region. Education 3-13, 53(7), 1175–1189.

- Mi, Y., Rong, M., & Chen, X. (2025). Exploring the affordances and challenges of GenAI feedback in L2 writing instruction: A comparative analysis with peer feedback. ECNU Review of Education. Advance online publication.

- Mohebbi, A. (2025). Enabling learner independence and self-regulation in language education using AI tools: A systematic review. Cogent Education, 12(1), 2433814.

- Monzon, N., & Hays, F. A. (2025). Leveraging generative AI to improve motivation and retrieval in higher education learners. JMIR Medical Education, 11, e59210.

- Mulyani, H., Istiaq, M. A., Shauki, E. R., Kurniati, F., & Arlinda, H. (2025). Transforming education: Exploring the influence of generative AI on teaching performance. Cogent Education, 12(1), 2448066.

- Nakagawa, S., Lagisz, M., Yang, Y., & Drobniak, S. M. (2024). Finding the right power balance: Better study design and collaboration can reduce dependence on statistical power. PLoS Biology, 22(1), e3002423.

- Niño, J. R. G., Delgado, L. P. A., Chiappe, A., & González, E. O. (2025). Gamifying learning with AI: A pathway to 21st-century skills. Journal of Research in Childhood Education, 39(4), 735–750.

- Oberpriller, J., de Souza Leite, M., & Pichler, M. (2022). Fixed or random? On the reliability of mixed-effects models for a small number of levels in grouping variables. Ecology and Evolution, 12(7), e9062.

- Pahi, K., Hawlader, S., Hicks, E., Zaman, A., & Phan, V. (2024). Enhancing active learning through collaboration between human teachers and generative AI. Computers and Education Open, 6, 100183.

- Pallant, J. L., Blijlevens, J., Campbell, A., & Jopp, R. (2025). Mastering knowledge: The impact of generative AI on student learning outcomes. Studies in Higher Education. Advance online publication.

- Papert, S. (1980). Mindstorms: Children, computers, and powerful ideas. Basic Books.

- Perifanou, M., & Economides, A. A. (2025). Collaborative uses of GenAI tools in project-based learning. Education Sciences, 15(3), 354.

- Pham, T. D., Karunaratne, N., Exintaris, B., Liu, D., Lay, T., Yuriev, E., & Lim, A. (2025). The impact of generative AI on health professional education: A systematic review in the context of student learning. Medical Education. Advance online publication.

- Rad, S. H. (2024). Revolutionizing L2 speaking proficiency, willingness to communicate, and perceptions through artificial intelligence: A case of Speeko application. Innovation in Language Learning and Teaching, 18(4), 364–379.

- Rafikova, A., & Voronin, A. (2025). Human–chatbot communication: A systematic review of psychologic studies. AI and Society. Advance online publication.

- Roehr-Brackin, K. (2024). Explicit and implicit knowledge and learning of an additional language: A research agenda. Language Teaching, 57(1), 68–86.

- Salinas-Navarro, D. E., Vilalta-Perdomo, E., Michel-Villarreal, R., & Montesinos, L. (2024). Using generative artificial intelligence tools to explain and enhance experiential learning for authentic assessment. Education Sciences, 14(1), 83.

- Scandola, M., & Tidoni, E. (2024). Reliability and feasibility of linear mixed models in fully crossed experimental designs. Advances in Methods and Practices in Psychological Science, 7(1).

- Schielzeth, H., Dingemanse, N. J., Nakagawa, S., Westneat, D. F., Allegue, H., Teplitsky, C., Réale, D., Dochtermann, N. A., Garamszegi, L. Z., & Araya-Ajoy, Y. G. (2020). Robustness of linear mixed-effects models to violations of distributional assumptions. Methods in Ecology and Evolution, 11(9), 1141–1152.

- Shortt, M., Tilak, S., Kuznetcova, I., Martens, B., & Akinkuolie, B. (2023). Gamification in mobile-assisted language learning: A systematic review of Duolingo literature from public release of 2012 to early 2020. Computer Assisted Language Learning, 36(3), 517–554.

- Tai, T., & Chen, H. H. (2024). Navigating elementary EFL speaking skills with generative AI chatbots: Exploring individual and paired interactions. Computers and Education, 220, 105112.

- Tan, X., Cheng, G., & Ling, M. H. (2025). Artificial intelligence in teaching and teacher professional development: A systematic review. Computers and Education: Artificial Intelligence, 8, 100355.

- Tavakoli, P., Campbell, C., & McCormack, J. (2016). Development of speech fluency over a short period of time: Effects of pedagogic intervention. TESOL Quarterly, 50(2), 447–471.

- Taye, T., & Mengesha, M. (2024). Identifying and analyzing common English writing challenges among regular undergraduate students. Heliyon, 10(17), e36876.

- Vygotsky, L. (1987). Zone of proximal development. In Mind in society: The development of higher psychological processes. Harvard University Press.

- Wang, K., Ruan, Q., Zhang, X., Fu, C., & Duan, B. (2024). Pre-service teachers’ GenAI anxiety, technology self-efficacy, and TPACK: Their structural relations with behavioral intention to design GenAI-assisted teaching. Behavioral Sciences, 14(5), 373.

- Wang, Y., Derakhshan, A., & Ghiasvand, F. (2025a). EFL teachers’ generative artificial intelligence (GenAI) literacy: A scale development and validation study. System, 133, 103791.

- Wang, Y., Zhang, T., Yao, L., & Seedhouse, P. (2025b). A scoping review of empirical studies on generative artificial intelligence in language education. Innovation in Language Learning and Teaching. Advance online publication.

- Wood, D., & Moss, S. H. (2024). Evaluating the impact of students’ generative AI use in educational contexts. Journal of Research in Innovative Teaching and Learning, 17(2), 152–167.

- Wu, H., & Liu, W. (2025). Exploring mechanisms of effective informal GenAI-supported second language speaking practice: A cognitive-motivational model of achievement emotions. Discover Computing, 28, 119.

- Wu, H., Zeng, Y., Chen, Z., & Liu, F. (2025a). GenAI competence is different from digital competence: Developing and validating the GenAI competence scale for second language teachers. Education and Information Technologies. Advance online publication.

- Wu, J., Wang, J., Lei, S., Wu, F., & Gao, X. (2025b). The impact of metacognitive scaffolding on deep learning in a GenAI-supported learning environment. Interactive Learning Environments. Advance online publication.

- Yang, C., Li, R., & Yang, L. (2025). Revisiting trends in GenAI-assisted second language writing: Retrospect and prospect. Journal of Educational Computing Research, 63, 1819–1863.

- Zhang, L., Yao, Z., & Moghaddam, A. H. (2025a). Designing GenAI tools for personalized learning implementation: Theoretical analysis and prototype of a multi-agent system. Journal of Teacher Education, 76(3), 280–293.

- Zhang, Y., Lai, C., & Gu, M. M. Y. (2025b). Becoming a teacher in the era of AI: A multiple-case study of pre-service teachers’ investment in AI-facilitated learning-to-teach practices. System, 133, 103746.

- Zhang, Z., Aubrey, S., Huang, X., & Chiu, T. K. F. (2025c). The role of generative AI and hybrid feedback in improving L2 writing skills: A comparative study. Innovation in Language Learning and Teaching. Advance online publication.

- Zhou, M., & Peng, S. (2025). The usage of AI in teaching and students’ creativity: The mediating role of learning engagement and the moderating role of AI literacy. Behavioral Sciences, 15(5), 587.

- Zhou, S. (2024). Gamifying language education: The impact of digital game-based learning on Chinese EFL learners. Humanities and Social Sciences Communications, 11, 1518.

| Group | N (%) | Gender: Female | Gender: Male | Sig a | Age (Years): Mean (SD) | Sig b |
|---|---|---|---|---|---|---|
| Non-digital | 61 (51.3) | 37 (60.7) | 24 (39.3) | 0.084 | 8.59 (0.50) | 0.051 |
| ChatGPT | 58 (48.7) | 26 (44.8) | 32 (55.2) | | 8.76 (0.43) | |
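The footnotes defining "Sig a" and "Sig b" are not reproduced above. As a hedged check, assume "Sig a" is a Pearson chi-square test of gender by group without continuity correction. Under that assumption, the following Python sketch recomputes the test from the cell counts using only the standard library. It gives χ² ≈ 2.99 and p ≈ 0.084, which matches the reported value. It also reproduces the group shares in the N (%) column. The age comparison ("Sig b") is not recomputed, because its test is unspecified and the means and SDs are rounded.

```python
import math

# Gender counts from the participant table: group -> (female, male).
COUNTS = {"Non-digital": (37, 24), "ChatGPT": (26, 32)}


def pearson_chi_square_2x2(table):
    """Pearson chi-square statistic (no continuity correction) and p-value, 1 df."""
    rows = list(table.values())
    row_totals = [sum(row) for row in rows]
    col_totals = [sum(col) for col in zip(*rows)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(rows):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # For 1 degree of freedom, P(X >= chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


chi2, p_value = pearson_chi_square_2x2(COUNTS)
total = sum(sum(row) for row in COUNTS.values())
group_share = {g: round(100 * sum(row) / total, 1) for g, row in COUNTS.items()}
print(f"chi2 = {chi2:.3f}, p = {p_value:.3f}, group shares = {group_share}")
```

Agreement with the reported 0.084 is consistent with this assumption but does not confirm which test the authors ran.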

| Lesson | Topic | Non-Digital Group | ChatGPT Group |
|---|---|---|---|
| 1 | Introductory Activity | Group circle game with flashcards: Pupils pass cards and practice common English phrases in pairs, with teacher-led corrections. | Individual spy training: GenAI provides phrases, prompts repetition, and offers feedback on grammar and speaking. |
| 2 | Family Members | Board game with dice: Pupils roll to name family roles and describe them using simple sentences, with group verification. | Family adventure quest: GenAI describes a family scenario in English, asks the pupil to respond with related phrases, and encourages speaking aloud with correct verb forms. |
| 3 | Daily Routines | Flashcard matching: Pupils match pictures to routine verbs, then role-play in small groups with teacher guidance. | Time traveler game: GenAI narrates a daily routine in English, prompts the pupil to echo it and add their own, and checks grammar in responses. |
| 4 | Hobbies and Activities | Charades: Pupils act out hobbies non-verbally; others guess and form sentences like “I like playing soccer.” | Hobby explorer challenge: GenAI suggests an activity phrase in English, urges repetition with enthusiasm, and evaluates sentence structure. |
| 5 | Food and Meals | Memory card game: Pupils flip cards to match foods and discuss preferences in pairs, using basic grammar. | Chef competition: GenAI proposes a meal description in English, asks the pupil to repeat it and suggest additions, and provides feedback on word order. |
| 6 | Places in Town | Map drawing relay: Groups draw town places and label them, then describe directions orally. | City detective puzzle: GenAI gives location clues in English, prompts the pupil to respond with directions, corrects grammar, and encourages clear speaking. |
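Only the lesson 1 prompt is given in full (Appendix A). The following Python sketch illustrates how the same sentence pattern could be adapted to the other ChatGPT-group games in the table. It fills a template with each game's name, the chatbot's opening move, and the pupil's task. The wording for lessons 2 to 6 is a hypothetical paraphrase of the table entries, not the prompts the pre-service teachers actually used. The class and function names are also illustrative. The template produces English text only, whereas Appendix A also shows a Russian version.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenAIGame:
    """One ChatGPT-group activity, paraphrased from the lesson-plan table (hypothetical wording)."""
    lesson: int
    game: str    # name used after "let's play"
    opener: str  # what the chatbot does first in each round
    task: str    # what the pupil is asked to do
    unit: str    # what the chatbot gives "another" of


LESSONS = [
    GenAIGame(1, "spy training", "tell me a phrase in English", "repeat it", "phrase"),
    GenAIGame(2, "a family adventure quest", "describe a family scenario in English",
              "respond with related phrases using the correct verb forms", "scenario"),
    GenAIGame(3, "a time traveler game", "narrate a daily routine in English",
              "echo it and add my own", "routine"),
    GenAIGame(4, "a hobby explorer challenge", "suggest an activity phrase in English",
              "repeat it with enthusiasm", "phrase"),
    GenAIGame(5, "a chef competition", "propose a meal description in English",
              "repeat it and suggest additions", "meal description"),
    GenAIGame(6, "a city detective puzzle", "give me a location clue in English",
              "respond with directions", "clue"),
]


def build_prompt(g: GenAIGame) -> str:
    """Fill the Appendix A sentence pattern with one game's details."""
    return (
        "I am a third-grader studying English; "
        f"let's play {g.game}: {g.opener}, ask me to {g.task} "
        f"and correct me if I say it wrong; then give another {g.unit}; repeat the cycle."
    )


for lesson in LESSONS:
    print(lesson.lesson, build_prompt(lesson))
```

By construction, the lesson 1 output reproduces the English text of the Appendix A prompt.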

| Variable | Term | Estimate | SE | t | p | R² (marginal) | R² (conditional) |
|---|---|---|---|---|---|---|---|
| Grammar | Intercept | 9.918 | 0.475 | 20.889 | 0.001 | 0.085 | 0.094 |
| | Group × Time (T1–T2) | −0.006 | 0.967 | −0.006 | 0.995 | 0.085 | 0.094 |
| | Group × Time (T1–T3) | 0.53 | 0.957 | 0.554 | 0.58 | 0.085 | 0.094 |
| Listening | Intercept | 7.033 | 0.306 | 23.008 | 0.001 | 0.1 | 0.1 |
| | Group × Time (T1–T2) | 1.525 | 0.619 | 2.462 | 0.015 | 0.1 | 0.1 |
| | Group × Time (T1–T3) | 0.484 | 0.619 | 0.781 | 0.436 | 0.1 | 0.1 |
| Pronunciation | Intercept | 18.984 | 0.424 | 44.82 | 0.001 | 0.207 | 0.207 |
| | Group × Time (T1–T2) | 2.241 | 0.858 | 2.612 | 0.01 | 0.207 | 0.207 |
| | Group × Time (T1–T3) | 0.975 | 0.858 | 1.137 | 0.257 | 0.207 | 0.207 |
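Each t value in the table should equal Estimate / SE, so the table can be checked for transcription errors without the raw data. The following Python sketch performs that check. It also flags which Group × Time terms fall below p = 0.05, using a normal approximation to the t distribution. That approximation is an assumption made here because the degrees of freedom are not reported. Its p-values can differ from the reported ones in the third decimal, but it identifies the same two significant terms: listening and pronunciation, T1–T2. Assuming the non-digital group and T1 are the reference levels, each interaction estimate reads as the ChatGPT group's additional change over that interval. For example, the listening T1–T2 term corresponds to about 1.5 more points.

```python
import math

# (variable, term, estimate, SE, reported t) copied from the mixed-model table.
ROWS = [
    ("Grammar", "Intercept", 9.918, 0.475, 20.889),
    ("Grammar", "Group x Time (T1-T2)", -0.006, 0.967, -0.006),
    ("Grammar", "Group x Time (T1-T3)", 0.53, 0.957, 0.554),
    ("Listening", "Intercept", 7.033, 0.306, 23.008),
    ("Listening", "Group x Time (T1-T2)", 1.525, 0.619, 2.462),
    ("Listening", "Group x Time (T1-T3)", 0.484, 0.619, 0.781),
    ("Pronunciation", "Intercept", 18.984, 0.424, 44.82),
    ("Pronunciation", "Group x Time (T1-T2)", 2.241, 0.858, 2.612),
    ("Pronunciation", "Group x Time (T1-T3)", 0.975, 0.858, 1.137),
]


def t_mismatches(rows, rel_tol=0.01):
    """Rows whose Estimate / SE disagrees with the reported t by more than rounding allows."""
    bad = []
    for variable, term, estimate, se, t_reported in rows:
        t_recomputed = estimate / se
        if abs(t_recomputed - t_reported) > rel_tol * max(1.0, abs(t_reported)):
            bad.append((variable, term, round(t_recomputed, 3), t_reported))
    return bad


def normal_two_sided_p(t):
    """Large-sample two-sided p-value, treating t as a standard normal z."""
    return math.erfc(abs(t) / math.sqrt(2))


interactions_below_05 = [
    (variable, term)
    for variable, term, _, _, t in ROWS
    if term != "Intercept" and normal_two_sided_p(t) < 0.05
]
print("t mismatches:", t_mismatches(ROWS))
print("interactions with p < .05:", interactions_below_05)
```

The check does not refit the mixed models. It only confirms that the reported estimates, standard errors, and t values are mutually consistent.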

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Raimkulova, A.; Ybyraimzhanov, K.; Halmatov, M.; Mailybayeva, G.; Khaimuldanov, Y. Pre-Service EFL Primary Teachers Adopting GenAI-Powered Game-Based Instruction: A Practicum Intervention. Educ. Sci. 2025, 15, 1326. https://doi.org/10.3390/educsci15101326

Raimkulova A, Ybyraimzhanov K, Halmatov M, Mailybayeva G, Khaimuldanov Y. Pre-Service EFL Primary Teachers Adopting GenAI-Powered Game-Based Instruction: A Practicum Intervention. Education Sciences. 2025; 15(10):1326. https://doi.org/10.3390/educsci15101326

Chicago/Turabian Style: Raimkulova, Akbota, Kalibek Ybyraimzhanov, Medera Halmatov, Gulmira Mailybayeva, and Yerlan Khaimuldanov. 2025. "Pre-Service EFL Primary Teachers Adopting GenAI-Powered Game-Based Instruction: A Practicum Intervention" Education Sciences 15, no. 10: 1326. https://doi.org/10.3390/educsci15101326

APA Style: Raimkulova, A., Ybyraimzhanov, K., Halmatov, M., Mailybayeva, G., & Khaimuldanov, Y. (2025). Pre-Service EFL Primary Teachers Adopting GenAI-Powered Game-Based Instruction: A Practicum Intervention. Education Sciences, 15(10), 1326. https://doi.org/10.3390/educsci15101326