Abstract

To date, the primary challenge in the field of rehabilitative interventions for autism mediated by information and communication technologies is the lack of evidence regarding their efficacy and effectiveness. Although such interventions, particularly those realised with Immersive Virtual Reality-based Serious Games, show promise, clinicians are hesitant to adopt them because of the minimal evidence supporting their efficacy and effectiveness. Efficacy refers to whether an intervention produces the expected result under ideal circumstances, while effectiveness measures the degree of beneficial effect in real-world clinical settings. The absence of efficacy and effectiveness evidence undermines the reliability and generalisability of such interventions, which are crucial in real-life settings, making accurate evaluation pivotal. However, evaluating the efficacy and effectiveness of these interventions is challenging because no evaluation guidelines exist. A previous study systematically reviewed the evaluation of Immersive Virtual Reality-based Serious Games for autism, revealing incomplete or methodologically problematic evaluation processes. This evidence underpinned the aim of the present study: to propose an Evaluation Framework encompassing all the methodological criteria necessary for evaluating the efficacy and effectiveness of such interventions. Disseminating this Evaluation Framework as a pocket guide could facilitate the development of reliable future studies, thereby advancing evidence-based interventions that improve the quality of life of individuals with autism.

1. Introduction

Nowadays, in the field of rehabilitative interventions (henceforth, intervention/s) for individuals with Autism Spectrum Disorders (ASD), the most promising interventions are widely considered to be those realised with Information and Communication Technologies (ICT), e.g., robots, videos, tablet/mobile applications, and virtual reality [1,2,3,4,5,6,7,8,9,10,11].

The main reason is linked to specific characteristics of individuals with ASD, such as their natural affinity for Science, Technology, Engineering, and Mathematics (STEM) [12,13,14]. Indeed, computers and technologies are comfortable for them, since they are generally predictable, consistent, free from social demands, and able to support focused attention [6,15,16,17]. Moreover, it is widely recognised that individuals with ASD have a strong visual memory and learn better when presented with visual–spatial information, which technologies can easily provide, given their visually stimulating nature [6,18,19,20,21,22].

Among ICT-based interventions, researchers agree on the potential of Immersive Virtual Reality (IVR) [6,23,24] since, among other advantages, it provides an optimal level of immersion for individuals with ASD, which fosters their involvement [6]. Moreover, IVR combined with Serious Games (SGs) learning strategies appears to be the most promising means of realising an intervention for individuals with ASD (e.g., [8,25,26,27,28,29,30]).

Nevertheless, evidence of the efficacy and effectiveness of such interventions, in scientific studies and in clinical application respectively, is minimal (e.g., [26,31,32,33,34]). Efficacy (explanatory trials) determines whether an intervention produces the expected result under ideal circumstances [35,36]. Effectiveness (pragmatic trials), in contrast, measures the degree of beneficial effect under real-world clinical settings [35,36]. Demonstrating both efficacy and effectiveness is highly complex and requires a rigorous evaluation process [37,38].

Accordingly, a previous study [22] systematically reviewed how IVR-based SGs for ASD are evaluated, providing an overview of the current state of the art regarding the evaluation process of such interventions. The results are concerning, revealing incomplete or methodologically problematic evaluation processes. Specifically, they highlighted several methodological issues related to evaluating the efficacy and effectiveness of IVR-based SG interventions for ASD.

Beyond the specific outcomes, the key insight is that these methodological issues undermine the possibility of demonstrating that IVR-based SG interventions are durable, adaptable to different contexts, and consistent across the heterogeneity of individuals with ASD [39]. As a result, the reliability of the intervention results is compromised [39,40,41]. Reliability refers to the reproducibility of a measurement (e.g., of an intervention's outcome) when it is repeated at random in the same subject or sample [40,42]. In the context of clinical research, reliability indicates the extent to which a study's results are replicable and stable over time, under similar conditions, and with different researchers [43]. Ensuring the reliability of clinical research is crucial for establishing the efficacy and effectiveness of study outcomes, which, in turn, influences their reproducibility and generalisability [44,45].

However, the lack of reproducibility and generalisability of intervention results still seems to be overlooked, partly because studies rarely report on the reliability of their results [39,40,44]. Yet, especially in clinical practice, the efficacy, effectiveness, and generalisability of interventions in real-life settings are paramount, making their accurate evaluation crucial [44,45].

The situation is even more pressing for ASD, where efficacious and effective interventions are urgently needed for at least three reasons:

- (i) The peculiar characteristics of the disorder: ASD is considered not only a disability but also an example of human neurological variation (neurodiversity) that defines the identity of a person's cognitive assets and challenges [46]; moreover, ASD can significantly impact quality of life, leading to social isolation, employment difficulties, and mental health issues for individuals and their families [47,48];

- (ii) The unprecedented rise in the prevalence of ASD over the past decade: the Centers for Disease Control and Prevention estimates that in the USA, 1 in 68 children is identified with ASD [49,50,51,52,53], while in Europe, the prevalence was below 1 in 100 [54];

- (iii) The high economic costs of ASD, with lifetime support costs estimated at $2.4 million in the USA and £1.5 million in the UK [55] and, in Europe, approximately €2,834 per child over two months, including health services and societal expenses [56].

Therefore, interventions that are merely promising, with little or no evidence of efficacy and effectiveness, are not appropriate, and clinicians consequently do not adopt them [54,56,57]. Moreover, even when a rigorous study (e.g., a randomised controlled trial) demonstrates the efficacy of an intervention, its effectiveness in real-life settings is not guaranteed [58,59]. An intervention must therefore be both efficacious and effective.

To be efficacious and effective, an intervention must be evidence-based, which ensures that its clinical and scientific reliability has been evaluated and defined according to standard criteria [40,42,44]. A rigorous evaluation process is the gold standard for demonstrating that an intervention is evidence-based [45,60].

Achieving this goal is currently difficult, since there are no evaluation guidelines for avoiding methodological issues or dealing with them satisfactorily. A detailed examination of the methodological issues that emerged from a previous systematic review [22], together with a study of recent literature on this topic (e.g., [61,62,63,64,65,66,67,68]), led us to identify methodological criteria for avoiding these issues in future evaluation processes. The term criterion (plural, criteria) refers to any element that allows a methodologically controlled study to be conducted [60,69]. If adequately applied, these criteria determine whether interventions are efficacious, effective, and ready for dissemination [69].

The present study moves in this direction by proposing an Evaluation Framework, in the form of a pocket guide, for evaluating the efficacy and effectiveness of IVR-based SG interventions for ASD. The framework encompasses all the methodological criteria necessary to avoid methodological issues, or to deal with them satisfactorily, during the evaluation process. It goes further by providing practical guidance on how these issues should be addressed and, consequently, how to meet all the methodological criteria effectively.

The paper is organised as follows: Section 2 describes the methodology (Section 2.1) of the systematic review by Peretti et al. [22] and its outcomes (Section 2.2), from which the Evaluation Framework proposed in this study was partially derived. Section 3 presents the results and research implications of this study: Section 3.1 reports the transformation of methodological issues into methodological criteria, Section 3.2 presents the Evaluation Framework as a pocket guide designed to promote usability, and Section 3.3 provides a practical example showing how to use the Evaluation Framework in real applications. Finally, Section 4 draws conclusions and outlines future work.

2. Background

2.1. Research Method

This section reports the rigorous systematic review informed by [70] and detailed in [22]. The Research Question (RQ) that guided this systematic review is: How are interventions realised with Immersive Virtual Reality-based Serious Games for individuals with autism evaluated? To answer this RQ, Kitchenham’s protocol [70] was applied; it consists of three main phases: planning, conducting, and reporting the review. The planning phase is the preliminary stage required to justify the need for a systematic review addressing the RQ. Once this need had been demonstrated, the other two phases, conducting and reporting, were carried out. A detailed examination of the planning phase is provided in Section 2 (Background) of Peretti et al. [22]. For the aim of the present study, the conducting and reporting phases of the rigorous method of [70] are reported here, with all their steps. For the reader’s convenience, these steps are organised as subsections: Data Sources and Search Strategies (Section 2.1.1), Study Selection and Quality Assessment (Section 2.1.2), Data Extraction (Section 2.1.3), and Data Synthesis (Section 2.1.4).

To guarantee the transparency, reliability, and replicability of the systematic review, the detailed description of the research methods adopted, as well as the documentation of the entire study selection and quality assessment process, is available in the repository [71].

2.1.1. Data Sources and Search Strategies

One of the main goals of a systematic review is to find as many research articles as possible that are meaningful for answering the defined RQ (i.e., “How are interventions realised with Immersive Virtual Reality-based Serious Games for individuals with autism evaluated?”), using an unbiased search strategy such as the one recommended by [70]. For this systematic review, the search strategy includes the search strings and the resources to be searched in order to identify research articles dealing with IVR-based SGs developed as interventions for individuals with ASD.

Specifically, the search string was formulated using a list of relevant terms and their alternative forms, reported in Table 1. These alternative terms were obtained by considering the most frequent ones appearing in the 23 reviews analysed in Section 2 (Background) of [22] (e.g., [6,26,28,31,72]). In detail, the terms “autism” and “ASD” were included as alternatives to “Autism Spectrum Disorder”. Similarly, “immersive virtual environment” was included as an alternative to “Immersive Virtual Reality”. In addition, the most popular IVR technologies, i.e., “HMD” and “CAVE”, were also considered as search terms. Then, in agreement with other reviews in this field (e.g., [18,72]), the term “Virtual Reality” was also included, since it is often used with a broad conceptualisation that does not refer to the level of immersion provided by the adopted technology. Finally, the term “Educational Game” was included as an alternative to “Serious Game”, since it refers to a subset of serious games that have an educational goal [26,73].

Table 1.

List of search terms of the systematic review reported in Peretti et al. [22]. The three main terms are in bold in the first row, and their alternative forms are in their respective columns.

Consequently, the search string has been formulated as follows, using Boolean operators AND and OR:

(“autism spectrum disorder” OR “autism” OR “ASD”) AND (“immersive virtual reality” OR “immersive virtual environment” OR “virtual reality” OR “HMD” OR “CAVE”) AND (“serious game” OR “educational game”)
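As an illustration only, the following Python sketch shows how a Boolean string of this shape can be assembled from the term groups of Table 1: synonyms within a group are joined with OR, and the groups are joined with AND. The `TERM_GROUPS` list and both function names are hypothetical helpers introduced here, not part of the review protocol; the keyword-only fallback is an assumed way of mirroring the adaptation used for Semantic Scholar, which does not support Boolean operators.

```python
# Hypothetical encoding of Table 1: each inner list holds one main term and its alternatives.
TERM_GROUPS = [
    ["autism spectrum disorder", "autism", "ASD"],
    ["immersive virtual reality", "immersive virtual environment",
     "virtual reality", "HMD", "CAVE"],
    ["serious game", "educational game"],
]


def build_boolean_query(groups: list[list[str]]) -> str:
    """OR the quoted terms inside each group, then AND the groups together."""
    clauses = ["(" + " OR ".join(f'"{term}"' for term in group) + ")" for group in groups]
    return " AND ".join(clauses)


def build_keyword_query(main_terms: list[str]) -> str:
    """Plain keyword fallback for resources without Boolean support (assumption)."""
    return " ".join(main_terms)


if __name__ == "__main__":
    print(build_boolean_query(TERM_GROUPS))
    print(build_keyword_query(["Autism", "Immersive Virtual Reality", "Serious Game"]))
```

Keeping the term groups as data rather than as a hand-typed string makes it easier to adapt the same query to each digital resource's syntax.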

The resources to be searched through the formulated search string are the following eight:

- Scopus (https://www.scopus.com, accessed on 2 April 2024)

- ACM digital library (https://dl.acm.org, accessed on 2 April 2024)

- IEEE Xplore Digital Library (https://ieeexplore.ieee.org, accessed on 2 April 2024)

- Science Direct (https://www.sciencedirect.com, accessed on 2 April 2024)

- Web of Science (http://apps.webofknowledge.com, accessed on 2 April 2024)

- PubMed (https://pubmed.ncbi.nlm.nih.gov, accessed on 2 April 2024)

- Semantic Scholar (https://www.semanticscholar.org, accessed on 2 April 2024)

- Google Scholar (https://scholar.google.com, accessed on 2 April 2024)

These resources were selected because they are regularly used by other reviews in this field (e.g., [6,26,28,31,72]), as well as by systematic reviews in general (see, e.g., [74,75]). This list provided access to an extensive collection of relevant sources, covering computer science conferences and journals (e.g., International Journal of Human-Computer Interaction, Journal of Computer Assisted Learning, Interactive Learning Environments) as well as health conferences and journals (e.g., Autism Research, Journal of Autism and Developmental Disorders). All searches were conducted in August 2021 through the access to the selected digital resources provided by the authors’ institution.

2.1.2. Study Selection and Quality Assessment

According to [70], once potentially relevant research articles have been found, they must be assessed and selected according to their relevance to the RQ, which is established through a set of selection factors. To improve the quality assessment, the systematic review reported in [22] adopted a set of selection factors more detailed than those provided by [70]. Table 2 and Table 3 list the selection factors, defined as inclusion and exclusion factors: to be included in the systematic review, a research article must meet all the inclusion factors, whereas an article meeting at least one of the exclusion factors is excluded.

Table 2.

Inclusion factors of the systematic review reported in [22].

Table 3.

Exclusion factors of the systematic review reported in [22].

Inclusion factors IN1 to IN4 and exclusion factors EX1 to EX5 relate to more general scientific considerations. For example, including only research articles published after 2009 (IN1) ensures that the results deal with the current generation of IVR technology, in agreement with other reviews in the same field (e.g., [31]). Including only research articles written in English (IN2) is intended to ensure the highest possible quality of results, since English is considered the universal language of science [76,77,78]. Likewise, excluding research articles whose full text is not available (EX5) (e.g., only the abstract or title appears online) is necessary to ensure that the research articles used in the systematic review reported in [22] provide sufficient and consistent data.

On the other hand, inclusion factors IN5 to IN7 and exclusion factors EX6 to EX12 derive directly from the RQ addressed by the systematic review reported in [22]. For instance, factor IN5 ensures that research articles focus only on interventions for the rehabilitation of individuals with ASD, and factor IN6 ensures that these interventions are realised as IVR-based SGs. Likewise, excluding all research articles unrelated to the topics of the systematic review (EX6) ensures a focus on research articles relevant to the defined RQ (e.g., by excluding research articles that are clearly outside the scope of the review based on their title and abstract). Excluding research articles presenting interventions not realised with IVR (EX11) ensures the exclusion of interventions based on other ICTs, such as non-immersive VR technology (i.e., desktop-based systems), robots, mobile technologies (e.g., smartphones, tablets), tangible user interfaces, and off-the-shelf commercial video game consoles (e.g., Nintendo Wii).
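The selection rule described for Tables 2 and 3 (an article is retained only if it meets every inclusion factor and none of the exclusion factors) can be expressed as a simple predicate. The Python sketch below is illustrative: the `Article` fields (`year`, `language`, `full_text_available`) are hypothetical, and only three factors whose meaning is stated in the text (IN1, IN2, EX5) are encoded; the remaining factors would be added in the same way.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Article:
    # Hypothetical record fields; the review's actual extraction sheet may differ.
    title: str
    year: int
    language: str
    full_text_available: bool


Factor = Callable[[Article], bool]

# Inclusion factors: every one must hold for the article to be retained.
INCLUSION: dict[str, Factor] = {
    "IN1": lambda a: a.year > 2009,            # published after 2009
    "IN2": lambda a: a.language == "English",  # written in English
}

# Exclusion factors: any one that holds removes the article.
EXCLUSION: dict[str, Factor] = {
    "EX5": lambda a: not a.full_text_available,  # full text not available
}


def is_selected(article: Article) -> bool:
    meets_all_inclusion = all(f(article) for f in INCLUSION.values())
    meets_any_exclusion = any(f(article) for f in EXCLUSION.values())
    return meets_all_inclusion and not meets_any_exclusion


def failed_factors(article: Article) -> list[str]:
    """IDs of the factors that caused rejection, useful for an audit trail."""
    failed = [k for k, f in INCLUSION.items() if not f(article)]
    failed += [k for k, f in EXCLUSION.items() if f(article)]
    return failed
```

For example, an English article from 2008 without an available full text is rejected, and `failed_factors` reports `["IN1", "EX5"]`. Recording which factor triggered each rejection supports the kind of documented, replicable selection process the review aims for.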

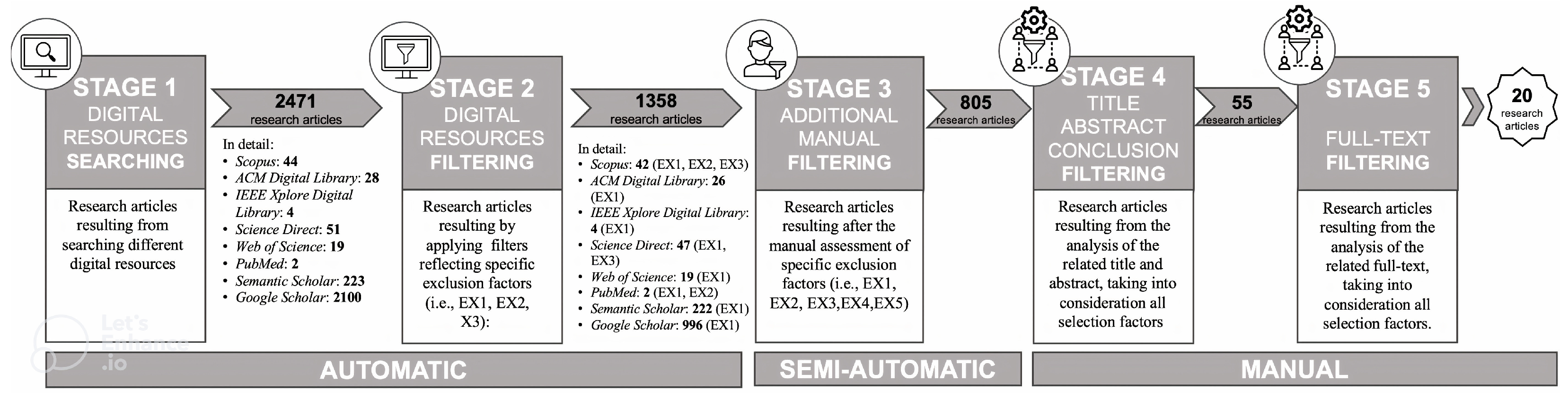

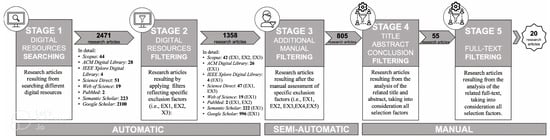

Figure 1 summarises and visualises the five stages underpinning the study selection and quality assessment. Whenever possible, stage outputs are associated with the digital sources from which they were derived (see the outputs of stages 1 and 2 in Figure 1). For each stage, the upper part of the box reports the actions performed and the icons representing them, while the lower part reports the specific inclusion and exclusion factors applied. The five stages are detailed below:

Figure 1.

Study Selection and Quality Assessment process conducted within the systematic review reported in [22].

- Stage 1: Digital Resource Searching. The search string was applied to the digital resources. For Semantic Scholar only, the search string reported in the previous subsection was reduced to the keywords “Autism”, “Immersive Virtual Reality”, and “Serious Game”, since this AI-powered digital resource does not support Boolean operators.

- Stage 2: Digital Resource Filtering. Filters were applied to the output of stage 1. The filters reflected the exclusion factors of Table 3, for example, publication year (EX1) or language (EX2). Selection factors were applied according to the functionalities of each digital resource (for details, see the specifications under the arrow between stage 2 and stage 3 in Figure 1).

- Stage 3: Additional Semi-Automatic Filtering. The research articles obtained as output from stage 2 were collected in a single spreadsheet, reporting for each the list of authors, title, year of publication, and source (e.g., the name of the journal or conference proceedings in which it was published). Missing information (e.g., sources) was retrieved manually and added to the spreadsheet. Since many digital libraries do not provide automatic filters for all the exclusion factors listed in Table 3, these factors were applied semi-automatically in this stage. For example, many research articles that survived stage 2 were archived twice or even three times; in stage 3, these duplicates were removed (according to EX4). Furthermore, research articles not published in peer-reviewed journals or conference proceedings, as well as reviews or similar contributions, were semi-automatically excluded (according to EX3) by analysing the titles and sources of the retrieved research articles. Additionally, in this stage, the corresponding authors of research articles whose full text was not available were contacted (according to EX5).

- Stage 4: Title, Abstract, and Conclusion Filtering. The 805 research articles that survived stage 3 were randomly divided into two sets of 402 and 403 research articles (denoted as StA and StB). A manual filter was applied to these two sets by analysing titles, abstracts, and conclusions. To guarantee the high quality of this manual filter, two pairs of experts (denoted as Cp1 and Cp2) analysed the two sets using a cross-referenced procedure. At the end of this stage, 55 research articles survived. Cohen’s kappa statistic was computed [79] to assess the reliability of the inclusion decisions [70]: the resulting value (κ = 0.90) reflected 98% agreement between the experts (Cp1 and Cp2) on the inclusion of the 55 surviving research articles.

- Stage 5: Full-text Filtering. The 55 remaining research articles were randomly divided into two new sets of 27 and 28 research articles (denoted as StC and StD). Cp1 and Cp2 applied an additional manual filter by analysing the full texts of the research articles using a cross-referenced procedure.
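Two mechanical steps in stages 3 to 5, duplicate removal (EX4) and the random division of the surviving articles into two near-equal sets, can be sketched in Python as follows. This is a hypothetical illustration: the review does not state how duplicates were matched, so matching on a normalised title is an assumption here, as is the use of a fixed random seed for reproducibility.

```python
import random


def normalise_title(title: str) -> str:
    """Lower-case and collapse whitespace so trivially different copies match."""
    return " ".join(title.lower().split())


def remove_duplicates(records: list[dict]) -> list[dict]:
    """Keep the first occurrence of each normalised title (assumed duplicate key)."""
    seen: set[str] = set()
    unique = []
    for record in records:
        key = normalise_title(record["title"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def random_split(items: list, seed: int) -> tuple[list, list]:
    """Shuffle a copy of the items and cut it into two sets differing in size by at most one."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]
```

With 805 items, `random_split` yields sets of 402 and 403, and with 55 items, sets of 27 and 28, which matches the sizes of StA/StB and StC/StD. Fixing the seed lets another team regenerate exactly the same partition.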

The output of the entire study selection consists of 20 research articles dealing with the evaluation of IVR-based SGs developed as rehabilitative interventions for individuals with ASD. Cohen’s kappa computed on the experts’ agreement that led to the inclusion of these 20 research articles equals 1, i.e., 100% agreement. This is because, at the end of the search of the selected digital resources (in August 2021), only 20 research articles met all the inclusion factors fixed in the systematic review. Therefore, given the small number, all 20 articles were included, regardless of their individual quality. The Quality Assessment of these 20 articles is detailed in [22].
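Cohen’s kappa corrects the observed proportion of agreement p_o for the agreement p_e expected by chance from each rater’s marginal frequencies: κ = (p_o - p_e) / (1 - p_e). For example, if the 98% reported for stage 4 is read as observed agreement, κ = 0.90 corresponds to a chance agreement of p_e = 0.80, since (0.98 - 0.80) / (1 - 0.80) = 0.90. The Python sketch below is a generic textbook implementation for two raters’ include/exclude decisions, not the tool used in [22].

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions, summed over labels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    p_e = sum((counts_a[k] / n) * (counts_b[k] / n) for k in labels)
    if p_e == 1.0:
        # Both raters used one identical label throughout, so agreement is perfect.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    a = ["include", "include", "exclude", "exclude"]
    b = ["include", "exclude", "exclude", "exclude"]
    print(cohens_kappa(a, b))
```

In this toy example, the raters agree on 3 of 4 items (p_o = 0.75), chance agreement is 0.5, and the script prints 0.5, showing how kappa can be much lower than raw percentage agreement.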

2.1.3. Data Extraction

Data extraction forms were designed to accurately record the information, extracted from the selected research articles, needed to address the RQ. The forms were implemented as spreadsheets. The contents of the data extraction forms used in the systematic review of Peretti et al. [22], including standard general data about the research articles, are detailed in [71]. Data extraction was performed independently by all the experts conducting the systematic review reported in [22]. The extracted data were then compared, and disagreements were resolved by consensus among the researchers, yielding a single spreadsheet for each selected research article.

2.1.4. Data Synthesis

All the data extracted from the 20 selected research articles were analysed by frequency analysis. The findings, detailed in Section 2.2, are accompanied by an analysis table (see Table 4) and a synthesis table (see Table 5).
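The frequency analysis behind the “% Coverage” row of Table 4 amounts to counting, for each issue or sub-issue, the share of reviewed articles that address it. In the hypothetical Python sketch below, each article is represented by the set of issue IDs it addresses; the toy data are invented for illustration and do not reproduce Table 4.

```python
def coverage(articles: list[set[str]], issue_ids: list[str]) -> dict[str, int]:
    """Rounded percentage of articles addressing each issue or sub-issue."""
    n = len(articles)
    if n == 0:
        raise ValueError("no articles to analyse")
    return {
        issue: round(100 * sum(issue in addressed for addressed in articles) / n)
        for issue in issue_ids
    }


if __name__ == "__main__":
    # Invented toy data: four articles, each listing the issue IDs it addresses.
    toy = [{"I1", "sI2b"}, {"sI2b"}, {"I1", "sI2b", "I6"}, set()]
    print(coverage(toy, ["I1", "sI2b", "sI2c", "I6"]))
```

With 20 articles, each article contributes 5 percentage points, so 8 articles addressing an issue correspond to 40% coverage, as reported for I1.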

2.1.5. Limitations

The systematic review by Peretti et al. [22] has limitations, which we report for transparency. First, the review was limited to research articles in English, so some relevant research articles may have been omitted; although the authors do not expect this to significantly affect the findings, it could limit generalisability. Second, although eight digital resources were searched, not every research database (e.g., IGI Global) was checked, so some research articles may not have been captured and analysed. Finally, the search was confined to peer-reviewed publications, including journals and conference proceedings; consequently, research articles published in other outlets were not retrieved and analysed.

2.2. Systematic Review Outcomes

In this section, the outcomes obtained from the analysis performed on the 20 research articles (their references are listed in the first column of Table 4) reviewed in Peretti et al. [22] are discussed. This analysis led to the emergence of several methodological issues and related methodological sub-issues about how IVR-based SG interventions for ASD individuals are evaluated.

Table 4 details the analysis conducted on the 20 research articles; empty cells indicate that a particular methodological issue or sub-issue was not addressed by the corresponding research article.

The results are broken down as follows:

- I1: Multidisciplinary team. The analysis showed that 40% of the 20 research articles (8 of them) involved a multidisciplinary team that collaborated on design, development, and evaluation. However, none of them specified the role of each team member.

- I2: Sample characteristics. This issue involves seven sub-issues:

    - sI2a: Sample size. Only 10% of the 20 research articles (2 of them) had a sample size sufficient to ensure minimum generalisability of the results (more than 10 participants).

    - sI2b: Age of participants. 75% of the 20 research articles (14 of them) specified the age of the participants.

    - sI2c: Ratio M:F. None of the 20 research articles (0%) addressed the male-to-female ratio of the sample.

    - sI2d: ASD as the control group. Only 20% of the 20 research articles (4 of them) used individuals with ASD as a control group.

    - sI2e: With or without intellectual disability. 50% of the 20 research articles (10 of them) specified this cognitive characteristic of the sample; of these, 80% included individuals without intellectual disability and the remaining 20% included individuals with intellectual disability.

    - sI2f: Level of severity according to the DSM-5. Only 10% of the 20 research articles (2 of them) specified this information.

    - sI2g: Exclusion or inclusion criteria. Only 10% of the 20 research articles (2 of them) specified these criteria.

- I3: Experimental design. This issue involves three sub-issues:

    - sI3a: Statistical design. 75% of the 20 research articles (15 of them) specified the type of statistical design.

    - sI3b: Testing methods for psychological variables. 35% of the 20 research articles (7 of them) specified the testing methods used for psychological variables.

    - sI3c: Testing methods for technological measures. 40% of the 20 research articles (8 of them) specified the testing methods used for technological measures.

- I4: Intervention. This issue involves five sub-issues:

    - sI4a: Level of immersion. None of the 20 research articles presents this sub-issue, since all of them (100%) specified the level of immersion. However, none of them examined the correlation between the level of immersion and the outcome of the proposed intervention.

    - sI4b: Kind of ability. None of the 20 research articles presents this sub-issue, since all of them (100%) specified the kind of ability addressed. However, none of them used a classifier to define it. In the systematic review [22], the classification of the American Association on Intellectual and Developmental Disabilities [80] was used, allowing a more detailed analysis of the kind of ability addressed by the 20 research articles: 45% addressed social skills, 50% conceptual skills, and 30% practical skills, with 25% covering two types of skills, such as social and conceptual skills.

    - sI4c: Engagement. 35% of the 20 research articles (7 of them) considered this sub-issue.

    - sI4d: Acceptability. 35% of the 20 research articles (7 of them) considered this sub-issue.

    - sI4e: Usability. 20% of the 20 research articles (4 of them) considered this sub-issue.

- I5: Level of aversion or negative effects of IVR technology. 15% of the 20 research articles (3 of them) reported the presence of these effects.

- I6: Ethical aspects. Only 40% of the 20 research articles (exactly 8 of them) disclosed that their study had been approved by an ethics committee. This result is worrisome, since this criterion would be expected to reach 100% in order to safeguard both the individuals with ASD and the research team.

Table 4.

Analysis of included research articles in the systematic review of Peretti et al. [22].


| Issues and Sub-Issues | |||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

I1 (Multidisc. Team) | I2 (Sample Characteristics) |

I3 (Experimental Design) | I4 (Intervention) | I5 (Level of Aversion or Negative Effect) | I6 (Ethical Aspects) | ||||||||||||||

| sI2a | sI2b | sI2c | sI2d | sI2e | sI2f | sI2g | sI3a | sI3b | sI3c | sI4a | sI4b | sI4c | sI4d | sI4e | |||||

| References | [81] | x | x | x | x | x | x | x | x | x | |||||||||

| [82] | x | x | x | x | x | x | x | x | |||||||||||

| [83] | x | x | x | x | x | x | x | x | |||||||||||

| [84] | x | x | x | x | x | x | x | x | |||||||||||

| [85] | x | x | x | x | x | x | x | x | |||||||||||

| [86] | x | x | x | x | x | ||||||||||||||

| [87] | x | x | x | ||||||||||||||||

| [88] | x | x | x | x | x | x | x | x | |||||||||||

| [89] | x | x | x | x | x | x | x | x | x | x | |||||||||

| [90] | x | x | x | x | x | x | x | x | |||||||||||

| [91] | x | x | x | x | x | x | x | ||||||||||||

| [92] | x | x | x | x | x | x | x | ||||||||||||

| [93] | x | x | x | x | x | x | x | x | x | ||||||||||

| [94] | x | x | x | x | x | x | x | x | |||||||||||

| [95] | x | x | x | ||||||||||||||||

| [96] | x | x | |||||||||||||||||

| [97] | x | x | x | x | x | x | x | x | x | ||||||||||

| [98] | x | x | x | x | x | x | x | x | |||||||||||

| [99] | x | x | x | x | x | ||||||||||||||

| [100] | x | x | x | x | x | x | x | x | x | ||||||||||

| % Coverage | 40% | 10% | 75% | 0% | 20% | 50% | 10% | 10% | 75% | 35% | 40% | 20% | 20% | 35% | 35% | 20% | 15% | 40% | |

Table 5 summarises these six key methodological issues (I) and their sub-issues (sI), providing insight into the state of the art. For each issue and related sub-issue, the table also highlights which aspects require further investigation and which necessary elements are entirely missing from current evaluation practices.

Table 5.

State-of-the-art related methodological issues and sub-issues that emerged as outcomes of the systematic review in Peretti et al. [22].


| ID | Methodological Issue | Methodological Sub-Issue | Existing | To Add |
|---|---|---|---|---|
| I1 | Multidisciplinary Team | | x | x* |
| I2 | Sample Characteristics | | | |
| sI2a | | Sample Size | x | x* |
| sI2b | | Age of Participants | x | x* |
| sI2c | | Ratio Male:Female | — | x** |
| sI2d | | ASD as the Control Group | x | x* |
| sI2e | | With or Without Intellectual Disability | x | x⌃ |
| sI2f | | Level of Severity according to DSM-5 | x | x* |
| sI2g | | Excluded/Included Criteria | x | x* |
| I3 | Experimental Design | | | |
| sI3a | | Statistical Design | x | x* |
| sI3b | | Testing Methods: Psychological Variables | x | x* |
| sI3c | | Testing Methods: Technological Measures | x | x* |
| I4 | Intervention | | | |
| sI4a | | Level of Immersion | x | — |
| sI4b | | Kind of Ability | x | x* |
| sI4c | | Engagement | x | x* |
| sI4d | | Acceptability | x | x* |
| sI4e | | Usability | x | x* |
| I5 | Level of Aversion or Negative Effects of IVR Technology | | x | x* |
| I6 | Ethical Aspects | | x | x* |

Legend: x* = To be refined and integrated into the Evaluation Framework because it was incompletely covered. x** = To be added completely to the Evaluation Framework because it was never treated. x⌃ = Wording not to be used in the Evaluation Framework because it is obsolete and no longer used. — = Do not add anything to the Evaluation Framework because it is already specified.

Moving into the core of the methodological issues just described, a first preliminary observation is that, except for the Level of Immersion sub-issue (sI4a) of the Intervention methodological issue (I4), all methodological issues must be addressed more comprehensively. The most troubling result concerns the Ratio Male:Female sub-issue (sI2c) of the Sample Characteristics methodological issue (I2), since it is not addressed by any of the scientific articles and reviews analysed in Peretti et al. [22]. Epidemiological data consistently show a male predominance: ASD affects 2–3 times more males than females [57]. However, many studies ignored this aspect, so that their samples included no females. This sex-linked susceptibility underlines the importance of stratifying participant selection by sex, especially to evaluate possible differences between males and females in the effects of technological interventions. Likewise, neglecting a component of a population, even one with a lower prevalence, constitutes a methodological issue.
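As a minimal sketch of the arithmetic behind sex-stratified recruitment, the hypothetical helper below (not part of the Evaluation Framework) turns a planned total sample size and an assumed male-to-female ratio into target recruitment counts. The default of three males per female is an assumption at the upper end of the 2–3 times range reported above [57]; a study may set a different value.

```python
def target_sex_counts(n_total: int, males_per_female: float = 3.0) -> tuple[int, int]:
    """Return (males, females) for a planned sample with an assumed M:F ratio.

    Hypothetical helper: the number of females is rounded to the nearest integer
    (Python's round() uses round-half-to-even), the rest of the sample is male,
    and at least one female is kept whenever n_total >= 2 so that the sample is
    never free of females.
    """
    if n_total < 1:
        raise ValueError("n_total must be at least 1")
    if males_per_female <= 0:
        raise ValueError("males_per_female must be positive")
    female_share = 1.0 / (males_per_female + 1.0)  # e.g. 3:1 -> 0.25
    n_females = round(n_total * female_share)
    if n_total >= 2:
        n_females = max(n_females, 1)
    return n_total - n_females, n_females


print(target_sex_counts(55))  # (41, 14)
print(target_sex_counts(20))  # (15, 5)
```

Because the female count is derived first and the male count is the remainder, the two targets always add up to the planned total.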

Another key methodological issue is the need for a Multidisciplinary Team (I1). Clinical and technological researchers should collaborate across disciplines in the design and development of such interventions. A previous systematic review showed that more than half of the reviewed studies were conducted by an imbalanced team (for more detail, see [22]). A multidisciplinary team is the first step towards avoiding methodological issues in evaluating the efficacy and effectiveness of IVR-based SG for ASD individuals.

Another contentious result concerns the Sample Size sub-issue (sI2a) of the Sample Characteristics issue (I2). Overall, the reviewed studies used small samples, which weakens the consistency of their results [26,101]. Indeed, small samples are a widespread problem in scientific research involving clinical populations such as ASD, and they prevent the generalisation of results [31]. More randomised controlled trials with larger samples and controlled participant selection are needed.

Similarly, there is no consensus on the Experimental Design methodological issue (I3), i.e., on the design that should be used to evaluate an intervention's success, even though this aspect is among the most relevant for verifying the efficacy and effectiveness of the intervention's results [1,34]. This methodological issue includes further sub-issues, such as Statistical Design (sI3a), which refers to the kind of statistical analysis (e.g., pre-post design, between-group design, follow-up) used to analyse the variables under study [22,60]. Without agreement on which design to use when evaluating the same intervention effects, the results obtained are difficult to compare and impossible to generalise [60].

Furthermore, given the specific nature of the intervention (IVR-based SG), it is also necessary to evaluate the negative effects of IVR technology, e.g., nausea and dry eyes (the Level of Aversion or Negative Effects of IVR Technology methodological issue, I5). However, a previous systematic review [22] revealed the absence of studies on these negative effects [21]. Considering and, above all, monitoring the possible negative effects of IVR is crucial, especially when working with clinical populations during a rehabilitation intervention, because a lack of rehabilitation results could be a negative effect of the technology itself rather than of the intervention [30,33].

Another often overlooked aspect, not consistently reported in studies involving clinical populations, is ethics committee approval after consideration of the Ethical Aspects (I6). Obtaining approval from an ethics committee ensures several elements, ranging from informing participants about the risks and benefits of the study to obtaining informed consent, which provides evidence of their awareness or that of their authorised representatives [17].

Overall, each of the methodological issues identified by Peretti et al. [22] (see Table 5) undermines the possibility of properly evaluating IVR-based SG interventions for individuals with ASD. This awareness prompted us to combine that evidence with what is currently known in the literature on this topic (e.g., [61,62,63,64,65,66,67,68]) and to transform these methodological issues into criteria that can be addressed systematically, thus enabling a controlled evaluation. The result is a set of six methodological criteria and their sub-criteria, illustrated in the following section. For each criterion, we provide guidelines on how to meet it adequately.

3. Results and Research Implications

3.1. From Methodological Issues to Methodological Criteria

In this section, we present the core of the present study: the six methodological criteria and sub-criteria underpinning the Evaluation Framework, which is intended to support evaluations of IVR-based SG interventions for ASD that yield reliable, effective, and efficient results. These methodological criteria are a constructive response to the current methodological issues that undermine the replicability of such interventions.

It is worth noting that the six methodological issues and sub-issues just described, which emerged from the systematic review in Peretti et al. [22], are only the starting point from which the six methodological criteria and sub-criteria were derived.

Indeed, the following methodological criteria and sub-criteria combine the outcomes obtained in Peretti et al. [22], the most recent reference works on the topic (e.g., [65,66,67,68]), and the most recent research articles describing IVR-based SG interventions for ASD (i.e., four articles: [61,62,63,64]). A close reading of these articles revealed the same methodological issues, ranging from sample size to the lack of ethics committee approval.

Thus, the six methodological criteria and sub-criteria described below are proposed as an overall solution to the issues that currently compromise the evaluation of the efficacy and effectiveness of IVR-based SG interventions for ASD. They are broken down as follows:

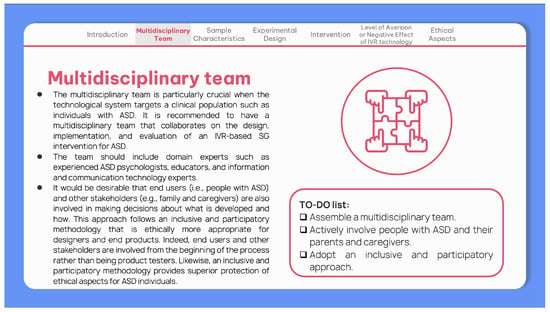

- C1

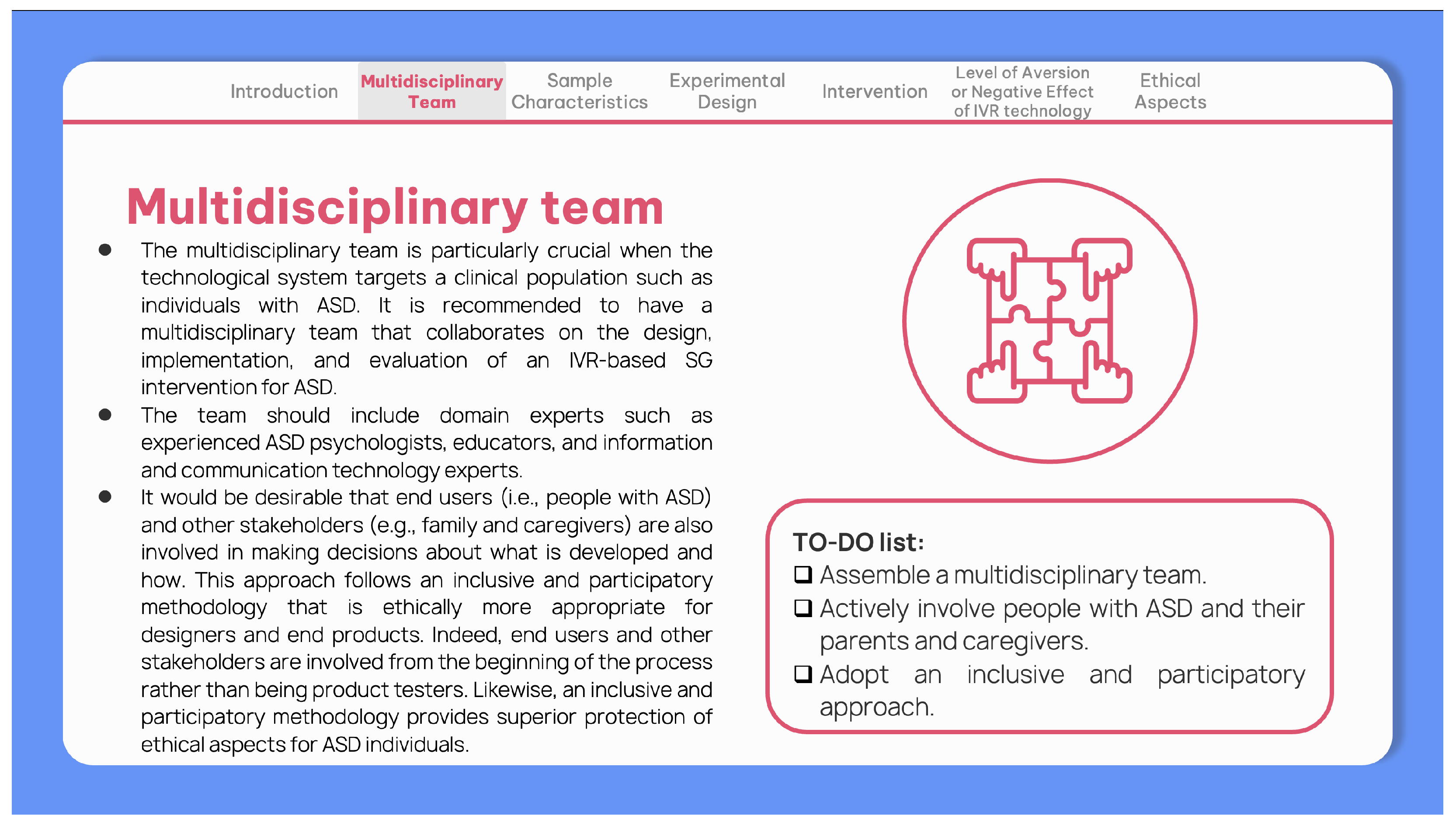

- Multidisciplinary team. It is recommended to have a multidisciplinary team that collaborates on the design, implementation, and evaluation of an IVR-based SG intervention for ASD. The team should include domain experts, such as experienced autism spectrum disorder psychologists, educators, and information and communication technology experts. The multidisciplinary team is particularly crucial when the technological system targets a clinical population, such as individuals with autism spectrum disorder [30,32,33,34]. It is desirable that in the design and development stages of technologies for ASD (e.g., [10,102,103]), end users and other stakeholders (e.g., family and caregivers) are also involved in making decisions about what is developed and how. This approach follows an inclusive and participatory methodology that is ethically more appropriate for designers and end products. Indeed, end users and other stakeholders are involved from the beginning of the process rather than being product testers [104,105,106,107]. Likewise, an inclusive and participatory methodology provides superior protection of the ethical aspects for autism spectrum disorder individuals [108,109,110].

- C2

- Sample Characteristics. This methodological criterion involves seven sub-criteria:

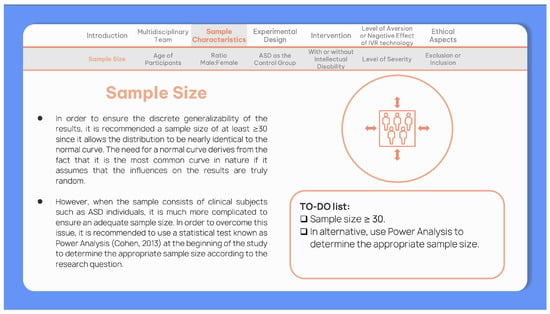

- sC2a: Sample size. To ensure reasonable generalisability of the results, a sample size of at least 30 is recommended, since with samples of this size the sampling distribution of the mean is approximately normal. Approximate normality matters because the normal curve is the distribution expected when the influences on the results are truly random, and many common statistical tests assume it. However, when the sample consists of clinical participants, such as individuals with autism spectrum disorder, it is much more difficult to ensure an adequate sample size. To overcome this issue, it is recommended to conduct a power analysis [111] at the beginning of the study to determine the appropriate sample size for the research question.

- sC2b: Age of participants. It is recommended to group participants into age clusters according to a standard age classification system, for instance, the one proposed by the World Health Organization, since most abilities are age-dependent.

- sC2c: Ratio M:F. It is recommended that the study sample respect the male-to-female ratio of around 3:1 [57], since completely neglecting a component of the autism spectrum disorder population, even one with a lower prevalence, would constitute a notable methodological issue.

- sC2d: ASD as the control group. It is recommended that both the experimental and the control samples consist entirely of individuals with autism spectrum disorder. Inter-group comparability [112,113] must then be checked by ensuring that the experimental intervention is the only variable that differs between the two samples.

- sC2e: With or without intellectual disability. It is recommended that studies report the presence or absence of intellectual disability, assessed with specific, standardised tests, since this information is essential for all aspects of the research, from technology design to the type of skill to be treated.

- sC2f: Level of severity according to DSM-5. It is recommended that studies published after 2013 define the level of autism spectrum disorder severity according to the DSM-5 [60] instead of using outdated diagnostic labels, such as Asperger's Syndrome. This ensures appropriate and up-to-date diagnostic uniformity.

- sC2g: Exclusion or inclusion. It is recommended to use both inclusion and exclusion criteria. Inclusion criteria ensure that the key characteristics of the target population are selected; exclusion criteria ensure that potential participants who meet the inclusion criteria but have additional characteristics that could interfere with the success of the study are excluded [114,115]. Defining inclusion and exclusion criteria for clinical study participants is a standard, required practice in designing high-quality research protocols that support the generalisability of the results [115].

- C3: Experimental Design. This methodological criterion involves three sub-criteria:

- sC3a: Statistical Design. It is recommended that the statistical analysis be planned at the beginning of a study according to the specific research question. This planning allows the variables under study to be defined in a methodologically controlled way. Likewise, all studies should include a follow-up, since it is necessary to verify the efficacy and effectiveness of an intervention over time [116,117,118].

- sC3b: Testing method for psychological variables. For a methodologically controlled study, it is recommended to define and adopt valid, standardised tests of the psychological variables to evaluate the efficacy and effectiveness of the intervention [119,120].

- sC3c: Testing method for technological measures. For a methodologically controlled study, it is recommended to define and adopt valid, standardised tests and questionnaires for the technological measures (e.g., usability, acceptability, and negative effects) to evaluate the efficacy and effectiveness of the intervention [119,120].

- C4: Intervention. This methodological criterion involves five sub-criteria:

- sC4a: Level of Immersion. Immersive virtual reality can provide low, moderate, or high levels of immersion. It is recommended that studies specify the level of immersion used and report how it affects the outcome of the intervention.

- sC4b: Kind of ability. It is recommended to define the type of ability targeted by the research according to an established classification, for example, the one proposed by the American Association on Intellectual and Developmental Disabilities [80], ensuring standard definitions that are shared across different professional profiles.

- sC4c: Engagement. It is recommended to evaluate engagement, because participants who are not sufficiently engaged will not continue to use the system. Specifically, it is recommended to evaluate engagement with objective measures, such as standardised tests and questionnaires that ensure replicable results (see, e.g., [9,24]).

- sC4d: Acceptability. It is recommended to evaluate acceptability at the beginning of an intervention, since the success of the intervention depends on it. For example, if an individual with severe autism spectrum disorder does not tolerate wearing a head-mounted display (HMD), an intervention delivered through an HMD may not be feasible. Specifically, it is recommended to evaluate acceptability with standardised tests and questionnaires (e.g., the Simulator Sickness Questionnaire [121]) that ensure replicable results (see, e.g., [9,24]).

- sC4e: Usability. It is recommended to evaluate usability, since it is essential for proper human–computer interaction during the intervention. Specifically, it is recommended to evaluate usability with objective measures, such as standardised tests and questionnaires that ensure replicable results (see, e.g., [9,122]).

- C5: Level of Aversion or Negative Effect of IVR Technology. It is recommended to consider this criterion, since it is critical for the success of the intervention, especially with clinical populations (e.g., [9,123,124]). Indeed, the intervention may be marred by several negative effects related to the use of immersive virtual reality [125], including cybersickness [125,126,127]. Cybersickness refers to a set of symptoms that can affect people using immersive virtual reality technologies. These symptoms resemble motion sickness and can include dizziness, headaches, eye fatigue, vertigo, and disorientation. Factors that can cause or exacerbate cybersickness include prolonged exposure to an immersive virtual reality-based experience, rapid movement in the field of view, lack of control over the immersive virtual environment, low frame rate, and poor graphics quality [128]. Therefore, these negative effects and the recommendations for using such technology must be taken into account.

- C6: Ethical aspects. It is recommended to obtain ethics committee approval before starting a study (for more detail, see [129]), and the approving ethics committee must be declared in the study. Before the study starts, the study protocol must be evaluated and approved by an accredited research ethics committee [130,131,132,133,134]. This committee must be impartial and transparent, free from conflicts of interest with the researchers or sponsoring institutions. Protocol breaches or adverse events during the study should be reported promptly to the committee according to established regulations [130,131,132,133,134]. Clinical studies must evaluate the potential risks and benefits for participants. Researchers should minimise risks, monitor them continuously, and record risk factors. Participants should be fully informed about the study, including its purpose, methods, expected benefits and risks, and any conflicts of interest, and should provide informed consent voluntarily [130,131,132,133,134]. If a participant cannot provide consent, informed consent must be obtained from a legally authorised representative [130,131,132,133,134].

At least three aspects ensure the quality of this process of transformation from issues to criteria:

- (i) It is the result of a rigorous research method for planning, conducting, and drawing inferences from a systematic review, i.e., Kitchenham's method [70];

- (ii) Kitchenham's rigorous method [70] was, in our case, enriched by the study of recent literature on this topic (e.g., [61,63,64,65,66]) and by the work of a balanced multidisciplinary team of two psychologists with expertise in autism and research methodology and two ICT experts specialising in designing and developing IVR-based systems and SG;

- (iii) The indications provided for addressing each of the six methodological criteria and their sub-criteria are supported by established scientific evidence. For example, the relevant scientific literature [60,113] establishes that both the diagnosis of ASD and the level of severity of the disorder should follow the Diagnostic and Statistical Manual of Mental Disorders, 5th edition (DSM-5). Therefore, to meet the Level of severity according to DSM-5 sub-criterion of the Sample Characteristics methodological criterion, we suggest referring to the DSM-5 and not to old diagnostic labels, such as Asperger's Syndrome. Along these lines, we have suggested what is most scientifically established for all methodological criteria and sub-criteria.

Woven together, these methodological criteria and sub-criteria form the core of the proposed Evaluation Framework. We discuss this further in the following section, presenting the Evaluation Framework as a pocket guide designed for usability.

3.2. Research and Practitioners Implications: A Pocket Guide Evaluation Framework

This section presents the Evaluation Framework for evaluating the IVR-based SG interventions for ASD individuals. For the methodological criteria and sub-criteria to become an Evaluation Framework intended as a usable tool (i.e., the extent to which a tool can be used by specified final users to achieve specified goals, with effectiveness, efficiency, and satisfaction in a specified context of use [135]), a user-centred design approach was adopted [136,137,138]. This approach pays special attention to the characteristics of the final users and the context of use where the tool will be used.

Therefore, we started by analysing the final users and the context of use:

- Concerning the final users of the proposed Evaluation Framework, they are psychologists who are experts in research methodology but generally not experts in using technological devices. They are, however, comfortable with traditional, easy-to-use devices and tools, such as computers and PowerPoint presentations, which they have used since the early years of university and clinical training.

- As for the context of use, the Evaluation Framework will generally be used indoors, in an environment that we do not expect to be equipped with advanced technological equipment, such as high-performance network connectivity, robust internet access, or head-mounted displays. However, since this is still a highly professional context (e.g., academia or clinics), we assume that, in the worst case, at least a computer is available.

The design of the Evaluation Framework, intended as a tool for psychologists, was based on the worst case for the final users' needs and capabilities and for the characteristics of the context of use. In response to the needs and requirements identified, we considered various design proposals, which were ultimately narrowed down to a specific conceptual design for the tool. Specifically, we identified the pocket guide as an adequate metaphor for the intended tool, i.e., a small, portable booklet or brochure designed to provide concise information on a specific topic, activity, or location. Pocket guides are typically intended for quick reference, easy access, and usability, which makes them convenient for professionals who need essential information at their fingertips [139].

Taking into consideration these methodological and structural constraints, we proposed to implement a pocket guide, envisioned as an interactive and usable PowerPoint presentation, serving as a tool to streamline the use of the proposed Evaluation Framework.

In what follows, we present the implementation of the PowerPoint presentation, focusing on its static and dynamic parts:

- Static Part—The PowerPoint Presentation incorporates a dedicated slide for each methodological criterion and sub-criterion. Each slide includes the following elements: (1) the name of the methodological criterion/sub-criterion, (2) a description of the methodological criterion/sub-criterion, (3) a visual representation of the methodological criterion/sub-criterion in the form of an icon to enhance understanding and retention, and (4) a TO-DO list of practical actions to address the associated methodological criterion/sub-criterion. Additionally, the PowerPoint presentation includes a brief overview of the Evaluation Framework and instructions on how to use and interact with it. Furthermore, a 6-colour rainbow palette is employed, with each colour corresponding to a specific methodological criterion.

- Dynamic Part—The PowerPoint presentation offers two distinct approaches to browsing the Evaluation Framework. The first is traditional linear navigation, in which users browse through the presentation using mouse clicks, the space bar, or arrow keys; this method is familiar to the final users of the proposed Evaluation Framework. The second is a more interactive, nonlinear navigation resembling website browsing: a main navigation menu at the top of each slide lets final users quickly access the different methodological criteria included in the Evaluation Framework. This navigation menu consists of seven items: an “Introduction” item and six items, each representing a specific methodological criterion. Clicking an item displays the corresponding slide (see, for example, Figure 2). Notably, when final users click an item related to a methodological criterion that includes sub-criteria, a contextual sub-menu appears beneath the main navigation menu (see, for example, Figure 3 and Figure 4), allowing them to explore and interact with all the associated methodological sub-criteria.
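The navigation structure described above can be sketched as a small tree: seven main-menu items, three of which open a contextual sub-menu. The Python model below is a hypothetical illustration for readers who might want to reuse the structure elsewhere (e.g., in a web version). The item labels are abbreviated from the criteria and sub-criteria in Section 3.1; the variable and function names are our own assumptions and are not part of the distributed PowerPoint file, and the overview and instruction slides are not modelled.

```python
# Hypothetical model of the pocket guide's navigation menu (not the actual slide file).
MAIN_MENU: dict[str, list[str]] = {
    "Introduction": [],
    "C1 Multidisciplinary Team": [],
    "C2 Sample Characteristics": [
        "sC2a Sample size", "sC2b Age of participants", "sC2c Ratio M:F",
        "sC2d ASD as the control group",
        "sC2e With or without intellectual disability",
        "sC2f Level of severity according to DSM-5", "sC2g Exclusion or inclusion",
    ],
    "C3 Experimental Design": [
        "sC3a Statistical design", "sC3b Testing method for psychological variables",
        "sC3c Testing method for technological measures",
    ],
    "C4 Intervention": [
        "sC4a Level of immersion", "sC4b Kind of ability", "sC4c Engagement",
        "sC4d Acceptability", "sC4e Usability",
    ],
    "C5 Level of Aversion or Negative Effect of IVR Technology": [],
    "C6 Ethical Aspects": [],
}


def linear_order() -> list[str]:
    """Slide order for linear navigation: each menu item followed by its sub-criteria."""
    order: list[str] = []
    for item, sub_items in MAIN_MENU.items():  # dicts keep insertion order
        order.append(item)
        order.extend(sub_items)
    return order


def contextual_submenu(item: str) -> list[str]:
    """Sub-menu shown beneath the main menu when an item is clicked (empty if none)."""
    return MAIN_MENU[item]


print(len(MAIN_MENU), "main-menu items;", len(linear_order()), "slides in linear order")
```

Keeping a single tree as the source of both navigation modes means that linear and menu-based browsing can never drift out of sync.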

Figure 2. Evaluation Framework: Multidisciplinary Team methodological criterion slide.

Figure 3. Evaluation Framework: Sample Characteristics methodological criterion slide.

Figure 4. Evaluation Framework: Sample Size methodological sub-criterion slide.

Figures 2–4 show screenshots of the designed interactive PowerPoint presentation, which can be downloaded from the following link: https://gitfront.io/r/UnivaqRepository/QRfqxA1wTFUh/Evaluation-Framework/ (accessed on 2 April 2024). A PDF version of the presentation is available at the same link; it can be printed and consulted as a pocket guide, for ease of access and portability.

3.3. Utilising the Pocket Guide Evaluation Framework: An Example of Its Application

In this section, we present a practical example showing how to fulfil the six methodological criteria and sub-criteria underlying the Evaluation Framework. We assume that the Evaluation Framework is used before a study is conducted.

First of all, before starting research, it is necessary to establish the research question and the research hypothesis. In our example:

- Research Question: Is an Immersive Virtual Reality-based Serious Game intervention more effective than a traditional non-technology mediated approach in enhancing social cognition skills in adolescents with ASD?

- Research Hypothesis: Researchers anticipate that employing an Immersive Virtual Reality-based Serious Game, with its immersive technology and serious game learning strategy, will be more effective in enhancing social cognition skills among adolescents with ASD compared to traditional interventions (e.g., Multimodal Anxiety and Social Skill Intervention for Adolescents with Autism Spectrum Disorder [140]).

Based on these, it is possible to proceed to fulfil the six methodological criteria:

- C1: Multidisciplinary team. We plan to engage psychologists with a background in ASD interventions for social cognition, as well as experts in ICT, SG, and IVR, from the early stages of the study.

- C2: Sample Characteristics.

- sC2a: Sample size. To determine the sample size for our future study, we will employ a power analysis [111], given the challenge of recruiting adolescents with ASD, particularly for the IVR-mediated experimental condition. For the purposes of this example, we assume that the power analysis yields a minimum total sample size of 55 individuals, which would give the study adequate statistical power to detect significant effects or differences.

- sC2b: Age of participants. The study will be conducted on a group of 55 adolescents with ASD aged 13–15 years.

- sC2c: Ratio M:F. The sample of 55 adolescents with ASD will be composed of 41 males and 14 females, reflecting the male-to-female ratio of around 3:1 [57].

- sC2d: ASD as the control group. To check inter-group comparability [112,113], the control group will also consist of adolescents with autism. The sample of 55 participants will therefore be randomly divided as follows: the experimental group (IVR-based SG intervention) will contain 28 adolescents with ASD (21 males and 7 females), and the control group (traditional non-technology-mediated intervention) will contain 27 adolescents with ASD (20 males and 7 females).

- sC2e: With or without intellectual disability. None of the 55 study participants will have an intellectual disability.

- sC2f: Level of severity according to DSM-5. All 55 study participants will have a diagnosis of ASD with severity level 1 (requiring support), i.e., the least severe level.

- sC2g: Exclusion or inclusion. The following exclusion criteria will be set: (1) individuals with an intellectual disability; (2) individuals with comorbid psychiatric disorders; (3) individuals who have had previous negative experiences with IVR; (4) individuals with a history of epileptic seizures; (5) individuals who suffer from motion sickness; (6) individuals with a diagnosis of autism with a severity level other than 1; (7) adolescents outside the 13–15 age range.

- C3: Experimental Design.

- sC3a: Statistical design. The statistical design of the study will be a between-group comparison. A follow-up will also be planned to monitor the effects of both interventions.

- sC3b: Testing method for psychological variables. The following measures of social cognition will be used: Eyes Tasks [141] and the Basic Empathy Scale [142,143].

- sC3c: Testing method for technological measures. Usability, acceptability, and negative effects will be assessed by means of standardised questionnaires (e.g., Virtual Reality Usability Questionnaire [144] and Simulator Sickness Questionnaire [121]). In addition, the engagement of participants will be assessed through selected behavioural observation measures [9,24].

- C4: Intervention.

- sC4a: Level of immersion. The study will use highly immersive virtual reality technology (i.e., a head-mounted display, HMD), as the literature suggests that a high level of immersion is the most effective in improving the learning abilities of people with ASD [6,23,24].

- sC4b: Kind of ability. In this future study, the target abilities will be those of social cognition, which, according to the American Association on Intellectual and Developmental Disabilities [80], fall under the category of social skills.

- sC4c: Engagement. Engagement will be rated on a Likert scale (e.g., 1–5) across several metrics: emotional participation; suspension of disbelief (i.e., the extent to which the virtual world is temporarily accepted as reality); bodily participation (i.e., the extent of body movement during the immersive experience); and virtual world exploration [9,24].

- sC4d: Acceptability. Acceptability will be measured with the standardised Simulator Sickness Questionnaire [121]. In addition, acceptability of the HMD will be examined in terms of readiness for use and a number of factors related to possible unpleasant physiological effects or discomfort (e.g., motion sickness and digital eye fatigue), recorded as dichotomous values (i.e., yes or no).

- sC4e: Usability. Usability will be measured using the Virtual Reality Usability Questionnaire [144]. In addition, usability will be assessed in terms of autonomy in handling the device (e.g., the support required from operators during the study).

- C5: Level of aversion or negative effect of IVR technology. All factors that could increase the risk of cybersickness (e.g., rapid movement in the field of view and poor frame rate) will be considered in the design process. In addition, the standardised Simulator Sickness Questionnaire [121] will be used to assess the level of cybersickness experienced by users. All participants will be instructed to stop the study if they experience symptoms of cybersickness.

- C6: Ethical aspects. Participants will be tested individually in a quiet room. The experimental protocol will be submitted to the Ethics Committee of Hospital XXX (report the number code), which must approve it before participants are recruited, in accordance with the principles established by the Declaration of Helsinki. Written informed consent will be obtained from the accompanying persons (legally authorised representatives) of all participants prior to the study.

4. Conclusions and Future Work

The present paper arises from the evidence that the process for evaluating the efficacy and effectiveness of IVR-based SG interventions for ASD is currently a black hole: no light has been shed on evaluation protocols, and there are no guidelines to follow for conducting an evaluation that provides reliable results.

A systematic review of the literature on the topic [22], accompanied by a study of recent literature (e.g., [61,62,63,64,65,66,67,68]), revealed several methodological issues in the evaluation process. Because of them, the process does not produce rigorous scientific studies showing the efficacy of IVR-based SG interventions for ASD, and the effectiveness of such interventions in real-world applications is reduced [35,36]. Among these methodological issues, the need for a multidisciplinary team and for the involvement of stakeholders in the evaluation process, although already addressed in the literature of this research field, remain unresolved [30,33,61,145]. Meanwhile, other methodological issues, such as the negative effects associated with the technology or ethical aspects, are completely underestimated despite their potentially crucial role in a proper evaluation [22]. In studies concerning reliability, efficacy, and efficiency [44,45,69,146], it is widely recognised that properly addressing methodological issues could produce a rigorous evaluation process.

Accordingly, the present paper aimed to propose an Evaluation Framework, in the form of a pocket guide, that encompasses all the criteria necessary for a rigorous evaluation of the efficacy and effectiveness of IVR-based SG interventions for ASD while avoiding these methodological issues.

To this end, two flows were followed:

- (i) Methodological issues were transformed into the methodological criteria necessary to conduct a rigorous evaluation. The quality of this transformation was ensured by at least three aspects. First, the criteria result from a rigorous research methodology for planning, conducting, and drawing inferences from a systematic review, i.e., Kitchenham’s method [70]. Second, Kitchenham’s method [70] was complemented by a study of recent literature on this topic (e.g., [61,62,63,64,65,66,67,68]) and by the sustained work of a well-balanced multidisciplinary team consisting of two psychologists skilled in autism and research methodology and two ICT experts specialising in the design and development of IVR-based systems and SG. Lastly, the guidelines for addressing the methodological criteria and their sub-criteria are firmly rooted in established scientific evidence.

- (ii) A usable tool was proposed to turn the methodological criteria into an Evaluation Framework. The quality of the design and development process of the Evaluation Framework is ensured by a user-centred approach [136], which places particular emphasis on the end-users, such as psychologists experienced in research methodology, and on the context of use, typically research centres, hospitals, and clinics [30,32,33,34]. The work of our multidisciplinary team further enriched this user-centred approach: the choice of a usable tool for disseminating the Evaluation Framework resulted from combining different professional profiles proficient in all the aspects needed to create and use such a framework.

As a result of these two flows, supported by the collaborative work of the multidisciplinary team, the Evaluation Framework came to fruition. The framework has a twofold strength, deriving from quality processes (i) and (ii). The first strength is that we not only defined six methodological criteria and their sub-criteria but also produced specific guidelines for meeting each of them. This makes the proposed Evaluation Framework highly generalisable, since it can be used in any study evaluating the efficacy and effectiveness of IVR-based SG for individuals with ASD: the framework brings together all the methodological criteria necessary to conduct such an evaluation [60,69]. Notably, our study sheds light on often-overlooked issues, such as the underreporting of negative effects associated with technology and ethics committee approval [28,125,130,131,132,133,134]. Addressing these issues is imperative for ensuring the replicability and stability of results over time and across different conditions.

The second strength lies in the usability of the proposed tool, achieved through its accessibility and user-friendliness. To this end, we adopted the visual metaphor of a pocket guide: a small, portable booklet or brochure designed to provide concise information on a specific topic, activity, or location [139].

Considering these methodological and structural constraints, we proposed a pocket guide, envisioned as an interactive and usable PowerPoint presentation, that streamlines the use of the proposed Evaluation Framework. Thus, the proposed Evaluation Framework could work as a pocket guide for researchers, supporting the conduct of evidence-based intervention studies for ASD.

The primary result of the present study is supported by the practical example of applying the Evaluation Framework (Section 3.3) to a research study on IVR-based SG interventions for ASD. Indeed, the framework structures the researchers’ activity from the outset by requiring them to define the research question and research hypothesis. The fulfilment of the six methodological criteria and their respective sub-criteria, although guided by literature-supported standards, depends closely on the research question and research hypothesis. Additionally, the proposed framework is customisable in content: it provides a standardised structure for conducting methodologically rigorous evaluations, whose contents can be adapted as needed. For example, the framework could serve as a starting point for studies employing technologies and populations different from those considered in this study. Indeed, the proposed methodological criteria are cross-cutting and readily applicable to rehabilitative interventions and clinical populations in general.

Looking ahead, the proposed Pocket Guide Evaluation Framework could be a first significant step towards shedding light on the current black hole in the evaluation of IVR-based SG interventions for individuals with ASD. It could serve not only as a practical guide but also as a catalyst for positive change in the clinical ASD field. Its dissemination could promote the flourishing of reliable and rigorous studies, as well as greater readiness among clinicians to adopt such interventions in real-life settings.

By doing so, we strive to enhance the efficiency, effectiveness, and generalisability of interventions, ultimately improving the lives of individuals with ASD and addressing the growing societal and economic challenges associated with the disorder.

In future work, we plan to test and validate the framework in several ways. First, we will apply it in a real-world setting to gauge its empirical validity. Second, we will test it across various contexts to assess its adaptability and generalisability. Third, we will continuously refine it based on user and stakeholder feedback, updating the guidelines and incorporating new practices. Lastly, we aim to promote collaborative research initiatives that encourage broader use of the framework among researchers, clinicians, and educators, ensuring the dissemination of reliable studies.

Author Contributions

Conceptualization, S.P. and T.D.M.; Methodology, S.P. and M.C.P.; Software, F.C.; Validation, S.P. and T.D.M.; Formal Analysis, S.P. and M.C.P.; Investigation, F.C.; Data Curation, S.P. and M.C.P.; Writing—original draft preparation, S.P., F.C. and M.C.P.; Writing—review and editing, S.P., F.C., M.C.P. and T.D.M.; Visualisation, F.C.; Supervision, T.D.M.; Project Administration, T.D.M.; Funding Acquisition, T.D.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the European Union—NextGenerationEU under the Italian Ministry of University and Research (MUR) National Innovation Ecosystem grant ECS00000041-VITALITY-CUP E13C22001060006.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The proposed Evaluation Framework is available at the following link: https://gitfront.io/r/UnivaqRepository/QRfqxA1wTFUh/Evaluation-Framework/, accessed 2 April 2024. The detailed description of the systematic review process, as well as the documentation of the entire study selection and quality assessment process, is available at [71].

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ASD | Autism Spectrum Disorders |
| ICT | Information and Communication Technologies |
| STEM | Science, Technology, Engineering, and Mathematics |
| IVR | Immersive Virtual Reality |
| SG | Serious Game |
| HMD | Head-Mounted Display |
| CAVE | Cave Automatic Virtual Environment |
| AI | Artificial Intelligence |

References

- Karami, B.; Koushki, R.; Arabgol, F.; Rahmani, M.; Vahabie, A.H. Effectiveness of virtual/augmented reality–based therapeutic interventions on individuals with autism spectrum disorder: A comprehensive meta-analysis. Front. Psychiatry 2021, 12, 665326.

- Billard, A.; Robins, B.; Nadel, J.; Dautenhahn, K. Building Robota, a mini-humanoid robot for the rehabilitation of children with autism. Assist. Technol. 2007, 19, 37–49.

- Dautenhahn, K. Roles and functions of robots in human society: Implications from research in autism therapy. Robotica 2003, 21, 443–452.

- DiPietro, J.; Kelemen, A.; Liang, Y.; Sik-Lanyi, C. Computer- and robot-assisted therapies to aid social and intellectual functioning of children with autism spectrum disorder. Medicina 2019, 55, 440.

- Moghadas, M.; Moradi, H. Analyzing human-robot interaction using machine vision for autism screening. In Proceedings of the 2018 6th RSI international conference on robotics and mechatronics (IcRoM), Tehran, Iran, 23–25 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 572–576.

- Bozgeyikli, L.; Raij, A.; Katkoori, S.; Alqasemi, R. A survey on virtual reality for individuals with autism spectrum disorder: Design considerations. IEEE Trans. Learn. Technol. 2017, 11, 133–151.

- Valentine, A.Z.; Brown, B.J.; Groom, M.J.; Young, E.; Hollis, C.; Hall, C.L. A systematic review evaluating the implementation of technologies to assess, monitor and treat neurodevelopmental disorders: A map of the current evidence. Clin. Psychol. Rev. 2020, 80, 101870.

- Zhang, M.; Ding, H.; Naumceska, M.; Zhang, Y. Virtual reality technology as an educational and intervention tool for children with autism spectrum disorder: Current perspectives and future directions. Behav. Sci. 2022, 12, 138.

- Di Mascio, T.; Tarantino, L.; De Gasperis, G.; Pino, M.C. Immersive virtual environments: A comparison of mixed reality and virtual reality headsets for ASD treatment. Adv. Intell. Syst. Comput. 2020, 1007, 153–163.

- Di Mascio, T.; Tarantino, L.; Cirelli, L.; Peretti, S.; Mazza, M. Designing a personalizable ASD-oriented AAC tool: An action research experience. Adv. Intell. Syst. Comput. 2019, 804, 200–209.

- Mazza, M.; Pino, M.C.; Vagnetti, R.; Peretti, S.; Valenti, M.; Marchetti, A.; Di Dio, C. Discrepancies between explicit and implicit evaluation of aesthetic perception ability in individuals with autism: A potential way to improve social functioning. BMC Psychol. 2020, 8, 74.

- Baron-Cohen, S. Autism and the technical mind. Sci. Am. 2012, 307, 72–75.

- Wei, X.; Yu, J.W.; Shattuck, P.; McCracken, M.; Blackorby, J. Science, technology, engineering, and mathematics (STEM) participation among college students with an autism spectrum disorder. J. Autism Dev. Disord. 2013, 43, 1539–1546.

- Di Mascio, T.; Laura, L.; Temperini, M. A framework for personalized competitive programming training. In Proceedings of the 2018 17th International Conference on Information Technology Based Higher Education and Training (ITHET), Olhao, Portugal, 26–28 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–8.

- Glaser, N.; Schmidt, M.; Schmidt, C.; Palmer, H.; Beck, D. The centrality of interdisciplinarity for overcoming design and development constraints of a multi-user virtual reality intervention for adults with Autism: A design case. In Intersections Across Disciplines: Interdisciplinarity and Learning; Springer: Berlin/Heidelberg, Germany, 2021; pp. 157–171.

- Drigas, A.; Vlachou, J.A. Information and communication technologies (ICTs) and autistic spectrum disorders (ASD). Int. J. Recent Contrib. Eng. Sci. IT iJES 2016, 4, 4–10.

- Høeg, E.R.; Povlsen, T.M.; Bruun-Pedersen, J.R.; Lange, B.; Nilsson, N.C.; Haugaard, K.B.; Faber, S.M.; Hansen, S.W.; Kimby, C.K.; Serafin, S. System immersion in virtual reality-based rehabilitation of motor function in older adults: A systematic review and meta-analysis. Front. Virtual Real. 2021, 2, 647993.

- Glaser, N.; Schmidt, M. Systematic Literature Review of Virtual Reality Intervention Design Patterns for Individuals with Autism Spectrum Disorders. Int. J. Hum.-Comput. Interact. 2021, 38, 753–788.

- Caruso, F.; Peretti, S.; Barletta, V.S.; Pino, M.C.; Di Mascio, T. Recommendations for developing Immersive Virtual Reality Serious Game for Autism: Insights from a Systematic Literature Review. IEEE Access 2023, 11, 74898–74913.

- Meadan, H.; Ostrosky, M.M.; Triplett, B.; Michna, A.; Fettig, A. Using visual supports with young children with autism spectrum disorder. Teach. Except. Child. 2011, 43, 28–35.

- Schmidt, M.; Schmidt, C.; Glaser, N.; Beck, D.; Lim, M.; Palmer, H. Evaluation of a spherical video-based virtual reality intervention designed to teach adaptive skills for adults with autism: A preliminary report. Interact. Learn. Environ. 2021, 29, 345–364.

- Peretti, S.; Caruso, F.; Pino, M.C.; Di Mascio, T. A systematic review to know how interventions realized with immersive virtual reality-based serious games for individuals with autism are evaluated. In Proceedings of the 5th Biannual Conference of the Italian SIGCHI Chapter: Crossing HCI and AI, CHItaly 2023, Torino, Italy, 20–22 September 2023; pp. 1–14.

- Miller, H.L.; Bugnariu, N.L. Level of immersion in virtual environments impacts the ability to assess and teach social skills in autism spectrum disorder. Cyberpsychol. Behav. Soc. Netw. 2016, 19, 246–256.