Data Quality of Different Modes of Supervision in Classroom Surveys

Abstract

1. Introduction

2. Previous Research

2.1. Supervision during Self-Administered Surveys

2.2. Effects of Teachers’ Presence

2.3. Effects of External Supervision

2.4. Effects of Using Video Conference Software

2.5. Web Surveys

3. Materials and Methods

3.1. Data Quality Indicators and Analyses

3.2. Explanatory Variables

4. Results

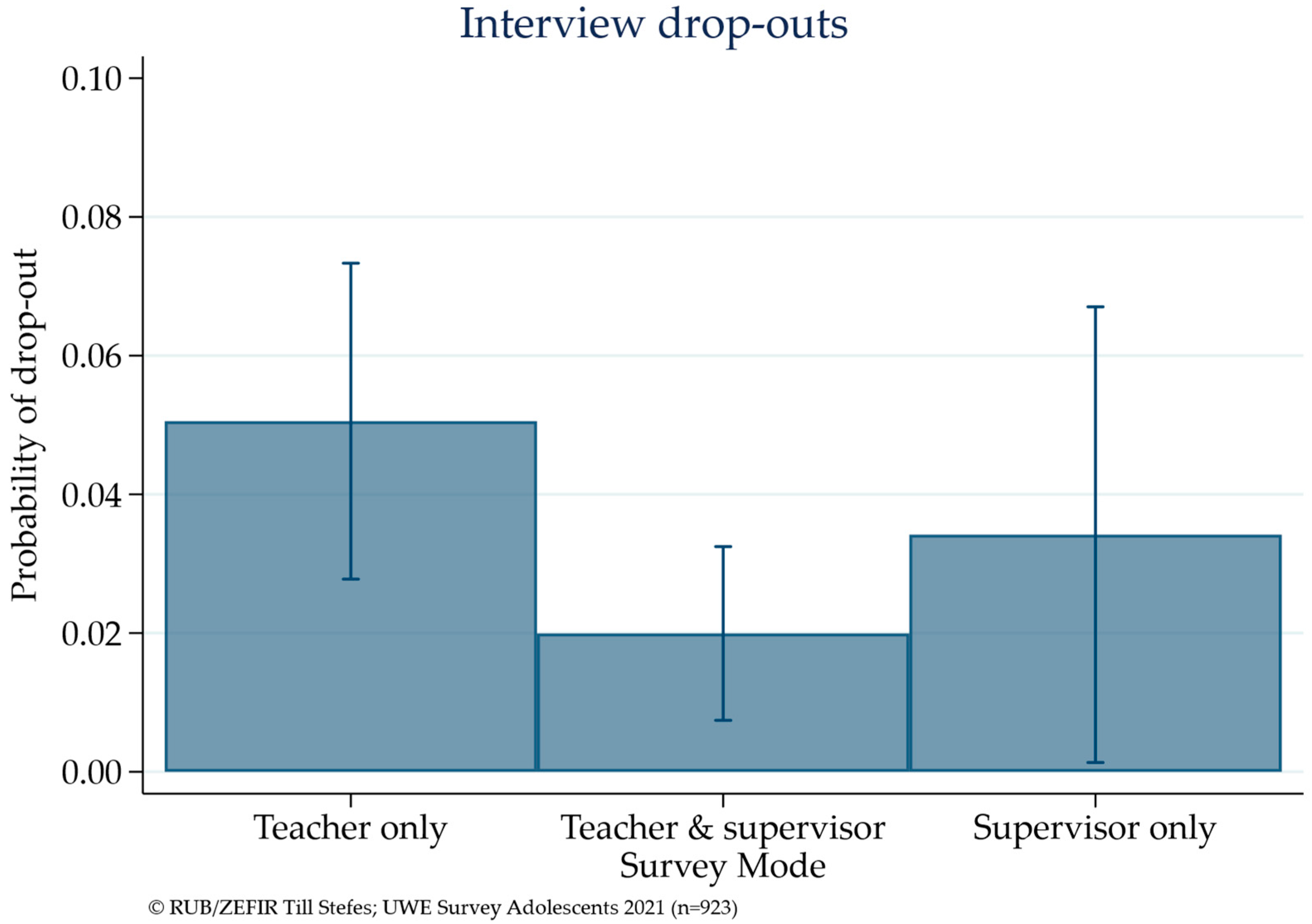

4.1. Interview Drop-Outs

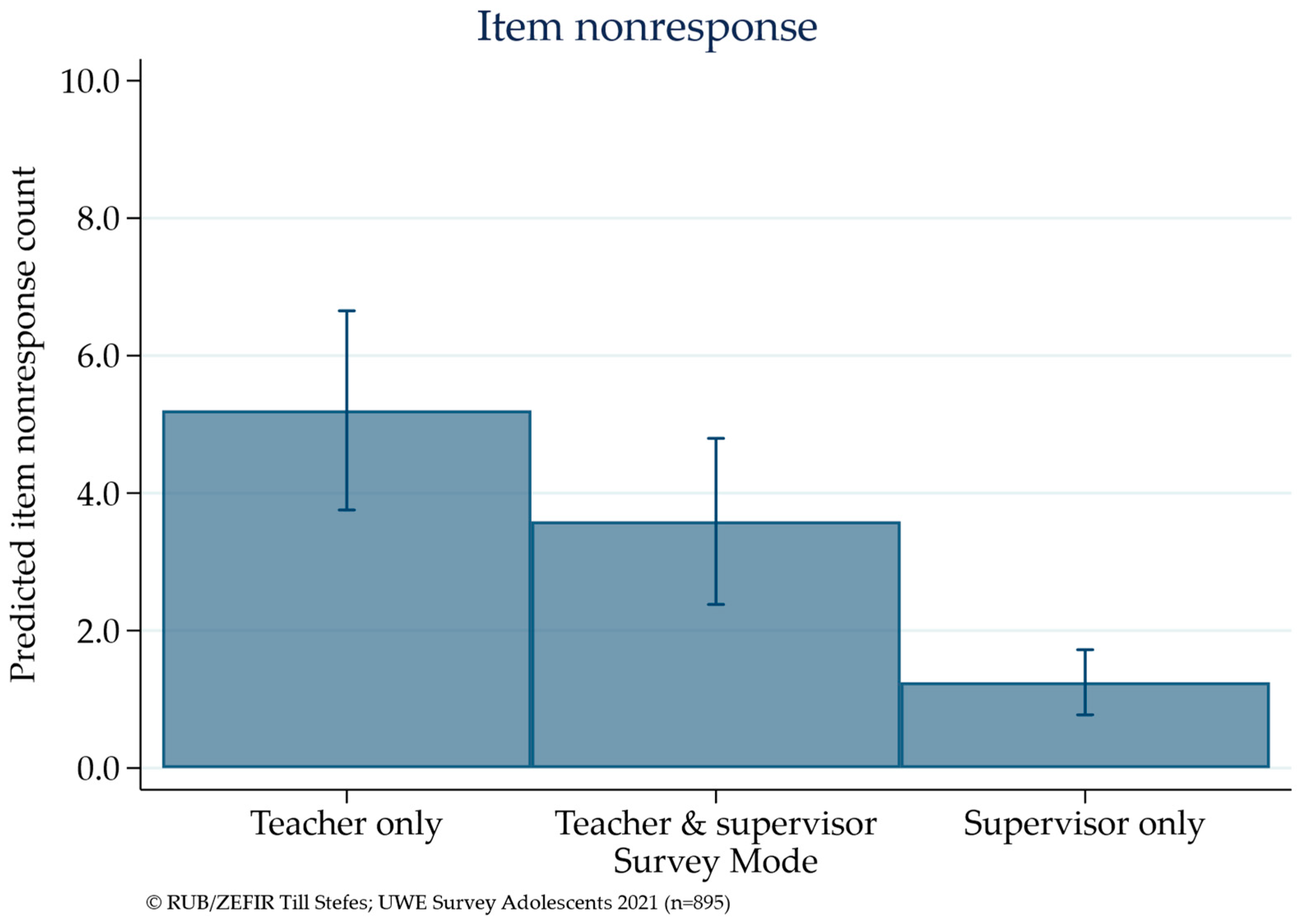

4.2. Item Nonresponse

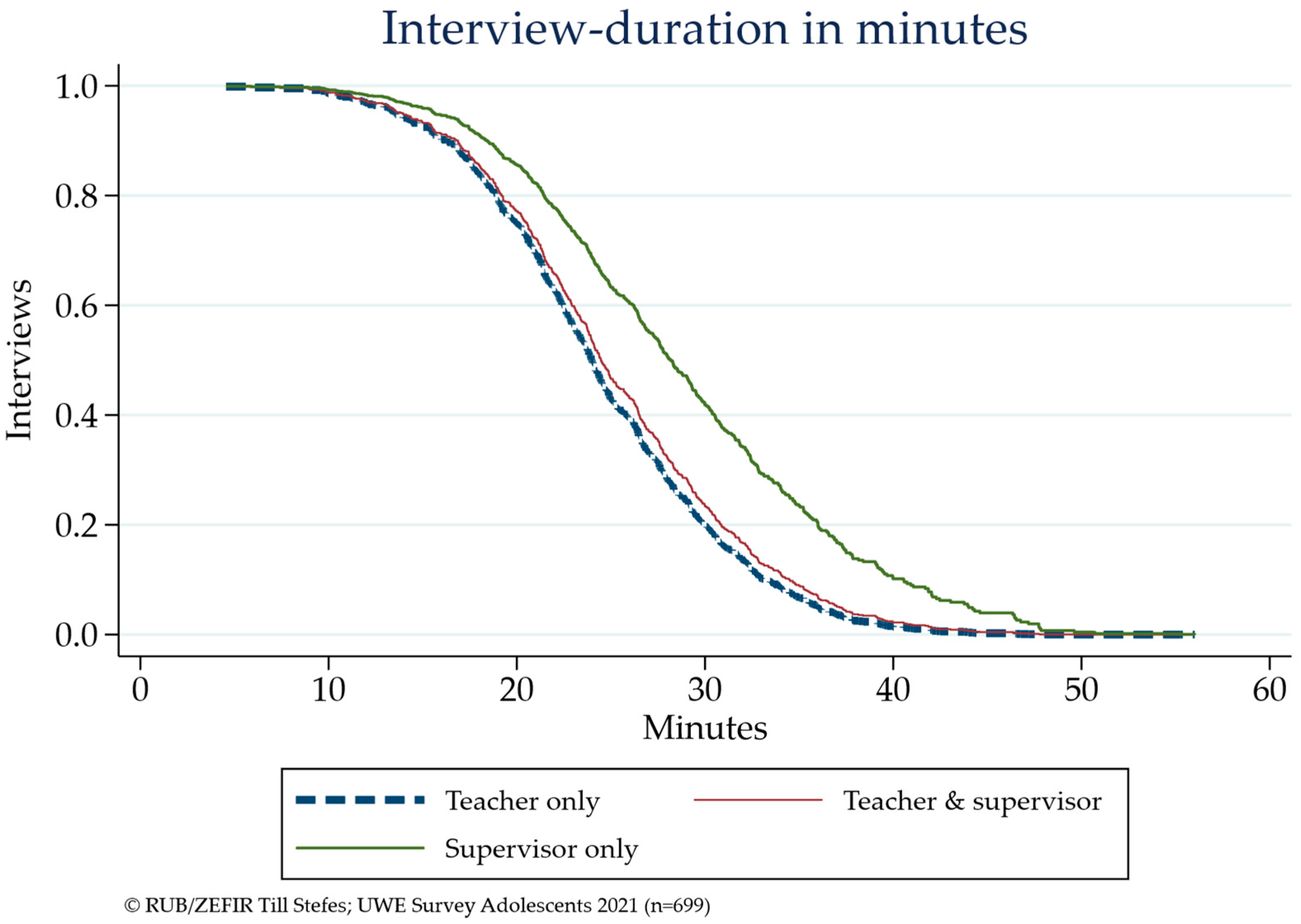

4.3. Interview Duration

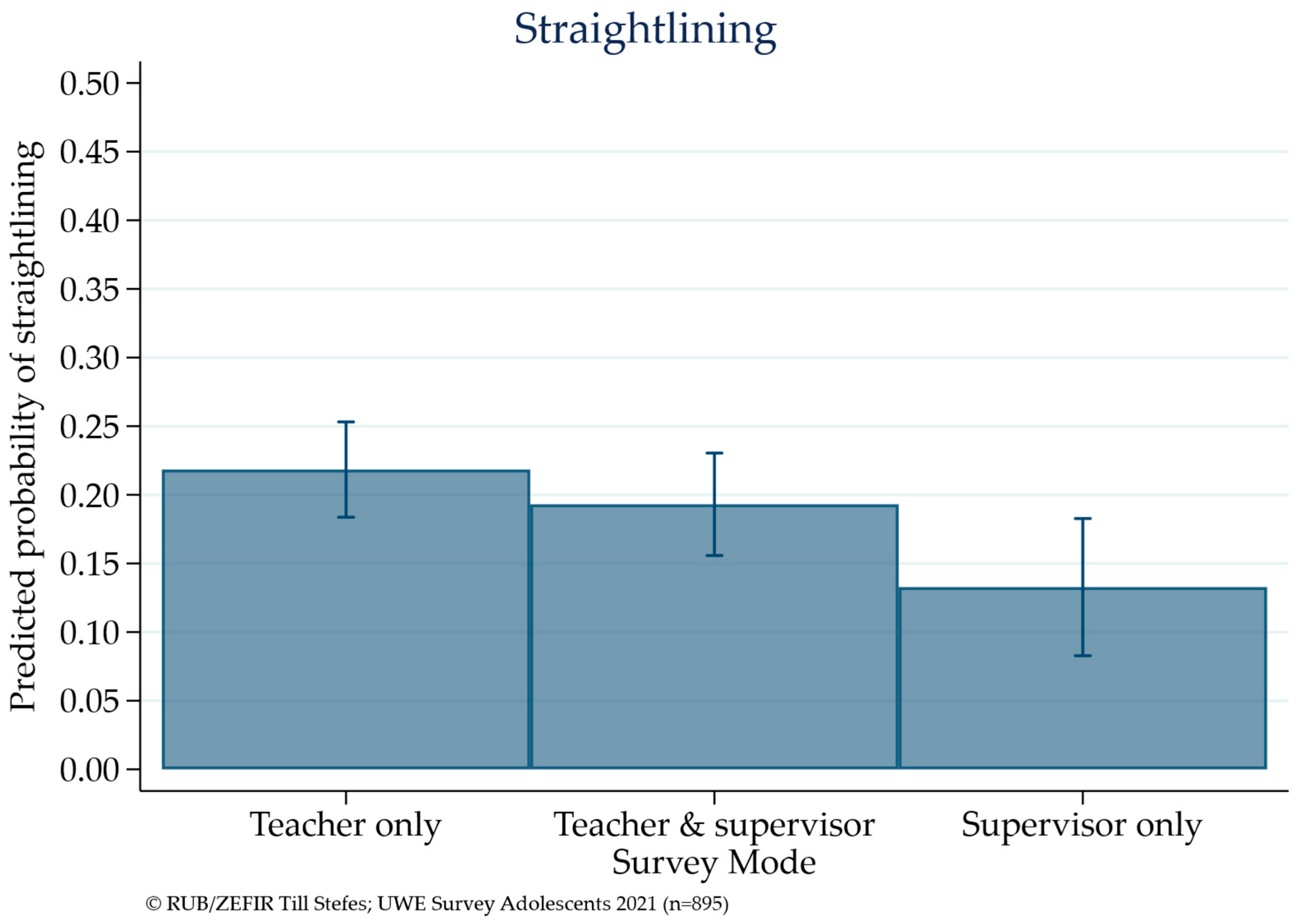

4.4. Straight-Lining

5. Discussion

5.1. Implications

5.2. Limitations

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable | Mean | SD | Min | Max | N |
|---|---|---|---|---|---|
| Individual Characteristics | | | | | |
| Age | 13.84 | 1.24 | 12 | 17 | 923 |
| Gender: Female | 0.54 | 0.50 | 0 | 1 | 923 |
| Literacy Problems: Yes | 0.02 | 0.15 | 0 | 1 | 923 |
| Migration Background: Yes | 0.41 | 0.49 | 0 | 1 | 923 |
| School: Comprehensive Secondary Track | 0.38 | 0.49 | 0 | 1 | 923 |
| School: High Secondary Track | 0.49 | 0.50 | 0 | 1 | 923 |
| School: Practical Secondary Track | 0.26 | 0.44 | 0 | 1 | 923 |
| Interview Characteristics | | | | | |
| Delivery: Web survey | 0.22 | 0.41 | 0 | 1 | 923 |
| Teacher only (A) | 0.49 | 0.50 | 0 | 1 | 923 |
| Teacher and supervisor (B) | 0.38 | 0.49 | 0 | 1 | 923 |
| Data Quality Indicators | | | | | |
| Drop-out: Yes | 0.04 | 0.19 | 0 | 1 | 923 |
| Item nonresponse count | 8.12 | 24.06 | 0 | 186 | 923 |
| Interview Duration (Minutes) | 21.03 | 10.76 | 2 | 56 | 923 |
| Occurrences of straight-lining | 0.28 | 0.66 | 0 | 4 | 923 |
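The table above lists four data quality indicators. Their exact operationalization is not given in this section, so the following Python sketch only illustrates one common way to derive such indicators from per-respondent answers and paradata. The `Respondent` record and its field names are hypothetical, and so is the rule that a grid counts as straight-lined only when all of its items (at least three) are answered with the same code; none of these are the study's stated definitions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Sequence


@dataclass
class Respondent:
    """Hypothetical per-respondent record; field names are illustrative only."""
    answers: Dict[str, Optional[int]] = field(default_factory=dict)  # item id -> code, None = skipped
    reached_end: bool = True   # False if the questionnaire was abandoned (drop-out)
    start_min: float = 0.0     # start/end timestamps in minutes, assumed available from paradata
    end_min: float = 0.0


def dropped_out(r: Respondent) -> bool:
    return not r.reached_end


def item_nonresponse_count(r: Respondent, shown_items: Sequence[str]) -> int:
    # Count items that were shown to the respondent but left unanswered.
    return sum(1 for item in shown_items if r.answers.get(item) is None)


def is_straight_lined(r: Respondent, grid: Sequence[str], min_items: int = 3) -> bool:
    # Assumed rule: every item of the grid answered, all with the same code.
    values = [r.answers.get(item) for item in grid]
    if len(values) < min_items or any(v is None for v in values):
        return False
    return len(set(values)) == 1


def straight_lining_occurrences(r: Respondent, grids: List[Sequence[str]]) -> int:
    # Number of grids in which the respondent straight-lined.
    return sum(is_straight_lined(r, grid) for grid in grids)


def interview_duration(r: Respondent) -> float:
    return r.end_min - r.start_min


if __name__ == "__main__":
    r = Respondent(
        answers={"q1": 2, "q2": 2, "q3": 2, "q4": None, "q5": 1, "q6": 4, "q7": 3},
        reached_end=True, start_min=0.0, end_min=21.5,
    )
    grids = [["q1", "q2", "q3"], ["q5", "q6", "q7"]]
    print(dropped_out(r), item_nonresponse_count(r, list(r.answers)),
          straight_lining_occurrences(r, grids), interview_duration(r))
    # prints: False 1 1 21.5
```

Per-respondent values computed this way could then be summarized into means, standard deviations, and ranges like those in the table.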

| School | (A) Teacher Only | (B) Teacher & Supervisor | (C) Supervisor Only | Response Rate |

|---|---|---|---|---|

| Comprehensive secondary track 1 | X | X | 11% | |

| Comprehensive secondary track 2 | X | 38% | ||

| Comprehensive secondary track 3 | X | 41% | ||

| High secondary track 1 | X | X | 49% | |

| High secondary track 2 | X | X | 77% | |

| High secondary track 3 | X | 64% | ||

| Practical secondary track 1 | X | 64% | ||

| Practical secondary track 2 | X | X | 53% | |

| Practical secondary track 3 | X | 37% |

| | Coef. | St. Err. | z-Value | p-Value | [95% Conf. | Interval] | Sig. |
|---|---|---|---|---|---|---|---|
| Respondent characteristics: | | | | | | | |
| Gender: Female | 0.069 | 0.341 | 0.20 | 0.84 | −0.6 | 0.737 | |
| Age | −0.140 | 0.153 | −0.92 | 0.36 | −0.439 | 0.16 | |
| Problems with reading German | 1.829 | 0.778 | 2.35 | 0.019 | 0.305 | 3.353 | ** |
| Migration background | 0.546 | 0.339 | 1.61 | 0.108 | −0.119 | 1.21 | |
| Interview characteristics: | | | | | | | |
| Web survey | 14.252 | 1.288 | 11.07 | 0.000 | 11.728 | 16.776 | *** |
| Teacher only (base) | - | - | - | - | - | - | |
| Teacher & supervisor | −1.331 | 0.487 | −2.73 | 0.006 | −2.286 | −0.376 | *** |
| Supervisor only | −0.579 | 0.706 | −0.82 | 0.412 | −1.963 | 0.804 | |
| School type: High secondary (base) | - | - | - | - | - | - | |
| Comprehensive secondary | 0.306 | 0.504 | 0.61 | 0.544 | −0.682 | 1.295 | |
| Practical secondary | −15.566 | 1.205 | −12.92 | 0.000 | −17.928 | −13.204 | *** |
| Constant | −1.072 | 2.152 | −0.50 | 0.618 | −5.289 | 3.145 | |
| Mean dependent var | 0.037 | SD dependent var | 0.188 | | | | |
| Pseudo r-squared | 0.097 | Number of obs | 923 | | | | |
| Chi-square | 513.943 | Prob > chi2 | 0.000 | | | | |
| Akaike crit. (AIC) | 283.499 | Bayesian crit. (BIC) | 331.840 | | | | |
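The coefficient tables report, for each predictor, the estimate, its standard error, a z-value, a p-value, and a 95% confidence interval. Assuming these are ordinary Wald statistics based on the normal approximation (the usual output for logit models), the last four columns follow from the first two, which gives a quick consistency check. For example, the z-value and interval printed for Age in the drop-out model (−0.92; −0.439 to 0.16) correspond to a coefficient of about −0.140 with a standard error of 0.153. The star thresholds in `stars` (p < 0.10, 0.05, 0.01) are inferred from the printed p-values and are not stated in this section; `odds_ratio` is included only to show how a logit coefficient translates into an odds ratio.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()
Z_CRIT = STD_NORMAL.inv_cdf(0.975)  # about 1.96 for a two-sided 95% interval


def wald_summary(coef: float, se: float):
    """Return (z, two-sided p, (lower, upper)) for a normal-approximation Wald test."""
    z = coef / se
    p = 2 * (1 - STD_NORMAL.cdf(abs(z)))
    return z, p, (coef - Z_CRIT * se, coef + Z_CRIT * se)


def stars(p: float) -> str:
    # Thresholds inferred from the tables (assumption, not stated in the text).
    if p < 0.01:
        return "***"
    if p < 0.05:
        return "**"
    if p < 0.10:
        return "*"
    return ""


def odds_ratio(coef: float) -> float:
    # A logit coefficient is a difference in log-odds; exponentiate it for the odds ratio.
    return math.exp(coef)


if __name__ == "__main__":
    # "Problems with reading German" row of the drop-out model: coef 1.829, SE 0.778.
    z, p, (lo, hi) = wald_summary(1.829, 0.778)
    print(f"z={z:.2f} p={p:.3f} CI=[{lo:.3f}, {hi:.3f}] {stars(p)} OR={odds_ratio(1.829):.2f}")
```

Small differences in the third decimal against the table are expected from rounding of the printed coefficient and standard error.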

| | Coef. | St. Err. | z-Value | p-Value | [95% Conf. | Interval] | Sig. |
|---|---|---|---|---|---|---|---|
| Respondent characteristics: | | | | | | | |
| Gender: Female | −0.165 | 0.171 | −0.97 | 0.335 | −0.499 | 0.17 | |
| Age | 0.02 | 0.057 | 0.35 | 0.729 | −0.092 | 0.132 | |
| Problems with reading German | −0.024 | 0.666 | −0.04 | 0.971 | −1.33 | 1.282 | |
| Migration background | 0.154 | 0.115 | 1.33 | 0.182 | −0.072 | 0.38 | |
| Interview characteristics: | | | | | | | |
| Web survey | −0.788 | 0.378 | −2.08 | 0.037 | −1.529 | −0.047 | ** |
| Teacher only (base) | - | - | - | - | - | - | |
| Teacher & supervisor | 0.179 | 0.223 | 0.80 | 0.422 | −0.259 | 0.617 | |
| Supervisor only | −0.42 | 0.241 | −1.74 | 0.081 | −0.892 | 0.052 | * |
| School type: High secondary (base) | - | - | - | - | - | - | |
| Comprehensive secondary | 0.817 | 0.242 | 3.38 | 0.001 | 0.343 | 1.292 | *** |
| Practical secondary | 1.86 | 0.388 | 4.79 | 0.000 | 1.099 | 2.62 | *** |
| Constant | 0.41 | 0.888 | 0.46 | 0.645 | −1.331 | 2.15 | |
| lnalpha | 0.475 | 0.101 | - | - | 0.278 | 0.672 | |
| Mean dependent var | 4.068 | SD dependent var | 9.815 | | | | |
| Pseudo r-squared | 0.042 | Number of obs | 895 | | | | |
| Chi-square | 169.814 | Prob > chi2 | 0.000 | | | | |
| Akaike crit. (AIC) | 4158.635 | Bayesian crit. (BIC) | 4211.400 | | | | |
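The item-nonresponse model reports an `lnalpha` row, which is how Stata's `nbreg` labels the log of the overdispersion parameter in its default NB2 parameterization. Assuming that specification, the conditional variance of the count is μ + αμ², so a positive α means more spread than a Poisson model allows. The sketch below, under that assumption, recovers α from `lnalpha` and turns coefficients and interval bounds into incidence rate ratios, i.e. the multiplicative change in the expected number of unanswered items relative to the base category, holding the other covariates fixed. The expected count of 4 used in the usage lines is a hypothetical value.

```python
import math


def incidence_rate_ratio(coef: float) -> float:
    # exp(b): multiplicative change in the expected count for a one-unit change in x.
    return math.exp(coef)


def alpha_from_lnalpha(lnalpha: float) -> float:
    return math.exp(lnalpha)


def nb2_variance(mu: float, alpha: float) -> float:
    # NB2 (assumed): Var(Y | x) = mu + alpha * mu**2; alpha -> 0 gives the Poisson case.
    return mu + alpha * mu ** 2


if __name__ == "__main__":
    alpha = alpha_from_lnalpha(0.475)
    print(f"alpha = {alpha:.3f}")
    for label, b, lo, hi in [("Web survey", -0.788, -1.529, -0.047),
                             ("Practical secondary", 1.86, 1.099, 2.62)]:
        # Interval bounds on the log scale can be exponentiated directly.
        print(f"{label}: IRR {incidence_rate_ratio(b):.2f} "
              f"[{incidence_rate_ratio(lo):.2f}, {incidence_rate_ratio(hi):.2f}]")
    # Hypothetical expected count of 4 missing items:
    print(f"variance at mu=4: {nb2_variance(4.0, alpha):.1f} (Poisson would give 4.0)")
```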

| | Hazard Ratio | Robust St. Err. | z-Value | p-Value | [95% Conf. | Interval] | Sig. |
|---|---|---|---|---|---|---|---|
| Respondent characteristics: | | | | | | | |
| Gender: Female | 0.99 | 0.075 | −0.14 | 0.892 | 0.853 | 1.149 | |
| Age | 1.206 | 0.049 | 4.60 | 0.000 | 1.114 | 1.307 | *** |
| Problems with reading German | 0.863 | 0.273 | −0.47 | 0.64 | 0.464 | 1.603 | |
| Migration background | 0.948 | 0.072 | −0.70 | 0.484 | 0.817 | 1.1 | |
| Interview characteristics: | | | | | | | |
| Teacher only (base) | - | - | - | - | - | - | |
| Teacher & supervisor | 0.921 | 0.123 | −0.61 | 0.54 | 0.709 | 1.198 | |
| Supervisor only | 0.561 | 0.125 | −2.60 | 0.009 | 0.362 | 0.867 | *** |
| School type: High secondary (base) | - | - | - | - | - | - | |
| Comprehensive secondary | 0.654 | 0.091 | −3.06 | 0.002 | 0.498 | 0.858 | *** |
| Practical secondary | 0.23 | 0.073 | −4.62 | 0.000 | 0.123 | 0.429 | *** |
| Mean dependent var | 25.654 | SD dependent var | 7.935 | | | | |
| Pseudo r-squared | 0.013 | Number of obs | 699 | | | | |
| Chi-square | 62.718 | Prob > chi2 | 0.000 | | | | |
| Akaike crit. (AIC) | 7683.672 | Bayesian crit. (BIC) | 7720.069 | | | | |
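The interview-duration model reports hazard ratios with robust standard errors on the ratio scale. Assuming these come from a Cox model in which the "event" is finishing the questionnaire, a hazard ratio below 1 means a lower completion rate at any point in time and hence longer interviews (for example, 0.561 for supervisor-only classes), while under the same reading the ratio of 1.206 for Age would mean that older students finished faster. Assuming further that the standard errors were obtained by the delta method, as Stata does for exponentiated coefficients, the log-scale standard error is se(HR)/HR; the sketch below computes the test and interval on the log scale and exponentiates the interval bounds.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()
Z_CRIT = STD_NORMAL.inv_cdf(0.975)


def hazard_ratio_wald(hr: float, se_hr: float):
    """Wald test and 95% CI for a hazard ratio whose SE is reported on the ratio scale."""
    b = math.log(hr)          # log hazard ratio (the Cox coefficient)
    se_b = se_hr / hr         # delta method (assumed): se(exp(b)) = exp(b) * se(b)
    z = b / se_b
    p = 2 * (1 - STD_NORMAL.cdf(abs(z)))
    ci = (math.exp(b - Z_CRIT * se_b), math.exp(b + Z_CRIT * se_b))
    return z, p, ci


def percent_change_in_hazard(hr: float) -> float:
    # Percentage change in the completion rate relative to the base category.
    return (hr - 1.0) * 100.0


if __name__ == "__main__":
    z, p, (lo, hi) = hazard_ratio_wald(0.561, 0.125)   # "Supervisor only" row
    print(f"z={z:.2f} p={p:.3f} CI=[{lo:.3f}, {hi:.3f}] "
          f"change in completion hazard: {percent_change_in_hazard(0.561):.1f}%")
```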

| | Coef. | St. Err. | z-Value | p-Value | [95% Conf. | Interval] | Sig. |
|---|---|---|---|---|---|---|---|
| Respondent characteristics: | | | | | | | |
| Gender: Female | −0.745 | 0.186 | −4.01 | 0.000 | −1.109 | −0.381 | *** |
| Age | −0.084 | 0.066 | −1.26 | 0.208 | −0.214 | 0.046 | |
| Problems with reading German | 0.261 | 0.456 | 0.57 | 0.568 | −0.633 | 1.154 | |
| Migration background | −0.113 | 0.16 | −0.71 | 0.478 | −0.427 | 0.2 | |
| Interview characteristics: | | | | | | | |
| Web survey | −0.234 | 0.292 | −0.80 | 0.424 | −0.806 | 0.339 | |
| Teacher only (base) | - | - | - | - | - | - | |
| Teacher & supervisor | −0.283 | 0.209 | −1.35 | 0.176 | −0.693 | 0.127 | |
| Supervisor only | −0.603 | 0.301 | −2.01 | 0.045 | −1.192 | −0.014 | ** |
| School type: High secondary (base) | - | - | - | - | - | - | |
| Comprehensive secondary | 0.107 | 0.24 | 0.45 | 0.656 | −0.363 | 0.577 | |
| Practical secondary | −0.03 | 0.287 | −0.10 | 0.917 | −0.594 | 0.533 | |
| Constant | 0.356 | 0.947 | 0.38 | 0.707 | −1.5 | 2.211 | |
| Mean dependent var | 0.198 | SD dependent var | 0.399 | | | | |
| Pseudo r-squared | 0.031 | Number of obs | 895 | | | | |
| Chi-square | 31.717 | Prob > chi2 | 0.000 | | | | |
| Akaike crit. (AIC) | 882.369 | Bayesian crit. (BIC) | 930.337 | | | | |
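Logit coefficients are on the log-odds scale, so what they mean in probability terms depends on the covariate profile. The sketch below plugs the straight-lining model's printed coefficients into the inverse logit for a hypothetical profile. It assumes that age enters in years without centering and that every other predictor is a 0/1 indicator coded as in the table; the dictionary keys and the chosen profile are illustrative and are not results reported by the study.

```python
import math
from typing import Dict

# Coefficients and constant as printed in the straight-lining table (keys are hypothetical names).
COEF: Dict[str, float] = {
    "female": -0.745, "age": -0.084, "reading_problems": 0.261, "migration": -0.113,
    "web_survey": -0.234, "teacher_and_supervisor": -0.283, "supervisor_only": -0.603,
    "comprehensive": 0.107, "practical": -0.03,
}
CONSTANT = 0.356


def inverse_logit(xb: float) -> float:
    return 1.0 / (1.0 + math.exp(-xb))


def predicted_probability(profile: Dict[str, float]) -> float:
    # Predictors missing from the profile are treated as 0 (the base categories).
    xb = CONSTANT + sum(COEF[name] * value for name, value in profile.items())
    return inverse_logit(xb)


if __name__ == "__main__":
    # Hypothetical profile: 14-year-old boy, paper questionnaire, teacher only, high secondary track.
    base = {"age": 14}
    with_supervisor = {**base, "supervisor_only": 1}
    print(f"teacher only:    {predicted_probability(base):.3f}")
    print(f"supervisor only: {predicted_probability(with_supervisor):.3f}")
```

Because the model is nonlinear, the same coefficient of −0.603 would translate into a different change in probability for a different profile.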

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Stefes, T. Data Quality of Different Modes of Supervision in Classroom Surveys. Educ. Sci. 2024, 14, 299. https://doi.org/10.3390/educsci14030299
