The Fallacy of Using the National Assessment Program–Literacy and Numeracy (NAPLAN) Data to Identify Australian High-Potential Gifted Students

Abstract

1. Introduction

2. Defining Giftedness and Talent in the Australian Context

Giftedness [emphasis in original] designates the possession and use of biologically anchored and informally developed outstanding natural abilities or aptitudes (called gifts), in at least one ability domain, to a degree that places an individual at least among the top 10% of age peers. Talent [emphasis in original] designates the outstanding mastery of systematically developed competencies (knowledge and skills) in at least one field of human activity to a degree that places an individual at least among the top 10% of ‘learning peers’, namely, those having accumulated a similar amount of learning time from either current or past training. (p. 10)

3. The Australian Context and Identification of Giftedness

4. The Australian National Assessment Program–Literacy and Numeracy (NAPLAN)

5. Australian School Processes for Identifying Giftedness

6. Discussion

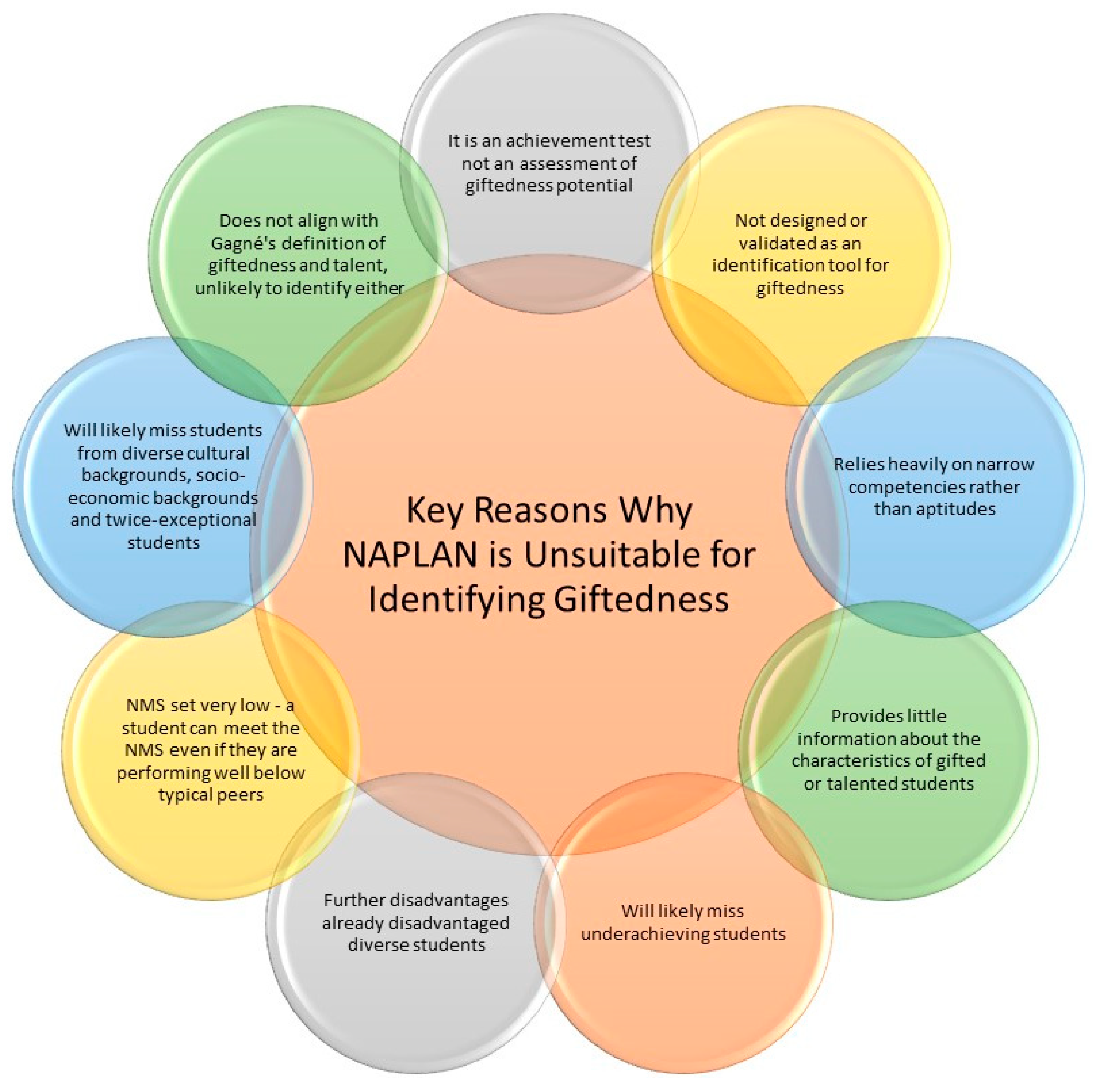

6.1. The Fallacy of Using NAPLAN Data to Identify Giftedness

6.2. Evidence of NAPLAN Use in Identification of Giftedness

6.3. Comprehensive Identification Practices and the Potential Role of NAPLAN

7. Limitations

8. Recommendations for Future Research

9. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gagné, F. Differentiating Giftedness from Talent: The DMGT Perspective on Talent Development; Routledge: London, UK, 2021.

- Ronksley-Pavia, M. A model of twice-exceptionality: Explaining and defining the apparent paradoxical combination of disability and giftedness in childhood. J. Educ. Gift. 2015, 38, 318–340.

- Gagné, F. Implementing the DMGT’s constructs of giftedness and talent: What, why and how? In Handbook of Giftedness and Talent Development in the Asia-Pacific; Smith, S.R., Ed.; Springer: Singapore, 2019; pp. 71–99.

- Ericsson, K.A. Training history, deliberate practice and elite sports performance: An analysis in response to Tucker and Collins review—What makes champions? Br. J. Sport. Med. 2013, 47, 533–535.

- Callahan, C.M. The characteristics of gifted and talented students. In Fundamentals of Gifted Education: Considering Multiple Perspectives, 2nd ed.; Callahan, C.M., Hertberg-Davis, H.L., Eds.; Routledge: New York, NY, USA, 2018; pp. 153–166.

- Gagné, F. The DMGT: The Core of My Professional Career in Talent Development. 2020. Available online: https://gagnefrancoys.wixsite.com/dmgt-mddt/the-dmgt-in-english (accessed on 7 August 2022).

- NAGC. Position Statement—Redefining Giftedness for a New Century: Shifting the Paradigm. 2010. Available online: http://www.nagc.org.442elmp01.blackmesh.com/sites/default/files/Position%20Statement/Redefining%20Giftedness%20for%20a%20New%20Century.pdf (accessed on 2 March 2022).

- Peeters, B. Thou shalt not be a tall poppy: Describing an Australian communicative (and behavioral) norm. Intercult. Pragmat. 2004, 1, 71–92.

- Ronksley-Pavia, M.; Grootenboer, P.; Pendergast, D. Privileging the voices of twice-exceptional children: An Exploration of lived experiences and stigma narratives. J. Educ. Gift. 2019, 42, 4–34.

- Pfeiffer, S.I. Essentials of Gifted Assessment, 1st ed.; Wiley & Sons: Hoboken, NJ, USA, 2015.

- McBee, M.T.; Peters, S.J.; Miller, E.M. The impact of the nomination stage on gifted program identification: A comprehensive psychometric analysis. Gift. Child Q. 2016, 60, 258–278.

- Flynn, J.R. Are We Getting Smarter? Rising IQ in the Twenty-First Century; Cambridge University Press: Cambridge, UK, 2012.

- Gould, S.J. The Mismeasure of Man; W. W. Norton: New York, NY, USA, 1996.

- Murdoch, S. IQ: A Smart History of a Failed Idea; Wiley: Hoboken, NJ, USA, 2007.

- Weiss, L.G.; Saklofske, D.H.; Holdnack, J.A. WISC-V: Clinical Use and Interpretation; Academic Press: London, UK, 2019.

- Dai, D.Y.; Sternberg, R.J. Motivation, Emotion, and Cognition: Integrative Perspectives on Intellectual Functioning and Development; Lawrence Erlbaum Associates Inc. Publishers: Mahwah, NJ, USA, 2004.

- Renzulli, J.S. Reexamining the role of gifted education and talent development for the 21st Century. Gift. Child Q. 2012, 56, 150–159.

- Sternberg, R.J. Is gifted education on the right path? In The SAGE Handbook of Gifted and Talented Education; Wallace, B., Sisk, D.A., Senior, J., Eds.; SAGE: London, UK, 2019; pp. 5–18.

- McIntosh, D.E.; Dixon, F.A.; Pierson, E.E. Use of intelligence tests in the identification of giftedness. In Contemporary Intellectual Assessment: Theories, Tests, and Issues; Flanagan, D.P., McDonough, E.M., Eds.; The Guilford Press: New York, NY, USA, 2018; pp. 587–607.

- NAGC. Position Statement: Use of the WISC-V for Gifted and Twice Exceptional Identification; NAGC: Washington, DC, USA, 2018.

- Sternberg, R.J.; Davidson, J.E. Conceptions of Giftedness, 2nd ed.; Cambridge University Press: Cambridge, UK, 2005.

- Newman, T.M.; Sparrow, S.S.; Pfeiffer, S.I. The Use of the WISC-IV in assessment and intervention planning for children who are gifted. In WISC-IV Clinical Assessment and Intervention, 2nd ed.; Prifitera, A., Saklofske, D.H., Weiss, L.G., Eds.; Academic Press: San Diego, CA, USA, 2008; pp. 217–272.

- Merrotsy, P. Gagné’s Differentiated Model of Giftedness and Talent in Australian education. Australas. J. Gift. Educ. 2017, 26, 29–42.

- McBee, M.T.; Makel, M.C. The quantitative implications of definitions of giftedness. AERA Open 2019, 5, 1–13.

- Australian Curriculum, Assessment and Reporting Authority (ACARA). NAP National Assessment Program. 2022. Available online: https://www.nap.edu.au/naplan/key-dates (accessed on 3 March 2023).

- Australian Curriculum, Assessment and Reporting Authority (ACARA). NAPLAN What’s in the Tests? 2016. Available online: https://www.nap.edu.au/naplan/whats-in-the-tests (accessed on 3 March 2023).

- Marks, G.N. Are school-SES effects statistical artefacts? Evidence from longitudinal population data. Oxf. Rev. Educ. 2015, 41, 122–144.

- Cumming, J.J.; Dickson, E. Educational accountability tests, social and legal inclusion approaches to discrimination for students with disability: A national case study from Australia. Assess Educ. 2013, 20, 221–239.

- Johnston, J. Australian NAPLAN testing: In what ways is this a ‘wicked’ problem? Improv. Sch. 2017, 20, 18–34.

- Australian Curriculum, Assessment and Reporting Authority (ACARA). NAPLAN: National Assessment Program—Literacy and Numeracy Infographic (V4-2). Available online: https://docs.acara.edu.au/resources/Acara_NAPLAN_Infographic.pdf (accessed on 3 March 2023).

- Lange, T.; Meaney, T. It’s just as well kids don’t vote: The positioning of children through public discourse around national testing. Math. Educ. Res. J. 2014, 26, 377–397.

- Rose, J.; Low-Choy, S.; Singh, P.; Vasco, D. NAPLAN discourses: A systematic review after the first decade. Discourse Stud. Cult. Politics Educ. 2020, 41, 871–886.

- Australian Curriculum, Assessment and Reporting Authority (ACARA). Connection to the Australian Curriculum. 2016. Available online: https://www.nap.edu.au/naplan/connection-to-the-australian-curriculum (accessed on 3 March 2023).

- Lingard, B.; Thompson, G.; Sellar, S. National testing from an Australian perspective. In National Testing in Schools: An Australian Assessment; Lingard, B., Thompson, G., Sellar, S., Eds.; Routledge: London, UK, 2016; pp. 3–17.

- Klenowski, V.; Wyatt-Smith, C. The impact of high stakes testing: The Australian story. Assess Educ. 2012, 19, 65–79.

- Thompson, G.; Mockler, N. Principals of audit: Testing, data and ‘implicated advocacy’. J. Educ. Adm. Hist. 2016, 48, 1–18.

- Wu, M.; Hornsby, D. Inappropriate uses of NAPLAN results. Pract. Prim. 2014, 19, 16–17.

- Lingard, B. Policy borrowing, policy learning: Testing times in Australian schooling. Crit. Stud. Educ. 2010, 51, 129–147.

- ACT Government. ACT Gifted and Talented Students Policy Appendix B: Identification Instruments. ACT Gifted and Talented Education Policy. 2021. Available online: https://www.education.act.gov.au/publications_and_policies/School-and-Corporate-Policies/access-and-equity/gifted-and-talented/gifted-and-talented-students-policy (accessed on 3 March 2023).

- ACT Government. ACT Gifted and Talented Students Policy. 2021. Available online: https://www.education.act.gov.au/publications_and_policies/School-and-Corporate-Policies/access-and-equity/gifted-and-talented/gifted-and-talented-students-policy (accessed on 3 March 2023).

- NSW Government. High Potential and Gifted Education Policy. 2019. Available online: https://education.nsw.gov.au/policy-library/policies/pd-2004-0051 (accessed on 3 March 2023).

- NSW Government. Assess and Identify. 2019. Available online: https://education.nsw.gov.au/teaching-and-learning/high-potential-and-gifted-education/supporting-educators/assess-and-identify#Assessment1 (accessed on 3 March 2023).

- Department of Education, Northern Territory Government of Australia. Gifted and Talented Students (G&T). 2022. Available online: https://education.nt.gov.au/support-for-teachers/student-diversity/gifted-and-talented-students (accessed on 3 March 2023).

- Department of Education, Queensland Government. Gifted and Talented Education. 2018. Available online: https://education.qld.gov.au/parents-and-carers/school-information/life-at-school/gifted-and-talented-education#:~:text=All%20Queensland%20state%20schools%20are,teachers%20in%20their%20local%20area (accessed on 3 March 2023).

- Department of Education, Queensland Government. P-12 Curriculum, Assessment and Reporting Framework (CARF) (Revised February 2022). 2022. Available online: https://education.qld.gov.au/curriculums/Documents/p-12-curriculum-assessment-reporting-framework.pdf (accessed on 3 March 2023).

- Department of Education, Government of South Australia. Student Support Programs—Gifted and Talented Education. 2020. Available online: https://www.sa.gov.au/topics/education-and-learning/curriculum-and-learning/student-support-programs (accessed on 3 March 2023).

- Department of Education, Tasmanian Government. Extended Learning for Gifted Students Procedure (Version 1.1—9/03/2022). 2022. Available online: https://publicdocumentcentre.education.tas.gov.au/library/Document%20Centre/Extended-Learning-for-Gifted-Students-Procedure.pdf (accessed on 3 March 2023).

- Department of Education, State Government of Victoria. Whole School Approach to High Ability. 2019. Available online: https://www.education.vic.gov.au/school/teachers/teachingresources/high-ability-toolkit/Pages/whole-school-approach-to-high-ability.aspx (accessed on 3 March 2023).

- Department of Education, State Government of Victoria. Identifying High-Ability. 2022. Available online: https://www.education.vic.gov.au/school/teachers/teachingresources/high-ability-toolkit/Pages/identifying-high-potential.aspx (accessed on 3 March 2023).

- Department of Education, Government of Western Australia. Gifted and Talented in Public Schools. 2018. Available online: https://www.education.wa.edu.au/dl/nl1dmpd (accessed on 3 March 2023).

- School Curriculum and Standards Authority, Government of Western Australia. Guidelines for the Acceleration of Students Pre-primary–Grade 10. 2020. Available online: https://k10outline.scsa.wa.edu.au/__data/assets/pdf_file/0010/637768/Guidelines_for_the_acceleration_of_students_Pre-primary_to_Grade_10.PDF (accessed on 3 March 2023).

- Bracken, B.A.; Brown, E.F. Behavioral identification and assessment of gifted and talented students. J. Psychoeduc. Assess 2006, 24, 112–122.

- Corwith, S.; Johnsen, S.; Cotabish, A.; Dailey, D.; Guilbault, K. Pre-K-Grade 12 Gifted Programming Standards. National Association for Gifted Children. 2019. Available online: http://nagc.org.442elmp01.blackmesh.com/resources-publications/resources/national-standards-gifted-and-talented-education/pre-k-grade-12 (accessed on 6 March 2023).

- Ronksley-Pavia, M.; Ronksley-Pavia, S. The role of primary health care providers in supporting a gifted child. Australian Journal of General Practice. Forthcoming.

- Nicpon, M.F.; Allmon, A.; Sieck, B.; Stinson, R.D. Empirical investigation of twice-exceptionality: Where have we been and where are we going? Gift. Child Q. 2011, 55, 3–17.

- Latifi, A. NAPLAN Results Being Used for Competitive Entry Programs. Illawarra Mercury. 30 April 2019. Available online: https://www.illawarramercury.com.au/story/6095472/naplan-results-being-used-for-competitive-entry-programs/ (accessed on 6 March 2023).

- Long, L.C.; Barnett, K.; Rogers, K.B. Exploring the relationship between principal, policy, and gifted program scope and quality. J. Educ. Gift. 2015, 38, 118–140.

- The National Assessment Program Literacy and Numeracy (NAPLAN); Australian Curriculum, Assessment and Reporting Authority (ACARA) Frequently Asked Questions—Individual Student Reports. 2022. Available online: https://nap.edu.au/docs/default-source/default-document-library/faq-individual-student-report.pdf (accessed on 20 March 2023).

- Jackson, R.L.; Jung, J.Y. The identification of gifted underachievement: Validity evidence for the commonly used methods. Br. J. Educ. Psychol. 2022, 92, 1133–1159.

- Haines, M.E. Opening the Doors of Possibility for Gifted/High-Ability Children with Learning Difficulties: Preliminary Assessment Strategies for Primary School Teachers; University of New England: Armidale, Australia, 2017.

- Goss, P.; Sonnemann, J. Widening Gaps: What NAPLAN Tells Us about Student Progress. 2016. Available online: http://www.grattan.edu.au/ (accessed on 21 August 2020).

- Dharmadasa, K.; Nakos, A.; Bament, J.; Edwards, A.; Reeves, H. Beyond NAPLAN testing: Nurturing mathematical talent. Aust. Math. Teach. 2014, 70, 22–27. Available online: https://files.eric.ed.gov/fulltext/EJ1093267.pdf (accessed on 21 August 2020).

- McGaw, B.; Louden, W.; Wyatt-Smith, C. November 2019 NAPLAN Review Interim Report Contents; NAPLAN: Sydney, Australia, 2020.

- Carey, M.D.; Davidow, S.; Williams, P. Re-imagining narrative writing and assessment: A post-NAPLAN craft-based rubric for creative writing. Aust. J. Lang. Lit. 2022, 45, 33–48.

- Wu, M. Inadequacies of NAPLAN Results for Measuring School Performance. Available online: https://www.aph.gov.au/DocumentStore.ashx?id=dab4b1dc-d4a7-47a6-bfc8-77c89c5e9f74 (accessed on 11 April 2023).

- Watson, K.; Handal, B.; Maher, M. NAPLAN Data Is not Comparable across School Years. The Conversation. 2016. Available online: https://theconversation.com/naplan-data-is-not-comparable-across-school-years-63703 (accessed on 11 April 2023).

- Australian Curriculum, Assessment and Reporting Authority (ACARA). My School Fact Sheet: Guide to Understanding Gain. 2015. Available online: https://docs.acara.edu.au/resources/Guide_to_understanding_gain.pdf (accessed on 11 April 2023).

- Parliament of Victoria, Education and Training Committee. Inquiry into the Education of Gifted and Talented Students; Parliamentary Paper No. 108, Session 2010–2012. 2012. Available online: https://www.parliament.vic.gov.au/images/stories/committees/etc/Past_Inquiries/EGTS_Inquiry/Final_Report/Gifted_and_Talented_Final_Report.pdf (accessed on 6 March 2023).

- Ronksley-Pavia, M. Gifted Identification Practices across a Random Sample of Australian Schools: A Pilot Project. Working Paper. 2023.

- Ronksley-Pavia, M. Personalised learning: Disability and gifted learners. In Teaching Primary Years: Rethinking Curriculum, Pedagogy and Assessment; Pendergast, D., Main, K., Eds.; Allen & Unwin: Crows Nest, Australia, 2019; pp. 422–442.

- ACER. Tests of Reading Comprehension (TORCH). 2013. Available online: https://shop.acer.org/tests-of-reading-comprehension-torch-third-edition.html (accessed on 6 March 2023).

- ACER. Progressive Achievement Tests (PAT). 2022. Available online: https://www.acer.org/au/pat (accessed on 6 March 2023).

| State/Territory | Specific Gifted Education Policy | Identification Notations | Identification Practices: Assessment Types Listed | Examples of Assessment Instruments Listed | Source/s |
|---|---|---|---|---|---|
| Australian Capital Territory (ACT) | Yes | Using data from multiple subjective and objective assessment measures of ability and achievement to identify potentially gifted and talented students. | Parent nomination checklists; teacher nomination checklists; external psychometric testing; school-based abilities testing; standardised achievement tests; parent observations; teacher observations; school work/reports. | Qualitative: Cognitive and Affective Rating Scales; student work and assessments; interviews. Quantitative: WISC-V, SB-5, Raven’s, Naglieri, PAT, TORCH, NAPLAN. Acceleration Assessments: IAS-Iowa Acceleration Scale, Renzulli Scales. Creativity Tests: Remote Association Task, Khatena-Torrance. Tests for Artistic Ability and Talent: Clark’s Drawing Abilities, Barron-Welsh Art Scale [39] | ACT Gifted and Talented Students Policy [40]; Appendix B: Identification Instruments [39] |
| New South Wales (NSW) | Yes | Objective, valid and reliable measures, as part of formative assessment, should be used to assess high potential and gifted students and identify their specific learning needs [41] | Ability tests, achievement tests, adaptive tests, rating scales, performance-based assessments, dynamic assessments, growth modelling assessments | None listed | High Potential and Gifted Education Policy [42] |
| Northern Territory (NT) | Yes | The department uses data and evidence to identify intellectual giftedness and/or academic talents by using both qualitative and quantitative identification tools [43] | Gifts (high potential): Rating scales, Checklists, Nominations, Standardised cognitive assessments. Talents (high performance): NAPLAN, Student achievement data/school reports, Portfolios of student work, Parent/teacher nomination | None listed | Gifted and talented students (G and T) [43] |
| Queensland (Qld) | No * | All Queensland state schools are committed to meeting learning needs of students who are gifted…The Department of Education has many awards, programs and initiatives to recognise students who demonstrate outstanding talents and show potential in academic and extracurricular activities [43] | None listed; no specific gifted and talented education policy, although P-12 CARF suggests use of “school wide processes to identify groups and individuals who require tailored support” [44] | None listed (no specific gifted and talented education policy). | Gifted and talented education [44], P-12 Curriculum, assessment and reporting framework (CARF) [45] |
| South Australia (SA) | No * | Government schools and preschools have programs for gifted and talented children as part of the standard curriculum. Specialised courses and programs: A number of schools offer specialised courses and programs for students: with a special interest; who are well ahead of their peers; demonstrating talent in a particular area. | None listed. | None listed | Student Support Programs–Gifted and talented education [46] |
| Tasmania (Tas) | Yes | Implement processes to identify and make appropriate provision for gifted students in their school, including acceleration procedures and early entry to kindergarten [47] | None listed. | Early Entry to School: WPPSI IV test required. None listed for other year levels. | Extended Learning for Gifted Students Procedure, Version 1.1 [47] |
| Victoria (Vic) | No *^ (Have a ‘high-ability toolkit’ and related webpages) | Identification should: begin as early as possible, be flexible and continuous, utilise many measures, highlight indicators of underachievement, be appropriate to age and stage of schooling. | List of measures: response to classroom activities, self-nomination, peer-nomination, teacher nomination, parent nomination, competition results, above-level tests, standardised tests of creative ability, standardised cognitive assessments (IQ tests), observations and anecdotes, checklists of traits, interviews (child or parent), academic grades. Assessment data (formative & summative): classroom-based assessment samples (e.g., tests, assignments), standardised achievement assessments (e.g., NAPLAN or the Progressive Achievement Tests–Reading/Mathematics), teacher observations, and/or other qualitative information, projects or portfolios, past assessment results (e.g., curriculum levels–previous year), above-level tests. | NAPLAN, Progressive Achievement Tests–Reading/Mathematics, Silverman’s checklist and exemplar, Merrick’s checklist and exemplar, Frasier’s TABs and exemplar, Assessment audit template | Whole school approach to high ability [48]; Identifying high-ability [49]; High ability toolkit [48] |
| Western Australia (WA) | Yes | Principals will plan and implement strategies to identify gifted and talented students. | Identification processes for gifted and talented students should: Be inclusive, be flexible and continuous, use information from a variety of sources, including classroom teacher observation and assessment, as well as knowledge obtained from other people (e.g., parents and peers). Help teacher identify a student’s intellectual strengths, artistic or linguistic talents, and social and emotional needs. Direct quality of the teaching and learning environment [50] | None for identification of gifted/talented students. For acceleration of students pre-primary to Year 10, examples: performance in classwork and classroom teacher observation, school assessments, information from other sources, such as parents and peers, IQ tests and psychological assessments, other standardised achievement tests, NAPLAN performance, Iowa Acceleration Scale, information about social-emotional readiness [51] | Gifted and Talented in Public Schools [50], Guidelines for the Acceleration of Students Pre-primary–Year 10 [51] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ronksley-Pavia, M. The Fallacy of Using the National Assessment Program–Literacy and Numeracy (NAPLAN) Data to Identify Australian High-Potential Gifted Students. Educ. Sci. 2023, 13, 421. https://doi.org/10.3390/educsci13040421