A Combination of Real-World Experiments and Augmented Reality When Learning about the States of Wax—An Eye-Tracking Study

Abstract

1. Introduction

2. Theoretical Background

3. Research Questions and Hypothesis

- What hypotheses do students formulate about what substance burns when a candle is lit?

- How are the transmission comprehension tasks in the burning candle experiment affected when using a learning environment with different augmented reality learning scenarios?

- How do the participants’ eyes move when using different augmented reality learning scenarios of the burning candle?

4. Materials and Methods

4.1. Learning Environment

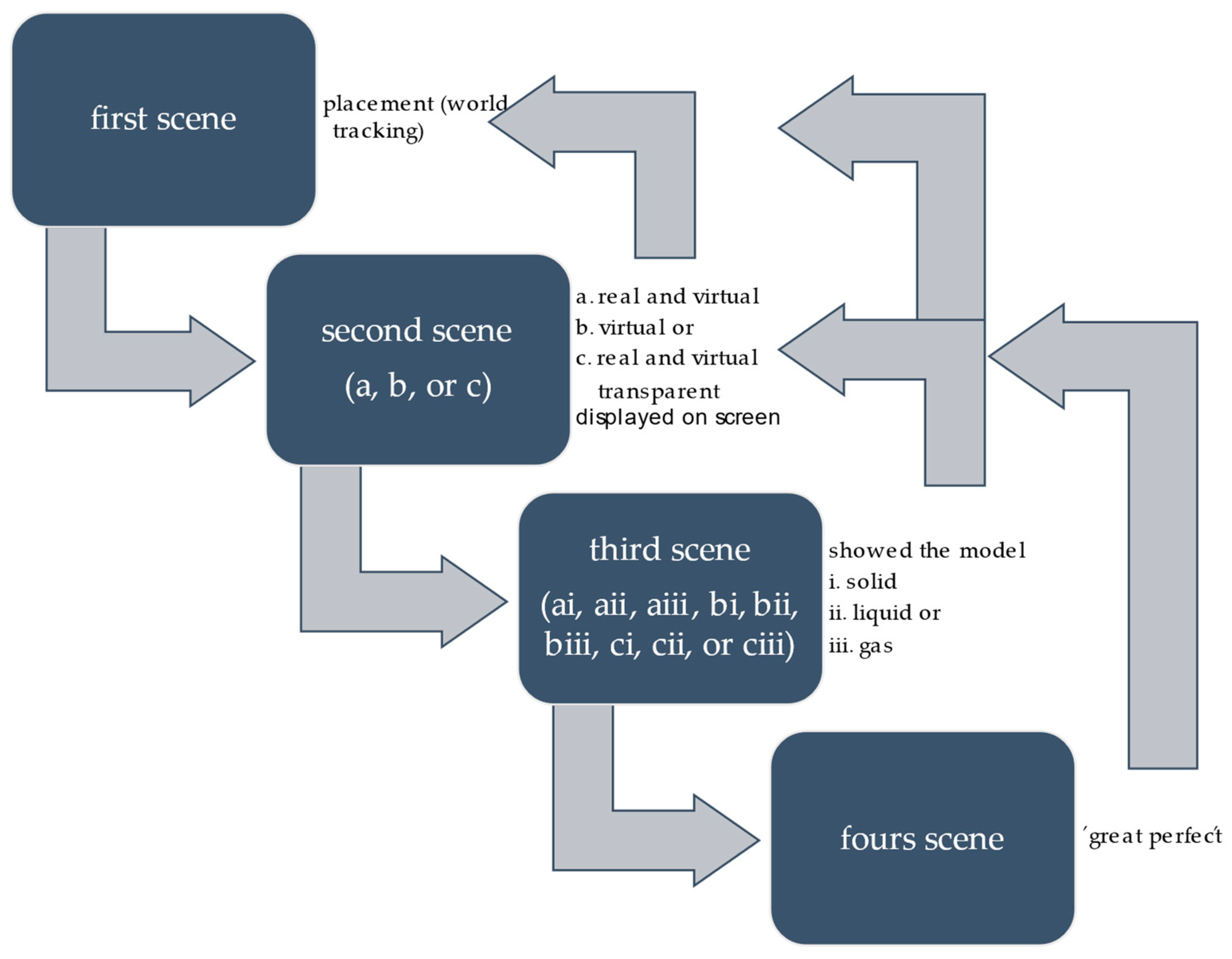

- First scene: Placing the virtual candle in front of the real candle on the table using world tracking within the Zappar app.

- Second scene: Three different versions are shown. Their AR contents share the same design parameters: ‘additivity’ (level = 2), ‘interactivity’ (level = 3), ‘complexity’ (level = 2), ‘immersion’ (indicator score of 1), ‘game elements’ (indicator score of 3), and ‘proximity to reality’ (indicator score of 2). They differ in ‘congruence with reality’: scenario (a) reaches an indicator score of 5, scenario (b) reaches an indicator score of 5, and scenario (c) reaches an indicator score of 4.

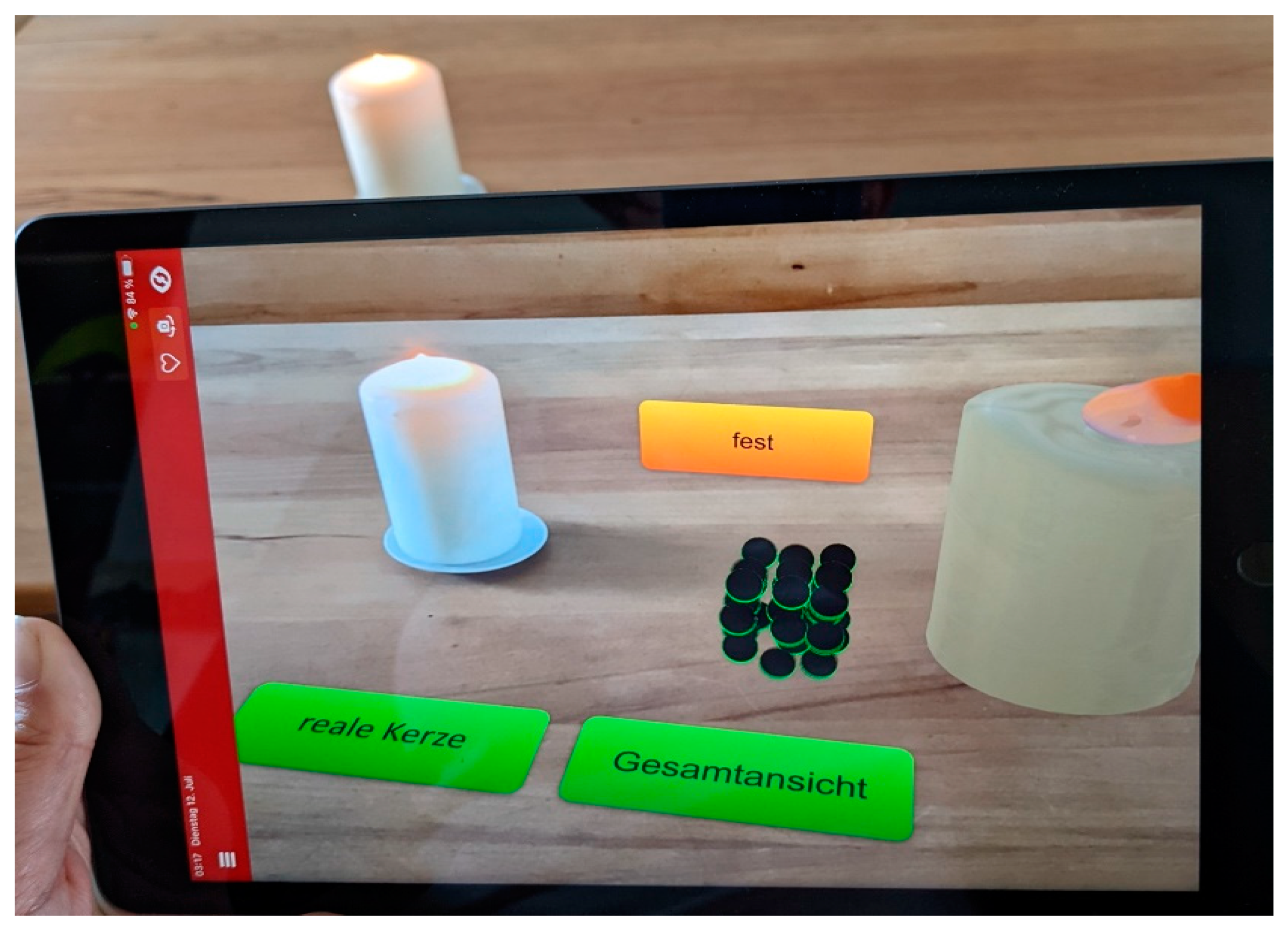

- Second (a) scene: The real candle is displayed on the screen (3D registration a). To its right are the solid, gas, and liquid buttons, and to the right of the buttons is the virtual candle.

- Second (b) scene: The real candle is not displayed on the screen but is instead concealed by the virtual candle (3D registration b). To the right of the virtual candle are the solid, gas, and liquid buttons.

- Second (c) scene: The real candle is displayed on the screen and is overlaid by a transparent virtual candle (3D registration b and photorealism loss). To its right are the solid, gas, and liquid buttons.

- Third scene: The model of a solid (i), a liquid (ii), or a gas (iii) is displayed, depending on which state of matter was selected by pressing a button. The model is placed at the position where the two lower buttons are visible in the second scene. The button that was pressed moves to the topmost button position of the second scene (see Figure 1, Figure 2 and Figure 3).

- Fourth scene: If the participant pressed the correct area of solid, liquid, or gas on the virtual candle in the third scene, a positive feedback message (‘great perfect’) appears on the screen. All scenes and their connections are shown in Figure 4.
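The scene sequence described above can be read as a small state machine. The following Python sketch is only an illustration of that flow and is not the Zappar implementation; the class `CandleFlow`, its method names, and the behaviour after a wrong tap (nothing happens) are assumptions made for this example.

```python
from enum import Enum, auto


class Scene(Enum):
    PLACE_CANDLE = auto()  # first scene: place the virtual candle
    CHOOSE_STATE = auto()  # second scene: variant (a), (b) or (c) with three buttons
    SHOW_MODEL = auto()    # third scene: model of the selected state of matter
    FEEDBACK = auto()      # fourth scene: positive feedback message


STATES = ("solid", "liquid", "gas")
VARIANTS = ("a", "b", "c")


class CandleFlow:
    """Hypothetical model of the four scenes; not taken from the app's code."""

    def __init__(self, variant: str) -> None:
        if variant not in VARIANTS:
            raise ValueError(f"unknown scenario variant: {variant!r}")
        self.variant = variant
        self.scene = Scene.PLACE_CANDLE
        self.selected = None
        self.message = None

    def place_candle(self) -> None:
        # First scene -> second scene.
        if self.scene is not Scene.PLACE_CANDLE:
            raise RuntimeError("candle can only be placed in the first scene")
        self.scene = Scene.CHOOSE_STATE

    def press_button(self, state: str) -> None:
        # Second scene -> third scene: the chosen state decides which model is shown.
        if self.scene is not Scene.CHOOSE_STATE:
            raise RuntimeError("buttons are only available in the second scene")
        if state not in STATES:
            raise ValueError(f"unknown state of matter: {state!r}")
        self.selected = state
        self.scene = Scene.SHOW_MODEL

    def tap_region(self, region_state: str) -> None:
        # Third scene -> fourth scene, only if the tapped candle area matches.
        if self.scene is not Scene.SHOW_MODEL:
            raise RuntimeError("candle areas can only be tapped in the third scene")
        if region_state == self.selected:
            self.message = "great perfect"
            self.scene = Scene.FEEDBACK
        # Assumption: a wrong tap leaves the third scene unchanged.


flow = CandleFlow("b")
flow.place_candle()
flow.press_button("liquid")
flow.tap_region("gas")     # wrong area, still in the third scene
flow.tap_region("liquid")  # correct area, feedback appears
print(flow.scene.name, flow.message)
```

The three scenario variants share this flow; they differ only in how the real and virtual candle are rendered in the second scene, which is why the variant is stored but does not affect the transitions.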

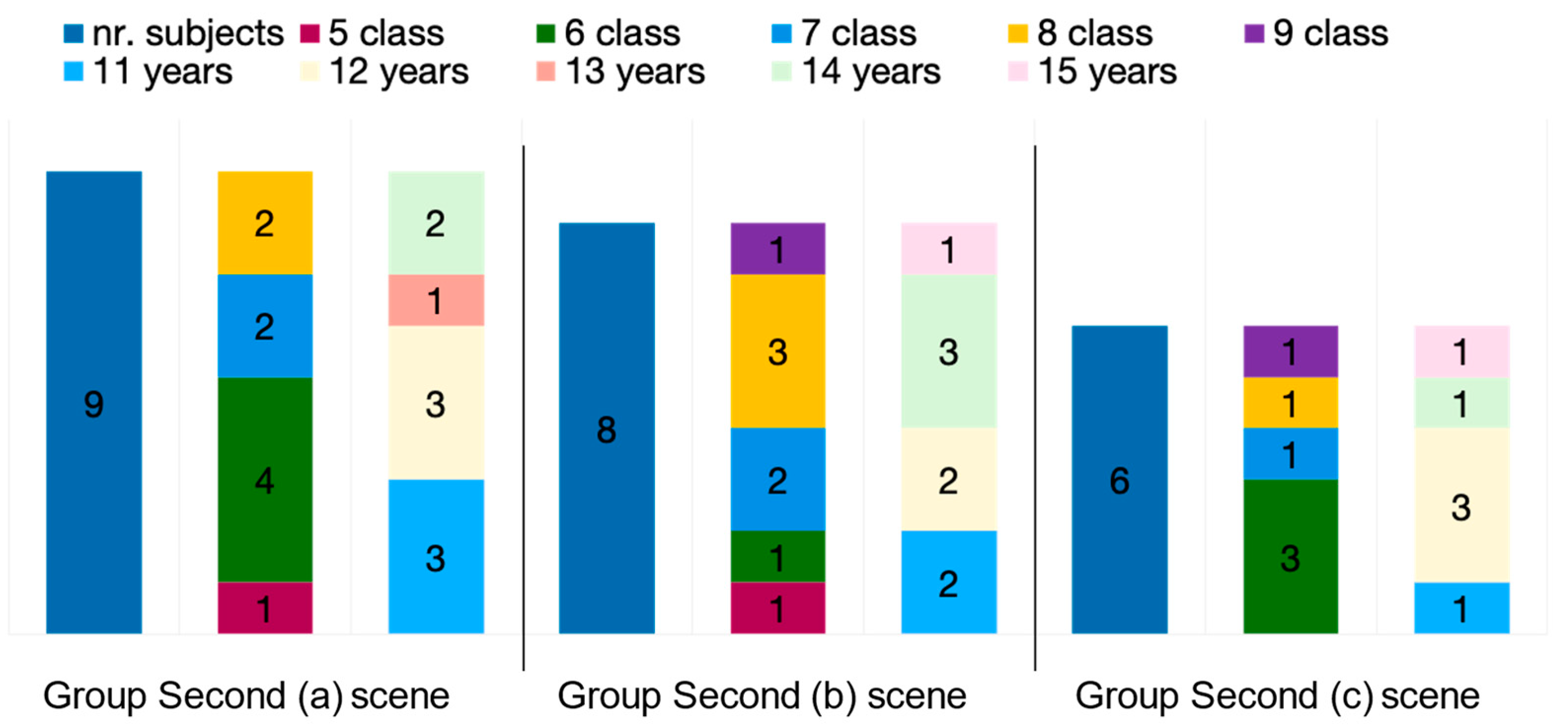

4.2. Context and Participants

4.3. Data Collection

5. Results and Discussion

5.1. RQ1 Hypothesis—Burning Candle

5.2. RQ2 Using AR

5.3. RQ3 Transfer Question

6. Conclusions and Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Scenario (Second Scene) | Real Candle off Screen | Real Candle on Screen | Virtual Candle |
|---|---|---|---|
| (a) (n = 9) | 22% | 100% | 89% |
| (b) (n = 8) | 88% | 63% | 100% |
| (c) (n = 6) | 50% | 100% | 100% |
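Percentages such as those in the table above are consistent with shares of participants per area of interest (AOI), for example 2 of 9 participants giving 22%. The following Python sketch shows how such shares could be computed under that reading. It is an assumption made for illustration, not the authors' analysis pipeline: the function names are hypothetical, and the per-participant flags are invented values chosen only so that they reproduce the row for scenario (a).

```python
def percent_half_up(count: int, total: int) -> int:
    """Share of `count` in `total` as a whole percentage, rounding halves up.

    Integer arithmetic avoids Python's round-half-to-even behaviour,
    which would turn 62.5 into 62 instead of 63.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    return (200 * count + total) // (2 * total)


def aoi_shares(looked: dict[str, list[bool]]) -> dict[str, int]:
    """Percentage of participants flagged True for each AOI."""
    return {
        aoi: percent_half_up(sum(flags), len(flags))
        for aoi, flags in looked.items()
    }


# Hypothetical flags for nine participants (True = AOI was looked at).
scenario_a = {
    "real candle off screen": [True] * 2 + [False] * 7,
    "real candle on screen": [True] * 9,
    "virtual candle": [True] * 8 + [False] * 1,
}

shares = aoi_shares(scenario_a)
for aoi, share in shares.items():
    print(f"{aoi}: {share}%")
```

With group sizes of 6 to 9, a single participant shifts a share by 11 to 17 percentage points, so differences between scenarios in this table should be read with that granularity in mind.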

| Scenario | Solid (i): Real Candle off Screen | Solid (i): Real Candle on Screen | Solid (i): Virtual Candle | Solid (i): Model | Liquid (ii): Real Candle off Screen | Liquid (ii): Real Candle on Screen | Liquid (ii): Virtual Candle | Liquid (ii): Model | Gas (iii): Real Candle off Screen | Gas (iii): Real Candle on Screen | Gas (iii): Virtual Candle | Gas (iii): Model |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| (a) (n = 9) | 0% | 22% | 100% | 100% | 0% | 11% | 100% | 44% | 11% | 22% | 100% | 56% |
| (b) (n = 8) | 13% | 25% | 100% | 100% | 38% | 50% | 100% | 50% | 13% | 38% | 100% | 100% |
| (c) (n = 6) | 0% | 100% | 100% | 50% | 17% | 100% | 100% | 33% | 0% | 67% | 83% | 50% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Syskowski, S.; Huwer, J. A Combination of Real-World Experiments and Augmented Reality When Learning about the States of Wax—An Eye-Tracking Study. Educ. Sci. 2023, 13, 177. https://doi.org/10.3390/educsci13020177
