Abstract

This study aims to address problems in science and technology education and to propose approaches for improving its effectiveness. Its specific goal was to ascertain how inquiry-based learning, when supported by instructional technology, raises student achievement and fosters students’ capacity for scientific inquiry. In this paper, we investigate a technology-supported intervention that enables students in a primary (elementary) school to actively generate and solve questions in a cycle of science inquiry. Using a question-generation technology platform together with a guiding pedagogical framework, the teachers purposefully leveraged students’ questions to design and implement a process in which students created and presented their inquiries. The questioning-driven dialogic exchanges took place in the classroom as well as in online interactions outside of class. Our empirical study, through quantitative and qualitative analysis, suggests a positive effect of student-generated questioning on students’ cognitive performance, as well as noteworthy differences in attitudes towards science between the experimental and control groups. The results support the value of encouraging students to generate questions for their inquiry and learning. We also highlight the importance of teachers’ awareness of technology-enabled pedagogical design and enactment, so that they can adapt to the profiles of students’ questioning and foster productive cognitive performance.

1. Introduction

In comparison to the research on teacher-guided questioning in science classrooms [1,2,3,4,5,6], there have been relatively few studies focusing on student-generated questioning [7,8,9,10,11]. Questioning is an essential component of effective learning and scientific investigation. Authors such as Biddulph, Symington, and Osborne (1986) and White and Gunstone (1992) have stressed the importance of student-generated questions in science learning [12,13]. From a constructivist perspective, generating questions helps students interpret new information and relate it to the knowledge they already possess [14] (pp. 25–32). Asking questions allows students to verbalize their thoughts, exchange views, and connect what they understand with other ideas [15] (pp. 854–870). Thus, meaningful learning occurs when learners build on existing conceptual knowledge that is continually negotiated and deepened through interpersonal exchanges, a view aligned with Bloom’s taxonomy [16].

Indeed, research has indicated that learning is potentially more effective when learners play an active role in classroom discourse [17] (pp. 33–42). However, the dominant voice in the classroom continues to be the teacher’s [18] (pp. 39–72). Newton and colleagues (1999) argue that, especially in science learning, learners need to practice raising and answering questions to develop an appreciation of the types of questions that scientists value [19] (pp. 553–576). If students are to truly understand the process of scientific thinking, they should be equipped with the skills to argue scientifically on the basis of observations and evidence. Moreover, in posing scientific questions, learners are required to reason, construct their own scientific arguments, and become fully immersed in the language of science [18,20].

The questioning process usually begins when a student notices and becomes intrigued by a scientific phenomenon, especially one that is new or does not fit the student’s current understanding. The curiosity may be expressed as questions such as “Why does this happen?”, “How does it happen?”, “How does this fit into what I already know?”, “Does it happen all the time or only sometimes?” or “What causes this to happen?” Although questions should ideally come from the students, teachers are usually the ones who formulate questions to guide students towards certain understandings. Even so, teachers can offer students opportunities to adjust the given questions, thereby sharing the learning process with them [21].

Yet student question-asking is often overlooked in science classrooms, as teachers tend to focus only on getting students to master answering questions [15,22]. These authors suggest that encouraging and teaching students to formulate questions creates the conditions for critical thinking to occur. This also means that students need to feel less pressure to know all the answers; only then will they feel free to pose questions. For this to happen, students need a gentle nudge in an environment in which they feel safe.

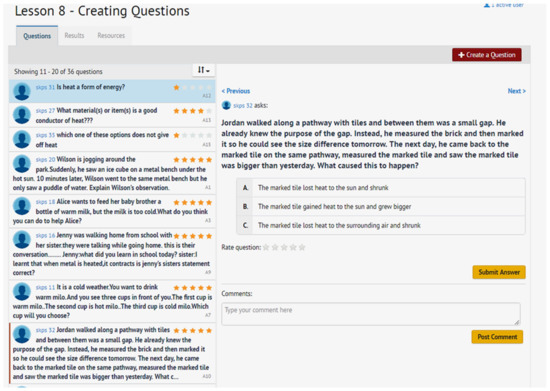

This paper is grounded in the constructivist approach to learning, in which students should adopt an active role in their learning process [23] (pp. 174–193). We made use of the Stanford Mobile Inquiry-based Learning Environment (SMILE) (Figure A1), whose interface supports posting, commenting on, and rating both open-ended and multiple-choice questions; this distinguishes our study from previous studies, which drew only on multiple-choice questions [24,25]. SMILE supports student-centered inquiry and thinking by intentionally creating opportunities for students to ask questions in an open-ended or multiple-choice format. As part of the lesson, students solve or respond to questions created by their peers and rate those questions. For the purpose of this study, we focus on the component of student-generated questioning, anchored in the guiding principles of constructivist theory. Student-generated questions are central to students’ active participation in the learning process, since through these experiences students can actively construct knowledge of concepts and processes [11,26,27]. As students draft and review their questions, they take on more responsibility for their own learning, in the spirit of investigation and constructivism [28] (pp. 59–67). Therefore, questions should not be generated exclusively by the teacher [22].

This study is significant because Singapore, where it took place, is known for its high ranking on international measures of educational attainment [29,30]; yet there are perceptions that its students are not inclined towards creativity or critical thinking [31,32]. Singapore has been widely critiqued for being a “results-oriented” system, with an overemphasis on achievement results and with learning driven by assessment at the expense of students’ genuine curiosity [33,34]. Koh has pointed out that these students may score well in exams but lack independent thinking; they are instead “examination-smart”, motivated to score well in examinations through rote learning rather than through genuine curiosity [31] (pp. 255–264). There is therefore a need to study students’ attitudes towards science when they are given the opportunity to take an active lead in their own learning through questioning, and the corresponding effects on their conceptual understanding.

1.1. Technology as Mediator for Scaffolding of Student-Generated Questioning

Despite its merits, student-generated questioning is challenging for educators to sustain. Dillon (1988) proposed that technology could serve as a mediator to help students develop their question-asking abilities [35] (pp. 197–210). The interactive nature of technology can help avoid the passivity of the traditional didactic model, in which students are taught through the direct transmission of knowledge.

Some studies have illustrated how technology may help increase students’ confidence in a subject [36] (pp. 295–299). According to Mativo, Womble, and Jones (2013), technology could help students undergo a more meaningful learning experience, especially at a young age [37] (pp. 103–115). The use of technology could also alleviate the anxiety experienced in certain subjects, such as math [38] (pp. 3–16). Students need to feel safe sharing their thoughts and questions, and technology could provide that safe space [39] (pp. 29–50). Nevertheless, technology should not be used for its own sake; it should be integrated into a curriculum purposefully, with sound pedagogy, to increase efficiency [40] (pp. 4–33). Before implementing a technological tool, one needs to consider its potential applicability and its projected costs and benefits for it to be successfully incorporated into educational practice [39] (pp. 29–50). Likewise, the teachers involved should feel at ease using the technology and receive the necessary professional development.

Students are not likely to ask questions spontaneously and need to be encouraged to do so [5] (pp. 1315–1346). With the right facilitative conditions, comprising relevant stimuli to trigger curiosity and the right support mechanisms such as computer-aided teacher systems, students are more inclined to ask questions [5] (pp. 1315–1346). Additionally, teachers need to employ targeted strategies and tasks with the use of technology to offer adequate guidance, structure, and focused goals [5,41].

Therefore, for student questioning to be meaningful, students need guidance and direction, especially to steer them towards more sustained and higher-order questions [8,11]. This process could be purposefully designed and integrated by providing scaffolds for questioning opportunities; however, educators may find it challenging to plan and design such scaffolding [11] (pp. 40–55). With these concerns in mind, and based on research that has drawn attention to the benefits that technology can offer as an educational tool, our study is premised on the idea that a technology-enhanced environment, paired with meaningful scaffolds, may be effective in providing a structure to guide student questioning [5,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43].

1.2. Purpose of the Present Study

Our objective was to design an intervention that would give students more ownership of their learning. Giving students a chance to raise questions is a good way to involve the class in areas they are curious about before the teacher continues with the rest of the syllabus. With that in mind, we formulated the following research questions (RQs):

RQ1: How does the questioning platform-enabled intervention affect students’ conceptual understanding towards science?

RQ2: How does the questioning platform-enabled intervention facilitate students’ questioning and learning?

RQ3: How do teachers perceive the intervention in supporting their teaching and student learning?

2. Methods

2.1. Participants

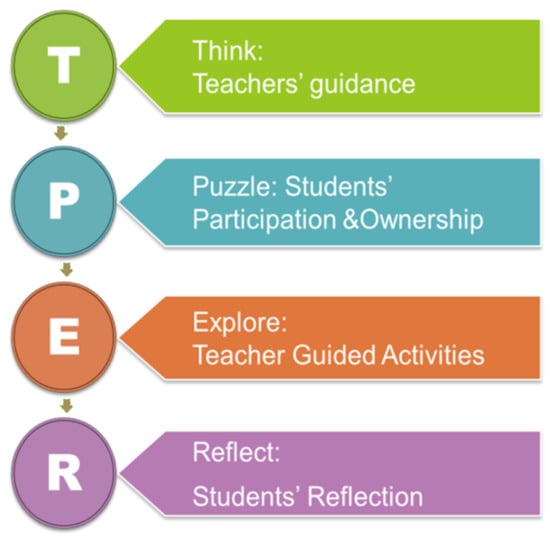

We used a mixed-methods research design to account for the complexity of the phenomena being studied and to corroborate our findings from multiple perspectives. The participants were 69 Primary Four students from a public primary school in Singapore, aged between 9 and 11 years. In this quasi-experimental design, students were divided into two groups, namely an experimental group and a control group. The experimental class had 37 students, while the control class had 32 students. During the study, the researchers worked closely with the experimental teacher (who had over 15 years of teaching experience) and her students to develop and enact lesson packages on topics related to heat, including heat transfer. The study took place over one semester. The teacher taught the experimental class with lesson designs based on the guiding pedagogical framework. The teacher of the control class also had over 15 years of teaching experience. He did not use the SMILE platform, TPER (Figure A2), or Bloom’s taxonomy as guiding models, and instead enacted the lessons in a more traditional, teacher-centered way. In his class, only a small proportion of students asked questions, in the conventional way of raising their hands. After the intervention, the lead teacher of the experimental group was interviewed by the researcher to obtain her perspectives. Regarding ethical considerations, her participation was voluntary and based on her consent, and she was informed that her participation in this study would be kept anonymous and confidential.

2.2. Instrument

We gathered both quantitative and qualitative data from the experimental and control classes. Data were collected using three instruments: (1) pre/post-tests on conceptual understanding of heat, (2) pre/post-surveys on students’ attitudes towards questioning and learning, and (3) interviews with participating teachers and some students.

The pre/post-tests contained questions on heat and temperature that covered both conceptual understanding and application. An example is “Tom’s mother takes out a tray of baked cookies from the oven. He accidentally touches the side of the metal baking tray and burns his finger. Tom wonders why the cookies are not as hot as the baking tray. Explain why Tom is burnt by the baking tray and not the cookies. Explain how the oven gloves protect her hands.”

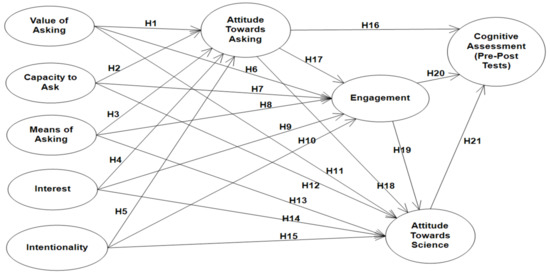

The pre/post-surveys were based on the work of Chin (2002) and Pittenger and Lounsbery (2011) and comprise 21 items on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree) [44,45]. These items can be mapped onto nine constructs: Value of Asking, Capacity to Ask, Means of Asking, Interest in Asking, Intentionality of Asking, Engagement in Asking, Attitude towards Asking, Attitude towards Science, and Cognitive Performance. The survey was assessed for internal consistency in terms of composite reliability, and satisfactory values were achieved for all constructs (ranging from 0.758 to 0.933). Discriminant validity was checked using the Fornell and Larcker (1981) criterion, and all constructs passed this test [46] (pp. 39–50).
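As an illustration of how composite reliability is typically computed, the minimal sketch below applies the standard formula CR = (Σλᵢ)² / ((Σλᵢ)² + Σ(1 − λᵢ²)) to standardized outer loadings λᵢ. The loadings are hypothetical values chosen for the example, not the study’s data.

```python
from typing import Sequence


def composite_reliability(loadings: Sequence[float]) -> float:
    """Composite reliability (rho_c) from standardized outer loadings."""
    if not loadings:
        raise ValueError("at least one loading is required")
    squared_sum = sum(loadings) ** 2
    # Error variance of each standardized indicator is 1 - loading^2
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return squared_sum / (squared_sum + error_variance)


# Hypothetical loadings for a three-item construct (illustrative only)
example_loadings = [0.82, 0.78, 0.88]
print(round(composite_reliability(example_loadings), 3))  # 0.867
```

A value of about 0.7 or higher is commonly treated as acceptable, so the reported range of 0.758 to 0.933 clears that bar.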

Value of Asking refers to the importance or usefulness of questioning, such as filling an identified knowledge gap, resolving an unforeseen puzzle, and confirming an expectation. Capacity to Ask is described as the number of questions asked by the students, and Means of Asking is the method used for questioning. Interest in Asking refers to the feeling of wanting to know or learn about questioning. A desire to question is regarded as Intentionality of Asking. Engagement in Asking is defined as students’ involvement in questioning that leads to learning outcomes, with three interdependent facets, i.e., emotion, behavior, and cognition [47] (pp. 17–32). Attitude towards Asking is an expression of the students’ favor or disfavor toward questioning, and Attitude towards Science is an expression of their favor or disfavor toward science as an academic discipline. Finally, Cognitive Performance is the students’ achievement on the given test.

Interview questions related to students’ experiences using SMILE and their perspectives on learning science. Other questions included “Previously before SMILE, did you have opportunity to ask questions?”, “Did SMILE help you in science learning?” and “Do you see an improvement in your science results? Is it expected?”

2.3. Analysis

To analyze the survey items and constructs, we constructed a hypothesized model, the SMILE-Ask model (Figure A3), consisting of the nine constructs and 21 hypotheses, following the studies of Chin and Osborne (2008) [9] (pp. 1–39). The exogenous latent variables were Value of Asking, Capacity to Ask, Means of Asking, Interest in Asking, and Intentionality of Asking, while the endogenous latent variables were Attitude towards Asking, Engagement in Asking, Attitude towards Science, and Cognitive Performance. The hypothesized model was estimated partly using PLS-SEM, a data analysis method that is suitable for small sample sizes. The software used was SPSS and SmartPLS.

The hypothesized model was assessed for the reliability and validity of its indicators. Indicator reliability for both the experimental and the control class was assessed in terms of item loadings. Almost all items exceeded Hair et al.’s (2014) threshold of 0.7 [48] (pp. 106–121), with the exception of ATT_QUES_Q3 (0.688), ATT_QUES_Q7 (0.605), and MEANS_QUES_Q16 (0.533) for the experimental class, and ATT_QUES_Q3 (0.678), ATT_QUES_Q7 (0.632), and MEANS_Q17 (0.686) for the control class; these items were nevertheless considered acceptable because their loadings were greater than 0.5. Validity was assessed in terms of two subtypes: convergent validity and discriminant validity. Convergent validity was evaluated using the Average Variance Extracted (AVE), which indicates whether a set of items corresponds to the same construct. Hair et al. (2014) proposed that the AVE of each construct should be greater than 0.5 [48] (pp. 106–121). In the current study, the AVE values of all constructs for both the experimental and the control class were greater than 0.5, suggesting that each latent variable explained more than 50% of the variance in its indicators. Discriminant validity was checked using the Fornell and Larcker (1981) criterion [46] (pp. 39–50), and all constructs passed this test.
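The AVE and Fornell–Larcker checks described above can be sketched as follows, assuming standardized loadings and a table of inter-construct correlations. AVE is the mean squared loading of a construct, and the Fornell–Larcker criterion requires the square root of each construct’s AVE to exceed that construct’s correlations with every other construct. The construct names, loadings, and correlation used here are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def fornell_larcker_holds(
    ave: Dict[str, float], correlations: Dict[Tuple[str, str], float]
) -> bool:
    """True if each construct's sqrt(AVE) exceeds its correlation with every other construct."""
    for (a, b), r in correlations.items():
        if abs(r) >= min(math.sqrt(ave[a]), math.sqrt(ave[b])):
            return False
    return True


# Hypothetical loadings and correlation (illustrative only)
ave = {
    "ValueOfAsking": average_variance_extracted([0.82, 0.78, 0.88]),
    "InterestInAsking": average_variance_extracted([0.75, 0.80, 0.71]),
}
print({name: round(v, 3) for name, v in ave.items()})
print(fornell_larcker_holds(ave, {("ValueOfAsking", "InterestInAsking"): 0.62}))
```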

2.4. Intervention Design

The intervention consists of three distinct parts that work together to promote student questioning with relevant scaffolding: the SMILE platform, a pedagogical model (Figure A2), and Bloom’s Taxonomy. We selected an existing educational technology platform, the Stanford Mobile Inquiry-based Learning Environment (SMILE), because it is free, ready and easy to use, and explicitly designed to support student-generated questioning across a variety of disciplines [25] (pp. 99–118). On the platform, teachers and students can use mobile devices such as iPads to create open-ended or multiple-choice questions. Students solve or respond to questions created by their teachers and peers, and they can “upvote” questions using a rating system (1–5) so that certain questions receive more attention. The conventional method of soliciting student questions, asking “does anyone have questions”, is often met with silence or dominated by the same few students; with SMILE, by contrast, students submit and answer questions simultaneously and independently, leading to increased participation. SMILE therefore seems well suited to easing students’ fear of speaking up in class and to making participation more equitable and efficient. Another affordance of the system is that it captures these data, which teachers can use to make immediate instructional decisions and to reflect after lessons. The voting system lets teachers see at a glance which questions are of greatest interest to students, whether from curiosity, puzzlement, or confusion (gaps in conceptual understanding).
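To make the rating mechanism concrete, here is a hypothetical sketch of how peer ratings on a 1–5 scale could be aggregated to surface the questions of greatest interest. It is not SMILE’s actual data model or API; the `Question` class, the `rank_questions` function, and the example questions are invented for illustration, and ranking by mean rating with ties broken by rating count is just one plausible choice.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class Question:
    """A student-posted question and the 1-5 ratings it has received (hypothetical model)."""
    text: str
    ratings: List[int] = field(default_factory=list)

    def rate(self, score: int) -> None:
        if not 1 <= score <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.ratings.append(score)

    @property
    def average_rating(self) -> float:
        return mean(self.ratings) if self.ratings else 0.0


def rank_questions(questions: List[Question]) -> List[Question]:
    """Order questions by mean rating, breaking ties by how many ratings they got."""
    return sorted(
        questions,
        key=lambda q: (q.average_rating, len(q.ratings)),
        reverse=True,
    )


q1 = Question("Why does a metal spoon get hot faster than a plastic one?")
q2 = Question("What is heat?")
q3 = Question("Why does the drinking bird dip its head?")
for score in (5, 4, 5):
    q1.rate(score)
for score in (3, 2):
    q2.rate(score)
for q in rank_questions([q2, q3, q1]):
    print(f"{q.average_rating:.2f}  {q.text}")
```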

Rather than treating questioning as pure discovery on one’s own, Mayer (2004) argued that there should be proper guidance, structure, and objectives in guiding student questions [41] (pp. 14–19). Empirical studies have shown that e-learning environments need suitable teacher guidance to benefit students, especially in science classrooms [49,50]. We used an enhanced version of Harvard Project Zero’s Think-Puzzle-Explore (TPE) thinking routine as the pedagogical framework for student questioning (Project Zero 2007). In addition, the teachers we worked with used Bloom’s Taxonomy to teach students how to ask questions that move beyond basic recall towards higher-order thinking.

Teacher A explains how the TPE model could be linked to student questioning: “This original idea came from the visible thinking framework, which is a pedagogical approach they call TPE. Last year, when we discussed the framework, we added another element, R.” R refers to the component of student reflection, where students reflect on what they have learnt from the lesson, or from the questions that they had posed.

In the “Think” component, teachers introduce a scientific concept and get students to think about it and generate hypotheses. “Puzzle” involves getting students to raise questions based on the kinds of puzzlement they encounter. “Explore” comes in when teachers present a phenomenon through an experiment or guided activity.

We co-designed three sessions of three lessons each for a unit on heat. At the start, the teacher introduced students to TPER and Bloom’s Taxonomy to help them understand the context of generating questions, with the aim of encouraging them to take more ownership of their questioning process. The topic taught was “Heat”, and the teachers introduced the concepts of heat using the story of Goldilocks and the Three Bears and their bowls of porridge. The story included initial teacher-guided questions such as “Why were the bowls of porridge of different temperatures?” and “How can you cool down the bowl of porridge more quickly?”

The lessons took place over a 10-week term. A typical lesson consisted of the following: (a) a class discussion in which a phenomenon related to heat was introduced and a problem statement was established through teacher guidance, (b) collection of data through a hands-on activity that addressed the phenomenon, (c) examination and evaluation of the data, with teachers reviewing the key concepts of the activity, and (d) students consolidating their results and solving questions on teacher-designed worksheets.

Students used SMILE to ask questions during the phase when they generate inquiries and when reviewing the concepts. Additionally, teachers used SMILE to prepare questions ahead of time for students to answer that checked their understanding. Students and teachers rated questions during the “Evaluate” phase. The three parts of the intervention—the SMILE technology platform, the TPER and Bloom’s Taxonomy frameworks, as well as the lesson routine with space for questioning—formed the hypothetical model that we tested to determine its impact on student questioning and conceptual understanding in an elementary science classroom.

3. Results

3.1. RQ1: How Does the Questioning Platform Enabled Intervention Affect Students’ Conceptual Understanding towards Science?

As shown in Table A1 (Appendix A), the independent-samples t-test indicates no significant difference between the two classes in PreMCQ (F = 0.24, p > 0.05), PreOE (F = 1.96, p > 0.05), or PreTotal (F = 1.96, p > 0.05). Paired-samples t-tests were then used to examine each class’s learning improvement from pre-test to post-test. As shown in Table A1, the experimental class showed significant gains in Multiple Choice Questions (MCQ) (t = 5.36 ***, p < 0.001), Open-ended questions (t = 2.09 *, p < 0.05), and Total (t = 6.22 ***, p < 0.001). The control class showed significant gains in MCQ (t = 3.16 **, p < 0.01) and Total (t = 2.705 *, p < 0.05), but no significant gain on open-ended questions (t = 0.161, p > 0.05). These results indicate that the experimental class improved significantly in all three sections, whereas the control class did not improve significantly on open-ended questions; the clearest difference therefore lies in students’ ability to answer open-ended questions. The results suggest that the SMILE-integrated lessons had a positive influence on the experimental students’ understanding of the topic.
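A paired-samples t-test works on each student’s pre-to-post difference within one class: t is the mean difference divided by its standard error, with n − 1 degrees of freedom. The minimal sketch below uses hypothetical scores, not the study’s data. Comparing the two classes’ improvement directly would instead call for a between-group test on gain scores, such as an independent-samples t-test.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def paired_t(pre: Sequence[float], post: Sequence[float]) -> float:
    """Paired-samples t statistic for pre/post scores from the same students."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    # t = mean difference / (standard deviation of differences / sqrt(n)), df = n - 1
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


# Hypothetical scores for five students (illustrative only)
pre_scores = [10, 12, 9, 14, 11]
post_scores = [13, 14, 12, 15, 13]
print(round(paired_t(pre_scores, post_scores), 2))  # 5.88, df = 4
```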

Students also used SMILE to prepare for examinations and acknowledged that it was a practical study tool. Seeing that it could help them improve their science scores gave them an added reason to use SMILE:

“Some of us log in at home to watch videos, helpful videos that teachers post. For example, teachers ask us to post our mind maps. For example, I wrote 5 points for example, he wrote 10. I can see his learning points, so I have 15 more learning points. For him, he can see mine, if there is difference.”

Some students said that this was reflected in the improvement in their assessment scores:

“I think SMILE really helped a lot. A lot of people actually improved a lot. My friend had 60 something for her science for semester 1. Semester 2 she jumped to 80. So I think it helped a lot.”

Beyond helping them in their studies, SMILE made students excited to tell others about the tool and, as a result, to share what they had learnt through it. They were enthusiastic to “share with [their] friends and family that little bit in science”. In fact, some of the questions that their friends asked made them more curious, and they took the initiative to find out more:

“[…] Yes, because it’s interesting and you don’t actually know, it makes you want to ask more questions about it. You actually get really curious about it after that.

[…] Everybody is shy to ask teacher, so you can do it there, so you don’t have to go up to the teacher and ask, you can type it in and post it.

[…] If you ask questions, you get to know the subject or topic more easily, and there will be a flow.”

SMILE also enhanced peer learning, which played a vital part in their learning:

“[…] Because other people will also ask questions, then when you see the questions, you might not know how to answer it. Then you want to find out as well. Then nobody knows it, so you really want to find out, how do you answer the question.”

Students recognized that their peers could similarly benefit from their own questions:

“We were going through a science worksheet and I didn’t know if my answer was correct, so when I asked, I was sitting quite far from the teacher, the whole class could hear the question so they would be learning what’s wrong and what’s right.”

3.2. RQ2: How Does the Questioning Platform Enabled Intervention Facilitate Students’ Questioning and Learning?

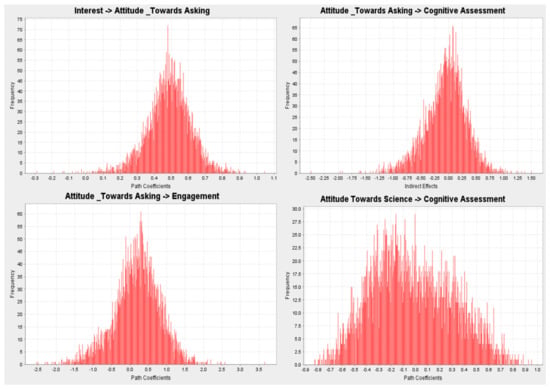

The relationships between constructs in the hypothesized model were assessed using SmartPLS in terms of path coefficients, coefficients of determination (R²), and effect sizes (f²), and their statistical significance was examined with t-values using a two-tailed test at a significance level of p < 0.05. These assessments for the experimental and control classes are presented in Table A2 and Table A3, respectively.

The coefficient of determination (R²) of each endogenous variable was used to assess the predictive strength of the respective model. For the experimental class, the model explained 76.2% of the variance in Attitude towards Asking, 69.9% of the variance in Engagement in Asking, and 52.5% of the variance in Attitude towards Science; these values were deemed substantial. It also explained 57.8% of the variance in Cognitive Performance, which was considered strong. For the control class, the model explained 86.5% of the variance in Attitude towards Asking and 68.5% of the variance in Capacity to Ask, both considered substantial, while the 30.2% of variance explained in Interest in Asking was considered moderate.

Effect size (f²) was employed to evaluate the influence of a predictor construct on an endogenous construct. According to Cohen (1988), f² values of 0.02, 0.15, and 0.35 represent small, medium, and large effect sizes, respectively. For the experimental class (Table A2), a large effect size was observed between Attitude towards Asking and Engagement in Asking (f² = 0.406), and medium effect sizes between Attitude towards Asking and Attitude towards Science (f² = 0.305), between Interest in Asking and Attitude towards Asking (f² = 0.242), and between Attitude towards Science and Cognitive Performance (f² = 0.205). For the control class (Table A3), large effect sizes were observed between Value of Asking and Attitude towards Asking (f² = 0.707) and between Interest in Asking and Attitude towards Asking (f² = 0.535), and a medium effect size between Capacity to Ask and Attitude towards Asking (f² = 0.305).
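The f² statistic compares the variance explained in an endogenous construct with and without a given predictor: f² = (R²_included − R²_excluded) / (1 − R²_included). The sketch below computes it for hypothetical R² values and applies Cohen’s (1988) benchmarks to the experimental-class values reported above; the function names are illustrative, not part of any particular package.

```python
def f_squared(r2_included: float, r2_excluded: float) -> float:
    """Cohen's f^2 for one predictor: the R^2 lost when that predictor is omitted."""
    return (r2_included - r2_excluded) / (1 - r2_included)


def cohen_label(f2: float) -> str:
    """Classify f^2 with Cohen's (1988) benchmarks of 0.02, 0.15 and 0.35."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# Hypothetical R^2 values with and without one predictor (illustrative only)
print(round(f_squared(0.60, 0.50), 3))  # 0.25

# Labels for the experimental-class f^2 values reported above
for f2 in (0.305, 0.242, 0.205, 0.406):
    print(f2, cohen_label(f2))
```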

As shown in Figure A4, the t-values between the conceptual constructs were examined using a two-tailed test, bootstrapping with 5000 samples in SmartPLS. Bootstrapping was used to test the relevance and significance of the structural model relationships. A t-value greater than 1.645 was taken as significant at the 0.05 level. There was a significant positive effect of Interest in Asking on Attitude towards Asking for the experimental class (t = 2.876), suggesting that the experimental class was sufficiently motivated by the interest generated in their science classes. For example, one student from the experimental class explained, “Yes, you want to find out more, like why, like how does it go through the metal, what is inside the metal that makes it go through”. There was also a significant positive effect of Attitude towards Asking on Engagement in Asking (t = 0.305) for the experimental class, but not for the control class. This suggests that the experimental class had a positive attitude towards asking questions, which led to greater engagement in posing questions. One student from the experimental class shared that SMILE provided a conducive platform for her to ask questions, especially because she was “shy”: “Everybody is shy to ask the teacher, so you can do it there, so you don’t have to go up to the teacher and ask, you can type it in and post it. They don’t know if it’s you are not, it is not an embarrassment if you ask not that good a question. Because they don’t know your register number. So, when you say that, the teacher will reply, they help us how to find out”.

For the experimental class, there was also a significant positive effect of Attitude towards Asking on Cognitive Performance (t = 3.185). One student shared that he was excited about the new science knowledge he had learned and was eager to “also share with [his] friends and family, that little bit in science”. Attitude towards Science also had a significant positive effect on Cognitive Performance (t = 2.967). This suggests that SMILE was instrumental in the intervention with the experimental class. Describing the science lessons using SMILE, one student from the experimental class said: “You get a little bit of a fun way to learn science”. Such positive effects were not found in the control class.
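The bootstrap t-values reported above can be illustrated with a simplified sketch. SmartPLS estimates path coefficients with the PLS algorithm on latent-variable scores; here, as an assumption for illustration, a single standardized path is approximated by the Pearson correlation between two hypothetical score vectors. The t-value is the original estimate divided by the standard deviation of its 5000 bootstrap re-estimates (the bootstrap standard error). For reference, conventional critical values at α = 0.05 are about 1.96 for a two-tailed test and 1.645 for a one-tailed test.

```python
import math
import random
from statistics import stdev
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; equals the standardized path for a single predictor."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bootstrap_t(x: Sequence[float], y: Sequence[float],
                n_boot: int = 5000, seed: int = 42) -> float:
    """Original estimate divided by the standard deviation of bootstrap estimates."""
    rng = random.Random(seed)
    n = len(x)
    estimate = pearson_r(x, y)
    boot_estimates: List[float] = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        xs = [x[i] for i in idx]
        ys = [y[i] for i in idx]
        if len(set(xs)) < 2 or len(set(ys)) < 2:
            continue  # skip degenerate resamples with no variance
        boot_estimates.append(pearson_r(xs, ys))
    return estimate / stdev(boot_estimates)


# Hypothetical item scores for 37 students (illustrative only)
gen = random.Random(0)
interest = [gen.randint(1, 5) for _ in range(37)]
attitude = [score + gen.choice([-1, 0, 0, 1]) for score in interest]
t_value = bootstrap_t(interest, attitude)
print(round(t_value, 2), t_value > 1.96)
```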

3.3. RQ3: How Do Teachers Perceive the Intervention in Their Teaching and in Supporting Student Learning?

The teachers used students’ questions as a gauge to identify and address students’ misconceptions. Another strategy was to use students’ questions to trigger curiosity and as a basis for teaching new concepts. In our interview, the teacher explained: “The pupils don’t ask enough questions. Generally, they don’t know what kind of questions to ask, they are not asking enough questions, not thinking deep enough, to what is happening when we are doing experiments… there is that intentional part of teaching questioning, and the different levels of questioning, and they are able to use all these questions in their lesson, by asking questions and thinking deep into things, and that actually helps them with their thought process.”

The value of questioning, she added, is “important” as it “actually triggers thought. Because inquiry is about being curious about things, and when you are generally curious about things, you will have questions to ask about things, because you may not understand fully how things work. You want to find out more about something, so a question is something that you ask, so that you can think deeper, how come certain things work in this manner, why is this happening, why do I observe this; these are important aspects of inquiry. It would lead to deeper understanding. Greater learning.”

The teacher also used SMILE to pose questions for students to answer as a check for understanding. Another use was to initiate the question-asking process with a trigger. In the following example, the teacher showed the class a “drinking bird”, a toy used to illustrate the concepts of Expansion and Contraction. She did not explain right away how the toy functions; instead, she capitalized on the students’ curiosity by giving them time to share on SMILE how they thought the drinking bird worked.

“T: Goldilocks went to her grandma’s house and found this funny little toy. Which is the toy I am holding now. (class laughs) This is a drinking bird. What happens is that after some time in a hot place, [the bird gets] thirsty. When the weather is hot, it will be thirsty. What will happen? (Think)”

The class discusses, gets excited and cheers.

“T: Go into SMILE now. Why did this bird tip over and start drinking when it’s hot? What do you think causes it to happen? (Puzzle)

S: Can we rate also? [Gets excited]

(Teacher capitalizes on the students’ excitement)

T: We are not going to explore whose answer is correct, whose answer is not right yet. Because you are going to learn something new about heat. Ready? We are going to learn something new before we verify if our answers are correct. SMILE is meant for you to write down, based on what you understand on what you know and what causes the bird to drink water.”

After giving students time to generate ideas and questions about the drinking toy, the teacher demonstrated how the toy worked and explained the mechanism behind its drinking action. (Explore)

The teachers also reviewed students’ questions in class as a form of lesson consolidation and reflection.

“S: In the comments, our teachers told us to type like, this is how you can improve your question, or try to make it more specific and encouraging.”

One of the two participating teachers found it useful that the questions raised by the students were visible to everyone in the class and stored on the SMILE platform. She pointed out that questions expressed verbally could be discussed only once and were, therefore, ephemeral. With SMILE, students could access the platform at any time, even after school hours, to review questions and answer their peers’ questions. The teacher also reviewed the questions, addressed any misconceptions, and suggested how students could better phrase their questions or respond to their peers. She also felt that the platform allowed teachers to hear from shy students and to get a more complete picture of the students’ level of understanding: “So that is the advantage. Because some questions I may not hear in the class but I see it in the system”.

4. Discussion

4.1. How Does a Technology-Enhanced Platform That Facilitates Student-Generated Questioning Affect Students’ Conceptual Understanding and Attitudes towards Science?

The cognitive tests given before and after the intervention, as well as the semester exams, showed a positive effect, which suggests a correlation between the use of SMILE + TPER/Bloom’s taxonomy and learning gains. What factors might have contributed to this effect? Teachers regularly used SMILE to pose model questions that all students were required to answer, and students then posted their own questions for peers to respond to. Perhaps it was this cognitive stimulus, and the response it drew from the entire class rather than from just one or a few students, that contributed to the learning gains [51]. The teachers’ questions functioned as models for students to pose similar ones for their peers to answer, thereby reinforcing conceptual understanding through more practice. In particular, Bloom’s taxonomy was used to give students a vocabulary for writing questions as exam practice. This supports research showing the effectiveness of having students generate test questions as a study tool, grounded in the theory of metacognition [52,53,54]. However, it was unclear whether the improved learning outcomes were due to questions generated by teachers or by students, or which had the greater impact and why [55].

There was limited evidence that student-generated questioning shifted instructional practice from teacher-directed learning to learning that empowered students to take ownership. While teachers did structure lessons using TPER and directed students to use SMILE to generate questions, there was no evidence that these questions led students to conduct their own investigations or influenced the direction of the teacher-led demonstration. In the vignette where a student did ask a question (“Which state are humans?”), it took place during live interaction, not on SMILE.

The results from the student survey and interviews indicated that SMILE was effective in engaging students during science lessons. This may be because the novelty of using technology to engage differently with teachers and peers aroused students’ excitement, leading to increased participation and engagement. This is consistent with the findings of a 2015 study conducted at one of the leading institutions of digital learning in Russia. Among the 225 educators and 814 students surveyed, about 95% of educators and 97% of students believed that e-learning was appropriate for their learning [56]. In addition, 87.6% of the educators felt that digital technologies could offer additional opportunities for engaging students, and 81% were confident that digital learning could positively impact students’ learning outcomes. Students also viewed digital learning positively, citing the advantages of regular access to learning materials (97.4%) and of online tests and homework (85.4%).

However, we did not see any positive effect of using SMILE on students taking ownership of their learning. The students’ questions were narrowly confined to questions resembling exam questions, rather than reflecting their own curiosity. This may be because the students were echoing the teacher-guided questions, which were designed for conceptual understanding. As the implementation spanned 10 weeks, the students had likely not yet developed the skills needed to take their questions to a deeper level. As Williams and Otrel-Cass (2017) observed, technology can open up possibilities for student ownership, but for this to be viable, teacher scaffolds need to be faded out incrementally so that students can find their own authentic voice [57] (pp. 88–107).

Technology can motivate students toward learning and taking ownership; in our study, students were motivated by using SMILE and asked several questions about their learning. What was missing was the cognitive level of the questions, as most students asked exam-related or teacher-guided questions. This is a considerable concern, as the level of students’ questions remained very low. Ideally, students’ questions should span the cognitive dimensions from low to high level. Bloom’s taxonomy provides a widely used description of these low- to high-level cognitive dimensions [58] (pp. 258–266). In its revised form, the taxonomy divides the cognitive dimension into six areas grouped into two levels, low and high. The low-level areas are remembering, understanding, and applying, and the high-level areas are analyzing, evaluating, and creating [59] (pp. 1–6).

Each area is associated with characteristic actions. Name, list, and define fall under remembering; interpret, explain, and summarize under understanding; and interpret, solve, and use under applying. All of these actions indicate the low-level cognitive dimensions [59] (pp. 1–6). Meanwhile, compare, analyze, and distinguish fall under analyzing; judge, evaluate, and criticize under evaluating; and construct, design, and devise under creating. All of these actions indicate the high-level cognitive dimensions [60].
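To make the verb lists above concrete, the following Python sketch tags a question with the cognitive levels whose example verbs it contains. It is a hypothetical keyword heuristic built only from the verbs named in the preceding paragraph (the names `BLOOM_VERBS` and `tag_question` are illustrative); it is not the coding procedure used in this study, and reliable coding of question levels still requires human judgment.

```python
from __future__ import annotations

# Hypothetical lookup built only from the example verbs in the text above.
BLOOM_VERBS = {
    "remembering": {"name", "list", "define"},
    "understanding": {"interpret", "explain", "summarize"},
    "applying": {"interpret", "solve", "use"},  # "interpret" is listed under two areas
    "analyzing": {"compare", "analyze", "distinguish"},
    "evaluating": {"judge", "evaluate", "criticize"},
    "creating": {"construct", "design", "devise"},
}
HIGH_LEVEL = {"analyzing", "evaluating", "creating"}


def tag_question(question: str) -> tuple[str | None, list[str]]:
    """Return ("high" | "low" | None, matched areas) for one question."""
    words = {w.strip("?.,!;:\"'").lower() for w in question.split()}
    areas = [area for area, verbs in BLOOM_VERBS.items() if verbs & words]
    if not areas:
        return None, []  # no listed verb found; a human coder must decide
    tier = "high" if any(area in HIGH_LEVEL for area in areas) else "low"
    return tier, areas


if __name__ == "__main__":
    for q in [
        "Define expansion.",
        "Compare expansion and contraction in the drinking bird.",
        "Why did this bird tip over and start drinking when it's hot?",
    ]:
        print(q, "->", tag_question(q))
```

The third question, taken from the drinking-bird lesson, contains none of the listed verbs and is left untagged, which illustrates why such a tagger could at most pre-sort questions for a teacher's review.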

Crowe et al. (2008) developed a tool for biology teachers based on Bloom’s taxonomy, named the Blooming Biology Tool (BBT) [61] (pp. 368–381). The tool was intended to help teachers incorporate cognitive dimensions into their teaching when developing learning activities. The authors claimed that it would help students develop higher-level cognitive skills.

Christenbury and Kelly (1983) proposed another higher-order questioning strategy, the questioning circle model [62]. The model divides questioning into three circles: the first is the subject matter, which relates to the study material; the second is the personal matter, which relates to students’ responses and reactions to the material; and the third is the external environment or reality, which links the subject matter with the outside world and other disciplines [63] (p. 30). The questioning circle encourages deep thinking and self-directed learning by prompting students, after analyzing the material, to share their reflections and perceptions about the factors affecting their learning [62] (p. 19).

Teachers use the questioning circle strategy to draw students into dialogue and discussion, which helps them reach an in-depth understanding of the subject. The teacher asks questions from each circle separately and then interlinks them: text-only questions, reader reaction/response-only questions, and discipline-only questions; questions relating text and reader, reader and world, and text and world; and dense questions, which draw on all three circles, to foster higher-order thinking [62] (p. 24).
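The seven question types listed above correspond to the non-empty combinations of the three circles. The short Python sketch below enumerates them as a planning aid; the circle labels `text`, `reader`, and `world` are shorthand assumed here for the subject matter, the personal matter, and external reality, respectively.

```python
from __future__ import annotations

from itertools import combinations

# Shorthand labels (assumed here) for the three circles:
# text = subject matter, reader = personal matter, world = external reality.
CIRCLES = ("text", "reader", "world")


def question_types() -> list[tuple[str, tuple[str, ...]]]:
    """Enumerate every non-empty combination of the three circles."""
    types = []
    for size in range(1, len(CIRCLES) + 1):
        for combo in combinations(CIRCLES, size):
            # The overlap of all three circles is the "dense" question.
            label = "dense" if size == len(CIRCLES) else "+".join(combo)
            types.append((label, combo))
    return types


if __name__ == "__main__":
    for label, circles in question_types():
        print(f"{label:12s} draws on: {', '.join(circles)}")
```

Ordering the output by the number of circles involved mirrors the progression described in the text, from single-circle questions toward the dense question at the center.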

4.2. How Did Teachers Perceive the Role of the Intervention in Their Teaching and in Supporting Student Learning?

While teachers wanted students to ask more questions to express their curiosity, they ended up using SMILE primarily to pose questions for students to answer, or to have students generate questions similar to those on test papers. There is a tension between encouraging divergent questions, which explore new possibilities and drive discussion, and convergent questions, which focus on clarifying one’s thinking to arrive at “correct” explanations. Teachers need to be conscious of their instructional methods, the strategies they use to elicit questions, and their wielding of classroom power [64] (pp. 745–768). Reinsvold and Cochran (2012) cautioned about the complexities of teacher–student dynamics in question-asking and discussed how classroom discourse tended to be controlled by the teacher, especially when teachers were accustomed to traditional power interactions. Such interactions can often lead to convergent, closed-ended questions [63] (p. 30). While we intended the intervention to challenge and reshape conventional teaching practices, including roles and goals, the teachers co-opted the tool and frameworks to fit their needs and agenda. In a school system driven by high-stakes exams, teachers are expected to focus investigations and classroom discourse on achieving certain conceptual understandings so that students can demonstrate these in exams.

What teachers found most useful about SMILE was its ability to get more students to participate actively in class, and its use as a formative assessment tool that provided data for forming a picture of students’ conceptions through their questions, answers, and ratings. These uses extended the teachers’ ability to engage students and to fine-tune their teaching to address misconceptions, which may have improved students’ learning.

4.3. Implications

We set out to design an intervention as an alternative to teacher-directed practices, while still demonstrating learning gains using traditional assessments. The intervention was also grounded in established theories about the importance of students asking questions. While the learning gains and increased student engagement may persuade stakeholders in high-achieving school systems to consider adopting the intervention, it must be noted that the questions generated by students primarily served the function of exam preparation. One can argue that the intervention was successful because the teachers recognized how to adapt it to serve their primary objective.

Our study shows that the intervention enabled students to build conceptual understanding, an important goal in a high-achieving school system. However, we have less evidence of the intervention supporting deeper and more reflective thinking in students. This may not be due to any inherent attribute of the intervention; more likely, it stems from the tension between the pressure for students to perform well on tests and the recognition of the value of authentic, student-driven questioning. For now, the balance tips in favor of the former.

An implication of the study for school reformers is that a key lever for the successful adoption of an educational innovation is alignment with stakeholders’ goals. In a high-achieving school system that is heavily driven by test performance, the intervention was readily adopted to serve the purpose of improving learning outcomes. This is in line with what we know about efforts to reform education: the closer a reform effort is to achieving the goals of a school system, the more easily it is accepted. This model of reform involves incremental change, i.e., the gradual development of “shared meaning”, rather than disruptive change.

In Singapore, educational reforms are implemented in phases and in an incremental fashion [65,66,67]. Policy makers see the need to use technology to augment student learning, especially to enhance the digital literacies required for 21st-century learning [34] (pp. 334–353). However, accountability measures, such as high-stakes summative testing, form ‘systemic’ barriers that hinder any transformative shift in the classroom [34] (pp. 334–353).

Currently, we have given teachers a useful tool to improve teaching and learning for existing goals. A possible next step would be to help teachers differentiate between two types of student-generated questions (divergent ones that promote curiosity and convergent ones that build conceptual understanding) and to support both types of questioning. Since there is currently a bias towards the latter, we would study how to gradually shift the balance towards divergent questions. For instance, how could we prepare teachers to model, solicit, and respond to these types of questions? Do teachers select student questions that best direct the investigation to certain conclusions? Do teachers and students select questions together to supplement the investigations? Do teachers organize the questions into clusters and have different groups explore different clusters? Do teachers let the students design and conduct their own investigations based on their questions? Although some of these questions have been addressed by past studies [68] (pp. 194–214), there is a need to investigate the appropriate pace of incremental reform in a public school system driven by high-stakes testing.

5. Conclusions

The study found that technology-supported inquiry-based learning appealed to students’ emotional engagement with learning. The perspectives of the participating students and teachers on this learning strategy were consistent with the related literature [69] (pp. 1075–1099). Hence, the integration of technology with a pedagogical framework, together with the evidence of its efficacy reported in this paper, is our theoretical contribution to knowledge about technology-based pedagogy. Furthermore, this research suggests that teachers should recognize the ability to question as critical to effective teaching. With the emphasis on active learning and on critical and creative thinking, mastery of the art of questioning is also required for effective learning. This study also sheds light on how a strongly teacher-directed classroom culture responded to an intervention intended to shift the balance towards one that empowered students by increasing opportunities for student-generated questions. The SMILE technology, as a forum-like platform for posing and answering questions, enabled more students to participate and interact with peers, leading to greater engagement. Teachers used the tool primarily to pose questions or to have students generate test-like questions. Although there was limited evidence of student-generated questions fostering curiosity, significant learning gains (a key lever for interventions to be embraced in a results-oriented school system) can pave the way for incremental reforms down the road. We argue that shifting students towards such an ideal must begin with the scaffolding process depicted in this study. To move students towards agentic question generation, exhibited by divergent questions that go beyond the textbook, we conjecture that the dominant instructional practice has to be redesigned in more radical ways.
Students must also not be constrained by the textbook and the implicit norms of the existing curriculum, or should at least be told to be “generative”. In the Singapore schools’ context, shifting formative and summative assessments towards more open-ended questions could lead teachers and students toward such possibilities.

Bloom’s taxonomy could be incorporated into the teaching–learning process for in-depth learning and higher-order thinking. The cognitive dimensions and the actions required in each area and at each cognitive level can help students think both within and beyond the textbook. Focusing only on teacher-guided or examination questions is unlikely to help students develop higher-order thinking skills. Similarly, the questioning circle helps teachers create healthy, higher-order discussions in the classroom. Teachers can progress from low- to high-order questions: first questions about the text alone, then questions about students’ perceptions of the given situation or matter, then questions relating the learned knowledge to other disciplines or the outside world, and finally questions interlinking these three levels. This strategy may create healthy discussion and boost students’ thinking and problem-solving ability.

Author Contributions

Conceptualization, L.W.; Methodology, L.W.; Writing—original draft, L.W. and S.H.; Writing—review and editing, Y.L.; Visualization, M.-L.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Research Foundation, Singapore, grant number NRF2015-EDU001-IHL11.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board (or Ethics Committee) of Nanyang Technological University (protocol code IRB-2016-12-016; date of approval 15 February 2017).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author, L.W.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

A Paired-Samples t Test of Pre-/Post-Test Scores in School X.

| Variables | Conditions | Mean | Std. Deviation | t | p |
|---|---|---|---|---|---|
| PostMCQ–PreMCQ | Experimental Class | 1.01 | 1.17 | 5.36 *** | 0.000 |
| | Control Class | 4.29 | 0.79 | 3.16 ** | 0.004 |
| PostOE–PreOE | Experimental Class | 0.21 | 0.62 | 2.09 * | 0.044 |
| | Control Class | 0.019 | 0.61 | 0.161 | 0.873 |
| PostTotal–PreTotal | Experimental Class | 1.22 | 1.21 | 6.22 *** | 0.000 |
| | Control Class | 0.63 | 1.20 | 2.71 * | 0.012 |

Note: * p ≤ 0.05, ** p ≤ 0.01, *** p ≤ 0.001.
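For reference, the paired-samples t statistic in Table A1 is the mean of the post-minus-pre difference scores divided by its standard error, t = mean_d / (SD_d / √n), with n − 1 degrees of freedom. The Python sketch below computes it using only the standard library; p-values would additionally require a t-distribution CDF (e.g., from SciPy) and are omitted. The helper `implied_n` is a hypothetical convenience that rearranges the same formula to recover the number of pairs implied by a reported mean, SD, and t. For the experimental-class rows of Table A1, it gives roughly 38 pairs for each of the three outcomes.

```python
from __future__ import annotations

import math
import statistics


def paired_t(pre: list[float], post: list[float]) -> tuple[float, float, float, int]:
    """Paired-samples t on post - pre difference scores.

    Returns (mean difference, SD of differences, t, degrees of freedom).
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)        # sample SD (n - 1 denominator)
    t = mean_d / (sd_d / math.sqrt(n))    # mean difference / its standard error
    return mean_d, sd_d, t, n - 1


def implied_n(mean_d: float, sd_d: float, t: float) -> float:
    """Rearranged formula: n = (t * SD / mean)^2."""
    return (t * sd_d / mean_d) ** 2


if __name__ == "__main__":
    # Hypothetical scores for four students, not data from this study.
    print(paired_t([1, 2, 3, 4], [2, 4, 4, 6]))
    # Experimental-class rows of Table A1: (mean, SD, t).
    for row, (m, sd, t) in {
        "MCQ": (1.01, 1.17, 5.36),
        "OE": (0.21, 0.62, 2.09),
        "Total": (1.22, 1.21, 6.22),
    }.items():
        print(row, round(implied_n(m, sd, t), 1))
```

Because the table values are rounded, the implied n is approximate; the same check can be applied to any row of a paired-samples table.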

Table A2.

Path Coefficients of Determination, Effect Size, and t-values of Structural Model for Experimental Class.

| Hypotheses | Path Relationships | R² | f² | t-Value | p-Value |
|---|---|---|---|---|---|
| H5 | Interest in Asking → Attitude towards Asking | 0.534 | 0.242 | 2.876 ** | 0.004 (<0.01) |
| H17 | Attitude towards Asking → Cognitive Performance | 0.716 | 0.406 | 3.185 ** | 0.001 (<0.01) |
| H18 | Attitude towards Asking → Engagement in Asking | 0.926 | 0.305 | 2.607 ** | 0.009 (<0.01) |
| H21 | Attitude towards Science → Cognitive Performance | 0.532 | 0.205 | 2.967 ** | 0.003 (<0.01) |

Note: ** p ≤ 0.01.
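The effect size f² reported in Tables A2 and A3 is commonly defined in the PLS-SEM literature (e.g., Hair et al. [48]) as the change in the endogenous construct’s R² when a predictor is omitted, relative to the unexplained variance: f² = (R²_included − R²_excluded) / (1 − R²_included). Values of 0.02, 0.15, and 0.35 are conventionally read as small, medium, and large effects. The Python sketch below implements that formula and labeling; the function names are illustrative. Because the tables do not report R²_excluded, the usage example back-solves a hypothetical value for H5 purely to illustrate the arithmetic.

```python
from __future__ import annotations


def cohens_f2(r2_included: float, r2_excluded: float) -> float:
    """f^2 = (R^2 included - R^2 excluded) / (1 - R^2 included)."""
    if not 0.0 <= r2_included < 1.0:
        raise ValueError("R^2 (included) must lie in [0, 1)")
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def f2_label(f2: float) -> str:
    """Conventional thresholds: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


if __name__ == "__main__":
    # Hypothetical: back-solve R^2 excluded for H5 from its reported R^2 and f^2.
    r2_in, f2_reported = 0.534, 0.242
    r2_out = r2_in - f2_reported * (1.0 - r2_in)
    print(round(cohens_f2(r2_in, r2_out), 3), f2_label(f2_reported))
    # Reported f^2 values from Table A2, labeled by the conventional thresholds.
    for hyp, f2 in {"H5": 0.242, "H17": 0.406, "H18": 0.305, "H21": 0.205}.items():
        print(hyp, f2, f2_label(f2))
```

Read this way, the f² values in Table A2 range from medium (H5, H18, H21) to large (H17).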

Table A3.

Path Coefficients of Determination, Effect Size, and t-values of Structural Model for Control Class.

| Hypotheses | Path Relationships | R² | f² | t-Value | p-Value |
|---|---|---|---|---|---|
| H1 | Value of Asking → Attitude towards Asking | 0.441 | 0.707 | 2.899 ** | 0.004 (<0.01) |
| H2 | Capacity to Ask → Attitude towards Asking | 0.269 | 0.305 | 2.336 * | 0.02 (<0.05) |
| H4 | Interest in Asking → Attitude towards Asking | 0.49 | 0.535 | 3.761 ** | 0.008 (<0.01) |

Note: * p ≤ 0.05, ** p ≤ 0.01.

Figure A1.

The SMILE Interface.

Figure A2.

Pedagogical Model (TPER) to Guide Student Questioning in SMILE.

Figure A3.

Hypothesis Model of Conceptual Constructs (SMILE-Ask framework).

Figure A4.

Bootstrapping examination of t-values with 5000 samples for the Experimental Class.
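PLS-SEM software typically derives t-values such as those in Figure A4 and Tables A2 and A3 by bootstrapping: cases are resampled with replacement many times (here, 5000), the path coefficient is re-estimated on each resample, and t is the original estimate divided by the standard deviation of the bootstrap estimates. The Python sketch below illustrates that logic in a deliberately simplified setting. It assumes a single predictor with observed (not latent) scores, for which the standardized path coefficient equals Pearson’s r. It is not the full PLS algorithm, and the data in the usage example are hypothetical.

```python
from __future__ import annotations

import math
import random
import statistics


def std_path(x: list[float], y: list[float]) -> float:
    """Standardized coefficient for one predictor (equal to Pearson's r)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)  # ZeroDivisionError if either has no variance


def bootstrap_t(x: list[float], y: list[float], n_boot: int = 5000,
                seed: int = 0) -> tuple[float, float, float]:
    """Return (estimate, bootstrap SE, t = estimate / SE)."""
    rng = random.Random(seed)
    n = len(x)
    estimate = std_path(x, y)
    draws = []
    while len(draws) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        try:
            draws.append(std_path([x[i] for i in idx], [y[i] for i in idx]))
        except ZeroDivisionError:
            continue  # degenerate resample with no variance: draw again
    se = statistics.stdev(draws)
    return estimate, se, estimate / se


if __name__ == "__main__":
    # Hypothetical scores for 30 students, not data from this study.
    x = [float(v) for v in range(30)]
    y = [v + (v * 7) % 5 for v in range(30)]
    print(bootstrap_t(x, y))
```

Fixing the random seed makes the bootstrap standard error, and hence t, reproducible across runs.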

References

- Boyd, M.P. Relations between teacher questioning and student talk in one elementary ELL classroom. J. Lit. Res. 2015, 47, 370–404. [Google Scholar] [CrossRef]

- Vaish, V. Questioning and oracy in a reading program. Lang. Educ. 2013, 27, 526–541. [Google Scholar] [CrossRef]

- Eshach, H. An analysis of conceptual flow patterns and structures in the physics classroom. Int. J. Sci. Educ. 2010, 32, 451–477. [Google Scholar] [CrossRef]

- Oliveira, A.W. Improving teacher questioning in science inquiry discussions through professional development. J. Res. Sci. Teach. Off. J. Natl. Assoc. Res. Sci. Teach. 2010, 47, 422–453. [Google Scholar] [CrossRef]

- Chin, C. Classroom interaction in science: Teacher questioning and feedback to students’ responses. Int. J. Sci. Educ. 2006, 28, 1315–1346. [Google Scholar] [CrossRef]

- Hill, J.B. Questioning techniques: A study of instructional practice. Peabody J. Educ. 2016, 91, 660–671. [Google Scholar] [CrossRef]

- Choi, H.; Lee, S.; Chae, Y.; Park, H. Analysis of Differences in Academic Achievement based on the Level of Learner Questioning in an Online Inquiry Learning Environment. Educ. Technol. Int. 2018, 19, 93–122. [Google Scholar]

- Wong, K.Y. Use of Student Mathematics Questioning to Promote Active Learning and Metacognition. In Proceedings of the Selected Regular Lectures from the 12th International Congress on Mathematical Education, Seoul, Korea, 8–15 July 2012; Cho, S., Ed.; Springer: Cham, Switzerland, 2015; pp. 877–895. [Google Scholar]

- Chin, C.; Osborne, J. Students’ questions: A potential resource for teaching and learning science. Stud. Sci. Educ. 2008, 44, 1–39. [Google Scholar] [CrossRef]

- Whittaker, A. Should we be encouraging pupils to ask more questions? Educ. Stud. 2012, 38, 587–591. [Google Scholar] [CrossRef]

- Mueller, R.G. Making Them Fit: Examining Teacher Support for Student Questioning. Soc. Stud. Res. Pract. 2016, 11, 40–55. [Google Scholar] [CrossRef]

- Biddulph, F.; Symington, D.; Osborne, R. The place of children’s questions in primary science education. Res. Sci. Technol. Educ. 1986, 4, 77–88. [Google Scholar] [CrossRef]

- White, R.T.; Gunstone, R.F. Probing Understanding; Falmer Press: London, UK, 1992. [Google Scholar]

- Miller-First, M.S.; Ballard, K.L. Constructivist Teaching Patterns and Student Interactions. Internet Learn. J. 2017, 6, 25–32. [Google Scholar] [CrossRef]

- Marbach-Ad, G.; Sokolove, P.G. Can undergraduate biology students learn to ask higher level questions? J. Res. Sci. Teach. 2000, 37, 854–870. [Google Scholar] [CrossRef]

- Bloom, B.S.; Engelhart, M.B.; Furst, E.J.; Hill, W.H.; Krathwohl, D.R. Taxonomy of Educational Objectives: The Classification of Educational Goals; Longmans Green: New York, NY, USA, 1956. [Google Scholar]

- Jurik, V.; Gröschner, A.; Seidel, T. How student characteristics affect girls’ and boys’ verbal engagement in physics instruction. Learn. Instr. 2013, 23, 33–42. [Google Scholar] [CrossRef]

- Duschl, R.A.; Osborne, J. Supporting and Promoting Argumentation Discourse in Science Education. Stud. Sci. Educ. 2002, 38, 39–72. [Google Scholar] [CrossRef]

- Newton, P.; Driver, R.; Osborne, J. The place of argumentation in the pedagogy of school science. Int. J. Sci. Educ. 1999, 21, 553–576. [Google Scholar] [CrossRef]

- Eliasson, N.; Karlsson, K.G.; Sørensen, H. The role of questions in the science classroom–how girls and boys respond to teachers’ questions. Int. J. Sci. Educ. 2017, 39, 433–452. [Google Scholar] [CrossRef]

- National Research Council. Inquiry and the National Science Education Standards: A Guide for Teaching and Learning; National Academy Press: Washington, DC, USA, 2000. [Google Scholar]

- Dillon, J.T. The Practice of Questioning; Routledge: London, UK, 1990. [Google Scholar]

- Aguiar, O.G.; Mortimer, E.F.; Scott, P. Learning from and responding to students’ questions: The authoritative and dialogic tension. J. Res. Sci. Teach. 2010, 47, 174–193. [Google Scholar] [CrossRef]

- Seol, S.; Sharp, A.; Kim, P. Stanford Mobile Inquiry-based Learning Environment (SMILE): Using mobile phones to promote student inquires in the elementary classroom. In Proceedings of the 2011 International Conference on Frontiers in Education: Computer Science & Computer Engineering, Macao, China, 1–2 December 2011; pp. 270–276. [Google Scholar]

- Buckner, E.; Kim, P. Integrating technology and pedagogy for inquiry-based learning: The Stanford Mobile Inquiry-based Learning Environment (SMILE). Prospects 2014, 44, 99–118. [Google Scholar] [CrossRef]

- Buckner, E.; Kim, P. A Pedagogical Paradigm Shift: The Stanford Mobile Inquiry-based Learning Environment Project (SMILE). 2012. Available online: http://elizabethbuckner.wordpress.com/2012/02/smile-concept-paper.pdf (accessed on 30 January 2023).

- Piaget, J. Psychology and Epistemology: Towards a Theory of Knowledge; Grossman: New York, NY, USA, 1971. [Google Scholar]

- Aina, J.K. Developing a constructivist model for effective physics learning. Int. J. Trend Sci. Res. Dev. 2017, 1, 59–67. [Google Scholar]

- OECD. PISA 2015 Results in Focus. 2018. Available online: https://www.oecd.org/pisa/pisa-2015-results-in-focus.pdf (accessed on 30 January 2023).

- TIMSS. TIMSS 2015 and TIMSS Advanced 2015 International Results. 2015. Available online: http://timssandpirls.bc.edu/timss2015/international-results/wp-content/uploads/filebase/full%20pdfs/T15-About-TIMSS-2015.pdf (accessed on 30 January 2023).

- Koh, A. Towards a critical pedagogy: Creating ‘thinking schools’ in Singapore. J. Curric. Stud. 2002, 34, 255–264. [Google Scholar] [CrossRef]

- Cheng, C.Y.; Hong, Y.Y. Kiasu and creativity in Singapore: An Empirical test of the situated dynamics framework. Manag. Organ. Rev. 2017, 13, 871–894. [Google Scholar] [CrossRef]

- Reyes, V.; Tan, C. Assessment Reforms in High-Performing Education Systems: Shanghai and Singapore. In International Trends in Educational Assessment; Brill Sense: Paderborn, Germany, 2018; pp. 25–39. [Google Scholar]

- Toh, Y.; Jamaludin, A.; He, S.J.; Chua, P.; Hung, D. Leveraging autonomous pedagogical space for technology-transformed learning: A Singapore’s perspective to sustaining educational reform within, across and beyond schools. Int. J. Mob. Learn. Organ. 2015, 9, 334–353. [Google Scholar] [CrossRef]

- Dillon, J.T. The remedial status of student questioning. J. Curric. Stud. 1988, 20, 197–210. [Google Scholar] [CrossRef]

- Murphy, D. A literature review: The effect of implementing technology in a high school mathematics classroom. Int. J. Res. Educ. Sci. 2016, 2, 295–299. [Google Scholar] [CrossRef]

- Mativo, J.; Womble, M.; Jones, K. Engineering and technology students’ perceptions of courses. Int. J. Technol. Des. Educ. 2013, 23, 103–115. [Google Scholar] [CrossRef]

- Meagher, M. Students’ relationship to technology and conceptions of mathematics while learning in a computer algebra system environment. Int. J. Technol. Math. Educ. 2012, 19, 3–16. [Google Scholar]

- Han, T.; Keskin, F. Using a mobile application (WhatsApp) to reduce EFL speaking anxiety. Gist: Educ. Learn. Res. J. 2016, 12, 29–50. [Google Scholar] [CrossRef]

- Serdyukov, P. Innovation in education: What works, what doesn’t, and what to do about it? J. Res. Innov. Teach. Learn. 2017, 10, 4–33. [Google Scholar] [CrossRef]

- Mayer, R. Should there be a three-strikes rule against pure discovery learning? Am. Psychol. 2004, 59, 14–19. [Google Scholar] [CrossRef]

- Linn, M.C.; McElhaney, K.W.; Gerard, L.; Matuk, C. Inquiry learning and opportunities for technology. In International Handbook of the Learning Sciences; Routledge: London, UK, 2018; pp. 221–233. [Google Scholar]

- Hwang, G.J.; Chiu, L.Y.; Chen, C.H. A contextual game-based learning approach to improving students’ inquiry-based learning performance in social studies courses. Comput. Educ. 2015, 81, 13–25. [Google Scholar] [CrossRef]

- Chin, C. Student-Generated Questions: Encouraging Inquisitive Minds in Learning Science. Teach. Learn. 2002, 23, 59–67. [Google Scholar]

- Pittenger, A.L.; Lounsbery, J.L. Student-generated questions to assess learning in an online orientation to pharmacy course. Am. J. Pharm. Educ. 2011, 75, 94. [Google Scholar] [CrossRef]

- Fornell, C.; Larcker, D.F. Evaluating Structural Equation Models with Unobservable Variables and Measurement Error. J. Mark. Res. 1981, 18, 39–50. [Google Scholar] [CrossRef]

- Zeegers, Y.; Elliott, K. Who’s asking the questions in classrooms? Exploring teacher practice and student engagement in generating engaging and intellectually challenging questions. Pedagog. Int. J. 2018, 14, 17–32. [Google Scholar] [CrossRef]

- Hair, J.F., Jr.; Sarstedt, M.; Hopkins, L.; Kuppelwieser, V.G. Partial Least Squares Structural Equation Modeling (PLS-SEM): An Emerging Tool for Business Research. Eur. Bus. Rev. 2014, 26, 106–121. [Google Scholar] [CrossRef]

- Chang, C.Y. Teaching earth sciences: Should we implement teacher-directed or student-controlled CAI in the secondary classroom? Int. J. Sci. Educ. 2003, 25, 427–438.

- Chang, C.Y.; Tsai, C.C. The interplay between different forms of CAI and students’ preferences of learning environment in the secondary science class. Sci. Educ. 2005, 89, 707–724.

- Cohn, S.T.; Fraser, B.J. Effectiveness of student response systems in terms of learning environment, attitudes and achievement. Learn. Environ. Res. 2016, 19, 153–167.

- Kuhn, D. Metacognitive development. Curr. Dir. Psychol. Sci. 2000, 9, 178–181.

- King, A. Effects of self-questioning training on college students’ comprehension of lectures. Contemp. Educ. Psychol. 1989, 14, 366–381.

- Van Blerkom, D.L.; van Blerkom, M.L.; Bertsch, S. Study strategies and generative learning: What works? J. Coll. Read. Learn. 2006, 37, 7–18.

- Yu, F.Y.; Chen, Y.J. Effects of student-generated questions as the source of online drill-and-practice activities on learning. Br. J. Educ. Technol. 2014, 45, 316–329.

- Kryukov, V.; Gorin, A. Digital technologies as education innovation at universities. Aust. Educ. Comput. 2017, 32, 1–16.

- Williams, P.J.; Otrel-Cass, K. Teacher and student reflections on ICT-rich science inquiry. Res. Sci. Technol. Educ. 2017, 35, 88–107.

- Gul, R.; Kanwal, S.; Khan, S.S. Preferences of the Teachers in Employing Revised Blooms Taxonomy in their Instructions. SJESR 2020, 3, 258–266.

- Churches, A. Bloom’s taxonomy blooms digitally. Tech Learn. 2008, 1, 1–6.

- Tofade, T.; Elsner, J.; Haines, S.T. Best practice strategies for effective use of questions as a teaching tool. Am. J. Pharm. Educ. 2013, 77, 155.

- Crowe, A.; Dirks, C.; Wenderoth, M.P. Biology in bloom: Implementing Bloom’s taxonomy to enhance student learning in biology. CBE—Life Sci. Educ. 2008, 7, 368–381.

- Christenbury, L.; Kelly, P. Questioning: A Path to Critical Thinking; National Council of Teachers of English: Urbana, IL, USA, 1983.

- Nappi, J.S. The importance of questioning in developing critical thinking skills. Delta Kappa Gamma Bull. 2017, 84, 30.

- Reinsvold, L.A.; Cochran, K.F. Power dynamics and questioning in elementary science classrooms. J. Sci. Teach. Educ. 2012, 23, 745–768.

- Toh, Y.; Hung, D.; Chua, P.; He, S.; Jamaludin, A. Pedagogical reforms within a centralized-decentralized system: A Singapore’s perspective to diffuse 21st century learning innovations. Int. J. Educ. Manag. 2016, 30, 1247–1267.

- Dimmock, C.; Goh, J.W. Transformative pedagogy, leadership and school organisation for the twenty-first-century knowledge-based economy: The case of Singapore. Sch. Leadersh. Manag. 2011, 31, 215–234.

- Gopinathan, S. The challenge of globalisation: Implications for education in Singapore. Comment. J. Natl. Univ. Singap. Soc. 2002, 18, 83–90.

- Dobber, M.; Zwart, R.; Tanis, M.; van Oers, B. Literature review: The role of the teacher in inquiry-based education. Educ. Res. Rev. 2017, 22, 194–214.

- Maeng, J.L. Using technology to facilitate differentiated high school science instruction. Res. Sci. Educ. 2017, 47, 1075–1099.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).