Abstract

Extant literature on education research focuses on evaluating schools’ academic performance rather than the performance of educational institutions. Moreover, the State of Louisiana public school system always performs poorly in education outcomes compared to other school systems in the U.S. One of the limiting factors is the stringent standards applied among heterogeneous schools, steaming from the fit-for-all policies. We use a pairwise controlled manifold approximation technique and gradient boosting machine algorithm to typify Louisiana public schools into homogenous clusters and then characterize each identified group. The analyses uncover critical features of failing and high-performing school systems. Results confirm the heterogeneity of the school system, and each school needs tailored support to buoy its performance. Short-term interventions should focus on customized administrative and academic protocols with malleable interpositions addressing individual school shortcomings such as truancy. Long-term policies must discourse authentic economic development programs to foster community engagement and creativity while allocating strategic resources that cultivate resilience at the school and community levels.

1. Introduction

Two reports published in 1983 by National Commission on Excellence in Education and Carnegie Forum on Education and the Economy painted a grim picture regarding education outcomes in the U.S. As a result, states and school districts have implemented policies and regulations to foster high academic standards, improve accountability, and achieve excellence while administering rules and laws to maintain school disciplinary conduct. However, according to [1,2,3], the U.S. still has one of the highest high school dropout rates in developed countries, and among students who complete high school and go on to college, half require remedial courses, and half never graduate [4,5].

For the U.S. youth to compete for rewarding careers against other brilliant young people from across the globe, a college degree or advanced certificate is necessary. As the World Economic Forum reports [6], three-quarters of the fastest-growing occupations require education beyond a high school diploma, with science, technology, engineering, and mathematics (STEM) careers prominent on the list. To reignite U.S. education competitiveness, relight economic growth, and create a thriving middle class, the U.S. requires an inclusive education system that prepares all students for college and STEM careers and implements innovative public policies to ensure every child receives a quality education.

The extant literature related to quantitative (behavioral and cognitive) education analyses focuses on determining factors influencing student achievements and school performance using academic growth models and other econometric tools summarized by [7,8]. These models assume that schools or school systems are homogenous. What arises from these studies are fit-for-all public policies that do not necessarily impact education outcomes, as no one policy guarantees success [6]. Due to location and neighborhood effects, schools exhibit heterogeneous characteristics and face different challenges and constraints across districts and time; therefore, they demand tailored and diversified support.

Most education studies rely on academic growth models [9] to measure students’ progress or schools’ performance on standardized test scores concerning academically similar students from one point to the subsequent and students’ progress toward proficiency standards. These models provide a general framework for interventions to revivify failing students or schools or rally high-achieving students or schools. However, results from these models are not helpful when the objective is deriving tailored recommendations for specific students or schools with distinctive features and characteristics that differ from others. Moreover, the statistical methods for controlling student background and other extraneous variables in these models make it impossible to determine the impact of covariate variables on student or school performance [9,10].

This study deviates from these studies by focusing on whether there is a non-random structure in the Louisiana public school system and pinpointing critical features that differentiate Schools’ performance. In this study, we used pairwise controlled manifold approximation (PaCMAP) as an unsupervised machine learning tool for multidimensionality reduction and visualizing the created school clusters [11] and applied different internal statistical tools to determine optimal numbers of clusters and validate the created groups as reported elsewhere [12]. Further analysis using visualization tools and multiclass classification, specifically random forest and gradient boosting machine method [13], identifies critical features of failing and performing schools. The results are vital in suggesting tailored interventions to improve Louisiana’s public school system’s performance.

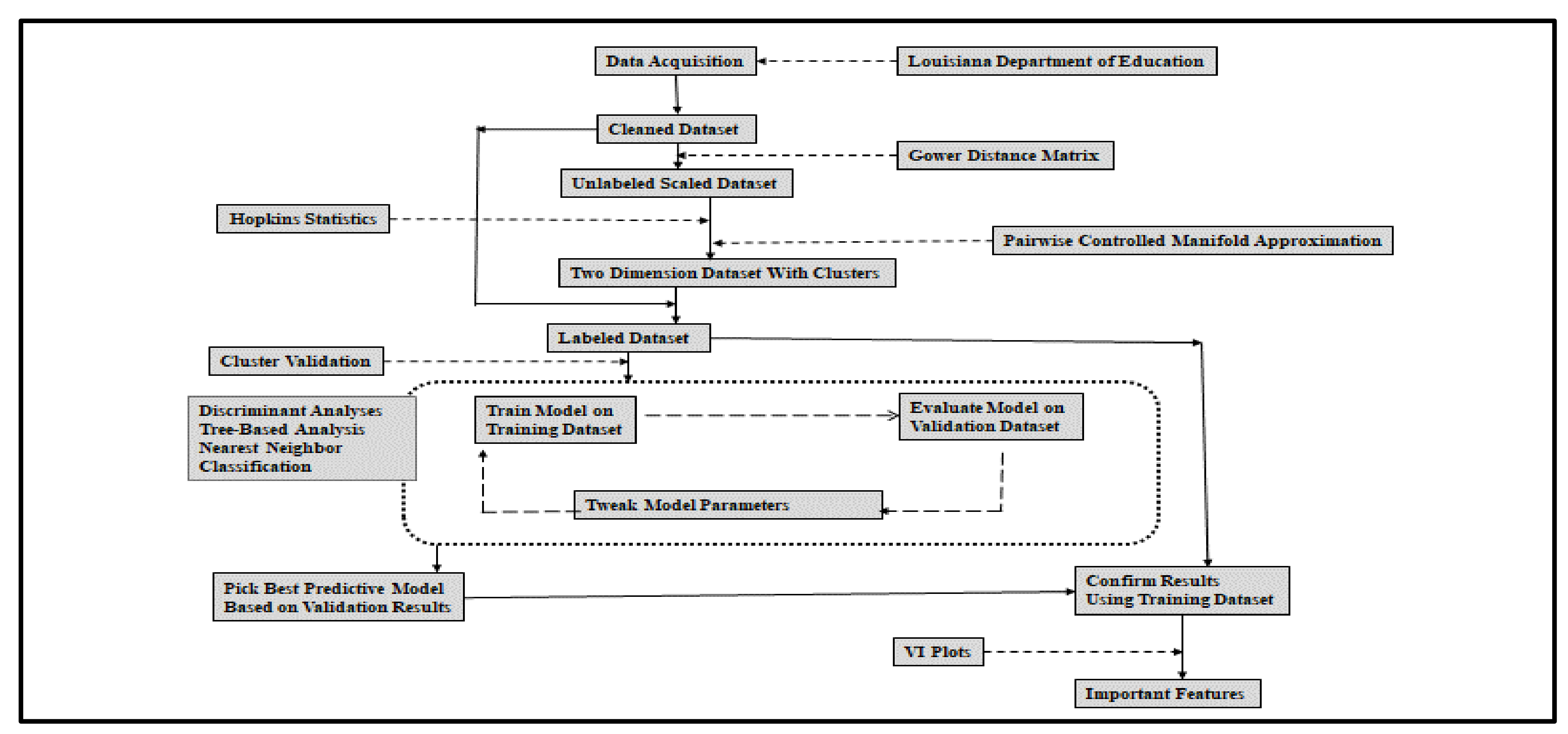

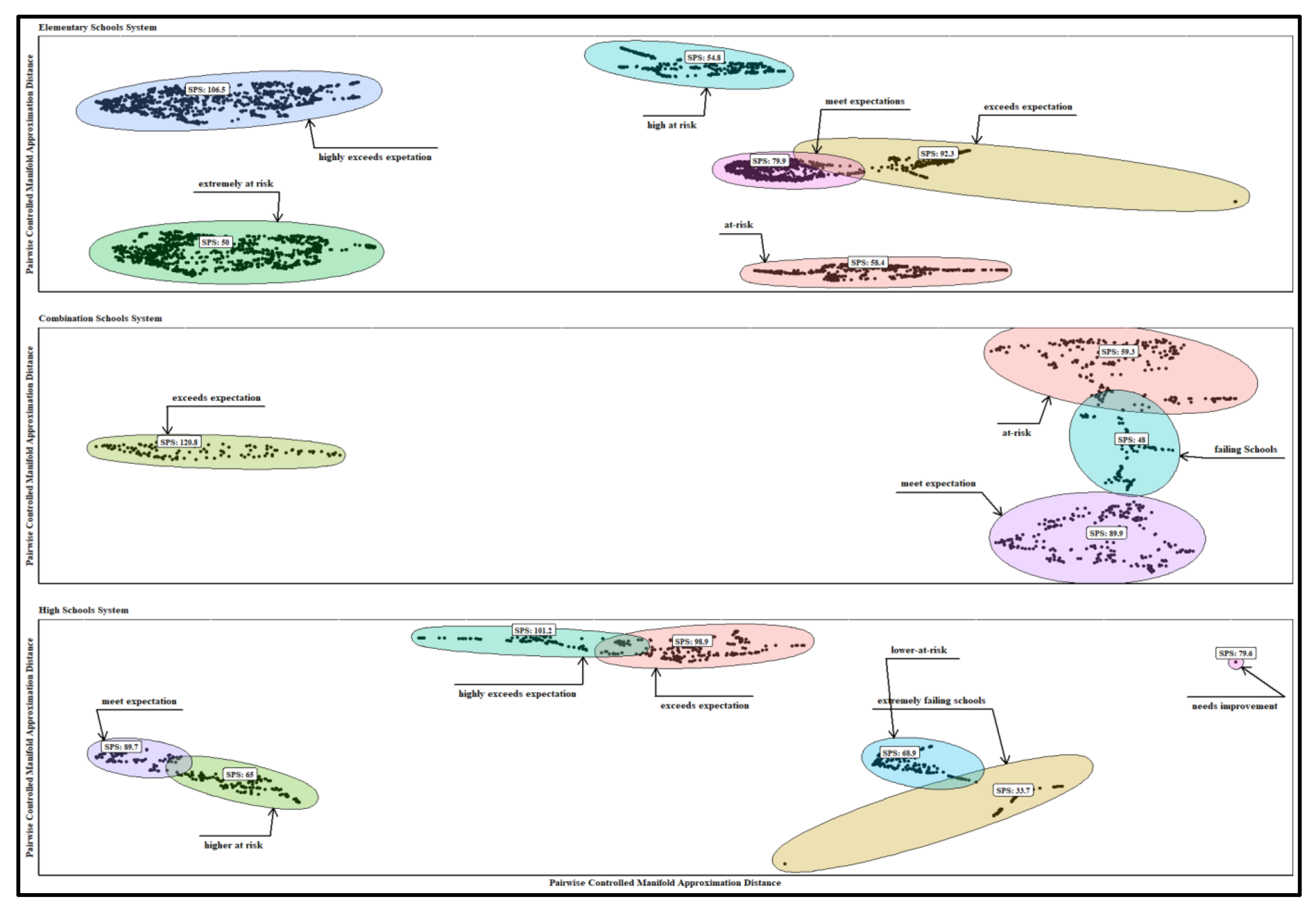

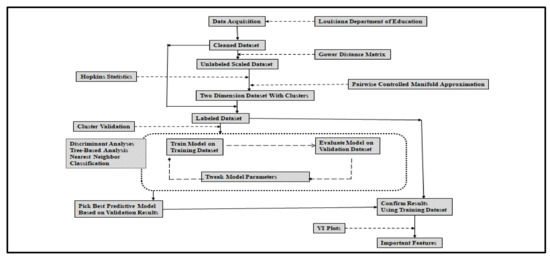

Figure 1 presents the steps taken for data collection and analysis, and the study has two main contributions. First, the unsupervised clustering technique illustrated in Figure 1 below helped build a multiclass classification model based on historical data to predict which schools belong to what cluster and what features are essential in each homogenous group. Therefore, school administrators and policymakers can respond appropriately to target certain shortcomings at the school level.

Figure 1.

Machine learning steps to typify the schools and identify critical features.

The analysis illustrated in Figure 1 allowed a simultaneous utilization of unlabeled and labeled data and enabled tracking of the effects of distinctive features on cluster projection. Second, combining unsupervised and supervised clustering tools allowed us to compare features across clusters that uncover connections between school characteristics and performance and critical differences among schools. The results provide evidence-based decision-making tools for selecting and implementing interventions to improve school outcomes. As illustrated in Figure 1, we use unsupervised machine learning techniques [14]; explicitly, we cluster schools into sub-homogenous groups using PaCMAP [11] and identify critical features of each cluster using multiclass classification [13,15]. Data points in clusters should be as similar as possible and dissimilar to teams in other groups. The main advantage of clustering is its adaptability to changes, which helps single out valuable features that distinguish distinct groups. Moreover, grouping schools by commonalities or differences is essential in exploring the factors that explain differences in achievement and performance. Clustering also helps to understand existing challenges and opportunities that influence change.

While the best clustering process maximizes inter-cluster distances, it should also minimize intra-cluster distances [16] (Bailey, 1989). The inter-cluster distance (global structure) is the distance between two data points belonging to different clusters, and the intra-cluster distance (local structure) is the distance between two data points belonging to the same group. A superior clustering algorithm makes clusters so that the inter-cluster distance between different clusters is prominent and the intra-cluster space of the same group is conservative. Popular methods for clustering social data through multidimensional reduction techniques include fuzzy clustering [17], t-distributed stochastic neighbor embedding (t-SNE) [18,19], uniform manifold approximation and projection (UMAP) [20], dimensionality reduction using triplet constraint (TriMap) [21]. The primary limitations of these methods are that they either preserve local or global structures, and hyperparameters of the models are difficult to interpret [11]. The PaCMAP is an ideal tool for clustering through dimensionality reduction as it depends on a founded mathematical formulation. Moreover, PaCMAP uncovers strong connections among variables by safeguarding local and global structures, an essential aspect of the geometric visualization of multidimensional datasets [11,22].

Evaluating the dimensionality reduction and cluster analysis includes quantitative and objective ways through cluster validation measures. The process has four main components: determining whether there is non-random structure in the data; determining the optimal number of clusters; evaluating how well a clustering solution fits the given data when the data is the only information available; and assessing how well a clustering solution agrees with partitions obtained based on other data sources. The first step involved evaluating the clustering tendency before applying the dimensionality reduction and clustering algorithms to determine whether the data contains any inherent grouping structure using the Hopkins statistic [23]. The statistic evaluates the null hypothesis that the data follows a uniform distribution (spatial randomness). In the second step, we used clustering validation measures [24,25] to determine the goodness of a clustering structure without respect to external information.

We organize the remaining part of the paper into four sections. The following section is the literature review that focuses on popular clustering techniques, their limitations, and the fundamentals of multiclass classification using machine learning. The data source, descriptions, and rationale of variables used to cluster the school systems and specific empirical techniques for data analysis are in Section 2, followed by the results and discussion section and the last section on implications for short- and long-term policies.

2. Background Information and Literature Review

2.1. Louisiana Schools Accountability System

According to the Louisiana Department of Education [26,27], the accountability system of the Louisiana school system aims to inform and focus educators through clear expectations for student outcomes; and provide objective information about school quality to parents, community members, and other stakeholders. Annually, public schools and early childhood centers in Louisiana receive a performance report that measures how well they prepare their children for the next phase of schooling. Since 1999, the state has issued public school performance scores (SPS) based on student achievement data. To communicate the quality of school performance to families and the public, Louisiana adopted letter grades (A–F). In 2014, the Department recalibrated its school performance score scale from a 200-point scale to a 150-point scale.

Under the previous 200-point scale, the letter grade was: A (120–200), B (105–119.9), C (90–104.9), D (75–89.9, F (0–74.9), and T (turnaround school). Under the 150-point scale, the groups of SPS are as follows: A (100–150), B (85–99.9), C (70–84.9), D (50–69.9), F (0–49.9), and T (turnaround school). The SPS for elementary is estimated based on these subindices: assessment (70%), growth (25%), and interest and opportunities (5%). The assessment subindex includes student assessments in student learning in English language arts (ELA), math, science, and social studies to measure student proficiency in the knowledge and skills reflected in the standards of each grade and subject. The SPS includes the points assigned to achievement levels by students for each subject assessed and progress made toward English language proficiency.

The calculation of the growth index accounts for changes in schools’ performance based on the previous year and the current year’s scores on each assessment result. Assessing student growth is done by answering two questions. If students are not yet achieving proficiency, are they on track to do so? If a student reaches the target, the school earns 150 points. Otherwise, if the students are growing at a rate comparable to their peers, schools earn points based on students’ growth percentile as compared to peers (i.e., 80th –99th percentile (150 points), 60th–79th percentile (115 points), 40th–59th percentile (85 points), 20th–39th percentile (25 points), and 1st–19th percentile (0 points). In addition, the interest and opportunities subindex constitutes a 5-point scale on various metrics reflecting the schools’ effort to make services available to all children.

Based on the Louisiana Department of Education [28], the SPS for elementary/middle schools with grade 8 is an aggregation of these subindices: assessment (65%), achievement and growth (25%), interest and opportunities (5%), and dropout credit accumulation (5%). For the high schools, the SPS is also an aggregation of the following subindices: assessment (25%), ACT/WorkKeys (25%), the strength of diploma (25%), cohort graduation rate (20%), and interest and opportunities (5%). The assessment subindex for the high schools includes the end-of-course (EOC) exams that assess whether students have mastered the standards of core high school subjects. EOC exams include algebra I, geometry, English I (beginning in 2017–2018), English II, biology, and U.S. History. Except for students who participate in LEAP alternative assessment 1, all high school students must take an ELA and math EOC exam by their 3rd cohort year, regardless of graduation pathways. The final scores do not include high school students retaking an EOC. Final student scores are on five levels: unsatisfactory (0 points), approaching basic (50 points), basic (80 points), mastery (100 points), or advanced (150 points). The mastery and above are considered proficient for the next grade level.

The dropout credit accumulation subindex is for schools that include grade 8 in the prior year, based on the number of Carnegie credits earned through the end of 9th grade (and transitional 9th, where applicable) and/or dropout status. The dropout credit accumulation encourages the successful transition to high school and access to Carnegie credits in middle school. The ACT/WorkKeys (ACT WorkKeys assessments are the cornerstone of ACT workforce solutions and measure foundational skills required for success in the workplace and help measure the workplace skills that can affect job performance) subindex measures student readiness for postsecondary learning, and all grade 11 take the ACT, a nationally recognized college and career readiness measure. Schools earn points for the highest composite score made by a student through the spring testing date of their senior year or a student who graduates at the end of grade 11. WorkKeys scores were included in the ACT subindex for accountability when the WorkKeys score yielded more index points than the ACT score beginning in 2015–2016.

Louisiana Department of Education report [27] indicates that the Strength of Diploma subindex measures the quality of the diploma earned by each 12th grader. The high school diploma plus awards points (110–150)) to schools for students who graduate on time and meet requirements for one or more of the following: advanced placement, International Baccalaureate (IB), JumpStart credentials, CLEP, TOPS-aligned dual enrollment course completion, and an associate degree. The system awards schools with four-year graduate students (100 points) with career diplomas or a regional Jump Start credential, as well as those who earned a diploma assessed on an alternate certification. Moreover, the system awards 50–75 points to schools with five-year graduates that have any certificate, five-year graduates who earn an A.P. score of 3 or higher, an IB score of 4 or higher, and a college-level examination program (CLEP) of 50 or higher. Other awards are for graduates with high school equivalency test (HiSET)/GED + JumpStart credential (40 points), and HiSET/GED earned no later than October 1 following the last exit record (25). The cohort graduation rate subindex measures the percentage of students who enter grade 9 and graduate four years later, adjusted for students who transfer in or out. All 9th-grade students who enter a graduation cohort are included in the cohort graduation rate calculations, regardless of diploma pathway, unless they are legitimate leavers. Legitimate leavers are students removed from the cohort and exited enrollment for one or more reasons: death, transfer out of state, transfer to an approved nonpublic school or a Board of Elementary Education and Secondary Education)-approved home study program, and transfer to early college.

After the calculation of SPS, the Louisiana Department of Education [27,28] categorize school that needs interventions into three groups: urgent intervention is necessary, urgent intervention is required, and comprehensive intervention is required. For schools in the first category, the subgroup performance equals D or F in the current year. For the second and third categories, the subgroups’ performance equal to F for two years and or out-of-school suspension rates more than double the national average for three years, and the overall performance of D or F for three years (or two years for a new school) and or graduation rate less than 67 percent in most recent year, respectively. For accountability purposes, Comprehensive Intervention Required labels will appear on the “Overall Performance” page in the Louisiana School Finder, while Urgent Intervention Needed and Required labels will appear on the “Discipline and Attendance” and or “Breakdown by Student Groups” pages. As part of Louisiana’s Every Student Succeeds Act (ESSA) plan, any school identified under one of the labels must submit an improvement plan to the Department and an application for funding to support its implementation (See details on how to calculate the SPS at: https://www.louisianabelieves.com/measuringresults/school-and-center-performance (accessed on 17 October 2022)).

2.2. An Overview of Empirical Clustering Techniques and Application in Education

Louisiana’s SPS is an aggregation indicating Schools’ overtime achievement and growth. Analyzing SPS data would allow identifying required changes to improve Schools’ performance. Standard education models used to measure achievement and growth range from subtracting last year’s test score from this year’s test score (called a gain score) to complex statistical models that account for differences in student academic and demographic characteristics [7,8]. The five standard growth models for measuring performance or progress: value-added, value table, trajectory, projection, and student growth percentile. The primary limitations of these models emanate from their inability to account for unobserved characteristics, and there is also a need to specify the mathematical relationship among variables explicitly. However, the education assessment literature (such as [29]) shows no consensus on the best methods and models for evaluating academic achievement and growth at the student and school systems level.

Clustering is a critical technique in data mining with applications in image processing, diagnosis systems, classification, missing value management and imputation, optimization, bioinformatics, and machine learning [30]. Moreover, dimensionality reduction is a fundamental step for clustering algorithms due to the curse of dimensionality and non-linearity of most observational data. As the number of dimensions increases, data points tend to be similar, and there is no clear structure to follow when grouping these pairs [31]. Since cluster analysis is just a statistical process of grouping related units into sets, the groups in the dataset may be genuinely related or related by chance. Different clustering techniques might give different results if the relationship is just by chance [32].

Conventional clustering algorithms such as the k-means clustering technique [33,34] assign a set of observations to a limited number of clusters such that pairs belonging to the same group are more like each other than those in other groups. The method assigns each observation to one and only one cluster. However, the assignment might often be too strict, leading to unfeasible partitions [35,36]. Fuzzy sets manage the challenges by assigning data points to more than one cluster [17]; therefore, each data point has a likelihood or probability score of belonging to a given cluster [37,38,39]. Extensions of the fuzzy c-means clustering algorithm (including [40,41,42]) improve the standard method by reducing errors during the segmentation process.

Education studies (including [43,44]) apply fuzzy clustering to analyze e-learning behavior by creating clusters with common characteristics, typifying schools across cultures [45], or combining learning and knowledge objects based on metadata attributes mapped with various learning styles to create personalized and more authentic learning experiences [46]. Others applied the technique to compare e-learning behaviors [47], predict and identify significant variables that affect undergraduate schools’ performance [48,49], allocate new students to homogenous groups of specified maximum capacity, and analyze the effects of such allocations on students’ academic performance [50], and creating performance profiles in reading, mathematics, and science [51]. Fuzzy clustering techniques perform poorly on data sets containing clusters with unequal sizes or densities, and the method is sensitive to outliers [52]

The t-distributed stochastic neighbor embedding (t-SNE) is a dimension reduction technique that tries to preserve the local structure and make clusters discernible in a two-or three-dimensional visualization [18,53]. The t-SNE algorithm preserves the local structure of the data using a heavy-tailed Student-t distribution to compute the similarity between two points in the low-dimensional space rather than a Gaussian distribution. A heavy-tailed Student-t distribution helps address crowding and optimization problems. The t-SNE algorithm takes a set of points in a high-dimensional space and finds an optimal representation of those points in a lower-dimensional space. The first objective is to preserve as much significant structure or information present in the high-dimensional data as possible in the low-dimensional representation. The second objective is to increase the data’s interpretability in the lower dimension space by minimizing information loss due to dimensionality reduction [54].

Education-related studies use the t-SNE algorithm to predict schools’ academic performance and evaluate the impact of different attributes on performance to identify at-risk students [54] or visualize clusters with unique features that correlate with success in medical school [55] to uncover success potential after accounting for inherent heterogeneity within the student population. Other studies [55] combine convolutional neural networks to identify critical features influencing academic performance and predicting future educational outcomes by visually distinguishing homogenous groups with fully connected layers of the networks [56] and highlighting prominent features influencing education outcomes and predicting future performances [57].

There are two primary limitations when using t-SNE for multidimensionality reduction and clustering. The technique requires calculating joint probability among all data points (at high and lower dimensions), which imposes a high computational burden [11,58]. Therefore, the t-SNE algorithm does not scale well for rapidly increasing sample sizes outside the computer cluster. Also, the algorithm does not preserve global data structure at high dimensions, meaning that only intra-cluster distances are meaningful and do not guarantee inter-cluster similarities [59,60].

Uniform manifold approximation and projection (UMAP) is a non-linear dimension reduction technique used for visualization like t-SNE, with the capacity to preserve local and global structures for non-noisy data [11]. Specifically, UMAP is highly informative when visualizing multidimensional data [61] and performs better in keeping global structure than t-SNE. The UMAP algorithm efficiently approximates k-nearest-neighbor via the nearest-neighbor-descent algorithm [20,62,63]. The application of the UMAP algorithm in education studies identifies community conditions that best support universal access and improved outcomes in the initial stages of childhood development or captures the neighborhoods that behave similarly at a particular time and explains the social-economic effects that bring communities together [22,64,65].

Dimensionality reduction using triplet constraint (TriMap) uses triplet (sets of three observations) constraints to form a low-dimensional embedding of a set of points [11,63]. The algorithm samples the triplets from the high-dimensional representation of the data points, and a weighting scheme reflects each triplet’s importance. The main idea is to capture higher orders of structure with triplet information (instead of pairwise information used by t-SNE and UMAP) and minimize a robust loss function for satisfying the chosen triplets, thereby providing a better global view of the data [63]. Theoretically, this method can preserve local and global structures; however, the inter-cluster distances are uncertain for large datasets with outliers [11,64].

Likewise, PaCMAP is a dimensionality reduction method that preserves local and global data structures [11,29]. The critical steps with the PaCMAP algorithm are graph construction, initialization of the solution, and iterative optimization using a custom gradient descent algorithm PaCMAP. The algorithm uses edges as graph components and distinguishes between three edges: neighbor pairs, mid-near pairs, and further-apart pairs. The first group consists of neighbors from each observation in the high-dimensional space. The second group consists of mid-near teams randomly sampling from additional data points and using the second smallest for the mid-near pair. The third group consists of a random selection of further data points from each observation. Parameters that specify the ratio of these quantities to the number of nearest neighbors determine the number of mid-near and further-apart point pairs. The PaCMAP algorithm is robust and works well on a large dataset, significantly faster than t-SNE, UMAP, and TriMap [65]. We could not find publications related to Education Research that use TriMap and PaCMAP as the primary data analysis tools.

Before clustering, it is critical to determine if the data are clusterable by applying the Hopkins statistic [66] that tests the spatial randomness of the data by measuring the probability that a given data set is from a uniform distribution. The Null hypothesis is that there are no meaningful clusters, and the alternative hypothesis is that the data set contains significant clusters. In addition, while the multidimensional reduction results help identify optimal numbers of clusters through visualizations, the analysis must be augmented by statistical tools such as the Elbow Method [67], the Silhouette Coefficient [68], Gap statistic methods [69], and other statistical measures (summarized by [70]) to ascertain the results. After determining the optimal number of clusters, clustering validation is also vital in deciding group quality [71]. Internal clustering validation aims to establish if the average distance within-cluster is small and the average distance between clusters is as significant as possible [25]. Internal clustering measures reflect connectedness, compactness, and the separation of the created clusters [72].

The connectivity has a value between zero and infinity. Minimizing the connectedness relates to what extent data points are in the same cluster (cohesion) as their nearest neighbors in the data space as determined by the K-nearest neighbors [73]. The compactness index assesses cluster homogeneity using the intra-cluster variance. It measures how closely related the data points in a cluster are. The index is estimable based on variance or distance. Lower variance indicates better compactness [25]. Separation quantifies the degree of separation between clusters by measuring the distance between cluster centroids [38,68]. Compactness and separation demonstrate opposing trends. While compactness increases with clusters, separation decreases with the number of clusters. Most measures of internal cluster validation, such as the Dunn Index and Silhouette width, combine compactness and separation into a single score [25,73]. The Dunn Index is the ratio of minimum average dissimilarity between two clusters and maximum average within-cluster dissimilarity. Given the formula for estimating the Silhouette [68], a Silhouette width for each data point can be positive or negative. Datapoints with a Silhouette close to one are close to the cluster’s center, and data points with a negative Silhouette value mean that the data points are on the boundaries and are more relative to the neighboring groups or clusters [74,75].

2.3. Multiclass Classification to Augment Clustering Results

After unsupervised clustering, the second interest is determining which features/variables significantly impacted each cluster using the original data. The most prominent impact features must differentiate the groups most strongly. Statistically, it is possible to perform a series of analyses of variance and select the attributes/variables with large t-values or smaller p-values [76]. It is also possible to distinguish critical features by calculating the average similarity of each data point based on intra- and inter-cluster distances of the centroid of each cluster [77]. However, a substitution effect occurs when two or more explanatory variables share information or predictive power. The analysis of variance and similarity analysis may not robustly determine which variables are critical [78]. Specifically, multiclass classification is a problem with more than two classes or clusters, where each data point belongs to one category [79]. The technique includes binary classification, discriminant analysis [80,81], tree algorithms extendable to manage multiclass problems, and nearest neighbors’ approach [82]. Discriminant analysis [83] is a versatile statistical method often used to assign data points to one group among known groups. The discriminant analysis aims to discriminate or classify the datasets based on more than two groups, clusters, or classes available priori. The process places new data points into a general category based on measured characteristics. Standard tools for noisy and high-dimensional data are penalized linear discriminant [84], high dimensional discriminant analysis [85], and stabilized linear discriminant analysis [86].

The tree-based algorithms are primary tools for supervised learning methods that empower predictive models with high accuracy, stability, and ease of interpretation. The most popular tree-based algorithms are decision trees [87,88], random forest [89], and gradient-boosting machines [90], as applied by [91,92,93]. Unlike other machine learning models, the algorithm has the quickest way to identify the most significant relationships between variables. Since it is a non-parametric method, it has no assumptions about space distributions and classifier structure [87]. The nearest neighbors’ classification algorithm assumes that similar objects exist in proximity or near each other, and the standard algorithms are the k-nearest neighbors [94] and the nearest shrunken centroids [95]. The objective is to find a group of k data points in the training dataset closest to the test dataset point and label assignments on the predominance of a particular class in this neighborhood. The output is a class membership, assigning each data point to a specific cluster by a plurality vote of its neighbors [94] or earmarked to the class most common among its nearest neighbors.

There are different metrics for comparing the performance of multiclass models and analyzing the behavior of the same model by tuning various parameters. The metrics are based on the confusion matrix since it encloses all the relevant information about the algorithm and classification rule performance [96]. The confusion matrix is a cross table that records the number of occurrences between observed classification (e.g., unsupervised machine learning) and the predicted classification (e.g., from supervised machine learning). Estimable metrics from the confusion matrix dictating more is better (should be maximized) include accuracy, kappa, mean Specificity, and mean recall, and the standard metrics for lower is better (should be minimized) are the logloss and mean detection rate [97]. Generally, the standard accuracy metric returns an overall measure of how much the model correctly predicts the class based on the entire dataset. Therefore, the metric is very intuitive and easy to understand. Balanced accuracy (mean recall) is another critical metric in multiclass classification and is an average of recalls. For details on various metrics used in machine learning model evaluations, see [97,98,99].

After determining the best model, the next step is estimating the relative importance of input variables through k-fold validation [100]. The process identifies the relative importance of explanatory variables by deconstructing the model weights and determining the relative importance or strength of association between the dependent and explanatory variables. For decision tree-based models, the connecting weights are tallied for each node and scaled close to all other inputs. Note that the model weights that connect variables in decision tree-based models are partially analogous to parameter coefficients in a standard regression. The model weights dictate the relative influence of information processed in the network by suppressing irrelevant input variables in their correlation with a response variable. Since no multiclass method outperforms others, the model choice depends on the desired precision and the nature of the classification problems. Therefore, a feature importance score ensures the interpretability of complex models as it quantifies information a variable contributes when building the model and ranks the relative influence of the variable in predicting a specific cluster [101,102,103].

3. Materials and Methods

3.1. Source of Data

This study used data from the 2015/16, 2016/17, and 2017/18 school years. While most data are available up to 2019/20, the financial data was not available when finalizing this paper. All data are from the Louisiana Department of Education Data Center. The link https://www.louisianabelieves.com/resources/library/student-attributes (accessed on 17 October 2022) provides data on schools’ attributes, including the total numbers of students and the percentage of students by gender and race (i.e., American Indian, Asian, Black, Hispanic, Hawaiian/Pacific Islander, and White). Other information is on English proficiency (e.g., percent of fully proficient students), the number of students in different grades, and the percentage in free and reduced lunch programs.

The annual financial report at https://www.louisianabelieves.com/data/310/ (accessed on 17 October 2022) summarizes financial activities for the school year. The variables in the dataset are current expenditures per pupil on instructors, pupil/instructional support, school administration, transportation, and other supports. Other information is on school-level student counts and school-level staff full-time equivalent (FTE) for teachers, administrators, other instructors, and other support staff. There is also information on staff salaries, education levels and average years of experience. The link https://www.louisianabelieves.com/resources/library/fiscal-data (accessed on 17 October 2022) has other financial data summarized by expenditure in each group (e.g., wages, transportation). The link https://www.louisianabelieves.com/resources/library/performance-scores (accessed on 17 October 2022) provides information on school-level performance scores. At the beginning of this study, the scores were available from 1998/1999 to the 2017/18 school year. The full dataset with all variables is available for public schools governed by a school district. School districts with high numbers of private and charter schools that are publicly funded but operated by independent groups, such as in New Orleans Parish, are underrepresented. The New Orleans School District follows the all-charter system with very few schools run by public school systems, and the district is represented by 14 individual schools in the data set. For data analysis purposes, the dataset is in three groups: elementary/middle (elementary after that), combination (with elementary, middle, and high schools), and high schools’ system. The available data are pre-COVID-19 pandemic. Further analysis is needed when the data collected during the pandemic is available, as there is a lag of three years.

3.2. Variables That Influence School Performance and Empirical Model

For definitions and examples of variables that influence school performance, see [104,105,106] on the critical school characteristics and roles of past achievement. Since Schools’ performance depends on schools’ performance, variables influencing school performance are in six groups [107]: schools’ socioeconomic status, past achievement, school attributes, faculty education, per-pupil expenditure, and variables defining the affluence of the communities in school catchment areas. Studies examining the importance of teacher training, teacher certification, and teachers’ professional development programs all conclude that students with certified teachers performed better ([for example, see [108,109,110,111]). Education studies link teachers’ effectiveness to positive student behavior, such as student attendance, which improves schools’ performance [112,113,114]. Other studies examine a constellation of teacher-related effects such as classroom effectiveness, collective teaching quality, and school academic organization that increase student performance and academic growth [115,116,117,118]. Studies (including [119,120,121,122,123]) focus on the influence of class size on student performance with varying conclusions.

Financial expenditure is another variable purported to influence school performance [120,124,125,126,127]; however, the conclusions from these studies are indeterminant. In addition, meta-analysis reviews of quantitative research documenting the association between neighborhoods and educational outcomes all concluded that individual academic results were significantly associated with neighborhood characteristics such as poverty, a poor educational climate, the proportion of ethnic/migrant groups, and social disorganization and other built environment that promotes parental engagement and participation [128].

The variables that capture community affluence are from the five-year American Community Survey (According to the U.S. Bureau of Census, the American Community Survey (ACS) helps local officials, community leaders, and businesses understand the changes in their communities. It is the premier source for detailed population and housing information about the U.S.) at the unified school district level. The data are available from 2009 to 2019 and match the schools’ data. All analyses were in R Environment [129]. To conduct PaCMAP while preserving the local and global structures, we follow a two-step cluster analysis [63] that allows variability among the created clusters [130]. The first step involved calculating Gower’s distance matrix in separating schools into (dis)similar groups using the daisy function in the cluster package [131] (see [132,133] regarding the advantages of Gower’s distance matrix). For PaCMAP, we recreated and executed Python’s pacmap function [11] using a reticulate package [134] in the R environment, the input being the Gowers distance of each school system. Fine-tuning the pacmap function requires specifying the size of the local neighborhood or the number of neighboring sample points (n-neighbors) used for manifold approximation. Larger values result in more global views of the manifold, while smaller values preserve local data. The number of neighbors should range from 2 to 100, but we set it to “NULL” to let the algorithm determine the optimal number of neighbors. Principal component analysis initialized the lower-dimensional embedding at the default levels.

We also used PaCMAP results to identify the medoids of the original data set using the partitioning around medoids (PAM) algorithm that partitions (cluster) based on the specified number of groups, as PAM is less sensitive to outliers [1]. The number represents the resampling iterations; repeats are the number of complete sets of folds to compute, and classProbs is a logical function telling the algorithm to compute class probabilities for classification models (along with predicted values) in each resample. After these two steps, we combined the PaCMAP results with the original dataset that added three variables to the new dataset (i.e., a cluster variable, location of medoids, and the two-dimension variable from the PaCMAP. The third step involved multiclass classification, where the dependent variable was the created cluster indicator variable, and the independent variables included scaled and centered demographic, social, and community variables. The caret package [80] was the primary tool for multiclass classification analysis using the different methods discussed in the multiclass classification section. To be consistent, the features of all models for the train control function (trainControl) were: method = “repeatedcv”, number = 10, repeats = 3, classProbs = TRUE, summaryFunction = multiClassSummary, and returnResamp = “all”. The repeating cross-validation with precisely the same splitting yields the same result for every repetition. The summaryFunction calculates performance metrics across samples, in this case, a multiclass function, and returnResamp is a character string indicating what to save regarding the resampled summary metrics, which can be all metrics. After selecting the best model by referencing the metrics discussed above, we identified and visualized the critical features of each cluster using a VIP package [135]. The package is a general framework for constructing variable importance plots from various machine learning models. We arbitrarily extracted 15 features for each best model using the variance-based methods [135,136].

4. Results and Discussion

4.1. Results from Unsupervised Learning Analyses

Because of space, the summary statistics of all variables for each school system are in Appendix A, and the entire dataset is available upon reasonable request. The summary statistics on the SPS are in Table 1. As stated before, the five broad categories of SPS are 100–150 (exceeds expectations), 85–99.9 (meets expectation), 70–84.9 (needs improvement), 50–69.9 (at risk), and 0–49.9 (Fail). The results in Table 1 show that the school performance scores are within the meets expectation and needs improvement category for all school systems and three school years. However, there is high variability in school performance, as exhibited by significant standard deviation, range, and coefficient of variation. The variability in SPS varied by the school system and by year. For example, it was low in 2017/18 for the elementary and combination school systems and high in 2016/17 for the high school system.

Table 1.

Summary statistics of school performance score by period.

Before clustering, the estimated Hopkins statistics to measure the clustering tendency were 0.870, 0.842, and 0.823 for elementary, combination, and high school systems. Note that when Hopkins’ statistic is equal to 0.5, the dataset reveals no clustering structure; when the statistic is close to 1.0, imply significant evidence that the data might be cluster-able and a value close to 0, in this case, the test is indecisive, and data are neither clustered nor random [23,137]. Based on the above results, we can reject the null hypothesis and conclude that the Louisiana education dataset has sufficient structures in the data to justify cluster analysis.

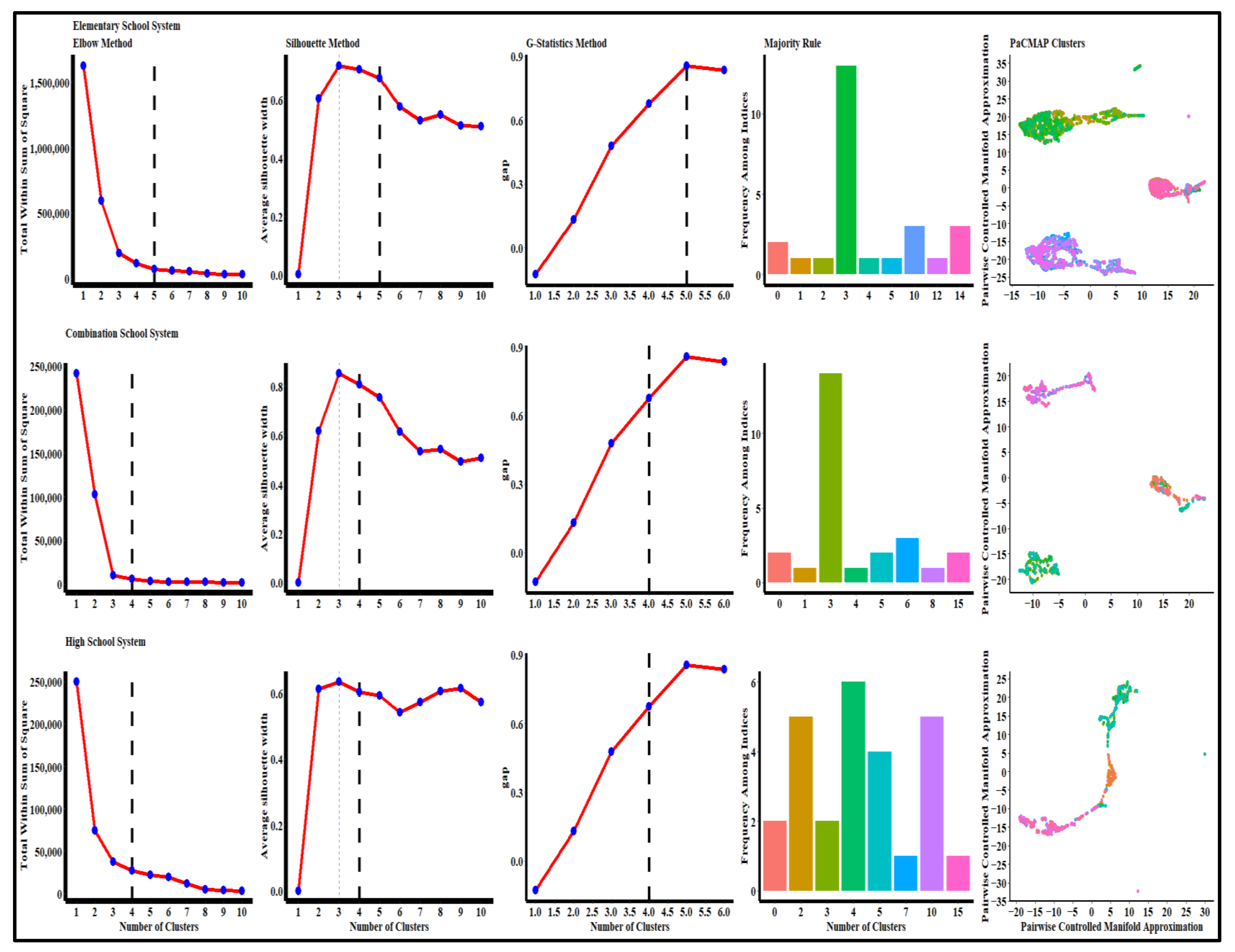

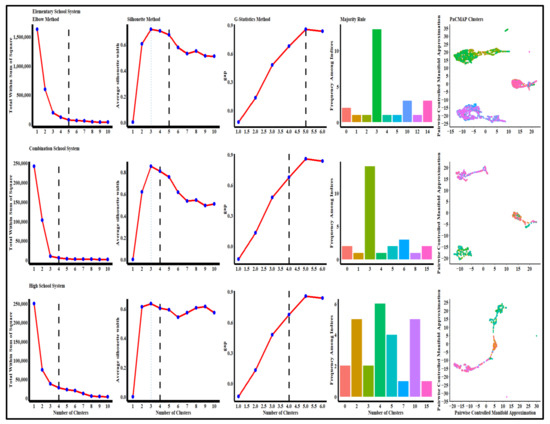

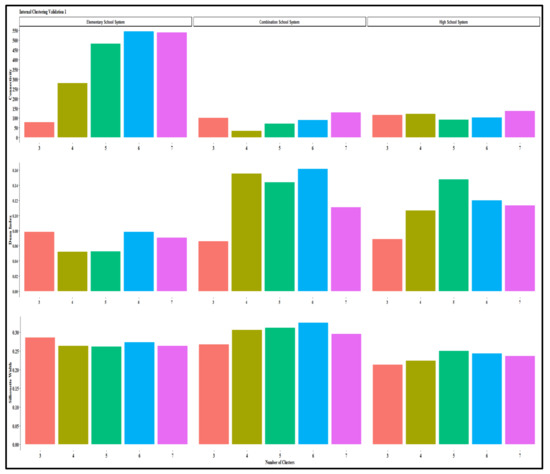

As shown in Figure 2, the manifold approximation and projection (PaCMAP) turn the whole dataset into a two-dimension scale, giving each data point a location on a map, thereby avoiding crowding them in the center of the map, and there are clear boundaries between clusters. After dimensionality reduction, we use visual inspection and other statistical measures to identify optimal numbers of clusters on the two-dimensional data, and the results are in Figure 2. As discussed in the literature review, the Elbow and the Silhouette methods in Figure 2 measure a global clustering characteristic. The Gap statistic formalizes the elbow/silhouette heuristic to estimate the optimal number of clusters.

Figure 2.

Potential optimal numbers of clusters.

The majority rule in Figure 2 measures the appropriateness of clusters using various indices [138]. Most statistical techniques for the elementary school system support five optimal clusters that provide the best visualization results with few outliers and minimal overlaps. For the combination and high school systems, visualization and statistical measures proposed four optimal clusters for each school system. Notice the two outliers for the high school system. The elementary school system’s inflection points for the Elbow, Silhouette, and G-Statistics methods are at five clusters. Indices in the majority rule also identify five optimal clusters, likewise the PaCMAP visualization. For the combination school’s system, whereas the Elbow, Silhouette, majority rule, and PaCMAP methods propose four clusters, the first inflection point for the G-Statistics method is five. While the Elbow and Silhouette methods suggest four clusters, the G-Statistics methods suggest five, the majority rule proposes four and six clusters for the high schools’ system, and the PaCMAP identifies six clusters with two outliers.

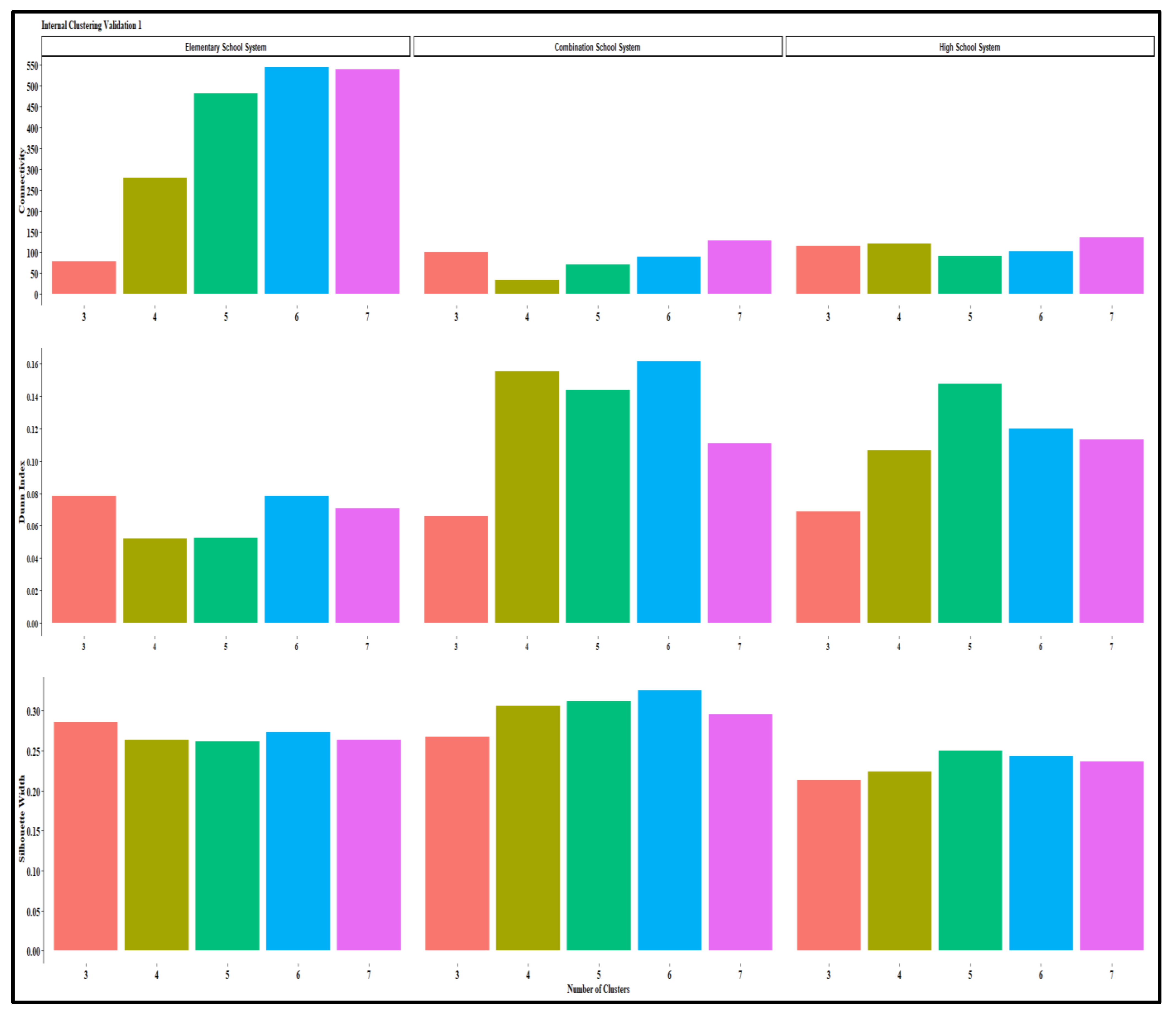

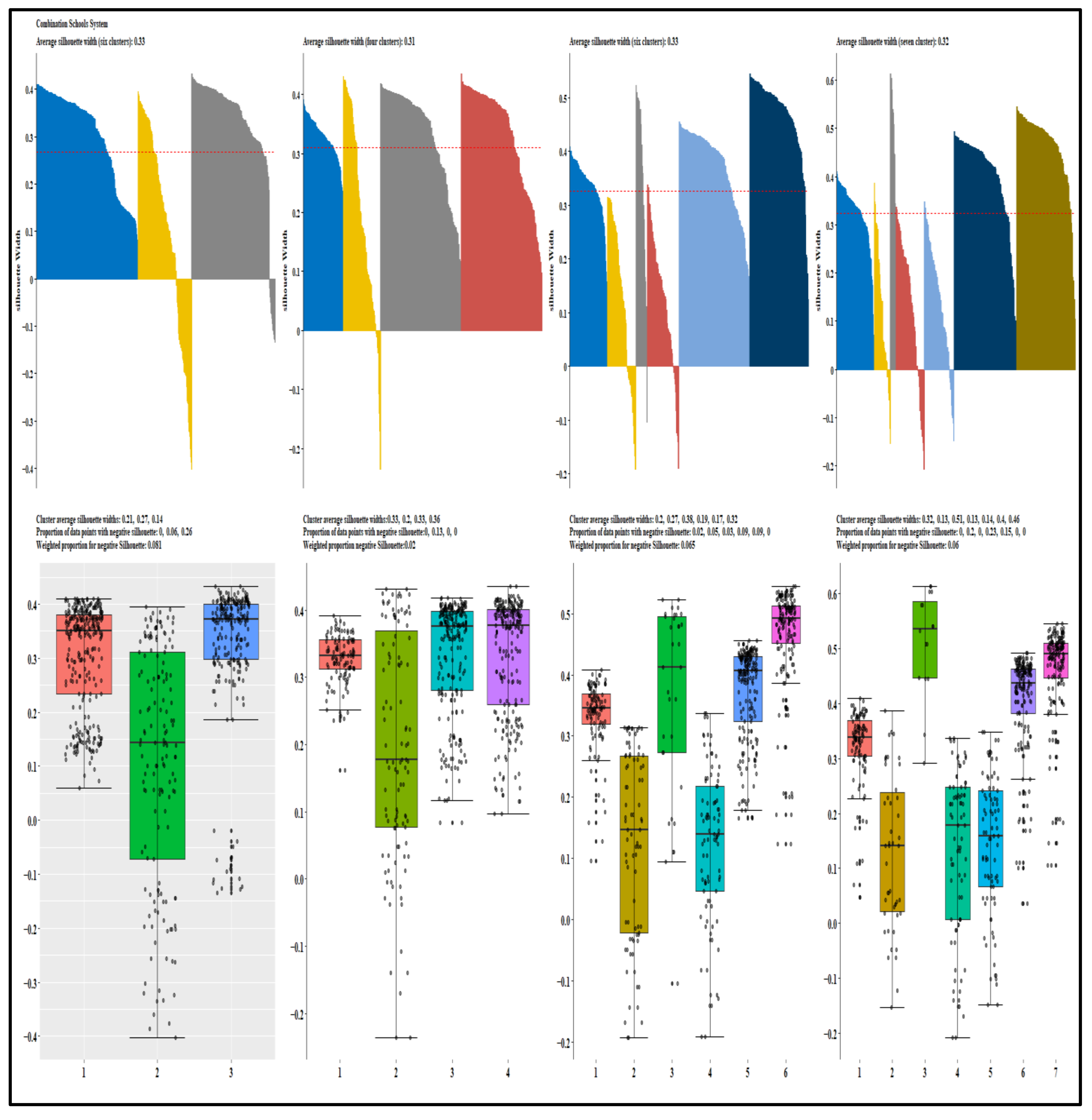

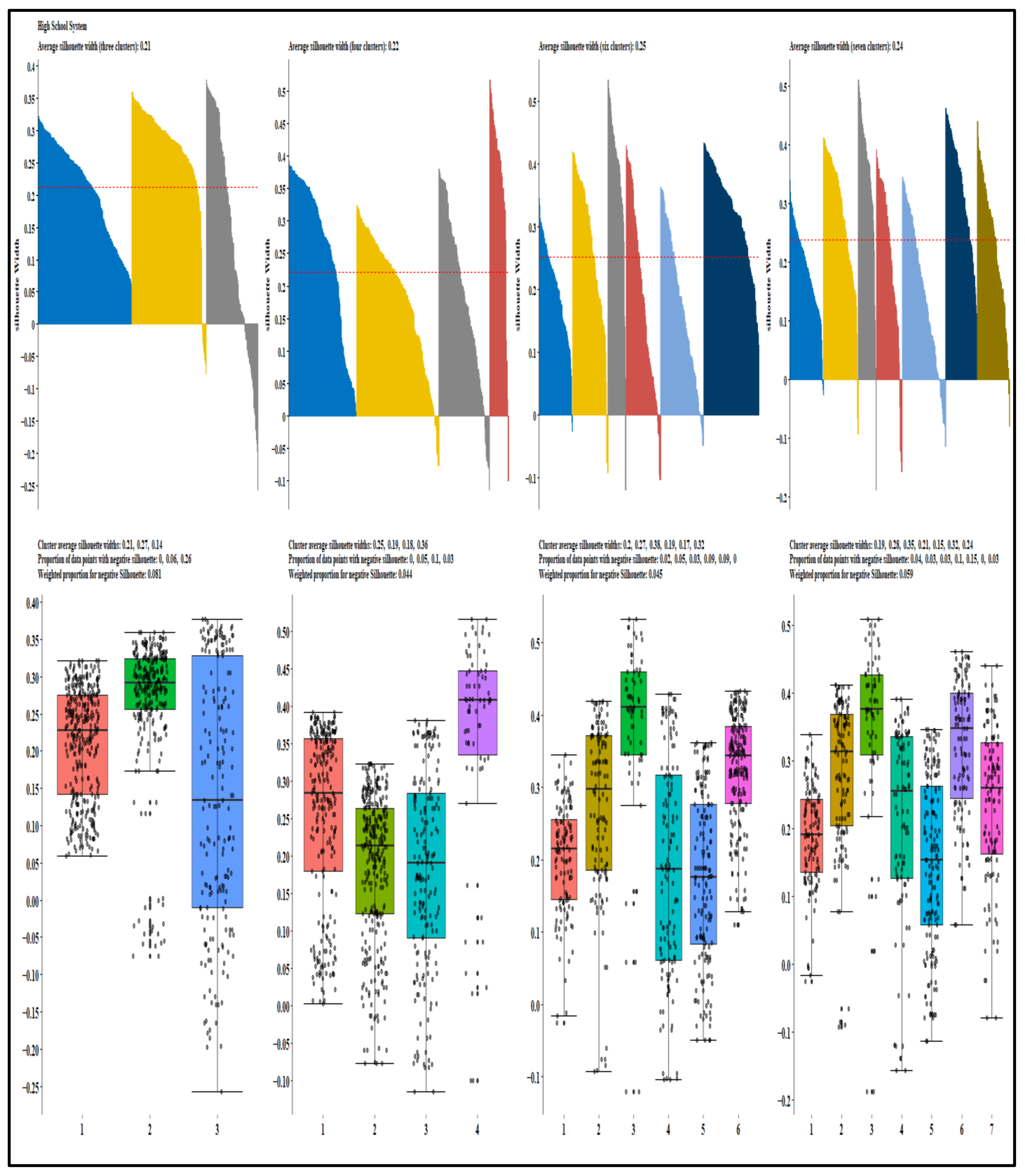

The results of internal clustering validation measures (i.e., connectivity index, Dunn Index, and Silhouette width) based on the Gowers distance of the original data and PAM are in Figure 3. The optimum value of the connectivity index should be minimum; Silhouette should be maximum; likewise, the Dunn index. In Figure 3, the three measures suggest three, six, and four optimal clusters for the elementary school system. Analogous results from the three measures are four, six, or seven for the combination school system and four, six, or seven for the secondary school system. The results from the three measures are as expected due to heterogeneity in the data. In Figure 2, each index has limitations, especially for a large dataset with outliers, and the results rely heavily on properties intrinsic to the dataset [12,74]. However, the Silhouette width is a widely used index for internal clustering validation and determining the quality of clusters and the entire classification [139]. The index provides enough information about clustering quality for unlabeled data and, therefore, suggests more accurate results regarding optimal numbers of clusters existing in the dataset.

Figure 3.

Internal clustering validation measures.

To get the best and most consistent results, we use the Silhouette width results and modify the measure to determine the best-bet numbers of groups by comparing the weighted proportions of data points at the boundaries of each created cluster using the following formula (, where is the weighted negative Silhouette proportion, K is the number of clusters, , is the size of the cluster (number of observations in the cluster), n is the sample size (observations in the dataset), and is the number of data points with negative Silhouette width in each cluster. The weight can evaluate results produced by similar or different algorithms on equal or different numbers of clusters). The results from the proposed formula are such that the weighted ratio is zero for perfect partitioning and one for random data points with no visible groups.

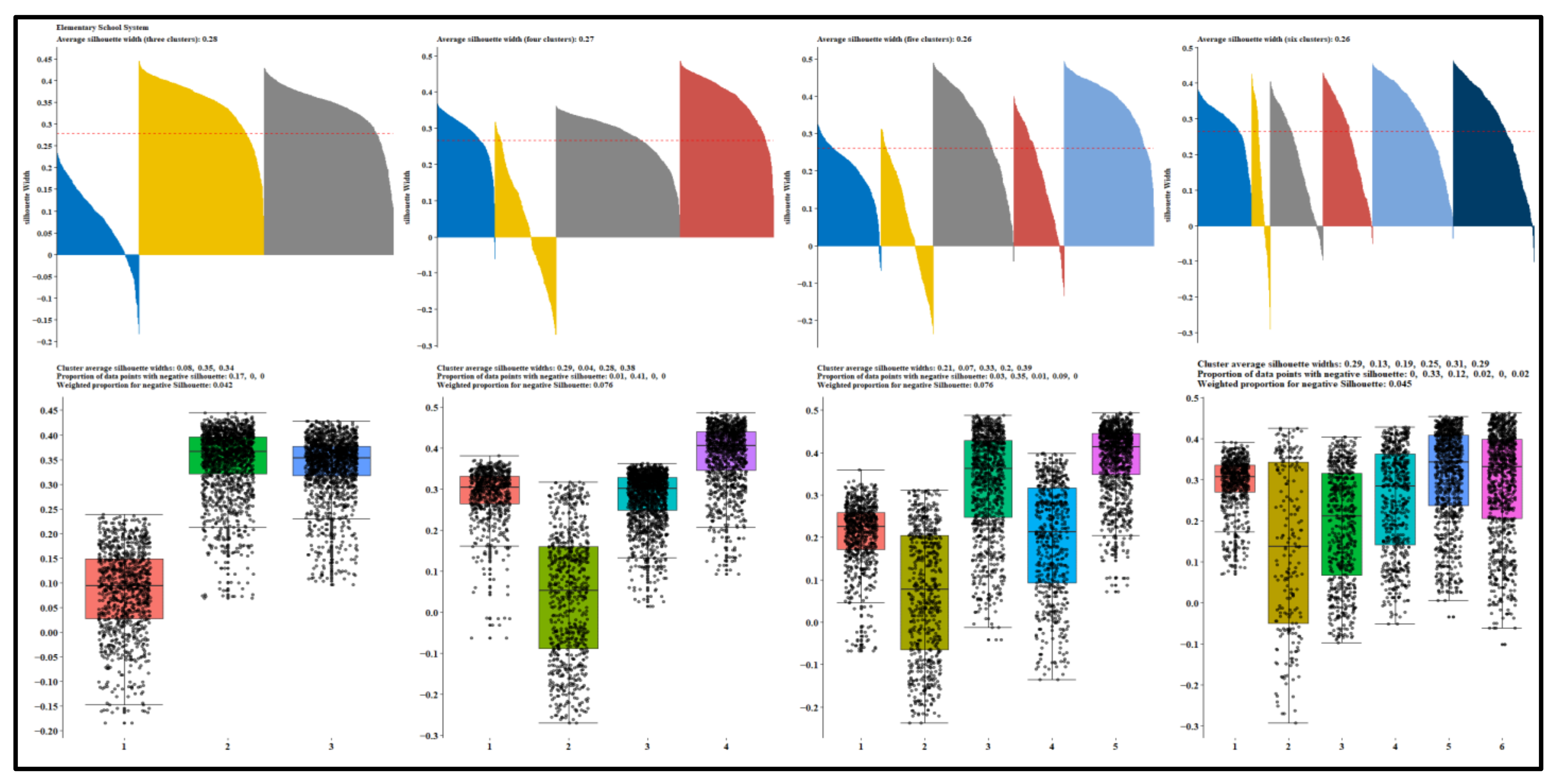

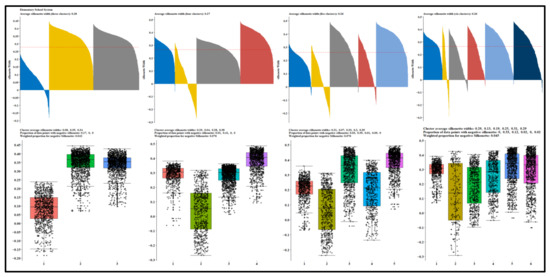

The first panel of Figure 4 presents the reference points, suggested optimal clusters identified in Figure 2 and Figure 3, and potential optimal clusters using the proposed weighted formula for the elementary schools’ system; comparable results for the combination and high school systems are in Appendix B. In Figure 4, regarding the elementary schools’ system, the average Silhouette width and the weighted proportion for the width under three clusters were 0.26 and 0.129, compared to 0.26 and 0.093 for four clusters, 0.26 and 0.115 for five clusters, 0.27 and 0.041 for six clusters. In Figure 4, the elementary school system reference point is five clusters, and the results show that 11.5 percent of data points were on the boundaries compared to 12.9, 9.3, and 4.1 percent when portioned into three, four, and six, respectively. Notice that 13 and 39 percent of data points in clusters one and two under the four cluster assumptions were on the cluster boundaries. Analogous numbers are 2 (cluster 1), 17 (cluster 2), and 13 (cluster 4) under the six clusters assumption. Six clusters partition the elementary school’s system data better by positioning a few (per cluster) data points on the cluster’s boundaries. Moreover, the second panel of Figure 4 is the box plot of the average Silhouette of the referenced clusters. For the elementary school system, the results from six clusters show few outliers compared to the remaining clusters.

Figure 4.

Proportional data points on cluster boundaries and their distributions for the elementary school system.

The equivalent results for the combination and high school systems are in Appendix B. The weighted proportion for negative Silhouette width from the combination schools’ system suggests selecting among three, four, and seven clusters that indicate that 5.8, 6.0, and 6.4 percent of the data points were on boundaries. For six clusters, 12.8 percent of data points are on the borders. Partitioning the combination schools’ system into four groups produced better results. For example, 14 and 33 percent of clusters 1 and 3 are on boundaries compared to 7 and 25 data points in clusters 1 and 2 when the data is portioned into four clusters. The results for the high school system imply retaining seven groups that position only 5.3 percent of the data point on the boundaries of the created clusters, and only cluster one has 11 percent of the data points on the edge of the group. Also, the data points with a negative Silhouette are less than one percent among all seven clusters for the high school system.

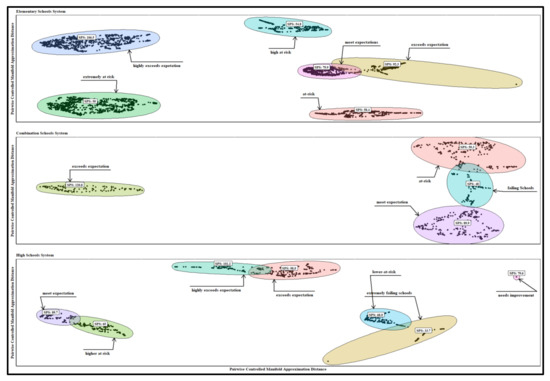

Figure 5 presents the annotated clusters with school performance scores and ellipses created using the Khachiyan algorithm (Gács and Lovász, 1981); the distance between the centroid and the furthest point in the cluster defines the radius of the circle. In Figure 5, SPS is the cluster medoid regarding SPS. In Figure 5, the pairwise controlled manifold approximation preserves both inter-cluster (global structure) and intra-cluster distance (local structure) distances (data geometry), the position of each cluster determines relatedness between clusters, and the size and spread of each cluster are proportional to the variance of the group and cluster membership. Figure 5 shows four unique groups of school clusters under the elementary schools system, denoted as extremely at-risk schools (the medoid SPS is 50.0), high-at-risk schools (the medoid SPS is 54.70), at-risk schools (the medoid SPS is 58.4, and exceeds expectations (the medoid SPS is 106.5). The meet-expectation cluster has a medoid score of 79.7, intersecting with exceeds expectations cluster with a medoid score of 92.3. Specific features for intersecting clusters are similar or share comparable attributes. The intersections or overlaps imply that the data points on the boundaries of these clusters are closer to data points in the neighboring cluster than to data points in its cluster.

Figure 5.

School systems optimal clusters annotated with school performance scores.

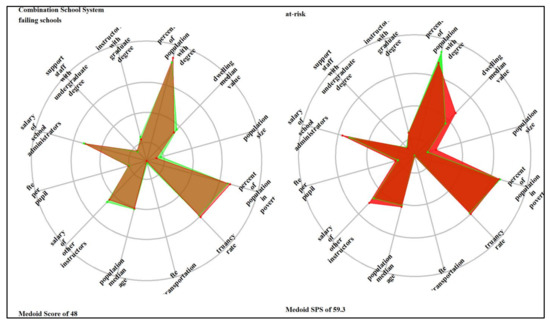

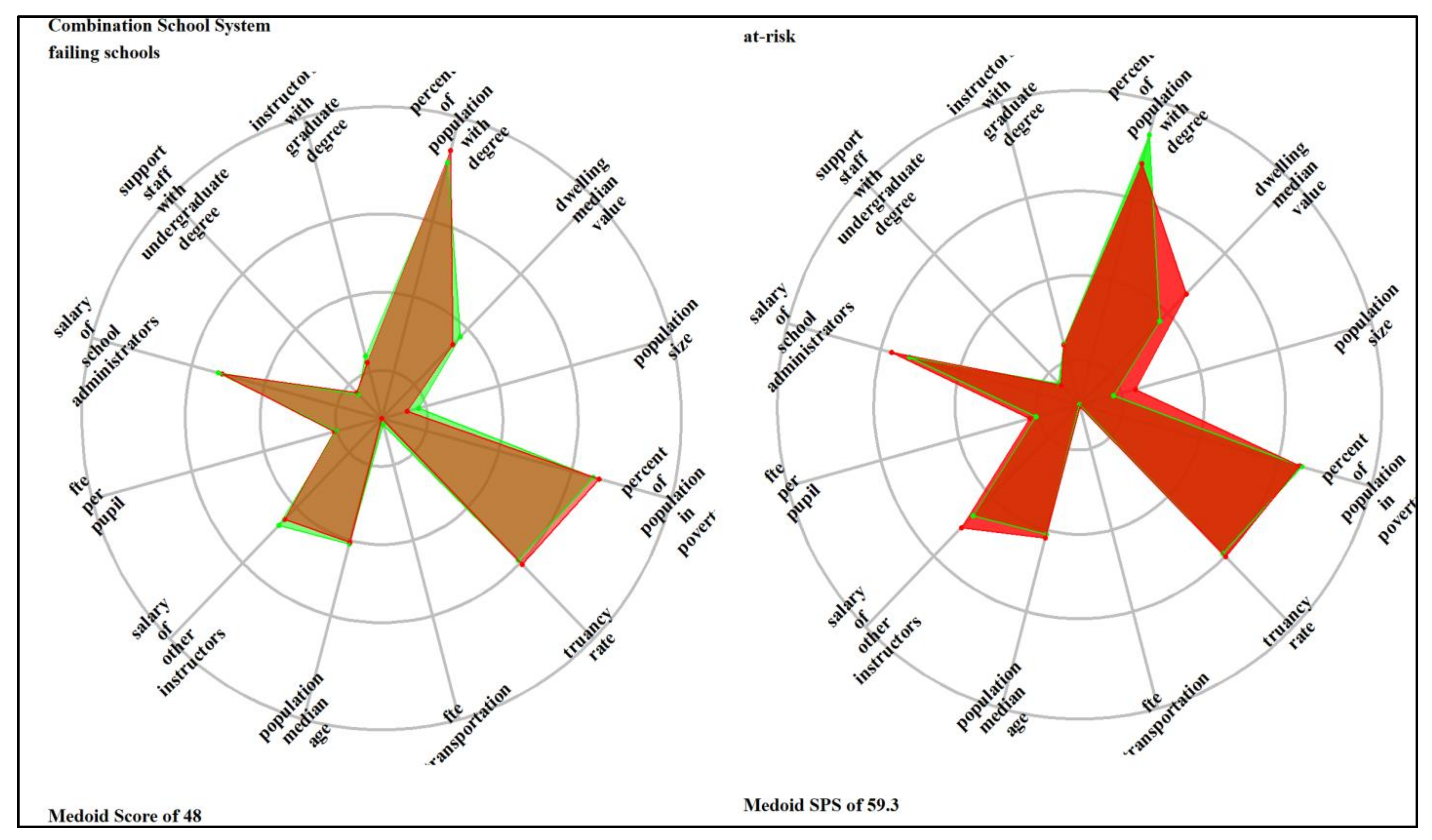

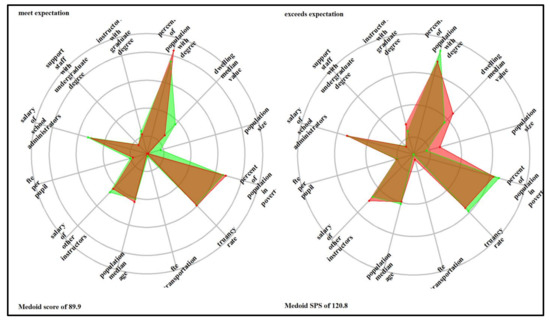

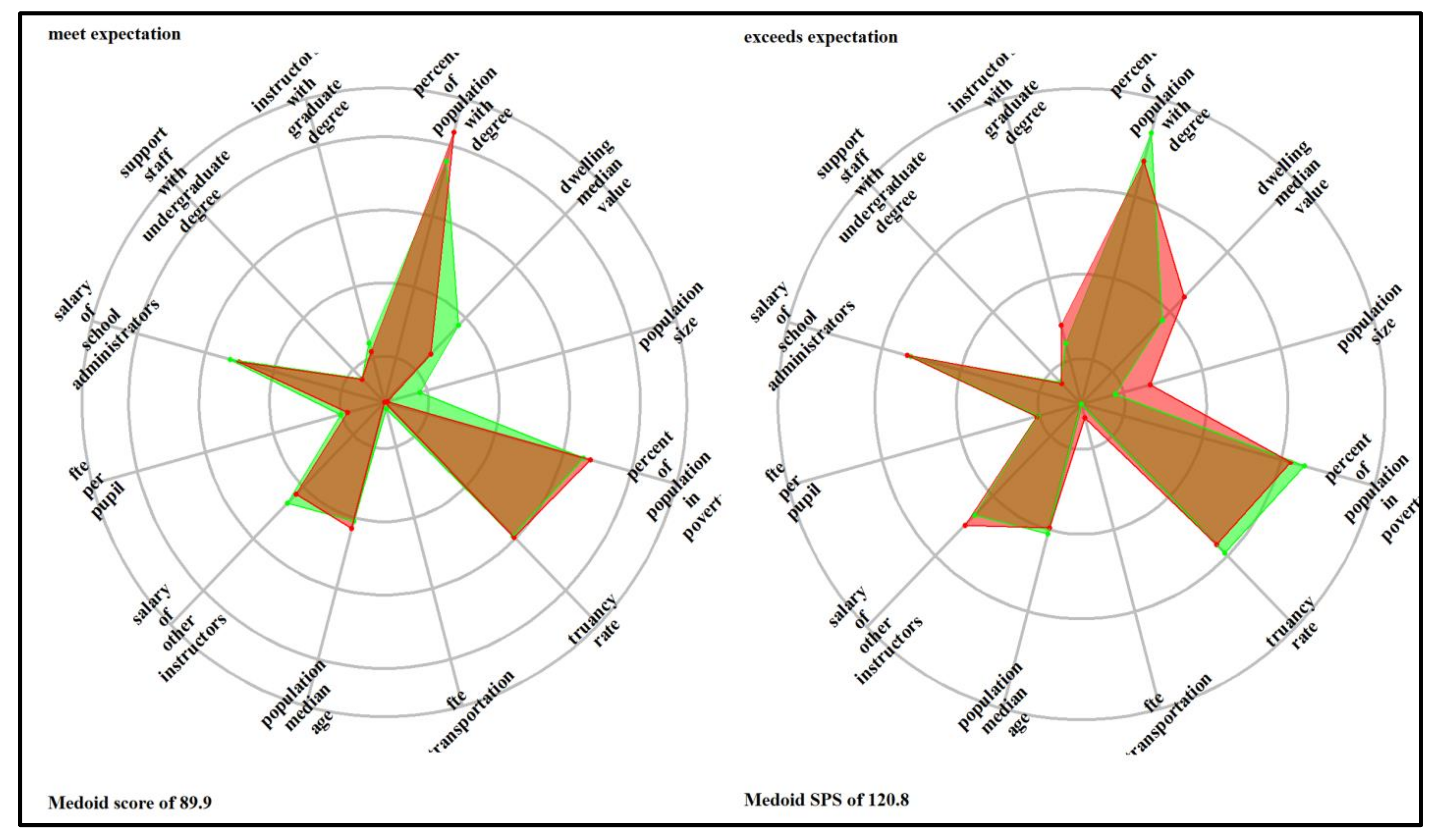

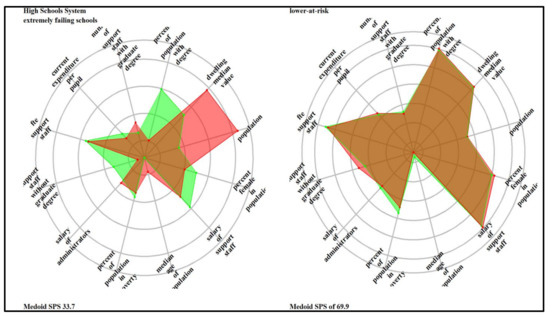

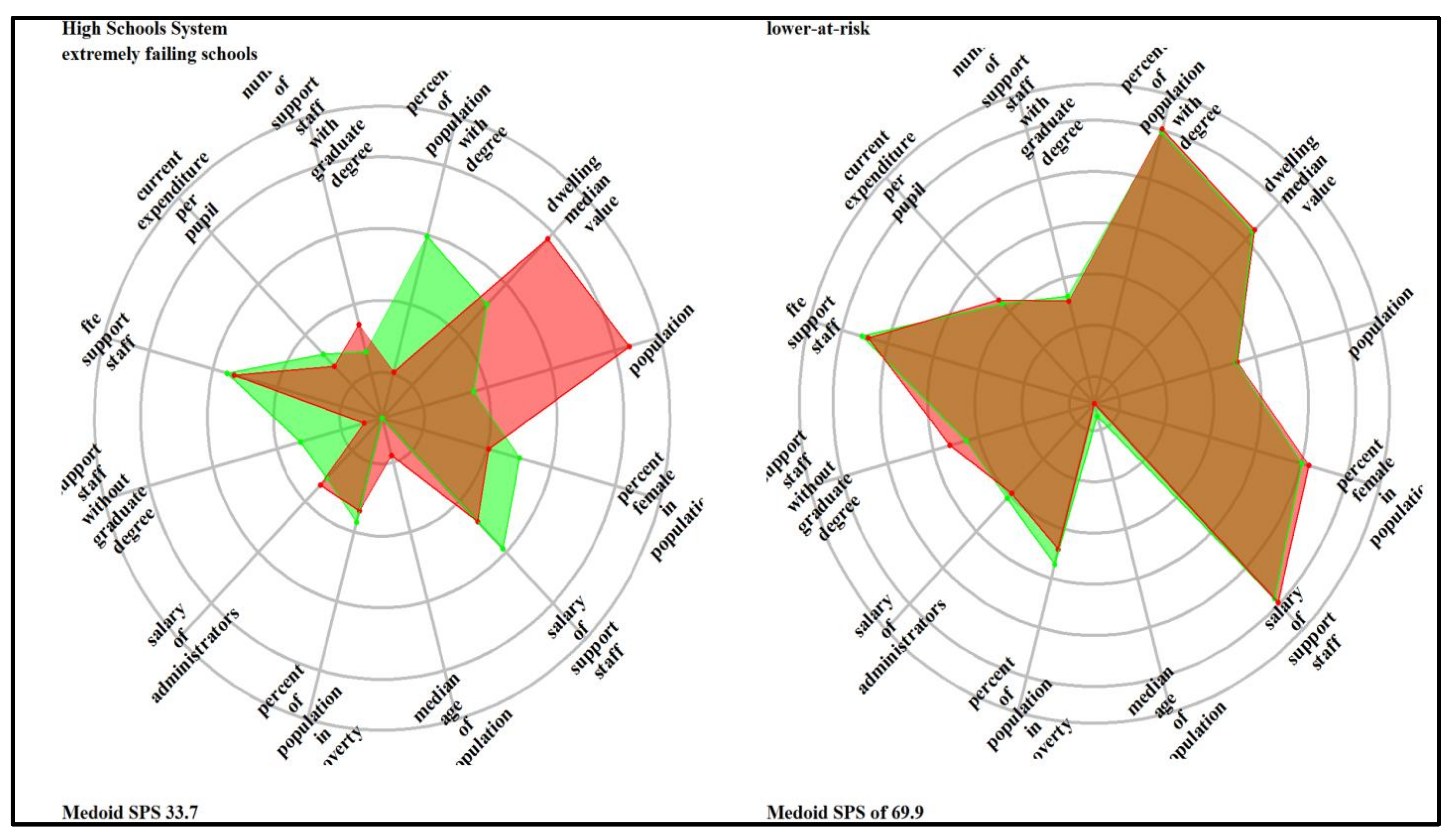

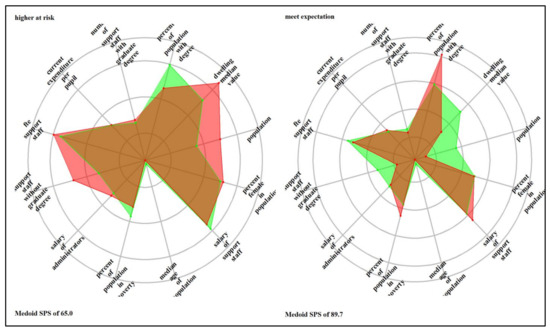

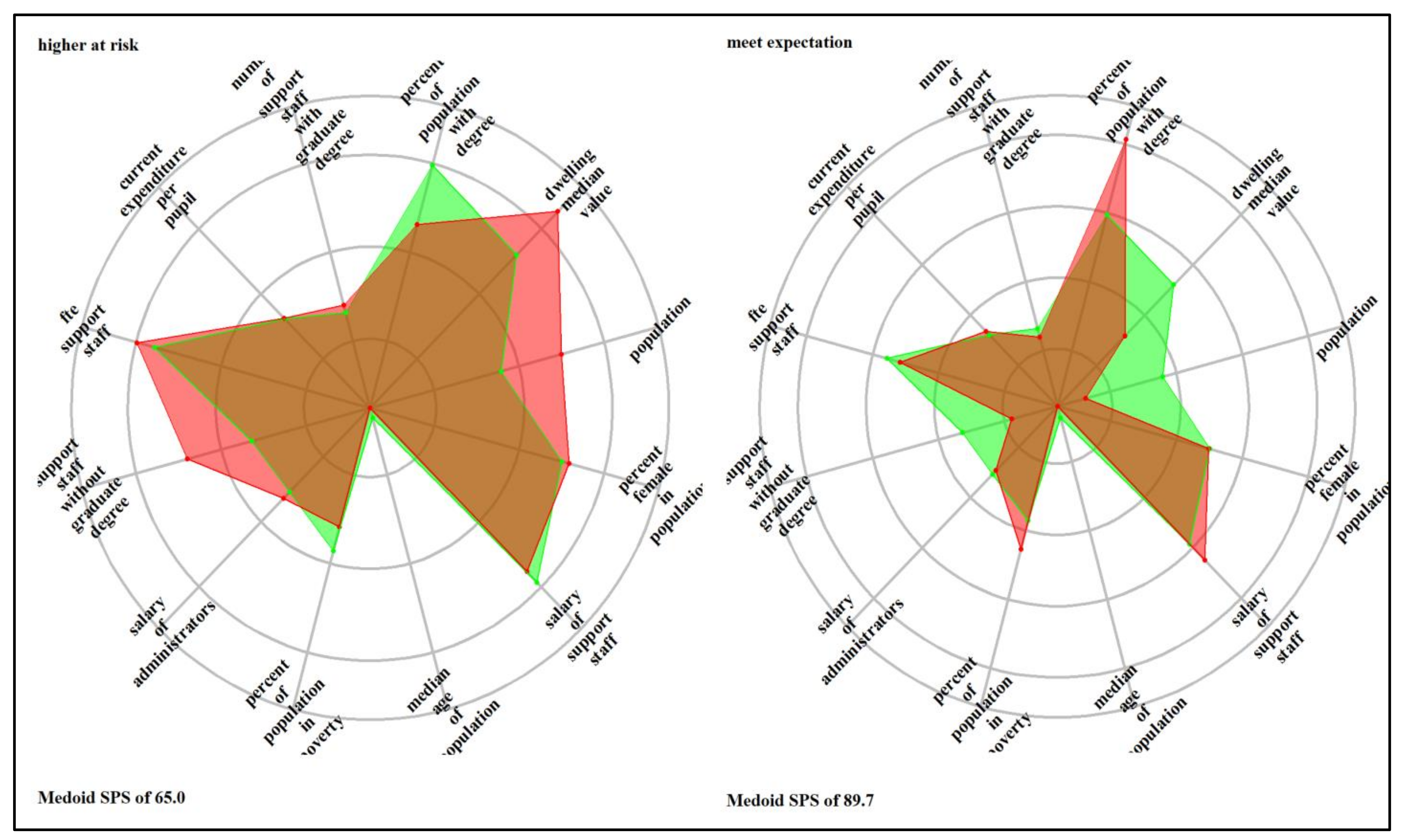

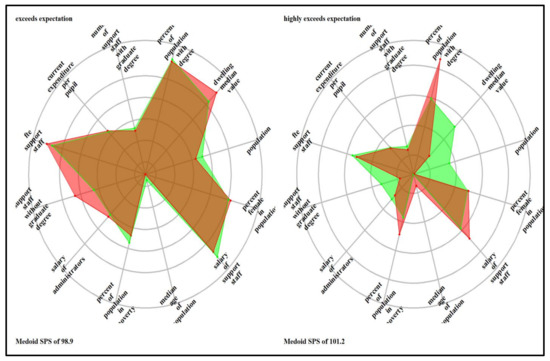

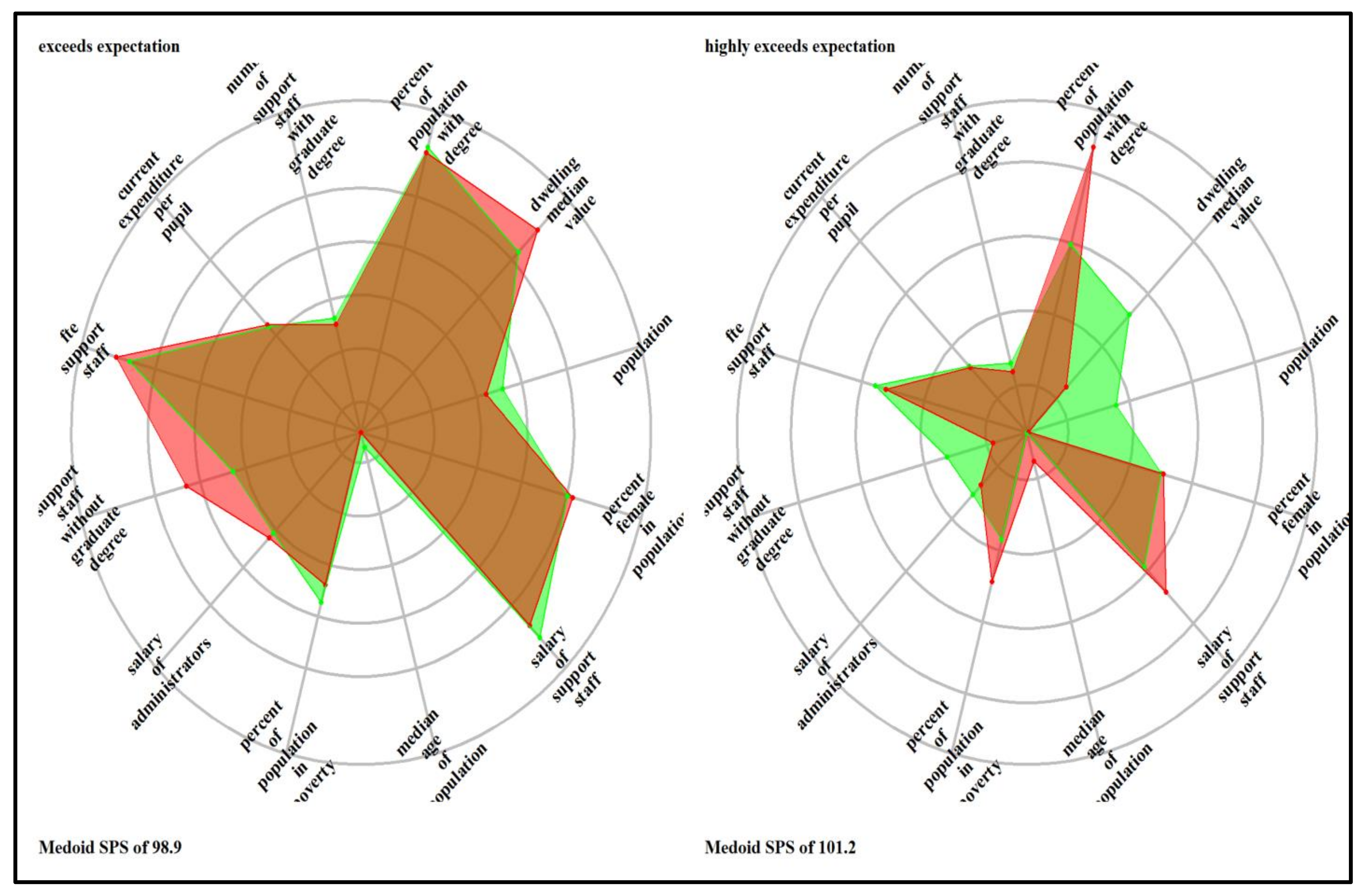

Figure 5 also shows one unique cluster under the combination schools’ system; the remaining three are interrelated. The cluster with the failing schools had a medoid score of 48.0 and intersected with the at-risk Schools’ cluster with a medoid score of 59.3 and meets expectation schools with medoid scores of 89.9. The unique “exceed expectation schools cluster” had a medoid score of 120.8. For the high school system, one of the seven clusters is unique, that is, the needs improvement cluster with a medoid SPS of 79.6. This unique cluster contained two outliers; therefore, they were removed for further analysis. Three groups, each with two clusters, are interrelated. The first two interconnected clusters are extremely failing schools (the medoid SPS is 33.7) and lower- at-risk cluster (medoid SPS is 68.9). While the upper-at-risk Schools’ cluster interconnects with meet expectation clusters with the medoid SPS of 65.0 and 89.7, respectively, the exceeds expectation cluster (medoid SPS is 98.9) intersects with exceeds expectation cluster with a medoid of 101.3. The results in Figure 5 suggest that the organization of school performance in the State of Louisiana is not along a single dimension of indicators but meaningfully organized into heterogeneous clusters with unique and interconnected features.

4.2. Results from Supervised Learning Analyses

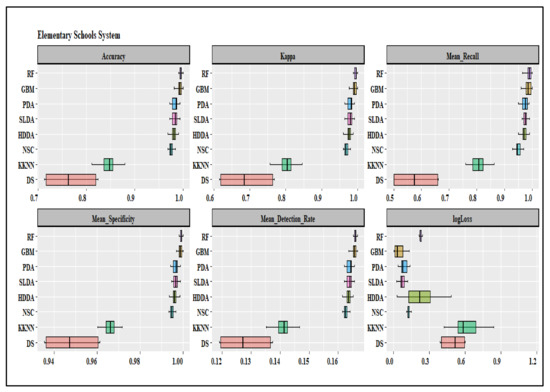

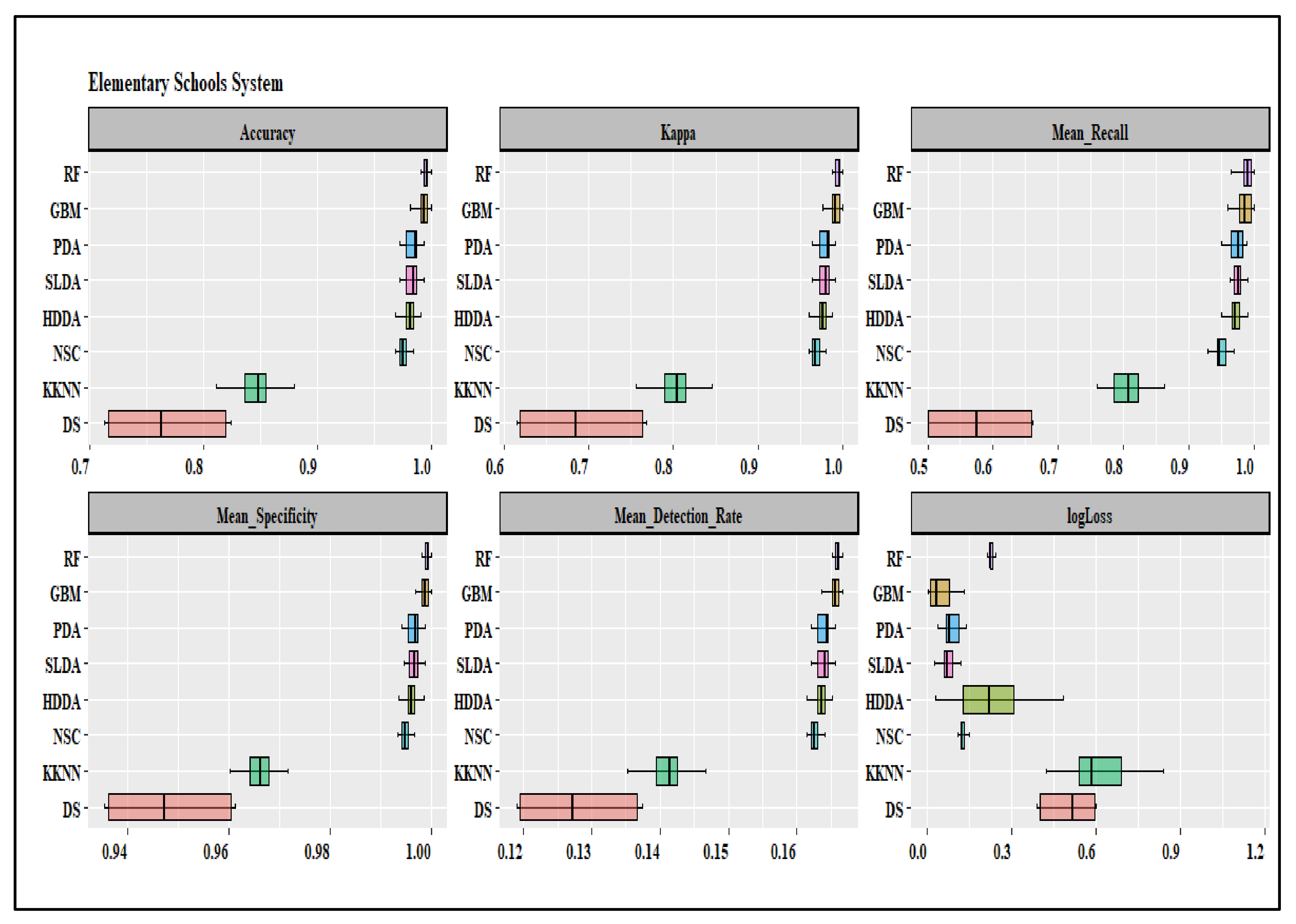

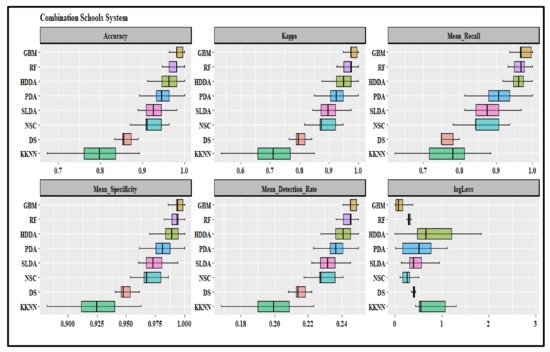

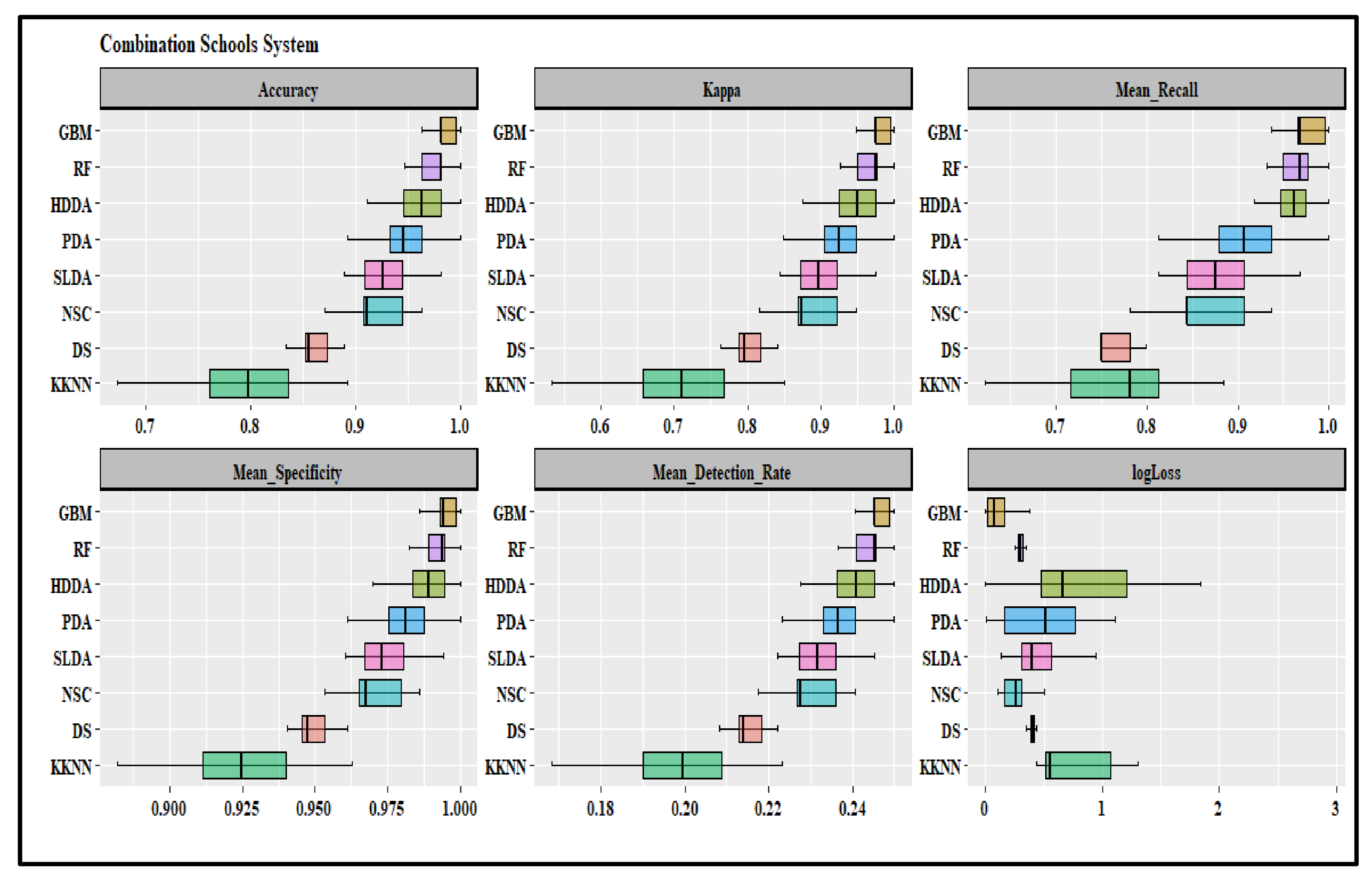

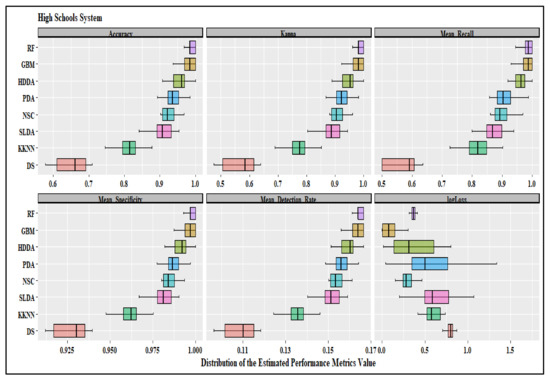

Reporting and comparing performance metrics is customary when evaluating machine learning models. Each metric has advantages and disadvantages; each reflects a different aspect of predictive performance. We used the holdout method to determine how statistical analysis can transform into a dataset, as explained in Section 2.2 and 2.3. The experimental part of the research covers the design of the test environment plus the formation of each model by splinting the datasets into training (80%) and validation (20%) sets. The algorithms used in this study include decision trees (DST), k-nearest neighbor (KKNN), stabilized linear discriminant analysis (SLDA), NSC (nearest shrunken centroids (NSC), penalized discriminant analysis (PDA), HDDA (high dimensional discriminant analysis (HDDA), random forest (RF), and gradient boosting machine (GBM). These are popular algorithms for multiclass classification. The summary statistics of each model’s performance metrics are in Table 2, and the relative distributions of the metrics are in Appendix C.

Table 2.

Metrics of machine learning models.

By comparing both “more-is-better” and “low-is-better” measures, the performance metrics in Table 2 results indicate that the random forest (RF) and gradient boosting machine (GBM) algorithms perform betters in predicting the created clusters for all school systems. More-is-better implies a preference for higher scores, and less-is-better means lower scores indicate better performance. Based on t-test results and more-is-better metrics, the RF and GBM models have the highest but similar scores with low standard deviations. The RF and GBM generated the best results related to the accuracy, kappa, recall, specificity, and detection rates (more-is-better) performance measures, with relatively small standard deviations compared to the results from other algorithms. The results imply that the RF and GBM results are more accurate and stable than other models. Also, the mean log losses for the RF and GBM are the lowest among all models. The mean log losses are statistically significantly lower for the GBM under the elementary and high school systems but higher for the combination school system. Therefore, we use the GBM results to identify features for the elementary and high school systems and RF results for the combination school system. Results in Table 2 show that the Decision Tree and K-nearest neighbor performed poorly across the three school systems. The emphasis of more-is-better performance measures is on avoiding “False Negative” or Type II errors. In statistics, type II errors mean failing to reject the null hypothesis when it is false. For RF and GBM, better measures are more than 0.95, implying that at least 95% of cluster members are in the ideal groups. A relatively higher detection rate suggests that the two algorithms are more likely to identify those members that do not belong in either cluster. In Table 2, the log-loss also focuses on model prediction performance. A model with perfect prediction has a log-loss score of 0; in other words, the model predicts each observation’s probability as the actual value. Therefore, the log-loss indicates how good or bad the prediction results are by denoting how far the predictions are from the actual values; thus, lower is better. Based on the results in Table 2, the RF and GBM models outperform all models for all school systems using more-is-better and low-is-better performance metrics.

For the elementary school system, we used the GBM models to identify critical features influencing school performance. Comparatively, in Table 2 (see also Appendix B), the mean and standard deviation estimates of the more-is-better performance metrics from the RF and GBM models are not statistically significantly different (p = 0.01) among the three Schools’ systems. However, while the mean values for the RF are consistently higher than those for the GBM models under the elementary school systems, the GBM model log-loss is lower (0.0448) and statistically different compared to the RF log-loss model (0.2268). Although not statistically significant, for the combination school system, the more-is-better metrics from the GBM are consistently higher and tighter (low standard deviation) compared to the RF results. Moreover, the mean Log-loss of the GBM model (0.1259) is statistically significantly lower (p = 0.01) than the mean log-loss for the RF model (0.3021). Therefore, the GBM is slightly better at predicting and classifying combination school system datasets than the RF model.

The performance metrics of the k-folds validation analysis are not statistically significant (p = 0.01) compared to the results from the training datasets. Similarly, Table 2 and Appendix C show that the RF’s more-is-better performance metrics are consistently higher but not statistically significantly different from the GBM model results. However, the mean logloss of the GBM (0.1485) is lower and statistically significant compared to the RF mean logloss (0.3651). Referring to these results, we also used GBM model results to identify the critical features of combination and high school systems. The estimated GBM best “metrics” using k-fold cross-validation were: accuracy (0.9927), kappa (0.9912), and logloss (0.012) for the elementary school system, accuracy (0.9876), kappa (0.9801), and logloss (0.023) for the combination school system and accuracy (0.9932), kappa (0.9911), and logloss (0.0165) for the high school system.

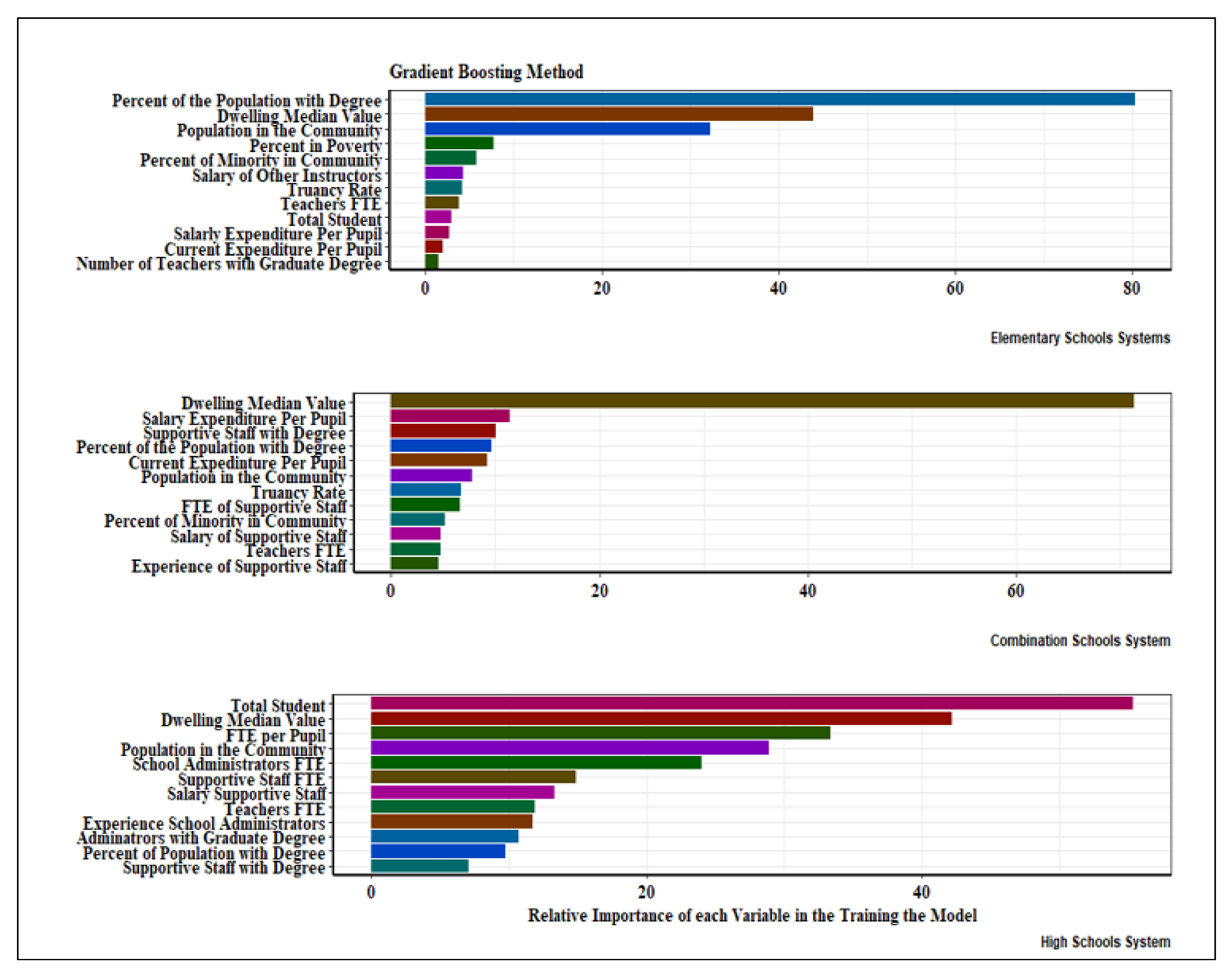

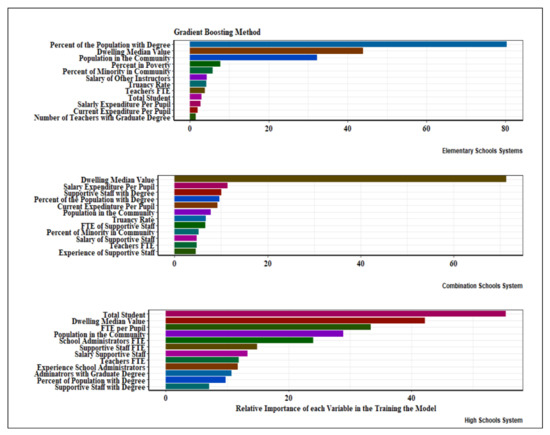

Generally, importance provides a score indicating how useful or valuable each feature was in constructing the boosted decision trees within the model. The more an attribute contributes to making critical decisions with decision trees, the higher its relative importance. Figure 6 shows the fifteen features (arbitrarily set) generated from the GBM (elementary and high school systems) and RF (combination schools system).

Figure 6.

Relative importance scores of variables at the school system levels.

In Figure 6, the critical features of the elementary school system are mainly related to community socioeconomic variables and school characteristics. The community variables (with the order of importance) include the percentage of the population with a bachelor’s degree (the dominant feature), dwelling median value, the total population in the community, the percentage of poverty in the community, and the relative number of minorities in the community. These variables are related to community affluence. The education literature always confirms a causal relationship between school performance and the socioeconomic status of communities. Socioeconomic status (SES) encompasses wealth, educational attainment, financial security, social status, and social class perception. Poverty is not a single factor but characterizes multiple physical and psychosocial stressors.

Further, SES is a consistent and reliable predictor of many life outcomes. Many studies (such as [140,141,142]) indicate that schools in low-SES communities perform poorly compared to schools in affluent neighborhoods, punctuated by the presence of minorities. Often, schools in low-SES communities have lower performance scores because students have poor cognitive development and lack language, memory, and socio-emotional processing. Consequently, their performance in schools is flawed. Moreover, the school systems in low-SES communities are often under-resourced, negatively affecting schools’ academic progress and outcomes, including inadequate education and increased dropout rates [128,143,144,145]. The State of Louisiana can reduce these risk factors through early intervention programs to improve the school systems with elevated identified critical features at the community level.

Solutions to improve the performance of Louisiana’s elementary school systems should focus on reducing teachers’ workload and boosting their morale through increased pay and other work-related incentives. The critical features related to school-level characteristics include the salary of other non-academic instructors, truancy rate, teachers’ full-time equivalent, total students in schools, salary expenditures and current expenditures per pupil, and the number of teachers with a graduate degree. These variables determine the workload, incentive, and morale of teachers and non-academic staff and the delivery of quality education to individual students. Frequently, schoolteacher and staff morale are associated with inequities from being overworked, lacking advancement, and low salaries resulting in unproductive school culture, which affects the administration, teaching, and learning [146,147]. Therefore, factors that cause low morale are from sources controllable by the school administrators and policymakers. Reducing teachers’ workload, class preparation time through smaller classrooms, administrative support, recognition, and opportunities for advancement offer administrators leverage to enhance or change the school culture.

The first four significant critical feature of the combination school system is the median dwelling values (the dominant feature), salary expenditure per pupil, support staff with a degree, and percentage of the population with a degree. Except for the experience of the support staff, although not in a similar order, other remaining variables appear as critical features in the elementary school’s system. Apart from community affluence and teachers’ and staff morale, the support staff experience was also an essential feature of the combination school system. Positive school culture thrives by including support staff and all non-teaching teams who play a crucial role in ensuring students learn in a safe and supportive learning environment. They can foster positive, trusting relationships with students and improve the school climate by encouraging parent and family involvement in their student’s education [148,149]. In Louisiana, schools rely on the professional input and expertise of a range of staff; some work alongside teachers, and some work behind the scenes to ensure an efficient infrastructure for effective teaching and learning. As the chief executive officers, the school principals should initiate a consistent, compelling reward system to motivate their job performance and general welfare. Often overlooked, legislators should ensure that the combination school system has a pool of efficient and motivated support staff to support learning in this diverse and complex school system.

The four dominant features of the high school system are total students (the most predominant), dwelling median value, the population in the community, and full-time equivalent per pupil. The second groups are variables related to workload (i.e., school administrator, support staff, and teacher FTE) and incentives (i.e., the salary of support staff). The remaining variables are allied to the experience and education of administrators and teachers (i.e., the experience of administrators, administrators with a graduate degree, and support staff with a degree) and a community-level variable (i.e., the percent of the population with a degree). Apart from community affluence, the experience and education of administrators and support staff were critical features within the high school systems. A successful school is about much more than teaching. While good teaching and learning are crucial, experienced administrators are vital in providing a well-rounded and effective teaching environment. Experienced administrators and support staff allow academic staff and teachers to focus on teaching. At the same time, they create robust systems for accountability, policies, and procedures to ensure that teaching and learning flow as smoothly as possible. An effective administration department can extract and analyze critical data to inform schools’ strategic decisions around education provision [150,151,152,153].

In addition, employee retention does not have a one-size-fits-all solution. Each school system and individual school must work purposefully to devise plans to retain its most influential administrators and teachers [149,154]. The State of Louisiana can reduce administrators turnover through beneficial job contracts, the tenure system, and a higher salary for administrators and teachers in the high school system. Additionally, creating a positive disciplinary environment lowers the odds of principals moving to another school, especially in high concentrations of students of color. Moreover, allowing the administrators to influence and determine teacher professional development and budgeting decrease the likelihood of principal turnovers.

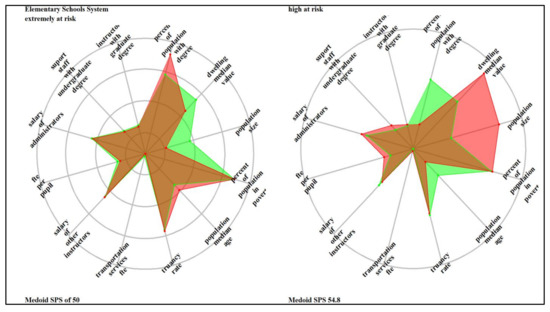

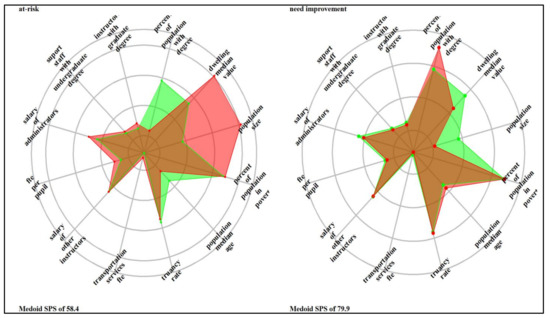

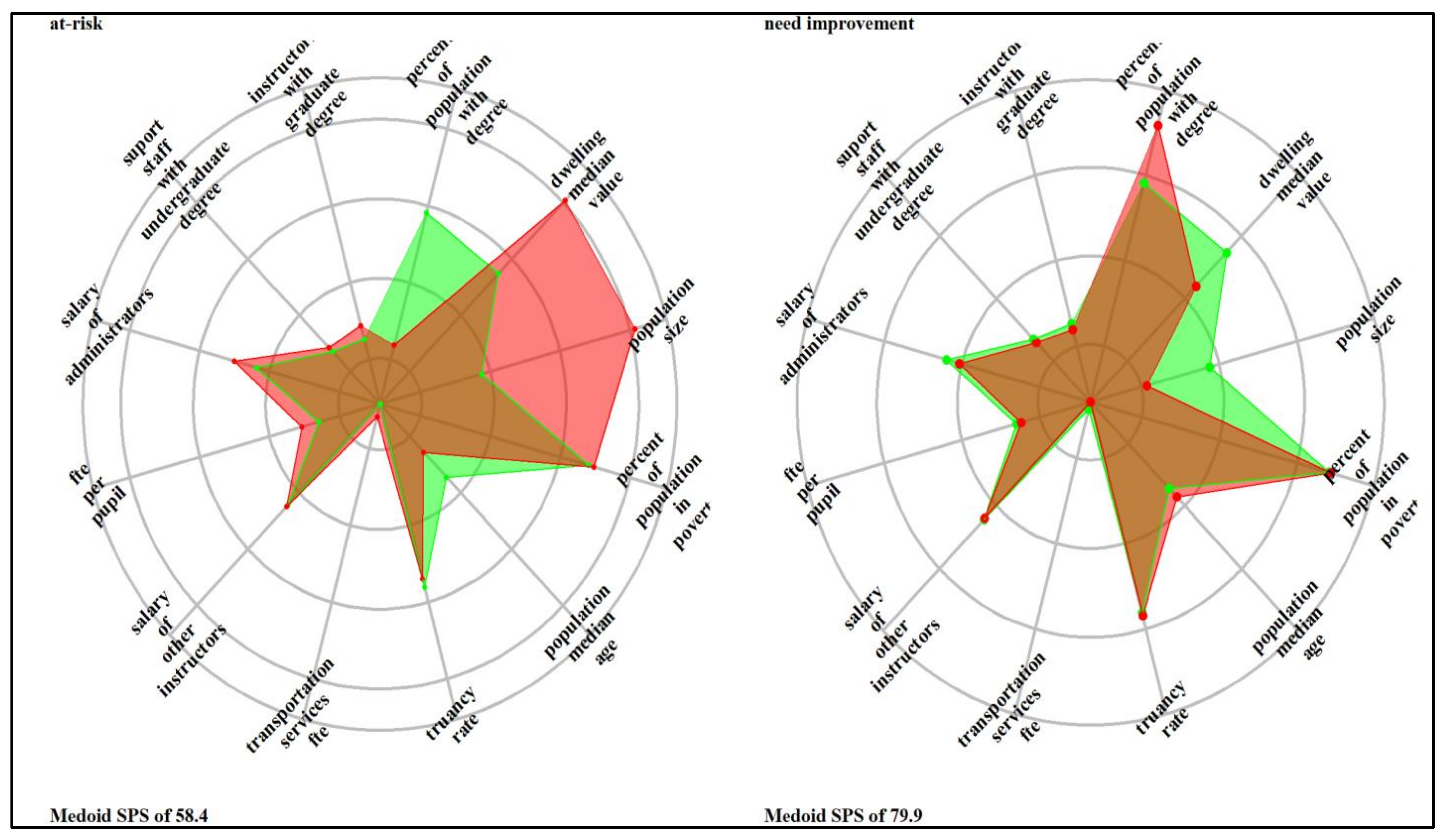

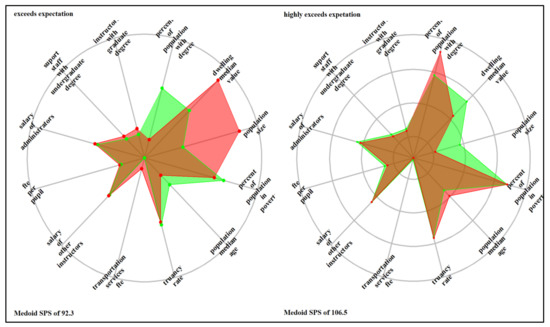

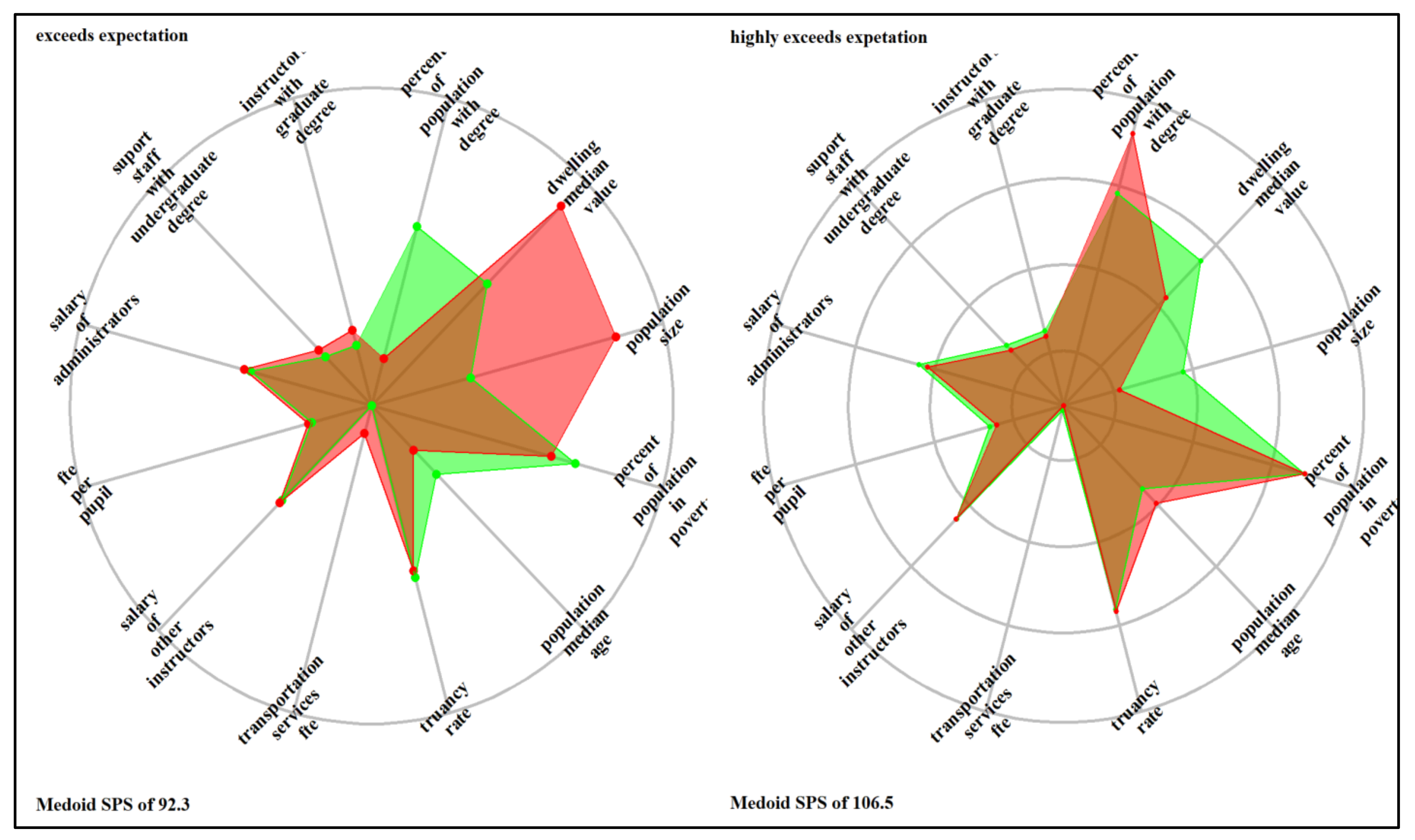

The ladder/spider plots in Appendix D illustrate the most prominent features within each identified cluster in each school system relative to the average school system values. The plotted variables are scaled (range standardized from 0 to 1) and represent lacking (0) to abundant (1); therefore, the spokes radiate outwards from a central zero hub. The center of the wheel or the x-axis represents a minimum value, which is zero; the mid-cycle and the last cycles represent the average (0.5) and the maximum (1). The red color represents the cluster’s average values, and the green color is the school system’s average value. The denotation of the clusters is ordinal and consists of three groups: SPS below 60 (failing schools, extremely at risk, highly at risk, and at risk), the SPS between 60 and 80 (lower at risk and meet expectation), and SPS above 80 (exceed expectations and highly exceeds expectations). Therefore, six clusters exist for the elementary school system (the 7th cluster included only outliers), and four and six prominent groups exist for the combination and high school systems. School-level attributes that distinguished the school system cluster include truancy rate, availability of transportation services, teacher FTE per pupil, the average salary of other support staff, the average wage of administrators, and the number of support staff with undergraduate and number of instructors with graduate degrees. Community-level attributes were the percentage of the population with degrees, median dwelling values, population size, the portion of the people in poverty, and population median age.

The medoid SPS score for the elementary school system was 52.0 for the extremely at-risk cluster. The cluster’s truancy rate, transportation services, and salaries for administrators and instructors are below the elementary school system average; likewise, the number of support staff with graduate degrees. Also, the number of teachers and support staff with graduate degrees was below the average for elementary schools. Most of the schools in this cluster are in low-populated communities with a young population and below-average wealth, measured by dwelling value. The poverty rate (based on income) in these communities is relatively low, and the percentage of people with a degree is above average, which signals the characteristics of working-class communities. Parents in these communities (working class) drives their children to schools (low truancy rate) when going to work. Although the FTE per pupil is above average, working parents might not have enough time to support their students academically, such as helping them with homework and other assignments. To increase performance, instituting effective mentoring, tutoring, and after-school education programs that provide motivation, personal individual attention, direct instruction, and access to textbooks and instructional materials to increase schools’ academic skills and support services [155,156,157,158]. Increasing the number of experienced administrators, staff, and teachers through new hires would boost the performance of the schools in the cluster.

Although transportation service in the “high-at-risk” cluster (medoid SPS of 54.7) is average, there is an elevated truancy rate. In this cluster, the number of support staff with an undergraduate degree is deficient, and teacher FTE per pupil is below the average. Schools in the groups are in young, high-populated, and relatively affluent communities with low poverty rates, but the population with the degree is below average. Low teacher FTE per pupil might indicate instructor understaffing that limits the process of identifying students with specific instructional needs. Increasing the number of instructors and collaborating with parents to reduce truancy would improve school performance. There is a need for intervention programs that dispel parental misbeliefs undervaluing the importance of regular attendance and the number of school days their child misses classes [159,160]. Truancy reduction efforts need a differentiated approach that targets risk factors more prevalent in a specific group of students and tailored concerted efforts to ensure chronic absentee students can get back on track.

Except for the above-average numbers of instructors with an undergraduate degree and slightly higher teacher FTE per pupil, the at-risk cluster with medoid SPS of 58.4 is like the high-at-risk cluster discussed above. Schools in the cluster serve vulnerable populations facing educational and economic barriers [161]. Besides mentoring, tutoring, and after-school education programs, such schools need to be recognized and supported with more physical, human, and financial resources. An increase in teacher FTE per pupil indicates increased student support [162] with direct and positive effects on school performance. Although the values of most attributes are below average, the teacher FTE per pupil for the cluster that meets expectations (medoid SPS of 79.7) was above average. Except for the number of support staff with a graduate degree, dwelling median value, and population size, the two clusters that exceed expectation (medoid SPS of 92.3) and highly exceed expectation (medoid SPS of 106.5), all other variables are within the elementary school averages. The schools in these clusters are in communities with low populations, but the percentage of the people with a degree is above average, and the population’s median age is below average, implying that these schools are in large affluent communities with resources to support the school systems.

For the combination school system, schools in the “failing Schools” cluster where a medoid had an SPS of 48 are in large communities with medium wealth measured by median dwelling values. Although the number of instructors with a graduate degree is slightly above average, other schools’ and community-levels attributes are below the school system averages. Excluding the population percentage with the degree variable, all other characteristics for the “at-risk” cluster (medoid SPS of 59.3) were below the average, implying various interrelated variables influence Schools’ performances. For both clusters, improvement in leadership will churn the interwoven network [163], resulting in positive structural and administrative changes that improve school performance [164]. Some of the attributes of the “meet expectation” cluster (medoid of SPS of 89.9) were above the school system average. These variables include the number of instructors with a graduate degree, dwelling medium values, population size, and average salary of school administrators. In the extant education literature, these are critical factors influencing school performance. For the “exceeded expectation” cluster where the medoid had an SPS of 120.8, the percentage of the population with a graduate degree and the teacher FTE per student was above average, and the truancy rate was below the school system average.

After dropping the outliers, the high school system had six clusters. The SPS for the medoid of the “upper at risk” cluster was 58.4. The above-average attributes for the group were the percentage of the population in poverty, the number of support staff with a graduate degree, dwelling medium values, and population size. School-level and community-level attributes within the cluster that was below average included the number of support staff without a graduate degree and the percentage of the population with graduate degrees. The schools in the cluster were in densely populated communities with high poverty rates commonly associated with poor school performance. These schools need active instruction that increases student engagement, a critical element of academic achievement in schools with students from families in poverty and at risk for adverse outcomes [165]. For the “lower-at-risk” cluster where the SPS for the medoid was 66.9, the above-average attributes were percent of the population in poverty, percent of people in poverty, and teacher FTE per pupil. Schools in the cluster were in relatively less affluent small communities with high poverty rates. The truancy rate, the salary of administrators, and the number of support staff with an undergraduate degree were below the cluster average.

Solid administrative leadership is a critical component of schools with high student achievement. Students receive more individualized help, and attention from the support staff; teachers receive specialist support and assistance with their administrative and planning tasks, granting them more time for their core responsibilities [166]. The medoid of the “highly needs improvement” cluster had an SPS of 71.3. The attributes representing the number of support staff without a graduate degree, the number of support staff with a degree, and percent of the population with a degree were above average. The schools in this cluster were in moderately affluent and medium-sized communities with low poverty rates that differentiate it from the “upper-at-risk” and “lower-at-risk” clusters. The medoid of the “meet expectation” cluster had an SPS of 90.0, and the schools in the group were in moderately affluent, medium-sized communities and relatively above-average median age. The number of support staff with graduate degrees and the salary of administrators in these schools was above average, boosting the overall school performance.

The “exceeds expectation” cluster attributes (SPS of 102.2) are almost like the “highly needs improvement” cluster. They differ in two features: the percentage of the population with a degree and support staff without an undergraduate degree. The medoid of SPS, the “highly exceeds expectation cluster,” was 131.5. The schools were in moderately affluent and low-populated communities, with above-average percent females in the population and a percentage of people with a degree and support staff without an undergraduate degree. In the cluster, the above-average unique attribute is the percentage of females in the population. Education research has found that parents with high education significantly influence their children’s educational and career aspirations through increased parent involvement in student education activities [158]. Education studies (such as [157,167,168]) also report a strong correlation between single parents and reviewing student report cards, as well as attending field trips and school activities, with a positive effect on students’ performance.

5. Summary and Conclusions

There are three main categories in Louisiana public schools: elementary/middle school system (1–8 grade), combination school system (1–12 grade), and high school system (9–12 grade). These schools persistently perform below the national average. Louisiana public schools perform below the national average. The base of the analyses is the data from 2015/16, 2016/17, and 2017/18 school years data available from the Louisiana Department of Education Data Center. The data include school performance scores, student characteristics, and school attributes combined with community-level variables from the 2019 American Community Survey (ACS). The ACS provides data annually and covers a broad range of topics about the U.S. population’s social, economic, demographic, and housing characteristics.

The objectives were to group the schools into homogenous clusters and identify subgroups based on critical features influencing their performance. This study uses a pairwise controlled manifold approximation technique as a multidimensional reduction technique to visualize and create the base clusters. We also used gradient-boosting machine learning to characterize the homogenous clusters at the school system and subgroup levels. Results indicate that the elementary/middle school system is in six homogenous clusters, the combination school system is in four groupings, and the high school system is in six groups. Failing schools were generally in densely populated and low affluent communities, with high truancy rates, below average teacher FTE per pupil, administrators’ salaries, and the number of support staff. High-performing schools were in communities with a high percentage of the population with a graduate degree, moderately affluent and smaller communities, and administrators’ salaries and numbers of support staff were relatively high.