In Search of Alignment between Learning Analytics and Learning Design: A Multiple Case Study in a Higher Education Institution

Abstract

1. Introduction

2. Literature Review

2.1. Personalized Feedback with Learning Analytics: The Affordance of Open, Automated Feedback (AF) Tools

2.2. Learning Design: Orchestrating the Achievement of Course Objectives

2.3. Frameworks for Learning Analytics and Learning Design

2.4. Supporting Student Learning with Learning Analytics and Learning Design: What Has Been Done?

2.5. Purpose of the Research

- RQ1. How do instructors align LA with LD in their contexts for the purpose of personalizing feedback and support in their courses?

- RQ2. How do instructors evaluate the impact of personalized feedback and support when LA and LD are aligned?

- RQ3. How do students perceive their personalized feedback when LA is aligned with LD?

3. Materials and Methods

3.1. OnTask: Leveraging Learning Analytics for Personalized Feedback and Advice

3.2. Case Study Approach

3.3. Data Collection

- Instructor interviews. Semi-structured interviews were conducted with each participating instructor to delve deeper into their alignment practices. The interviews aimed to elicit instructors’ experiences in integrating learning analytics tools into their learning design, in particular their motivations for using the tool and their decision-making processes behind the alignment strategies. Instructors were also asked about the perceived impact of analytics on any learning outcomes, including their observations from course data. The interviews were structured around the following questions, with room for further in-depth probes:
  1. Please tell me about the course in which you implemented OnTask.
  2. To date, how many teaching sessions have you implemented OnTask for?
  3. What motivated you to use OnTask in your course?
  4. What impact do you think your feedback using OnTask had on the students’ learning experience?
  5. Do you feel like you’ve had to learn any new skills to use OnTask?
  6. Do you feel OnTask saved you time or prompted you to use your teaching and administrative time differently?
  7. What were the main challenges you faced in using OnTask in your course?

- Artifacts demonstrating strategies of alignment between LA and LD. These artifacts were digital documents generated in the course of discussions between the researcher and the instructors during the process of planning the strategic alignment. These are in effect the kind of “design artifacts” noted by [2] that are typically in the form of “documents and visualizations that aid personal and joint cognitive work” (p. 24).

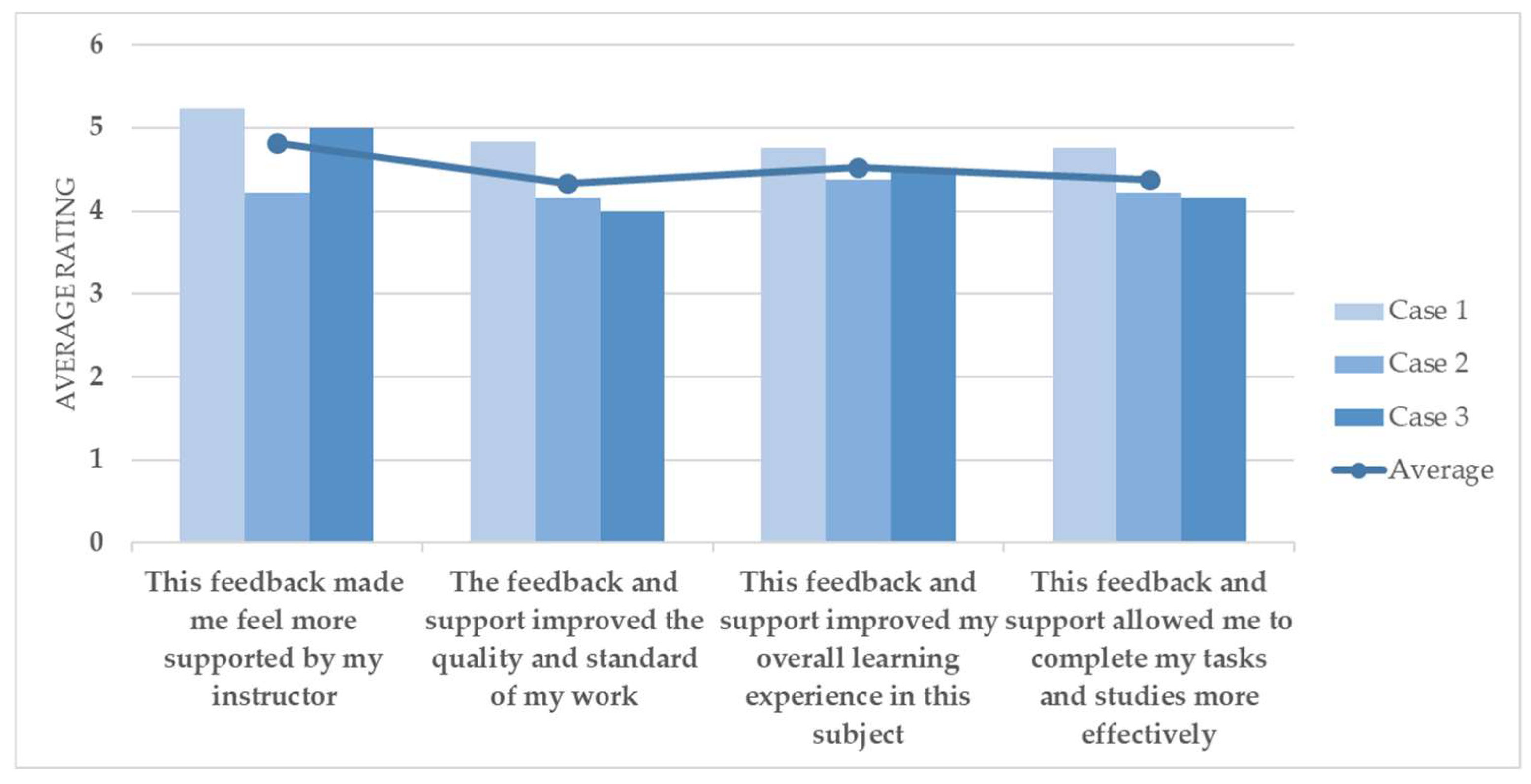

- Student experience surveys. To gain a holistic understanding of the impact of aligned learning analytics on the learning experience, feedback was gathered from students enrolled in the courses under investigation using a single, unified survey. The survey comprised four items on a 6-point Likert scale to assess students’ perceptions of their feedback, as well as one open-ended question inviting them to input any other comments they had about their feedback. The four items were: (1) This feedback made me feel more supported by my instructor; (2) The feedback and support improved the quality and standard of my work; (3) This feedback and support improved my overall learning experience in this subject; (4) This feedback and support allowed me to complete my tasks and studies more effectively. This was followed by an open-ended prompt: Do you have any other comments about your feedback experience? This data source provided a student-centered view of the alignment’s effectiveness and potential areas for improvement.
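As an illustration of how responses to the four Likert items could be summarized, the following is a minimal sketch, not the authors' analysis procedure. The short item labels, the `summarize_likert` helper, the choice of "agreement" as a rating in the upper half of the scale, and the demo responses are all assumptions made for this example.

```python
from statistics import mean

# Short labels for the four survey items (labels are hypothetical).
ITEMS = [
    "Felt more supported by instructor",
    "Improved quality and standard of work",
    "Improved overall learning experience",
    "Completed tasks and studies more effectively",
]

def summarize_likert(responses, scale_max=6):
    """Return the mean rating and percent agreement for each item.

    `responses` holds one row per student; each row lists integer
    ratings (1..scale_max) in ITEMS order. "Agreement" is assumed to
    mean a rating in the upper half of the scale (4 or more out of 6).
    """
    threshold = scale_max // 2 + 1
    summary = {}
    for idx, item in enumerate(ITEMS):
        ratings = [row[idx] for row in responses]
        agree = sum(1 for r in ratings if r >= threshold)
        summary[item] = {
            "mean": round(mean(ratings), 2),
            "pct_agree": round(100 * agree / len(ratings), 1),
        }
    return summary

# Made-up responses from three hypothetical students.
demo = [[6, 4, 5, 3], [5, 3, 4, 4], [4, 5, 6, 2]]
result = summarize_likert(demo)
```

Reporting both a mean and an agreement percentage is a design choice for the sketch; with small cohorts, the full distribution of ratings per item may be more informative than either figure.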

3.4. Data Analysis

3.5. Case Studies: Aligning LA and LD for Personalized Feedback and Study Advice

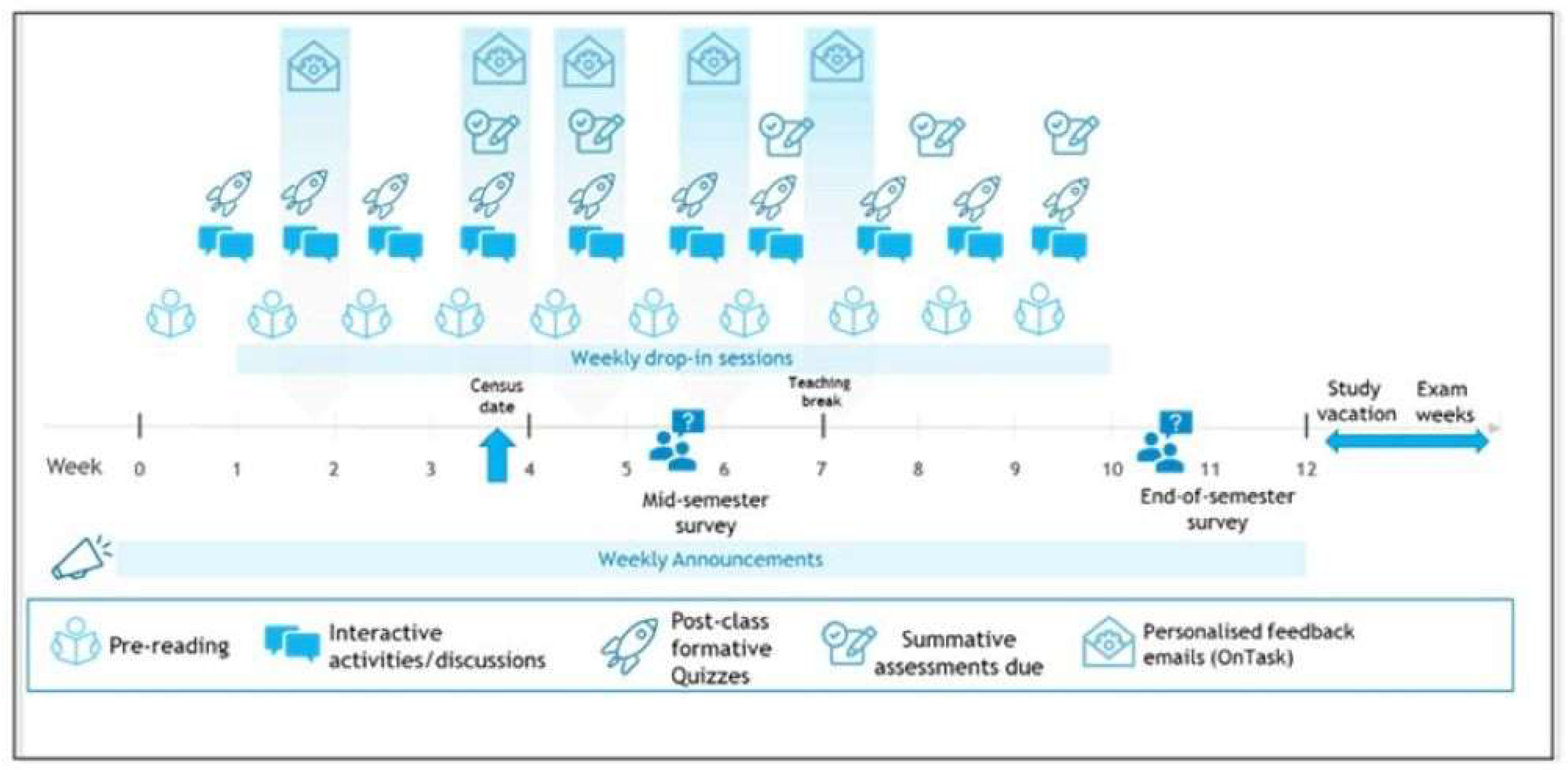

3.5.1. Case 1: Engineering and IT (First Year, Postgraduate)

- Prepare: Weekly announcements provided a clear outline of what would be covered in the upcoming week to help students plan their time effectively and prioritize their tasks. Students were required to complete pre-reading activities, namely, interactive quizzes and videos, or scenarios that aligned with the weekly topic.

- Engage: This section of the weekly module encouraged active learning and peer-to-peer interaction by including interactive and collaborative in-class activities (real-life case studies, videos, simulations, or hands-on activities) designed to capture students’ attention and involve them actively with the subject material.

- Reflect and Progress: This section of the weekly module comprised formative self-assessment quizzes that checked students’ understanding of the subject material, together with open-ended text questions that prompted students to reflect on their learning experiences, with the aim of promoting deeper thinking and improved metacognition.

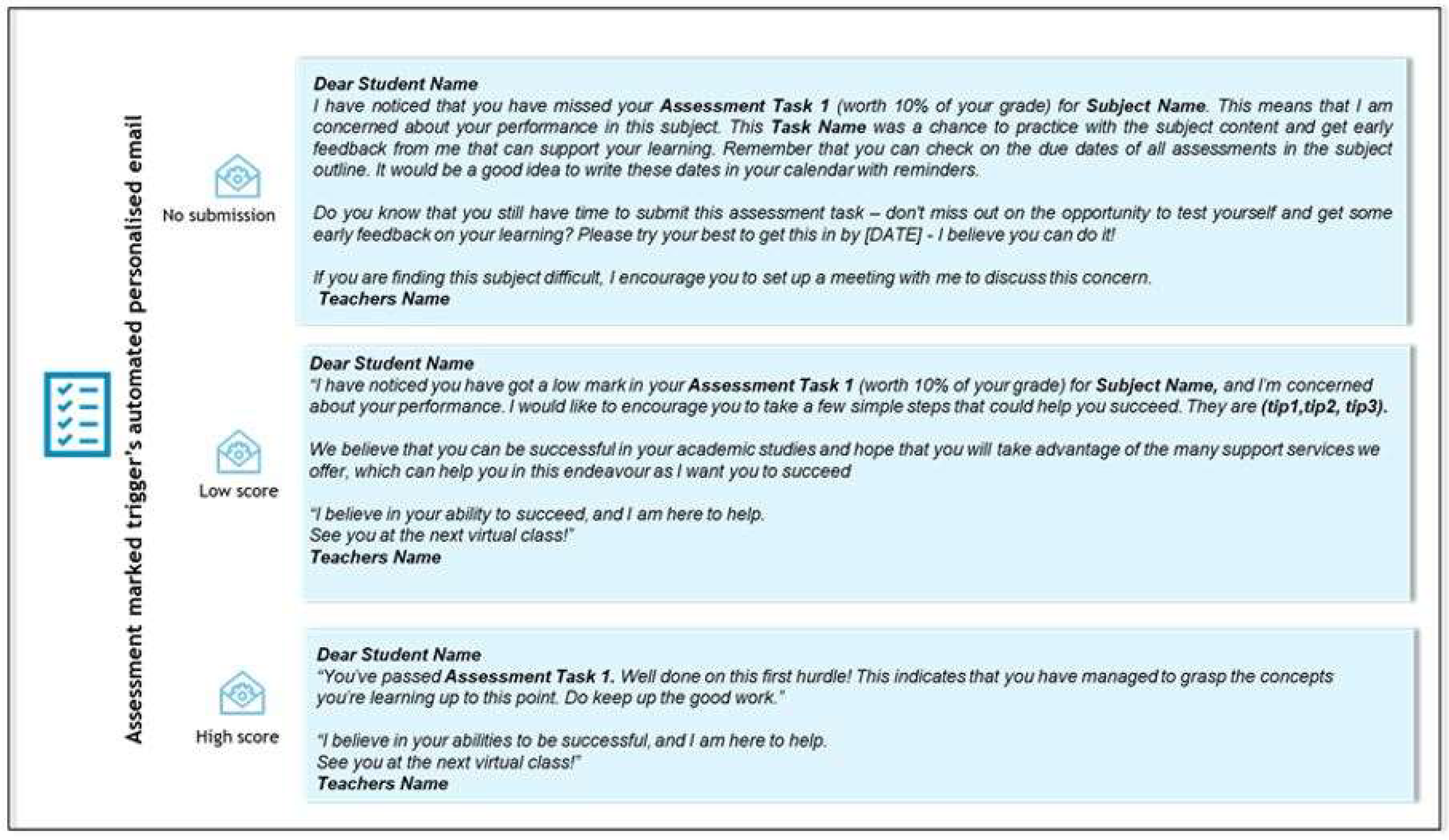

… I realised after attending the training sessions on OnTask that [the personalization] is missing [in the LMS analytics feature]. …. So the first thing is that in [the LMS analytics feature], we cannot address the students by their first names. So that personalization touch is missing from the very beginning. And in OnTask, we have that option available to tag our students in.

…in reply emails students they have clearly mentioned that they have felt connected to the subject more, as compared to other subjects. … So, once students, they feel that they are making progress in a subject, that give them the confidence to excel in that subject as well.

Initially, the plan was to send them nine or 10 emails, but we ended up sending only half of the emails. The reason is very positive behind that action … students didn’t give us the opportunity to send them emails. With the (first) assessment tasks, seven of them, I had no clue about them why they haven’t submitted. So I sent them a message that they have a missing assessment task, and that they still have five days to submit. So they submitted within those five days. And with the next assessment tasks, there was no missing submissions. I guess students knew, someone is checking on them. So they want to prove themselves that they should not get late this time.

That’s my personal observation that the quality of the submissions, the presentations, the in-class activities, the case study discussion students they were doing, it has much more improved if I compare it, with previous cohorts.

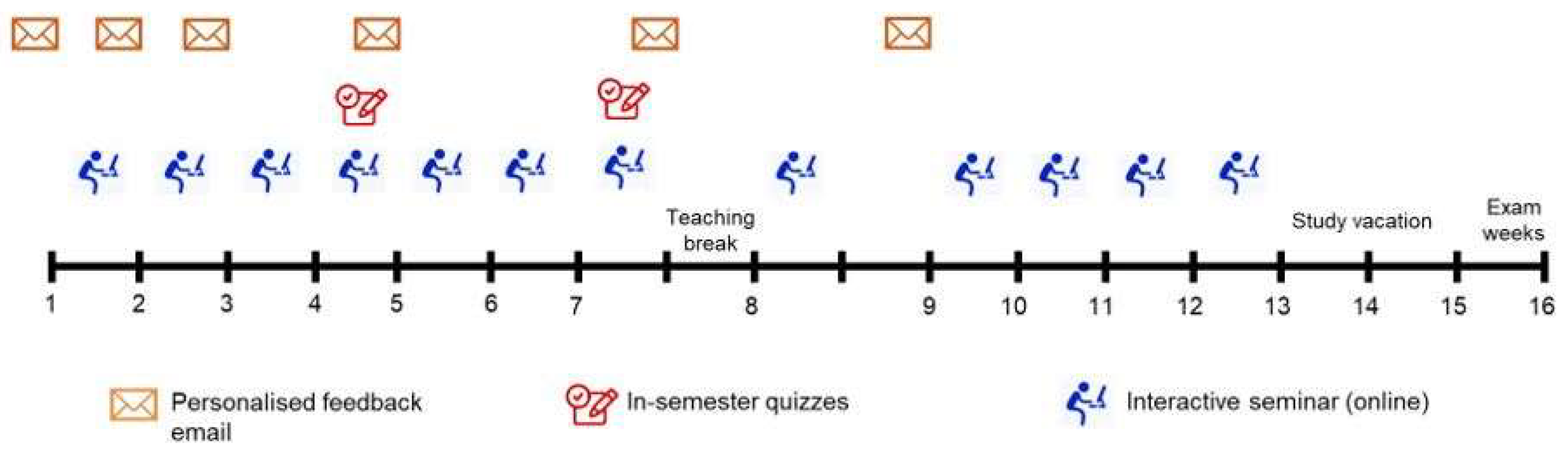

3.5.2. Case 2: Business (First Year, Postgraduate)

I get the sense that teaching online is like teaching through a keyhole … So you get a very small glimpse of the student, compared to when we had face to face where you can see when they walk in, what’s their mood, you can see how much preparation they’ve done by just looking on their computer or on their workbook, you can see them interacting, even if they’re not asking you questions. So you get a much more holistic picture of the student and how they’re progressing. And that feeds into how much support you feel you need to give in terms of their learning.

The other part about teaching through the keyhole is just how little the communication is between myself and the students. … When you’ve got 40, 45 people in a zoom class, and even if you’ve got a couple of hours with the class, you can’t really just have an individual conversation with a student there.

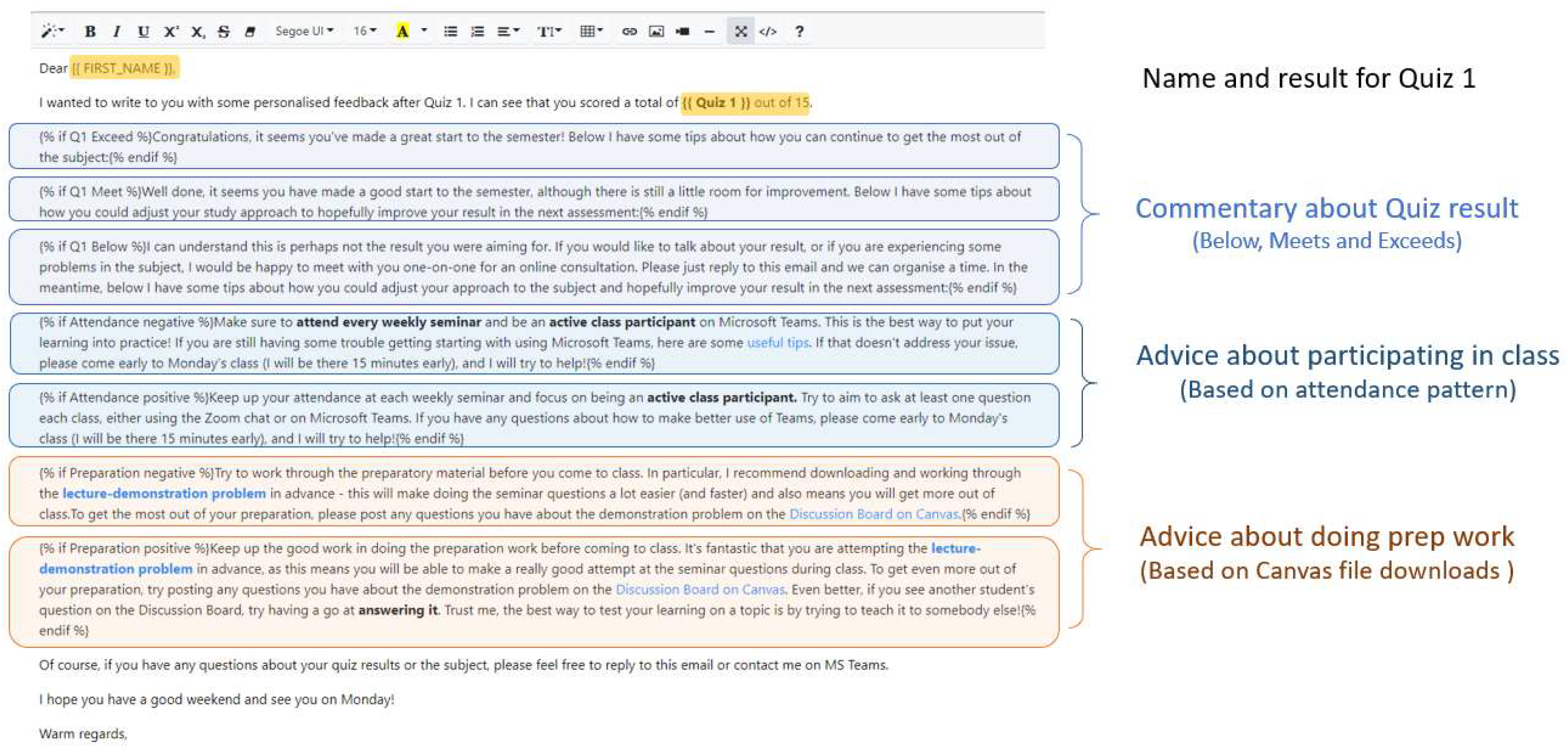

- The student’s name and individual quiz 1 score.

- Text customized to comment on their Quiz 1 outcome (blue).

- Text customized to provide advice about participating in class based on their attendance pattern (green).

- Text customized to provide advice about preparing for class based on their pattern in downloading weekly preparation material from the LMS (red).

- Sign-off includes an offer for further individual contact with the subject coordinator.
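The message structure listed above can be sketched as a set of conditional text blocks joined into one email. This is only an illustrative sketch: it does not reproduce OnTask's rule syntax, and the `compose_feedback` function, the dictionary keys, the thresholds, and the message wording are all hypothetical.

```python
def compose_feedback(student):
    """Assemble one personalized message from conditional text blocks.

    `student` is a dict with hypothetical keys: first_name,
    quiz1_score (0-100), seminars_attended, and prep_downloads
    (number of weeks in which preparation material was downloaded).
    All thresholds below are invented for illustration.
    """
    # Greeting with the student's name and individual quiz score.
    parts = [
        f"Hi {student['first_name']}, your Quiz 1 score was "
        f"{student['quiz1_score']}."
    ]

    # Commentary on the quiz outcome.
    if student["quiz1_score"] >= 50:
        parts.append("Well done on Quiz 1; keep up your current approach.")
    else:
        parts.append("Quiz 1 did not go to plan; please review the solutions.")

    # Advice on class participation, based on attendance.
    if student["seminars_attended"] < 2:
        parts.append("Attending the seminars will give you more practice.")

    # Advice on preparation, based on downloads of weekly material.
    if student["prep_downloads"] < 2:
        parts.append("Try working through the preparation material before class.")

    # Sign-off offering further individual contact.
    parts.append("Please contact me if you would like to discuss your progress.")
    return " ".join(parts)

strong = {"first_name": "Ana", "quiz1_score": 80,
          "seminars_attended": 3, "prep_downloads": 3}
struggling = {"first_name": "Ben", "quiz1_score": 40,
              "seminars_attended": 0, "prep_downloads": 0}
msg_strong = compose_feedback(strong)
msg_struggling = compose_feedback(struggling)
```

Keeping each condition tied to a single data column means an instructor can change one piece of advice without touching the others.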

Importantly, the course coordinator’s deliberate alignment of LA and LD meant that students received personalized nudge reminders on a Friday in the lead-up to the seminar on the following Monday, resulting in a regular feedback loop. As noted by the coordinator:

I would send it on the Friday, because we had class on the Monday. …most of the emails were a reminder about what to do in the lead up to Monday’s class. Yeah, it just so happened that I kept that kind of basic rhythm. And it’s so it meant that when they did the quiz either on Tuesday or Wednesday, they had a 48-h window to do it. I had marked it by Thursday. And then on the Friday, I sent out the email at the same time as I release the marks on [LMS] as well. … It was a really condensed and short feedback cycle, which I intended on that.

3.5.3. Case 3: Education (Graduate Certificate)

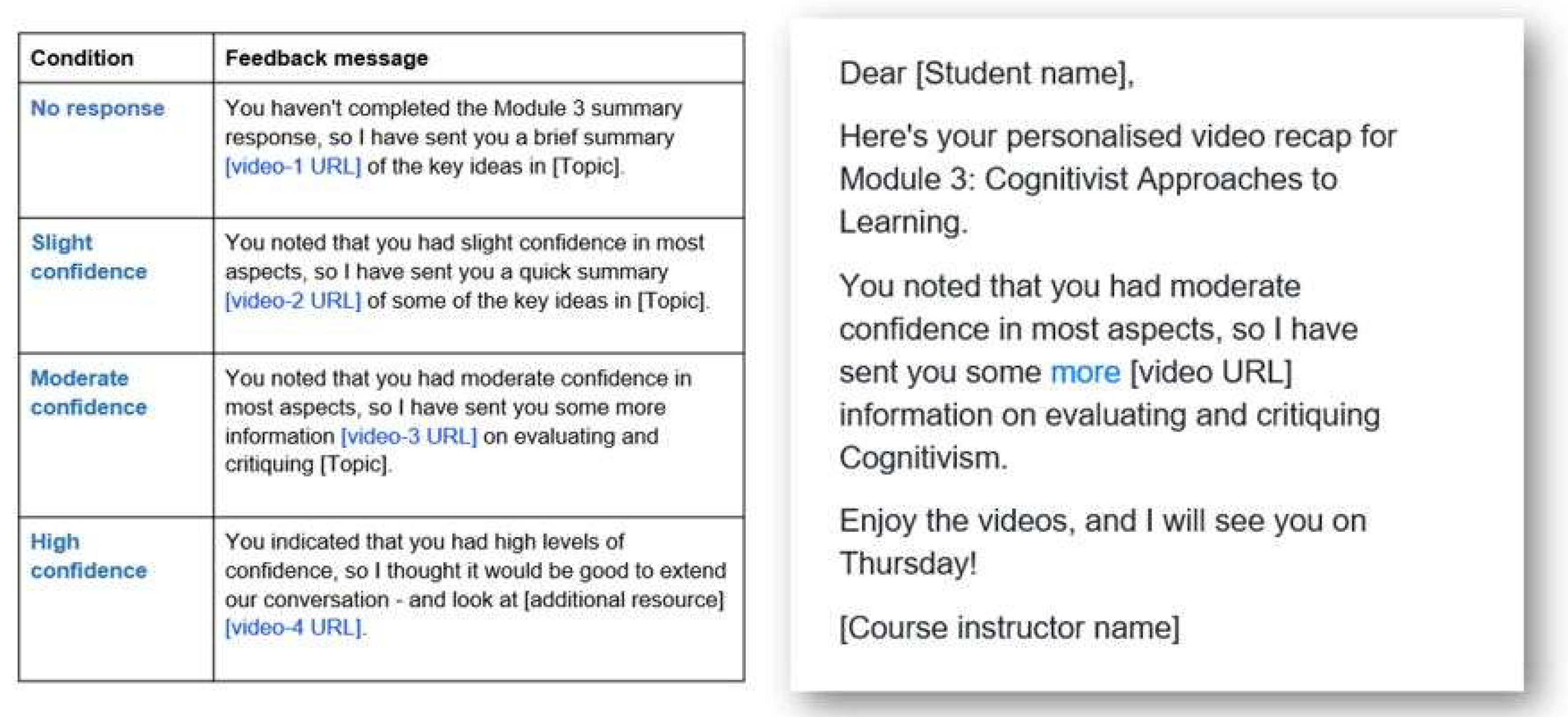

Most of the students do the first part (of the reflection). And so I thought that was unused data. You know, so what’s the point of me asking the question if I’m not actually going to do something with it? … And so I thought, what I should actually try to do is use that to help meet their needs. Specifically, if students said, I’m not confident that I understand [topic], well, what can I do to help them do that?

I’ve got [LMS] set up for digests emails. So every day it sends me a digest letting me know, participation in my subjects. And so usually on a Thursday or Friday, I’d send out the OnTask email ... And then over the next couple of days, the digest email would come in and say, you know, student A, posted on this discussion board, Student B posted on this discussion board, and so on.

4. Results and Discussion

4.1. RQ1. How Do Instructors Align LA with LD in Their Contexts for the Purpose of Personalizing Feedback and Support in Their Courses?

4.2. RQ2. How Do Instructors Evaluate the Impact of Personalized Feedback and Support When LA and LD Are Aligned?

4.3. RQ3. How Do Students Perceive Their Personalized Feedback When LA Is Aligned with LD?

4.4. Limitations and Future Work

- Document and gather data from a wider range of contexts to capture more variation in approaches to LA-LD alignment for personalized feedback.

- Include student performance data as an additional source of data to examine the impact of LA-LD alignment strategies.

- Compare the experiences of educators at institutions where OnTask or similar open AF systems are fully integrated with those of the current study to examine differences in the use of analytics for personalized feedback.

- Compare the student experience of personalized feedback based on checkpoint or process analytics to examine if the latter can shift students’ perceptions regarding the quality of feedback for improving their work and helping them to be more effective in their studies.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Avila, A.G.N.; Feraud, I.F.S.; Solano-Quinde, L.D.; Zuniga-Prieto, M.; Echeverria, V.; Laet, T.D. Learning analytics to support the provision of feedback in higher education: A systematic literature review. In Proceedings of the 2022 XVII Latin American Conference on Learning Technologies (LACLO), Armenia, Colombia, 17–21 October 2022; pp. 1–8.

- Buckingham Shum, S.; Lim, L.-A.; Boud, D.; Bearman, M.; Dawson, P. A comparative analysis of the skilled use of automated feedback tools through the lens of teacher feedback literacy. Int. J. Educ. Technol. High. Educ. 2023, 20, 40.

- Kaliisa, R.; Kluge, A.; Mørch, A.I. Combining Checkpoint and Process Learning Analytics to Support Learning Design Decisions in Blended Learning Environments. J. Learn. Anal. 2020, 7, 33–47.

- Viberg, O.; Gronlund, A. Desperately seeking the impact of learning analytics in education at scale: Marrying data analysis with teaching and learning. In Online Learning Analytics; Liebowitz, J., Ed.; Auerbach Publications: New York, NY, USA, 2021.

- Lockyer, L.; Heathcote, E.; Dawson, S. Informing pedagogical action: Aligning learning analytics with learning design. Am. Behav. Sci. 2013, 57, 1439–1459.

- Rienties, B.; Toetenel, L.; Bryan, A. “Scaling up” learning design: Impact of learning design activities on LMS behavior and performance. In Proceedings of the Fifth International Conference on Learning Analytics and Knowledge, Poughkeepsie, NY, USA, 16–20 March 2015; pp. 315–319.

- Kaliisa, R.; Kluge, A.; Mørch, A.I. Overcoming Challenges to the Adoption of Learning Analytics at the Practitioner Level: A Critical Analysis of 18 Learning Analytics Frameworks. Scand. J. Educ. Res. 2022, 66, 367–381.

- Conole, G. Designing for Learning in an Open World; Springer Science & Business Media: Leicester, UK, 2012; Volume 4.

- Winstone, N.E.; Nash, R.A.; Parker, M.; Rowntree, J. Supporting learners’ agentic engagement with feedback: A systematic review and a taxonomy of recipience processes. Educ. Psychol. 2017, 52, 17–37.

- Arthars, N.; Dollinger, M.; Vigentini, L.; Liu, D.Y.-T.; Kondo, E.; King, D.M. Empowering Teachers to Personalize Learning Support. In Utilizing Learning Analytics to Support Study Success; Ifenthaler, D., Mah, D.-K., Yau, J.Y.-K., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 223–248.

- Pardo, A.; Bartimote-Aufflick, K.; Buckingham Shum, S.; Dawson, S.; Gao, J.; Gašević, D.; Leichtweis, S.; Liu, D.Y.T.; Martinez-Maldonado, R.; Mirriahi, N.; et al. OnTask: Delivering Data-Informed Personalized Learning Support Actions. J. Learn. Anal. 2018, 5, 235–249.

- Liu, D.Y.-T.; Bartimote-Aufflick, K.; Pardo, A.; Bridgeman, A.J. Data-driven personalization of student learning support in higher education. In Learning Analytics: Fundaments, Applications, and Trends; Studies in Systems, Decision and Control; Peña-Ayala, A., Ed.; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; Volume 94, pp. 143–169.

- Bakharia, A.; Corrin, L.; Barba, P.d.; Kennedy, G.; Gašević, D.; Mulder, R.; Williams, D.; Dawson, S.; Lockyer, L. A conceptual framework linking learning design with learning analytics. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK, 25–29 April 2016; Association for Computing Machinery: Edinburgh, UK, 2016; pp. 329–338.

- Caeiro-Rodriguez, M. Making Teaching and Learning Visible: How Can Learning Designs Be Represented? In Proceedings of the Seventh International Conference on Technological Ecosystems for Enhancing Multiculturality, León, Spain, 16–18 October 2019; pp. 265–274.

- Toetenel, L.; Rienties, B. Analysing 157 learning designs using learning analytic approaches as a means to evaluate the impact of pedagogical decision making. Br. J. Educ. Technol. 2016, 47, 981–992.

- Persico, D.; Pozzi, F. Informing learning design with learning analytics to improve teacher inquiry. Br. J. Educ. Technol. 2015, 46, 230–248.

- Gašević, D.; Dawson, S.; Siemens, G. Let’s not forget: Learning analytics are about learning. TechTrends 2015, 59, 64–71.

- Shibani, A.; Knight, S.; Buckingham Shum, S. Contextualizable Learning Analytics Design: A Generic Model and Writing Analytics Evaluations. In Proceedings of the 9th International Conference on Learning Analytics & Knowledge, Tempe, AZ, USA, 4–8 March 2019; Association for Computing Machinery: Tempe, AZ, USA, 2019; pp. 210–219.

- Siemens, G.; Long, P. Penetrating the fog: Analytics in learning and education. EDUCAUSE Rev. 2011, 46, 30.

- Macfadyen, L.P.; Lockyer, L.; Rienties, B. Learning Design and Learning Analytics: Snapshot 2020. J. Learn. Anal. 2020, 7, 6–12.

- Hernández-Leo, D.; Martinez-Maldonado, R.; Pardo, A.; Muñoz-Cristóbal, J.A.; Rodríguez-Triana, M.J. Analytics for learning design: A layered framework and tools. Br. J. Educ. Technol. 2019, 50, 139–152.

- Mangaroska, K.; Giannakos, M. Learning analytics for learning design: A systematic literature review of analytics-driven design to enhance learning. IEEE Trans. Learn. Technol. 2018, 12, 516–534.

- Ahmad, A.; Schneider, J.; Griffiths, D.; Biedermann, D.; Schiffner, D.; Greller, W.; Drachsler, H. Connecting the Dots—A Literature Review on Learning Analytics Indicators from a Learning Design Perspective. J. Comput. Assist. Learn. 2022; early view.

- Salehian Kia, F.; Pardo, A.; Dawson, S.; O’Brien, H. Exploring the relationship between personalized feedback models, learning design and assessment outcomes. Assess. Eval. High. Educ. 2023, 48, 860–873.

- Yin, R.K. Case Study Research: Design and Methods, 4th ed.; Sage Publications: Thousand Oaks, CA, USA, 2009.

- Stake, R.E. Multiple Case Study Analysis; The Guilford Press: New York, NY, USA, 2006.

- Nicol, D.J.; Macfarlane-Dick, D. Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Stud. High. Educ. 2006, 31, 199–218.

- Dinham, S. The secondary head of department and the achievement of exceptional student outcomes. J. Educ. Adm. 2007, 45, 62–79.

- Pardo, A.; Jovanovic, J.; Dawson, S.; Gašević, D.; Mirriahi, N. Using learning analytics to scale the provision of personalised feedback. Br. J. Educ. Technol. 2019, 50, 128–138.

- Lim, L.-A.; Dawson, S.; Gašević, D.; Joksimović, S.; Fudge, A.; Pardo, A.; Gentili, S. Students’ sense-making of personalised feedback based on learning analytics. Australas. J. Educ. Technol. 2020, 36, 15–33.

- Sadler, D.R. Formative assessment and the design of instructional systems. Instr. Sci. 1989, 18, 119–144.

- Merceron, A.; Blikstein, P.; Siemens, G. Learning Analytics: From Big Data to Meaningful Data. J. Learn. Anal. 2016, 2, 4–8.

- Matz, R.L.; Schulz, K.W.; Hanley, E.N.; Derry, H.A.; Hayward, B.T.; Koester, B.P.; Hayward, C.; McKay, T. Analyzing the efficacy of Ecoach in supporting gateway course success through tailored support. In Proceedings of the LAK21: 11th International Learning Analytics and Knowledge Conference (LAK21), Irvine, CA, USA, 12–16 April 2021; ACM: New York, NY, USA, 2021; pp. 216–225.

- Yang, M.; Carless, D. The feedback triangle and the enhancement of dialogic feedback processes. Teach. High. Educ. 2013, 18, 285–297.

- Tsai, Y.-S. Why feedback literacy matters for learning analytics. In Proceedings of the 16th International Conference of the Learning Sciences (ICLS), Online, 6 June 2022; pp. 27–34.

- Lim, L.; Dawson, S.; Gašević, D.; Joksimović, S.; Pardo, A.; Fudge, A.; Gentili, S. Students’ perceptions of, and emotional responses to, personalised LA-based feedback: An exploratory study of four courses. Assess. Eval. High. Educ. 2021, 46, 339–359.

| Case | Faculty | Course Level | Subject Design | Cohort Size | Session Duration | Data Classes (Based on [21]): Checkpoints | Data Classes: Process | Data Classes: Performance |
|---|---|---|---|---|---|---|---|---|
| Case 1 | Engineering and IT | Postgraduate, Masters | Blended learning, modular | 101 | 12 weeks | Login by Week 3; Weekly pre-reading participation; Weekly tutorial submissions; Weekly post-class quiz submission; Weekly post-class reflection | | Assessment task 2 performance |
| Case 2 | Business | Postgraduate, Masters | Project-based, Flipped learning | 42 | 12 weeks | Attendance at seminars; Downloads of preparatory material topic; Downloads of revision materials for exams | | Assessment performance: summative quizzes |
| Case 3 | Arts and Social Science | Postgraduate, Graduate Certificate | Blended, modular | 15 ¹ | 6 weeks | Student reflection self-ratings | | |

| OnTask Email Message | Date Sent | Purpose | Learning Analytics |
|---|---|---|---|
| Welcome message and instructions for Seminar 1 | Week 0 | Remind students about what they need to do to prepare before Seminar 1 | |
| Nudge to attempt preparatory material | End of Week 1 | Remind students to work through the preparation material and attend the seminar | Checkpoint analytics: Attendance at seminar 1 (Y/N); Downloaded preparatory material, topic 1 (Y/N) |
| Follow-up reminder to form assignment groups | End of Week 2 | Remind students to form a group for the assignment and begin interacting on Microsoft Teams | Checkpoint analytics: Attendance at seminar 1 (Y/N); Downloaded preparatory material, topic 1 (Y/N) |
| Personalized feedback after Quiz 1 | End of Week 4 | Provide personalized feedback for Quiz 1, with personalized suggested learning strategies | Checkpoint analytics: Registered as group (Y/N) |
| Personalized feedback after Quiz 2 | End of mid-semester study break | Provide personalized feedback for Quiz 2 (noting changes from Quiz 1) with personalized suggested learning strategies | Performance data: Quiz 2 score; Quiz 2 result (Exceed Maintain/Exceed Improve/Meet/Below); Checkpoint analytics: Download preparatory materials pattern (0–1 weeks, 2–3 weeks) |
| Strategies for revising the subject | Beginning of Week 11 | Remind students about the availability of weekly self-guided revision tasks | Checkpoint analytics: Download revision materials pattern (0, 1–4 weeks, 5+ weeks) |
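The download-pattern categories in the table above amount to binning a per-student count into named bands. The sketch below shows one way this could be done; the function names are hypothetical, the labels use plain hyphens, and treating out-of-range counts as errors is an assumption, since the source does not say how such values were handled.

```python
def prep_download_pattern(weeks_downloaded):
    """Bin a count of weeks with preparation downloads.

    The table names only two bands (0-1 weeks, 2-3 weeks); raising an
    error for any other count is an assumption made for this sketch.
    """
    if 0 <= weeks_downloaded <= 1:
        return "0-1 weeks"
    if 2 <= weeks_downloaded <= 3:
        return "2-3 weeks"
    raise ValueError(f"count outside the named bands: {weeks_downloaded}")

def revision_download_pattern(weeks_downloaded):
    """Bin a count of weeks with revision downloads (0, 1-4, 5+)."""
    if weeks_downloaded < 0:
        raise ValueError(f"count cannot be negative: {weeks_downloaded}")
    if weeks_downloaded == 0:
        return "0"
    if weeks_downloaded <= 4:
        return "1-4 weeks"
    return "5+ weeks"

# Made-up counts for three hypothetical students.
revision_counts = {"s01": 0, "s02": 3, "s03": 7}
revision_bands = {sid: revision_download_pattern(n)
                  for sid, n in revision_counts.items()}
```

Each band can then drive a different block of message text, so that students with no downloads and students with many downloads receive different revision advice.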

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lim, L.-A.; Atif, A.; Heggart, K.; Sutton, N. In Search of Alignment between Learning Analytics and Learning Design: A Multiple Case Study in a Higher Education Institution. Educ. Sci. 2023, 13, 1114. https://doi.org/10.3390/educsci13111114
