Abstract

Research that integrates Learning Analytics (LA) with formative feedback has been shown to enhance students’ individual learning processes and performance. Debates on LA-based feedback highlight the need to better understand which data sources are appropriate for LA, how soon feedback should be sent to students and how different types of feedback promote learning. This study describes an empirical case of LA-based feedback in higher education and analyzes how content-focused feedback promotes student achievement. The model combines quantitative achievement indicators with qualitative data about student learning challenges to develop feedback. Data sources include student pretest results, participation in practice exercises, and midterm and final exam grades. In addition, in-depth interviews with high-, medium- and low-performing students are conducted to understand learning challenges. Based on their performance, students receive content-focused feedback every two weeks. The results show statistically significant improvements in final grades, as well as a higher rate of problem-solving participation, among students who receive feedback compared to peers who opt out of the study. The contributions to the area of LA-based formative feedback are the following: (a) a model that combines quantitative with qualitative data sources to predict and understand student achievement challenges, (b) templates to design pedagogical and research-based formative feedback, (c) positive quantitative and qualitative results of the experience and (d) a documented case describing the practical implementation process.

1. Introduction

Student enrollment is growing in many universities, resulting in larger classes and raising concerns about personalization as well as institutional demands to support individual learning processes [1]. Many professional learning opportunities are offered as Massive Open Online Courses (MOOCs) or in blended-learning formats that generally lack personalized teaching materials and strategies. Whether in blended or online learning, datafication in education allows the collection of information about learners’ digital footprints and their relation to academic results. These data help build student achievement indicators, understand part of the learning process and tailor teaching strategies toward relevant tasks.

Learning Management Systems (LMSs) make it possible to gather information on student progress in courses. LMS data include intermediate grades, navigation history within the system, the number of attempts to solve activities, the type and number of files that students access, etc. Correlating LMS data on student activity in a platform with final grades can indicate which kinds of activities relate positively to student achievement [2]. Analyzing these observations as soon as the data enter the system can help identify students in need of support earlier in the course. It also enables the creation of formative feedback that addresses their needs before it is too late to improve their performance [3].

Learning Analytics (LA) is an area of research and practice that focuses on analyzing data related to learning to better understand and improve student performance. Elements of this work include collecting relevant sources of data, establishing relationships among the data to identify learning patterns and providing timely reports to educational stakeholders (teachers, students, designers and administrators) to support better decision making [4]. LA provides key opportunities to capture student activity through interactions between students and the LMS. These interactions can offer valuable insights into student learning strategies and their effectiveness, as well as aid in classifying student performance [5,6,7].

To support individual student learning, researchers are developing frameworks that integrate intelligent tutoring system technologies with LA [8]. Intelligent tutoring systems seek to guide learners with hints and instructional orientation based on their performance; such guidance has been called adaptive feedback [8]. Automating adaptive and formative feedback becomes particularly relevant in large courses, where it can assist professors in following up on each student’s performance and in providing timely suggestions for keeping up with the course’s learning progress [9]. In general, groups of students share similar sets of learning challenges, and professors provide much the same orientation to these groups. Identifying a pattern in student challenges is the first step toward developing quality adaptive feedback that relates to student struggles and helps teachers be more effective in their orientations. Combining information on learning challenges with student performance data can indicate which groups of students might benefit from formative feedback.

In the last decade, researchers have documented LA-based feedback interventions in higher education [10], and the literature has revealed some research gaps in this area. Firstly, historical academic student data (such as grades in previous courses, high school test results, etc.) are neither sufficient nor effective for making predictions or understanding learning challenges [11]. A study by Conijn et al. [6] emphasized the need to include more specific theoretical argumentation and data sources beyond LA alone to deepen our understanding of student learning processes and challenges. Other studies pointed out a lack of experiences combining LA with qualitative data and research-based pedagogy, such as formative feedback, in educational settings [12]. Hence, more research is needed on the kinds of data sources that are effective for LA.

Secondly, besides showing red flags or warnings, systems need to offer students guidance and alternatives for overcoming their challenges. To address such needs, more research on the development and impact of different types of LA-based feedback is needed. Tempelaar et al. specifically raised the question of how the effects of process-oriented feedback (study planning, setting goals and monitoring learning) differ from those of content-oriented feedback (focused on specific disciplinary learning strategies) [11].

Thirdly, while research has shown that achievement data can be used to detect students who might be at risk early in the course [13], the timeliness of the feedback remains a challenge. In general, receiving feedback sooner rather than later seems to be more effective. However, personalized feedback requires student performance data that are not available during the first weeks [14]. Further investigation into the timing and frequency of LA-based feedback is still needed.

Fourthly, more empirical evidence is desirable on the effects of LA-based feedback in cases where feedback provides student support [10]. Based on a review of 24 cases, Wong et al. also argued for studying the institutional and implementation processes of developing LA-based feedback, as it entails a cultural change in how student progress is monitored.

To address these research gaps, this study documents a case of LA-based feedback in a higher education setting and analyzes the effect of content-focused feedback on student performance. It describes the development and implementation of an automated prognostic student progress monitoring system (APSPMS) used in Electromagnetism II (EMII) at the Department of Electrical Engineering of the Eindhoven University of Technology (TU/e), the Netherlands. APSPMS identifies EMII students’ performance indicators from LA [15] and combines early warning system infrastructure with student interview data, exploring how different sources of data serve LA systems. Professors’ formative feedback then offers discipline-oriented, actionable learning tasks. APSPMS aggregates data in near real-time from practice exercises and a diagnostic content knowledge test. Using these data, the system selects different messages purposely designed to address student learning challenges around specific concepts and practices. Formative feedback theories and findings from in-depth student interviews describing the learning processes in EMII guide the development of these messages. Based on these theories and interviews, each feedback message includes items on clarification of the course focus, required prior knowledge, suggestions on how to interact with students, support for students’ self-efficacy, learning expectations, problem-solving strategies, content knowledge resources, relationships between the concepts of electromagnetism and electrical engineering applications, new vocabulary and general misconceptions.

Students who voluntarily participated in the intervention received e-mails with tailored, content-focused formative feedback written by the professors of this course. Building on this experience, this study first identifies a set of learning challenges in electromagnetism, using qualitative research to interpret the students’ points of view. It then documents how such qualitative data were combined with quantitative performance indicators to develop formative feedback. Finally, a comparison of student performance between those who voluntarily opted to receive the feedback messages and those who did not indicates a strong correlation between participation in the feedback system and improved student performance.

This study contributes to addressing the area’s main research gaps by using different sources of data from the first week of the course, analyzing the effects of content-focused formative feedback and describing the implementation process in a higher education setting.

2. Theoretical References

2.1. Using Learning Analytics to Predict Student Achievement

In a systematic review [12], Banihashem et al. highlighted that LA plays an important role in improving feedback practices in higher education. The review draws attention to the different ways in which LA data help educators and students. For educators, LA provides insights for timely intervention, assessment of student learning progress and early identification of students at risk of under-performing. For students, LA shows their own progress in the class and offers activities that could help them move forward in their learning process. Thus, while LA serves educators as a diagnostic and predictive system, it serves students as a descriptive and prescriptive one.

The pervasive use of digital learning environments has led to large amounts of tracking data that can be exploited to learn about different indicators of student achievement and activate Early Warning Systems (EWSs) in education. EWSs usually aggregate LMS data to notify teachers and/or students in a timely manner of academic support needs. Additionally, since data collection in a digital platform is not a separate act but rather a non-intrusive record of interactions, the data collected are often viewed as an authentic representation of student behavior within the platform [16]. These data can inform e-learning systems that offer learning activities through a digital interface. A review of the literature points out that a successful e-learning system should consider the personal, social, cultural, technological, organizational and environmental factors to offer academic guidance and learning support [17].
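As a minimal illustration of how an EWS might aggregate LMS data, the Python sketch below counts one type of logged event per student and flags students below a threshold. The `(student_id, event_type)` log format, the `exercise_attempt` label and the threshold of two attempts are hypothetical assumptions, not features of any particular LMS or of the systems reviewed here.

```python
from collections import Counter

# Hypothetical event log format: (student_id, event_type) pairs exported from an LMS.
Event = tuple[str, str]


def count_events(events: list[Event], event_type: str) -> Counter[str]:
    """Count how many events of the given type each student generated."""
    return Counter(student for student, kind in events if kind == event_type)


def flag_students(
    roster: list[str],
    events: list[Event],
    event_type: str = "exercise_attempt",  # hypothetical event label
    min_count: int = 2,                     # hypothetical alert threshold
) -> list[str]:
    """Return the students whose activity falls below the threshold.

    Students absent from the log count as zero (Counter returns 0 for
    missing keys), so completely inactive students are flagged as well.
    """
    counts = count_events(events, event_type)
    return sorted(student for student in roster if counts[student] < min_count)


if __name__ == "__main__":
    log = [
        ("a", "exercise_attempt"),
        ("a", "exercise_attempt"),
        ("b", "video_view"),
        ("b", "exercise_attempt"),
    ]
    print(flag_students(["a", "b", "c"], log))  # ['b', 'c']
```

A production EWS would also restrict events to a time window (for example, the current week) before counting; that step is omitted here for brevity.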

Despite the large amounts of available data, authors have reported contradictory correlations for certain predictors related to online activity and have discussed the associated challenges and limitations [18], mainly because many factors associated with learning are not considered. This is why Wilson’s study concludes that LA data should allow for pedagogical variations and are likely to reliably enhance learning outcomes only if they are designed to track data that are genuine indicators of learning. According to the Self-Regulated Learning (SRL) model, cognitive, metacognitive, motivational and goal-oriented factors drive the learning process; an LA model should therefore consider several of these learning dimensions. In this model, the learner engages in a task by first defining it and then, based on that definition, selecting learning strategies while taking situated constraints into account. Some of these constraints are internal (such as prior knowledge or motivation) and others external (such as instruction or resources). Internal and external conditions together shape the learner’s planning and expectations for completing the task. As the learning process unfolds, learners monitor their progress and make the necessary adjustments. This model of learning helps explain why digital data may offer only a limited picture of the learning process: they do not reveal the student’s context or the prior experiences that orient learning [19].

Looking for the most informative data to develop models that predict student performance, a study investigated several different data sources to explore the potential of generating informative feedback using LA, including data from registration systems and quizzes. The study found that data related to the academic history of students had little predictive power, while tests, assignments and quizzes were more effective [11].

Another aspect of LA models is their potential to improve student achievement. Synthesis studies report that, in general, EWSs achieve the goal of identifying potential dropouts. However, educational responses are needed to further improve academic results. A study that analyzed 24 cases of LA interventions in higher education found that, beyond predicting student problems, it is necessary to develop personalized support [10].

In addition, other publications [13,20,21] have shown that some LA tools are effective at predicting at-risk students. However, these systems neither tackled the educational problem nor improved student achievement, since learning challenges were not addressed adequately. This is why these works suggest complementing predictions with feedback that provides actionable learning tasks. In some cases, the problems may require counseling practices. Overall, investigations conclude that data mean very little if there is no evidence of their relation to student achievement or if there are no educators to interpret the results and design an educational intervention to help students in their learning process.

Regarding the implementation of EWSs, the selection of achievement indicators and the timing of the warning seem to be two important conditions for success [22]. After examining eight early alert methods [23], Howard et al. concluded that the best time to collect data and provide feedback is weeks 5 and 6 (halfway through the semester), as this schedule gives students the time they need to change their study patterns while retaining reasonable prediction accuracy. Other efforts have predicted student achievement from weekly LMS logs [24]; although these attained 60% accuracy, investing in technology and in the selection of indicators to obtain earlier predictions is worthwhile, so that professors can support students as soon as possible. Other studies found that assessment data are the best predictors. However, assessment data are not available before the midterm. This is why these authors suggest using entry tests and exercise data together with the extensive data generated by e-tutorial systems, since a mix of various LMS data allows academic performance to be predicted [11].

Concerning student experiences with EWS, a study by Atif et al. [25] documented that most students welcomed being alerted, preferred to receive alerts as soon as their performance was unsatisfactory and strongly preferred to be alerted via email, rather than face to face or by phone. Thus, the timing of the intervention and the type and quality of communication are very relevant for effective results.

In summary, LA data can help monitor student performance and inform teachers about the tasks different types of students engage with. The timing of LA data processing is key to offering feedback that can effectively contribute to student learning actions during a course. Building on previous work, this study designed a system for a course on electromagnetism. The system relies on a few indicators that are highly correlated with student achievement and, based on these data, sends formative feedback messages to students every two weeks. The next section provides evidence that supports the choice of formative feedback as an intervention strategy to promote achievement.
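The bi-weekly cycle summarized above can be sketched as a simple rule-based message selector. This is a hypothetical Python illustration, not the APSPMS implementation: the two indicators (pretest score and practice exercise participation) come from the text, but the thresholds, template names and data layout are assumptions chosen for readability.

```python
from dataclasses import dataclass

# Hypothetical cut-offs: the actual thresholds used by APSPMS are not reported here.
PRETEST_LOW = 0.4  # fraction of diagnostic pretest items answered correctly
SLT_LOW = 0.5      # fraction of practice exercises marked as seriously attempted


@dataclass
class StudentIndicators:
    student_id: str
    pretest_score: float      # 0.0-1.0, from the voluntary diagnostic pretest
    slt_participation: float  # 0.0-1.0, share of practice exercises marked so far


def select_message(s: StudentIndicators) -> str:
    """Map a student's indicators to one of several hypothetical message templates."""
    low_prior = s.pretest_score < PRETEST_LOW
    low_practice = s.slt_participation < SLT_LOW
    if low_prior and low_practice:
        return "prior_knowledge_and_practice"
    if low_prior:
        return "prior_knowledge"
    if low_practice:
        return "practice"
    return "on_track"


def biweekly_run(students: list[StudentIndicators]) -> dict[str, str]:
    """One feedback cycle: assign a template to every consenting student."""
    return {s.student_id: select_message(s) for s in students}


if __name__ == "__main__":
    cohort = [
        StudentIndicators("s1", 0.30, 0.20),
        StudentIndicators("s2", 0.30, 0.80),
        StudentIndicators("s3", 0.70, 0.20),
        StudentIndicators("s4", 0.90, 0.90),
    ]
    print(biweekly_run(cohort))
```

Keeping the selection logic as explicit rules makes it easy for professors to read and adjust, and each template name would point to a message written by the teaching team rather than generated automatically.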

2.2. Using Learning Analytics to Target Formative Feedback

Feedback is a vital element in fostering continuous improvement in learning [26,27,28]. Over the last two decades, a vast amount of research has been conducted in developing personalized feedback for education using LA [29,30,31,32]. Numerous educational institutions have implemented LA-driven feedback systems for students. However, the comprehensive assessment of the effects of this personalized feedback remains relatively limited.

Cavalcanti et al. [33] presented a systematic review on the effectiveness of automatic feedback generation in LMSs, showing that a significant number of studies present no evidence that manual feedback is more efficient than automatic feedback. Highly effective feedback should provide substantial information related to the task, the process and self-regulation [34]. Accordingly, much of the literature considers learner performance and self-regulation/satisfaction as two important factors in defining the impact of feedback. In this regard, Lim et al. [35] explored the impact of LA-based automatic feedback on student self-regulation and performance in a large undergraduate course. They split students into test and control groups and compared their self-regulated learning operations and course performance using t-tests. Their findings showed different patterns of self-regulated learning and higher performance in the test group, and that feedback was effective regardless of students’ prior academic achievement. Afzaal et al. [36] applied LA both in an expert workshop and in real educational settings, and reported an overall positive impact of feedback on student learning outcomes and self-regulation. As another approach to delivering personalized feedback, one study used an LA-enhanced electronic portfolio [37]. The authors evaluated the effectiveness of their feedback model by implementing it in a professional training program and administering questionnaires. They documented an overall impression that the design was useful; however, some students reported the feedback to be too generic and lacking personalization.
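The test-versus-control comparison mentioned above is typically done with a two-sample t-test. The sketch below computes Welch's variant, which does not assume equal group variances, using only the Python standard library. Whether Lim et al. used Welch's or Student's test is not stated here, so this is an illustration of the technique, and the grade lists are toy values rather than study data.

```python
import math
import statistics


def welch_t(group_a: list[float], group_b: list[float]) -> tuple[float, float]:
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    # Squared standard errors of each group mean (sample variance / n).
    se2_a = statistics.variance(group_a) / n_a
    se2_b = statistics.variance(group_b) / n_b
    t = (statistics.mean(group_a) - statistics.mean(group_b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


if __name__ == "__main__":
    test_group = [7, 8, 6, 9, 7]     # toy final grades, feedback group
    control_group = [5, 6, 6, 7, 5]  # toy final grades, control group
    t, df = welch_t(test_group, control_group)
    print(f"t = {t:.3f}, df = {df:.2f}")
```

The p-value follows from the t distribution with `df` degrees of freedom; if SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same Welch test and returns the p-value directly.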

Besides the effects of LA-based feedback messages on learning indicators, a set of studies have provided insights into the content and structure of the messages. Pardo et al. [38] used digital traces from the course LMS and developed structured feedback based on student quizzes, obtaining positive results. Such digital traces are the new kinds of data frequently used in learning analytics. Pardo et al. developed four different types of messages based on video activities, multiple-choice results and summative exercises. Research has found [39,40] that the most effective formative feedback has the following characteristics:

- Always begin with the positive. Comments to students should first point out what students did well and recognize their accomplishments.

- Identify specific aspects of student performance that need to improve. Students need to know precisely where to focus their improvement efforts.

- Offer specific guidance and direction for making improvements. Students need to know what steps to take to make their product, performance or demonstration better and more aligned with established learning criteria.

- Express confidence in student abilities to achieve at the highest level. Students need to know their teachers believe in them, are on their side, see value in their work, and are confident they can achieve the specified learning goals.
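One way to make these four characteristics operational is a message template whose fields mirror them, so that no part can be left out. The Python sketch below is a hypothetical template, not the one used in this study; the field names and rendered wording are assumptions.

```python
from dataclasses import dataclass


@dataclass
class FeedbackMessage:
    strength: str          # 1. begin with the positive
    focus_area: str        # 2. specific aspect that needs to improve
    next_steps: list[str]  # 3. concrete guidance for making improvements
    encouragement: str     # 4. confidence in the student's ability

    def __post_init__(self) -> None:
        # Feedback without actionable steps would violate the third characteristic.
        if not self.next_steps:
            raise ValueError("a feedback message needs at least one concrete next step")

    def render(self, name: str) -> str:
        """Assemble the four parts in the recommended order."""
        steps = "\n".join(f"  - {step}" for step in self.next_steps)
        return (
            f"Dear {name},\n\n"
            f"{self.strength}\n\n"
            f"One area to focus on next: {self.focus_area}\n"
            f"Suggested steps:\n{steps}\n\n"
            f"{self.encouragement}"
        )


if __name__ == "__main__":
    msg = FeedbackMessage(
        strength="You attempted every exercise in the last practice set.",
        focus_area="applying boundary conditions at material interfaces.",
        next_steps=["Rework the interface exercise before the next tutorial."],
        encouragement="Your steady effort shows you can master this material.",
    )
    print(msg.render("Alex"))
```

Encoding the order in `render` means authors only write the content of each part, while the structure recommended by the literature is enforced by the template.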

Miller [41] documented student perceptions of the effects of four types of feedback embedded in a computer-based assessment (CBA) system: (a) directing students to a resource, (b) rephrasing a question, (c) providing additional information and (d) providing the correct answer. In general, students reported that the feedback helped them with their learning.

Other factors also influence the effects of feedback messages. A literature review on feedback elements [40] highlighted that the effectiveness of formative feedback depends on a number of factors, including students’ ability to self-assess, the clarity of goals and criteria, the way expected standards are set, the encouragement of teacher and peer dialogue around learning, the closure of the ‘feedback loop’, the provision of quality feedback information, and the encouragement of students’ positive motivational beliefs and self-esteem.

In addition, learners’ perceptions of and expectations for feedback play a major role in its effectiveness. In this regard, a pilot study [42] explored the relationship and the gap between student expectations for feedback and their experiences receiving LA-based feedback. Using OnTask [3] and considering student self-efficacy and self-regulation abilities as defining factors, the researchers found that students with high levels of these factors tended to appreciate feedback more and to have more effective experiences with it. However, the gap they identified indicated the need to further examine both teachers’ feedback literacy and the system’s feedback guidelines. More recently, some studies have analyzed the impact of ChatGPT feedback on particular learning tasks, such as programming. Students who used this tool found that it offered them adequate feedback for learning programming [43]. Besides perceiving it positively, students who received feedback from ChatGPT showed improved self-efficacy and motivation. Thus, automated feedback has the potential to improve some of the most important factors associated with learning [44].

Exploring the more elaborate effects of feedback, Zheng et al. [45] combined a knowledge map analysis method with interviews to demonstrate the effectiveness of LA-based real-time feedback for knowledge elaboration and convergence, interactive connections and group performance in a collaborative learning context. Two mixed-methods studies conducted in flipped classroom contexts indicated statistically and qualitatively significant effects of LA feedback on self-regulation and academic achievement [46], as well as improvements in student perceptions of community of inquiry and thinking skills [47]. Analyzing the impact of feedback, another study found that personalized, semi-automatically generated textual messages sent weekly to students via email promoted greater use of effective learning strategies. The study also showed the significant value of personalized textual messages over personal LA dashboards [14]. While research highlights the impact of LA-based feedback on education, more systematic research on feedback organization and assessment is necessary [48].

Building on past research, this study explored data sources that closely relate to learning electromagnetism (EM), interpreted these results, established a feedback frequency that allows students to regulate their learning based on content-focused orientations and compared student performance.

3. Research Methodology

Design-based research has been considered an adequate methodology for designing and analyzing educational interventions in a situated context [49]. This method shares with action research the principle of reflecting on the problem or situation, but it also inquires into new theoretical agendas and relationships among variables. Following a design-based research approach, a group of professors at TU/e in the Netherlands analyzed student performance and teaching practices in their EMII course and, based on that analysis, developed and studied an intervention. The study followed a continuous process of analyzing and defining the educational problem, developing a theoretically based solution, implementing an intervention, analyzing the results and documenting them.

3.1. The Case Study

The course for which the intervention was designed is a mandatory part of the undergraduate program for electrical engineering students. EMII provides an introduction to space–time and space–frequency, and free and guided electromagnetic waves in one to three spatial dimensions, with applications in circuit theory, communication theory and wireless devices and systems.

After reflecting on some of the course’s main data, including registration numbers, passing rates, student participation in tutorial hours and dropout rates, the team decided to design an intervention for the following reasons. Firstly, students have historically perceived the course as a difficult one with low passing rates. Currently, 58% of the students pass the course, up from 20% before Student Led Tutorials (SLTs) were introduced. SLTs are an instructional format in which students present the process of solving exercises during practice hours [50].

Secondly, registrations for the course increased from 77 students in 2014 to 222 in 2023, peaking at 299 in 2019. Although more Teaching Assistants (TAs) were hired to keep peer-learning tutorial groups at 20 students, professors felt it was becoming difficult to support students’ individual learning. Thirdly, the course gradually introduced more learning activities in the university LMS that allowed student digital traces to be gathered. Thus, a system that performs basic LA to identify students who need academic help could inform individual (and, hopefully, also personalized) student support.

3.2. Data Collection and Analysis

As mentioned before, the LMS contains data on students’ attempts to solve exercises and their navigation history through the course’s digital resources, such as videos and readings. It also includes personal data to which this team had no access due to university data privacy regulations. Working within these constraints, an analysis correlating student activity in the system with assessment results seemed interesting and valuable. These data contributed to developing a prognosis system that can identify at-risk students and provide them with targeted feedback.

The ethical review board of TU/e reviewed and approved the use of student private data before any information was collected. In line with the General Data Protection Regulation (GDPR), the board conducted a data protection impact assessment to ensure that the research complied with the privacy agreements. All data were used in such a way that no student was individually identifiable. Students who opted to receive personalized feedback signed an explicit consent form that described the use of their personal and private data. The consent form informed students about the goal of the research, the kinds of data that would be collected and processed and how the data would be stored, among other things. Participants could withdraw their participation at any time. Moreover, students could choose whether and how their data could be used, with options ranging from restricting data use to personalized feedback only to allowing their data to be used for this and future scientific research. Following a design-based research process, data collection and analysis were conducted in different phases.
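The graded consent options described above can be enforced in code by checking each student’s consent scope before any data are used for a given purpose. The Python sketch below assumes a simplified three-level scope; the actual consent form offered more options, and the names `ConsentScope`, `eligible` and `withdraw` are hypothetical.

```python
from enum import Enum


class ConsentScope(Enum):
    NONE = 0                   # never opted in, or withdrew consent
    FEEDBACK_ONLY = 1          # data may be used only to send personalized feedback
    FEEDBACK_AND_RESEARCH = 2  # data may also be used for this and future research


# Which consent scopes permit each processing purpose (hypothetical mapping).
ALLOWED = {
    "feedback": {ConsentScope.FEEDBACK_ONLY, ConsentScope.FEEDBACK_AND_RESEARCH},
    "research": {ConsentScope.FEEDBACK_AND_RESEARCH},
}


def eligible(records: dict[str, ConsentScope], purpose: str) -> set[str]:
    """Return the IDs of students whose consent covers the given purpose."""
    if purpose not in ALLOWED:
        raise ValueError(f"unknown purpose: {purpose!r}")
    return {sid for sid, scope in records.items() if scope in ALLOWED[purpose]}


def withdraw(records: dict[str, ConsentScope], student_id: str) -> None:
    """Record a withdrawal; the student drops out of every later selection."""
    records[student_id] = ConsentScope.NONE


if __name__ == "__main__":
    consents = {
        "s1": ConsentScope.FEEDBACK_ONLY,
        "s2": ConsentScope.FEEDBACK_AND_RESEARCH,
        "s3": ConsentScope.NONE,
    }
    print(sorted(eligible(consents, "feedback")))  # ['s1', 's2']
    print(sorted(eligible(consents, "research")))  # ['s2']
```

Filtering at the point of use, rather than once at enrollment, means a withdrawal takes effect in the next feedback cycle without any other change to the pipeline.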

3.3. First Round of Data Collection: Understanding Learning Challenges through LA and Student Interviews

The purpose of the first phase of the study was to understand learning challenges through LA and interview data. The dataset for the LA included all TU/e students who enrolled in the EMII course exam in the previous three years, yielding 675 entries.

Quantitative data included (1) pretest results on prior knowledge, (2) practice exercise participation measured by attempts to solve exercises, (3) midterm exam grades, (4) final exam grades and (5) overall participation in the course LMS (access to videos, recorded lectures, e-books, etc.).

Regarding the pretest, given that student prior knowledge is a major indicator of achievement, a voluntary diagnostic test on the fundamental EMII concepts was conducted. This information helped us understand how relevant prior knowledge was to performance. A brief literature review identified three validated EM tests: the Brief Electricity and Magnetism Assessment (BEMA) [51], the Electricity and Magnetism Conceptual Assessment (EMCA) [52] and the Conceptual Survey of Electricity and Magnetism (CSEM) [53]. The CSEM items were chosen because this test matched most of the content required for the EM courses at this university.

Practice exercise participation was measured by the number of exercises students chose to present during practice hours. In 2019, EMII practice hours were transformed into SLTs [50]. This method requires students to first solve a set of problems on their own in advance, indicate in the LMS the exercises on which they made a serious attempt and then, if the TA randomly selects them, present their solution efforts to a group of 20 students.

Both quantitative and qualitative data from the course were analyzed. Correlations were computed between each of the data sources mentioned above and final grades. Attempts to solve EMII problems, measured by the number of exercises students chose to present during practice hours, had the highest correlation with final exam grades. Pretest results showed the second-highest correlation with final exams, and no clear pattern was found between overall participation in the course platform (access to videos, readings and e-lectures) and final grades.

Regarding the qualitative data, three researchers conducted six in-depth confidential interviews with students at the end of the 2021 course to learn about general student learning challenges and experiences with the course. The professors intentionally did not participate in the interviews so that students could speak openly about the course. Because emerging themes began to reach saturation with five participants, sampling concluded with six students. In addition, a small focus group interview was conducted in 2023.

Although interviews were voluntary, a diverse group of students was invited, including high achievers, medium achievers, students who did not pass or retook the course and students with different rates of participation. Among the volunteers, students with different performance, practice hour participation and pretest indicators were intentionally selected to gather different perspectives. The sample represented four clusters of students: one student who did not pass the course, had low pretest scores and 60% peer tutorial participation; two students with average pretest scores, 60% peer tutorial participation and a passing grade of 6 (on a 1 to 10 scale); two students with average pretest scores but higher peer tutorial participation; and one student with high pretest scores and high peer tutorial participation. These students also passed the final exam.

Interview data were coded following a constant comparison approach [54]. This method involves inductively identifying instances and classifying them under codes, and then comparing these instances with each other to find patterns and emerging themes. The qualitative analysis software Saturate App was used to classify, store and group the text digitally.

3.4. Second Round of Data Collection: Analysing the Effects of Content-Focused Formative Feedback on Student Performance

The sample for this phase of the study includes 366 students from the 2022 and 2023 editions of the EMII course at the same university: 168 students effectively started the course in 2022 and 198 in 2023 (a few more registered but never started). In total, 55% of the students in 2022 and 56% in 2023 voluntarily consented to let the team access and analyze their LMS data in order to send them feedback messages. This allowed the two groups to be compared within a quasi-experimental design. For both years, final grades and SLT participation were compared between students who received feedback and those who did not; SLT participation was used because Phase 1 identified it as the strongest achievement indicator. A survey was conducted after the end of the course to learn about student experiences with the APSPMS, and 11 students answered it. A focus group with six self-selected students was also carried out at the end of the 2022/2023 academic year.

The following section describes the results of each phase of this educational intervention and analysis, including the design of the APSPMS project.

4. Results

4.1. Phase 1: Learning Challenges

The first task in developing feedback that addresses student academic needs in the course was understanding the educational problem. In this case, the problem was defined as EMII learning difficulties. According to Notaros [55], EM is often perceived as the most challenging and demanding course in the electrical engineering curriculum. Professors have stated that the difficulties lie in the abstract and complicated mathematical formalism of theoretical derivations, misconceptions about physical concepts and counter-intuitive physical phenomena [55,56]. In addition to acknowledging these general challenges to learning EMII, the team analyzed the course data described above to understand situated, context-specific difficulties.

A linear regression analysis showed that the more exercises students chose to present at SLTs, the higher their final grade at the end of the course. A significant positive correlation (r = 0.618) was found between SLT participation and final grade. This correlation is higher than the one between pretest scores and final grade, which, for the students who took the pretest, is moderately positive (r = 0.44), as expected. Moreover, students who actively participated in SLTs (more than 70% engagement) had higher chances of doing well in the final exam than those with high prior knowledge. This suggests that, for most students, SLT participation may compensate for a lack of prior knowledge, and that a teaching strategy can change a student’s predicted pathway based on previous performance. The team also analyzed other kinds of LMS data, including access to course resources, videos, lectures and readings. These data did not show a clear correlation with the final grade and were therefore not used as achievement indicators.

Beyond statistical data that showed significant correlations, qualitative data were also collected to recover student voices, understand their learning challenges and identify supporting actions that helped them overcome these challenges.

The emerging themes from the in-depth interviews identified the main areas in which students needed support, as well as learning tasks they found helpful. These data informed both the items of the feedback and the formative feedback messages, which included student practices recognized as valuable.

These are the main findings that resulted from the interview analysis:

- Students want to receive feedback early and frequently.

Students mentioned that comments from tutors or professors often helped them revise their learning process. One student commented:

“I think it’s vital to see [the progress] every week. So SLT was a good example that you go to SLT to check whether you have done it the right way. Furthermore, if you have not, then you say that you have not understood something and you have to go back. So I think weekly progress is a very good thing. Because if you see them only in the midterm, which is usually after a month, it’s too late to start trying to go back and do everything again”.

Another student echoed this message and said:

“For me personally it [the feedback] would be yes. Earliest possible, so I can try to face the other stuff within the eight weeks. Instead of struggling with the course not understanding what I’m missing.”

This information reinforced the hypothesis that frequent feedback can help students realize that they need to change their study methods before it is too late.

- Students need clarification on EM as an area of study and the prior related content knowledge needed for the course.

Many students reported being confused about the prior knowledge required for the course (even though it was listed in the syllabus), and this misunderstanding left them unsure about which content they needed to review. One student mentioned:

“In Electromechanics it was basically motors, which are an application of EM. So I thought maybe something on those lines would be in EMII, but it was more related to communication. So I think I misunderstood that.”

Some students were uncertain whether the course related to physics or to electromechanics, and this confusion made it harder for them to identify the prior knowledge they needed.

Other students underestimated the math: “Well I did see this course was more math-based than I expected. Calculus mostly with vectors and all came into play as well.” This misunderstanding could keep students from going back to their math courses to review prior knowledge.

Students mentioned that they would like to receive feedback on the knowledge they are lacking. One student was very clear about this and pointed out:

“If I knew [students] beforehand…[I would tell them] different aspects they are lacking or are not up to date with, so you can point them out to them. Well, you should probably read into this a little more or try to understand this because we’ll get a lot of this in this course, and it will help a lot if you know more about it.”

Students, in general, acknowledged that they needed a reminder of the main concepts that came into play in EMII.

Students mentioned that sometimes the requirements are “forgotten” because EMII is taught in the last two months of the academic year. One student mentioned:

“When we do EMI in quartile 1 [first two months of the academic year], we are fresh. That is the knowledge, and we are in the flow and then in Quartile 2 and Quartile 3 we do not. Then, in Quartile 4 they kind of expect us to completely remember Maxwell’s equations and build on that. From a logistics perspective, if it’s possible [to move EMII to Quartile 2], I think that might increase student satisfaction/performance in the course.”

Another student said:

“It’s not like the knowledge was insufficient. We were taught the basics we needed in EMI, I would say, because the core mathematical concepts are divergence and gradient. These three concepts were really taught in succession in a week or a week and a half was given for each topic. So it was fine but then, you know, by the time Quartile 4 came, we were not too fresh with that knowledge and it was not the best.”

These comments showed that formative feedback was needed to address prior content knowledge for each specific topic on EMII. In terms of specific prior content, students mentioned that what they needed was covered in EMI and electronics. These topics included calculus and linear algebra, complex analysis, vector calculus, Maxwell’s equations, circuits, signals, differential equations, waves, coordinate systems, potentials, etc. Prior knowledge was particularly relevant to this course because of the focus on providing an understanding of fundamental physics principles.

One student commented:

“I do not think there is a good chance of passing this course if you do not understand the principles behind the first course. So that is very essential. Of course there is a lot of calculus as well”

When asked about specific content that professors could have strengthened, one student mentioned boundary conditions and vectors. “There are a lot of new calculations with vectors and they can become quite lengthy and difficult sometimes”.

Solving bounce diagrams seemed to be a turning point in the course. A reminder of the required prior knowledge two or four weeks after the start of the course could help students understand this topic.

- Students reported having many misconceptions.

Students reported having to “unlearn” some of their intuitions to understand EMII concepts. Students mentioned:

“For example, circuits or signals, do you think that really matters in this course? Yeah, for circuits at some point. yeah. What you have been taught is a bit wrong because when you look at it from a physics standpoint it works differently. So that is the relation to circuits and signals. Of course there is a very last part of electromagnetics that is related to antennas. So in some way it is related to signals as well.”

Another student mentioned:

“I would say that the normal problems regarding bounce diagrams. Even though the problem itself is simple it left an impact on me because I realized that these are cultural flaws that we have been learning right from the start, right from high school. In fact, they are not completely correct. Because they can only be applied once everything is in steady state. Furthermore, to reach a steady state, we need those bouncing and reflections of the wave. So, those questions kind of helped me. Helped me understand that in real life, how the real phenomena works that we were thinking that everything is instantaneous, but these fields are traveling at the speed of light and, yeah, they reflect a lot.”

In one conversation, students indicated that concepts such as vectors and waveguides were studied in more depth than they had expected. Recalling a problem about the direction in which a wave travels, one student commented that it was complicated because:

“It depends on how you define it. I guess you could define it either direction but it depends on how you define the time. Furthermore, I think, in my mind I would say, like, if the Time and displacement are opposite signs and you’re traveling in the positive direction I think. That is in the end but like in the electric was like he wrote the time vector with the opposite sign and he said, Oh yeah., but then it means it turns around and it was like, Okay wait. Oh, you can do that, right? Okay. It just depends on how you define the notation, for that.”

This quote shows deep confusion and illustrates the difficulties, misconceptions and gaps in prior knowledge that students face. Such comments also show the crucial role of conventions in the mathematical definitions of some physical phenomena. Thus, formative feedback could describe the “area” of study and the related prior knowledge more clearly, reminding students of content knowledge learned in other subjects and highlighting the link to physics concepts. In addition, formative feedback was needed to address misconceptions about how some physical phenomena work.

- Student interactions contributed to learning and to keeping up with the course pace.

Students mentioned that working with a small group and participating in the SLTs helped them understand the content and keep up with the course. One student said:

“For the exercises, I just met up with a couple of other students and we did most of them together, like in a small group. Furthermore, the student led tutorials really helped not fall that much behind”

Because the course is fast-paced, one student recommended asking for help immediately when in doubt:

“I would recommend not wasting too much time on being stuck. If you’re too stuck for an hour or two, start asking questions to anyone, using the chat channel or write an email to the professor.”

Students also mentioned that the Discord channels and looking at each other’s work and questions were helpful. Thus, the feedback included a section advising students about the importance of meeting with their peers.

- Review the reader, online lectures and Discord Q&A.

Some students mentioned that “Everything is in there”, referring to the Q&A channels on Discord, and said that they often found answers by reviewing the material.

One student, for example, mentioned that he did not know how to solve an equation and found the method in the book. Thus, the feedback pointed to different resources, including videos, articles and book chapters.

- Provide hints, guiding questions or counterintuitive questions that help in interpreting the problem.

Students mentioned that interpreting the problems and questions was key to solving them. Among the strategies they used were the following:

- (a) Breaking a problem into sub-problems;

- (b) Identifying which variables are provided.

A student commented:

“Start by seeing what variables you have. What kind of things can you do with them? Then, you can often see where I have four values from one machine and one value from the other. So I probably have to calculate everything from the first machine and then transfer it over to the second machine.”

“Understanding how to apply the concepts we have learned to that particular problem because it was quite extraordinary, which was which ones we should use and how to get the data. Apply the data they have provided to make, essentially, a circuit.”

As a result, feedback included strategies to analyze problems and apply concepts to solve problems.

- Understanding the exercise questions was a challenge for students.

Students mentioned several times that their difficulty was interpreting what the problem asked. One technique they found helpful was drawing:

“One of the questions felt [that] it missed some explanation. So it takes a bit more to really read and understand what [the teachers] want from you, and it takes time, and then you cannot really solve it in the exam. That is something [that occurred]. From many of the students, they really missed the drawing as the explanation was in writing and they really missed the drawing or schematic of how the problem looks like. So it was hard to do calculations from it, if you do not really have an understanding of what you need to calculate”

Another student mentioned that sometimes they did not understand the meaning of the words used. Rephrasing the idea in everyday words may help. Some students commented:

“If I’m stuck in an exercise, it will probably be things like trying to use these equations or just pointing out some intermediate steps. Explain which route you should take.”

“The easiest thing for me is to either point to some easier problem that has the same meaning or basis. Or say (point out that) you solved it already in the other question. So I know where to start to approach it.” (This was mentioned by two students).

- An understanding of how things work.

Several students mentioned the need to understand “how things work”. One student mentioned a sense of “enjoyment” at understanding the physics behind it. Students said:

“I also enjoy physics and it’s a good mix between physics and electricity and understanding how the electrons and charges work from the physical standpoint. Not just from applications”

“We also had knowledge clips and additional material which we could explore in our free time or get even more explanations about some topics. That is the best way to attract students to a particular subject that you give more than it’s necessary.”

- Include the historical and social context of problems.

Students mentioned the value of adding historical and social context to EMII content: “It showed the professors are thinking outside the box and trying to get students interested in the topic. Not just having raw problems, [like, for instance] you have these walls and these charges and calculate what current flows…it’s really boring that way”. Another example they mentioned involved biomedical engineering and the conductivity of the human body, where they had to calculate the resistance of human tissue.

After a careful analysis of student learning challenges, the feedback templates combined the best formative feedback practices identified in the literature review with the learning challenges students reported most frequently. Part of the feedback also drew on the practices that students reported during the interviews as helping them overcome their challenges. Thus, the feedback incorporated student voices, practices and perspectives, making it relevant to this class so that students could relate more to the message.

A separate template was used for the first feedback message, the “Welcome” message, because it included information on the general characteristics of EMII. This section was the same for all students since, at that point in the course, no LA performance data were available to target different groups. The second part of the first message was more targeted: it suggested revising specific concepts based on the prior knowledge test results, which were used for initial diagnostics. This section was personalized because it listed the test items each student had problems with. The third section offered general learning strategies. Professors completed this template using colloquial expressions, “as if they were talking to the students”. The first version of the feedback message had been written in formal pedagogical language; after reading the messages, the team realized that this tone did not help bring students and professors together.
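The three-part structure of the welcome message can be sketched as follows. This is a hypothetical illustration, not the APSPMS code: the pretest item IDs (`Q1` to `Q4`), their mapping to prior-knowledge topics (taken from the topics students named in the interviews) and the fixed section texts are all assumptions.

```python
# Hypothetical mapping from pretest item IDs to the prior-knowledge topic each item probes.
PRETEST_TOPICS = {
    "Q1": "vector calculus (divergence, gradient, curl)",
    "Q2": "Maxwell's equations",
    "Q3": "complex analysis",
    "Q4": "coordinate systems",
}

# Hypothetical fixed sections shared by every student.
GENERIC_SECTION = "Welcome to EMII! Here is what this course is about and what it builds on."
STRATEGIES_SECTION = "General tips: work through the SLT exercises with peers and ask early."


def build_welcome_message(missed_items: list[str]) -> str:
    """Assemble the three-part welcome message for one student."""
    # Personalized part: list the topics behind the pretest items the student missed.
    topics = [PRETEST_TOPICS[item] for item in missed_items if item in PRETEST_TOPICS]
    if topics:
        personal = "Based on your pretest, we suggest revisiting:\n" + "\n".join(
            f"- {topic}" for topic in topics
        )
    else:
        personal = "Your pretest shows a solid foundation; keep it fresh as we go."
    return "\n\n".join([GENERIC_SECTION, personal, STRATEGIES_SECTION])


print(build_welcome_message(["Q1", "Q3"]))
```

Only the middle section varies between students; the first and third sections are identical for everyone, mirroring the absence of LA performance data in the first week.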

Appendix A presents a summary of the template that professors completed. A different template was used for the subsequent feedback messages. Because the content varied by EMII topic, the team developed four different templates, each covering two topics per message. In addition, feedback differed for low-, medium- and high-performing students, as measured by their practice exercise participation (the strongest indicator of achievement). Appendix A includes an example of a message for low-performing students. The feedback intentionally included a picture of the professors’ faces with an emotion matching the student’s LMS participation, together with a colloquial message using the professors’ typical expressions from the course. The aim of these strategies was to make clear that the messages were written by professors, not robots, and thereby to strengthen the pedagogical relationship between students and teachers.
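The combination of four topic-pair templates and three performance levels can be pictured as a lookup table of twelve variants. The sketch below is hypothetical: the topic names, image file names and placeholder texts are assumptions, and only the 4 × 3 structure and the more detailed low/mid variants come from the description in this section.

```python
from itertools import product

LEVELS = ("low", "mid", "high")

# Hypothetical placeholders: four messages, each covering two EMII topics.
TOPIC_PAIRS = {
    1: ("topic 1", "topic 2"),
    2: ("topic 3", "topic 4"),
    3: ("topic 5", "topic 6"),
    4: ("topic 7", "topic 8"),
}

# Hypothetical image files: a professor's photo whose expression matches participation.
PROFESSOR_PHOTO = {"low": "prof_worried.png", "mid": "prof_encouraging.png", "high": "prof_happy.png"}


def make_template(message_no: int, level: str) -> str:
    """Build the placeholder text for one (topic pair, performance level) variant."""
    first, second = TOPIC_PAIRS[message_no]
    # Low and mid variants carry more detailed, actionable tasks than the high variant.
    detail = "detailed review tasks" if level in ("low", "mid") else "a short recap"
    return f"[{PROFESSOR_PHOTO[level]}] Feedback on {first} and {second}: {detail}."


# 4 topic pairs x 3 performance levels = 12 template variants.
TEMPLATES = {(n, lvl): make_template(n, lvl) for n, lvl in product(TOPIC_PAIRS, LEVELS)}
```

Keying the templates by (message number, level) keeps the professors’ wording in one place, so a template can be revised in later editions without touching the selection logic.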

4.2. Phase 2: Developing the System

As mentioned above, the main indicator of student performance was SLT participation, that is, the number of exercises students chose to present during practice hours. Students must reach a participation rate of 60% by the end of the course to be eligible for the final exam [50].

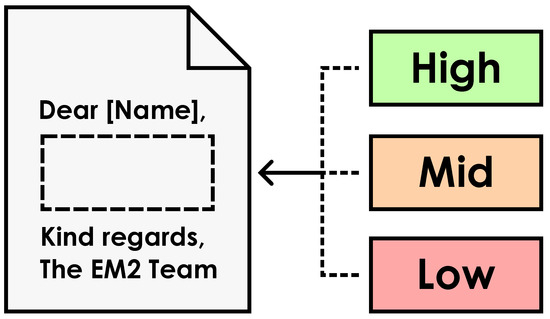

The second important indicator of student performance was prior knowledge, as measured by the diagnostic test, and the first message provides feedback based on these results. Each message is sent to the right students as follows. First, a table is generated containing student IDs, pretest results and SLT participation. Each row is then used to create and dispatch a tailored feedback e-mail. This process is shown schematically in Figure 1. The message consists of a “skeleton”, identical across all e-mails, that contains items into which one of several tailored paragraphs is slotted. To determine which paragraph applies to which student, the numerical scores in the table are classified into three groups: low, mid and high. Since an average SLT attendance of 60% is a course requirement, any score below this threshold is considered “low”. An attendance rate above 75% is considered “high”, and any value between 60% and 75% falls into the “mid” category. Once these items are filled in, the only remaining step is to send the message to the corresponding student’s e-mail address from the table.

Figure 1.

Schematic of message template structure for APSPMS.
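The classify-and-fill procedure can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the skeleton has a single slot (the real messages had several items), the paragraph texts and row keys (`name`, `email`, `slt_rate`) are hypothetical, and the messages are only built with the standard `email.message.EmailMessage` class, not sent. The thresholds follow the text: below 60% is low, above 75% is high, and 60% to 75% is mid.

```python
from email.message import EmailMessage


def classify(slt_rate: float) -> str:
    """Map an SLT participation rate (percent) to a feedback level.

    Below 60% (the course requirement) is "low", above 75% is "high",
    and 60-75% inclusive is "mid".
    """
    if slt_rate < 60:
        return "low"
    if slt_rate > 75:
        return "high"
    return "mid"


# Hypothetical skeleton with a single slot; the real messages had several items.
SKELETON = "Dear {name},\n\n{slt_paragraph}\n\nSee you at the next SLT,\nThe EMII team"
SLT_PARAGRAPHS = {
    "low": "You are currently below the 60% SLT participation needed for the final exam.",
    "mid": "You are meeting the SLT requirement; a few more exercises would help.",
    "high": "Your SLT participation is excellent; keep it up.",
}


def build_messages(rows: list[dict]) -> list[EmailMessage]:
    """Create one tailored e-mail per table row (hypothetical keys: name, email, slt_rate)."""
    messages = []
    for row in rows:
        msg = EmailMessage()
        msg["To"] = row["email"]
        msg["Subject"] = "EMII feedback"
        level = classify(row["slt_rate"])
        msg.set_content(SKELETON.format(name=row["name"], slt_paragraph=SLT_PARAGRAPHS[level]))
        messages.append(msg)
    return messages
```

Dispatch itself is left out; with the standard library it could be done by passing each message to `smtplib.SMTP.send_message`, given a mail server.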

The system sends one message for every two course topics or units (approximately every two weeks). Messages are intentionally timed so that students receive feedback a week before a test (either the midterm or the final exam), giving them time to prepare. Sending messages earlier would not leave the system enough time to gather the relevant LA data.
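The timing rule can be made concrete with simple date arithmetic. The sketch below assumes, hypothetically, that each topic takes one week and that a message is dispatched when every second topic ends; the start date and test date are arbitrary placeholders, and only the "two topics per message" and "one week before a test" rules come from the text.

```python
from datetime import date, timedelta


def pair_dispatch_dates(topic_end_dates: list[date]) -> list[date]:
    """One message per two topics: dispatch when every second topic ends."""
    return topic_end_dates[1::2]


def latest_send_date(test_date: date, lead_days: int = 7) -> date:
    """Latest dispatch date that still leaves `lead_days` days to prepare for a test."""
    return test_date - timedelta(days=lead_days)


# Hypothetical calendar: eight weekly topics starting on an arbitrary date.
topic_ends = [date(2023, 4, 24) + timedelta(weeks=i) for i in range(8)]
dispatch = pair_dispatch_dates(topic_ends)
print(dispatch)  # four dates, two weeks apart
print(latest_send_date(date(2023, 6, 16)))
```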

4.3. Phase 3: Analysis of the Intervention

Overall, the data show that students who received the feedback consistently performed better than those who did not, and these differences were statistically significant. Table 1 summarizes the main results.

Table 1.

Resulting data for students with/without automated feedback for academic years 2021–2022 and 2022–2023.

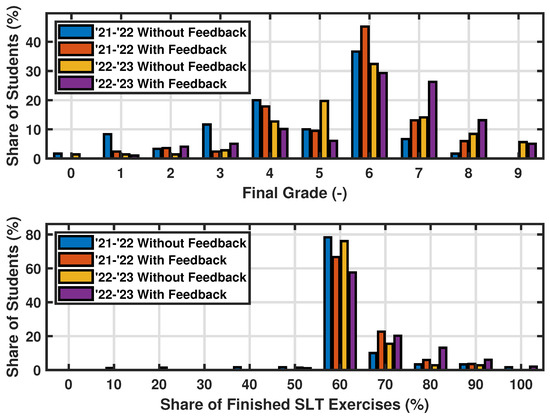

Figure 2 shows that students who received feedback participated more often in the practice exercises (SLTs) and achieved higher grades. To test the statistical significance of these differences, an analysis of variance (ANOVA) was conducted, followed by a multiple comparisons test using Tukey’s honest significant difference procedure. With p = 0.0014, the differences are statistically significant.

Figure 2.

Comparison of student results with and without APSPMS feedback, excluding dropouts at the final exam (grade of −1) for academic years 2021–2022 and 2022–2023.
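To show what the ANOVA compares, the F statistic can be computed by hand. This is an illustrative sketch with hypothetical grades, not the study’s data or analysis code. It computes only F and its degrees of freedom; in practice, the p-value and Tukey’s post hoc comparisons would come from a statistics package, for example SciPy’s `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd`.

```python
from statistics import mean


def one_way_anova_f(*groups: list[float]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA across the groups."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical final grades, for illustration only (not the study's data).
with_feedback = [6.0, 7.0, 8.0]
without_feedback = [4.0, 5.0, 6.0]
print(one_way_anova_f(with_feedback, without_feedback))
```

A large F means that the differences between group means are large relative to the variation within groups. With only two groups, F equals the square of the pooled two-sample t statistic.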

First, more students passed the course in the 2022–2023 academic year than in 2021–2022: 48% of all students (with and without the feedback system) passed in 2021–2022, compared with 58% in 2022–2023. Although the course materials, teaching methods and tutorial lessons remained the same, the assessment format changed between the two academic years (the content did not). In 2021–2022, the midterm exam was optional and accounted for 30% of the final grade. In 2022–2023, the midterm was also optional, but after it was graded, students decided whether to keep their grade. If they did, the midterm grade accounted for 50% of the final grade; if not, they took a larger final exam covering the content of both the midterm and the final exam, worth 100% of the grade. A total of 159 students (almost half the group) opted for the midterm in 2022–2023. Splitting the exam into two parts may have contributed to the higher passing rate. Because the grading schemes differed between the two academic years, we did not aggregate the data and instead analyzed each academic year separately.

Despite these changes, almost twice as many students who opted for the feedback received a final grade in the range of 6 to 7 compared with the group who did not sign up. This was the case in both the 2021–2022 and 2022–2023 academic years. The grading scale in the Netherlands ranges from 0 to 10, and 6 is the minimum passing grade. There is a significant and consistent difference in the number of passing students with and without feedback: 21% in the 2021–2022 academic year and 15% in the 2022–2023 academic year.

The feedback appears to have had a larger impact on students receiving grades of 6 and 7, and a marginal or no impact on high-performing students. Indeed, the feedback for low- and medium-performing students was longer and more detailed. During the focus group, high-performing students complained that they did not receive detailed feedback with actionable tasks because the “system” was set to provide such messages only to low- or medium-performing students.

Participation in SLTs was also higher in the APSPMS group. In particular, in 2021–2022, 89 students (95%) who received feedback reached the minimum 60% SLT participation rate, versus 62 students (84%) in the no-feedback group. In 2022–2023, the difference was only 2%.

About half of the students attempted the pretest (i.e., answered at least one pretest question), and most of these students also signed up for the APSPMS. This overlap made it difficult to control for prior achievement using pretest scores. However, when comparing final grades, 85.5% of students who took the pretest received a final grade of 4 or higher, versus 84% of the general student population. Thus, students who chose to take the pretest did not perform much better in the final exam than those who did not. Interestingly, the grade distribution showed that a further 14% of students who took the pretest and received the APSPMS feedback obtained grades of 6 or 7, a higher proportion than among students who did not take the pretest or receive the APSPMS feedback.

In terms of dropout rates, an average of 11% of students who signed up for the feedback dropped out vs. 18% of the students who did not receive feedback.

The survey showed that student perceptions of the feedback system were generally positive. Most students preferred to receive feedback by e-mail. For a large majority of the students who took part in the survey (more than 7 out of 11), the feedback contributed to the following learning actions:

- Offered ideas to study EMII;

- Motivated them to learn;

- Recovered prior knowledge to learn EMII;

- Provided specific learning strategies;

- Clarified the learning content needed for the course.

These comments were also echoed in the focus group.

In addition, students reported that the e-mails made them feel they could learn EMII and encouraged them to work harder. They also pointed out that the feedback was another way in which professors followed up on students’ coursework and addressed their learning needs. One student wrote:

“It really motivated me whenever I would receive the email, it’s a small thing, but it’s really nice. Because of this I only missed 4 ticks [in SLTs], and the reason was that they were too hard, but I personally liked this project! Keep it up EM team!”

In terms of the learning actions students took after receiving the e-mails, they mostly reported:

- Comparing the content of the email with their own learning practices;

- Working harder on SLTs;

- Returning to lectures and videos.

Half of the students also reported not doing anything in particular after reading the feedback. Finally, most students felt the timing of the feedback was adequate. During a focus group, several students made critical comments about the feedback system. First, they mentioned that the feedback did not address the exercises students had attempted to solve. Most students said they wanted to receive feedback even when they had solved the exercises because, in their words, “Ticking the exercises does not mean that students understand the exercises”. One student said:

“I did most of the exercises. Preparing them does not mean that you know it or understand it. The feedback says “you are doing great” but does not address doubts.”

Students also reported that their friends who missed several SLTs received very useful feedback. One student commented, “If you miss it you got really good feedback”. On several occasions students mentioned that for those who performed below expectations, receiving feedback guided their learning. They reported that for these students “The content of feedback was clear”.

5. Discussions

This study has described the development of a formative feedback system that combines LA and student interview data. The APSPMS combines EWS technology with formative feedback to provide students with actionable, content-focused learning tasks. Developing the system required an in-depth analysis of student learning needs in this particular subject area. To analyze the effects of LA-based content-focused feedback, this study compared performance in an electromagnetism course between two groups of students: those who opted for feedback and those who did not. In this regard, the study followed a quasi-experimental design. In this section, we discuss the results of the study.

In the first phase of this study, we examined different data sources to build indicators that could predict student achievement. The results showed that student participation in practice exercises and a diagnostic test on prior knowledge correlated most strongly with final grades. These findings support a hypothesis by Tempelaar et al. regarding the predictive power of course tasks, such as assignments, quizzes and tests [11]. Because of data privacy regulations, we were unable to use student academic history data, so we could not compare the predictive power of LMS data with that of academic records. Furthermore, for this pilot, we decided to focus on challenges within the actionable reach of the course professors and TAs. Learning strategies for EMII fell within this focus, whereas socio-demographic variables and student academic history would require a specialized team of psychologists and social workers to analyze the data and intervene later on. Identifying feasible actionable tasks contributes to building EWSs whose pedagogical responses a group of professors and TAs can realistically deliver.

Furthermore, when comparing overall platform usage traces with practice exercises and pretest results, we found that only the latter two showed clear patterns of correlation with achievement. Thus, the results of this part of the study support the hypothesis that data sources closely tied to the application of content knowledge in specific learning tasks are better predictors for LA projects. Moreover, this study shows the potential predictive power of small and/or self-generated data from the course. Limited access to students’ academic history data is a common problem for universities because of the GDPR. Therefore, many institutions will have to conduct LA with small, self-generated data, and this research sheds some light on that challenge.

In addition to using quantitative indicators, our study valued student voices, both to understand learning challenges and to document student learning strategies. Following an SRL model, students are active participants in their educational process: they engage in tasks, develop learning strategies, monitor the process and make adjustments based on their analysis [19]. Under this learning paradigm, documenting students’ self-learning strategies, especially effective ones, was important for providing quality feedback that relates to student struggles. Qualitative data from student interviews proved to be a relevant source for learning about student challenges and the strategies used to overcome them. Whereas students in [37] perceived feedback as too generic and impersonal, this project made an intentional effort to personalize feedback by relying on student and teacher voices and on the specific content knowledge of each EM topic in the course. The tone of the formative feedback was developed in cooperation with the professors so that the messages read as if they were talking to the students. In addition, the feedback included learning practices that students reported had contributed to their learning, so student voices were also represented. As a result of this inquiry, targeted, content-focused formative feedback was developed. Thus, this study presents a model of data collection for LA that includes qualitative information and analysis to improve the development of content-focused feedback.

One important challenge for LA-based feedback is timeliness. Based on our interview data and on prior research [23], students benefit from receiving feedback as early as possible. However, generating adaptive feedback that addresses student struggles requires student performance data. We attempted to overcome this challenge with three strategies. Firstly, we used prior cohort data to learn about student achievement predictors. Secondly, we sent the first feedback message within the first week. This message had a generic section that was identical for all students and a second section based only on the pretest results, as no other performance data were available in the first weeks. Thirdly, because participation in practice exercises was highly correlated with achievement in the prior cohort, the team decided to use participation and achievement indicators to send the subsequent sets of feedback every fifteen days. One lesson learned from this approach was that many students whom the system classified as "high performing" said they would have preferred the feedback intended for medium performers, since they were not confident about their learning strategies. For future editions, a "confidence" index could be added to further personalize the feedback. Another lesson was that students value feedback even when the prediction model is not fully accurate. Thus, designing feedback that is both content-focused and targeted at different achievement levels is an important and necessary challenge.
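The three-step timing strategy can be sketched as a send schedule plus a level classifier that falls back to the pretest alone when no participation data exist yet. This is an illustration under stated assumptions, not the APSPMS implementation: the equal weighting of pretest and participation and the 0.4/0.7 cut-offs are hypothetical, and both inputs are assumed to be fractions between 0 and 1.

```python
from datetime import date, timedelta
from typing import List, Optional


def send_schedule(first_send: date, course_end: date, interval_days: int = 15) -> List[date]:
    """Dates on which feedback is sent: the first message, then every `interval_days`."""
    if interval_days <= 0:
        raise ValueError("interval_days must be positive")
    dates = []
    current = first_send
    while current <= course_end:
        dates.append(current)
        current += timedelta(days=interval_days)
    return dates


def performance_level(pretest: float, participation: Optional[float] = None,
                      low: float = 0.4, high: float = 0.7) -> str:
    """Classify a student as 'low', 'medium' or 'high' (hypothetical rule).

    Before any practice-exercise data exist (first message), only the pretest
    is used; afterwards, pretest and participation are weighted equally.
    """
    if participation is None:
        score = pretest
    else:
        score = 0.5 * pretest + 0.5 * participation
    if score < low:
        return "low"
    if score < high:
        return "medium"
    return "high"
```

A "confidence" index, as suggested above, could enter such a rule as an extra input that moves a low-confidence "high" student into the medium-level message set.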

An important implementation challenge is to design a structure and format for the feedback message. To ensure the relevance and quality of feedback, the team agreed on a series of items that all feedback should address. The items resulted from a literature review on effective feedback and from the qualitative sources of data. Moreover, since prior knowledge is a relevant factor in SRL, this research focused on content-based feedback. We developed templates to help professors write the feedback messages. These templates can be reused in future editions, since professors can improve the message while keeping the items and structure of the feedback.

Students reported that the content-focused feedback, which provides actionable learning tasks, was relevant and helpful. This study adds to the discussion about the types of feedback that are effective for students; while we did not compare process-oriented feedback with content-oriented feedback, we found that content-oriented feedback seems to support student learning.

The results of this study show that the group of students who received the feedback had better grades and SLT participation rates than the group who did not receive feedback. Although the statistical analysis showed a high level of significance, we recognize a possible selection bias in the sample of students receiving the feedback, because students self-selected to participate in the project. With self-selected groups, it is impossible to control for all prior variables affecting learning, and ethical considerations prevented us from assigning students to experimental and control groups for a more rigorous study. Under the umbrella of design-based research, transforming teaching practices and assessing the impact of an intervention matter more than controlling all variables in a sample; while the two groups might not be strictly comparable, the group receiving feedback did consistently better in terms of achievement, SLT participation and dropout rates for two consecutive years.
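The section does not name the statistical test behind the group comparison. As an illustration only, a two-sided permutation test on the difference in mean final grades is sketched below. It makes no normality assumption, but like any test on these data it cannot remove the self-selection bias discussed above. The function name and defaults are hypothetical.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns (observed_difference, p_value), where the difference is
    mean(group_a) - mean(group_b), e.g. feedback group minus opt-out group.
    """
    if not group_a or not group_b:
        raise ValueError("both groups must be non-empty")
    rng = random.Random(seed)  # fixed seed makes the result reproducible
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    tolerance = 1e-12  # guards against float round-off when comparing differences
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # random relabelling under the null hypothesis
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed) - tolerance:
            extreme += 1
    # The +1 correction keeps the estimated p-value strictly positive.
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value
```

The same function can be applied to SLT participation rates by passing per-student participation fractions instead of grades.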

Regarding student experiences of receiving formative feedback, most responses indicate positive attitudes. Students responding to the survey recognized the relevance of the feedback both for orienting their learning and for motivation. The colloquial tone of the feedback, which included pictures of the professors, contributed to a positive motivational setting. One way to strengthen this research is to inquire further about student experiences with the APSPMS. In addition, future work should significantly improve the system, both in prediction accuracy and by adding elements to the e-mails that further prompt students to act. The survey data also show that a larger repertoire of feedback messages is necessary to reach more students.

This study describes the process of building formative feedback from a perspective that includes student experiences. In addition, we provided a methodology for assessing the feasibility of implementing such a project in an actual university setting. The challenge is to improve the system to reach more students, particularly the apparently high-performing students who also asked for feedback. To this end, more LMS data need to be collected to better understand student learning actions after receiving the feedback.

Author Contributions

Conceptualization, C.M.; methodology, C.M.; software, T.B.; validation, C.M., K.v.H., T.B. and C.V.; formal analysis, T.B. and K.v.H.; investigation, C.M., T.B., C.V. and P.S.; resources, T.B., C.V., M.B. and R.S.; data curation, T.B., C.V. and M.B.; writing—original draft preparation, C.M., T.B., Z.B. and P.S.; writing—review and editing, Z.B., C.V., K.v.H. and P.S.; supervision, M.B. and R.S.; project administration, M.B. and R.S. All authors have read and agreed to the published version of the manuscript.

Funding

This project was partially funded by The Netherlands Initiative for Education Research (NRO) through the Comenius program Senior Fellow grant and partially by the Eindhoven University of Technology through the Be the Owner of your Own Study (BOOST!) program.

Institutional Review Board Statement

This research was reviewed and approved regarding privacy aspects by the privacy team of the TU/e, and underwent the approval process of the Ethical Review Board of the TU/e. In line with the GDPR, a data protection impact assessment was conducted to ensure the research met the privacy and data protection regulations.

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The data used and analyzed in this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors thank both NWO and TU/e for funding this research.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. Feedback E-mail Formats

Feedback template for professors (Feedback E-mail 1)

Name: Welcome Message

Content: Feedback on pretest, explanation of system

Send date: Approximately April 28th

Dear {{Name}},

About these messages: We have been analyzing student data in CANVAS to develop a system that reports on your progress, anticipates challenges, and selects specific feedback messages based on your learning needs. Research shows that formative feedback helps student learning. Furthermore, former students of this course reported that the feedback was effective. Based on these data, you will receive automated feedback every two weeks.

This message has 3 sections designed to guide you in your learning:

- Details about EM2 as an area of knowledge

- Feedback based on your survey

- Evidence-based study strategies

Section 1: Details about EM2 as an area of knowledge

Message: Uncertainty about what Electromagnetism includes was a frequent and relevant challenge for students.

Complete the following points:

- Details about EMII as an area of knowledge:

- Object of study:

- The relation between EMII with Electrical Engineering:

- What is new in this course:

- Relevance of EMII for engineers:

- Prior knowledge you will need:

Section 2: Feedback based on your survey

Based on your diagnostic test and on our experience teaching this course, we strongly suggest you revisit {{name of topic with low average score in pretest + chapter of the book corresponding to that topic}}

{If at least one answer is incorrect}

If you revise and study the following concepts, you will be better prepared to understand the upcoming ones. You don’t need to study them all at once! You can gradually revisit the concepts based on each block’s topics. We provide the book section that addresses each concept, but you can also check your EMI, Calculus, or Circuits notes.

Prior knowledge from EMI that we strongly suggest you revisit in the textbook Engineering Electromagnetics by Hayt and Buck:

Example: {if cluster ID 1 average is lower than a determined threshold value}

Dot Product (Book Section 1.6), Cross Product (Section 1.7), Other Coordinate Systems (Section 1.8). Hint: Look at the definitions of the order of components in each coordinate system. Remember that the cross product is antisymmetric.
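The conditional placeholders in Section 2 ("{If at least one answer is incorrect}", "{if cluster ID 1 average is lower than a determined threshold value}") suggest a simple selection rule over clustered pretest questions. The sketch below is one possible reading of that rule, not the system's actual code. The question IDs, the cluster-to-question mapping, and the default threshold are hypothetical; only the cluster 1 text is taken from the template.

```python
from typing import Dict, List

# Review suggestion per pretest question cluster; cluster 1 mirrors the template
# example, and further clusters would be added the same way (hypothetical).
REVIEW_BLOCKS: Dict[int, str] = {
    1: ("Dot Product (Book Section 1.6), Cross Product (Section 1.7), "
        "Other Coordinate Systems (Section 1.8). Hint: Look at the definitions "
        "of the order of components in each coordinate system. Remember that "
        "the cross product is antisymmetric."),
}

INTRO = ("If you revise and study the following concepts, you will be better "
         "prepared to understand the upcoming ones.")


def select_review_blocks(answers: Dict[str, bool], clusters: Dict[int, List[str]],
                         threshold: float) -> List[str]:
    """Return review blocks for clusters whose average pretest score is below threshold."""
    selected = []
    for cluster_id, questions in sorted(clusters.items()):
        if not questions or cluster_id not in REVIEW_BLOCKS:
            continue
        average = sum(answers[q] for q in questions) / len(questions)  # True counts as 1
        if average < threshold:
            selected.append(REVIEW_BLOCKS[cluster_id])
    return selected


def render_prior_knowledge_section(answers: Dict[str, bool], clusters: Dict[int, List[str]],
                                   threshold: float = 0.5) -> str:
    """Build the conditional part of Section 2; empty if every answer is correct."""
    if all(answers.values()):
        return ""
    blocks = select_review_blocks(answers, clusters, threshold)
    return "\n\n".join([INTRO, *blocks])
```

Keeping the message text in a lookup table separate from the selection rule matches the reuse goal of the templates: professors can edit the wording without touching the logic.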

Section 3. Evidence-based study strategies

How to learn best: Many students like you reported that they learned a lot from interactions in SLTs, Discord channels, and study groups.

So, don’t get stuck: get together and do not hesitate to ask questions of your fellow students, in Q&A sessions, or on Discord.

We hope these messages help you learn Electromagnetics II. Good luck! The EM2 team.

Full Feedback E-mail Example

Dear {{first name}},

This is your feedback report from APSPMS, the Automated Prognostic Student Progress Monitoring System. The system analyzes your activities on the platform and, based on them, selects feedback messages that help guide your learning process. Please note that the system is still under heavy development and some suggestions may be erroneous. At this point in the course, you have participated in only % of SLTs. To date, this is less than we expected.

In the past, students who actively participated in SLTs did very well in this course. Last year one student commented:

“I think the discussions in the SLT were the reason I kept up with the course, and extended my understanding of the material of the week by discussing it with others. Because if we didn’t have the SLTs, it would not have been discussed that much.”

Based on our experience, if you increase your SLT participation you will do well in this course. Make sure you participate in the upcoming weeks.

Here are some problem-solving strategies that helped other students. Please keep them in mind as you revisit blocks 1 and 2 and learn blocks 3 and 4:

MAKE A SKETCH! When you have a problem, read it, make a sketch of it and start with the boundary conditions. It is best to start with the conditions and then slowly, especially when you study blocks 1 and 2 again, think about TIME.

For blocks 3 and 4, look at the transmission line in small steps. Although transmission lines look complex, breaking the phenomenon into parts can help you understand the whole.

Remember the finite speed of the wave, meaning that you should try to think about what a wave can and cannot do.

REVISE YOUR ASSUMPTIONS: The most frequent misconception students have is thinking that all signals behave the same. You may think that there is one big diagram and that whenever you apply a signal, it will behave the same in every case. That is not always true. The signal takes time to propagate, just like the waves that spread out when you throw a stone into water. One strategy to visualize this process is to draw a line on a piece of paper: it takes time to draw the line, and the drawing helps you visualize the process. Easy, right? Now, when the wave comes from another direction, you have to visualize the splits. If you visualize it or write it down in a couple of words, you will gain an understanding of the problem.

For the upcoming blocks 3 and 4, a common mistake students make is in the transition from “changing field” to “time harmonic field”. As the name implies, a time harmonic field is periodic, whereas a changing field does not necessarily return to its original state. For example, if we have a loop and we move a magnet over it, we induce a current. However, if we move the magnet back and forth, we induce a current that changes over time but is periodic.

LINK MATH WITH REAL PHENOMENA: We expect you to link theory with real things. In the first unit we talked about transmission lines, cables, and waves along cables, and showed how the math applies to this area. Review these topics to link theory and practice.

DIFFERENTIATE BETWEEN REGULAR SIGNALS AND HARMONIC FIELDS. In blocks 1 and 2, the signal is either on or off. In blocks 3 and 4 we study a continuous wave signal: time harmonic fields. Within this group of signals, the wave is not propagated but rather continuously moving.

EVERY MEASUREMENT YOU WILL DO ON HARMONIC SIGNALS USES TRANSFER AND SCATTERING MATRICES. For a transmission line to “win something” at its end, it is necessary to change the input of that line. We use a scattering matrix and other concepts we learned in weeks 1 and 2 to calculate this process (e.g., antennas, transmission lines, or electronic schematics). The concepts we use include complex transmission lines, time-varying transmission lines, and a PEC inside an E-field, including boundary conditions.

WATCH THE PHENOMENON AT WORK: Do you know how transmission along cables works? What is the difference in free space? Check out the simulators in the Mathematica notebooks, play with them and reflect on the content.

FOLLOW THE 3Ps LAW. The most common mistake many students make is forgetting to practice. Because you see the professors solving the problem on the board, you may think it is “easy peasy, I can do it”. However, going from listening to a solution to producing it yourself is a major pitfall. So, as we like to say, follow “the 3Ps”: PRACTICE, PRACTICE, PRACTICE. That is why we do SLTs: to build up that skill and see how to approach the questions.

CLARIFY NEW VOCABULARY. Some words that may be new to you are waves, reflections, transmission, bounce diagrams, Poynting vector, and power balance. Check the reader or the EMPossible web page for the meaning of these words so that you understand the problems.