Abstract

Even though planning the educational action to optimize student performance is a very complex task, teachers typically face this challenge with no external assistance. Previous experience is, in most cases, the main driving force in curriculum design. This procedure commonly overlooks the students’ perception and integrates their feedback only weakly and in a non-systematic way. Furthermore, transverse competences are typically omitted in this procedure. This work suggests the use of a predictive tool that determines the optimal application time of different methodological instruments. The suggested method can be used for any number of scenarios of promoted competences. The results can be regarded as a guide to modify the course structure but, more importantly, they offer valuable information to better understand what is occurring in the teaching-learning process, detect anomalies in the subject, and avoid the exclusion of students. The predictive scheme simultaneously considers the teacher’s perspective, the students’ feedback, and the previous scores in a systematic manner. Therefore, the results provide a broader picture of the educational process. The proposal is assessed in a course on Electrical Machines at the University of Malaga during the academic year 2021–2022.

1. Introduction

The design of the teaching activity at the university level is undoubtedly a complex task. First of all, the content must be taught in such a manner that it becomes comprehensible to students, so as to maximize the learning achievements. For this purpose, there is a multitude of methodologies and activities that could be used, but determining the most effective approach is far from trivial [1]. Secondly, the teaching activity involves human beings; hence aspects such as students’ perception, motivation, and emotions are of paramount importance to obtain optimal performance [2]. Although the focus at the university level is commonly placed on the content (especially in technical degrees), the relevant role of those subjective aspects and the importance of promoting transverse competences are currently well recognized [3,4]. Thirdly, the proper design of the educational approach is a dynamic task that changes over space (in different locations) and evolves in time (across different generations). These constraints add further complexity since the best approach here and now might not be effective in the future (at the same place) or in other locations (at the same time).

The pursuit of pedagogical optimality is, therefore, a Herculean task that should ideally be aided by some external support [5]. Unfortunately, teachers do not usually have lifelong guidance to obtain valuable information and assistance. Although some investigations have explored the possibility of aiding the curriculum design using external assistance (e.g., concept mapping techniques in [6]), the course structure is typically designed using past experience as the only source of information [7]. Such a procedure results in an open-loop design or, in the best case, a closed-loop design with low feedback. There is no doubt that the teachers’ know-how that stems from their practice is a valuable source of information, but the course design can be enriched with additional information and the analysis of data-driven indices that might guide the teaching activity. In fact, different works have highlighted the role of different items when a university course is designed. Taking into account the information provided in [8,9,10,11,12,13,14,15,16,17,18,19,20,21], the following list of requirements can be proposed:

- R1. Use the experience and opinion of the teacher and/or experts in the field.

- R2. Consider the performance results of the students (i.e., what they effectively learned in the past).

- R3. Take into account the students’ expectations (i.e., what they think they will learn in the course).

- R4. Be a continuous process that dynamically searches for optimality, rather than a static activity that is done once and for all.

- R5. Avoid the exclusion of students (i.e., when the results show a high variance).

- R6. Promote the students’ motivation and enthusiasm, so as to foster effective and lifelong learning.

- R7. Aim at providing a high degree of excellence in the acquisition of technical competences.

- R8. Facilitate the development of transverse competences with a key role in the professional career.

Requirement R1 is usually fulfilled, and it is, in fact, the main traditional driving force while designing the structure of university courses [7,8,9,10]. Requirement R2 is considered in many cases in an informal manner since the teacher knows what occurred in the last year and has an idea about the past performance (in exams, tasks, or any other activity). Nevertheless, this feedback is typically used by the teacher without detailed data processing that might reveal some key aspects of the students’ performance. By contrast, works such as [12] propose to consider the student performance as a fundamental part of the design of activities. Requirement R3 is omitted in most cases, and, in the best case, it is indirectly taken into consideration through the feedback from conversations with the students. However, the expectations play a key role in the learning process since they impact motivation and ultimately decide the predisposition of the learners [13,14,22]. The fulfillment of requirement R4 highly depends on the teachers’ professionalism and attitude towards the subject. However, this issue should be a must in the course design since the teaching activity pursues a moving target [9,10]. In many cases, the lack of renewal in the subject structure is caused by the lack of feedback. In other words, teachers think that everything remains the same because they are mainly relying on R1, which typically presents a high inertia after some years of experience. An external and systematic source of information should help in this regard. Requirement R5 is rarely considered, either in a qualitative or quantitative manner. It is, however, important when the class group is not homogeneous [15,16], as is the case in this study (see Section 2). When students have very different levels of motivation and skills, some activities can be adequate for a part of the group [15] but may exclude other students who cannot follow the subject.
If the exclusion is due to a poor previous background or a bad attitude from the student, the teacher might still consider those activities as valid, but it is worth evaluating this information in any case. Requirement R6 is always within the aim of teachers [17], although the procedures to awaken the interest of students are sometimes mysterious. This requirement is of paramount importance to promote lifelong learning [7]. In this regard, it is advisable to obtain information about the actual enthusiasm of students for the different course activities and methodologies [17,23,24]. Requirement R7 has been traditionally regarded as the main (even the only) aspect of technical degrees. It groups, however, skills of a very different nature, for example, theoretical knowledge, calculation abilities, data analysis, or problem-solving skills, to name a few. The selected activities and timing will promote each of these technical aspects to a higher or lower degree [18,19]. Finally, requirement R8 has growing relevance for professional development [19], and it is highly appreciated in industry [2,21,25]. Teamwork abilities, creativity, leadership, or communication skills are, in most cases, more important for companies than high-performance technical capabilities [19,20]. For this reason, transverse competences should definitely be promoted together with classical technical skills. It is worth noting, however, that fulfilling requirements R6, R7, and R8 at the same time is difficult, and consequently, a proper tradeoff must be sought in accordance with the target learning approach [7].

The consideration of requirements R1 to R8 in a systematic manner to obtain a simple tool for the course design is not straightforward, but fortunately, science provides a wide variety of mathematical approaches that can be used for this purpose. Some of these tools, commonly used to solve engineering problems, have been exported to the educational area. In most cases, these algorithmic approaches estimate relevant information to improve some educational aspects [26,27,28,29,30,31,32,33,34,35,36,37,38,39]. This is the case in [26], where artificial neural networks (ANN) are used to forecast which students will enter a university by considering their academic merits, background, and admission criteria. The proposals detailed in [27,28] also use ANNs together with other data mining techniques to determine the instructor and student performance, respectively. In the same trend, [29] studies how students perform in virtual learning environments with online teaching and [30] quantifies the academic performance using self-regulated learning indicators and online events engagement. In spite of the successful utilization of algorithmic approaches in [26,27,28,29,30], it is worth noting that those predictions do not directly assist teachers in their challenging task of designing a proper course structure. The same applies to studies that analyze the students’ behaviors and achievements [31,32] or identify the students at risk [34,35,36,37,38,39].

A more practical approach to guide the teacher activity is found in [7], where model predictive control (MPC) is used to determine the times of application of some predefined teaching activities. In this proposal, MPC is responsible for determining the optimum times of application (inputs) to promote some target competences (outputs). Consequently, the aforementioned predictive tool guides the teachers’ practice by suggesting to the teacher how to adjust the timing in a certain course. Although MPC is commonly used in the engineering field (e.g., to regulate electric machines [40,41,42,43]), it can also be a promising tool in the educational context. Nevertheless, some limitations can be identified in the preceding investigation [7]:

- L1. The activities/methodologies are predefined and fixed in advance.

- L2. The method fully relies on average values; hence the insight into the performance variance is lost.

- L3. A single prediction model is used with no comparative results.

Limitation L1 prevents the predictive tool from satisfying requirement R4. Since the activities are defined a priori and remain fixed, the evolution of the subject is hampered to some extent. Limitation L2, in turn, diminishes the capability of the assistant tool to provide a complete insight into the subject and may lead to non-inclusive teaching procedures. Finally, limitation L3 restricts the method to guide the teacher from a single perspective.

This work extends the proposal from [7] to overcome the aforesaid limitations. For this purpose, the MPC strategy is designed using different settings that enrich the insight that is provided to the user. While some settings give valuable information about the relative impact of each pedagogical methodology on the desired competences (defined by the user as a configurable scenario), others are focused on the calculation of the proper timings from an inclusive perspective (i.e., penalizing the cases when a successful solution is obtained with a highly unequal performance from different types of students). Although the capability to determine the times of application and the associated insight was somewhat shown in [7], the method was unable to detect differences between the students’ performance. The analysis of the variance provides the teacher with relevant clues about the profile of the classroom and eases the detection of students that might be at risk of exclusion. A final setting also suggests to the teacher which methodologies should remain, and which might be omitted in future courses. This is relevant to make the subject dynamic and adaptable (complying with R4) since the suggested tool can be used over the years, acting as a kind of natural selection of the most successful activities. Those activities that poorly contribute to promoting the target competences can be extinguished, and other new activities might come into play. This procedure ensures lifelong guidance with the capability to evolve and adapt to eventual changes in the students’ profile, subject content, or social context. Furthermore, and this is a key feature of the suggested tool, the information that can be obtained from different scenarios brings valuable insight into the educational process.
Since this information is automatic and systematic, it becomes an easy manner for the teacher to better understand the underlying issues that are occurring in his/her classroom, such as the exclusion of some students from specific activities. To be more concrete, it can be said that the study has two main aims: (a) to determine the times of application of the methodologies according to a certain competences profile (eventually omitting some of them) and (b) to provide the teacher with valuable information about the educational process (please see the discussion in Section 4 for further details). Contribution (b) can even be regarded as more important than (a), since the objective is not to create an algorithm that automatically designs the course (bypassing the teacher), but to generate a tool that provides significant information and assists the teacher in his/her decisions about the course structure and timing.

All the results from the different settings are just informative, and it is the teacher who ultimately decides the actions that will be taken to improve the course. However, the possibility to explore different scenarios and settings allows one to obtain valuable information that guides the course design, considering the students’ opinion, their performance, the differences among students, the competences that should be promoted, and the extent to which the activities should be adapted to the vast majority of students. A powerful characteristic of the tool is that it can be run for as many scenarios as desired, hence opening the possibility to set certain scenarios and evaluate the results that would be obtained. The results of this study will focus on some specific scenarios to highlight the importance of using different settings within the tool and to exemplify how the output of the analysis can reveal some previously hidden aspects of the course (identifying, for example, some activities that put some students at risk of exclusion in spite of the satisfactory average scores).

The paper is structured as follows. Section 2 describes the course, as well as the methodologies, competences, tasks, and questionnaires defined. Next, Section 3 describes in detail the proposed predictive algorithm, whereas Section 4 evaluates the performance of the developed predictive tool. Conclusions are finally drawn in the last section.

2. Description of the Course: Methodologies, Competences, Tasks, and Questionnaires

The proposed predictive guiding algorithm can be generally applied to any subject containing different methodologies and various competences. To illustrate the capability of the suggested tool, this paper includes the case study of an electric machines course with eight methodologies and six target competences. The proposal includes quantitative data from the scores in sixteen tasks and qualitative information from voluntary and anonymous questionnaires to students. Without loss of generality, this section provides information on the context of this case study implemented at the University of Malaga.

2.1. Electric Machines Course

The proposed predictive tool has been implemented in a 6 ECTS course on electric machines at the University of Malaga. The name of the subject is “Electric Machines 2” (abbreviated ME2 in what follows), and it is taught in the second semester of the third year to students coming from three different degrees: Electric Engineering (EE), Electric and Mechanic Engineering (EME), and Electric and Electronic Engineering (EEE). Even though the content is the same, the profile of the students is significantly different since the necessary scores to enter each degree vary considerably. For example, the cut-off marks in EE, EME, and EEE are 5, 10.305, and 5 out of 14, respectively, in the academic year 2021–2022. Of course, the cut-off mark is not itself a predictor of the students’ performance, but it can be regarded as an indicator that the class will be heterogeneous. In fact, the final scores of the subject ME2 in the academic year 2021–2022 show that the average scores of EE and EEE students are 46% and 54.5% lower, respectively, than that of EME students. This information is relevant to understand that the class will not be homogeneous and, consequently, care should be taken in the design of the course to avoid excluding a significant percentage of students. Section 3 and Section 4 will address this issue, aiming to diminish the risk of exclusion in the selection of the activities and the associated timing.

The content of the course is divided into two clear parts: in the first part of the semester, the subject deals with the DC machine, whereas the second part of the course explains the operation of synchronous AC machines. The subject finds a precedent in a course named “Electric Machines 1” (ME1) that is taught in the first semester, that is, immediately before ME2. The traditional teaching procedure consists of theoretical explanations in a master class using presentations and/or chalk and board, resolution of problems by the teacher in front of the whole audience, and lab work in reduced groups following highly guided practical tasks (i.e., with a guide that indicates all steps that should be followed by the students). This is the methodology that is still used in ME1 and was used in ME2 for many years. Unfortunately, this procedure is mainly teacher-centered, and the activity of the students in the class is rather low, hence limiting the possibility of the students acquiring transverse competences (teamwork, oral expression, etc.). In fact, even the technical abilities were compromised since the students learned how to pass the exam instead of being motivated to achieve lifelong learning. For this reason, the structure of the subject was modified, and it is still adjusted every year, aiming to promote a wide variety of competences by using a proper combination of methodologies in specifically designed tasks with adequate timing. Each of these aspects is detailed next.

2.2. Methodologies

The course is designed with a wide variety of methodologies that combine standard lectures with student-centered activities that promote active learning within the class. Specifically, eight methodologies have been implemented in the ME2 subject during the course 2020–2021:

- M1: Master class. This methodology corresponds to the classical lectures where the teacher explains the theory of electric machines and solves problems for a wide audience. It is typically a quasi-unidirectional activity since the number of questions from the students is limited. Even when the lecturer promotes participation, with polls, for example, the role of the students remains somewhat passive. However, it is a powerful approach to provide valuable information to many students at the same time, and, if properly done, it can also be highly motivating (as happens, for example, with the popular TED talks).

- M2 and M3: Theoretical and practical videos, respectively. These methodologies are similar to M1, but the master class is recorded; hence the visualization is asynchronous. In this case, the communication is fully unidirectional, with no possibility of getting any feedback. On the other hand, it offers the possibility to stop, rewind or move forward the lecture, apart from visualizing it as many times as the student needs. Informal interviews confirm that students highly appreciate this flexibility.

- M4: Problem design. In this activity, the students must design and solve a problem. To this end, they need to set a context, quantify the data, and include an explained solution. In general, it is a demanding activity for the students because they are used to solving problems, but not to creating them. M4 requires describing an application and looking for coherent data that provide sensible results. In spite of the difficulty, this activity promotes creativity, and it is highly formative.

- M5: Work in groups. The students must create, in groups of 4 to 5 students, a document and a presentation on a certain topic. The document is uploaded to the Virtual Campus (Moodle system at UMA), and the presentation must be delivered orally in the classroom, obtaining some feedback from the teacher and other students. The topic is typically collateral to electric machines (e.g., application of synchronous machines in electric vehicles) so that students can broaden their knowledge and learn how to search for valuable information. Students are specifically asked to be technically sound and to distinguish between the different sources of information (primary, secondary). The teacher provides some brief knowledge about indexed journals, conferences, or technical reports, to name a few.

- M6: Partial exams. This activity follows the classical structure of an exam, but it includes less content since it is done within the semester and not just at the end of the subject.

- M7: Online questions. This methodology was designed after COVID-19 burst into our lives in 2019, and in-person lectures were not allowed. It is a set of questions that are included in the Virtual Campus for the students to have some self-evaluation and be able to evaluate their skills in the subject. The methodology was kept when face-to-face teaching was allowed since it proved to be well accepted by students and seemed to be a powerful tool to promote their self-regulation capabilities.

- M8: Questions in couples. This activity is brief, and it is carried out within the classroom several times during the course. Students are asked to sit in couples with the only condition that they cannot sit twice with the same person. This condition is set to force students to collaborate with colleagues who have different profiles. Some questions and brief problems are given to each couple, and they have to discuss and jointly solve the quiz. The atmosphere during this activity is very enthusiastic, and much discussion can be observed in the classroom, which is the main idea of M8. After the given time, the teacher, with the help of the students, solves each item of the quiz, and students cross-correct their colleagues’ answers; hence the marks can be obtained immediately after the class.

2.3. Competences

The predictive approach that will be detailed in Section 3 and assessed in Section 4 aims at determining the optimal application times for the aforementioned methodologies. However, the term optimal is relative, since it depends on which competences are to be promoted. For this reason, it is necessary to define some target competences that later on will be weighted for each predefined scenario. The competences that have been selected to define the target profile are:

- C1: Acquisition of theoretical concepts. This competence refers to the extent to which the students have understood the main concepts of the subject. For example, the understanding of the limitations of the different modeling approaches in the study of the synchronous machine will fall into this category.

- C2: Resolution of practical issues. The capability to perform accurate calculations, make good-quality scripts, build machine prototypes or take measurements in the laboratory are some examples of the skills that can be associated with C2.

- C3: Creativity. This is typically regarded as a transverse competence, but it is gaining importance in the new context of Industry 4.0. It is worth noting that the standard teaching procedure based on closed-form problems hardly promotes creativity since students are asked to learn well-established repetitive calculation methods. For this reason, the development of this competence requires some non-standard methodologies that give some more flexibility to students.

- C4: Teamwork capability. As in the case of C3, teamwork capability is one of the most appreciated transverse competences in the industrial context since the engineering work is currently performed in teams, especially in multidisciplinary fields where electric machines are used (e.g., microgrids, wind energy systems, electric vehicles, to name a few) [44].

- C5: Motivation. This item has paramount importance in promoting self-efficacy and self-regulation, which in turn have a well-known correlation with the students’ performance. A high motivation boosts the development of creativity or the resolution of practical issues since the students are more willing to accept new challenges, explore new solutions, and make an effort to understand the main physical phenomena underlying the electric machines’ operation.

- C6: Satisfaction. Similarly to the case of C5, satisfaction also serves as a powerful source of intrinsic willingness to learn and make the necessary efforts to acquire long-lasting abilities. With a low degree of satisfaction, the students are tempted to make a minimum effort to pass the subject even when the competences that have been developed are weak and ephemeral.

Section 4 explores three scenarios with a very diverse promotion of the competences C1 to C6, each one providing different timings for the methodologies M1 to M8.

2.4. Tasks and Questionnaires

To promote the aforementioned competences, a total of sixteen tasks have been designed for the course of ME2. They are listed in Table 1. Each of the sixteen tasks is in turn related to the methodologies shown in Section 2.2, and consequently, the scores that the students obtain in the different tasks can be converted into scores for the methodologies M1 to M8.
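The conversion from task scores to methodology scores can be illustrated with a short sketch. It is not the procedure used in the study: the task labels, the task-to-methodology assignment `task_to_methods`, the 0 to 10 scale, and the plain averaging rule are all hypothetical and only show the idea of aggregating the tasks linked to each methodology.

```python
from collections import defaultdict
from statistics import mean


def methodology_scores(task_scores, task_to_methods):
    """Average the class-mean score of every task linked to each methodology.

    task_scores: dict, task label -> class-mean score (assumed 0-10 scale).
    task_to_methods: dict, task label -> list of methodology labels
                     (hypothetical assignment, not taken from Table 1).
    """
    collected = defaultdict(list)
    for task, score in task_scores.items():
        for method in task_to_methods.get(task, []):
            collected[method].append(score)
    return {method: mean(values) for method, values in collected.items()}


# Hypothetical data: three tasks, two of them linked to the same methodology.
task_scores = {"T1": 6.0, "T2": 8.0, "T3": 5.0}
task_to_methods = {"T1": ["M4"], "T2": ["M4", "M5"], "T3": ["M8"]}
scores = methodology_scores(task_scores, task_to_methods)
```

A task may contribute to several methodologies, which is why the assignment maps each task to a list rather than to a single label.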

Table 1. Defined tasks.

The marks from the different tasks provide the score-based term that will be introduced in Section 3. Apart from these scores, the students are asked to anonymously answer a questionnaire that includes their opinion on how each methodology actually promotes the different competences [7]. The results from the voluntary questionnaires provide the perception-based term that will be used in Section 3. In this manner, the predictive approach will consider both the quantitative values from the scores and the qualitative evaluation from the students’ opinion. A further description of the proposed algorithm is detailed next.

3. Description of the Proposed Algorithm

3.1. Fundamentals of the Proposed Predictive Algorithm

The aim of this predictive algorithm is to provide the instructor with the optimal selection of certain pedagogical methodologies and their corresponding optimal timing to ease the learners’ acquisition of a predefined pattern of competences.

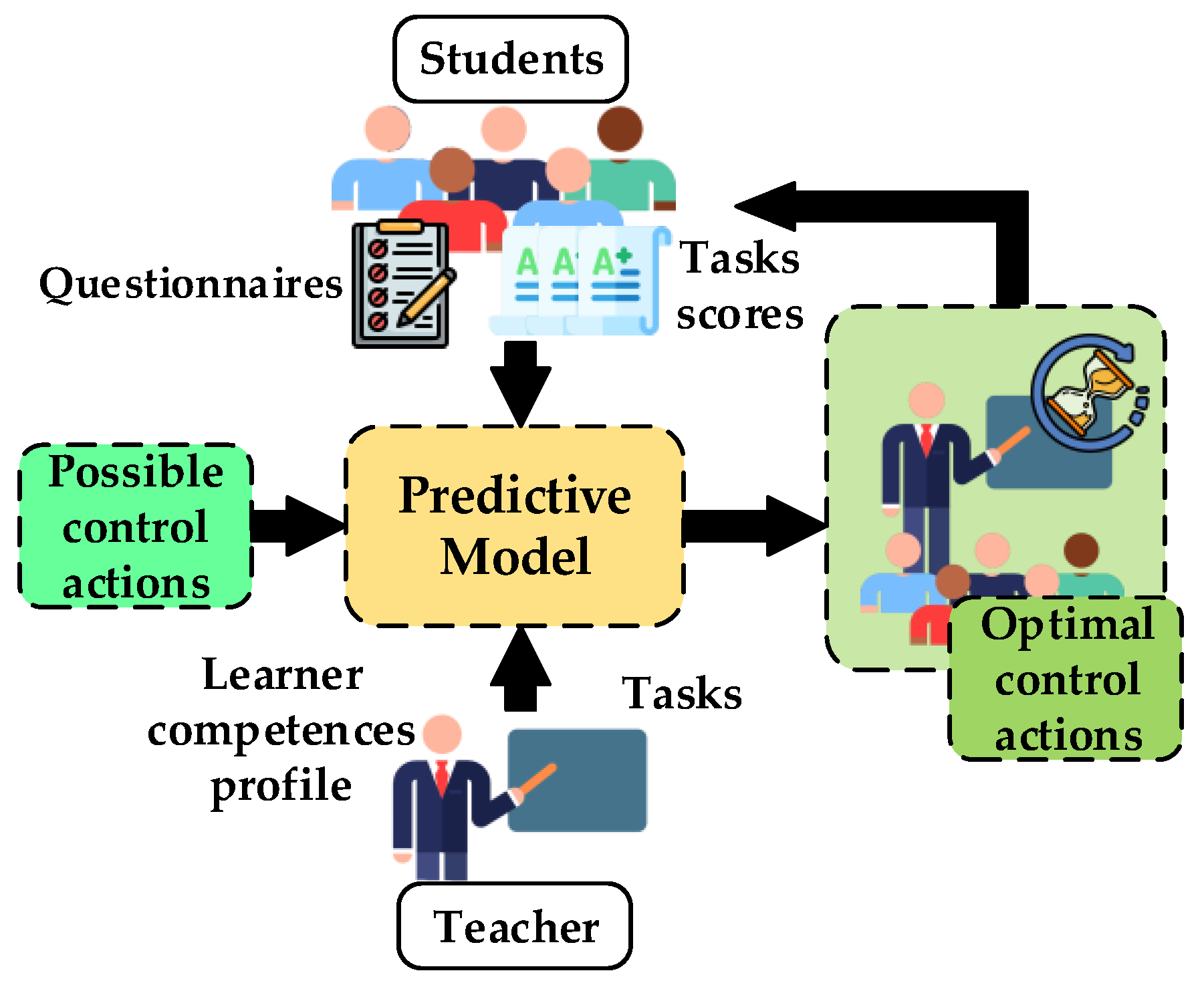

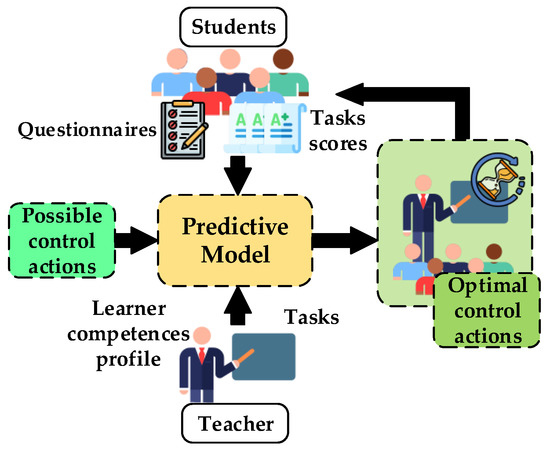

The proposed scheme is based on the model predictive regulation strategies that are widely employed in several fields of knowledge and research [7,40,41,42,43]. The operating basis of the MPC scheme consists in making use of a system model to forecast the effect of the available control actions on the predicted control variables, as illustrated in Figure 1. The aforementioned control actions are composed of some pedagogical methodologies and their corresponding times of application. To assess the impact of the control actions, a cost function is employed. This cost function allows incorporating the control system goals in a simple manner. In addition, certain restrictions on the times of application are included in the proposed predictive algorithm (e.g., in scenarios S1, S2, and S3 detailed in Section 4).

Figure 1. Basic diagram of a model predictive control approach, in which the different inputs of the proposed scheme (questionnaires, scores, and tasks) have been identified.

The proposed algorithm requires the implementation of a predictive model (PM) that adequately reproduces the performance of the studied system. This PM permits the evaluation of each control action using a cost function that takes into account the rest of the control restrictions and allows observing the change in the control variables, i.e., the learner competences.
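The operating principle described above (predict the effect of every available control action, rate it with a cost function, and keep the best one) can be sketched generically. The function names, the toy model, and the choice of minimizing the cost are assumptions made for illustration; they are not the model or the cost function of this work.

```python
def select_best_action(candidates, predict, cost):
    """Return the candidate whose predicted outcome has the lowest cost."""
    best_action, best_cost = None, float("inf")
    for action in candidates:
        predicted = predict(action)  # predictive model: action -> outcome
        value = cost(predicted)      # cost function: outcome -> scalar
        if value < best_cost:
            best_action, best_cost = action, value
    return best_action, best_cost


# Toy example with hypothetical numbers: two methodologies, action = (t1, t2).
candidates = [(0.2, 0.8), (0.5, 0.5), (0.8, 0.2)]


def predict(times):
    # Assumed linear model of a single competence level.
    return 0.4 * times[0] + 0.9 * times[1]


def cost(level):
    # Squared distance to an assumed target level of 1.0.
    return (1.0 - level) ** 2


best, value = select_best_action(candidates, predict, cost)
```

Because the set of candidate actions is finite, an exhaustive evaluation such as this one is enough for small problems; the sketch says nothing about how the candidates are generated.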

3.2. Available Control Actions

In this case, the available control actions are formed by the available methodological instruments and their corresponding possible application times. The application of different control actions leads to diverse system performances; in this case, it changes the acquisition of the student competences.

The set of available control actions (1) is composed of the aforementioned methodological tools and their corresponding times of application. These application times are directly related to the acquisition of certain competences via the selected methodological instruments, and varying them allows this impact to be evaluated.

The variation of this timing allows obtaining all the available control actions. Nevertheless, this variation is subject to some timing constraints (2) that can be imposed by the teacher/designer or by the specific academic regulations. It is worth noting that the total course time is included as a restriction since the application of the optimal selection of methodologies runs throughout the total course duration, and the proposed system model has been implemented using per-unit values.

Regarding those time constraints, it must be noticed that it is possible to omit the minimum values for the application times, hence incorporating the possible discarding of some methodologies. However, a minimum application time is always imposed in M1, since lecturing could be minimized but not fully omitted. An adequate teaching-learning process cannot occur without a minimum theoretical content and without the instructor’s guidance, provided in the master class methodology. This minimum value for master class methodology is fixed to of the course duration. The inherent flexibility of the model predictive control schemes permits an easy inclusion of these time of application constraints (2).
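As a rough illustration of how such timing constraints shape the set of control actions, the sketch below enumerates per-unit timing vectors on a coarse grid. The grid resolution, the equality form of the total-time restriction, and the numerical lower bound used in the example are assumptions for illustration only; in particular, the minimum shown for the first methodology is a placeholder, not the value used in this work.

```python
from itertools import product


def feasible_timings(n_methods, step_count, min_steps):
    """Enumerate per-unit timing vectors on a grid.

    n_methods: number of methodologies.
    step_count: grid resolution; every time is k / step_count, k integer.
    min_steps: dict, methodology index -> minimum number of grid steps
               (hypothetical lower bounds).
    Only vectors that add up to the whole course (1.0 p.u.) are kept.
    """
    result = []
    for steps in product(range(step_count + 1), repeat=n_methods):
        if sum(steps) != step_count:
            continue  # the total course time must equal 1 p.u.
        if any(steps[i] < low for i, low in min_steps.items()):
            continue  # a minimum application time is violated
        result.append(tuple(k / step_count for k in steps))
    return result


# Three methodologies, 0.25 p.u. grid, placeholder minimum of 0.25 p.u.
# for the first one; the other two may be discarded (time equal to zero).
timings = feasible_timings(n_methods=3, step_count=4, min_steps={0: 1})
```

The number of grid points grows quickly with the number of methodologies and the resolution, so a plain enumeration like this one is only practical for coarse grids.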

3.3. Description of the Predictive Model

The proposed predictive model takes into account all the partakers and their interactions in the teaching-learning process. Therefore, the students’ opinion, their achieved scores in certain designed tasks, and the teachers’ experience are jointly considered to compose the PM.

The first term in the proposed PM is related to the students’ perception, and it is arranged in . This information is obtained using evaluation questionnaires to express their opinion about the influence of certain methodological actions (i) on each of the selected competences (j). The second term collects the information acquired from the reached scores in the selected tasks by the instructor. Sixteen specifically designed tasks (see Table 1) allow evaluation of the impact of the methodological tools on the competences that compose the desired learner profile. These data are processed and structured in . The purpose of these sixteen activities is twofold, on one side, they promote the achievement of the chosen competences and, on the other hand, they make it possible to quantify the performance. The design of these tasks by the instructor also requires taking into account their relationship with the competences and the selected methodological instruments . Thus, the components of are obtained using the average value of each evaluated testing task and the corresponding value in and as follows:

where n is the number of testing tasks designed by the teacher. It should be noticed that all the components of these term arrangements are composed of average values.

Nonetheless, the proposed work aims at enhancing the PM, avoiding the variability included in the quantitative information collected both in the term that concerns the learners’ opinion () and in the term that incorporates the students’ scores (). This issue is addressed in the proposal since, in addition to the acquisition of the selected competences, it is desirable to obtain homogeneous scores to avoid the exclusion of some learners. To this end, the standard deviation (4a,b) is included in each term of the PM.

where s is the number of students. Thereby, the average standard deviation (), determined using the standard deviation of the achieved marks in the designed tasks (), and the standard deviation corresponding to the students’ opinion () are implemented in the PM as follows:
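To illustrate numerically why a dispersion term favours homogeneous results, the following Python sketch computes the average and the standard deviation of the marks of one task over the students and combines them. The combination rule (average minus a penalty times the standard deviation), the use of the population standard deviation, the `penalty` parameter, and the sample marks are all assumptions made for illustration; they are not taken from (4) or (5).

```python
from statistics import mean, pstdev


def mean_and_spread(marks_by_student):
    """Return (average, population standard deviation) of one task's marks."""
    return mean(marks_by_student), pstdev(marks_by_student)


def penalized_term(marks_by_student, penalty=1.0):
    """Hypothetical dispersion-aware term: average minus penalty * std.

    This is an illustrative assumption, not the expression used in the PM.
    """
    avg, std = mean_and_spread(marks_by_student)
    return avg - penalty * std


# Two hypothetical tasks with the same average mark (7.0) but different spread.
homogeneous = [7.0, 7.0, 7.0, 7.0]
dispersed = [10.0, 10.0, 4.0, 4.0]

print(penalized_term(homogeneous))  # 7.0 (no dispersion, no penalty)
print(penalized_term(dispersed))    # 4.0 (standard deviation of 3.0 is subtracted)
```

Under these assumptions, two tasks with identical averages are no longer equivalent: the task whose marks are spread between strong and weak students contributes less than the task in which all students perform alike.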

3.4. Optimal Selection of the Methodological Actions

3.4.1. Cost-Function Implementation

In the design of a methodological action, it is necessary to determine both the content and the associated timing. The content design is out of the control of the teacher since it is typically predefined within the degree guidelines. On the contrary, the selection of the most adequate methodologies and their timing are available degrees of freedom that can be optimized. For a certain time setting (i.e., fixed values of to ), individual cost functions can be defined as follows:

Each one of these cost functions evaluates the impact of the methodological actions individually in the promotion of the different competences . The addition of these individual cost functions permits providing the total set of competences developed by the learner. Nonetheless, it is possible to promote the relative importance of acquiring a certain competence in relation to the others that can be weighted with some coefficient as follows:

The inclusion of these weighting factors in the total cost function gives priority to the competences included in the student competences profile. This, in turn, assists the teacher in the course design according to the specific needs (profile) that are previously set. For the sake of example, let us consider a scenario such as , , , , , and . This specific profile assigns paramount importance to the acquisition of theoretical concepts (C1) and targets fostering the creativity (C3) in a group of teamworking (C4) learners. The results shown in Section 4 will further explore different scenarios and discuss the optimal methodologies according to the proposed predictive algorithm.
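The weighting of the individual cost functions can be sketched in a few lines of Python. The competence labels, the numerical values of the individual costs, and the function name `total_cost` are hypothetical; only the idea of a weighted sum of individual cost functions and the weights of Test 3 (2, 2, and 6) come from the text.

```python
def total_cost(individual_costs, weights):
    """Weighted sum of the individual cost functions.

    Both arguments are dicts keyed by a competence label; a competence
    without an explicit weight is treated as having weight zero.
    """
    return sum(weights.get(name, 0) * cost for name, cost in individual_costs.items())


# Hypothetical individual costs for one fixed time setting.
costs = {"theory": 0.4, "problems": 0.5, "creativity": 0.9, "teamwork": 0.2}

# Weights of Test 3: theory 2, practical problems 2, teamwork 6.
weights_test3 = {"theory": 2, "problems": 2, "teamwork": 6}

print(total_cost(costs, weights_test3))  # 2*0.4 + 2*0.5 + 6*0.2, approximately 3.0
```

Because creativity has no weight in this profile, its individual cost does not influence the total, which is how a competence profile steers the optimization towards the selected competences.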

3.4.2. Optimization Process and Optimal Timing

The optimization algorithm follows an iterative process where the times of application of the different methodologies are varied to sweep the whole range of control actions in an exhaustive search. Each control action (, ) is the input for the predictive model from (5) that provides a certain cost function . The times of application that provide the minimum value of define the optimal solution for a certain scenario (i.e., for some specific values of the weighting factors ). These optimum times provide valuable information to the teacher to shape the course structure.

The proposed algorithm opens the possibility for the optimization process to set a null value of application time to certain methodological instruments. In this case, these methodological tools could eventually be eliminated. This fact suggests that the inclusion of certain methodological instruments does not adequately contribute to the acquisition of the predefined competences profile.
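The exhaustive search described above can be sketched as follows. This is a minimal illustration under several assumptions that are not stated in the text: times are expressed as integer percentages of the course duration, they are swept on a regular grid, they must add up to the whole course, and the cost is the negative of a linear predicted gain. The gains, bounds, and names (`exhaustive_search`, `example_cost`) are hypothetical.

```python
from itertools import product


def exhaustive_search(cost, bounds, total, step):
    """Sweep all grid combinations of application times and keep the cheapest.

    bounds: list of (minimum, maximum) per methodology, in percent.
    total:  required sum of all application times (assumed constraint).
    step:   grid resolution, in percent.
    """
    grids = [range(lo, hi + 1, step) for lo, hi in bounds]
    best_times, best_cost = None, float("inf")
    for times in product(*grids):
        if sum(times) != total:  # discard settings that do not fill the course
            continue
        value = cost(times)
        if value < best_cost:
            best_times, best_cost = times, value
    return best_times, best_cost


# Hypothetical predicted gain per unit of time for three methodologies.
gains = (3, 1, 2)


def example_cost(times):
    """Assumed cost: negative linear gain, so that minimizing maximizes the gain."""
    return -sum(g * t for g, t in zip(gains, times))


# The first methodology keeps a minimum time; the other two may drop to zero.
bounds = [(10, 60), (0, 40), (0, 40)]
best_times, best_cost = exhaustive_search(example_cost, bounds, total=100, step=10)
print(best_times, best_cost)  # (60, 0, 40) -260
```

In this toy case the second methodology receives a null time of application, which mirrors the situation in which a methodological instrument could be eliminated. The number of combinations grows exponentially with the number of methodologies, so the grid step is the main lever to keep the sweep tractable.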

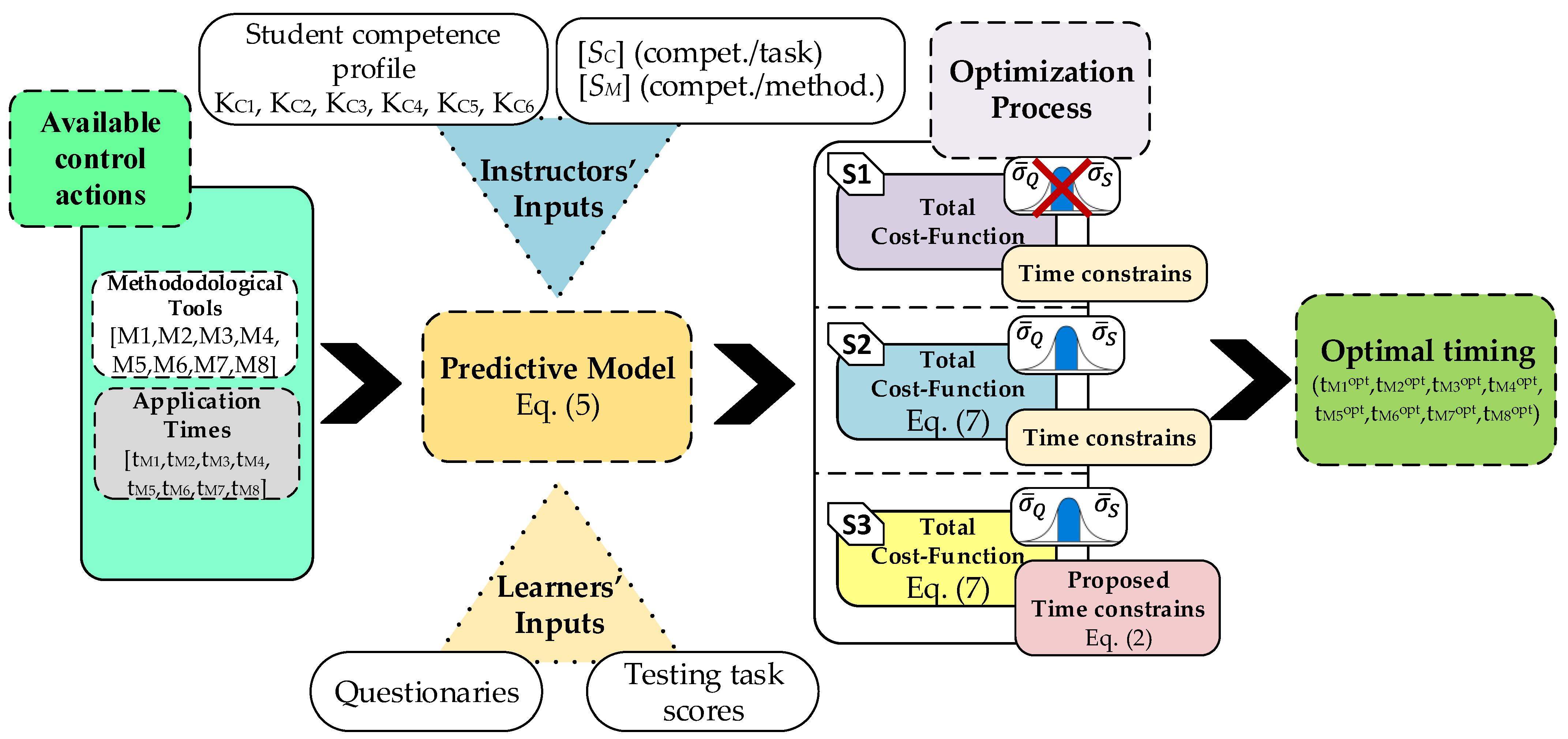

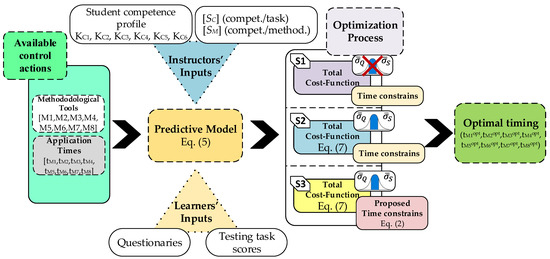

To facilitate the comparison, three optimization approaches (S1, S2, and S3) are included in the scheme shown in Figure 2. These settings allow analyzing the modeled system outputs when certain restrictions and/or prediction model variations are included. The full description of settings S1, S2, and S3 is detailed in the next section.

Figure 2. Proposed algorithm scheme, including three possible settings, where [7] has been employed to design settings S1 and S2. The scheme shows the control restrictions taken into account in the different settings, from the point of view of both the predictive model and the timing constraints.

4. Evaluation of the Proposed Predictive Scheme

Results

The predictive guidance, based on the predictive algorithm detailed in Section 3, is assessed hereafter. To this end, different scenarios (i.e., competences profile) will be considered, including various weighting factors for each competence (see Section 2.3). In all the different scenarios under study, the predictive algorithm will provide the times of application of each methodology (see Section 2.2). Results will be given for three different settings:

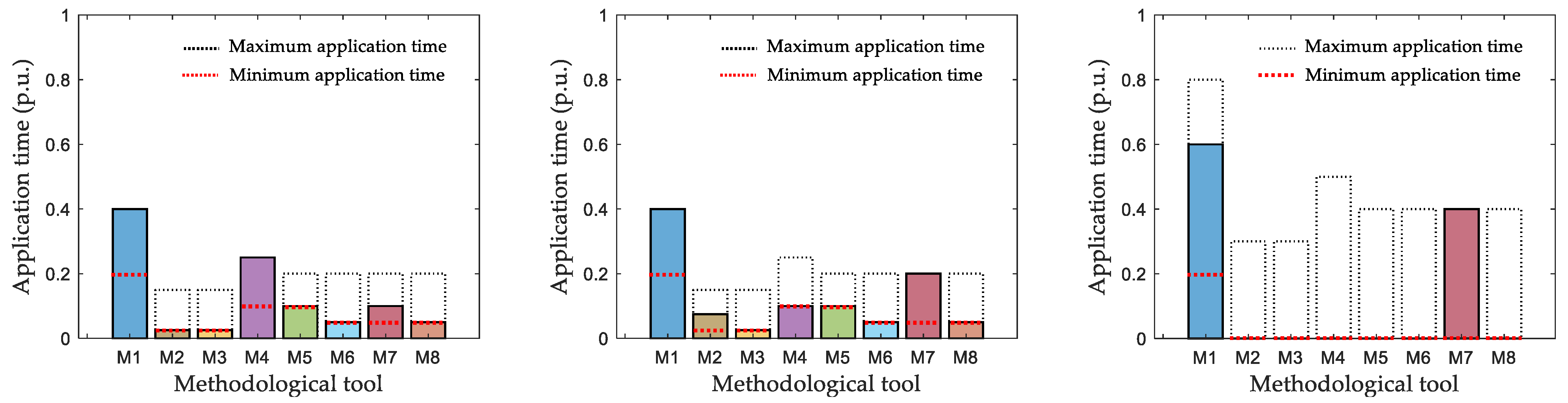

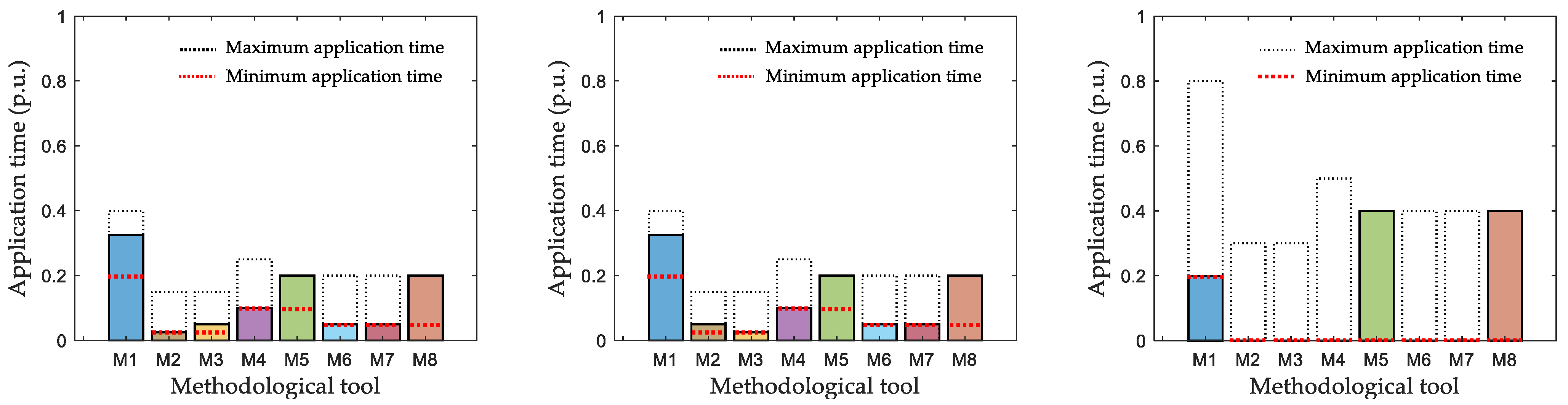

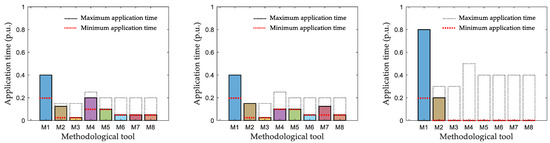

- Setting S1: The cost function will only evaluate the students’ scores and their evaluation through a questionnaire, only using the average value of both inputs. In S1, all methodologies M1 to M8 have a minimum and maximum value (these time constraints are indicated on the left side of Figure 3, Figure 4 and Figure 5 using red and black dotted lines, respectively). This setting is exactly the same as the one used in [7].

Figure 3. Results of Test 1 where the weighting factor related to theoretical concepts is set to 10. (left) setting S1, (middle) setting S2, (right) setting S3.

Figure 3. Results of Test 1 where the weighting factor related to theoretical concepts is set to 10. (left) setting S1, (middle) setting S2, (right) setting S3. Figure 4. Results of Test 2 where the weighting factor for the theoretical concepts is 5 and for student satisfaction is also 5. (left) setting S1, (middle) setting S2, (right) setting S3.

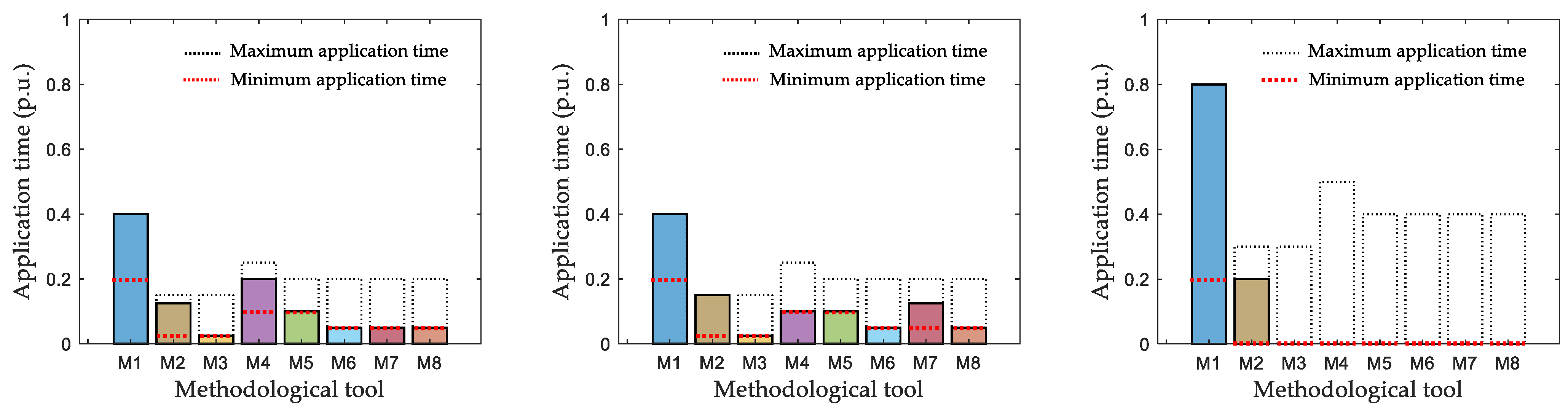

Figure 4. Results of Test 2 where the weighting factor for the theoretical concepts is 5 and for student satisfaction is also 5. (left) setting S1, (middle) setting S2, (right) setting S3. Figure 5. Results of Test 3 where the weighting factor for the theoretical concepts is 2, for resolution of practical problems is 2 and for teamwork is 6. (left) setting S1, (middle) setting S2, (right) setting S3.

Figure 5. Results of Test 3 where the weighting factor for the theoretical concepts is 2, for resolution of practical problems is 2 and for teamwork is 6. (left) setting S1, (middle) setting S2, (right) setting S3. - Setting S2: The cost function includes the new terms related to the standard deviation, as detailed in (4) and (5). The minimum and maximum values for each methodology are the same as in S1.

- Setting S3: The cost function is the same as in S2 (i.e., includes the students’ scores, the questionnaire assessment, and the standard deviation), but the minimum values are eliminated. The maximum values are doubled compared to S2.

Setting S1 will be taken as a reference, whereas setting S2 will consider the optimum times of application based not only on average values but also on the differences among the students. This procedure aims at minimizing the risk of exclusion by penalizing those methodologies with a high variance, thanks to the inclusion of the standard deviation in the PM. Finally, setting S3 releases the algorithm from the mandatory need to include all methodologies by eliminating the minimum times of application. This extra degree of freedom opens the possibility for the algorithm to set a zero time of application to certain methodologies, which would then be eliminated. The maximum values are also increased (doubled) to give the predictive algorithm more flexibility in setting the suggested times of application. Maximum values are, however, not eliminated, to avoid cases where the predictive algorithm selects just a single methodology.
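The relationship between the timing constraints of the settings can be expressed as a small transformation of the bounds. The sketch below is illustrative: the numerical bounds are hypothetical, and the `keep_minimum` parameter reflects the assumption that the minimum time of the master class (M1) is preserved in S3, as stated for M1 in Section 3.2; passing an empty tuple removes all minimum values instead.

```python
def s3_bounds(s1_bounds, keep_minimum=("M1",)):
    """Derive S3 time bounds from the S1/S2 bounds.

    Minimum values are dropped to zero (except for the methodologies listed
    in keep_minimum, an assumption) and maximum values are doubled.
    """
    return {
        name: (lo if name in keep_minimum else 0, 2 * hi)
        for name, (lo, hi) in s1_bounds.items()
    }


# Hypothetical (minimum, maximum) bounds in percent of the course duration.
s1 = {"M1": (20, 40), "M4": (5, 15), "M7": (5, 10)}

print(s3_bounds(s1))
# {'M1': (20, 80), 'M4': (0, 30), 'M7': (0, 20)}
```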

For the sake of example, three tests (T1 to T3) will now be analyzed for different scenarios with variable objectives in terms of the profile of competencies to be developed by the student. The results from Tests 1 to 3 are shown in Figure 3, Figure 4 and Figure 5, respectively, including the times of application with setting S1 (left column), S2 (middle column), and S3 (right column).

In Test 1, the weighting factor related to theoretical concepts is set to 10, and the weighting factors of all other competences are set to zero; hence the algorithm will provide the times of application that best promote the acquisition of theoretical concepts exclusively. When only average values are evaluated (i.e., using setting S1), the algorithm sets both the master class (M1) and the design of problems (M4) to their maximum values (left plot in Figure 3). Therefore, the algorithm suggests dedicating most of the time to lectures and problem design since those activities are closely related to theory. Nevertheless, other methodologies, such as the use of theoretical and practical videos (M2 and M3), still have a residual time of application since the minimum values that were set in S1 prevent the algorithm from fully eliminating those methodologies.

When the algorithm includes the standard deviation in S2, the time of application of methodology M4 is considerably reduced (Figure 3, middle plot). This is so because problem design is a highly demanding task that brings students out of their comfort zone. This leads to a high variance because highly involved students obtain very good marks, whereas less involved students do not perform well in this activity. The time removed from M4 has been moved to M7 (online questions), which is characterized by a lower standard deviation. Except for a small increase in the time for M2, the rest of the methodologies maintain similar times as in setting S1. Results suggest that lectures (M1) are the most adequate methodology to promote the acquisition of theoretical concepts in any case, whereas methodologies M4 (problem design) and M7 (online questions) also contribute significantly to the aim of this scenario in Test 1. The differences between settings S1 and S2 suggest, however, that online questions (M7) will better suit all students, whereas the problem design (M4) is mostly directed towards a specific sector of the class.

Finally, S3 maintains the same cost function as in S2 but eliminates all minimum values, allowing the algorithm to set zero values to various methodologies. In fact, only methodologies M1 and M7 have non-null values. Methodology M1 is set to a high but non-maximum value of , whereas methodology M7 (online questions) has a maximum value of . With this approach, the predictive algorithm informs that the theoretical concepts will be better acquired if some methodologies with a residual time of application are not used in the course.

It is worth highlighting here that the times of application provided by the predictive algorithm in Figure 3, Figure 4 and Figure 5 for settings S1 to S3 are fundamentally informative. In other words, the aim of the proposed algorithm is not to automate the course design procedure and to eliminate the human factor. On the contrary, the objective is to provide valuable information to the teacher for him/her to decide better how the course should be organized. Since this information includes not only the students’ opinion but also their performance and the variance of both aspects, the times of application that are obtained in Figure 3, Figure 4 and Figure 5 can be a guide to decide on whether a certain methodology should be used and how much.

As a summary of the conclusions obtained in Test 1: (i) lectures are always a powerful tool to promote the understanding of theoretical knowledge, (ii) problem design is an activity that will be useful to fix some theoretical concepts better, but it is mainly directed towards a specific group of students (leading to significantly lower performance in some students), and (iii) the use of online questions helps the acquisition of the theory and seems to be more adequate to fit all students simultaneously. The rest of the methodologies are less appealing for the scenario considered in Test 1, although the work in groups and theoretical videos also contribute to the sought competence to some extent. Based on this information, the teacher can make different decisions on the subject. For example, methodologies M1 and M7 might be promoted for this scenario and for all students (i.e., including mandatory tasks), while methodology M4 might be left as voluntary work for highly motivated students who desire a higher final mark. Needless to say, this is just an example, and it is the person in charge of the subject who ultimately decides on the structure of the course. However, the proposed tool might help this course design by providing extensive information about promoting certain competences for different scenarios.

Test 2 follows the same procedure but for a different competence profile. In this case, the weighting factor for the theoretical concepts is reduced to 5, while that for satisfaction is increased to 5. In setting S1, the methodology M1 (master classes) is again saturated to its maximum value, as in Test 1 (see left plots in Figure 3 and Figure 4). Methodology M4 also shows a relatively high time of application but, unlike in Test 1, its value remains below the maximum (indicated in Figure 4 with black dotted lines). Methodology M2 (theoretical videos) now has the third highest time of application. This indicates that the theoretical videos, apart from helping the acquisition of theoretical concepts, satisfy the students. Although only anecdotal, this result matches the feedback obtained from students in interviews, where they reported being very satisfied with the videos because of their flexibility: they can easily stop, rewind, and replay the video, and these features prove convenient in their learning process.

With setting S2, the results for this scenario are slightly different, as shown in the middle plot of Figure 4. As in Test 1, the time of application for the problem design (M4) is reduced because it is an activity whose results prove to be dispersed (i.e., with a high standard deviation). In this case, the reduction of the time for M4 allows a higher time of application for the theoretical videos (M2) and online questions (M7). The predictive algorithm in setting S2 looks for a trade-off between theoretical learning and satisfaction while, at the same time, promoting those activities that benefit a majority of the students.

Moving to setting S3, the minimum values for the times of application are eliminated, and this enables the elimination of various methodologies. In fact, only lectures and theoretical videos (M1 and M2) have non-null times of application. While M1 is set to its maximum value, M2 has an intermediate value, indicating that the best compromise between the acquisition of theoretical knowledge and satisfaction is mainly obtained through lectures, although the theoretical videos also help in this regard. Even though the algorithm provides these results, if no minimum values are forced, the teacher would ultimately decide if some other methodologies should be used. To this end, the other two settings (S1 and S2) might guide this decision.

The next scenario is explored in Test 3, where the weighting factors of the theoretical concepts and the resolution of practical problems are set to 2, whereas the value for teamwork is set to 6. This scenario clearly promotes the capability of students to work in a group (transverse competence), while keeping theory and problems to a minimum. Setting S1 gives the greatest times of application to lectures (M1), teamwork (M5), and questions in couples (M8), as can be observed in the left plot of Figure 5. This result is somewhat intuitive since M5 and M8 are closely related to the competence of teamwork (whose weighting factor is set to 6 in this test), and for this reason, they are saturated to their maximum values. Lectures are also well considered by the algorithm since they have an impact on the acquisition of theoretical concepts and the resolution of practical problems.

All in all, the times of application provided in setting S1 seek a trade-off between the competences that are weighted in Test 3. When the setting is moved to S2, the results show almost no change compared to S1 (only M2 and M3 present a slight modification in their times of application). As shown in the middle plot of Figure 5, the times of application of M1, M5, and M8 are exactly the same as in S1 because the differences in those activities (i.e., the variance) are not enough to modify the results. This is quite different from what occurred in Tests 1 and 2. Therefore, the modifications caused by the inclusion of the standard deviation (last term in (5)) prove to be dependent on each specific scenario and the methodologies that are promoted.

Finally, setting S3 eliminates the minimum values, and only methodologies M1, M5, and M8 now have non-null times of application. Even though the maximum values are doubled in S3, methodologies M5 and M8 are still saturated to their maximum values, and this diminishes the time of application of the lectures. The information that can be extracted is that in this scenario, teamwork and work in couples are the most effective activities, which again is aligned with intuition. Lectures (M1) are, however, not eliminated because the theoretical concepts and problems are also relevant in this scenario.

The afore-discussed scenarios for Tests 1, 2, and 3 are only some application examples of the proposed tool. Each teacher can set his/her own scenario according to the specific needs of the subject and the context. Furthermore, the teacher may explore different scenarios to improve his/her understanding of which methodologies better suit some competences and better avoid the exclusion of some students. At the end of the day, the provided tool and its associated analysis do not aim to replace the teacher in his/her decisions about the subject. Conversely, the results from the analysis should be considered valuable information that can assist and guide the teacher in the complex task of designing an educational action.

In the examples shown in this section, the proposed predictive algorithm provides a ranking (based on the times of application shown in Figure 3, Figure 4 and Figure 5) of the methodologies that would better achieve the desired scenario of developed competences (setting S1). Furthermore, the risk of excluding students with activities that generate a great variance is also evaluated in setting S2, showing that the inclusion of the standard deviation significantly modifies the results of Tests 1 and 2, whereas the times of application remain mainly constant in Test 3. Finally, in setting S3, the elimination of the minimum values enables the algorithm to select only some methodologies and eliminate others. This can be useful for modifying the course structure over the years since some methodologies might be eliminated and replaced by others in subsequent years. It is worth noting, however, that the algorithm is sometimes much more restrictive in the number of remaining methodologies; hence the teacher should weigh the information from the three settings (S1, S2, and S3) and decide on how many methodologies should remain and how much importance is given to each one.

5. Conclusions

The planning of a higher education course is, on most occasions, mainly based on the instructor’s previous experience. Moreover, this design process does not usually take into account in a systematic manner the learners’ opinions and the feedback provided by their evaluations. Without some external assistance, the design of a university course to promote the acquisition of a specific student competence profile thus becomes a difficult task. This work proposes a predictive tool that brings long-term guidance for the yearly enhancement of the teaching-learning process. The proposal provides key information to support the instructor in the task of designing the course with the objective of helping students to acquire certain competences. Furthermore, to avoid the exclusion of some students, the proposed PM includes the standard deviation in the predictive algorithm. To assist the teacher in this task, the use of this predictive tool allows an easy determination of the optimal timing for certain pre-selected methodological actions. The time restrictions added in the predictive process guarantee a minimum theoretical content via the classical master class, but release the rest of the pre-selected methodologies from minimum time values, so it is possible to set a zero time of application for certain methodological tools. Therefore, higher application times are provided for the methodological instruments that better adapt to the target student competence profile.

The results in different skill scenarios provide rankings of methodological instruments and their application times to achieve the target student competence profile. Furthermore, the proposal allows for reducing high score variations when the standard deviation is introduced in the predictive model. The elimination of minimum time values opens up the possibility of eliminating certain pre-selected methodological tools, and this offers valuable information for deciding on the elimination of methodological tools in subsequent years. This possible discarding of methodologies, provided by the three different settings presented, is valuable information that should be conscientiously analyzed by the instructor since this data analysis will support the decision about the definitive number of methodologies implemented and their relevance in the course design.

It should be noted that this proposal is implemented in a university course on electrical machines; however, the predictive tool can be extended to any course with different methodological instruments and/or competence profiles. The extension of the proposed tool to other subjects and degrees (not necessarily related to the engineering field) and the comparison of the conclusions that can be obtained in different areas of knowledge and locations are left for future work. Similarly, subsequent investigations may address the evolution of the tool over the years when it is applied to the same subject repeatedly. Finally, some changes might be devised in the core of the algorithm itself to evaluate the performance of predictive approaches with various cost functions and weighting factors.

Author Contributions

Conceptualisation, J.J.A., A.C.C., I.G.-P. and M.J.D.; methodology, J.J.A., A.C.C., M.J.D. and I.G.-P.; software, J.J.A. and I.G.-P.; validation, J.J.A., I.G.-P. and M.J.D.; formal analysis, A.C.C., M.J.D. and I.G.-P.; investigation, J.J.A., A.C.C. and A.G.-P.; resources, I.G.-P. and A.C.C.; data curation, J.J.A.; writing—original draft preparation, M.J.D., J.J.A. and A.C.C.; writing—review and editing, M.J.D., J.J.A. and A.G.-P.; visualization, J.J.A., M.J.D., I.G.-P. and A.G.-P.; supervision, M.J.D. and I.G.-P.; project administration, M.J.D. and I.G.-P.; funding acquisition, M.J.D. and I.G.-P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the Spanish Government under the Plan Estatal with the reference PID2021-127131OB-I00, in part by the Junta de Andalucia under the grant UMA20-FEDERJA-039 and in part by the Ministry of Science, Innovation and Universities under the Juan de la Cierva grant IJC2020-042700-I.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ter Beek, M.; Wopereis, I.; Schildkamp, K. Don’t Wait, Innovate! Preparing Students and Lecturers in Higher Education for the Future Labor Market. Educ. Sci. 2022, 12, 620. [Google Scholar] [CrossRef]

- Forcael, E.; Garces, G.; Orozco, F. Relationship Between Professional Competencies Required by Engineering Students According to ABET and CDIO and Teaching–Learning Techniques. IEEE Trans. Educ. 2022, 65, 46–55. [Google Scholar] [CrossRef]

- Fink, L.D. Creating Significant Learning Experiences; John Wiley & Sons: New York, NY, USA, 2013. [Google Scholar]

- Meier, R.L.; Williams, M.R.; Humphreys, M.A. Refocusing Our Efforts: Assessing Non-Technical Competency Gaps. J. Eng. Educ. 2000, 89, 377–385. [Google Scholar] [CrossRef]

- Huizinga, T.; Handelzalts, A.; Nieveen, N.; Voogt, J.M. Teacher involvement in curriculum design: Need for support to enhance teachers’ design expertise. J. Curric. Stud. 2014, 46, 33–57. [Google Scholar] [CrossRef]

- Toral, S.L.; del Rocio Marines-Torres, M.; Barrero, F.; Gallardo-Vazquez, S.; Duran, M.J. An electronic engineering curriculum design based on concept-mapping techniques. Int. J. Technol. Des. Eduaction 2007, 17, 341–356. [Google Scholar] [CrossRef]

- Aciego, J.J.; Gonzalez-Prieto, A.; Gonzalez-Prieto, I.; Claros, A.; Duran, M.J.; Bermudez, M. On the Use of Predictive Tools to Improve the Design of Undergraduate Courses. IEEE Access 2022, 10, 3105–3115. [Google Scholar] [CrossRef]

- Öncü, S.; Şengel, E. The effect of student opinions about course content on student engagement and achievement in computer literacy courses. Procedia-Soc. Behav. Sci. 2010, 2, 2264–2268. [Google Scholar] [CrossRef][Green Version]

- Karam, M.; Fares, H.; Al-Majeed, S. Quality assurance framework for the design and delivery of virtual, real-time courses. Information 2021, 12, 93. [Google Scholar] [CrossRef]

- Matulich, E.; Papp, R.; Haytko, D.L. Continuous improvement through teaching innovations: A requirement for today’s learners. Mark. Educ. Rev. 2008, 18, 1–7. [Google Scholar] [CrossRef]

- Placencia, G.; Muljana, P.S. The effects of online course design on student course satisfaction. In Proceedings of the 2019 Pacific Southwest Section Meeting, Los Angeles, CA, USA, 4 April 2019. [Google Scholar]

- Mota, D.; Reis, L.P.; de Carvalho, C.V. Design of learning activities–pedagogy, technology and delivery trends. EAI Endorsed Trans. e-Learn. 2014, 1, e5. [Google Scholar] [CrossRef][Green Version]

- Tran, V.D. Perceived satisfaction and effectiveness of online education during the COVID-19 pandemic: The moderating effect of academic self-efficacy. High. Educ. Pedagog. 2022, 7, 107–129. [Google Scholar] [CrossRef]

- Appleton-Knapp, S.L.; Krentler, K.A. Measuring student expectations and their effects on satisfaction: The importance of managing student expectations. J. Mark. Educ. 2006, 28, 254–264. [Google Scholar] [CrossRef]

- Baer, J. Grouping and achievement in cooperative learning. Coll. Teach. 2003, 51, 169–175. [Google Scholar] [CrossRef]

- Herodotou, C.; Rienties, B.; Boroowa, A.; Zdrahal, Z.; Hlosta, M. A large-scale implementation of predictive learning analytics in higher education: The teachers’ role and perspective. Educ. Technol. Res. Dev. 2019, 67, 1273–1306. [Google Scholar] [CrossRef]

- Savage, N.; Birch, R.; Noussi, E. Motivation of engineering students in higher education. Eng. Educ. 2011, 6, 39–46. [Google Scholar] [CrossRef]

- Sushchenko, O.; Nazarova, S. E-Learning Course Design Concept for Engineering Students on the Basis of Competence Approach. In Proceedings of the 2021 IEEE International Conference on Modern Electrical and Energy Systems (MEES), Kremenchuk, Ukraine, 23–25 September 2021; pp. 1–6. [Google Scholar]

- Samavedham, L.; Ragupathi, K. Facilitating 21st century skills in engineering students. J. Eng. Educ. 2012, 26, 38–49. [Google Scholar]

- Oksana, P.; Galstyan-Sargsyan, R.; López-Jiménez, P.A.; Pérez-Sánchez, M. Transversal Competences in Engineering Degrees: Integrating Content and Foreign Language Teaching. Educ. Sci. 2020, 10, 296. [Google Scholar] [CrossRef]

- Shekhawat, S. Enhancing Employability Skills of Engineering Graduates. In Enhancing Future Skills and Entrepreneurship; Springer: Cham, Switzerland, 2020; pp. 263–269. [Google Scholar]

- Potvin, G.; McGough, C.; Benson, L.; Boone, H.J.; Doyle, J.; Godwin, A.; Kirn, A.; Ma, B.; Rohde, J.; Ross, M.; et al. Gendered Interests in Electrical, Computer, and Biomedical Engineering: Intersections With Career Outcome Expectations. IEEE Trans. Educ. 2018, 61, 298–304. [Google Scholar] [CrossRef]

- Chin, K.Y.; Lee, K.F.; Chen, Y.L. Impact on Student Motivation by Using a QR-Based U-Learning Material Production System to Create Authentic Learning Experiences. IEEE Trans. Learn. Technol. 2015, 8, 367–382. [Google Scholar] [CrossRef]

- Muñoz-Merino, P.J.; Fernandez Molina, M.; Muñoz-Organero, M.; Delgado Kloos, C. Motivation and Emotions in Competition Systems for Education: An Empirical Study. IEEE Trans. Educ. 2014, 57, 182–187. [Google Scholar] [CrossRef][Green Version]

- Cruz, M.L.; van den Bogaard, M.E.D.; Saunders-Smits, G.N.; Groen, P. Testing the Validity and Reliability of an Instrument Measuring Engineering Students’ Perceptions of Transversal Competency Levels. IEEE Trans. Educ. 2021, 64, 180–186. [Google Scholar] [CrossRef]

- Fong, S.; Si, Y.W.; Biuk-Aghai, R.P. Applying a hybrid model of neural network and decision tree classifier for predicting university admission. In Proceedings of the 2009 7th International Conference on Information, Communications and Signal Processing (ICICS), Macau, China, 8–10 December 2009; pp. 1–5. [Google Scholar] [CrossRef]

- Agaoglu, M. Predicting Instructor Performance Using Data Mining Techniques in Higher Education. IEEE Access 2016, 4, 2379–2387. [Google Scholar] [CrossRef]

- Conijn, R.; Snijders, C.; Kleingeld, A.; Matzat, U. Predicting Student Performance from LMS Data: A Comparison of 17 Blended Courses Using Moodle LMS. IEEE Trans. Learn. Technol. 2017, 10, 17–29. [Google Scholar] [CrossRef]

- Pardo, A.; Han, F.; Ellis, R.A. Combining University Student Self-Regulated Learning Indicators and Engagement with Online Learning Events to Predict Academic Performance. IEEE Trans. Learn. Technol. 2017, 10, 82–92. [Google Scholar] [CrossRef]

- Nam, S.; Frishkoff, G.; Collins-Thompson, K. Predicting Students Disengaged Behaviors in an Online Meaning-Generation Task. IEEE Trans. Learn. Technol. 2018, 11, 362–375. [Google Scholar] [CrossRef]

- Mengash, H.A. Using Data Mining Techniques to Predict Student Performance to Support Decision Making in University Admission Systems. IEEE Access 2020, 8, 55462–55470. [Google Scholar] [CrossRef]

- Qu, S.; Li, K.; Zhang, S.; Wang, Y. Predicting Achievement of Students in Smart Campus. IEEE Access 2018, 6, 60264–60273. [Google Scholar] [CrossRef]

- Choe, N.H.; Borrego, M. Prediction of Engineering Identity in Engineering Graduate Students. IEEE Trans. Educ. 2019, 62, 181–187. [Google Scholar] [CrossRef]

- Garcia-Magariño, I.; Plaza, I.; Igual, R.; Lombas, A.S.; Jamali, H. An Agent-Based Simulator Applied to Teaching-Learning Process to Predict Sociometric Indices in Higher Education. IEEE Trans. Learn. Technol. 2020, 13, 246–258. [Google Scholar] [CrossRef]

- Hung, J.L.; Shelton, B.E.; Yang, J.; Du, X. Improving Predictive Modeling for At-Risk Student Identification: A Multistage Approach. IEEE Trans. Learn. Technol. 2019, 12, 148–157. [Google Scholar] [CrossRef]

- Jiménez, F.; Paoletti, A.; Sánchez, G.; Sciavicco, G. Predicting the Risk of Academic Dropout With Temporal Multi-Objective Optimization. IEEE Trans. Learn. Technol. 2019, 12, 225–236. [Google Scholar] [CrossRef]

- Kostopoulos, G.; Karlos, S.; Kotsiantis, S. Multiview Learning for Early Prognosis of Academic Performance: A Case Study. IEEE Trans. Learn. Technol. 2019, 12, 212–224. [Google Scholar] [CrossRef]

- Sobnath, D.; Kaduk, T.; Rehman, I.U.; Isiaq, O. Feature Selection for UK Disabled Students’ Engagement Post Higher Education: A Machine Learning Approach for a Predictive Employment Model. IEEE Access 2020, 8, 159530–159541.

- Ahamed Ahanger, T.; Tariq, U.; Ibrahim, A.; Ullah, I.; Bouteraa, Y. ANFIS-Inspired Smart Framework for Education Quality Assessment. IEEE Access 2020, 8, 175306–175318.

- Duran, M.; Gonzalez-Prieto, I.; Gonzalez-Prieto, A.; Aciego, J.J. The Evolution of Model Predictive Control in Multiphase Electric Drives: A Growing Field of Research. IEEE Ind. Electron. Mag. 2022, 29–39.

- Garcia-Entrambasaguas, P.; Zoric, I.; Gonzalez-Prieto, I.; Duran, M.J.; Levi, E. Direct Torque and Predictive Control Strategies in Nine-Phase Electric Drives Using Virtual Voltage Vectors. IEEE Trans. Power Electron. 2019, 34, 12106–12119.

- Gonzalez-Prieto, I.; Zoric, I.; Duran, M.J.; Levi, E. Constrained Model Predictive Control in Nine-Phase Induction Motor Drives. IEEE Trans. Energy Convers. 2019, 34, 1881–1889.

- Gonzalez-Prieto, A.; Gonzalez-Prieto, I.; Duran, M.J.; Aciego, J.J. Dynamic Response in Multiphase Electric Drives: Control Performance and Influencing Factors. Machines 2022, 10, 866.

- Duran, M.J.; Barrero, F.; Pozo-Ruz, A.; Guzman, F.; Fernandez, J.; Guzman, H. Understanding Power Electronics and Electrical Machines in Multidisciplinary Wind Energy Conversion System Courses. IEEE Trans. Educ. 2013, 56, 174–182.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).