Taking the Big Leap: A Case Study on Implementing Programmatic Assessment in an Undergraduate Medical Program

Abstract

1. Introduction

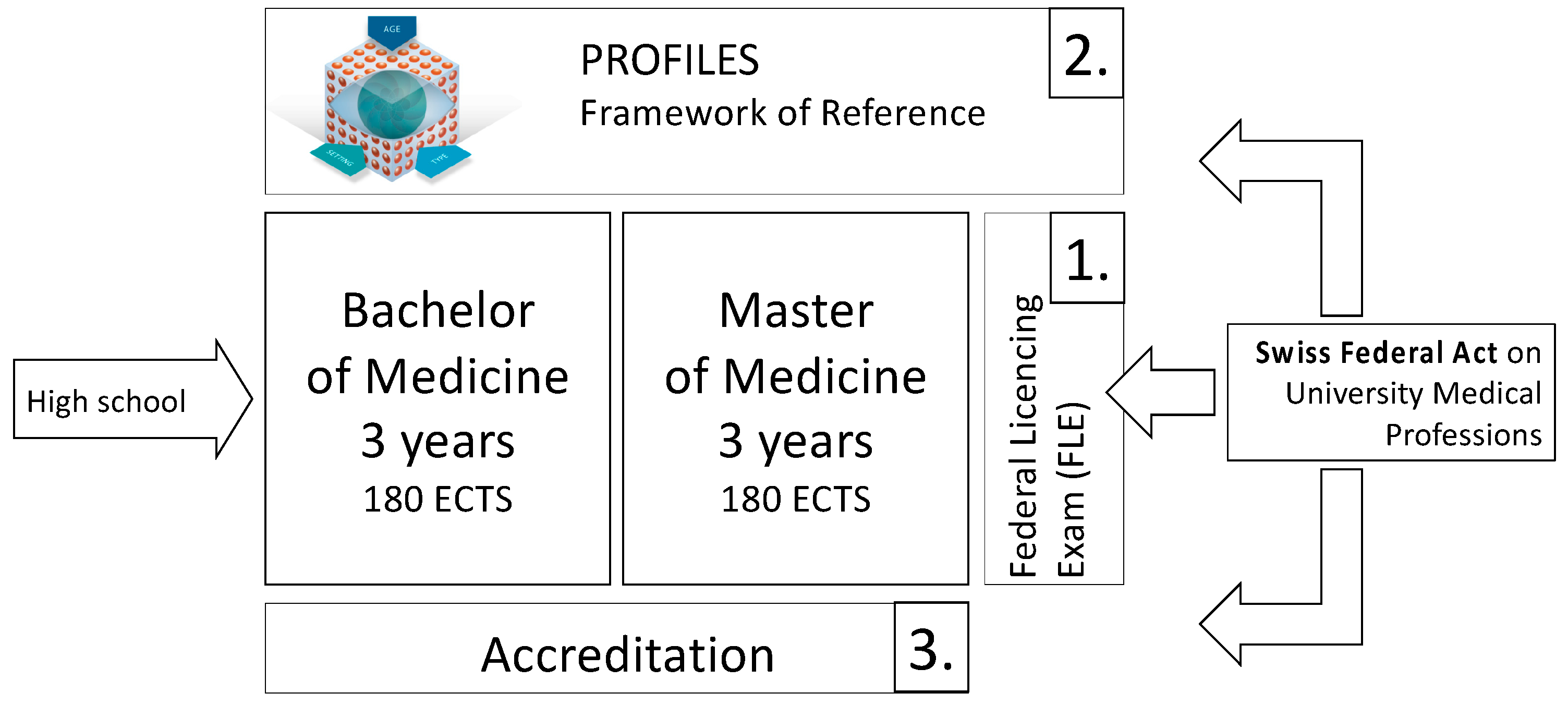

1.1. Context of Medical Education

1.1.1. Switzerland

1.1.2. Fribourg

1.1.3. University of Fribourg

2. Why Programmatic Assessment?

“Have you ever been a part of an idea whose time has come?” Harold Lyon, guest Fulbright Professor

2.1. Drivers

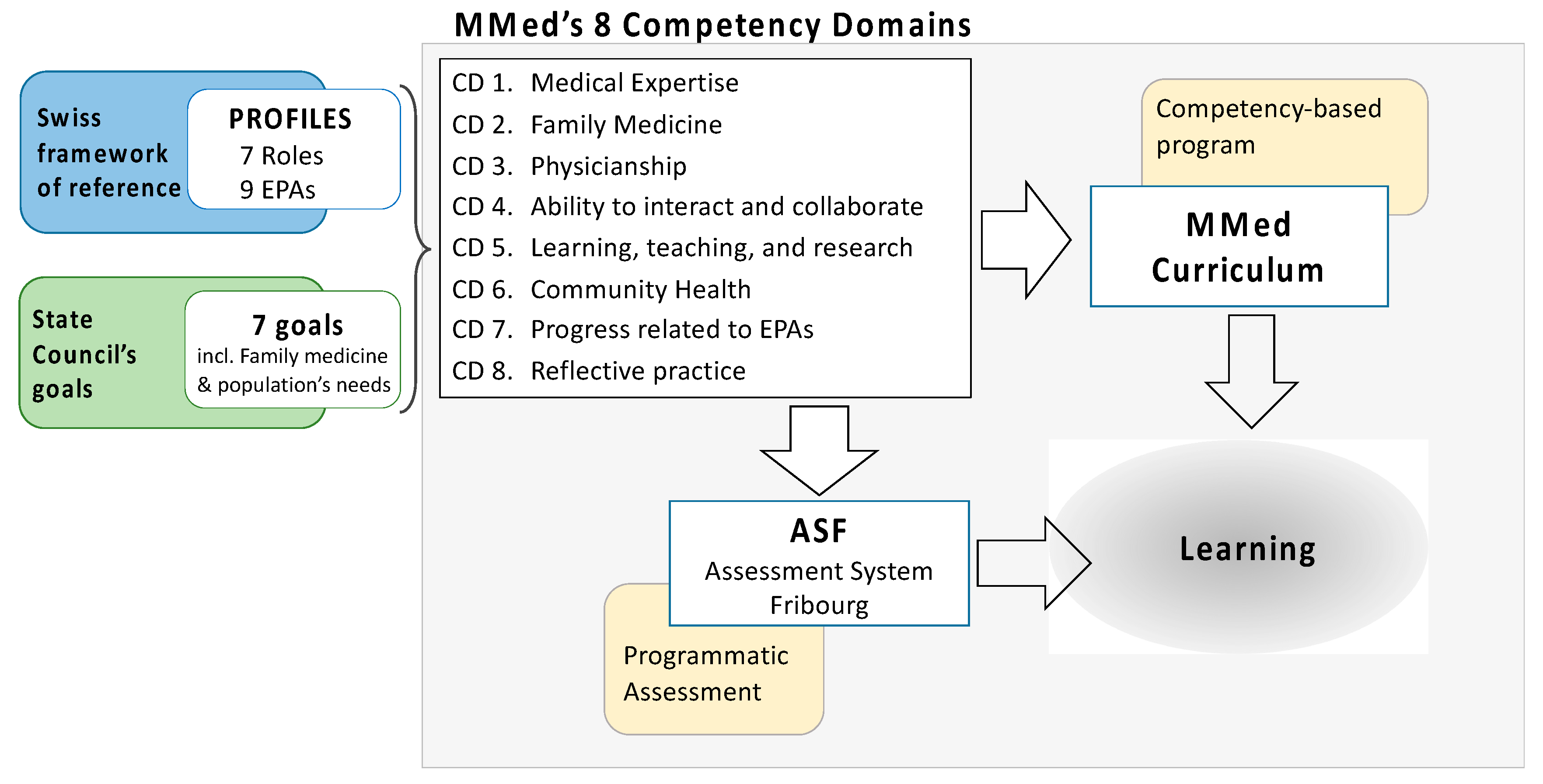

2.1.1. Competency-Based Education

2.1.2. Family Medicine

2.1.3. Cohort Size

2.1.4. Differentiating Factor

2.2. Enabling Factors and Hindrances

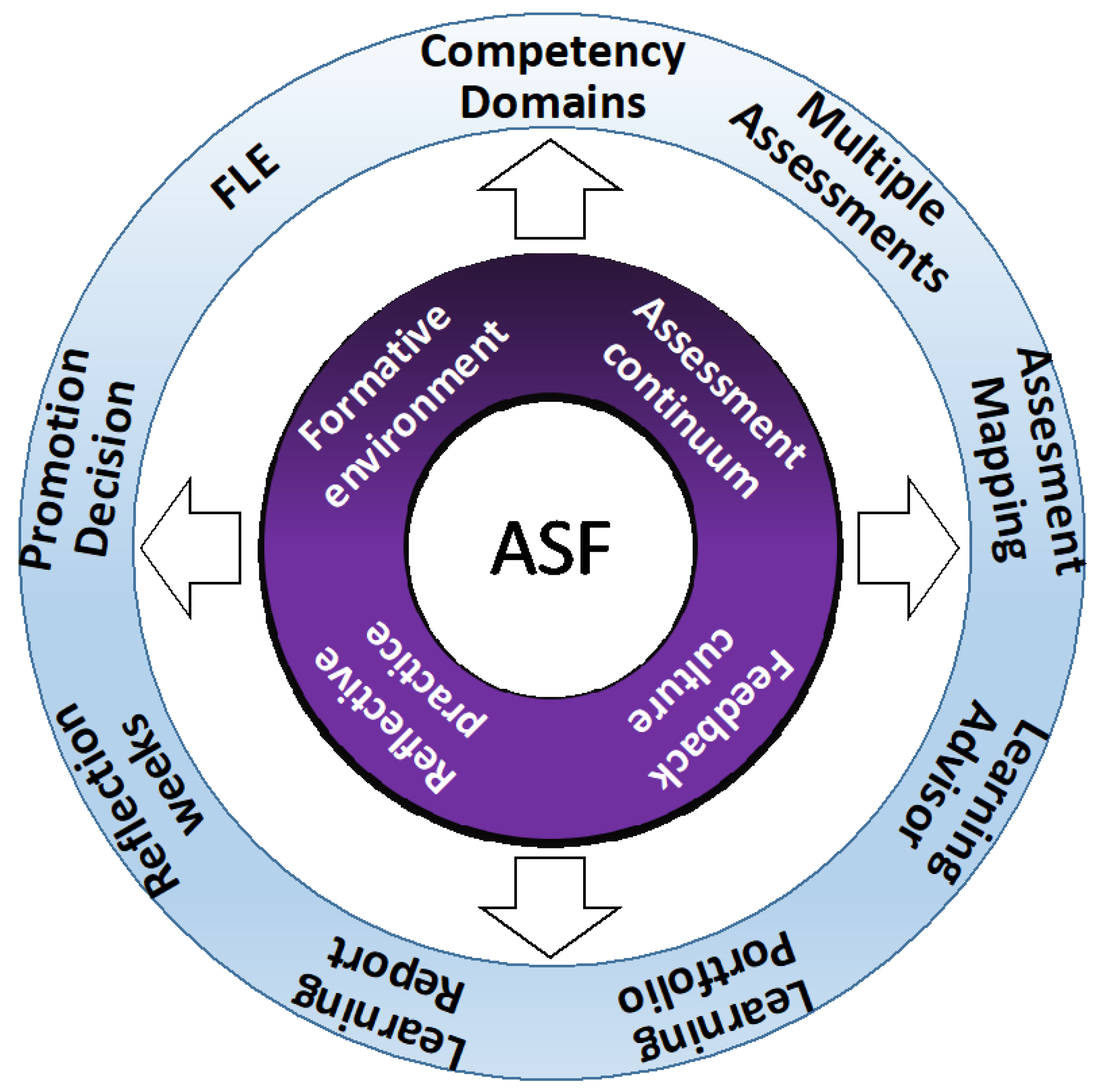

3. Description of Assessment System Fribourg (ASF)

“The goal of education, if we are to survive, is the facilitation of change and learning.” Carl Rogers

3.1. Four Key Concepts

3.1.1. Formative Approach

3.1.2. The Feedback Culture

3.1.3. Reflective Practice

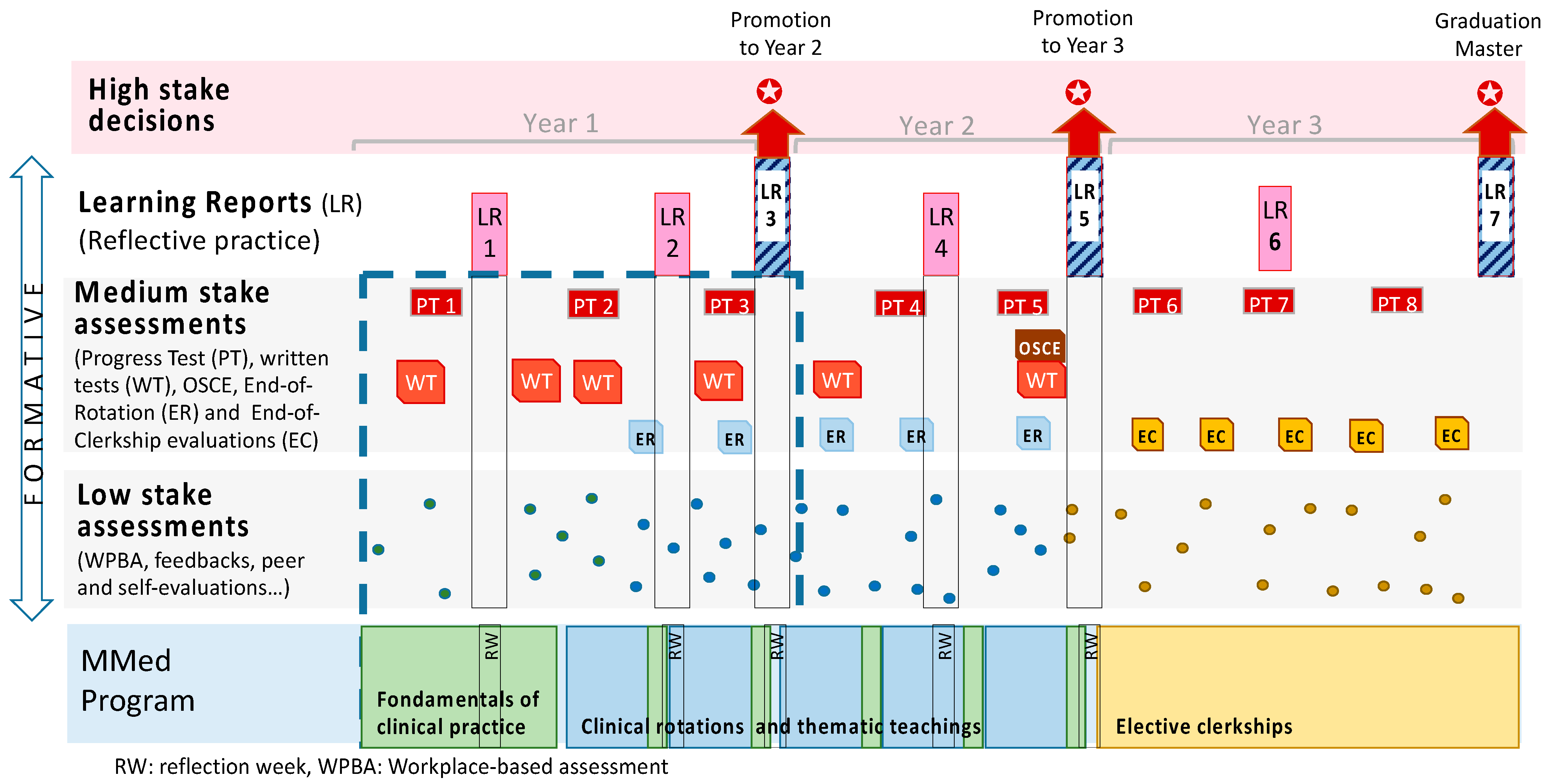

3.1.4. Assessment as a Continuum of Stakes

3.2. Nine Program Elements

3.2.1. Competency Domains

3.2.2. Multiple Assessment Methods

3.2.3. Mapping of the Assessments

3.2.4. Learning Advisor (Mentoring System)

3.2.5. Learning Portfolio

3.2.6. Learning Report

3.2.7. Reflection Weeks

3.2.8. Promotion Decision

3.2.9. Anticipating the Federal Licensing Exam

4. Implementing ASF

“Building the bridge as you walk on it” Robert E. Quinn

4.1. How We Started

4.2. The Change of Perspective

4.3. The Question of Resources

4.4. The Importance of the Narrative

“Narrative is the form humans use to make sense of events and relationships.” Gillie Bolton

4.5. Faculty Development

4.6. Continuous Improvement

5. Discussion

“Think big, start small, adjust frequently.” Anonymous

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Assessment System | Competency Domains | |||||||

|---|---|---|---|---|---|---|---|---|

| Phase 2.2—Clinical Immersion & Focus modules | CD 1 Medic. Expert. | CD 2 Family Med. | CD 3 Physicianship | CD 4 Interact & collabor. | CD 5 Learning teaching | CD 6 Comm. Health | CD 7 EPA Progress | CD 8 Reflective practice |

| Clinical immersion (Clinical rotations and longitudinal clerkship in family medicine) | ||||||||

| (a) Medium stake assessments | ||||||||

| ++ | + | + | + | +++ | |||

| ++ | +++ | + | ++ | + | + | ++ | |

| +++ | ++ | ||||||

| (b) Low stake assessments | ||||||||

| ++ | +++ | +++ | ++ | +++ | |||

| ++ | |||||||

| ++ | +++ | + | +++ | ++ | |||

| (+) | (+) | (+) | (+) | (+) | (+) | (+) | ++ |

| Focus Modules (Focus Days, Focus Weeks) | ||||||||

| (a) Medium stake assessments | ||||||||

| +++ | + | ||||||

| (b) Low stake assessments | ||||||||

| +++ | |||||||

| ++ | +++ | + | +++ | ++ | |||

| (+) | (+) | (+) | (+) | (+) | (+) | (+) | ++ |

| Learning progress | ||||||||

| (a) Medium stake assessments | ||||||||

| +++ | |||||||

| (b) Low stake assessments | ||||||||

| ++ | + | ++ | ++ | +++ | |||

| Competency Domain (CD) | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| CD 1 | CD 2 | CD 3 | CD 4 | CD 5 | CD 6 | CD 7 | CD 8 | ||

| Learning Report (LR) | Medical expert. | Family med. | Physician -ship | Interact and collab. | Learning teaching | Comm. Health | EPA progress | Reflective practice | |

| Year 1 | |||||||||

| LR 1 | formative | X | X | X | X | ||||

| LR 2 | formative | X | X | Students report on their progress in 1 of these 3 CDs of their choice | 2 of the 9 EPAs | X | |||

| LR 3 | end-of-year | X | X | X | Students report on their progress in 1 of these CDs of their choice | 3 of the 9 EPAs | X | ||

| Year 2 | |||||||||

| LR 4 | formative | X | Students report on their progress for 2 of these CDs, of their choice | 3 of the 9 EPAs | X | ||||

| LR 5 | end-of-year | X | X | X | X | X | X | X | X |

| Year 3 | |||||||||

| LR 6 | formative (optional) | (X) | (X) | (X) | (X) | (X) | (X) | X | X |

| LR 7 | end-of-year | (X) | (X) | (X) | (X) | (X) | (X) | X | X |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bonvin, R.; Bayha, E.; Gremaud, A.; Blanc, P.-A.; Morand, S.; Charrière, I.; Mancinetti, M. Taking the Big Leap: A Case Study on Implementing Programmatic Assessment in an Undergraduate Medical Program. Educ. Sci. 2022, 12, 425. https://doi.org/10.3390/educsci12070425