Impact of COVID-19 on eLearning in the Earth Observation and Geomatics Sector at University Level

Abstract

1. Introduction

1.1. State of the Art

1.2. Motivation

- How does the satisfaction of students and teachers with a specific type of course relate to the digital elements used for teaching and interaction?

- Do we need different interaction tools for different types of interaction (e.g., technical support, exchange of knowledge, organizational support, feedback, learning assessment)?

2. Material and Methods

2.1. Experimental Design

2.1.1. General Structure of the Survey

- General questions;

- Questions related to the online learning form;

- Questions related to the online learning of GIS and Earth Observation;

- Personal evaluation of the online semester.

2.1.2. General Questions

2.1.3. Questions Related to the Online Learning Form

2.1.4. Specific Field Related Questions

2.1.5. Personal Assessment

2.1.6. Type of Questions

2.2. Methods

2.2.1. Target Population

2.2.2. Measurement Instruments

3. Results

3.1. Lecturers’ Responses

3.1.1. General Information

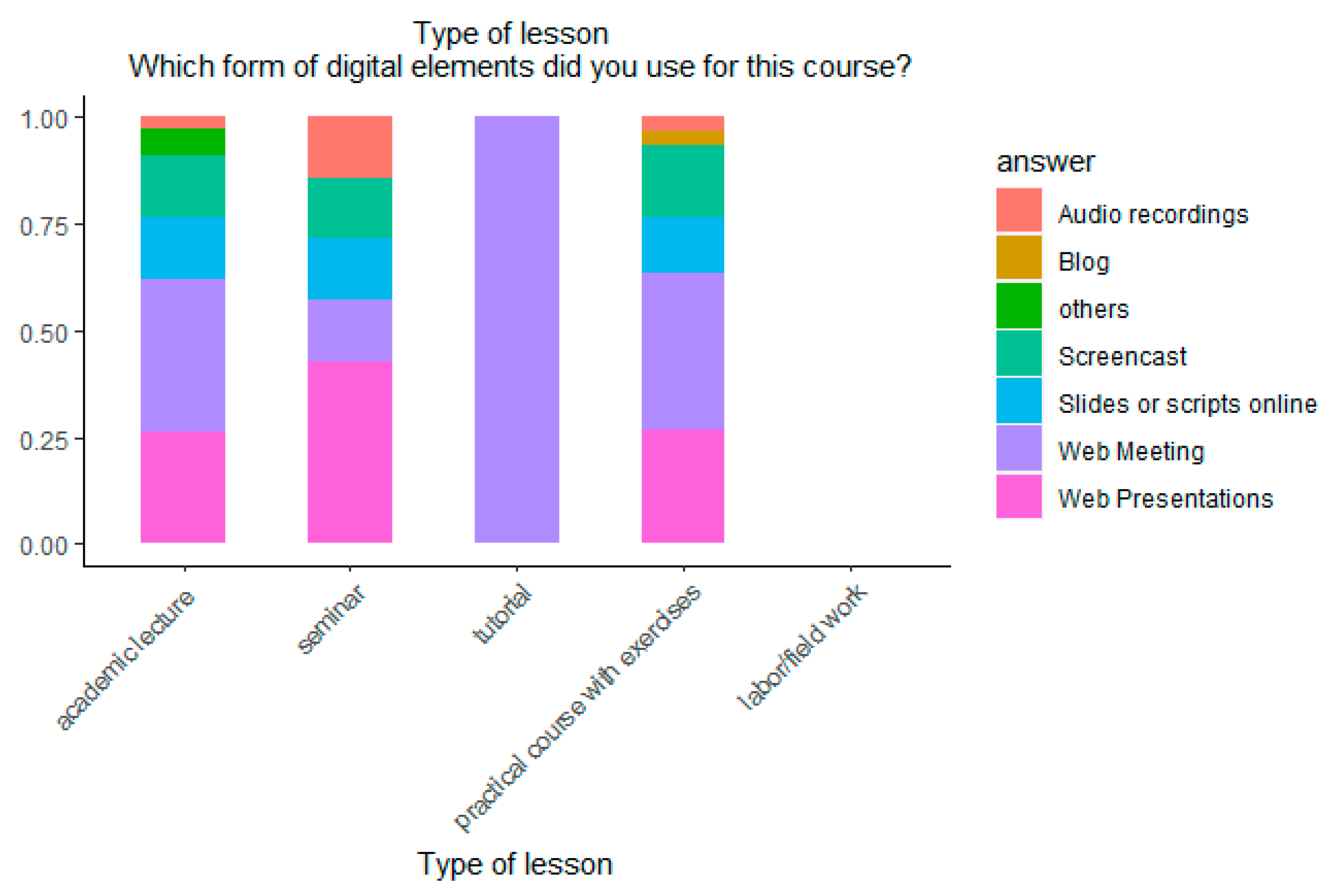

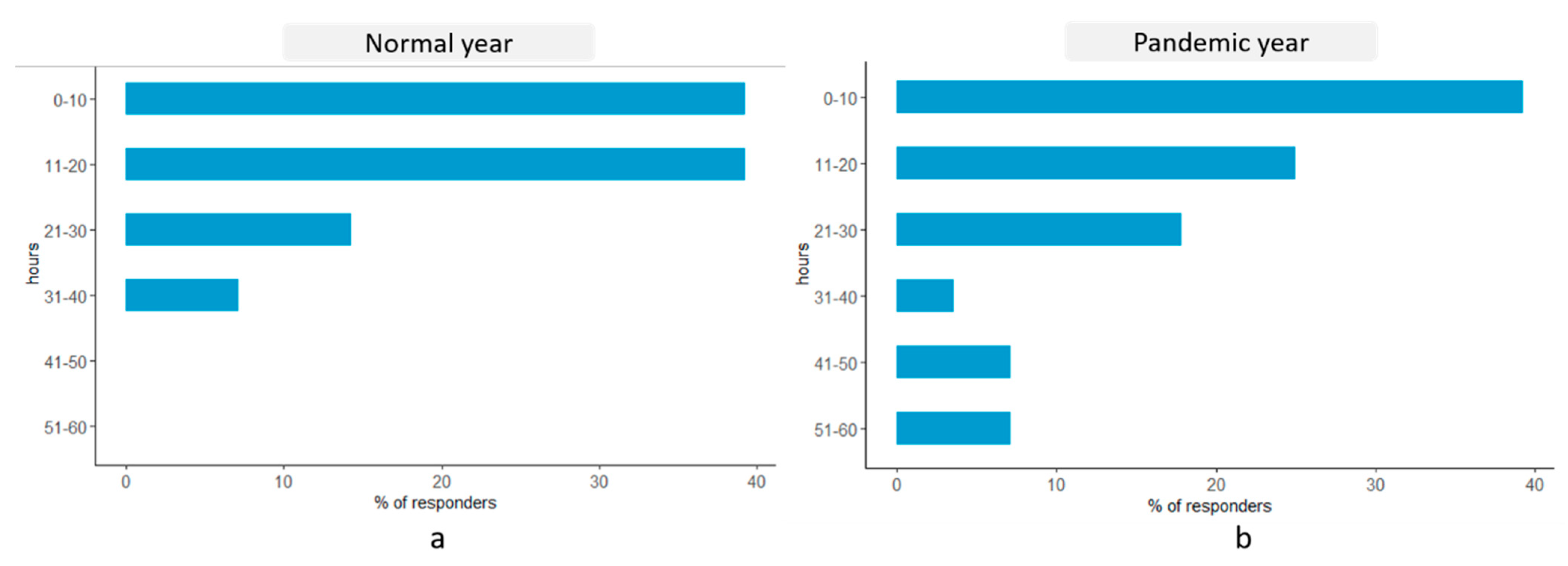

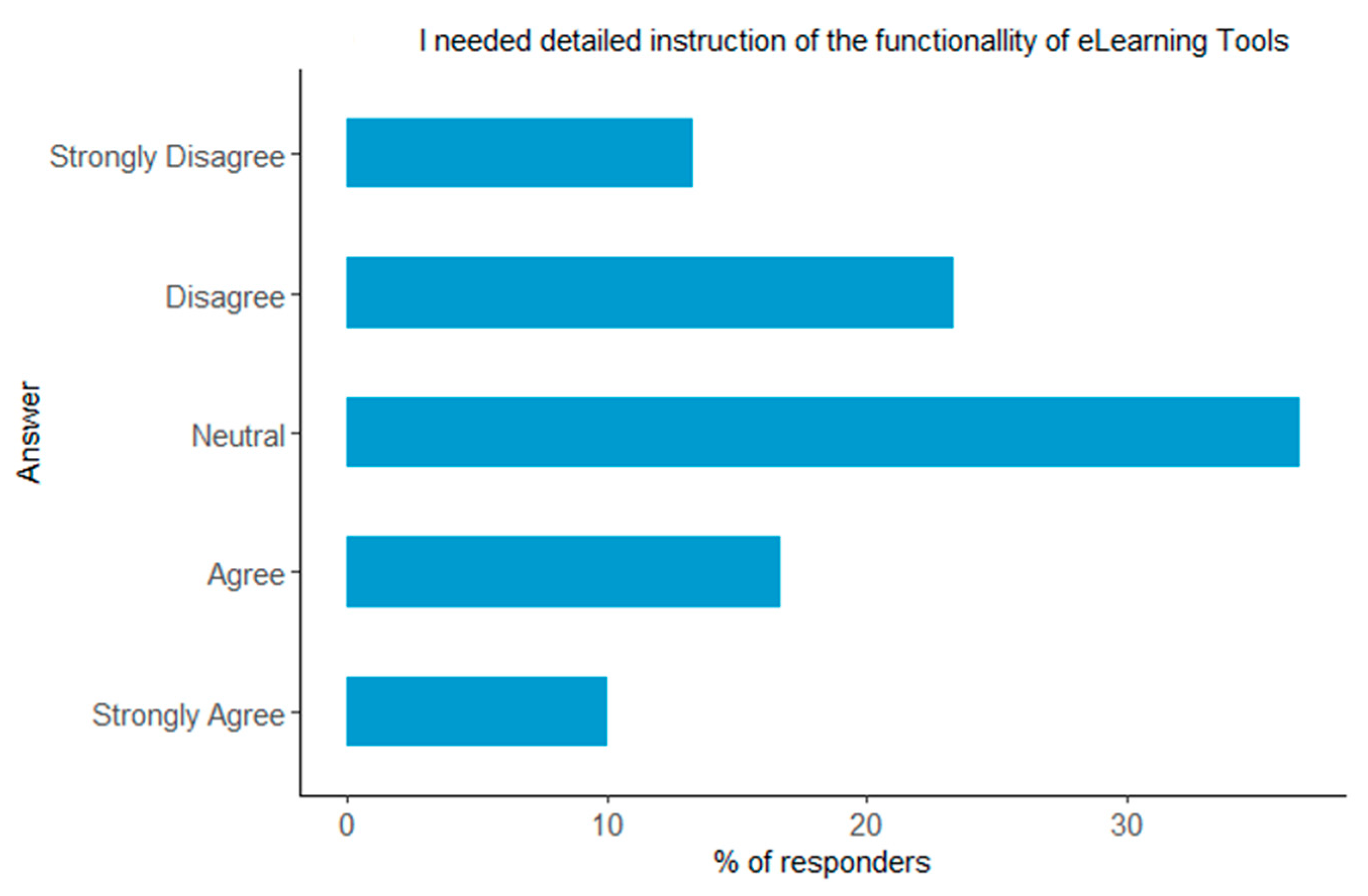

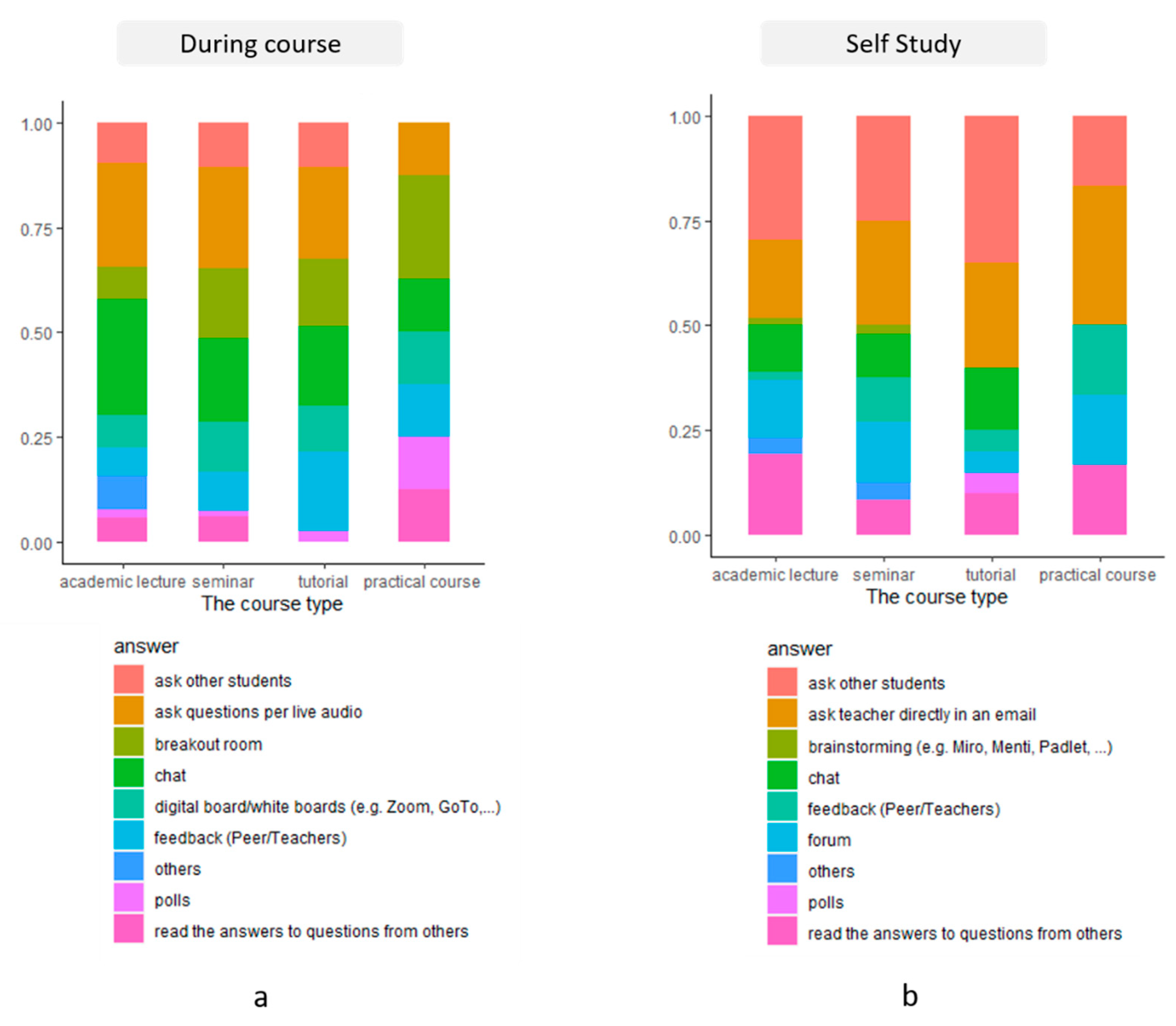

3.1.2. Online Learning Form

3.1.3. Use of Dedicated EO/GI Software and Related Technical Challenges

3.1.4. Self-Appreciation

3.2. Students’ Responses

3.2.1. General Information

3.2.2. Online Learning Form

3.2.3. Use of Dedicated EO/GI Software and Related Technical Challenges

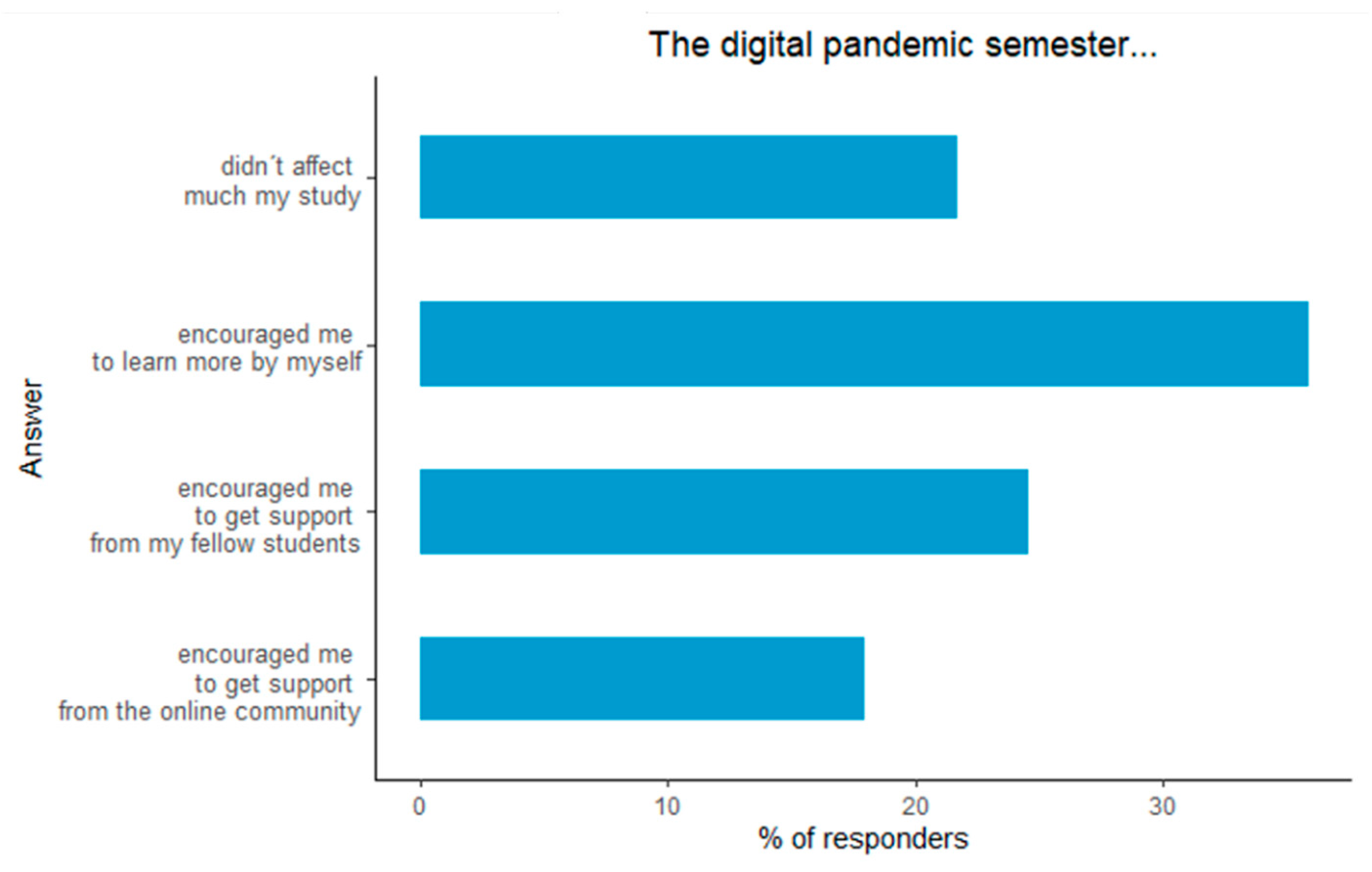

3.2.4. Self-Appreciation

4. Discussion

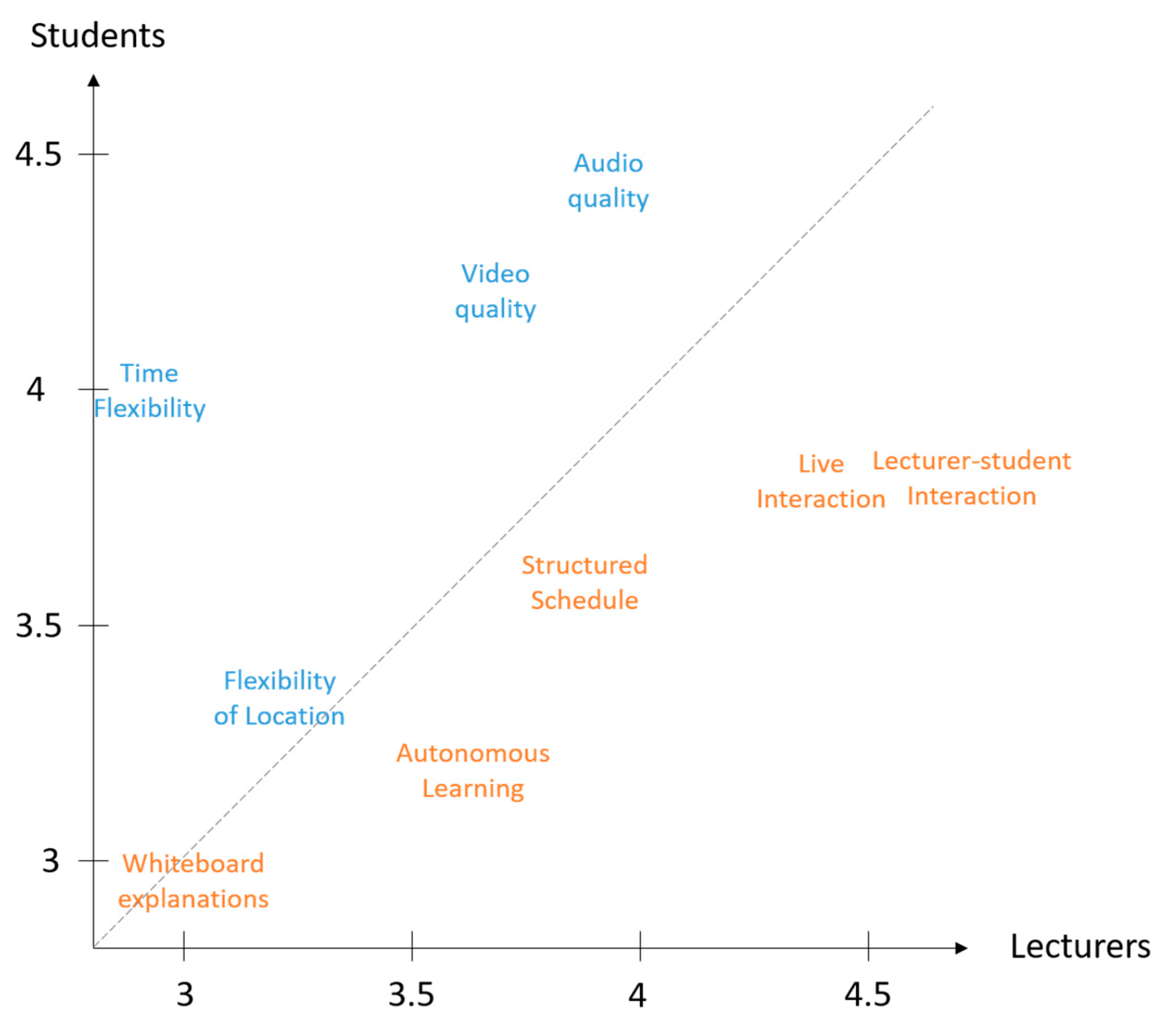

4.1. Different Expectations between Teachers and Students

4.2. Similar Expectations of Teachers and Students

4.3. Proposition of Sustainable Digital Course Designs

4.3.1. Pandemic-like Situation

4.3.2. Hybrid Designs

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Appendix A

- Academic lecture: Formal class in which the lecturer presents knowledge and material in a given subject.

- Seminar: Class combining lectures and practical application, usually requiring students to specialize autonomously in a specific subject, which they then present and discuss with the whole class.

- Tutorial: Small class where a tutor (lecturer or student) discusses the material from the lecture in more detail, with specific exercises.

- Practical course: Tutorial with higher-level exercises, usually involving dedicated software and requiring a deeper understanding of the course material and its connections.

- Laboratory/field work: Applied exercises in laboratory or in the field.

Appendix B

- Web meeting: Live online meeting, usually held with a conferencing tool. Both lecturer and students can use audio and/or video to speak and ask questions, and can share their screens.

- Web presentations: Also called webinars. Similar in principle to a web meeting, except that only the lecturer (and sometimes a moderator) can share their screen. Webinars offer additional interactive features, such as sharing videos with sound, creating live polls, and detailed reporting of chat and Q&A activities.

- Screencast: Pre-recorded video of the lecturer’s screen with corresponding audio and/or video.

- Slide or script online: Lecture slides are made available online without any additional commentary.

- Blog: Dedicated (structured) website with all the course material.

- Audio recordings: Audio recording of the course without video of the corresponding slides/script.


| Aspect | Average Score | Standard Deviation |
|---|---|---|
| Interaction with students | 4.6 | 0.7 |
| Live interaction | 4.4 | 0.9 |
| Asynchronous interaction | 3.2 | 1.2 |
| Autonomous learning of the students | 3.6 | 1.2 |
| Supervision of the students | 3.4 | 1.2 |
| Audio quality | 3.9 | 1.2 |
| Video quality | 3.7 | 1.2 |
| Structured schedule | 3.8 | 1.0 |
| White board explanations | 3.0 | 1.3 |
| Flexibility of location | 3.2 | 1.3 |
| Time flexibility | 2.6 | 1.2 |

| Aspect | Average Score | Standard Deviation |
|---|---|---|
| Interaction with teacher | 3.8 | 1.2 |
| Interaction with other students | 4.3 | 1.0 |
| Live interaction (with teacher or fellow students) | 3.8 | 1.1 |
| Asynchronous interaction | 3.3 | 1.2 |
| Replaying material | 3.9 | 1.1 |
| Autonomous learning, independent research | 3.2 | 1.3 |
| Audio quality | 4.4 | 0.9 |
| Video quality | 4.2 | 1.0 |
| Structured schedule | 3.6 | 1.2 |
| White board explanations | 2.8 | 1.1 |
| Flexibility of location | 3.3 | 1.4 |
| Time flexibility | 4.0 | 1.0 |

| Statement | Average Score | Standard Deviation |
|---|---|---|
| I learned and understood better in the pandemic situation | 2.7 | 1.0 |
| The pandemic situation demanded more self-discipline and self-motivation | 4.2 | 0.8 |
| The pandemic situation provided enough interaction with instructor and classmates | 2.6 | 1.2 |
| Online course materials were practical | 3.4 | 0.9 |
| The structure of the courses (deadlines, expectations) was well defined | 3.4 | 1.1 |
| The instructors were well prepared and organized | 3.5 | 1.0 |
| The instructors responded quickly and efficiently to students’ needs | 3.6 | 1.0 |
| The functionality of the digital elements was intuitive | 3.4 | 1.0 |
| The instructors provided an environment that encouraged interactive participation | 3.2 | 0.9 |
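Each row in the tables above reduces a set of individual ratings to an average score and a standard deviation. As a minimal sketch of that reduction, the Python snippet below computes both values per item and prints them as table rows. It assumes a 1 to 5 rating scale and uses hypothetical responses, not the survey data; the dictionary name `responses` and the helper `summarize` are illustrative only, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the convention behind the reported values is not stated here.

```python
from statistics import mean, stdev

# Hypothetical ratings on an assumed 1-5 scale (NOT the survey's data).
responses = {
    "Audio quality": [5, 4, 4, 5, 3],
    "Time flexibility": [2, 3, 1, 4, 3],
}


def summarize(ratings: dict[str, list[int]]) -> dict[str, tuple[float, float]]:
    """Return (average score, sample standard deviation) per item, rounded to one decimal.

    The sample standard deviation (n - 1 denominator) is an assumption;
    statistics.pstdev would give the population version instead.
    """
    summary = {}
    for item, scores in ratings.items():
        # stdev needs at least two values; fail early with a clear message.
        if len(scores) < 2:
            raise ValueError(f"need at least two ratings for {item!r}")
        summary[item] = (round(mean(scores), 1), round(stdev(scores), 1))
    return summary


if __name__ == "__main__":
    # Print rows in the same "| item | average | SD |" layout as the tables.
    for item, (avg, sd) in summarize(responses).items():
        print(f"| {item} | {avg} | {sd} |")
```

With these hypothetical inputs the script prints `| Audio quality | 4.2 | 0.8 |` and `| Time flexibility | 2.6 | 1.1 |`. Switching to a population standard deviation would yield slightly smaller spreads for the same ratings.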

| Course type | During course: digital element | During course: interaction | Self-study: digital element | Self-study: interaction |
|---|---|---|---|---|
| Academic lecture | | | | |
| Seminar | | | | |
| Practical course | | | | |
| Tutorial | | | | |

| Course type | During course: setting and digital element | During course: interaction | Self-study: digital element | Self-study: interaction |
|---|---|---|---|---|
| Academic lecture | | | | |
| Seminar | | | | |
| Practical course | | | | |
| Tutorial | | | | |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dubois, C.; Vynohradova, A.; Svet, A.; Eckardt, R.; Stelmaszczuk-Górska, M.; Schmullius, C. Impact of COVID-19 on eLearning in the Earth Observation and Geomatics Sector at University Level. Educ. Sci. 2022, 12, 334. https://doi.org/10.3390/educsci12050334
