Abstract

The COVID-19 pandemic has had a strong impact on education at many different levels. This study focuses on the impact of digital teaching and learning at universities in the field of Earth observation during the COVID-19 pandemic. In particular, the use of different digital elements and interaction forms for specific course types is investigated, and their acceptance by both lecturers and students is evaluated. Based on two distinct surveys, one for students and one for lecturers, the use of specific digital elements and interaction forms is suggested for the different course types. For example, academic courses could be held either asynchronously using screencasts or synchronously using web meetings, whereas practical tutorials should be held synchronously, with active participation of the students facilitated via web meeting, in order to better assess the students’ progress and difficulties. Additionally, we discuss how further digital elements, such as quizzes, live polls, and chat functions, could be integrated in future hybrid educational designs that mix face-to-face and online education in order to foster interaction and enhance the educational experience.

1. Introduction

As the COVID-19 pandemic continues, most universities worldwide still provide their students with partially digital educational offers and intend to build upon the experience gained during the pandemic to continue raising the level of digitalization of their educational offer. At the very beginning of the pandemic, most universities had to adapt within a short time to a fully digital term, as both students and lecturers had to stay at home. The transition from face-to-face to digital lectures happened in many different ways, depending on governmental and university requirements, as well as on the lecturers’ willingness to use new techniques and the time available to modify the course contents accordingly. Depending on the type of course, this transition faced some challenges. Especially in the Earth observation and geoinformation (EO/GI) education sector, the use of specialized and often commercial software, as well as field work, represents an important part of university education, and their digital adaptation demands more originality than that of traditional academic lectures.

In this paper, we assess the use of different digital elements for various course types in the EO/GI sector. Based on the outcomes, we suggest the use of specific digital elements for each course type. All outcomes are deduced from a survey conducted among both lecturers and students, in order to improve both the teaching and the learning experience. Additionally, we indicate which digital elements could easily be used even after the pandemic in order to continue providing a digital educational offer, and we suggest how those elements could be adapted to durable eLearning scenarios in higher education. Even if intended primarily for the EO/GI sector, some outcomes can be used for other sectors as well.

1.1. State of the Art

Recent years have witnessed an accelerated evolution in higher education, aiming at the increased use of digital technologies for teaching and learning. Many models, such as blended learning and flipped classrooms, are already facilitated by the accessibility of large quantities of valuable information, tutorials, videos, and course material on the internet. However, even though such models are highly praised and encouraged by many governments and funding bodies around the world, a formalized implementation of digital learning and teaching procedures is still at a fledgling stage. A 23-point model for assessing the eLearning readiness of universities was developed [1], based on the McKinsey 7S framework developed for business strategic development [2]. It encompasses, principally, factors such as the common vision and willingness of the university staff and IT employees to develop such technologies, the existence of, or the ability to create, corresponding delivery platforms and content that can be adapted to fit eLearning strategies, as well as sufficient human resources for implementing those strategies.

The COVID-19 pandemic contributed to an acceleration of the digitalization process at all levels of society, and particularly at universities. It has been described as an “involuntary boost” [3]. This abrupt migration to a digital classroom, i.e., this forced distance learning in a case of force majeure, compelled everyone to adapt in a very short period of time without a specific educational concept. This situation was even called “Emergency remote teaching” [4], as it did not rely on a careful design process, which should be the basis of each new (eLearning) educational model. Nine specific variables that have to be considered when designing an online course were identified [5], among which the pacing (self-paced or regular schedule), the instructor’s and student’s roles online (e.g., listening or collaborating), and the source of feedback (e.g., teacher or peers) play an important role. The authors showed that planning an online course is much more than solely delivering content online and requires the planning of adequate support and interaction activities in a social and cognitive process in order to increase the learning outcomes [4].

When specifically considering the field of Earth Observation and Geoinformation, many instructional designs have been developed for use at a community level and delivered on the internet. At a school level, a portal for “Remote Sensing in Schools”, which aims at a more intensive use of remote sensing at schools through appropriate online teaching of pupils and their teachers, has been developed [6] and enhanced with multiple teaching materials, easy-to-use analysis tools, and augmented reality features in a mobile application (https://www.fis.uni-bonn.de/, accessed on 3 November 2021 [7,8,9]). At a broader educational level, new capacity building solutions in Earth Observation, especially in remote sensing, are being actively developed and new instructional designs are arising, leading to a digital transformation of education in this field [10]. From handbooks on particular applications [11], via diverse training materials such as tutorials [12] and MOOCs (massive open online courses) [10,11,12,13,14,15,16], to slides on a particular topic ([11], https://eo-college.org/welcome, accessed on 3 November 2021), many materials exist for online education in the EO/GI sector. Furthermore, several projects aim at collecting training materials and training offers in the field of GI and EO [17,18] in order to better align curricula at the academic level with the needs of the job market, or at developing dedicated working groups and networks in this field [19]. In particular, an increasing use of recent digital technologies, such as cloud processing, is mandatory for handling an ever-increasing amount of data and extracting relevant information. Teaching in this field is therefore already undergoing a paradigm shift, where the use of up-to-date digital technologies becomes mandatory to ensure a suitable education for the labor market.
All those courses and approaches were carefully designed with respect to a defined audience and purpose, often with the intention of making Earth observation accessible and understandable to a broader public via full online accessibility and self-paced progress.

The assessment of such online instructional designs can be performed at different levels with many different criteria. In comparison to a classroom environment, where contact with faculty members is seen as the most relevant factor for the appreciation of a course by the students, satisfaction with an online course shifts to factors such as suitable technological requirements, instructor accessibility, and interactivity [20]. In general, satisfied online learners are more likely to stay committed for the full duration of a course, and retention rates are better [21,22]. Different approaches have been defined for estimating student satisfaction in online courses, encompassing both criteria on the quality of the content [23,24] and criteria assessing the interactive qualities of the courses [25]. One recurring factor of success of online education, and also one that has been investigated in many studies, is interaction [26]. If students feel isolated in their learning experience, the risk of dropout or unfinished courses or degrees is much higher [20,21,27]. Four types of interaction during online courses were defined [28], whose importance for learner satisfaction has been assessed differently depending on the study: learner–learner [24,29,30,31], learner–instructor [24,29], learner–content [28], and learner–technology. It becomes evident that the satisfaction of the students with a course is interconnected with the satisfaction and motivation of the instructor.

Most of the presented educational approaches and criteria were defined before the pandemic. Hodges et al. [4] stated that “The need to “just get it online” [during the pandemic] is in direct contradiction to the time and effort normally dedicated to developing a quality course”. One important additional parameter when considering online education during the pandemic is that the various lockdowns and limitations of social life affected every part of personal life. They created disruptions outside the university environment as well, so that online education was not a priority, neither for the instructors nor for the students, even though many spent a significant amount of time on the conception of suitable online concepts [4]. In such a short-notice disruption of everyday life, the personality and personal environment of both students and lecturers may also have an increased influence on their satisfaction with new learning methods and their participation in online discussion and communication modes [32]. Closely related to personality, the preferred mode of learning (e.g., just listening, or performing practical experiments) is also very subjective, and defining instructional designs that satisfy all types of learners is challenging [33]. The level of readiness of higher education institutions, as well as the impact and challenges caused by COVID-19 on online learning in higher education, either at a global [34,35,36] or at a more local or country scale [37,38,39,40,41,42,43], have been thoroughly described. One aspect that has been repeatedly addressed was the mode of sharing the course content, which was limited either by data policy issues [43], or by poor internet access and limited proficiency in the use of digital technology [44,45].
A shift towards the use of Social Network Technology (SNT) (social media and messengers) has been observed and recommended for sharing course material [45,46,47,48], even though students and teachers have different perspectives on its use [49]: teachers consider SNT a collaborative knowledge-building tool, whereas students rather use SNT for downloading other students’ work with artifact, resulting eventually in less knowledge building through peer discussion. Although some authors reported that the students’ grades did not change much [50] or even improved during the pandemic [51], they also reported that the cognitive engagement (concentration, class attendance, and enthusiasm) of the students decreased during this period and suggested rewarding the students during the learning process to foster their engagement [51].

The pandemic forced every instructor and student to change their teaching and learning habits and to transfer all courses into the digital world. The move to online teaching has thus evolved from a possibility to a necessity. This paradigm shift is now at the state of refreezing [45]. One principal question addressed in the recent literature about the impact of COVID-19 on higher education is, therefore, if and how this crisis will change educational habits in the future [43,52,53]. Yet, few authors try to extend this analysis to a durable change in the education process. In this paper, we aim to identify best practice examples for different types of courses and to determine an optimized digital course model for EO/GI education, which could be followed even after the pandemic.

1.2. Motivation

The goal of this study is to show, after almost two years of the pandemic, the acceptance of diverse eLearning methods in the field of Earth Observation and Geoinformation (EO/GI), and their sustainability for post-COVID education. In addition to providing an overview of the digital experience at higher education level, it intends to analyze, for different types of courses (e.g., academic course, practical course, seminar), which digital element or combination of digital elements offers the best possibilities for teaching and learning. The term “digital element” is used intentionally, as we do not focus on the brand of specific meeting and gathering tools but rather on their functionalities (e.g., chat, poll, live audio), which can be similar across different tools. The analysis distinguishes between the teachers’ and the students’ experience of the digital year, as the two groups had to cope with different challenges during the pandemic year. This paper, therefore, intends to identify best practice examples and to propose new educational designs for different types of courses, specifically for the EO/GI sector, by finding a common ground for both teachers and students.

To this end, a survey was designed and conducted to answer the following research questions:

- How does the satisfaction of students and teachers with a specific type of course relate to the digital elements used for teaching and for interaction?

- Do we need different interaction tools for different types of interaction (e.g., technical support, exchange of knowledge, organizational support, feedback, learning assessment)?

The following sections present answers to these questions. Section 2 describes the experimental design and the methods used for analyzing the survey answers, Section 3 lays out the results of the survey, and Section 4 discusses them with respect to the research questions, whilst proposing the integration of specific digital elements in future hybrid educational designs.

2. Material and Methods

2.1. Experimental Design

The analysis of the various digital elements and interaction forms used for the different types of courses during the pandemic year relies on the results of a survey performed from October 2020 to January 2021 at different universities worldwide.

2.1.1. General Structure of the Survey

Even though the central questions of the survey are the same for both students and teachers, we developed two distinct surveys. In particular, the questions related to their respective experience in learning or teaching, as well as to data policy issues, differed.

The survey was organized in four different categories, the same for students and lecturers:

- General questions;

- Questions related to the online learning form;

- Questions related to the online learning of GIS and Earth Observation;

- Personal Evaluation of the online semester.

A total of 43 questions for students and 37 questions for lecturers were asked, organized in these four categories, which are explained in more detail in the following sections.

2.1.2. General Questions

The general questions aim to group the respondents into different categories, according to their country, their degree, or their teaching experience. Rather than grouping the participants according to their age [21], we asked the participants which degree they are currently studying for and which year of study they are in. For the teachers, we consider their teaching experience. Additionally, we aim to obtain an overview of the personal situation of the respondents regarding technical equipment and learning or teaching environment. In particular, questions related to the location of learning/teaching (at work or at home) and the learning/teaching conditions (e.g., child care) were asked in order to better analyze the influence of the study place on the perception of the pandemic year.

2.1.3. Questions Related to the Online Learning Form

The questions related to the online learning form comprise questions about the type of course rated for the survey, the number of participants, the type of digital elements used during the course, the form of interaction used during the course and/or during self-study, and the organization of the course (e.g., synchronous or asynchronous).

Five different types of lectures were identified and could be selected for rating: academic lecture, seminar, tutorial, practical course, and laboratory/field work. The corresponding definitions can be found in Appendix A. Only one option could be chosen, and the rest of the survey was intended to be performed for this particular selected course.

Seven options were given for the form of digital elements used during the course: web meeting, web presentation, screencast, slides or script online, blog, audio recording, and others. The corresponding definitions can be found in Appendix B. The survey participants could select several options, e.g., web meeting or web presentation accompanied by the availability of the course slides or script online.

Two separate questions covered the form of interaction used for the course, one focusing on the interaction during the lecture and one focusing on the interaction during self-study time. Whereas the interaction forms during the course include options such as chat, live audio, polls, breakout rooms, and digital whiteboards, the interaction forms during self-study include more asynchronous options, such as forums and email.

2.1.4. Specific Field Related Questions

The questions related specifically to GIS and Earth Observation intended to draw a better picture about the use of commercial and open-source software during the pandemic year, especially which solutions were found to maintain the practice of students with relevant tools and applications whilst coping with the data availability and processing. Those questions also included data exchange and possible problems encountered related to different operating systems.

2.1.5. Personal Assessment

To sum up the perception of the pandemic year, a personal assessment of the learning/teaching experience was performed, based on rating questions. In particular, the importance of different criteria, such as time flexibility, live or asynchronous interaction, and quality of the audio and video material were assessed individually. Furthermore, the time spent for learning or preparing teaching material compared to semesters under “normal” conditions was estimated. General questions, such as the overall satisfaction of studying or teaching during the pandemic year and the possible use of particular digital elements after the pandemic situation were followed by more specific questions about self-appreciation of the learning achievements, importance of the course structure and deadlines, as well as impression about the provided interaction possibilities.

2.1.6. Type of Questions

Different types of questions were used throughout the survey. In the student survey, 13 questions were free-text questions, where participants were asked to give specific information, e.g., about their country of origin or about the reasons why they did or did not appreciate the pandemic year.

Additionally, two types of multiple-choice questions were used for the survey. A total of 15 questions allowed only a single answer. For instance, as the survey aimed at rating one particular course type, only one out of five types of course could be selected. Seven questions could be answered by selecting multiple responses (e.g., different types of digital elements can be used within one particular course).

The remaining questions were rating questions in the form of sliding bars in the range of 1 to 5 (not relevant to very important) or appreciation questions in the form of five different smiley faces ranging from strongly disagree to strongly agree.

The complete survey can be found under the following link: https://science.eo-college.org/covid-19-survey/ (accessed on 11 March 2022). The survey was conceived using the eForm WordPress form builder [54].

2.2. Methods

2.2.1. Target Population

The survey was first sent by email to project contacts and networks of the EO/GI sector, targeting people involved in teaching at the university level. Colleagues were asked to take part in the survey and forward it to their students and corresponding networks in order to maximize its reach and allow an overarching study, not bound to one specific university or country. Furthermore, it was promoted on social media platforms and in dedicated EO newsletters.

As many universities conducted their own survey after the first pandemic term in order to obtain an overview of the impact and acceptance of a completely digital term among students and teaching staff, a moderate participation rate was expected for this survey.

2.2.2. Measurement Instruments

The analysis of the survey responses was performed using different statistical representations and metrics in R (R version 4.0.0, RStudio Version 1.2.5042) [55]. In particular, various bar charts were used to represent the percentage of respondents per category. Additionally, simple statistical metrics were used for questions permitting an appreciation ranking from strongly disagree to strongly agree or from not important to very important, where each category was replaced by a number ranging from 1 to 5. For these questions, the arithmetic mean of the responses was calculated in order to determine the overall consensus of the participants. Additionally, the standard deviation was calculated in order to analyze the spread of the responses, a higher standard deviation indicating diverging opinions on the specific question. Finally, free-text answers were read and analyzed individually, and a ranking of the answers was conducted where necessary.
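As an illustration of the coding step described above, the following minimal Python sketch maps agreement labels to the numbers 1 to 5 and computes the mean and standard deviation. It is not the authors’ R code: the three intermediate labels, the function name `summarize_ratings`, and the example responses are assumptions made for illustration, and the sample standard deviation (n − 1 denominator) is assumed because the text does not state which variant was used.

```python
from statistics import mean, stdev

# Assumed coding of the five-level agreement scale. Only the two end points
# ("strongly disagree", "strongly agree") are named in the text; the three
# intermediate labels are hypothetical.
AGREEMENT_SCALE = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def summarize_ratings(labels, scale=AGREEMENT_SCALE):
    """Return (arithmetic mean, sample standard deviation) of coded answers."""
    codes = [scale[label] for label in labels]
    # stdev() uses the n - 1 denominator; this choice is an assumption.
    return mean(codes), stdev(codes)


# Hypothetical responses, invented for illustration only.
responses = ["agree", "strongly agree", "neutral", "agree", "strongly agree"]
avg, sd = summarize_ratings(responses)
print(f"mean = {avg:.2f}, sd = {sd:.2f}")
```

A mean close to 5 combined with a small standard deviation would indicate broad agreement, whereas a larger standard deviation points to diverging opinions among the respondents.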

3. Results

This section gives an overview of the survey responses for lecturers and students separately. For each group, it is organized in the four main categories of questions (general, online learning form, specific GIS and EO questions, and self-appreciation). For the sake of clarity, only a few selected graphics are presented; the corresponding percentages of participants are given in the text.

3.1. Lecturers Responses

3.1.1. General Information

A total of 31 lecturers responded to the survey, most of them coming from Europe (67.5%) and America (26%). A total of 6.5% of the participants came from Asia; no participants from Africa or Oceania were recorded. A majority (73%) of the lecturers had more than six years of teaching experience, 23% had between 2 and 6 years, and only 4% had less than two years. Overall, 41% of the lecturers taught from home, either alone or without childcare responsibility (24.5%). In total, 27% of the participants had the possibility to teach from the workplace. The majority of the lecturers (84%) rated the level of digitalization of their teaching place as at least very good.

3.1.2. Online Learning Form

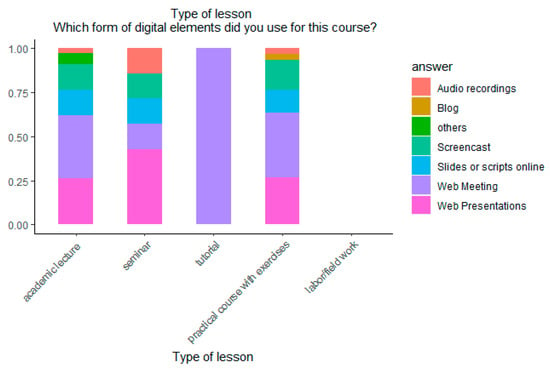

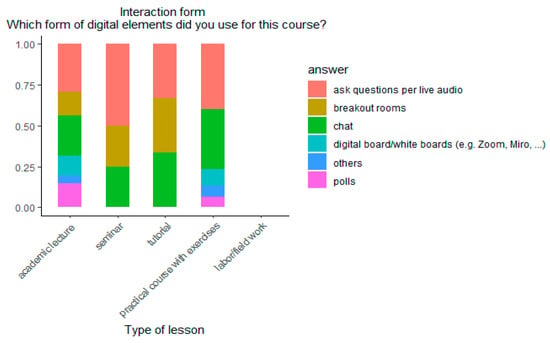

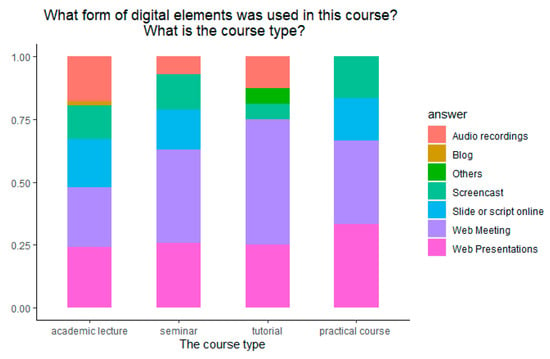

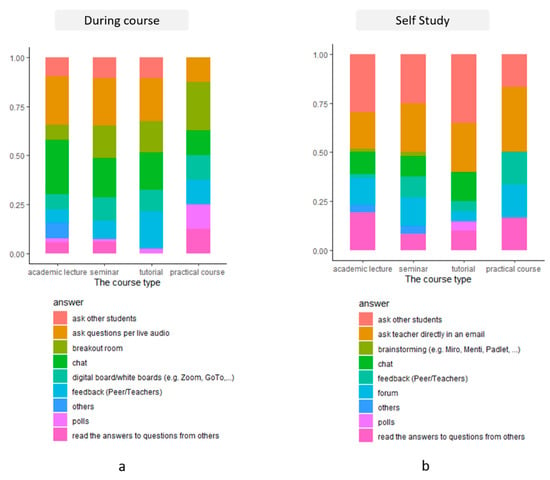

Academic lectures and practical courses with exercises were the course types most frequently selected for rating by the lecturers (43% of the answers each). Overall, 10% of the lecturers decided to rate a seminar during this survey, and 4% rated a tutorial. The majority of the responses acquired during the survey relate to student groups of 10 to 50 students (70%). Most of the courses took place using web meetings or web presentations, i.e., live meetings (62.3%; see definitions in Appendix B). Screencasts were used by 15.2% of the lecturers. A further 14% of the lecturers additionally made their slides or scripts available online for the students to learn from. Only 4% of the participating lecturers recorded their lecture and made the recording available online. Figure 1 shows the distribution of the different digital elements used for the different types of courses, normalized to one for each course type for better intercomparison. Whereas only web meetings were used for tutorials, the other types of courses showed a mix of all digital elements, with web presentations prevailing for seminars. A relatively high number of seminars were audio recorded compared to the other course types. For practical courses, blogs were also used in some cases. Although many lecturers organized their lessons as 1 or 1.5 h videos (46%), 30% reported organizing their sessions by topic, leading to shorter videos of approximately 20 to 30 min, after which screen breaks were planned before starting the next topic, i.e., a few minutes spent away from the monitor for both students and lecturers. A few lecturers reported the use of screencasts in preparation for the lesson and web meetings for reviewing the materials and answering questions, as in a flipped classroom concept. Others used mixed interaction forms or group sessions in web meetings where lecturers and students alternately shared their screens or performed live demonstrations.
Most lecturers found the digital elements they used during the courses appropriate (52%), while 24% had a neutral opinion about their use. In order to facilitate the exchange of course material, most lecturers (83%) used an existing learning management system (LMS) provided by their university. The interaction during the courses was fostered principally using direct questions and answers via live audio (34.7%) or the chat function (29.6%) of the digital elements used. Polls and digital whiteboards were principally used during academic lectures and practical courses, whereas breakout rooms were most used during seminars or tutorials, where group work happens most often (Figure 2). In the free-text options, a few lecturers indicated that they used email as an interaction form during the course, for example for students to send screenshots from practical exercises. The majority of the lecturers (52%) found the interaction tools appropriate, while 20% had a neutral opinion about them.

Figure 1.

Distribution of the forms of digital elements used for each type of course in the field of Earth Observation and Geomatics, as shares normalized to 1 for each type of course.

Figure 2.

Distribution of the forms of interaction elements used for each type of course, as shares normalized to 1 for each type of course.
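The per-course-type normalization used in Figures 1 and 2 can be sketched as follows. The text does not spell out the exact computation, so this minimal Python example assumes that the count of each element is divided by the total number of selections made for that course type; the function name `normalized_shares` and the example selections are hypothetical.

```python
from collections import Counter, defaultdict


def normalized_shares(selections):
    """Map each course type to {element: share}, with shares summing to 1.

    `selections` is an iterable of (course_type, [elements]) pairs, one pair
    per respondent; a respondent may select several elements.
    """
    counts = defaultdict(Counter)
    for course_type, elements in selections:
        counts[course_type].update(elements)

    shares = {}
    for course_type, counter in counts.items():
        total = sum(counter.values())  # all selections for this course type
        shares[course_type] = {el: n / total for el, n in counter.items()}
    return shares


# Hypothetical multi-select answers, invented for illustration only.
selections = [
    ("academic lecture", ["web meeting", "slides online"]),
    ("academic lecture", ["screencast", "slides online"]),
    ("tutorial", ["web meeting"]),
]
shares = normalized_shares(selections)
for course_type, element_shares in shares.items():
    print(course_type, element_shares)
```

Normalizing within each course type makes course types with very different numbers of responses comparable, at the cost of hiding the absolute number of answers behind each bar.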

In total, 83% of the participating lecturers indicated not having encountered any problem due to the data privacy policy of the tools. About 60% of the participants indicated that their institution invested extra money, either for buying licenses and material, e.g., web meeting licenses or webcams, or for additional training of their lecturers in eLearning competences.

No particular correlation could be observed between the overall satisfaction of the lecturers with online teaching and a particular digital element or interaction form, although the combined use of several digital elements showed a generally higher satisfaction than the use of any one of them individually.

3.1.3. Use of Dedicated EO/GI Software and Related Technical Challenges

During the pandemic year, one of the principal challenges for higher education in the EO/GI field was the maintenance of high-quality standards in practical courses, ensuring that the students can use dedicated GIS and remote sensing software and gain practical experience in applications with different relevant software solutions. About 40% of the participating lecturers indicated the use of commercial software solutions during the pandemic year, which was facilitated either through remote login to local computer pools or through extra (campus) licenses bought by the university to be installed locally by each student on their own computer. A few lecturers also mentioned the need to move from expensive virtual desktop infrastructures to low-cost cloud-based solutions. Over 60% of the lecturers also used open-source software solutions for teaching during the pandemic year, mostly GIS and programming software. In total, 40% of the participants indicated problems encountered by their students with the use of the proposed software solutions, mostly due to the use of different operating systems or connection problems, and 60% of the lecturers reported having changed the structure of their practical courses because of software availability or the technical equipment of the students. Many of the responding lecturers encouraged the students to use online help, such as forums, blogs, or tutorials. Others relied on mutual help from student to student during web meetings, or set up individual web meetings to help each student with screen sharing and individual guidance. Even though all surveyed lecturers used their own material, 40% of them additionally used tutorials or existing materials from the community.

3.1.4. Self-Appreciation

The self-appreciation of the pandemic situation in higher education was evaluated according to several criteria, which allows us to deduce which ones are important for lecturers. Based on those criteria, optimal teaching designs can then be sketched (see Section 4). The criteria are summarized in Table 1, and their average score from 1 (not relevant) to 5 (very important) is given together with the standard deviation, which indicates the spread of the responses.

Table 1.

Importance of specific criteria for online teaching; lecturers’ answers are averaged (1 = not relevant, 5 = very important).

For the lecturers, the interaction with the students is essential (score of 4.6 with the lowest standard deviation of 0.7). They prefer live interaction (score: 4.4) to asynchronous interaction (score: 3.2), which is also shown by a score of 3.8 (rather important) for a structured schedule allowing regular live meetings. Autonomous learning of the students (score: 3.6) is slightly more important than the supervision of the students (score: 3.4) for lecturers at higher education levels. A rather neutral score of 3.2 is observed for the flexibility of location, with a slightly higher standard deviation (1.3), meaning that some lecturers value the location flexibility and others do not. The time flexibility is not important for lecturers (score: 2.6), probably because teaching is part of their job and, therefore, belongs to normal working hours. The material quality plays an important role for the lecturers, the audio quality showing a slightly higher importance than the video quality (score: 3.9 and 3.7, respectively). Only the use of or need for whiteboard explanations is rated as neutral by the lecturers, here also with a slightly higher standard deviation, meaning that for some lecturers or particular courses whiteboard explanations may be more relevant than for others.

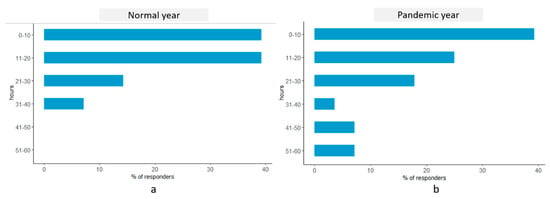

Considering the question whether lecturers appreciated teaching during the digital pandemic year, 80% answered that they still enjoyed it and would like to continue using some of the digital elements after the pandemic. The reasons for the lecturers’ satisfaction during the pandemic semester can be separated into private and educational reasons. The most recurring private reason why lecturers appreciated teaching during the pandemic was that they had less commute time to the lectures. The educational reasons are more diverse: some lecturers reported having more interaction and discussion with students in a virtual situation than in a face-to-face situation, because the virtual situation breaks the usual structures in which teachers are seen as untouchable individuals, whereas in a virtual environment, e.g., when teaching from their living room, they are seen as normal people. Many lecturers were also glad of the new challenge of switching to a digital environment and enjoyed using a combination of diverse digital elements (screencast, web meetings, individual teaching, etc.). Many indicated spending a lot more time preparing their lectures and creating a meaningful learning experience for the students but were satisfied with the outcomes. Figure 3 shows a comparison between the amount of time spent by lecturers on course preparation and student supervision during the pandemic year and in a normal situation, as a weekly average. Although the percentage of lecturers investing 0–10 h a week in teaching did not change, more lecturers recorded a significant increase in time spent on teaching during the pandemic year. The additional time consumption was principally due to the preparation of the lectures for the digital format, as well as to the pre-recording of the lectures. A few lecturers also mentioned additional time spent on uploading recordings or providing additional help for students in the form of one-to-one supervision.

Figure 3.

Weekly average of the time spent by lecturers for teaching (preparation, execution, supervision): (a) during a normal year; (b) during the pandemic year.

Among the 20% of lecturers who did not like teaching during the pandemic situation, various reasons were mentioned: ever-changing demands from the university, which required the teaching concepts to be adapted anew each time at very short notice; significant additional preparation time, especially for practical exercises; and connectivity problems on the students’ side, which hindered interaction.

The digital elements that were particularly appreciated in digital courses were breakout rooms, live polls, the use of videos and recorded lectures in a flipped classroom model, and the learning platforms themselves. In particular, the use of breakout rooms and cloud platforms for practical exercises was recognized as very effective. Many lecturers indicated that they intend to continue using web meetings for individual consultations, even after the pandemic situation. Some reported wanting to keep using the flipped classroom model, especially for tutorials or for ensuring course continuity when the lecturer is indisposed. A few lecturers also mentioned the possibility of continuing live interactions with speakers in other countries, the use of a digital whiteboard instead of an erasable blackboard for keeping track, and the digital correction of exercises and exams, which is much faster using online exam formats.

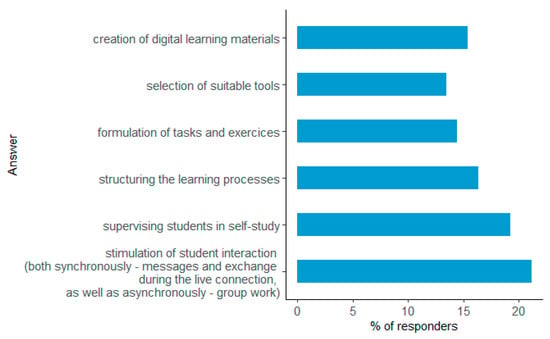

Figure 4 shows several didactical challenges faced by lecturers when designing and conducting classes during the pandemic semester. The major challenge was stimulating student interaction and supervising the students, both during the courses and during self-study, followed by the creation and preparation of didactically appealing digital teaching material. The selection of suitable digital elements for teaching represented a challenge for 13.3% of the respondents. Although 16.7% of the respondents indicated that structuring the students’ learning process was a challenge, 70% estimated that they were able to assess the students’ learning process (not shown here). Most did so using portfolio exams or regular grading of assignments, others using quizzes incorporated in the learning platforms to accompany the lectures.

Figure 4.

Didactical challenges faced by lecturers during the pandemic year.

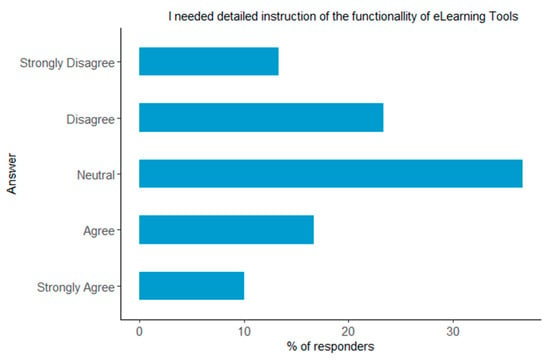

Figure 5 shows, as a percentage of participants, the lecturers’ need for detailed instruction on the functionalities of the eLearning tools. The responses are balanced between a need for instruction (a total of 25.5% strongly agree and agree), no need for instruction (a total of 38% strongly disagree and disagree), and no particular need (36.5% neutral).

Figure 5.

Need of teachers for detailed instructions of the functionality of eLearning tools.

Considering the self-appreciation component, 64% of the lecturers estimated that they had thoroughly explained the structure of the course. Most lecturers also estimated that they responded quickly and efficiently to students’ needs during the pandemic semester (70% strongly agree and agree).

When asked whether they had any wishes for improving the eLearning tools and what would be needed to use the digital elements in the long term, many lecturers mentioned that the tools were not the issue in the pandemic semester, but that they need to be used correctly to unfold their full potential. In this sense, some teachers answered that the digital elements need to be easier to use or should come with specific tutorials. A few specific improvements were mentioned, for example, an easier way for lecturers (and hosts) to move in and out of breakout rooms, facilitated screen sharing with students including “pointer” sharing to ease the interaction, easier use of the quiz elements of specific learning management platforms, and improved interaction in videos and whiteboards.

3.2. Students Responses

3.2.1. General Information

A total of 73 students participated in the survey, 89% of whom came from Germany. The second most represented country was the USA, with 6% of the participants. The remaining participants came from Brazil and Italy. Overall, 45% of the student participants were studying for a Bachelor’s degree, 15% for a Master’s degree, and 37% were enrolled in a teacher training program at the university. The remaining 3% were Ph.D. students in their first year of study. About 44% of the Bachelor participants were in their first year of study and 31.5% in their second year. Among the Master students, 64% were in their first year of Master studies. Similarly to the Bachelor student distribution, 44.5% of the students in teacher training were in their first year and 29.5% were in their second year. The remaining 26% were past their third year of study. The study places of the students were almost equally distributed between a flat shared with other students (35.5%), at home alone (33.5%), and at their parents’ home (27%). Many indicated a combination of two places of study during the pandemic year, as they did not have to go to the university. The level of digitalization of the students’ study places was rated as good by 42.5% of the students; a total of 51.5% rated it as either very good or excellent, and 6% rated it as bad.

3.2.2. Online Learning Form

A majority of the students rated academic lectures during the survey (61.5%), 21.5% rated seminars, 12% tutorial courses, and 5% practical courses. The majority of the responses acquired during this survey relate to groups of 10 to 30 students (45.2%), followed closely by groups of more than 100 students (42.5%). Most courses took place using either web meetings or web presentations (55%), accompanied by slides or scripts made available online (17%). In total, 13.5% of the participating students also reported the use of screencast during their courses and 13.5% reported the use of audio recordings. Figure 6 shows the distribution of the digital elements per course type attended by the students. As in the lecturers’ survey, the digital elements used most during the pandemic year were synchronous elements such as web meetings and web presentations. More web meetings were used during tutorials, again in line with the lecturers’ survey, allowing more interaction during the course. It can also be observed that no audio recordings were made during practical courses. Instead, a combination of web presentations, meetings, or screencast together with slides or scripts available online was used.

Figure 6.

Distribution of the digital elements for the different course types (students).

In total, 76% of the surveyed students mentioned that they attended the courses regularly. Overall, 37% of the students had a neutral opinion about the digital elements used during courses, while 39.6% found them appropriate and were satisfied with their use. A rather high number of students (23.4%) found the digital elements not appropriate and did not enjoy their use. For 95% of the students, the course materials were made available on a learning management platform. As interaction form during the courses, most students favored asking questions via live audio or chat (23.5% and 23%, respectively). In addition, 13% reported the use of breakout rooms during the courses, and 9.7% of students reported having asked other students directly. For 9.7% of the participants, the digital whiteboard function was used as an interaction form. Polls were used in 2.4% of the courses attended by the students. Overall, 3.7% reported no form of interaction during the courses because of the asynchronous concept of the course. During self-study, the largest share of students reported having asked fellow students for support (28%) before asking the teacher directly via email (21%). Forums were used as an indirect form of interaction during self-study, where students could discuss (13.6%) or read the answers to questions from fellow students (15.1%). Live consultations via web meeting with peers or teachers happened in 5% of the cases. A few students (1.5%) reported the use of quizzes, made available on the learning management system (LMS), as a form of interaction with the course material for self-study. As for the digital elements used for the courses, most students (50.1%) had a neutral opinion about the interaction forms used during courses. Although a total of 24% indicated rather enjoying their use, 25.9% found them inappropriate and did not appreciate them.

Regarding the form of interaction used for each type of course (Figure 7a), chat was favored by students during academic courses, followed closely by questions via live audio. During seminars and tutorials, live questions were favored over chat, as they encourage discussion. Whereas breakout rooms were not used much during academic courses, their use increased during seminars and tutorials, and they were the most used interaction form during practical courses. Direct feedback from peers and teachers, also using live audio, was used the most during tutorials. It is also observable that the use of polls and of only listening to or reading the answers from peers increased during practical courses, where a few students may participate actively and shy students may prefer to listen. This differs from the results of the teachers’ survey (Figure 2), where lecturers reported many questions via live audio. Additionally, the participating lecturers did not use breakout rooms during practical courses but favored their use during tutorials and seminars. In the students’ answers, an increase in the use of digital whiteboards is noticeable during tutorials and seminars. During self-study (Figure 7b), students helped each other for all types of courses. The proportion of students who asked the teachers directly via email if problems or questions arose during self-study was almost twice as large for practical courses as for academic courses. For academic courses, seminars, and tutorials, students also used chat services such as group messengers to communicate with each other. Forums and reading answers to questions from others were used principally for practical courses and academic lectures.

Figure 7.

Distribution of the interaction forms for the different course types (students): (a) during the courses and (b) during self-study.

When suggesting further interaction forms, a few students asked for regular, dedicated public chats or virtual rooms to encourage communication between students outside of the course. Many students insisted that live interaction is important. Options such as forums and online meetings dedicated to students were suggested, as well as further tools offering chat rooms for students only, which could better support practice-oriented seminars. A few respondents even suggested individual breakout rooms for consultations. Additionally, regular extra meetings with the lecturer to clarify organizational questions (form of the exam, deadlines, workload) were mentioned as a possible improvement. A few students indicated that quizzes and screen breaks during courses are appreciated and help them stay focused.

3.2.3. Use of Dedicated EO/GI Software and Related Technical Challenges

The use of dedicated GIS and remote sensing software is highly relevant for the training of the students. Even if open-source solutions are valued in education, specific tasks require commercial software solutions, and students should obtain an overview of and become familiar with a broad range of software solutions during their education so that they stay competitive on the job market. In total, 26% of the surveyed students reported the use of commercial software during the pandemic semester, and 52% used open-source solutions. In the case of commercial software solutions, students reported that their universities bought extra licenses or that the software provider supplied extra student licenses. Where no extra licenses were available, remote access to the computer labs of the universities was provided. Of the students who used dedicated EO/GI software, 30% reported having encountered problems with the software, either with its installation or with its use. Most students asked their fellow students for help or used online tutorials (32.5%) and forums (41%) to solve their problems by themselves. A few students reported having used the help provided by their lecturer, either via online meeting or email, and one student reported having given up trying, as problems persisted.

3.2.4. Self-Appreciation

The self-appreciation of the pandemic situation in higher education was evaluated according to several criteria, from which it can be deduced what is important for students to facilitate their learning experience. By considering the importance of each criterion together with the lecturers’ self-appreciation, optimal teaching designs can then be sketched. The criteria are summarized in Table 2.

Table 2.

Importance of specific criteria for online learning—students’ answers are averaged; 1 = not relevant—5 = very important.

For the students, interaction with the teacher is less important than interaction with their fellow students (score of 3.8 compared to 4.3). They have a quite neutral opinion about live (score of 3.8) or asynchronous (score of 3.3) interaction, with a standard deviation higher than 1 in both cases, showing some differences of opinion between students. In comparison to the lecturers, the students consider the quality of both audio and video essential (scores of 4.2 and 4.4, respectively), which makes sense as they are at the receiving end of the courses and should be able to see and hear the provided content. Whereas a structured schedule and the flexibility of location are not very important for the students (scores of 3.6 and 3.3, respectively), time flexibility is a very important factor for them, with a mean score of 4.0. The lecturers did not consider this last criterion important, probably because lectures are part of their work schedule.

When asked the same question as the lecturers, namely whether they appreciated digital learning during the pandemic semesters, 60% of the students answered negatively, against 40% who rather liked it. Students who appreciated digital learning mentioned time flexibility and not having to commute between their home and the university. A few students also mentioned that they liked organizing their learning routine more freely than with a structured schedule, and that they were less stressed before exams. All students who did not appreciate learning during the pandemic situation mentioned in the free-text answers the lack of interaction with their fellow students as the principal source of boredom and stress. A few students mentioned that they felt left alone because of the isolation and the asynchronous interaction. They also mentioned that it was harder to concentrate, especially given the external circumstances. A few students who did not appreciate digital education mentioned insurmountable technical difficulties; others mentioned a heavier workload due to more housework and felt misunderstood by the lecturers. Some felt overwhelmed by the lack of guidance. One student summed up the whole situation in a few sentences: “On the one hand, I would have been feared going to the university (because of the virus). On the other hand, I missed going out, seeing other students, sitting in seminars. Because of the online-semester only “the worst part of studying” was left: learning at home alone”.

The level of satisfaction with the digital semester seems to depend on the type of course attended by the students. In total, 100% of the students rating a practical course appreciated the digital semester and 44% of those rating a tutorial appreciated studying during the pandemic situation, whereas only slightly more than 30% of those rating an academic lecture reported appreciating studying online. This could be due to more intensive live interaction during practicals, where more breakout rooms were used and allowed peer-to-peer interaction. This result should be considered carefully though, as the sample size varies between the different course types (practical courses account for only 5% of the responses). Furthermore, while there is no correlation between the place of study and the level of satisfaction, there seems to be a small correlation between the year of study and the satisfaction with online education. Whereas students in their early Bachelor studies mostly did not appreciate studying during the pandemic situation (about 33% reported enjoying studying last year), 55% of the Master students reported liking it. This could be explained by the usually smaller groups of students during Master studies than during Bachelor studies, which facilitates group work, as well as by a more independent way of working during the Master studies. Additionally, an increase of 10% in the satisfaction level is noticeable for future teachers between their first and second year of study, which could be explained by the fact that students in later study years already know each other and the workings of the university.

A majority of the students (53%) reported spending less than 30 h a week studying during the pandemic situation. In total, 24% reported spending between 31 and 40 h a week studying, and a total of 25% spent much more time (41–60 h). Of the latter, a few mentioned that they did not have anything else to do (“positive side-effect”), while many more reported a heavier workload set by the lecturers or explained that they ended up rewinding and pausing the recorded videos and taking notes as if in a dictation (“negative side-effect”, as the students were not able to identify the essence of the course). In addition to the learning itself, many students reported taking much more time to organize themselves, to understand the structure of the courses on the learning platform, and to obtain all necessary documents via the different channels used by the different lecturers. Some students also reported problems with their connection or with particular software that were challenging to solve and took much more time than in a face-to-face situation.

When asked whether they would like to receive further online learning offers after the pandemic situation, 47% of the students answered affirmatively. The principal digital element that students would appreciate keeping after the pandemic situation is the video recording of lectures, which can be rewound at will for exam preparation. Some students also mentioned that short eLearning components and short video clips are a great add-on that should be kept. Additionally, a few students mentioned that they particularly appreciated the online tests and exams. Many students found the digital form of seminars and tutorials via web meetings great and would like to continue in that way.

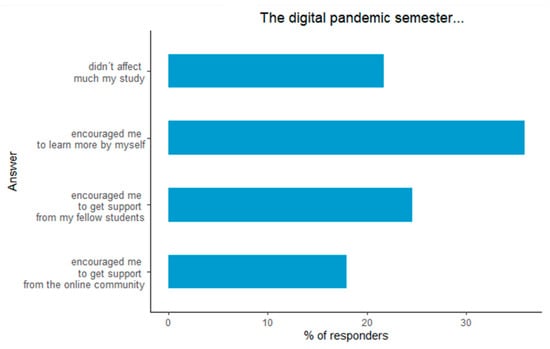

Concerning self-appreciation, 22% of the students think that the pandemic semester did not affect their studies much. In addition, 36% felt that they had been encouraged to learn more by themselves, 24.5% received more support from their fellow students, and 18% also received support online using community forums (Figure 8).

Figure 8.

Students’ answers to the multiple-choice question about how much the pandemic situation affected their studies in the field of Earth Observation and Geomatics.

Table 3 summarizes the self-appreciation scores of the students during the pandemic situation, with scores ranging from 1 (strongly disagree) to 5 (strongly agree).

Table 3.

Self-appreciation scores for students during the online pandemic situation—the scores given by all students are averaged.

The majority of the students indicated that the pandemic situation required more self-discipline for studying (mean score of 4.2 with a low standard deviation of 0.8). They have a neutral to disagreeing opinion on whether they learned and understood better during the pandemic situation (score of 2.7), and indicated a similarly disagreeing opinion on whether the pandemic situation provided enough interaction with instructors and fellow students (score of 2.6 with a standard deviation of 1.2). The students’ opinion of the intuitiveness of eLearning tools and of the lecturers’ organization and support was neutral to positive, with scores between 3.2 and 3.6 and a maximal standard deviation of 1.1. In particular, the higher scores of 3.5 and 3.6 achieved for the questions of whether the instructors were well prepared and provided enough support confirm the self-estimation of the lecturers (Section 3.1.4).

4. Discussion

The answers from the survey show, in particular, that more direct forms of interaction were favored in specific course types: a higher percentage of digital elements fostering direct and live interaction was used in seminars and tutorials than in academic lectures. This is probably due to the different didactical challenges of these course types: whereas seminars and tutorials usually require a lot of interaction among students and with the lecturers, an academic course is usually designed to supply students mainly with theoretical content, which is usually delivered one way, from the lecturers to the students, without much interaction. An additional explanation for the use of more interactive tools during seminars and tutorials could be the usually lower number of students than in an academic course, which facilitates direct dialogue amongst the participants.

The survey also showed that all lecturers continued using their own material for the courses. However, a large share (40%) additionally used Open Educational Resources (OER). This shift towards the use of existing material from the community could be supported and encouraged even more in different kinds of courses: OER in the form of, e.g., screencasts could be used to prepare for academic lectures or to consolidate knowledge and skills afterwards. Additionally, for tutorials and practical exercises, many tutorial videos exist online that explain specific tasks with a particular software solution (usually open-source software); such videos could be used to support the students in solving specific parts of their practical applications.

In general, it is observable that the pandemic situation posed challenges for both lecturers and students. Whereas lecturers mostly faced didactical challenges related to the rapid migration to digital teaching formats, students faced challenges related to their organization and self-discipline. Both missed interaction, whether during lectures (teacher–student interaction) or during self-study time (student–student interaction).

Whereas lecturers mostly considered the pandemic situation as a positive challenge and a possibility to adapt their classes into a digital format and learn new teaching methods, many students were overwhelmed by the new situation and felt left alone or misunderstood. In order to design teaching and learning methods suitable for both, those diverging points of view are essential to understand and take into consideration. Here, we want to draw attention to the main discrepancies and the main similarity between lecturers’ and students’ opinions in order to give advice and outline possible use of digital elements in future, partly digital educational concepts.

4.1. Different Expectations between Teachers and Students

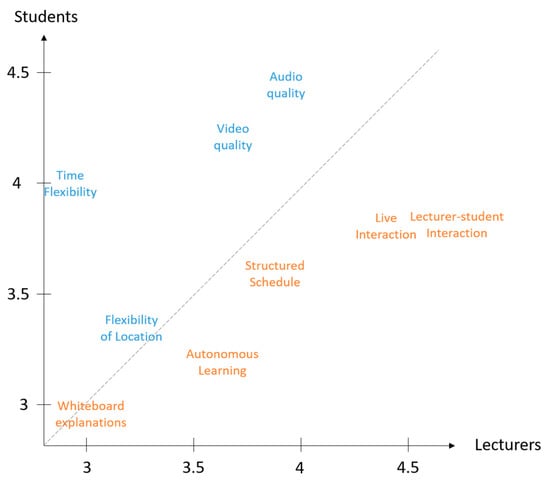

There are many points where lecturers and students favor different criteria for teaching or learning (Figure 9). One of them is “time flexibility”. Whereas it is of high importance for students, it is not recognized by lecturers as an essential criterion for good teaching. As lecturers usually hold their lectures during their working hours, they do not much question whether the lecture should take place at another time, as they would otherwise continue with their research. Similarly, audio and video quality are more important for students than for lecturers. This is understandable, as students are at the receiving end of the course: they mostly watch and listen, whereas the lecturers speak. It is therefore much more important for students that they can properly hear the content of the lectures, and this may be a factor that is not fully appreciated by the lecturers. Additionally, whereas live interaction with students is a very important criterion for lecturers (score of 4.5), students favor interaction with their fellow students and have a more neutral appreciation of live interaction with the teachers (score of 3.7). This assertion needs to be considered in more detail. It appears to depend slightly on the students’ year of study and on the type of lecture attended. Whereas Bachelor students have a rather neutral opinion of the importance of interaction with their lecturer (average score of 3.5, not shown here), students in teacher training think that this interaction is important (average score of 4.0, not shown here). Additionally, the need for teacher interaction seems slightly more important for tutorials and practical courses (average score of 4.0, not shown here) than for academic lectures (average score of 3.7, not shown here). Academic lectures usually require less student participation, and here it seems that live interaction with the lecturer is less necessary than in tutorials and practical courses, probably because more questions arise when doing practical exercises than while just listening to theoretical content.

Figure 9.

Criteria importance for students and lecturers in the field of Earth Observation and Geomatics, according to Table 1 and Table 2. Blue criteria indicate a higher importance score for students, orange criteria for lecturers. The axes represent the average scores obtained from both students and lecturers for these criteria.

4.2. Similar Expectation of Teachers and Students

Whereas time flexibility was a diverging criterion, a structured schedule is of relatively high importance for both students and lecturers, probably as it allows both to plan their week or day according to the lecture schedule (Figure 9). Similarly, white board explanations are considered a nice add-on, but not mandatory, by both sides, probably as this depends strongly on the didactical context in which they are used.

Although not directly asked during the survey, the free-text answers show that a good structure of courses and learning processes, with clear tasks and deadlines, is very important for both teachers and students in an online context. Teachers spent much time restructuring the learning processes during the pandemic semester, especially due to much shorter terms, so that the students could better understand the course structure and receive the essential content. Probably due to the shortening of the terms, this resulted in an additional amount of work for the students that was not foreseen by the teachers and came on top of the other challenges they were facing during the pandemic situation. An intuitive use of digital elements is also appreciated by both lecturers and students. In particular, the use of polls and small tests between the courses for checking comprehension and the use of breakout rooms for group work were appreciated by both sides, as they often help to reach the didactical goals of the course in which they are used.

Finally, supervision of the students during self-study is an important criterion for teachers, and students expect quick support from their lecturers. During the pandemic situation, direct contact via email, forum, or short conference calls with specific students or student groups seemed to work well.

4.3. Proposition of Sustainable Digital Course Designs

With respect to digital teaching and learning, the pandemic situation had both positive and negative side effects. Among the positive effects, it is undeniable that the level of digitalization in higher education increased in all disciplines: many tools were made available by the universities in a short period of time, and diverse solutions were found to provide course material and content “as best as possible” while trying to keep the didactical goals of each course in a digital environment. At the same time, a negative side effect may arise from the many solutions provided and their sometimes suboptimal use. Lecturers had to decide in a short amount of time which digital element was best suited for which course, and students had to cope with the digital elements and learning platforms favored by their lecturers. In particular, students in their first year of study reported that the use of several learning platforms and digital elements made the start at the university very difficult. They already had to find their bearings in a new environment, and changing software or learning method for each course was very challenging. Especially for first-year students, a clear course structure on the learning platform and the use of a single platform for all materials of all courses are of high importance.

Taking into account the answers and needs of both lecturers and students resulting from the survey, we propose here the use of specific digital elements and means of interaction according to the different types of courses and their respective didactical goals. Each type of course is indeed related to a specific didactical challenge that should be facilitated by the use of specific digital elements. Additionally, some types of courses are often attended by a larger number of students, requiring different means of interaction than smaller classes. Finally, in order to allow for more flexibility and respect the different learning or teaching preferences, the use of supplementary material or elements is suggested. A distinction is made between the use of specific digital elements and interaction forms during the course and during self-study time.

4.3.1. Pandemic-like Situation

In this case, we consider a completely digital teaching and learning environment, which happens under severe lockdown conditions and may only make sense in the case of an ongoing pandemic situation or in a future pandemic situation (Table 4). There is, of course, not only one concept for a particular type of course. The following is rather to be seen as suggestions based on the respective experiences of teachers and students from the survey. Additionally, the suggested media do not claim to be exhaustive: the final selection of specific methods and media should be goal-oriented from a didactical point of view.

Table 4.

Proposed digital elements and interaction form for different course types, during a pandemic situation.

In order to keep a structured schedule and allow direct feedback, a live web meeting using, e.g., white board functionalities could be used during academic lectures. Screencasts organized by topic could also be used instead of a live meeting if the focus of the course is to provide students with theoretical content. In order to encourage the students to keep to their schedule and focus on the essence of the course, all screencasts or live recordings could be made available for self-study only for a limited amount of time (e.g., a few weeks), including for students not able to attend the course. If a web meeting is used during the course, students could ask live questions or make use of the chat function, allowing the lecturer to answer each question when it best suits the course topic. One possibility to enhance interaction during academic lectures without changing the overall didactical goal of such a lecture would be to use digital elements such as live polls in between, in order to verify that the content has been well understood by the students and to react accordingly, for example by explaining again a topic that may have been misunderstood by a majority of the students according to the poll results.

During self-study, dedicated comprehension and knowledge tests could be made available to the students on the learning management platform during the semester for self-assessment, either on a weekly or on a topic basis. A forum could additionally be offered to discuss specific topics or clarify organizational issues. This form of lecture and interaction shows some similarities with Massive Open Online Courses (MOOCs), which are usually available for a limited amount of time and in which screencasts combined with self-assessment tests are usually used as the medium for communicating knowledge. The advantage of such a lecture form is that there is still a possibility to interact directly with the lecturer, e.g., during dedicated Q&A web meetings. This form has already been adopted, for example, in recent summer school formats (https://eo-college.org/sar-edu-sommerschule-2021/, accessed on 11 March 2022).

Seminars, practical courses, and tutorials are usually course types where more interaction is encouraged, either between students and lecturers or amongst students. Therefore, it could be advisable to observe a strict schedule in order to foster this interaction, and no recordings of the course should be made available online. As those course types focus on different types of interaction and student participation, different digital elements are suggested. For seminars, the emphasis is on the interaction of students with each other. Screencasts could be used for teaching short theoretical content, and web meetings could be used to allow student interaction and to foster the discussion and argumentation skills of the students through exchange with peers. Different types of interaction could be considered depending on the group size, e.g., breakout rooms organized by topic could be used in larger student groups to allow the participation of each student. As active student participation should be encouraged, the chat function of web meetings could be restricted to the exchange of useful links. During self-study, Open Educational Resources could be relied upon to provide more information about a specific topic or practical application. Additionally, specific web meetings organized by and for students only could be considered to exchange ideas and advice in an informal way. Different forum threads could additionally be created on the LMS for the different seminar topics to keep everyone informed and foster peer feedback. For practical courses, live web meetings should be preferred, as there is usually an intensive teacher–student exchange during the practical application and a direct need for support with specific software. In order to account for the possibly different computer capacities of the students, access to computing and storage resources could be granted using institutional storage and computing facilities or remote access to existing computer pools.
Additionally, if the use of specific commercial software is planned during the course, specific license models could be used if remote access to institutional facilities cannot be granted. Especially in the field of Earth Observation, specific educational licenses could be considered for accessing cloud platforms where Earth Observation data and processing tools are already available (e.g., RUS [56]). Depending on the group size, short breakout sessions could be suggested, in which students help each other resolve a problem and then come back to the main room to discuss the solution with the lecturer. For help with specific technical problems or software issues during self-study, specific forum threads could be used, which would allow students with similar problems to discuss them and find a solution together with the lecturer. The interaction with the lecturer during self-study time could be limited to web consultation in case of remaining issues.

Similar to the practical courses, web meetings could be used for tutorials, with students interacting during the meeting either via chat or by asking their questions via live audio. Depending on the exercises, a cloud platform could be used to exchange data and documents. For self-study and preparation of the tutorials, short, topic-related screencasts could be used. To foster interaction amongst students, specific web meetings could be arranged to solve the exercises among peers, and self-assessment tests on the LMS could be used to confirm achievements.

4.3.2. Hybrid Designs

In the case of a hybrid course situation, we consider educational designs that may arise after the pandemic situation, when courses can take place either face-to-face or digitally (Table 5). For all cases, we assume that all students attend class in the same way, i.e., all face-to-face or all in digital format. Even if the pandemic has shown that it is possible to divide the students into several working groups, some attending the course face-to-face and some virtually, this is not recommended for the future because, besides a larger organizational load, equal opportunities for all students cannot be guaranteed. In the case that most courses go back to a face-to-face format during the scheduled course time, the use of specific digital elements to enhance interaction and support the didactical concept is suggested. Additionally, for self-study time, new digital opportunities could be used in order to foster interaction and communication amongst students.

Table 5.

Proposed digital elements and interaction form for different course types, during a hybrid situation.

In a hybrid situation, academic lectures could take place in face-to-face format on a regular schedule, to allow active student participation and a direct reaction of the lecturer to the students' behavior. As the principal goal of an academic lecture is to provide information about a specific topic, this content could additionally be provided in the form of screencasts, to be viewed either before or during the course. Those screencasts could be short and organized by topic, in order to present the essential information that is then developed and discussed in more detail during the face-to-face course. To foster interaction during a face-to-face course and engage all students with the course content, polls could be used as a digital enhancement of the course to receive direct feedback from the students on whether a notion has been well understood or whether additional explanation is needed. During self-study time, content-related screencasts or recordings of the face-to-face course could be made available for students to review the course content. Such recordings also give students the possibility to attend academic lectures in a digital format only. In order to keep a structured learning schedule, the recordings could be made available online at the time of the corresponding lecture. As such recordings are important for exam preparation, they could be left online until the end of the term. In this case, students should take care to identify the essence of the course instead of watching the videos on replay. Different elements for fostering interaction with the course content, such as self-assessment tests on the LMS, or interaction amongst students and with the course content, such as topic-dedicated forum threads, could additionally be used during self-study time for exchange on an informal basis.
A specific forum thread for organizational matters could also allow shifting all organizational issues online and focusing the face-to-face interaction time on the course content. Such approaches are reminiscent of blended-learning models, where face-to-face time is enhanced with the use of specific digital content during self-study time.

For seminars in a hybrid context, working practice examples of flipped classroom approaches could become the new normal educational design. In particular, approaches combining asynchronous screencast elements with synchronous face-to-face meetings can be considered, in order to focus on student interaction during the course. Here, existing teaching material, such as MOOC elements, could be used as screencast elements to give more insight into particular topics. For seminars that aim at the implementation of small project tasks in particular, peer-to-peer seminar concepts could be considered, in which the input of the lecturer comes only in the form of screencasts during the semester, possibly also relying on existing OER during self-study time, and the students discuss the content together during dedicated meetings. A forum on the LMS could also foster interaction amongst students during self-study, allowing them to discuss different seminar topics or issues in specific threads. The use of the forum could be encouraged by specifying it as the only means of communication during the seminar. Teacher consultation can then take place selectively in the form of web meetings, to provide specific help and feedback.

For practical courses in a hybrid situation, the interaction with the lecturer should be the focus. Therefore, face-to-face formats are preferable. These could be enhanced with short screencasts in the form of tutorial videos, either during the course or during self-study, whereby the lecturer assumes the role of a trainer or assistant who addresses problems as they occur, explains specific processing details, and helps interpret the results. The supplementary screencasts could either be provided directly by the lecturer or be existing OER, as many good tutorials are available online, especially in the Earth Observation community. To allow the best possible interaction with the datasets and software both during the course and during self-study, the use of a cloud platform is recommended for storing the data and accessing computing capacities. This would ensure that students can continue processing the data at home, even with limited technical equipment. During self-study, and in cases where many homework assignments are given, specific web meetings could be arranged for students to discuss specific computing issues and try to resolve them amongst peers. This would support peer interaction during self-study time and a culture of feedback among peers. In case of remaining issues, direct contact with the lecturer could be established during self-study via email.

For tutorials, it is also important from a didactical point of view that there are enough opportunities for the students to talk and ask questions about the content. Face-to-face meetings in small groups should be preferred. For the preparation of specific exercises, screencasts taken, for example, from a corresponding academic course, as well as OER, could also be used. Such external resources offer a different explanation of the same phenomenon or processing step and can therefore improve its comprehension. In order to ensure that all students understand the tutorial topic, poll tools could be used during the face-to-face tutorial to enhance interaction and allow the active participation of each student in a direct and live manner during the course. Questions that could not be answered correctly by all students could be discussed in more detail or illustrated with more examples.

For both presented situations, it is apparent that there is no clear preference for the use of specific digital elements. Rather, we can observe a change in the recommended elements depending on the type of course, according to their respective didactical challenges. Whereas an academic course would rather focus on a good interaction between content and students, more practical courses and seminars would focus on the interaction amongst students. Therefore, more passive or asynchronous interaction elements can be used for academic lectures, whereas a practical course would require a more direct mode of interaction and corresponding digital elements. One variable mentioned in the previous chapters is the time invested by both lecturers and students during the digital terms. All proposed digital additions to face-to-face formats initially require more preparation time from the lecturers in order to be didactically useful and support the students' learning, even though the effort is reduced in the following terms. All the same, it has to be considered that these elements should facilitate the learning of the students and not increase their workload. The use of the suggested digital elements could also be conditioned by the number of attendees and their learning preferences. In each case, the different forms of interaction and course modalities should be clearly presented at the beginning of the course, so that students can benefit from their deployment. Finally, data privacy policy was not the focus of this study and has therefore not been investigated further here. However, it should not be forgotten, as some digital tools may not be allowed for use in specific countries or universities due to their data privacy policy.
Whereas at the beginning of the pandemic the principal objective was mainly to “get the courses online” and most lecturers paid little attention to the data privacy policy of the tools they were using, many universities have since extended their offer with many digital elements whilst advising about specific data policy issues. Two years of experience with these tools has shown which are suited for use in a university context while respecting data privacy policies, but new tools should be consistently checked before being proposed to the lecturers, in order to prevent lecturers from becoming used to tools that are not suitable due to their policy.

5. Conclusions