2.1. Physics Laboratory Course at University of Helsinki

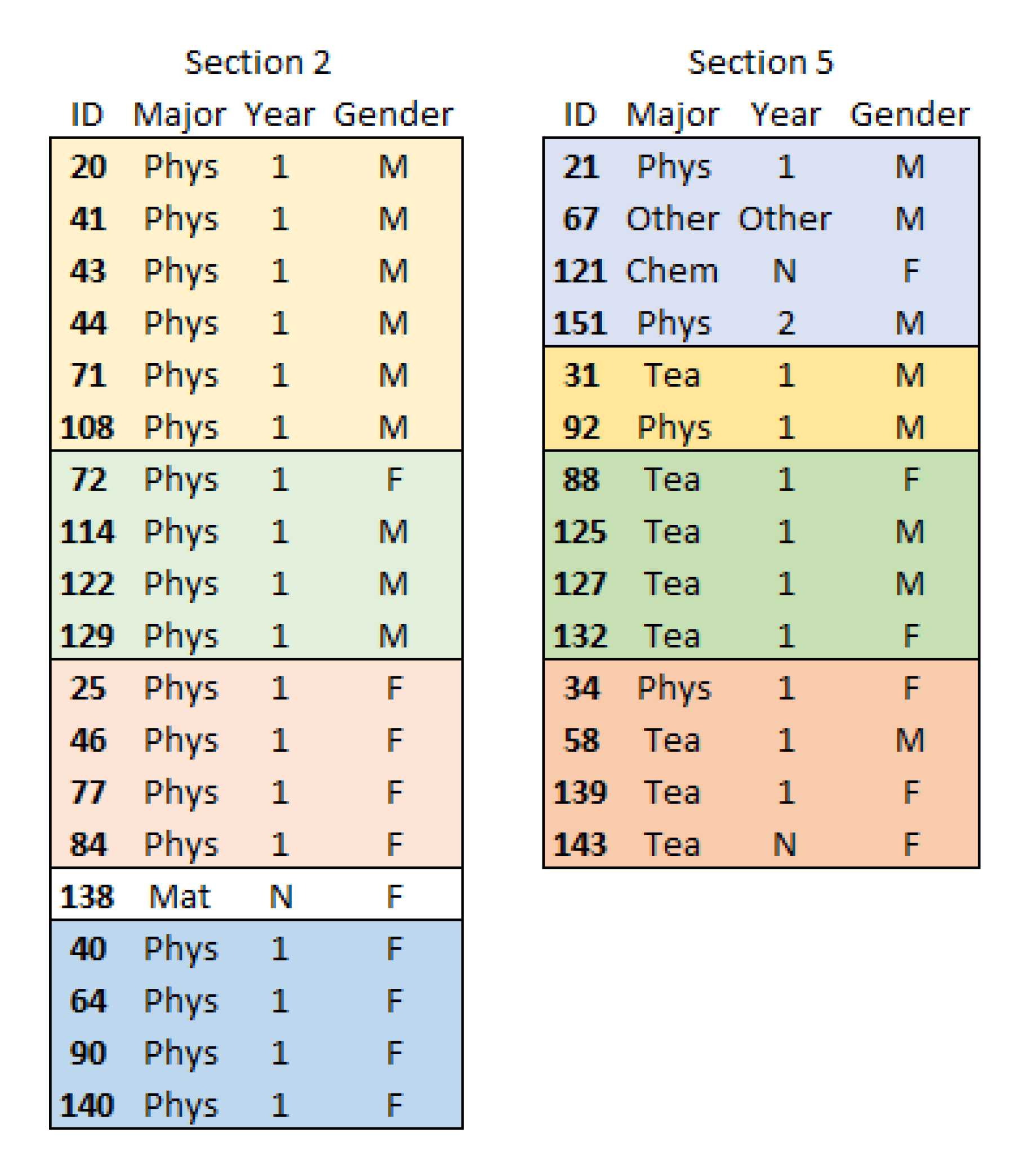

The introductory laboratory course at the University of Helsinki runs concurrently with the lecture courses in introductory physics and is mandatory for all students who complete at least 25 credits (European Credit Transfer and Accumulation System, ECTS) of physics. The majority of students on this course are first-year physics majors, and the laboratory is an important place to get to know other students. Six laboratory assignments are completed each term, and each assignment spans 2–3 weeks, giving students freedom to experiment and iterate on their measurement set-up. The course is divided into seven sections, with up to 20 students present at the same time. The assignments are done in small groups of 3–5 students.

In total, around 140–150 students take the laboratory course at the beginning of the year. The majority of the participants (60%) are physics majors. The rest are physics minors, mostly other science majors, or non-degree students participating through the open university. An important category of students is pre-service teachers who have mathematics as their first and physics as their second subject.

We have identified the laboratory course as one that students easily drop out of. To see whether we could identify risk factors in the complex social dynamics of laboratory work, we set out to explore these dynamics using the SocioPatterns platform. We wanted to see both how social networks evolve in an undergraduate laboratory and whether we can find correlations between demographics, initial knowledge and being excluded from collaborative learning in the laboratory.

The students form the small groups during the first week. They are allowed to change sections and to visit other sections, but the recommendation is to work with the same people the whole year. Subgroups in general are less diverse than the group they are drawn from [22], and we assumed this also to be the case for small groups formed by the students themselves at the beginning of their physics studies. Hence, we collected information on major subject, year of study and gender. To go beyond surface-level diversity measures, we also administered a pre-test on mechanics knowledge (MBT [33]) and the attitude survey CLASS [35]. MBT was chosen because the incoming students at UH saturate many conceptual surveys in mechanics, including the FCI.

2.2. The SocioPatterns Platform

The SocioPatterns platform is a tool developed by the SocioPatterns collaboration (www.sociopatterns.org) to collect information about human interactions in the physical world in an automated, observer-free way [43]. The original goal was to detect transmission routes of airborne diseases. However, it has since been widely used to study patterns in human interactions in order to analyse social phenomena [44,45,46,47,48,49].

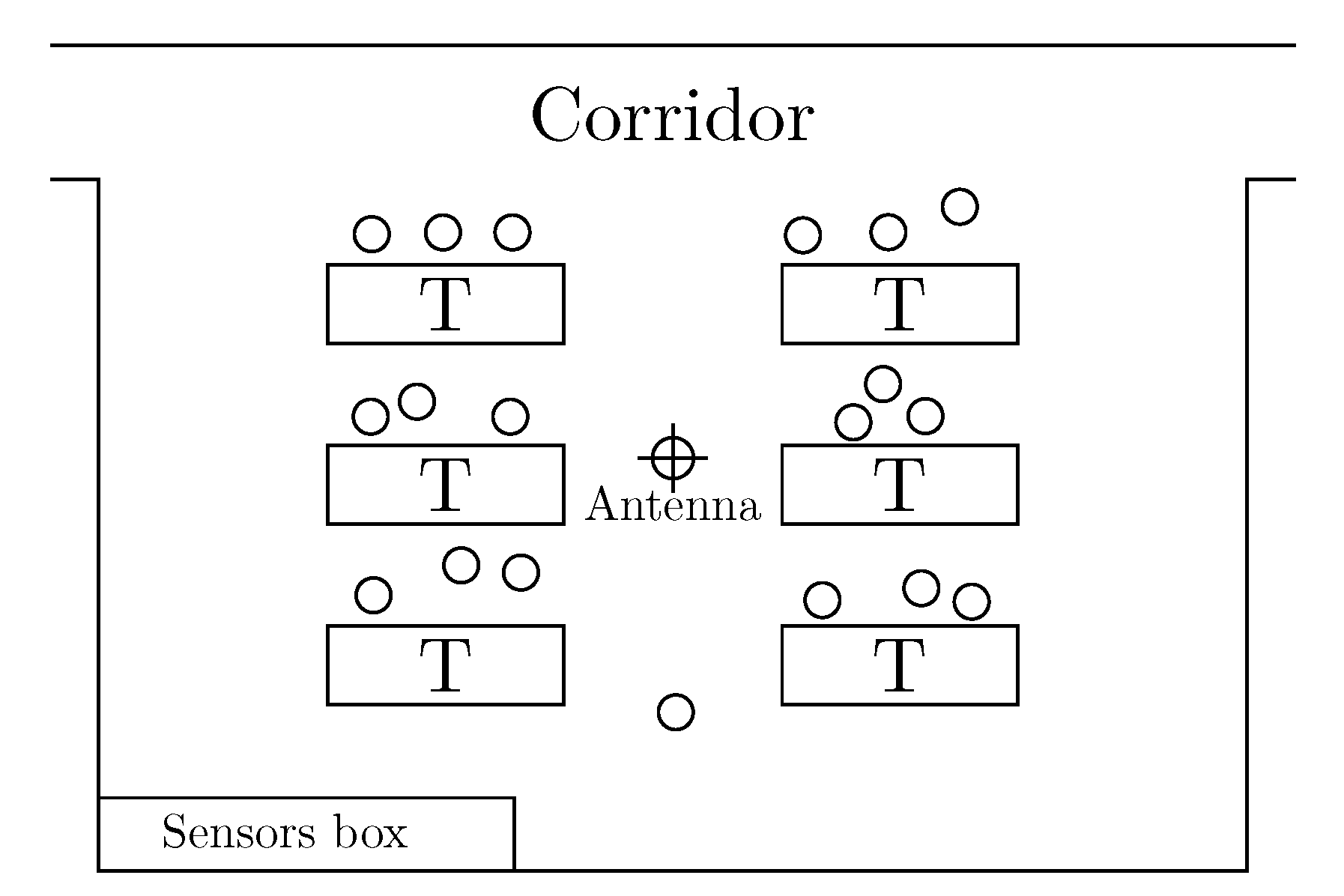

The system is designed to detect face-to-face contacts between individuals. It consists of sensors worn by the participants (Figure 1) that detect each other at short range (1.5 m maximum) using RFID chips and radio emitters. Furthermore, as the signal used for the detection is blocked by the body, detection is only possible when each individual is in the other's front half-sphere. A contact as detected by this method is thus defined as physical proximity (less than 1.5 m) in which both individuals are facing each other (each is located in the front space of the other).

Sensors are calibrated so that a contact lasting 20 s is detected with probability ∼100%. Contacts lasting less than 20 s are detected with a probability that decreases as their duration gets shorter. This calibration sets the temporal resolution of the system: contacts are recorded every 20 s, so the minimum recorded contact duration is 20 s. The internal detection system of the sensors limits the number of simultaneous contacts to 25 per 20 s interval.

The sensors have very limited built-in memory, so antennas are used to collect and store the contact data. As a consequence, only the areas covered by these antennas are monitored: any contact occurring outside them is not recorded. The antennas have a theoretical detection range of 30 m, but in practice it is limited by obstacles such as walls.

2.5. Identifying Connections

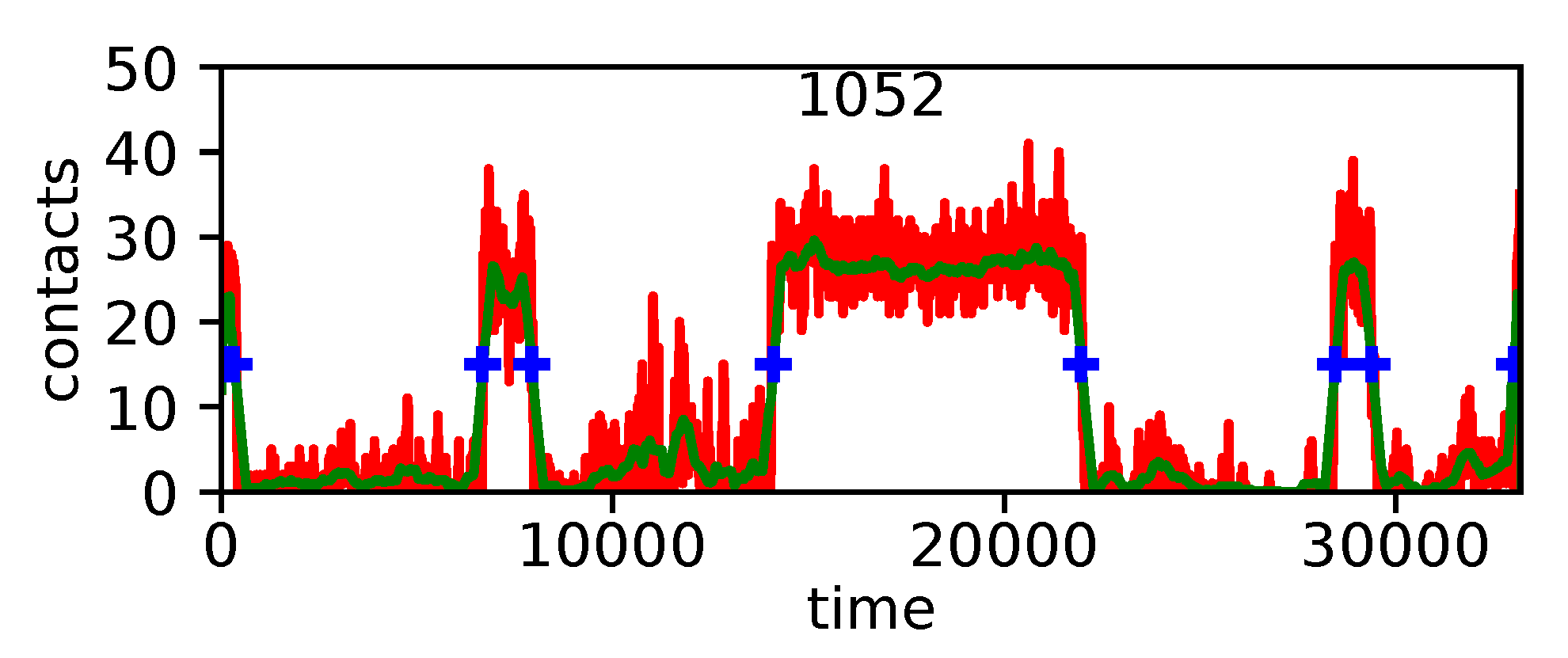

A network consists of nodes and links, which bind the nodes to each other. The contact data form a temporal network, in which nodes are individual students and links are contacts between them that appear and disappear as time passes. From this rich and complex data set, we compute the aggregated network, in which a link exists between two nodes if they have been in contact at least once, and the weight of this link is the total contact duration between the two individuals.
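The aggregation step can be illustrated with a minimal sketch. The record layout `(timestamp, id_a, id_b)`, the function name `aggregate_contacts` and the toy data are assumptions made for illustration, not the platform's actual output format; the only value taken from the text is the 20 s resolution.

```python
from collections import defaultdict

INTERVAL_S = 20  # temporal resolution of the sensors, as stated in the text


def aggregate_contacts(records):
    """Sum the contact time of each unordered pair of individuals.

    `records` is an iterable of (timestamp, id_a, id_b) tuples, one per
    20 s interval in which the pair was detected (hypothetical layout).
    """
    weights = defaultdict(int)
    seen = set()
    for t, a, b in records:
        if a == b:
            continue  # ignore self-detections
        pair = (a, b) if a < b else (b, a)  # links are undirected
        if (t, pair) in seen:
            continue  # count each pair at most once per interval
        seen.add((t, pair))
        weights[pair] += INTERVAL_S
    return dict(weights)


# Toy data: pair (1, 2) is detected in three intervals, pair (2, 3) in one.
records = [
    (0, 1, 2), (0, 2, 1),   # same contact reported by both sensors
    (20, 1, 2), (40, 2, 1),
    (20, 2, 3),
]
aggregated = aggregate_contacts(records)
print(aggregated)
```

Each key of the resulting dictionary is a link of the aggregated network, and its value is the link weight in seconds.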

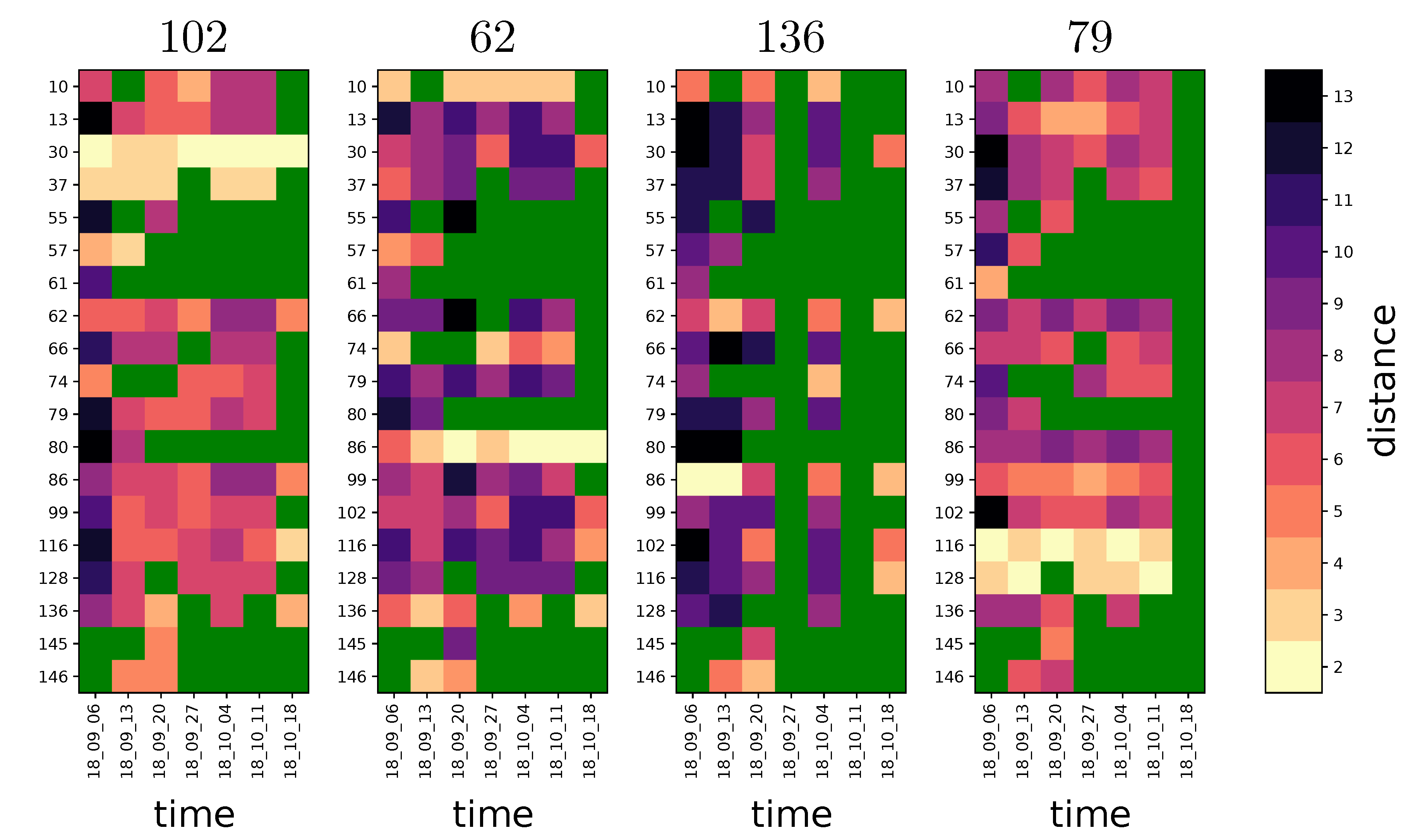

As seen in Figure 5, teaching assistants (TAs) always occupy a central position in the networks, while students are aggregated in groups around them. The next step is to identify which students are working together (forming a small group).

Usually, the nodes in a network can be characterized by, e.g., betweenness centrality, which is a measure of how central a node is to the network. The betweenness centrality of a node is computed by finding the shortest paths (in number of links, weighted by link strength) between all possible pairs of nodes and counting how many of these shortest paths pass through the node. Thus, a higher betweenness centrality means a more central place in the network. However, the laboratory networks are too small for the betweenness centrality to vary much between students. As only about 20 people are in the laboratory at the same time, no individuals are far from each other in terms of the number of links needed to get from one person to another. In addition, there are numerous contacts between students from different groups. Instead of the usual community detection algorithms, such as the modularity method [29], we use a divisive approach, in which we progressively remove links to make the groups appear.
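For reference, the betweenness centrality described above is commonly written as follows. This is the standard textbook form; the source does not state which normalization or path weighting it uses, so the notation is an assumption.

$$
C_B(v) = \sum_{s \neq v \neq t} \frac{\sigma_{st}(v)}{\sigma_{st}}
$$

Here $\sigma_{st}$ is the number of shortest paths between nodes $s$ and $t$, and $\sigma_{st}(v)$ is the number of those paths that pass through node $v$.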

The traditional method is the Girvan-Newman algorithm [50], in which at each step we rank the links by decreasing betweenness centrality and remove the most central one. This algorithm is based on the assumption that a network is made of densely connected groups joined by few links. However, in our case the links between groups are too numerous for such a detection to work. Using the weighted betweenness centrality makes the problem even worse, as the strong links exist within the groups. Hence, removing links with high betweenness centrality breaks the subgroups rather than making them appear.

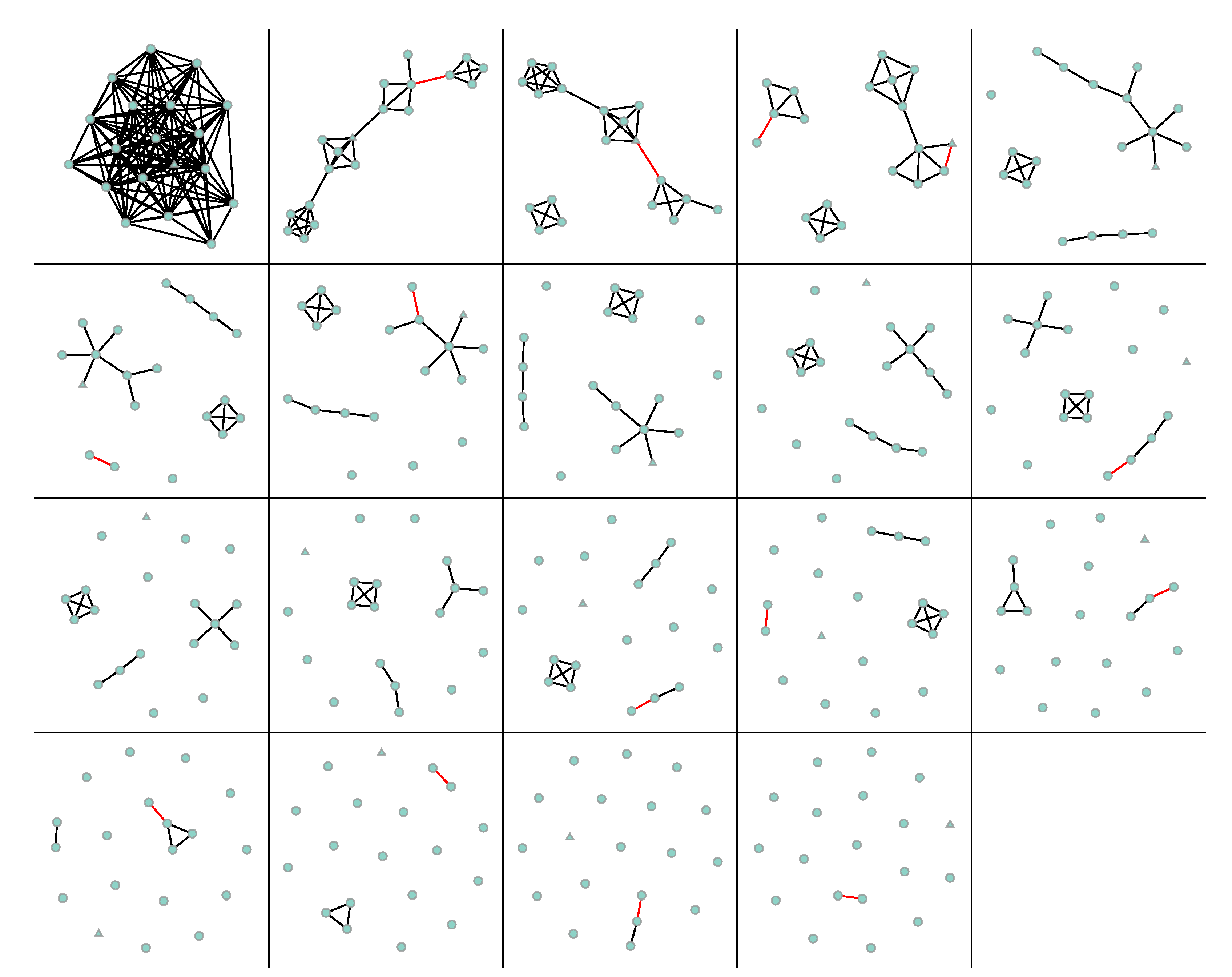

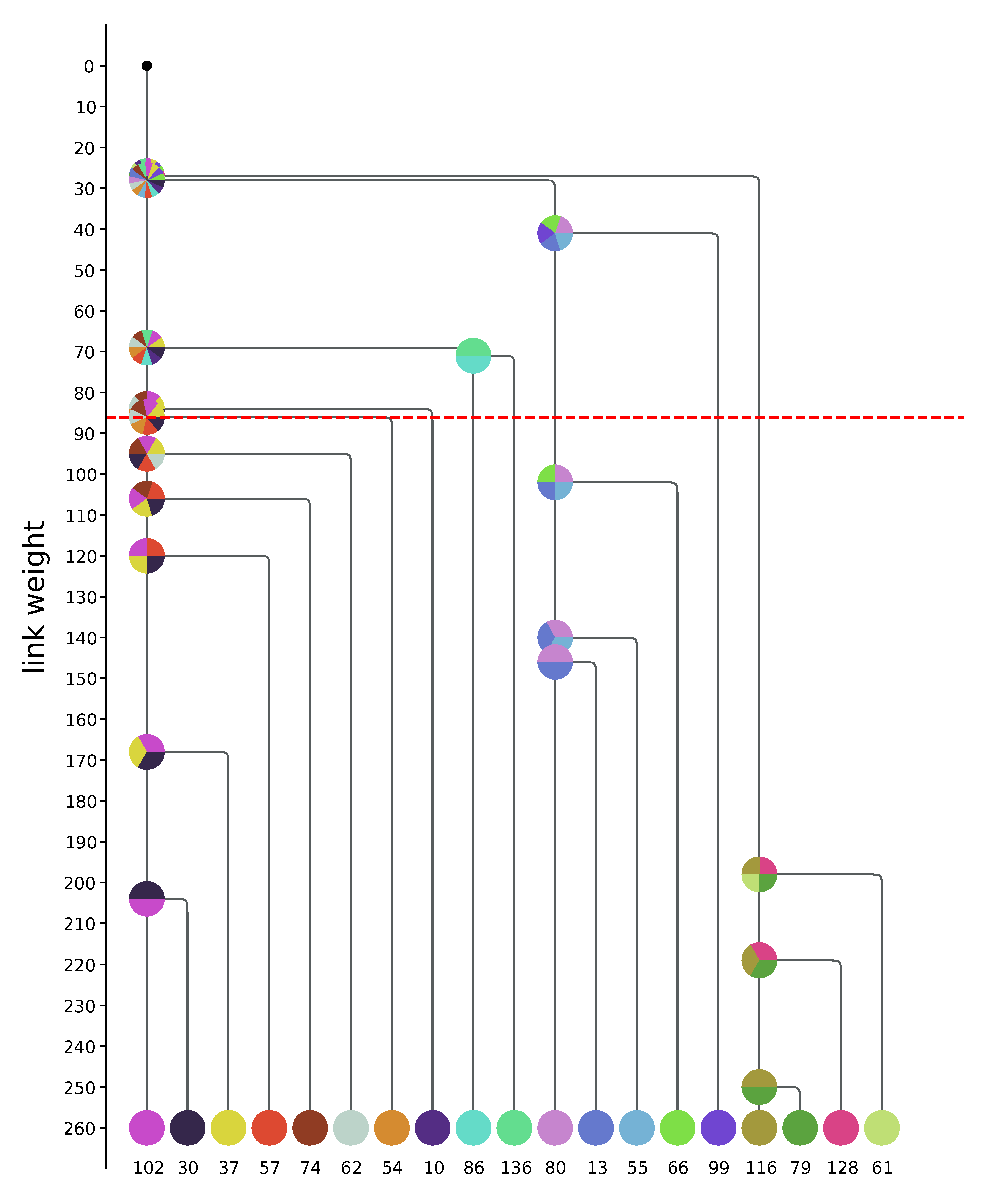

For these reasons, we use the weights of the links directly as the criterion for link removal. Since strong links are within the groups, we remove links starting with the weakest and proceeding in order of increasing weight. As in the usual method, we then track the depercolation steps: the steps in which the number of connected components in the network increases, i.e., the steps where a group breaks away from the network (Figure 6). In the beginning (upper left corner of Figure 6), all links, meaning all contacts between individuals, are present. As the weakest links are removed, subgroups progressively appear. As stronger and stronger links are removed, the number of subgroups increases, until the depercolation reaches the strong links inside the groups and the groups themselves are progressively broken up into individuals.
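The link-removal procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the dictionary-of-pairs input format and the toy weights are all hypothetical, and ties between equal weights are broken arbitrarily by input order.

```python
def connected_components(nodes, edges):
    """Return the connected components as a sorted list of sorted node lists."""
    adj = {n: set() for n in nodes}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    seen, comps = set(), []
    for start in nodes:
        if start in seen:
            continue
        stack, comp = [start], []
        seen.add(start)
        while stack:  # depth-first traversal of one component
            n = stack.pop()
            comp.append(n)
            for m in adj[n]:
                if m not in seen:
                    seen.add(m)
                    stack.append(m)
        comps.append(sorted(comp))
    return sorted(comps)


def depercolate(weights):
    """Remove links from weakest to strongest and record every step at
    which the number of connected components increases."""
    nodes = sorted({n for pair in weights for n in pair})
    remaining = dict(weights)
    n_comp = len(connected_components(nodes, remaining))
    steps = []
    for pair, w in sorted(weights.items(), key=lambda kv: kv[1]):
        del remaining[pair]
        comps = connected_components(nodes, remaining)
        if len(comps) > n_comp:  # a depercolation step
            steps.append((pair, w, comps))
            n_comp = len(comps)
    return steps


# Toy aggregated network: link -> total contact duration in seconds.
weights = {
    (1, 2): 300, (2, 3): 200, (1, 3): 250,  # a tightly knit trio
    (4, 5): 400,                             # a strong pair
    (3, 4): 40, (1, 5): 20,                  # weak links between the two
}
steps = depercolate(weights)
for pair, w, comps in steps:
    print(f"removing {pair} (weight {w}) -> {comps}")
```

In this toy network, removing the weakest link (1, 5) does not split anything, because the pair is still attached through (3, 4). The first depercolation step occurs when (3, 4) is removed and the trio separates from the pair.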

We keep track of the groups thus formed at each step in Figure 6, and note the link whose removal is responsible for the break, along with its weight. This allows us to build a depercolation tree joining these groups (Figure 7), similar to the dendrogram that one would get from a clustering algorithm [51].

This tree gives the whole internal structure of the network in terms of link depercolation. For example, in Figure 7, the group first breaks into a group of four students (61, 128, 79, 116) and the rest of the class. We can thus interpret that this group of four is only weakly connected to the whole group, as all links connecting it to the rest have low weights. However, the students in this group of four are strongly connected to each other.

Such a tree gives many possible partitions of the original network, depending on the limit weight at which we cut. In our case, however, using the TA as a reference provides a natural threshold. At some point in the depercolation process, the TA becomes isolated in the network. By definition, the TA does not belong to any student group. We can thus consider that links weaker than the strongest link connecting the TA to the network are not relevant. In the tree, this threshold is marked by the red dashed line. All groups that break before this point (i.e., above the red line in Figure 7) are termed weakly connected, as they are connected at best by a link weaker than the threshold. All groups that break after it (i.e., under the red line in Figure 7) are termed strongly connected and are assumed to be relevant student groups. In the example of Figure 7, this gives one group of six students (Group A: 102, 30, 37, 57, 74, 62), the TA (54), three isolated students (10, 86, 136), a group of four students (Group B: 80, 13, 55, 66), another isolated student (99) and the previously spotted group of four students (Group C: 61, 128, 79, 116).
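One way to read the TA-based threshold is sketched below: keep only the links strictly stronger than the TA's strongest link and take the resulting connected components as the strongly connected groups. The function name `strong_groups`, the input format and the toy weights are assumptions for illustration; the node labels are not those of Figure 7.

```python
def strong_groups(weights, ta):
    """Return (threshold, groups), where groups are the components left
    when only links stronger than the TA's strongest link are kept."""
    # Threshold: the strongest link connecting the TA to the network.
    threshold = max(w for pair, w in weights.items() if ta in pair)
    nodes = {n for pair in weights for n in pair}
    parent = {n: n for n in nodes}  # union-find forest

    def find(n):
        while parent[n] != n:
            parent[n] = parent[parent[n]]  # path halving
            n = parent[n]
        return n

    for (a, b), w in weights.items():
        if w > threshold:  # links at or below the threshold are ignored
            parent[find(a)] = find(b)

    groups = {}
    for n in nodes:
        groups.setdefault(find(n), []).append(n)
    return threshold, sorted(sorted(g) for g in groups.values())


# Toy aggregated network with the TA as node 0 (weights in seconds).
weights = {
    (0, 1): 100, (0, 4): 80,   # TA links
    (1, 2): 500, (2, 3): 300,  # one student group
    (4, 5): 400,               # another student group
    (3, 4): 60, (5, 6): 90,    # weak links below the threshold
}
threshold, groups = strong_groups(weights, ta=0)
print(threshold, groups)
```

With these toy weights the threshold is 100 s, which leaves two student groups, the isolated TA, and one student (node 6) whose only link is weaker than the threshold.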

The tree also allows us to investigate the inner structure of the groups, by looking at the branching under the threshold line. All of them are made of one strong pair, to which the other nodes are singly connected: (102, 30) for Group A, (80, 13) for Group B, (116, 79) for Group C. Similarly, looking at the branching above the threshold line, we can understand how the groups connect to form the whole network:

isolated node 10 connects to Group A;

isolated nodes 86 and 136 form a pair, which then connects to Group A;

isolated node 99 connects to Group B, which then connects to Group A;

Group C connects last to Group A.

This analysis thus provides a method to interpret behaviour from the aggregated network, simplifying its structure by focusing on the strongest links between students. From these connecting steps, one can then make hypotheses about the relations between the students and the group structure that exists within the class.

2.7. Survey Data

All students participating in the laboratory course were asked to complete a background information form, stating their study track (Physical sciences, Mathematics, Science teacher, Chemistry or Other), study year (1, 2, higher or other, where other accounts for non-degree students) and gender (male, female or other), along with their consent to combine these data with data collected from sensors and from surveys administered in the introductory physics courses. Students who did not wish to participate were instructed not to wear a sensor in the laboratory and to decline consent in the background survey. Completing the consent form was required to unlock the submission of lab reports, meaning that all students who submitted laboratory work for grading had to either give or decline consent. Hence, voluntary participation and informed consent were ensured. All data were treated anonymously. As the research also did not involve intervention in the physical integrity of the participants, deviation from informed consent, studying children under the age of 15, exposure to exceptionally strong stimuli, causing long-term mental harm beyond the risks of daily life, or risks to the security of the participants, the study did not require an ethics review, according to the guidelines of the Finnish Advisory Board on Research Integrity [52].

To collect information on the students’ level of physics knowledge and their attitudes towards learning science, the MBT and CLASS surveys were administered in the physics course taught concurrently with the laboratory course. The surveys were administered as part of the homework exercises, and participation was rewarded with exercise credit equal to one homework problem. This credit was available to students regardless of whether they gave consent to use the data for research. The data were collected electronically and improper data were discarded. Responses completed in less than 300 seconds for MBT, or in less than 250 seconds for CLASS, were discarded. CLASS responses were also discarded if they had more than 4 missing answers, more than 26 identical answers (out of 41), or an incorrect answer to the control item (item 31).
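The discard criteria above can be expressed as a short validity check. This is a sketch under stated assumptions: the function names, the list-based answer format (one entry per CLASS item, `None` for a missing answer) and the `control_answer` parameter are hypothetical, since the source does not describe how responses were stored. Only the numerical cut-offs come from the text.

```python
from collections import Counter

MBT_MIN_SECONDS = 300     # MBT responses faster than this are discarded
CLASS_MIN_SECONDS = 250   # CLASS responses faster than this are discarded
CLASS_ITEMS = 41
CLASS_MAX_MISSING = 4
CLASS_MAX_IDENTICAL = 26
CLASS_CONTROL_ITEM = 31   # 1-based item number of the control item


def mbt_is_valid(duration_s):
    """Keep an MBT response only if it took at least 300 seconds."""
    return duration_s >= MBT_MIN_SECONDS


def class_is_valid(duration_s, answers, control_answer):
    """Apply the CLASS criteria; `answers` holds 41 entries, None if missing.

    `control_answer` is the response expected for the control item
    (passed in as a parameter because the source does not state it).
    """
    if len(answers) != CLASS_ITEMS:
        raise ValueError("expected one entry per CLASS item")
    if duration_s < CLASS_MIN_SECONDS:
        return False
    given = [a for a in answers if a is not None]
    if CLASS_ITEMS - len(given) > CLASS_MAX_MISSING:
        return False
    if Counter(given).most_common(1)[0][1] > CLASS_MAX_IDENTICAL:
        return False
    return answers[CLASS_CONTROL_ITEM - 1] == control_answer


# Toy responses on a five-point scale, with 4 as the assumed control answer.
careful = [(i % 5) + 1 for i in range(CLASS_ITEMS)]
careful[CLASS_CONTROL_ITEM - 1] = 4
straight_lined = [4] * CLASS_ITEMS

print(class_is_valid(600, careful, control_answer=4))         # kept
print(class_is_valid(600, straight_lined, control_answer=4))  # discarded
```

Keeping the thresholds as named constants makes it easy to check how sensitive the retained sample is to each cut-off.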

The MBT was administered in the first week and CLASS in the second week of studies, which also corresponds to the first and second week of the lab course. The students are meant to take the laboratory and the lecture course at the same time, but many students postpone the laboratory course, and these surveys were not compulsory. Out of the students consenting to participate in the study, 79% answered the MBT and 75% the CLASS.