4. Results

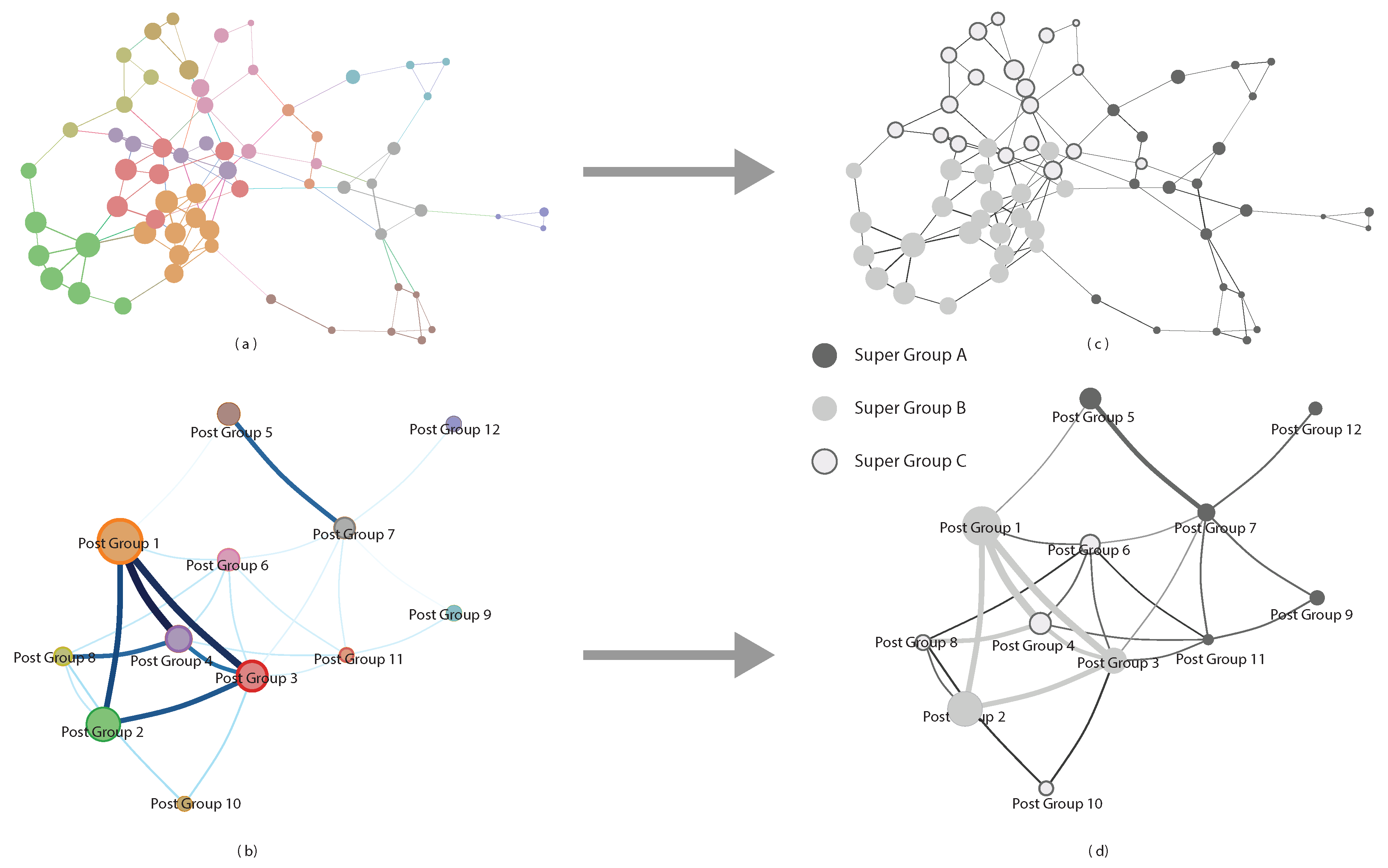

For the participants in the study, the methodology resulted in a pre intervention network of teacher responses before participating and a post intervention network of teacher responses after participating in the study. Both networks could individually be stably grouped, but groups were not stable between the two networks. This indicates that teachers changed their responses, but also that the network analysis did not identify particular groups of teachers that tightly followed each other in changing their specific responses to the questionnaire. Since this study focused on the results of the intervention, further network analyses focused on the post intervention network.

The community detection algorithm revealed 12 groups in the post intervention network, which were labelled 'Post Group 1' and so forth. A 'modularity' score of 0.58 indicated that, overall, the groups were distinct to a high degree [41]. However, some groups were very small (Post Group 12, for example), which made a detailed analysis of answer frequencies intractable. To overcome this difficulty, we used both the network and the network map shown in Figure 3a,b to visually identify groupings of groups, which we call 'super groups'. The depiction of the network in Figure 3a was made using a force-based layout algorithm in Gephi. We ran this algorithm numerous times and obtained the same layout structure every time, except that the layout was sometimes mirrored or rotated. Thus, for example, the green nodes of Post Group 2 were always near the red nodes of Post Group 3 and the orange nodes of Post Group 1, in the same spatial configuration as shown in Figure 3a. We also used this feature of the layout algorithm to identify super groups. However, since this identification was based on visual inspection, we took a number of steps to ensure the validity and reproducibility of the super groups. Having identified candidate super groups, we checked the quality of the new grouping by confirming that the average internal similarity between teachers in each super group was larger than the average external similarity, i.e., the average similarity between teachers in a super group and all teachers outside that super group. The next paragraph explains the visual analyses, while details of the quantitative analyses are given in Supplemental File S6, 'Finding and quantitatively characterising the optimal super group solution'.
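A minimal sketch of the internal versus external similarity check, assuming a precomputed, symmetric teacher-by-teacher similarity matrix `sim` (the similarity measure itself is not restated in this section) and a list `labels` giving each teacher's super group. The function name `internal_external` and the toy matrix are hypothetical, and the significance test reported for this comparison is not reproduced.

```python
def internal_external(sim, labels):
    """Mean internal and mean external similarity for each group.

    sim:    symmetric n x n similarity matrix (list of lists).
    labels: group label for each of the n teachers.
    Returns {group: (mean internal, mean external)}.
    """
    n = len(labels)
    result = {}
    for g in sorted(set(labels)):
        members = [i for i in range(n) if labels[i] == g]
        others = [j for j in range(n) if labels[j] != g]
        # Each unordered pair of distinct members is counted once.
        internal = [sim[i][j] for a, i in enumerate(members) for j in members[a + 1:]]
        # Every member paired with every teacher outside the group.
        external = [sim[i][j] for i in members for j in others]
        if not internal or not external:
            raise ValueError(f"group {g!r} needs >= 2 members and some outsiders")
        result[g] = (sum(internal) / len(internal), sum(external) / len(external))
    return result


# Hypothetical toy data: four teachers in two groups.
sim = [
    [1.0, 0.8, 0.2, 0.3],
    [0.8, 1.0, 0.4, 0.1],
    [0.2, 0.4, 1.0, 0.6],
    [0.3, 0.1, 0.6, 1.0],
]
print(internal_external(sim, ["A", "A", "B", "B"]))
```

A grouping passes the check for a given super group when the first value exceeds the second. Overall averages such as the 0.51 and 0.45 reported in this section can be obtained by pooling pairs across super groups; how the study pooled them is not stated here.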

Based on a visual inspection of the network and network map as depicted in Figure 3a,b, we initially merged Post Groups 1–12 into Super Groups A, B, and C. Super Group A consisted of Post Groups 5, 7, 9, and 12, Super Group B of Post Groups 1–4, and Super Group C of Post Groups 6, 8, 10, and 11. This initial grouping was made by investigating shared links in the network map (Figure 3c) and spatial placement in the layout of the network (Figure 3a). Since it was done by visual inspection, we made slight permutations of the Super Groups to see whether they would result in a better-quality solution. We used the modularity, Q, as our measure of quality. Thus, we searched for the solution with three Super Groups that would maximise modularity. However, we also wanted to embed the Post Groups, found empirically and robustly by Infomap, in the Super Groups. As such, our first rule for changing Super Groups was that only whole Post Groups could switch between Super Groups. Our second rule was that a Post Group could only switch to a Super Group to which it was adjacent in the network map. The third rule, our decision rule, was that if a switch increased Q, the new solution was accepted; if not, it was rejected. If a new solution was accepted, the adjacency of all Post Groups to Super Groups was re-evaluated. The end result was Super Group A consisting of Post Groups 5, 7, 9, 11, and 12 (22 teachers); Super Group B consisting of Post Groups 1, 2, and 3 (23 teachers); and Super Group C consisting of Post Groups 4, 6, 8, and 10 (19 teachers).
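The three switching rules can be read as a greedy local search. The sketch below is a hypothetical reconstruction, not the authors' code. It assumes an undirected weighted teacher network given as an edge list and computes weighted modularity as Q = Σ_c [L_c/m − (d_c/(2m))²], where L_c is the edge weight inside Super Group c, d_c the total degree of c, and m the total edge weight. It also assumes that two Post Groups are adjacent in the network map when at least one edge connects their members, and it never empties a Super Group, so the number of Super Groups stays fixed. Function names and the toy network are illustrative.

```python
def modularity(edges, membership):
    """Weighted modularity Q = sum_c [L_c/m - (d_c/(2m))**2].

    edges:      undirected edges (u, v, weight), each listed once, no self-loops.
    membership: dict mapping node -> community label.
    """
    m = sum(w for _, _, w in edges)
    inside, degree = {}, {}
    for u, v, w in edges:
        cu, cv = membership[u], membership[v]
        degree[cu] = degree.get(cu, 0.0) + w
        degree[cv] = degree.get(cv, 0.0) + w
        if cu == cv:
            inside[cu] = inside.get(cu, 0.0) + w
    return sum(inside.get(c, 0.0) / m - (d / (2 * m)) ** 2 for c, d in degree.items())


def refine_super_groups(edges, post_of, super_of):
    """Greedy switching of whole Post Groups between Super Groups.

    post_of:  teacher -> Post Group (fixed, e.g. from Infomap).
    super_of: Post Group -> initial Super Group (e.g. from visual inspection).
    """
    # Network map: Post Groups are adjacent if any edge links their members.
    adjacent = {}
    for u, v, _ in edges:
        pu, pv = post_of[u], post_of[v]
        if pu != pv:
            adjacent.setdefault(pu, set()).add(pv)
            adjacent.setdefault(pv, set()).add(pu)

    def q_of(assignment):
        return modularity(edges, {t: assignment[p] for t, p in post_of.items()})

    super_of = dict(super_of)
    best_q = q_of(super_of)
    improved = True
    while improved:
        improved = False
        for p in sorted(super_of):
            current = super_of[p]
            if list(super_of.values()).count(current) == 1:
                continue  # moving p would empty its Super Group
            # Rule 2: candidate targets are Super Groups of adjacent Post Groups.
            targets = {super_of[n] for n in adjacent.get(p, ())} - {current}
            for s in sorted(targets):
                trial = dict(super_of)
                trial[p] = s  # Rule 1: the whole Post Group moves
                q = q_of(trial)
                if q > best_q:  # Rule 3: keep the switch only if Q increases
                    super_of, best_q, improved = trial, q, True
                    break
            if improved:
                break  # restart so adjacency to Super Groups is re-evaluated
    return super_of, best_q


# Hypothetical toy network: 8 teachers in 4 Post Groups.
post_of = {0: 1, 1: 1, 2: 2, 3: 2, 4: 3, 5: 3, 6: 4, 7: 4}
edges = [(0, 1, 1), (2, 3, 1), (4, 5, 1), (6, 7, 1),   # within Post Groups
         (0, 2, 1), (1, 3, 1), (4, 6, 1), (5, 7, 1),   # P1-P2 and P3-P4 links
         (3, 4, 1)]                                    # weak bridge P2-P3
final, q = refine_super_groups(edges, post_of, {1: "A", 2: "B", 3: "B", 4: "B"})
print(final, round(q, 3))
```

Because every accepted switch strictly increases Q and there are finitely many assignments, the search always terminates. Like any greedy search, however, it can stop at a local maximum, which is one reason to start from a sensible visual grouping.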

Figure 3c,d show Super Groups A, B, and C. The modularity of the new grouping was lower than that of the optimal solution but still indicative of a strong group structure [41]. The analyses of similarities yielded an average internal similarity of 0.51, which was significantly higher (95% CI = [0.56, 1.43]) than the average external similarity of 0.45.

The measure for over-representation revealed that some LWGs were over-represented in some Super Groups. Table 1 shows the distribution of LWGs per super group. Super Group A consists mainly of teachers from LWG 2, and all of LWG 2's teachers are in Super Group A. Furthermore, all four teachers in LWG 5 and most teachers from LWG 6 were in Super Group B.

We also tested for over-representation in terms of subject, years of teaching, and gender. Two other results emerged. First, we found that ten participating teachers who taught integrated science were placed in Super Groups A (six teachers) and B (four teachers). Closer inspection revealed that the six integrated science teachers in Super Group A belonged to LWG 2, while the four integrated science teachers in Super Group B were from LWG 5 and LWG 6. Since these were the LWGs that were over-represented in Super Groups A and B, respectively, we believe that this result stems from that association rather than being an effect of teaching integrated science. We found no over-representation for other subjects. We found no evidence of over-representation in terms of years of teaching.
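This section does not restate which over-representation measure was used. One common choice, assumed here purely for illustration, is a one-sided hypergeometric test. Given N teachers in total, K of them in a given LWG (or with a given attribute), and a Super Group of size n, the test gives the probability of finding at least x of those K teachers in the Super Group by chance. The function name and example numbers are hypothetical.

```python
from math import comb


def overrepresentation_p(N, K, n, x):
    """One-sided hypergeometric p-value P(X >= x).

    N: total teachers, K: teachers in the LWG (or with the attribute),
    n: teachers in the Super Group, x: of those K, how many are in the Super Group.
    """
    upper = min(K, n)
    # math.comb returns 0 when asked for more items than exist.
    hits = sum(comb(K, i) * comb(N - K, n - i) for i in range(x, upper + 1))
    return hits / comb(N, n)


# Hypothetical example: a 4-teacher LWG entirely inside a 5-teacher group of 10.
print(overrepresentation_p(N=10, K=4, n=5, x=4))
```

With the counts reported in this section (64 teachers, the four LWG 5 teachers all in the 23-teacher Super Group B), the corresponding call would be `overrepresentation_p(64, 4, 23, 4)`. Testing many LWG and Super Group combinations at once would call for a multiple-comparison correction.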

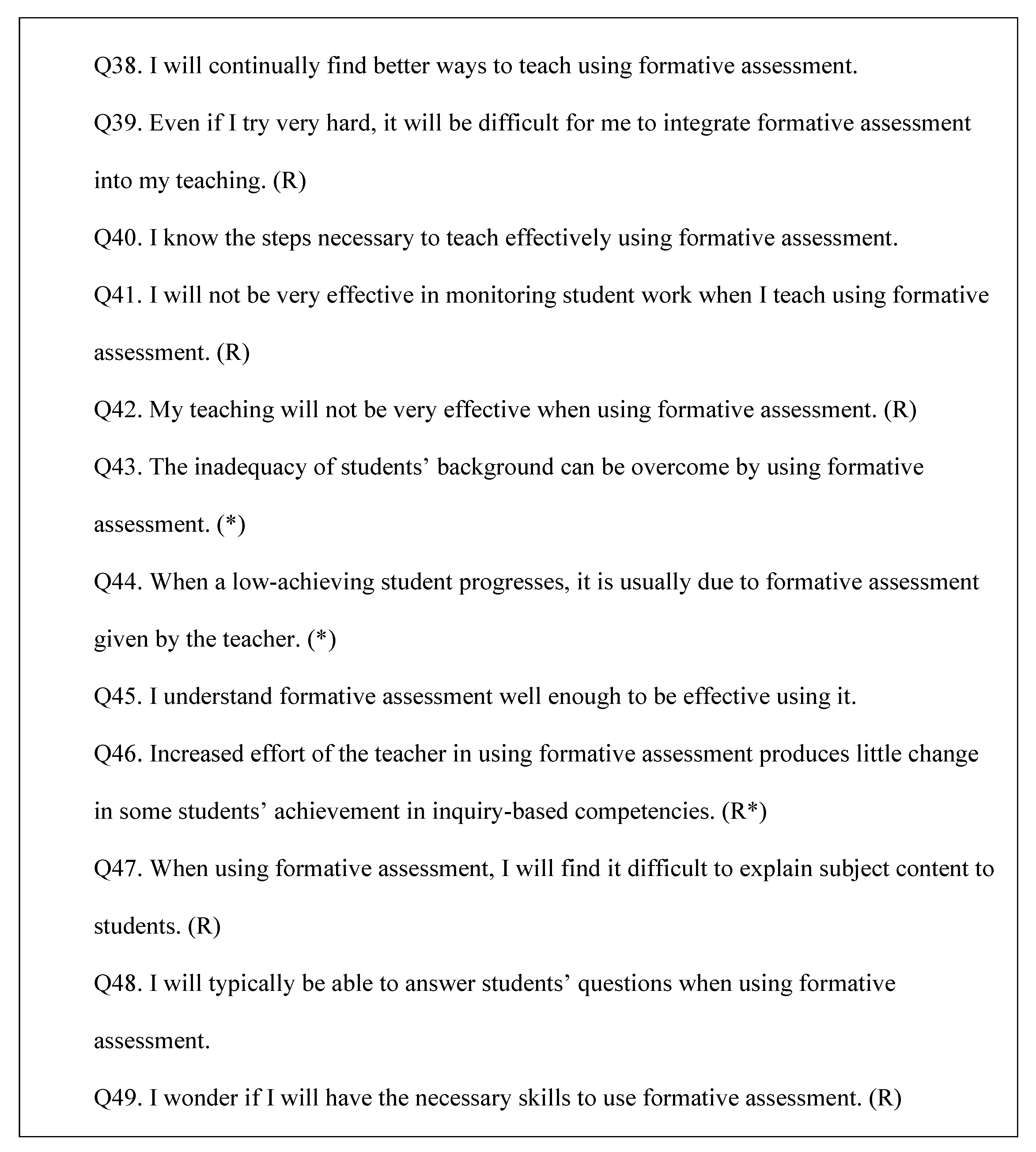

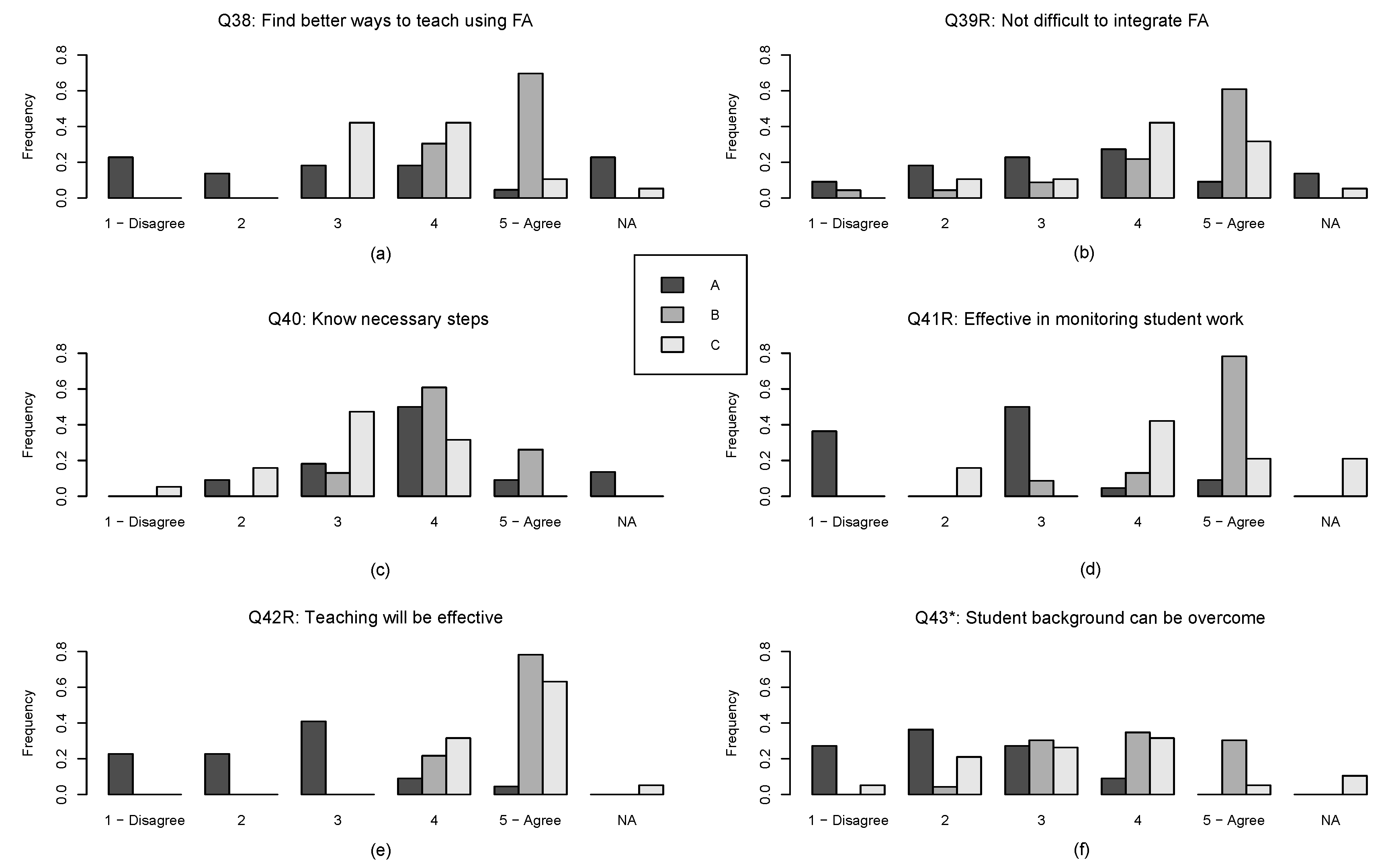

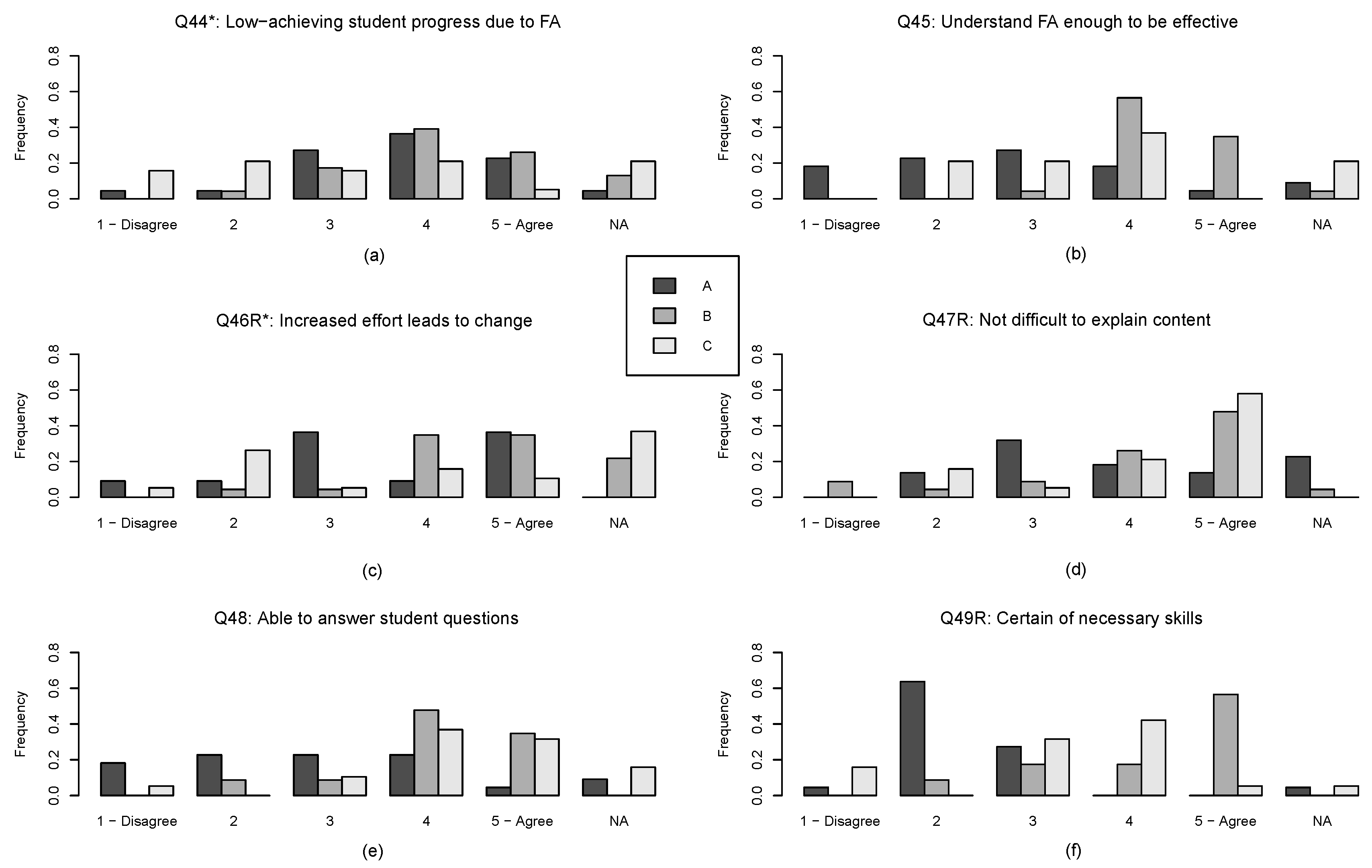

Next, we examined general response commonalities by looking at the frequency distributions of responses to each question for each of the three super groups. We did this for both pre intervention and post intervention responses. For the five Likert-type levels, we treated '1' as a low self-efficacious response and '5' as a high one. We maintained the not answered (NA) category in the results because it contains relevant information about the super groups. For six of the twelve questionnaire items (Q39, Q41, Q42, Q46, Q47, and Q49), we reversed the scores because these self-efficacy questions were negatively worded. These reversals were made before graphing and are therefore included in the graphs of Figure 4 and Figure 5, so higher scores for every question mean more self-efficacious answers. For clarity, we have slightly reworded the statements of the six reversed questions in the graphs so that a high self-efficacious answer yields a high self-efficacy score (see the original questions in Figure 2 for comparison).
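A minimal sketch of the recoding and tabulation just described, assuming raw answers are stored as integers 1 to 5 with `None` for not answered. Reversed items map a score x to 6 − x, which turns 1 into 5 and 5 into 1 while leaving NA untouched. The names `recode` and `frequencies` are hypothetical.

```python
from collections import Counter

# Negatively worded items whose scores are reversed (as listed in the text).
REVERSED_ITEMS = {"Q39", "Q41", "Q42", "Q46", "Q47", "Q49"}
CATEGORIES = [1, 2, 3, 4, 5, None]  # None stands for "not answered" (NA)


def recode(item, score):
    """Return the score so that higher always means more self-efficacious."""
    if score is None:
        return None  # keep NA as its own category
    if score not in (1, 2, 3, 4, 5):
        raise ValueError(f"unexpected Likert score {score!r} for {item}")
    return 6 - score if item in REVERSED_ITEMS else score


def frequencies(item, scores):
    """Proportion of a group's answers in each category, NA included."""
    counts = Counter(recode(item, s) for s in scores)
    return {c: counts[c] / len(scores) for c in CATEGORIES}


# Hypothetical answers from one group to a reversed item.
print(frequencies("Q49", [4, 4, 3, None]))
```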

This closer question-by-question look at the twelve items clarifies the general network maps from Figure 3c,d. Overall, Super Group A's black bars for each item (see Figure 4 and Figure 5) show a tendency toward less self-efficacious responses, while the shaded bars of Super Groups B and C represent more self-efficacious responses, with the medium grey bars of Super Group B trending highest. Furthermore, a visual inspection indicates that Super Groups A and C tend to have more disparate answers than Super Group B on self-efficacy attribute questions. For the outcome expectations questions (Q43, Q44, and Q46R), Super Group C tends to have more disparate answers. This visual observation needed to be quantified to make sure that it was not a visual artefact. This was done by calculating the entropy [42] of each distribution of responses to each question for each super group; see Supplemental File S5, 'Technical results', for details. In this case, the entropy tells us whether responses are restricted to a few categories (low entropy) or more broadly distributed (high entropy; see, e.g., [43,44]). For example, in Q38, Super Group A uses the full range of possibilities (high entropy), while Super Groups B and C each primarily make use of two categories (lower entropy). In contrast, in Q43, Super Groups A and B each primarily make use of three categories (medium entropy), while Super Group C has a broader distribution. However, there are important additions to this broad characterisation, so next we characterise each super group in terms of their answers to specific combinations of questions. The point is to show that this study's network methodology adds important nuance to how teacher self-efficacy attributes were differentially affected by participating in the project.
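The entropy here is presumably Shannon entropy, H = −Σ_k p_k log p_k, summed over the answer categories k of one distribution. The sketch below assumes base-2 logarithms (bits) and counts NA as a category like any other. Neither choice is stated in this section, and the example answers are hypothetical.

```python
import math
from collections import Counter


def entropy(answers, base=2):
    """Shannon entropy of a list of categorical answers (None = NA).

    Written as sum p * log(1/p) so that a single-category list gives 0.0.
    """
    counts = Counter(answers)
    n = len(answers)
    return sum((c / n) * math.log(n / c, base) for c in counts.values())


spread = [1, 2, 3, 4, 5, 2, 3, 4]   # uses the full range of categories
narrow = [4, 5, 4, 5, 4, 5, 4, 5]   # concentrated on two categories
print(round(entropy(spread), 3), round(entropy(narrow), 3))
```

With six possible categories (1 to 5 plus NA), the maximum value is log2 6 ≈ 2.585 bits. Dividing by this value puts groups on a common 0 to 1 scale.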

Super Group A indicates to a large extent that they know the necessary steps to teach effectively using formative assessment, since their answers are centred on 4 in Q40. The Kruskal-Wallis test revealed significant differences, and the subsequent post-hoc test revealed significant differences between Super Groups B and C, but not between A and B, and only marginal differences between A and C. Thus, Super Groups A and B can be said to agree on the question of having the necessary knowledge.
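A minimal Kruskal-Wallis sketch follows. It ranks all answers jointly, giving tied values their average rank (important for Likert data), and applies the standard tie correction. Because exactly three Super Groups are compared (2 degrees of freedom), it uses the closed-form chi-square tail p = exp(−H/2). This is the usual large-sample approximation. NA answers must be dropped first, and the post-hoc test used in the study is not reproduced. `scipy.stats.kruskal` computes the same tie-corrected statistic; the function names here are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis_3(a, b, c):
    """Tie-corrected Kruskal-Wallis H and its chi-square (df = 2) p-value."""
    groups = [list(a), list(b), list(c)]
    data = [x for g in groups for x in g]
    n = len(data)
    ranks = average_ranks(data)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(data).values())
    correction = 1 - ties / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all answers are identical; H is undefined")
    h /= correction
    return h, math.exp(-h / 2)  # survival function of chi-square with 2 df


h, p = kruskal_wallis_3([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(round(h, 3), round(p, 4))
```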

Such agreement is not found on question Q49R, on having the necessary skills to provide formative assessment. Again, a Kruskal-Wallis test revealed significant differences. Here, there are significant differences between Super Groups A and B and between A and C, and marginal differences between B and C. The Supplemental Material provides detailed calculations for all question frequency distributions. On Q49R, 63% of Super Group A were coded with a 2, giving them the lowest entropy of the three groups on that question. The agreement of Super Group A on Q49R contrasts with their answers to questions that may be seen to pertain to different formative assessment skills. The group is diverse on whether they perceive it as difficult to integrate formative assessment (Q39R), whether they will be effective in monitoring student work (Q41R, almost dichotomous between 1 and 3), whether their teaching with formative assessment will be effective (Q42R and Q45), and whether they will be able to explain content (Q47R) or answer student questions (Q48) using formative assessment. Thus, although the problem for this group seems to be that they do not believe that they have the necessary skills, the skills they feel they lack differ from teacher to teacher. Super Group A shows somewhat less diversity with regard to outcome expectations. They generally do not seem to believe that the inadequacy of student background can be overcome by using formative assessment, but seem to agree that if a low-achieving student progresses, it will be because of the teacher's use of formative assessment (Q44).

This conundrum reflects a common divergence between teacher preparation and classroom action. For self-efficacy, we believe the explanation lies within its theoretical basis. The work of Bandura [10] shows that beliefs that one can perform a task (such as providing formative assessment) are constantly mediated by the context of the performance. The self-efficacy attributes we measured were therefore composed of 'capacity beliefs' and 'outcome expectations', meaning that even with strong teacher beliefs in a capacity to use formative assessment, a given teaching situation may make it difficult or impossible to implement. For example, the participants in this study learned the affordances of peer feedback in their teaching. However, when they implemented it, they sometimes found that their students were not skilled at providing useful peer feedback to one another, and so the teachers either became reluctant to use it or actively taught their students how to provide good feedback [45]. Their negative 'outcome expectation' based on novice student feedback was sometimes overcome through student practice at giving feedback, thereby allowing the participants' high efficacy belief about peer feedback to be realized.

Super Group B can be contrasted with Super Group A in that its members both choose higher-ranking categories (the relevant test statistics and p-values for all questions are given in the Supplemental File) and show less variety (lower entropy) on questions that pertain to formative assessment skills. This difference can be confirmed by returning to the network representation. Super Group B appears as a more tightly knit group in Figure 3c, and this is confirmed numerically in that the internal similarity of the group is higher than for the two other groups (see Supplemental File, Table S2). There is some variation in their answers to questions pertaining to outcome expectations (Q43, Q44, and Q46R). Interestingly, some chose not to answer Q44 (13%) and Q46R (21%). This may reflect some uncertainty about the effect of formative assessment in general, even if they believe that they are very capable of providing formative assessment.

Super Group C represents a middle ground between Super Groups A and B. Its responses are typically marginally different from one of these super groups while significantly different from the other. Interestingly, Super Group C has higher diversity (entropy) than the two other super groups with regard to outcome expectations (Q43, Q44, Q46R), knowing the necessary steps to teach effectively using formative assessment (Q40), and having the necessary skills to use formative assessment (Q49R). In this sense, Super Group C seems to represent a group of teachers for whom knowledge, skills, and even the general applicability of formative assessment are uncertain.

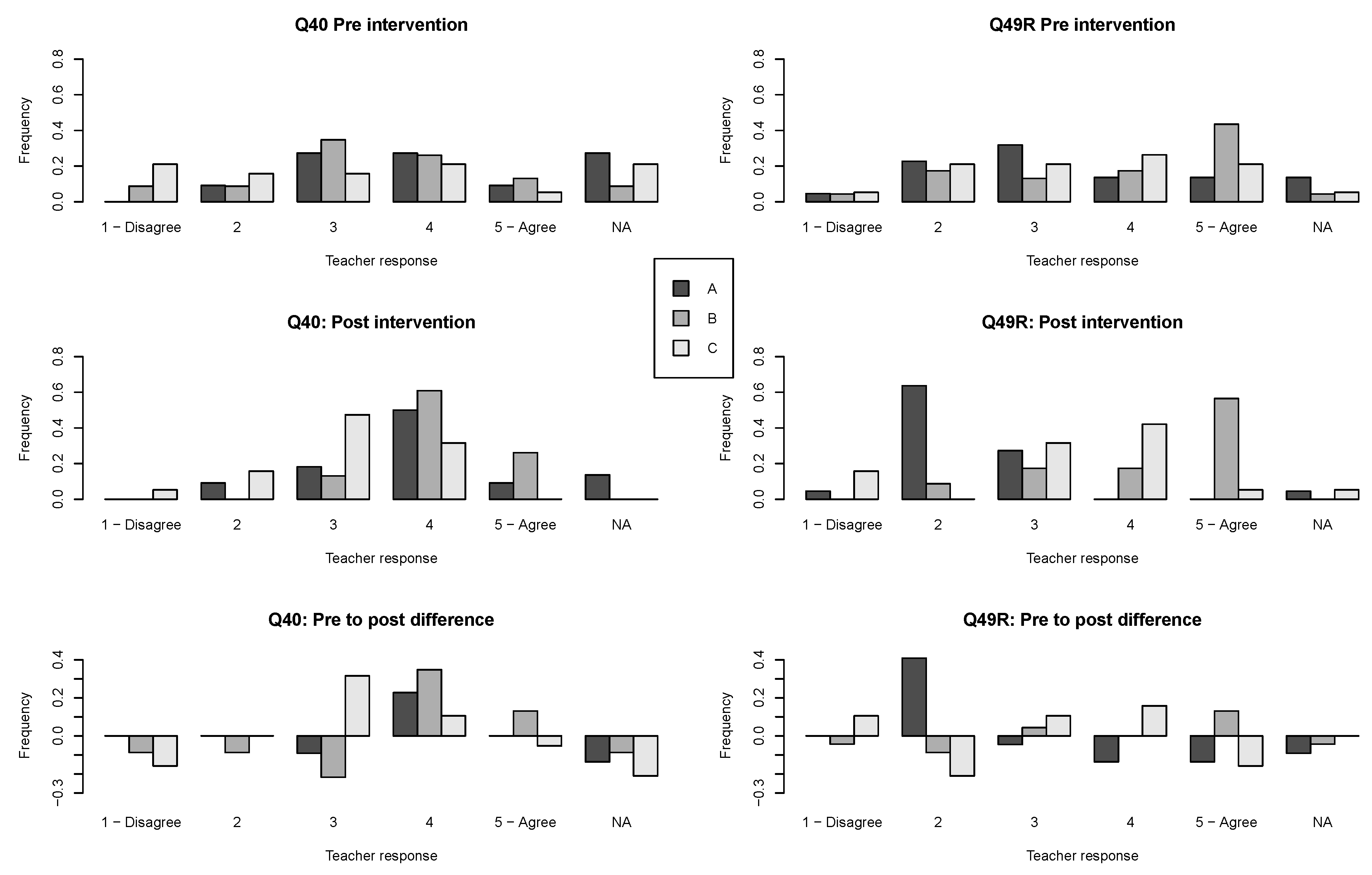

To investigate how Super Groups differ with respect to change in self-efficacy attributes, we compared pre intervention with post intervention frequency distributions, focusing on differences in change between Super Groups.

Figure 6 compares pre intervention and post intervention assessment attribute choices and shows the differences, or shift, for each Likert-type option for each question. Taking the graph for Q40 as an example, the positive value of 0.21 for Likert-type option '3' for Super Group C arises because 0.16 of Super Group C members chose '3' at pre intervention while 0.47 of Super Group C members chose this option at post intervention, signifying a positive shift in the proportion of respondents from this group choosing this option. Similarly, negative values signify a decrease.
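The shift for each option is the post intervention proportion minus the pre intervention proportion. A minimal sketch, assuming answers have already been recoded as integers 1 to 5 with `None` for NA; the function names and answers are hypothetical. Because both distributions sum to 1, the shifts for one question and group always sum to 0, so a positive shift on one option is necessarily balanced by negative shifts elsewhere.

```python
from collections import Counter

CATEGORIES = [1, 2, 3, 4, 5, None]  # None = not answered (NA)


def proportions(answers):
    """Share of answers falling in each category."""
    counts = Counter(answers)
    return {c: counts[c] / len(answers) for c in CATEGORIES}


def shifts(pre_answers, post_answers):
    """Post minus pre proportion for each Likert option (and NA)."""
    pre, post = proportions(pre_answers), proportions(post_answers)
    return {c: post[c] - pre[c] for c in CATEGORIES}


# Hypothetical answers from one Super Group to one question.
s = shifts(pre_answers=[3, 4, 4, 5], post_answers=[3, 3, 4, 4])
print(s)
```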

In Figure 6, we notice apparent shifts towards more consensus within Super Groups on Q40 (know necessary steps). Super Group A's responses seem shifted towards option 4 (indicated by the positive value), as are Super Group B's. Super Group C's responses seem shifted towards option 3. To quantify these apparent shifts, we compared them with all 216 shifts (see Supporting File for details) by calculating the Z-score, z = (x − μ)/σ, where x is a given shift and μ and σ are the mean and standard deviation of all shifts. Compared to all shift values, the shift in option '4' on Q40 for Super Group A was marginally significant, the shift for Super Group B on option '4' was significant, and the shift for Super Group C on option '3' was also significant. Furthermore, the negative shift for Super Group B on option '3' was marginally significant. No other shifts in the distribution were significant. Thus, we can say that a positive shift occurred for each Super Group towards either a medium or a medium-high level of agreement with Q40's statement, but we cannot claim that this resulted from any one negative shift on other options. Rather, it seems that each Super Group's distribution narrowed towards medium or medium-high levels of agreement with knowing the necessary steps to teach effectively using formative assessment. For Q49, only one shift was significant: Super Group A had a significantly positive shift on option '2'. No other shifts were significant for this question. Thus, while Super Groups B and C did not change their position on Q49, Super Group A shifted towards not being certain of having the necessary skills to use formative assessment. We have included pre intervention to post intervention graphs for all questions in the Supplemental Material.
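The Z-score comparison can be sketched as follows. The reference set is assumed to be the full collection of shift values; 216 is consistent with 12 questions × 3 Super Groups × 6 options (1 to 5 plus NA). The sketch uses the population standard deviation. The cut-offs for 'significant' (|z| ≥ 1.96) and 'marginally significant' (|z| ≥ 1.645) are assumptions, since the study's own thresholds are not restated in this section. The toy shift values are hypothetical.

```python
import statistics


def z_scores(all_shifts):
    """Standardise every shift against the mean and SD of all shifts."""
    mu = statistics.fmean(all_shifts)
    sigma = statistics.pstdev(all_shifts)  # population SD (an assumption)
    return [(x - mu) / sigma for x in all_shifts]


def label(z, sig=1.96, marginal=1.645):
    """Hypothetical two-sided cut-offs for 'significant' and 'marginal'."""
    if abs(z) >= sig:
        return "significant"
    if abs(z) >= marginal:
        return "marginal"
    return "n.s."


toy = [0.0] * 20 + [0.3, -0.3, 0.05, -0.05]
for x, z in zip(toy[-4:], z_scores(toy)[-4:]):
    print(x, round(z, 2), label(z))
```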

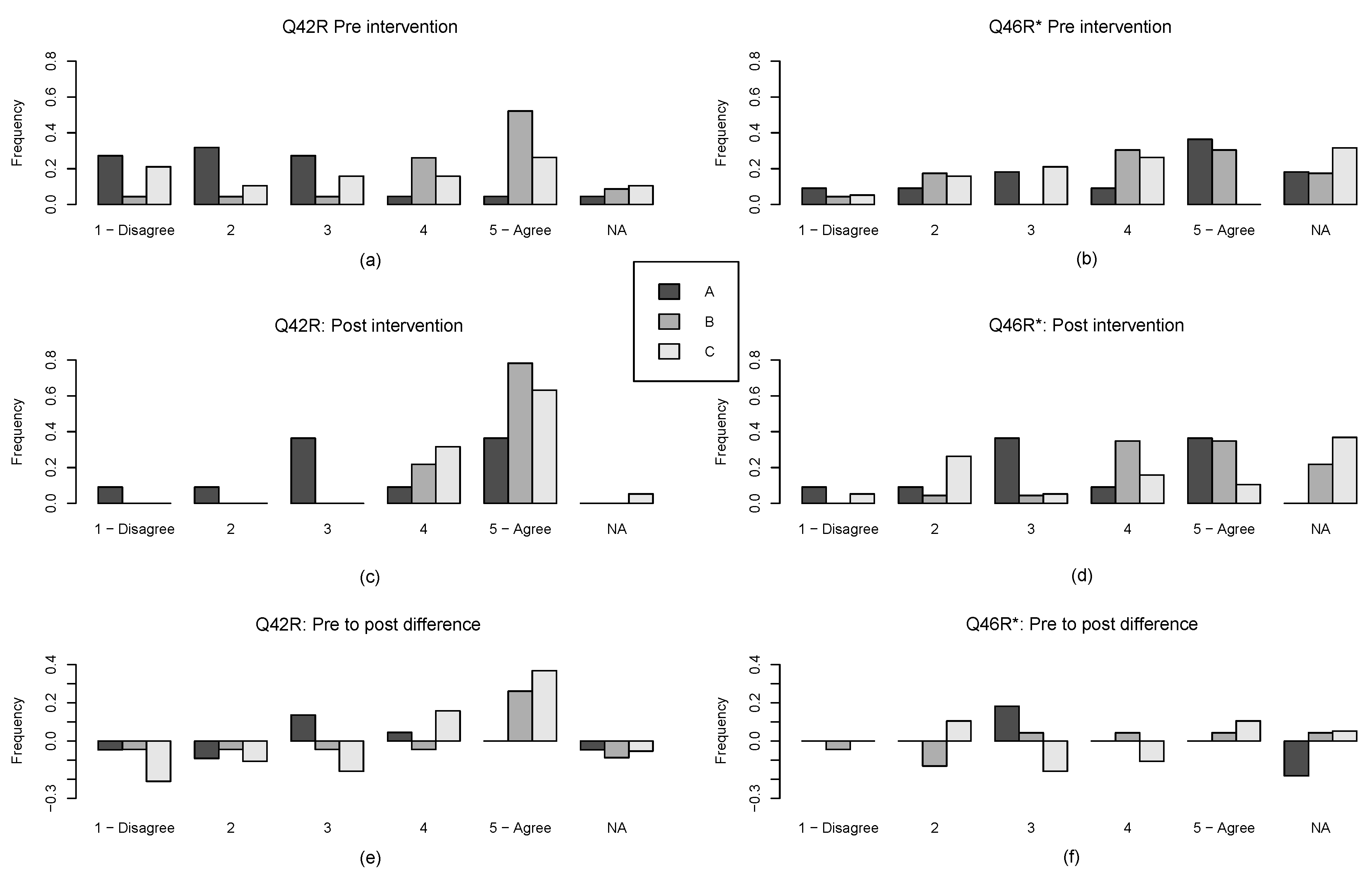

As a different illustrative example, we compared Super Group shifts on Questions Q42 and Q46. Figure 7 shows that Super Groups B and C shifted their selection towards agreeing with the self-efficacy attribute statement that their teaching would be very effective using formative assessment. The shifts towards option '5' were significant for Super Group B and for Super Group C. Super Group C has some uncertainty as to whether an increased effort in using formative assessment will produce change in some students' achievement (options '2' and 'NA' are prevalent in Figure 7f). Super Group B, on the other hand, seems to be more certain that increased effort in using formative assessment may produce change in student achievement. This may be a subtle signifier that the intervention supported both groups in their beliefs that they are able to use formative assessment, but did not convince teachers in Super Group C that this would be beneficial to all students. Super Group B may have gone into the project with the belief that formative assessment would be beneficial to students and did not change that belief.

These detailed analyses of Q40, Q49, Q42, and Q46 serve as illustrations of the differential responses and patterns of movement for each Super Group. While we believe that these two illustrative findings could potentially be important, any interpretation would be speculative, and a richer analysis involving, e.g., interview data would be needed to substantiate this picture.

5. Discussion

The purpose of this study was to find out how the individual self-efficacy attributes of practicing teachers are differentially 'affected' in different educational contexts, here represented by Local Working Groups (LWGs), when the teachers work with formative assessment strategies in collaboration with researchers and other teachers. A network-based methodology to analyse the similarity of teachers' responses to self-efficacy attribute questions was developed for this purpose. Based on the similarity of answers, the results showed heterogeneity among groups.

For example, the methodologically identified Super Group A did not perceive themselves to have the necessary skills to use formative assessment strategies in their teaching (Q49R), even though they did know the necessary steps to teach effectively using formative assessment (Q40). We see this as a gap between knowledge and practice which, if verified, would be useful knowledge during teacher development. The patterns of the Super Groups can alert teacher educators to unique strengths and challenges of teachers in their local and national context, allowing more finely tuned efforts to affect self-efficacy attributes with Bandura's suggestions for change [10]. At the same time, Super Group A showed a lot of diversity in their responses with regard to more specific aspects of the necessary skills. On the other hand, Super Group B's responses were similar and indicative of high self-efficacy attributes. Super Group B did show some variation on outcome expectations, which may be indicative of some uncertainty in the group about the possible effects of formative assessment on student learning. Super Group C's responses represented slightly lower self-efficacy attributes; their answers were not as diverse as Super Group A's but more diverse than Super Group B's. In particular, their responses to outcome expectation questions showed the most diversity (entropy), possibly signifying more uncertainty about the effects of formative assessment on student learning than was found in Super Group B.

With the approach described here, new detailed patterns, derived from the raw data, become visible. The pre intervention to post intervention differences in Figure 6 and Figure 7 show differential changes for each of the Super Groups, which offer useful patterns of responses to self-efficacy attributes. Our analyses provided illustrative examples of such patterns by comparing shifts from pre intervention to post intervention in responses to questions Q40, Q49, Q42, and Q46. While not conclusive, these analyses of change suggest how learning processes could be described differently for different groups of participating teachers.

The network analyses for Super Groups A, B and C (Figure 3a,b) showed cluster relationships based on similarity of post intervention answers to the self-efficacy questions. They showed that Super Group A was composed largely of teachers from LWG 2. Super Groups B and C were more heterogeneous with regard to LWGs. This study's analysis does not reveal why the different patterns in self-efficacy attributes between super groups occur. The analysis of over-representation showed no evidence of teaching subject or years of teaching experience being factors. Instead, LWG origins did show evidence of over-representation. However, any influence of LWGs on self-efficacy development remains speculative. The question of why might in principle be answered quantitatively if (1) the data consisted of a larger group and/or (2) other variables, for example, more detailed knowledge about participant teaching practices and school context, were integrated into the analyses. Likewise, a detailed qualitative analysis of teacher-teacher and researcher-teacher interactions in the six LWGs could have been coupled with the analyses of this paper to explain the patterns of change in self-efficacy attributes. Still, the results of this methodology raise interesting questions that may be posed to data resulting from this kind of study.

One set of questions pertains to the details of the distributions of answers to the questionnaire. Does Super Group A's apparent agreement on a lack of skills, combined with their diversity in terms of which skills they seem to lack, mean that a further targeted intervention might have raised their self-efficacies? For instance, the intervention might have used the Post Groups as a basis for coupling teachers with similar problems. Does the diversity of Super Group C on outcome expectations mean that their experiences of how formative assessment affected students do not match the literature suggesting that most or all students benefit from formative assessment strategies? We need further data from the LWGs to develop and confirm these and other newly emerging hypotheses. The network analyses have given us more detailed and insightful looks into the self-efficacy attributes of our project participants than a traditional overall pre intervention to post intervention comparison would provide.

Another set of questions pertains to background variables and points to a finer grained analysis of activities in the LWGs. Previous analyses [27] showed mainly positive, yet somewhat mixed, changes on each question for the whole cohort. We argue that the network analyses presented here have yielded greater understanding via our characterisation of the super groups. Using the background variable descriptions of super groups (e.g., that LWGs 2 and 4 were over-represented in Super Group A but that subject and years of teaching were not) may help identify which LWGs to focus on. For example, records of LWG activity during the intervention might be used to find opportunities for changes in self-efficacy attributes that may have shaped responses as found in the super groups. Such insights may provide a finer grained understanding of the changes in teachers' confidence in using the project's formative assessment methods.

A third set of questions pertains to how this kind of analysis could be used in future projects. While we have made the case that this yields a finer grained analysis and thus deeper understanding, we also believe that there is room for integrating the analysis into an earlier stage of research. Working with LWGs means that researchers not only act as observers but purposefully interact with teachers to help them implement research-based methods for teaching, for example, formative assessment methods. Researchers also purposefully provide self-efficacy raising experiences for the teachers. Knowing how different teachers manage different kinds of interventions may help researchers develop and test training methodologies in the future. In using this method in future intervention studies, we propose that researchers might use the Post Groups as an even more detailed unit of analysis. Each Super Group consisted of 3–5 Post Groups, each with 3–9 participating teachers. Knowing each of these groups' response patterns might help researchers design specific interventions for the teachers represented in a Post Group. For instance, if a group shows the same gap between knowledge and skill as Super Group A, researchers may provide opportunities for guidance: they could help with a detailed plan for employing a particular formative assessment strategy, then observe teaching and facilitate reflection afterwards for these teachers. Such a strategy is costly to employ at the level of a whole cohort, but the described methodology would allow researchers to focus on particular groups.

Such a strategy would benefit from more measurements during the project. With more measurements, for example after a targeted intervention as just described, researchers might be able to gauge to what extent teachers’ self-efficacy attributes changed. Such a strategy would also better reflect the nature of self-efficacy as malleable and constantly changing.

Given the organization of teachers into LWGs, a fourth set of questions pertains to the group dynamics of each LWG. The literature offers several theoretical frameworks for analyses of group dynamics, for example, Teacher Learning Communities (TLC) [46,47] or Communities of Practice (CoP) [48]. A TLC requires “formation of group identity; ability to encompass diverse views; ability to see their own learning as a way to enhance student learning; willingness to assume responsibility for colleagues’ growth” ([46], p. 140). A CoP in the sense of Wenger [48] requires a joint enterprise, mutual engagement and a shared repertoire. While a deeper analysis of, e.g., LWG meeting minutes from a TLC or CoP perspective might help explain some of the findings of this study, the present analysis was not designed to capture elements of either TLCs or CoPs.

One more result merits a discussion of a future research direction. We found that the pre intervention groups were different from the post intervention groups (Post Groups 1–12). Network analysis offers tools with which to analyse such differences in network group structure [33]. One method is a so-called alluvial diagram [49], which visualises changes in group structure. Using such tools, one could investigate whether teachers move between groups together, and which responses remain constant and which change for such moving groups. However, with our current data set, any explanation of such potential changes would be highly speculative.
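The data behind an alluvial diagram are essentially a table of flows. For each pair of a pre intervention group and a post intervention group, the table records how many teachers moved from the one to the other. A minimal sketch, assuming each teacher has exactly one group label in each network; the teacher IDs, labels, and the `min_size` threshold are hypothetical, and the drawing itself would be left to a dedicated visualisation tool.

```python
from collections import Counter


def group_flows(pre_group, post_group):
    """Count teachers moving from each pre group to each post group.

    pre_group, post_group: dicts mapping teacher ID -> group label.
    Only teachers present in both networks are counted.
    """
    shared = pre_group.keys() & post_group.keys()
    return Counter((pre_group[t], post_group[t]) for t in shared)


def moved_together(flows, min_size=2):
    """Flows large enough to suggest a set of teachers moving as a unit."""
    return {pair: n for pair, n in flows.items() if n >= min_size}


pre = {"t1": "Pre 1", "t2": "Pre 1", "t3": "Pre 1", "t4": "Pre 2", "t5": "Pre 2"}
post = {"t1": "Post 3", "t2": "Post 3", "t3": "Post 1", "t4": "Post 1", "t5": "Post 2"}
print(sorted(moved_together(group_flows(pre, post)).items()))
```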

Obviously, the study is limited by the small number of participating teachers. Thus, it is not certain that a new study will produce Super Groups that resemble the groups found in this study. A second issue is that we have not verified the validity of the self-efficacy attributes of teachers in each group. Even though self-efficacy attributes are commonly assessed with questionnaires, the added dimensions this network analysis reveals may be validated through, for example, teacher interviews, meeting minutes, and observations. The major strength of the methodology seems to us to be that it allows us to scrutinize the data in new ways and to ask questions that we find relevant and which we were not able to ask before.