Comparing Approaches for Evaluating Digital Interventions on the Shop Floor

Abstract

1. Introduction

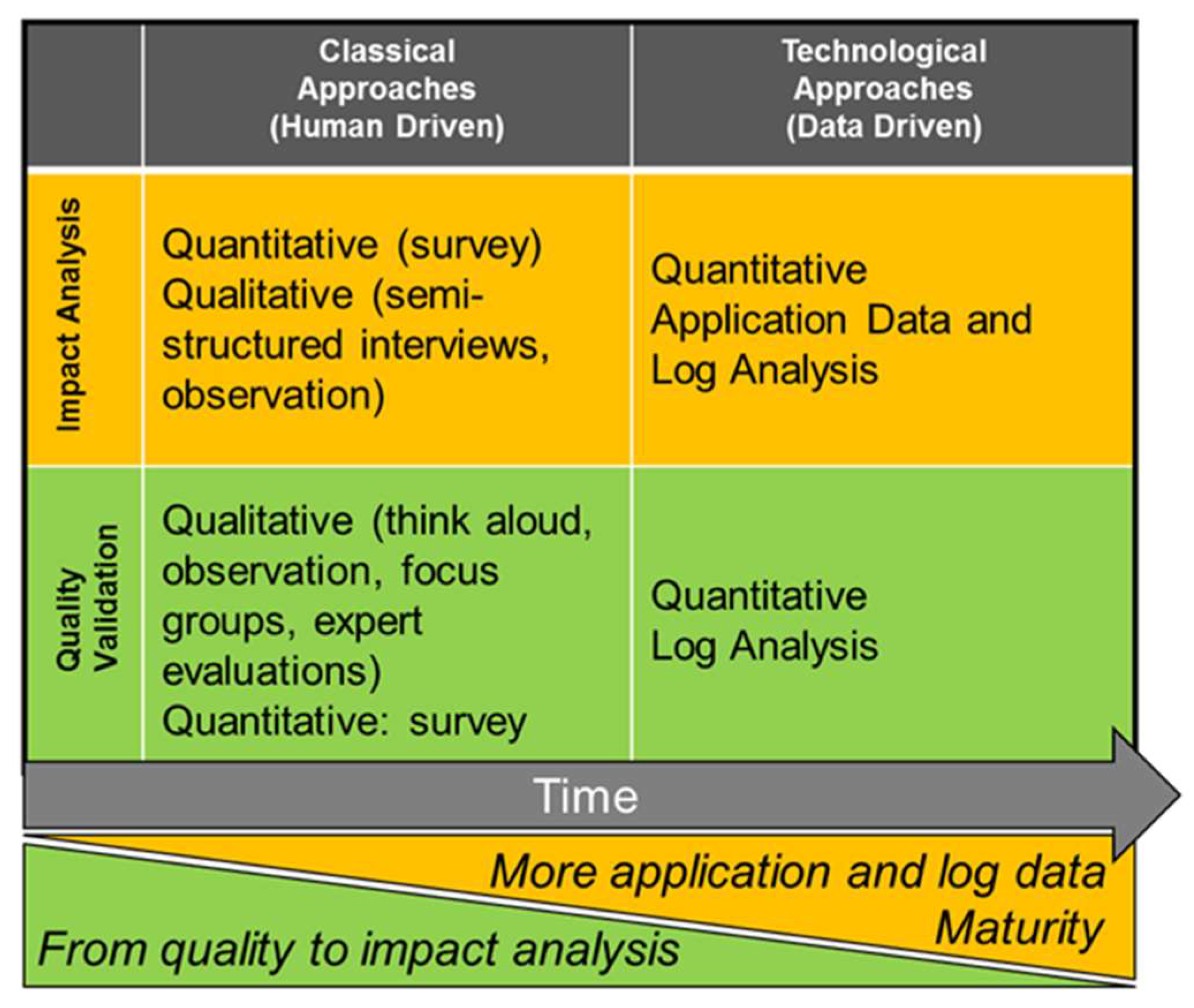

2. The Evaluation Frameworks

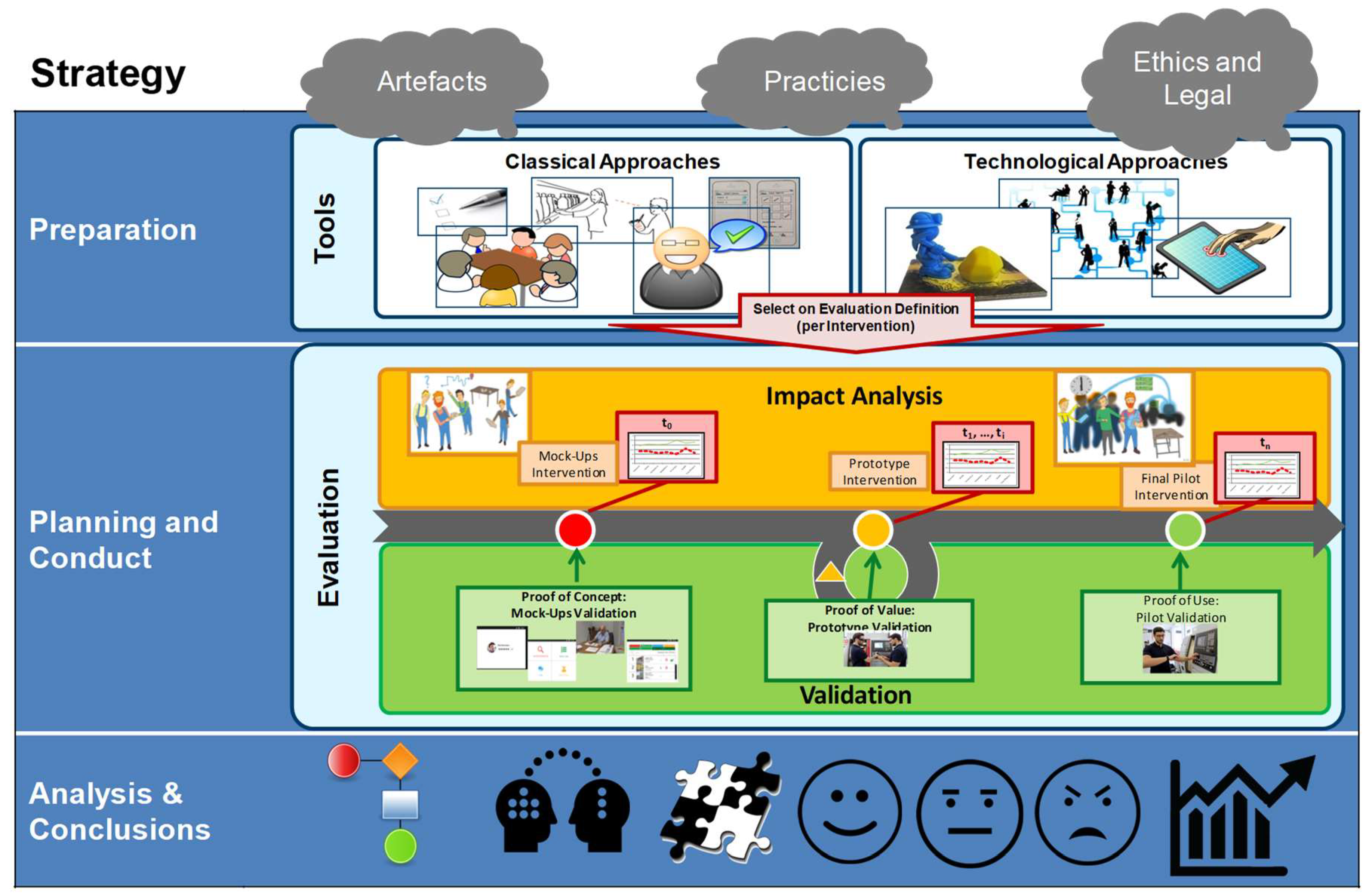

2.1. F4W Evaluation Framework Method and Strategy

- the support of scientific research and use by IT practitioners;

- the need to support artefacts with different development maturity states (mockups, prototypes, pilots);

- the use of the framework in different legal and regulatory environments.

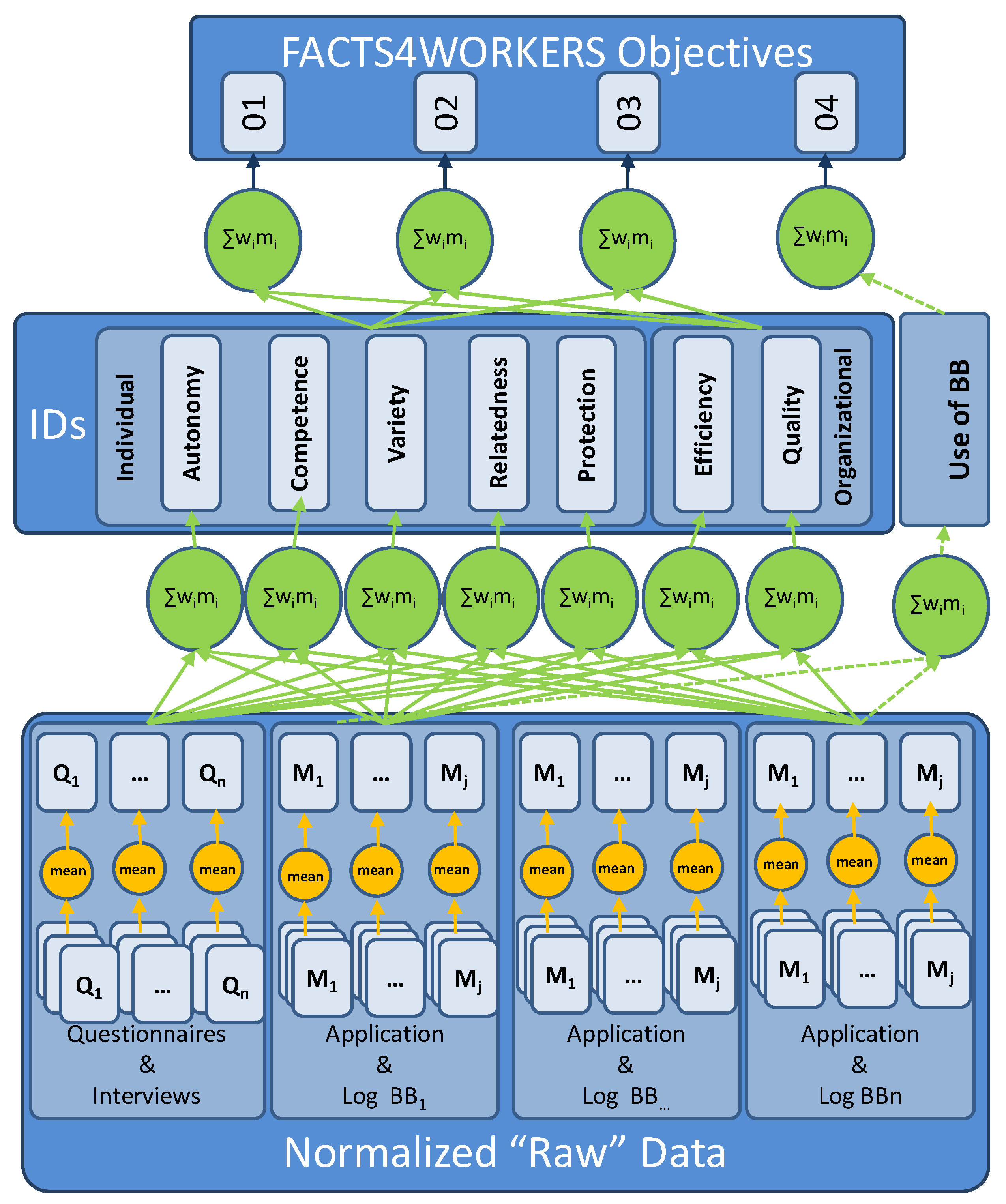

2.1.1. F4W Impact Assessment Quantification Process

2.1.2. F4W Evaluation Strategy

2.2. Heuristics for Exploring Socio-Technical Systems

- #1 Visibility and feedback about task handling success. Focused information is continuously offered about the progress of technical processes and, as far as permitted, about collaborative workflows. This helps us to understand what further steps are possible or not possible and why, and how far the expectations of others are met;

- #2 Flexibility for variable task handling, leading to a participatory evolution of the system. One can vary manifold options of task handling and can flexibly decide about technology usage, time management, sharing of tasks, etc. Consequently, one can develop a wide range of competences that support participation in the ongoing evolution of the whole system;

- #3 Communication support for task handling and social interaction. Through technical and spatial support for communication, one can be reached, to an extent that one can influence, for purposes of task handling and coordination. This support is intertwined with negotiating duties and rights of roles, including values, so that reciprocal reliability can be developed;

- #4 Purpose orientated information exchange for facilitating mental work. To support task handling, information is purposefully exchanged via technical means, updated, and kept available and minimized. This implies the technical linking of information and the emergence of personal profiles that must be visible and a subject of privacy-related self-determination;

- #5 Balance between effort and benefit experienced by organizational structuring of tasks. Tasks assigned to people are pooled and technically supported in such a way that they make sense and are fun. They comply with individual technical, social, and physical competences and support health. These measures aim at the sustainable balancing of efforts and benefits;

- #6 Compatibility between requirements, development of competences, and the system’s features. Technical and organizational features of the system are continuously adjusted to each other. Within clarified limits, they meet the requirements from outside in a way that is based on the development of competencies and proactive help for dealing with varying challenges;

- #7 Efficient organization of task handling for holistic goals. Through appropriate sequencing, tailoring, and distribution of tasks between humans and technology, seamless collaboration is supported. Unnecessary steps or a waste of resources are avoided. An increase of efficiency can be realized if needed;

- #8 Supportive technology and resources for productive and flawless work. Technology and further resources support work and collaboration by taking the intertwining of criteria into account, such as technology acceptance, usability, and accessibility for different users, avoiding consequences of mistakes and misuse, security, and constant updating.

3. Smart Factory Workplace Solution

3.1. Industrial Challenge Self-Learning Manufacturing Workplaces

3.2. Case Vignette Hidria

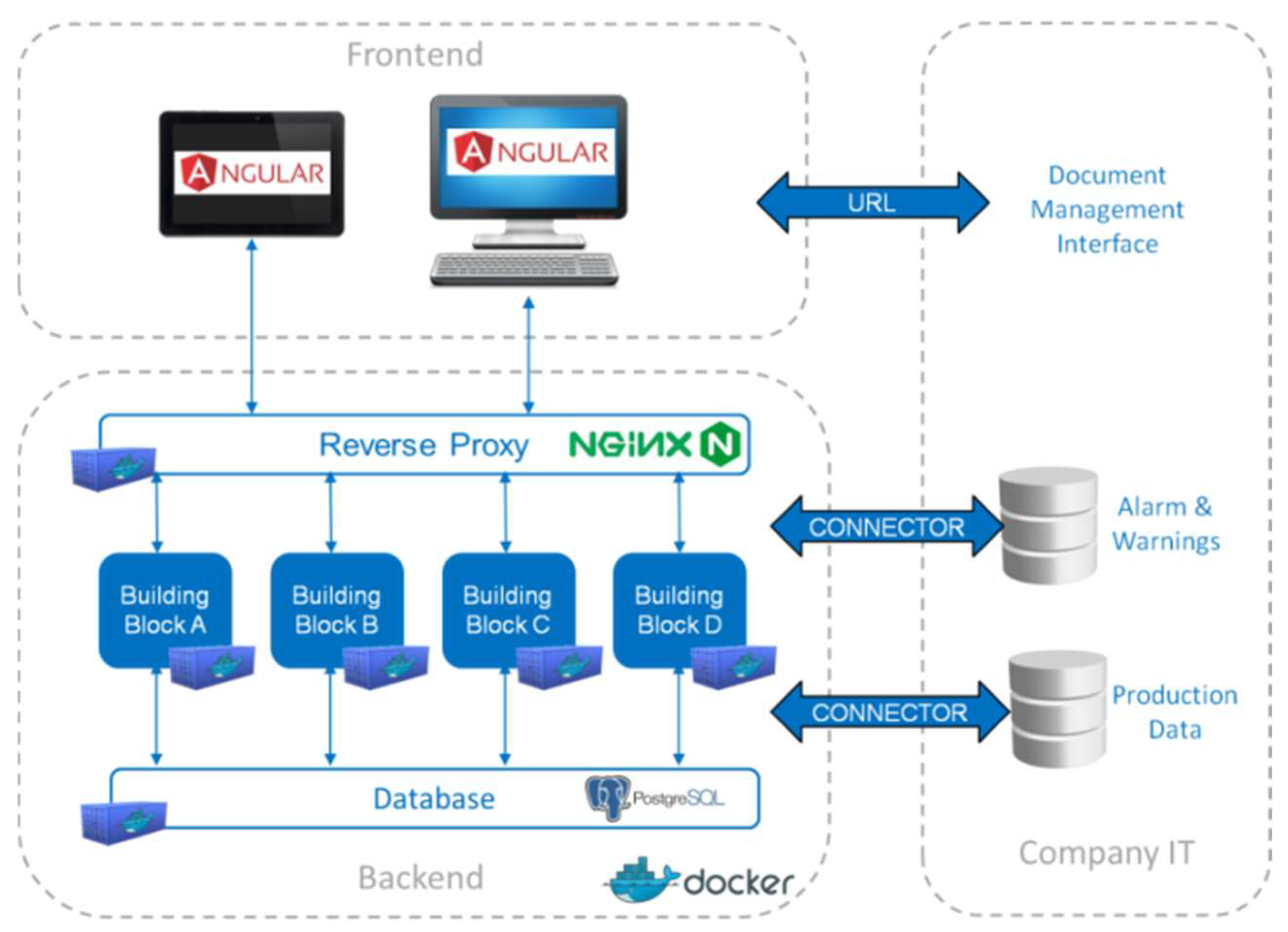

3.3. Technological Approach

3.4. The F4W Solution at Hidria

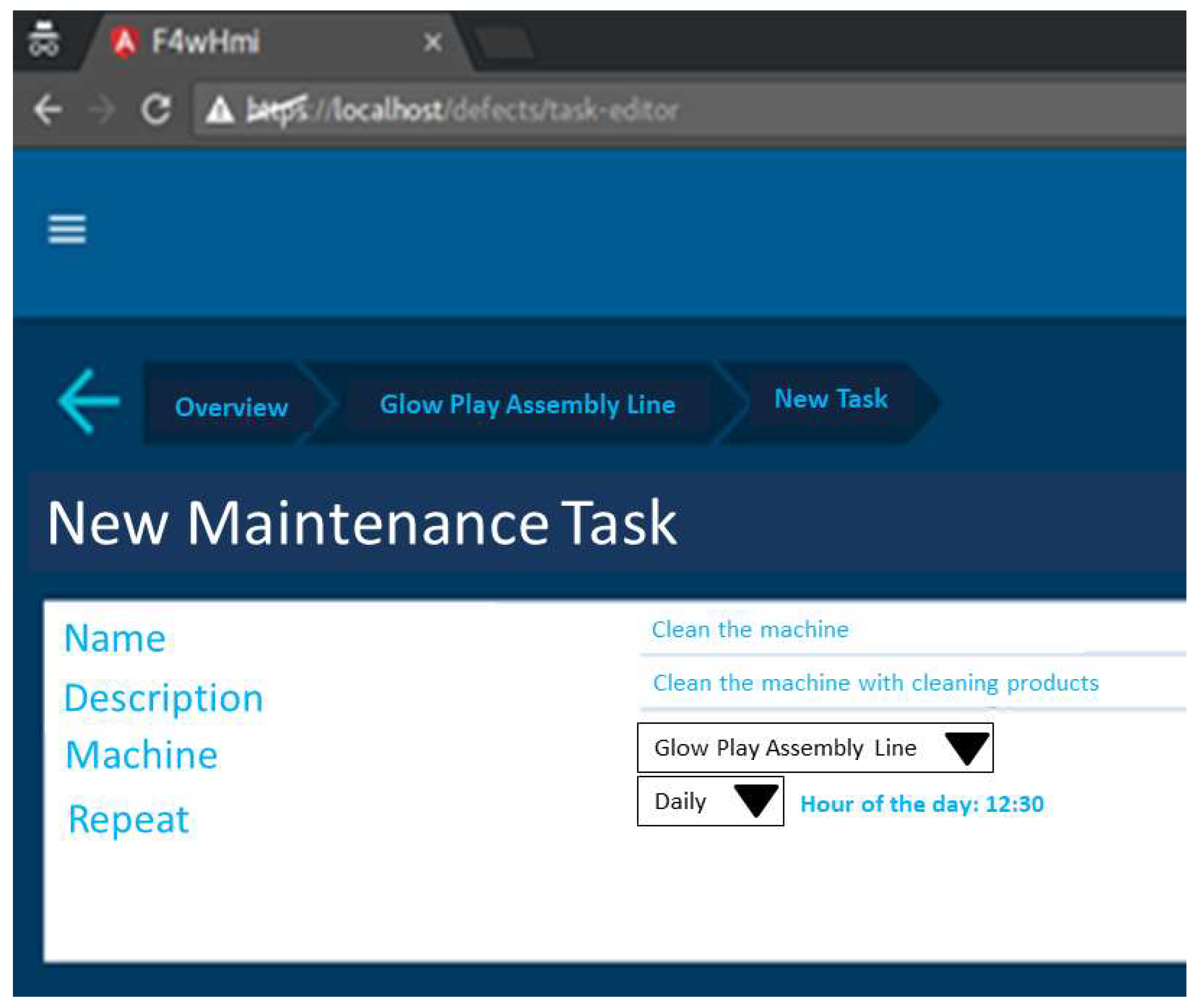

3.4.1. Maintenance Scheduling

3.4.2. Defects and Solutions

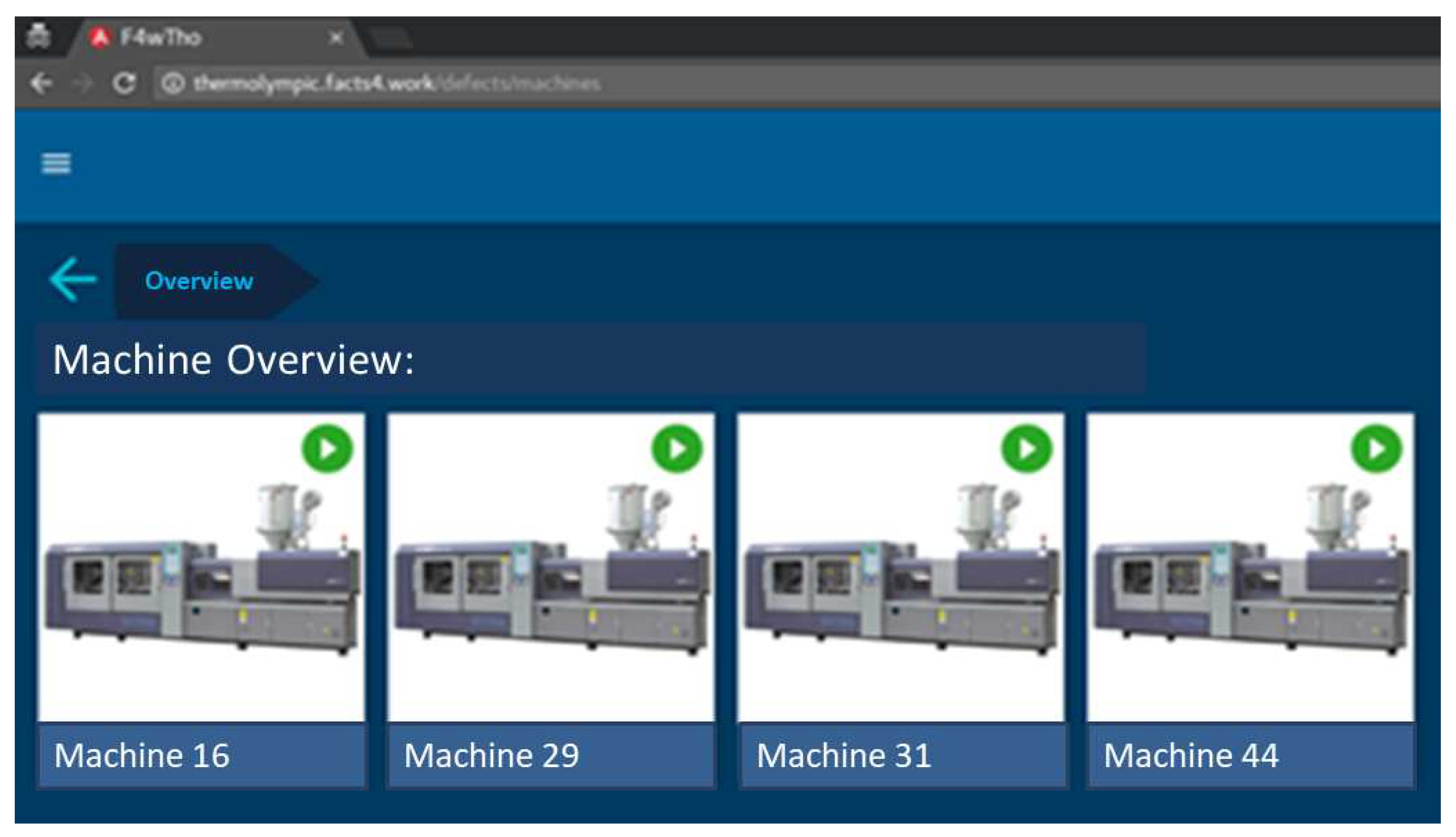

3.4.3. Digital Data Visualization

3.4.4. Trend Analysis

4. Results and Discussion

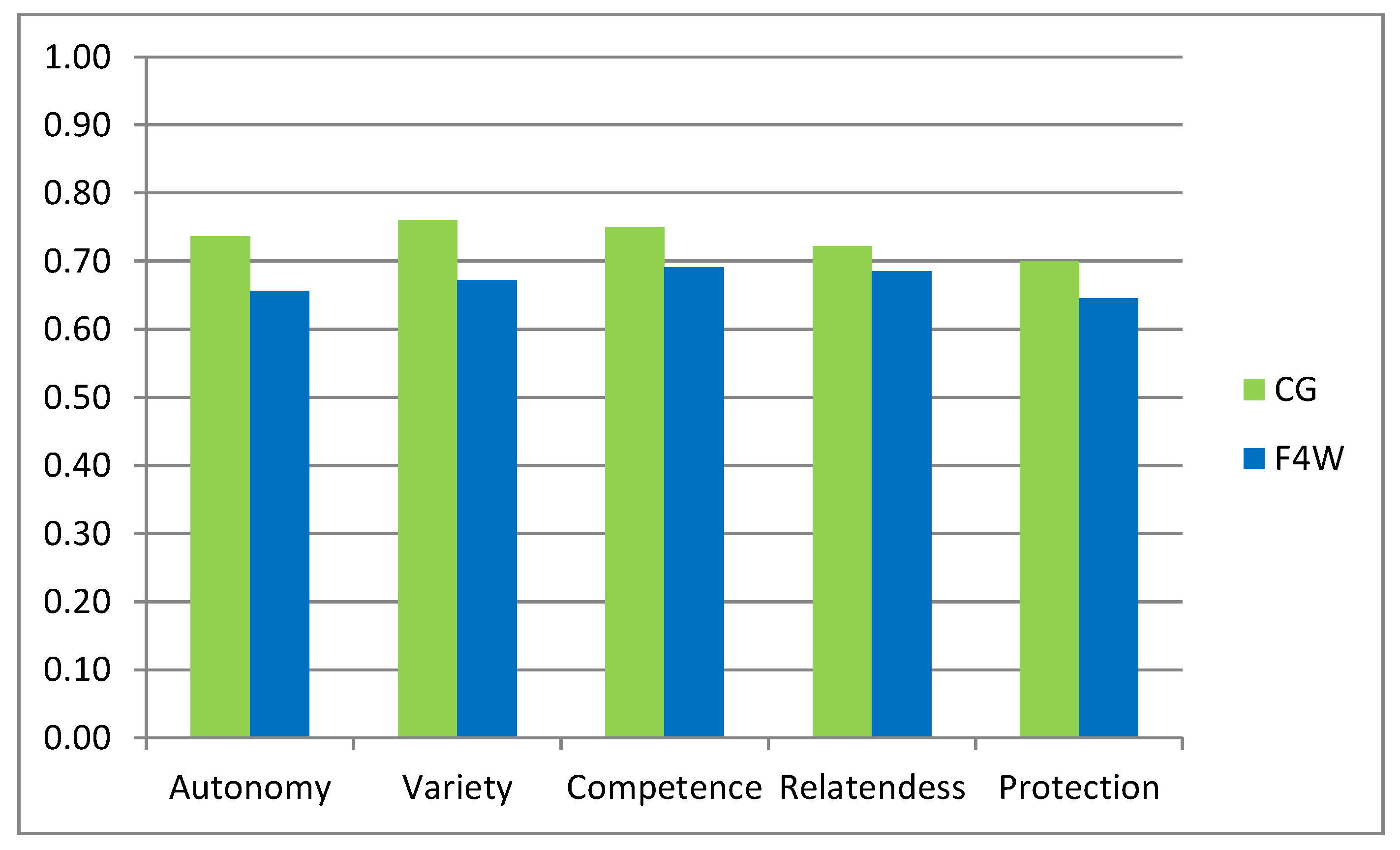

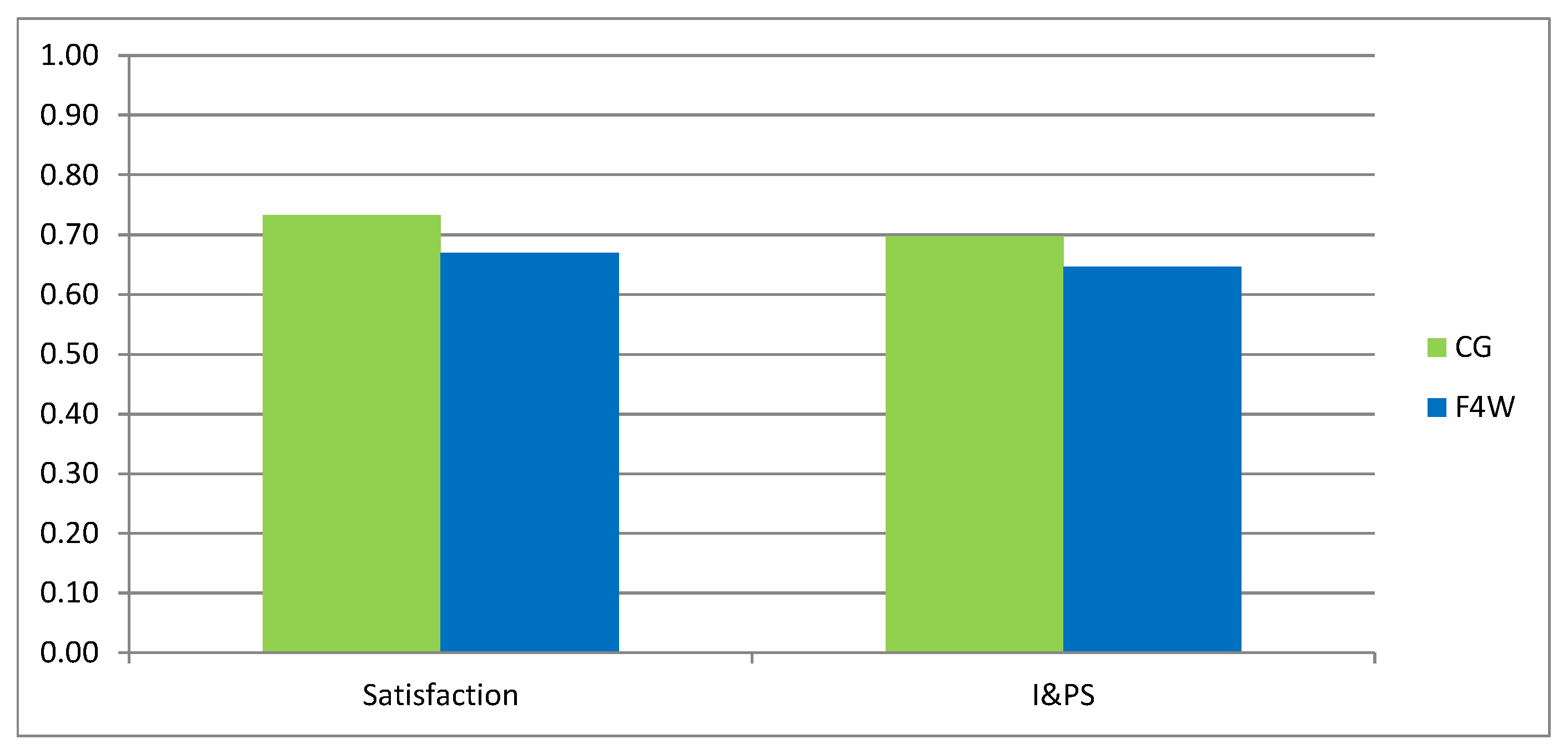

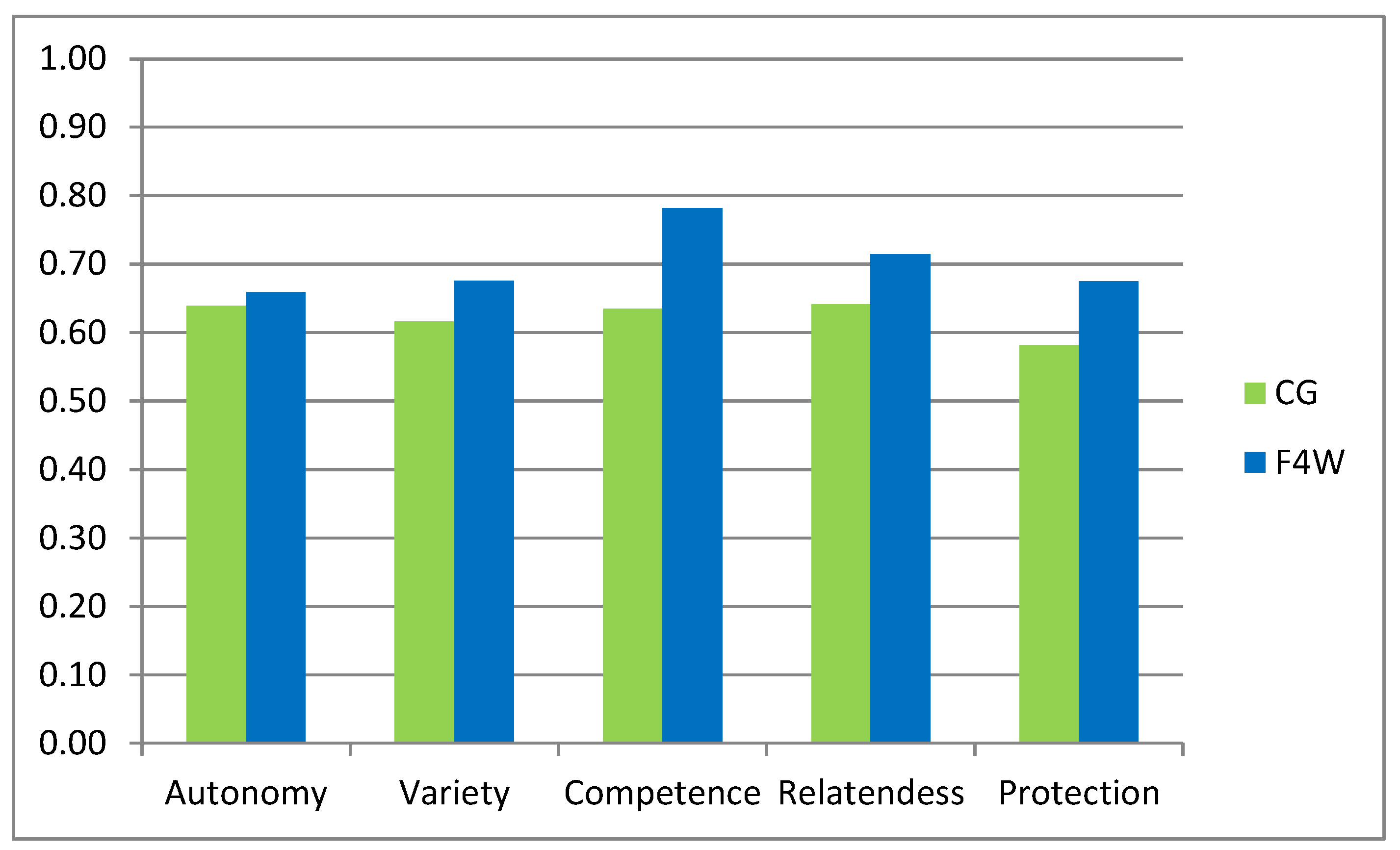

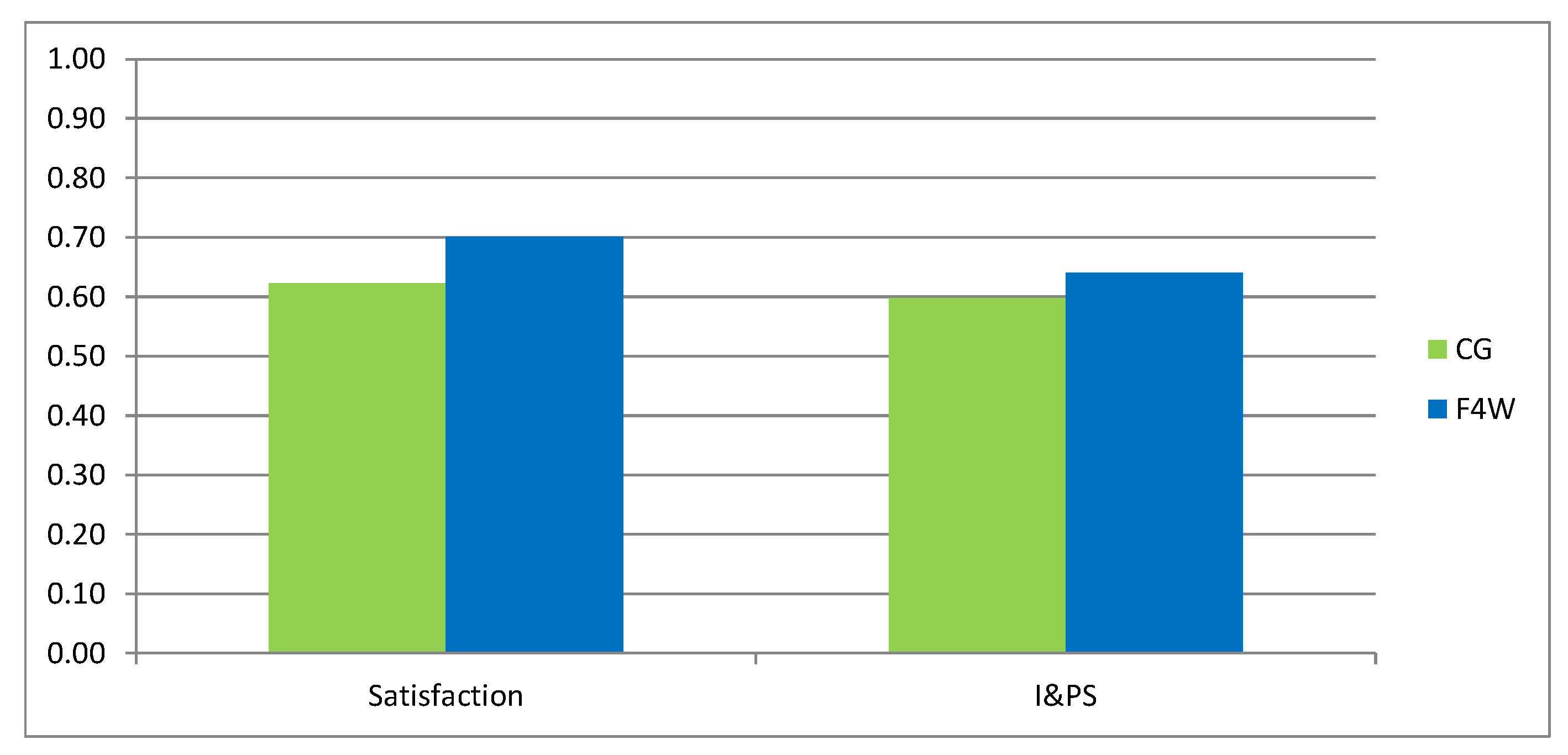

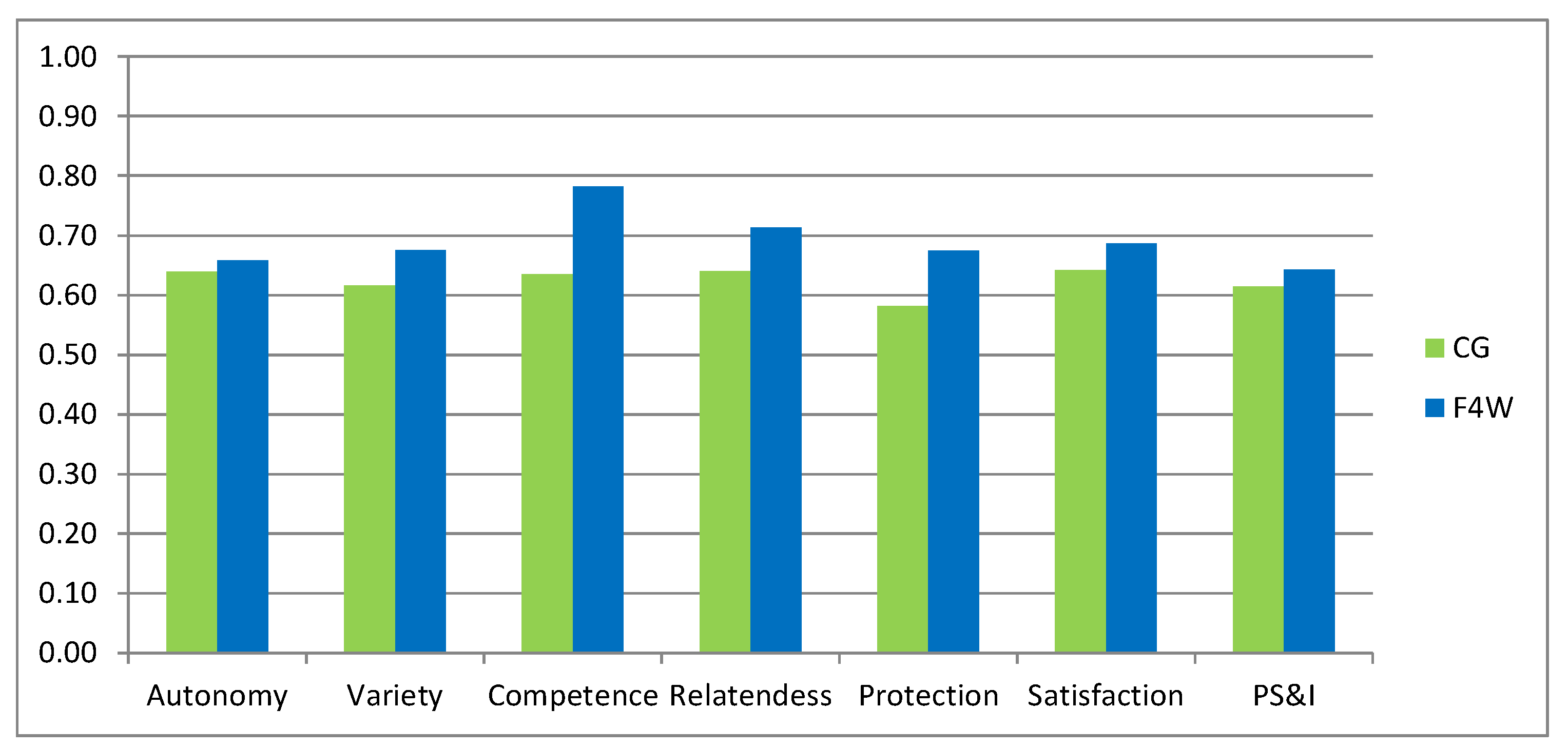

4.1. Evaluation Results Based on the F4W Evaluation Framework

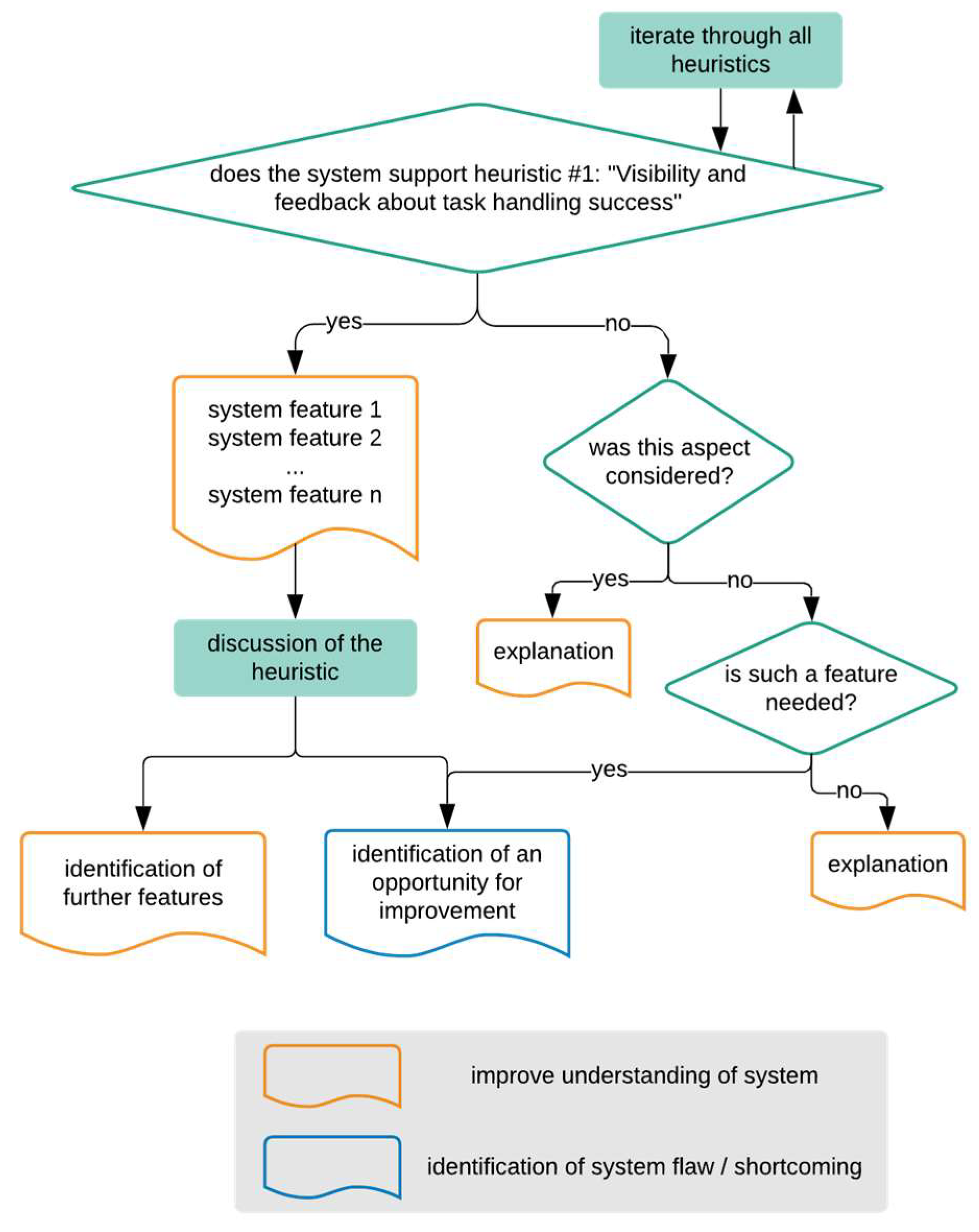

4.2. Analyzing the Hidria Use Case with the Help of Heuristics

- Visibility and feedback about task handling success;

- Flexibility for variable task handling, leading to a participatory evolution of the system;

- Communication support for task handling and social interaction;

- Purpose orientated information exchange for facilitating mental work;

- Balance between effort and experienced benefit by organizational structuring of tasks;

- Compatibility between requirements, development of competences, and the system’s features;

- Efficient organization of task handling for holistic goals;

- Supportive technology and resources for productive and flawless work.

- to deepen the comprehension of the system and to find out about the features that are being offered to its users;

- to understand why the system designers added certain features while others were left out;

- to identify blind spots of the system design that demand further clarification or give hints for improvement.

5. Summary

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Date | No. of Participants: (Future) F4W Users | No. of Participants: Control Group | Object | Method |
|---|---|---|---|---|
| April 2017 | 6 | 3 | F4W: IA base line | Questionnaires |
| April 2017 | 3 | 0 | F4W QV | Observation, interview |
| June 2018 | 3 | 0 | F4W: IA | Questionnaires |

| Heuristic | Finding | System Support (+/−/?) |
|---|---|---|
| 1. Visibility | Rating solutions makes the quality of these proposals comprehensible for others. | + |
| | Workers can identify the authors of the documented solutions. | + |
| 2. Flexibility | Documenting solutions on the shop floor is not enforced. | + |
| | The descriptions of solutions can be created and edited by the users. | + |
| | The set of problems to which the system can react is fixed. | − |
| | Workers are able to rate the quality and appropriateness of the proposed solutions. | + |
| | Rating solutions potentially fosters continuous improvement. | + |
| | The possibility that some workers may be too timid to record a video, which could be bypassed by allowing anonymous postings, was not taken into consideration. | − |
| | It has to be understood whether following the proposed solution is mandatory or at least socially solicited. | ? |
| 3. Communication Support | Authors of the solutions can be contacted if questions arise. | + |
| | It is unclear, though, whether the system offers a direct communication channel with the authors. | ? |
| | Connecting the knowledge management system with other technological components such as additional channels for human-human communication. | − |
| 4. Information Exchange | Knowledge management (KM) is a central contribution to the proper exchange of information. | + |
| | No aggregated data is provided to allow the management to evaluate the workers' performance; consequently, privacy is maintained. | + |
| 5. Balance between effort and benefit | After a large-scale rollout, it should be evaluated whether the workforce in general is motivated to contribute to documentation. | ? |
| | Initially loaded content is requested to be extended/improved by workers. | + |
| | Do workers perceive the provided solutions as beneficial for them? | ? |
| 6. Compatibility | Provided solutions/workings must be evaluated in order to determine how appropriate they are for the situation a worker has to deal with. | ? |
| | The whole knowledge management system offers fluent transitions between working and learning on the job; editing or authorizing solutions is an opportunity for reflection. | + |
| 7. Efficient organization | KM requires additional workload for documenting, but this can be minimized by making capturing as easy as possible. | + |
| | Documenting solutions immediately on the shop floor was identified as the ideal task workflow. | + |
| | Automated provision of the resources (tools, replacement parts) that are needed to work on a problem. | − |
| | How are the processes of editing an existing solution and of creating a new description defined? | ? |
| 8. Supportive technology | Videos can be used for capturing knowledge from workers on the shop floor. | + |
| | Provided solutions/workings must be evaluated in order to determine how appropriate they are for the situation a worker has to deal with. | |
| | Rating solutions potentially eliminates bad solutions. | ? |
| | Connecting the knowledge management system with other technological components such as additional channels for human-human communication or the automated provision of the resources (tools, replacement parts) that are needed to work on a problem are open tasks. | + |
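The ratings in the heuristic findings table above can be summarized per heuristic with a simple tally. The following is a minimal sketch, not part of the original evaluation: the `RATINGS` dictionary and the `tally` function are hypothetical names introduced here for illustration, the ratings are transcribed by hand from the table (with the minus sign written as an ASCII hyphen), and the one finding under heuristic 8 that carries no rating in the table is left out.

```python
from collections import Counter

# Ratings transcribed from the heuristic findings table above.
# "+" = supported, "-" = not supported, "?" = open question.
# Assumption: the table's minus sign is written here as an ASCII hyphen.
RATINGS = {
    "1. Visibility": ["+", "+"],
    "2. Flexibility": ["+", "+", "-", "+", "+", "-", "?"],
    "3. Communication Support": ["+", "?", "-"],
    "4. Information Exchange": ["+", "+"],
    "5. Balance between effort and benefit": ["?", "+", "?"],
    "6. Compatibility": ["?", "+"],
    "7. Efficient organization": ["+", "+", "-", "?"],
    # One finding under heuristic 8 has no rating in the table and is omitted.
    "8. Supportive technology": ["+", "?", "+"],
}


def tally(ratings):
    """Return per-heuristic Counters and an overall Counter of rating symbols."""
    per_heuristic = {name: Counter(marks) for name, marks in ratings.items()}
    overall = Counter()
    for counts in per_heuristic.values():
        overall.update(counts)
    return per_heuristic, overall


per_heuristic, overall = tally(RATINGS)

if __name__ == "__main__":
    for name, counts in per_heuristic.items():
        print(f"{name}: +{counts['+']}  -{counts['-']}  ?{counts['?']}")
    print("overall:", dict(overall))
```

Such a tally only counts symbols; it does not weigh findings against each other, so it is best read as a quick overview of where open questions and unsupported aspects cluster rather than as a score.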

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lacueva-Pérez, F.J.; Hannola, L.; Nierhoff, J.; Damalas, S.; Chatterjee, S.; Herrmann, T.; Schafler, M. Comparing Approaches for Evaluating Digital Interventions on the Shop Floor. Technologies 2018, 6, 116. https://doi.org/10.3390/technologies6040116
