Mapping Linear and Configurational Dynamics to Fake News Sharing Behaviors in a Developing Economy

Abstract

1. Introduction

2. Scholarly Review

2.1. Foundational Theory

2.2. News-Find-Me Perception and Its Impact on Fake News Sharing

2.3. Social Media Trust and Fake News-Sharing Behavior

2.4. Information Sharing and Fake News Sharing Behavior

2.5. Status-Seeking and Fake News Sharing Behavior

2.6. Social Media Literacy as Moderator

2.7. Control Variables and Model Development

2.8. Configural Model with fsQCA

3. Method

3.1. Research Context

3.2. Instrument Development

3.3. Data Collection and Sample Composition

3.4. Missing Values and Confirmatory Factor Analysis

4. Results

4.1. Measurements

4.2. Results of the Hypothesized Relationships via SEM

4.3. Results of Direct Effects

- News-find-me demonstrates a negligible and statistically non-significant effect on fake news sharing, both without (β = −0.013, p = 0.715) and with sociodemographic controls (β = −0.015, p = 0.667), leading to the rejection of Hypothesis 1.

- Social media trust, by contrast, exhibits a robust positive association with fake news sharing that remains significant in both models (β = 0.170, p < 0.001 without sociodemographic controls; β = 0.140, p < 0.001 with them), supporting Hypothesis 2.

- Information sharing negatively predicts fake news sharing, with strong significance in both specifications (β = −0.307, p < 0.001 without sociodemographic controls; β = −0.291, p < 0.001 with them). Because this effect runs opposite to the hypothesized direction, Hypothesis 3 is rejected.

- Status-seeking shows no meaningful effect (β = 0.001, p = 0.986 without sociodemographic controls; β = 0.004, p = 0.900 with them), resulting in the rejection of Hypothesis 4.

A. Hypothesized relationships, excluding demographics

| Path | Standardized Estimate | S.E. | C.R. | p |
|---|---|---|---|---|
| H1: NFM → FNSB | −0.013 | 0.040 | −0.366 | 0.715 |
| H2: SMT → FNSB | 0.170 | 0.038 | 5.122 | *** |
| H3: IS → FNSB | −0.307 | 0.037 | −8.880 | *** |
| H4: SSM → FNSB | 0.001 | 0.037 | 0.018 | 0.986 |

B. Hypothesized relationships, including demographics

| Path | Standardized Estimate | S.E. | C.R. | p |
|---|---|---|---|---|
| H1: NFM → FNSB | −0.015 | 0.039 | −0.430 | 0.667 |
| H2: SMT → FNSB | 0.140 | 0.037 | 4.246 | *** |
| H3: IS → FNSB | −0.291 | 0.036 | −8.502 | *** |
| H4: SSM → FNSB | 0.004 | 0.036 | 0.125 | 0.900 |
| Education → FNSB | 0.064 | 0.036 | 2.187 | 0.029 |
| Age → FNSB | 0.077 | 0.027 | 2.632 | 0.008 |
| Family Status → FNSB | 0.109 | 0.022 | 3.705 | *** |
| Gender → FNSB | −0.069 | 0.058 | −2.352 | 0.019 |
| Occupation → FNSB | −0.024 | 0.021 | −0.824 | 0.410 |

Note: *** p < 0.001. NFM = news-find-me; SMT = social media trust; IS = information sharing; SSM = status-seeking; FNSB = fake news-sharing behavior.
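The p column can be checked against the C.R. column. A critical ratio is an estimate divided by its standard error, and under the usual large-sample assumption that it is approximately standard normal, the two-tailed p-value is P(|Z| ≥ |C.R.|). The S.E./C.R./*** layout resembles AMOS output, although the software is not named in this section. The minimal sketch below uses only the Python standard library, and `two_tailed_p` is a hypothetical helper name.

```python
import math


def two_tailed_p(critical_ratio: float) -> float:
    """Two-tailed p-value for a critical ratio treated as a standard normal z score.

    For Z ~ N(0, 1), P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    """
    return math.erfc(abs(critical_ratio) / math.sqrt(2.0))


# C.R. values copied from Panels A and B of the direct-effects table.
critical_ratios = {
    "H1: NFM -> FNSB (A)": -0.366,
    "H4: SSM -> FNSB (A)": 0.018,
    "H1: NFM -> FNSB (B)": -0.430,
    "H4: SSM -> FNSB (B)": 0.125,
    "Education -> FNSB (B)": 2.187,
    "Occupation -> FNSB (B)": -0.824,
}

for path, cr in critical_ratios.items():
    print(f"{path}: C.R. = {cr:+.3f}, p = {two_tailed_p(cr):.3f}")
```

The C.R. values are themselves rounded to three decimals, so a recomputed p-value can differ from the table in the third decimal. For example, C.R. = −0.366 gives p ≈ 0.714 against the reported 0.715. For H4 in Panel A, C.R. = 0.018 gives p ≈ 0.986.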

4.4. Results of Moderating Effects

- Non-significant moderations:

- ○

- The buffering effect of social media literacy proved insignificant for both news-find-me perception (β = −0.12, p = 0.34) and status-seeking (β = 0.08, p = 0.42), leading to the rejection of H1a and H4a

- Conditional moderation:

- ○

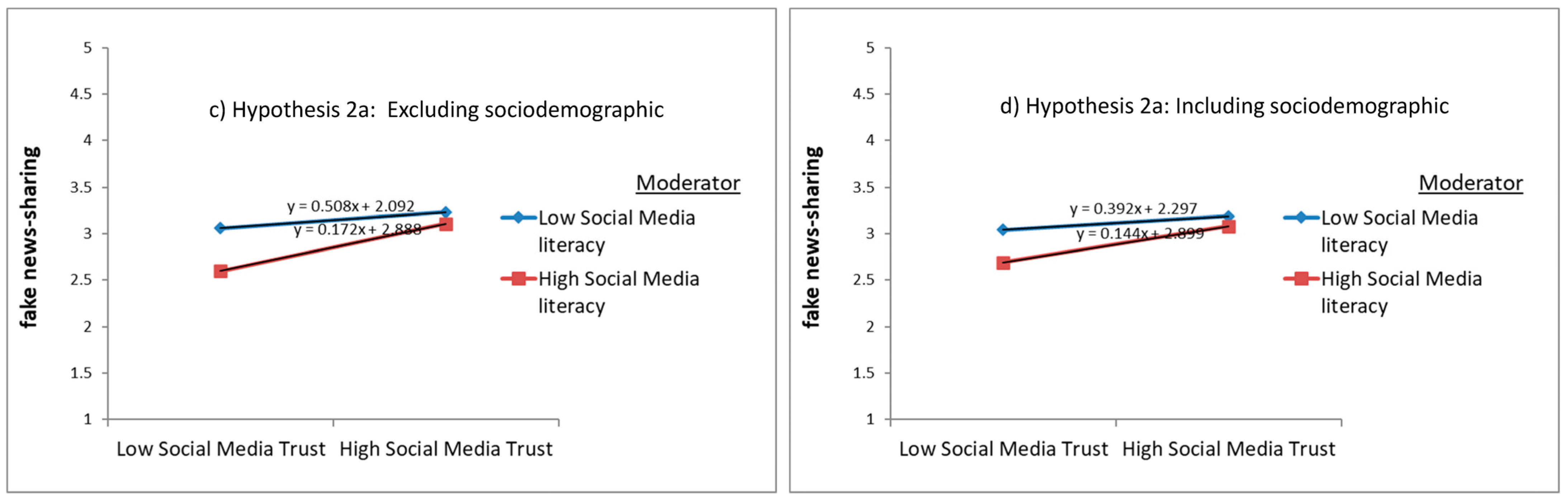

- Social media literacy significantly attenuated the link between social media trust and fake news sharing in the baseline model (β = −0.25, p < 0.01), but this effect diminished when demographics were controlled (β = −0.14, p = 0.06), yielding partial support for H2a

- Robust moderation:

- ○

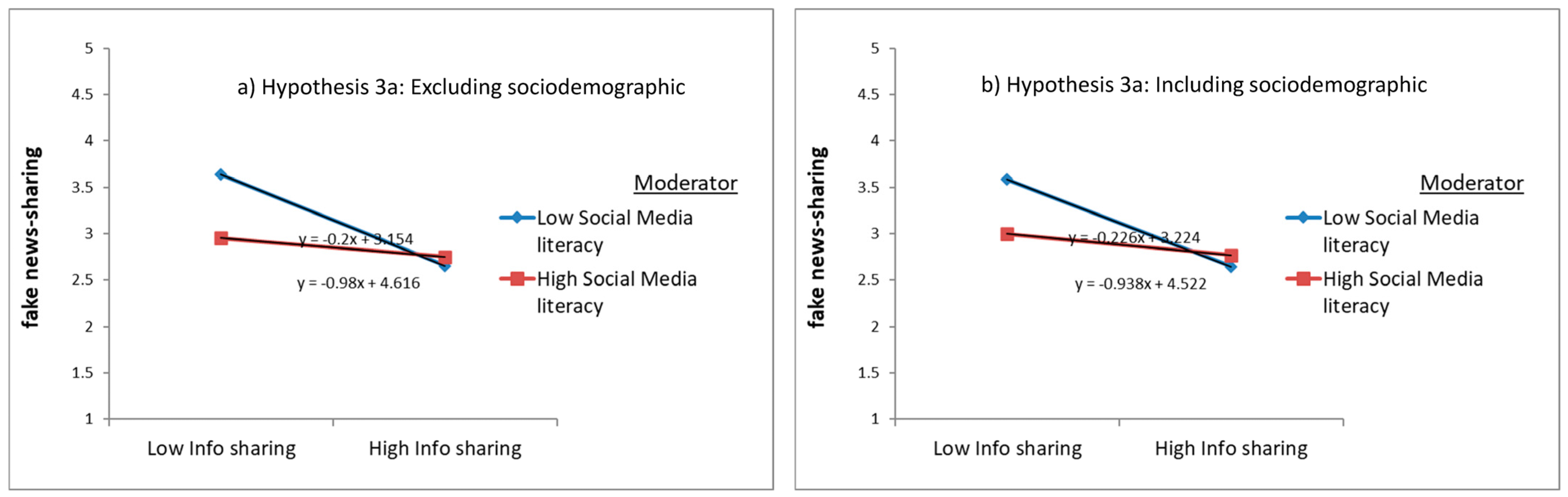

- The weakening effect of social media literacy on information seeking relationship with fake news sharing remained significant across both models (β = −0.31, p < 0.001; β = −0.28, p < 0.01), strongly supporting H3a

A. Hypothesized moderating effects, excluding demographics

| Path | Standardized Estimate | S.E. | C.R. | p | Concluding Remark |
|---|---|---|---|---|---|
| H1: SML*NFM → FNSB | −0.061 | 0.036 | −1.607 | 0.108 | Not confirmed |
| H2: SML*SMT → FNSB | 0.097 | 0.033 | 2.590 | 0.010 | Confirmed |
| H3: SML*IS → FNSB | 0.199 | 0.037 | 5.223 | *** | Confirmed |
| H4: SML*SSM → FNSB | −0.004 | 0.037 | −0.113 | 0.910 | Not confirmed |
| SML → FNSB | −0.135 | 0.039 | −3.710 | *** | Significant |

B. Hypothesized moderating effects, including demographics

| Path | Standardized Estimate | S.E. | C.R. | p | Concluding Remark |
|---|---|---|---|---|---|
| H1: SML*NFM → FNSB | −0.047 | 0.035 | −1.236 | 0.216 | Not confirmed |
| H2: SML*SMT → FNSB | 0.072 | 0.032 | 1.945 | 0.052 | Partially confirmed |
| H3: SML*IS → FNSB | 0.184 | 0.037 | 4.840 | *** | Confirmed |
| H4: SML*SSM → FNSB | 0.018 | 0.036 | 0.472 | 0.637 | Not confirmed |
| SML → FNSB | −0.108 | 0.039 | −2.973 | 0.003 | Significant |
| Education → FNSB | 0.066 | 0.035 | 2.286 | 0.022 | Significant |
| Age → FNSB | 0.057 | 0.026 | 1.975 | 0.048 | Significant |
| Family Status → FNSB | 0.104 | 0.021 | 3.615 | *** | Significant |
| Gender → FNSB | −0.058 | 0.057 | −2.016 | 0.044 | Significant |
| Occupation → FNSB | −0.021 | 0.020 | −0.739 | 0.460 | Not significant |

Note: *** p < 0.001. SML = social media literacy; SML*X denotes the interaction term between social media literacy and predictor X.

- Information sharing: literacy significantly attenuated the negative association with fake news sharing. This held consistently across models without and with sociodemographic controls (β = 0.199 and β = 0.184, both p < 0.001).

- Social media trust: counterintuitively, literacy amplified the positive trust–misinformation link (β = 0.097, p = 0.010). The effect became marginal once sociodemographic controls were added (β = 0.072, p = 0.052).

- Positive sociodemographic predictors: education (β = 0.066, p = 0.022), age (β = 0.057, p = 0.048), and family status (β = 0.104, p < 0.001).

- Negative sociodemographic predictor: gender (β = −0.058, p = 0.044).

- Non-significant: occupation (β = −0.021, p = 0.460).
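The SML*X rows are product (interaction) terms. Their sign, relative to the predictor's own effect, determines whether literacy attenuates or amplifies a link. Within the observed range of the moderator, an interaction coefficient with the opposite sign to the predictor's main effect weakens the link, and one with the same sign strengthens it.

The sketch below shows this with mean-centered product terms and simple slopes. Several parts are assumptions rather than details from the study:

- Mean-centering is assumed, because this section does not state how the products were formed.
- The helper names are hypothetical.
- The scores and coefficients in the usage lines are made-up placeholders, not study estimates.

The table does not report the predictors' main effects in the moderated model, so simple slopes cannot be computed from it directly.

```python
from statistics import mean


def centered_product(x: list[float], m: list[float]) -> list[float]:
    """Interaction term built from mean-centered predictor and moderator scores."""
    x_bar, m_bar = mean(x), mean(m)
    return [(xi - x_bar) * (mi - m_bar) for xi, mi in zip(x, m)]


def simple_slope(b_x: float, b_xm: float, m: float) -> float:
    """Conditional effect of X on Y when the centered moderator equals m.

    From Y = b0 + b_x*X + b_m*M + b_xm*X*M, the slope of Y on X is b_x + b_xm*M.
    """
    return b_x + b_xm * m


# Hypothetical scores for four respondents (illustration only, not study data).
trust = [2.0, 3.0, 4.0, 5.0]
literacy = [1.0, 2.0, 4.0, 5.0]
print(centered_product(trust, literacy))  # [3.0, 0.5, 0.5, 3.0]

# Placeholder coefficients (hypothetical): a negative main effect paired with a
# positive interaction, i.e. the attenuating pattern described for information sharing.
b_x, b_xm = -0.30, 0.20
for m in (-1.0, 0.0, 1.0):
    print(f"moderator = {m:+.1f}: slope of X = {simple_slope(b_x, b_xm, m):+.2f}")
```

With these placeholders, the slope moves from −0.50 to −0.10 as the moderator rises, so the negative link weakens. Flipping the sign of `b_xm` would instead steepen it.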

4.5. fsQCA: Methodology and Solution Configurations

4.6. Results of the fsQCA

- Criterion 1 (Configuration Stability): The core causal recipe must remain invariant across both analytical stages, with no change to its constituent conditions.

- Criterion 2 (Conditional Transformation): At least one moderated configuration must show a change in condition status (core ⇄ peripheral) between stages.

- Criterion 3 (Moderator Centrality): In at least one qualifying configuration, the moderator must function as a core (rather than peripheral) present condition.

Assessment against these criteria:

- Moderator Centrality: Social media literacy emerged as a core present condition in Configuration 3 (Table A3), satisfying Criterion 3.

- Conditional Transformation: The solution terms were stable across stages (Criterion 1), but the anticipated transitions between core and peripheral conditions (Criterion 2) were not fully evidenced. Meeting two of the three criteria suggests that social media literacy operates as a selective moderator. It influences certain causal pathways without fundamentally restructuring condition hierarchies.
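Criteria 1 and 2 can be checked mechanically once the solution terms are written out. The sketch below follows the convention of Fiss (2011):

- A condition is core if it appears in both the parsimonious and the intermediate solution.
- A condition is peripheral if it appears only in the intermediate solution.

The sketch then tests stability and core/peripheral transitions between the stage without social media literacy and the stage with it. The term strings are copied from Appendices C and E, and the function names are hypothetical. The sketch is simplified: it treats a parsimonious term as nested in an intermediate term when its conditions form a subset of that term.

```python
from __future__ import annotations


def parse_term(term: str) -> frozenset[str]:
    """Split an fsQCA term such as '~C_NFMe*C_TrustSM' into its conditions."""
    return frozenset(part.strip() for part in term.split("*"))


def classify(intermediate: list[str], parsimonious: list[str]) -> dict[str, dict[str, str]]:
    """Label each condition of each intermediate term as core or peripheral.

    A condition is core when it belongs to a parsimonious term nested within
    the intermediate term, and peripheral otherwise (Fiss 2011 convention).
    """
    pars_terms = [parse_term(t) for t in parsimonious]
    labels: dict[str, dict[str, str]] = {}
    for term in intermediate:
        conditions = parse_term(term)
        core: set[str] = set()
        for p in pars_terms:
            if p <= conditions:
                core |= p
        labels[term] = {c: "core" if c in core else "peripheral" for c in sorted(conditions)}
    return labels


# Solution terms as listed in Appendix C (stage 1) and Appendix E (stage 2).
stage1 = classify(
    intermediate=["~C_Sseeking", "~C_InfoSharing", "~C_NFMe*C_TrustSM"],
    parsimonious=["~C_Sseeking", "~C_InfoSharing", "~C_NFMe*C_TrustSM"],
)
stage2 = classify(
    intermediate=["~C_Sseeking", "~C_InfoSharing", "~C_NFMe*C_TrustSM", "~C_Literacy"],
    parsimonious=["~C_Literacy", "~C_Sseeking", "~C_InfoSharing", "~C_NFMe*C_TrustSM"],
)

# Criterion 1: every stage-1 recipe reappears in stage 2 with the same conditions.
stable = all(term in stage2 and stage2[term].keys() == stage1[term].keys() for term in stage1)

# Criterion 2: a shared recipe changes at least one condition's core/peripheral status.
transitions = [
    (term, cond)
    for term in stage1
    if term in stage2
    for cond, status in stage1[term].items()
    if stage2[term].get(cond) != status
]

print("Criterion 1 (stability):", stable)  # True
print("Criterion 2 (transitions):", transitions or "none")  # none
```

In both appendices, the intermediate and parsimonious solutions contain the same terms, so every condition is classified as core in both stages. The sketch therefore reports stability but no core/peripheral transitions, in line with the assessment above.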

5. Discussion

5.1. Theoretical Contributions

5.2. Practical Implications

5.3. Limitations

6. Concluding Remark

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Construct | Code | Item | Sources |
|---|---|---|---|
| Fake news-sharing behavior | SharingFNs1_Deleted | I shared news on social media that I recognized as visibly fabricated or intentionally misleading. | [27], Wei, Gong [28], Apuke and Omar [34] |
| | SharingFNs2 | I shared news on social media that I strongly suspected was false but chose to post anyway. | |
| | SharingFNs3 | I shared exaggerated claims on social media, aware they were inflated, but did not check their accuracy. | |
| | SharingFNs4 | I intentionally shared exaggerated news on social media to attract attention, knowing it was misleading. | |
| | SharingFNs5 | I shared news on social media without assessing its credibility, even though I felt uncertain about its accuracy. | |
| | SharingFNs6 | Despite concerns that it might be inauthentic, I shared news on social media without confirming its source. | |
| | SharingFNs7 | I shared news on social media after only skimming it, even though I doubted its truthfulness. | |
| | SharingFNs8 | I shared news on social media, fully aware it likely contained inaccuracies or half-truths. | |
| News-find-me | NewsFM1 | When news is released, I rely on my friends to inform me of the essentials. | Wei, Gong [28], Apuke and Omar [34] |
| | NewsFM2 | Despite not actively keeping up with the news, I can stay well-informed. | |
| | NewsFM3 | I do not feel pressured to stay updated with the news because I trust that it will reach me. | |
| | NewsFM4 | I depend on the news shared by my friends, tailored to their interests or social media activities. | |
| Status seeking | SSeeking1 | Posting news on social media makes me feel significant. | Thompson, Wang [69]; Apuke and Omar [34] |
| | SSeeking2 | Sharing information on social media enhances my sense of status. | |
| | SSeeking3 | Utilizing social media for information dissemination boosts my professional image. | |
| | SSeeking4_Deleted | I post news on social media because my peers are pushing me to get involved. | |
| | SSeeking5 | I utilize social media platforms to share news and garner support and respect. | |
| Information Sharing | InfoSharing1 | I share content that may be valuable to others on social media. | Thompson, Wang [69]; Apuke and Omar [34] |
| | InfoSharing2 | I share information on social media to gather feedback on my findings. | |
| | InfoSharing3 | I share information on social media to keep others informed. | |
| | InfoSharing4 | I share practical knowledge and skills with others through social media. | |
| | InfoSharing5 | I use social media as a platform for easy self-expression. | |
| | InfoSharing6 | I share engaging content on social media that may interest or entertain others. | |
| | InfoSharing7_Deleted | I share personal insights about myself on social media. | |
| | InfoSharing8 | I use social media to offer a glimpse into my life and experiences. | |
| Trust in social media platforms | TrustSNS1 | Social networking platforms serve as dependable social networks. | Laato, Islam [29]; Apuke and Omar [34] |
| | TrustSNS2 | I trust social media sites to safeguard my privacy and personal information. | |
| | TrustSNS3 | I rely on social media platforms to protect my data from unauthorized access. | |
| | TrustSNS4 | I have confidence in social media platforms to fulfill their commitments. | |
| Social media literacy | SMLit1_Deleted | I possess the knowledge to create a social media account. | Wei, Gong [28] |
| | SMLit2_Deleted | I am familiar with the process of deleting or deactivating my social media account. | |
| | SMLit3_Deleted | I understand how to post content, such as photos, on my social media profiles. | |
| | SMLit4_Deleted | I know how to remove undesirable content from my social media accounts. | |
| | SMLit5 | I am knowledgeable about copyright laws governing social media platforms. | |
| | SMLit6 | I am adept at managing conflicts that arise on social media. | |
| | SMLit7 | I understand the dynamics and etiquette of social media platforms. | |
| | SMLit8 | I can verify the accuracy of information shared on social media using various sources. | |
| | SMLit9 | I can discern whether information on social media is true or false. | |
| | SMLit10_Deleted | Social media platforms like Facebook curate the content I see. | |
| | SMLit11 | The content I post on social media remains permanent. | |
| | SMLit12 | The advertisements I encounter on social media are tailored to my preferences. | |
| | SMLit13 | I utilize social media platforms to share news and garner support and respect. | |
| | SMLit14_Deleted | When engaging with social media, I become deeply absorbed. | |

Appendix B. Scattergrams of fsQCA Solutions

Appendix C

--- COMPLEX SOLUTION ---

frequency cutoff: 10

consistency cutoff: 0.815266

| Term | Raw coverage | Unique coverage | Consistency |
|---|---|---|---|
| ~C_Sseeking | 0.645516 | 0.0671741 | 0.686231 |
| ~C_InfoSharing | 0.720071 | 0.123247 | 0.71413 |
| ~C_NFMe*C_TrustSM | 0.427153 | 0.0210943 | 0.80281 |

solution coverage: 0.835441

solution consistency: 0.657643

--- PARSIMONIOUS SOLUTION ---

frequency cutoff: 10

consistency cutoff: 0.815266

| Term | Raw coverage | Unique coverage | Consistency |
|---|---|---|---|
| ~C_Sseeking | 0.645516 | 0.0671741 | 0.686231 |
| ~C_InfoSharing | 0.720071 | 0.123247 | 0.71413 |
| ~C_NFMe*C_TrustSM | 0.427153 | 0.0210943 | 0.80281 |

solution coverage: 0.835441

solution consistency: 0.657643

--- INTERMEDIATE SOLUTION ---

frequency cutoff: 10

consistency cutoff: 0.815266

| Term | Raw coverage | Unique coverage | Consistency |
|---|---|---|---|
| ~C_Sseeking | 0.645516 | 0.0671741 | 0.686231 |
| ~C_InfoSharing | 0.720071 | 0.123247 | 0.71413 |
| ~C_NFMe*C_TrustSM | 0.427153 | 0.0210943 | 0.80281 |

solution coverage: 0.835441

solution consistency: 0.657643
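The coverage and consistency figures above are Ragin's standard fuzzy-set measures. For a term X and outcome Y with case memberships x_i and y_i:

- Consistency = Σ min(x_i, y_i) / Σ x_i.
- Raw coverage = Σ min(x_i, y_i) / Σ y_i.

Negation (~X) is 1 − x, conjunction (X*Y) is the case-wise minimum, and a solution is the case-wise maximum of its terms. Unique coverage is the drop in solution coverage when one term is removed. The minimal Python sketch below implements these formulas. The membership scores are hypothetical and serve only to exercise them, and the condition names simply mirror the appendix labels.

```python
from typing import Sequence


def negate(x: Sequence[float]) -> list[float]:
    """Fuzzy negation ~X: membership 1 - x for each case."""
    return [1.0 - v for v in x]


def conj(*sets: Sequence[float]) -> list[float]:
    """Fuzzy conjunction X*Y: case-wise minimum."""
    return [min(values) for values in zip(*sets)]


def disj(*sets: Sequence[float]) -> list[float]:
    """Fuzzy disjunction (a solution of several terms): case-wise maximum."""
    return [max(values) for values in zip(*sets)]


def _overlap(x: Sequence[float], y: Sequence[float]) -> float:
    return sum(min(a, b) for a, b in zip(x, y))


def consistency(x: Sequence[float], y: Sequence[float]) -> float:
    """Sufficiency consistency of X for Y: sum(min(x, y)) / sum(x)."""
    return _overlap(x, y) / sum(x)


def coverage(x: Sequence[float], y: Sequence[float]) -> float:
    """Raw coverage of Y by X: sum(min(x, y)) / sum(y)."""
    return _overlap(x, y) / sum(y)


def unique_coverage(terms: list[list[float]], index: int, y: Sequence[float]) -> float:
    """Solution coverage minus the coverage of the solution without term `index`."""
    others = [t for i, t in enumerate(terms) if i != index]
    without = coverage(disj(*others), y) if others else 0.0
    return coverage(disj(*terms), y) - without


# Hypothetical calibrated memberships for four cases (not study data).
nfm = [0.2, 0.8, 0.4, 0.6]
trust = [0.9, 0.7, 0.3, 0.6]
sseek = [0.1, 0.5, 0.9, 0.3]
fnsb = [0.8, 0.4, 0.2, 0.7]

terms = {
    "~C_Sseeking": negate(sseek),
    "~C_NFMe*C_TrustSM": conj(negate(nfm), trust),
}
term_list = list(terms.values())
for i, (name, x) in enumerate(terms.items()):
    print(f"{name}: raw={coverage(x, fnsb):.3f} "
          f"unique={unique_coverage(term_list, i, fnsb):.3f} "
          f"consistency={consistency(x, fnsb):.3f}")
solution = disj(*term_list)
print(f"solution coverage={coverage(solution, fnsb):.3f}, "
      f"solution consistency={consistency(solution, fnsb):.3f}")
```

As in the appendix tables, solution consistency can fall below the consistency of individual terms. This happens because the solution's case-wise maximum enlarges the denominator Σ x_i.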

Appendix D

| Solutions | 1 | 2 | 3 |
|---|---|---|---|
| News find me | | | |
| Social media trust | | | |
| Information sharing | | | |
| Status seeking | | | |

solution coverage: 0.835441

solution consistency: 0.657643

Appendix E

--- COMPLEX SOLUTION ---

frequency cutoff: 2

consistency cutoff: 0.821985

| Term | Raw coverage | Unique coverage | Consistency |
|---|---|---|---|
| ~C_Literacy | 0.708607 | 0.0440198 | 0.708498 |
| ~C_Sseeking | 0.645514 | 0.0318702 | 0.686231 |
| ~C_InfoSharing | 0.720069 | 0.039423 | 0.714132 |
| ~C_NFMe*C_TrustSM | 0.427152 | 0.0135803 | 0.802809 |

solution coverage: 0.879461

solution consistency: 0.638975

--- PARSIMONIOUS SOLUTION ---

frequency cutoff: 2

consistency cutoff: 0.821985

| Term | Raw coverage | Unique coverage | Consistency |
|---|---|---|---|
| ~C_Literacy | 0.708607 | 0.0440198 | 0.708498 |
| ~C_Sseeking | 0.645514 | 0.0318702 | 0.686231 |
| ~C_InfoSharing | 0.720069 | 0.039423 | 0.714132 |
| ~C_NFMe*C_TrustSM | 0.427152 | 0.0135803 | 0.802809 |

solution coverage: 0.879461

solution consistency: 0.638975

--- INTERMEDIATE SOLUTION ---

frequency cutoff: 10

consistency cutoff: 0.815266

| Term | Raw coverage | Unique coverage | Consistency |
|---|---|---|---|
| ~C_Sseeking | 0.645514 | 0.0318702 | 0.686231 |
| ~C_InfoSharing | 0.720069 | 0.039423 | 0.714132 |
| ~C_NFMe*C_TrustSM | 0.427152 | 0.0135803 | 0.802809 |
| ~C_Literacy | 0.708607 | 0.0440198 | 0.708498 |

solution coverage: 0.879461

solution consistency: 0.638975
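Appendix C reports a frequency cutoff of 10. The complex and parsimonious solutions above use a cutoff of 2 and a slightly higher consistency cutoff. These thresholds act on the truth table before minimization:

1. Each case is assigned to the configuration (truth-table row) in which its membership exceeds 0.5.
2. Rows holding fewer cases than the frequency cutoff are set aside as logical remainders.
3. Each remaining row is coded as sufficient for the outcome when its consistency reaches the consistency cutoff.

The Python sketch below shows that step under these standard assumptions. It omits the Boolean minimization (for example, Quine–McCluskey) that then yields the complex, parsimonious and intermediate solutions. The data and the function name are hypothetical.

```python
from __future__ import annotations

from itertools import product


def truth_table(conditions: dict[str, list[float]], outcome: list[float],
                freq_cutoff: int, cons_cutoff: float) -> list[tuple[dict[str, int], int, float, int]]:
    """Build truth-table rows that pass the frequency cutoff.

    Each row is (configuration, number of cases, consistency, outcome code).
    """
    names = list(conditions)
    n_cases = len(outcome)
    rows = []
    for corner in product((0, 1), repeat=len(names)):
        # Case membership in this configuration: min over conditions of x (present) or 1 - x (absent).
        membership = [
            min(conditions[c][i] if bit else 1.0 - conditions[c][i] for c, bit in zip(names, corner))
            for i in range(n_cases)
        ]
        n_in_row = sum(1 for m in membership if m > 0.5)
        if n_in_row < max(freq_cutoff, 1):
            continue  # logical remainder: too few cases to evaluate
        cons = sum(min(m, y) for m, y in zip(membership, outcome)) / sum(membership)
        rows.append((dict(zip(names, corner)), n_in_row, cons, int(cons >= cons_cutoff)))
    return rows


# Hypothetical memberships for four cases and two conditions (not study data).
data = {"A": [0.9, 0.8, 0.2, 0.1], "B": [0.7, 0.3, 0.6, 0.2]}
y = [0.9, 0.6, 0.3, 0.2]
for config, n, cons, code in truth_table(data, y, freq_cutoff=1, cons_cutoff=0.8):
    print(config, n, round(cons, 3), code)
```

Raising `freq_cutoff` turns sparsely populated rows into remainders. This is one reason the two appendices can yield different solution sets from the same conditions.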

Appendix F

| Solutions | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| News-find-me | | | | |
| Social media trust | | | | |
| Information sharing | | | | |
| Status seeking | | | | |
| SM literacy | | | | |

Solution Coverage: 0.879461

Solution Consistency: 0.638975

References

- Zhou, Q.; Li, B.; Scheibenzuber, C.; Li, H. Fake news land? Exploring the impact of social media affordances on user behavioral responses: A mixed-methods research. Comput. Hum. Behav. 2023, 148, 107889. [Google Scholar] [CrossRef]

- Cano-Marin, E.; Mora-Cantallops, M.; Sanchez-Alonso, S. The power of big data analytics over fake news: A scientometric review of Twitter as a predictive system in healthcare. Technol. Forecast. Soc. Change 2023, 190, 122386. [Google Scholar] [CrossRef]

- Li, N. Analyzing the Complexity of Public Opinion Evolution on Weibo: A Super Network Model. J. Knowl. Econ. 2024, 16, 3404–3439. [Google Scholar] [CrossRef]

- Xie, Z.; Chiu, D.K.W.; Ho, K.K.W. The Role of Social Media as Aids for Accounting Education and Knowledge Sharing: Learning Effectiveness and Knowledge Management Perspectives in Mainland China. J. Knowl. Econ. 2024, 15, 2628–2655. [Google Scholar] [CrossRef] [PubMed]

- Chaudhuri, N.; Gupta, G.; Popovič, A. Do you believe it? Examining user engagement with fake news on social media platforms. Technol. Forecast. Soc. Change 2025, 212, 123950. [Google Scholar] [CrossRef]

- Hossain, M.A.; Quaddus, M.; Warren, M.; Akter, S.; Pappas, I. Are you a cyberbully on social media? Exploring the personality traits using a fuzzy-set configurational approach. Int. J. Inf. Manag. 2022, 66, 102537. [Google Scholar] [CrossRef]

- Lee, J.; Kim, K.; Park, G.; Cha, N. The role of online news and social media in preventive action in times of infodemic from a social capital perspective: The case of the COVID-19 pandemic in South Korea. Telemat. Inform. 2021, 64, 101691. [Google Scholar] [CrossRef]

- Raj, C.; Meel, P. People lie, actions Don’t! Modeling infodemic proliferation predictors among social media users. Technol. Soc. 2022, 68, 101930. [Google Scholar] [CrossRef]

- Pang, H.; Liu, J.; Lu, J. Tackling fake news in socially mediated public spheres: A comparison of Weibo and WeChat. Technol. Soc. 2022, 70, 102004. [Google Scholar] [CrossRef]

- Marsh, E.J.; Stanley, M.L. False beliefs: Byproducts of an adaptive knowledge base? In The Psychology of Fake News; Routledge: London, UK, 2020; pp. 131–146. [Google Scholar]

- Scheibenzuber, C.; Hofer, S.; Nistor, N. Designing for fake news literacy training: A problem-based undergraduate online-course. Comput. Hum. Behav. 2021, 121, 106796. [Google Scholar] [CrossRef]

- Shu, K.; Sliva, A.; Wang, S.; Tang, J.; Liu, H. Fake news detection on social media: A data mining perspective. ACM SIGKDD Explor. Newsl. 2017, 19, 22–36. [Google Scholar] [CrossRef]

- Velichety, S.; Shrivastava, U. Quantifying the impacts of online fake news on the equity value of social media platforms—Evidence from Twitter. Int. J. Inf. Manag. 2022, 64, 102474. [Google Scholar] [CrossRef]

- Carlson, M. Fake news as an informational moral panic: The symbolic deviancy of social media during the 2016 US presidential election. Inf. Commun. Soc. 2020, 23, 374–388. [Google Scholar] [CrossRef]

- Kantartopoulos, P.; Pitropakis, N.; Mylonas, A.; Kylilis, N. Exploring Adversarial Attacks and Defences for Fake Twitter Account Detection. Technologies 2020, 8, 64. [Google Scholar] [CrossRef]

- Olan, F.; Jayawickrama, U.; Arakpogun, E.O.; Suklan, J.; Liu, S. Fake news on Social Media: The Impact on Society. Inf. Syst. Front. 2024, 26, 443–458. [Google Scholar] [CrossRef]

- Byun, K.J.; Park, J.; Yoo, S.; Cho, M. Has the COVID-19 pandemic changed the influence of word-of-mouth on purchasing decisions? J. Retail. Consum. Serv. 2023, 74, 103411. [Google Scholar] [CrossRef]

- Bermes, A. Information overload and fake news sharing: A transactional stress perspective exploring the mitigating role of consumers’ resilience during COVID-19. J. Retail. Consum. Serv. 2021, 61, 102555. [Google Scholar] [CrossRef]

- Matthes, J.; Corbu, N.; Jin, S.; Theocharis, Y.; Schemer, C.; van Aelst, P.; Strömbäck, J.; Koc-Michalska, K.; Esser, F.; Aalberg, T.; et al. Perceived prevalence of misinformation fuels worries about COVID-19: A cross-country, multi-method investigation. Inf. Commun. Soc. 2023, 26, 3133–3156. [Google Scholar] [CrossRef]

- Liu, Y.; Liu, S.; Ye, D.; Tang, H.; Wang, F. Dynamic impact of negative public sentiment on agricultural product prices during COVID-19. J. Retail. Consum. Serv. 2022, 64, 102790. [Google Scholar] [CrossRef]

- Harris, S.; Hadi, H.J.; Ahmad, N.; Alshara, M.A. Fake News Detection Revisited: An Extensive Review of Theoretical Frameworks, Dataset Assessments, Model Constraints, and Forward-Looking Research Agendas. Technologies 2024, 12, 222. [Google Scholar] [CrossRef]

- Duffy, A.; Tandoc, E.; Ling, R. Too good to be true, too good not to share: The social utility of fake news. Inf. Commun. Soc. 2020, 23, 1965–1979. [Google Scholar] [CrossRef]

- Wasserman, H.; Madrid-Morales, D. An Exploratory Study of “Fake News” and Media Trust in Kenya, Nigeria and South Africa. Afr. J. Stud. 2019, 40, 107–123. [Google Scholar] [CrossRef]

- Masavah, V.M.; Turpin, M. Fake News in Developing Countries: Drivers, Mechanisms and Consequences; Springer Nature: Cham, Switzerland, 2024. [Google Scholar]

- Nenno, S.; Puschmann, C. All The (Fake) News That’s Fit to Share? News Values in Perceived Misinformation across Twenty-Four Countries. Int. J. Press/Politics 2025. [Google Scholar] [CrossRef]

- Talwar, S.; Dhir, A.; Kaur, P.; Zafar, N.; Alrasheedy, M. Why do people share fake news? Associations between the dark side of social media use and fake news sharing behavior. J. Retail. Consum. Serv. 2019, 51, 72–82. [Google Scholar] [CrossRef]

- Apuke, O.D.; Omar, B. Fake news and COVID-19: Modelling the predictors of fake news sharing among social media users. Telemat. Inform. 2021, 56, 101475. [Google Scholar] [CrossRef]

- Wei, L.; Gong, J.; Xu, J.; Abidin, N.E.Z.; Apuke, O.D. Do social media literacy skills help in combating fake news spread? Modelling the moderating role of social media literacy skills in the relationship between rational choice factors and fake news sharing behaviour. Telemat. Inform. 2023, 76, 101910. [Google Scholar] [CrossRef]

- Laato, S.; Islam, A.K.M.N.; Islam, M.N.; Whelan, E. What drives unverified information sharing and cyberchondria during the COVID-19 pandemic? Eur. J. Inf. Syst. 2020, 29, 288–305. [Google Scholar] [CrossRef]

- Gray, R.; Owen, D.; Adams, C. Some theories for social accounting?: A review essay and a tentative pedagogic categorisation of theorisations around social accounting. In Sustainability, Environmental Performance and Disclosures; Freedman, M., Jaggi, B., Eds.; Emerald Group Publishing Limited: Leeds, UK, 2009; pp. 1–54. [Google Scholar]

- Fernando, S.; Lawrence, S. A theoretical framework for CSR practices: Integrating legitimacy theory, stakeholder theory and institutional theory. J. Theor. Account. Res. 2014, 10, 149–178. [Google Scholar]

- Apuke, O.D.; Omar, B. Modelling the antecedent factors that affect online fake news sharing on COVID-19: The moderating role of fake news knowledge. Health Educ. Res. 2020, 35, 490–503. [Google Scholar] [CrossRef]

- Wang, X.; Chao, F.; Yu, G.; Zhang, K. Factors influencing fake news rebuttal acceptance during the COVID-19 pandemic and the moderating effect of cognitive ability. Comput. Hum. Behav. 2022, 130, 107174. [Google Scholar] [CrossRef]

- Apuke, O.D.; Omar, B. Social media affordances and information abundance: Enabling fake news sharing during the COVID-19 health crisis. Health Inform. J. 2021, 27, 14604582211021470. [Google Scholar] [CrossRef]

- Talwar, S.; Dhir, A.; Singh, D.; Virk, G.S.; Salo, J. Sharing of fake news on social media: Application of the honeycomb framework and the third-person effect hypothesis. J. Retail. Consum. Serv. 2020, 57, 102197. [Google Scholar] [CrossRef]

- Balakrishnan, V.; Ng, K.S.; Rahim, H.A. To share or not to share—The underlying motives of sharing fake news amidst the COVID-19 pandemic in Malaysia. Technol. Soc. 2021, 66, 101676. [Google Scholar] [CrossRef]

- Ahmed, S. Disinformation Sharing Thrives with Fear of Missing Out among Low Cognitive News Users: A Cross-national Examination of Intentional Sharing of Deep Fakes. J. Broadcast. Electron. Media 2022, 66, 89–109. [Google Scholar] [CrossRef]

- Aoun Barakat, K.; Dabbous, A.; Tarhini, A. An empirical approach to understanding users’ fake news identification on social media. Online Inf. Rev. 2021, 45, 1080–1096. [Google Scholar] [CrossRef]

- Kumar, A.; Shankar, A.; Behl, A.; Arya, V.; Gupta, N. Should I share it? Factors influencing fake news-sharing behaviour: A behavioural reasoning theory perspective. Technol. Forecast. Soc. Change 2023, 193, 122647. [Google Scholar] [CrossRef]

- Ostrom, E. A Behavioral Approach to the Rational Choice Theory of Collective Action: Presidential Address, American Political Science Association, 1997. Am. Political Sci. Rev. 1998, 92, 1–22. [Google Scholar] [CrossRef]

- Driscoll, A.; Krook, M.L. Feminism and rational choice theory. Eur. Political Sci. Rev. 2012, 4, 195–216. [Google Scholar] [CrossRef]

- Blumler, J.G.; Katz, E. The Uses of Mass Communications: Current Perspectives on Gratifications Research; Sage: Beverly Hills, CA, USA, 1974. [Google Scholar]

- Halpern, D.; Valenzuela, S.; Katz, J.; Miranda, J.P. From Belief in Conspiracy Theories to Trust in Others: Which Factors Influence Exposure, Believing and Sharing Fake News; Springer International Publishing: Cham, Switzerland, 2019. [Google Scholar]

- Ragin, C.C. Redesigning Social Inquiry: Fuzzy Sets and Beyond; University of Chicago Press: Chicago, IL, USA, 2009. [Google Scholar]

- Fiss, P.C. A set-theoretic approach to organizational configurations. Acad. Manag. Rev. 2007, 32, 1180–1198. [Google Scholar] [CrossRef]

- Browning, G.; Webster, F.; Halcli, A. Understanding contemporary society: Theories of the present. In Understanding Contemporary Society; Sage Publications: Singapore, 1999; pp. 1–520. [Google Scholar]

- Hopmann, D.N.; Wonneberger, A.; Shehata, A.; Höijer, J. Selective Media Exposure and Increasing Knowledge Gaps in Swiss Referendum Campaigns. Int. J. Public Opin. Res. 2015, 28, 73–95. [Google Scholar] [CrossRef]

- de Zúñiga, H.G.; Cheng, Z. Origin and evolution of the News Finds Me perception: Review of theory and effects. Prof. Inf. 2021, 30, 1–17. [Google Scholar] [CrossRef]

- Gil de Zúñiga, H.; Diehl, T. News finds me perception and democracy: Effects on political knowledge, political interest, and voting. New Media Soc. 2019, 21, 1253–1271. [Google Scholar] [CrossRef]

- Pentina, I.; Tarafdar, M. From “information” to “knowing”: Exploring the role of social media in contemporary news consumption. Comput. Hum. Behav. 2014, 35, 211–223. [Google Scholar] [CrossRef]

- Toff, B.; Nielsen, R.K. “I Just Google It”: Folk Theories of Distributed Discovery. J. Commun. 2018, 68, 636–657. [Google Scholar] [CrossRef]

- Gil de Zúñiga, H.; Weeks, B.; Ardèvol-Abreu, A. Effects of the News-Finds-Me Perception in Communication: Social Media Use Implications for News Seeking and Learning About Politics. J. Comput.-Mediat. Commun. 2017, 22, 105–123. [Google Scholar] [CrossRef]

- Ruggiero, T.E. Uses and gratifications theory in the 21st century. Mass Commun. Soc. 2000, 3, 3–37. [Google Scholar] [CrossRef]

- Müller, T.; Husain, M.; Apps, M.A. Preferences for seeking effort or reward information bias the willingness to work. Sci. Rep. 2022, 12, 19486. [Google Scholar] [CrossRef] [PubMed]

- Caplin, A.; Dean, M. Revealed Preference, Rational Inattention, and Costly Information Acquisition. Am. Econ. Rev. 2014, 105, 2183–2203. [Google Scholar] [CrossRef]

- Chong, D.; Mullinix, K. Rational Choice and Information Processing. In The Cambridge Handbook of Political Psychology; Cambridge University Press: Cambridge, UK, 2022; pp. 118–138. [Google Scholar]

- Tandoc, E.C.; Ling, R.; Westlund, O.; Duffy, A.; Goh, D.; Wei, L.Z. Audiences’ acts of authentication in the age of fake news: A conceptual framework. New Media Soc. 2018, 20, 2745–2763. [Google Scholar] [CrossRef]

- Omar, B.; Dequan, W. Watch, Share or Create: The Influence of Personality Traits and User Motivation on TikTok Mobile Video Usage. 2020. Available online: https://www.learntechlib.org/p/216454/?nl=1 (accessed on 23 March 2024).

- Fiss, P.C. Building Better Causal Theories: A Fuzzy Set Approach to Typologies in Organization Research. Acad. Manag. J. 2011, 54, 393–420. [Google Scholar] [CrossRef]

- McLoughlin, K.L.; Brady, W.J. Human-algorithm interactions help explain the spread of misinformation. Curr. Opin. Psychol. 2024, 56, 101770. [Google Scholar] [CrossRef]

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An integrative model of organizational trust. Acad. Manag. Rev. 1995, 20, 709–734. [Google Scholar] [CrossRef]

- DuBois, T.; Golbeck, J.; Srinivasan, A. Predicting Trust and Distrust in Social Networks. In Proceedings of the 2011 IEEE Third International Conference on Privacy, Security, Risk and Trust and 2011 IEEE Third International Conference on Social Computing, Boston, MA, USA, 9–11 October 2011. [Google Scholar]

- Grabner-Kräuter, S.; Bitter, S. Trust in online social networks: A multifaceted perspective. Forum Soc. Econ. 2015, 44, 48–68. [Google Scholar] [CrossRef]

- Warner-Søderholm, G.; Bertsch, A.; Sawe, E.; Lee, D.; Wolfe, T.; Meyer, J.; Engel, J.; Fatilua, U.N. Who trusts social media? Comput. Hum. Behav. 2018, 81, 303–315. [Google Scholar] [CrossRef]

- Lin, S.-W.; Liu, Y.-C. The effects of motivations, trust, and privacy concern in social networking. Serv. Bus. 2012, 6, 411–424. [Google Scholar] [CrossRef]

- Yuan, Y.-P.; Tan, G.W.-H.; Ooi, K.-B. What shapes mobile fintech consumers’ post-adoption experience? A multi-analytical PLS-ANN-fsQCA perspective. Technol. Forecast. Soc. Change 2025, 217, 124162. [Google Scholar] [CrossRef]

- Metzger, M.J.; Flanagin, A.J. Credibility and trust of information in online environments: The use of cognitive heuristics. J. Pragmat. 2013, 59, 210–220. [Google Scholar] [CrossRef]

- Salehan, M.; Kim, D.J.; Koo, C. A study of the effect of social trust, trust in social networking services, and sharing attitude, on two dimensions of personal information sharing behavior. J. Supercomput. 2018, 74, 3596–3619. [Google Scholar] [CrossRef]

- Thompson, N.; Wang, X.; Daya, P. Determinants of News Sharing Behavior on Social Media. J. Comput. Inf. Syst. 2020, 60, 593–601. [Google Scholar] [CrossRef]

- Torres, R.; Gerhart, N.; Negahban, A. Epistemology in the era of fake news: An exploration of information verification behaviors among social networking site users. ACM SIGMIS Database DATABASE Adv. Inf. Syst. 2018, 49, 78–97. [Google Scholar] [CrossRef]

- Freiling, I.; Waldherr, A. Why Trusting Whom? Motivated Reasoning and Trust in the Process of Information Evaluation. In Trust and Communication: Findings and Implications of Trust Research; Blöbaum, B., Ed.; Springer International Publishing: Cham, Switzerland, 2021; pp. 83–97. [Google Scholar]

- Guess, A.; Nagler, J.; Tucker, J. Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Sci. Adv. 2019, 5, eaau4586. [Google Scholar] [CrossRef]

- Paletz, S.B.F.; Auxier, B.E.; Golonka, E.M. Motivation to Share. In A Multidisciplinary Framework of Information Propagation Online; Springer International Publishing: Cham, Switzerland, 2019; pp. 37–45. [Google Scholar]

- Oh, S.; Syn, S.Y. Motivations for sharing information and social support in social media: A comparative analysis of Facebook, Twitter, Delicious, YouTube, and Flickr. J. Assoc. Inf. Sci. Technol. 2015, 66, 2045–2060.

- McGonagle, T. “Fake news”: False fears or real concerns? Neth. Q. Hum. Rights 2017, 35, 203–209.

- Narwal, B. Fake news in digital media. In Proceedings of the 2018 International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), Greater Noida, India, 12–13 October 2018.

- Tandoc, E.C.; Jenkins, J.; Craft, S. Fake News as a Critical Incident in Journalism. J. Pract. 2019, 13, 673–689.

- Chen, X.; Sin, S.-C.J.; Theng, Y.-L.; Lee, C.S. Why Students Share Misinformation on Social Media: Motivation, Gender, and Study-level Differences. J. Acad. Librariansh. 2015, 41, 583–592.

- Chen, X.; Sin, S.-C.J.; Theng, Y.-L.; Lee, C.S. Why do social media users share misinformation? In Proceedings of the 15th ACM/IEEE-CS Joint Conference on Digital Libraries, Knoxville, TN, USA, 21–25 June 2015.

- Allcott, H.; Gentzkow, M. Social media and fake news in the 2016 election. J. Econ. Perspect. 2017, 31, 211–236.

- Igbinovia, M.O.; Okuonghae, O.; Adebayo, J.O. Information literacy competence in curtailing fake news about the COVID-19 pandemic among undergraduates in Nigeria. Ref. Serv. Rev. 2021, 49, 3–18.

- Pennycook, G.; Epstein, Z.; Mosleh, M.; Arechar, A.A.; Eckles, D.; Rand, D.G. Shifting attention to accuracy can reduce misinformation online. Nature 2021, 592, 590–595.

- Anderson, C.; Hildreth, J.A.D.; Howland, L. Is the desire for status a fundamental human motive? A review of the empirical literature. Psychol. Bull. 2015, 141, 574–601.

- Delhey, J.; Schneickert, C.; Hess, S.; Aplowski, A. Who values status seeking? A cross-European comparison of social gradients and societal conditions. Eur. Soc. 2022, 24, 29–60.

- Cruz, J.A.B., Jr.; Caguiat, M.R.R.; Ebardo, R.A. Investigating the Design of Social Networking Sites to Examine the Spread of Political Misinformation Using the Uses and Gratifications Theory. Int. J. Res. Sci. Innov. 2024, 11, 1–11.

- Haridakis, P. Uses and Gratifications. In The International Encyclopedia of Media Studies; Routledge: London, UK, 2019.

- Bergström, A.; Jervelycke Belfrage, M. News in Social Media. Digit. J. 2018, 6, 583–598.

- Ma, L.; Lee, C.S.; Goh, D.H.-L. Understanding news sharing in social media. Online Inf. Rev. 2014, 38, 598–615.

- Ali, K.; Li, C.; Zain-Ul-Abdin, K.; Muqtadir, S.A. The effects of emotions, individual attitudes towards vaccination, and social endorsements on perceived fake news credibility and sharing motivations. Comput. Hum. Behav. 2022, 134, 107307.

- Lee, C.S.; Ma, L.; Goh, D.H.-L. Why Do People Share News in Social Media? Springer: Berlin/Heidelberg, Germany, 2011.

- Sharma, K.; Qian, F.; Jiang, H.; Ruchansky, N.; Zhang, M.; Liu, Y. Combating fake news: A survey on identification and mitigation techniques. ACM Trans. Intell. Syst. Technol. (TIST) 2019, 10, 1–42.

- Kim, A.; Dennis, A.R. Says who? The effects of presentation format and source rating on fake news in social media. MIS Q. 2019, 43, 1025–1040.

- Schreurs, L.; Vandenbosch, L. Introducing the Social Media Literacy (SMILE) model with the case of the positivity bias on social media. J. Child. Media 2021, 15, 320–337.

- Festl, R. Social media literacy & adolescent social online behavior in Germany. J. Child. Media 2021, 15, 249–271.

- Guess, A.M.; Lerner, M.; Lyons, B.; Montgomery, J.M.; Nyhan, B.; Reifler, J.; Sircar, N. A digital media literacy intervention increases discernment between mainstream and false news in the United States and India. Proc. Natl. Acad. Sci. USA 2020, 117, 15536–15545.

- Lee, N.M. Fake news, phishing, and fraud: A call for research on digital media literacy education beyond the classroom. Commun. Educ. 2018, 67, 460–466.

- Moore, R.C.; Hancock, J.T. A digital media literacy intervention for older adults improves resilience to fake news. Sci. Rep. 2022, 12, 6008.

- Apuke, O.D.; Omar, B.; Tunca, E.A. Literacy Concepts as an Intervention Strategy for Improving Fake News Knowledge, Detection Skills, and Curtailing the Tendency to Share Fake News in Nigeria. Child Youth Serv. 2023, 44, 88–103.

- Musgrove, A.T.; Powers, J.R.; Rebar, L.C.; Musgrove, G.J. Real or fake? Resources for teaching college students how to identify fake news. Coll. Undergrad. Libr. 2018, 25, 243–260.

- Goldani, M.H.; Safabakhsh, R.; Momtazi, S. Convolutional neural network with margin loss for fake news detection. Inf. Process. Manag. 2021, 58, 102418.

- Melchior, C.; Warin, T.; Oliveira, M. An investigation of the COVID-19-related fake news sharing on Facebook using a mixed methods approach. Technol. Forecast. Soc. Change 2025, 213, 123969.

- Lisi, G. Is Education the Best Tool to Fight Disinformation? J. Knowl. Econ. 2024, 15, 15996–16012.

- Becker, T.E. Potential Problems in the Statistical Control of Variables in Organizational Research: A Qualitative Analysis with Recommendations. Organ. Res. Methods 2005, 8, 274–289.

- Becker, T.E.; Atinc, G.; Breaugh, J.A.; Carlson, K.D.; Edwards, J.R.; Spector, P.E. Statistical control in correlational studies: 10 essential recommendations for organizational researchers. J. Organ. Behav. 2016, 37, 157–167.

- Shiau, W.-L.; Chau, P.Y.; Thatcher, J.B.; Teng, C.-I.; Dwivedi, Y.K. Have we controlled properly? Problems with and recommendations for the use of control variables in information systems research. Int. J. Inf. Manag. 2024, 74, 102702.

- Carlson, K.D.; Wu, J. The Illusion of Statistical Control: Control Variable Practice in Management Research. Organ. Res. Methods 2012, 15, 413–435.

- Gao, L.; Waechter, K.A. Examining the role of initial trust in user adoption of mobile payment services: An empirical investigation. Inf. Syst. Front. 2017, 19, 525–548.

- Elena-Bucea, A.; Cruz-Jesus, F.; Oliveira, T.; Coelho, P.S. Assessing the Role of Age, Education, Gender and Income on the Digital Divide: Evidence for the European Union. Inf. Syst. Front. 2021, 23, 1007–1021.

- Haight, M.; Quan-Haase, A.; Corbett, B.A. Revisiting the digital divide in Canada: The impact of demographic factors on access to the internet, level of online activity, and social networking site usage. In Current Research on Information Technologies and Society; Routledge: London, UK, 2016; pp. 113–129.

- Booker, C.L.; Kelly, Y.J.; Sacker, A. Gender differences in the associations between age trends of social media interaction and well-being among 10-15 year olds in the UK. BMC Public Health 2018, 18, 321.

- Nas, E.; de Kleijn, R. Conspiracy thinking and social media use are associated with ability to detect deepfakes. Telemat. Inform. 2024, 87, 102093.

- Gefen, D.; Straub, D.; Boudreau, M.-C. Structural equation modeling and regression: Guidelines for research practice. Commun. Assoc. Inf. Syst. 2000, 4, 7.

- Joreskog, K.G.; Wold, H. The ML and PLS Techniques for Modeling with Latent Variables: Historical and Comparative Aspects. In Systems Under Indirect Observation: Causality, Structure, Prediction, Part I; Joreskog, K.G., Wold, H., Eds.; Elsevier: Amsterdam, The Netherlands, 1982; pp. 263–270.

- Davcik, N.S. The use and misuse of structural equation modeling in management research. J. Adv. Manag. Res. 2014, 11, 47–81.

- Pappas, I.O.; Bley, K. Fuzzy-set qualitative comparative analysis: Introduction to a configurational approach. In Researching and Analysing Business; Routledge: London, UK, 2023; pp. 362–376.

- Urry, J. The Complexity Turn. Theory Cult. Soc. 2005, 22, 1–14.

- Park, Y.; Mithas, S. Organized complexity of digital business strategy: A configurational perspective. MIS Q. 2020, 44, 85–127.

- Pappas, I.O.; Woodside, A.G. Fuzzy-set Qualitative Comparative Analysis (fsQCA): Guidelines for research practice in Information Systems and marketing. Int. J. Inf. Manag. 2021, 58, 102310.

- Woodside, A.G. Embrace•perform•model: Complexity theory, contrarian case analysis, and multiple realities. J. Bus. Res. 2014, 67, 2495–2503.

- Khedhaouria, A.; Cucchi, A. Technostress creators, personality traits, and job burnout: A fuzzy-set configurational analysis. J. Bus. Res. 2019, 101, 349–361.

- Kumar, S.; Sahoo, S.; Lim, W.M.; Kraus, S.; Bamel, U. Fuzzy-set qualitative comparative analysis (fsQCA) in business and management research: A contemporary overview. Technol. Forecast. Soc. Change 2022, 178, 121599.

- Humprecht, E. Where ‘fake news’ flourishes: A comparison across four Western democracies. Inf. Commun. Soc. 2019, 22, 1973–1988.

- Jordan, P.J.; Troth, A.C. Common method bias in applied settings: The dilemma of researching in organizations. Aust. J. Manag. 2020, 45, 3–14.

- Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.-Y.; Podsakoff, N.P. Common method biases in behavioral research: A critical review of the literature and recommended remedies. J. Appl. Psychol. 2003, 88, 879.

- Podsakoff, P.M.; MacKenzie, S.B.; Podsakoff, N.P. Sources of method bias in social science research and recommendations on how to control it. Annu. Rev. Psychol. 2012, 63, 539–569.

- George, D. SPSS for Windows Step by Step: A Simple Study Guide and Reference, 17.0 Update, 10/e; Pearson Education India: Tamil Nadu, India, 2011.

- Johnson, R.A.; Wichern, D.W. Applied Multivariate Statistical Analysis, 6th ed.; Pearson: Upper Saddle River, NJ, USA, 2007.

- Byrne, B.M. Structural Equation Modeling with Mplus: Basic Concepts, Applications, and Programming; Routledge: London, UK, 2013.

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E.; Tatham, R.L. Multivariate Data Analysis; Pearson New International Edition; Pearson Education Limited: Harlow, Essex, UK, 2014.

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E.; Tatham, R.L. Multivariate Data Analysis, 6th ed.; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2009.

- Voorhees, C.M.; Brady, M.K.; Calantone, R.; Ramirez, E. Discriminant validity testing in marketing: An analysis, causes for concern, and proposed remedies. J. Acad. Mark. Sci. 2016, 44, 119–134.

- Hu, L.-t.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. 1999, 6, 1–55.

- Neter, J. Applied Linear Statistical Models, 4th ed.; Irwin: Chicago, IL, USA, 1996.

- Kock, N. Common method bias in PLS-SEM: A full collinearity assessment approach. Int. J. E-Collab. (IJEC) 2015, 11, 1–10.

- Woodside, A.G. Proposing a new logic for data analysis in marketing and consumer behavior: Case study research of large-N survey data for estimating algorithms that accurately profile X (extremely high-use) consumers. J. Glob. Sch. Mark. Sci. 2012, 22, 277–289.

- Castelló-Sirvent, F.; Pinazo-Dallenbach, P. Corruption Shock in Mexico: fsQCA Analysis of Entrepreneurial Intention in University Students. Mathematics 2021, 9, 1702.

- Duşa, A. QCA with R: A Comprehensive Resource; Springer: Berlin/Heidelberg, Germany, 2018.

- Ma, T.; Cheng, Y.; Guan, Z.; Li, B.; Hou, F.; Lim, E.T.K. Theorising moderation in the configurational approach: A guide for identifying and interpreting moderating influences in QCA. Inf. Syst. J. 2024, 34, 762–787.

- Porcuna-Enguix, L.; Bustos-Contell, E.; Serrano-Madrid, J.; Labatut-Serer, G. Constructing the Audit Risk Assessment by the Audit Team Leader When Planning: Using Fuzzy Theory. Mathematics 2021, 9, 3065.

- Buchanan, T. Why do people spread false information online? The effects of message and viewer characteristics on self-reported likelihood of sharing social media disinformation. PLoS ONE 2020, 15, e0239666.

- Armeen, I.; Niswanger, R.; Tian, C. Combating Fake News Using Implementation Intentions. Inf. Syst. Front. 2024, 27, 1107–1120.

- Zhang, L.; Iyendo, T.O.; Apuke, O.D.; Gever, C.V. Experimenting the effect of using visual multimedia intervention to inculcate social media literacy skills to tackle fake news. J. Inf. Sci. 2025, 51, 135–145.

- Ma, R.; Wang, X.; Yang, G.-R. Fighting fake news in the age of generative AI: Strategic insights from multi-stakeholder interactions. Technol. Forecast. Soc. Change 2025, 216, 124125.

| Independent Variables | Moderator/Mediator | Dependent Variable(s) | Theories | Authors |
|---|---|---|---|---|
| Individual social networking sites dependency; Parasocial interaction; Information seeking; Perceived herd; Social tie strength; Status-seeking. | Fake news knowledge (Mediator) | Fake news sharing. | Dependency theory; Social impact theory; Social networking sites; Uses and gratification theory. | Apuke and Omar [32] |
| Information sharing; News finds me; Status seeking; Trust in social networking sites. | Social media literacy skills (Moderator) | | Rational choice theory. | Wei, Gong [28] |
| Status-seeking; Socializing; Entertainment; Pastime; Information sharing. | News quality; Source credibility (Moderators) | | Uses and gratification theory; Information adoption model. | Thompson, Wang [33] |
| Information overload; Information sharing; News-find-me perception; Self-expression; Status seeking; Trust in online information. | | | Affordance theory. | Apuke and Omar [34] |
| Active corrective action on fake news; Authenticating news before sharing; Instantaneous sharing of fake news to create awareness; Passive corrective action on fake news. | | Sharing fake news related to lack of time; Sharing fake news related to religiosity. | Third-person effect hypothesis; Honeycomb framework. | Talwar, Dhir [35] |
| Authoritativeness of source; Consensus indicators; Demographic variables; Digital literacy; Personality. | | Spread of false information. | | |
| Information overload; Online information trust; Perceived severity; Perceived susceptibility. | | Unverified information sharing; Cyberchondria. | Cognitive load theory; Health belief model; Protection-motivation theory. | Laato, Islam [29] |
| Technological factors; Fear of missing out; Entertainment; Ignorance; Pastime; Altruism. | | Fake news sharing. | Self-determination theory; Socio-cultural-psychological-technology model; Uses and gratification theory. | Balakrishnan, Ng [36] |
| Social media news use; Fear of missing out. | Cognitive ability (Mediator) | Deepfake sharing. | ---- | Ahmed [37] |
| Usage intensity; Social credibility; Expertise. | Trust in social media (Mediator); Verification behavior (Mediator) | Fake news identification. | Referential theory of the illusory truth effect; Theory of frequency and referential validity. | Aoun Barakat, Dabbous [38] |
| Argument quality; Information readability; Source authority; Source influence. | Cognitive ability (Mediator) | Fake news rebuttals. | Elaboration likelihood model; Rebuttal acceptance. | Wang, Chao [33] |
| Authenticating news before sharing; Fear of missing out (FOMO); Government regulation; Information quality; Joy of missing out (JOMO); Source credibility. | Perceived believability (Mediator); Social status-seeking and cognitive influence (Moderators) | Intention to share fake news. | Behavioral reasoning theory. | Kumar, Shankar [39] |

| Variables | Items | Loadings | CR | AVE | MSV | MaxR(H) | VIF |
|---|---|---|---|---|---|---|---|
| News-find-me | NewsFM4 | 0.696 | 0.825 | 0.541 | 0.240 | 0.828 | 1.403 |
| | NewsFM3 | 0.778 | | | | | |
| | NewsFM2 | 0.757 | | | | | |
| | NewsFM1 | 0.708 | | | | | |
| Social media trust | TrustSNS4 | 0.693 | 0.836 | 0.561 | 0.240 | 0.840 | 1.297 |
| | TrustSNS3 | 0.787 | | | | | |
| | TrustSNS2 | 0.778 | | | | | |
| | TrustSNS1 | 0.734 | | | | | |
| Social media literacy | SMLit13 | 0.720 | 0.922 | 0.597 | 0.332 | 0.925 | 1.565 |
| | SMLit12 | 0.716 | | | | | |
| | SMLit11 | 0.818 | | | | | |
| | SMLit9 | 0.814 | | | | | |
| | SMLit8 | 0.768 | | | | | |
| | SMLit7 | 0.801 | | | | | |
| | SMLit6 | 0.788 | | | | | |
| | SMLit5 | 0.748 | | | | | |
| Status-seeking | SSeeking5 | 0.696 | 0.854 | 0.594 | 0.256 | 0.859 | 1.383 |
| | SSeeking3 | 0.804 | | | | | |
| | SSeeking2 | 0.782 | | | | | |
| | SSeeking1 | 0.797 | | | | | |
| Information sharing | InfoSharing8 | 0.708 | 0.912 | 0.597 | 0.332 | 0.913 | 1.635 |
| | InfoSharing6 | 0.778 | | | | | |
| | InfoSharing5 | 0.784 | | | | | |
| | InfoSharing4 | 0.782 | | | | | |
| | InfoSharing3 | 0.780 | | | | | |
| | InfoSharing2 | 0.779 | | | | | |
| | InfoSharing1 | 0.795 | | | | | |
| Fake news-sharing | SharingFNs8 | 0.837 | 0.922 | 0.631 | 0.093 | 0.929 | |
| | SharingFNs7 | 0.840 | | | | | |
| | SharingFNs6 | 0.836 | | | | | |
| | SharingFNs5 | 0.819 | | | | | |
| | SharingFNs4 | 0.803 | | | | | |
| | SharingFNs3 | 0.770 | | | | | |
| | SharingFNs2 | 0.634 | | | | | |
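The CR, AVE, and MaxR(H) columns can be recomputed directly from the standardized loadings, which offers a quick audit of a measurement table like this one. The following is a minimal sketch, assuming the conventional formulas for composite reliability and average variance extracted (as in Fornell and Larcker) and assuming that MaxR(H) denotes Hancock and Mueller's maximal reliability (coefficient H); the function names are hypothetical and not taken from any particular package. Applied to the four News-find-me loadings, it reproduces the reported CR (0.825), AVE (0.541), and MaxR(H) (0.828) to three decimals.

```python
from typing import Sequence


def composite_reliability(loadings: Sequence[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1.0 - l * l for l in loadings)  # error variance of each standardized item
    return total ** 2 / (total ** 2 + error)


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE = mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def max_reliability_h(loadings: Sequence[float]) -> float:
    """Coefficient H (assumed meaning of MaxR(H)): 1 / (1 + 1 / sum(l^2 / (1 - l^2)))."""
    ratio_sum = sum(l * l / (1.0 - l * l) for l in loadings)
    return 1.0 / (1.0 + 1.0 / ratio_sum)


# Standardized loadings of NewsFM4, NewsFM3, NewsFM2, NewsFM1 from the table.
nfm = [0.696, 0.778, 0.757, 0.708]
print(f"CR = {composite_reliability(nfm):.3f}")
print(f"AVE = {average_variance_extracted(nfm):.3f}")
print(f"MaxR(H) = {max_reliability_h(nfm):.3f}")
```

The same three functions can be applied to any other row group in the table by passing that construct's item loadings.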

| Focal Variables | Mean | Std. Deviation | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|---|
| 1. News-find-me | 2.9981 | 0.88648 | 0.735 | 0.490 *** | 0.366 *** | 0.418 *** | 0.442 *** | −0.080 * |
| 2. Social media trust | 2.8720 | 0.87430 | 0.500 | 0.749 | 0.374 *** | 0.366 *** | 0.288 *** | 0.086 * |
| 3. Social media literacy | 2.5278 | 0.93742 | 0.385 | 0.384 | 0.772 | 0.447 *** | 0.576 *** | −0.208 *** |
| 4. Status-seeking | 2.8160 | 0.95608 | 0.438 | 0.380 | 0.459 | 0.771 | 0.506 *** | −0.112 ** |
| 5. Information sharing | 2.6208 | 0.95060 | 0.443 | 0.299 | 0.598 | 0.515 | 0.773 | −0.305 *** |
| 6. Fake news-sharing | 3.3573 | 1.00949 | 0.064 | 0.101 | 0.200 | 0.098 | 0.286 | 0.794 |
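The diagonal of this matrix matches the square root of each construct's AVE (for example, √0.541 ≈ 0.735 for News-find-me), and the MSV values reported in the measurement table equal the largest squared correlation in the starred upper triangle (0.490² ≈ 0.240). The sketch below reruns the Fornell-Larcker check on those reported numbers: a construct passes when √AVE exceeds its largest absolute correlation with any other construct, which is equivalent to AVE exceeding MSV. It assumes the starred upper-triangle entries are the inter-construct correlations and deliberately ignores the unstarred lower-triangle values, whose definition is not stated here; the construct abbreviations are hypothetical shorthand.

```python
import math

# Construct order follows the correlation table (hypothetical abbreviations).
CONSTRUCTS = ["NFM", "SMT", "SML", "SSM", "IS", "FNS"]

# AVE values as reported in the measurement table.
AVE = {"NFM": 0.541, "SMT": 0.561, "SML": 0.597, "SSM": 0.594, "IS": 0.597, "FNS": 0.631}

# Starred upper-triangle correlations, one list per row (diagonal excluded).
UPPER = [
    [0.490, 0.366, 0.418, 0.442, -0.080],
    [0.374, 0.366, 0.288, 0.086],
    [0.447, 0.576, -0.208],
    [0.506, -0.112],
    [-0.305],
]

# Expand the triangle into a symmetric lookup keyed by construct pairs.
CORR = {}
for i, row in enumerate(UPPER):
    for offset, r in enumerate(row, start=1):
        a, b = CONSTRUCTS[i], CONSTRUCTS[i + offset]
        CORR[(a, b)] = CORR[(b, a)] = r


def max_abs_corr(c: str) -> float:
    """Largest absolute correlation between construct c and any other construct."""
    return max(abs(CORR[(c, o)]) for o in CONSTRUCTS if o != c)


def msv(c: str) -> float:
    """Maximum shared variance: the largest squared correlation."""
    return max_abs_corr(c) ** 2


def fornell_larcker_ok(c: str) -> bool:
    """Fornell-Larcker criterion: sqrt(AVE) > every |r| (equivalently AVE > MSV)."""
    return math.sqrt(AVE[c]) > max_abs_corr(c)


for c in CONSTRUCTS:
    print(f"{c}: sqrt(AVE)={math.sqrt(AVE[c]):.3f} max|r|={max_abs_corr(c):.3f} "
          f"MSV={msv(c):.3f} passes={fornell_larcker_ok(c)}")
```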

| Calibration Anchor | Info Sharing | S-Seeking | NFM | SM Trust | SM Literacy | FN Sharing |
|---|---|---|---|---|---|---|
| Full non-membership (5th percentile) | 1.1429 | 1.2500 | 1.2500 | 1.2500 | 1.2500 | 1.4286 |
| Crossover point (50th percentile) | 2.5714 | 2.7500 | 3.0000 | 3.0000 | 2.3750 | 3.5714 |
| Full membership (95th percentile) | 4.2857 | 4.2938 | 4.2500 | 4.2500 | 4.1250 | 4.7143 |
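These three anchors per condition are the inputs to fuzzy-set calibration. The sketch below assumes Ragin's direct (log-odds) method, which is widely used in fsQCA studies: the full-membership anchor maps to log-odds +3 (membership ≈ 0.95), the crossover maps to 0 (membership 0.5), and the full-non-membership anchor maps to log-odds −3 (membership ≈ 0.05). This section lists only the anchors and does not state the transformation, so treat the method as an assumption; `calibrate` is a hypothetical helper, not a library function.

```python
import math


def calibrate(x: float, non_member: float, crossover: float, full_member: float) -> float:
    """Direct (log-odds) calibration of a raw score into fuzzy-set membership.

    Deviations above the crossover are scaled so the full-membership anchor maps
    to log-odds +3; deviations below it are scaled so the full-non-membership
    anchor maps to log-odds -3. The logistic function then maps log-odds to (0, 1).
    """
    if x >= crossover:
        log_odds = 3.0 * (x - crossover) / (full_member - crossover)
    else:
        log_odds = 3.0 * (x - crossover) / (crossover - non_member)
    return 1.0 / (1.0 + math.exp(-log_odds))


# Fake news sharing anchors from the table: non-membership, crossover, full membership.
FNS_ANCHORS = (1.4286, 3.5714, 4.7143)

for score in (1.4286, 3.0, 3.5714, 4.0, 4.7143):
    print(f"{score:.4f} -> {calibrate(score, *FNS_ANCHORS):.3f}")
```

Because the two halves of the scale are stretched independently, the mapping stays monotonic even when the crossover is not centered between the outer anchors, as with SM Literacy (2.3750 between 1.2500 and 4.1250).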

| Conditions | High Fake News Sharing: Consistency | High Fake News Sharing: Coverage | Low Fake News Sharing: Consistency | Low Fake News Sharing: Coverage |
|---|---|---|---|---|
| C_InfoSharing | 0.554614 | 0.58236 | 0.699955 | 0.706066 |
| ~C_InfoSharing | 0.720069 | 0.71413 | 0.585975 | 0.558289 |
| C_Sseeking | 0.631878 | 0.61948 | 0.692767 | 0.652469 |
| ~C_Sseeking | 0.645514 | 0.68623 | 0.595983 | 0.608659 |
| C_NFMe | 0.659095 | 0.62595 | 0.705692 | 0.643851 |
| ~C_NFMe | 0.624993 | 0.68853 | 0.590027 | 0.624441 |
| C_TrustSM | 0.646258 | 0.6771 | 0.636424 | 0.640572 |
| ~C_TrustSM | 0.656941 | 0.65288 | 0.679189 | 0.648444 |
| C_Literacy | 0.547366 | 0.56987 | 0.69652 | 0.696631 |
| ~C_Literacy | 0.708607 | 0.7085 | 0.569934 | 0.547436 |
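For reference, fsQCA necessity statistics are usually defined as consistency = Σ min(xᵢ, yᵢ) / Σ yᵢ and coverage = Σ min(xᵢ, yᵢ) / Σ xᵢ, where the tilde (~) denotes set negation (1 − membership) and the low-outcome columns use the negated outcome. The sketch below implements those formulas on hypothetical toy membership scores, not the study's data; a common convention treats consistency of at least 0.90 as indicating a necessary condition. The helper names are illustrative only.

```python
from typing import List, Sequence, Tuple


def negate(x: Sequence[float]) -> List[float]:
    """Fuzzy negation (~X): membership in the complement set is 1 - x."""
    return [1.0 - a for a in x]


def necessity(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Consistency and coverage of condition X as a necessary condition for outcome Y."""
    overlap = sum(min(a, b) for a, b in zip(x, y))
    consistency = overlap / sum(y)  # degree to which Y is a subset of X
    coverage = overlap / sum(x)     # relevance: how much of X the outcome accounts for
    return consistency, coverage


# Hypothetical calibrated scores for four cases (illustration only).
condition = [0.9, 0.6, 0.2, 0.8]
outcome = [0.7, 0.4, 0.1, 0.9]

for label, x in (("X", condition), ("~X", negate(condition))):
    high = necessity(x, outcome)          # high outcome (Y)
    low = necessity(x, negate(outcome))   # low outcome (~Y)
    print(label, [round(v, 3) for v in high + low])
```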

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mombeuil, C.; Séraphin, H.; Diunugala, H.P. Mapping Linear and Configurational Dynamics to Fake News Sharing Behaviors in a Developing Economy. Technologies 2025, 13, 341. https://doi.org/10.3390/technologies13080341
