A Combined Mirror–EMG Robot-Assisted Therapy System for Lower Limb Rehabilitation

Abstract

1. Introduction

2. Materials and Methods

2.1. The Proposed Rehabilitation Protocol

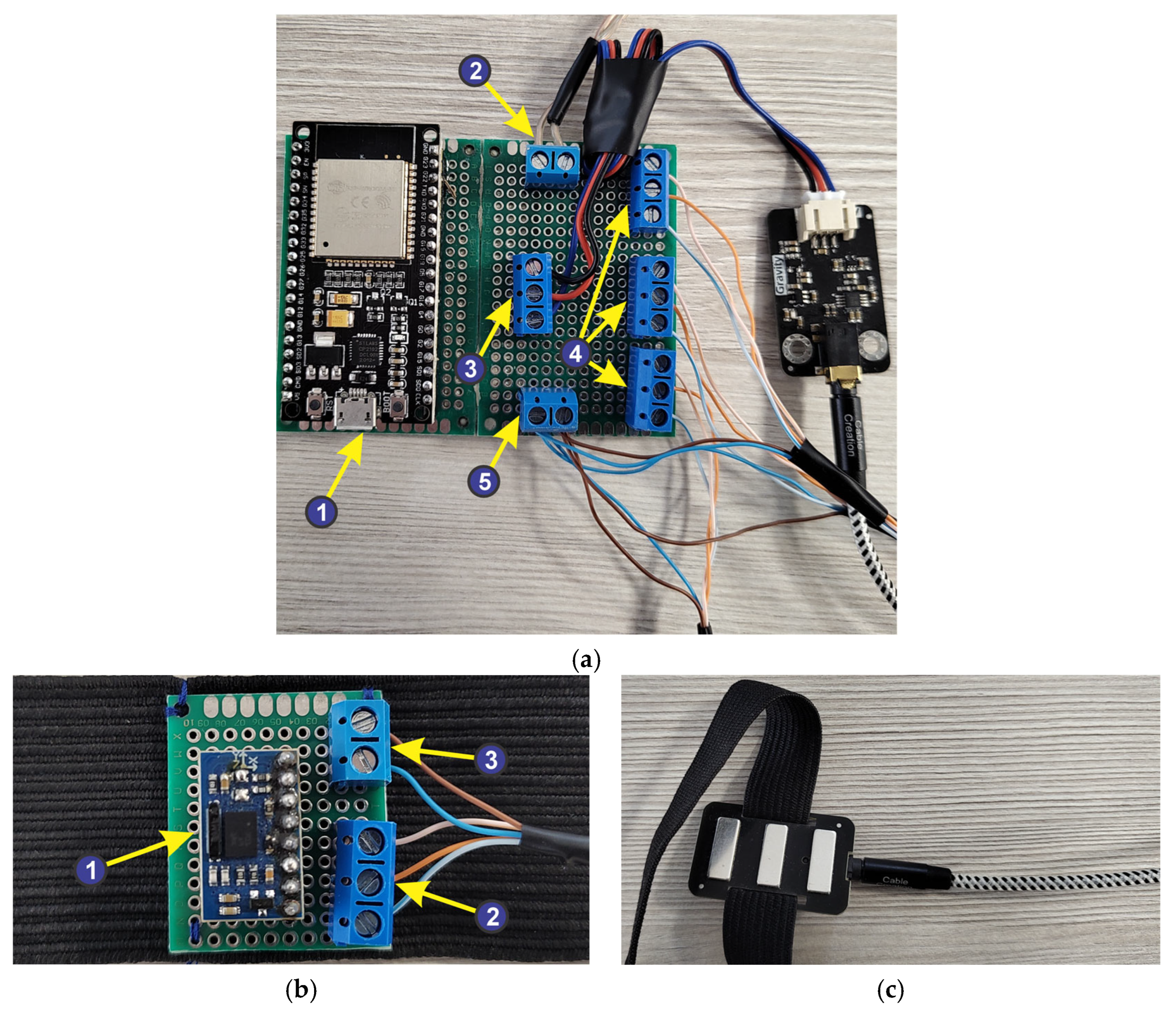

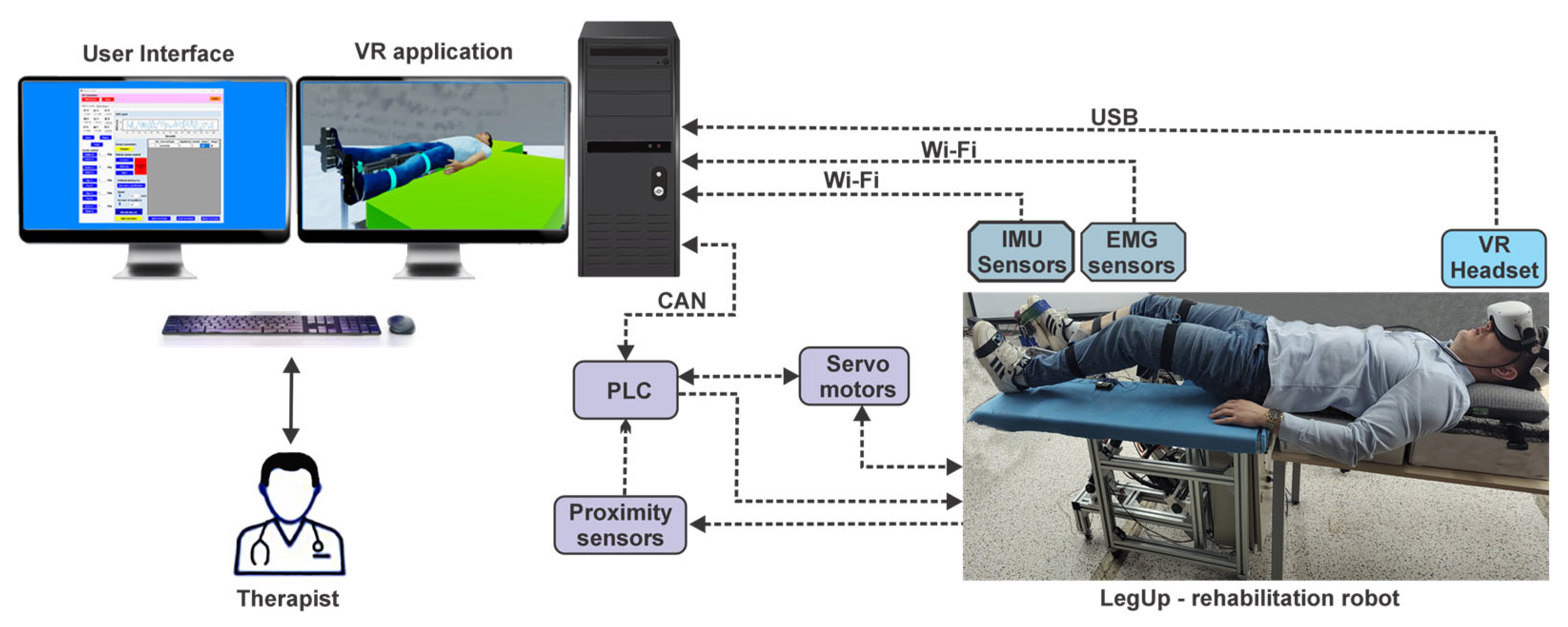

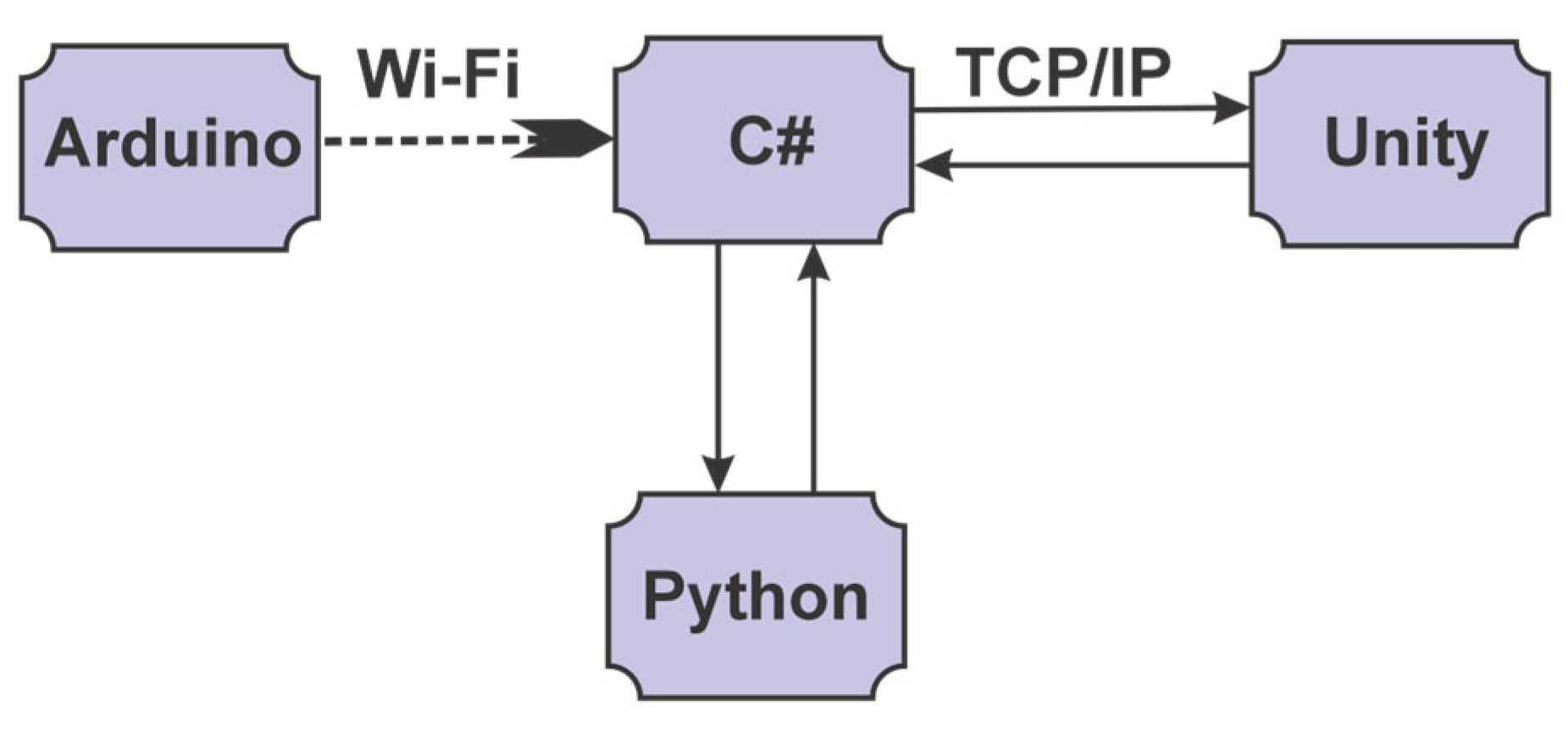

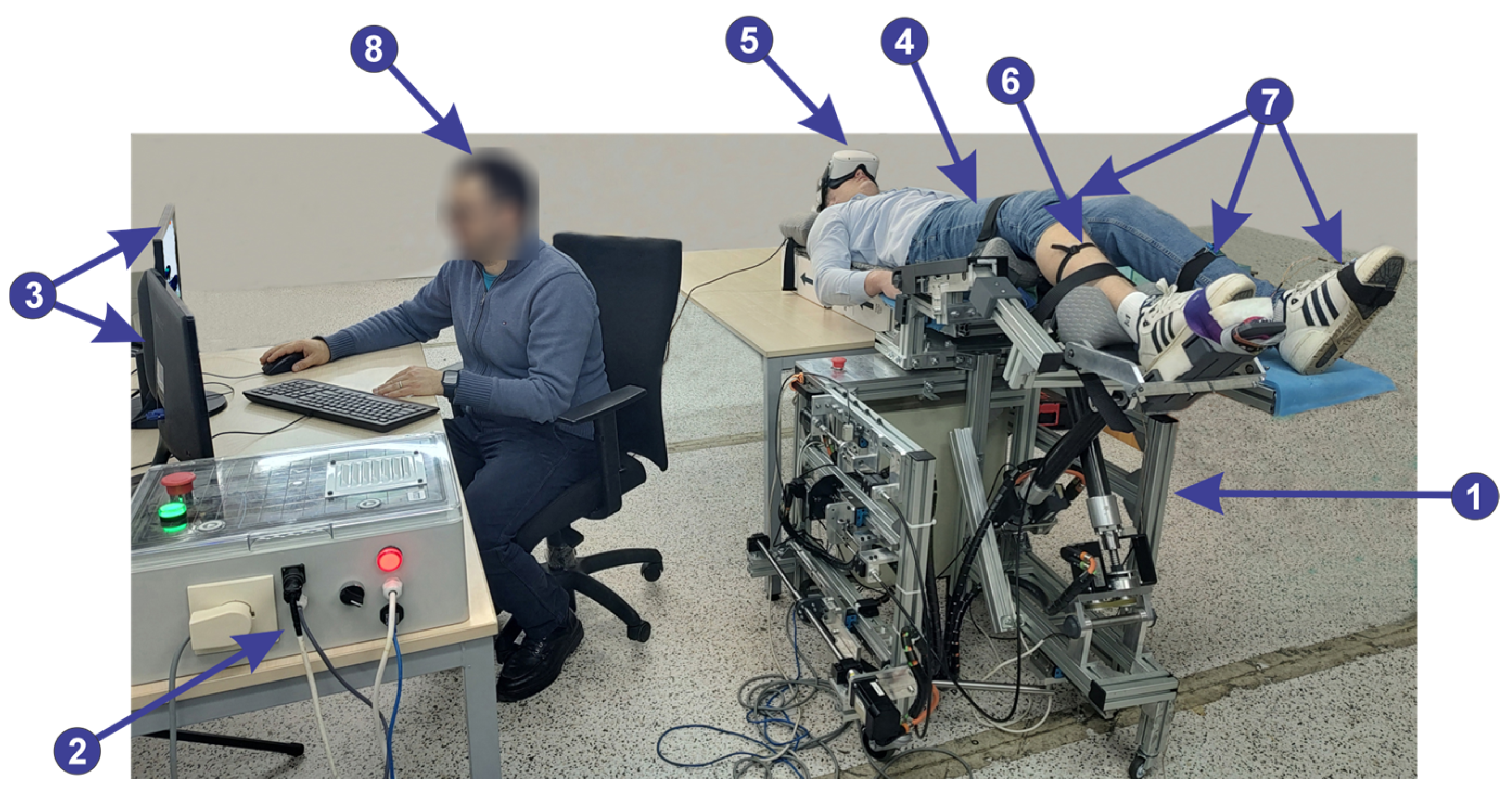

2.2. The Main Elements of the System and Their Interconnectivity

- 1—ESP32 microcontroller development board, with the following specifications:
  - 240 MHz dual-core Tensilica LX6 microcontroller (Espressif Systems, Bucuresti, Romania);
  - 520 KB SRAM;
  - 16 MB flash memory;
  - Wi-Fi: 802.11 b/g/n (802.11n up to 150 Mbps);
  - Bluetooth: v4.2 BR/EDR and BLE;

- 2—power supply: 5 V DC;

- 3—connector for the EMG sensor;

- 4—connectors for the IMU sensors;

- 5—power supply for the IMU sensors: 3.3 V DC.

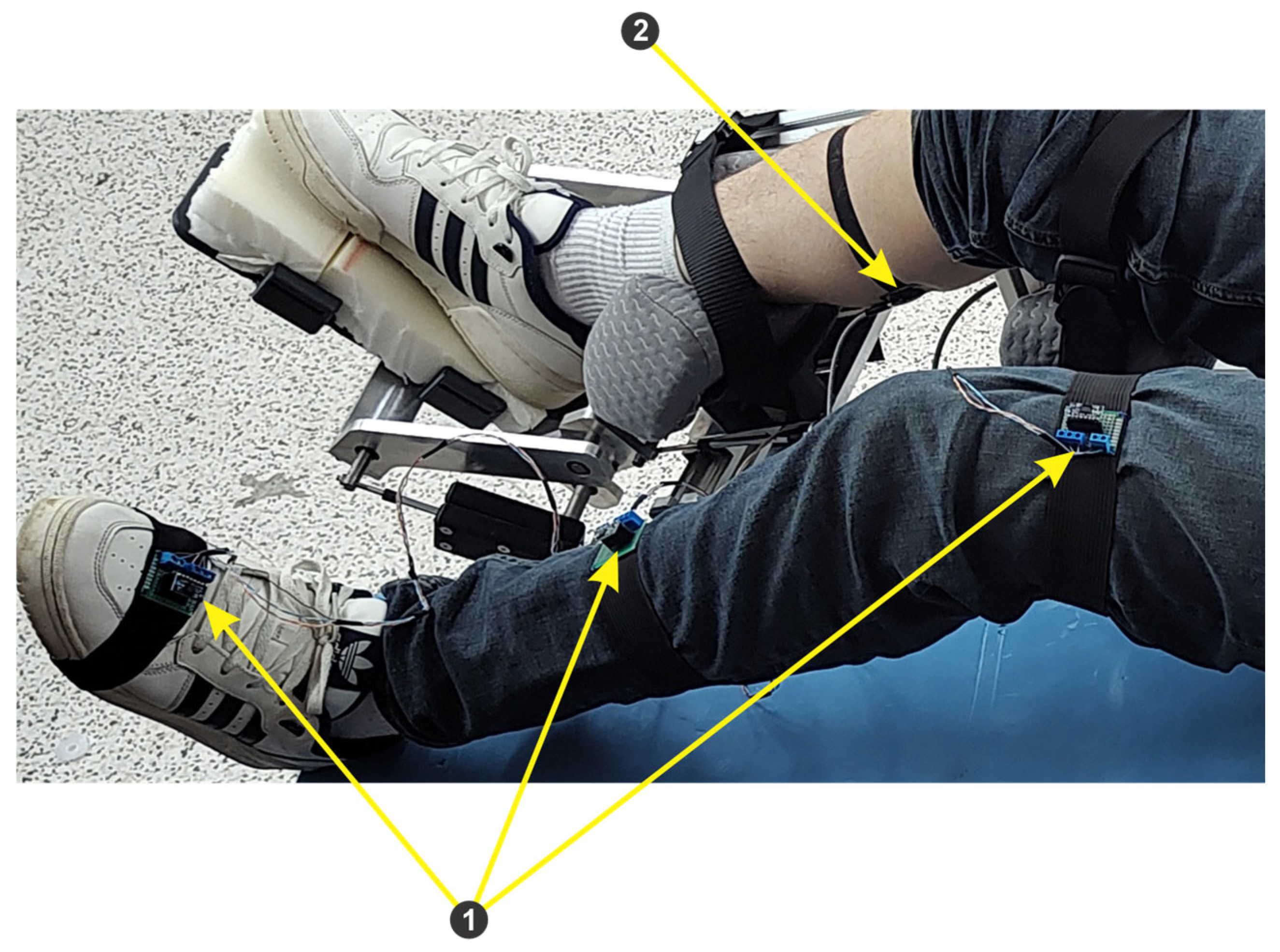

- 1—absolute orientation sensor (IMU BNO055), with the following characteristics:
  - built-in nine-axis sensor and MCU resources;
  - communication: standard I²C (IIC)/serial protocol;
  - absolute orientation (Euler vector, 100 Hz): three-axis orientation data based on a 360° sphere;
  - angular velocity vector (100 Hz): rotational speed about three axes, in rad/s;
  - acceleration vector (100 Hz): three-axis acceleration (gravity + linear motion), in m/s²;
  - magnetic field strength vector (20 Hz): three-axis magnetic field strength, in microtesla (μT);
  - linear acceleration vector (100 Hz): three-axis linear acceleration (acceleration minus gravity), in m/s²;
  - gravity vector (100 Hz): three-axis gravitational acceleration, in m/s²;

- 2—IMU sensor connection jacks to the ESP32 microcontroller development board;

- 3—power supply connectors for IMU sensors.
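Two of the BNO055 outputs listed above are related by simple arithmetic. The linear-acceleration vector is the total acceleration minus the gravity vector, and Euler angles on a 360° sphere wrap around. The Python sketch below illustrates both relations. The helper names, and the idea of taking a joint angle as the wrapped difference between two segment orientations, are illustrative assumptions and not the system's documented processing.

```python
def linear_acceleration(accel, gravity):
    # Linear acceleration = total acceleration minus gravity, per axis (m/s^2).
    return tuple(a - g for a, g in zip(accel, gravity))

def wrap_degrees(angle):
    # Wrap an angle in degrees to the interval [-180, 180).
    return (angle + 180.0) % 360.0 - 180.0

def relative_angle(proximal_deg, distal_deg):
    # Hypothetical joint angle: wrapped difference between two segment orientations.
    return wrap_degrees(proximal_deg - distal_deg)

if __name__ == "__main__":
    print(linear_acceleration((0.5, 0.0, 9.81), (0.0, 0.0, 9.81)))  # (0.5, 0.0, 0.0)
    print(relative_angle(350.0, 10.0))  # -20.0
```

Wrapping matters because a naive difference such as 350° − 10° = 340° describes the same orientation change as −20°.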

2.3. Software Application Development

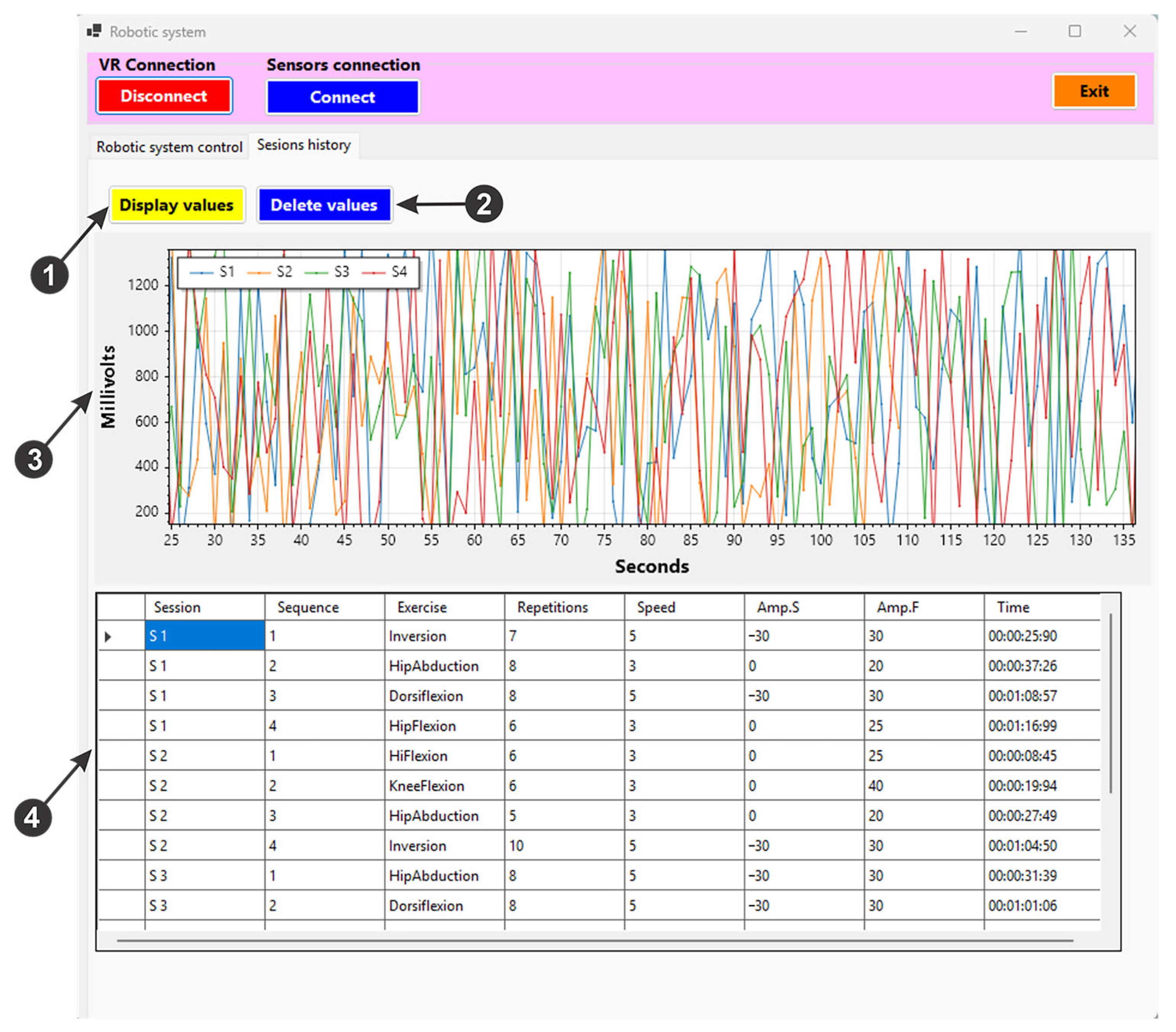

2.4. C# Software Application

- 13 use cases that specify the functionality of the software application: 7 cases associated with the application implemented in the C# programming language, 3 use cases associated with the Arduino script, and 3 cases associated with the Unity virtual reality application;

- 4 actors: the human user of the software application (the therapist), the Python classifier, the parallel robot, and the EMG and IMU sensors;

- 8 association relationships between actors and use cases;

- 6 dependency relationships between use cases.

- The ExercisesGUI class, derived from the Panel class, allows the therapist to interact with the application’s graphical interface, control the robotic structure, and interact with the virtual reality application;

- The HistoryGUI class, derived from the Panel class, allows the therapist to monitor the patient’s progress.

- The UnityConnection class handles socket connections between the application implemented in C# and the VR application implemented in Unity, using three classes from the System.Net.Sockets namespace;

- The ArduinoConnection class handles the connection between the application implemented in C# and the Arduino script, also using the System.Net.Sockets namespace;

- The RoboticStructureConnection class enables the connections between the application implemented in C# and the parallel robot.
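The connection classes above are written in C#. The Python sketch below is a language-neutral illustration of the socket pattern they rely on. It assumes a TCP stream socket and a hypothetical newline-terminated plain-text command format. The stub server stands in for the Unity application, and the command strings and the `ACK` reply are invented for the example.

```python
import socket
import threading

def serve_once(server_sock):
    # Hypothetical VR-side stub: accept one client, read one command line, acknowledge it.
    conn, _ = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        command = reader.readline().strip()
        conn.sendall(f"ACK {command}\n".encode("utf-8"))

def send_command(host, port, command, timeout=2.0):
    # Open a TCP connection, send one newline-terminated command, return the reply line.
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall((command + "\n").encode("utf-8"))
        with sock.makefile("r", encoding="utf-8") as reader:
            return reader.readline().strip()

if __name__ == "__main__":
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0: let the OS choose a free port
    server.listen(1)
    port = server.getsockname()[1]
    worker = threading.Thread(target=serve_once, args=(server,), daemon=True)
    worker.start()
    print(send_command("127.0.0.1", port, "KNEE_FLEXION 30"))  # ACK KNEE_FLEXION 30
    worker.join()
    server.close()
```

Framing messages by newline matters because TCP is a byte stream. Without an explicit delimiter, a single `recv` call may return a partial or merged command.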

- The “Connect” button (Figure 9, (1)) initiates a TCP/IP connection between the user interface and the virtual reality application.

- The “Connect” button (Figure 9, (2)) establishes a Wi-Fi connection between the user interface and the device built to acquire the IMU and EMG sensor signals, which is based on the ESP32 microcontroller development board. It also stores the current position of the sensors as the reference for the mirror therapy training exercises. Once the connection is established, the data from the three IMU sensors (X, Y, and Z axes) are streamed and displayed on the user interface (Figure 9, (3)). These data can be saved to an Excel file with the “Save” button and cleared with the “Clear” button (Figure 9, (4)). Pressing the “Pause” button (Figure 9, (4)) stops sending the data to the Excel file. The EMG signal is displayed on the user interface (Figure 9, (5)).

- The “Connect” button (Figure 9, (6)) initiates control of the rehabilitation parallel robot. The “Home” button performs the robot’s homing procedure, while the “Start” button (Figure 9, (6)) drives the robot to its starting position before the actual training exercises begin. The “Emergency Stop” button (Figure 9, (6)) can be used in an emergency to cut off power and thus stop the robotic system.

- Pressing the “Exercises classification” button (Figure 9, (7)) runs the artificial intelligence algorithm, which classifies the healthy-limb movements from the data received from the IMU sensors.

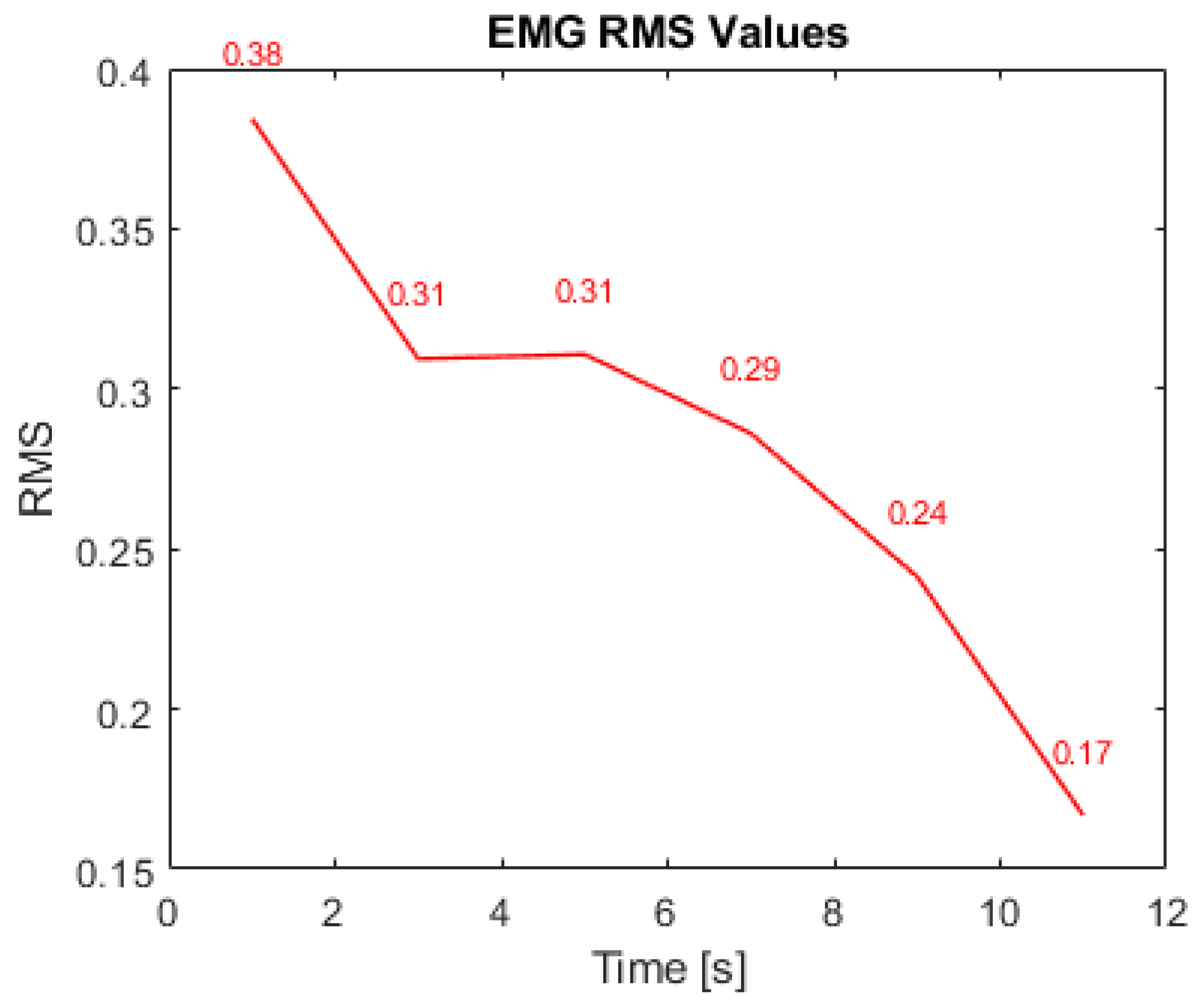

- When the “Start exercises” button (Figure 9, (8)) is pressed, a timer measures the duration of the exercise session, and the robotic rehabilitation system performs the rehabilitation exercises by following the movements of the patient’s healthy leg. The EMG signal recorded during the session is also saved to an Excel file.

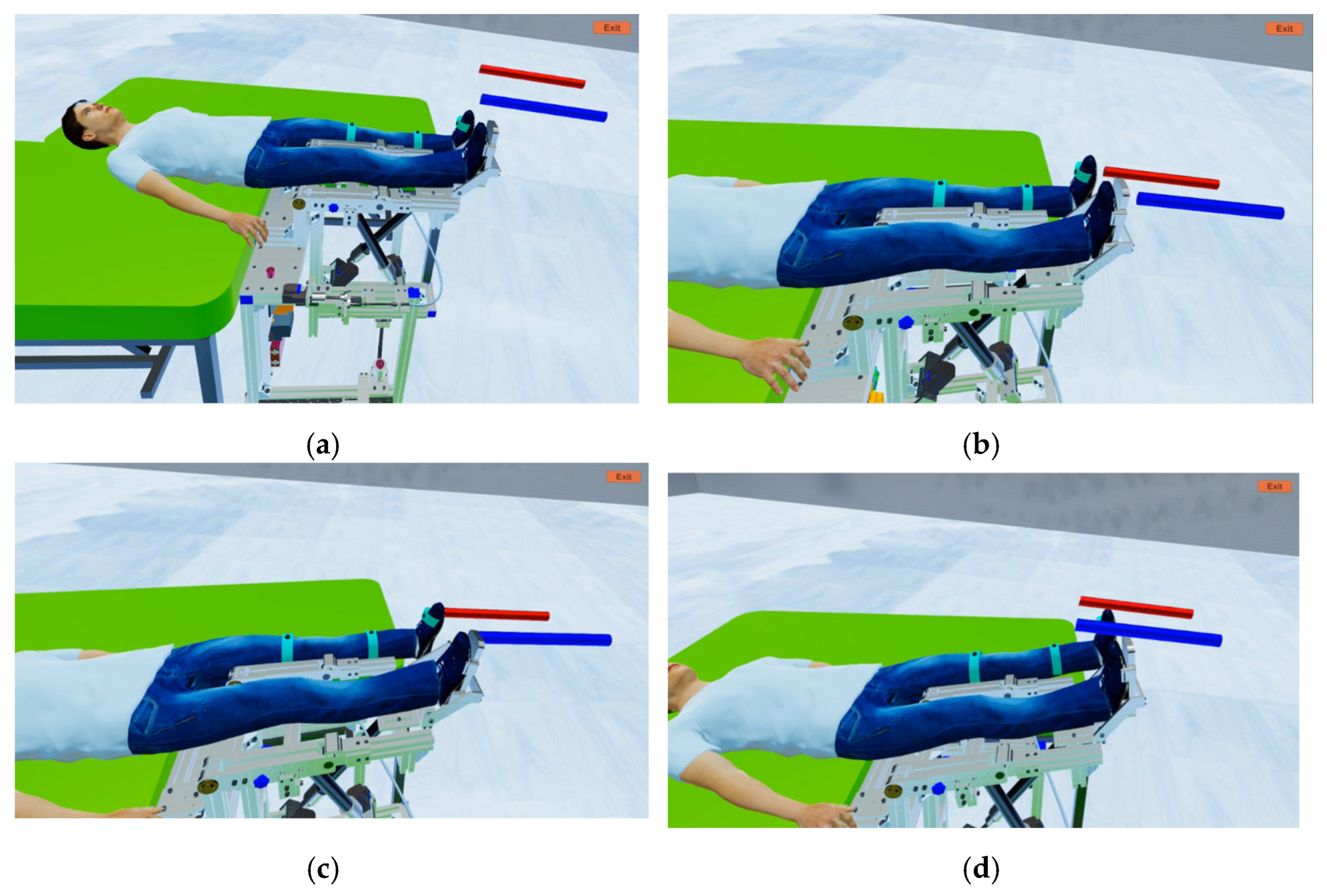

- The buttons in the “Avatar control” field (Figure 9, (9)) control the leg motion of the virtual patient (human avatar), so that various rehabilitation exercises can be performed at the hip, knee, and ankle levels.

- The rehabilitation exercises of a single session are listed in a table (Figure 9, (10)), which shows the exercise type, the number of repetitions, the speed level, and the start and end amplitudes.

- The speed and number of repetitions of the rehabilitation exercise can be set using the two sliders in Figure 9, (11).

- The user interface also allows the exercise session to be saved to an Excel file with the “Save Exercises” button (Figure 9, (12)), reloaded with the “Load Exercises” button (Figure 9, (12)), and reset with the “Reset Exercises” button.
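The session table (exercise type, repetitions, speed level, start and end amplitude) maps naturally onto a list of records. The sketch below saves and reloads such a table. It substitutes CSV from the Python standard library for the Excel file used by the application. The field names, and the assumption that amplitudes are in degrees, are illustrative only.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

@dataclass
class ExerciseRow:
    # One row of the session table; the field names are hypothetical.
    exercise: str
    repetitions: int
    speed_level: int
    start_amplitude_deg: float  # unit assumed to be degrees
    end_amplitude_deg: float

FIELDNAMES = [f.name for f in fields(ExerciseRow)]

def save_session(rows, stream):
    # Write a header row, then one CSV row per exercise.
    writer = csv.DictWriter(stream, fieldnames=FIELDNAMES)
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))

def load_session(stream):
    # Read the CSV back, converting each column to its declared type.
    return [
        ExerciseRow(
            exercise=r["exercise"],
            repetitions=int(r["repetitions"]),
            speed_level=int(r["speed_level"]),
            start_amplitude_deg=float(r["start_amplitude_deg"]),
            end_amplitude_deg=float(r["end_amplitude_deg"]),
        )
        for r in csv.DictReader(stream)
    ]

if __name__ == "__main__":
    session = [ExerciseRow("knee flexion", 10, 2, 0.0, 60.0)]
    buffer = io.StringIO()
    save_session(session, buffer)
    buffer.seek(0)
    print(load_session(buffer) == session)  # True
```

In this model, resetting the session amounts to clearing the list of rows.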

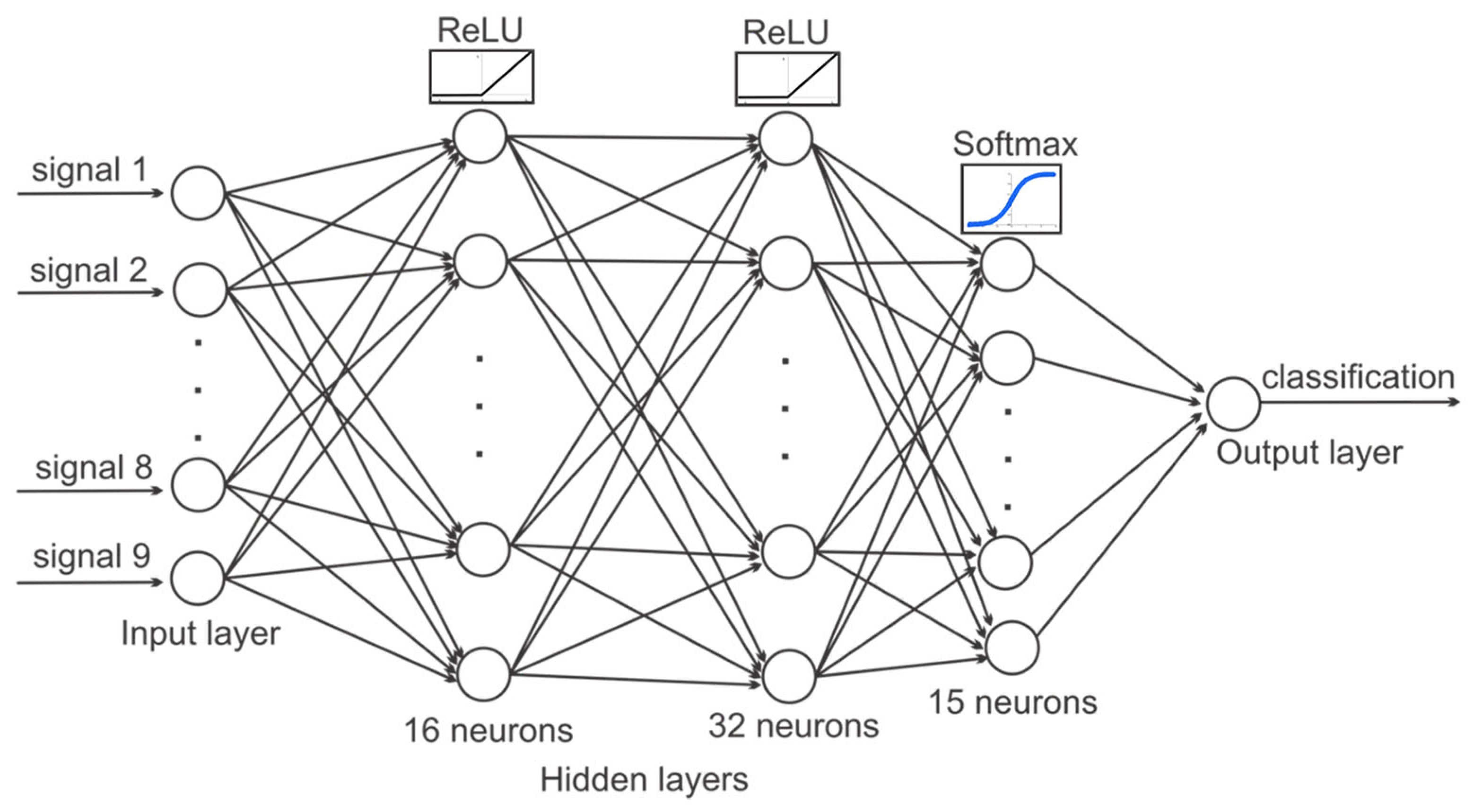

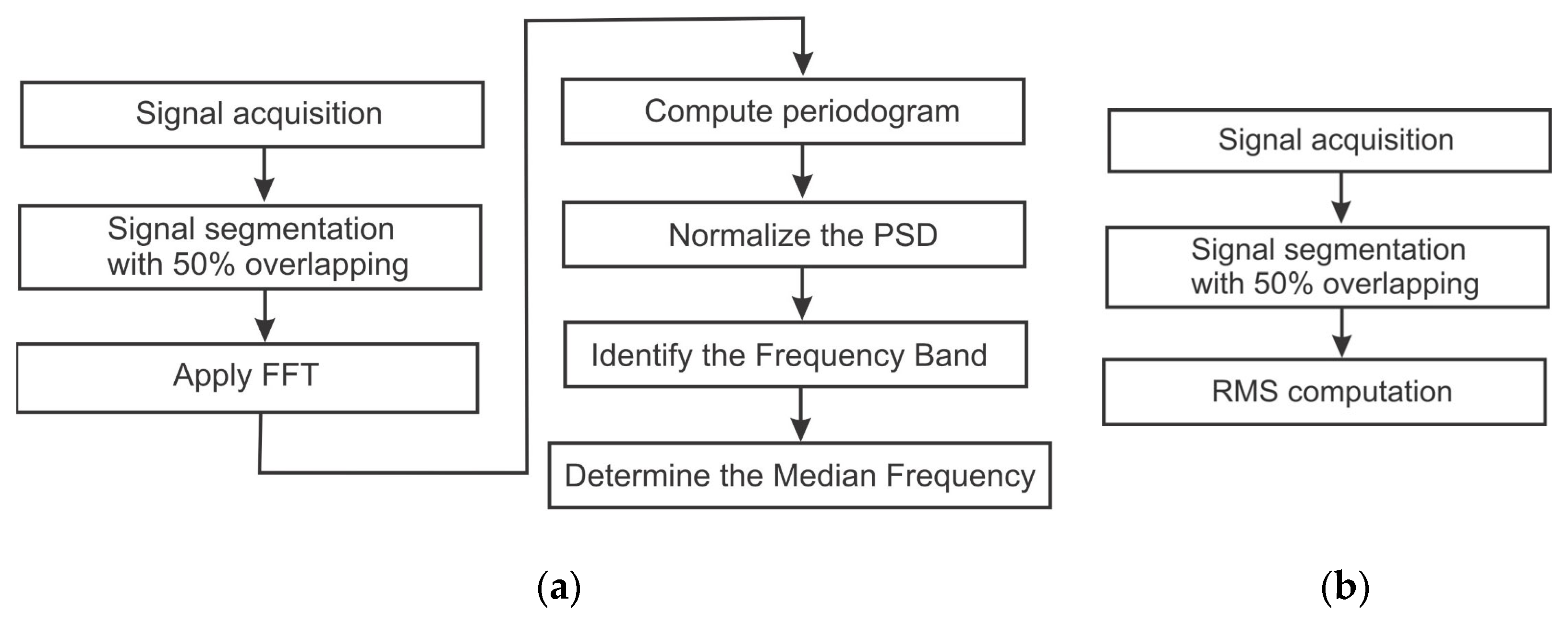

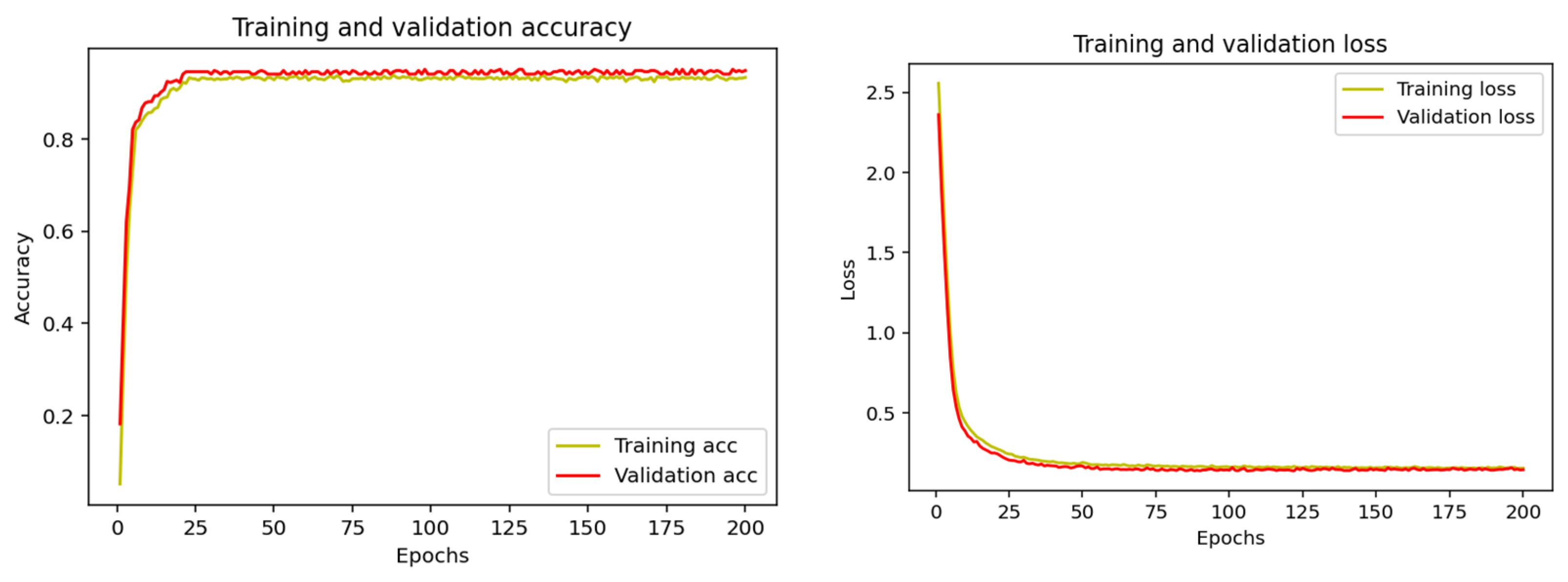
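In the exercise mode where the robot follows the healthy leg, the robot can be pictured as running a control loop that copies each healthy-limb joint angle to the affected side. The sketch below is a hypothetical simplification. It assumes a one-to-one angle mapping, clamps targets to a therapist-set range of motion, and uses a per-cycle step limit as a stand-in for the speed setting. None of these details are specified for the actual system.

```python
def mirror_target(healthy_angle_deg, rom_min_deg, rom_max_deg):
    # Hypothetical one-to-one mapping: copy the healthy-leg angle, clamped to a safe range of motion.
    return min(max(healthy_angle_deg, rom_min_deg), rom_max_deg)

def step_toward(current_deg, target_deg, max_step_deg):
    # Move at most max_step_deg per control cycle (a simple speed limit).
    delta = target_deg - current_deg
    if abs(delta) <= max_step_deg:
        return target_deg
    return current_deg + (max_step_deg if delta > 0 else -max_step_deg)

def follow(healthy_angles, start_deg, rom, max_step_deg):
    # Produce the robot joint trajectory that tracks the mirrored, clamped healthy-leg angles.
    position = start_deg
    trajectory = []
    for angle in healthy_angles:
        target = mirror_target(angle, *rom)
        position = step_toward(position, target, max_step_deg)
        trajectory.append(position)
    return trajectory

if __name__ == "__main__":
    print(follow([10.0, 30.0, 80.0, 80.0], 0.0, (0.0, 60.0), 15.0))  # [10.0, 25.0, 40.0, 55.0]
```

The clamp keeps the affected limb inside the prescribed range even if the healthy leg moves further. The step limit keeps the robot from jumping when the healthy leg moves quickly.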

2.5. Keras-Based Artificial Intelligence Used in the Classification of Rehabilitation Exercises

- The first layer is the input layer that receives the initial data;

- The middle (hidden) layer processes the data through neurons that apply mathematical functions (weighted sums followed by activation functions);

- The last one is the output layer, which produces the final result of the neural network.
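Each layer in such a network applies an activation function to a weighted sum of its inputs. The pure-Python forward pass below illustrates that computation for a single hidden layer. In Keras, a comparable model would typically be assembled from `Dense` layers in a `Sequential` model. The ReLU hidden activation, the softmax output, and the toy weights are assumptions for illustration, because the layer sizes and activations are not given here.

```python
import math

def relu(values):
    # Rectified linear unit applied element-wise.
    return [max(0.0, v) for v in values]

def softmax(values):
    # Subtract the maximum for numerical stability, then normalize to probabilities.
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases, activation):
    # Fully connected layer: one weight row and one bias per neuron, then the activation.
    pre_activations = [sum(w * x for w, x in zip(row, inputs)) + b
                       for row, b in zip(weights, biases)]
    return activation(pre_activations)

def forward(x, hidden_w, hidden_b, out_w, out_b):
    # Input layer -> hidden layer (ReLU) -> output layer (softmax class probabilities).
    hidden = dense(x, hidden_w, hidden_b, relu)
    return dense(hidden, out_w, out_b, softmax)

if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    probs = forward([1.0, 2.0], identity, [0.0, 0.0], identity, [0.0, 0.0])
    print(probs.index(max(probs)))  # 1
```

The predicted exercise class is the index of the largest output probability.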

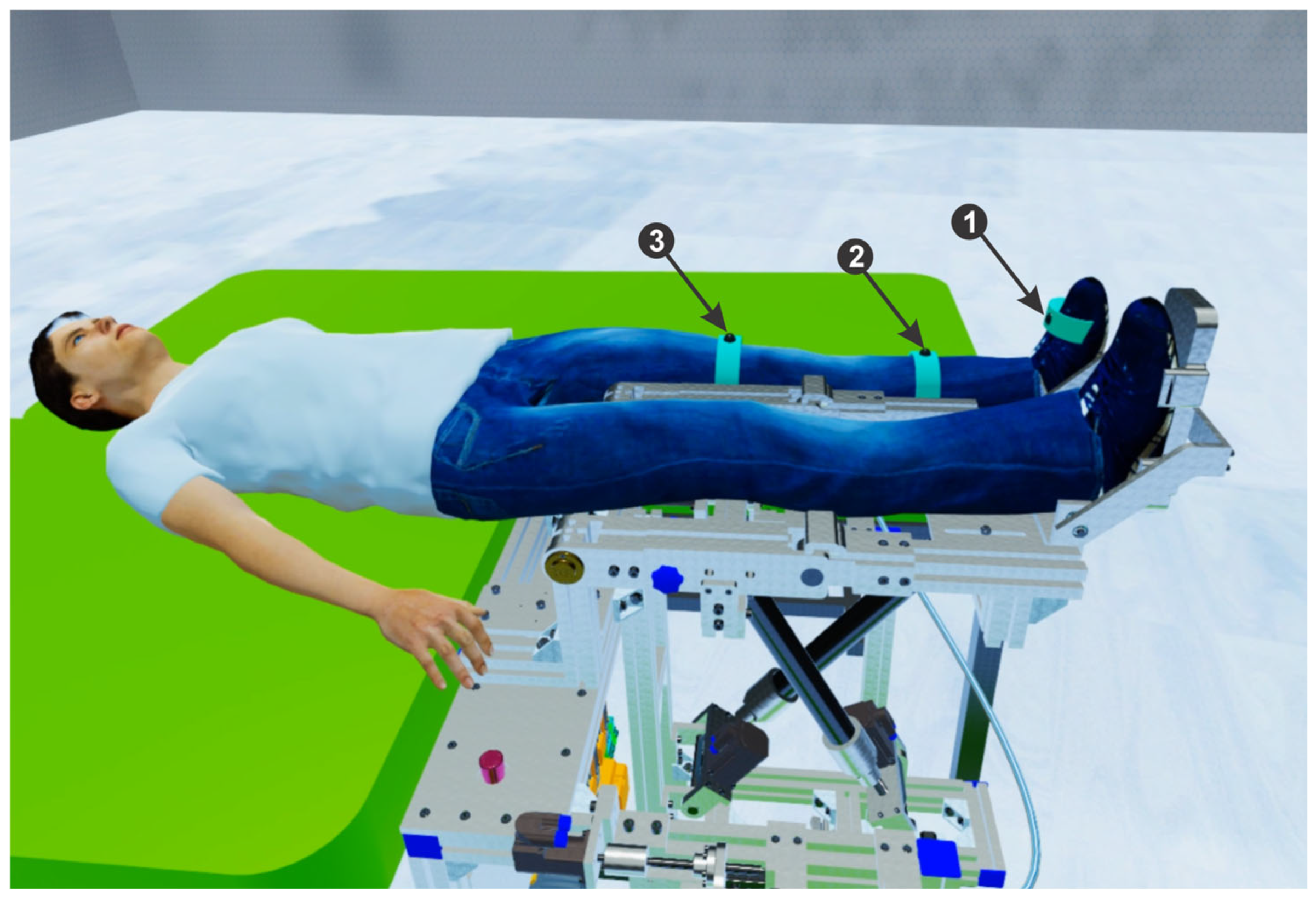

2.6. The Lower Limb Rehabilitation Parallel Robot

- Module 1 consists of the five servo motors that actuate the parallel robot and communicate with the programmable logic controller (PLC). The PLC, in turn, communicates with the computer via TCP/IP.

- Module 2 consists of five proximity sensors placed at the ends of stroke to limit the travel of the servo motors. These sensors are also used to define the initial position of the active joints.

- Module 3 consists of an EMG sensor and three IMU sensors, connected to the ESP32 microcontroller development board. The data acquired from the sensors through the ESP32 microcontroller are sent to the computer via the Wi-Fi protocol.
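The Module 3 data path can be illustrated with a small parser. The sketch below assumes a hypothetical comma-separated text packet that carries one EMG sample followed by the X, Y, and Z angles of the three IMU sensors. Each new reading is expressed relative to a reference captured when the connection is made. The packet layout and function names are assumptions, not the firmware's actual format.

```python
def wrap_degrees(angle):
    # Wrap an angle in degrees to the interval [-180, 180).
    return (angle + 180.0) % 360.0 - 180.0

def parse_packet(line):
    # Parse a hypothetical 'emg,x1,y1,z1,x2,y2,z2,x3,y3,z3' text packet.
    values = [float(v) for v in line.strip().split(",")]
    if len(values) != 10:
        raise ValueError(f"expected 10 fields, got {len(values)}")
    emg = values[0]
    imus = [tuple(values[1 + 3 * i: 4 + 3 * i]) for i in range(3)]
    return emg, imus

def relative_to_reference(imus, reference):
    # Subtract the stored reference orientation from each IMU reading, axis by axis.
    return [tuple(wrap_degrees(a - r) for a, r in zip(imu, ref))
            for imu, ref in zip(imus, reference)]

if __name__ == "__main__":
    _, reference = parse_packet("0.10,10,0,0,20,0,0,30,0,0")
    _, current = parse_packet("0.25,15,0,0,20,0,0,350,0,0")
    print(relative_to_reference(current, reference))
    # [(5.0, 0.0, 0.0), (0.0, 0.0, 0.0), (-40.0, 0.0, 0.0)]
```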

2.7. Development and Integration of the VR Application in the Mirror Therapy

3. Results and Discussion

4. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Covaciu, F.; Gherman, B.; Vaida, C.; Pisla, A.; Tucan, P.; Caprariu, A.; Pisla, D. A Combined Mirror–EMG Robot-Assisted Therapy System for Lower Limb Rehabilitation. Technologies 2025, 13, 227. https://doi.org/10.3390/technologies13060227
