Hardware Design of DRAM Memory Prefetching Engine for General-Purpose GPUs

Abstract

1. Introduction

- Improve memory hierarchy efficiency. GPGPUs incorporate large memory hierarchies, enabling a wider range of applications to be executed efficiently. However, relying solely on the memory hierarchy system to handle complex memory access patterns in GPGPU workloads has been shown to be inefficient [2,13]. Moreover, due to the large number of concurrently executing threads, it is highly challenging for GPGPUs to exploit data locality and utilize cache structures as effectively as general-purpose processors. Consequently, data movement between main memory and caches often results in contention and degraded performance [14,15,16]. Memory prefetching can detect memory access patterns and proactively fetch the required data into the cache or local memory before it is needed, thereby improving the effectiveness of the memory hierarchy.

- Improve data locality. The large number of threads running on a GPGPU can significantly impact data locality. Due to the limited capacity of the memory system, as the number of concurrent threads increases, the pressure on memory resources rises. This leads to an increased cache miss ratio and restricts the footprint size of each thread in the on-chip memory. In such scenarios, memory prefetching can enhance memory locality and reduce the average memory access time.

- Reduce asynchronous task switching overhead. Heterogeneous systems consisting of a CPU and a GPGPU often execute workloads divided into two kernels: a control kernel running on the CPU and a computational kernel with data parallelism running on the GPGPU. These workloads are structured such that the control kernel executes on the CPU first. Once completed, the workload is passed to the GPGPU for execution of the computational kernel. Upon completion, the results are passed back to the CPU. This asynchronous back-and-forth task switching between the CPU and GPGPU can continue until the workload is complete. During each task switch, it is necessary to reload the thread context into the GPGPU’s local memory, which increases task switching overhead and reduces overall throughput. Memory prefetching can mitigate this overhead by preloading necessary data into the GPGPU’s local memory, thereby improving overall utilization.

- Improve core utilization. GPGPUs execute a massive number of threads using fine-grained scheduling. Since threads are switched every clock cycle, any long-latency memory access or absence of required data in the local memory may stall threads, reducing effective core utilization. Memory prefetching can minimize these memory access latencies, thereby enhancing GPGPU utilization and overall performance.

- Modular DRAM-based Prefetching Engine. We propose a specialized hardware prefetch subsystem for GPGPU architectures featuring multiple parallel engines with dedicated address ranges, self-learned context mechanisms, and adaptive stride detection for efficient handling of diverse memory access patterns.

- Robust System Integration Features. We design comprehensive system-level features, including context flushing for handling access pattern changes, watchdog timers for deadlock recovery, and flexible configuration interfaces for runtime parameter optimization.

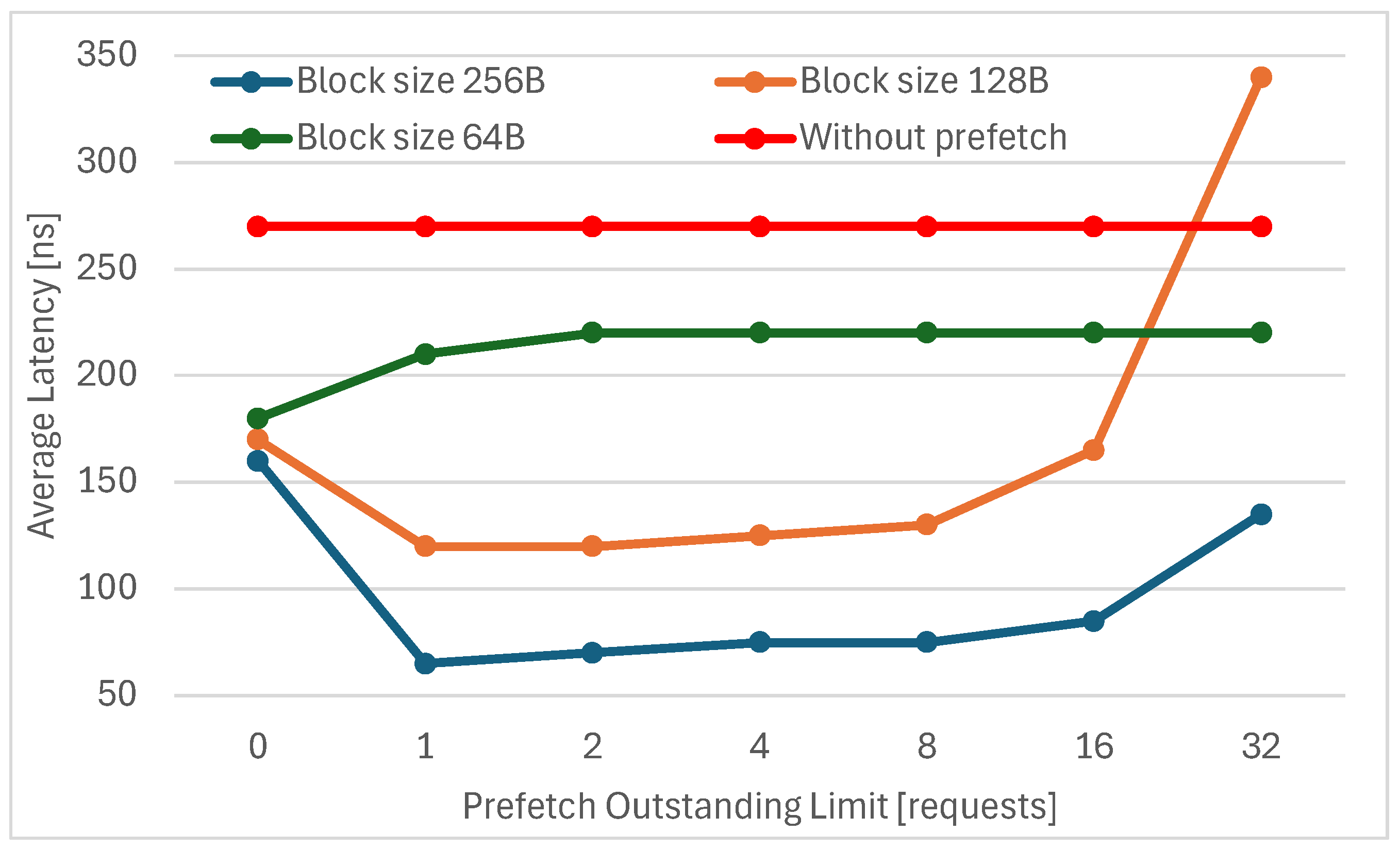

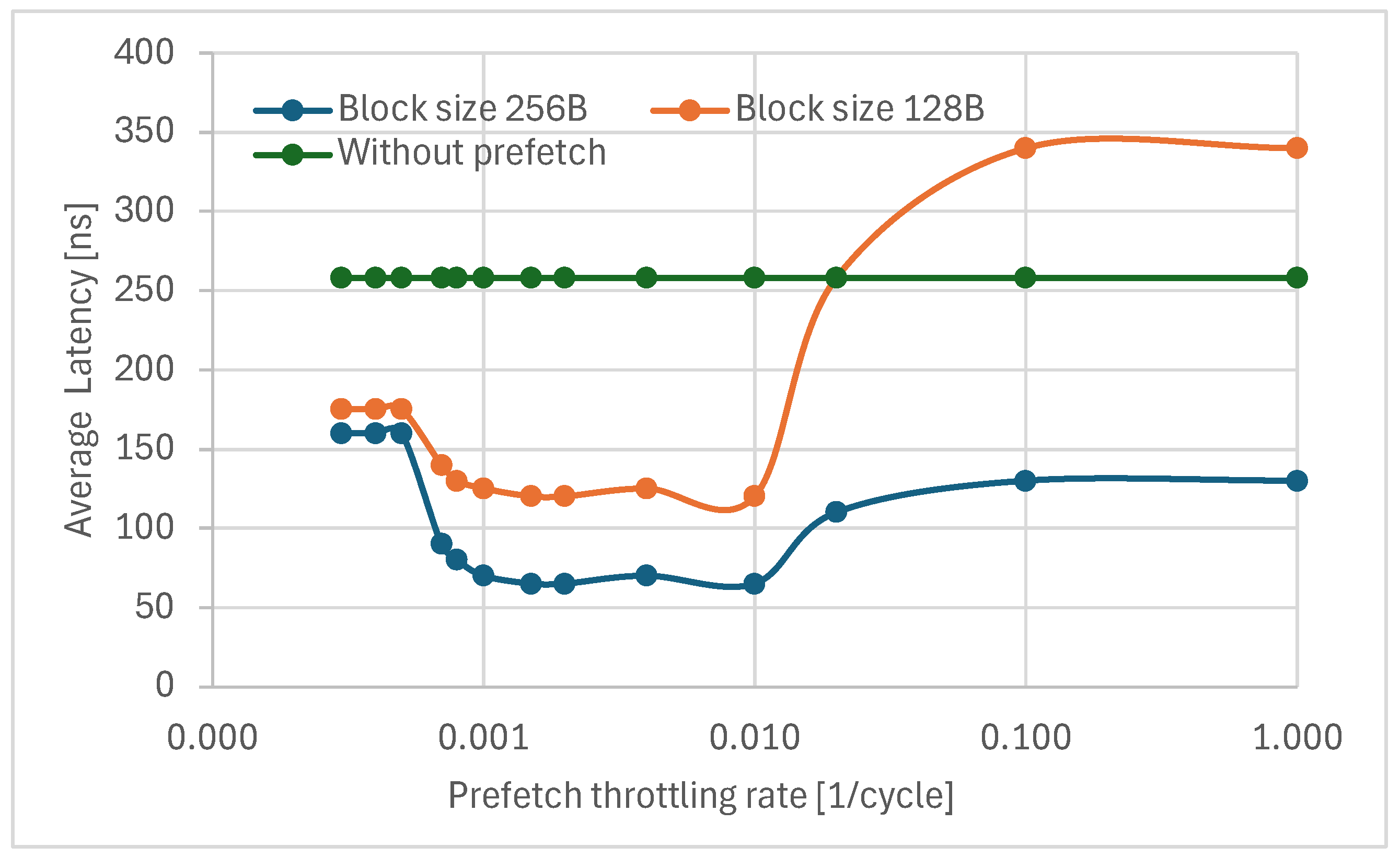

- Comprehensive Experimental Validation. We conduct an extensive performance analysis using real-world workloads, demonstrating memory access latency improvements of up to 82% and providing detailed characterization of optimal configuration parameters, including block sizes, outstanding limits, and throttling rates.

- Design Guidelines and Performance Insights. We provide critical insights into the relationship between prefetch configuration parameters and performance across different workload types, establishing guidelines for optimal prefetcher deployment in practical GPGPU systems.

2. Background and Related Work

2.1. GPGPU Architecture

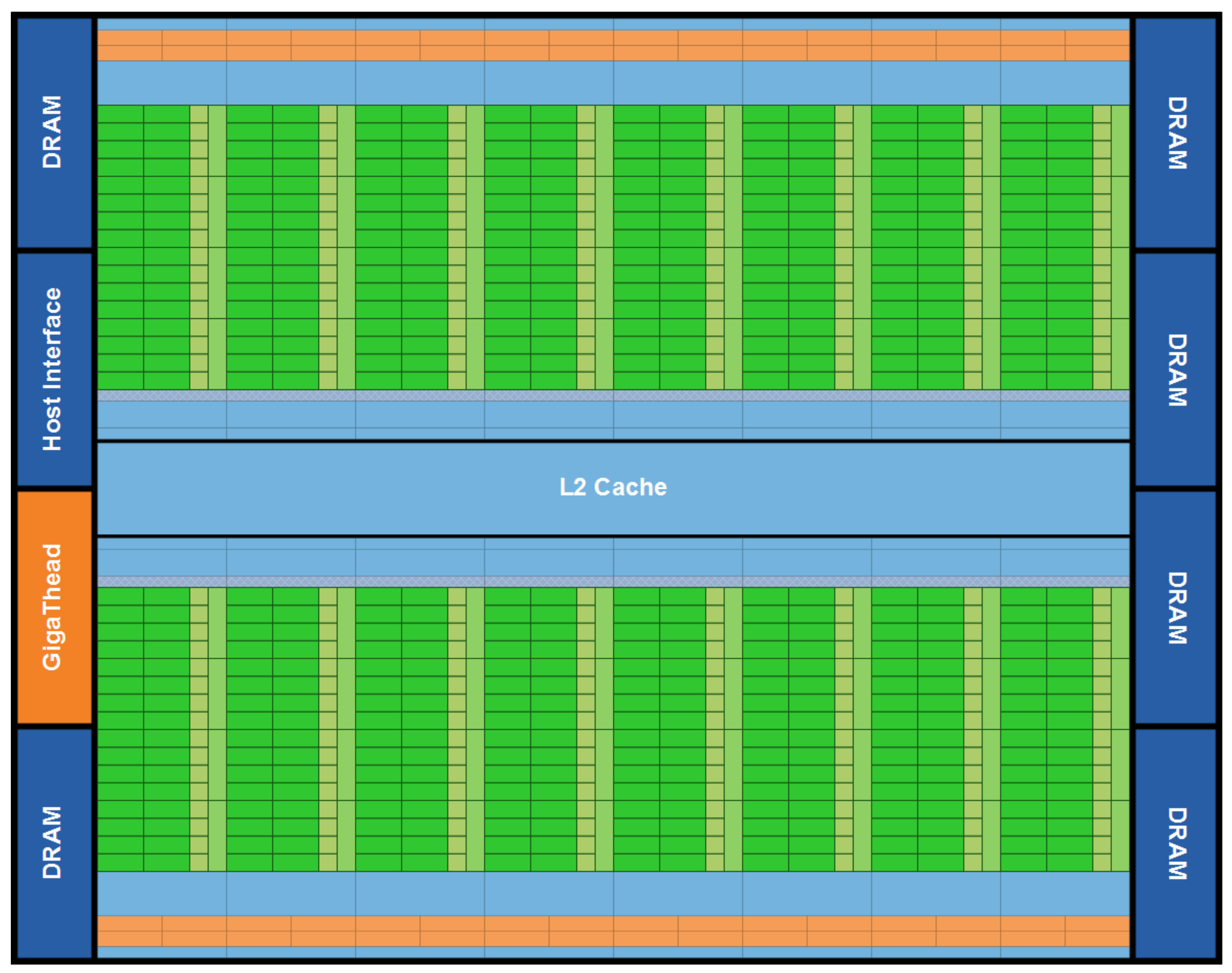

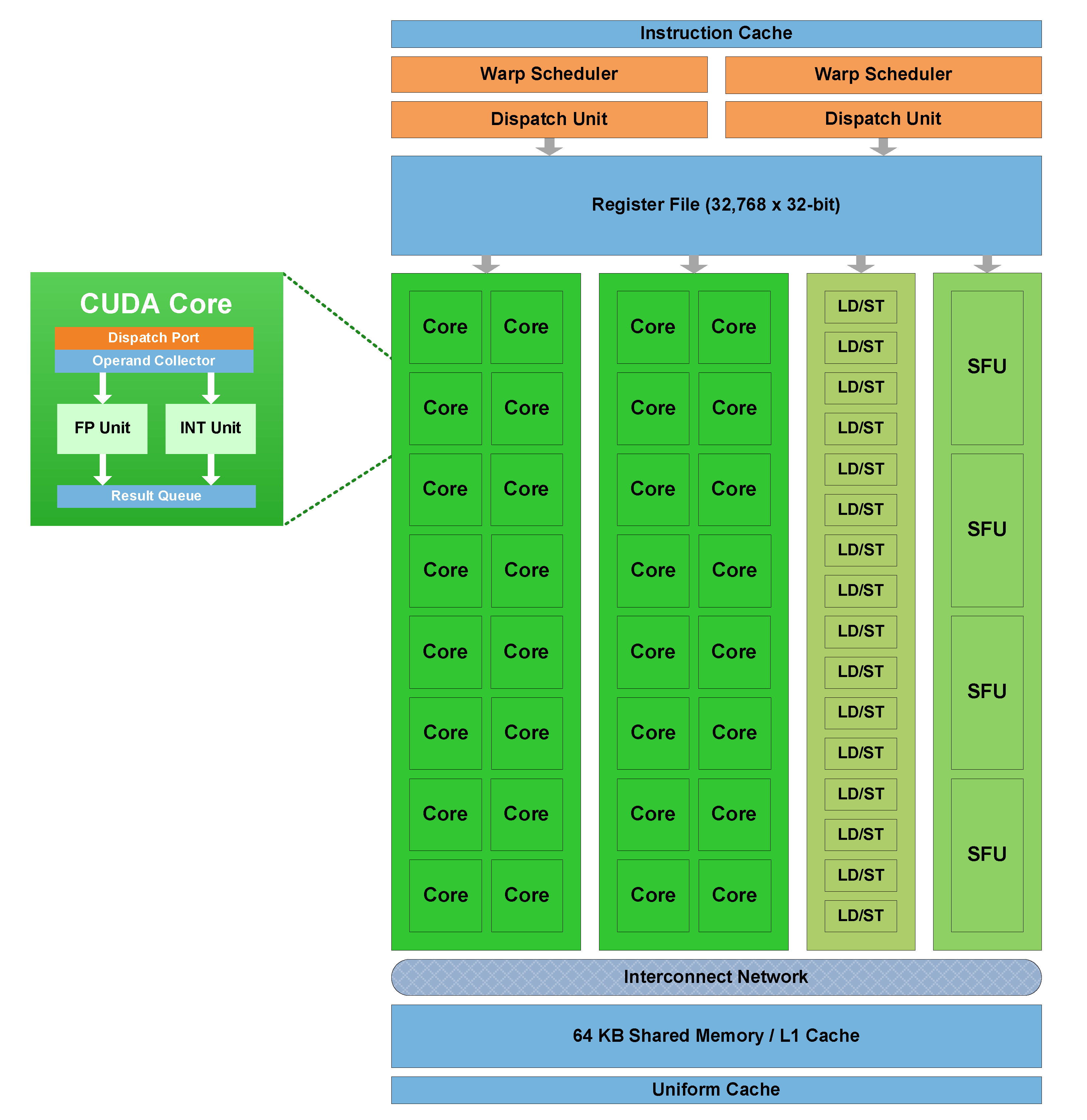

- Multiple processing cores that are organized into one or more “streaming multiprocessor” (SM) units, as illustrated in Figure 2. Each SM executes instructions in parallel such that, at any given time, all of its processing cores execute the same instruction on different data elements. Each SM also contains a large register file, an instruction cache, a data cache/scratchpad memory, a dispatch unit, and a thread scheduler.

- L2 cache/shared memory is located between the SMs and the external physical memory. The shared memory is used to store shared data between all SMs.

- Multi-channel off-chip physical memory (DRAM) is typically slower than the other types of memory in the GPGPU but is much larger and can be used to store larger amounts of data. GPGPUs typically employ multiple memory controllers which provide multiple simultaneous access channels to the physical memory to satisfy the high bandwidth requirements.

2.2. DDR Memory and HBM

2.3. Prior Works

3. GPGPU Prefetcher Design

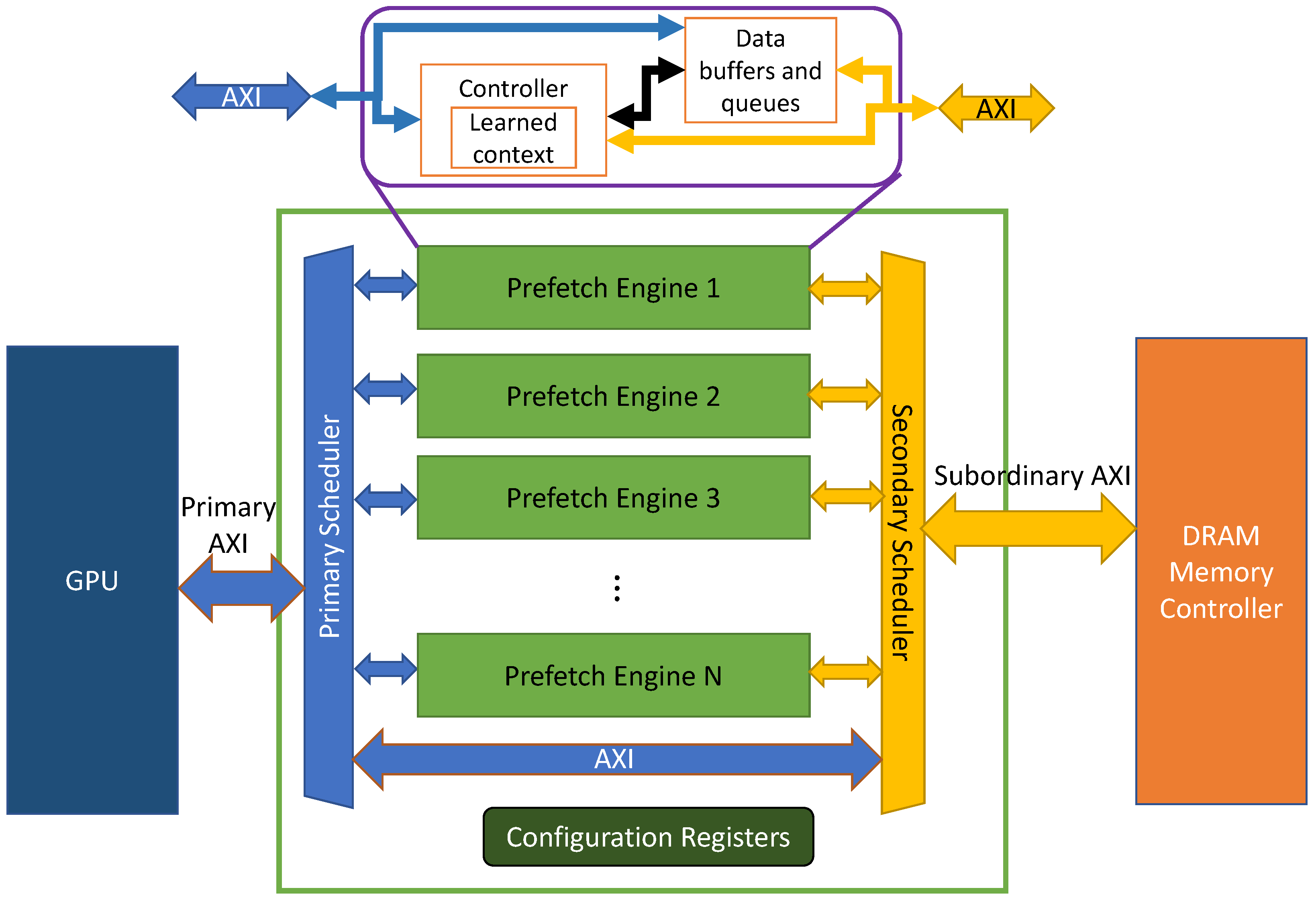

3.1. Microarchitectural Overview

- Detection and learning capability of relevant transactions. Based on the arbitration configuration policy (described later) and the prefetch engine context, each transaction is either claimed by its dedicated prefetch engine, which takes ownership of handling it, or forwarded to the DRAM controller.

- Stride access detection and prediction. The prefetch engine can exploit both spatial and temporal locality of memory accesses on the primary AXI bus. Repeated accesses on the primary AXI bus (temporal locality) can leverage fast access to data stored in the prefetch engine data buffers. Additionally, memory accesses with a fixed address stride are learned by the prefetch engine resulting in prefetching data blocks with a corresponding stride.

- Self-learned context, which maintains the state of the engine during the prefetch operation. The context consists of the following elements:

  - (a) AXI transaction ID (AXI ID): Specifying the transaction ID field on AXI bus for the prefetch transactions. The AXI ID is used to avoid ordering violations and guarantee consistency and coherency of all transactions within the same AXI ID.

  - (b) AXI address: Identifying the next address to be prefetched from the memory system.

  - (c) AXI burst length: Denoting the number of beats required for a burst operation. Typically, consecutive memory accesses are assumed to have the same burst length and size.

  - (d) Stride: Specifies the learned distance between two consecutive memory accesses issued on the primary AXI bus that are within the memory region of the prefetch engine.

- Flush mechanism: When a new transaction mapped to the prefetch engine misses the learned context, i.e., the requested data are not available in the data buffer or when the stride is changed, the prefetch engine will flush its learned context and restart the learning process.

- Watchdog timer: Upon detecting a lack of activity on either the primary AXI bus or the subordinate AXI bus (due to a potential system failure or deadlock), a designated watchdog mechanism flushes the learned context of the prefetcher so that it can start learning a new context.

- Configurability: Allowing users to configure prefetch engine functionality, which will be further described.

3.2. Prefetch Engine Microarchitecture

- Read or write memory accesses that are outside the address range of the prefetch engines are forwarded directly to the DRAM controller.

- Read memory accesses that hit the address window of a prefetch engine are claimed by that engine, which takes ownership of the transaction and generates the read response to the GPGPU.

- Write memory accesses within the address range of the data stored in the prefetch buffer flush the learned context. The data prefetched by the engine is discarded, outstanding prefetch requests are canceled, and the prefetch engine restarts the learning process.
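The three arbitration rules above can be summarized in a short behavioral sketch. This is an illustration only, not the authors' RTL: the names `EngineWindow`, `Action`, and `arbitrate` are hypothetical, the buffer is modeled as a set of block start addresses (exact-match rather than range overlap), and a write that falls inside the window but misses the buffered data is assumed to be forwarded, which the text does not state explicitly.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Action(Enum):
    FORWARD = auto()  # pass the transaction through to the DRAM controller
    CLAIM = auto()    # prefetch engine takes ownership of the read
    FLUSH = auto()    # write hit on buffered data: drop the learned context


@dataclass
class EngineWindow:
    base: int   # hypothetical name: first address owned by the engine
    limit: int  # hypothetical name: last address owned by the engine (inclusive)
    buffered: set = field(default_factory=set)  # start addresses of buffered blocks

    def in_range(self, addr: int) -> bool:
        return self.base <= addr <= self.limit


def arbitrate(engine: EngineWindow, is_write: bool, addr: int) -> Action:
    """Classify one transaction according to the three rules in the list above."""
    if not engine.in_range(addr):
        return Action.FORWARD          # rule 1: outside the engine's range
    if not is_write:
        return Action.CLAIM            # rule 2: read inside the window
    if addr in engine.buffered:
        return Action.FLUSH            # rule 3: write hits prefetched data
    return Action.FORWARD              # assumption: other writes pass through


engine = EngineWindow(base=0x1000, limit=0x1FFF, buffered={0x1008})
decisions = [
    arbitrate(engine, False, 0x3000),
    arbitrate(engine, False, 0x1004),
    arbitrate(engine, True, 0x1008),
]
```

In this sketch the write that triggers a flush would still need to reach memory; how the hardware orders the flush and the write is not modeled here.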

3.3. Controller Microarchitecture

- IDLE. The initial state. Upon the first read request that falls into the prefetch engine address range, the predictor stores the address, AXI ID, and AXI burst length, and transitions to the ARM state.

- ARM. Upon a read request that falls into the prefetch engine address range, if the AXI ID and burst length match those learned in the IDLE state, then (1) the stride is stored in the prefetch engine context as the distance from the address stored in the context to the current address, and (2) the predictor transitions to the ACTIVE state. In case of a mismatch, the predictor transitions to the CLEANUP state.

- ACTIVE. On every cycle, the predictor issues consecutive prefetch requests if the following conditions apply: (1) the number of outstanding prefetch requests has not reached the configured limit (specified in the configuration registers, described later); (2) the prefetch queue in the data plane has sufficient storage space for the next prefetch request; and (3) the predicted prefetch address falls within the configured address range. In case of a new read request that mismatches the learned context, the controller transitions to the CLEANUP state.

- CLEANUP. This state can be reached by the conditions that have been described previously, or by a special signal indication from the arbiter. It prevents receiving new requests from the AXI initiator until the completion of all outstanding requests, to ensure a safe flush.
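The four predictor states can be sketched as a small behavioral model. This is a hedged illustration rather than the actual controller: the class `Predictor` and its method names are hypothetical, a context mismatch in ACTIVE is assumed to mean "different AXI ID, different burst length, or an address that is not the previous address plus the learned stride", and the return to IDLE after the outstanding requests drain follows the flow described in Section 3.6.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class State(Enum):
    IDLE = auto()
    ARM = auto()
    ACTIVE = auto()
    CLEANUP = auto()


@dataclass
class Predictor:
    base: int             # start of the configured address range (assumed inclusive)
    limit: int            # end of the configured address range (assumed inclusive)
    max_outstanding: int  # configured limit on outstanding prefetch requests
    state: State = State.IDLE
    axi_id: Optional[int] = None
    burst_len: Optional[int] = None
    last_addr: Optional[int] = None
    stride: Optional[int] = None

    def in_window(self, addr: int) -> bool:
        return self.base <= addr <= self.limit

    def on_read(self, addr: int, axi_id: int, burst_len: int) -> None:
        """Advance the state machine on a demand read from the AXI initiator."""
        if not self.in_window(addr) or self.state is State.CLEANUP:
            return  # not this engine's request, or new requests are blocked
        same_ctx = (axi_id, burst_len) == (self.axi_id, self.burst_len)
        if self.state is State.IDLE:
            self.axi_id, self.burst_len, self.last_addr = axi_id, burst_len, addr
            self.state = State.ARM
        elif self.state is State.ARM and same_ctx:
            self.stride = addr - self.last_addr
            self.last_addr = addr
            self.state = State.ACTIVE
        elif (self.state is State.ACTIVE and same_ctx
              and addr == self.last_addr + self.stride):
            self.last_addr = addr      # access follows the learned stride
        else:
            self.state = State.CLEANUP  # mismatch with the learned context

    def may_issue_prefetch(self, outstanding: int, queue_has_space: bool,
                           next_addr: int) -> bool:
        """The three ACTIVE-state conditions for issuing a prefetch request."""
        return (self.state is State.ACTIVE
                and outstanding < self.max_outstanding
                and queue_has_space
                and self.in_window(next_addr))

    def on_drained(self) -> None:
        """Called once no outstanding requests remain: flush and go to IDLE."""
        if self.state is State.CLEANUP:
            self.axi_id = self.burst_len = self.last_addr = self.stride = None
            self.state = State.IDLE
```

A single `Predictor` instance corresponds to one prefetch engine; the special flush indication from the arbiter is not modeled and would simply be another path into CLEANUP.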

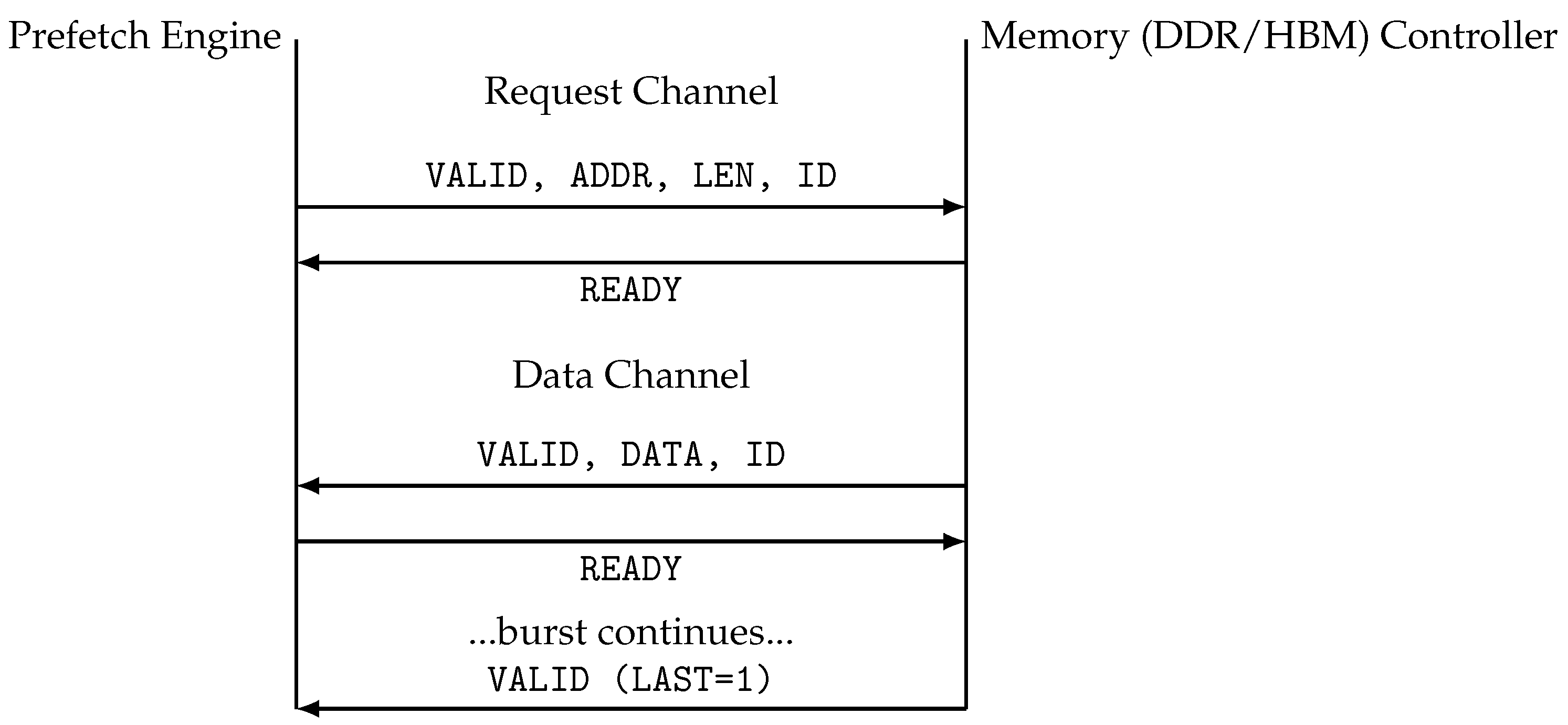

- Valid read request from the AXI initiator. When a new transaction is initiated on the primary AXI bus, the handler acknowledges the initiator’s request and assigns a new request to the data path. In the case of a read request falling into the prefetch window that has not been prefetched yet, it issues a new read request to the subordinate AXI bus.

- Data path responses. When new read responses have been prefetched to the data path, the handler dispatches the data from the data path to the AXI initiator.

- Subordinate AXI bus pending responses. When the subordinate AXI bus initiates a response, the handler acknowledges the subordinate bus response and places the data responses into the data path buffers.

- Ready address prediction. When a new address prediction is ready, the handler initiates a new prefetch transaction to the subordinate AXI bus.

3.4. Data Path

- The head-of-queue pointer, which identifies the block at the front of the queue;

- The tail pointer, which indicates the next available block to be populated;

- The burst offset pointer, which tracks the position of the next AXI beat within an ongoing burst transfer.

- Reserve. Allocates a container block in the queue for a new prefetch request.

- Read. Retrieves data from a container block for transfer to the primary AXI bus initiator.

- Write. Stores incoming data from the subordinate into a previously reserved block within the queue. The controller provides the burst length information from the prefetch engine context as part of this command.

- Flush. Used by the controller to clear the container data when transitioning to CLEANUP state.

- Queue Almost Full. Signals that the container queue has reached a predefined “almost full” threshold. This notification informs the controller about the queue’s occupancy level, enabling proactive management of incoming data.

- Prefetch Request Count. Provides the controller with the total number of prefetched requests currently stored in the container queue. This count allows the controller to enforce the configured limit on outstanding prefetch requests.

- Outstanding Request. Indicates the presence of at least one pending request in the queue. During the controller’s CLEANUP state, this signal ensures that the controller refrains from initiating a flush operation until all outstanding requests have been fully processed.
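The queue commands and status signals listed above can be illustrated with a minimal software model. This is a sketch under stated assumptions, not the hardware data path: the class `ContainerQueue` and its member names are hypothetical, a `deque` stands in for the explicit head and tail pointers, responses are assumed to arrive in request order, the burst length is treated as the number of beats, and "outstanding" is interpreted as "a block has been reserved but its data has not yet arrived".

```python
from collections import deque


class ContainerQueue:
    def __init__(self, capacity: int, almost_full_threshold: int):
        self.capacity = capacity
        self.almost_full_threshold = almost_full_threshold
        self.blocks = deque()   # front = head of queue, back = tail
        self.burst_offset = 0   # next beat to read within the head block

    def reserve(self) -> bool:
        """Reserve: allocate a container block for a new prefetch request."""
        if len(self.blocks) >= self.capacity:
            return False
        self.blocks.append(None)  # None marks "reserved, data still pending"
        return True

    def write(self, beats, burst_len: int) -> None:
        """Write: store response data into the oldest reserved, empty block."""
        if len(beats) != burst_len:
            raise ValueError("response does not match the learned burst length")
        for i, block in enumerate(self.blocks):
            if block is None:
                self.blocks[i] = list(beats)
                return
        raise RuntimeError("no reserved block is waiting for data")

    def read(self):
        """Read: return the next beat of the head block, or None if not ready."""
        if not self.blocks or self.blocks[0] is None:
            return None
        beat = self.blocks[0][self.burst_offset]
        self.burst_offset += 1
        if self.burst_offset == len(self.blocks[0]):
            self.blocks.popleft()   # whole burst delivered: free the block
            self.burst_offset = 0
        return beat

    def flush(self) -> None:
        """Flush: clear all container data (used on the way to CLEANUP/IDLE)."""
        self.blocks.clear()
        self.burst_offset = 0

    @property
    def almost_full(self) -> bool:
        return len(self.blocks) >= self.almost_full_threshold

    @property
    def prefetch_request_count(self) -> int:
        return len(self.blocks)

    @property
    def outstanding(self) -> bool:
        return any(block is None for block in self.blocks)
```

A controller model would call `reserve()` before issuing a prefetch, `write()` when the subordinate bus responds, and would check `outstanding` before calling `flush()`.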

3.5. Configurable Features

- Address Range Configuration (Bar and Limit). Specifies the memory address range for each prefetch engine. Only read requests within the defined address range are processed by the prefetch engine, ensuring efficient handling of memory operations.

- Prefetch Engine Performance Settings.

  - Outstanding Prefetch Requests. Sets an upper limit on the number of outstanding prefetch requests. This limit prevents oversubscription of a prefetch engine and ensures fair resource allocation, avoiding starvation of other engines.

  - Bandwidth Throttling. Defines the maximum rate of prefetch requests. This configuration enables control over the bandwidth consumption of individual prefetch engines, optimizing system performance.

- Almost Full Threshold. Establishes the threshold value for prefetch buffer occupancy, signaling when the buffer is nearing its capacity.

- Watchdog Timer. Specifies the time limit for the watchdog timer, which will be elaborated upon in subsequent sections.
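To make the configuration space concrete, the parameters above can be grouped into one record per prefetch engine. The sketch below is illustrative only: the field names are hypothetical and do not correspond to actual register names, the address range is assumed to be inclusive at both ends, and the throttling rate is assumed to be expressed in prefetch requests per cycle, matching the "[1/Cycles]" unit used in the results tables.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PrefetchEngineConfig:
    bar: int                    # start of the engine's address range
    limit: int                  # end of the engine's address range (assumed inclusive)
    max_outstanding: int        # upper limit on outstanding prefetch requests
    throttle_rate: float        # maximum prefetch requests per cycle, in (0, 1]
    almost_full_threshold: int  # buffer occupancy that raises "almost full"
    watchdog_cycles: int        # inactivity limit before the context is flushed

    def __post_init__(self):
        if self.bar > self.limit:
            raise ValueError("bar must not exceed limit")
        if self.max_outstanding < 1:
            raise ValueError("at least one outstanding request is required")
        if not 0 < self.throttle_rate <= 1:
            raise ValueError("throttle_rate must be in (0, 1]")

    def owns(self, addr: int) -> bool:
        """True if a read at this address belongs to this engine."""
        return self.bar <= addr <= self.limit

    def min_issue_interval(self) -> int:
        """Minimum whole number of cycles between two prefetch requests."""
        return math.ceil(round(1 / self.throttle_rate, 9))


cfg = PrefetchEngineConfig(bar=0x1000, limit=0x1FFF, max_outstanding=1,
                           throttle_rate=0.01, almost_full_threshold=3,
                           watchdog_cycles=1024)
interval = cfg.min_issue_interval()  # 100 cycles for a rate of 0.01
```

The values in `cfg` are placeholders chosen for the example, apart from the throttling rate of 0.01 and the outstanding limit of 1, which appear in the results tables for the NW benchmark.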

3.6. Prefetch Engine Flow of Operation

- Read request. Address: 0x1000, burst length: 3, AXI ID: 10.

- The prefetch engine stores the address, AXI ID, and burst length in the prefetch context.

- The prefetch engine transitions into the ‘ARM’ state.

- Read request. Address: 0x1004, burst length: 3, AXI ID: 10.

- Since the access address falls into the configured window and matches the learned burst length and AXI ID, the prefetch engine calculates the stride and stores it in the prefetch context, which now holds: stride: 0x4, burst length: 3, AXI ID: 10.

- The prefetch engine transitions into the ‘ACTIVE’ state.

- The prefetch engine issues a prefetch request to fetch the next predicted address: 0x1008, burst length: 3, AXI ID: 10. Once the data of the prefetch request is received from memory, the prefetch engine stores the data in the container.

- The GPGPU issues a read request on address: 0x1008, burst length: 3, AXI ID: 10. Since the data has already been prefetched, the data is forwarded immediately to the GPGPU.

- The GPGPU performs a read request of address: 0x1100, burst length: 3, AXI ID: 10.

- The request has a mismatch with the learned stride in the prefetch engine.

- The prefetch engine moves to the CLEANUP state and forwards the request to the subordinate AXI bus.

- When there are no outstanding requests, the prefetch engine flushes the learned context and data container and transitions to IDLE state.
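The sequence above can be replayed with a few lines of address arithmetic. This is a simplified sketch, not a cycle-accurate model: the function `replay` and its outcome labels are hypothetical, timing is ignored (each prefetch is assumed to complete before the next demand read arrives), only one prefetch is kept in flight, and the address window check is omitted.

```python
def replay(reads):
    """Replay demand reads given as (addr, burst_len, axi_id) tuples.

    Returns a list of (addr, outcome) pairs, where outcome is one of
    "learn-context", "learn-stride", "hit", or "flush".
    """
    ctx = None          # (axi_id, burst_len) learned on the first read
    last = None         # address of the most recent demand read
    stride = None       # learned distance between consecutive reads
    prefetched = set()  # addresses whose data is already in the container
    log = []
    for addr, burst_len, axi_id in reads:
        if ctx is None:
            # IDLE -> ARM: store address, AXI ID, and burst length
            ctx, last = (axi_id, burst_len), addr
            log.append((addr, "learn-context"))
            continue
        same_ctx = (axi_id, burst_len) == ctx
        if same_ctx and stride is None:
            # ARM -> ACTIVE: compute the stride and prefetch the next address
            stride = addr - last
            last = addr
            prefetched.add(addr + stride)
            log.append((addr, "learn-stride"))
        elif same_ctx and addr in prefetched:
            # ACTIVE: data already prefetched, serve it and keep running ahead
            prefetched.discard(addr)
            last = addr
            prefetched.add(addr + stride)
            log.append((addr, "hit"))
        else:
            # Mismatch: CLEANUP, then flush context and container, back to IDLE
            ctx = last = stride = None
            prefetched.clear()
            log.append((addr, "flush"))
    return log


example = [(0x1000, 3, 10), (0x1004, 3, 10), (0x1008, 3, 10), (0x1100, 3, 10)]
outcomes = [outcome for _, outcome in replay(example)]
```

For the four reads of the example, `outcomes` lists the context learning, the stride learning (0x4), the prefetch hit at 0x1008, and the flush triggered by the access to 0x1100.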

4. Simulation Environment

4.1. DRAM Memory Stub

- Every prefetch engine is associated with a single DRAM memory window.

- The DRAM memory stub maintains a single queue for all memory requests, which are served under a “First-Come First-Serve” (FCFS) policy.

- The DRAM memory controller assumes an open-page policy, i.e., the last accessed memory page is kept open. This allows accesses that fall into the open page to be served faster (fast page mode).
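The stub behavior described above can be approximated in a few lines. The sketch below is an assumption-laden illustration, not the actual testbench code: the class `DramStub` is hypothetical, a single open page is tracked for the whole memory (no banks or channels), the first access is treated as a page miss, and the page size and latencies are the values listed for the experimental setup in Section 5 (2 KB pages, 120 ns page hit, 150 ns page miss).

```python
from collections import deque

PAGE_SIZE_BYTES = 2048  # page size from the experimental setup
PAGE_HIT_NS = 120       # latency when the access falls into the open page
PAGE_MISS_NS = 150      # latency when a different page must be opened


class DramStub:
    def __init__(self):
        self.queue = deque()   # single FCFS queue for all requests
        self.open_page = None  # open-page policy: last accessed page stays open
        self.now_ns = 0        # time at which the stub becomes free

    def submit(self, addr: int, arrival_ns: int = 0) -> None:
        self.queue.append((arrival_ns, addr))

    def service_next(self):
        """Serve the oldest request; return (addr, latency_ns, completion_ns)."""
        arrival_ns, addr = self.queue.popleft()
        page = addr // PAGE_SIZE_BYTES
        latency = PAGE_HIT_NS if page == self.open_page else PAGE_MISS_NS
        self.open_page = page
        start = max(self.now_ns, arrival_ns)  # wait for earlier requests
        self.now_ns = start + latency
        return addr, latency, self.now_ns


stub = DramStub()
for address in (0x0000, 0x0040, 0x0800, 0x0010):
    stub.submit(address)
results = [stub.service_next() for _ in range(4)]
latencies = [latency for _, latency, _ in results]
```

In this example the second access hits the open page, while the third and fourth each open a different page, so FCFS ordering makes the interleaved access pattern pay the miss latency twice.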

4.2. GPGPU and CPU Stubs

- Trace Module. Responsible for reading the gpgpu-sim trace file, parsing the memory access records, and supplying the transactions.

- AXI Initiator Module. Converts the transactions provided by the trace module into AXI-compliant transactions and initiates them on the primary AXI bus.

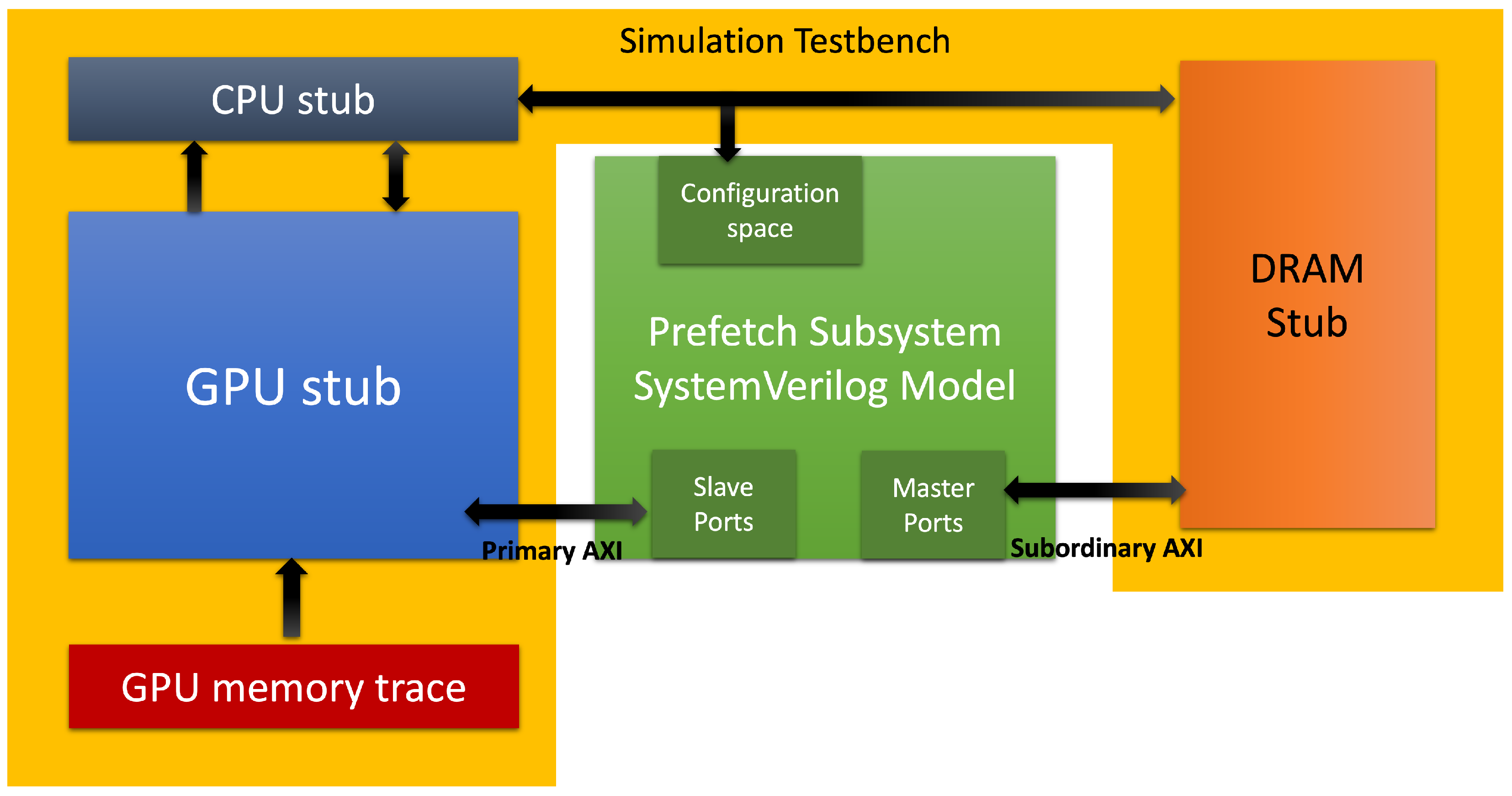

4.3. Complete Simulation Flow

- Trace Generation ❶. Memory access traces are generated using gpgpu-sim executing CUDA-based applications. The traces contain memory access records, including cycle numbers and addresses.

- Tracer Module ❷. The Tracer module reads the gpgpu-sim trace files and processes the memory access records. It ensures that transactions are generated on the AXI bus while adhering to the timing constraints specified in the trace file.

- Prefetching Engine. The prefetcher is the core component under evaluation. It interacts with two sets of AXI ports:

  - Slave Ports ❸. Receive memory transactions from the Tracer module.

  - Master Ports ❹. Issue prefetching requests to the DRAM memory controller and DRAM stub.

  Additionally, the Configuration Space AXI Port is managed by the CPU stub, which orchestrates the simulation and configures the prefetcher.

- Memory Controller and DRAM Stub ❺. The Memory Controller and DRAM stub emulate DRAM memory behavior, including latency modeling for open and closed page accesses. This stub responds to read and write requests generated by the prefetcher and Tracer modules.

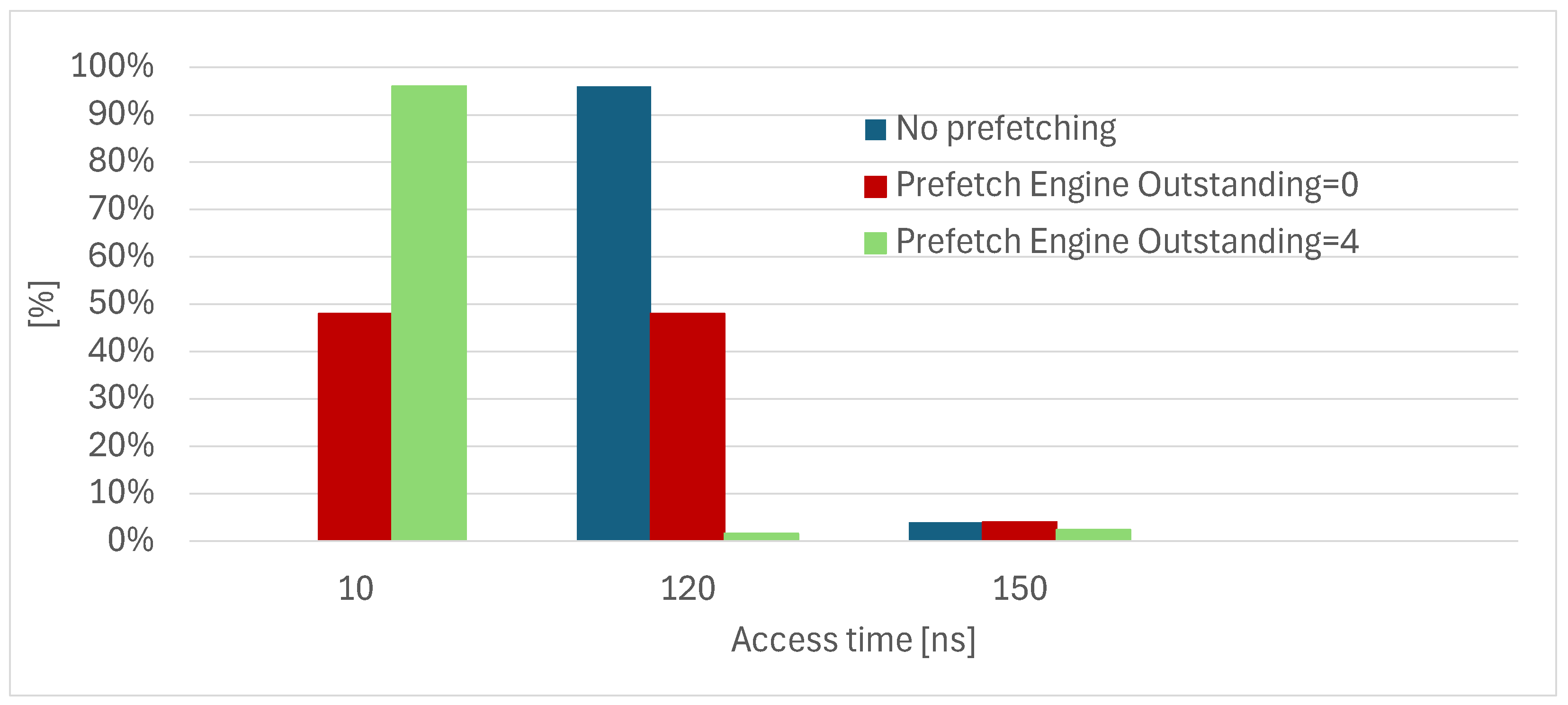

- Testbench Monitors ❻. Testbench Monitors collect statistics during the simulation, including memory latency, prefetching efficiency (defined as the fraction of memory accesses served by useful prefetches, as reflected in the reduced-latency bins of the histograms), and overall system performance. These metrics are compiled into a Statistics Report File for post-simulation analysis.

5. Experimental Analysis

- Operating Frequency: 667 MHz.

- DRAM Stub Configuration (aligned with DDR5 specifications):

  - Page Size: 2 KBytes.

  - Access Latency: 120 ns for page hits and 150 ns for page misses.

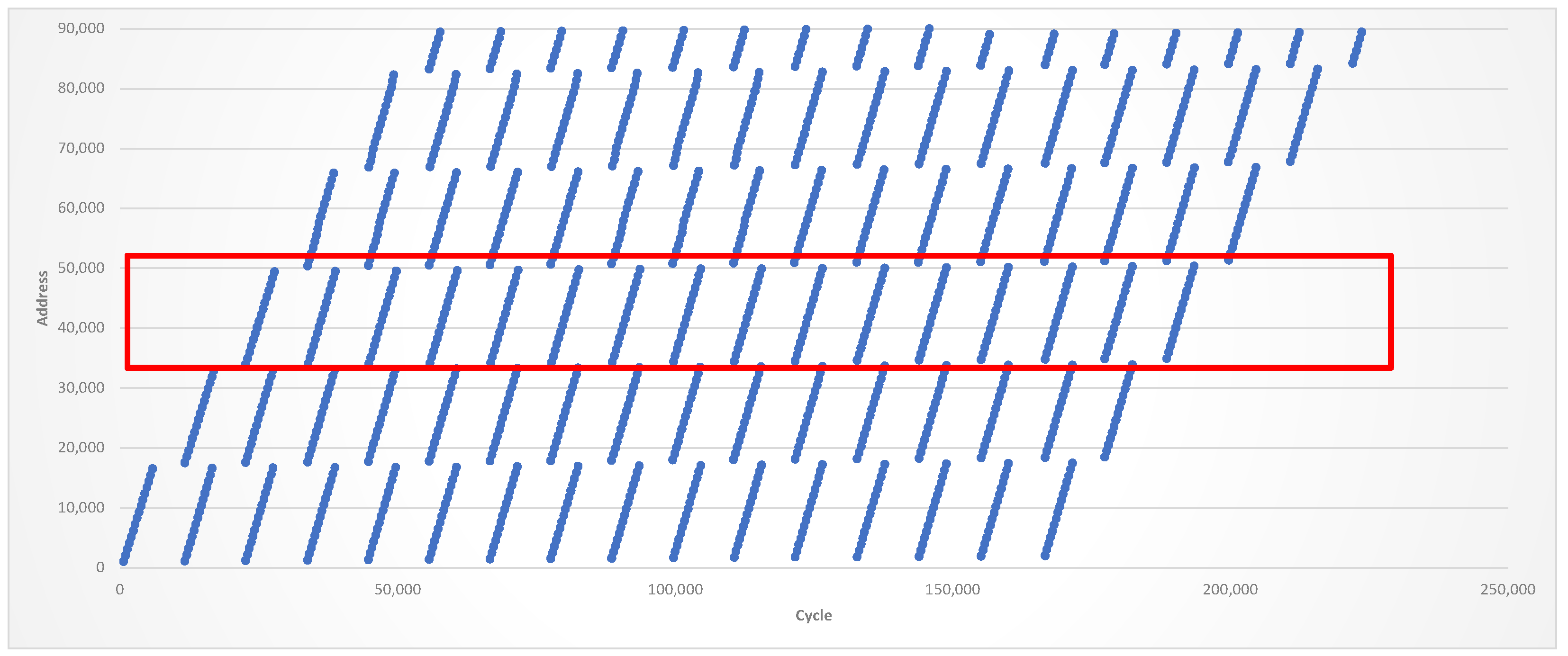

5.1. Benchmark Characteristics

5.1.1. CNN Model

5.1.2. The Needleman–Wunsch Algorithm

    d ← Gap penalty score
    for i = 0 to length(A)
        F(i,0) ← d ∗ i
    for j = 0 to length(B)
        F(0,j) ← d ∗ j
    for i = 1 to length(A)
        for j = 1 to length(B)
            Match ← F(i − 1, j − 1) + S(A_i, B_j)
            Delete ← F(i − 1, j) + d
            Insert ← F(i, j − 1) + d
            F(i,j) ← max(Match, Insert, Delete)
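For reference, the pseudocode above translates directly into a runnable form. The sketch below is a plain sequential Python version, not the CUDA benchmark used in the evaluation; the function name and the default scores (match +1, mismatch −1, gap −1) are assumptions chosen for illustration, since the scoring function S and the gap penalty d are not specified here.

```python
def needleman_wunsch_matrix(a: str, b: str, gap: int = -1,
                            match: int = 1, mismatch: int = -1):
    """Fill the Needleman-Wunsch score matrix F for sequences a and b."""

    def s(x: str, y: str) -> int:
        # Similarity score S(A_i, B_j); a simple match/mismatch scheme is assumed.
        return match if x == y else mismatch

    rows, cols = len(a) + 1, len(b) + 1
    f = [[0] * cols for _ in range(rows)]

    # Boundary conditions: aligning a prefix against an empty sequence.
    for i in range(rows):
        f[i][0] = gap * i
    for j in range(cols):
        f[0][j] = gap * j

    # Each cell depends on its upper-left, upper, and left neighbors.
    for i in range(1, rows):
        for j in range(1, cols):
            match_score = f[i - 1][j - 1] + s(a[i - 1], b[j - 1])
            delete = f[i - 1][j] + gap
            insert = f[i][j - 1] + gap
            f[i][j] = max(match_score, insert, delete)
    return f


matrix = needleman_wunsch_matrix("AC", "AGC")
score = matrix[-1][-1]  # optimal global alignment score for the two sequences
```

The bottom-right entry of the matrix holds the optimal alignment score. The fixed three-neighbor dependency of every cell is what gives the matrix traversal its regular access structure.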

5.2. Performance Analysis

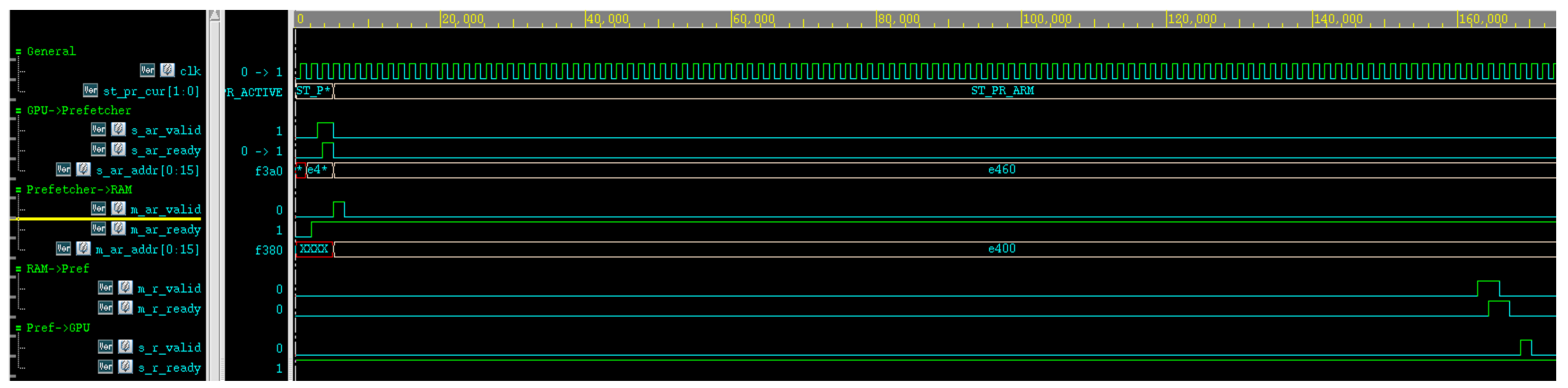

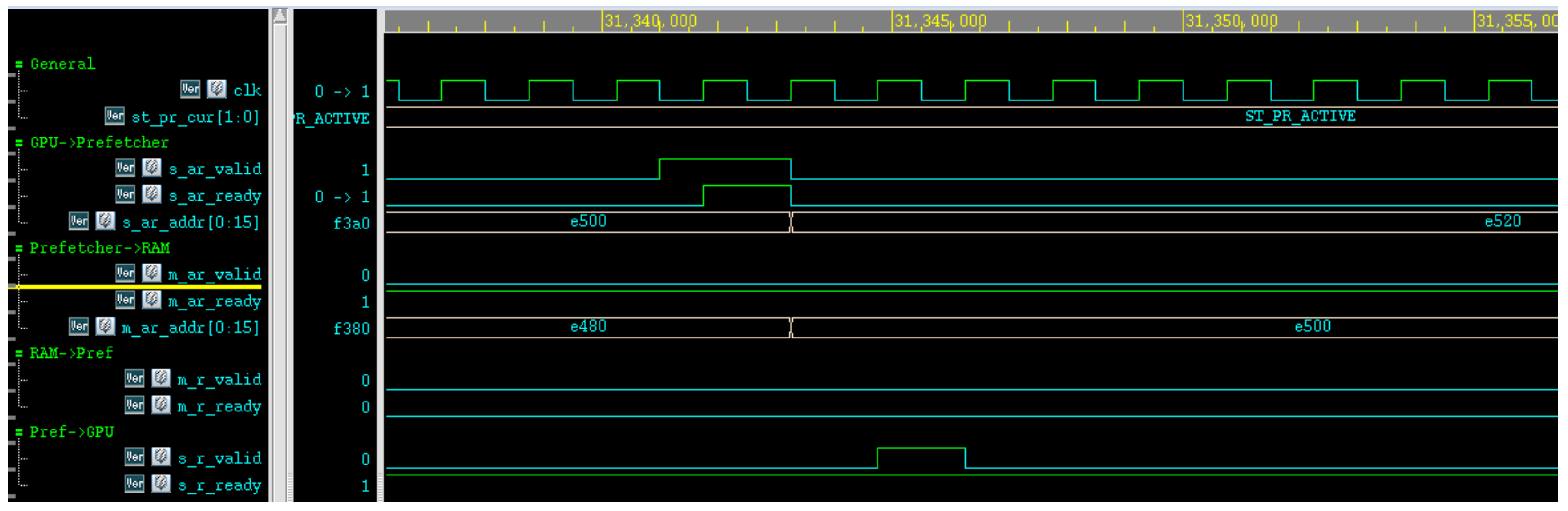

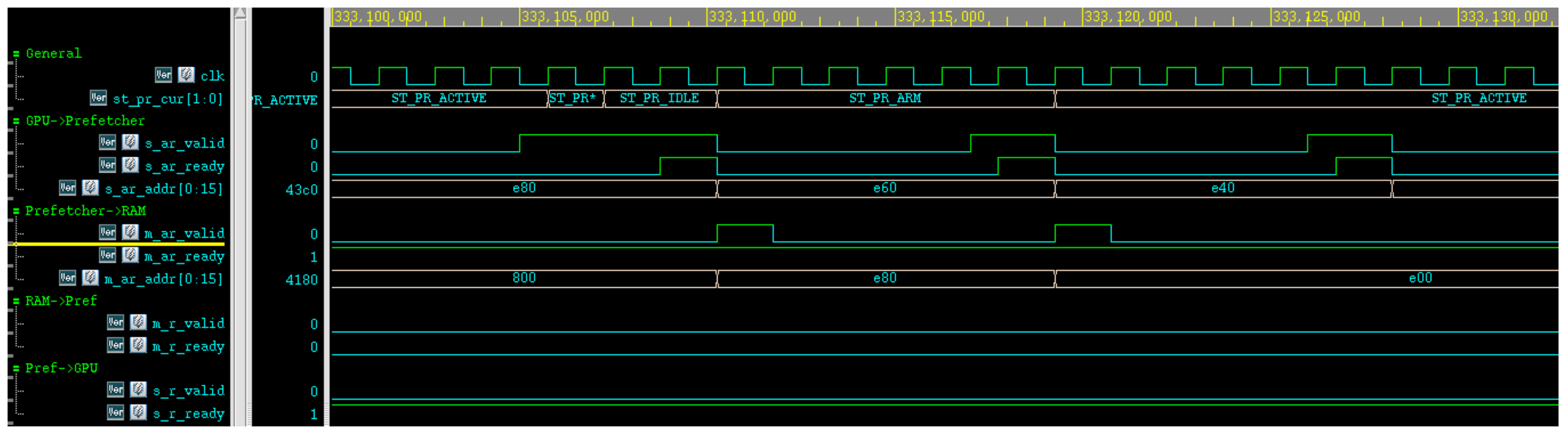

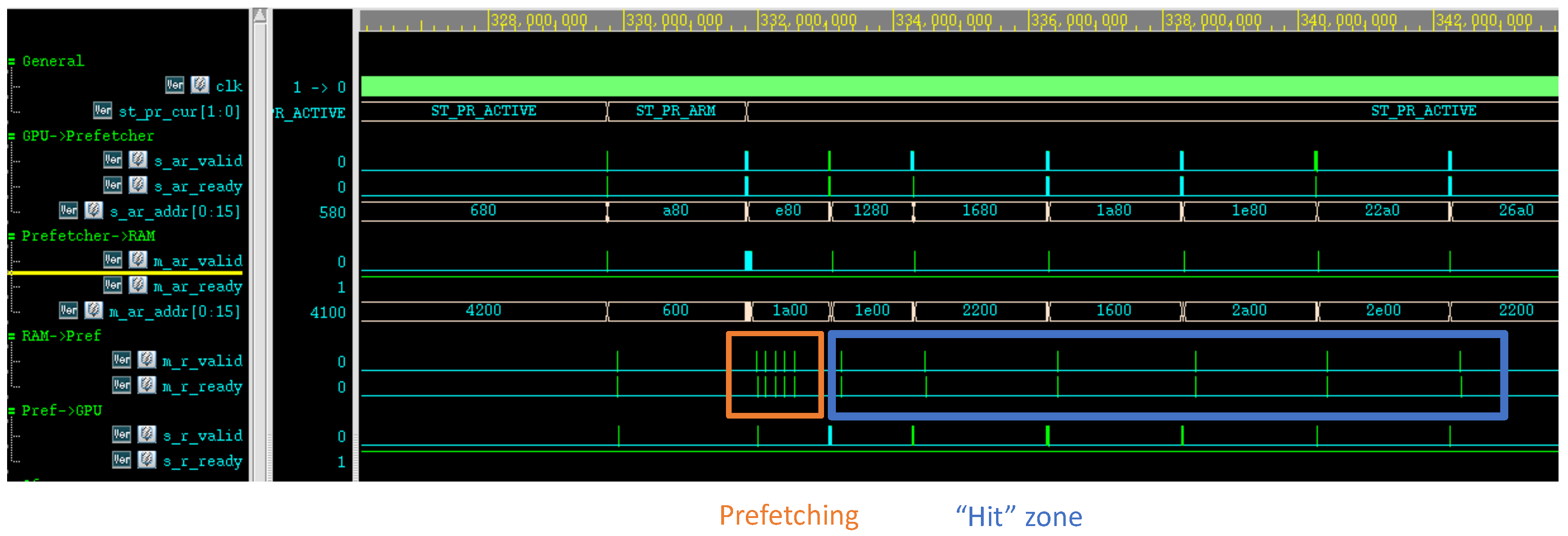

- General Signals: Includes the clock signal (clk) and the predictor state of the prefetcher (st_pr_cur).

- GPU to Prefetcher: AXI signals representing read requests initiated by the GPU and directed to the prefetcher.

- Prefetcher to RAM: AXI signals representing read requests initiated by the prefetcher and forwarded to the DRAM memory stub.

- RAM to Prefetcher: AXI signals representing read data responses sent from the DRAM memory stub back to the prefetcher.

- Prefetcher to GPU: AXI signals representing read data responses sent from the prefetcher to the GPU.

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Whitepaper: NVIDIA’s Next Generation CUDA Compute Architecture: Fermi. 2009. Available online: https://www.nvidia.com/content/PDF/fermi_white_papers/NVIDIA_Fermi_Compute_Architecture_Whitepaper.pdf (accessed on 2 October 2025).

- Kirk, D.B.; Hwu, W.W. Programming Massively Parallel Processors: A Hands-on Approach, 1st ed.; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2010.

- Luebke, D.; Harris, M.; Govindaraju, N.; Lefohn, A.; Houston, M.; Owens, J.; Segal, M.; Papakipos, M.; Buck, I. GPGPU: General-purpose computation on graphics hardware. In Proceedings of the 2006 ACM/IEEE Conference on Supercomputing, Tampa, FL, USA, 11–17 November 2006; p. 208-es.

- Raju, K.; Chiplunkar, N.N. A survey on techniques for cooperative CPU-GPU computing. Sustain. Comput. Inform. Syst. 2018, 19, 72–85.

- Hu, L.; Xilong, C.; Si-Qing, Z. A closer look at GPGPU. ACM Comput. Surv. (CSUR) 2016, 48, 1–20.

- Owens, J.D.; Luebke, D.; Govindaraju, N.; Harris, M.; Krüger, J.; Lefohn, A.E.; Purcell, T.J. A survey of general-purpose computation on graphics hardware. In Computer Graphics Forum; Blackwell Publishing Ltd.: Oxford, UK, 2007; Volume 26, pp. 80–113.

- Dokken, T.; Hagen, T.R.; Hjelmervik, J.M. An introduction to general-purpose computing on programmable graphics hardware. In Geometric Modelling, Numerical Simulation, and Optimization: Applied Mathematics at SINTEF; Springer: Berlin/Heidelberg, Germany, 2007; pp. 123–161.

- Kim, Y.; Choi, H.; Lee, J.; Kim, J.S.; Jei, H.; Roh, H. Efficient large-scale deep learning framework for heterogeneous multi-GPU cluster. In Proceedings of the 2019 IEEE 4th International Workshops on Foundations and Applications of Self Systems, Umea, Sweden, 16–20 June 2019; pp. 176–181.

- Mittal, S.; Vaishay, S. A survey of techniques for optimizing deep learning on GPUs. J. Syst. Archit. 2019, 99, 101635.

- Ovtcharov, K.; Ruwase, O.; Kim, J.Y.; Fowers, J.; Strauss, K.; Chung, E.S. Accelerating deep convolutional neural networks using specialized hardware. Microsoft Res. Whitepaper 2015, 2, 1–4.

- Mittal, S. A survey of recent prefetching techniques for processor caches. ACM Comput. Surv. (CSUR) 2016, 49, 1–35.

- Falahati, H.; Hessabi, S.; Abdi, M.; Baniasadi, A. Power-efficient prefetching on GPGPUs. J. Supercomput. 2015, 71, 2808–2829.

- Jia, W.; Shaw, K.A.; Martonosi, M. Characterizing and improving the use of demand-fetched caches in GPUs. In Proceedings of the 26th ACM International Conference on Supercomputing, Venice, Italy, 25–29 June 2012; pp. 15–24.

- Jia, W.; Shaw, K.; Martonosi, M. MRPB: Memory request prioritization for massively parallel processors. In Proceedings of the 2014 IEEE 20th International Symposium on High Performance Computer Architecture (HPCA), Orlando, FL, USA, 15–19 February 2014; pp. 272–283.

- Torres, Y.; Gonzalez-Escribano, A.; Llanos, D.R. Understanding the impact of CUDA tuning techniques for Fermi. In Proceedings of the International Conference on High Performance Computing and Simulation (HPCS), Istanbul, Turkey, 4–8 July 2011; pp. 631–639.

- Xie, X.; Liang, Y.; Wang, Y.; Sun, G.; Wang, T. Coordinated static and dynamic cache bypassing for GPUs. In Proceedings of the 2015 IEEE 21st International Symposium on High Performance Computer Architecture (HPCA), Burlingame, CA, USA, 7–11 February 2015; pp. 76–88.

- AXI Protocol Specification. Document Number: ARM IHI 0022, August 2025. Available online: https://developer.arm.com/documentation/ihi0022/latest (accessed on 2 October 2025).

- Yu, S. Semiconductor Memory Devices and Circuits; CRC Press: Boca Raton, FL, USA, 2022.

- Lee, D.U. Tutorial: HBM DRAM and 3D Stacked Memory. In Proceedings of the 2022 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 20–24 February 2022; pp. 1–113.

- Callahan, D.; Kennedy, K.; Porterfield, A. Software Prefetching. In Proceedings of the Fourth International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS IV), New York, NY, USA, 8–11 April 1991; pp. 40–52.

- Mowry, T.C.; Lam, M.S.; Gupta, A. Design and Evaluation of a Compiler Algorithm for Prefetching. ACM SIGPLAN Not. 1992, 27, 62–73.

- Caragea, G.C.; Tzannes, A.; Keceli, F.; Barua, R.; Vishkin, U. Resource-Aware Compiler Prefetching for Many-Cores. In Proceedings of the IEEE 9th International Symposium on Parallel and Distributed Computing, Istanbul, Turkey, 7–9 July 2010; pp. 133–140.

- Ainsworth, S.; Jones, T.M. Software prefetching for indirect memory accesses. In Proceedings of the IEEE/ACM International Symposium of Code Generation and Optimization (CGO), Austin, TX, USA, 4–8 February 2017; pp. 305–317.

- Hadade, I.; Jones, T.M.; Wang, F.; di Mare, L. Software Prefetching for Unstructured Mesh Applications. ACM Trans. Parallel Comput. 2020, 7, 1–23.

- Falsafi, B.; Wenisch, T.F. A Primer on Hardware Prefetching; Springer Nature: Berlin/Heidelberg, Germany, 2022.

- Jouppi, N.P. Improving direct-mapped cache performance by the addition of a small fully-associative cache and prefetch buffers. In Proceedings of the 17th Annual International Symposium on Computer Architecture, Seattle, WA, USA, 28–31 May 1990; pp. 364–373.

- Palacharla, S.; Kessler, R.E. Evaluating stream buffers as a secondary cache replacement. In Proceedings of the 21st International Symposium on Computer Architecture (ISCA), Chicago, IL, USA, 18–21 April 1994; pp. 24–33.

- Chen, T.F.; Baer, J.L. Effective hardware-based data prefetching for high-performance processors. IEEE Trans. Comput. 1995, 44, 609–623.

- Fu, J.W.C.; Patel, J.H.; Janssens, B.L. Stride directed prefetching in scalar processors. ACM Sigmicro Newsl. 1992, 23, 102–110.

- Nesbit, K.J.; Smith, J.E. Data Cache Prefetching Using a Global History Buffer. In Proceedings of the IEEE 10th International Symposium on High Performance Computer Architecture (HPCA’04), Madrid, Spain, 14–18 February 2004.

- Lee, J.; Lakshminarayana, N.B.; Kim, H.; Vuduc, R. Many-Thread Aware Prefetching Mechanisms for GPGPU Applications. In Proceedings of the 43rd Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Atlanta, GA, USA, 4–8 December 2010; pp. 213–224.

- Yang, Y.; Xiang, P.; Kong, J.; Zhou, H. A GPGPU compiler for memory optimization and parallelism management. ACM SIGPLAN Not. 2010, 45, 86–97.

- Jog, A.; Kayiran, O.; Nachiappan, N.C.; Mishra, A.K.; Kandemir, M.T.; Mutlu, O.; Iyer, R.; Das, C.R. OWL: Cooperative Thread Array Aware Scheduling Techniques for Improving GPGPU Performance. ACM SIGPLAN Not. 2013, 48, 395–406.

- Jeon, H.; Koo, G.; Annavaram, M. CTA-Aware Prefetching for GPGPU; Computer Engineering Technical Report Number CENG-2014-08; University of Southern California Los Angeles: Los Angeles, CA, USA, 2014.

- Lakshminarayana, N.B.; Kim, H. Spare Register Aware Prefetching for Graph Algorithms on GPUs. In Proceedings of the IEEE 20th International Symposium on High Performance Computer Architecture (HPCA), Orlando, FL, USA, 15–19 February 2014; pp. 614–625.

- Koo, G.; Jeon, H.; Liu, Z.; Kim, N.S.; Annavaram, M. CTA-Aware Prefetching and Scheduling for GPU. In Proceedings of the IEEE International Parallel and Distributed Processing Symposium (IPDPS), Vancouver, BC, Canada, 21–25 May 2018; pp. 137–148.

- Sethia, A.; Dasika, G.; Samadi, M.; Mahlke, S. APOGEE: Adaptive prefetching on GPUs for energy efficiency. In Proceedings of the 22nd International Conference on Parallel Architectures and Compilation Techniques, Edinburgh, UK, 7–11 September 2013; pp. 73–82.

- Jog, A.; Kayiran, O.; Mishra, A.K.; Kandemir, M.T.; Mutlu, O.; Iyer, R.; Das, C.R. Orchestrated Scheduling and Prefetching for GPGPUs. In Proceedings of the 40th Annual International Symposium on Computer Architecture (ISCA ’13), Tel-Aviv, Israel, 23–27 June 2013; pp. 332–343.

- Neves, N.; Tomás, P.; Roma, N. Stream data prefetcher for the GPU memory interface. J. Supercomput. 2018, 74, 2314–2328.

- Oh, Y.; Yoon, M.K.; Park, J.H.; Park, Y.; Ro, W.W. WASP: Selective Data Prefetching with Monitoring Runtime Warp Progress on GPUs. IEEE Trans. Comput. 2018, 67, 1366–1373.

- Oh, Y.; Kim, K.; Yoon, M.K.; Park, J.H.; Annavaram, M.; Ro, W.W. Adaptive Cooperation of Prefetching and Warp Scheduling on GPUs. IEEE Trans. Comput. 2019, 68, 609–616.

- Sadrosadati, M.; Mirhosseini, A.; Ehsani, S.B.; Sarbazi-Azad, H.; Drumond, M.; Falsafi, B.; Ausavarungnirun, R.; Mutlu, O. LTRF: Enabling High-Capacity Register Files for GPUs via Hardware/Software Cooperative Register Prefetching. ACM SIGPLAN Not. 2018, 53, 489–502.

- Guo, H.; Huang, L.; Lü, Y.; Ma, J.; Qian, C.; Ma, S.; Wang, Z. Accelerating BFS via Data Structure-Aware Prefetching on GPU. IEEE Access 2018, 6, 60234–60248.

- Zhang, P.; Srivastava, A.; Nori, A.V.; Kanna, R.; Prasanna, V.K. Fine-Grained Address Segmentation for Attention-Based Variable-Degree Prefetching. In Proceedings of the 19th ACM International Conference on Computing Frontiers (CF ’22), Turin, Italy, 17–22 May 2022; pp. 103–112.

- Forencich, A. Verilog AXI Components Github Repository. 2022. Available online: https://github.com/alexforencich/verilog-axi (accessed on 2 October 2025).

- Aamodt, T. GPGPU-Sim Manual. Available online: http://www.gpgpu-sim.org/manual (accessed on 2 October 2025).

- Needleman, S.B.; Wunsch, C.D. A general method applicable to the search for similarities in the amino acid sequence of two proteins. J. Mol. Biol. 1970, 48, 443–453.

- Huangfu, Y.; Zhang, W. Boosting GPU Performance by Profiling-Based L1 Data Cache Bypassing. In Proceedings of the 2015 15th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing, Shenzhen, China, 4–7 May 2015; pp. 1119–1122.

- Stoltzfus, L.; Emani, M.K.; Lin, P.; Liao, C. Data Placement Optimization in GPU Memory Hierarchy using Predictive Modeling. In Proceedings of the Workshop on Memory Centric High Performance Computing, Dallas, TX, USA, 11 November 2018.

- Li, M.; Zhang, Q.; Gao, Y.; Fang, W.; Lu, Y.; Ren, Y.; Xie, Z. Profile-Guided Temporal Prefetching. In Proceedings of the 52nd Annual International Symposium on Computer Architecture (ISCA ’25), Tokyo, Japan, 21–25 June 2025; pp. 572–585.

- Bakhoda, A.; Yuan, G.L.; Fung, W.W.L.; Wong, H.; Aamodt, T.M. Analyzing CUDA workloads using a detailed GPU simulator. In Proceedings of the IEEE International Symposium on Performance Analysis of Systems and Software, Boston, MA, USA, 26–28 April 2009; pp. 163–174.

- Schatz, M.; Trapnell, C.; Delcher, A.; Varshney, A. High-throughput sequence alignment using Graphics Processing Units. BMC Bioinform. 2007, 8, 474.

- Giles, M. Jacobi Iteration for a Laplace Discretisation on a 3D Structured Grid. Available online: https://people.maths.ox.ac.uk/gilesm/codes/laplace3d/laplace3d.pdf (accessed on 2 October 2025).

- Hesthaven, J.S.; Warburton, T. Nodal Discontinuous Galerkin Methods: Algorithms, Analysis, and Applications, 1st ed.; Springer Publishing Company, Incorporated: Berlin/Heidelberg, Germany, 2007.

- Harish, P.; Narayanan, P.J. Accelerating Large Graph Algorithms on the GPU Using CUDA. In Proceedings of the International Conference on High-Performance Computing, Goa, India, 18–21 December 2007; pp. 197–208.

- Michalakes, J.; Vachharajani, M. GPU acceleration of numerical weather prediction. In Proceedings of the IPDPS 2008: International Symposium on Parallel and Distributed Processing, Miami, FL, USA, 14–18 April 2008; pp. 1–7.

| Data Field | Number of Bits | Direction (Master/Slave) | Description |
|---|---|---|---|
| AXI Read Request Channel | | | |
| valid | 1 | Output/Input | Read request valid |
| ready | 1 | Input/Output | Read request ready |
| len | 8 | Output/Input | Burst length |
| addr | 64 | Output/Input | Read address |
| id | 7 | Output/Input | Read request transaction ID |
| AXI Read Data Channel | | | |
| valid | 1 | Input/Output | Read data valid |
| ready | 1 | Output/Input | Read data ready |
| last | 1 | Input/Output | Last read data indicator |
| data | 256 | Input/Output | Read data |
| id | 7 | Input/Output | Read response transaction ID |
| AXI Write Request Channel | | | |
| valid | 1 | Output/Input | Write request valid |
| ready | 1 | Input/Output | Write request ready |
| len | 8 | Output/Input | Burst length |
| addr | 64 | Output/Input | Write address |
| id | 7 | Output/Input | Write request transaction ID |

| Benchmark | Prefetch Outstanding Limit [Requests] | Block Size [Bytes] | Latency [ns] |
|---|---|---|---|
| CNN | 1 | 32 | 8 |
| NW | 1 | 256 | 65 |

| Benchmark | Prefetch Throttling Rate [1/Cycles] | Block Size [Bytes] | Latency [ns] |
|---|---|---|---|
| NW | 0.01 | 256 | 65 |
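
The two configuration knobs in the tables above, the outstanding-request limit and the throttling rate, can be illustrated with a small timing sketch. It is not the engine's actual control logic. It assumes that a throttling rate r permits at most one prefetch request every 1/r cycles, that every request completes after a fixed latency, and that latency is given in cycles (the tables report nanoseconds, and the clock frequency is not given here). The function `simulate_issue` and its parameters are hypothetical.

```python
def simulate_issue(num_requests, latency_cycles,
                   outstanding_limit=None, throttle_rate=None):
    """Return the cycle at which each prefetch request is issued.

    Assumptions (not taken from the paper): fixed per-request latency,
    in-order completion, and a throttle that spaces consecutive
    requests by round(1 / throttle_rate) cycles.
    """
    if throttle_rate is None:
        interval = 1  # unthrottled: one request per cycle at most
    elif 0 < throttle_rate <= 1:
        interval = max(1, round(1 / throttle_rate))
    else:
        raise ValueError("throttle_rate must be in (0, 1]")

    issue = []
    for i in range(num_requests):
        # Throttle: wait at least `interval` cycles after the previous issue.
        earliest = 0 if not issue else issue[-1] + interval
        if outstanding_limit is not None and i >= outstanding_limit:
            # Outstanding limit: wait until the request issued
            # `outstanding_limit` slots earlier has completed.
            earliest = max(earliest,
                           issue[i - outstanding_limit] + latency_cycles)
        issue.append(earliest)
    return issue


# One outstanding request: each issue waits for the previous completion.
limited = simulate_issue(3, latency_cycles=65, outstanding_limit=1)

# Rate 0.01 per cycle: issues are spaced 100 cycles apart.
throttled = simulate_issue(3, latency_cycles=65, throttle_rate=0.01)
```

In this model both knobs bound the request rate, but the outstanding limit adapts to the observed latency while the throttle enforces a fixed spacing regardless of how quickly requests return.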

| Benchmark | Type | Latency Reduction | Min. Latency [ns] | Speedup |
|---|---|---|---|---|
| CNN [46] | ML workload | Up to 80% | ∼8–10 | 1.589× |
| NW [47] | Bioinformatics | Up to 80% | ∼60–80 | 1.794× |
| MUMmerGPU [52] | Bioinformatics | Up to 74% | ∼50–100 | 1.671× |
| LPS [53] | Scientific | Up to 78% | ∼8–15 | 1.556× |
| DG [54] | Scientific | Up to 64% | ∼30–60 | 1.463× |
| BFS [55] | Graph analytics | Up to 40% | ∼60–120 | 1.240× |
| WP [56] | Weather forecast | Up to 82% | ∼10–20 | 1.557× |
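
For readers who want a single summary figure, the per-benchmark speedups in the table above can be aggregated as follows. This is only a sketch: the geometric mean is a common way to average speedup ratios, but the source does not say that the authors report one, and the helper name `summarize` is hypothetical.

```python
from statistics import geometric_mean

# Speedup factors copied from the results table above.
SPEEDUPS = {
    "CNN": 1.589,
    "NW": 1.794,
    "MUMmerGPU": 1.671,
    "LPS": 1.556,
    "DG": 1.463,
    "BFS": 1.240,
    "WP": 1.557,
}


def summarize(speedups):
    """Return the best and worst benchmark and the geometric-mean speedup."""
    best = max(speedups, key=speedups.get)
    worst = min(speedups, key=speedups.get)
    return {
        "best": best,
        "worst": worst,
        "geomean": geometric_mean(list(speedups.values())),
    }


summary = summarize(SPEEDUPS)
print(f"best: {summary['best']}, worst: {summary['worst']}, "
      f"geometric mean: {summary['geomean']:.3f}x")
```

The geometric mean is used rather than the arithmetic mean because speedups are ratios, so a multiplicative average is less sensitive to a single outlier benchmark.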

| Method | Approach | Key Features | Limitations | Performance Improvement |
|---|---|---|---|---|
| Many-thread-aware prefetching [31] | Hardware and software | Inter-thread prefetching: prefetching is performed between threads | Limited in capturing irregular runtime patterns that are unknown at compile time; inter-thread prefetching introduces dependencies between threads, reducing overall efficiency | 15–16% |
| GPGPU compiler-based prefetching [32] | Software | Compiler prefetches via temporary variables, improving memory usage and workload distribution | Limited ability to predict dynamic/irregular memory access patterns; can result in cache pollution | Minor |
| OWL: Opportunistic prefetching [33] | Hardware | Opportunistic memory-side prefetching that takes advantage of open DRAM rows | Limited to open DRAM rows | 2% |
| CTA-aware prefetching [34,36] | Hardware | Stride prefetching across cooperative thread arrays | Limited thread scope within the cooperative array | 10% |
| Spare register-aware prefetching [35] | Hardware | Data prefetching mechanism for load pairs where one load depends on the other | Limited to graph algorithms | 10% |
| APOGEE [37] | Hardware | Uses adjacent threads to identify address patterns and dynamically adapt prefetching timeliness | Limited to adjacent threads; relies on latency hiding through SIMT | 19% |
| Orchestrated prefetching and scheduling [38] | Hardware | Coordinates thread scheduling and prefetching decisions | Requires modification of warp scheduling | 7–25% |
| Stream data prefetcher [39] | Hardware and software | Data prefetching based on application-specific data-pattern descriptions | Tightly coupled to an offline data-pattern specification per application | |
| Warp-aware selective prefetching (WASP) [40] | Hardware | Dynamically selects slow-progress warps for prefetching | Limited if warp behavior is unpredictable | 16.8% |
| Adaptive prefetching and scheduling (APRES) [41] | Hardware | Groups warps predicted to execute the same load instruction in the near future | Contention between demand fetches and prefetches requires cache partitioning; dependent on thread cooperative characteristics | 27.8% |
| Latency-tolerant register file (LTRF) [42] | Hardware and software | Two-level hierarchical register structure; estimated working set prefetched to register cache | Relies on compile-time prefetch decisions, which may miss dynamic runtime behavior | 31% |
| This study | Hardware | Runtime configurable (32–256 B blocks, 1–64 outstanding requests); context flushing; watchdog timers; handles diverse access patterns | Hardware complexity; requires proper throttling configuration; power consumption considerations | Up to 82% latency reduction; and speedup up to |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gabbay, F.; Salomon, B.; Golan, I.; Shema, D. Hardware Design of DRAM Memory Prefetching Engine for General-Purpose GPUs. Technologies 2025, 13, 455. https://doi.org/10.3390/technologies13100455
