Abstract

As a popular strategy in collaborative learning, peer assessment has attracted keen interest in academic studies on online language learning contexts. The growing body of studies and findings necessitates the analysis of current publication trends and citation networks, given that studies in technology-enhanced language learning are increasingly active. Through a bibliometric analysis involving visualization and citation network analyses, this study finds that peer assessment in online language courses has received much attention since the COVID-19 outbreak. It remains a popular research topic with a preference for studies on online writing courses, and demonstrates international and interdisciplinary research trends. Recent studies have led peer assessment in online language courses to more specific research topics, such as critical factors for improving students’ engagement and feedback quality, unique advantages in providing online peer assessment, and designs to enhance peer assessment quality. This study also provides critical aspects about how to effectively integrate educational technologies into peer assessment in online language courses. The findings in this study will encourage future studies on peer assessment in online learning, language teaching methods, and the application of educational technologies.

1. Introduction

Collaborative learning can enhance learning outcomes, cognitive abilities, and social skills (Laal and Ghodsi 2012). A growing body of evidence from previous empirical studies and reviews supports the advantages of collaborative learning, or more specifically, peer assessment (Jung et al. 2021). Online learning has demonstrated its significant role and advantages, especially during the COVID-19 pandemic (Adedoyin and Soykan 2020). Online learning and peer assessment in various subjects continue to develop, inspiring integrative research to investigate peer assessment in online language courses and provide enhanced and diversified forms of it. With the development of information technology, educational technologies have become popular, and more technologies have been introduced to teaching practice, as listed by Haleem et al. (2022). Although collaborative learning may take forms other than peer assessment, many recent studies focus on it in online education contexts, where educational technologies are usually integrated. Consequently, peer assessment in online language courses sparks constant academic interest and is now a critical component of studies on online education.

The significance of peer assessment in online language education is evident in its vast advantages when applied to distance education. For instance, peer assessment effectively promotes learners’ writing skills, reflective thinking abilities, and problem-solving efficiency (Lin 2019; Liang and Tsai 2010). Implementing peer assessment activities in online language teaching also brings improved academic achievements compared with traditional feedback and offline courses, such as transferable skills, a better understanding of the assessment criteria, timely feedback, and constant learning and development (Adachi et al. 2018). However, there are also problems with peer assessment in online language education. The quality of peer assessment may be limited due to students’ expertise; how to enhance the students’ engagement and self-efficacy in peer assessment is still not completely clear (Lin 2019; Adachi et al. 2018). There is still a controversy about peer assessment in online language courses, which requires researchers to continue further investigations. Although previous studies have provided reviews and comments on the existing studies and findings about this topic, little is known about the research trends and the whole citation network of the related literature. Therefore, a bibliometric and systematic citation network analysis is significant in pushing the frontiers of this topic.

In order to bridge the research gap and inspire future investigations on this topic, this study intends to conduct a bibliometric analysis of peer assessment in online language courses. In this article, we will first review literature related to this topic and then provide methods of a bibliometric analysis with VOSviewer and CitNetExplorer. Based on the literature search, we conducted visualization and citation network analyses where literature clustering generates further discussion. This bibliometric study focuses on the theoretical foundations, previous empirical studies, and the recent developments related to this topic. By investigating the existing literature, we aim to help understand how peer assessment in online language courses has been established and integrated into teaching practice. The findings of this study will provide a clearer overall view of this topic and help identify how the current findings may be further developed in future studies.

2. Literature Review

2.1. Introducing Peer Assessment to Online Language Courses with Interdisciplinary Research

Rising interest in peer assessment and online language courses has been revealed by researchers in recent years (Li et al. 2020). A considerable number of studies have combined these two aspects, investigating the implementation of peer assessment in online language courses (Lin 2019). The overall findings of most existing studies suggested positive outcomes: peer assessment in online contexts could enhance learners’ language learning achievements (Liu et al. 2018; Ghahari and Farokhnia 2018). With the popularity of e-learning contexts, peer assessment has been actively applied. To illustrate this point, empirical results on this topic have been significantly enriched by technological advancements and applications since the advent of the 2020s. Examples can be found in further applications of video annotation tools, online teaching platforms to satisfy the educational needs in the post-COVID-19 era, and automated evaluation tools corresponding to e-learning environments (Fang et al. 2022; Shek et al. 2021). These studies contributed to understanding peer assessment in the era of online learning and teaching triggered by COVID-19 (Adedoyin and Soykan 2020).

Researchers in linguistics extended their interest to different language skills and learning contents in language courses, and they actively sought interdisciplinary methods and perspectives outside language education studies. Empirical evidence supporting peer assessment in online language education was provided from the existing literature, even if research interest in different language skills could vary. Among them, commonly investigated language skills included listening (Tran and Ma 2021), speaking (Nicolini and Cole 2019), and writing (Sun and Zhang 2022; Zhang et al. 2022). More integrative language courses included English for specific purposes (Salem and Shabbir 2022), academic writing (Topping et al. 2000; Cheong et al. 2022), and communication skills (Shek et al. 2021). The development of applied linguistics by involving theories and concepts from various subjects and research areas made interdisciplinary approaches increasingly crucial. The interactions between linguistic studies and theories in other research areas have conceived popular research methods and open-minded perspectives in current studies. Dominantly reflecting the interdisciplinary trends, psychological constructs were examined when educational technologies were introduced to language courses, shedding light on the effects of e-learning methods on students’ motivation, engagement, self-efficacy, and cognitive load (Akbari et al. 2016; Lai et al. 2019; Yu et al. 2022a, 2022b).

2.2. Theoretical Foundations of Peer Assessment and Reviews in Online Learning Contexts

The idea of peer assessment was derived from early discussions on collaborative learning, and subsequent studies continued extending the theories and the applications of peer assessment. Inspired by Piaget (1929), who suggested that collaborative learning and cognitive construction were related and developed together (Roberts 2004), Vygotsky developed his theories, indicating how learners would more easily learn knowledge and develop particular skills (Vygotsky and Cole 1978). However, the concept of peer assessment was not formally named until the work of Keith J. Topping. Although Topping (2009) suggested earlier origins of peer assessment in educational contexts, researchers now predominantly attribute the blossoming studies related to this topic to Topping’s systematic foundations of peer assessment (Lin 2019). In his earlier reviews of peer assessment, Topping predicted the rising trend of computer-assisted peer assessment (Topping 1998). In a series of articles and books, he reviewed and summarized the developments of peer assessment (Topping 1998, 2009, 2018), inspiring emerging research topics related to peer assessment in various educational contexts. Relying on these theoretical foundations, studies in recent years have contributed to new theories with growing empirical evidence in increasingly diverse educational contexts.

Apart from the reviews of peer assessment from an educational perspective generally, existing reviews and meta-analyses of peer assessment in online language courses concentrated on relatively narrow scopes. For example, reviews and meta-analyses concentrated on particular language skills like argumentative writing (Awada and Diab 2021) or technology-assisted writing without involving peer assessment (Williams and Beam 2019). One problem was that these reviews and meta-analyses focused on particular aspects while leaving reviews from a general perspective a research gap. The existing reviews and meta-analyses needed a citation network analysis to include studies with peer assessment in online language education. The perspective of this review is an intermediate scope, not limited to language education in traditional classrooms but revealing the characteristics specific to peer assessment in online language education.

2.3. Educational Technology Applications for Peer Assessment in Online Language Courses

Although collaborative learning was proposed much earlier, introducing educational technologies to peer assessment benefited from rapid and revolutionary changes in the era of information and technology (Roberts 2005). Not specifically for peer assessment, educational technologies were introduced into language education for various purposes. As summarized by Haleem et al. (2022), in technology-enhanced language education studies, dominant educational technologies included at least the following: (1) quick assessment technologies, for example, automated writing evaluation tools (Nunes et al. 2022); (2) resources for distance learning, especially video-based instructions, such as Massive Open Online Courses (MOOCs) (Fang et al. 2022) and video conferencing (Hampel and Stickler 2012); (3) electronic books and digital reading technologies (Reiber-Kuijpers et al. 2021); (4) broad access to the most up-to-date knowledge and enhanced learning opportunities; (5) mobile-assisted language learning (as reviewed by Burston and Giannakou 2022); and (6) social-media-assisted language learning (Akbari et al. 2016). With diversified techniques and designs, technology-enhanced language learning in recent years was established as an emerging, rapidly developing, interdisciplinary, and critical research area.

With the emergence of educational technologies, the frontiers in studies on peer assessment in online language education witnessed the welcoming integration of education technologies. Many empirical studies in more recent years closely turned to popular educational technologies, and were eager to discover the application of peer assessment in online language courses. Video-based peer feedback was considered an efficient tool to provide emotionally supportive feedback for language learners with an enhanced sense of realistic perception using virtual reality (Chien et al. 2020). Compared with traditional forms of written feedback, video peer feedback improved learners’ Chinese-to-English translation performance (Ge 2022). Digital note-taking technologies demonstrated unique advantages in addressing learners’ special needs when suffering from language-related disabilities (Belson et al. 2013). However, the educational technologies applied to peer assessment in online language courses still comprised a small proportion of dominant educational technologies assisting language learning.

2.4. Research Questions

Based on the research gaps revealed in the previous sections, we would like to answer the following research questions (RQs):

- RQ1: In terms of the number of publications, research areas, and distribution of publication journals, what are the publication trends of studies on peer assessment in online language courses?

- RQ2: What are the top authors, keywords, countries, and organizations in the studies on peer assessment in online language courses?

- RQ3: What are the most studied language skills in the existing literature related to peer assessment in online language courses?

- RQ4: What are the commonly investigated interdisciplinary topics related to peer assessment in online language courses?

- RQ5: How are the theoretical foundations of peer assessment commonly cited in current studies on the application to online language courses?

- RQ6: How can educational technologies be integrated into peer assessment in online language courses?

First, understanding the publication trends and the most cited study contributors would be a basic and essential way to follow the development and approach the frontiers of this topic. Based on the existing literature, this study would provide the primary bibliometric results of studies on peer assessment in online language courses to answer RQ1 and 2. To provide more details of the existing literature, RQ3 would consider studies within language education research and identify the dominant research topics. In contrast, RQ4 would take perspectives outside language education, investigating the interdisciplinary approaches to linguistic and educational research. We would also explore how the theoretical foundations were cited in current studies and examine whether new sources of updated theoretical foundations in the existing literature have arisen. Therefore, RQ5 was proposed and would be answered based on our citation network analysis. In properly enhancing the effectiveness of language teaching and learning in technology-assisted environments, there are opportunities, but also challenges. By exploring peer assessment in online language courses, we would like to provide some suggestions about how to integrate educational technologies into peer assessment in online language courses by answering RQ6.

3. Methods

3.1. Literature Search and Result Analysis on Web of Science

We searched the related literature on Web of Science (WOS), a worldwide literature search engine that provides access to databases and journals. More specifically, we selected the Core Collection of WOS, i.e., a selected collection of journals. The Core Collection comprises multiple indexes of high-quality journals, including Science Citation Index Expanded (2013 to present), Social Sciences Citation Index (2006 to present), Arts and Humanities Citation Index (2008 to present), and Emerging Sources Citation Index (2017 to present). We did not use Current Chemical Reactions (1985 to present) and Index Chemicus (1993 to present) because their research areas are unrelated to this study. Keywords were searched as follows: Peer assessment OR peer evaluation OR peer feedback OR peer review (Topic) AND online (Topic) AND writing OR speaking OR oral OR spoken OR listening OR reading OR language (Topic). The search results were filtered by selecting related research areas to concentrate the results on the studies of online language education, including “Education and Education Research”, “Language and Linguistics”, “Communication”, “Knowledge Engineering and Representation”, and “Translational Studies”. WOS was also used to analyze the search results. The analysis function integrated into the website allowed a preliminary bibliometric analysis by counting results published in different years, WOS categories, and sources (journals). These three items provided the distribution of year-based publications, the top 10 published categories, and the top 10 publication journals.
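The grouping of the three topic conditions can be made explicit with a short sketch. This is only an illustration under stated assumptions: the helper `or_group` is hypothetical, and the `TS=` prefix is assumed to be the topic field tag of an advanced-search interface; the terms themselves are those listed above.

```python
# Hypothetical helper: joins alternative terms into one parenthesized OR-group.
def or_group(terms):
    return "(" + " OR ".join(terms) + ")"

peer_terms = ["peer assessment", "peer evaluation", "peer feedback", "peer review"]
context_terms = ["online"]
skill_terms = ["writing", "speaking", "oral", "spoken",
               "listening", "reading", "language"]

# "TS=" is an assumed topic field tag; each group is one topic condition,
# and the three conditions are combined with AND.
query = " AND ".join(
    "TS=" + or_group(group)
    for group in (peer_terms, context_terms, skill_terms)
)
print(query)
```

Writing the parentheses explicitly avoids relying on the default precedence of AND over OR, which could otherwise change which records the search returns.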

3.2. Visualization and Citation Network Analysis

We exported full records and cited references of the search results in plain text files for visualization analysis. This study used two popular computer programs for visualization and citation network analyses, i.e., VOSviewer (Van Eck and Waltman 2014) and CitNetExplorer (Van Eck and Waltman 2010). VOSviewer allowed the visualization of authors, keywords, countries, and organizations based on the relationships between items according to the literature records. We generated the visualized maps by setting the minimum occurrence to 2 for each item, together with the lists that counted the citations and occurrences of authors, keywords, countries, and organizations. In order to offer representative results while covering a relatively large picture of studies on this topic, the top 20 authors, keywords, countries, and organizations with the highest citations or occurrences would be presented and analyzed in this study. Online-first papers had to be excluded before the analysis with CitNetExplorer because their records did not provide publication years, which the program requires. Then, the rest of the search results were used for a citation network analysis. We chose to exhibit the non-matching cited references in the software so that the entire network could be included. The clustering function in this software would categorize the results to show the citation network of the search results (Van Eck and Waltman 2017). The longest path analysis function allowed examining how previous theories and findings were cited and developed by more recent studies through successive citations. The longest paths would help identify the pioneering research where valuable foundations were set and passed on to current studies.
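The two preparation steps described above, separating records without a publication year and keeping items that meet a minimum occurrence, can be sketched as follows. This is a minimal illustration, not the internal procedure of VOSviewer or CitNetExplorer; the record layout, field names, and function names are hypothetical.

```python
from collections import Counter

# Toy records standing in for exported entries; field names are hypothetical.
records = [
    {"title": "A", "year": 2019, "keywords": ["peer feedback", "writing"]},
    {"title": "B", "year": 2021, "keywords": ["peer feedback", "motivation"]},
    {"title": "C", "year": None, "keywords": ["writing", "MOOC"]},  # online-first
]

def split_online_first(recs):
    """Separate records with a publication year from those without one."""
    dated = [r for r in recs if r["year"] is not None]
    undated = [r for r in recs if r["year"] is None]
    return dated, undated

def top_items(recs, field, min_occurrence=2, top_n=20):
    """Count items in a list-valued field; keep those meeting the threshold."""
    counts = Counter(item for r in recs for item in set(r[field]))
    kept = [(item, n) for item, n in counts.most_common() if n >= min_occurrence]
    return kept[:top_n]

dated, undated = split_online_first(records)   # input for the citation network
keywords = top_items(records, "keywords")      # all records, as in the keyword map
```

In this toy input, only the dated records would be passed on to the citation network step, while the keyword counts use all records.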

4. Results

4.1. Literature Search

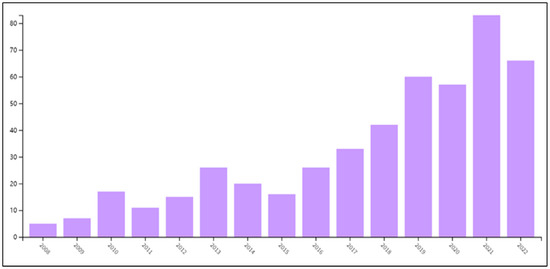

On 16 November 2022, we searched the Core Collection of WOS. The results filtered by research areas included 484 records. To reveal publication trends (RQ1), we drew on the figures and lists generated by the “Analyze Results” function on WOS. Figure 1 demonstrates the number of publications on peer assessment in online language courses between 2008 and 2022. Since 2008, the studies related to peer assessment in online language courses have been steadily increasing, with especially rapid increases in 2019 and 2021. During the past 15 years, the peak was in 2021, with 83 results. The year 2022, despite the incomplete counting, recorded N = 66. For all 484 results related to language and linguistic studies, the primary Web of Science categories are shown in Table 1. The primary publication journals are shown in Table 2, where the top 10 journals published 26.24% of the results (N = 127). Among the 484 results, 27 were online-first papers, which were excluded from the citation network analysis using CitNetExplorer. The exported full records of the results after excluding online-first papers included 457 studies.

Figure 1.

Publication trend of the studies on peer assessment in online language courses.

Table 1.

The top 10 Web of Science Categories with most search results.

Table 2.

The top 10 journals in the search results.

4.2. Visualization Analysis and Most-Cited Items

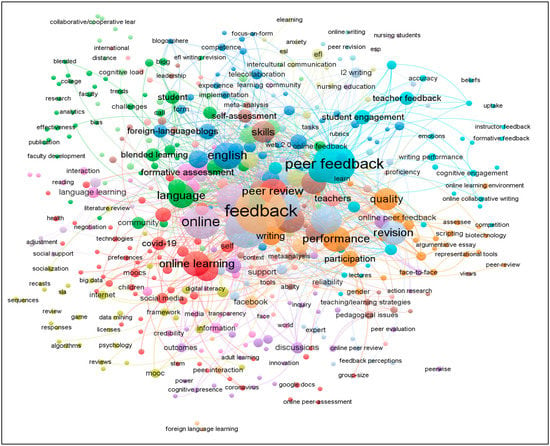

In order to answer RQ2, the filtered studies with online-first papers were processed with VOSviewer, where 468 keywords were visualized in Figure 2. Keyword items were grouped by their connections into 17 clusters. Clusters were distinguished with different colors, and the connections between nodes demonstrated how the keyword items were combined in published articles. Table 3 displayed seven clusters that contained more than 30 keyword items, which amounted to 270 keywords (57.69% of all). The other 10 clusters were relatively small and less frequently used, so they might not reflect the academic interest related to this topic. Through the visualization analysis of the primary clusters, the representative keyword items in Table 3 and the salient ones in Figure 2 revealed the interest in specific research topics related to peer assessment in online language courses. The integration of educational technology into online language education was particularly demonstrated by various keyword items related to technologies. The keywords with the highest occurrences slightly varied in referring to “peer assessment”. Although peer assessment belongs to collaborative learning strategies and teaching approaches, researchers used peer “assessment”, “feedback”, “evaluation”, and “review”. Regarding educational levels, “higher-education” was the 17th most used keyword. Popular keyword items also included different learning content, including “knowledge” and “skills”, as well as psychological constructs, such as “motivation” and “perceptions”.
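The connections between keyword nodes rest on co-occurrence, i.e., how often two keywords appear in the same publication. A minimal sketch of such link counting is given below, assuming hypothetical keyword lists; it shows only the counting step and does not reproduce the clustering technique that VOSviewer applies to these links.

```python
from collections import Counter
from itertools import combinations

# Hypothetical keyword lists, one per publication.
keyword_lists = [
    ["peer feedback", "writing", "higher-education"],
    ["peer feedback", "writing"],
    ["peer assessment", "motivation"],
]

def cooccurrence_links(lists):
    """Count how many publications mention each unordered keyword pair."""
    links = Counter()
    for kws in lists:
        # sorted(set(...)) removes duplicates and fixes the order of each pair
        for a, b in combinations(sorted(set(kws)), 2):
            links[(a, b)] += 1
    return links

links = cooccurrence_links(keyword_lists)
```

Pairs with higher counts correspond to stronger links between two nodes on a keyword map.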

Figure 2.

Visualization of the co-occurrence of keywords in the studies.

Table 3.

Keyword item clustering in VOSviewer.

Similarly, we generated lists of 64 authors, 127 organizations, and 44 countries that occurred at least twice. In Table 4 and Table 5, we listed the top 20 authors, organizations, and countries according to their citations, and listed the top 20 keyword items according to their occurrences in the search results. Most studies were conducted in English-speaking countries, such as the US, the UK, and Australia. However, many studies on peer assessment in online language courses were also conducted in non-English-speaking countries. Countries such as China, Spain, and the Netherlands do not use English as their official language, but they have contributed to hundreds of publications on this topic.

Table 4.

The top 20 authors and keyword items in the search results.

Table 5.

The top 20 organizations and countries in the search results.

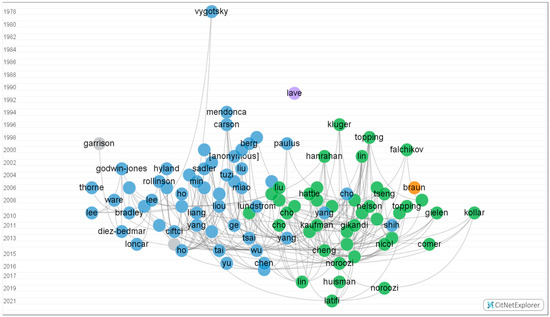

4.3. Literature Clustering

The results without online-first publications (N = 457) were grouped by the “clustering” function in CitNetExplorer according to their citations. This function could analyze the relatedness of the publications and determine the cluster to which each publication belongs according to the overall measurements of its citation relationships with other publications (Van Eck and Waltman 2017). As Figure 3 shows, four clusters were identified, while 188 publications did not belong to a group due to the minimum setting of group size (minimum size = 10). Group 1 (G1, N = 168) and Group 2 (G2, N = 140) took up 67.40% of all publications, reflecting the primary relationships in the literature search results. Group 3 (N = 16) and Group 4 (N = 12) were relatively small. Therefore, we primarily considered the two large clusters to further examine the contents of the literature.
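The minimum group size and the reported share of the two large groups can be checked with a brief sketch. The function `group_sizes` and the toy assignment are hypothetical; the group sizes and the total are the figures reported above.

```python
from collections import Counter

def group_sizes(assignments, minimum_size=10):
    """Count publications per cluster label; drop clusters below the minimum size."""
    sizes = Counter(assignments.values())
    return {label: n for label, n in sizes.items() if n >= minimum_size}

# Toy assignment: 12 publications in cluster "a", 2 in cluster "b".
toy = {f"p{i}": "a" for i in range(12)}
toy.update({"q0": "b", "q1": "b"})
print(group_sizes(toy))  # cluster "b" falls below the minimum size of 10

# Sizes reported in the text for the 457 publications without online-first papers.
reported = {"G1": 168, "G2": 140, "G3": 16, "G4": 12}
total = 457
share_g1_g2 = (reported["G1"] + reported["G2"]) / total * 100
print(f"{share_g1_g2:.2f}%")  # 67.40%
```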

Figure 3.

The citation network of publications.

RQ3 and 4 led us to the distinct research focuses between the two major groups (G1 and G2). We exported the clustering results of G1 and G2, identifying their interests in specific educational technologies, learning contents, and participants’ educational levels by reading their titles, keywords, and abstracts. Studies in G1 mainly concentrated on the discussions on collaborative writing in online contexts (Tang et al. 2022; Sun and Zhang 2022; Zhang et al. 2022) with a small proportion of other language skills. Corresponding to the keyword clustering results in Table 3, topics related to writing skills have been frequently investigated in the search results. The studies on writing were extended to the virtual writing course (Payant and Zuniga 2022), academic writing (Zhang et al. 2022), writing revision according to automated and human feedback (Tian et al. 2022), and so forth. By contrast, G2 took a different perspective and attached the priority to learning outcomes of peer assessment and peer feedback in online language courses, dominantly examining the psychological and behavioral aspects. This cluster demonstrated the interdisciplinary research trends revealed in previous sections. Examples of interacting research areas could be found in the literature search results: Peer feedback could affect learners’ motivations in gamified learning (Saidalvi and Samad 2019). Peer assessment was also related to reflexive practice (Shek et al. 2021).

4.3.1. The Theoretical Foundations of Peer Assessment

In order to examine the theoretical foundations and the related citation networks (RQ5), we analyzed the citation paths in G1 and G2. The most cited publication in all clusters was Vygotsky and Cole (1978), with citations = 40. Other highly cited publications included Topping (1998) (in G1) with citations = 33, and Liu and Carless (2006) (in G2) with citations = 31. As the most cited publication in the filtered results, Vygotsky and Cole (1978) served as the pioneer of the studies on peer assessment in language courses. In their Mind in Society, the theory of “Zone of Proximal Development” (ZPD) set the foundation of collaborative learning, suggesting that learners make advancements as a result of their collaborative activity (Ali 2021; Vygotsky and Cole 1978). Similar to the idea of ZPD, Vygotsky’s scaffolding theory indicated the importance of guidance suitable to learners’ cognitive levels (Vygotsky and Cole 1978). Topping’s article conducted an early review of peer assessment, providing a typology, benefits, theoretical foundations, validity, and reliability evaluations of peer assessment (Topping 1998). Previous studies also established that Topping’s article was a pioneer in proposing peer assessment (Lin 2019). Strong evidence from a literature review and a large-scale questionnaire survey provided rationales for peer assessment that might enhance students’ learning outcomes (Liu and Carless 2006).

Analyses of each group’s citation network revealed the relationships after literature clustering. In G1 (studies in blue color in Figure 3), we selected the publications with the highest citation scores, including Vygotsky and Cole (1978), Lundstrom and Baker (2009), Yang et al. (2006), Min (2005), Min (2006), Cho and Schunn (2007), and Tuzi (2004). However, we found no citation path longer than 1. Recent studies, such as Van den Bos and Tan (2019), Saeed et al. (2018), Pham (2021), and Lv et al. (2021), directly cited the former group of publications, i.e., the longest citation path length = 1. Thus, the longest path analysis was not suitable for this group. Nevertheless, we found that the theoretical foundations represented by Vygotsky and Cole (1978) were still accepted in current studies.

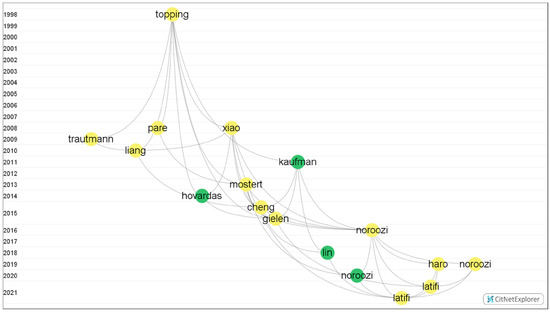

In G2, the longest path was identified by selecting the most cited publication by Topping in 1998 and one of the recent studies, i.e., Latifi et al. (2021). We exhibited all intermediate publications between these two papers and identified 15 results between them. Multiple longest paths (length = 8 publications) between these two involved 11 publications and were marked in light yellow in Figure 4. The other four dark-green dots were also intermediate publications between the two selected ones, but not on the longest paths. Interestingly, we found that a group of common citations identified in these paths existed between multiple recent studies and Topping’s article in 1998, as demonstrated in Table 6. Between Topping 1998 and each of the recent studies in the second column, all or most of the common citations in the third column appeared as intermediate publications.
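The notions of a longest path and of intermediate publications can be illustrated on a toy citation network. The sketch below is not CitNetExplorer's implementation: it assumes an acyclic network stored as a hypothetical dictionary in which each publication maps to the publications it cites, and it measures path length as the number of publications on the path.

```python
from functools import lru_cache

# Toy citation network: each key cites the publications in its list
# (labels are hypothetical).
cites = {
    "recent": ["m3", "m2", "x"],
    "m3": ["m2"],
    "m2": ["m1"],
    "m1": ["old"],
    "x": ["old"],
    "old": [],
}

def longest_path(cites, start, end):
    """Return one longest citation path from start back to end (acyclic network)."""
    @lru_cache(maxsize=None)
    def best(node):
        if node == end:
            return (node,)
        candidates = [best(nxt) for nxt in cites.get(node, [])]
        candidates = [c for c in candidates if c]  # branches that reach end
        if not candidates:
            return ()
        return (node,) + max(candidates, key=len)
    return list(best(start))

def intermediates(cites, start, end):
    """Publications lying on any citation path between start and end."""
    @lru_cache(maxsize=None)
    def reaches(node):
        return node == end or any(reaches(n) for n in cites.get(node, []))
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        if node in seen or not reaches(node):
            continue
        seen.add(node)
        stack.extend(cites.get(node, []))
    return seen - {start, end}

path = longest_path(cites, "recent", "old")
between = intermediates(cites, "recent", "old")
```

In this toy network, `x` is an intermediate publication that does not lie on the longest path, which mirrors the distinction drawn above between publications on the longest paths and the remaining intermediate ones.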

Figure 4.

Multiple longest paths between Topping (1998) and Latifi et al. (2021).

Table 6.

Common citations in multiple longest paths between Topping (1998) and eight recent studies published in 2021 and 2022.

4.3.2. Integration of Educational Technologies into Peer Assessment in Online Language Courses

We examined the most recent studies published in 2021 and 2022, including 31 studies in G1 and 37 in G2 identified by CitNetExplorer to answer RQ6. More specifically, we identified from these studies the educational technologies applied to peer assessment in online language courses. Similar to the identification of distinct focus above, studies in G1 indicated that teachers of online language courses developed multiple designs of collaborative learning, with peer assessment considered an easily performed and beneficial learning strategy (Lv et al. 2021). Academic writing, notetaking in lectures, and revision tasks could be enhanced with peer assessment (Zhang et al. 2021; Choi et al. 2021). Applied to synchronous computer-mediated online language courses, corrective feedback provided by peer learners could benefit grammatical knowledge learning for EFL students (Mardian and Nafissi 2022). Compared with automated writing evaluation technologies in self-regulated language learning, peer assessment and teacher feedback activated more cognitive and motivational strategies (Tian et al. 2022). Additionally, educational technologies in providing peer assessment in online language courses included the following: an online learning community for teaching writing (Tang et al. 2022), video peer feedback in a Chinese-English translation course (Ge 2022; Odo 2022), learning analytics (Chen et al. 2022), an academic English writing MOOC (Wright and Furneaux 2021; Fang et al. 2022), Google Docs (Ali 2021), and video annotation used for peer assessment (Shek et al. 2021).

5. Discussion

5.1. Publication Trends

A possible reason that publications about peer assessment in online language courses have seen a sharp increase around 2019 is the urgent need for distance education triggered by COVID-19 (Adedoyin and Soykan 2020), which requires active interdisciplinary research. In order to provide a more comprehensive understanding, researchers resort to perspectives of multiple research domains, such as psychology in education, cultural studies, and technological advancements. The integration of research domains and subjects also contributes to the formation of the interdisciplinary trend of peer assessment in online language education. Introducing theories and approaches in multiple research areas into language education research produces diversified focuses on peer assessment in online language courses. As found in the most used keywords, researchers referred to peer assessment as a collaborative learning strategy with slightly different terms. This demonstrates different pedagogical purposes in actual teaching practice. As Stovner and Klette (2022) suggested, assessment and evaluation are distinguished from feedback in that the former terms tend to be summative, while the latter is a formative approach to learning. Students can improve their further learning based on instructive and evaluative information in peer feedback, while assessment primarily emphasizes grading outcomes rather than constructive guidance. Such a distinction can explain why other terms are used alongside “assessment”.

Higher education is a frequently used keyword, while other educational levels are less investigated. This is probably because higher education contexts allow more flexible and diversified teaching designs or even teaching experiments. Higher education involves more diversified but specialized learning contents, where instructors actively seek effective methods to deliver learning contents to their students. Additionally, students’ expertise is relatively limited before higher education levels, so they may not evaluate their peers’ performances from a comprehensive perspective of particular subjects. When faced with difficulties in academic contexts, higher education students can rely on more experience in exploring how to provide proper suggestions on improvements for their peers. Similarly, based on academic expectations and experiences, higher education students have stronger abilities to explore expertise in their subjects and think critically and independently than students below the higher education level. Peer assessment in higher education finds a balance between using its advantages and minimizing the risk caused by students’ limited expertise. The challenges and the benefits exist simultaneously, while overemphasizing the risk may prevent peer assessment from wide application and further development in higher education (Ashenafi 2017). Solutions to some of these concerns are being provided by the updated and emerging technologies of recent years.

On the global scale, the shared research interest in language education encourages researchers to investigate peer assessment in online language courses, whether the studies are conducted in English- or non-English-speaking countries. English-speaking countries lead non-English-speaking countries in the number of publications, probably because the literature search was based on English publications. It is reasonable to assume that researchers in various countries across the globe are devoted to localizing educational technologies in the online teaching and learning of their local languages. For English publications, studies on peer assessment in online language courses are not restricted to English-as-first-language contexts. They also include foreign language and second language teaching (see Ge 2022 as an example of the Chinese EFL learning context). Peer assessment in teaching languages other than English online can also be found (Tsunemoto et al. 2022). The enhanced learning effectiveness and benefits of peer assessment in online language education are supported by evidence from search results around the globe.

The clustering of the literature demonstrates a preference for studies on writing over other linguistic skills. This research trend may arise because peer assessment is more convenient and practical to implement in online writing tasks than in tasks targeting other language skills. First, comments on writing are clear and efficient, whereas reviewing peer performances in other forms requires much more time, even before considering whether reviewers can provide efficient comments and whether receivers can understand them. For instance, performances in videos and spoken tasks are not as straightforward to review and evaluate as written tasks. Time is critical in implementing self-assessment and peer-assessment activities (Siow 2015). The time-consuming nature of such reviews, with presumably limited accuracy, prevents students from accepting peer assessment and teachers from considering peer assessment activities. Therefore, the time required for peer assessment and the convenience of providing peer suggestions for improving language skills may be important elements in explaining the existing preference for investigating peer assessment in online writing courses. Second, broader and higher-level knowledge is required to identify problems in performances other than general writing tasks, including academic writing, thinking, learning strategies, oral presentation, and communication, and to provide practical solutions for improvement (Zhang et al. 2021; Shek et al. 2021). In contrast, writing tasks for general purposes allow peer students to provide their understanding and suggestions even if the reviewers still need to develop their expertise in writing. The relatively common requirements can enhance the acceptance and usefulness of peer assessment activities.

5.2. Theoretical Advancements

According to Vygotsky and Cole (1978), students can easily learn what they can achieve under guidance suited to their current levels of cognition and knowledge, which explains the importance of peer support. Consequently, peer students with similar levels of knowledge and cognitive competence are the best sources of such guidance. This explains why peer assessment can be traced back to Vygotsky’s theory. Between his original theories and the current studies, a group of common citations identified in the above section delineates critical issues related to peer assessment. Engaging students in online peer assessment is an essential challenge and research topic, and the roles of students who provide and receive peer assessment can be the key to the challenge (Gielen and De Wever 2015). Comparison between self-assessment, peer assessment, and expert assessment is a way to understand the unique advantages of peer assessment (Liang and Tsai 2010; Paré and Joordens 2008; Trautmann 2009). Peer assessment in online language courses shows advantages over traditional formats. In this sense, findings from comparisons of online module-based and traditional paper-based peer feedback are significant in technology-assisted educational contexts (Mostert and Snowball 2013). Different peer assessment designs can also help to explore enhanced approaches to successful peer assessment (Xiao and Lucking 2008). How peer feedback can succeed in terms of its quality is also a meaningful topic. High-quality, engaging, elaborated, and justified peer feedback leads to better writing outcomes (Noroozi et al. 2016).

The citation network analysis has identified the above critical research issues in the existing literature. Current studies are still interested in peer assessment in online language courses and widely cite findings about student engagement, unique advantages, enhanced designs, and critical factors for success. These issues may largely explain the citations used in the most recent studies about writing (Hoffman 2019; Lin 2019; more specifically, argumentative writing and learning in Latifi et al. 2021; Nicolini and Cole 2019; Noroozi and Hatami 2019; Noroozi et al. 2020). The most recent studies build on the identified common citations, since these have pointed out the above specific research directions and topics in this area.

5.3. Integration of Educational Technologies and Interdisciplinary Trends

The increasing use of educational technologies and the interdisciplinary research trends are consistent with the influence of Web 2.0 on collaborative learning since around 2005 (Hegelheimer and Lee 2013). Following internet technologies, portable and function-specific technologies have emerged in the past two decades. This encourages peer assessment in online language courses to involve multiple educational technologies, as shown by recent studies published in 2021 and 2022 that closely investigate the rapidly updating and developing educational technologies listed by Haleem et al. (2022). The diversified educational technologies integrated into peer assessment are also consistent with previous reviews and meta-analyses regarding technology-assisted language learning (Burston and Giannakou 2022). As a learning and teaching strategy, peer assessment can now be realized by various technologies and can enhance multi-faceted language skill acquisition and linguistic knowledge in specific aspects.

Interdisciplinary trends in this area can explain why many of the most-used keywords belong to subjects outside language studies: studies combine peer assessment in online language courses with hot issues in psychology, reflected in keyword items such as efficacy, engagement, perception, and motivation (see Table 3 and Figure 2). Similarly, one publication can be classified into multiple categories (see Table 1). Consequently, the percentages of the top publication categories sum to over 100 percent because the same publication is counted once in each of its categories. Studies on peer assessment in online language courses now involve multiple subjects and research areas. A comparison of the predominant technologies with those applied to peer assessment in online language courses suggests that factors such as instructors’ technology literacy, support from policies and regulations, and the effectiveness of different technologies can impact the actual application of peer assessment in online language courses. The acceptance of educational technologies has been another popular research topic, especially with the wide adoption of structural equation modeling (Zhang and Yu 2022). An increasing number of factors are being included in explaining the mechanism whereby particular educational technologies can be accepted by learners and instructors and adopted in teaching practice.
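The over-100-percent total for publication categories can be reproduced with a small counting sketch. The Python snippet below is only an illustration under stated assumptions: the publication records and category labels are hypothetical toy data, not the data set analyzed in this study, and `category_shares` is an illustrative helper rather than a function of any bibliometric tool.

```python
from collections import Counter

# Hypothetical toy records (NOT the study's data): each publication
# may be classified into one or several categories.
publications = {
    "paper_a": ["Education", "Linguistics"],
    "paper_b": ["Education"],
    "paper_c": ["Education", "Psychology"],
    "paper_d": ["Linguistics", "Computer Science"],
}


def category_shares(records):
    """Return each category's share of all publications, in percent.

    A publication is counted once for every category it belongs to,
    so the shares can add up to more than 100 percent.
    """
    counts = Counter(
        category
        for categories in records.values()
        for category in set(categories)  # guard against duplicate labels
    )
    total = len(records)
    return {category: 100.0 * n / total for category, n in counts.items()}


shares = category_shares(publications)
total_share = sum(shares.values())

for category, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{category}: {share:.1f}%")
print(f"Sum of shares: {total_share:.1f}%")
```

In this toy case, four publications carry seven category labels in total, so the category shares sum to 175 percent even though no publication is counted twice within the same category.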

Based on recent investigations of educational technologies and interdisciplinary research trends, the integration of educational technologies into peer assessment in online language courses should consider at least the following aspects. First, the functional characteristics of technologies (such as interactivity, the timing and pacing of the learning process, and simulated or immersive experience) determine whether students will significantly benefit from applying technologies to particular learning contents. When the characteristics of the learning contents can be enhanced by the advantages of the educational technologies, the matched technologies and contents are more likely to promote students’ learning outcomes. For example, demonstration through video feedback may be more useful than other technologies for skill learning where simulation and visual aids matter (Shek et al. 2021).

Second, diversifying designs and updating features of the existing educational technologies may provide solutions to previous limitations, which requires instructors to keep pace with advancements in educational technology. Some educational technologies still need validation through practical experience of peer assessment applications in online contexts. A comparison of the most recent studies with the broader framework of educational technologies proposed by Haleem et al. (2022) shows that the enhanced effectiveness of peer assessment has been well established for some technologies in online language education, such as mobile applications (Chang and Lin 2020) and web-based learning communities (Lai et al. 2019). However, because attention has been unevenly distributed across technologies, some need greater attention in order to reveal their usefulness in peer assessment in online language courses. As an emerging technology, artificial intelligence chatbots have recently been introduced into language education, though their effectiveness remains controversial (Huang et al. 2022). Learning analytics has received keen interest and helped understand students’ perceptions of peer assessment (Misiejuk et al. 2021), but only limited literature can be found. For less-investigated technologies, instructors may need to consider the features and functions of the technologies, i.e., compare how the characteristics of particular educational technologies fit the teaching contents of the targeted language skills.

Third, according to studies on the influencing factors in subjects outside language education and linguistics, instructors should consider psychological, social, cultural, gender-related, and other factors. Educational technologies can demonstrate varying effects for different participants (Yu et al. 2022a, 2022b). This principle, that multi-faceted factors should be investigated and considered, is consistent with some existing studies that aimed to provide an understanding of influencing factors on online learning. For example, Yu et al. (2022a) identified psychological factors that influence learning in MOOCs, including learning engagement, students’ motivation, perceptions, and satisfaction. Wu and Yu (2022) explored achievement emotions in online learning. Learning strategy can be diversified in mobile English learning (Yu et al. 2022b), and it may also be a critical factor in peer assessment in online language learning. Such interdisciplinary studies evaluating technology-enhanced education provide an understanding of the influencing factors in educational technologies. According to these studies, solutions may be found to diversify the application of educational technologies to online language courses and enhance the effectiveness of online peer assessment.

6. Conclusions

6.1. Major Findings

This bibliometric analysis used visualization and citation network analysis to investigate peer assessment in online language courses. We found that this topic has witnessed a sharp increase in publications since 2019 and has remained popular. The publications also demonstrated international and interdisciplinary trends by introducing concepts and approaches from multiple research areas, as well as emerging educational technologies. Peer assessment is not a new learning and teaching strategy; it emerged much earlier than online learning. Although its theoretical foundations date from more than a century ago, recent studies developed and identified specific research topics and important findings, including critical factors for improving students’ engagement and feedback quality, unique advantages in online peer assessment, and designs to enhance peer assessment quality. Current studies preferred to investigate writing skills with online peer assessment, but other integrative and complex linguistic skills were also studied in terms of the effects of peer assessment. Another robust research trend was that current studies strongly welcomed emerging educational technologies. International and interdisciplinary research trends and the increasingly popular integration of educational technologies may diversify peer assessment in online language courses and enhance the effectiveness of online peer assessment activities.

6.2. Limitations

Some limitations have to be acknowledged regarding the methods of visualization, citation network, and bibliometric analyses. Compared with systematic reviews and meta-analyses, bibliometric analyses cannot examine the literature in detail, even though they can cover a much larger body of literature at a time. To address this primary limitation, this bibliometric analysis not only presents visualizations of authors, keyword items, organizations, countries, and studies, but also examines some frequently cited and most recent studies on this topic to reveal the frontier research topics and the research trend. This study is also subject to limitations of its own. First, the literature search was restricted to English publications due to the limitation of our linguistic knowledge, so studies published in other languages were not included. Second, the exploration of theoretical foundations relied on the literature search results and did not fully take a chronological perspective in tracking the origin of the related theories.

6.3. Implications for Future Research

Theoretically, the critical research directions identified in the citation network analysis will continue to encourage studies that contribute to those research issues. The overall goal will still be to improve the understanding of peer assessment in online language courses, ultimately facilitating language teaching in the era of e-learning. More practically, future studies may extend research on peer assessment in online language courses in multiple directions. Localization of peer assessment encourages researchers across the globe to explore and establish the effectiveness of peer assessment in their native languages other than English. Educational technologies are emerging and updating quickly, encouraging researchers to integrate some less-studied technologies into peer assessment in online language courses. Peer assessment, in proper forms, may also be investigated at other educational levels, extending teaching practice and the experience of implementing peer-assessment approaches. Future reviews of this topic may adopt other approaches, such as meta-analysis and systematic review, which may lead researchers to more specific analyses in this area. Studies on peer assessment in online learning contexts will also shed light on the further application of educational technologies and enhance teaching and learning outcomes.

Author Contributions

Conceptualization, Y.L. and Z.Y.; methodology, Y.L.; software, Y.L.; validation, Y.L. and Z.Y.; writing—original draft preparation, Y.L.; writing—review and editing, Y.L. and Z.Y.; visualization, Y.L.; supervision, Z.Y.; project administration, Z.Y.; funding acquisition, Z.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the 2019 MOOC of Beijing Language and Culture University (MOOC201902) (Important) “Introduction to Linguistics”; the “Introduction to Linguistics” of online and offline mixed courses in Beijing Language and Culture University in 2020; the special fund of the Beijing co-construction project—research and reform of the “Undergraduate Teaching Reform and Innovation Project” of Beijing higher education in 2020—an innovative “multilingual+” excellent talent training system (202010032003); and the research project of the graduate students of Beijing Language and Culture University, “Xi Jinping: The Governance of China” (SJTS202108).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to extend sincere gratitude to their funders, journal editors, anonymous reviewers, and all other participants who offered help to review and publish this paper. The first author would like to deliver special thanks to his supervisors in the master’s program.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Adachi, Chie, Joanna Hong-Meng Tai, and Phillip Dawson. 2018. Academics’ perceptions of the benefits and challenges of self and peer assessment in higher education. Assessment & Evaluation in Higher Education 43: 294–306.

- Adedoyin, Olasile Babatunde, and Emrah Soykan. 2020. COVID-19 pandemic and online learning: The challenges and opportunities. Interactive Learning Environments, 1–13.

- Akbari, Elham, Ahmad Naderi, Robert-Jan Simons, and Albert Pilot. 2016. Student engagement and foreign language learning through online social networks. Asian-Pacific Journal of Second and Foreign Language Education 1: 4.

- Ali, Amira D. 2021. Using Google Docs to enhance students’ collaborative translation and engagement. Journal of Information Technology Education 20: 503–28.

- Ashenafi, Michael Mogessie. 2017. Peer-assessment in higher education–twenty-first century practices, challenges and the way forward. Assessment & Evaluation in Higher Education 42: 226–51.

- Awada, Ghada M., and Nuwar Mawlawi Diab. 2021. Effect of online peer review versus face-to-face peer review on argumentative writing achievement of EFL learners. Computer Assisted Language Learning 36: 238–56.

- Belson, Sarah Irvine, Daniel Hartmann, and Jennifer Sherman. 2013. Digital note taking: The use of electronic pens with students with specific learning disabilities. Journal of Special Education Technology 28: 13–24.

- Burston, Jack, and Konstantinos Giannakou. 2022. MALL language learning outcomes: A comprehensive meta-analysis 1994–2019. ReCALL 34: 147–68.

- Chang, Ching, and Hao-Chiang Koong Lin. 2020. Effects of a mobile-based peer-assessment approach on enhancing language-learners’ oral proficiency. Innovations in Education and Teaching International 57: 668–79.

- Chen, Si, Fan Ouyang, and Pengcheng Jiao. 2022. Promoting student engagement in online collaborative writing through a student-facing social learning analytics tool. Journal of Computer Assisted Learning 38: 192–208.

- Cheong, Choo Mui, Na Luo, Xinhua Zhu, Qi Lu, and Wei Wei. 2022. Self-assessment complements peer assessment for undergraduate students in an academic writing task. Assessment & Evaluation in Higher Education 48: 135–48.

- Chien, Shu-Yun, Gwo-Jen Hwang, and Morris Siu-Yung Jong. 2020. Effects of peer assessment within the context of spherical video-based virtual reality on EFL students’ English-speaking performance and learning perceptions. Computers & Education 146: 103751.

- Cho, Kwangsu, and Christian D. Schunn. 2007. Scaffolded writing and rewriting in the discipline: A web-based reciprocal peer review system. Computers & Education 48: 409–26.

- Choi, Eunjeong, Diane L. Schallert, Min Jung Jee, and Jungmin Ko. 2021. Transpacific telecollaboration and L2 writing: Influences of interpersonal dynamics on peer feedback and revision uptake. Journal of Second Language Writing 54: 100855.

- Fang, Jian-Wen, Gwo-Jen Hwang, and Ching-Yi Chang. 2022. Advancement and the foci of investigation of MOOCs and open online courses for language learning: A review of journal publications from 2009 to 2018. Interactive Learning Environments 30: 1351–69.

- Ge, Zi-Gang. 2022. Exploring the effect of video feedback from unknown peers on e-learners’ English-Chinese translation performance. Computer Assisted Language Learning 35: 169–89.

- Ghahari, Shima, and Farzaneh Farokhnia. 2018. Peer versus teacher assessment: Implications for CAF triad language ability and critical reflections. International Journal of School & Educational Psychology 6: 124–37.

- Gielen, Mario, and Bram De Wever. 2015. Scripting the role of assessor and assessee in peer assessment in a wiki environment: Impact on peer feedback quality and product improvement. Computers & Education 88: 370–86.

- Haleem, Abid, Mohd Javaid, Mohd Asim Qadri, and Rajiv Suman. 2022. Understanding the role of digital technologies in education: A review. Sustainable Operations and Computers 3: 275–85.

- Hampel, Regine, and Ursula Stickler. 2012. The use of videoconferencing to support multimodal interaction in an online language classroom. ReCALL 24: 116–37.

- Haro, Anahuac Valero, Omid Noroozi, Harm J. A. Biemans, and Martin Mulder. 2019. The effects of an online learning environment with worked examples and peer feedback on students’ argumentative essay writing and domain-specific knowledge acquisition in the field of biotechnology. Journal of Biological Education 53: 390–98.

- Hegelheimer, Volker, and Jooyoung Lee. 2013. The role of technology in teaching and researching writing. In Contemporary Computer-Assisted Language Learning. Edited by Michael Thomas, Hayo Reinders and Mark Warschauer. London: Bloomsbury Publishing Plc, pp. 287–302.

- Hoffman, Bobby. 2019. The influence of peer assessment training on assessment knowledge and reflective writing skill. Journal of Applied Research in Higher Education 11: 863–75.

- Huang, Weijiao, Khe Foon Hew, and Luke K. Fryer. 2022. Chatbots for language learning—Are they really useful? A systematic review of chatbot-supported language learning. Journal of Computer Assisted Learning 38: 237–57.

- Jung, Jaewon, Yoonhee Shin, and Joerg Zumbach. 2021. The effects of pre-training types on cognitive load, collaborative knowledge construction and deep learning in a computer-supported collaborative learning environment. Interactive Learning Environments 29: 1163–75.

- Laal, Marjan, and Seyed Mohammad Ghodsi. 2012. Benefits of collaborative learning. Procedia-Social and Behavioral Sciences 31: 486–90.

- Lai, Chih-Hung, Hung-Wei Lin, Rong-Mu Lin, and Pham Duc Tho. 2019. Effect of peer interaction among online learning community on learning engagement and achievement. International Journal of Distance Education Technologies 17: 66–77.

- Latifi, Saeed, Omid Noroozi, Javad Hatami, and Harm J. A. Biemans. 2021. How does online peer feedback improve argumentative essay writing and learning? Innovations in Education and Teaching International 58: 195–206.

- Li, Hongli, Yao Xiong, Charles Vincent Hunter, Xiuyan Guo, and Rurik Tywoniw. 2020. Does peer assessment promote student learning? A meta-analysis. Assessment & Evaluation in Higher Education 45: 193–211.

- Liang, Jyh-Chong, and Chin-Chung Tsai. 2010. Learning through science writing via online peer assessment in a college biology course. The Internet and Higher Education 13: 242–47.

- Lin, Chi-Jen. 2019. An online peer assessment approach to supporting mind-mapping flipped learning activities for college English writing courses. Journal of Computers in Education 6: 385–415.

- Liu, Ngar-Fun, and David Robert Carless. 2006. Peer feedback: The learning element of peer assessment. Teaching in Higher Education 11: 279–90.

- Liu, Xiongyi, Lan Li, and Zhihong Zhang. 2018. Small group discussion as a key component in online assessment training for enhanced student learning in web-based peer assessment. Assessment & Evaluation in Higher Education 43: 207–22.

- Lundstrom, Kristi, and Wendy Baker. 2009. To give is better than to receive: The benefits of peer review to the reviewer’s own writing. Journal of Second Language Writing 18: 30–43.

- Lv, Xiaoxuan, Wei Ren, and Yue Xie. 2021. The effects of online feedback on ESL/EFL writing: A meta-analysis. The Asia-Pacific Education Researcher 30: 643–53.

- Mardian, Fatemeh, and Zohre Nafissi. 2022. Synchronous computer-mediated corrective feedback and EFL learners’ grammatical knowledge development: A sociocultural perspective. Iranian Journal of Language Teaching Research 10: 115–36.

- Min, Hui-Tzu. 2005. Training students to become successful peer reviewers. System 33: 293–308.

- Min, Hui-Tzu. 2006. The effects of trained peer review on EFL students’ revision types and writing quality. Journal of Second Language Writing 15: 118–41.

- Misiejuk, Kamila, Barbara Wasson, and Kjetil Egelandsdal. 2021. Using learning analytics to understand student perceptions of peer feedback. Computers in Human Behavior 117: 106658.

- Mostert, Markus, and Jen D. Snowball. 2013. Where angels fear to tread: Online peer-assessment in a large first-year class. Assessment & Evaluation in Higher Education 38: 674–86.

- Nicolini, Kristine M., and Andrew W. Cole. 2019. Measuring peer feedback in face-to-face and online public-speaking workshops. Communication Teacher 33: 80–93.

- Noroozi, Omid, and Javad Hatami. 2019. The effects of online peer feedback and epistemic beliefs on students’ argumentation-based learning. Innovations in Education and Teaching International 56: 548–57.

- Noroozi, Omid, Harm Biemans, and Martin Mulder. 2016. Relations between scripted online peer feedback processes and quality of written argumentative essay. The Internet and Higher Education 31: 20–31.

- Noroozi, Omid, Javad Hatami, Arash Bayat, Stan Van Ginkel, Harm J. A. Biemans, and Martin Mulder. 2020. Students’ online argumentative peer feedback, essay writing, and content learning: Does gender matter? Interactive Learning Environments 28: 698–712.

- Nunes, Andreia, Teresa Limpo, Carolina Cordeiro, and São Luís Castro. 2022. Effectiveness of automated writing evaluation systems in school settings: A systematic review of studies from 2000 to 2020. Journal of Computer Assisted Learning 38: 599–620.

- Odo, Dennis Murphy. 2022. An action research investigation of the impact of using online feedback videos to promote self-reflection on the microteaching of preservice EFL teachers. Systemic Practice and Action Research 35: 327–43.

- Paré, Dwayne E., and Steve Joordens. 2008. Peering into large lectures: Examining peer and expert mark agreement using peerScholar, an online peer assessment tool. Journal of Computer Assisted Learning 24: 526–40.

- Payant, Caroline, and Michael Zuniga. 2022. Learners’ flow experience during peer revision in a virtual writing course during the global pandemic. System 105: 102715.

- Pham, V. P. Ho. 2021. The effects of lecturer’s model e-comments on graduate students’ peer e-comments and writing revision. Computer Assisted Language Learning 34: 324–57.

- Piaget, Jean. 1929. The Child’s Conception of the World. New York: Harcourt, Brace Jovanovich.

- Reiber-Kuijpers, Manon, Marijke Kral, and Paulien Meijer. 2021. Digital reading in a second or foreign language: A systematic literature review. Computers & Education 163: 104115.

- Roberts, Tim S. 2005. Computer-supported collaborative learning in higher education. In Computer-Supported Collaborative Learning in Higher Education. Hershey: IGI Global, pp. 1–18.

- Roberts, Tim S., ed. 2004. Online Collaborative Learning: Theory and Practice. Hershey: IGI Global.

- Saeed, Murad Abdu, Kamila Ghazali, and Musheer Abdulwahid Aljaberi. 2018. A review of previous studies on ESL/EFL learners’ interactional feedback exchanges in face-to-face and computer-assisted peer review of writing. International Journal of Educational Technology in Higher Education 15: 9504.

- Saidalvi, Aminabibi, and Adlina Abdul Samad. 2019. Online peer motivational feedback in a public speaking course. GEMA Online Journal of Language Studies 19: 258–77.

- Salem, Ashraf Atta, and Aiza Shabbir. 2022. Multimedia Presentations through digital storytelling for sustainable development of EFL learners’ argumentative writing skills, self-directed learning skills & learner autonomy. Frontiers in Education 7: 884709.

- Shek, Mabel Mei-Po, Kim-Chau Leung, and Peter Yee-Lap To. 2021. Using a video annotation tool to enhance student-teachers’ reflective practices and communication competence in consultation practices through a collaborative learning community. Education and Information Technologies 26: 4329–52.

- Siow, Lee-Fong. 2015. Students’ perceptions on self-and peer-assessment in enhancing learning experience. Malaysian Online Journal of Educational Sciences 3: 21–35.

- Stovner, Roar Bakken, and Kirsti Klette. 2022. Teacher feedback on procedural skills, conceptual understanding, and mathematical practices: A video study in lower secondary mathematics classrooms. Teaching and Teacher Education 110: 103593.

- Sun, Peijian Paul, and Lawrence Jun Zhang. 2022. Effects of translanguaging in online peer feedback on Chinese university English-as-a-foreign-language students’ second language writing performance. RELC Journal 53: 325–41.

- Tang, Eunice, Lily Cheng, and Ross Ng. 2022. Online writing community: What can we learn from failure? RELC Journal 53: 101–17.

- Tian, Lili, Qisheng Liu, and Xingxing Zhang. 2022. Self-regulated writing strategy use when revising upon automated, peer, and teacher feedback in an online English as a foreign language writing course. Frontiers in Psychology 13: 873170. [Google Scholar] [CrossRef]

- Topping, Keith J. 1998. Peer assessment between students in colleges and universities. Review of Educational Research 68: 249–76. [Google Scholar] [CrossRef]

- Topping, Keith J. 2009. Peer assessment. Theory into Practice 48: 20–27. [Google Scholar] [CrossRef]

- Topping, Keith J. 2018. Using Peer Assessment to Inspire Reflection and Learning. London: Routledge. [Google Scholar]

- Topping, Keith J., Elaine F. Smith, Ian Swanson, and Audrey Elliot. 2000. Formative peer assessment of academic writing between postgraduate students. Assessment & Evaluation in Higher Education 25: 149–69. [Google Scholar] [CrossRef]

- Tran, Thi Thanh Thao, and Qing Ma. 2021. Using formative assessment in a blended EFL listening course: Student perceptions of effectiveness and challenges. International Journal of Computer-Assisted Language Learning and Teaching 11: 17–38.

- Trautmann, Nancy M. 2009. Interactive learning through web-mediated peer review of student science reports. Educational Technology Research and Development 57: 685–704.

- Tsunemoto, Aki, Pavel Trofimovich, Josée Blanchet, Juliane Bertrand, and Sara Kennedy. 2022. Effects of benchmarking and peer-assessment on French learners’ self-assessments of accentedness, comprehensibility, and fluency. Foreign Language Annals 55: 135–54.

- Tuzi, Frank. 2004. The impact of e-feedback on the revisions of L2 writers in an academic writing course. Computers and Composition 21: 217–35.

- Van den Bos, Anne Hester, and Esther Tan. 2019. Effects of anonymity on online peer review in second-language writing. Computers & Education 142: 103638.

- Van Eck, Nees Jan, and Ludo Waltman. 2010. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 84: 523–38.

- Van Eck, Nees Jan, and Ludo Waltman. 2014. CitNetExplorer: A new software tool for analyzing and visualizing citation networks. Journal of Informetrics 8: 802–23.

- Van Eck, Nees Jan, and Ludo Waltman. 2017. Citation-based clustering of publications using CitNetExplorer and VOSviewer. Scientometrics 111: 1053–70.

- Vygotsky, Lev Semenovich, and Michael Cole. 1978. Mind in Society: Development of Higher Psychological Processes. Cambridge: Harvard University Press.

- Williams, Cheri, and Sandra Beam. 2019. Technology and writing: Review of research. Computers & Education 128: 227–42.

- Wright, Clare, and Clare Furneaux. 2021. ‘I Am Proud of Myself’: Student satisfaction and achievement on an academic English writing MOOC. International Journal of Computer-Assisted Language Learning and Teaching 11: 21–37.

- Wu, Rong, and Zhonggen Yu. 2022. Exploring the effects of achievement emotions on online learning outcomes: A systematic review. Frontiers in Psychology 13: 977931.

- Xiao, Yun, and Robert Lucking. 2008. The impact of two types of peer assessment on students’ performance and satisfaction within a Wiki environment. The Internet and Higher Education 11: 186–93.

- Yang, Miao, Richard Badger, and Zhen Yu. 2006. A comparative study of peer and teacher feedback in a Chinese EFL writing class. Journal of Second Language Writing 15: 179–200.

- Yu, Zhonggen, Wei Xu, and Paisan Sukjairungwattana. 2022a. A meta-analysis of eight factors influencing MOOC-based learning outcomes across the world. Interactive Learning Environments, 1–20.

- Yu, Zhonggen, Wei Xu, and Paisan Sukjairungwattana. 2022b. Motivation, learning strategies, and outcomes in mobile English language learning. The Asia-Pacific Education Researcher, 1–16.

- Zhang, Han, Ashleigh Southam, Mik Fanguy, and Jamie Costley. 2021. Understanding how embedded peer comments affect student quiz scores, academic writing and lecture note-taking accuracy. Interactive Technology and Smart Education 19: 222–35.

- Zhang, Kexin, and Zhonggen Yu. 2022. Extending the UTAUT model of gamified English vocabulary applications by adding new personality constructs. Sustainability 14: 6259.

- Zhang, Meng, Qiaoling He, Jianxia Du, Fangtong Liu, and Bosu Huang. 2022. Learners’ perceived advantages and social-affective dispositions toward online peer feedback in academic writing. Frontiers in Psychology 13: 973478.

- Zheng, Lanqin, Nian-Shing Chen, Panpan Cui, and Xuan Zhang. 2019. A systematic review of technology-supported peer assessment research: An activity theory approach. International Review of Research in Open and Distributed Learning 20: 168–91.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).