LEO Object’s Light-Curve Acquisition System and Their Inversion for Attitude Reconstruction

Abstract

1. Introduction

2. System Description

3. Photometric Routine

3.1. Reference Star Selection Criterion

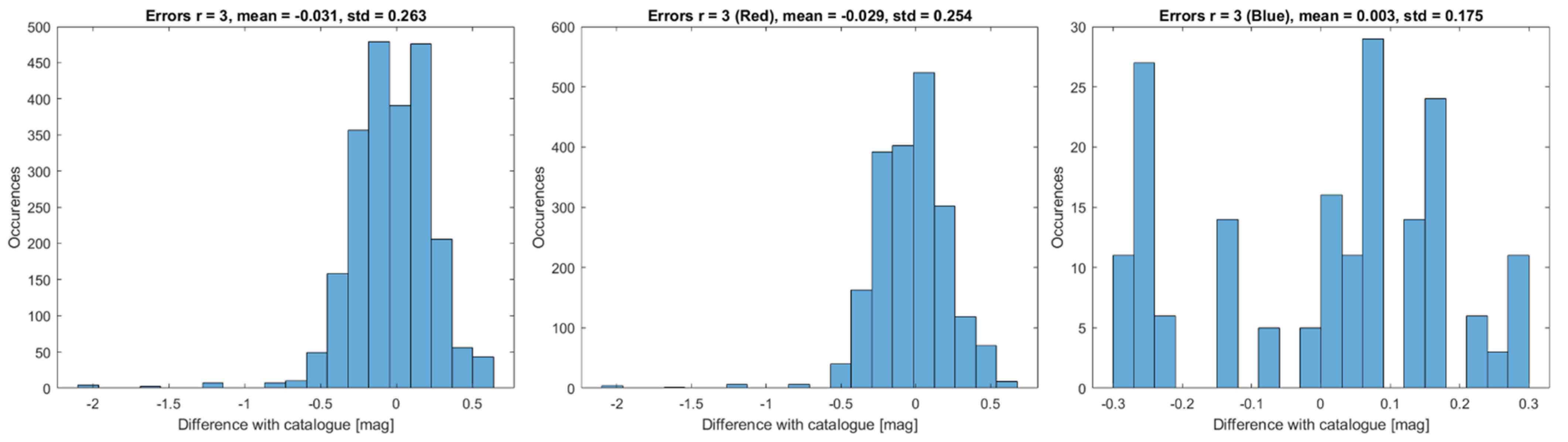

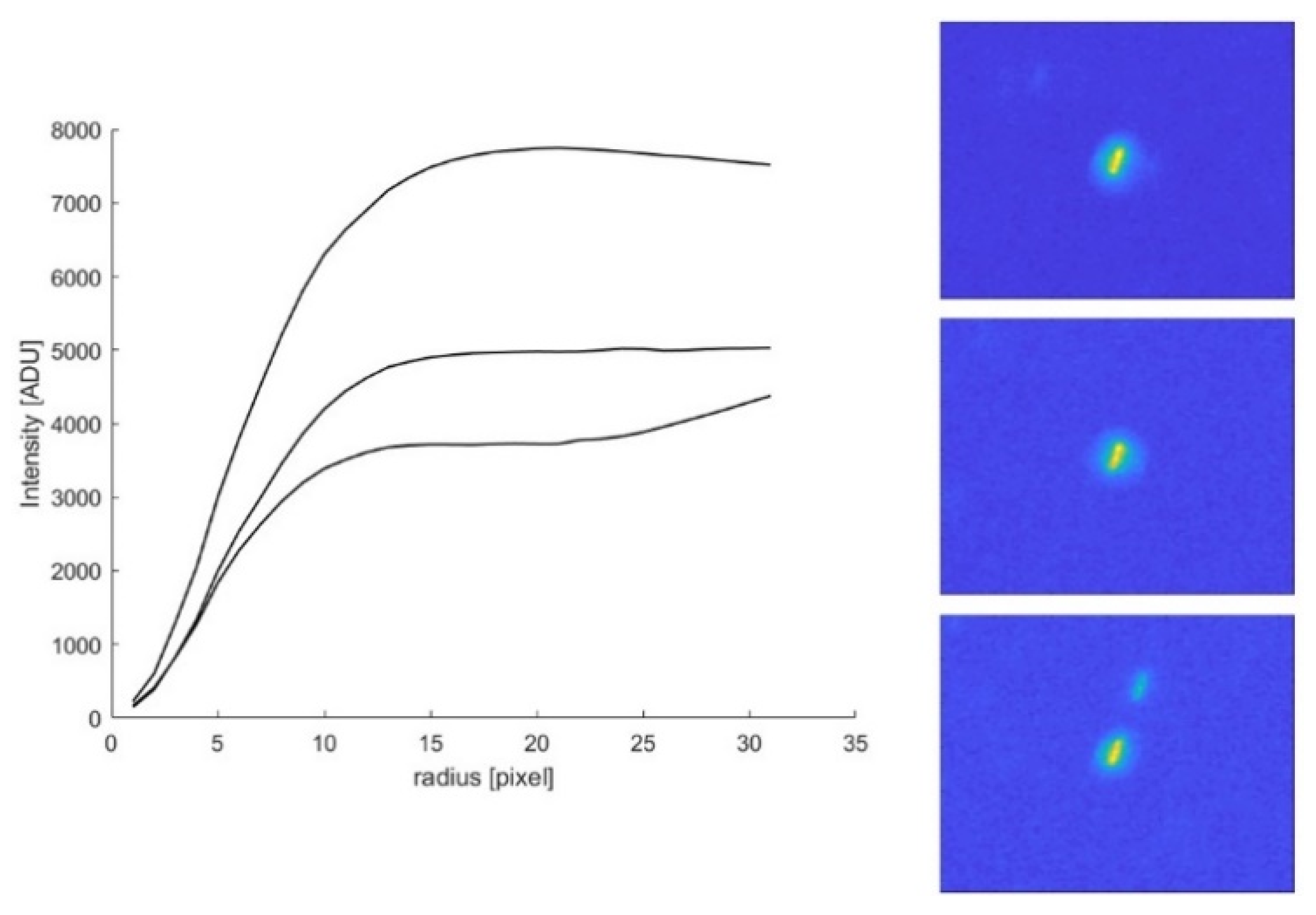

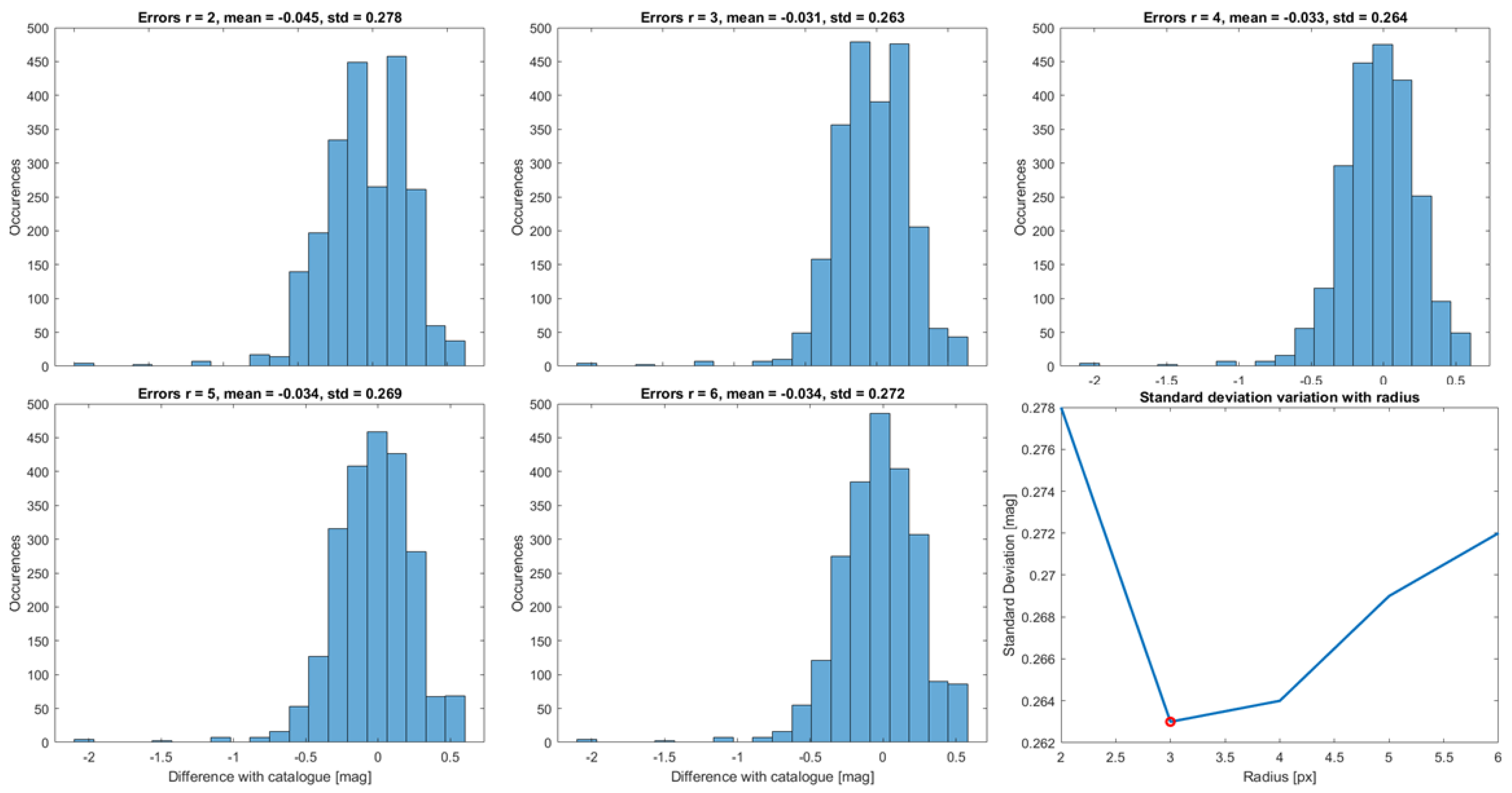

3.2. Star Intensity and Background Estimation

3.3. Stars Magnitude

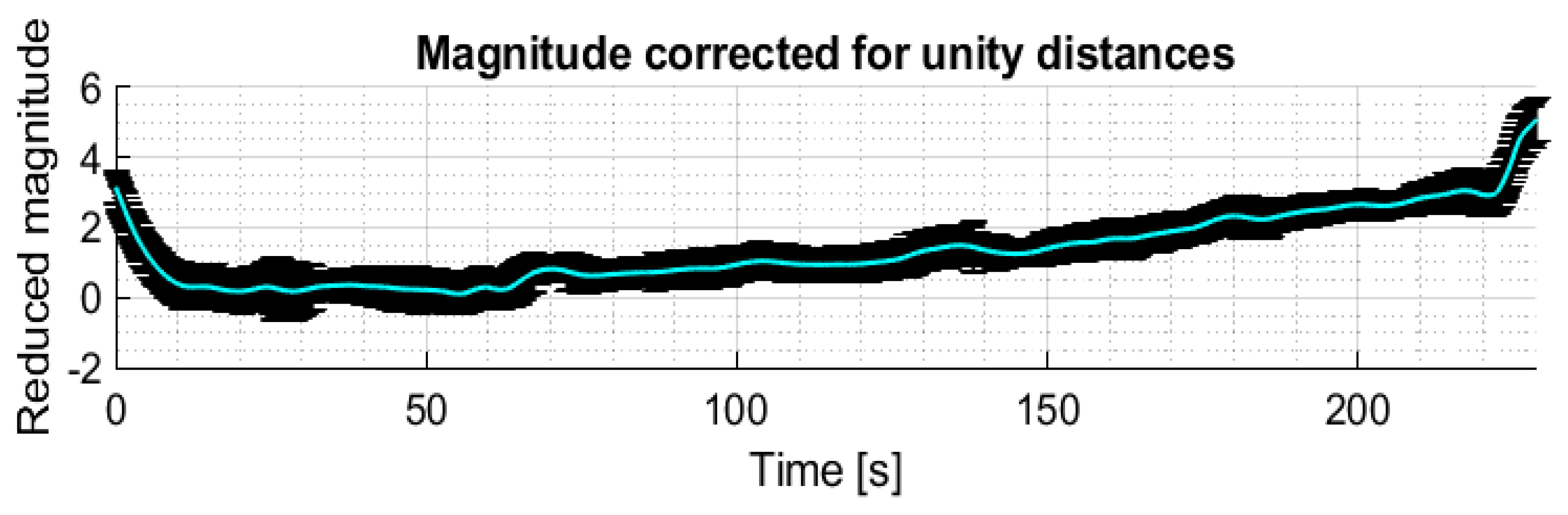

3.4. Object Magnitude Variation

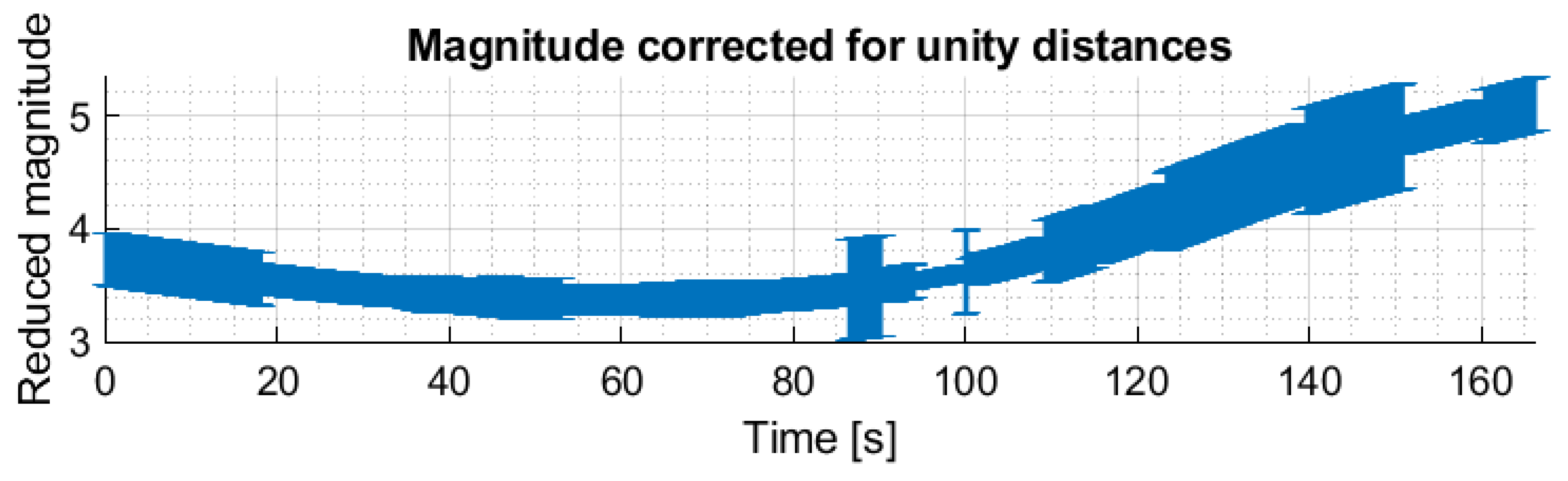

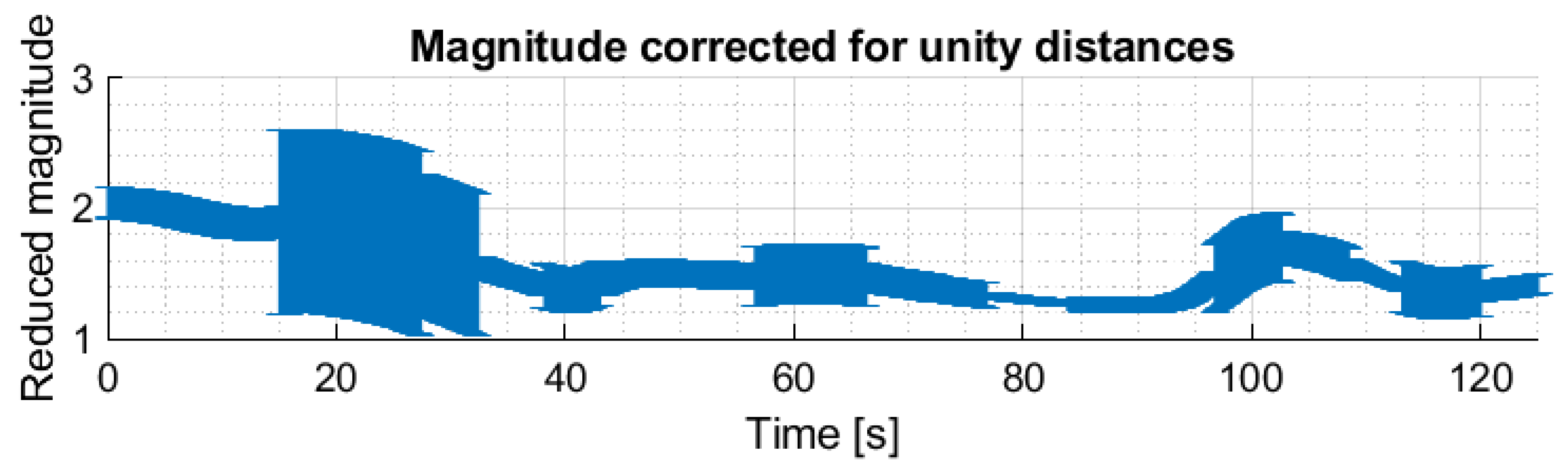

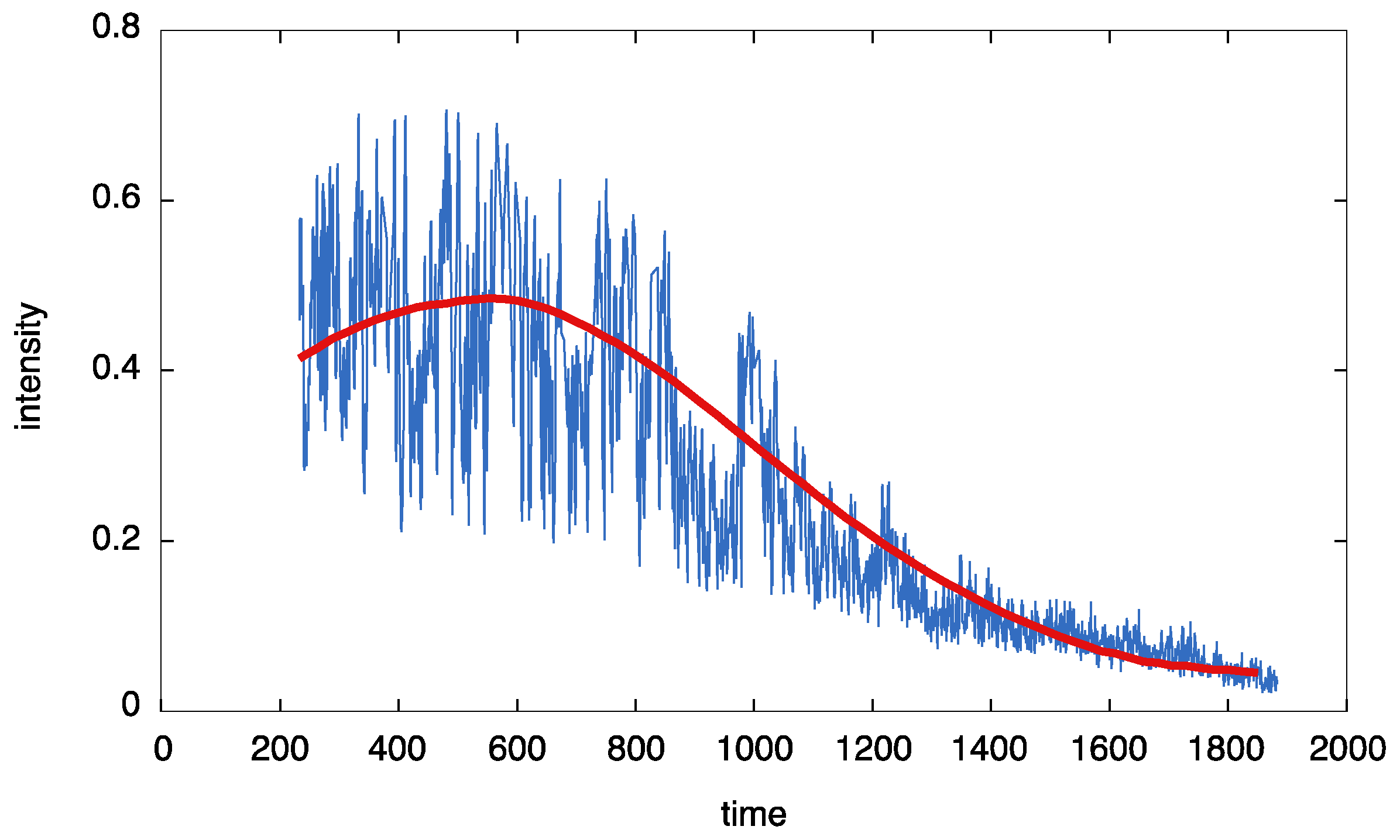

3.5. Light-Curves Examples

4. Attitude Reconstruction

4.1. Overview of the Method

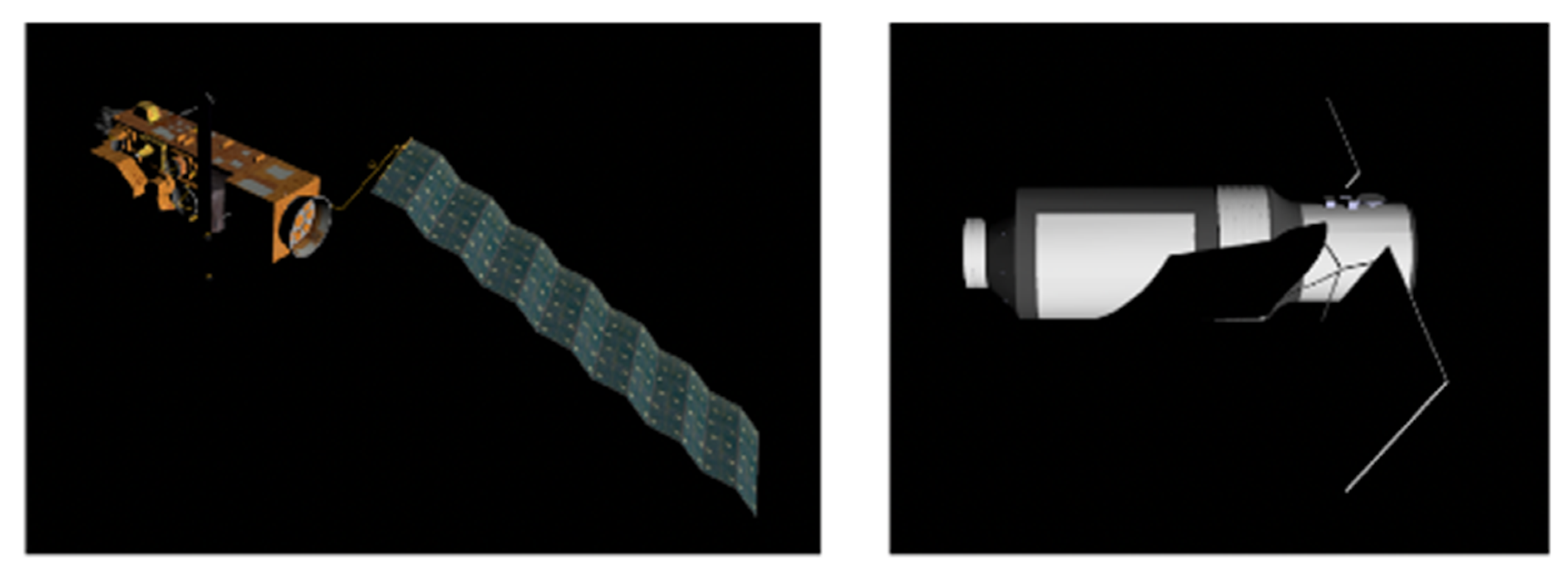

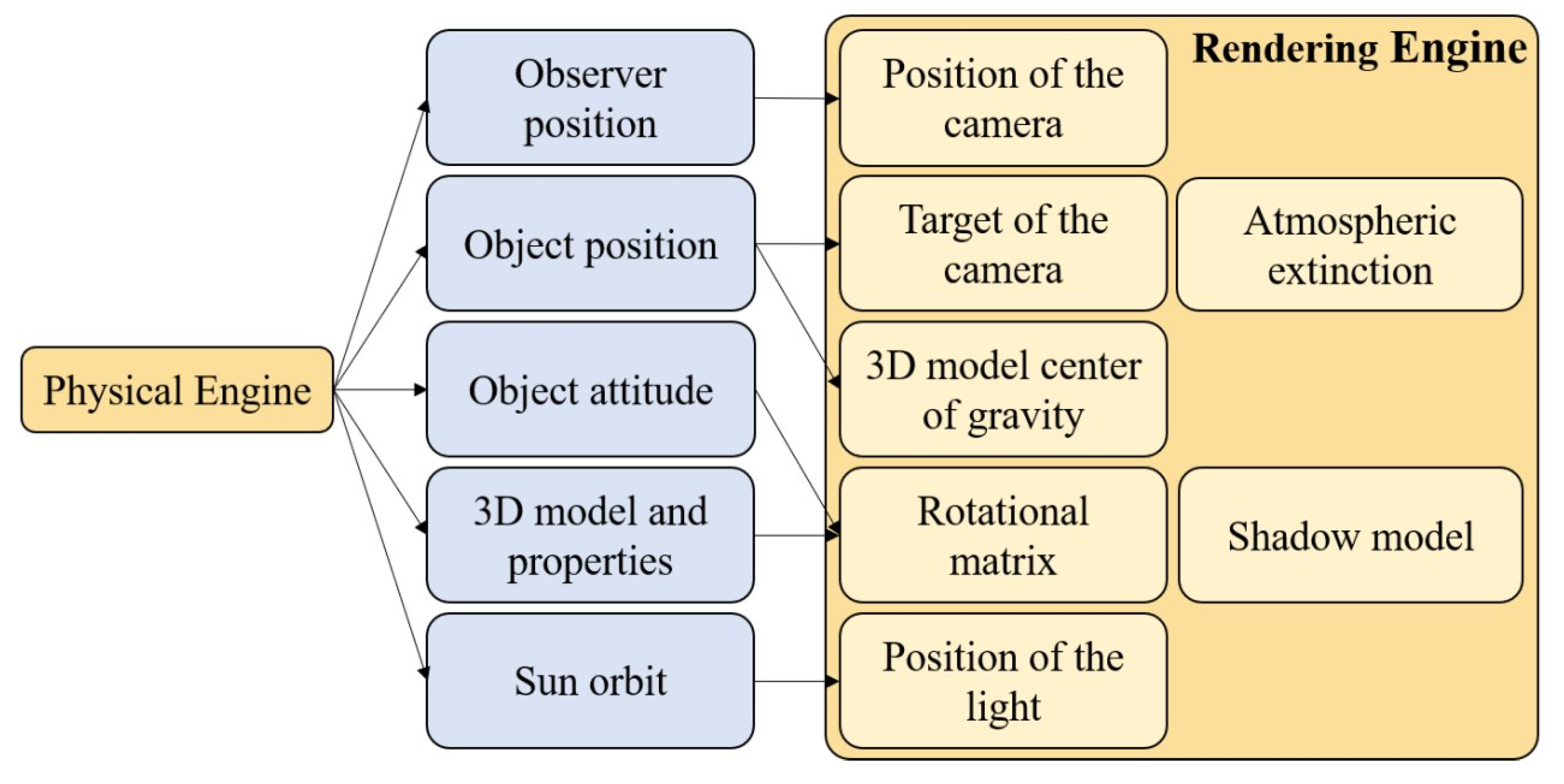

4.2. Virtual Reality Simulation

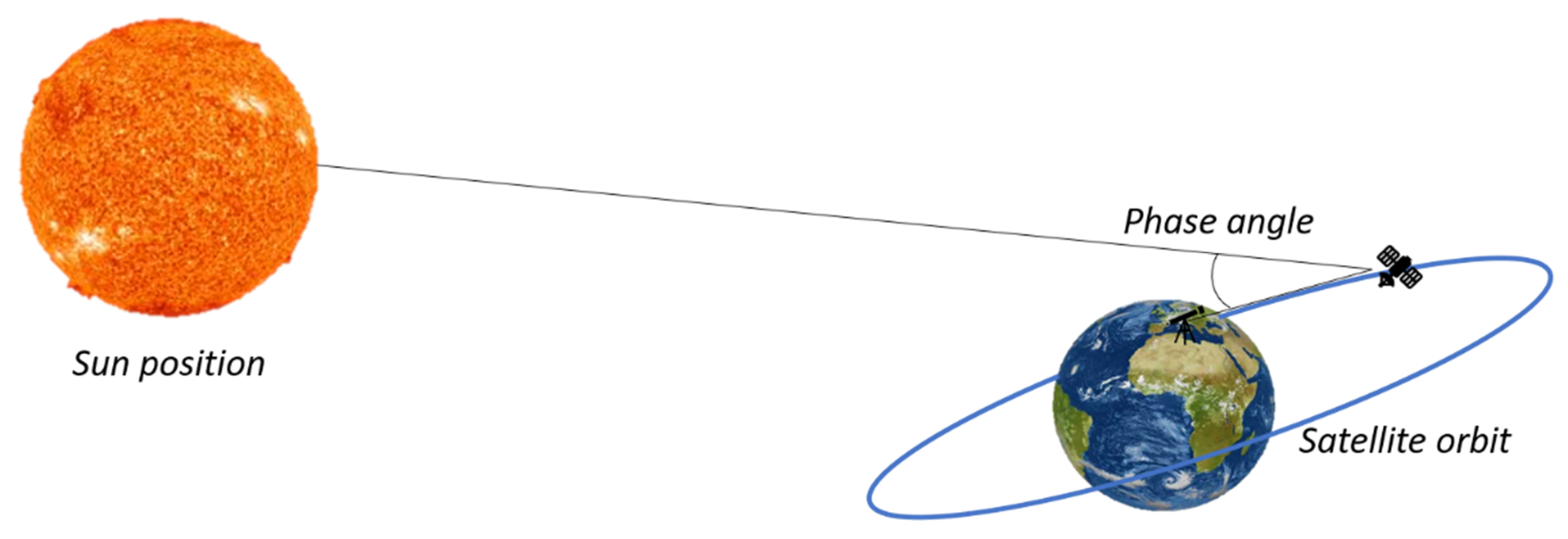

4.2.1. Physical Engine

- Orbital position;

- Attitude;

- Observer position (i.e., ground station);

- Position of the sun.
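
As a minimal sketch of how three of the quantities listed above combine, the Sun-object-observer phase angle can be computed from the object, observer, and Sun positions once they are expressed in a common frame. The function name `phase_angle` and the plain-tuple vector representation are assumptions made for illustration, not the paper's implementation; attitude is not used here because it affects the reflected intensity rather than this geometric angle.

```python
import math


def phase_angle(r_obj, r_obs, r_sun):
    """Return the Sun-object-observer angle in radians.

    All three arguments are 3-vectors (x, y, z) in the same reference
    frame and the same length unit. Hypothetical helper for illustration.
    """
    # Vectors from the object towards the Sun and towards the observer.
    to_sun = [s - o for s, o in zip(r_sun, r_obj)]
    to_obs = [g - o for g, o in zip(r_obs, r_obj)]

    dot = sum(a * b for a, b in zip(to_sun, to_obs))
    norm_sun = math.sqrt(sum(a * a for a in to_sun))
    norm_obs = math.sqrt(sum(b * b for b in to_obs))

    # Clamp to [-1, 1] so rounding errors cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / (norm_sun * norm_obs)))
    return math.acos(cos_angle)
```

A phase angle near zero means the observer sees the object almost fully lit, whereas an angle near π means the object is back-lit and mostly dark as seen from the ground station.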

4.2.2. Rendering Engine

4.3. Automatic Attitude Determination

4.4. Method Validation

4.4.1. Synthetic Data

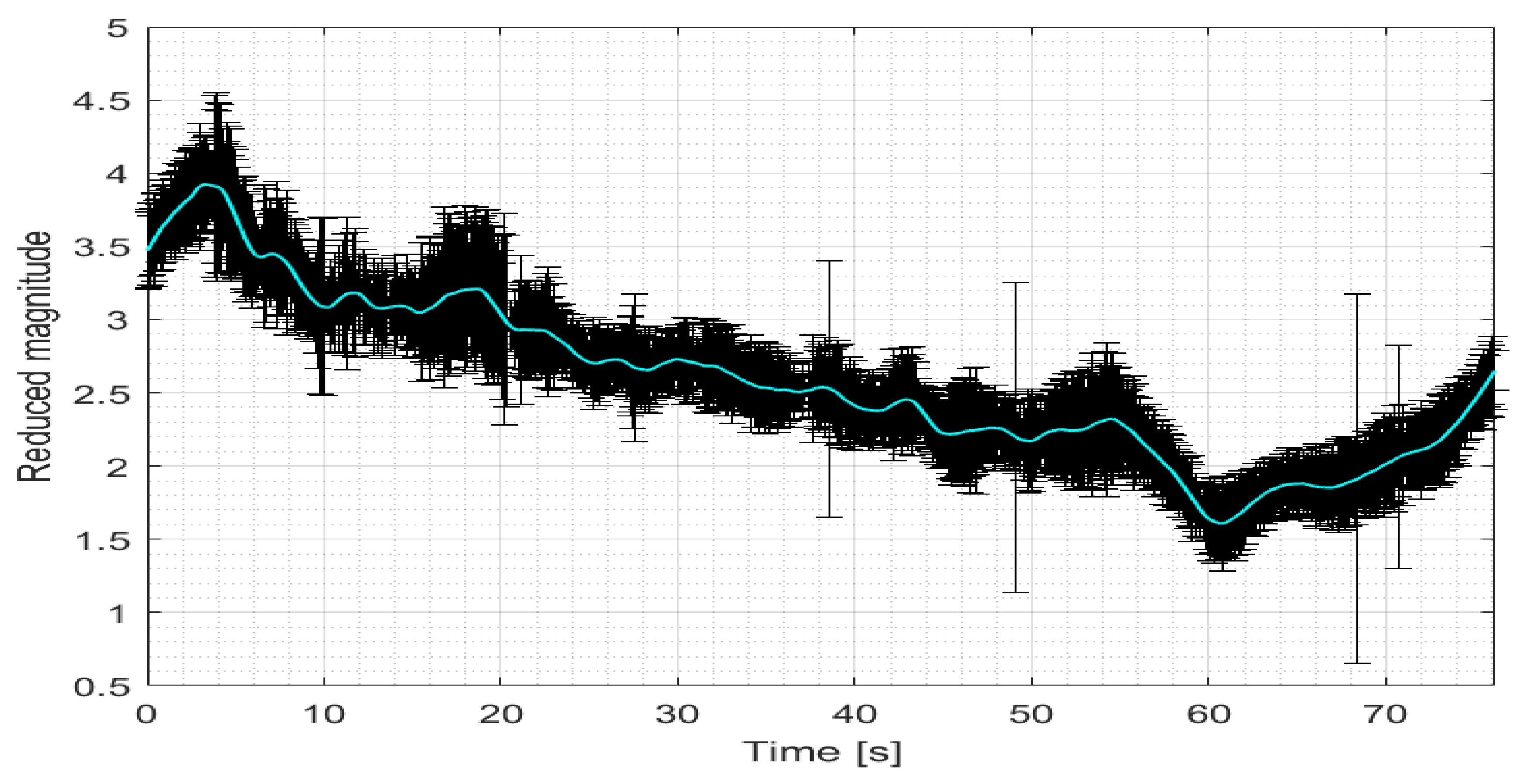

4.4.2. Real Case Test

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| RESDOS/SCUDO | | |
|---|---|---|
| Sensor | Type | sCMOS |
| | Resolution | 5.5 Mpx |
| | Sensor Diagonal | 22 mm |
| | Max Fps | 100 |
| Telescope | Focal Length | 750 mm |
| | Diameter | 150 mm |
| | Mount Type | Equatorial |
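
Two derived quantities follow from the values in this table: the focal ratio and the diagonal field of view. The sketch below assumes an ideal thin-lens model with no focal reducer or extender in the optical path, which the table does not state; the constant and function names are hypothetical.

```python
import math

# Values taken from the RESDOS/SCUDO table above.
FOCAL_LENGTH_MM = 750.0
APERTURE_MM = 150.0
SENSOR_DIAGONAL_MM = 22.0


def focal_ratio(focal_length_mm, aperture_mm):
    """Focal ratio (f-number) of the telescope."""
    return focal_length_mm / aperture_mm


def diagonal_fov_deg(sensor_diagonal_mm, focal_length_mm):
    """Diagonal field of view in degrees for an ideal thin-lens system."""
    half_angle = math.atan(sensor_diagonal_mm / (2.0 * focal_length_mm))
    return math.degrees(2.0 * half_angle)


f_number = focal_ratio(FOCAL_LENGTH_MM, APERTURE_MM)
fov = diagonal_fov_deg(SENSOR_DIAGONAL_MM, FOCAL_LENGTH_MM)
print(f"f/{f_number:.1f}, diagonal field of view {fov:.2f} deg")
```

Under these assumptions the system works at f/5 with a diagonal field of view of roughly 1.7 degrees.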

| | φ0 | θ0 | ψ0 | p0 | q0 | r0 |
|---|---|---|---|---|---|---|
| Real | 10.0 | 60.0 | 210.0 | 5.0 | 1.5 | 0.5 |
| Found | 10.5 | 61.7 | 209.3 | 5.2 | 2.7 | 0.3 |
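
The gap between the real and the recovered initial conditions can be made explicit with a per-parameter absolute error. This is only a worked reading of the table above: the units are not stated in this excerpt, so the errors are in whatever units the table uses, and the ASCII labels and helper name are assumptions for illustration.

```python
# Values copied from the table above; labels are ASCII stand-ins for
# the column headers (phi0, theta0, psi0, p0, q0, r0).
LABELS = ["phi0", "theta0", "psi0", "p0", "q0", "r0"]
REAL = [10.0, 60.0, 210.0, 5.0, 1.5, 0.5]
FOUND = [10.5, 61.7, 209.3, 5.2, 2.7, 0.3]


def absolute_errors(real, found):
    """Element-wise absolute difference between two equal-length lists."""
    return [abs(f - r) for r, f in zip(real, found)]


errors = dict(zip(LABELS, absolute_errors(REAL, FOUND)))
worst = max(errors, key=errors.get)

for name, err in errors.items():
    print(f"{name}: {err:.1f}")
print("largest absolute error:", worst)
```

In absolute terms the largest discrepancy is in θ0 (1.7), followed by q0 (1.2); relative to its true value, q0 is the least well recovered parameter.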

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Piergentili, F.; Zarcone, G.; Parisi, L.; Mariani, L.; Hossein, S.H.; Santoni, F. LEO Object’s Light-Curve Acquisition System and Their Inversion for Attitude Reconstruction. Aerospace 2021, 8, 4. https://doi.org/10.3390/aerospace8010004
