The Industry Internet of Things (IIoT) as a Methodology for Autonomous Diagnostics in Aerospace Structural Health Monitoring

Abstract

1. Introduction

2. Methods

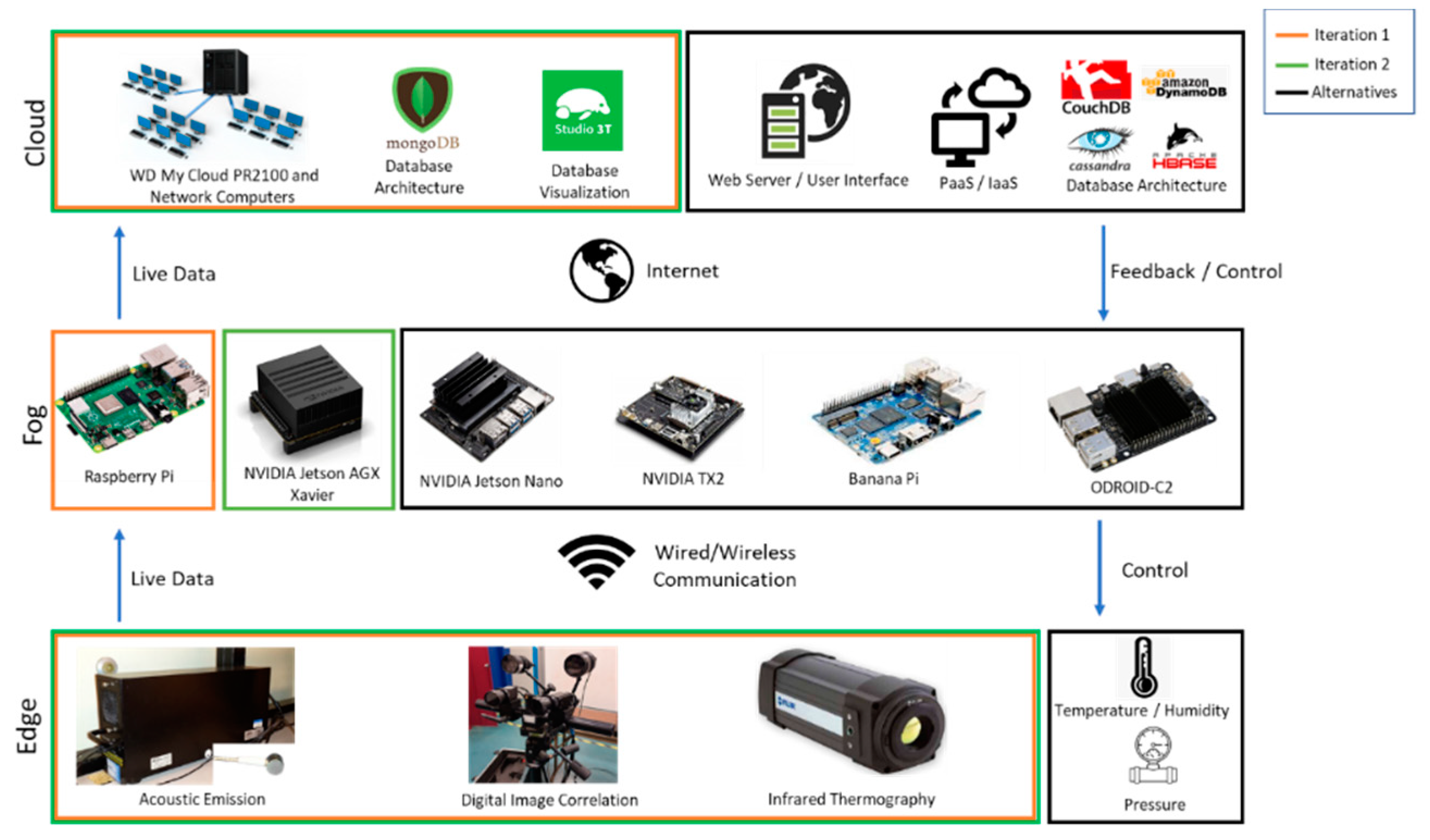

2.1. IoT Hardware & Software

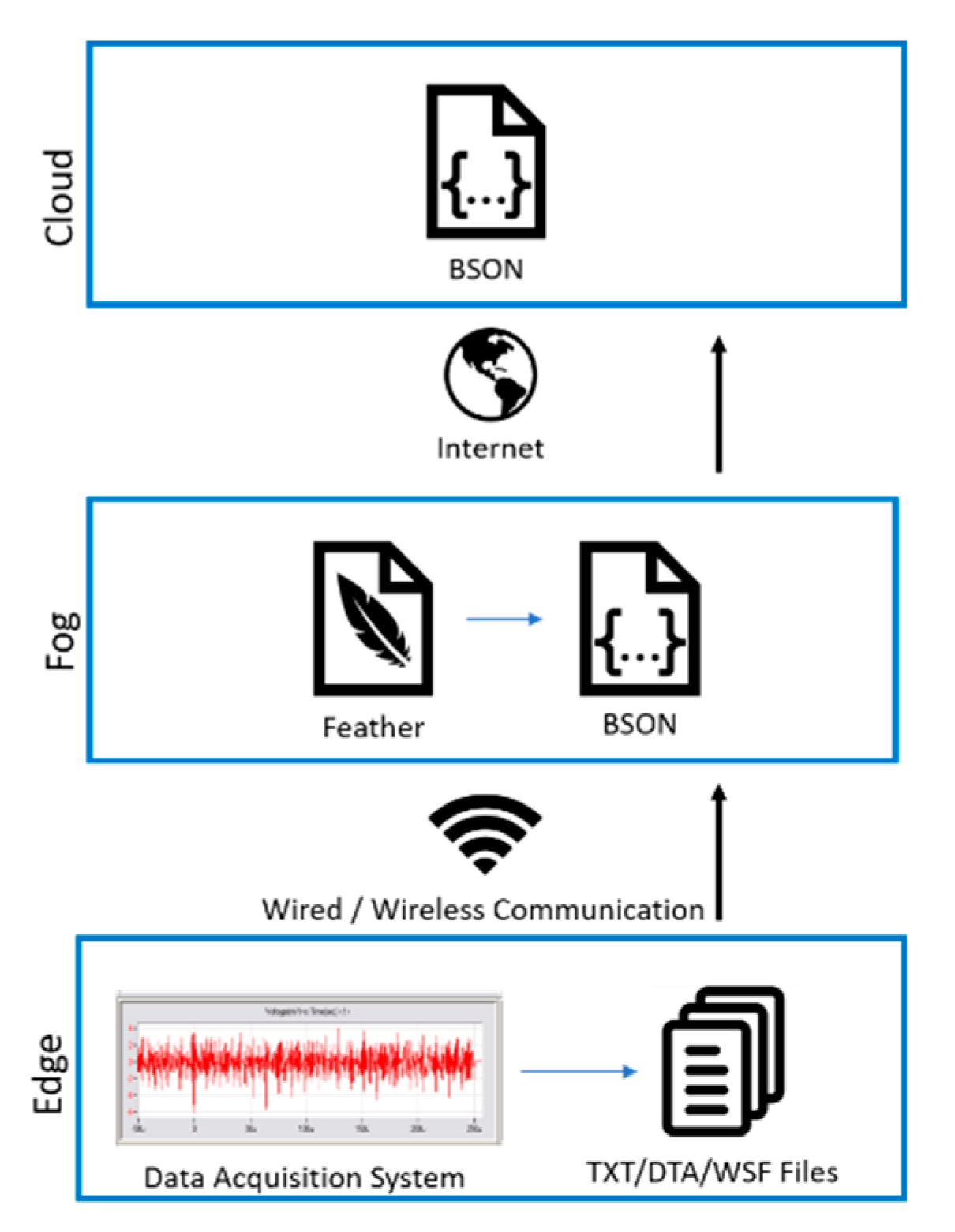

2.2. Data Structure

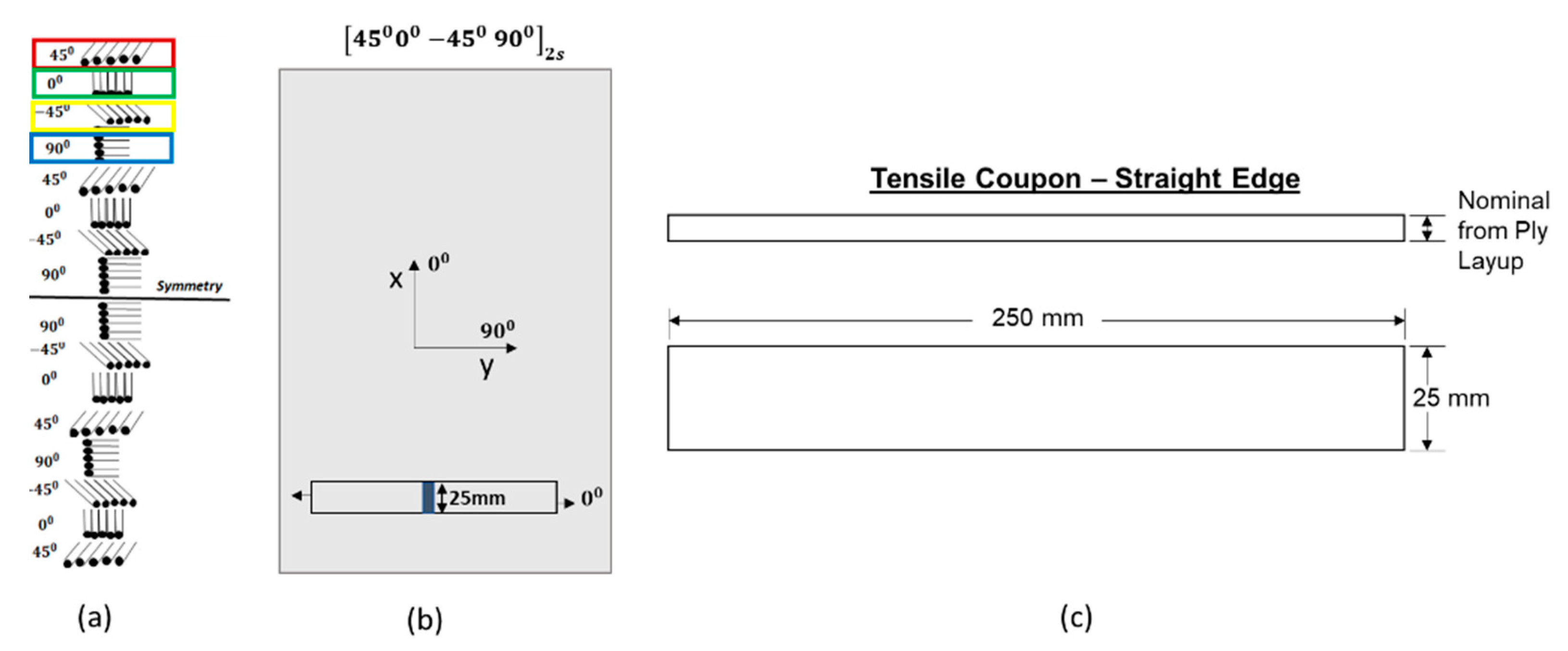

2.3. Test Case

3. Results

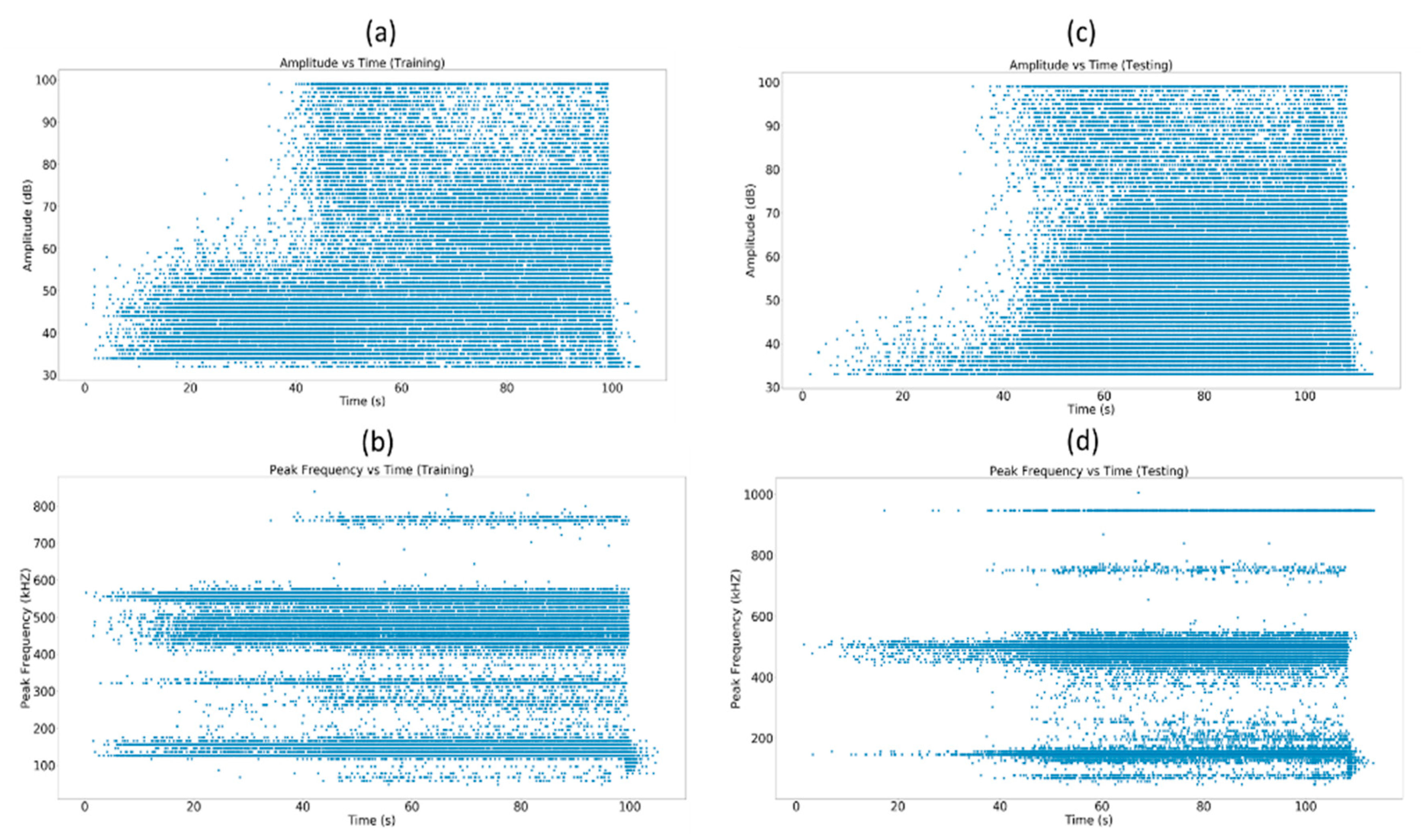

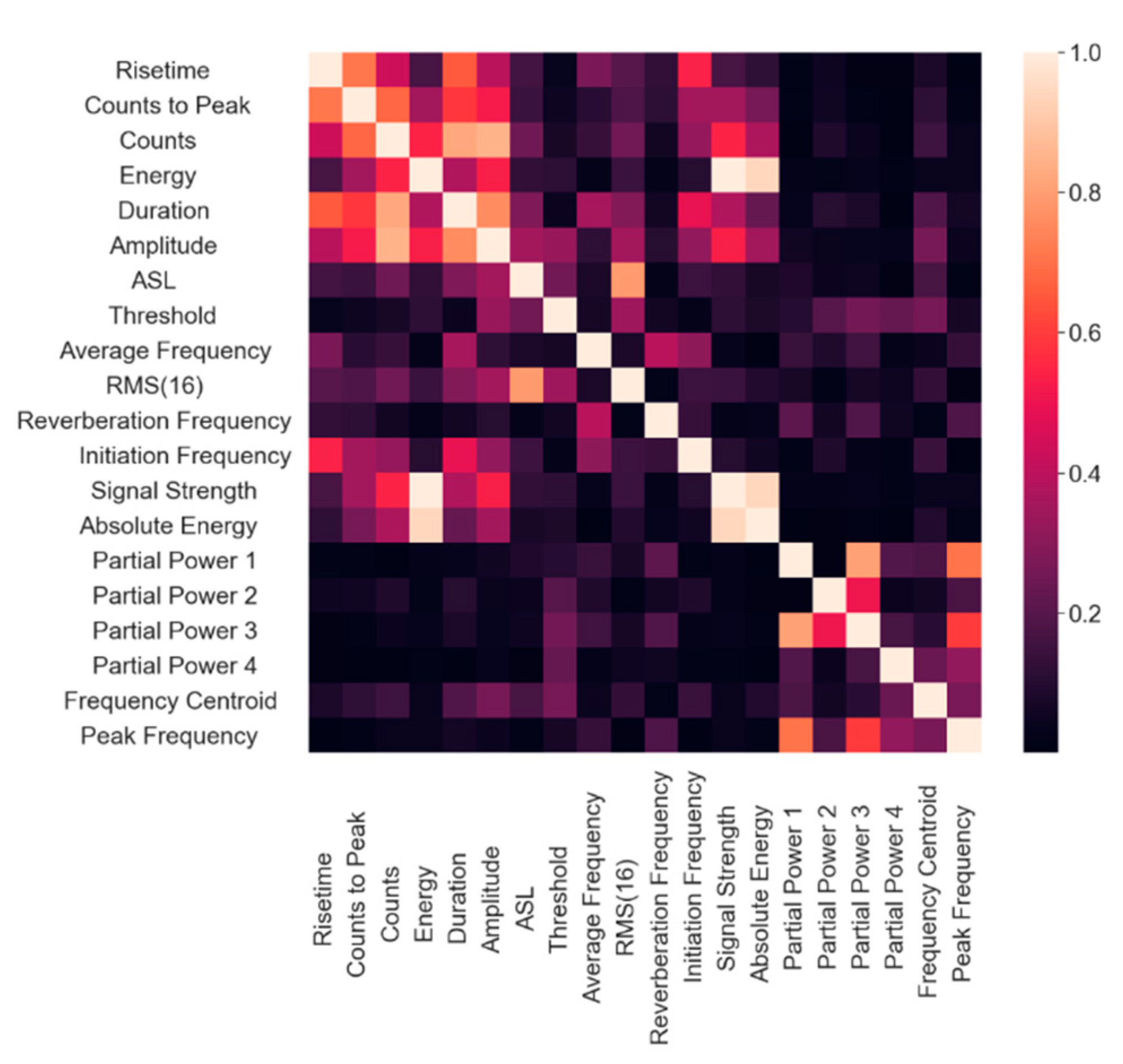

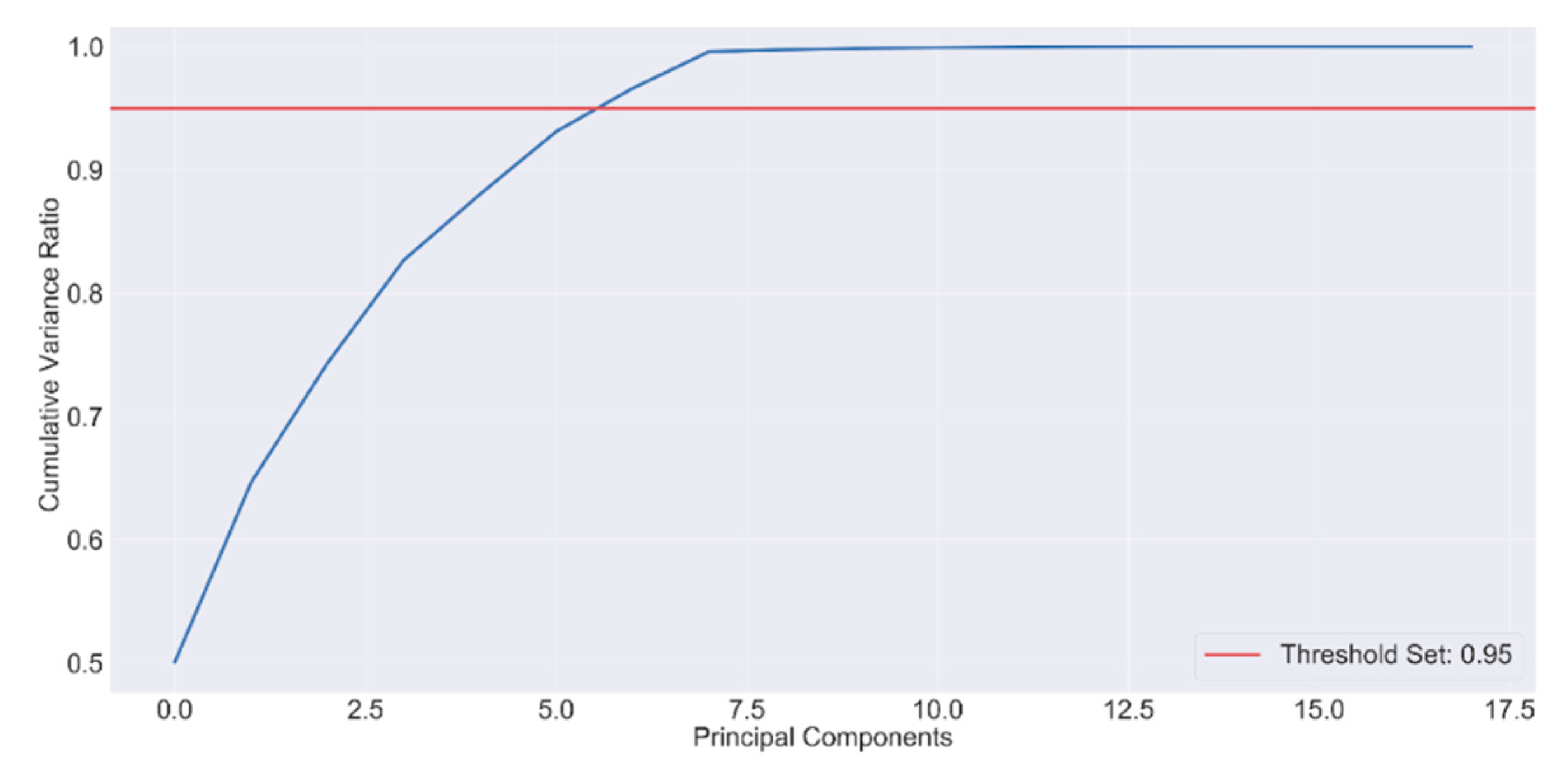

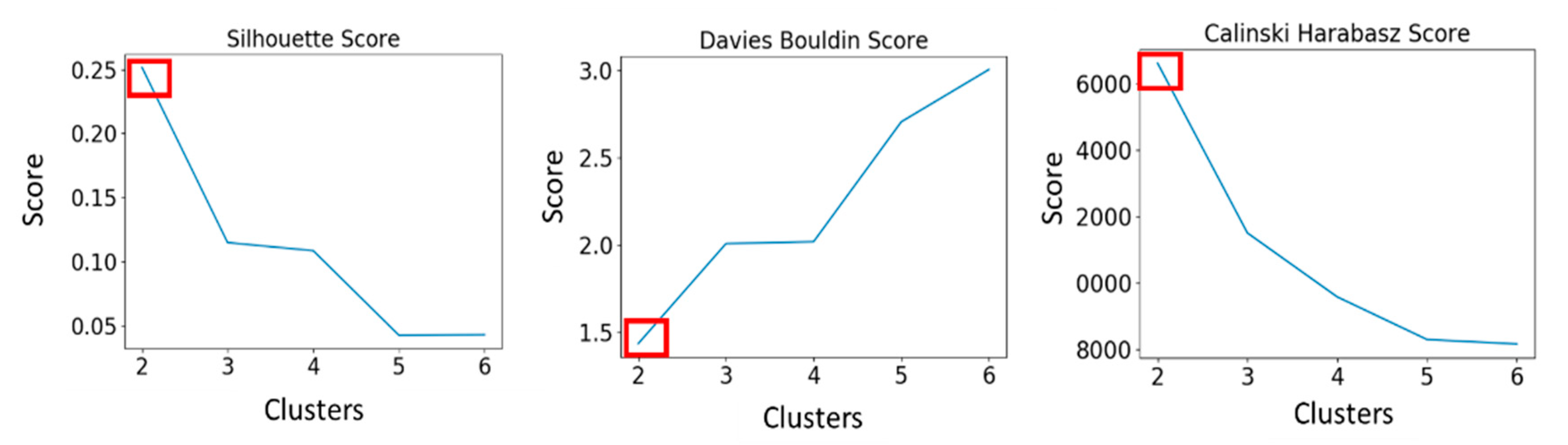

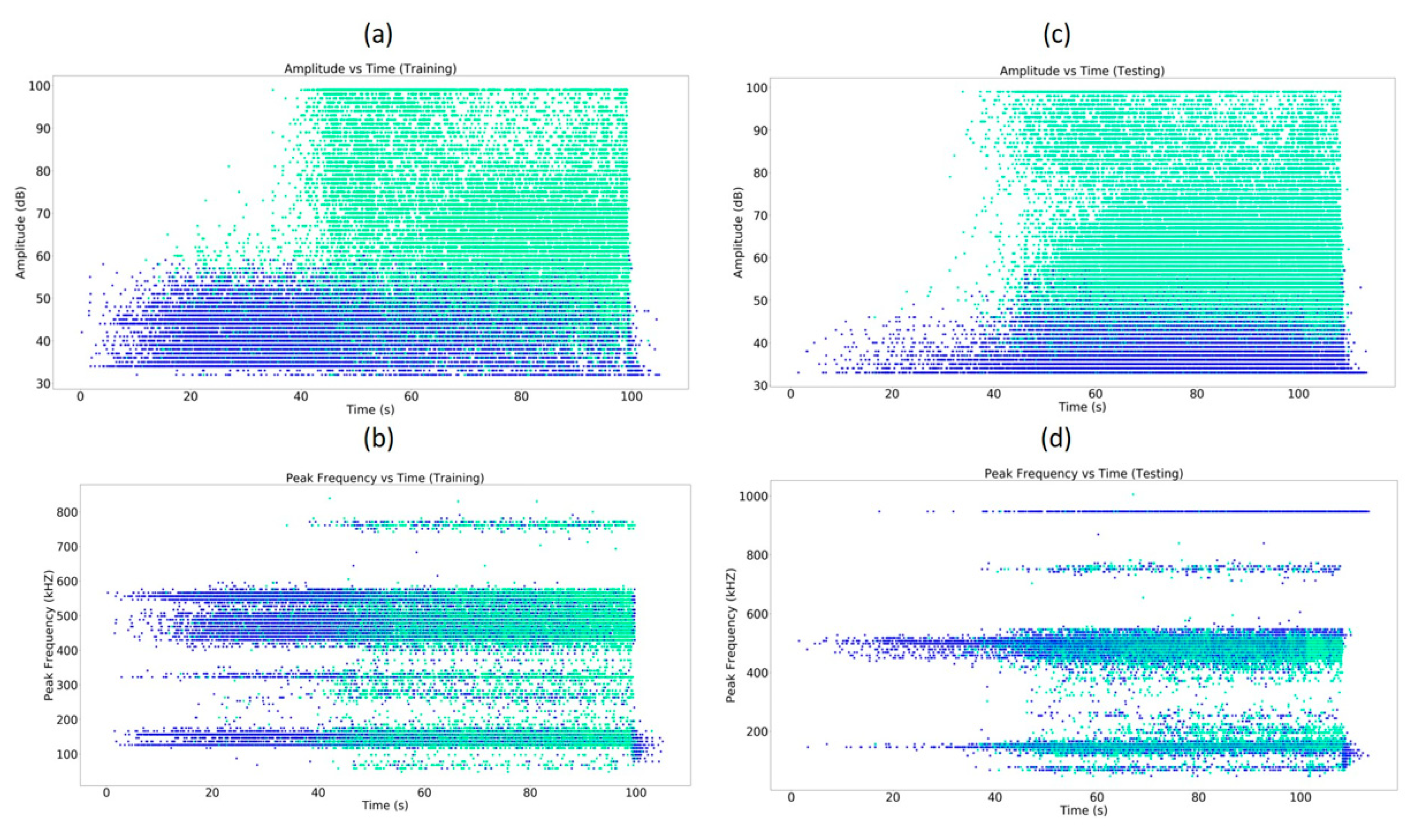

3.1. Data Processing

3.2. Data Structure

3.3. System Performance

3.3.1. Edge to Fog Data Throughput

3.3.2. Fog to Cloud Data Throughput (Raspberry Pi Cluster)

3.3.3. Fog to Cloud Data Throughput (Xavier Jetson)

4. Discussion

5. Concluding Remarks

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Classifier | Iteration 1 | Iteration 2 | Iteration 3 | Iteration 4 | Iteration 5 | Average Cross-Validation Score |
|---|---|---|---|---|---|---|
| SVM | 92.37% | 92.56% | 92.44% | 93.37% | 92.68% | 92.68% |
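The table reports five iterations and their average. The following Python sketch assumes the iterations are the folds of a 5-fold cross-validation, which the text here does not state. It shows how k-fold splitting holds out every sample exactly once, and how the average score is the arithmetic mean of the per-fold accuracies. `kfold_indices` is a hypothetical helper written for illustration. It uses contiguous, unshuffled folds, whereas practical pipelines often shuffle or stratify by class first.

```python
from statistics import mean, stdev


def kfold_indices(n_samples, k=5):
    """Yield (train, test) index lists for k-fold cross-validation.

    Hypothetical helper: samples are split into k contiguous folds without
    shuffling; fold sizes differ by at most one when n_samples % k != 0.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


# Per-iteration SVM accuracies from the table (percent). Treating them as
# fold scores is an assumption.
svm_scores = [92.37, 92.56, 92.44, 93.37, 92.68]

# The cross-validation score is the arithmetic mean of the fold scores;
# the sample standard deviation shows how much the folds disagree.
cv_mean = mean(svm_scores)
cv_std = stdev(svm_scores)

if __name__ == "__main__":
    for i, (train, test) in enumerate(kfold_indices(10, k=5), start=1):
        print(f"iteration {i}: test indices {test}")
    print(f"mean = {cv_mean:.2f}%, sample std = {cv_std:.2f} percentage points")
```

Reporting the spread alongside the mean makes clear whether one fold (here, iteration 4) is an outlier or within normal fold-to-fold variation.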

| Size of Data (MB) | Processing Time (s) | Throughput (MB/s) |
|---|---|---|
| 1415 | 103.9 | 13.61 |
| 1415 | 115.6 | 12.24 |
| 1415 | 104.8 | 13.5 |
| 1415 | 112.2 | 12.61 |
| 1415 | 101.9 | 13.88 |
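Throughput in this table is data size divided by processing time. The minimal Python sketch below uses only the tabulated sizes and times. It recomputes the per-run rate and contrasts two ways of summarising the five runs: the arithmetic mean of per-run rates, and total data over total time. Because every run moves the same 1415 MB, the second figure equals the harmonic mean of the per-run rates and reflects sustained throughput across all runs. The function name `throughput` is illustrative and not taken from the source.

```python
# Rows of the 1415 MB table: (size in MB, processing time in s).
RUNS = [(1415, 103.9), (1415, 115.6), (1415, 104.8), (1415, 112.2), (1415, 101.9)]


def throughput(size_mb: float, seconds: float) -> float:
    """Throughput in MB/s for a single run (illustrative helper)."""
    if seconds <= 0:
        raise ValueError("processing time must be positive")
    return size_mb / seconds


per_run = [throughput(size, t) for size, t in RUNS]

# Arithmetic mean of the per-run rates: every run counts equally.
mean_of_rates = sum(per_run) / len(per_run)

# Total data over total time: weights each run by its duration. With equal
# sizes this equals the harmonic mean of the per-run rates.
aggregate_rate = sum(size for size, _ in RUNS) / sum(t for _, t in RUNS)

if __name__ == "__main__":
    print("per-run MB/s:", [f"{r:.2f}" for r in per_run])
    print(f"mean of rates: {mean_of_rates:.2f} MB/s")
    print(f"total/total:   {aggregate_rate:.2f} MB/s")
```

The harmonic (total over total) figure is never larger than the arithmetic mean, so it is the more conservative number to quote for sustained throughput.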

| Size of Data (MB) | Preprocessing Time (s) | Preprocessing Throughput (MB/s) | Cloud Upload Time (s) | Cloud Upload Throughput (MB/s) |
|---|---|---|---|---|
| 1530 | 24.44 | 62.60 | 35.98 | 42.51 |
| 1530 | 21.28 | 63.00 | 35.83 | 44.2 |
| 1530 | 20.73 | 73.78 | 33.41 | 45.58 |
| 1530 | 20.72 | 73.84 | 33.65 | 45.45 |
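In this table each 1530 MB batch passes through two stages, preprocessing and cloud upload, each with its own time and rate. The Python sketch below recomputes the stage rates directly from the size and time columns, which also serves as a quick consistency check on the tabulated MB/s values. It then estimates an end-to-end rate under two labelled assumptions that the source does not confirm. The first is sequential execution, where rate = size / (preprocessing time + upload time). The second is ideal pipelining, where overlapping stages are capped by the slower one. `StageSummary` and `summarize` are hypothetical names.

```python
from typing import NamedTuple


class StageSummary(NamedTuple):
    pre_rate: float         # preprocessing throughput, MB/s
    upload_rate: float      # cloud upload throughput, MB/s
    sequential_rate: float  # end-to-end MB/s if stages run back to back (assumption)
    pipelined_bound: float  # upper bound on MB/s if stages overlap (assumption)
    bottleneck: str         # slower of the two stages


def summarize(size_mb: float, t_pre: float, t_up: float) -> StageSummary:
    """Per-stage and end-to-end rates for one batch of size_mb megabytes."""
    if t_pre <= 0 or t_up <= 0:
        raise ValueError("stage times must be positive")
    pre_rate = size_mb / t_pre
    upload_rate = size_mb / t_up
    # Sequential: the batch is fully preprocessed before its upload starts.
    sequential_rate = size_mb / (t_pre + t_up)
    # Pipelined: with batches in flight in both stages, the slower stage sets the pace.
    pipelined_bound = min(pre_rate, upload_rate)
    bottleneck = "upload" if upload_rate < pre_rate else "preprocessing"
    return StageSummary(pre_rate, upload_rate, sequential_rate, pipelined_bound, bottleneck)


# Rows of the 1530 MB table: (size in MB, preprocessing time in s, upload time in s).
RUNS = [
    (1530, 24.44, 35.98),
    (1530, 21.28, 35.83),
    (1530, 20.73, 33.41),
    (1530, 20.72, 33.65),
]

summaries = [summarize(*row) for row in RUNS]

if __name__ == "__main__":
    for i, s in enumerate(summaries, start=1):
        print(f"run {i}: preprocess {s.pre_rate:.2f} MB/s, upload {s.upload_rate:.2f} MB/s, "
              f"sequential {s.sequential_rate:.2f} MB/s, bottleneck = {s.bottleneck}")
```

Since the upload time exceeds the preprocessing time in every row, upload is the limiting stage under either assumption, so faster preprocessing alone would not raise end-to-end throughput much.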

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Malik, S.; Rouf, R.; Mazur, K.; Kontsos, A. The Industry Internet of Things (IIoT) as a Methodology for Autonomous Diagnostics in Aerospace Structural Health Monitoring. Aerospace 2020, 7, 64. https://doi.org/10.3390/aerospace7050064

Chicago/Turabian style: Malik, Sarah, Rakeen Rouf, Krzysztof Mazur, and Antonios Kontsos. 2020. "The Industry Internet of Things (IIoT) as a Methodology for Autonomous Diagnostics in Aerospace Structural Health Monitoring." Aerospace 7, no. 5: 64. https://doi.org/10.3390/aerospace7050064