1. Introduction

Cruise missiles are missiles that fly within the atmosphere in a cruising state, and their flight process can be divided into multiple stages such as altitude adjustment, cruise, and dive, each with different aerodynamic characteristics and control requirements [

1]. The control system must ensure that the missile maintains a stable attitude and precise trajectory tracking in complex and variable flight environments. Cruise missile controllers commonly use PID controllers for attitude control [

2,

3]. However, the traditional proportional–integral–derivative (PID) control method, due to its static design [

4,

5,

6], exhibits significant limitations in addressing nonlinear systems and external disturbances [

7]. Studies indicate that traditional PID control struggles to accurately model the dynamic characteristics of missiles, and parameter tuning requires iterative adjustments, which is a cumbersome process [

8,

9]. Furthermore, the multi-stage flight requirements of cruise missiles necessitate compromises in control parameters across different phases, making it impossible to achieve optimal control across the full speed range, full airspace, and under large maneuver conditions [

10].

In order to improve the adaptability and tedious parameter adjustment problems caused by fixed-gain PID, real-time adaptive control schemes with dynamic parameter adjustment functions have received widespread attention. Adaptive PID control typically encompasses rule-based [

11,

12], and model-based approaches [

13,

14]. Rule-based methods, such as Ziegler–Nichols tuning [

15] and fuzzy logic adaptive PID control, adaptively adjust gains by leveraging control signal characteristics like overshoot and PID error. Model-based techniques, like Model Reference Adaptive Control [

16], neural adaptive sliding mode guidance for UAV piloting [

17] and three-dimensional sliding pursuit guidance for surface-to-air missiles [

18], utilize mathematical models to adjust PID parameters, accommodating changes in system dynamics or external disturbances, thereby improving tracking performance and stability. Although many methods [

19,

20] have made significant progress in their respective fields, they still have shortcomings for missile attitude control with strong nonlinearity. Rule-based methods rely heavily on predefined rules or expert knowledge, limiting their adaptability to unforeseen conditions in highly dynamic missile flight scenarios. Model-based approaches face challenges in highly nonlinear systems where design complexity increases significantly, and unmodeled dynamics or model errors lead to performance degradation. These challenges are particularly pronounced in the rapidly evolving field of cruise missile control. A novel approach is needed to enhance adaptability to dynamic systems and environments.

With advancements in artificial intelligence, reinforcement learning (RL) [

21,

22,

23] offers a promising direction for missile attitude control [

24,

25,

26,

27]. Especially for unmanned aerial vehicle attitude control, RL performs better than traditional control methods. By leveraging deep reinforcement learning techniques combined with PID controllers [

28] and designing a reinforcement learning framework [

29], they achieved online optimization of network parameters, enhanced the control performance of the aircraft, and attained satisfactory tracking accuracy and robustness. Similarly, Zhao [

30] designed a PPO based attitude control framework for unmanned aerial vehicles in a simulation environment demonstrating superior sample efficiency and stability in attitude control. The integration of RL with missiles has primarily focused on guidance law design for autonomous obstacle avoidance [

31] and target tracking [

32]. For missile attitude control, Zhang [

33] developed a DDPG-based longitudinal controller for missile attitude control. Through longitudinal plane simulations, the study demonstrated the feasibility of this data-driven control method in stabilizing the nonlinear angle of attack dynamics in the missile’s longitudinal motion. Lee [

34] designed an RL-based PID control strategy for large angle-of-attack commands, achieving improved tracking performance.

Despite these developments, current research has certain limitations: existing methods often train in specific scenarios, limiting validation across diverse flight conditions. Simultaneously, they overlook the inherent non-Markovian nature of PID controllers—existing RL controller designs discard crucial historical state information. Additionally, as RL-based AI systems become more general and autonomous, designing reward mechanisms to elicit desired behaviors becomes increasingly important and challenging. Current researchers often focus solely on system design and straightforward objectives, thereby neglecting the design of reward engineering [

35], which results in inadequate adaptability of algorithms in multi-stage tasks [

32].

To address these limitations, we developed an adaptive PID tuning framework utilizing the Time-Fusion Proximal Policy Optimization (TF-PPO) algorithm. TF-PPO is an enhancement of the established Proximal Policy Optimization (PPO) [

22] algorithm, whose stability and efficiency have been demonstrated in adaptive PID tuning applications [

36]. Our approach integrates long short-term memory (LSTM) [

37] network, robust training method, and adaptive reward function, which can effectively perceive historical states and adaptively adjust parameters to cope with changes in flight states in different scenarios. Specifically, we construct a PPO-based adaptive PID parameter tuning algorithm for missile attitude control, incorporating LSTM networks to capture historical state dependencies and achieve precise attitude regulation. This approach employs a full six-degree-of-freedom aerodynamic model, trained through interactions with a game-based adversarial simulation system. This approach utilizes a full six-degree-of-freedom aerodynamic model and trains through adversarial interactions within a simulation environment. During optimization, PID controller gains function as policy parameters in the RL actor network, updated through online environment interactions. For characterizing flight-phase dynamics, we implement reward engineering with specialized functions for altitude adjustment, cruise, descent and terminal guidance phases. Through these designs, optimal parameters for each phase can be automatically identified without relying on initial values.

Table 1 compares our method with existing approaches.

To experimentally assess the proposed framework, a rapid-prototyping missile control system was engineered. This verification infrastructure integrated step-response testing with full-trajectory simulation, with experimental data confirming robustness under divergent initial conditions and wind disturbances. We designed and constructed a reinforcement learning-based rapid prototyping system for missiles, establishing a verification framework that combines step response testing and full envelope simulation. By evaluating attitude tracking performance under varied initial conditions and different wind disturbances, we demonstrated the robustness and effectiveness of the proposed algorithm. This study advances reinforcement learning applications in missile attitude control, providing robust and adaptive solutions for nonlinear dynamics and multi-phase flight scenarios.

The rest of this paper is organized as follows:

Section 2 presents system modeling and missile attitude control architecture.

Section 3 details the TF-PPO algorithm, including reward engineering and implementation workflow. In

Section 4, all experimental results are presented, including step response tests, offline training, and comparative experiments on the missile rapid prototyping system.

Section 5 summarizes contributions and future work.

2. System Modeling and Control System Architecture

For high-fidelity missile dynamics modeling, aerodynamic parameter estimation of a cruise missile was performed. Parameters are assumed representative of a generic subsonic cruise missile, which utilizes aerodynamic controls, scaled for simulation purposes based on typical values from literature [

38]. The following key parameters from

Table 2 are utilized in the missile system model.

This paper conducts numerical modeling and analysis of missile aerodynamic characteristics using ANSYS FLUENT (v2022 R2). Combined with key parameters in

Table 1 and six-degree-of-freedom rigid-body equations of motion [

39,

40,

41], a nonlinear dynamic model of the pitch channel is established. Given the similarity in control logic across pitch, roll, and yaw channels, this study focuses exclusively on the self-evolutionary parameter analysis of the pitch loop. Under the assumption of small angles of attack (

) [

42] and symmetric missile configuration, the coupling from yaw and roll channels can be reasonably neglected [

43]. Neglecting coupling effects from yaw and roll channels, the nonlinear pitch dynamics can be described by

The symbols used in Equation (1) are defined in

Table 3.

The trajectory of a cruise missile consists of a planned trajectory and a guided trajectory. Before launch, the missile’s flight plan is predetermined and cannot be altered once launched [

1]. The input to the missile’s attitude controller is the desired attitude angle generated by a fixed control law. The task of attitude control is to track the desired angle, minimizing the attitude angle error

. The PID controller for the pitch loop attitude can be expressed as

In the equation,

represents the elevator command, and the steering gear model is represented by a second-order model [

44]:

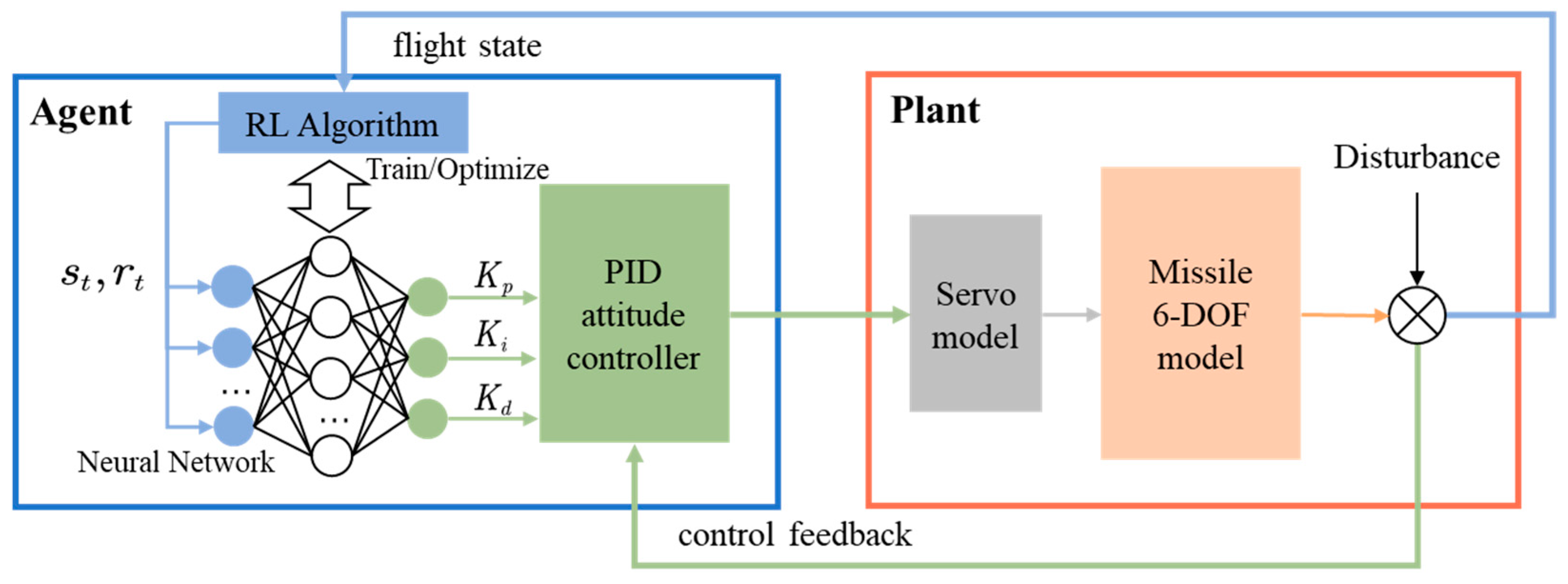

To address the attitude control requirements of cruise missiles with complex motion characteristics, this paper designs an RL-PID intelligent control system that integrates deep reinforcement learning with classical PID control. Through an online parameter tuning mechanism, optimal control is achieved under different flight conditions.

The overall system architecture, as shown in

Figure 1, consists of the RL-PID agent and the missile system model. The RL-PID agent utilizes a deep neural network to real-time parse missile state information

, calculates rewards based on a designed reward function, and dynamically outputs the optimal tuning values

for PID parameters. It can be expressed as

The reinforcement learning algorithm is divided into a training phase and an online optimization phase. During the training phase, a maximum reward mechanism drives the agent to learn parameter tuning strategies. In online operation, the network parameters are fixed, and the , , and coefficients are updated based on real-time flight data, enabling the PID controller to achieve condition awareness and parameter evolution capabilities.

3. Method

TF-PPO algorithm is an optimized framework for missile attitude control, developed on the basis of the Proximal Policy Optimization (PPO) algorithm. Its innovations involve reconstructing the state space, incorporating a temporal network to capture historical state dependencies, tailoring the algorithm workflow specifically for missile autopilot dynamics, and introducing a meticulously designed reward function that accounts for stage-specific characteristics of the cruise missile’s flight.

The complete TF-PPO implementation necessitates comprehensive design specifications for states, rewards, actions, and network architecture. As specified in

Section 2, the RL-PID controller’s action outputs are formally defined. Subsequent sections will elaborate on the design methodology for each component.

3.1. State Space Reframing

Missile control system design relies on long-period observable parameters (e.g., Mach number

, angle of attack

, flight altitude

) as states tailored to specific flight phases. Given that the attitude controller’s output consists of pitch angle commands, the commanded pitch angle

and the current body pitch angle

must be incorporated. The state variables selected in this study are

During missile flight, frequent PID adjustments may induce undesirable oscillations. We select

as the control interval for the RL-PID system. However, since PID response constitutes a dynamic process, the state space design for missile attitude control must satisfy dual constraints: dynamic response representational completeness and computational feasibility. Considering both missile performance characteristics and state information within control intervals, this paper proposes a sliding-window based temporal state space construction method. The complete 13-dimensional state vector is defined as follows:

where

and

denote the commanded pitch angle and actual body pitch angle at time

, respectively, forming a raw temporal sequence spanning a 5 s time window.

3.2. Neural Architecture Design

The TF-PPO algorithm operates on an Actor–Critic [

45] framework to collaboratively optimize control policies. Although the Actor–Critic architecture inherently assumes a Markov Decision Process (MDP), the missile PID control system exhibits non-Markovian characteristics due to its integral and derivative components requiring historical signal information prior to the current timestep. To address these non-Markovian properties, we embed a shared LSTM layer within the conventional Actor–Critic structure, thereby constructing a temporal feature fusion network. The detailed architecture is depicted in

Figure 2.

The input layer receives a 13-dimensional state vector

, which undergoes Batch Normalization preprocessing before entering the shared feature extraction layer. The shared LSTM module employs a unidirectional structure. The temporal dependency between commands and responses is formulated as

where

denotes the hidden state output from the LSTM network,

represents the cell state in long-term memory. The hidden state is concurrently fed into both Actor and Critic networks, where two distinct fully connected layers generate the action

and state-value function

, respectively, achieving historical feature reuse.

3.3. Reward Engineering

Reward engineering in reinforcement learning is the process of designing reward systems. Through deliberate reward structuring, it provides agents with discriminative signals that indicate behavioral correctness [

35]. The missile control system represents a highly nonlinear precision engineering system. Prior to reinforcement learning deployment, the identification of reward-shaping metrics critical to optimizing control precision is essential for RL-driven controllers. Addressing distinct dynamic characteristics during altitude adjustment, cruise, and descent phases of cruise missiles, this section proposes an adaptive stage-strength quantified reward function. This framework reconstructs RL rewards through phase-strength quantification, enabling stable optimization trajectories for control policies.

3.3.1. Stage-Strength Quantification

The stage-strength

parameter characterizes the dominance of the current flight phase, where a higher value indicates greater priority for phase-specific control objectives. This parameter depends solely on instantaneous flight states, eliminating inter-phase coupling complexities while ensuring global adaptability with seamless phase transitions. The quantification mechanism is defined as follows:

where the shape parameter

controls the function slope,

denotes the phase offset and

represents the rate of change of altitude. To eliminate dimensional disparities and establish probabilistic weight allocation, we apply a softmax normalization, this guarantees

, thereby ensuring continuous transitions of phase dominance rights.

The schematic of the stage-strength evolution is shown in

Figure 3.

3.3.2. Adaptive Reward Function Formulation

The RL-PID system targets high-precision tracking of guidance-commanded pitch attitudes. Therefore, pitch angle error

and pitch rate error

should be considered in the reward function. To mitigate unnecessary oscillations induced by frequent PID adjustments, the elevator deflection angle

is incorporated into the reward function. The global reward function is formulated around three core components: pitch angle error

, pitch rate error

, and elevator deflection

. Phase-adaptive optimization is achieved through the weighting matrix

. Its mathematical form is

where

denotes the parameters considered for the reward function. Tailored to distinct phase characteristics, the weighting matrix

is architected as

Each column weight must meet the normalization constraint , The values of the weight matrix are manually designed based on the characteristics of the flight phase. Through explicit functional coupling between the stage-strength parameter and weight matrix , adaptive prioritization switching of control objectives is achieved.

3.4. Training Process

The training process of the TF-PPO algorithm introduces an adaptive exploration mechanism to optimize stability within the canonical PPO framework. Parameters of the Actor and Critic networks are initialized orthogonally [

46], ensuring the initial policy satisfies Gaussian distribution properties. The agent generates PID parameters

,

,

through the Actor network, with adaptive Gaussian noise superimposed to maintain exploratory behavior:

Traditional PPO algorithms typically employ fixed-decay exploration strategies, which cannot guarantee convergence. This paper proposes an adaptive exploration strategy based on advantage functions. When substantial policy advantage exists, it reduces randomness in action sampling to guarantee stable convergence to optimal policies. If the current strategy has strong advantages, reduce the randomness of exploration. This ensures that the algorithm converges to the optimal strategy.

In the formula, is the standard deviation of action sampling for the current episode, is the dominant function value in state . is the relevant attenuation coefficient , used to control the influence of the number of training episodes on the standard deviation. is the total number of training episodes or the preset upper limit of training times.

During this process, the interaction between the missile autopilot and the environment forms a closed-loop control loop. The PID parameter increment output by the intelligent agent is applied in real-time to the six degrees of freedom dynamic model of the missile, generating elevator deflection commands and driving flight trajectory adjustment. The observation includes the attitude angle error , altitude change rate , and other critical state variables at the next moment under environmental feedback. Next, the scalar reward signal will be calculated using the reward function designed in the previous section. After each training round, the system calculates discount returns and advantage functions based on accumulated rewards, Where is the state value estimated by the critic network, represents the discount factor and Instant reward obtained from time step .

For the process of strategy optimization, the objective function of TF-PPO algorithm is designed as:

The actor network employs a clipped policy ratio mechanism to constrain parameter updates and prevent abrupt policy shifts. Simultaneously, the critic network updates by minimizing value estimation errors:

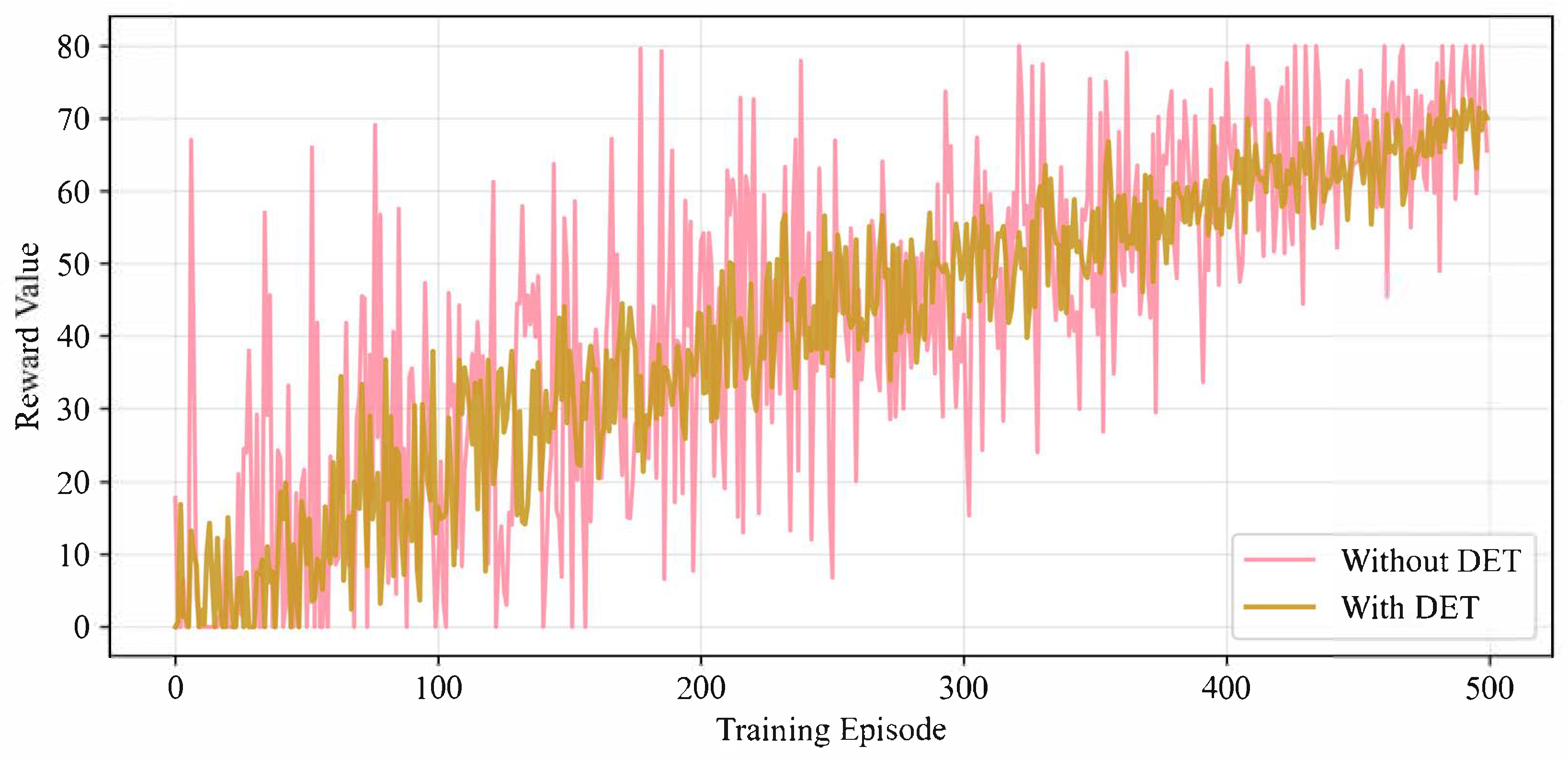

During algorithm training, reinforcement learning agents exhibit high sensitivity to training data quality in their parameter optimization. When suboptimal control trajectories are significantly present in the experience replay buffer, agents risk acquiring detrimental control patterns through policy gradient updates, leading to convergence toward suboptimal solutions or even divergence. This study incorporates dynamic early termination (DET) into the training process, enhancing operational robustness to mitigate this vulnerability.

The early termination criterion is mathematically defined as

When

, terminate the current training round and adjust the termination time reward to

where

denotes the raw reward value at termination,

represents the penalty coefficient, and

is a predefined constant penalty term. This design ensures training stability through policy exploration constraints and bounded reward scaling, achieving a 62% reduction in reward variance, as shown in

Figure 4.

The pseudocode for the TF-PPO algorithm procedure is presented in Algorithm 1.

| Algorithm 1: TF-PPO |

| Initialize policy network π and value network V |

| Set hyperparameters: learning rate α, discount factor γ, clip parameter ε, episodes E, update interval U |

| do |

| do |

|

| Check termination condition via DET |

|

Decay action selection noise:

|

then

according to the objective function

|

| end if |

| end for |

| end for |

4. Experimentation and Evaluation

To validate the performance of the proposed TF-PPO algorithm, this section presents comprehensive mathematical simulations and hardware-in-the-loop (HIL) experiments. The validation is conducted in two parts: First, mathematical simulations using step response prove that the TF-PPO algorithm offers faster convergence and better performance than other RL algorithms under large step signal excitation. Second, a missile control rapid prototyping system is designed. The attitude tracking performance of TF-PPO and traditional PID methods is evaluated under different initial conditions and wind disturbances. This verifies the robustness and self-evolution capability of parameters of the proposed method.

4.1. Step Response Experiment

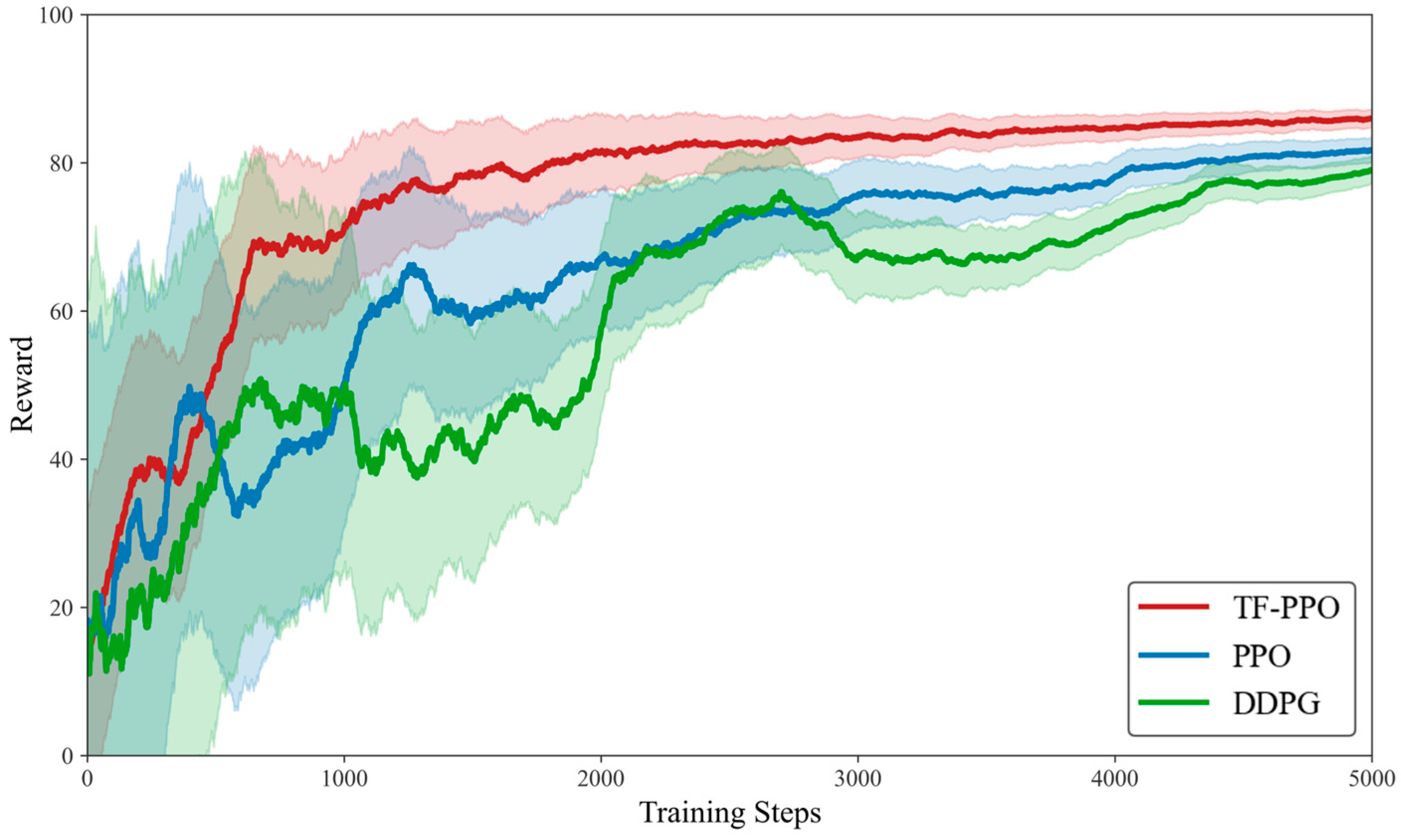

To evaluate the core contribution of the Temporal Feature Fusion mechanism, this part of the experiment uses a simplified reward function to compare TF-PPO with other algorithms. First, a 6-DOF mathematical simulation model of the missile is constructed. Then, different RL algorithms are connected to this mathematical model. We designed a fixed scenario where a large step command is given to the control system every 15 s. Using this scenario, each algorithm is trained for 5000 episodes. The convergence effectiveness of the different algorithms is assessed.

This paper uses three algorithms, TF-PPO, PPO, and DDPG, for comparative step response experiments.

Table 4 describes the relevant algorithm training parameters. The PPO algorithm uses the same training parameters. For DDPG, the parameter

is set to 0.005, while other parameters remain consistent. The training results are shown in

Figure 5, where a moving average is applied to the results. The shaded area represents the variance of the reward.

From the results, it can be seen that the TF-PPO and PPO algorithms, due to the existence of the clip method, avoid drastic changes in strategy and have relatively stable training processes. Compared to the classical PPO algorithm, TF-PPO exhibits faster convergence speed and learns better results. The trained models are reconnected to the simulation system to compare the effects of the different algorithms.

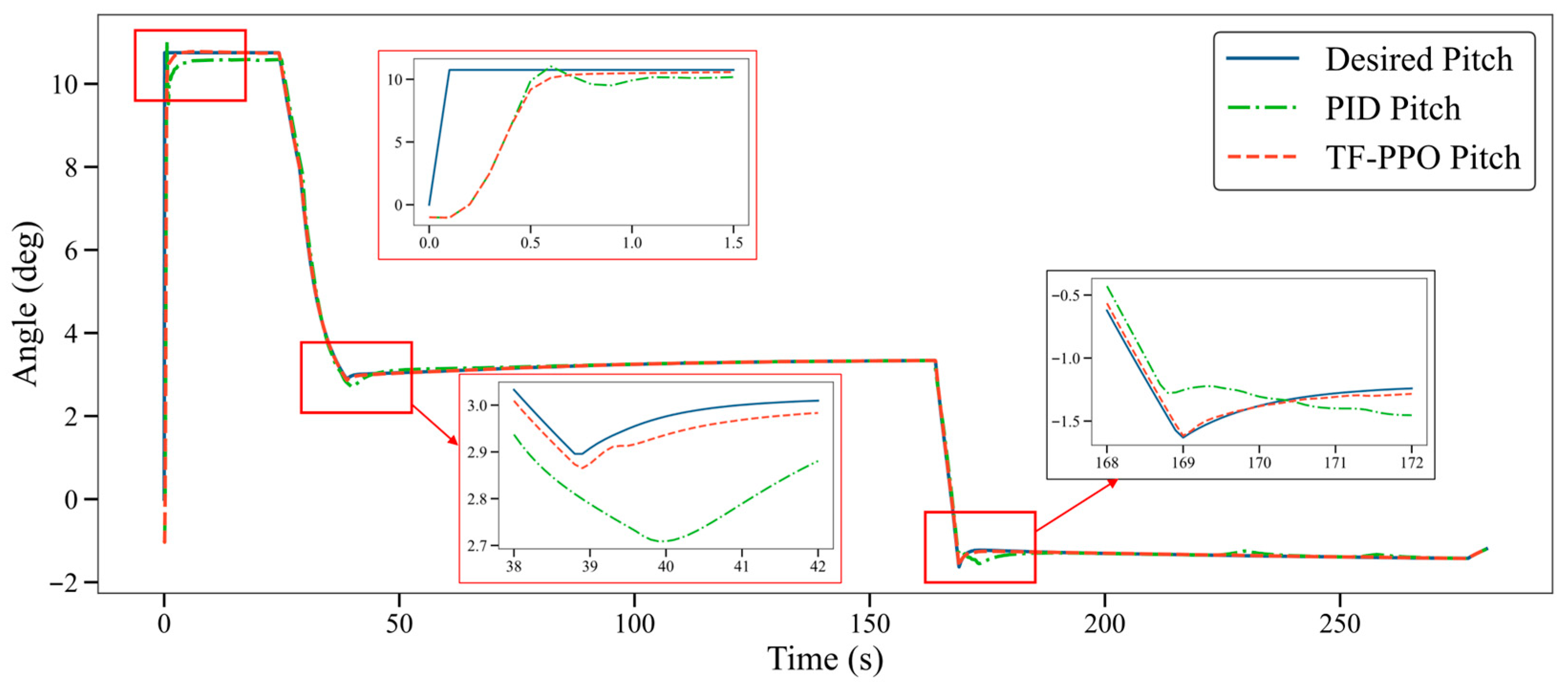

The specific effects are shown in

Figure 6. The control accuracy of the TF-PPO algorithm is superior to the other algorithms. Compared with the traditional PPO algorithm, TF-PPO achieves an average control accuracy improvement of 14.3% with similar settling time, as summarized in

Table 5. This demonstrates that the proposed algorithm has better training effectiveness for the missile guidance and control system.

4.2. Offline Training

The online optimization of the TF-PPO algorithm relies on high-quality offline training. Although TF-PPO is an on-policy algorithm, its missile-borne deployment on cruise missiles requires training the network parameters within the ground segment beforehand. Therefore, constructing a training environment that approximates real missile application conditions is essential. We use the AFSIM simulation platform as the training environment, deploy our six degrees of freedom missile model in AFSIM, and train the missile reinforcement learning control system by designing different initial conditions and flight plans.

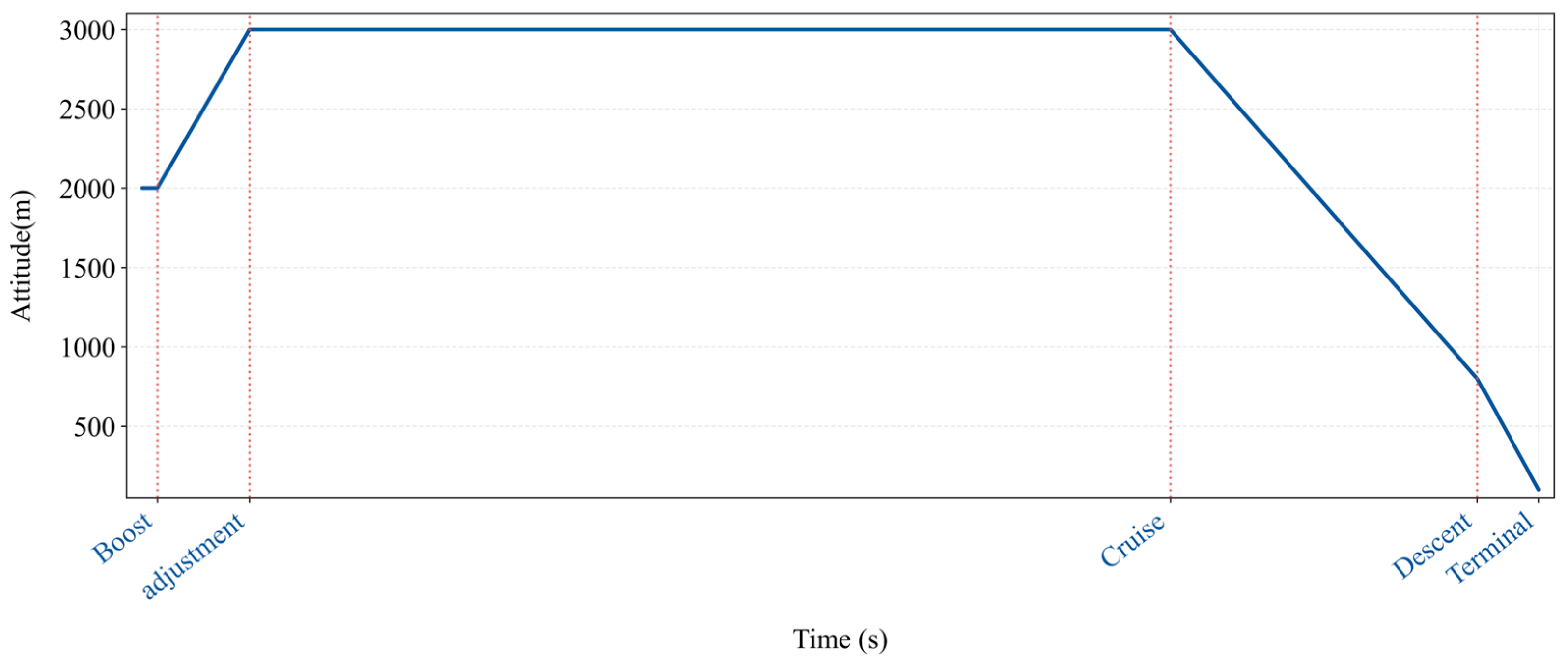

This experiment constructed a cruise trajectory plan based on a simulation platform, which includes an acceleration phase, an altitude adjustment phase, a cruise phase, a descent phase, and a terminal guidance phase. The missile was launched by air launch, and

Figure 7 shows a typical trajectory plan for missile flight. Based on typical trajectory schemes, the initial launch conditions and cruising altitude for each training round are determined through uniformly distributed random sampling, and the missile control system network is trained accordingly.

Table 6 describes the specific parameter design of offline training parameters. The TF-PPO algorithm was trained using an adaptive reward function, and the model training hyperparameters were consistent with

Table 4. After completing offline training of the algorithm, the trained algorithm was reconnected to a typical trajectory through a simulation system for full trajectory flight simulation. The simulation results are shown in

Figure 8.

The TF-PPO algorithm trained offline can automatically adjust the PID parameters of the pitch loop without prior knowledge. The results show that the missile pitch loop attitude control system under the TF-PPO algorithm exhibits good tracking performance, and in the stage transition stage, the algorithm outperforms the PID control system based on static design.

Figure 9 illustrates the dynamic adjustment of PID parameters by RL-PID during missile flight. The PID parameters will be adaptively adjusted according to the stage characteristics.

4.3. Rapid Prototyping System

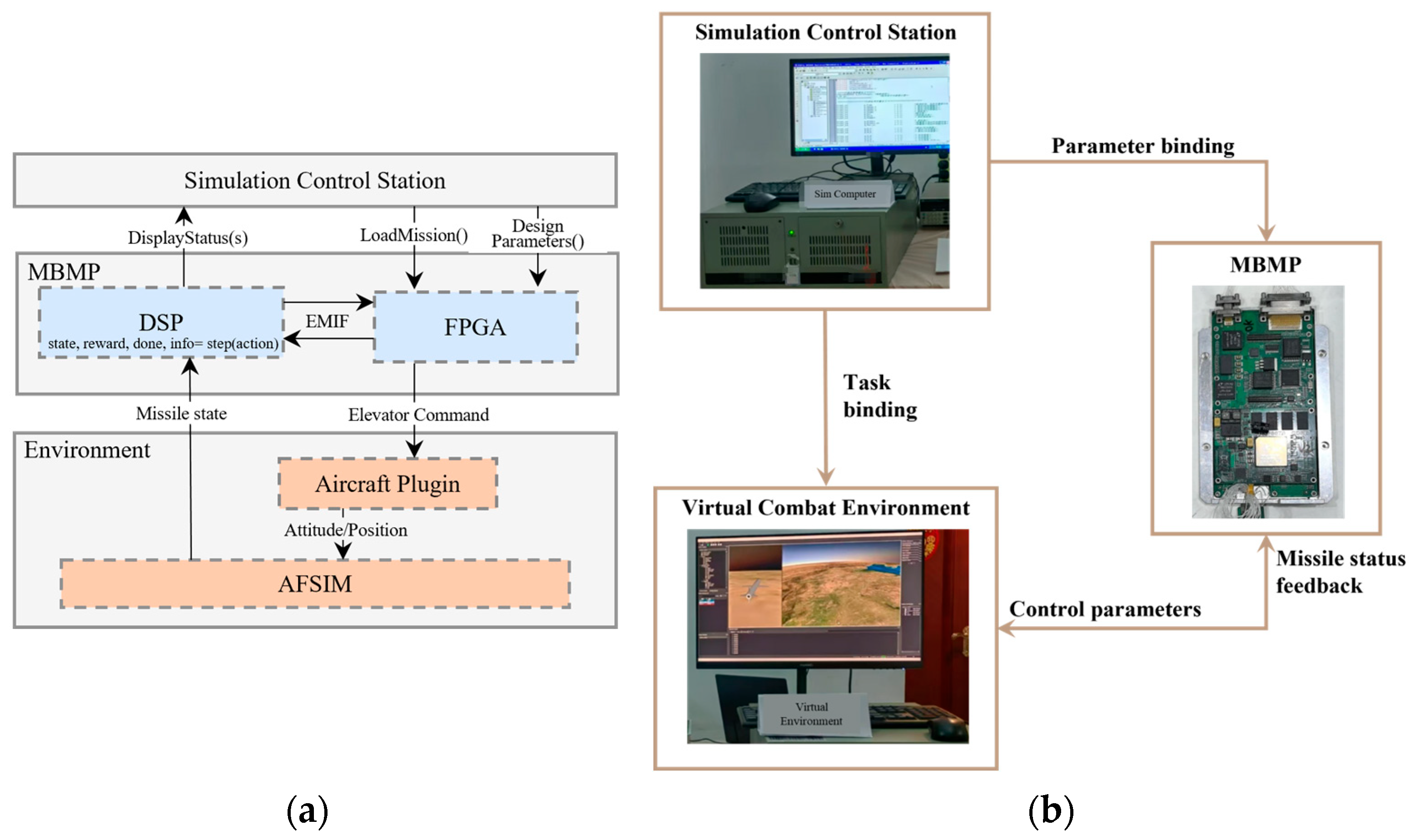

To validate the performance of the RL-PID algorithm in practical control, this study constructed a missile rapid prototyping system consisting of a simulation computer, missile-borne mission computer, and simulation platform, as shown in

Figure 10. Hardware-in-the-loop (HIL) simulation was implemented to achieve closed-loop verification of multi-phase trajectories.

Rapid prototyping is a prototype development methodology that focuses on creating prototypes early in the development cycle [

47]. This enables early feedback and analysis to support the development process. The rapid prototyping system described in this paper integrates three components: a Simulation Control Station for initial parameter configuration and mission programming that exchanges data bidirectionally with the core systems; a Missile-Borne Mission Processor (MBMP) implementing DSP + FPGA architecture to emulate physical missile control hardware, where this embedded processor executes the full missile control algorithm suite by receiving real-time missile states, computing aerodynamic surface commands, and transmitting control signals; and a Virtual Combat Environment leveraging the U.S. Air Force Research Laboratory’s AFSIM [

48] platform, within which our six-degree-of-freedom missile model operates to execute received control commands, simulate adversarial engagements, and provide continuous state feedback.

Table 7 describes the hardware configuration of MBMP.

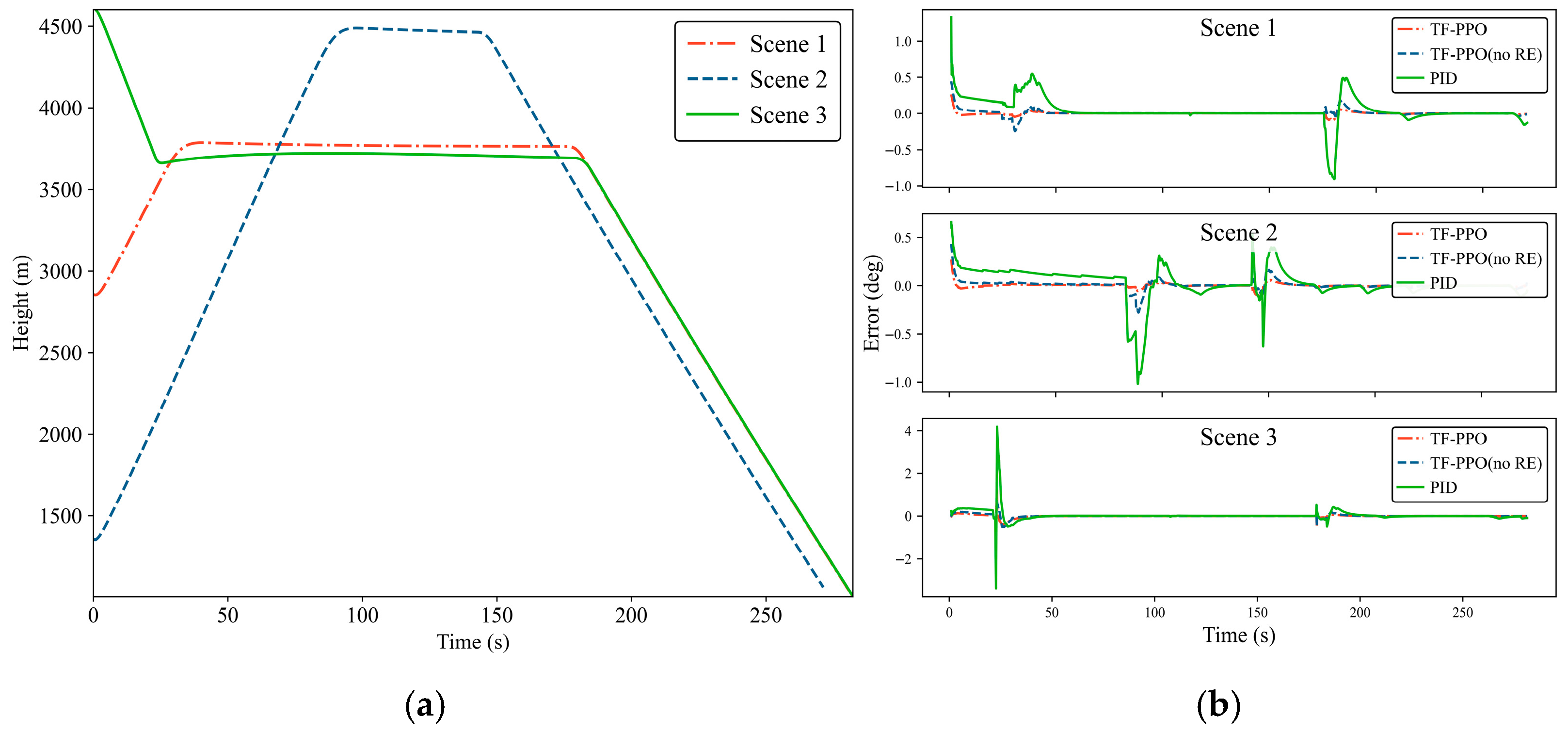

To validate the control algorithm’s adaptability to various flight profiles, multiple distinct planned trajectories were randomly generated within the parameter boundaries specified in

Table 6 for simulation. Concurrently implemented two control groups demonstrate algorithm and reward engineering advantages: the first group utilizes a manually tuned PID controller with fixed gains for standard flight profiles, and the second group employs a TF-PPO algorithm with

as its reward function (also trained offline for 5000 iterations under identical conditions; no reward engineering, labeled no RE in figures). Both groups were validated under identical randomized initial condition configurations as the experimental group.

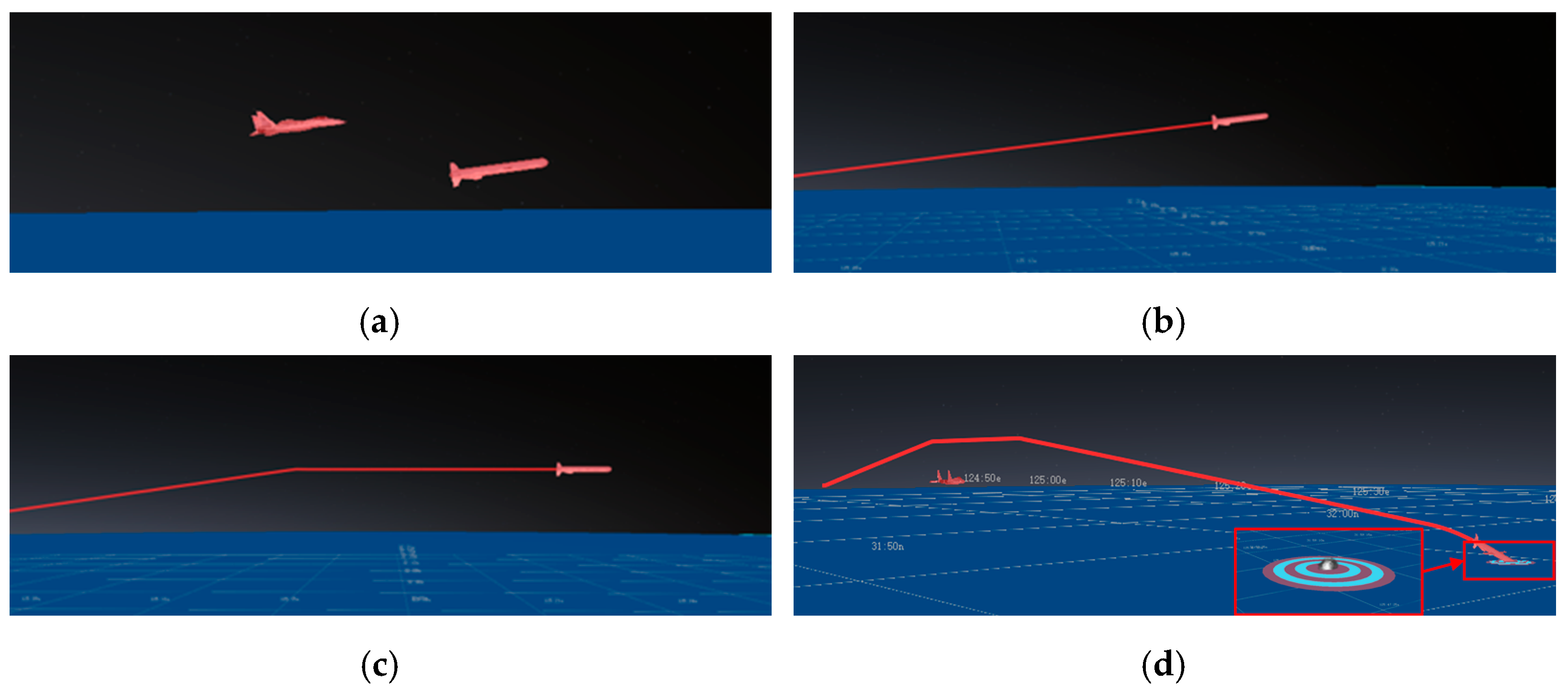

The experimental framework was designed around a simulated test scenario featuring an air-launched cruise missile deployed by a fighter aircraft. The missile’s objective is to strike a designated maritime target in a simulated maritime environment.

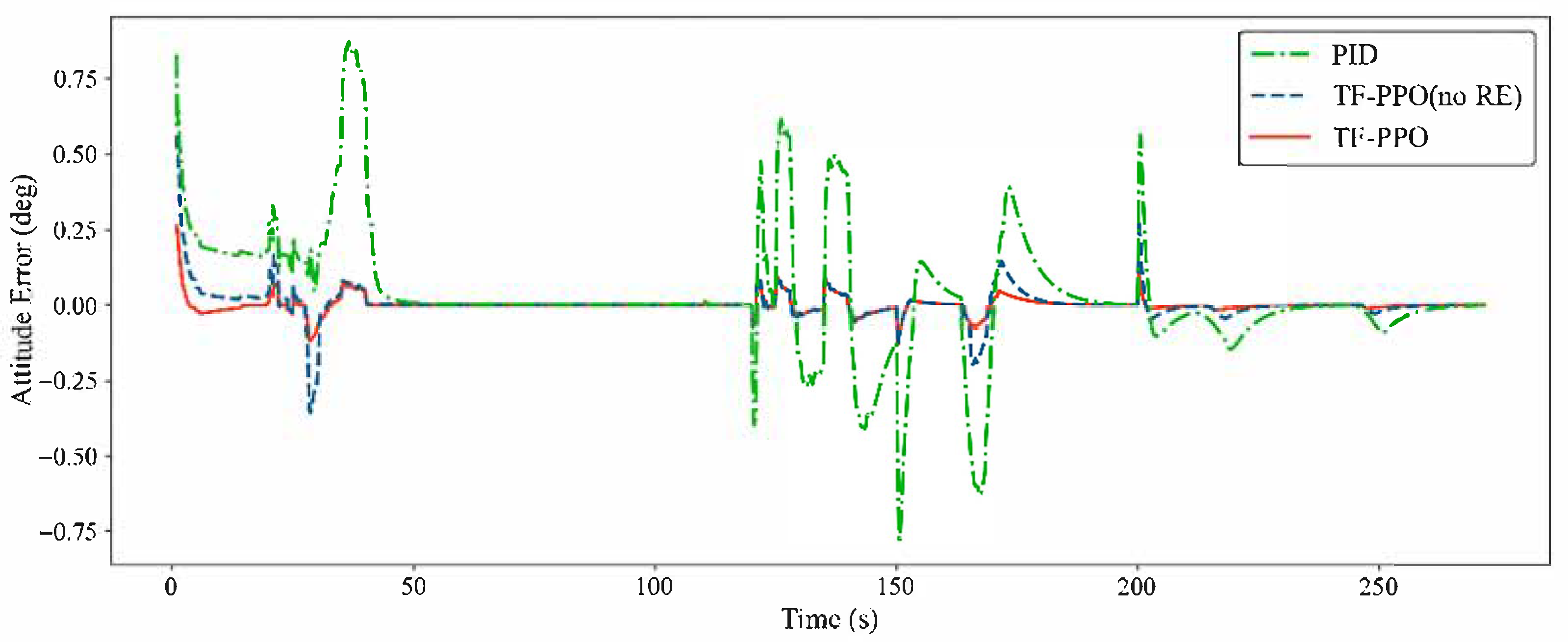

Due to different control strategies employed in the terminal guidance phase, data collection was limited to the launch-to-descent phase. Statistical results from all experiments are shown in

Table 8. Representative experimental runs are depicted in

Figure 11, which illustrates the missile flight altitude and attitude angle error.

Figure 12 shows simulation snapshots capturing key moments of the engagement scenario within the AFSIM environment. The results indicate that the TF-PPO algorithm demonstrates significant improvement over the empirically tuned PID controller, achieving a 36.3% increase in pitch angle control accuracy across the entire trajectory. Analysis of maximum values reveals that control errors predominantly concentrate during transitional phases. During these transitional phases, compared to TF-PPO without DET, the TF-PPO algorithm reduced the maximum attitude tracking error by 52.1%.

Furthermore, reward engineering constraints on elevator deflection prevent excessive PID adjustments, reducing control error variance by 54% throughout the flight. These findings demonstrate the practical utility and robustness of the reward engineering-based TF-PPO algorithm.

To further validate the disturbance rejection capability of the TF-PPO-controlled longitudinal channel, wind disturbance profiles were applied during altitude adjustment, cruise, and descent phases in typical simulation scenarios, verifying the algorithm’s attitude stabilization performance under external disturbances.

Table 9 details the design matrix for wind disturbance variations.

The results demonstrate that TF-PPO’s adaptable reward mechanism effectively mitigates attitude fluctuations induced by external disturbances. Under wind disturbances, the TF-PPO algorithm exhibits significantly lower average error than conventional PID control, as quantitatively compared in

Table 10 and

Figure 13. Compared to the version without reward engineering, the complete TF-PPO algorithm achieves 31.6% higher comprehensive accuracy, confirming its practical value for engineering implementation in complex battlefield environments.