1. Introduction

With the rapid development of imaging payload technology, agile Earth observation satellites use high-precision pitch and yaw control systems to significantly extend the observable time window for each target. This enables the acquisition of high-resolution images and supports diverse mission requirements, including multi-angle stereoscopic imaging (Figure 1a), regional target mosaic imaging (Figure 1b), and non-adjacent line target imaging (Figure 1c). These capabilities have been widely applied in key fields such as intelligent transportation systems, emergency response, and military operations. However, current Earth observation tasks often require simultaneous image acquisition for three types of heterogeneous targets: point targets that need precise positioning, line targets that require continuous trajectory coverage, and region targets that need large-scale monitoring. To support comprehensive situational awareness, existing studies have designed separate algorithms for point, line, and region targets. Because these target types have different requirements (precise positioning for points, continuous path coverage for lines, and multi-view data stitching for regions), a unified representation framework is missing, which gives rise to three complex scheduling challenges. First, under strong constraints on limited resources such as satellite maneuverability, energy supply, and observation windows, priority conflicts must be coordinated between high-urgency point target tasks (e.g., disaster response) and large-scale environmental assessment tasks (e.g., region monitoring). Second, diverse constraints such as coordinate accuracy, continuous coverage paths, and multi-temporal data requirements must be integrated into a unified modeling framework. Third, observation efficiency and resource allocation must be optimized simultaneously, through a single scheduling decision, for dynamically arriving heterogeneous targets such as sudden disaster point targets and continuously monitored region targets. Together, these challenges restrict the collaborative observation capability of agile Earth observation satellites for heterogeneous targets and highlight the need for a unified scheduling method that improves operational efficiency and ensures the timely execution of key tasks.

Over the past few decades, research on the Agile Earth Observation Satellite Scheduling Problem (AEOSSP) has been conducted separately for three types of targets: point, line, and region targets. In point target planning, ref. [1] first modeled the task as a joint optimization problem of task selection and scheduling. Early studies predominantly employed exact methods, such as models solved with CPLEX [2,3,4], but these encountered efficiency bottlenecks in large-scale task scenarios, which spurred the development of heuristic and meta-heuristic algorithms. Representative approaches include tabu search [5], iterated local search [6], improved genetic algorithms [7,8,9], greedy algorithms, dynamic programming [10], hybrid differential evolution [11], and adaptive large neighborhood search [4,12]. For line target planning, research has primarily focused on observing slow-moving line targets. Refs. [13,14,15] established a three-stage “search-positioning-tracking” scheduling framework based on Bayesian estimation and Gauss–Markov motion prediction. Regarding region target planning, ref. [16] first decomposed the original problem into a region target decomposition problem and a Set Cover Problem (SCP). Because region targets are typically discretized into a large number of point targets, the task scale expands dramatically and computational complexity increases substantially. To address this challenge, two families of methods have been proposed: the strip method and the grid method. The strip method generates rectangular satellite coverage strips through parallel or dynamic segmentation [17,18], focusing on orbital characteristics and task sequence optimization. The grid method discretizes the region into point sets and improves coverage efficiency through Gaussian projection or equal-latitude division [19,20]. In summary, current research designs independent algorithms for point, line, and region targets, which leaves the modeling paradigms fragmented and hinders collaborative scheduling of multiple target types. Moreover, these methods generally rely on problem-specific heuristic strategies with limited robustness, which raises the risk of tuning failures, and their computational complexity grows exponentially with task scale, making them inadequate for the real-time observation demands of multiple target types.
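To illustrate the grid method described above, the following sketch discretizes a rectangular latitude/longitude region into point targets by equal-angle division. The function name `discretize_region`, the 0.5° cell size, and the rectangular region are assumptions for illustration only; refs. [19,20] may use other cell shapes and projections (e.g., Gaussian projection).

```python
import math


def discretize_region(lat_min, lat_max, lon_min, lon_max, step_deg):
    """Split a lat/lon bounding box into equal-angle cells and return cell centres.

    Hypothetical helper illustrating the grid method; not taken from refs. [19,20].
    """
    n_lat = math.ceil((lat_max - lat_min) / step_deg)
    n_lon = math.ceil((lon_max - lon_min) / step_deg)
    points = []
    for i in range(n_lat):
        for j in range(n_lon):
            # Centre of cell (i, j); clipped so a partial edge cell stays inside the box
            lat = min(lat_min + (i + 0.5) * step_deg, lat_max)
            lon = min(lon_min + (j + 0.5) * step_deg, lon_max)
            points.append((lat, lon))
    return points


pts = discretize_region(30.0, 32.0, 110.0, 113.0, 0.5)
print(len(pts))  # 4 rows x 6 columns of cells
```

Halving the cell size quadruples the number of point targets, which shows how quickly discretization inflates the task scale.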

Deep reinforcement learning (DRL), with its strong generalization and online learning capabilities, exemplified by breakthroughs such as the AlphaGo series, has demonstrated remarkable advantages in addressing the AEOSSP. Specifically, ref. [21] formulated real-time single-satellite scheduling with dynamic task allocation as a dynamic knapsack problem and applied the A3C algorithm to enable online decision-making for stochastic tasks. Although this method benefits from efficient parallel sampling, the asynchronous updates of its parallel actor-critic workers may cause training instability, limiting its effectiveness in environments with stringent real-time requirements. Ref. [22] developed an end-to-end deep learning framework; however, the model was confined to fixed scenarios, which constrained the generalization of the learned policy. Ref. [23] introduced a two-stage neural network-based combinatorial optimization approach that improved decision-making efficiency in complex environments, but separating the problem into two stages may lead to conflicting objectives between the sub-problems. Ref. [24] proposed the RLPT method to achieve non-iterative multi-objective optimization, effectively reducing the number of computational iterations; nevertheless, the approach responded poorly to high-priority dynamic tasks. Ref. [25] integrated graph clustering with the Deep Deterministic Policy Gradient (DDPG) algorithm for continuous-time planning, using graph structures to reduce state space complexity. However, DDPG is sensitive to sparse reward signals in continuous action spaces (e.g., attitude angle adjustments), so reward shaping is needed to improve its performance. Ref. [26] modified the DQN architecture to better accommodate task sequencing and temporal constraints, simplifying the decision logic through a discrete action space, although this discretization may compromise the precision of continuous attitude adjustments. Ref. [27] designed the GDNN framework, which accelerates inference through neural network compression to improve decision-making efficiency, but such compression may reduce adaptability in small-sample scenarios. Ref. [28] applied supervised Monte Carlo Tree Search (MCTS) to the collaborative scheduling of imaging and data transmission under multiple constraints. While this method handles complex constraints explicitly, its computational complexity grows exponentially with the number of constraints because the search space expands. Overall, although DRL surpasses traditional heuristic methods in efficiency, it remains constrained by the repetitive floating-point operations inherent in latitude–longitude-based time window calculations, creating a structural conflict between available computing power and timeliness requirements. Consequently, there is an urgent need for new spatial computing paradigms and dynamic resource scheduling mechanisms to overcome the real-time response bottleneck in agile satellite multi-target collaborative observation.

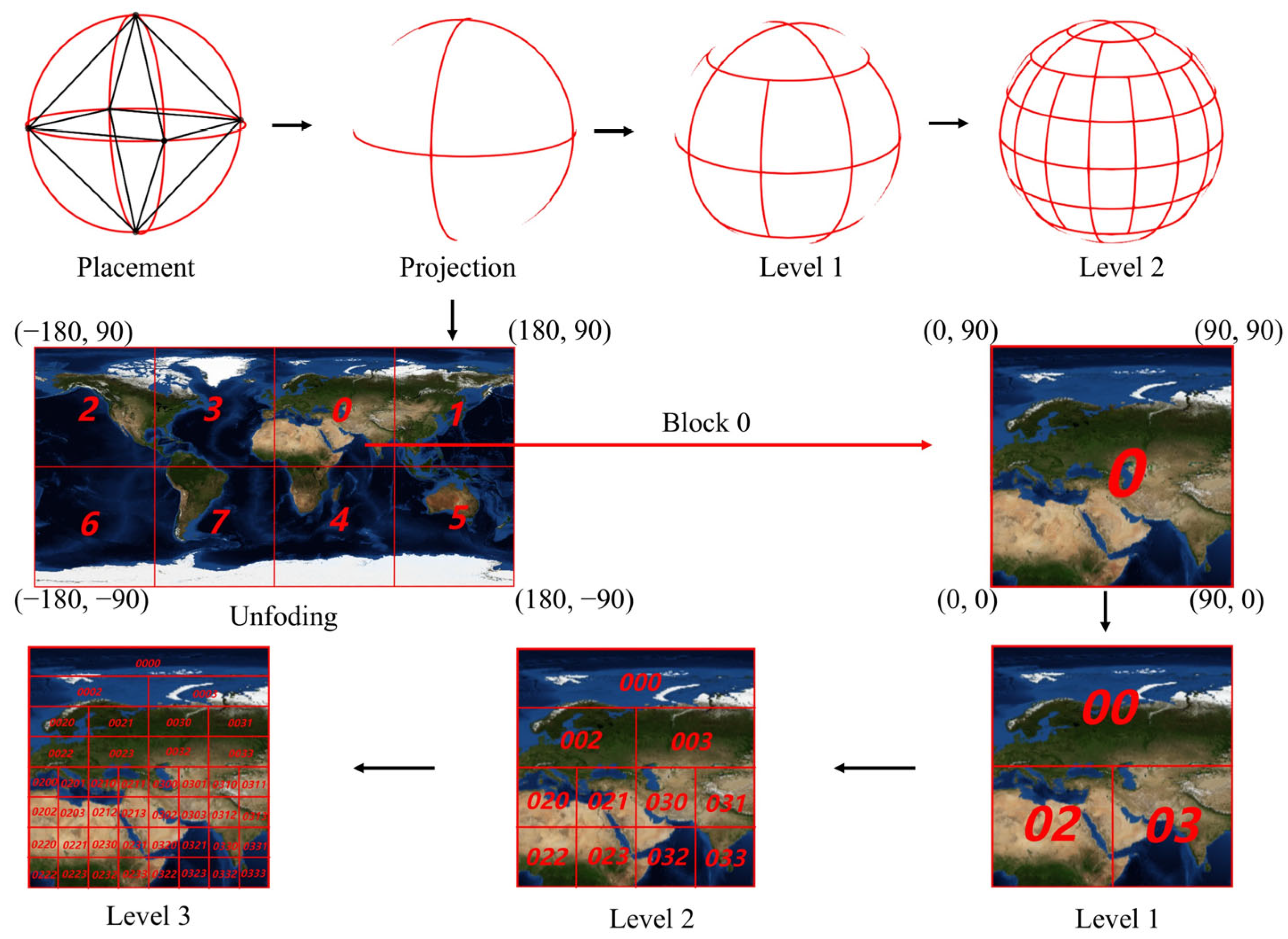

The Discrete Global Grid System (DGGS) a multi-scale earth-fitting grid constructed on a spherical surface with infinite subdivision capability while preserving its geometric integrity, enables uniform modeling of point, line, and region targets through its globally unique encoded indexing system [

29]. This system effectively addresses the computational inefficiency of traditional latitude–longitude models and has been applied in diverse satellite observation tasks [

30,

31,

32,

33,

34,

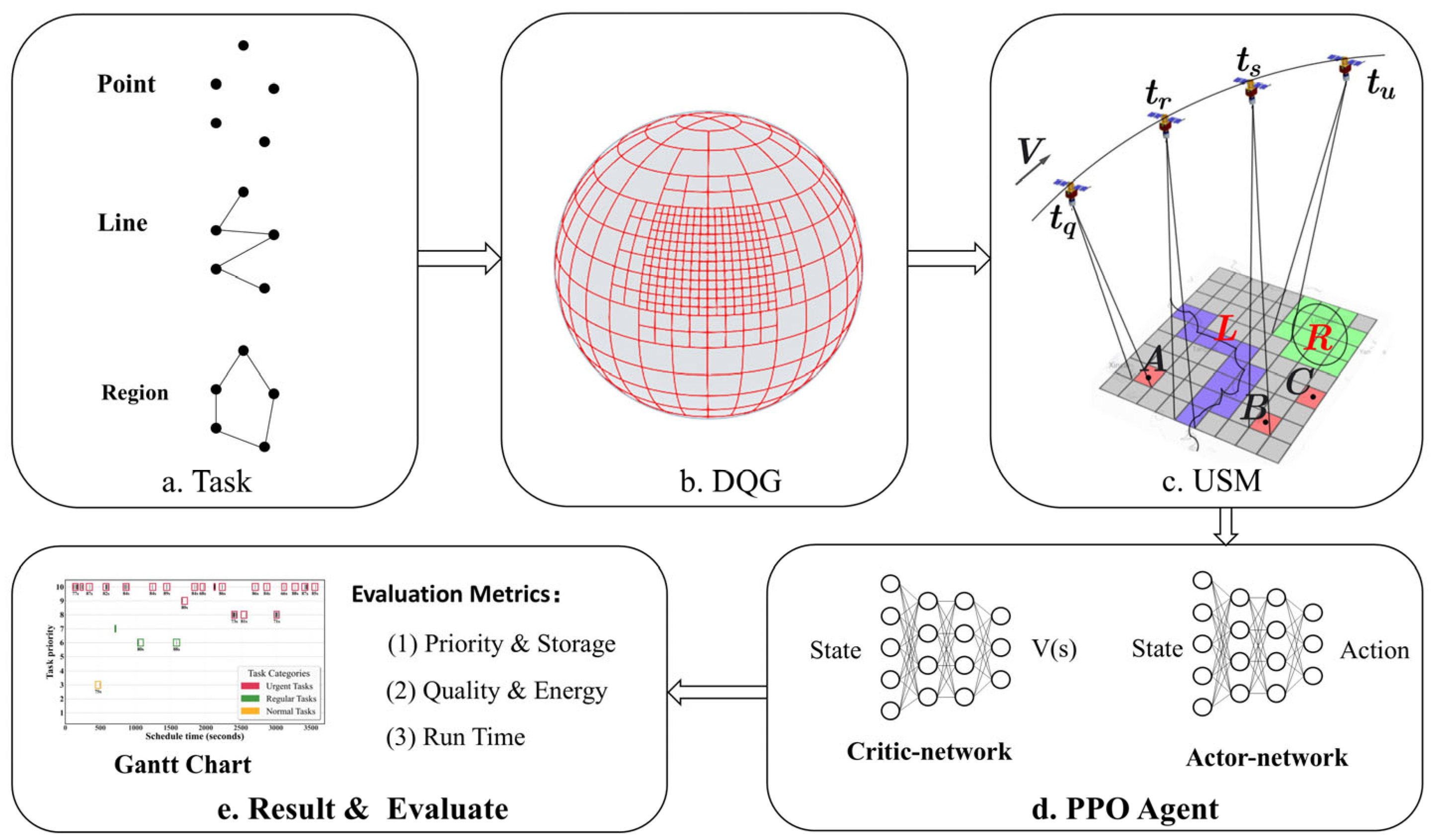

35]. However, its application remains unexplored in the AEOSSP. To address this gap, this study proposes a unified scheduling model for agile Earth observation satellites based on the DQG [

36] and PPO. The proposed model enhances rapid response capabilities and planning efficiency for multi-type target scheduling through two key innovations: (1) leveraging DQG’s global seamless coverage and multi-scale characteristics to establish a unified management framework for point, line, and region targets, thereby constructing a collaborative scheduling paradigm; and (2) replacing traditional latitude–longitude-based time window calculations with grid encoding computation to significantly improve computational efficiency. The main contributions are as follows:

- (1) An integrated solution framework for the AEOSSP that combines the DQG unified scheduling model with the PPO deep reinforcement learning algorithm, improving real-time response capability and system generalization performance.

- (2) A unified scheduling model grounded in the DQG discrete grid system, which establishes the grid as the fundamental computational unit to enable integrated management and modeling of heterogeneous targets (points, lines, and regions).

- (3) A novel time window calculation paradigm that uses DQG encoding in place of traditional latitude–longitude-based computations, significantly improving the efficiency of PPO-based task scheduling.
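To make grid-based unified target management more concrete, the sketch below maps point, line, and region targets to sets of hierarchical cell codes and tests coverage by code prefix. It is a simplified stand-in. It uses a plain equal-angle quadtree on latitude/longitude rather than the DQG (whose cells degenerate toward the poles). All function names, the sampling scheme, and the treatment of regions as latitude/longitude boxes are illustrative assumptions, not the authors' implementation.

```python
import math


def encode(lat, lon, level):
    """Quadtree code (one digit 0-3 per level) of the cell containing (lat, lon).

    Illustrative equal-angle quadtree; NOT the DQG encoding, which merges
    (degenerates) cells toward the poles.
    """
    lat_lo, lat_hi, lon_lo, lon_hi = -90.0, 90.0, -180.0, 180.0
    digits = []
    for _ in range(level):
        lat_mid, lon_mid = (lat_lo + lat_hi) / 2, (lon_lo + lon_hi) / 2
        quad = 0
        if lat >= lat_mid:  # northern half of the current cell
            quad += 2
            lat_lo = lat_mid
        else:
            lat_hi = lat_mid
        if lon >= lon_mid:  # eastern half of the current cell
            quad += 1
            lon_lo = lon_mid
        else:
            lon_hi = lon_mid
        digits.append(str(quad))
    return "".join(digits)


def target_cells(kind, coords, level):
    """Map a point, line (polyline) or region (lat/lon box) to a set of cell codes.

    Lines and regions are sampled at half the cell size; this keeps the sketch
    short but can miss a cell that a line only clips at a corner.
    """
    dlat, dlon = 180.0 / 2**level / 2, 360.0 / 2**level / 2
    if kind == "point":
        lat, lon = coords
        return {encode(lat, lon, level)}
    if kind == "line":
        cells = set()
        for (la0, lo0), (la1, lo1) in zip(coords, coords[1:]):
            n = max(1, math.ceil(max(abs(la1 - la0) / dlat, abs(lo1 - lo0) / dlon)))
            for k in range(n + 1):
                t = k / n
                cells.add(encode(la0 + t * (la1 - la0), lo0 + t * (lo1 - lo0), level))
        return cells
    if kind == "region":
        lat_min, lat_max, lon_min, lon_max = coords
        n_lat = max(1, math.ceil((lat_max - lat_min) / dlat))
        n_lon = max(1, math.ceil((lon_max - lon_min) / dlon))
        return {
            encode(lat_min + i * (lat_max - lat_min) / n_lat,
                   lon_min + j * (lon_max - lon_min) / n_lon, level)
            for i in range(n_lat + 1)
            for j in range(n_lon + 1)
        }
    raise ValueError(f"unknown target kind: {kind}")


def covered(coarse_cell, fine_cell):
    """A coarse cell contains a finer cell exactly when its code is a prefix."""
    return fine_cell.startswith(coarse_cell)


point = target_cells("point", (30.5, 114.3), 6)
line = target_cells("line", [(30.0, 110.0), (31.0, 116.0)], 6)
region = target_cells("region", (29.0, 31.0, 112.0, 115.0), 6)
footprint = encode(30.5, 114.3, 3)  # coarse cell standing in for a sensor footprint
print(len(point), len(line), len(region), covered(footprint, next(iter(point))))
```

Because a child code extends its parent's code, a containment test is a string prefix comparison rather than a repeated trigonometric evaluation. This is the kind of saving that the grid-encoding approach aims for.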

The remainder of this paper is organized as follows. Section 2 describes the research model and methodology. Section 3 presents the experimental design and analysis, including the datasets, the performance evaluation of task scheduling, comparative experiments, and verification of generalization capability. Section 4 examines the mechanisms underlying the model's effectiveness. Section 5 summarizes the algorithm's core value, application scenarios, and theoretical contributions, and outlines directions for future research.

3. Results and Analysis

This experiment evaluates the performance of the AEOSSP-USM algorithm under multiple constraints, focusing on the USM framework's capabilities in priority reward, imaging quality, energy consumption control, resource utilization efficiency, and real-time response. The experimental setup is based on simulated satellite parameters and remote sensing task requirements. By integrating the WRS-2 grid with the DQG, a composite task set of point, line, and region targets is generated to construct an experimental dataset that reflects realistic satellite mission characteristics, and priority weights are simulated to mimic practical application scenarios. All experiments were run on Ubuntu 22.04 with an NVIDIA GeForce RTX 4090 GPU, an Intel Core i9-14900K CPU, and 64 GB of RAM.

3.1. Dataset Construction

The core objective of AEOSSP is to enable efficient imaging of ground targets using limited satellite resources. Consequently, the dataset construction focuses on two key components: satellite parameters and task data.

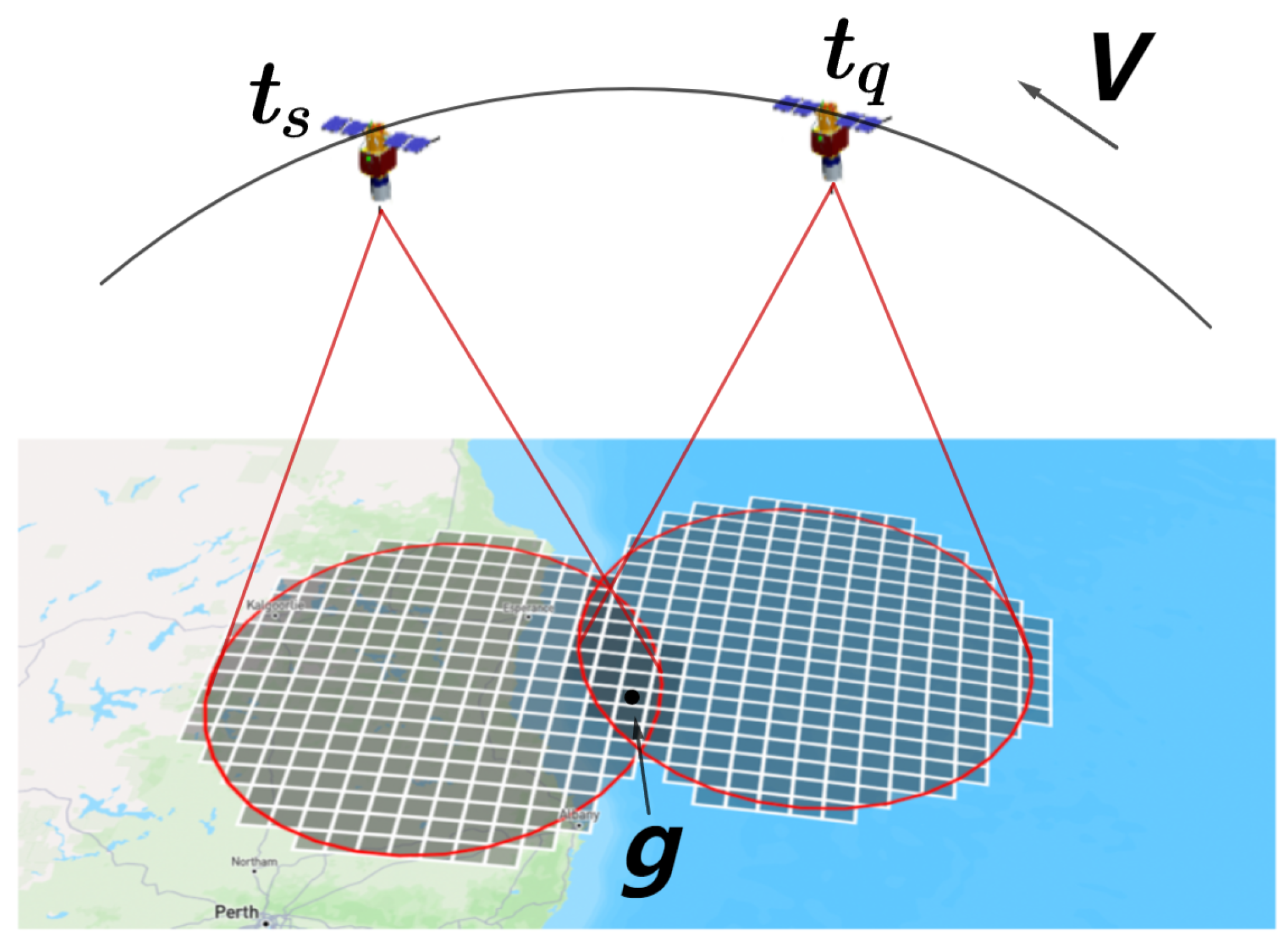

Satellite Parameters: A typical low-Earth-orbit agile satellite (orbital altitude: 500 km, velocity: 7.8 km/s) was selected as the research subject, with technical specifications referencing China’s GaoJing-1 satellite. This satellite supports continuous imaging, multi-target observation, and stereoscopic imaging modes, with a maximum single imaging area of 60 × 70 km. It employs three-axis attitude control technology (maximum maneuverability: 120°/47 s, attitude stability: <0.0005°/s), enabling large-scale precision observation. The simulation parameters are configured as follows: orbital inclination of 75°, bidirectional (cross-track/pitch) attitude adjustment range of 30°, and single-orbit coverage width of 15 km.
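A short calculation can relate the attitude figures above to ground geometry. The sketch below assumes a spherical Earth of radius 6371 km and ignores terrain and Earth rotation. Under those assumptions, it estimates how far from the sub-satellite point a 30° off-nadir pointing reaches at 500 km altitude. It also computes the average slew rate implied by a 120° maneuver in 47 s. The helper names are illustrative.

```python
import math

R_EARTH_KM = 6371.0  # mean Earth radius (spherical-Earth assumption)


def ground_offset_km(altitude_km, off_nadir_deg):
    """Ground distance from the sub-satellite point to where the boresight hits Earth.

    Law of sines in the Earth-centre / satellite / ground-point triangle gives the
    Earth central angle: lambda = asin((R + h) / R * sin(eta)) - eta.
    """
    eta = math.radians(off_nadir_deg)
    ratio = (R_EARTH_KM + altitude_km) / R_EARTH_KM * math.sin(eta)
    if ratio >= 1.0:
        raise ValueError("boresight misses the Earth at this off-nadir angle")
    central_angle = math.asin(ratio) - eta
    return R_EARTH_KM * central_angle


def mean_slew_rate(angle_deg, duration_s):
    """Average angular rate of a maneuver (ignores acceleration/settling phases)."""
    return angle_deg / duration_s


print(round(ground_offset_km(500.0, 30.0), 1))  # roughly 290 km
print(round(mean_slew_rate(120.0, 47.0), 2))    # about 2.55 deg/s
```

Actual maneuvers include acceleration and settling phases, so the instantaneous rate profile differs from this mean value.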

Task Data: Task data were managed with a grid-based approach and generated according to the Landsat WRS-2 grid standard. The WRS-2 grid divides the globe into 57,784 cells (each approximately 185 × 185 km), each uniquely identified by a path/row pair. In this study, 2000 point targets, 500 line targets, and 500 region targets were randomly sampled from the WRS-2 grid, and each target was assigned a priority weight from 1 to 10. According to this priority distribution, tasks with priorities 8–10, 6–7, and 1–5 were classified as Urgent Tasks, Regular Tasks, and Normal Tasks, respectively. The USM was then used to organize all target types uniformly, generating a standardized ground task dataset for the scheduling experiments.
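The task-generation step can be sketched as follows. The code indexes WRS-2 cells by path (1–233) and row (1–248), which yields the 57,784 cells mentioned above. It then samples the stated numbers of point, line, and region targets and applies the stated priority bands. Several details are assumptions, not the authors' procedure: uniform random sampling of path, row, and priority; the dictionary field names; and the fact that cells over ocean are not filtered out.

```python
import random
from collections import Counter

WRS2_PATHS, WRS2_ROWS = 233, 248  # WRS-2: 233 paths x 248 rows = 57,784 cells


def wrs2_index(path, row):
    """Map a 1-based WRS-2 (path, row) pair to a unique 0-based cell index."""
    if not (1 <= path <= WRS2_PATHS and 1 <= row <= WRS2_ROWS):
        raise ValueError("path/row out of range")
    return (path - 1) * WRS2_ROWS + (row - 1)


def priority_class(p):
    """Priority bands from the text: 8-10 urgent, 6-7 regular, 1-5 normal."""
    if 8 <= p <= 10:
        return "Urgent"
    if 6 <= p <= 7:
        return "Regular"
    if 1 <= p <= 5:
        return "Normal"
    raise ValueError("priority must be in 1..10")


def sample_tasks(n_point=2000, n_line=500, n_region=500, seed=0):
    """Randomly place targets in WRS-2 cells with uniform priorities 1-10 (assumed)."""
    rng = random.Random(seed)
    tasks = []
    for kind, n in (("point", n_point), ("line", n_line), ("region", n_region)):
        for _ in range(n):
            path, row = rng.randint(1, WRS2_PATHS), rng.randint(1, WRS2_ROWS)
            p = rng.randint(1, 10)
            tasks.append({"kind": kind, "cell": wrs2_index(path, row),
                          "priority": p, "class": priority_class(p)})
    return tasks


tasks = sample_tasks()
print(len(tasks), WRS2_PATHS * WRS2_ROWS)
print(Counter(t["class"] for t in tasks))
```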

3.2. Experimental Results

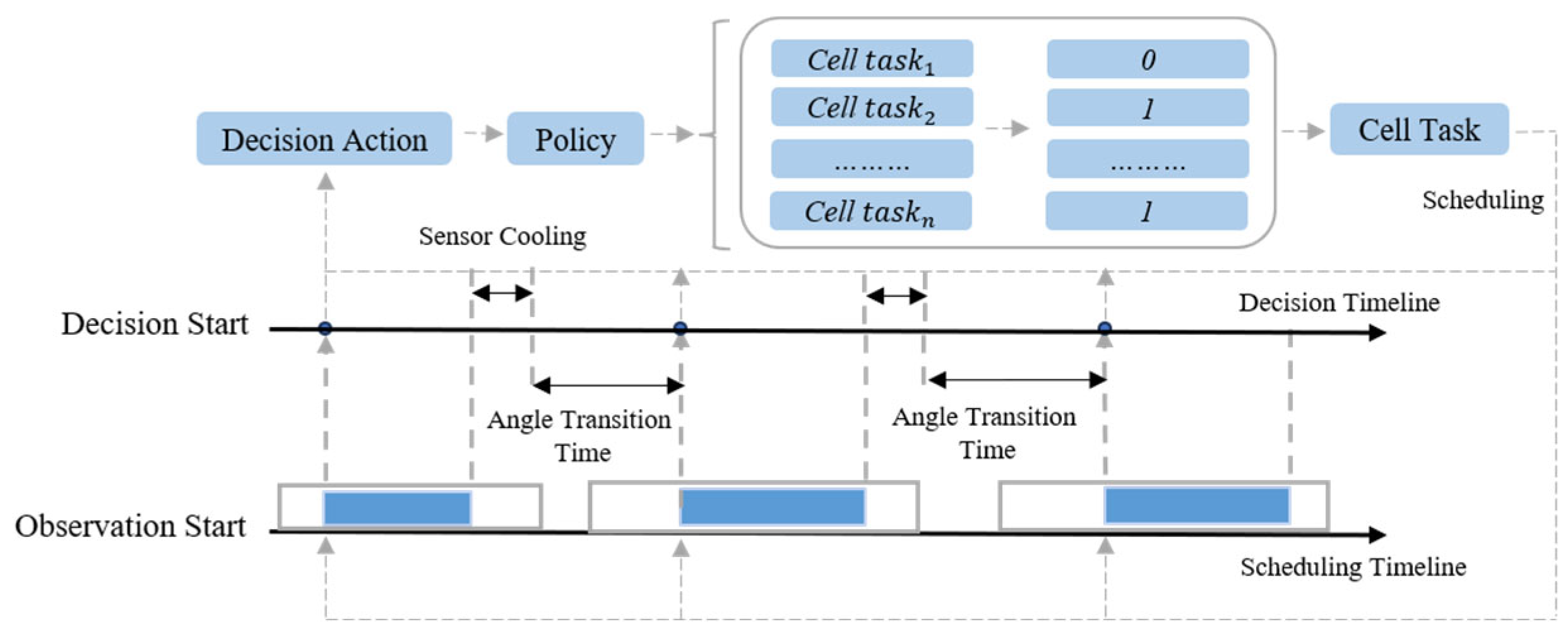

To make the simulation experiments more realistic and comprehensive, the initial state of the satellite (including position, velocity, and other parameters) is randomly initialized. The simulation horizon is 3600 s, and an SGP4-based orbit propagation library is used to compute the satellite's observable time windows for each target with a time step of 1 s. With PPO as the training algorithm in both cases, the USM unified scheduling model is compared with the latitude–longitude strip (LLS) direct coverage method to verify the advantages of USM in unified task scheduling.
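The step from 1 s samples to observable time windows can be sketched as a scan that merges consecutive visible samples. The sketch assumes that an upstream step has already computed, at each second, the off-nadir angle needed to point at the target. That step would use an SGP4 propagator plus pointing geometry, which are omitted here. It also treats the 30° attitude limit as the only visibility condition; illumination and other constraints are ignored. The function name is hypothetical.

```python
def visibility_windows(off_nadir_deg, max_off_nadir_deg=30.0, step_s=1.0):
    """Turn an off-nadir angle series sampled every step_s seconds into windows.

    A target is observable at a sample when the required off-nadir angle is
    within the attitude limit. Returns a list of (start_s, end_s) pairs.
    """
    windows, start = [], None
    for k, angle in enumerate(off_nadir_deg):
        t = k * step_s
        if angle <= max_off_nadir_deg and start is None:
            start = t                              # window opens at this sample
        elif angle > max_off_nadir_deg and start is not None:
            windows.append((start, t - step_s))    # window closed at previous sample
            start = None
    if start is not None:                          # window still open at horizon end
        windows.append((start, (len(off_nadir_deg) - 1) * step_s))
    return windows


# Synthetic 3600 s pass: required angle falls linearly to 0 deg at t = 1800 s
pass_angles = [abs(t - 1800) / 20.0 for t in range(3601)]
print(visibility_windows(pass_angles))
```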

To ensure training stability and optimize algorithmic performance, the hyperparameters of PPO are configured as follows: learning rate η = 0.0004, discount factor γ = 0.99995, clipping factor ϵ = 0.2, and 10 policy network updates per training step.
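The clipping factor ϵ enters PPO through the standard clipped surrogate objective. The sketch below evaluates that objective for a batch of log-probabilities and advantages in plain Python. It is not the authors' training code; a real implementation would compute this loss in an autodiff framework and typically add value-function and entropy terms. The helper `effective_horizon` is included to show that γ = 0.99995 corresponds to an effective planning horizon of about 1/(1 − γ) = 20,000 decision steps.

```python
import math

PPO_CONFIG = {  # hyperparameter values stated in the text
    "learning_rate": 4e-4,
    "gamma": 0.99995,
    "clip_epsilon": 0.2,
    "updates_per_step": 10,
}


def clipped_surrogate(new_logp, old_logp, advantages, eps=PPO_CONFIG["clip_epsilon"]):
    """Mean PPO-Clip objective over a batch (to be maximised).

    L = mean(min(r * A, clip(r, 1 - eps, 1 + eps) * A)), with r = exp(new_logp - old_logp)
    """
    total = 0.0
    for nl, ol, a in zip(new_logp, old_logp, advantages):
        r = math.exp(nl - ol)                        # probability ratio new/old policy
        r_clipped = min(max(r, 1.0 - eps), 1.0 + eps)
        total += min(r * a, r_clipped * a)           # pessimistic (lower) bound
    return total / len(advantages)


def effective_horizon(gamma):
    """Rough number of steps over which discounted rewards still matter."""
    return 1.0 / (1.0 - gamma)


print(clipped_surrogate([math.log(1.5)], [0.0], [1.0]))  # ratio 1.5 clipped to 1.2
print(effective_horizon(PPO_CONFIG["gamma"]))
```

The clip stops the ratio from moving far from 1 when the advantage is positive. For a negative advantage, the unclipped term is kept because it is the more pessimistic of the two.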

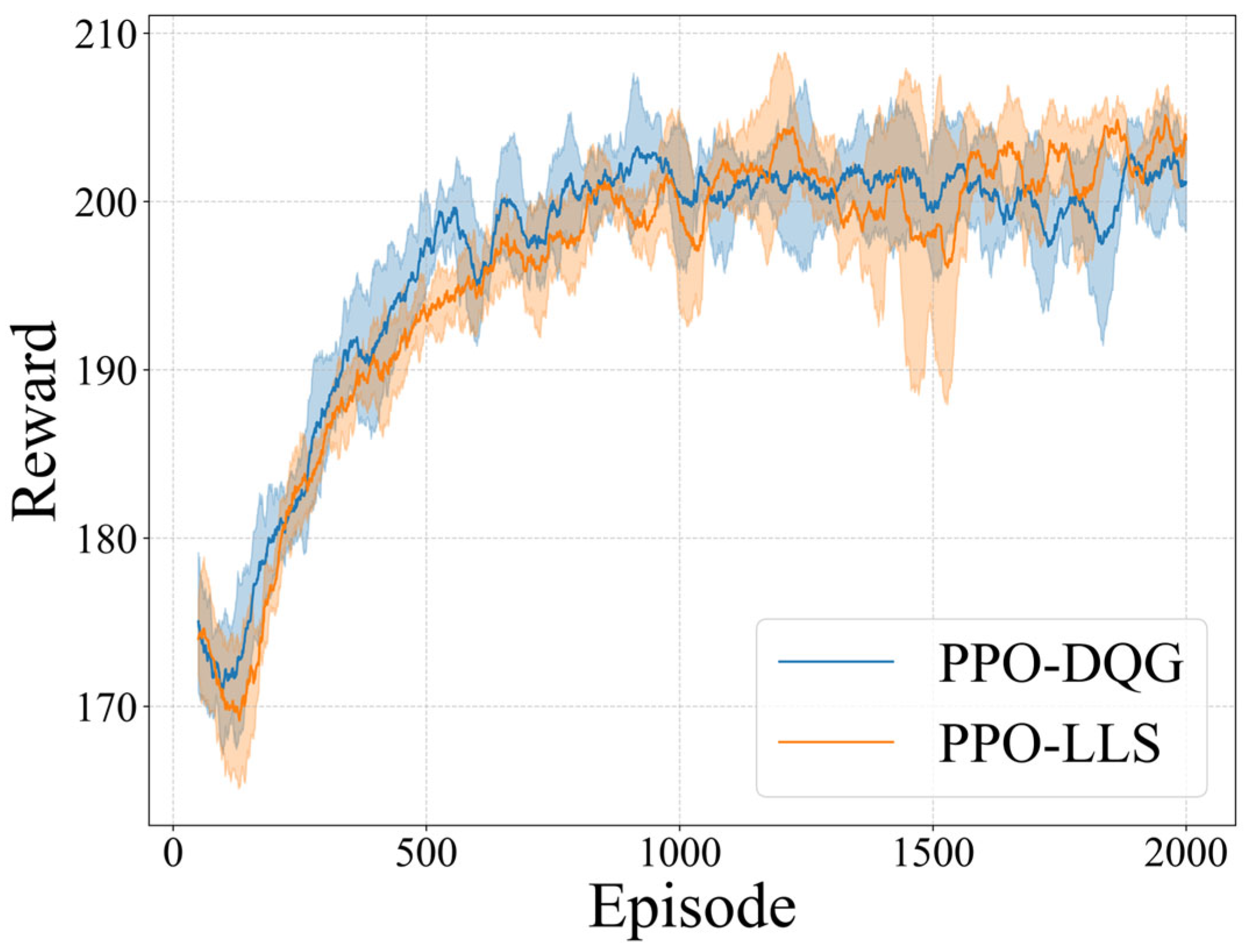

The experimental results are presented in Figure 6, where PPO-DQG denotes the USM method implemented on the DQG and PPO-LLS denotes the traditional latitude–longitude strip (LLS) direct coverage approach. Both algorithms converge stably after 2000 training epochs, with no training failure or value oscillation. Under identical training configurations, the two algorithms achieve comparable task rewards, indicating that USM can replace the LLS method for task allocation and scheduling without sacrificing reward.

To comprehensively evaluate the overall performance of both methods, a comparative analysis was conducted across five key metrics: Image Quality (IQ), Storage Occupy (SO), Energy Consume (EC), Task Reward (TR), and Time Waste (TW). The mean and standard deviation of each metric were used to quantify performance differences, and detailed results are summarized in Table 2.

The experimental results show that, at comparable task rewards, the proposed algorithm has clear advantages over the traditional latitude–longitude strip method. Imaging quality is approximately tripled, energy consumption is reduced by approximately 10%, and memory usage is decreased by over 90%. In addition, task scheduling computation is up to 35 times faster.

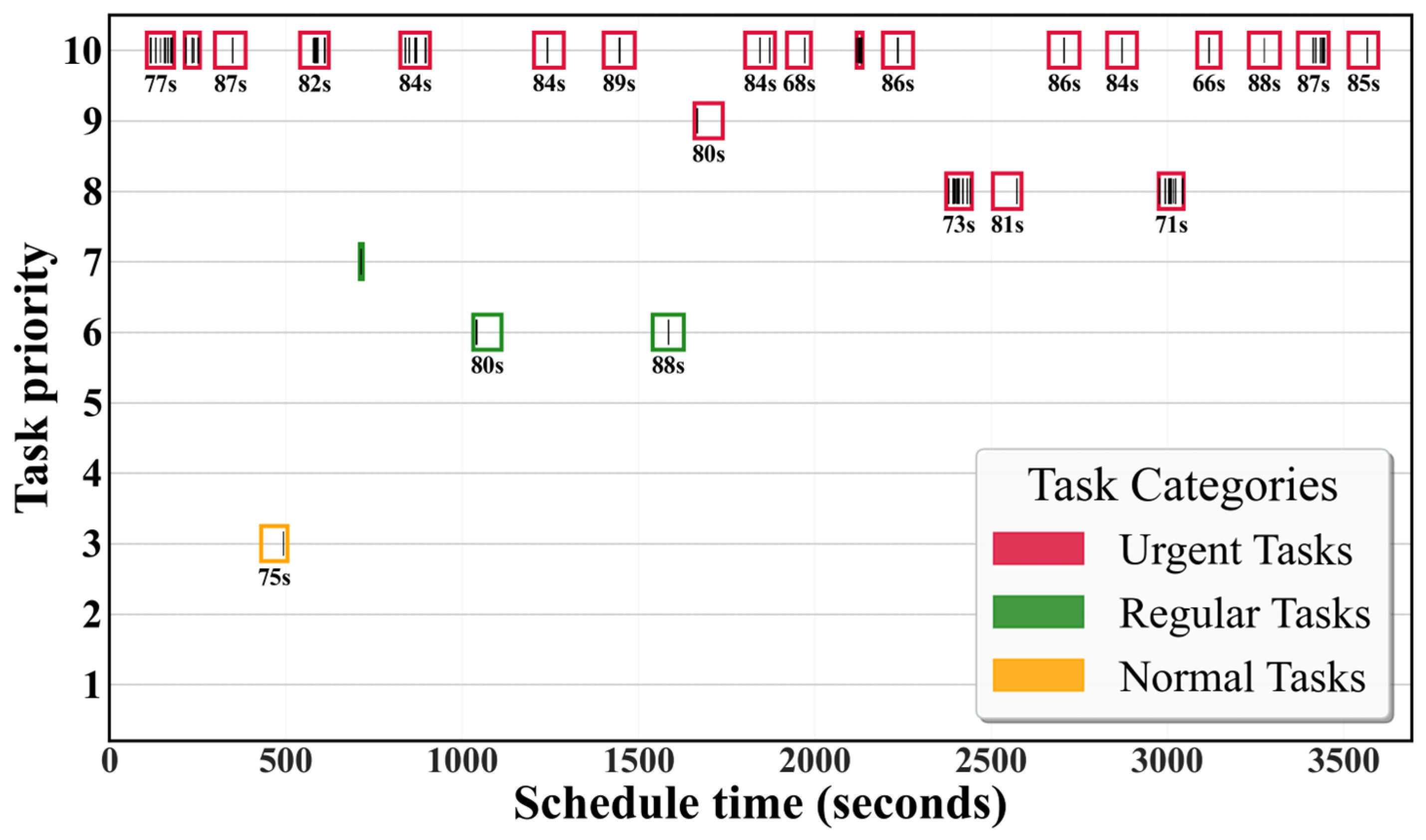

Furthermore, the scheduling results are grouped by priority into urgent, regular, and normal tasks for visualization. In Figure 7, the vertical axis represents task priority (1–10) and the horizontal axis denotes simulation time. Rectangular boxes of different colors represent the observable time windows of the USM basic units. The black stripes within these boxes indicate the scheduled times of fine-resolution point, line, and region targets within the corresponding USM unit. All scheduled tasks satisfy the timing and attitude constraints, and urgent tasks account for over 80% of the schedule, which validates the priority optimization. Meanwhile, the execution of regular and normal tasks shows that the remaining satellite resources are used efficiently.
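The statement that all scheduled tasks meet the timing and attitude constraints can be checked mechanically. For a time-ordered list of observations, the sketch below verifies two conditions. Each observation must lie inside its observable window, and the gap between consecutive observations must leave enough time for the attitude maneuver. Several elements are assumptions: the field names, the use of the mean slew rate implied by 120°/47 s, and the approximation of the slew angle as the larger of the roll and pitch changes. The paper's actual constraint model may include acceleration, settling time, or other terms.

```python
SLEW_RATE_DEG_S = 120.0 / 47.0  # assumed mean slew rate from the stated 120 deg / 47 s


def check_schedule(observations, slew_rate=SLEW_RATE_DEG_S):
    """Check window and attitude-transition constraints for a time-ordered schedule.

    observations: list of dicts with hypothetical keys start, end, win_start,
    win_end (seconds) and roll, pitch (degrees).
    Returns a list of violation messages (empty if the schedule is feasible).
    """
    issues = []
    for i, ob in enumerate(observations):
        if not (ob["win_start"] <= ob["start"] <= ob["end"] <= ob["win_end"]):
            issues.append(f"task {i}: outside its observable window")
        if i > 0:
            prev = observations[i - 1]
            # Roll and pitch assumed to slew simultaneously at the same rate
            angle = max(abs(ob["roll"] - prev["roll"]), abs(ob["pitch"] - prev["pitch"]))
            if ob["start"] - prev["end"] < angle / slew_rate:
                issues.append(f"task {i}: insufficient slew time after task {i - 1}")
    return issues


feasible = [
    {"start": 0, "end": 10, "win_start": 0, "win_end": 20, "roll": 0.0, "pitch": 0.0},
    {"start": 15, "end": 20, "win_start": 12, "win_end": 30, "roll": 10.0, "pitch": 0.0},
]
too_tight = [feasible[0], dict(feasible[1], start=12)]  # only 2 s for a 10 deg slew
print(check_schedule(feasible), check_schedule(too_tight))
```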

3.3. Comparative Analysis

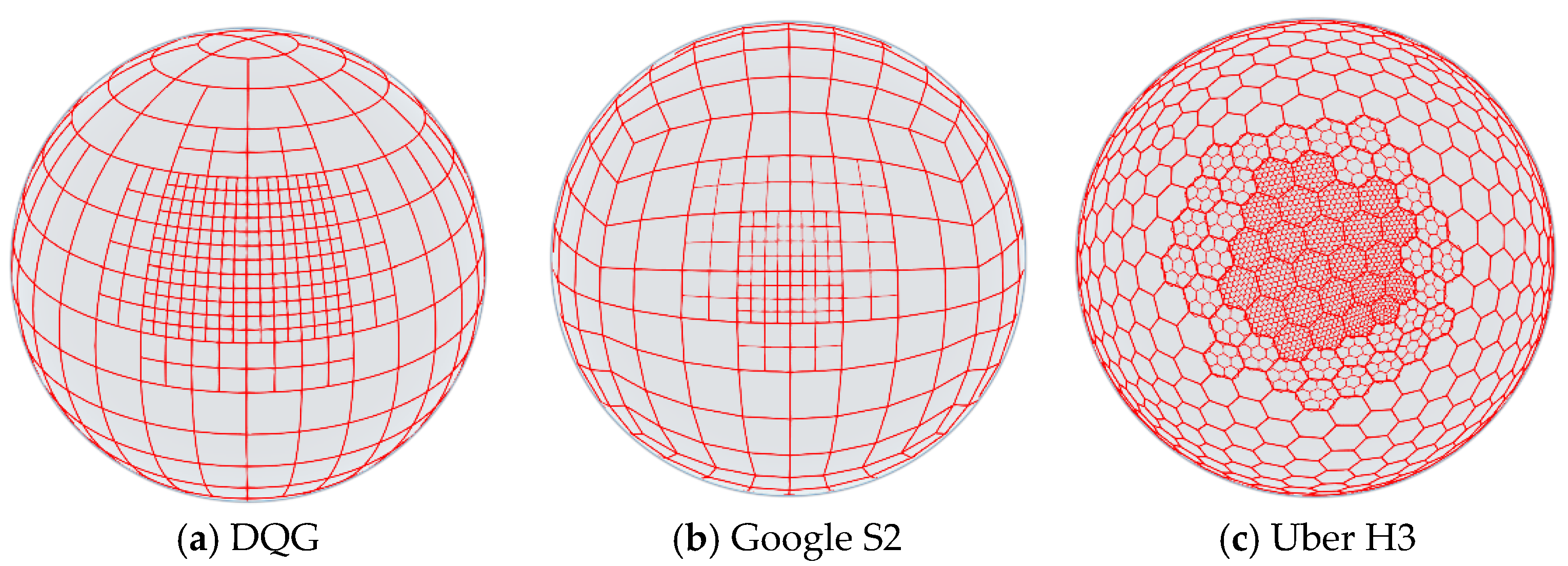

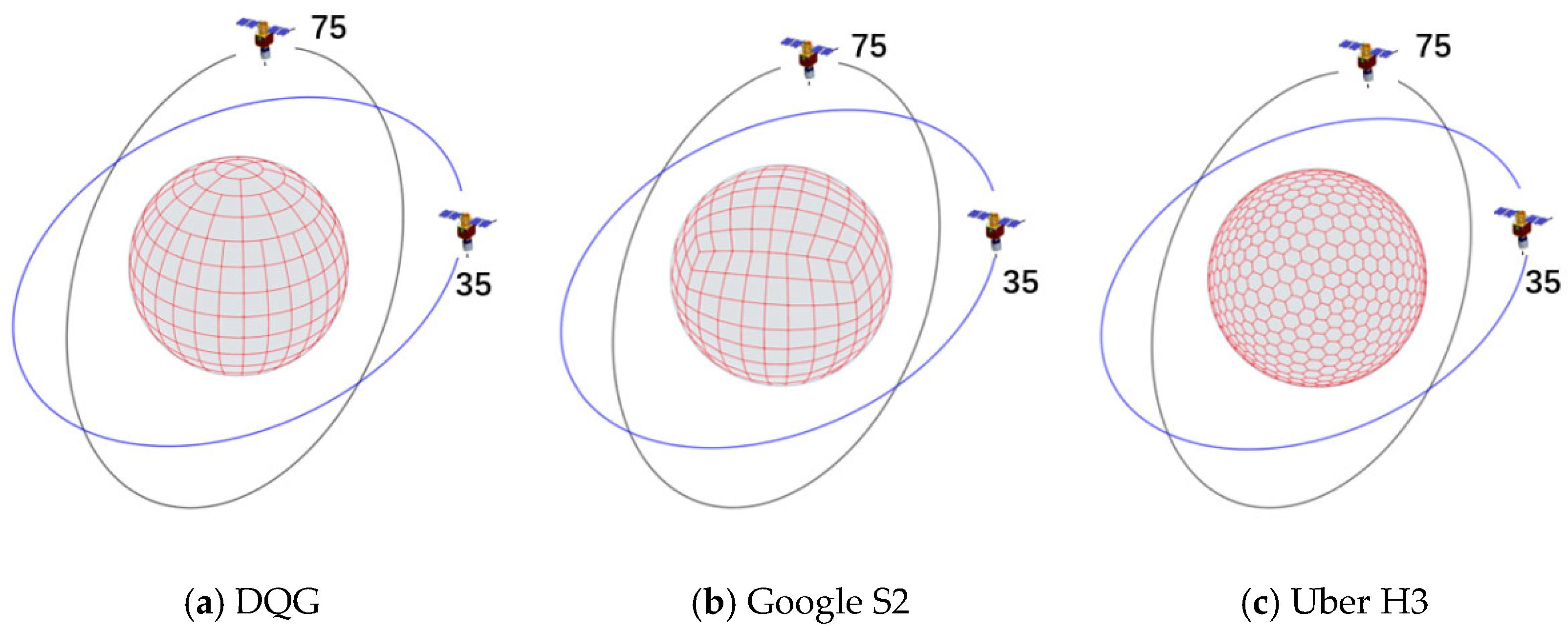

Different discrete grid systems use distinct base partitioning units and encoding methods. As shown in Figure 8, these grid systems differ significantly in surface partitioning approach, spatial coverage capacity, computational complexity, and scalability, and they therefore affect satellite scheduling performance to different degrees. Consequently, in addition to the DQG adopted in this study, we selected Google's S2 grid and Uber's H3 grid for a comparative performance analysis in satellite scheduling. The S2 grid projects the sphere onto the six faces of a cube and subdivides each face hierarchically as a quadtree, offering good spatial uniformity and computational efficiency. The H3 grid adopts a hierarchical hexagonal structure and has been widely used in domains such as ride-hailing service scheduling.

This study implements the USM on three discrete global grids (S2, H3, and DQG), trains each model with the PPO algorithm, and compares them with the latitude–longitude strip method. The four models (PPO-LLS, PPO-S2, PPO-H3, and PPO-DQG) are systematically evaluated with five key performance metrics: Image Quality (IQ), Storage Occupy (SO), Energy Consume (EC), Task Reward (TR), and Time Waste (TW). The mean and standard deviation of each metric are used to quantify performance differences, with detailed results presented in Table 3.

The experimental data show notable differences in task reward (TR) and memory usage (SO) among the four models. PPO-S2 has the highest TR mean, 203.56 ± 13.44. This is 2.0% higher than PPO-DQG (199.61 ± 14.2), 2.1% higher than PPO-H3 (199.48 ± 14.06), and 2.7% higher than PPO-LLS (198.28 ± 15.62). However, the higher TR of S2 comes with additional memory overhead: its SO mean of 58.05 ± 10.89 units is 6.4% higher than that of H3 (54.57 ± 11.75), although still 36.2% lower than that of DQG (91.04 ± 17.78). Further analysis shows that each 1% gain in TR for S2 costs approximately 3.6% in additional memory. By contrast, PPO-LLS has an SO mean of 1352.56 ± 41.11 units because of its fixed strip coverage strategy. Its memory utilization rate is below 5%, which makes it suitable only for static offline scenarios.

Energy consumption (EC) and imaging quality (IQ) exhibit a clear trade-off among the grid-based models. PPO-H3 achieves the best energy efficiency, with an EC mean of 85.31 ± 5.28 units, 16.6% lower than PPO-LLS (102.34 ± 5.21). However, its IQ mean (32.39 ± 9.04) is 50.6% lower than that of PPO-DQG (65.5 ± 12.97). Compared with PPO-H3, PPO-DQG roughly doubles IQ (+102.2%, 65.5 vs. 32.39) at the cost of a 5.0% increase in energy consumption (89.58 ± 6.05 vs. 85.31 ± 5.28), while keeping the quality variability (Δσ = 0.58) at a low level. The experiment further shows that the most energy-efficient model, PPO-H3, suffers an IQ drop of more than 50% relative to PPO-DQG, so aggressive energy optimization comes at the cost of substantial image quality loss. Conversely, PPO-LLS has the highest EC mean (102.34) but an IQ of only 32.39 ± 9.04. The strip method therefore spends more energy without a corresponding gain in imaging quality or quality stability.

The processing time (TW) metric reveals differences in real-time performance among the models. PPO-DQG has a clear advantage, with a TW mean of 0.4063 ± 0.0015 s. This is 63.6% shorter than PPO-H3 (1.1182 ± 0.00382 s) and 70.5% shorter than PPO-S2 (1.3770 ± 0.00138 s). Its 99th-percentile delay is stable at 0.4256 s, meeting the strict requirements of real-time systems. However, the SO standard deviation of DQG reaches 17.78 units, which is 51.3% and 63.3% higher than those of H3 (11.75) and S2 (10.89), respectively. Efficient processing therefore comes with a risk of memory fluctuation. In contrast, PPO-LLS has a TW mean of 13.956 ± 0.00131 s, more than 10 times that of the other models (about 34 times that of PPO-DQG). Its distribution is also strongly skewed, so it is suitable only for non-real-time offline tasks (such as historical data analysis).
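The percentages in this subsection are relative changes between Table 3 means, and they can be recomputed directly from the values quoted in the text. The helper below shows the calculation so that each figure can be checked against the table. The dictionary layout is illustrative, and only means quoted in the text are included.

```python
def pct_change(new, base):
    """Relative change of `new` with respect to `base`, in percent."""
    return (new - base) / base * 100.0


# Table 3 means as quoted in the text
tr = {"S2": 203.56, "DQG": 199.61, "H3": 199.48, "LLS": 198.28}  # task reward
so = {"S2": 58.05, "H3": 54.57, "DQG": 91.04, "LLS": 1352.56}    # storage occupy
ec = {"H3": 85.31, "DQG": 89.58, "LLS": 102.34}                  # energy consume
iq = {"H3": 32.39, "DQG": 65.5}                                  # image quality
tw = {"DQG": 0.4063, "H3": 1.1182, "S2": 1.3770, "LLS": 13.956}  # time (s)

print(f"TR  S2 vs DQG : {pct_change(tr['S2'], tr['DQG']):+.2f}%")
print(f"SO  S2 vs H3  : {pct_change(so['S2'], so['H3']):+.2f}%")
print(f"EC  H3 vs LLS : {pct_change(ec['H3'], ec['LLS']):+.2f}%")
print(f"IQ  DQG vs H3 : {pct_change(iq['DQG'], iq['H3']):+.2f}%")
print(f"EC  DQG vs H3 : {pct_change(ec['DQG'], ec['H3']):+.2f}%")
print(f"TW  DQG vs H3 : {pct_change(tw['DQG'], tw['H3']):+.2f}%")
print(f"TW  LLS / DQG : {tw['LLS'] / tw['DQG']:.1f}x")
```

The base of the comparison matters. For example, a 4.27-unit EC gap is about 5.0% of PPO-H3's mean but about 4.8% of PPO-DQG's mean, so each percentage should state which model it is measured against.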

Overall, models based on discrete grid systems demonstrate significant performance improvements over the latitude–longitude strip method in terms of energy consumption, imaging quality, memory usage, and response time. These results further validate the feasibility of replacing traditional latitude–longitude models with discrete grid systems, enabling more efficient execution of AEOSSP task scheduling.

3.4. Generalization Analysis

In on-board deployment scenarios, satellite agents must adapt and generalize well because the operational environment is highly dynamic and uncertain and computational resources are limited. The agent must not only execute tasks stably in the training environment but also rapidly adapt its strategy and make decisions in complex real-world situations (e.g., sudden changes in imaging resource availability). To this end, we designed a transfer experiment to evaluate environmental adaptability: a policy trained in an environment with an imaging capability of 25 was transferred to a new environment with an imaging capability of 15. The results are presented in Table 4.

With imaging resources reduced to 60% of the training setting (Table 4 versus Table 3), all models exhibited approximately proportional performance degradation. The mean values of Task Reward (TR), Energy Consume (EC), and Image Quality (IQ) fell to roughly 60% of their original values (e.g., PPO-LLS's TR dropped from 198.28 to 118.68). The standard deviations of these metrics dropped to zero, which removed performance fluctuations and indicates more stable scheduling under the tighter resource constraint. Notably, the PPO-DQG model retained the fastest computation speed (TW = 0.42 s). Overall, agents based on discrete grid systems demonstrated superior generalization, possibly because discrete grid structures help them capture spatial information about the environment.
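The "roughly 60%" figure can be checked by comparing the metric retention ratio with the capacity ratio of the transfer experiment. The sketch below uses the PPO-LLS task reward quoted above. The helper name is illustrative.

```python
def retention(reduced, original):
    """Share of the original metric value kept after the resource reduction."""
    return reduced / original


capacity_ratio = 15 / 25                # imaging capability: training 25 -> test 15
tr_ratio = retention(118.68, 198.28)    # PPO-LLS task reward, Table 4 vs. Table 3
print(f"capacity kept: {capacity_ratio:.0%}, TR kept: {tr_ratio:.1%}, "
      f"TR drop: {1 - tr_ratio:.1%}")
```

The reward retains about 60% of its original value, which is a decrease of about 40%. This closely tracks the 60% capacity ratio and is what "proportional degradation" means here.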