Once the methodological framework is established, the MCDM problem can be addressed. Following alternative evaluation, a set of fuzzy weights will be generated for each weighting mechanism. These weight sets, along with the decision matrix, will serve as inputs to the proposed ranking algorithms. The combination of different weighting methods and ranking techniques will produce a total of nine distinct alternative prioritization rankings, which will require comprehensive comparative analysis.

Upon obtaining the MCDM-derived rankings, an independent sensitivity analysis will be performed to assess how potential deviations in the determined weights could affect the resulting prioritized lists. This sensitivity analysis will serve two critical purposes: verifying the stability of top-ranked asteroid positions and validating that the results derived from both classical and innovative MCDM algorithm combinations are robust and reliable.

4.1. Weight Determination

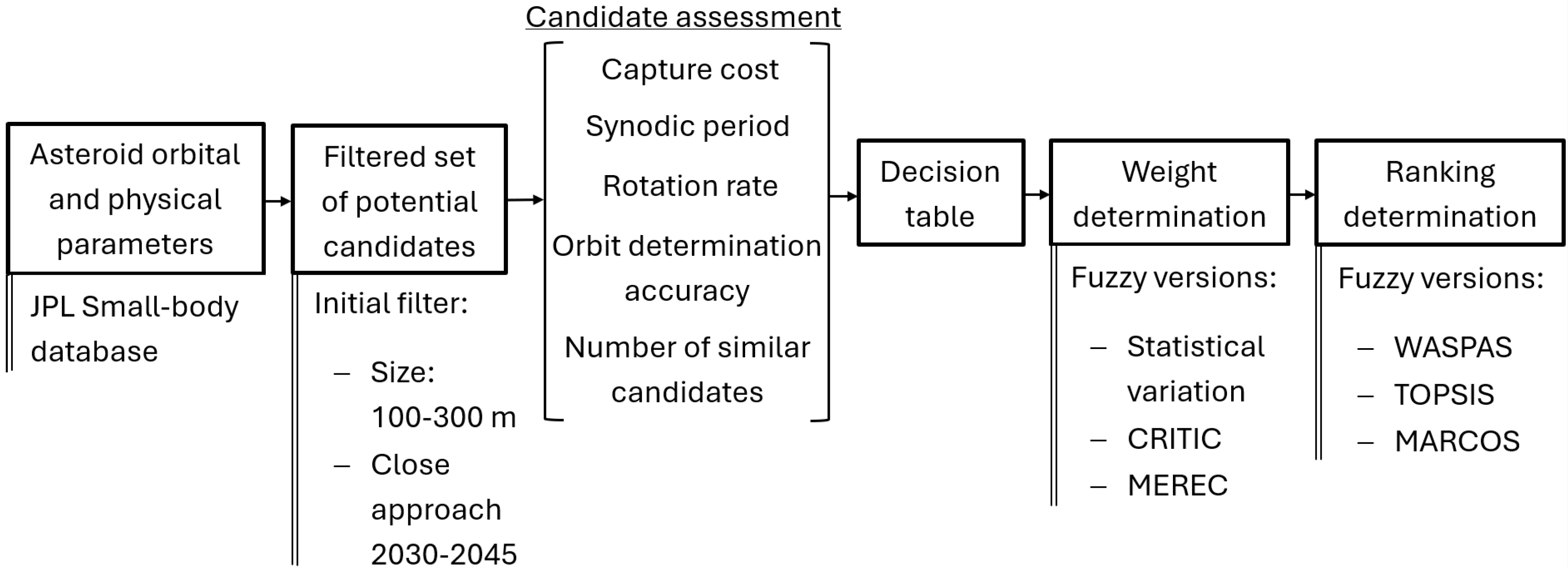

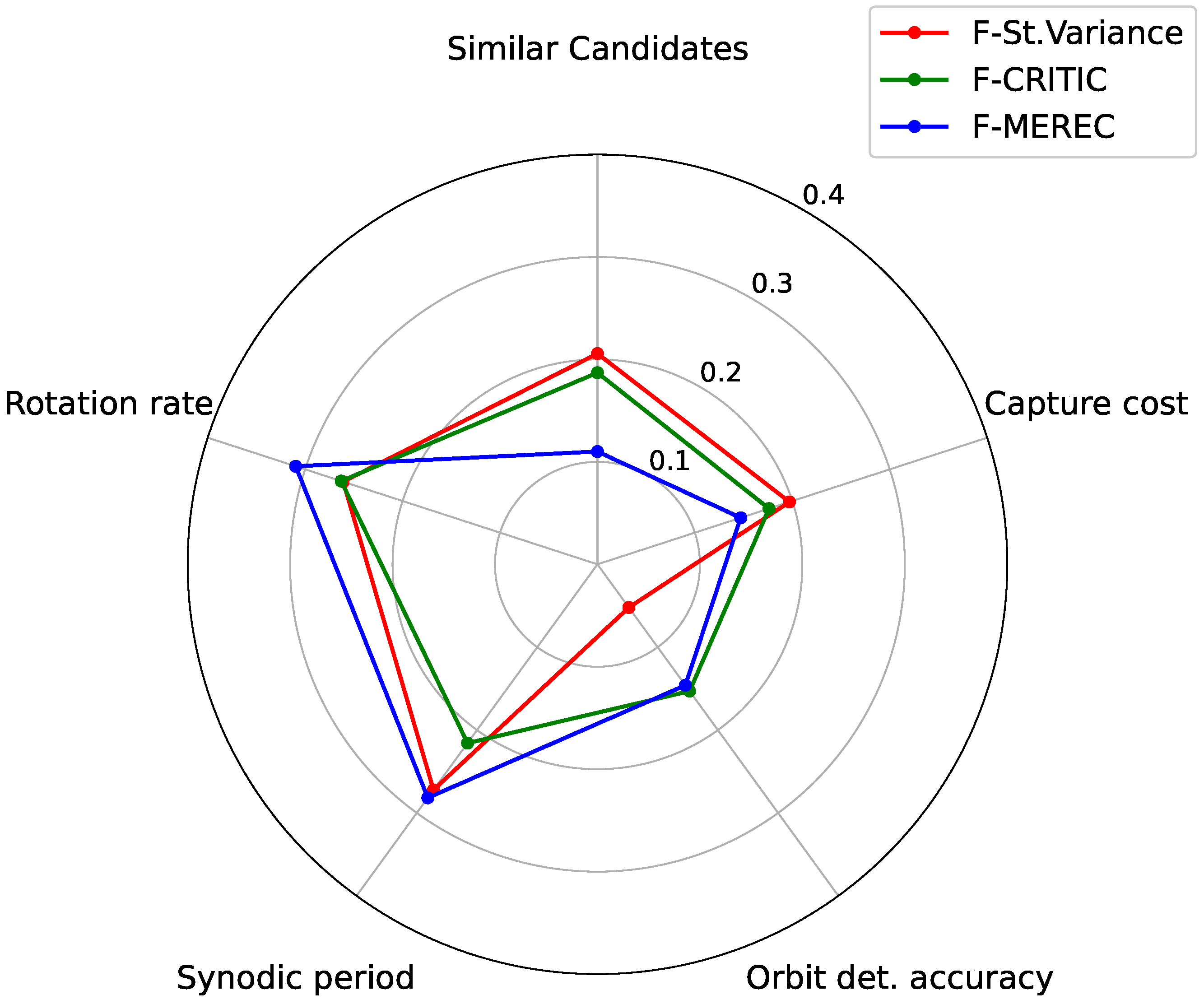

The proposed evaluation methods (fuzzy statistical variance, fuzzy CRITIC, and fuzzy MEREC) were applied to the decision matrix presented in Table 3. Since each weighting method assesses criterion importance through distinct normalization and information processing techniques, the resulting fuzzy weight sets are expected to differ from one another.

Consequently, Table 4 presents the fuzzy weight evaluation results for each criterion, while Table 5 and Figure 2 display the weight values defuzzified using Equation (1).

It is worth highlighting that, although the CRITIC method already incorporates correlation control within its weighting algorithm, the correlation among criteria should still be assessed to ensure the robustness of the results. A straightforward way to do so is to calculate the Variance Inflation Factor (VIF) [80]. A high VIF value for a given criterion indicates that its behavior may be explained by the remaining criteria. Accordingly, the VIF was calculated for all criteria; in all cases, the values were below 1.2, well under the threshold of 5 commonly accepted in the literature [81]. This confirms that the correlation among criteria in this problem is adequately controlled.
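As an illustration of the VIF check described above, the following minimal sketch regresses each criterion on the remaining ones and computes 1 / (1 - R²). The function name `variance_inflation_factors` and the randomly generated demonstration matrix are assumptions made for this example; they are not the study's code or data.

```python
import numpy as np

def variance_inflation_factors(X):
    """Return VIF_j = 1 / (1 - R_j^2) for every column j of X.

    R_j^2 is the coefficient of determination obtained by regressing
    column j on all remaining columns (with an intercept term).
    """
    X = np.asarray(X, dtype=float)
    n_rows, n_cols = X.shape
    vifs = np.empty(n_cols)
    for j in range(n_cols):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        design = np.column_stack([np.ones(n_rows), others])
        coef, *_ = np.linalg.lstsq(design, y, rcond=None)
        residuals = y - design @ coef
        ss_res = residuals @ residuals
        ss_tot = ((y - y.mean()) ** 2).sum()
        r_squared = 1.0 - ss_res / ss_tot
        vifs[j] = 1.0 / (1.0 - r_squared)
    return vifs

# Hypothetical data: 200 alternatives evaluated on 4 unrelated criteria.
rng = np.random.default_rng(0)
demo = rng.normal(size=(200, 4))
vif_independent = variance_inflation_factors(demo)

# Adding a criterion that nearly duplicates the first one inflates the VIF.
near_copy = demo[:, 0] + 0.05 * rng.normal(size=200)
vif_collinear = variance_inflation_factors(np.column_stack([demo, near_copy]))
```

With unrelated criteria the values stay close to 1, whereas a near-duplicate criterion pushes the VIF of both affected columns far above the threshold of 5.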

To compare the results produced by different MCDM weighting methods, a common approach involves computing the Pearson correlation coefficient and constructing a correlation matrix. Each entry in this matrix is the Pearson correlation coefficient between the weights obtained from two different methods. A coefficient close to unity indicates a strong positive correlation, meaning that if a criterion’s weight is high in one method, it tends to be high in the other as well. A negative coefficient suggests an inverse relationship, where a relatively high weight in one method corresponds to a low weight in the other. Finally, a coefficient near zero implies no clear relationship between the weight tendencies of the two methods.
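The correlation matrix described above can be sketched as follows. The three weight vectors are hypothetical placeholders chosen only so that each sums to one; they are not the values reported in Table 5.

```python
import numpy as np

# Hypothetical defuzzified weights for five criteria (each row sums to 1).
weights = {
    "statistical variance": [0.30, 0.25, 0.20, 0.15, 0.10],
    "CRITIC": [0.28, 0.22, 0.24, 0.14, 0.12],
    "MEREC": [0.22, 0.26, 0.18, 0.20, 0.14],
}

methods = list(weights)
W = np.array([weights[m] for m in methods])  # shape: (methods, criteria)

# np.corrcoef treats each row as one variable, so entry (i, j) is the
# Pearson coefficient between the weight vectors of methods i and j.
corr = np.corrcoef(W)

for i, name in enumerate(methods):
    print(name, np.round(corr[i], 3))
```

The matrix is symmetric with ones on the diagonal, so only the off-diagonal entries carry information about agreement between methods.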

The correlation matrix comparing the three weighting methods is shown in Figure 3.

As observed, all correlation coefficients are positive and close to 1. The lowest correlation occurs between fuzzy statistical variance and fuzzy MEREC, which can be attributed to their fundamentally different weighting procedures [49]. Notably, the largest discrepancies lie in the orbit determination accuracy criterion, where MEREC assigns a weight of 0.153 compared to 0.0521 for statistical variance, and in the number of similar candidates criterion, where the pattern is reversed (0.11 according to MEREC and 0.206 according to the statistical variance method). Such discrepancies are inherent to MCDM weighting techniques, yet the correlation coefficient of 0.638 between fuzzy statistical variance and fuzzy MEREC remains sufficiently high to confirm that all methods produce highly correlated, coherent results [82].

Finally, it is important to note that while the defuzzified weights in Table 5 and Figure 3 were used for comparison purposes, the fuzzy weights will be employed to calculate the alternative rankings.

4.2. Alternative Rankings

Each of the three fuzzy weight sets calculated in the previous section will be used, along with the decision matrix, as input for the different fuzzy ranking methods of F-WASPAS, F-TOPSIS, and F-MARCOS, resulting in a total of nine alternative rankings. As discussed in Section 2.3, the diverse normalization approaches and data-processing techniques will naturally lead to variations between the different rankings, which is a common outcome when applying MCDM methods [14,34], though these differences must be systematically analyzed and controlled.

Following this approach, Table 6 presents the alternative rankings in descending order for all nine combinations of weighting and ranking methods.

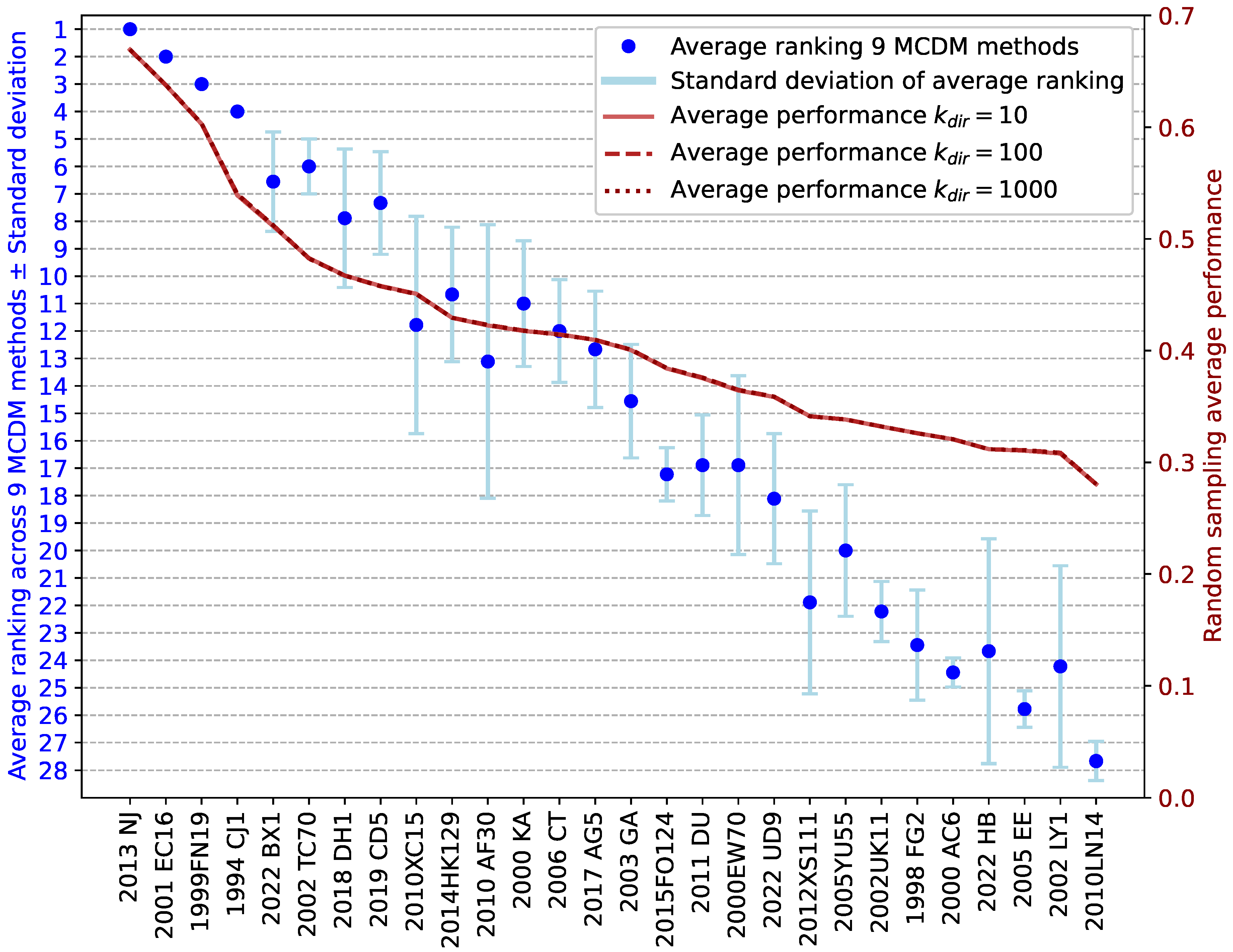

As evidenced by the results, while the rankings differ, a consistent trend emerges in which the top-performing asteroids in one ranking maintain strong positions across all others, and vice versa. Notably, the four highest-ranked candidates remain identical in all rankings, with 2013 NJ consistently outperforming all other alternatives.

Interestingly, 2013 NJ is a small Apollo-class NEO with an estimated diameter on the order of 0.1–0.2 km. Its orbital elements (semimajor axis, eccentricity, inclination, longitude of the ascending node, and argument of perihelion) have been well determined from observations over multiple apparitions and from radar astrometry. Photometric and spectroscopic observations show that it has a relatively low rotation rate (0.004 revolutions per hour) and a Q-type (high-albedo, olivine/pyroxene-rich) surface [83], analogous to ordinary chondritic or ureilite meteorites with minimal space weathering.

It is worth noting that the four main candidates for the boulder capture option of the ARM mission—Itokawa (1998 SF36), Bennu (1999 RQ36), 1999 JU3, and 2008 EV5—do not appear in the rankings, as their sizes exceed the limits defined in this study and were therefore excluded during the preliminary screening phase. However, other asteroids proposed by ARM as potential targets are included, such as 2006 CT, which was ranked between positions 10 and 15 across the different methods, and 2000 AC6, which was ranked between positions 24 and 25 due to its higher rotation rate and capture cost.

Although the agreement in the top four ranking positions suggests robust and coherent solutions across methodologies, a correlation analysis was conducted to quantitatively validate this observed consistency. As a result, Figure 4 presents the ranking correlation matrix for all proposed method combinations.

As shown in Figure 4, all correlation coefficients are positive and close to one, indicating strong relationships between the rankings. The lowest value, 0.814, is found between the MEREC–WASPAS and CRITIC–TOPSIS combinations and still reflects a strong correlation. The high correlation values observed among the rankings suggest a notable robustness of the obtained solutions. On average, these correlations exceed those observed between the weight distributions, indicating that the resulting rankings remain stable under small variations in the weighting of criteria.
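A ranking correlation matrix of this kind can be sketched as shown below. The text does not state which coefficient was used for the rankings, so this example assumes the Pearson coefficient applied to rank positions, which coincides with Spearman's rank correlation when there are no ties. The alternative labels and the three orderings are hypothetical.

```python
import numpy as np

def rank_positions(order, alternatives):
    """Map an ordered list (best first) to the rank position of each alternative."""
    position = {name: i + 1 for i, name in enumerate(order)}
    return np.array([position[name] for name in alternatives], dtype=float)

# Hypothetical alternatives and three of the nine possible orderings.
alternatives = ["A1", "A2", "A3", "A4", "A5", "A6"]
rankings = {
    "variance-WASPAS": ["A1", "A2", "A3", "A4", "A5", "A6"],
    "CRITIC-TOPSIS": ["A1", "A2", "A4", "A3", "A6", "A5"],
    "MEREC-MARCOS": ["A1", "A3", "A2", "A4", "A5", "A6"],
}

P = np.array([rank_positions(order, alternatives) for order in rankings.values()])

# Pearson correlation of rank positions (equal to Spearman's rho without ties).
rank_corr = np.corrcoef(P)
```

Swapping neighbouring positions in the lower part of a ranking reduces the coefficient only slightly, which is why agreement on the leading candidates can coexist with coefficients somewhat below one.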

To provide preliminary evidence of robustness prior to the dedicated sensitivity study, a set of targeted methodological tests was performed, addressing three key choices: defuzzification, normalization, and the TOPSIS distance metric. First, the defuzzification method proposed in Equation (1) was replaced by the best non-fuzzy performance-based defuzzification approach [44]. The resulting rankings proved robust, with correlation coefficients between the rankings obtained through the different defuzzification methods exceeding 0.99 in all cases. Second, to test sensitivity to normalization, the original procedures for fuzzy statistical variance and fuzzy CRITIC were replaced by linear max–min normalization and simple linear normalization [55], respectively. The rankings obtained with these alternative procedures yield correlation coefficients greater than 0.97 in all cases when compared to those obtained with the original normalization techniques. Third, to assess the sensitivity of the results to the choice of distance metric, the standard TOPSIS implementation using Euclidean distance [59] was compared with an alternative formulation proposed by Tran and Duckstein [60]. The resulting rankings were highly consistent: for every weighting method, the correlation coefficient between the rankings obtained with the original TOPSIS implementation and those obtained with the alternative metric exceeded 0.99, confirming the robustness of the implementation to this methodological variation. In addition, the ranking of the top-performing candidates remained invariant across all tested methodological variations.

4.3. Sensitivity Analysis

To conclude the analysis of the prioritized results obtained through the combination of MCDM methods, it is advisable to perform a sensitivity analysis to evaluate how slight deviations in the calculated weights may affect the ranking of alternatives. Traditionally, such analyses involve a series of simulations in which the weight of the most influential criterion is modified while adjusting the remaining weights proportionally [84]. However, this approach leads to a constrained weight distribution that does not comprehensively explore the space of possible weight combinations. To address this limitation, this study introduces a novel sensitivity analysis technique based on random sampling of weight combinations in the vicinity of a reference weight vector. This sampling is conducted using a Dirichlet distribution centered on the defuzzified average weights of each criterion shown in Table 5.

Although readers interested in the mathematical formulation of the Dirichlet distribution can refer to the specialized literature [85], in essence, the Dirichlet distribution generates the components of the weight vector, $\mathbf{w} = (w_1, \ldots, w_n)$, according to a set of parameters $\boldsymbol{\alpha} = (\alpha_1, \ldots, \alpha_n)$. By expressing $\alpha_i$, where $i = 1, \ldots, n$, as $\alpha_i = \lambda \bar{w}_i$, a distribution centered around the reference weights $\bar{w}_i$ with the dispersion controlled by the parameter $\lambda$ is obtained.

It should be emphasized that this approach not only ensures that the sampled weight vectors remain focused within the region of interest defined by the reference weights but also guarantees that the sum of the weights in each simulation equals one, maintaining consistency in the decision making framework.
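The sampling scheme can be sketched with NumPy's Dirichlet generator. This is a minimal illustration, assuming that the Dirichlet parameters are obtained by multiplying the reference weights by the dispersion parameter; the function name `sample_weights`, the argument name `concentration`, and the reference weights are hypothetical and do not reproduce Table 5.

```python
import numpy as np

def sample_weights(reference, concentration, n_samples, seed=0):
    """Draw weight vectors from a Dirichlet distribution centered on `reference`.

    Assumed parameterization: alpha_i = concentration * reference_i, so the
    mean of the distribution equals the (normalized) reference weights and a
    larger `concentration` yields samples closer to them.
    """
    reference = np.asarray(reference, dtype=float)
    reference = reference / reference.sum()
    alpha = concentration * reference
    rng = np.random.default_rng(seed)
    return rng.dirichlet(alpha, size=n_samples)

# Hypothetical reference weights for five criteria.
reference = np.array([0.30, 0.25, 0.20, 0.15, 0.10])

# 10,000 simulations for each dispersion value, as in the analysis above.
samples = {c: sample_weights(reference, c, 10_000) for c in (10, 100, 1000)}

# Mean standard deviation of the sampled weights for each dispersion value.
spread = {c: s.std(axis=0).mean() for c, s in samples.items()}
```

Every sampled row is non-negative and sums to one by construction, so no renormalization step is needed, and the spread around the reference weights shrinks as the dispersion parameter grows.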

Thus, to conduct a robust sensitivity analysis, different values of the dispersion parameter were evaluated. For each value, 10,000 simulations were performed, each generating a different weight combination around the reference weight vector. The distribution of simulated weights around the reference values for each value of the dispersion parameter is shown in Table 7.

In each simulation, the sampled weight vector was combined with the defuzzified decision matrix using a normalized additive weighting model. Based on the outcomes of all simulations for each value of the dispersion parameter, two metrics were derived: the random sampling average performance and the dominance percentage [86].
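The two metrics can be sketched as follows. The normalization shown here (linear max–min, inverted for cost criteria) is an assumption, since the text only specifies a normalized additive weighting model; the decision matrix, the benefit/cost flags, and the reference weights are likewise hypothetical.

```python
import numpy as np

def normalize(matrix, benefit):
    """Linear max-min normalization; cost criteria are inverted (assumed scheme)."""
    matrix = np.asarray(matrix, dtype=float)
    lo, hi = matrix.min(axis=0), matrix.max(axis=0)
    scaled = (matrix - lo) / (hi - lo)
    return np.where(benefit, scaled, 1.0 - scaled)

def sensitivity_metrics(matrix, benefit, weight_samples):
    """Return (average performance, dominance percentage) per alternative."""
    # scores[s, a] = additive weighted score of alternative a in simulation s
    scores = weight_samples @ normalize(matrix, benefit).T
    average_performance = scores.mean(axis=0)
    winners = scores.argmax(axis=1)
    counts = np.bincount(winners, minlength=scores.shape[1])
    dominance = 100.0 * counts / len(scores)
    return average_performance, dominance

# Hypothetical crisp decision matrix: 4 alternatives x 3 criteria.
matrix = np.array([
    [0.9, 0.8, 0.3],
    [0.7, 0.9, 0.2],
    [0.4, 0.5, 0.1],
    [0.2, 0.3, 0.6],
])
benefit = np.array([True, True, False])  # third criterion is a cost

# Hypothetical reference weights and Dirichlet samples around them.
reference = np.array([0.5, 0.3, 0.2])
rng = np.random.default_rng(0)
weight_samples = rng.dirichlet(50 * reference, size=10_000)

avg, dominance = sensitivity_metrics(matrix, benefit, weight_samples)
```

The average performance summarizes how an alternative scores across all sampled weight vectors, while the dominance percentage counts only the simulations in which it finishes first, so the two metrics can order the alternatives differently.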

The first metric, the random sampling average performance across the simulations, serves as a validation measure for the rankings produced by the different MCDM method combinations. Candidates exhibiting high average performance values across simulations should correspond to those achieving top rankings in the method evaluations, while low average performance values should align with poorly ranked alternatives. Figure 5 presents these results for the various values of the dispersion parameter tested.

The results presented in Figure 5 yield several key observations. First, the trend in random sampling average performance closely aligns with the average results from the proposed MCDM methods, confirming the robustness of the MCDM-derived rankings. Second, the slope of the random sampling average performance curve flattens for lower-ranked asteroids, indicating that the top-performing candidates demonstrate markedly superior performance compared to the remainder. Third, as expected, the dispersion parameter shows no significant influence on the random sampling average performance metric.

The second metric, the dominance percentage, quantifies the frequency with which each alternative attained the top ranking position across all simulated scenarios. A higher dominance percentage directly correlates with greater ranking stability for the top-performing candidate around the reference weights, indicating stronger robustness to weight variations. Table 8 presents this metric for the five asteroids exhibiting the highest average performance values.

Based on the data presented in Table 8, the following conclusions can be drawn. First, the dispersion parameter has a significant influence on the dominance percentage metric. For small values of this parameter, there is a certain proportion of weight combinations under which alternatives with a lower random sampling average performance than 2013 NJ are able to dominate the ranking. As expected, as the parameter increases, the weights become more concentrated around the reference weights, and dominance tends to converge toward the best-performing candidate. However, it is worth noting that the leading position of the candidate 2013 NJ is remarkably stable, as it consistently ranks highest in both average performance and dominance percentage across the different parameter values tested.

Second, it is noteworthy that asteroids such as 1999 FN19 and 1994 CJ1 exhibit a higher dominance percentage than 2001 EC16, despite receiving a lower overall ranking based on the combined results of the MCDM methods and the random sampling average performance. This phenomenon should not be interpreted as a lack of robustness in the ranking obtained, but rather as an indication that if decision makers were to shift the weight allocation in specific directions, the ranking would change in favor of the candidates with higher dominance percentages.

Thus, although 2001 EC16 shows high overall performance on average, the individual criterion-specific performances of other candidates tend to be stronger, often relegating 2001 EC16 to second place. For instance, if decision makers were to assign greater importance to the capture cost criterion, the ranking would likely be dominated by 1999 FN19. Conversely, if more weight were placed on the number of similar candidates, 1994 CJ1 would tend to dominate, overshadowing 2001 EC16 despite its high global performance. However, when 2013 NJ is removed from the ranking and the dominance percentages are recalculated, 2001 EC16 achieves dominance percentages of 65.81%, 95.39%, and 100% for dispersion parameter values of 10, 100, and 1000, respectively. This reinforces the idea that the random sampling average performance remains a suitable metric for validating the obtained rankings, while the dominance percentage provides a useful indication of which candidates are most likely to take the top position if deviations from the reference weights occur.

Finally, it is worth noting that an alternative way of selecting the dispersion parameter involves progressively increasing its value and simulating the resulting rankings until they stabilize, particularly with regard to the top-performing candidates. Once such a value is identified, it can be accepted by the analyst if the trade-off between the dominance percentage of the top-ranked alternative and the average percentage variation of the simulated weights is deemed satisfactory. As an example, in this study, all integer values of the parameter within the interval [1, 1000] were explored, and it was found that the rankings stabilized from a value of around 40 onwards. For this value, the variation introduced by the simulations was sufficient, as the mean variation of the simulated criterion weights exceeded the average variation observed among the original weighting methods in all cases and, furthermore, 2013 NJ exhibited an acceptably high dominance (77.67%). Therefore, selecting a value around 40 may be considered acceptable for the purpose of sensitivity analysis in this work.

As an additional robustness check, an alternative sensitivity analysis was performed using normal distributions centered on the mean weight [86], with a standard deviation equal to that observed across the three weighting methods. The results of this analysis were consistent with those obtained in the primary evaluation, further reinforcing the stability of the proposed approach.
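A sketch of this alternative sampling is given below. The text does not describe how negative draws or the unit-sum constraint were handled, so the clipping and renormalization steps, the use of the sample standard deviation, and the function name `sample_normal_weights` are all assumptions; the weight values are hypothetical.

```python
import numpy as np

def sample_normal_weights(method_weights, n_samples, seed=0):
    """Sample weights from independent normals centered on the mean weights.

    Assumptions (not specified in the text): the standard deviation is the
    sample standard deviation across methods, negative draws are clipped to
    a small positive value, and each vector is rescaled to sum to one.
    """
    W = np.asarray(method_weights, dtype=float)  # (methods, criteria)
    mean = W.mean(axis=0)
    std = W.std(axis=0, ddof=1)
    rng = np.random.default_rng(seed)
    draws = rng.normal(mean, std, size=(n_samples, W.shape[1]))
    draws = np.clip(draws, 1e-12, None)
    return draws / draws.sum(axis=1, keepdims=True)

# Hypothetical defuzzified weights from three weighting methods.
method_weights = np.array([
    [0.30, 0.25, 0.20, 0.15, 0.10],
    [0.28, 0.22, 0.24, 0.14, 0.12],
    [0.22, 0.26, 0.18, 0.20, 0.14],
])

normal_samples = sample_normal_weights(method_weights, 10_000)
mean_weights = method_weights.mean(axis=0)
```

Unlike Dirichlet sampling, independent normal draws do not sum to one on their own, which is why an explicit rescaling step is included in this sketch.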

In conclusion, the sensitivity analysis applied to this problem indicates not only that the ranking trends produced by the proposed combination of MCDM methodologies are coherent and satisfactory but also that the resulting classification is highly robust with respect to deviations around the calculated weights.

4.4. Limitations and Future Work

To conclude this section, it is important to remark that future work should consider extending the framework as new data become available. For example, a key limitation of this study is that rotation rates are currently known for only 4.46% of candidate asteroids, significantly restricting the final candidate selection. As observational campaigns progressively expand this dataset, the methodological framework presented here could be applied to a larger, more comprehensive pool of potential targets.

Furthermore, future research could enhance this decision making framework by integrating spectral or taxonomic classifications as data coverage improves, or by developing hybrid approaches that combine expert elicitation (subjective weights) with existing objective fuzzy methods.

Although the proposed fuzzy multi-criteria decision making approach provides a robust framework for preliminary asteroid prioritization using established accessibility metrics, we acknowledge that the current model’s scope does not fully capture potential mission design constraints that emerge during more detailed mission planning [87,88]. Specifically, Earth-departure energy limits ($C_3$), launch-window dynamics, mass-return efficiency, and rotation axis orientation were not incorporated into this model. The absence of a launch-window criterion may underestimate the importance of time frame in mission design, while the exclusion of payload mass could mask important trade-offs between sample return mass and trajectory design. Furthermore, while rotation rate provides a useful preliminary metric, it neglects important factors such as spin-axis orientation and libration modes, which significantly affect the cost and complexity of proximity operations. These omissions stem from the focus on high-level assessment during the initial screening phase but warrant further investigation to enhance practical applicability. For these reasons, in future work, trajectory simulation tools for launch-window and $C_3$ analysis, as well as a mass-return metric, will be implemented to support more detailed operational feasibility assessments. By coupling our systematic MCDM framework with these mission-design considerations, it would be possible to deliver a comprehensive selection tool that bridges theoretical accessibility with practical implementation requirements.

Moreover, it is worth highlighting that the ranking methods used in this study belong to the class of compensatory methods [14]. These methods offer the advantage of being more intuitive, as they combine the performance across different criteria into a single representative index used to produce the final ranking. However, they present certain limitations. Specifically, they do not guard against situations in which a poor result in one evaluation criterion is offset by an excellent result in another, which may mask critical weaknesses or generate rankings that are less influenced by the criteria with greater weights [44]. Moreover, to generate an aggregated metric that produces a final ranking, these methods require a defuzzification procedure in the final stages to obtain a crisp ranking.

These limitations suggest that future work should explore a comparison between the results obtained using compensatory methods and those derived from the implementation of fuzzy outranking methods such as ELECTRE [89] and PROMETHEE [90], which allow the uncertainty contained in the weights and performance values to be preserved until the final stages of the classification process [91]. Nevertheless, the use of such methods also entails a series of disadvantages. First, the results produced by these approaches are not always conclusive, often requiring additional techniques to derive a complete ranking of the candidates. Second, it is necessary to define a set of preference or veto thresholds for each criterion, which would require expert input and add subjectivity to the decision model. Finally, these methods tend to be less interpretable, due to the complex interactions formulated between the criteria [14].

Finally, the modular design of this MCDM system will allow decision makers and designers to expand and adapt this methodology to support broader aerospace decision making under uncertainty, or to apply this fuzzy MCDM framework to other NEA mission types (e.g., observation campaign target selection or complete capture missions) or to different small-body populations (e.g., main-belt asteroids and comets). In addition, this modularity opens up the opportunity for future work to build upon the results obtained within the proposed framework in order to integrate machine learning-based MCDM methods, enabling the development of classification models capable of predicting the evaluation of new candidates as more asteroid data become available.