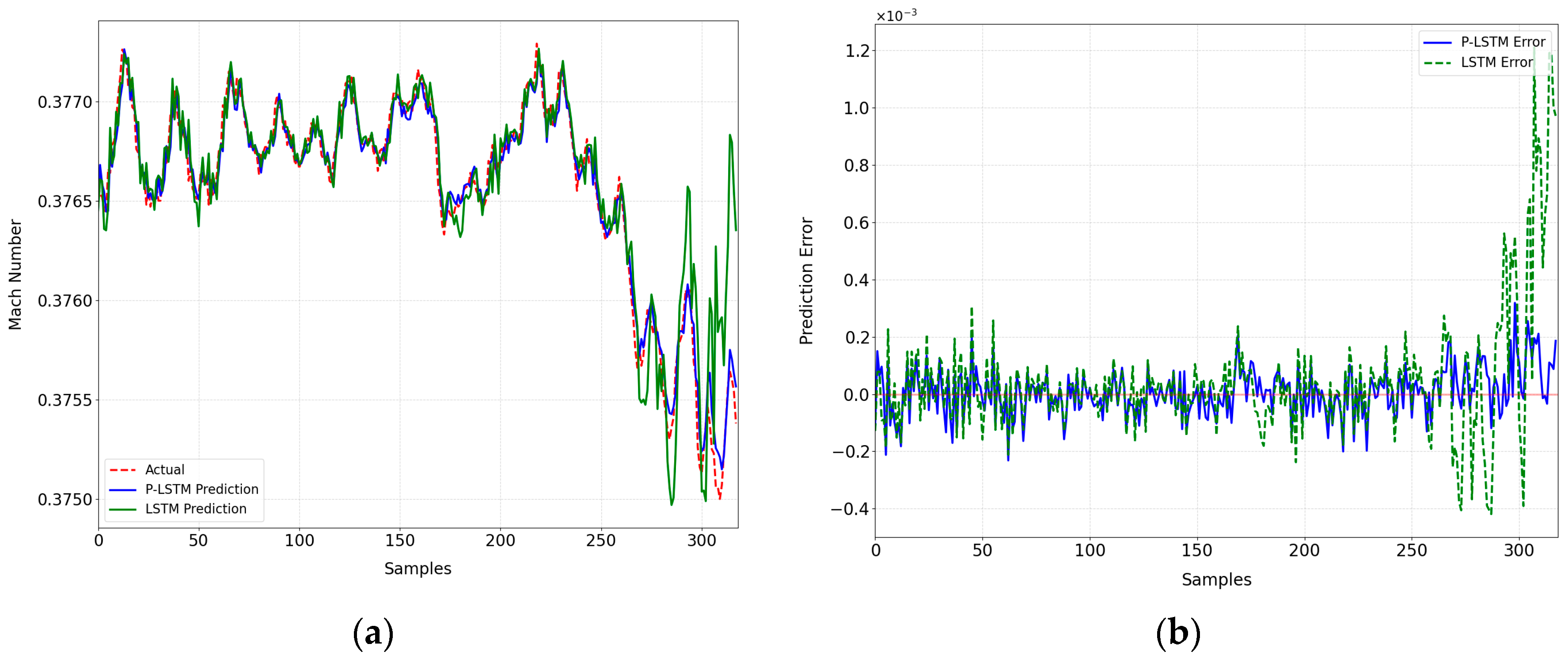

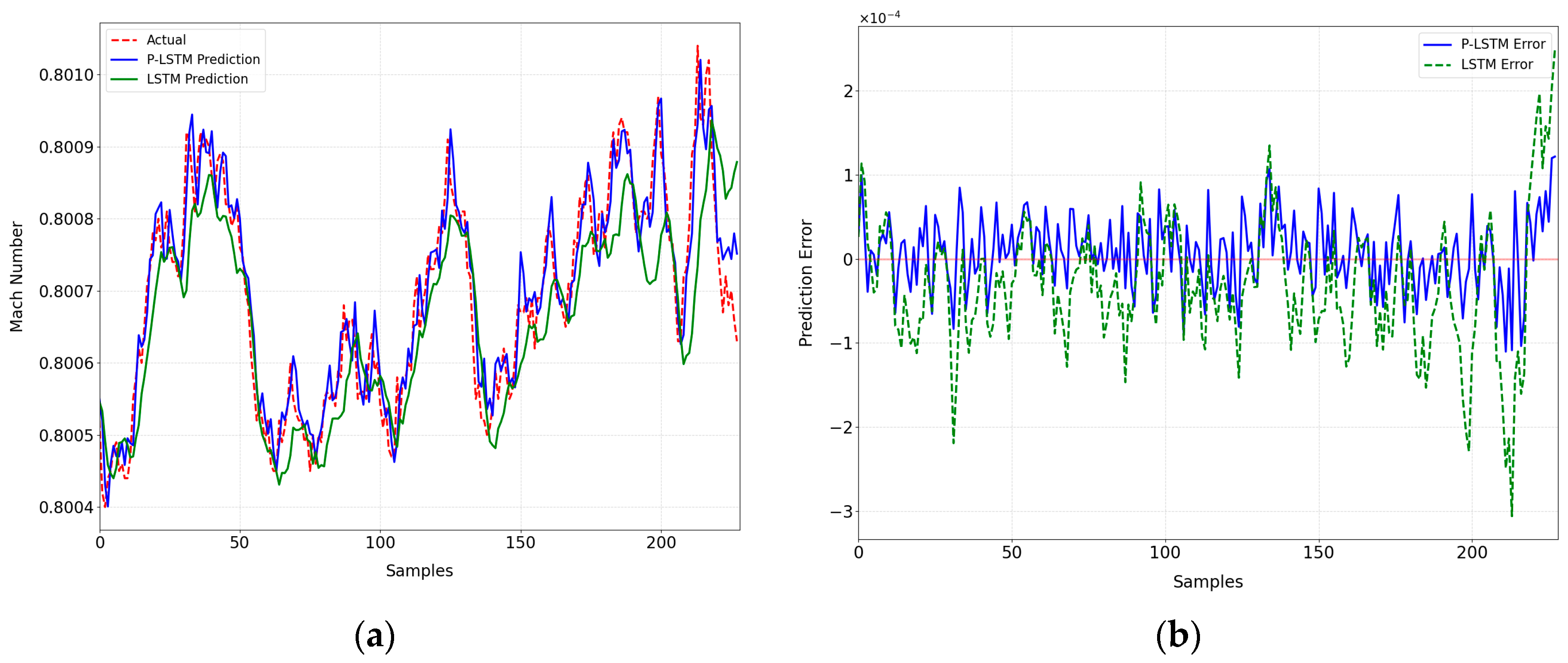

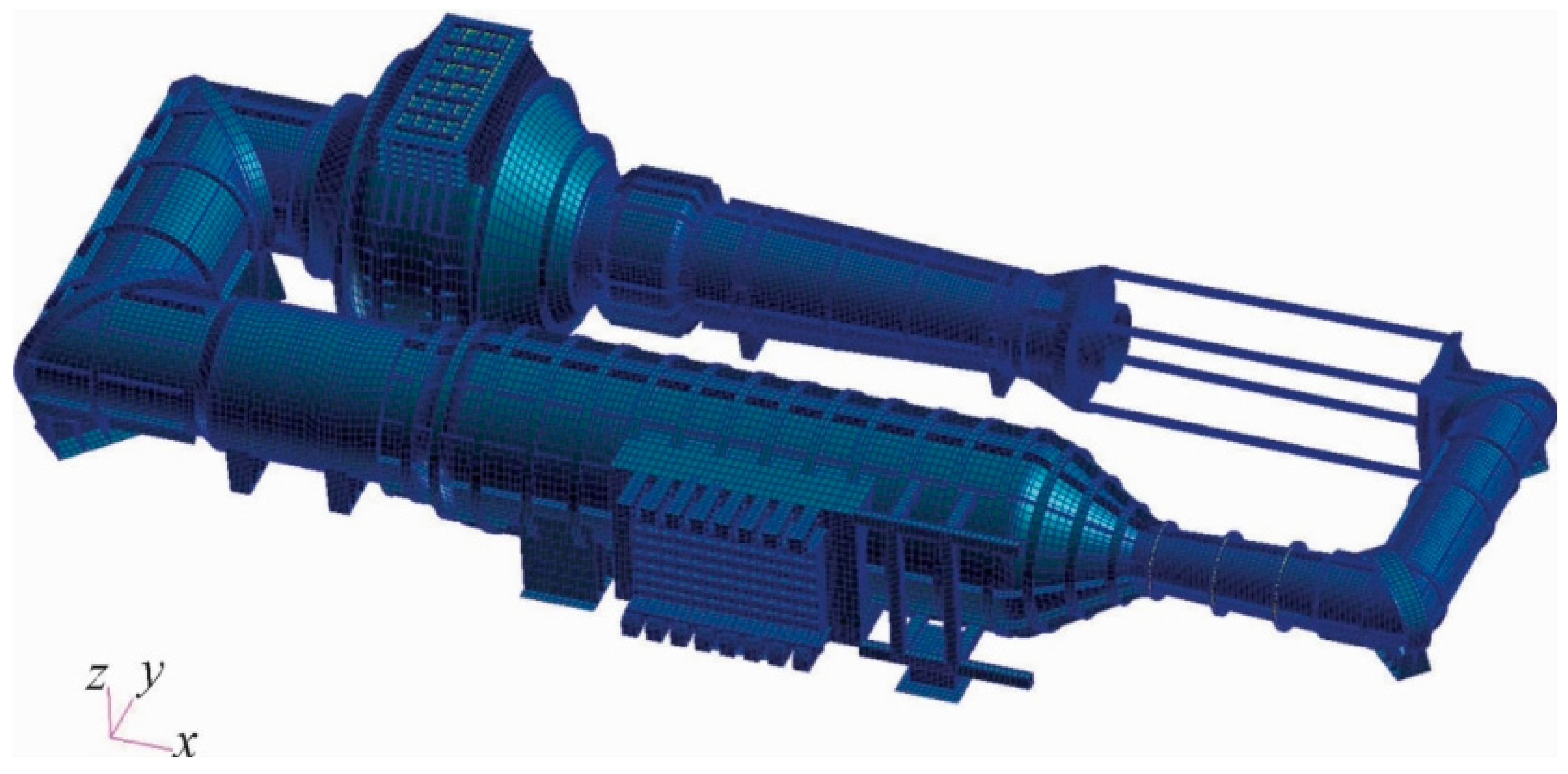

In the study of Mach number prediction in a 0.6 m continuous wind tunnel (CWT), this paper first determines the input and output parameters of the model. Then, because the traditional LSTM model has limited ability to capture the characteristics of the Mach number sequence, a P-LSTM model is proposed that integrates a physical loss function into a two-layer LSTM architecture. Finally, three evaluation metrics are used to verify the prediction accuracy of the model.

3.1.1. Determine Inputs and Outputs

As mentioned above, the core objective of a wind tunnel control system is stable control of the Mach number. In most studies, the Mach number is used as the model's output variable to illustrate the fitting performance. This study focuses on characterizing the CWT Mach number, which is therefore set as the target (predicted) variable. Because the Mach number evolves dynamically, its current state is strongly correlated in time with its future values, which provides an important basis for the modeling and control strategy design in this study.

The operating conditions of a CWT are governed by the coupling of multiple variables, and dynamic changes in each parameter directly affect the output of the model. Analysis of the aerodynamic structure and operating sequence of the wind tunnel shows that the main control variable is the fan speed (Fp), while the model angle of attack (An) acts as a disturbance. For the flow field system, the Mach number (Ma) of the test section is the core controlled variable, and its dynamic characteristics are governed mainly by the coupling between the total pressure (P0) in the stabilization section and the static pressure (P) in the test section. In this experiment, the temperature range was small and the measurement accuracy was limited, so temperature is not included as a model input at this stage. The physical meaning of each variable is as follows:

- (1) Mach number (Ma):

The Mach number is a core dimensionless parameter in fluid dynamics, defined as the ratio of the local flow velocity to the local speed of sound in the medium. It is named in honor of the Austrian physicist Ernst Mach. In a wind tunnel, the Mach number is the primary control parameter used to set the test conditions: by precisely adjusting the airflow velocity, the flow field experienced by an aircraft at different flight speeds can be reproduced.

- (2) Fan speed (Fp):

In a continuous wind tunnel, the fan is one of the core drive components: it draws in air and creates a directed airflow that is conveyed through the ducts to the test section. The fan's rotational speed directly affects the velocity and stability of the airflow in the tunnel; the higher the rotational speed, the stronger the driving capability and the higher the airflow velocity achievable in the test section. Therefore, by adjusting the fan speed, the airflow velocity in the wind tunnel can be precisely controlled to meet the requirements of different test conditions.

- (3) Model angle of attack (An):

In wind tunnel tests, the angle of attack is defined as the angle between the model's reference axis and the freestream velocity vector. In a variable angle of attack test, the angle of attack is adjusted step by step after the Mach number has stabilized in order to evaluate the aerodynamic characteristics; this inevitably perturbs the flow field and makes it difficult to keep the Mach number stable. Research indicates that as the angle of attack increases from a negative value to zero, the Mach number of the flow tends to rise, and as it increases further from zero to positive values, the Mach number decreases correspondingly [22]. However, this trend typically applies to models without horizontal-plane symmetry; for models with horizontal-plane symmetry, the relationship does not hold.

- (4) Total pressure (P0) and static pressure (P):

The Mach number is significantly influenced by both the total pressure and the static pressure.

Figure 3 illustrates the relationship between total pressure and static pressure. The total pressure is defined as the pressure the gas would reach if the flow were brought to rest isentropically (adiabatically and reversibly). In low-speed, effectively incompressible flow it is approximately equal to the sum of the static and dynamic pressures; at higher Mach numbers it exceeds that sum because of compressibility. It is usually measured in the stabilization section of the wind tunnel. The static pressure is the pressure exerted by the moving fluid on a surface parallel to the flow (that is, acting perpendicular to the flow direction), and it is measured in the test section. Both are closely coupled with the Mach number: the total pressure can be adjusted independently, but any fluctuation in it directly changes the Mach number, and vice versa; when the total pressure is held constant, the static pressure becomes the dominant parameter governing the Mach number.
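To make the compressibility point concrete, the sketch below compares the isentropic total-to-static pressure ratio with the incompressible estimate $(p + \tfrac{1}{2}\rho u^2)/p = 1 + \tfrac{\gamma}{2}Ma^2$, which follows from $\rho u^2 = \gamma p\,Ma^2$ for a perfect gas. It assumes $\gamma = 1.4$ for air; the function names are illustrative only.

```python
"""Compare isentropic and incompressible estimates of the total/static pressure ratio.

Illustrative sketch; assumes air with gamma = 1.4.
"""

GAMMA = 1.4


def p0_over_p_isentropic(mach: float, gamma: float = GAMMA) -> float:
    """Isentropic ratio p0/p = (1 + (gamma-1)/2 * Ma^2)^(gamma/(gamma-1))."""
    return (1.0 + 0.5 * (gamma - 1.0) * mach**2) ** (gamma / (gamma - 1.0))


def p0_over_p_incompressible(mach: float, gamma: float = GAMMA) -> float:
    """(p + 0.5*rho*u^2)/p, using rho*u^2 = gamma*p*Ma^2 for a perfect gas."""
    return 1.0 + 0.5 * gamma * mach**2


if __name__ == "__main__":
    for ma in (0.1, 0.3, 0.6, 0.8):
        exact = p0_over_p_isentropic(ma)
        approx = p0_over_p_incompressible(ma)
        # relative amount by which p + q underestimates p0
        print(f"Ma={ma:.1f}  p0/p={exact:.4f}  (p+q)/p={approx:.4f}  "
              f"gap={(exact - approx) / exact:.2%}")
```

The two estimates agree closely at low Mach numbers, but at Ma = 0.8 the sum $p + q$ underestimates $p_0$ by about 5%, which is why the isentropic relation is the appropriate one at the transonic speeds of a CWT.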

Figure 3. Schematic diagram of the physical significance of total pressure and static pressure.


3.1.2. Long Short-Term Memory Networks (LSTM)

Mach number data exhibit significant time-series dynamics: their evolution depends not only on the current state of the system but also on its history, implying complex long-term dependencies. Modeling them therefore requires methods that can handle long sequences and capture long-term dependencies.

LSTM networks excel at sequence-to-sequence modeling. Their recurrent connections carry historical information forward as the sequence is processed, which allows them to use what they have learned from earlier parts of the sequence to predict the next state.

Figure 4 illustrates a simplified LSTM network architecture. A standard LSTM network consists of three core components: an input layer, one or more hidden (LSTM) layers, and an output layer. Although “LSTM” technically describes the entire network, the term is commonly used for individual layers or even the memory cells within them. The network processes sequential data by mapping the input at time step τ to a corresponding output at the same time step (τ = 1, 2, ..., m), which makes it well suited to sequence-to-sequence tasks. Both the input and output data must be formatted as three-dimensional tensors: dimension 1 is the time dimension, giving the sequence length; dimension 2 is the batch dimension, giving the batch size (the number of sequences processed together); and dimension 3 is the feature dimension, containing the input or output feature vectors.
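The time-major (time, batch, feature) layout described here can be produced from a recorded multivariate series with a sliding window. The pure-Python sketch below is illustrative only: the feature order (Ma, Fp, An, P0, P), the one-step-ahead target, and the helper names are assumptions, since the windowing procedure is not specified at this point.

```python
"""Build time-major (time, batch, feature) windows from a multivariate series.

Illustrative sketch: the feature order and window length are assumptions,
not values taken from the paper.
"""
from typing import List, Sequence

# Hypothetical column order for each time step of the recorded data.
FEATURES = ("Ma", "Fp", "An", "P0", "P")


def make_windows(series: Sequence[Sequence[float]], window: int) -> List[List[List[float]]]:
    """Return a nested list indexed as tensor[tau][b][k].

    tau: position inside the window (time dimension, length `window`)
    b:   window index (batch dimension, one window per start position
         that still has a following target step)
    k:   feature index (feature dimension)
    Window b covers rows b .. b + window - 1 of `series`.
    """
    batch = len(series) - window
    if window <= 0 or batch <= 0:
        raise ValueError("need 0 < window < len(series)")
    return [[list(series[b + tau]) for b in range(batch)] for tau in range(window)]


def next_step_targets(series: Sequence[Sequence[float]], window: int,
                      target: int = 0) -> List[float]:
    """Value of feature `target` (default: Ma) at the step right after each window."""
    return [series[b + window][target] for b in range(len(series) - window)]
```

For a series of 6 time steps with 5 features and a window of 3, `make_windows` returns a 3 × 3 × 5 nested list and `next_step_targets` returns the three Mach numbers that follow each window.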

The LSTM network is composed of multiple LSTM cells [23]. At the heart of an LSTM cell lies the cell state, which acts as the main path along which information flows across time steps, with only minor, controlled interactions. Four components, namely the forget gate, the input gate, the hyperbolic tangent layer (often called the candidate state), and the output gate, work together to regulate this flow, allowing the network to retain or discard information as needed. Together with the recurrent connection, these gates enable the LSTM to capture intricate, time-dependent patterns in the data. The forget gate removes unnecessary information, the input gate and hyperbolic tangent layer filter the new input, and the output gate regulates the output.

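Figure 5 illustrates a single LSTM cell. In the $l$th LSTM layer, the operation of each gate is given by Equations (1)–(6), with corresponding weights $W_\alpha^{(l)}$ and biases $b_\alpha^{(l)}$, where $\alpha \in \{f, i, c, o\}$ denotes the forget gate, the input gate, the hyperbolic tangent layer, and the output gate, respectively. The input is $x_t^{(l)}$, the forget gate output is $f_t^{(l)}$, the input gate output is $i_t^{(l)}$, the hyperbolic tangent layer output is $\tilde{c}_t^{(l)}$, the output gate output is $o_t^{(l)}$, the cell state is $c_t^{(l)}$, and the hidden state output is $h_t^{(l)}$. For the first layer, $x_t^{(1)}$ is the input feature vector; for deeper layers, $x_t^{(l)} = h_t^{(l-1)}$.

$$f_t^{(l)} = \sigma\left(W_f^{(l)}\left[h_{t-1}^{(l)},\, x_t^{(l)}\right] + b_f^{(l)}\right) \tag{1}$$

$$i_t^{(l)} = \sigma\left(W_i^{(l)}\left[h_{t-1}^{(l)},\, x_t^{(l)}\right] + b_i^{(l)}\right) \tag{2}$$

$$\tilde{c}_t^{(l)} = \tanh\left(W_c^{(l)}\left[h_{t-1}^{(l)},\, x_t^{(l)}\right] + b_c^{(l)}\right) \tag{3}$$

$$o_t^{(l)} = \sigma\left(W_o^{(l)}\left[h_{t-1}^{(l)},\, x_t^{(l)}\right] + b_o^{(l)}\right) \tag{4}$$

$$c_t^{(l)} = f_t^{(l)} \odot c_{t-1}^{(l)} + i_t^{(l)} \odot \tilde{c}_t^{(l)} \tag{5}$$

$$h_t^{(l)} = o_t^{(l)} \odot \tanh\left(c_t^{(l)}\right) \tag{6}$$

where $\sigma$ is the logistic sigmoid function, whose output lies between 0 and 1 and is often interpreted as a probability or weight; $\tanh$ is the hyperbolic tangent function, which maps its input to the interval between −1 and 1 and thereby normalizes the information; and $\odot$ denotes the Hadamard product, i.e., the element-wise multiplication of matrices or vectors.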
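The gate operations can be traced step by step in code. The following pure-Python sketch computes one time step of a single LSTM cell, assuming that each gate's weight matrix acts on the concatenation of the previous hidden state and the current input; the dictionary layout of the parameters is a hypothetical choice for illustration, and a practical model would use a deep learning framework instead.

```python
"""One time step of an LSTM cell (forget, input, candidate, output gates).

Illustrative sketch in pure Python; the parameter layout is an assumption.
"""
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(W: Matrix, b: Sequence[float], v: Sequence[float]) -> Vector:
    """Compute W @ v + b for a (rows x len(v)) matrix stored as nested lists."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_cell_step(x_t: Sequence[float], h_prev: Sequence[float], c_prev: Sequence[float],
                   W: Dict[str, Matrix], b: Dict[str, Vector]) -> Tuple[Vector, Vector]:
    """Return (h_t, c_t). W[k], b[k] for k in 'f', 'i', 'c', 'o' act on [h_prev, x_t]."""
    z = list(h_prev) + list(x_t)                                  # concatenation [h_{t-1}, x_t]
    f = [sigmoid(v) for v in affine(W["f"], b["f"], z)]           # forget gate
    i = [sigmoid(v) for v in affine(W["i"], b["i"], z)]           # input gate
    c_tilde = [math.tanh(v) for v in affine(W["c"], b["c"], z)]   # candidate (tanh layer)
    o = [sigmoid(v) for v in affine(W["o"], b["o"], z)]           # output gate
    # Cell state: keep part of the old memory and add part of the candidate (Hadamard products).
    c_t = [fk * cpk + ik * ctk for fk, cpk, ik, ctk in zip(f, c_prev, i, c_tilde)]
    # Hidden state: output gate applied to the squashed cell state.
    h_t = [ok * math.tanh(ck) for ok, ck in zip(o, c_t)]
    return h_t, c_t
```

In a stacked model such as the two-layer P-LSTM, the hidden state returned by the first layer's cell at each step would be fed as the input `x_t` of the second layer's cell.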

As a key parameter in fluid dynamics, the Mach number measured in a wind tunnel forms a nonstationary, highly nonlinear time series, owing to fluctuations in operating conditions, coupling with historical states, and noise. The LSTM is well suited to such data because of its gated recurrent architecture: the forget gate filters historical information and retains trend features, the input gate and hyperbolic tangent layer work together to select new features and update the cell state to capture dynamic changes, and the output gate combines historical and current information to produce a hidden state that carries long-term dependencies. Compared with traditional models, the LSTM's gating mechanism can model the dynamics of the Mach number series more accurately, which supports high-precision prediction.

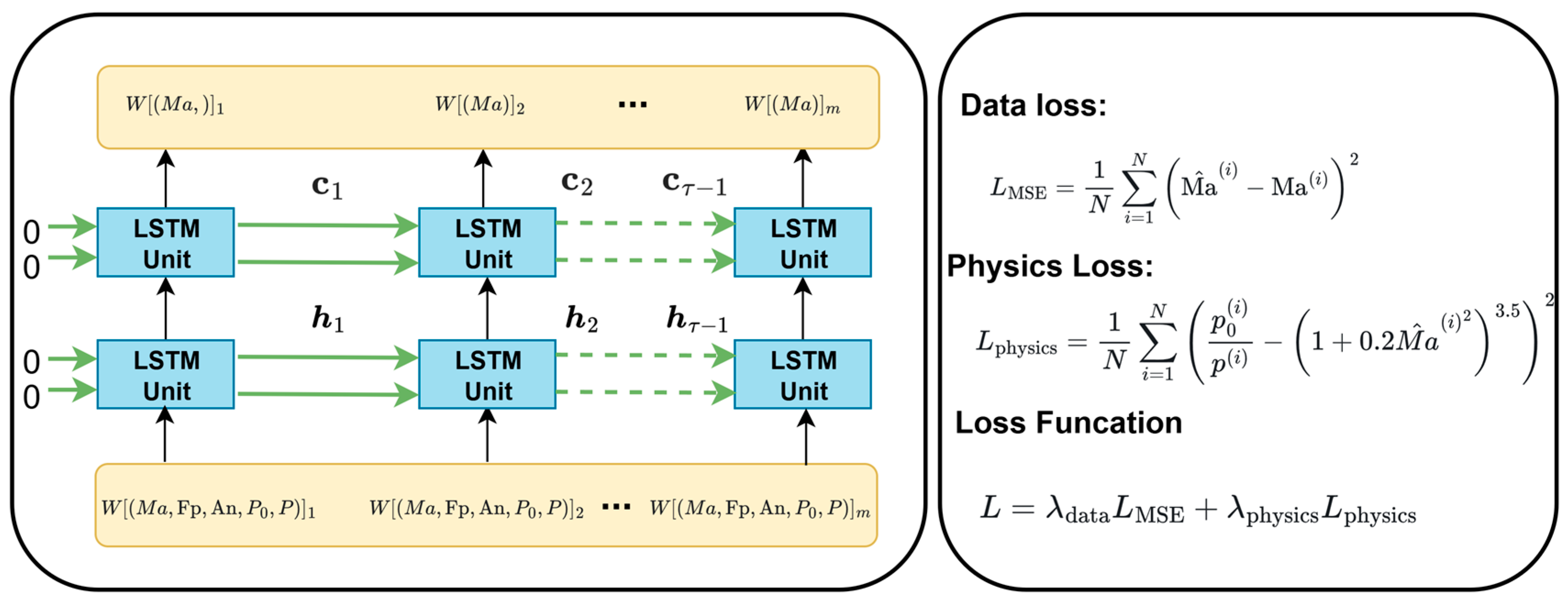

3.1.3. Physical Information-Based Long Short-Term Memory Network Model (P-LSTM)

Nonetheless, the conventional LSTM model has notable limitations. First, because it is purely data-driven, it does not capture the underlying physical laws, so its predictions have low interpretability and may be biased when physical constraints are ignored. Second, it has no explicit mechanism for incorporating physical prior knowledge and cannot bring the strengths of theoretical fluid dynamics models into the training process, which limits its ability to generalize under complex operating conditions. To address these limitations, this paper proposes a novel model, termed P-LSTM, that integrates physical constraints into the deep learning architecture.

The proposed P-LSTM architecture extends the traditional LSTM model by incorporating physical laws into the model [24]. Compared with a purely data-driven time-series model, this can significantly improve prediction accuracy, interpretability, and generalization ability. The underlying network is a conventional LSTM. Figure 6 shows the overall P-LSTM framework. Its distinguishing feature is that the weights and biases of the LSTM network are constrained during training by a physical loss function, which keeps the predictions consistent with the fundamental laws of gas dynamics and improves interpretability. Because the network infrastructure is otherwise the same as that of a conventional LSTM, P-LSTM retains its ability to model time-series data efficiently.

The P-LSTM model consists of two stacked LSTM layers, which facilitates the extraction of long-term dependencies and complex dynamic patterns in the data. The first LSTM layer takes the standardized historical data (Mach number, fan speed, angle of attack, total pressure, and static pressure) as input and processes the temporal information through its gating mechanism. It produces hidden and cell states, and its output, carrying the intermediate features, is passed to the second layer. The output of the second LSTM layer is mapped to a single output node that gives the predicted Mach number.
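The text states that the inputs are standardized but not how. The sketch below assumes z-score standardization, with statistics fitted on the training data only and reused to map the predicted Mach number back to physical units; this is a common choice, not necessarily the authors' procedure, and the function names are hypothetical.

```python
"""Z-score standardization of the five model inputs (Ma, Fp, An, P0, P).

Illustrative sketch: statistics are fitted on training rows only (an assumption
about the workflow) and reused for validation/test data and for de-standardizing Ma.
"""
from statistics import fmean, pstdev
from typing import List, Sequence, Tuple


def fit_standardizer(rows: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-column mean and population standard deviation."""
    columns = list(zip(*rows))
    means = [fmean(col) for col in columns]
    # Guard against constant columns (e.g. a fixed angle of attack) to avoid division by zero.
    stds = [pstdev(col) or 1.0 for col in columns]
    return means, stds


def standardize(rows: Sequence[Sequence[float]], means: Sequence[float],
                stds: Sequence[float]) -> List[List[float]]:
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def destandardize(z: float, mean: float, std: float) -> float:
    """Map a standardized prediction (e.g. Ma) back to physical units."""
    return z * std + mean
```

Fitting the statistics on the training split alone avoids leaking information from the test data into the model inputs.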

In the P-LSTM framework, the loss combines a data-driven term and a physics-based term. The data term uses the mean squared error (MSE) to measure how far the model's predictions deviate from the measured values, as given by Equation (7):

$$L_{\text{data}} = \frac{1}{N}\sum_{i=1}^{N}\left(\widehat{Ma}_i - Ma_i\right)^2 \tag{7}$$

where $N$ is the total number of samples, $\widehat{Ma}_i$ is the model's Mach number prediction for the $i$th sample, and $Ma_i$ is the measured Mach number for that sample. This loss encourages the model to capture the temporal patterns and features in the data.
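As a small illustration of the data loss, the function below evaluates the mean squared error over a batch of predictions. It is plain Python for readability; in training, the same expression would be written with a framework's tensor operations so that gradients can flow back to the network. The function name is hypothetical.

```python
"""Data-driven loss: mean squared error of predicted vs. measured Mach numbers."""
from typing import Sequence


def data_loss(ma_pred: Sequence[float], ma_true: Sequence[float]) -> float:
    """Mean of squared prediction errors over N samples."""
    if len(ma_pred) != len(ma_true) or not ma_pred:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(ma_pred, ma_true)) / len(ma_pred)
```

For example, predictions of 0.80 and 0.62 against measurements of 0.78 and 0.60 give an error of 0.02 on each sample and an MSE of 0.0004.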

However, purely data-driven learning has limitations. The model may overfit noise in the data or produce predictions that violate physical laws under operating conditions not covered by the training samples. To address this issue, this paper introduces physical constraints based on the fundamental laws of gas dynamics, with the adiabatic flow equation serving as the core theoretical basis for these constraints. Below, the key formula for the ratio of total pressure to static pressure in adiabatic flow is derived from the basic properties of calorically perfect gases, providing the theoretical basis for the physical loss.

To study the flow of calorically perfect gases, it is first necessary to establish their equation of state and the relationships between the relevant thermodynamic parameters [25]. A calorically perfect gas satisfies the equation of state

$$p = \rho R T$$

where $p$ is the static pressure, $\rho$ is the density, $R$ is the gas constant, and $T$ is the static temperature.

Meanwhile, the specific heat at constant pressure $c_p$ and the specific heat ratio $\gamma$ are related by

$$c_p = \frac{\gamma R}{\gamma - 1}$$

Under steady, adiabatic, and inviscid flow conditions, energy is conserved along a streamline: for any two points 1 and 2 on the streamline, the sum of enthalpy and kinetic energy per unit mass remains constant, which gives the energy equation

$$h_1 + \frac{u_1^2}{2} = h_2 + \frac{u_2^2}{2}$$

where $h$ is the enthalpy and $u$ is the flow velocity.

Since the enthalpy of a calorically perfect gas can be expressed as $h = c_p T$, the energy equation becomes a relationship between temperature and flow velocity:

$$c_p T_1 + \frac{u_1^2}{2} = c_p T_2 + \frac{u_2^2}{2}$$

To relate the flow parameters to the stagnation state, the total temperature $T_0$ is defined as the temperature the flow would reach if it were decelerated adiabatically to zero velocity.

Based on this definition, setting the velocity at point 2 to $u_2 = 0$ in the energy equation makes the corresponding temperature the total temperature, $T_2 = T_0$. Dropping the subscript of the remaining point, the energy equation simplifies to

$$c_p T + \frac{u^2}{2} = c_p T_0$$

Rearranging gives the ratio of total temperature to static temperature directly:

$$\frac{T_0}{T} = 1 + \frac{u^2}{2 c_p T}$$

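To make the formula more practical, it is expressed in terms of the Mach number. Using the speed of sound $a = \sqrt{\gamma R T}$ and the definition of Mach number $Ma = u/a$, together with $c_p = \gamma R/(\gamma - 1)$, the second term becomes

$$\frac{u^2}{2 c_p T} = \frac{(\gamma - 1)\,u^2}{2 \gamma R T} = \frac{\gamma - 1}{2}\,\frac{u^2}{a^2} = \frac{\gamma - 1}{2}\,Ma^2$$

which yields the total temperature ratio formula:

$$\frac{T_0}{T} = 1 + \frac{\gamma - 1}{2}\,Ma^2$$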

This formula expresses the ratio of total temperature to static temperature in terms of the Mach number and the specific heat ratio. The total pressure $p_0$ is defined as the pressure the flow would reach if it were brought to rest isentropically. In an isentropic process, the pressure, density, and temperature of a calorically perfect gas are related by

$$\frac{p_0}{p} = \left(\frac{\rho_0}{\rho}\right)^{\gamma} = \left(\frac{T_0}{T}\right)^{\frac{\gamma}{\gamma - 1}}$$

Substituting the total temperature ratio formula into this isentropic pressure-temperature relationship connects the ratio of total pressure to static pressure with the Mach number. In summary, starting from the equation of state and the energy equation of a calorically perfect gas, first defining the total temperature and deriving its ratio to the static temperature, and then using the relationship between pressure and temperature in isentropic processes, the ratio of total pressure to static pressure in steady, adiabatic, and inviscid flow of a calorically perfect gas is obtained:

$$\frac{p_0}{p} = \left(1 + \frac{\gamma - 1}{2}\,Ma^2\right)^{\frac{\gamma}{\gamma - 1}}$$

This formula shows that the ratio of total pressure to static pressure depends only on the Mach number $Ma$ and the specific heat ratio $\gamma$ of the gas. This study uses $\gamma = 1.4$, the value widely adopted for air in engineering fields such as aerospace and fluid machinery. It reflects the thermodynamic properties of air in compressible flow well, so analyses and calculations based on this formula are consistent with actual flow conditions.
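The derivation can be checked numerically. The sketch below assumes $\gamma = 1.4$ and a gas constant for dry air of $R = 287.05\ \mathrm{J/(kg\cdot K)}$ (an assumed textbook value, not one stated in the paper). It computes $T_0$ directly from the energy equation with $h = c_p T$, compares the result with $1 + \tfrac{\gamma-1}{2}Ma^2$, and inverts the pressure-ratio formula to recover the Mach number from $p_0$ and $p$, which is how such a ratio is commonly used with measured pressures. All function names are illustrative.

```python
"""Numerical check of the total-temperature and total-pressure ratio formulas.

Assumed constants: gamma = 1.4 and R = 287.05 J/(kg K) for dry air.
"""
import math

GAMMA = 1.4
R_AIR = 287.05  # J/(kg K), assumed value for dry air


def cp(gamma: float = GAMMA, R: float = R_AIR) -> float:
    """Specific heat at constant pressure, c_p = gamma R / (gamma - 1)."""
    return gamma * R / (gamma - 1.0)


def t0_from_energy(T: float, u: float) -> float:
    """Energy equation with the second point at rest: c_p T + u^2/2 = c_p T0."""
    return T + u * u / (2.0 * cp())


def t0_over_t(mach: float, gamma: float = GAMMA) -> float:
    return 1.0 + 0.5 * (gamma - 1.0) * mach**2


def p0_over_p(mach: float, gamma: float = GAMMA) -> float:
    """Isentropic relation p0/p = (T0/T)^(gamma/(gamma-1))."""
    return t0_over_t(mach, gamma) ** (gamma / (gamma - 1.0))


def mach_from_pressures(p0: float, p: float, gamma: float = GAMMA) -> float:
    """Invert p0/p for Ma (valid for isentropic, shock-free flow)."""
    ratio = p0 / p
    if ratio < 1.0:
        raise ValueError("total pressure must not be below static pressure")
    return math.sqrt(2.0 / (gamma - 1.0) * (ratio ** ((gamma - 1.0) / gamma) - 1.0))


if __name__ == "__main__":
    T, mach = 288.15, 0.8                      # illustrative static temperature (K) and Mach number
    u = mach * math.sqrt(GAMMA * R_AIR * T)    # u = Ma * a, with a = sqrt(gamma R T)
    print(t0_from_energy(T, u) / T, t0_over_t(mach))          # agree up to rounding
    print(mach_from_pressures(p0_over_p(mach) * 9.0e4, 9.0e4))  # approximately 0.8
```

The energy-equation route and the Mach-number formula agree to floating-point precision, confirming the substitution $u^2/(2c_pT) = \tfrac{\gamma-1}{2}Ma^2$.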

The purpose of applying the adiabatic flow equation in the physical loss component is to impose strict physical constraints on the model predictions, so that data-driven predictions do not violate the basic laws of compressible flow. Relying only on a data-driven loss can lead to physically unreasonable predictions, for example a predicted Mach number that contradicts the measured ratio of total pressure to static pressure. As a fundamental law of compressible flow, the adiabatic flow equation establishes a general quantitative relationship between Mach number, total pressure, and static pressure. Embedding it in the physical loss quantifies how far the predictions deviate from this law: when the total-to-static pressure ratio obtained by substituting the predicted Mach number into the formula differs from the measured ratio, the physical loss increases markedly, and the resulting gradients correct the model parameters so that the predictions converge toward the adiabatic flow law. This constraint is particularly important in engineering applications, where the model may face operating conditions not covered by the training data. By balancing data fitting against physical laws, the physical loss component improves the reliability and engineering applicability of the predictions. Its expression is

$$L_{\text{phys}} = \frac{1}{N}\sum_{i=1}^{N}\left[\frac{p_{0,i}}{p_i} - \left(1 + \frac{\gamma - 1}{2}\,\widehat{Ma}_i^{2}\right)^{\frac{\gamma}{\gamma - 1}}\right]^2$$

where $p_{0,i}$ and $p_i$ denote the total pressure and static pressure of the $i$th sample, and $\widehat{Ma}_i$ is the Mach number predicted by the model. When the theoretical pressure ratio implied by the predicted Mach number deviates from the measured ratio, the physical loss increases, which prompts the model to adjust its parameters to satisfy the physical constraint.
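A minimal sketch of the physical loss, assuming it takes the mean-squared form of the residual between the measured ratio $p_{0,i}/p_i$ and the ratio implied by the predicted Mach number. In actual training this would be written with a framework's tensor operations so that gradients flow back through the predicted Mach number; plain Python is used here only to show the arithmetic, and the function name is hypothetical.

```python
"""Physics-based loss from the isentropic total/static pressure relation.

Illustrative sketch; assumes a mean-squared residual and gamma = 1.4.
"""
from typing import Sequence


def physics_loss(ma_pred: Sequence[float], p0: Sequence[float], p: Sequence[float],
                 gamma: float = 1.4) -> float:
    """Mean of squared differences between measured p0/p and the theoretical ratio."""
    if not (len(ma_pred) == len(p0) == len(p)) or not ma_pred:
        raise ValueError("inputs must be non-empty and of equal length")
    exponent = gamma / (gamma - 1.0)
    total = 0.0
    for ma, p0_i, p_i in zip(ma_pred, p0, p):
        theoretical = (1.0 + 0.5 * (gamma - 1.0) * ma * ma) ** exponent
        residual = p0_i / p_i - theoretical   # dimensionless: p0 and p must share units
        total += residual * residual
    return total / len(ma_pred)
```

Because the residual is a pressure ratio, the loss is independent of the pressure unit, and it vanishes exactly when each predicted Mach number is consistent with the measured pressures.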

Although constant weighting parameters have proven effective in training physics-informed neural networks, this paper adopts an adaptive rule, analogous to that proposed by Wang S et al. [26], to improve the training process. The total loss is a linear combination of the two losses defined above, with adaptive weights:

$$L = \lambda_{\text{data}}\,L_{\text{data}} + \lambda_{\text{phys}}\,L_{\text{phys}}$$

where $\lambda_{\text{data}}$ and $\lambda_{\text{phys}}$ are the adaptive weights of the data loss and the physical loss, respectively.

The adaptive weights are adjusted according to how the two losses evolve during training. Specifically, starting from the second training cycle, an exponentially weighted moving average (EWMA) [27] is used to compute the average data loss and physical loss. This method gives higher weight to recent losses and therefore tracks the loss trend more promptly. The averages are computed as

$$\bar{L}_{\text{data}}^{t} = \beta\,\bar{L}_{\text{data}}^{t-1} + (1 - \beta)\,L_{\text{data}}^{t}, \qquad \bar{L}_{\text{phys}}^{t} = \beta\,\bar{L}_{\text{phys}}^{t-1} + (1 - \beta)\,L_{\text{phys}}^{t}$$

where $t$ denotes the training cycle, $\beta$ is the smoothing coefficient (set to 0.5 in the experiments), and $\bar{L}_{\text{data}}^{t}$ and $\bar{L}_{\text{phys}}^{t}$ are the moving averages of the data loss and the physical loss in the $t$th cycle, respectively. $\bar{L}_{\text{data}}^{t-1}$ and $\bar{L}_{\text{phys}}^{t-1}$ are the moving averages from the previous training cycle; at the beginning of training, they are initialized to the loss values of the first training cycle.
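The moving-average update can be sketched as a small tracker, one per loss term. Which of $\beta$ and $1-\beta$ multiplies the previous average is a convention; with $\beta = 0.5$, as used in the experiments, both conventions give identical results. The class name is hypothetical.

```python
"""Exponentially weighted moving average of per-cycle losses.

Illustrative sketch: beta weights the previous average,
avg_t = beta * avg_{t-1} + (1 - beta) * loss_t.
"""
from typing import Optional


class LossEWMA:
    def __init__(self, beta: float = 0.5) -> None:
        if not 0.0 <= beta < 1.0:
            raise ValueError("beta must be in [0, 1)")
        self.beta = beta
        self.avg: Optional[float] = None

    def update(self, loss: float) -> float:
        """Feed the loss of one training cycle and return the updated average."""
        if self.avg is None:
            self.avg = loss  # first cycle: initialize with the raw loss value
        else:
            self.avg = self.beta * self.avg + (1.0 - self.beta) * loss
        return self.avg
```

Feeding per-cycle losses of 4.0, 2.0, and 1.0 with $\beta = 0.5$ yields averages of 4.0, 3.0, and 2.0, showing how older losses decay geometrically.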

The core idea of the adaptive weights is to make each weight inversely proportional to the corresponding average loss. A smaller loss indicates that the model performs better in that respect, so the corresponding weight is larger, encouraging the model to maintain this advantage in subsequent training; conversely, a larger loss receives a smaller weight. In terms of the moving-average losses $\bar{L}_{\text{data}}^{t}$ and $\bar{L}_{\text{phys}}^{t}$, the weights are calculated as

$$\lambda_{\text{data}}^{t} = \frac{1/\bar{L}_{\text{data}}^{t}}{1/\bar{L}_{\text{data}}^{t} + 1/\bar{L}_{\text{phys}}^{t}}, \qquad \lambda_{\text{phys}}^{t} = \frac{1/\bar{L}_{\text{phys}}^{t}}{1/\bar{L}_{\text{data}}^{t} + 1/\bar{L}_{\text{phys}}^{t}}$$

In this way, the two weights always sum to 1 and determine the relative contributions of the data-driven loss and the physical loss to the total loss. The model can thus dynamically balance data fitting against adherence to physical laws and progressively improve its predictions during training, achieving accurate and physically consistent Mach number prediction.
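The inverse-loss weighting can be sketched as follows. The small `eps` term is an added safeguard against division by zero, and treating the weights as constants (no gradient through them) is a common choice in frameworks rather than something the text specifies; the function names are hypothetical.

```python
"""Inverse-loss adaptive weights that sum to 1, and the weighted total loss.

Illustrative sketch; `eps` is an added safeguard against division by zero.
"""
from typing import Tuple


def adaptive_weights(avg_data: float, avg_phys: float, eps: float = 1e-12) -> Tuple[float, float]:
    """Weights proportional to 1 / average loss, normalized to sum to 1."""
    inv_data = 1.0 / (avg_data + eps)
    inv_phys = 1.0 / (avg_phys + eps)
    norm = inv_data + inv_phys
    return inv_data / norm, inv_phys / norm  # smaller average loss -> larger weight


def total_loss(loss_data: float, loss_phys: float, w_data: float, w_phys: float) -> float:
    """Linear combination of the data loss and the physical loss."""
    return w_data * loss_data + w_phys * loss_phys
```

One consequence of this rule is worth noting: since each weight equals the other average loss divided by the sum of both, the weighted averages $\lambda_{\text{data}}\bar{L}_{\text{data}}$ and $\lambda_{\text{phys}}\bar{L}_{\text{phys}}$ are always equal. With averages of 1.0 and 3.0, for instance, the weights are 0.75 and 0.25 and both weighted terms contribute 0.75.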