1. Introduction

Aviation, like any other industry, is benefiting from current advances in artificial intelligence (AI) and machine learning (ML). However, unlike some other industries, aviation relies on numerous safety-critical systems, which are subject to strict certification processes. As such, AI-based systems for aviation must be certified according to the same standards as traditional systems [1]. To ensure the certification of AI-based systems, a transparent and structured development process is necessary. The current state of the art and industry standard in aviation is the well-established V-model process for verification and validation (V&V) [2]. However, it is not suitable for the development of AI-based systems, which cannot be approached in the same way as traditional software [3,4]. Typically, the V-model focuses on executing tests in a predetermined order, which does not align with the iterative and dynamic nature of developing AI-based systems. Given the long history and general success of the V-model, any new standard for these AI-based systems should remain compatible with the V-model to ease adoption. To address this issue, the European Union Aviation Safety Agency (EASA) introduced processes for the development of AI-based systems, such as the W-shaped process [5,6]. The proposed W-shaped process is executed in parallel with the V-model [2], adding dedicated AI constituent requirements and specific tasks for data management and model training. Furthermore, it is intended to ensure sufficient generalization and robustness of AI-based systems. The W-shaped process supports iteration during the implementation phase, allowing for feedback loops in training and testing. Through iterative training, V&V, and testing, the W-shaped process ensures that an AI-based system is continually assessed and improved, ultimately leading to a more robust and trustworthy AI system [6]. In its current form, however, the W-shaped process is applicable only to supervised learning, despite including initial concepts from unsupervised and self-supervised learning; reinforcement learning is not yet addressed [6]. Because it combines classical development methods with the novel requirements of AI-based systems, the W-shaped process has already been used in several domains outside of aviation [7]. However, the EASA learning assurance process, and consequently the whole structure of the W-shaped process, including the proposed extended W-shaped process, is not without criticism [8]. Other works have noted that, although the general process is undisputed, some objectives proposed by EASA can only be verified empirically, while others are impossible to verify at all.

Nevertheless, the W-shaped process is not the only process currently undergoing standardization activities for the development of AI-based systems in aviation [9]. Another proposed framework is currently being developed under the G34/WG-114 Standardization Working Group, a joint effort between EUROCAE and SAE, for the machine learning development lifecycle (MLDL) [10]. The MLDL process aims to ensure the comprehensive management and interoperability of model-based data throughout the development process, supporting the certification/approval process of AI-based systems in aviation [10].

Applying the Development Operations (DevOps) cycle, which merges development and operations into a holistic process for continuous improvement, represents the state of the art in software development. By adopting continuous integration and continuous deployment (CI/CD) practices, DevOps enhances collaboration through rapid feedback and embodies an agile approach. This characteristic fits well with the complexity of developing AI-based systems, which, in contrast with linear processes, requires iterations early in the development phase [11]. Therefore, a process combining the advantages of both the W-shaped process and the DevOps cycle promises to ease the development of AI-based systems in aviation by streamlining the AI Engineering process. The ability to continuously deploy updated ML models even after the first deployment offers a more flexible development framework. However, this increase in flexibility comes at the cost of a non-fixed list of requirements. While software-based components can be updated iteratively, hardware components in aviation cannot. Thus, the full integration of DevOps into both the software and hardware within the standard aviation development process remains an area of ongoing research. In this work, several approaches for the development of AI-based systems, such as the W-shaped process and the framework proposed by the G34/WG-114 Standardization Working Group, are investigated, and further advancements incorporating the DevOps cycle are outlined.

To efficiently capture all requirements, a Concept of Operations (ConOps) is created. The ConOps documentation outlines all stakeholder requirements based on the stakeholders’ specific needs and expectations, facilitating communication between them [12,13]. Moreover, a fixed list of high-level requirements is essential to ensure compatibility between independently developed subsystems, any of which could potentially be an AI-based subsystem [14]. However, each subsystem must have its own detailed but mutable requirements list, which can be updated throughout the development process. Currently, the requirements list for a subsystem is derived by combining the ConOps documentation with more specific requirements derived from the W-shaped process’s requirements. As development progresses along the W-shaped process, the focus shifts to data gathering, analysis, and dataset preparation. If a re-evaluation of the requirements becomes necessary during these steps, the W-shaped process already allows for it, so the requirements list is updated iteratively. The AI Engineering framework presented here advances this process structure and places greater emphasis on a potential re-evaluation of the whole architecture based on monitoring feedback, enhancing the AI-based (sub)system’s capabilities in each iteration. This is achieved by incorporating certain DevOps concepts more deeply into the W-shaped process-based framework.
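The split between a fixed set of high-level requirements and a mutable, versioned list of detailed subsystem requirements can be illustrated with a small data structure. The following Python sketch is a hypothetical illustration, not part of the W-shaped process or any standard; the class names, requirement IDs, and example texts (including the recall thresholds) are assumptions chosen for demonstration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Requirement:
    """A single requirement revision; frozen so it cannot be edited in place."""
    req_id: str
    text: str


@dataclass
class SubsystemRequirements:
    """Hypothetical requirements container for one (possibly AI-based) subsystem.

    High-level requirements are held in an immutable tuple because they define the
    interfaces between independently developed subsystems. Detailed requirements
    may change between iterations, and every change is logged with a reason.
    """
    high_level: Tuple[Requirement, ...]
    detailed: Dict[str, Requirement] = field(default_factory=dict)
    history: List[Tuple[int, str, str]] = field(default_factory=list)  # (revision, req_id, reason)
    revision: int = 0

    def update(self, req: Requirement, reason: str) -> None:
        """Add or replace a detailed requirement and record why it changed."""
        if any(r.req_id == req.req_id for r in self.high_level):
            raise ValueError(f"{req.req_id} is a fixed high-level requirement")
        self.revision += 1
        self.detailed[req.req_id] = req
        self.history.append((self.revision, req.req_id, reason))


# Example with hypothetical IDs and thresholds
sub = SubsystemRequirements(high_level=(Requirement("HL-1", "Detect traffic within the OD"),))
sub.update(Requirement("DR-1", "Recall >= 0.95 on the test set"), "initial derivation")
sub.update(Requirement("DR-1", "Recall >= 0.98 on the test set"), "monitoring feedback")
```

The revision log keeps the reason for each change, which matters when requirements are re-evaluated based on monitoring feedback and the history must remain traceable for certification.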

As part of the ConOps, a clear definition of the expected operational environment is not only helpful but required by EASA for all future AI applications in aviation. This idea originated in the automotive domain, where methodologies for the development of safety-critical AI-based systems are more advanced, and has since been standardized [15,16,17,18]. Different terms describing various aspects of the environment have been defined. First, the Operational Domain (OD) is defined in the automotive domain as the set of all possible operating conditions. Second, the Operational Design Domain (ODD), which is driven by the design of the Automated Driving System (ADS), defines the operating conditions for which the ADS has been designed. In the aviation domain, however, EASA has proposed slightly different definitions, which will be used from here on [6]. What SAE and ISO define as an ODD, EASA defines as an OD: the operating conditions for the full system. The term ODD has been repurposed and, under the EASA definition, describes the operating conditions of only the AI/ML constituent, i.e., the part of the full system that contains artificial intelligence. The ODD can be either a subset or a superset of the OD, and whether the OD or the ODD covers a broader range of values may depend on the parameter in question. An ODD that is a superset of the OD can improve the performance of the AI/ML constituent, as the broader range of values provides more variety, especially in the border regions. A more complete introduction to ConOps, OD, and ODD will be given in Section 5.1.
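The per-parameter relationship between OD and ODD can be made concrete by modeling each domain as a set of closed numeric ranges. The sketch below assumes that every parameter is continuous and described by a single interval; the parameter names and ranges are hypothetical and not taken from EASA guidance. Real OD/ODD definitions also include categorical conditions and dependencies between parameters, which this sketch ignores.

```python
from typing import Dict, Tuple

Interval = Tuple[float, float]  # closed range [low, high] for one operating parameter


def compare_domains(od: Dict[str, Interval], odd: Dict[str, Interval]) -> Dict[str, str]:
    """Classify, for each OD parameter, how the ODD range relates to the OD range."""
    result: Dict[str, str] = {}
    for name, (od_lo, od_hi) in od.items():
        if name not in odd:
            result[name] = "not covered by ODD"
            continue
        odd_lo, odd_hi = odd[name]
        if (odd_lo, odd_hi) == (od_lo, od_hi):
            result[name] = "equal"
        elif odd_lo <= od_lo and odd_hi >= od_hi:
            result[name] = "ODD superset"
        elif odd_lo >= od_lo and odd_hi <= od_hi:
            result[name] = "ODD subset"
        else:
            result[name] = "partial or no overlap"
    return result


# Hypothetical parameters: ODD wider than OD for visibility, narrower for crosswind
od = {"visibility_m": (800.0, 10000.0), "crosswind_kt": (0.0, 20.0)}
odd = {"visibility_m": (500.0, 12000.0), "crosswind_kt": (0.0, 15.0)}
print(compare_domains(od, odd))  # {'visibility_m': 'ODD superset', 'crosswind_kt': 'ODD subset'}
```

The example shows how a single ODD can be a superset of the OD for one parameter and a subset for another, which is why the comparison is made per parameter rather than for the domain as a whole.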

In summary, while the traditional V-model remains the backbone of safety-critical system development in aviation, it falls short in addressing the iterative, data-driven, and non-deterministic nature of the development process for AI-based systems. Even the recently proposed W-shaped process, though a step forward, lacks essential features to fully support resilient AI Engineering, particularly concerning system-wide feedback integration and continuous deployment. This work identifies these gaps and proposes an extended AI Engineering framework that advances the W-shaped process by incorporating DevOps principles. Key contributions include a dynamic requirement management approach, deeper feedback loop integration for iterative model improvement, and a stronger emphasis on monitoring and re-evaluation to ensure adaptability and trustworthiness of AI-based systems or subsystems.

The paper is structured as follows: First, in Section 2, the current state of the art is discussed, focusing both on the evolution from the V-model to EASA’s W-shaped process and on DevOps and traditional software development processes. For both topics, prior research concerning the expansion toward the development of AI-based systems is discussed. Based on those findings, the current challenges in AI Engineering within the aviation domain are discussed in Section 3. In Section 4, the extension potential of the W-shaped process is discussed. Here, the main focus is the missing operations phase from the DevOps framework, which is crucial for the continuous improvement of AI-based systems. Next, in Section 5, a new framework is proposed that combines the strengths of the W-shaped process with ideas from DevOps. Aside from the aforementioned operations phase, the new framework also starts earlier in the development process, with the creation of a ConOps document, and therefore extends beyond the W-shaped process. After this updated framework is proposed, a comparison with the machine learning development lifecycle, as defined by the G34/WG-114 Standardization Working Group, is presented in Section 6. This section focuses on the differences between the two frameworks and the possible conflicts that arise from these differences, and gives examples of how the framework applies to specific AI-based systems. Finally, the results of the paper are discussed in Section 7, and conclusions are drawn in Section 8.

2. State of the Art

Clearly defined engineering frameworks are the basis for a safe development process. As such, they are crucial for both development and subsequent certification in aviation, ranging from small subsystems and components to the entire aircraft. Here, the V-model [19] is the current standard in aircraft development. However, it is not suited to the new challenges associated with the development of AI-based systems. Therefore, the W-shaped process [6] was developed, based on ideas and principles similar to those of the V-model. In contrast, DevOps is the current default in modern software engineering, including for AI-based systems, as it enables shorter iterations and provides increased feedback. To better understand the history and reasoning behind these two frameworks, the following subsections formally introduce them and highlight their differences.

2.1. The W-Shaped Process for AI-Based Applications

In February 2020, EASA issued the first version of its artificial intelligence roadmap for AI-based applications in aviation [20], followed by the publication of a concept paper for level 1 machine learning applications [21]. Therein, the novel concept of learning assurance was proposed to provide means of compliance. In this context, learning assurance refers to the assurance that all actions of the AI-based system that could result in an error have been identified and corrected [21]. To support learning assurance, EASA proposed the W-shaped learning assurance process, covering dedicated AI/ML constituent requirements throughout the process.

This W-shaped process follows a long-standing tradition of adaptations and extensions of the initial V-model. One of the first processes in the realm of software development was the waterfall model [22,23], in which the development process was divided into separate phases. Each phase needed to be finalized before the next phase could start. Years later, the V-model was developed and, in its various types and forms, became the standard process for safety-critical applications in aviation [24,25]. The principal idea was to separate development and testing activities and track the required steps on all system levels [26]. Later, the V-model was introduced to help with the verification and validation of software [19]. However, the structure of the process allowed for extensive testing of the developed software only after it had been finalized. This issue led to the development of a W-shaped adjustment of the classical V-model, marking the first mention of a W-model, similar to the W-shaped process [26]. This model is also known as the VV-model, Double-V-model, or Two-V-model [27,28]. As 30% to 40% of the activities in software development are related to testing, initiating testing activities early is crucial [26]. Therefore, the idea was to bridge the gap between development and testing for software applications by introducing an early testing phase, illustrated by a second V placed on top of the first. Consequently, testing would start in parallel with the development process instead of after its completion. It has also been noted that, although models simplify reality, these simplifications are what make them successful in their applications [26]. For example, the W-model depicts aspects such as resource allocation as evenly balanced; depending on the application, however, reality might differ.

Based on this early W-model, further adjustments have been made for other applications. For instance, the W-model was adjusted for testing software product lines [29]. The left side of the W covers domain engineering, while the right side covers application engineering. In the associated study, several test procedures for variability and regression tests were addressed. Other works have adapted the W-model for component-based software development using two conjoined Vs. One V represents the component development process, while the other V stands for the system development process [30]. With a dedicated V-model for the component life cycle, component V&V can be executed, and pre-verified components are stored in a repository.

The most recent adjustment of the W-shaped process was EASA’s adaptation for AI-based systems in aviation applications [21]. Two years later, in 2023, the newly proposed W-shaped process was first applied to a use case outside the aviation domain [7]. This study outlined an approach for the implementation of a reliable resilience model based on machine learning. Liquefied natural gas bunkering served as a use case to show that the system could learn from incomplete data and still provide predictions on latent states while enhancing system resilience.

As part of a joint project with EASA, Daedalean published two reports applying the W-shaped process to visual landing guidance [31] and visual traffic detection [32]. Based on both use cases, Daedalean analyzed the steps of the W-shaped process, identifying points of interest for future research activities, standard developments, and certification exercises. The first report [31] focused on the theoretical aspects of learning assurance, solely considering non-recurrent convolutional neural networks. Its main findings included that traditional development assurance frameworks are not adapted to machine learning, that standardized methods for evaluating the operational performance of ML applications are lacking, and that issues related to bias and variance in ML applications need to be addressed. As an outlook for future work, the risks associated with various types of training frameworks and inference platforms were identified. However, the types of changes applied to a model after certification were not discussed. The second report [32] investigated software/hardware platforms for implementing neural networks and other tools in the development and operational environments. Regarding the safety assessment, out-of-distribution detection, filtering and tracking to handle time dependencies, and uncertainty prediction were investigated. Other aspects, such as changes after the type certificate, proportionality, and non-adaptive supervised learning, were not covered by the report and remain topics for future investigation.
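Out-of-distribution detection can take many forms. As a minimal, hypothetical baseline (not the method investigated in the Daedalean report), the sketch below flags an input when any feature lies more than a chosen number of standard deviations from the training mean. The threshold of 3.0 and the toy data are assumptions; per-feature z-scores ignore correlations between features and are far weaker than the detectors typically used for image-based systems.

```python
import math
from typing import List, Sequence, Tuple


def fit_stats(train: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """Per-feature mean and (population) standard deviation of the training inputs."""
    n = len(train)
    stats = []
    for j in range(len(train[0])):
        column = [x[j] for x in train]
        mean = sum(column) / n
        var = sum((v - mean) ** 2 for v in column) / n
        stats.append((mean, math.sqrt(var)))
    return stats


def is_out_of_distribution(x: Sequence[float], stats: List[Tuple[float, float]],
                           threshold: float = 3.0) -> bool:
    """Flag x if any feature is more than `threshold` standard deviations from the mean."""
    for value, (mean, std) in zip(x, stats):
        if std == 0.0:
            if value != mean:  # constant feature in training: any deviation is unseen
                return True
        elif abs(value - mean) / std > threshold:
            return True
    return False


# Toy training data with two features (hypothetical)
stats = fit_stats([[0.0, 10.0], [1.0, 12.0], [2.0, 14.0]])
print(is_out_of_distribution([1.0, 12.5], stats))   # False
print(is_out_of_distribution([10.0, 12.0], stats))  # True
```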

Initiated by EASA, the MLEAP project [33] investigated the challenges and objectives of the W-shaped process, addressing the remaining limitations to the acceptance of ML applications in aviation. Three aeronautical AI-based use cases were considered, namely speech-to-text in air traffic control, drone collision avoidance (ACAS Xu), and vision-based maintenance inspection. One goal of the project was to identify promising methods and tools and to test them preliminarily on toy use cases, followed by the validation of the results on more complex aviation use cases. The report stated that defining the OD was challenging, as estimating completeness and representativeness requires knowledge of the exact extent and distribution of certain phenomena. It further stated that the currently publicly available set of tools and methods for the development of AI-based systems lacks operationalizability. One of the main conclusions of the joint report was that data are the centerpiece of the development process and strongly influence model performance [33].
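One simple way to approximate the completeness question raised by MLEAP is to divide a box-shaped OD into a regular grid and measure the fraction of cells that contain at least one sample. The following sketch rests on strong assumptions: the OD is treated as an axis-aligned box, every parameter uses the same number of bins, and the bounds and samples are hypothetical. High cell coverage says nothing about whether the data follow the real operational distribution, i.e., about representativeness.

```python
from typing import Iterable, Sequence, Tuple


def grid_coverage(samples: Iterable[Sequence[float]],
                  bounds: Sequence[Tuple[float, float]],
                  bins: int) -> float:
    """Fraction of grid cells over a box-shaped OD that contain at least one sample."""
    occupied = set()
    for sample in samples:
        cell = []
        for value, (lo, hi) in zip(sample, bounds):
            if not lo <= value <= hi:
                break  # sample lies outside the OD and is ignored
            # Map value to a bin index; the upper bound falls into the last bin
            cell.append(min(int((value - lo) / (hi - lo) * bins), bins - 1))
        else:
            occupied.add(tuple(cell))  # runs only if no parameter was out of bounds
    return len(occupied) / bins ** len(bounds)


# Hypothetical two-parameter OD, split into 2 x 2 cells
bounds = [(0.0, 10.0), (0.0, 10.0)]
samples = [(1.0, 1.0), (9.0, 1.0), (1.0, 9.0), (12.0, 5.0)]
print(grid_coverage(samples, bounds, bins=2))  # 0.75
```

The number of cells grows exponentially with the number of OD parameters, so in practice such a grid would only be feasible for a few parameters or would need to be replaced by coarser or adaptive partitioning.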

2.2. DevOps and Traditional Software Development

DevOps, a term combining the “development” and “operations” of a product, originated in the software development domain to enable continuous delivery and integration. Conventional heavyweight development methods, such as the waterfall model, often lead to longer development times and poor communication between teams, resulting in delays and inefficiencies [34,35]. To address this problem, the Manifesto for Agile Software Development was written [36], promoting transparency and improving communication within teams. Nevertheless, some problems persisted even after the introduction of Agile methods [37,38]. Conflicts arose between the development and operations teams, particularly during the deployment of new features [39]. Additionally, maintaining and updating software as needed was not always straightforward [40]. To solve this, the development and operations teams needed to collaborate more closely to streamline processes. As an extension of the Agile methodology, DevOps was introduced to enhance collaboration and communication [41]. It emphasizes continuous integration and delivery, enabling more frequent software updates and improvements. Previous work [42] has outlined the following four key requirements for DevOps in the context of software development within the automotive domain: deployability, modifiability, testability, and monitorability. These elements support the processes of continuous delivery, integration, and deployment. The authors also suggest that, to enhance the effectiveness of DevOps, three additional principles should be considered: modularity, encapsulation, and compositionality [42].

Given its general success, DevOps has also been introduced into the aviation domain. For example, it has helped to enhance an airline booking system by streamlining interactions between the development and operations teams [43]. Moreover, in Industry 4.0, the collaborative practices used in DevOps have proven beneficial in bridging the gap between traditional industrial production environments and the requirements of Industry 4.0. In this context, Industrial DevOps led to the development of a modular platform designed to integrate and monitor production systems [44]. Apart from industry applications, DevOps and Agile methods have also gained attention in the scientific community [45]. DevOps has been shown to enhance collaboration among researchers throughout the development cycle [46].

Despite, or perhaps because of, its widespread adoption across many domains, new ideas for the DevOps cycle continue to emerge. The integration of machine learning workflows into the DevOps cycle is also being considered to manage complex software that involves ML components. Advantages of using DevOps in this setting include streamlined ML artifact versioning, as well as support for testing and deploying ML models through continuous integration. Moreover, combining DevOps and ML workflows can enhance collaboration between data scientists and software engineers [47]. Research on using DevOps in ML applications has also led to the development of the Machine Learning Operations (MLOps) framework [48]. This DevOps derivative focuses on methodologies and development approaches aimed at operationalizing machine learning products by leveraging DevOps and adapting it to the specific needs of machine learning applications [49]. MLOps integrates machine learning, software engineering, and data engineering to bridge the gap between development and operations [50]. While DevOps practices already provide continuous integration and delivery and enhance team collaboration, other DevOps derivatives focus more on the safety of the system. SafeOps, for instance, was designed to improve the safety of autonomous systems through a model of “continuous safety” inspired by DevOps principles [51]. SafeOps emphasizes continuous monitoring, feedback loops, and integration across both development and operational phases, ensuring that autonomous systems remain compliant with safety standards during operation. The three pillars of SafeOps are diagnosis, measurement, and modification, which together provide continuous safety assurance and faster deployment [51]. Analogous to these safety considerations, security aspects in the software development lifecycle are addressed by yet another derivative, DevSecOps [52], which integrates development, security, and operations. To ensure security, security-focused tools are incorporated into the CI/CD pipeline, and DevSecOps relies on automated security tools to keep development cycles fast.
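Artifact versioning, one of the advantages named above, can be sketched by deriving a version identifier from everything that defines a trained model. The Python sketch below is an assumption-laden illustration rather than the mechanism of any particular MLOps tool: it hashes the serialized model weights, a dataset manifest, and the hyperparameters with SHA-256 from the standard library; the manifest fields and the 16-character truncation are hypothetical choices.

```python
import hashlib
import json


def _digest(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def artifact_version(model_bytes: bytes, dataset_manifest: dict, hyperparams: dict) -> str:
    """Derive a deterministic version ID from the weights, data manifest, and hyperparameters."""
    parts = [
        model_bytes,
        # sort_keys makes the encoding independent of dictionary insertion order
        json.dumps(dataset_manifest, sort_keys=True).encode("utf-8"),
        json.dumps(hyperparams, sort_keys=True).encode("utf-8"),
    ]
    h = hashlib.sha256()
    for part in parts:
        h.update(_digest(part))  # hashing each part separately avoids ambiguous boundaries
    return h.hexdigest()[:16]


# Hypothetical manifest and hyperparameters
v1 = artifact_version(b"weights-v1", {"train": "flights-001-250", "labels": "rev3"},
                      {"lr": 0.001, "epochs": 10})
v2 = artifact_version(b"weights-v1", {"labels": "rev3", "train": "flights-001-250"},
                      {"epochs": 10, "lr": 0.001})
print(v1 == v2)  # True: same content, different key order
```

Because the identifier changes whenever the weights, the data, or the training configuration change, a deployed model can be traced back to exactly the inputs that produced it.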

2.3. Differences in Philosophy Between the W-Shaped Process and the DevOps Cycle

Both EASA’s W-shaped process and the DevOps cycle aim to achieve reliable software and system development; however, they approach the development lifecycle with different philosophies and goals. Like the V-model from which it derives, the W-shaped process targets safety-critical applications, such as avionics and aircraft systems in the aviation sector, with a focus on safety, regulatory compliance, and rigorous testing. In contrast, the DevOps cycle is a widely adopted approach in general software engineering. It is centered around continuous integration, delivery, and deployment to accelerate development cycles while maintaining high-quality output. Furthermore, it encourages collaboration between the development and operations teams. In terms of process structure, one apparent difference between the two methodologies is the sequential and structured phases of the W-shaped process compared with the cyclical, iterative, and constantly looping phases of the DevOps cycle. The W-shaped process progresses linearly, starting from system requirements and moving through design and development before finishing with a well-documented testing and V&V phase. In contrast, documentation in the DevOps cycle is kept to the necessary minimum, focusing on code and release comments.

In the context of testing, the W-shaped process focuses heavily on the formal verification and validation typical of safety-critical aviation applications. This includes exhaustive documentation and testing at each stage, ensuring that each step meets compliance standards before moving on to the next. DevOps, by contrast, emphasizes the automation of tasks through CI/CD, enabling faster, more frequent updates and subsequent releases. Automated testing is integrated throughout the process to identify issues as early as possible.
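The fail-fast character of automated testing in a CI/CD pipeline can be illustrated by running checks in order and blocking the release at the first failure. The following sketch is hypothetical; the check names and the accuracy threshold of 0.9 are placeholders, not values from any certification standard.

```python
from typing import Callable, Dict, List, Tuple

Check = Callable[[], bool]


def run_pipeline(checks: List[Tuple[str, Check]]) -> Dict[str, object]:
    """Run automated checks in order and block the release at the first failure."""
    passed: List[str] = []
    for name, check in checks:
        if not check():
            return {"release": False, "failed": name, "passed": passed}
        passed.append(name)
    return {"release": True, "failed": None, "passed": passed}


# Hypothetical checks; the measured accuracy and threshold are placeholders
measured_accuracy = 0.87
result = run_pipeline([
    ("unit_tests", lambda: True),
    ("accuracy_at_least_0.9", lambda: measured_accuracy >= 0.9),
    ("latency_budget", lambda: True),
])
print(result)  # {'release': False, 'failed': 'accuracy_at_least_0.9', 'passed': ['unit_tests']}
```

Stopping at the first failing check keeps feedback fast; a pipeline aimed at certification evidence might instead run all checks and record every result.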

Feedback loops are key to identifying issues early in the development phase. The W-shaped process emerged from the V-model to promote early feedback through predefined feedback loops, allowing for iterations during both model training and implementation. The DevOps cycle, in contrast, features continuous feedback loops throughout development. Arguably, this continuous feedback is one of the most important features of the DevOps cycle and therefore a core difference from the W-shaped process.

Furthermore, the two methodologies differ in typical cycle length. The W-shaped process defines the whole development process up to the final product release, following the verification of the AI/ML constituent requirements. The DevOps cycle, in contrast, is in principle an ongoing, continuous loop of improvement and frequent deliveries rather than a single delivery. Thus, a single iteration of the DevOps cycle is shorter than one pass through the W-shaped process.

3. Current Challenges in AI Engineering for Aviation

AI Engineering is gaining significant attention due to the increase in AI-based functions within safety-critical areas such as aviation, robotics, and the automotive sector. At its core, AI Engineering focuses on systematically developing every aspect of an AI component or function throughout its entire lifecycle. Therefore, the development and V&V processes constitute a considerable part of the overall challenge. In addition to these core processes, other aspects such as requirements engineering, data generation, and monitoring, among many others, play a crucial role.

Specifically, the integration of AI into aviation systems poses a significant challenge because of the inherent risk of operating passenger aircraft. Therefore, AI engineers are required to be meticulous when using CI/CD processes. Updates, in particular, need to be executed in a safe, reliable, and transparent manner. Additionally, there are many aspects to consider regarding human-AI interaction in the assistance systems that are currently being developed. Ensuring appropriate interactions between humans and AI-based systems will require additional engineering work. Especially when AI-based systems are used as assistants, the interface between humans and AI requires exhaustive investigation, commonly explored through research in the field of Human-in-the-Loop (HTL) [53]. Here, different approaches to how an AI-based system and humans can complement each other prevail, ranging from a strict separation of roles, e.g., human oversight performed by a human supervisor, to collaboration as equal teammates in either a cooperative or collaborative approach [5,54]. Thus, depending on the specific use case, different approaches may be preferable. In addition to the different concepts of how the Human-in-the-Loop approach is implemented in individual use cases, questions of human factors also need to be taken into account. For instance, human trust is relevant to the safety of the overall system, as overtrust or mistrust of the AI-based system can lead to errors that could compromise its safe operation [55].

As already mentioned, sufficient data to train and evaluate the model are crucial for providing safe AI-based systems. In this context, having sufficient data means not only covering all relevant scenarios but also ensuring that the data are of the necessary quality. This challenging task can only be solved by combining different approaches for data generation to cover all requirements, for example, by training only on virtual data and later fine-tuning using real data [56]. To clearly define the system under test within the operational environment and its current development stage, concepts from the automotive industry [57] have been transferred to the aviation sector [58]. Simulations are one potential starting point for the generation of synthetic data, as they are often cheap to perform compared with real experiments and offer high availability. Simulation-Enabled Engineering is therefore the basis for creating a dataset for the learning process of Safety-by-Design AI-based systems. Although simulations have great potential, the obtainable data quality is limited. Thus, careful evaluation is required to find the right balance between the large quantity of simulation-based data and other, more realistic data, such as hybrid or real data, which are of higher quality but available in lower quantity. Generative AI might also help improve simulation-based data by enhancing the realism of simulations or increasing their variation [59,60]. Altogether, a combination of approaches will provide the optimal balance between quantity and quality of data necessary to develop Safety-by-Design AI-based applications.
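The balance between simulation-based and real data can be treated as an explicit, auditable parameter of dataset composition. The sketch below mixes the two sources at a chosen ratio and tags each sample with its origin. It is a hypothetical illustration: the function name, the fixed seed, and the 80/20 example ratio are assumptions, and the sequential alternative mentioned above, training on virtual data first and fine-tuning on real data afterward, is not shown. In practice, the ratio would be chosen by evaluating candidate mixes on held-out real data.

```python
import random
from typing import List, Sequence, Tuple


def mix_training_data(synthetic: Sequence, real: Sequence, synthetic_fraction: float,
                      n_total: int, seed: int = 0) -> List[Tuple[str, object]]:
    """Draw n_total training samples with the requested share of synthetic data.

    Each sample is tagged with its source so that the composition can be audited.
    """
    if not 0.0 <= synthetic_fraction <= 1.0:
        raise ValueError("synthetic_fraction must be in [0, 1]")
    n_syn = round(n_total * synthetic_fraction)
    n_real = n_total - n_syn
    if n_syn > len(synthetic) or n_real > len(real):
        raise ValueError("not enough samples for the requested mix")
    rng = random.Random(seed)  # fixed seed keeps the composition reproducible
    mix = [("synthetic", s) for s in rng.sample(list(synthetic), n_syn)]
    mix += [("real", r) for r in rng.sample(list(real), n_real)]
    rng.shuffle(mix)
    return mix


# Hypothetical pools: 1000 simulated and 100 real samples, 80% synthetic share
mix = mix_training_data(range(1000), range(100), synthetic_fraction=0.8, n_total=100, seed=1)
print(sum(1 for source, _ in mix if source == "synthetic"))  # 80
```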

4. Extension Potential of the W-Shaped Process

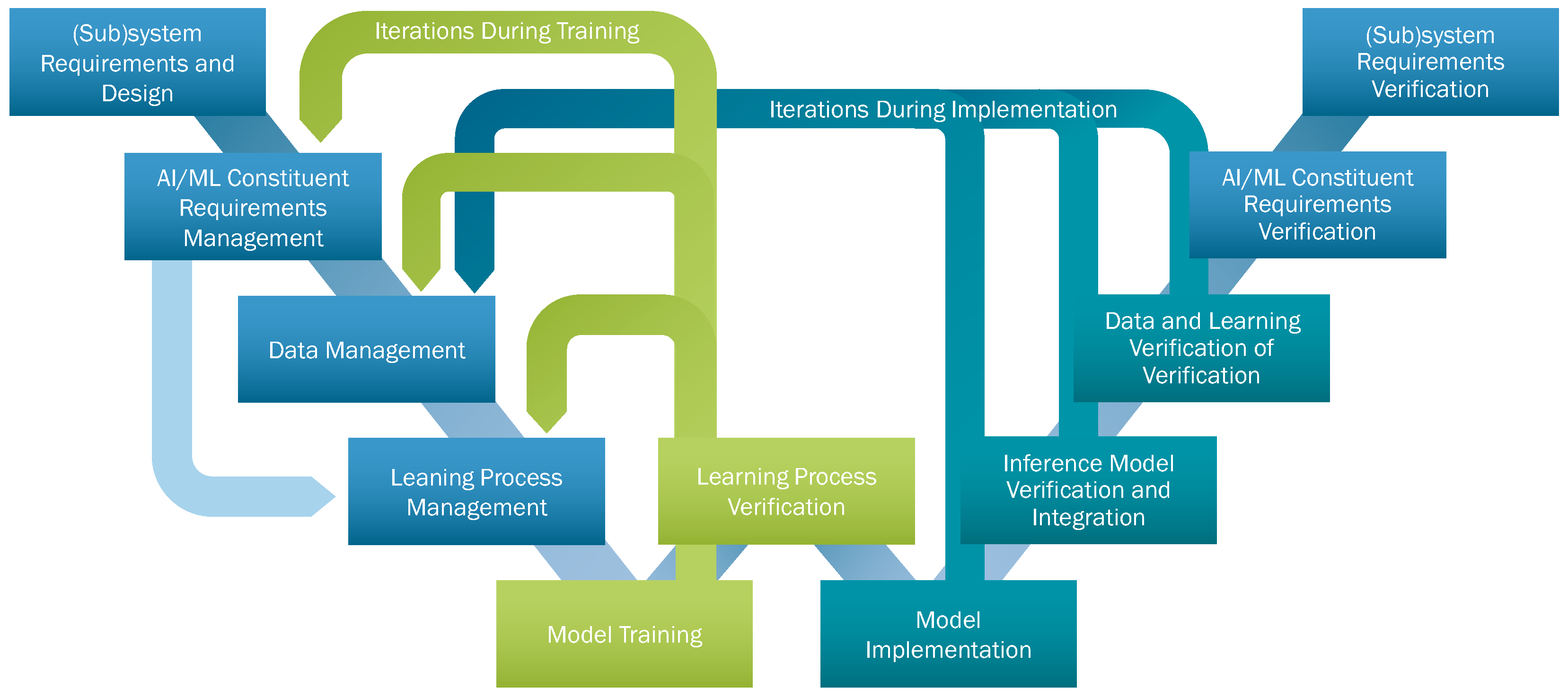

The W-shaped process, designed to run in parallel with the V-model, is required for the development assurance of AI/ML constituents [6]; see Figure 1. As such, it introduces some important changes to the V-model to adapt it to the specific needs of developing AI-based systems. The W-shaped process emphasizes the importance of learning assurance, as well as of iterative feedback loops early in the development process. Both are crucial for the safe and secure development of AI-based systems, enabling certification later on.

In Daedalean’s reports on design assurance [31,32], the W-shaped process was investigated. Based on the use cases of visual landing guidance and traffic detection, the general feasibility of the W-shaped process for level 1 ML applications was largely confirmed. The report found that future improvements are required, for example, strengthening the link between learning assurance and data, which is required for improved AI explainability. However, the report focused on the training phase and did not consider the verification of the implementation and inference phases. Therefore, this gap remains to be investigated. Especially with increasing algorithm complexity and higher levels of autonomy, the W-shaped process is potentially less suitable for the development of AI-based systems than the well-established DevOps cycle, which can be thought of, to some extent, as iterating over the W-shaped process multiple times [58]. However, simply enforcing a purely DevOps-based approach in aviation is also not feasible, given the strict certification requirements. While it is understandable that the W-shaped process is based on the well-established V-model, other safety-critical domains, such as automotive, are already transitioning to the DevOps cycle, as it has been shown to better fit the iterative development process by which both traditional software and AI-based systems are developed [42,61]. As such, the W-shaped process is a good first step toward a more agile development process for AI-based systems in aviation. It lacks, however, some necessary elements from the DevOps approach to fully exploit the advantages of an iterative development process. Some of these remaining extension potentials are addressed in this section. Section 5 then proposes a new framework that further combines the strengths of the W-shaped process with those of the DevOps cycle.

The first important constraint of the W-shaped process is that it is currently applicable only to supervised learning and not to self-supervised/unsupervised or reinforcement learning [6]. As the authors of the W-shaped process are well aware of this limitation, they plan to extend the guidance document to include these learning techniques in the future [6]. Thus, this limitation is not discussed further in this article.

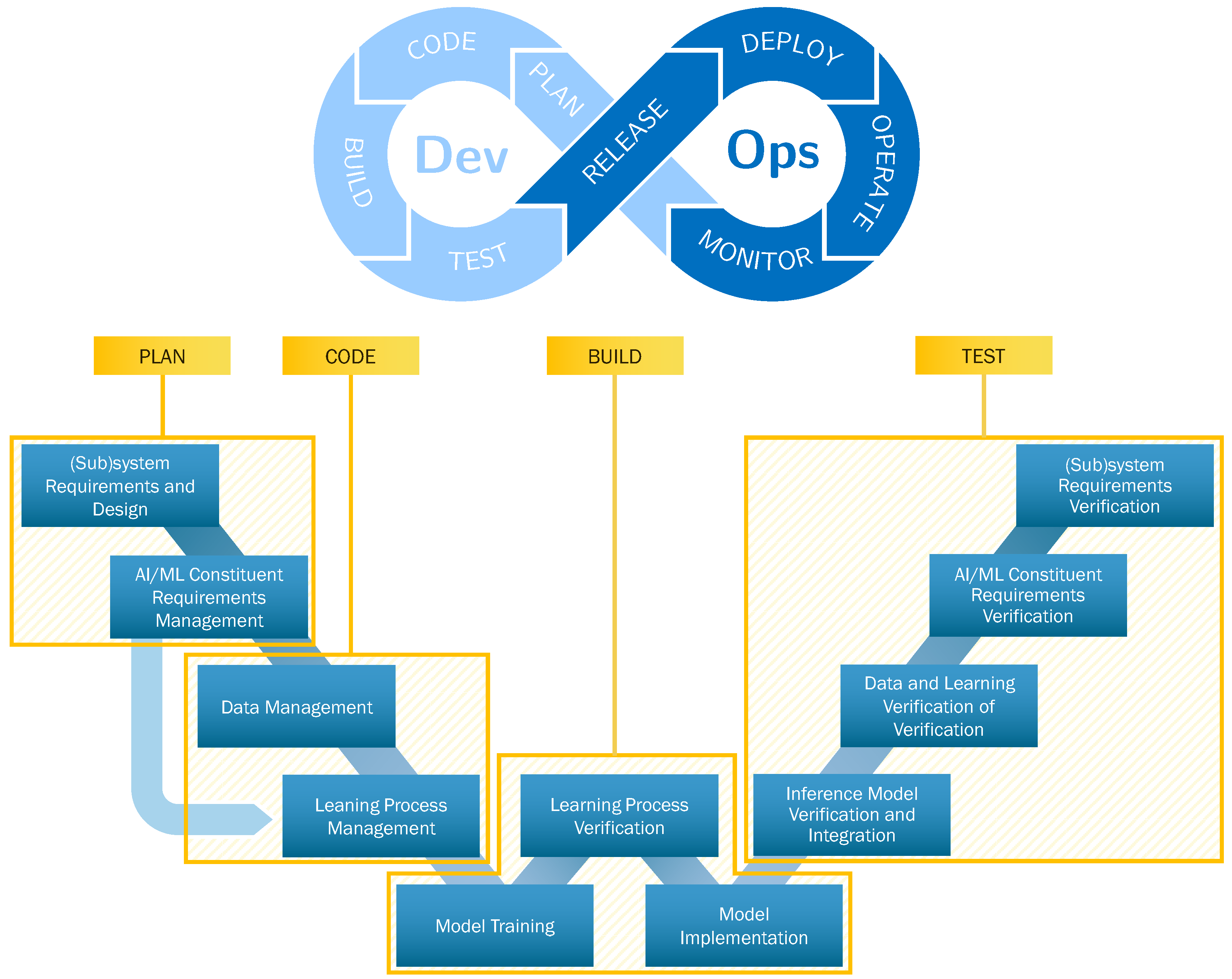

Compared to both the V- and W-shaped processes, DevOps is characterized by a strong connection between development and operations. This close collaboration enhances the agility of the software development process. DevOps is divided into two phases: the development phase consists of planning, coding, building, and testing, whereas the operations phase consists of releasing, deploying, operating, and monitoring, as illustrated in Figure 2.
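The cyclic structure described above can be made explicit with a minimal Python sketch. The eight step names follow the text; the `Phase` enum, the `DEVOPS_CYCLE` list, and the helper functions are illustrative assumptions, not part of any DevOps standard. The wrap-around from monitoring back to planning is what distinguishes the cycle from a single pass through a V- or W-shaped process.

```python
from enum import Enum


class Phase(Enum):
    DEVELOPMENT = "development"
    OPERATIONS = "operations"


# The eight DevOps steps in cycle order, each tagged with its phase.
DEVOPS_CYCLE = [
    ("plan", Phase.DEVELOPMENT),
    ("code", Phase.DEVELOPMENT),
    ("build", Phase.DEVELOPMENT),
    ("test", Phase.DEVELOPMENT),
    ("release", Phase.OPERATIONS),
    ("deploy", Phase.OPERATIONS),
    ("operate", Phase.OPERATIONS),
    ("monitor", Phase.OPERATIONS),
]
STEPS = [step for step, _ in DEVOPS_CYCLE]


def phase_of(step: str) -> Phase:
    """Return the phase to which a DevOps step belongs."""
    return dict(DEVOPS_CYCLE)[step]


def next_step(step: str) -> str:
    """Return the following step; 'monitor' wraps around to 'plan'."""
    return STEPS[(STEPS.index(step) + 1) % len(STEPS)]
```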

As both the W-shaped process and DevOps share a similar goal, namely streamlining a development process, a comparison helps to understand their differences and similarities; see Figure 2 for a graphical representation of the following paragraph. During the planning step of the DevOps cycle, stakeholders and developers identify new features and fixes for the system and determine the quality criteria for each step [11,62]. Similarly, in the W-shaped process, the planning step involves establishing the system and subsystem requirements and design, from which the AI/ML-specific requirements are extracted [6]. These requirements are essential for understanding the data and models needed for a specific application and are divided into AI/ML data requirements and AI/ML model requirements. In the DevOps cycle, after planning, developers proceed to the coding step, writing code for each feature or fix of the software. In contrast, the W-shaped process involves collecting, preparing, and organizing data for training, testing, and V&V based on the requirements. This stage also requires some coding, particularly for data generation, preprocessing, labeling, and splitting the dataset. Apart from defining the ML model’s architecture, including but not limited to the learning algorithms, activation functions, and hyperparameters, learning process management includes creating the training pipeline for model training and also involves verifying the learning process. During the building step of DevOps, developers use dedicated automated tools to ensure that the code builds correctly for the desired target platform, thereby preparing it for testing. In the W-shaped process, the model is trained based on the preceding steps, especially the data management and learning process management phases, after which the learning process is verified, allowing a loop back to earlier steps in case of failure. Next, in the model implementation step, the trained ML model is implemented on the target platform for further V&V, analogous to the building step in DevOps. In the testing step of DevOps, all software components undergo continuous testing using automated tools. Here, the W-shaped process is more expressive, as it defines multiple levels of testing, one for every abstraction layer within the scope of the full product, ensuring that all AI assurance objectives are met at every layer.
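The loop back from learning process verification to the earlier W-shaped steps can be sketched as a bounded retry loop. In this minimal Python sketch, the four callables and the `max_iterations` limit are hypothetical placeholders for project-specific data management, learning process management, training, and verification activities; EASA does not prescribe such an interface.

```python
from typing import Callable


def run_learning_cycle(
    manage_data: Callable[[int], dict],
    manage_learning_process: Callable[[dict, int], dict],
    train: Callable[[dict, dict], object],
    verify_learning: Callable[[object], bool],
    max_iterations: int = 5,
) -> tuple[object, int]:
    """Repeat data management, learning process management, and training
    until learning process verification passes (hypothetical interface)."""
    for iteration in range(1, max_iterations + 1):
        data = manage_data(iteration)                       # collect, prepare, label, split
        config = manage_learning_process(data, iteration)   # architecture, hyperparameters, pipeline
        model = train(data, config)                         # model training
        if verify_learning(model):                          # learning process verification
            return model, iteration                         # continue with model implementation
        # Verification failed: loop back to the earlier steps.
    raise RuntimeError("learning process verification did not pass")
```

In this sketch, exhausting the iteration budget raises an error, which could be read as a signal to revisit the AI/ML requirements rather than to keep retraining.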

The operations phase in DevOps extends beyond the current scope of the W-shaped process. In DevOps, the operations phase begins immediately after development with the release of the software. Deployment is designed to be continuous, using deployment tools that make it easy to deliver the software to all stakeholders. This approach increases productivity and accelerates the delivery of new software builds and versions. The operating step involves managing software in production, including installation, configuration, and resource management. Finally, during the monitoring step, the operations team continuously monitors the software to ensure proper functionality [6,11,62].

While the W-shaped process offers several advantages during system development, for example, its more expressive description of the required tests, it lacks certain elements crucial for the continuous development of an AI-based system. Specifically, the W-shaped process ends after the testing phase of the AI-based system and does not extend into the operations phase, as depicted in the DevOps cycle [6,11]; see Figure 2. In real-world applications, especially for safety-critical systems, mechanisms for post-deployment monitoring and continuous evaluation of the deployed AI-based system are essential to ensure both its safety and security even after certification and release. Moreover, ongoing supervision in the form of monitoring also ensures the system’s reliability and performance throughout its operational lifecycle by detecting failures of the AI-based system as soon as they occur [63].

5. Improving upon the W-Shaped Process

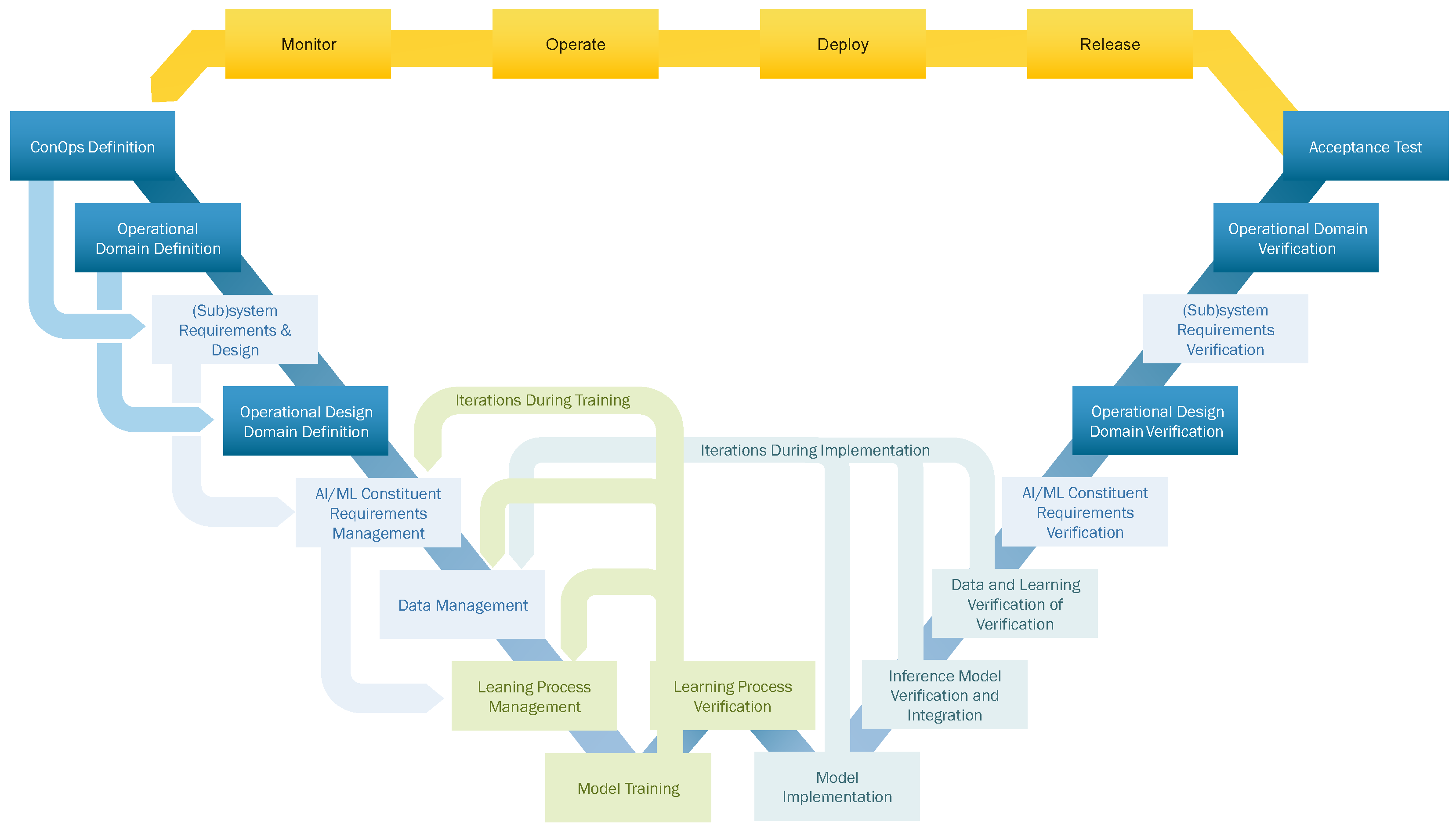

As seen in the previous section, the W-shaped process lacks certain features required for the continuous development process often used for AI-based systems in other domains. Most notably, it has no operations phase, which is crucial for the continuous improvement of AI-based systems. Therefore, a new framework is proposed that combines the strengths of the W-shaped process with those of the DevOps method. Furthermore, the proposed framework starts earlier in the development process than the W-shaped process, beginning with the creation of a ConOps document [5,6,12]. The ConOps document is crucial for capturing the requirements of all stakeholders, based on the qualitative and quantitative characteristics of the system, and for defining a common ground from which further work can be derived [12,64,65]. From this ConOps document, the OD of the AI-based system can be derived [12]. The OD captures the intended working environment of the AI-based system in a structured description that is easily readable by humans and also machine-parsable. Later, the ODD can be derived from these earlier steps to guide the development of the AI/ML constituent. Like the OD, the ODD is a well-structured document that helps to establish a better understanding of the environment the (sub)system is expected to handle.
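The requirement that the OD and ODD be readable by humans yet machine-parsable can be illustrated with a minimal Python sketch. It assumes a hypothetical JSON layout in which each element carries a name, a unit, and a numeric range; neither EASA nor this article prescribes this format, and the altitude and visibility values are purely illustrative.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ParameterRange:
    name: str
    unit: str
    minimum: float
    maximum: float

    def contains(self, value: float) -> bool:
        return self.minimum <= value <= self.maximum


def load_domain(text: str) -> dict[str, ParameterRange]:
    """Parse a (hypothetical) JSON domain description into named parameter ranges."""
    raw = json.loads(text)
    domain = {}
    for p in raw["parameters"]:
        if p["min"] > p["max"]:
            raise ValueError(f"empty range for {p['name']}")
        domain[p["name"]] = ParameterRange(p["name"], p["unit"], p["min"], p["max"])
    return domain


# Illustrative OD excerpt; the values are assumptions, not taken from any real OD.
OD_JSON = """{
  "system": "hypothetical collision avoidance",
  "parameters": [
    {"name": "altitude", "unit": "ft", "min": 0, "max": 45000},
    {"name": "visibility", "unit": "m", "min": 800, "max": 10000}
  ]
}"""
```

The same text file can be reviewed by stakeholders and loaded by test and monitoring tools, which is the practical benefit of a machine-parsable description.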

The proposed changes are discussed in the following subsections, starting with the ConOps, OD, and ODD in Section 5.1, followed by the addition of the operations phase in Section 5.2. Lastly, the combined framework is introduced in Section 5.3 and visualized in Figure 3.

5.1. Concept of Operations, Operational Domain, and Operational Design Domain

A Concept of Operations is a concise, user-oriented document, agreed upon by all stakeholders, that outlines the high-level characteristics of a proposed system. It describes the qualitative and quantitative characteristics of the system for all stakeholders [12,64,65]. As such, it is the primary interface between the customer and the developers. Although the ConOps is defined at the beginning of a project and serves as a fixed baseline for all stakeholders, it is not immutable and may be subject to change requests. Using the ConOps, all stakeholders can establish a common understanding of the system from which the Operational Domain can be derived [12]. Here, it is important to clarify the distinct definitions of the terms Operational Domain and Operational Design Domain. As already stated in Section 1, the EASA definitions differ from the commonly accepted definitions proposed by SAE and ISO [6,15,16]. SAE and ISO define the Operational Domain as “set of operating conditions, including, but not limited to, environmental, geographical, and time-of-day restrictions, and/or the requisite presence or absence of certain traffic or roadway characteristics” and the Operational Design Domain as “the operating conditions under which an ADS is designed to operate safely” [16]. In comparison, EASA defines the OD as the “operating conditions under which a given AI-based system is specifically designed to function as intended, in line with the defined ConOps” and the ODD as the “[o]perating conditions under which a given AI/ML constituent is specifically designed to function as intended, including but not limited to environmental, geographical, and/or time-of-day restrictions” [6]. From these definitions alone, it is apparent that EASA’s OD is equivalent to SAE’s ODD: both describe the operating conditions to be considered for the safe design of an autonomous system, regardless of whether it is an ADS or an AI-based system. What EASA defines as the ODD, however, is similar to SAE’s definition of the OD, except that its scope is not the full AI-based system but the part of the environment relevant to the AI/ML constituent. The ODD can therefore be either a subset or a superset of the OD.
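Treating each domain as an axis-aligned box over its parameters, the statement that the ODD can be a subset or a superset of the OD can be checked mechanically. The Python sketch below is a simplification that assumes every domain is a set of independent numeric ranges and that a parameter absent from a domain is unconstrained; real ODDs also contain categorical and interdependent conditions. The function names are hypothetical.

```python
Domain = dict[str, tuple[float, float]]


def is_subset(a: Domain, b: Domain) -> bool:
    """True if box `a` lies inside box `b`; parameters missing from a domain
    are treated as unconstrained, so every constraint of `b` must also bound `a`."""
    return all(
        name in a and b[name][0] <= a[name][0] and a[name][1] <= b[name][1]
        for name in b
    )


def relation(odd: Domain, od: Domain) -> str:
    """Classify how an ODD relates to an OD."""
    sub, sup = is_subset(odd, od), is_subset(od, odd)
    if sub and sup:
        return "equal"
    if sub:
        return "subset"
    if sup:
        return "superset"
    return "overlapping or disjoint"
```

Usage: an ODD that narrows the altitude band of the OD is a subset, whereas one that extends it (and leaves visibility unconstrained) is a superset.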

Based on the EASA definitions, the OD, as derived from the ConOps, describes the exact operating conditions under which a system is designed to function [6,66,67]. The equivalent concept is already used extensively for autonomous vehicles in the automotive domain [67,68], and its transfer to aviation is the subject of ongoing research [13]. In the automotive domain, the equivalent of the OD has been used for several years; therefore, its content and structure are well defined. In the aviation domain, however, although the OD is required by EASA for future AI-based systems [5,6], its structure has yet to be clarified [13]. Nevertheless, defining the OD first, followed by the AI/ML constituent requirements and the ODD, is crucial for the development of AI-based systems in aviation. As the ODD depends on the OD, which in turn depends on the ConOps, any change request to the ConOps will most likely affect both the OD and the ODD, even if only to the extent that the existing OD and ODD must be verified as still valid.
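The dependency chain from the ConOps via the OD and the (sub)system requirements to the ODD and the AI/ML constituent requirements can be treated as a small directed graph, so that a change request yields the list of artifacts to re-verify. The graph below is a simplification assembled from the text, and the dictionary and function names are hypothetical.

```python
from collections import deque

# Simplified derivation graph: each artifact maps to the artifacts derived from it.
DERIVED = {
    "ConOps": ["OD"],
    "OD": ["(sub)system requirements", "ODD"],
    "(sub)system requirements": ["ODD"],
    "ODD": ["AI/ML constituent requirements"],
    "AI/ML constituent requirements": [],
}


def affected_by(changed: str) -> list[str]:
    """Return every artifact downstream of `changed` (breadth-first, no duplicates)."""
    seen, queue, order = {changed}, deque([changed]), []
    while queue:
        for child in DERIVED[queue.popleft()]:
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order
```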

Based on the previous discussion, the ConOps and OD are crucial for the development of AI-based systems, and the ODD is crucial for the development of their AI/ML constituents. Accordingly, the definitions of the ConOps and OD are part of the proposed framework and precede the Requirements Allocated to AI/ML Constituent step of the W-shaped process [6]. However, as the (sub)system requirements contain non-AI-related elements, they need to be defined before the ODD can be derived and specified. Correspondingly, three new test steps are added: for the ODD, the OD, and finally the ConOps. All newly proposed steps for the ConOps, OD, and ODD are visualized in Figure 3, and the individual parts are discussed in the corresponding subsections.

It is worth noting that both the ConOps and the OD are already mentioned as inputs to the Requirements Allocated to AI/ML Constituent step in the W-shaped process [6]. Nevertheless, because both are mutable and thus part of the DevOps cycle, the proposed framework includes them explicitly.

5.2. Operations Phase

In the DevOps framework, releasing is often as easy as moving changes from the development environment to the production environment. This is not possible in aviation, where the production environment is often the aircraft itself and releasing an AI-based system almost always requires a certification process. In general, for safety assessments in aviation, systems are categorized into Development Assurance Levels (DALs) based on their safety impact on the aircraft [25,69,70,71,72]. The higher the DAL of a system, the more stringent the certification process. The highest level, DAL A, is reserved for systems whose failure condition is catastrophic, while DAL E is reserved for systems with no safety effect on the aircraft [70,71,73]. The DALs are listed in Table 1, together with further information on each level, including but not limited to the accepted failure rate and the effect on the aircraft and passengers. Although relevant, the effect on the crew is not explicitly listed in Table 1. Only DAL E systems do not require certification, as they have no impact on the safety of the aircraft [70,71,73].
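The DAL assignment logic can be sketched as a lookup keyed by the most severe failure condition. The mapping of failure condition categories to levels A to E follows the common DO-178C/ARP4754A classification; the per-flight-hour probability targets for levels A to C are the values commonly cited for large aeroplanes (e.g., AMC 25.1309) and are included only for illustration, so they should be checked against Table 1. Names such as `assign_dal` are hypothetical.

```python
from enum import Enum


class FailureCondition(Enum):
    # Declared from most to least severe; the order is used below.
    CATASTROPHIC = "catastrophic"
    HAZARDOUS = "hazardous"
    MAJOR = "major"
    MINOR = "minor"
    NO_SAFETY_EFFECT = "no safety effect"


# DAL and illustrative probability target per flight hour (None: no numeric target here).
DAL_TABLE = {
    FailureCondition.CATASTROPHIC: ("A", 1e-9),
    FailureCondition.HAZARDOUS: ("B", 1e-7),
    FailureCondition.MAJOR: ("C", 1e-5),
    FailureCondition.MINOR: ("D", None),
    FailureCondition.NO_SAFETY_EFFECT: ("E", None),
}


def assign_dal(conditions: list[FailureCondition]) -> str:
    """Return the DAL driven by the most severe failure condition."""
    severity = list(FailureCondition)  # definition order: most to least severe
    worst = min(conditions, key=severity.index)
    return DAL_TABLE[worst][0]


def requires_certification(dal: str) -> bool:
    """Following the text, only DAL E systems are exempt from certification."""
    return dal != "E"
```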

However, the DAL concept was never designed for AI-based systems and is not always applicable or sufficient for them. Because a similar rating system is needed for AI-based systems, EASA distinguishes between three levels of AI [5,9]; see Table 2. The three levels are based on the intended purpose of an AI-based system: assistance only (level 1), supporting humans in a human-AI teaming situation (level 2), or advanced automation up to non-overridable decisions (level 3) [5]. Future AI-based systems will most likely be categorized under both ratings, as an AI-based system always requires traditional software components to interface with other components. Consequently, a system may have a high DAL but a low AI level, or vice versa. For example, an AI-based movie recommendation system for the In-Flight Entertainment (IFE) system would be a DAL E system, since it does not affect safety, and a level 1 application, because it only assists passengers [70,71,73]. If the same system were extended with a chatbot that interactively helps passengers find a suitable movie or TV show, it would become a level 2 application. Still, such a system would most likely have no certification requirements. Other AI-based systems in aviation that are already being researched are vision-based landing systems [74,75]. Although such a system clearly has a higher DAL due to its inherent safety implications, it will most likely be a level 1 application as long as it only assists the pilots and does not make decisions. However, due to the common problem of adversarial attacks, even this supposedly level 1 application will be subject to stricter safety and security requirements than the aforementioned IFE recommendation system [76,77]. As a third example, ACAS X, the next generation of collision avoidance, is currently under development and the subject of ongoing research [78,79,80]. Although no official AI level rating is available for this system, initial certification activities are already being researched [58,81,82,83,84,85,86]. Finally, AI applications in the aviation domain are not limited to AI-based systems deployed on an aircraft. In air traffic management in particular, many potential AI-based systems have been studied, from predicting aircraft trajectories and fuel consumption to enabling single-controller operations in air traffic control [87,88,89,90]. Whereas such prediction tasks are generally level 1 applications, advances towards single-controller operations are level 2 applications.
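Because an AI-based system carries both a DAL and an EASA AI level, its classification is a pair rather than a single rank. The Python sketch below, whose class and function names are illustrative assumptions, encodes the two IFE examples from the text and shows that such pairs are only partially ordered.

```python
from dataclasses import dataclass
from enum import IntEnum


class AILevel(IntEnum):
    ASSISTANCE = 1            # level 1: assistance only
    HUMAN_AI_TEAMING = 2      # level 2: human-AI teaming
    ADVANCED_AUTOMATION = 3   # level 3: advanced automation


@dataclass(frozen=True)
class Classification:
    system: str
    dal: str          # "A" (most stringent) to "E" (no safety effect)
    ai_level: AILevel


def at_least_as_demanding(a: Classification, b: Classification) -> bool:
    """True if `a` is at least as demanding as `b` on BOTH axes.
    Comparing single letters works because "A" < "B" < ... < "E"."""
    return a.dal <= b.dal and a.ai_level >= b.ai_level


recommender = Classification("IFE movie recommender", "E", AILevel.ASSISTANCE)
chatbot = Classification("IFE recommender with chatbot", "E", AILevel.HUMAN_AI_TEAMING)
```

A hypothetical DAL B, level 1 assistant and the DAL E, level 2 chatbot are incomparable under this check, which illustrates why both ratings need to be carried through development.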

As there are not yet official guidelines on how to design and certify an AI-based system, current developments require special care. For now, only the DAL rating can be used in the certification process of new AI-based systems. Nevertheless, the three levels for AI applications will be important for future regulations. As such, for AI-based systems classified as DAL A to DAL D, more care has to be taken in the development process to reduce the risk of failing certification. Accordingly, the higher the classification level of an AI-based system, the more requirements such a system will face in future certification. As a compromise, the operations phase can therefore be executed multiple times before the final deployment and before certification of the AI-based system begins. For example, the operations phase could be executed in a flight simulator, where the AI-based system is tested in a controlled environment. After multiple rounds of testing, the AI-based system can be deployed in the actual aircraft, where the operations phase is executed again, now in the actual production environment. In this way, the development of AI-based systems can benefit from a more dynamic and iterative process while still meeting the same standards as classical components, ensuring the safety and security of the whole aircraft. The required number of iterations cannot be stated in general, as it is highly system-dependent and therefore hard to estimate beforehand.
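The staged approach of repeating the operations phase in a simulator before certification can be sketched as a maturity gate. Since the text notes that the number of iterations is system-dependent, this Python sketch leaves it configurable; the gate criterion (a number of consecutive passing rounds), the `run_round` callable, and the default values are all hypothetical assumptions.

```python
from typing import Callable


def staged_operations(
    run_round: Callable[[str, int], bool],
    max_rounds: int = 10,
    required_passes: int = 3,
) -> int:
    """Repeat operations rounds in a flight simulator until `required_passes`
    consecutive rounds succeed; return the number of rounds used. Only then
    would certification and deployment to the aircraft follow."""
    streak = 0
    for round_no in range(1, max_rounds + 1):
        streak = streak + 1 if run_round("simulator", round_no) else 0
        if streak >= required_passes:
            return round_no
    raise RuntimeError("maturity not reached; return to the development phase")
```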

Next, the deployment step of the operations phase has to be executed. Again, given the wide variety of conceivable AI-based systems in aviation, it is not possible to define a general process for the deployment step. Some systems might be deployable via a secure over-the-air process, in which a fleet of aircraft automatically downloads the new software, similar to other domains [91]. This could be possible for systems with a DAL E classification, such as the aforementioned AI-based IFE recommendation system [70,71,73]. Other AI-based systems, however, might also need a hardware update, which would require grounding the aircraft and likely involve many man-hours. Such updates could be scheduled during the regular maintenance checks that every aircraft has to undergo.
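The two deployment routes described above can be summarized as a small decision function. This Python sketch is illustrative only: the `Update` fields and return strings are hypothetical, and the final branch for software-only updates above DAL E is an assumption, since the text does not specify that case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    dal: str              # "A" (most stringent) to "E"
    needs_hardware: bool  # e.g., a new generation of computing hardware


def deployment_path(update: Update) -> str:
    """Select a deployment route following the reasoning in the text (illustrative only)."""
    if update.needs_hardware:
        # Hardware changes require grounding; bundle them with planned maintenance.
        return "ground aircraft during next scheduled maintenance check"
    if update.dal == "E":
        # No safety effect: a secure over-the-air rollout to the fleet may suffice.
        return "secure over-the-air fleet rollout"
    # Assumption: other software-only updates follow a certified software load.
    return "certified software load"
```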

After the AI-based system has been deployed, the operating step begins. For successful operation, it is crucial that the previous steps have been conducted diligently. Furthermore, a general recommendation is that neural networks should be static, often referred to as frozen, during operation, as learning during operation adds significant complexity not only to the system design but also to certification [32,63]. Moreover, as AI-based systems often exhibit black-box-like behavior, explainability is crucial for their acceptance by human operators [92]. For example, for the aforementioned next-generation collision avoidance system, it might not be enough to issue the correct advisory to the pilots; the AI-based system should also briefly explain how it arrived at the advisory. Fortunately, this is a field of active research, in which guidelines for explainable AI have already been developed [93].
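One way to make the frozen-model recommendation checkable at runtime is to record a cryptographic fingerprint of the weights at release and verify it before each inference. The Python sketch below assumes the weights are available as a flat sequence of floats; the `FrozenModel` class and its toy linear predictor are hypothetical stand-ins for a certified inference engine.

```python
import hashlib
import struct
from collections.abc import Sequence


def fingerprint(weights: Sequence[float]) -> str:
    """SHA-256 digest of the weights packed as little-endian 64-bit floats."""
    return hashlib.sha256(struct.pack(f"<{len(weights)}d", *weights)).hexdigest()


class FrozenModel:
    """Hypothetical wrapper that refuses inference if the weights differ from
    the released version, i.e., no learning during operation."""

    def __init__(self, weights: Sequence[float]):
        self._weights = tuple(weights)
        self.released_hash = fingerprint(self._weights)

    def predict(self, features: Sequence[float]) -> float:
        if fingerprint(self._weights) != self.released_hash:
            raise RuntimeError("weights differ from the released version")
        # Toy linear model standing in for a neural network.
        return sum(w * x for w, x in zip(self._weights, features))
```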

Once an AI-based system is certified and deployed, monitoring the system and its environment is crucial for future improvements. Although monitoring a system and receiving feedback during operation are often not part of aviation operations, they are decisive for the Safety-by-Design development and operation of AI-based systems and should therefore be adopted for future AI-based systems in aviation. Monitoring does not necessarily mean an invasion of the privacy of the passengers or the operating company. Here, developers and operators have to work together to ensure the safety and security of the system while respecting the privacy of all stakeholders. However, only continuous monitoring enables future improvements, as without monitoring, no operational data will be available to the developer for improving the system. In this context, continuous monitoring covers both functional and non-functional aspects and requires the implementation of a real-time monitoring pipeline. Functional aspects include, among others, prediction accuracy, i.e., whether the output of the AI-based system aligns with the expected results, and data integrity, i.e., whether the incoming data remain consistent and uncorrupted. Additionally, system-level failures, such as timeouts or crashes, must be effectively monitored. Non-functional aspects include OD and ODD monitoring, in which environmental variables and system parameters are observed to ensure that the AI-based system operates within its boundaries. This is a crucial aspect of monitoring for all AI-based systems, because both the OD and ODD are essential for ensuring the safe and reliable operation of an AI-based system [13].
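The functional, system-level, and non-functional checks listed above can be combined into a single per-sample check, as in the following minimal Python sketch. All names, thresholds, and the report format are hypothetical; a real pipeline would additionally stream data, aggregate statistics over time, and handle crashes outside the monitored process.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MonitorReport:
    violations: list[str] = field(default_factory=list)

    @property
    def ok(self) -> bool:
        return not self.violations


def monitor_sample(
    inputs: dict[str, float],
    odd: dict[str, tuple[float, float]],
    latency_s: float,
    timeout_s: float,
    prediction: Optional[float] = None,
    expected: Optional[float] = None,
    tolerance: float = 0.0,
) -> MonitorReport:
    """Check one inference step against hypothetical functional,
    system-level, and non-functional (ODD) criteria."""
    report = MonitorReport()
    for name, (lo, hi) in odd.items():
        value = inputs.get(name)
        if value is None or not math.isfinite(value):
            # Functional: data integrity (missing or corrupted input).
            report.violations.append(f"integrity: {name} missing or not finite")
        elif not lo <= value <= hi:
            # Non-functional: input outside the ODD boundaries.
            report.violations.append(f"odd: {name}={value} outside [{lo}, {hi}]")
    if latency_s > timeout_s:
        # System level: inference exceeded its time budget.
        report.violations.append(f"timeout: {latency_s}s > {timeout_s}s")
    if prediction is not None and expected is not None:
        # Functional: accuracy, once ground truth is available (e.g., offline).
        if abs(prediction - expected) > tolerance:
            report.violations.append("accuracy: prediction deviates from expected result")
    return report
```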

Current approaches for runtime monitoring use system information to compare OD and ODD data against their predefined specifications, either online during operation or offline afterwards [94,95,96]. Runtime monitoring confirms that the system stays within its predefined environmental boundaries. For automated systems, adhering to safety standards and regulations is essential, and one of the fundamental principles is closely monitoring the OD to ensure overall system safety. Thus, continuous monitoring during the operations phase of DevOps plays a vital role in maintaining safety by ensuring that the AI-based system operates only within its safe operational parameters and can therefore be trusted to provide accurate guidance. Approaches such as predictive OD monitoring, which can use tools such as temporal scene analysis, can issue early warnings if the system is approaching the boundaries of its OD [97,98].
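The cited predictive approaches use techniques such as temporal scene analysis; as a much simpler stand-in, the Python sketch below linearly extrapolates the last two samples of one monitored parameter and warns if the OD boundary would be crossed within a given horizon. The function names, the linear model, and the horizon parameter are assumptions made for illustration.

```python
import math


def time_to_boundary(history: list[tuple[float, float]], lo: float, hi: float) -> float:
    """Estimate the time until a monitored parameter leaves [lo, hi] by linear
    extrapolation of its last two (time, value) samples; inf if it is not moving."""
    if len(history) < 2:
        raise ValueError("need at least two samples")
    (t0, v0), (t1, v1) = history[-2], history[-1]
    rate = (v1 - v0) / (t1 - t0)
    if rate > 0:
        return (hi - v1) / rate
    if rate < 0:
        return (lo - v1) / rate
    return math.inf


def early_warning(history: list[tuple[float, float]], lo: float, hi: float, horizon: float) -> bool:
    """Warn if the predicted boundary crossing lies within the horizon (or has already happened)."""
    return time_to_boundary(history, lo, hi) <= horizon
```

Usage: an aircraft climbing at 100 ft/s from 41,000 ft toward a 45,000 ft OD ceiling yields a predicted crossing in 40 s, so a 60 s horizon triggers a warning while a 30 s horizon does not.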

5.3. Proposition of the Novel Framework

Finally, bringing everything together, the proposed framework is visualized in Figure 3. As it is based on the W-shaped process by EASA [6], the existing steps are colored light blue and green, whereas the novel steps, such as the ConOps, OD, and ODD definitions, are depicted in darker blue. The operations phase is depicted in yellow. Corresponding test steps are also added to ensure accurate V&V. After the test phase of the W-shaped process, elements from the operations phase of the DevOps method are introduced, namely the release, deploy, operate, and monitor steps. As explained in Section 5.2, the operations phase is crucial for the continuous improvement of AI-based systems, especially in aviation. Only a continuously developed system can overcome the current problems of AI-based systems, such as their black-box-like nature and lack of transparency. With an operations phase and its corresponding steps, an AI-based system can be continuously improved, leading to a more transparent and trustworthy system. In addition, through iterative testing and feedback, the structure of the proposed framework supports investigating the explainability of AI algorithms, which is crucial for any safety-related AI-based application. By incorporating continuous testing and validation into the development workflow through several feedback loops, input from end users or domain experts can be used to identify insufficiencies or unexpected decisions. This feedback structure also supports the development of resilient systems in terms of error detection, error correction, monitoring, and logging. As for all AI-based systems, resilience, “the ability to recover quickly after an upset” [99], is one of the main goals of the Safety-by-Design development process. The new framework, which combines the W-shaped process with ideas from DevOps, is a promising approach for the development of AI-based systems in aviation. As shown in Figure 3, the development process starts in the top left corner, with a classical V-model running in parallel for non-AI-based systems. It is important to note that the development process follows an iterative approach, which is already part of the W-shaped process proposed by EASA [6], allowing for faster feedback and easier system improvement. These iterative steps allow for a more flexible development process and thus quicker responses to findings that emerge later in the development of an AI-based system.

Developing a new AI-based system with the proposed framework first requires defining the ConOps. For an exemplary use case, ACAS X, the next-generation collision avoidance system for aircraft, the ConOps might contain high-level requirements such as the desired behavior, e.g., avoiding near mid-air collisions, as well as more specific performance metrics, for example, updating the advisory once per second [85,86,100,101,102,103]. Based on the ConOps and OD, and following the W-shaped process, the (sub)system requirements can be derived. Both the ConOps and the OD are important to better guide the development of an actual AI-based system in a safety-critical environment. The OD will contain information about the scenery, e.g., airspace information, as well as more general environmental information such as the weather conditions [86,97]. Of utmost importance, at least in this use case, however, are dynamic elements, i.e., intruders entering the airspace. As the OD also contains parameter ranges for every element, automated tests can subsequently be derived directly from the OD and, later, from the ODD [58,104]. Based on the ConOps, the OD, and the (sub)system requirements from the previous step, an ODD can be derived, finally leading to the actual requirements for the AI/ML constituent. From there, the W-shaped process proceeds as defined by EASA [6].
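The claim that automated tests can be derived directly from parameter ranges can be illustrated with boundary-value analysis. The Python sketch below combines the minimum, midpoint, and maximum of every range into test scenarios; this technique is our illustrative choice and not necessarily the method of the cited works, and the ACAS-like parameter names and ranges are hypothetical.

```python
from itertools import product


def boundary_test_cases(domain: dict[str, tuple[float, float]]) -> list[dict[str, float]]:
    """Combine the minimum, midpoint, and maximum of every parameter range
    into scenarios (boundary-value analysis over the full Cartesian product)."""
    names = sorted(domain)
    levels = [(lo, (lo + hi) / 2, hi) for lo, hi in (domain[n] for n in names)]
    return [dict(zip(names, combo)) for combo in product(*levels)]


# Hypothetical ACAS-like parameters; the ranges are illustrative only.
cases = boundary_test_cases({"altitude_ft": (1000, 41000), "intruder_range_nm": (0.5, 5.0)})
```

With n parameters this yields 3^n scenarios, which shows how quickly full enumeration becomes impractical and why ODD coverage becomes a research question in its own right.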

Just as every step on the left-hand side of a V-model-inspired process requires a corresponding test on the right-hand side, so do the proposed steps for the ODD, OD, and ConOps. This results in three pairs: ConOps Definition and Acceptance Test, Operational Domain Definition and Operational Domain Verification, and Operational Design Domain Definition and Operational Design Domain Verification. The Operational Design Domain Verification step requires showing that the system covers all aspects and regions of the high-dimensional parameter space of the ODD. As every element in the ODD has a corresponding parameter range, creating automated tests is straightforward [104]. However, determining the actual coverage of the ODD, especially for continuous parameters such as altitude, is a complex problem that is the subject of current research [105,106]. Once the system is shown to fully cover the target ODD, testing can continue at the (sub)system level. Afterwards, the ODD tests have to be repeated, now with the system-level OD, in the Operational Domain Verification and Validation step. The final test, in line with most V-model representations, is the acceptance test. On the one hand, it marks the final step in the certification of a system; on the other hand, it is a direct interface with the customer, since all stakeholders have contributed to defining the ConOps.
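A simple way to approximate coverage of continuous parameter ranges is to partition each range into equal-width bins and count the grid cells visited by at least one test. The Python sketch below implements this grid-based metric as one possible option, not as the approach of the cited research; the bin count is a hypothetical parameter that trades resolution against the number of cells, which grows exponentially with the number of parameters.

```python
def odd_coverage(
    domain: dict[str, tuple[float, float]],
    samples: list[dict[str, float]],
    bins: int = 4,
) -> float:
    """Fraction of ODD grid cells visited by at least one test sample."""
    names = sorted(domain)
    visited = set()
    for sample in samples:
        cell = []
        for name in names:
            lo, hi = domain[name]
            value = sample[name]
            if not lo <= value <= hi:
                break  # sample lies outside the ODD and is ignored
            # Map the value to one of `bins` equal-width intervals (hi > lo assumed).
            index = int((value - lo) / (hi - lo) * bins)
            cell.append(min(index, bins - 1))  # the upper bound falls into the last bin
        else:
            visited.add(tuple(cell))
    return len(visited) / bins ** len(names)
```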

After the W-shaped process has been completed successfully, a system can go into certification and then be deployed. However, in many cases, a single pass through the W-shaped process might not be enough to develop a system that meets all certification requirements for its designated DAL. The collision avoidance system, for example, with its DAL B rating, faces far more certification requirements than a DAL E system such as an AI-based movie recommendation system for the IFE. As the IFE is generally categorized as DAL E according to Table 1, an AI-based system purely for the IFE will also be a DAL E system. As such, it has no certification requirements. Such a system could, in theory, be updated regularly via over-the-air updates, similar to how most software updates for smartphones and personal computers are rolled out. ACAS X, with its higher DAL rating, by contrast, cannot be rolled out and improved over multiple iterations in the actual aircraft during operation. As errors in a collision avoidance system can easily lead to catastrophic accidents, every new version of such an AI-based system has to go through extensive certification efforts to ensure the safety of everyone on board [85,107,108]. Thus, it might be desirable to split the operations phase into two different cycles. First, a faster cycle can be implemented solely on the developer’s side to develop improvements more efficiently. Only once a certain maturity has been reached can the system go into certification and be deployed to the customers, in this case to an actual aircraft. Even such a system might still require later updates to the underlying AI model. For that reason, continuous improvement remains important, even for DAL B or higher systems. Therefore, in the operations phase of the proposed framework, steps similar to those of DevOps have to be undertaken. First, the developed AI-based system has to be released. In aviation, and for systems of DAL D or higher, this requires a certification process as described earlier. Once this release process is finished, the developed system can be deployed to the target platform. Depending on the target platform, this can be more or less complicated. Some updates, especially those that also require a new generation of hardware, will necessitate grounding the aircraft. Such deployments can take weeks to years, as it might be more efficient to deploy the changes when maintenance checks are planned anyway. Other deployments, as discussed earlier, might be simple over-the-air updates that an aircraft automatically checks for on a fixed schedule, for example, once a week. Once the system is deployed, operation can begin. This step again strongly depends on the developed AI-based system; in general, however, it should be part of normal operations. The last important step of the framework, also derived from DevOps and running largely in parallel with the operating step, is the monitor step. As many AI-based systems lack realistic data, or a sufficient amount of it, constant monitoring of the real operating conditions is required to continuously improve an AI-based system.
Here, DevOps can provide established methods and tools, for example, for tracking functional aspects of the AI system’s performance. Furthermore, monitoring non-functional aspects, such as the OD, ensures the safety and reliability of the AI-based system. For an AI-based collision avoidance system, for example, it is imperative to monitor prediction accuracy under different environmental conditions. The AI-based system is designed to provide these predictions under the operating conditions described in the OD and ODD specifications. If it operates outside of these conditions, it may provide erroneous predictions, which could lead to hazardous situations. Such a continuous monitoring framework, which becomes more complex as the application moves from DAL E towards DAL A, runs in parallel with operations to ensure that the system is running safely and securely without compromising its performance. It is also responsible for logging real-world data, especially in the event of failures, to help improve the AI system’s performance in the future. Only with feedback from the real system and real data can a realistic training dataset be built. As such, this step is one of the most crucial steps in the proposed framework and might take the most effort to implement. The monitor step requires data from the system in operation to flow back to the developers, something not yet commonly seen in aviation. However, only with an ever-growing dataset that is also built on real data can continuous improvement be achieved, leading to safe AI-based systems for aviation. This feedback forms the basis for the next iteration of the proposed framework.
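Logging real-world data around failures can be sketched with a bounded buffer that always holds the most recent samples and is snapshotted whenever a failure is reported, similar in spirit to a pre-trigger recording buffer. The `FailureRecorder` class and its parameters are hypothetical; privacy filtering and secure transfer to the developers, both required by the discussion above, are omitted.

```python
from collections import deque


class FailureRecorder:
    """Keep the most recent samples in a bounded buffer and snapshot them
    whenever a failure is reported, so that operational data around the
    event can flow back to the developers (hypothetical design)."""

    def __init__(self, pre_trigger_samples: int = 100):
        self._buffer = deque(maxlen=pre_trigger_samples)  # oldest samples drop out
        self.captured_events = []

    def record(self, sample: dict) -> None:
        self._buffer.append(sample)

    def report_failure(self, reason: str) -> None:
        # Copy the buffer so later samples do not alter the captured event.
        self.captured_events.append({"reason": reason, "samples": list(self._buffer)})
```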

6. Compatibility with the Machine Learning Development Lifecycle

Besides EASA, other groups are also working on standards for the development of AI-based systems in aviation. One important example is being developed by the G34/WG-114 Standardization Working Group, a joint effort of EUROCAE and SAE. Their standard, currently published only as a draft of chapter 6 of AS6983/ED-XXX, focuses on the development of AI-based systems in aviation, specifically the Machine Learning Development Lifecycle (MLDL), which is currently designed only for offline applications [10]. As it is still a draft, all of the following results are preliminary. Still, the goal of the MLDL, as described in the draft, is to support the certification and approval process of AI-based systems in aviation. To achieve this, the MLDL aims to define and organize the objectives and outputs of the systems in an easy-to-comprehend manner that is also suitable for non-experts in AI and ML. These objectives are closely aligned with the DAL, as well as with the Software Assurance Level [10]. However, compared to the W-shaped process developed by EASA [6], the MLDL does not require a specific development process, but rather provides a framework to support the development of AI-based systems in aviation in general. Nevertheless, there are many similarities, as well as some differences, between the two frameworks that are worth exploring.

The MLDL is divided into development activities for both AI-based and traditional (sub)systems and the V&V activities for those (sub)systems. The architecture of a system in the MLDL is segmented into two main parts: the System/subsystem Architecture and the Item Architecture. The MLDL process starts with the requirements phase, called the System/Subsystem Requirements Process. This is similar to the proposed framework, with the primary difference that, in the proposed framework, requirements can be derived directly from the ConOps, creating a continuous chain of trust. This chain of trust is essential for clearly defining all requirements and their corresponding rationales, ensuring that all relevant requirements of the system, its surrounding environment, and its operational conditions are captured. Since the ConOps serves as the primary interface with the customer, all developments are based upon the requirements defined in it. Thus, the ConOps plays a crucial role in the proposed framework, while it is not present in the MLDL. Based on the results of this phase, the System/Subsystem Requirements Process, a set of (sub)system requirements, including the OD and ODD, can be derived.
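The chain of trust described above can be illustrated as a simple traceability check: every requirement should be reachable, by following its parent links, from an item of the ConOps. The Python sketch below assumes a hypothetical representation in which each requirement stores the ID of the single item it is derived from; the `Requirement` class, the `untraced` function, and all IDs are illustrative only, and real requirements management tools typically support many-to-many trace links.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Requirement:
    req_id: str
    text: str
    parent: Optional[str]  # ID of the item this requirement is derived from


def untraced(requirements: dict[str, Requirement], conops_items: set[str]) -> list[str]:
    """Return the IDs of requirements whose derivation chain does not end in a ConOps item."""
    broken = []
    for req_id in requirements:
        seen: set[str] = set()
        current = req_id
        while current not in conops_items:
            if current in seen or current not in requirements:
                broken.append(req_id)  # cycle or dangling parent reference
                break
            seen.add(current)
            parent = requirements[current].parent
            if parent is None:
                broken.append(req_id)  # chain stops before reaching the ConOps
                break
            current = parent
    return broken


if __name__ == "__main__":
    # Hypothetical requirement IDs, for illustration only.
    reqs = {
        "SYS-1": Requirement("SYS-1", "Detect intruder aircraft", "CONOPS-1"),
        "AI-1": Requirement("AI-1", "Classify intruder tracks", "SYS-1"),
        "AI-2": Requirement("AI-2", "Estimate time to conflict", None),
        "AI-3": Requirement("AI-3", "Handle sensor dropout", "SYS-9"),
    }
    print(untraced(reqs, {"CONOPS-1"}))  # ['AI-2', 'AI-3']
```

In this sketch, a requirement without a parent, with a parent that does not exist, or caught in a derivation cycle is reported as untraced, since none of these cases yields a rationale that leads back to the ConOps.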

In addition, the results from the System/subsystem Architecture phase are used to define the ML Model Architecture in the MLDL; correspondingly, in the proposed framework, the Requirements Allocated to AI/ML Constituent are derived. At this stage, the ML Requirements Process is divided into ML Data Requirements and ML Model Requirements. The ML Data Requirements guide the ML Data Management, while the ML Model Requirements guide the ML Model Design Process. In the W-shaped process, and thus also in the proposed framework, these processes are referred to as Data Management and Learning Process Management, and they lead to a similar output. This sets the stage for training and verifying the ML model (the ML Model Design Process) and subsequently implementing it on the designated target platform (the ML Inference Model Design and Implementation Process and the Item Integration Process). Both approaches include feedback loops from model training back to learning process management, data management, and AI/ML requirements, allowing for iterative improvements during training and supporting the learning assurance of the AI-based system. However, only the proposed framework integrates continuous improvement of the trained ML model even after deployment.

Moving from implementation to testing, the AI-based system is verified and validated against the different levels of requirements defined previously. This takes place on the right-hand side of the proposed framework and, correspondingly, in the second half of the MLDL. In the proposed framework, as in the W-shaped process it is based upon, this again leads to a split, after which traditional software and hardware items are tested according to the V-model. The MLDL, in contrast, incorporates both traditional and AI-based (sub)systems in one holistic process, providing a better overview of the whole development process. Nevertheless, while the MLDL, similar to the W-shaped process, stops at the System/Subsystem Requirements, the proposed framework continues through to the Acceptance Test phase, which serves as the interface to all stakeholders, especially the customer, by verifying the ConOps. Moreover, the proposed framework is designed for the continuous development of the AI-based system by integrating ideas from DevOps. As such, in contrast to the MLDL, development does not end with the release of the AI-based system but also covers operations, ensuring continuous improvement by utilizing feedback from the deployed system and real data.

The comparison between the W-shaped process from EASA [6] (see Figure 1), the MLDL process from the G34/WG-114 Standardization Working Group [10], and the newly introduced framework (see Figure 3) enhances the understanding of safe AI system development. Comparing the proposed framework to the MLDL creates a common understanding of the development of safe and secure AI-based systems. The proposed framework emphasizes the high-level requirements derived from the ConOps, while the MLDL starts at a lower level of abstraction and thus later in the development of the full system. Additionally, the proposed framework integrates the operations cycle to utilize feedback from operations, which is crucial for evaluating and improving the system’s performance and, ultimately, for developing a safe and secure AI-based system. Overall, the proposed framework appears to be compatible with the MLDL, although the latter is more expressive at lower levels, while the new framework is more oriented towards the continuous development of AI-based systems.

7. Discussion

This work showed that future AI-based systems need a rigorous development process based on novel AI Engineering methodologies to ensure both the safety and security of such systems. To address this need, the European Union Aviation Safety Agency (EASA) has already provided the so-called W-shaped process, an advancement of the V-model intended for AI-based systems. It is meant to be used in parallel with the V-model-based development of traditional software and hardware items in the development process of a complete system. However, the EASA learning assurance process has been criticized for potential limitations, as some of its objectives might be inherently unverifiable. Thus, the W-shaped process still lacks important features to ensure the safety and security of an AI-based system throughout its operational lifecycle. Moreover, due to its sequential design, the W-shaped process lacks continuous verification and validation. For AI-based systems, however, continuous verification and validation are crucial to accommodate the dynamic nature of AI-driven requirements. The processes required to achieve not only continuous updates but also continuous verification and validation are already established in other development processes, namely the DevOps process. A naive implementation of the DevOps cycle, however, is also not suitable, as it is not compatible with current aviation processes and certification standards. As DevOps is also seeing rising adoption in other safety-critical domains, such as automotive, the framework proposed in this work builds upon the W-shaped process by integrating aspects from DevOps to further improve and extend it.

The proposed novel process, an extension of the W-shaped process, aims to enforce more feedback loops through a more holistic approach that starts at the initial definition phase, in which the Concept of Operations (ConOps) document is defined. Furthermore, the proposed process adds dedicated steps for the creation of both the Operational Domain and the Operational Design Domain, as well as their corresponding verification steps, thereby creating a more accountable process. Finally, the novel process integrates further ideas and processes from DevOps into the W-shaped process by firmly incorporating the operations phase. Including the operations phase ensures that information from the operation of the developed AI-based system can flow back into updates of that system. This is the fundamental idea of continuous development and is required for the continuous verification and validation of any AI-based system, not only in aviation. It is essential for the Safety-by-Design process in the field of AI Engineering. As such, the proposed framework facilitates certification-related safety assessments while allowing fast iterative improvements.

Furthermore, this work discusses how different Development Assurance Levels (DALs) lead to varying requirements for the operations phase of the DevOps cycle and, in turn, influence the safety assessment of the AI-based system. Given the stringent certification requirements for systems with a high DAL, it is recommended that such systems go through multiple rounds of the process before being submitted for certification, with subsequent release and deployment of updates to the AI/ML constituent of the AI-based system.

Nevertheless, even the proposed framework is not yet fully suitable for widespread adoption in aviation. Like the W-shaped process it is built upon, it lacks compatibility with both unsupervised learning and reinforcement learning methods. Moreover, clear guidance on how the operations phase should be executed, and on how this phase can be integrated into current aviation processes, especially the certification process, is still under investigation. Part of this investigation is to better understand the cost of implementing the proposed framework. While the process should eventually help to certify iteratively updated versions faster and more cheaply, the initial overhead cannot be ignored, so a higher upfront financial investment might be necessary. Implementing a new framework also always requires time. However, as the proposed framework is built upon common frameworks, no new procedures are required, which limits the time needed to train people to use it.

Furthermore, some questions on the interaction of traditional software and hardware with AI-based systems remain open. For example, it is unclear how to handle the integration and deployment of an updated AI-based system if the update also requires new hardware to be deployed. Here, a conflict between the more DevOps-oriented software development and the V-model-oriented hardware development might introduce further challenges in aligning all stakeholders to the combined process.

Next, guidelines are missing on the required amount of feedback from the operations phase to the development phase, as well as on how exactly these data can be safely and securely transferred from the aircraft to the developers. Nevertheless, the proposed framework has been shown to be compatible with the Machine Learning Development Lifecycle (MLDL) developed by the G34/WG-114 Standardization Working Group, a joint effort between EUROCAE and SAE. The overall goal of this work is to advance the field of AI Engineering for aviation towards a safe and secure application of AI-based systems, whether they are developed with the framework proposed here or with any other framework, as long as the focus shifts towards continuous development and integration to continuously improve any deployed AI-based system.

8. Conclusions

In this paper, a more accountable and holistic development process for the Safety-by-Design development of AI-based systems in safety-critical environments has been proposed. It extends the W-shaped process introduced by EASA, incorporating ideas from the DevOps approach. This novel process intends to ensure that the development follows a Safety-by-Design approach from the high-level system down to the AI/ML constituent. By following proven ideas from the field of AI Engineering, the proposed process allows for continuous improvements in the AI-based system and thus continuous verification and validation, leading to a potentially certifiable AI-based system.

Future research will focus on the enhancement of the Safety- and Security-by-Design methodologies for safety-critical AI-based systems, considering measurable quality criteria such as explainability, traceability, and robustness. Automating the methodology will ensure the systematic and strategic development and improvement of AI-based systems throughout the entire MLDL. Moreover, investigations on how the methodology can be further enhanced through AI-driven feature engineering will be conducted. Ultimately, the methodology will be applicable across different domains, such as space, transportation, and robotics.