Abstract

A passive localization algorithm based on UAV aerial images and Angle of Arrival (AOA) is proposed to solve the passive target localization problem. The images are captured with a fixed focal length, and a target positioning factor is defined to eliminate the effect of focal length and simplify calculations. To synchronize the positions of multiple UAVs, a dynamic navigation coordinate system is defined with the leader at its origin. The target positioning factor is calculated from image information and the orientation elements of the UAV electro-optical reconnaissance device. The collinearity equation is used to derive the AOA, which is then combined with the position information of the UAV swarm to solve for the target coordinates. The accuracy of the positioning algorithm is verified with actual aerial images. On this basis, an error model is established, a method for calculating the co-localization PDOP is given, and the correctness of the error model is verified by Monte Carlo simulation. Finally, a cubature Kalman filter (CKF) algorithm is designed to improve positioning accuracy, and simulations are performed for stationary and moving targets. The results show that the algorithm significantly improves target positioning accuracy and ensures stable tracking of the target.

1. Introduction

Reconnaissance UAVs are equipped with features that enable them to locate targets quickly and accurately and to predict target behavior. As technology has advanced, UAVs have become capable of multi-UAV collaborative operations, thanks to bionic clustering and communication networking technologies. Optoelectronic information technology has also developed significantly, making airborne optoelectronic detection devices more integrated, smaller, and cheaper [1,2]. To further improve target localization accuracy, UAVs now operate in clusters to carry out target localization and situational awareness tasks [3].

In general, there are two types of UAV target localization techniques: active localization and passive localization. Active localization locates a target with an emitting instrument, such as a UAV radar. Because the UAV actively ranges the target while positioning it, its own concealment and survivability are reduced [4,5]. Passive localization collects target information without emitting electromagnetic waves, lasers, etc., to obtain ranging information, which helps to protect the UAV itself. According to the type of observation quantity, passive localization techniques are divided into several categories, primarily Collinearity Equation, Image Matching, Binocular Vision 3D Localization, Doppler Rate of Change (DRC), Phase Difference Rate of Change (PDRC), Time Difference of Arrival (TDOA), Frequency Difference of Arrival (FDOA), Angle of Arrival (AOA), and other techniques [6,7]. The UAVs considered in this paper perform clustered localization tasks; they are small, light, and low-power, with good anti-jamming capability and stealth. Localization techniques need to be improved to suit this UAV platform and its usage needs.

Among the passive localization techniques above, the collinearity equation, image matching, and binocular vision 3D localization methods are image-based localization methods that can localize the target from a single image, but with significant localization error. The collinearity equation approach rests on the flat-terrain assumption, which does not always hold in real-world applications. Although feature-based image matching is more efficient and gray-scale correlation-based image matching is more widely used, image-matching algorithms are more difficult to use, take longer to complete, and demand more computing resources, and thus they cannot be employed where real-time performance is crucial. The key to binocular or multicamera vision 3D localization is to image the target from various angles and acquire local feature points of the object; because the baseline distance is limited, this cannot satisfy the measurement accuracy required for distant targets. Although direction-finding cross-localization improves the prediction of target maneuvering performance, it performs poorly in multi-target localization and does not meet the general requirements of UAVs. Methods such as DRC, PDRC, TDOA, FDOA, and AOA, whose localization algorithms are improving rapidly, are used in wireless sensor networks, where target information is typically received from sensors mounted at several observation points [8].

To sum up, this work proposes an enhanced passive localization technique based on image data obtained from aerial photography, with the following key contributions.

1. The solution technique does not require focal length or elevation information as input;

2. Multiple targets can be localized simultaneously;

3. The target localization error can be estimated from the error component of each observation;

4. The proposed cubature Kalman filtering approach can significantly increase target localization and tracking accuracy while maintaining good robustness.

The article is organized as follows. Section 2 describes the multi-UAV cooperative target localization scenario, including the model assumptions and a schematic depiction of the computational flow. Section 3 introduces the multi-UAV cooperative target localization algorithm. Section 4 examines the localization error and constructs a cooperative localization error model based on Section 3. Section 5 describes the principles and computational steps of the cubature Kalman filter. Section 6 verifies the algorithm through field data and simulation and highlights its benefits and drawbacks. Section 7 presents the conclusions.

2. Scenario Problem Description

2.1. Cooperative Target Localization Process for Multiple UAVs

The scenario involves multiple UAVs performing real-time reconnaissance and localization missions. Each UAV is equipped with an electro-optical payload that provides wide-field-of-view, high-resolution infrared and visible images, allowing both target localization and target-assisted localization to be performed.

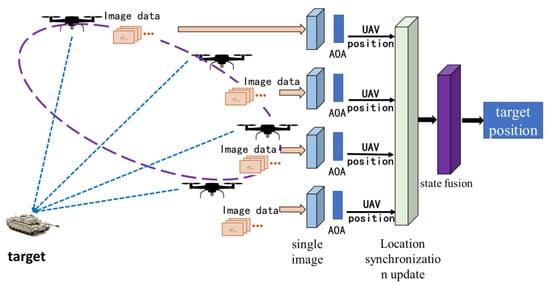

Through mission assignment, multiple UAVs in the scenario area cooperate to locate the target. Within the operating range of the electro-optical payload, each UAV extracts the target pixel coordinates from its image data, synchronizes the image data with the corresponding navigation data, determines the target’s relative position using the relevant interior orientation data, and then converts it to the target’s absolute position. The specific process is shown in Figure 1. Target positioning by a single UAV requires pre-loaded elevation or range information. Taking into account the benefits of passive positioning and the accuracy of relative height measurement, we design a collaborative target localization approach that works without elevation information. Continuous tracking and staring at the target is impossible in a complex combat environment; to exploit the UAV’s wide field of view for efficient reconnaissance in a limited time window, multiple target localization solutions must be computed from multiple images. Furthermore, because absolute target position information is required, the UAVs should establish their relative spatial relationships through position sharing before collaborative target localization, and time consistency is achieved by synchronizing and fusing the information from the respective UAVs.

Figure 1.

Schematic diagram of the multi-UAV target positioning process.

2.2. Model Assumptions

The following assumptions are made in the above scenario problem:

(1) The UAV’s camera center is assumed to coincide with the origin of the navigation coordinate system, so any position offset between them is ignored;

(2) The UAV’s own location information is updated without delay;

(3) The data link has no latency, high bandwidth, and anti-interference capability, so information is transferred correctly;

(4) Optical distortion of the image is ignored.

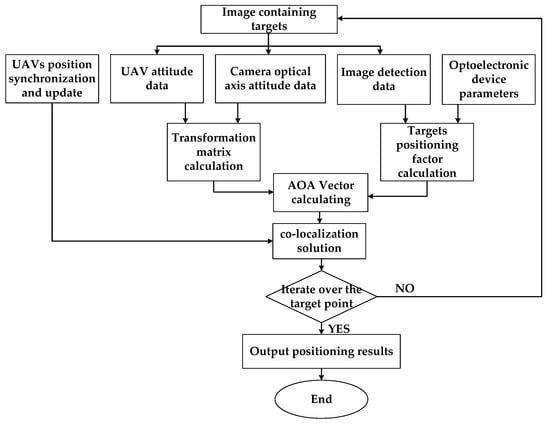

The input parameters for multi-UAV cooperative target localization fall mainly into the following categories: UAV flight status parameters, navigation data, and image data. The output parameter is the location of the target. The specific calculation process is shown in Figure 2.

Figure 2.

Flow chart of target co-location calculation.

3. Multi-UAV Target Co-Location Modeling

The set of UAVs involved in cooperative positioning contains N UAVs in total, and the set of targets that may be scouted and located contains K targets in total.

3.1. WGS-84 Earth Ellipsoid Model

The Earth ellipsoid is a mathematically defined surface that approximates the geoid and serves as the reference framework for geodesy and global positioning techniques [9]. Reference [9] also lists the major parameters of the WGS-84 Earth ellipsoid model.
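For orientation, the defining constants of the WGS-84 ellipsoid and the standard geodetic-to-Cartesian relations can be summarized as below. The symbols $a$, $f$, $e^2$, $N(\varphi)$, $\varphi$, $\lambda$, and $h$ are notation chosen here for illustration and are not necessarily the symbols used in [9].

$$
a = 6378137\ \text{m}, \qquad f = \frac{1}{298.257223563}, \qquad e^2 = 2f - f^2 \approx 6.69438 \times 10^{-3}
$$

$$
N(\varphi) = \frac{a}{\sqrt{1 - e^2 \sin^2\varphi}}, \qquad
\begin{aligned}
X &= \left(N(\varphi) + h\right)\cos\varphi\cos\lambda \\
Y &= \left(N(\varphi) + h\right)\cos\varphi\sin\lambda \\
Z &= \left(N(\varphi)\,(1 - e^2) + h\right)\sin\varphi
\end{aligned}
$$

Here $\varphi$ is the geodetic latitude, $\lambda$ the longitude, and $h$ the height above the ellipsoid.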

3.2. Synchronization and Updating of Observational Position

The target coordinates obtained from the images are expressed relative to the UAVs that perform the cooperative positioning. Because the positions of the UAVs change continually, they must be synchronized and updated.

It is assumed that each UAV can obtain its own geodetic coordinates and that the UAVs share their positions with one another. Localization UAVs are classified into two types: leaders and followers. The mission planning technique ensures that there is always exactly one leader participating in positioning, while the rest of the UAVs are followers. The method described in [10] is used in this paper to select the leader.

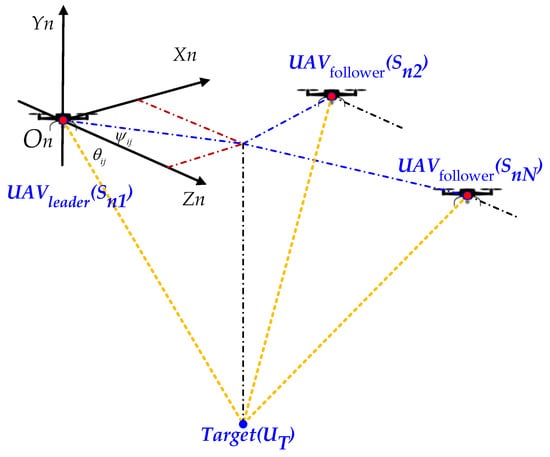

To simplify the calculation, this work defines a dynamic navigation coordinate system as follows: the origin is rigidly attached to the camera center of the leader; one axis points north, one axis lies in the plumb plane and points toward the sky, and the remaining axis completes the right-handed system. The positions of the other UAVs in the dynamic navigation coordinate system are updated as the leader’s position changes. Figure 3 depicts the position of each UAV in the dynamic navigation coordinate system at a given time.

Figure 3.

Schematic diagram of position synchronization and update based on dynamic navigation coordinate system.

The longitude, latitude, and altitude information for the UAV in the WGS-84 Earth ellipsoidal geodetic coordinate system is , and its position in the dynamic navigation coordinate system is , where the lead aircraft coordinates are denoted as and , respectively, and the positions of other UAVs in the dynamic navigation coordinate system are shown in Equation (1):

where denotes the ratio transformation of the geographical Cartesian coordinate system to the navigation coordinate system.
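The position update can be illustrated with a minimal Python sketch, assuming a standard WGS-84 geodetic-to-Cartesian conversion followed by a rotation into a north-up-east frame centered on the leader. The function names, the argument layout `(lat_deg, lon_deg, alt_m)`, and the axis order are assumptions made for this example; they are not taken from Equation (1).

```python
import math

# WGS-84 defining constants
WGS84_A = 6378137.0                      # semi-major axis [m]
WGS84_F = 1.0 / 298.257223563            # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)     # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, alt_m):
    """Convert geodetic coordinates to Earth-centered Cartesian (x, y, z) in metres."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + alt_m) * math.cos(lat) * math.cos(lon)
    y = (n + alt_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + alt_m) * math.sin(lat)
    return x, y, z


def follower_in_leader_frame(leader_lla, follower_lla):
    """Return (north, up, east) of a follower relative to the leader, in metres.

    Assumption: the local frame is tangent to the ellipsoid at the leader.
    """
    lat0, lon0 = math.radians(leader_lla[0]), math.radians(leader_lla[1])
    x0, y0, z0 = geodetic_to_ecef(*leader_lla)
    x, y, z = geodetic_to_ecef(*follower_lla)
    dx, dy, dz = x - x0, y - y0, z - z0
    sin_lat, cos_lat = math.sin(lat0), math.cos(lat0)
    sin_lon, cos_lon = math.sin(lon0), math.cos(lon0)
    east = -sin_lon * dx + cos_lon * dy
    north = -sin_lat * cos_lon * dx - sin_lat * sin_lon * dy + cos_lat * dz
    up = cos_lat * cos_lon * dx + cos_lat * sin_lon * dy + sin_lat * dz
    return north, up, east
```

Calling `follower_in_leader_frame` again whenever the leader reports a new position re-expresses every follower in the moving frame, which is the sense in which the coordinate system is dynamic.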

3.3. Image Based Localization Factor Solution Method

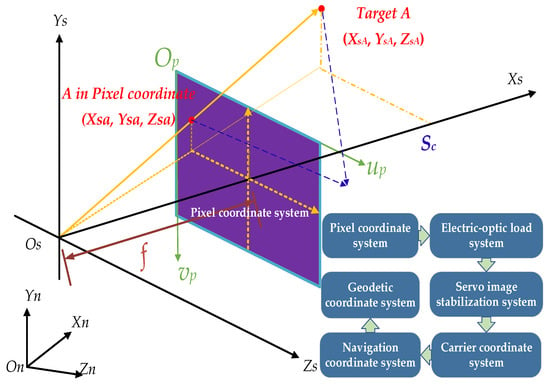

For a single image, the target positioning solution requires the coordinate systems, angles, and coordinate conversion matrices to be specified. In addition to the coordinate systems defined in Section 3.1 and Section 3.2, the process involves the carrier coordinate system, the servo stabilization coordinate system, the electro-optical payload coordinate system, and the pixel coordinate system (six coordinate systems in total), as well as parameters such as the aircraft attitude angles, the payload installation angle, the servo frame angles, and the look-down angle [11].

By identifying the targets on the coaxial image plane, the electro-optical payload can be used to determine the pixel coordinates of each target. From the image detection data and the characteristics of the optoelectronic device, the target information in the image can be determined. This information is defined as the target positioning factor.

First, build the camera coordinate system as depicted in Figure 4, with the UAV’s position as the coordinate origin. With the UAV position, the target point, and the corresponding image point all expressed in the navigation coordinate system, the inverse form of the collinearity equation is as follows:

where , the conversion matrix between the navigation coordinate system and the UAV camera coordinate system, corresponds to the coefficients of .

Figure 4.

Schematic diagram of single-image based object detection.

Set the UAV electric-optic load’s longitudinal and lateral resolution to , the half field of view angles in the longitudinal and lateral directions to , the physical dimensions of the image elements in the longitudinal and lateral directions to , the focal length to , and using the definition in Figure 4 we can obtain , and the principal point of the image’s position in the pixel coordinate system to . Target’s pixel coordinates are , image point ’s camera coordinates are , and the transformation between the two-dimensional image coordinate system and the camera coordinate system can be expressed as follows:

The target positioning factor is the right-hand side of Equation (4); after rearrangement, it is expressed as follows:

Define , where , , and . We can obtain the following by converting Equation (3):

The quantity in Equation (7) combines the internal orientation elements and the target pixel coordinates used in a UAV’s reconnaissance of the target. It is clear that, for a specific kind of electro-optical payload, the internal orientation elements are constant, so the positioning factor varies only with the target pixel coordinates and is unaffected by the physical pixel size and the focal length.
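The focal-length independence can be illustrated with a short Python sketch. It assumes a pinhole camera whose half field of view satisfies tan(half FOV) = (half image width × pixel size) / focal length, so that the ratio of image-plane offset to focal length depends only on the pixel offset, the resolution, and the half field of view. The function name, the two-component form of the factor, and the default principal point at the image center are assumptions made here; Equation (7) may arrange the same quantities differently.

```python
import math


def positioning_factor(u, v, width, height, half_fov_x_deg, half_fov_y_deg,
                       u0=None, v0=None):
    """Return the normalized image offsets (x/f, y/f) of pixel (u, v).

    Neither the focal length nor the physical pixel size appears: both
    cancel once the half field-of-view angles and the resolution are known.
    """
    if u0 is None:
        u0 = width / 2.0      # assumed principal point: image center
    if v0 is None:
        v0 = height / 2.0
    kx = 2.0 * math.tan(math.radians(half_fov_x_deg)) / width
    ky = 2.0 * math.tan(math.radians(half_fov_y_deg)) / height
    return (u - u0) * kx, (v - v0) * ky
```

For a pixel on the right-hand image edge the first component equals the tangent of the horizontal half field of view, and for the principal point both components are zero.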

3.4. Image-Based AOA Vector Solution Process

If the current position and attitude of the UAV and the target positioning factor are known, the AOA can be solved as follows:

represents the AOA Vector. The following equation is obtained using Equation (8).

From Equation (9) we obtain:

Define as follows:

As evident from Equations (9)–(13), the target positioning factor, the transformation matrix, and the target coefficient have the greatest effect on the AOA vector.

This paper performs ground reconnaissance operations with set to −1.
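A minimal Python sketch of this step is given below. It assumes the line of sight in the camera frame is (x/f, y/f, 1), rotates it into a north-up-east navigation frame, and reads off azimuth and altitude angles. The camera axis convention (x to the image right, y toward the image bottom, z along the optical axis), the example rotation matrix for a nadir-pointing camera with the image top toward north, and the function names are all assumptions made for illustration; the paper's own transformation chain through the carrier, servo, and payload frames is not reproduced.

```python
import math
import numpy as np

# Hypothetical example: camera looking straight down, image top toward north.
# Columns are the camera x, y, z axes expressed as (north, up, east).
R_NADIR_NORTH_UP = np.array([
    [0.0, -1.0, 0.0],
    [0.0, 0.0, -1.0],
    [1.0, 0.0, 0.0],
])


def camera_ray_to_nav(factor_xy, r_nav_from_cam):
    """Rotate the camera-frame line of sight (x/f, y/f, 1) into the navigation frame."""
    ray_cam = np.array([factor_xy[0], factor_xy[1], 1.0])
    return r_nav_from_cam @ ray_cam


def aoa_from_ray(ray_nav):
    """Return (azimuth_deg, altitude_deg) of a (north, up, east) direction."""
    n, u, e = ray_nav / np.linalg.norm(ray_nav)
    azimuth = math.degrees(math.atan2(e, n))                  # clockwise from north
    altitude = math.degrees(math.atan2(u, math.hypot(n, e)))  # negative below horizon
    return azimuth, altitude
```

With this convention, a target at the image center of a nadir-pointing camera has an altitude angle of −90°, and a target to the right of the center has an azimuth of 90° (east).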

The AOA Vector for N UAVs is:

3.5. Co-Location Solution Model

The targets’ location coordinates are . Figure 3 depicts the relationship between the UAV and the target , with serving as a measure of their separation.

According to Figure 3 and Equations (8) and (12), the following equation can be obtained:

Define , then:

The procedure suggested in [12] allows us to roughly eliminate :

where is the symmetric matrix and ; Equation (17) alone is therefore not sufficient, and additional equations are needed to solve for the target position.

For UAVs involved in localization , there exists:

The target location coordinates can be determined from Equations (1), (7), (12), and (19). The result is the position of each target in the navigation coordinate system, computed with the UAV’s navigation-frame coordinates as the reference point. This position is then converted from the Earth-centered Cartesian coordinate system to the geodetic coordinate system by iterative calculation until the longitude, latitude, and altitude satisfy the required accuracy. After converting the angles to degrees, the targets’ geodetic coordinates are obtained.
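The idea of eliminating the unknown range and combining the bearings from several UAVs can be sketched in Python as a least-squares intersection of lines of sight. This is a generic formulation, assumed here for illustration, in which each UAV contributes the symmetric projector I − d dᵀ built from its unit line-of-sight vector d; it is not claimed to be identical to the procedure of [12] or to Equations (17)–(19). The function name and argument layout are hypothetical.

```python
import numpy as np


def triangulate(positions, directions):
    """Least-squares intersection of lines of sight.

    positions:  UAV positions in a common frame, one 3-vector per UAV.
    directions: line-of-sight vectors toward the target (any length).
    Each UAV contributes (I - d d^T)(x - p) = 0, which no longer contains
    the unknown range; at least two non-parallel bearings are required.
    """
    a = np.zeros((3, 3))
    b = np.zeros(3)
    for p, d in zip(positions, directions):
        p = np.asarray(p, dtype=float)
        d = np.asarray(d, dtype=float)
        d = d / np.linalg.norm(d)
        m = np.eye(3) - np.outer(d, d)   # symmetric, rank-2 projector
        a += m
        b += m @ p
    return np.linalg.solve(a, b)
```

A single projector has rank 2, so one UAV cannot fix the target in three dimensions; summing the projectors of two or more UAVs with different lines of sight makes the system solvable.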

4. Collaborative Positioning Error Model

4.1. AOA Error Model Based on Image

The error sources are the look-down angle of the electro-optical payload, the installation angle of the payload, the yaw, pitch, and roll angles at the moment of image capture, the altitude and azimuth angles of the servo frame, and the pixel coordinates of the target observed by the UAV at time k, each with its own measurement error. Because the navigation device and the payload are rigidly coupled to reduce error, the installation angle is taken to be 0.

Then the observation vector is:

The observation measurement error is:

At time k, the measured value and the true value of the AOA vector are related by:

where is the Jacobian matrix, the residual vector, and each value in is the residual of a single observation from its standardized value.

The calculation of the matrix is performed below.

To facilitate the calculation, set the auxiliary variable as , is normalized to obtain unit vector , and is transformed by :

Equation (24) is transformed to obtain a new expression for the AOA Vector.

A linearized transformation of Equation (23) yields :

where: ,

4.2. Collaborative Positioning Error Model Based on PDOP

Position Dilution of Precision (PDOP) is a measure of positioning accuracy; in a satellite positioning system, the PDOP value indicates how well the satellites are distributed geometrically [13]. During cooperative positioning by several UAVs, the DOP value at each point in the reconnaissance region depends on the UAV geometry, and lower DOP values generally correspond to higher positioning accuracy [14]. To analyze the error range of cooperative positioning and to suggest optimization ideas, this study simulates and analyzes the PDOP of multi-UAV cooperative positioning. The derivation is given below.

Assuming that the target is indeed in the position , the target solution is in the position , and we obtain:

is the Jacobian matrix, and is a vector of residuals between the observed values and the standard values. Each value in this vector represents the residual of a single observation compared to its standard value.

According to Equation (29), is obtained as:

The covariance matrix of the error is:

The PDOP solution procedure for cooperative localization of multiple UAV targets is provided by Equations (30)–(33).
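A simplified Python sketch of such a PDOP calculation follows. It assumes that each UAV measures an azimuth and an altitude angle with independent zero-mean errors, that UAV positions are error-free, and that PDOP is taken as the square root of the trace of the position covariance (in metres). Positions are (north, up, east) triples. These modeling choices, the function names, and the error values in the usage example are assumptions for illustration and do not reproduce Equations (30)–(33) term by term.

```python
import numpy as np


def aoa_jacobian(uav, target):
    """Partial derivatives of (azimuth, altitude) with respect to the target position."""
    rn, ru, re = target - uav
    h2 = rn ** 2 + re ** 2            # squared horizontal range (must be non-zero)
    h = np.sqrt(h2)
    r2 = h2 + ru ** 2                 # squared slant range
    d_az = np.array([-re / h2, 0.0, rn / h2])
    d_el = np.array([-ru * rn / (h * r2), h / r2, -ru * re / (h * r2)])
    return np.vstack([d_az, d_el])


def pdop(uavs, target, sigma_az_deg, sigma_el_deg):
    """Square root of the trace of the position covariance, in metres."""
    r_inv = np.diag([1.0 / np.radians(sigma_az_deg) ** 2,
                     1.0 / np.radians(sigma_el_deg) ** 2])
    info = np.zeros((3, 3))
    for uav in uavs:
        h_mat = aoa_jacobian(np.asarray(uav, dtype=float),
                             np.asarray(target, dtype=float))
        info += h_mat.T @ r_inv @ h_mat
    cov = np.linalg.inv(info)
    return float(np.sqrt(np.trace(cov)))


# Usage: two UAVs at 3000 m, baselines of 200 m and 100 m, target midway below.
wide = pdop([(-100.0, 3000.0, 0.0), (100.0, 3000.0, 0.0)], (0.0, 0.0, 0.0), 2.73, 0.81)
narrow = pdop([(-50.0, 3000.0, 0.0), (50.0, 3000.0, 0.0)], (0.0, 0.0, 0.0), 2.73, 0.81)
```

Evaluating `pdop` on a grid of target positions gives a contour map of the kind discussed in Section 4.3. In this simplified model the shorter baseline yields the larger value, in line with the trend reported there, although the absolute numbers depend on the assumed error model.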

4.3. Error Analysis

The error analysis has two parts. One concerns the external orientation elements, i.e., the UAV’s flight status at the time the target is imaged, as listed in Table 1. The other concerns the internal orientation elements, represented by the parameters of the electro-optical equipment and the target pixel coordinates at the time the target is imaged, as listed in Table 2. Table 3 gives the distribution of the measurement errors for the external orientation elements.

Table 1.

Observation parameters.

Table 2.

Electro-optical reconnaissance equipment parameters.

Table 3.

Observation parameters.

To verify the error model, the simulation is run under the conditions indicated in Table 1; the additional parameters are shown in Table 2 and Table 3.

The positioning factor can be calculated from Equation (7), assuming that the target is close to the center of the image. According to [11], the error when the target is at the edge of the image is greater than the error when the target is at the center, so the four points A–D in Figure 5 were chosen for Monte Carlo simulation. The look-down angle was set to −90°, and the relative flight altitude was 3000 m.

Figure 5.

Points selected for Monte Carlo simulation to obtain maximum error. A, B, C and D correspond to the maximum error position and E corresponds to the minimum error position.

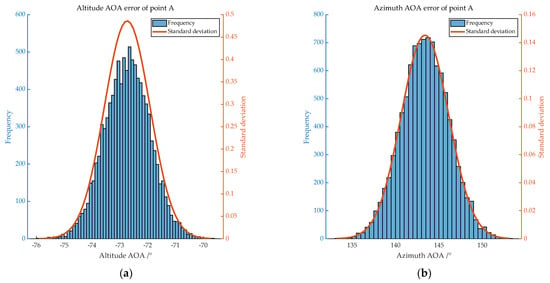

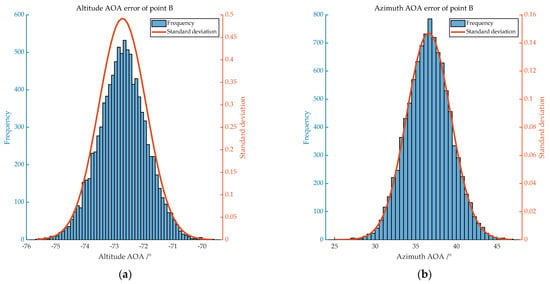

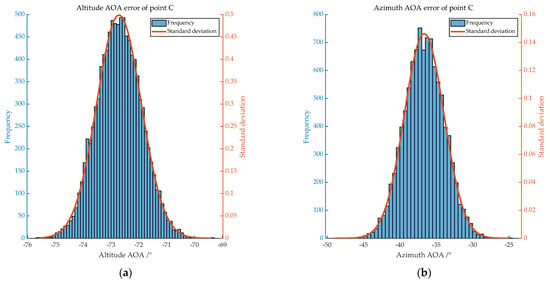

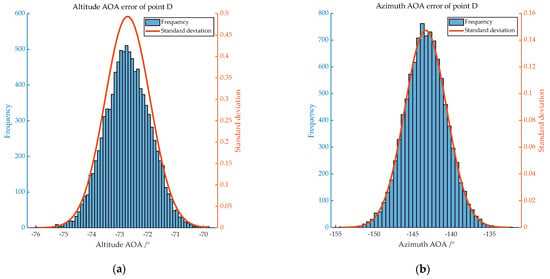

Figure 6, Figure 7, Figure 8 and Figure 9 display the distribution of AOA errors for the target sites when the parameter values in Table 1 are used. Table 4 displays the computation results and observational parameters.

Figure 6.

Distribution of AOA errors of A. (a) shows the distribution of altitude AOA error, with mean −72.747 and standard deviation 0.810; (b) shows the distribution of azimuth AOA error, with mean 143.377 and standard deviation 2.734.

Figure 7.

Distribution of AOA errors of B. (a) shows the distribution of altitude AOA error, with mean −72.733 and standard deviation 0.803; (b) shows the distribution of azimuth AOA error, with mean 36.590 and standard deviation 2.701.

Figure 8.

Distribution of AOA errors of C. (a) shows the distribution of altitude AOA error, with mean −72.702 and standard deviation 0.799; (b) shows the distribution of azimuth AOA error, with mean −36.655 and standard deviation 2.715.

Figure 9.

Distribution of AOA errors of D. (a) shows the distribution of altitude AOA error, with mean −72.726 and standard deviation 0.808; (b) shows the distribution of azimuth AOA error, with mean −143.310 and standard deviation 2.727.

Table 4.

AOA of measurements at each point under condition 1.

According to the simulations, under ideal flight conditions the standard deviations of the image-based AOA azimuth and altitude angle errors do not exceed approximately 2.73° and 0.81°, respectively.

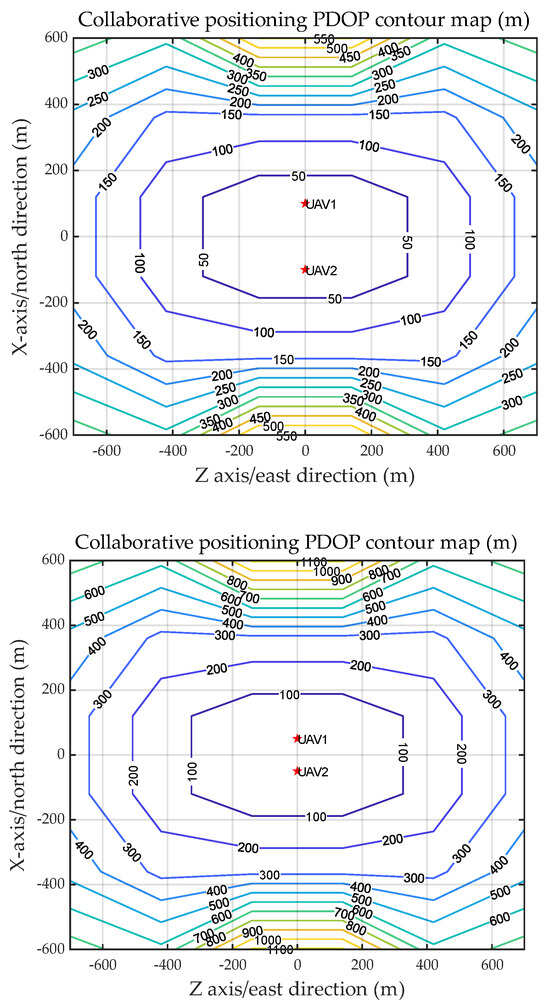

Based on the PDOP calculation method proposed in Section 4.1 and Section 4.2, the multi-UAV cooperative localization error is analyzed. First, two UAVs are set up for target localization; their position coordinates are shown in Table 5, the position error is 0 m, and the flight altitude is 3000 m. From the parameters in Table 2, the ground area covered by a captured image is about 1400 m × 1200 m when flying at a relative altitude of 3000 m. Within this area, the PDOP value at any position can be calculated and plotted as a contour map, as shown in Figure 10.

Table 5.

Coordinate distribution of UAVs.

Figure 10.

Two-UAV cooperative reconnaissance PDOP simulation (Baseline: 200 m and 100 m).

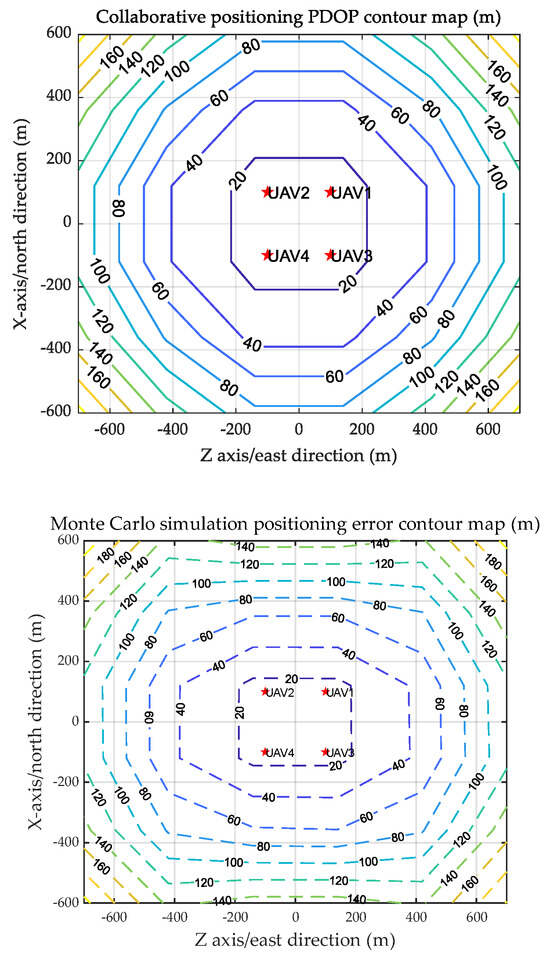

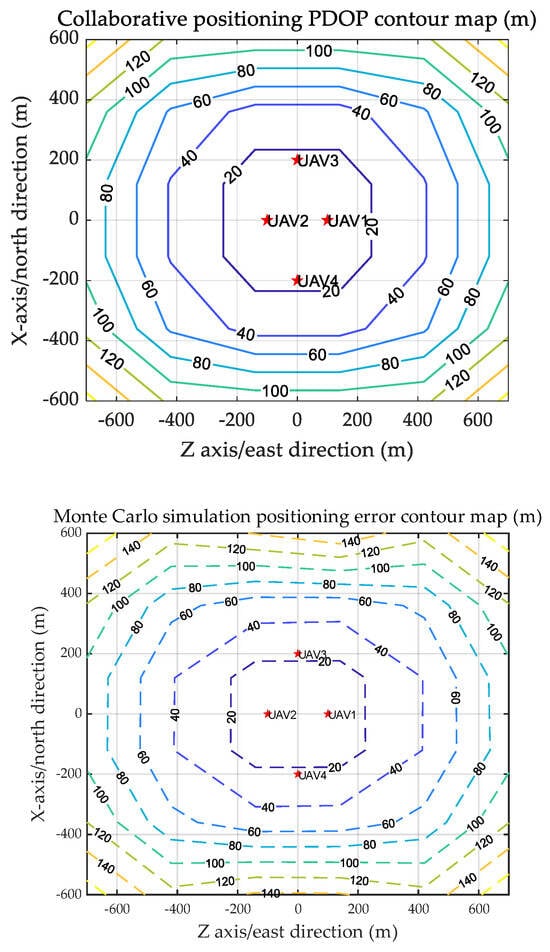

The results for two cooperating UAVs show that when the distance between the UAVs is 200 m and 100 m, the minimum PDOP is 50 m and 100 m, respectively; that is, the smaller the spacing, the larger the localization error. The target localization accuracy also depends on the geometric distribution of the UAVs; therefore, four UAVs are set up for target localization, and their errors are analyzed. The four UAVs are assumed to fly at a relative height of 3000 m, with the position coordinates in the 2D plane shown in Table 6. The calculation results are shown in Figure 11 and Figure 12.

Table 6.

Coordinate distribution of UAVs.

Figure 11.

PDOP and Monte Carlo simulation position error contour maps for square formation flying.

Figure 12.

PDOP and Monte Carlo simulation position error contour maps for diamond formation flying.

Figure 11 and Figure 12 compare the PDOP values of four-UAV cooperative target positioning under different formations with the Monte Carlo simulation results. The two are roughly equal, with a minimum positioning error of about 20 m.

In summary, a PDOP-based error computation model was constructed from the multi-UAV collaborative positioning model and validated by Monte Carlo simulation. The simulation results show that the baseline has a significant impact on the collaborative positioning error of two UAVs, with a minimum positioning error of roughly 50 m. Under the same conditions, the collaborative positioning error of four UAVs is less affected by the formation, with a minimum positioning error of roughly 20 m. Filtering methods can further improve the positioning accuracy.

5. Target Localization Method Based on Cubature Kalman Filter

Nonlinear filtering is required because the observation functions of both the time-difference and the AOA measurements are nonlinear [15,16]. Because of its linearization step, the Extended Kalman Filter (EKF) achieves good filtering performance only when the linearization error of the system’s state and observation equations is small [17,18]. The particle filter (PF), a more recent development, is also well suited to nonlinear estimation problems [19,20]. The improvements in localization error achieved by the EKF and PF algorithms were therefore compared.

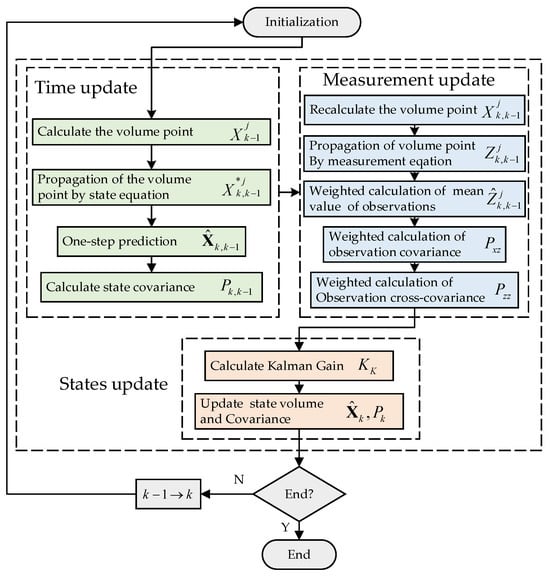

Arasaratnam and Haykin proposed the Cubature Kalman Filter (CKF) algorithm [21] to solve the integration problem of nonlinear functions in filtering algorithms. Like the Unscented Kalman Filter (UKF), the CKF first calculates a set of sampling points (cubature points, referred to below as volume points), then propagates them through the state equation to obtain the one-step prediction, and finally corrects the predicted state through the measurement update and the Kalman gain. In contrast to the UKF, the CKF obtains the volume points from the spherical-radial cubature rule; it does not linearize the state equation but passes the volume points directly through the nonlinear state equation, and its weights are always positive. This improves the algorithm’s robustness and accuracy [22,23,24].

According to the third-degree spherical-radial rule, the number of volume points for an n-dimensional state vector is 2n, and the set of volume points is designated as:

where denotes the volume point, i.e., the column of , and can be expressed as:

The weights of each volume point are equal, as written:
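For reference, the third-degree spherical-radial rule in its standard textbook form can be written as below. The symbols $m$, $\xi_i$, $\omega_i$, and $[1]_i$ are the notation commonly used for the CKF and are assumed here; they may differ from the symbols in the original equations.

$$
m = 2n, \qquad \xi_i = \sqrt{\frac{m}{2}}\,[1]_i = \sqrt{n}\,[1]_i, \qquad \omega_i = \frac{1}{m} = \frac{1}{2n}, \qquad i = 1, \dots, 2n
$$

where $[1]_i$ denotes the $i$-th column of the $n \times 2n$ matrix $\left[\, I_n \;\; -I_n \,\right]$. For example, for $n = 2$ the four points are $(\sqrt{2}, 0)$, $(0, \sqrt{2})$, $(-\sqrt{2}, 0)$, and $(0, -\sqrt{2})$, each with weight $1/4$.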

For the following target state equation and the measurement equation:

The Cubature Kalman Filtering algorithm and the specific process are given below:

Step 1: Calculation of volume points.

where a Cholesky decomposition of gives .

Step 2: One-step prediction of volume points.

Step 3: Compute one-step prediction and covariance matrix of state quantities.

Step 4: Calculation of new volume points based on one-step predicted values.

Step 5: Observation prediction for new volume points.

Step 6: Calculate the mean and covariance of the target observations weighted by the observation predictions of the volume points.

Step 7: Calculating Kalman gain.

Step 8: Calculate system state update and covariance update.

The flow of the Cubature Kalman Filtering algorithm is shown in Figure 13.

Figure 13.

Flow of the Cubature Kalman Filtering algorithm.
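Steps 1–8 can be sketched in Python as follows. This is a generic CKF cycle with additive process and measurement noise, written with NumPy; the function names, the argument order, and the use of user-supplied callables `f` (state transition) and `h` (measurement) are assumptions made for this illustration rather than the authors' implementation.

```python
import numpy as np


def cubature_points(x, p):
    """Steps 1 and 4: generate the 2n cubature (volume) points around mean x."""
    n = x.size
    s = np.linalg.cholesky(p)                                # p = s @ s.T
    xi = np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)])     # n x 2n
    return x[:, None] + s @ xi


def ckf_step(x, p, z, f, h, q, r):
    """One predict/update cycle of the cubature Kalman filter."""
    n = x.size
    # Steps 1-3: propagate the points and form the predicted mean and covariance.
    pts = cubature_points(x, p)
    pts_pred = np.column_stack([f(pts[:, i]) for i in range(2 * n)])
    x_pred = pts_pred.mean(axis=1)
    dx = pts_pred - x_pred[:, None]
    p_pred = dx @ dx.T / (2 * n) + q
    # Steps 4-6: redraw points, predict the measurement and its covariances.
    pts2 = cubature_points(x_pred, p_pred)
    z_pts = np.column_stack([h(pts2[:, i]) for i in range(2 * n)])
    z_pred = z_pts.mean(axis=1)
    dz = z_pts - z_pred[:, None]
    dx2 = pts2 - x_pred[:, None]
    p_zz = dz @ dz.T / (2 * n) + r
    p_xz = dx2 @ dz.T / (2 * n)
    # Steps 7-8: Kalman gain, state update, covariance update.
    k = p_xz @ np.linalg.inv(p_zz)
    x_new = x_pred + k @ (z - z_pred)
    p_new = p_pred - k @ p_zz @ k.T
    return x_new, p_new
```

Because all weights equal 1/(2n), the weighted sums reduce to plain averages. For linear `f` and `h` the cycle reproduces the ordinary Kalman filter, which is a convenient sanity check before applying it to nonlinear AOA measurements.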

6. Simulation and Analysis

6.1. Co-Localization Algorithm Verification

A field test was performed to validate the correctness of the co-localization algorithm. For the same ground identifier, twelve UAV aerial photographs taken under varied working conditions were selected and paired into six groups of two images each. The target location was solved separately for each group, yielding six sets of target coordinate values. An example of a group of aerial images is shown in Figure 14.

Figure 14.

Ground images captured by two drones at the same time. The number “28” in the red box is the ground identifier, whose true coordinates are known.

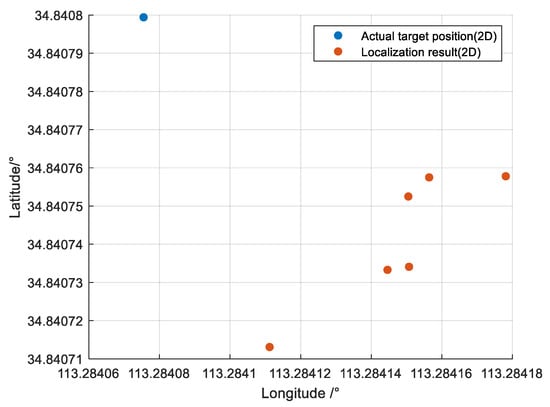

Figure 15 shows the target localization results (the UAV states used for collaborative target localization are listed in Table 7, and the calculated target positions for these states are listed in Table 8). The blue solid dots in the figure represent the true position of the ground identifier (target), while the red solid dots represent the results of collaborative target localization by two UAVs. There are six sets of data in total. It can be seen that the algorithm accurately calculates the position of the target in the two-dimensional plane.

Figure 15.

The distribution of actual target position and localization result in a two-dimensional plane.

Table 7.

States of the 12 UAVs used for localization.

Table 8.

Two UAVs collaborative target positioning results (first group ID: 1&5, second group ID: 2&6, third group ID: 3&4, fourth group ID: 7&9, fifth group ID: 8&11, sixth group ID: 10&12).

6.2. Multi-UAV Co-Location and Tracking

Simulations for stationary and moving targets are discussed in this section and used to validate the effectiveness of the CKF. While the four UAV observation platforms discover and localize the target, each UAV moves along a specific trajectory and makes repeated observations of the target area. The observations from the first measurement are combined with the positions at which the UAVs begin positioning to obtain an initial estimate of the target position and the corresponding covariance matrix of the zero-mean estimation error. These initialize the filtering algorithm, which then processes the subsequent observations to obtain a more accurate estimate of the target.

Figure 3 shows the NUE (North-Up-East) coordinate system, and Table 9 lists the initial state of the ground target, the number of UAVs, their initial states, and the measurement errors.

Table 9.

Co-location simulation parameters.

Ref. [25] provides the basic motion models of the target. In this study, the CKF-based target localization approach is validated using the target’s constant-velocity linear motion and constant-rate turning motion.

The discrete constant-velocity linear motion and turning motion models of the target are given by the following equations:

where and are the state vectors of the target’s constant linear and turning motion models, and the status transition matrices, and , the system noise.
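The two motion models can be illustrated with the standard constant-velocity (CV) and coordinated-turn (CT) transition matrices, sketched below in Python. The state ordering `[x, vx, y, vy]`, the sampling interval `t`, the turn rate `omega`, and the function names are assumptions for this example; the paper's own state vectors and matrices may be arranged differently, and the process noise term is omitted.

```python
import numpy as np


def cv_matrix(t):
    """Constant-velocity transition matrix for the state [x, vx, y, vy]."""
    return np.array([
        [1.0, t, 0.0, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, t],
        [0.0, 0.0, 0.0, 1.0],
    ])


def ct_matrix(t, omega):
    """Coordinated-turn transition matrix with known turn rate omega [rad/s]."""
    s, c = np.sin(omega * t), np.cos(omega * t)
    return np.array([
        [1.0, s / omega, 0.0, -(1.0 - c) / omega],
        [0.0, c, 0.0, -s],
        [0.0, (1.0 - c) / omega, 1.0, s / omega],
        [0.0, s, 0.0, c],
    ])
```

With a turn rate of 2π/80 rad/s, i.e., one revolution every 80 s, applying `ct_matrix(1.0, 2 * np.pi / 80)` eighty times returns the state to its starting value, and as the turn rate approaches zero the CT matrix approaches the CV matrix.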

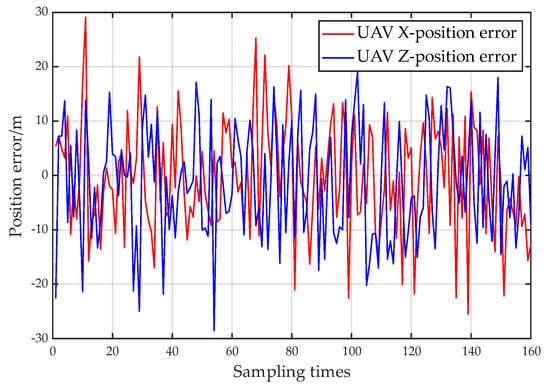

To model the positioning error of the UAVs themselves, a random normal error with a mean of 0 and a standard deviation of 10 m is added to the UAV positions along their trajectories. Figure 16 depicts the error between the measured position and the real position of the UAV.

Figure 16.

Diagrammatic representation of the noise interference on the drone’s position, superimposed on the motion trajectory, with a mean of 0 and a standard deviation of 10 m.

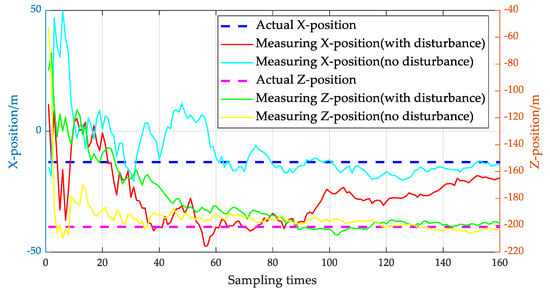

Four UAVs approach the target from various angles. Figure 17 depicts the positioning result for a stationary target. The positioning deviation of the target quickly converges from tens of meters to less than ten meters.

Figure 17.

The position result for stationary target.

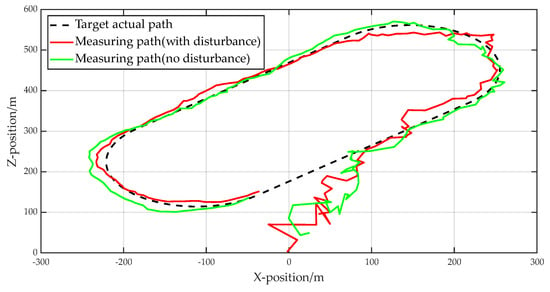

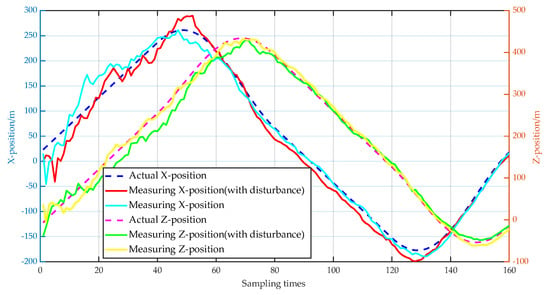

Assume that the target alternates between straight-line motion and turning motion and that one revolution of the turning motion takes 80 s. Four UAVs are programmed to proceed toward the target’s initial position to carry out continuous localization and tracking of the target. Figure 18 depicts the motion trajectories of the target and the UAVs and the tracking status at a certain time.

Figure 18.

Moving target localization and tracking trajectory status (2D).

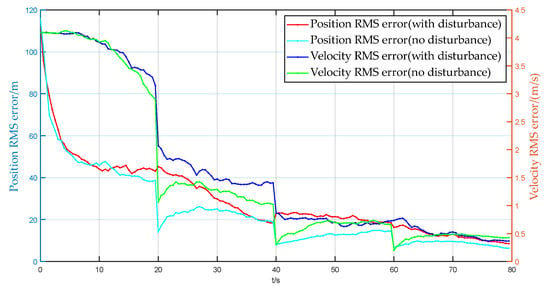

To account for the interference of random perturbations on positioning outcomes, 100 Monte Carlo simulations were run, and the RMSE (Root Mean Square Error) was employed as the accuracy judgment measure.
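The RMSE over Monte Carlo runs can be computed as in the short Python sketch below. The array layout (runs × time steps × dimensions) and the function name are assumptions made for this example.

```python
import numpy as np


def rmse_per_step(estimates, truth):
    """RMSE at each time step over all Monte Carlo runs.

    estimates: array of shape (runs, steps, dims)
    truth:     array of shape (steps, dims)
    """
    err = estimates - truth[None, :, :]
    sq_norm = np.sum(err ** 2, axis=2)          # squared error norm per run and step
    return np.sqrt(np.mean(sq_norm, axis=0))    # average over runs, then root
```

Applying the function separately to the position and velocity components of the state gives the two kinds of curves described for the moving-target case.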

Figure 19 shows the position components when tracking a moving target; the proposed algorithm converges quickly and tracks the target stably. Figure 20 shows the position and velocity RMSE when tracking a moving target: the tracking accuracy gradually improves over time, the position error converges to within 12 m, and the velocity error converges to within 0.5 m/s, which shows that the algorithm maintains high accuracy under external interference.

Figure 19.

Tracking status of moving target position component (2D).

Figure 20.

RMSE of target position and velocity prediction.

7. Conclusions

The target co-localization approach presented in this paper does not rely on elevation or ranging information. It can calculate the positions of multiple targets at once, considerably improving UAV detection capability. The approach is almost hardware-independent and is therefore suitable for low-cost small UAV cluster systems. Analysis with the error model gives a minimum target positioning error of 20 m at a relative flight altitude of 3000 m under typical flight conditions. The unscented Kalman filter (UKF) is simulated and verified for both stationary and moving targets. The target localization accuracy improves by 40% compared with the original localization result, and the target can be tracked continuously and with high accuracy in the presence of interference.

Author Contributions

Conceptualization, M.D.; methodology, M.D.; software, M.D., T.W. and K.Z.; validation, M.D., H.Z. and T.W.; formal analysis, M.D. and K.Z.; investigation, T.W.; resources, H.Z.; data curation, H.Z.; writing—original draft preparation, M.D. and H.Z.; writing—review and editing, M.D. and H.Z.; visualization, M.D. and K.Z.; supervision, M.D.; project administration, M.D.; funding acquisition, M.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, Minglei Du, upon reasonable request.

Conflicts of Interest

No potential conflict of interest was reported by the authors.

References

- Chen, W.-C.; Lin, C.-L.; Chen, Y.-Y.; Cheng, H.-H. Quadcopter Drone for Vision-Based Autonomous Target Following. Aerospace 2023, 10, 82.

- Elmeseiry, N.; Alshaer, N.; Ismail, T. A Detailed Survey and Future Directions of Unmanned Aerial Vehicles (UAVs) with Potential Applications. Aerospace 2021, 8, 363.

- Cai, Y.; Guo, H.; Zhou, K.; Xu, L. Unmanned Aerial Vehicle Cluster Operations under the Background of Intelligentization. In Proceedings of the 2021 3rd International Conference on Artificial Intelligence and Advanced Manufacture (AIAM), Manchester, UK, 23–25 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 525–529.

- Chen, X.; Qin, K.; Luo, X.; Huo, H.; Gou, R.; Li, R.; Wang, J.; Chen, B. Distributed Motion Control of UAVs for Cooperative Target Location Under Compound Constraints. In Proceedings of the 2021 18th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP), Chengdu, China, 17–19 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 576–580.

- Kim, B.; Pak, J.; Ju, C.; Son, H.I. A Multi-Antenna-based Active Tracking System for Localization of Invasive Hornet Vespa velutina. In Proceedings of the 2022 22nd International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 27 November–1 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1693–1697.

- Li, H.; Fan, X.; Shi, M. Research on the Cooperative Passive Location of Moving Targets Based on Improved Particle Swarm Optimization. Drones 2023, 7, 264.

- Wang, S.; Li, Y.; Qi, G.; Sheng, A. Sensor Selection and Deployment for Range-Only Target Localization Using Optimal Sensor-Target Geometry. IEEE Sens. J. 2023, 23, 21757–21766.

- Zhao, Y.; Hu, D.; Zhao, Y.; Liu, Z. Moving target localization for multistatic passive radar using delay, Doppler and Doppler rate measurements. J. Syst. Eng. Electron. 2020, 31, 939–949.

- Sudano, J.J. An exact conversion from an Earth-centered coordinate system to latitude, longitude and altitude. In Proceedings of the IEEE 1997 National Aerospace and Electronics Conference, NAECON 1997, Dayton, OH, USA, 14–17 July 1997; IEEE: Piscataway, NJ, USA, 1997; Volume 2, pp. 646–650.

- Wang, M.; Zhang, D.; Tang, S.; Xu, B.; Zhao, J. Online Task Planning Method for Drone Swarm based on Dynamic Coalition Strategy. Acta Armamentarii 2023, 44, 2207–2223.

- Du, M.; Li, S.; Zheng, K.; Li, H.; Che, X. Target Location Method of Small Unmanned Reconnaissance Platform Based on POS Data. In Proceedings of the 2021 International Conference on Autonomous Unmanned Systems, Changsha, China, 24–26 September 2021.

- Wu, L.; Wang, B.; Wei, J.; He, S.; Zhao, Y. Dual-aircraft passive localization model based on AOA and its solving method. Syst. Eng. Electron. Technol. 2020, 42, 978–986.

- Fan, B.; Li, G.; Li, P.; Yi, W.; Yang, Z. Research and Application of PDOP Model for Laser Interferometry Measurement of Three-Dimensional Point Coordinates. Surv. Mapp. Bull. 2015, 11, 28–31.

- Yang, K.; Huang, J. Positioning Accuracy Evaluation of Satellite Navigation Systems. Mar. Surv. Mapp. 2009, 29, 26–28.

- Qin, Y.; Zhang, H.; Wang, S. Kalman Filter and Combined Navigation Principles; Northwestern Polytechnical University Press: Xi’an, China, 2015.

- Neusypin, K.; Kupriyanov, A.; Maslennikov, A.; Selezneva, M. Investigation into the nonlinear Kalman filter to correct the INS/GNSS integrated navigation system. GPS Solut. 2023, 27, 91.

- Gong, B.; Wang, S.; Hao, M.; Guan, X.; Li, S. Range-based collaborative relative navigation for multiple unmanned aerial vehicles using consensus extended Kalman filter. Aerosp. Sci. Technol. 2021, 112, 106647.

- Potokar, E.R.; Norman, K.; Mangelson, J.G. Invariant Extended Kalman Filtering for Underwater Navigation. IEEE Robot. Autom. Lett. 2021, 6, 5792–5799.

- Yue, J.; Wang, H.; Zhu, D.; Aleksandr, C. UAV formation cooperative navigation algorithm based on improved particle filtering. Chin. J. Aeronaut. 2023, 44, 251–262.

- Liu, Y.; He, Z.; Lu, Y.; Di, K.; Wen, D.; Zou, X. Autonomous navigation and localization in IMU/UWB group domain based on particle filtering. Transducer Microsyst. Technol. 2022, 41, 47–50.

- Arasaratnam, I.; Haykin, S. Cubature Kalman Filters. IEEE Trans. Autom. Control 2009, 54, 1254–1269.

- Luo, Q.; Shao, Y.; Li, J.; Yan, X.; Liu, C. A multi-AUV cooperative navigation method based on the augmented adaptive embedded cubature Kalman filter algorithm. Neural Comput. Appl. 2022, 34, 18975–18992.

- Liu, W.; Shi, Y.; Hu, Y.; Hsieh, T.H.; Wang, S. An improved GNSS/INS navigation method based on cubature Kalman filter for occluded environment. Meas. Sci. Technol. 2023, 34, 035107.

- Gao, B.; Hu, G.; Zhang, L.; Zhong, Y.; Zhi, X. Cubature Kalman filter with closed-loop covariance feedback control for integrated INS/GNSS navigation. Chin. J. Aeronaut. 2023, 36, 363–376.

- Jin, G.; Tan, L. Targeting Technology for Unmanned Reconnaissance Aircraft Optronic Platforms; Xi’an University of Electronic Science and Technology Press: Xi’an, China, 2012.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).