Abstract

We discuss several multivariate extensions of the Multiplicative Error Model to take into account dynamic interdependence and contemporaneously correlated innovations (vector MEM or vMEM). We suggest copula functions to link Gamma marginals of the innovations, in a specification where past values and conditional expectations of the variables can be simultaneously estimated. Results with realized volatility, volumes and number of trades of the JNJ stock show that significantly superior realized volatility forecasts are delivered with a fully interdependent vMEM relative to a single equation. Alternatives involving log–Normal or semiparametric formulations produce substantially equivalent results.

Keywords:

GARCH; MEM; realized volatility; trading volume; trading activity; trades; copula; volatility forecasting JEL Classification:

C32; C53; C58

1. Introduction

Dynamics in financial markets can be characterized by many indicators of trading activity such as absolute returns, high-low range, number of trades in a certain interval (possibly labeled as buys or sells), volume, ultra-high frequency based measures of volatility, financial durations, spreads and so on.

Engle (2002) reckons that one striking regularity behind financial time series is that persistence and clustering characterizes the evolution of such processes. As a result, the dynamics of such variables can be specified as the product of a conditionally deterministic scale factor, which evolves according to a GARCH–type equation, and an innovation term which is iid with unit mean. Such models are labeled Multiplicative Error Models (MEM) and can be seen as a generalization of the GARCH (Bollerslev 1986) and of the Autoregressive Conditional Duration (ACD, Engle and Russell (1998)) approaches. One of the advantages of such a model is to avoid the need to resort to logs (not possible when zeros are present in the data) and to provide conditional expectations of the variables of interest directly (rather than expectations of the logs). Empirical results show a good performance of these types of models in capturing the stylized facts of the observed series (e.g., for daily range, Chou (2005); for volatility, volume and trading intensity Hautsch (2008)).

The model was specified by Engle and Gallo (2006) in a multivariate context (vector MEM or vMEM) allowing just the lagged values of each variable of interest to affect the conditional expectation of the other variables. Such a specification lends itself to producing multi–step ahead forecasts: in Engle and Gallo (2006) three different measures of volatility (absolute returns, daily range and realized volatility), influence each other dynamically. Although such an equation–by–equation estimation ensures consistency of the estimators in a quasi-maximum likelihood context, given the stationarity conditions discussed by Engle (2002), correlation among the innovation terms is not taken into account and leads to a loss in efficiency.

However, full interdependence is not allowed by an equation–by–equation approach both in the form of past conditional expectations influencing the present and a contemporaneous correlations of the innovations. The specification of a multivariate distribution of the innovations is far from trivial: a straightforward extension to a joint Gamma probability distribution is not available except in very special cases. In this paper, we want to compare the features of a novel maximum likelihood estimation strategy adopting copula functions to other parametric and semiparametric alternatives. Copula functions allow to link together marginal probability density functions for individual innovations specified as Gamma as in Engle and Gallo (2006) or as zero–augmented distributions (as in Hautsch et al. (2013), distinguishing between the zero occurrences and the strictly positive realizations). Copula functions are used in a Multiplicative Error framework, but in a Dynamic Conditional Correlation context by Bodnar and Hautsch (2016). Within a Maximum Likelihood approach to the vector MEM, we want also to explore the performance of a multivariate log–Normal specification for the joint distribution of the innovations and the semiparametric approach presented in Cipollini et al. (2013) resulting in a GMM estimator. The range of potential applications of a vector MEM is quite wide: dynamic interactions among different values of volatility, volatility spillovers across markets (allowing multivariate-multi-step ahead forecasts and impulse response functions, order execution dynamics Noss (2007) specifies a MEM for execution depths).

This paper aims at discussing merits of each approach and their performance in forecasting realized volatility with augmented information derived from the dynamic interaction with other measures of market activity (namely, the volumes and the number of trades). We want conditional expectations to depend just on past values (not also on some contemporary information as in Manganelli (2005) and Hautsch (2008)).

What the reader should expect is the following: in Section 2 we lay out the specification of a vector Multiplicative Error Model, discussing the issues arising from the adoption of several types of copula functions linking univariate Gamma marginal distributions. In Section 3 we describe the Maximum Likelihood procedure leading to the inference on the parameters. In Section 4 we discuss the features of a parametric specification with a log–Normal and of a semiparametric approach suggested by Cipollini et al. (2013). In Section 5 we present the empirical application to three series of market trading activity, namely realized kernel volatility, traded volumes and the number of trades. The illustration is performed on the JNJ stock over a period between 2007 and 2013. What we find is that specifying the joint distribution of the innovations allowing for contemporaneous correlation dramatically improves the log–likelihood over an independent (i.e., equation–by–equation) approach. Richer specifications (where simultaneous estimation is unavoidable) deliver a better fit, improved serial correlation diagnostics, and a better performance in out–of–sample forecasting. The Student–T copula possesses better features than the Normal copula. Overall, the indication is that we will have significantly superior realized volatility forecasts when other trading activity indicators and contemporaneous correlations are considered. Concluding remarks follow.

2. A Copula Approach to Multiplicative Error Models

Let be a K–dimensional process with non–negative components. A vector Multiplicative Error Model (vMEM) for is defined as

where ⊙ indicates the Hadamard (element–by–element) product and indicates a diagonal matrix with its vector argument on the main diagonal. Conditionally upon the information set , a fairly general specification for is

where is and , and are . The vector has a generic element multiplied by a function related to a signed variable, be it a positive or negative return ( values) or a signed trade (buy or sell values), as to capture asymmetric effects. Let the parameters relevant for be collected in a vector .

Conditions for stationarity of are a simple generalization of those of the univariate case (e.g., Bollerslev (1986); Hamilton (1994)): a vMEM(1,1) with defined as in Equation (2) is stationary in mean if all characteristic roots of are smaller than 1 in modulus. We can think of as the impact matrix in the expression

If more lags are considered, the model is

where L is the maximum lag occurring in the dynamics. It is often convenient to represent the system (3) in its equivalent companion form

where is a vector obtained by stacking its elements columnwise and

with , is a identity matrix and is a matrix of zeros. The same stationarity condition holds in terms of eigenvalues of .

The innovation vector is a K–dimensional iid process with probability density function (pdf) defined over a support, the unit vector as expectation and a general variance–covariance matrix ,

The previous conditions guarantee that

where the latter is a positive definite matrix by construction.

The distribution of the error term can be specified in different ways. We discuss here a flexible approach using copula functions; different specifications are presented in Section 4.

Using copulas (cf., among others, Joe (1997) and Nelsen (1999), Embrechts et al. (2002), Cherubini et al. (2004), McNeil et al. (2005) and the review of Patton (2013) for financial applications), the conditional pdf of is given by

where is the pdf of the copula, and are the pdf and the cdf, respectively, of the marginals, and are parameters. A copula approach, hence, requires the specification of two objects: the distribution of the marginals and the copula function.

In view of the flexible properties shown elsewhere (Engle and Gallo 2006), for the first we adopt Gamma pdf’s (but other choices are possible, such as Inverse-Gamma, Weibull, log–Normal, and mixtures of them). This has the important feature of guaranteeing an interpretation of a Quasi Maximum Likelihood Estimator even if the choice of the distribution is not appropriate. For the second, we discuss some possible specifications within the class of Elliptical copulas which provides an unified framework encompassing the Normal, the Student-T and any other member of this family endowed with an explicit pdf.

Elliptical copulas have interesting features and widespread applicability in Finance (for details see McNeil et al. (2005), Frahm et al. (2003), Schmidt (2002)). They are copulas generated by Elliptical distributions, exactly in the same way as the Normal copula and the Student-T copula stem from the multivariate Normal and Student-T distributions, respectively.1

We consider a copula generated by an Elliptical distribution whose univariate ‘standardized’ marginals (intended here with location parameter 0 and dispersion parameter 1) have an absolutely continuous symmetric distribution, centered at zero, with pdf and cdf ( represents a vector of shape parameters). The density of the copula can then be written as

for suitable choices of , and , where , .

Two notable cases will be considered. The first is the Normal copula (McNeil et al. (2005), Cherubini et al. (2004), Bouyé et al. (2000)); with no explicit shape parameter , we have , . The pdf in Equation (9) becomes:

where , and denotes the cdf of the standard Normal distribution computed at x.

The Normal copula has many interesting properties: the ability to reproduce a broad range of dependencies (the bivariate version, according to the value of the correlation parameter, is capable of attaining the lower Fréchet bound, the product copula and the upper Fréchet bound), the analytical tractability, the ease of simulation. When combined with marginals, the resulting multivariate distribution is a special case of dispersion distribution generated from a Gaussian copula (Song 2000). We note that the conditional correlation matrix of has generic element approximately equal to , which can assume negative values too.

One of the limitations of the Normal copula is the asymptotic independence of its tails. Empirically, tail dependence is a behavior observed frequently in financial time series (see McNeil et al. (2005), among others). Elements of (being different indicators of the same asset or different assets) tend to be affected by the same extreme events. For this reason, as an alternative, we consider the Student-T copula which allows for asymptotically dependent tails. Differently from the Normal copula, when we get uncorrelated but not independent marginals (details in McNeil et al. (2005)). Thus, when considering the Student-T copula in Equation (9), with a scalar shape parameter, we have , , , and its explicit pdf is

where , and denotes the cdf of the Student-T distribution with degrees of freedom computed at x. Further specifications of the Student-T copula are in Demarta and McNeil (2005).

3. Maximum Likelihood Inference

In this section we discuss how to get full Maximum Likelihood (ML) inferences from the vMEM with the parametric specification (3) for (dependent on a parameter vector ) and a copula-based formulation of the conditional distribution of the vector error term (characterized by the parameter vector ). Inference on and can be discussed in turn, given that from the model assumptions the log-likelihood function l is

Considering a generic time t, it is useful to recall the sequence of calculations:

where is the parameter involved in the i-th element of the vector.

3.1. Parameters in the Conditional Mean

Irrespective of the specification chosen for , the structure of the vMEM allows to express the portion of the score function corresponding to as

where

In order to have a zero expected score, we need or, equivalently, . As a consequence, the information matrix and the expected Hessian are given by

and

respectively, where the matrices

and

depend only on but not on .

For a particular parametric choice of the conditional distribution of , we need to plug the specific expression of into . For instance, considering the generic copula formulation (8), then

so that has elements

In what follows we provide specific formulas for the elliptical copula formulation (9) and its main sub-cases.

3.2. Parameters in the pdf of the Error Term

Under a copula approach, the portion of the score function corresponding to the term (cf. Equation (12)) depends on a vector ( and are the parameters of the copula function and of the marginals, respectively—cf. Section 2),

Therefore,

and

As detailed before, beside a possible shape parameter , elliptical copulas are characterized by a correlation matrix which, in view of its full ML estimation, can be expressed (cf. McNeil et al. (2005), p. 235) as

where is an upper-triangular matrix with ones on the main diagonal and is a diagonal matrix with diagonal entries and for . By so doing, the estimation of is transformed into an unconstrained problem, since the free elements of can vary into . We can then write .

Let us introduce a compact notation as follows: , , , , , . We can then write

where we used .

In specific cases we get:

- Normal copula: , ;

- Student-T copula: , , .

Parameters entering the matrix

The portion of the score relative to the free parameters of the matrix has elements

Using some algebra we can show that

and

By replacing them into (23), we obtain

Parameters entering the vector

The portion of the score relative to is

The derivative of can sometimes be computed analytically. For instance, for the Student–T copula we have

For the remaining quantities, we suggest numerical derivatives when, as in the Student–T case, the quantile function cannot be computed analytically.Parameters entering the vector

The portion of the score relative to has elements

After some algebra we obtain

where

For instance, if a marginal has a distribution then

whereas can be computed numerically.

Parameters entering the vector

By exploiting the notation introduced in this section, we can now detail the structure of entering into (16) and then into the portion of the score function relative to . From (22), (cf. (16)) has elements

where is given in (25). For our choice, is the pdf of a distribution, so that

3.2.1. Expectation Targeting

Assuming weak-stationarity of the process, numerical stability and a reduction in the number of parameters to be estimated can be achieved by expressing in terms of the unconditional mean of the process, say , which can be easily estimated by the sample mean (expectation targeting2). Since is well defined and is equal to

Equation (3) becomes

In a GARCH framework, the consequences of this practice have been been investigated by Kristensen and Linton (2004) and, more recently, by Francq et al. (2011) who show its merits in terms of stability of estimation algorithms and accuracy in estimation of both coefficients and long term volatility (cf. Appendix A for some details in the present context).

From a technical point of view, a trivial replacement of by the sample mean followed by a ML estimation of the remaining parameters, preserves consistency but leads to wrong standard errors (Kristensen and Linton 2004). The issue can be faced by identifying the inference problem as involving a two-step estimator (Newey and McFadden (1994, ch. 6)), namely by rearranging as , where collects all model parameters but . Under conditions able to guarantee consistency and asymptotic normality of (in particular, the existence of : see Francq et al. (2011)), we can adapt the notation of Newey and McFadden (1994) to write the asymptotic variance of as

where

and

is the moment function giving the sample average as an estimator of . The matrix denotes the variance-covariance matrix of partitioned in the corresponding blocks.

To give account of the necessary modifications to be adopted with expectation targeting, we provide sufficiently general and compact expressions for the parameters (the unconditional mean) and (the remaining parameters) in the conditional mean expressed by (29).

where

The expression for is obtained by using the technique in Horváth et al. (2006) and Francq et al. (2011). In this sense, we extend the cited works to a multivariate formulation including asymmetric effects as well.

Some further simplification is also possible when the model is correctly specified since, in such a case, and leading to and to

for what concerns the inner part of (30).

3.2.2. Concentrated Log-Likelihood

Some further numerical estimation stability and reduction in the number of parameters can be achieved—if needed—within the framework of elliptical copulas: we can use current values of residuals to compute current estimates of (Kendall correlations are suggested by Lindskog et al. (2003)) and of the shape parameter (tail dependence indices are proposed by Kostadinov (2005)). This approach may be fruitful with other copulas as well when sufficiently simple moment conditions can be exploited. A similar strategy can be applied also to the parameters of the marginals. For instance, if they are assumed distributed, the relationship leads to very simple estimator of from current values of residuals. By means of this approach, the remaining parameters can be updated from a sort of pseudo-loglikelihood conditioned on current estimates of the pre-estimated parameters.

In the case of a Normal copula a different strategy can be followed. The formula of the (unconstrained) ML estimator of the matrix (Cherubini et al. 2004, p. 155), namely

where is a matrix, can be plugged into the log-likelihood in place of obtaining a sort of concentrated log-likelihood

leading to a relatively simple structure of the score function. However, this estimator of is obtained without imposing any constraint relative to its nature as a correlation matrix ( and positive definiteness). Computing directly the derivatives with respect to the off–diagonal elements of we obtain, after some algebra, that the constrained ML estimator of satisfies the following equations:

for , where and indicate, respectively, the i–th row and the j–th column of the matrix . Unfortunately, these equations do not have an explicit solution.3 An acceptable compromise which should increase efficiency, although formally it cannot be interpreted as an ML estimator, is to normalize the estimator obtained above in order to transform it to a correlation matrix:

where . This solution can be justified observing that the copula contribution to the likelihood depends on exactly as if it was the correlation matrix of iid rv’s normally distributed with mean and correlation matrix (see also (McNeil et al. 2005, p. 235)). Using this constrained estimator of , the concentrated log-likelihood becomes

It is interesting to note that, as long as (31), (32) too gives a relatively simple structure of the score function. Using some tedious algebra, we can show that the components of the score corresponding to and have exactly the same structure as above, with the quantity into (25) changed to

where the matrix is here given by

and indicates here the pdf of the standard normal computed at its argument. Of course, also in this case the parameters of the marginals can be updated by means of moment estimators computed from current residuals (instead that via ML) exactly as explained above.

3.3. Asymptotic Variance-Covariance Matrix

As customary in ML inference, the asymptotic variance-covariance matrix of parameter estimators can be expressed by using the Outer-Product of Gradients (OPG), the Hessian matrix, or in the sandwich form, more robust toward error distribution misspecification. The last option is what we use in the application of Section 5.

4. Alternative Specifications of the Distribution of Errors

4.1. Multivariate Gamma

The generalization of the univariate gamma adopted by Engle and Gallo (2006) to a multivariate counterpart is frustrated by the limitations of the multivariate Gamma distributions available in the literature (all references below come from (Johnson et al. 2000, chapter 48)): many of them are bivariate formulations, not sufficiently general for our purposes; others are defined via the joint characteristic function, so that they require tedious numerical inversion formulas to find their pdf’s. The formulation that is closest to our needs (it provides all univariate marginal probability functions for as ), is a particular version of the multivariate Gamma’s by Cheriyan and Ramabhadran (henceforth , which is equivalent to other versions by Kowalckzyk and Trycha and by Mathai and Moschopoulos):

where and (Johnson et al. 2000, pp. 454–470). The multivariate pdf is expressed in terms of a cumbersome integral and the conditional correlations matrix of has generic element

which is restricted to be positive and is strictly related to the variances and . Given these drawbacks, Multivariate Gamma’s will not be adopted here.

4.2. Multivariate Lognormal

A different specification is obtained assuming conditionally log–Normal,

where and are, respectively, the mean vector and the variance-covariance matrix of and the constraint in (34) is needed in order for to be equal to (cf. Equation (5)).

The log-likelihood function is obtained by replacing the expression of coming from (34) into Equation (12), leading to

Accordingly, the portion of the score related to the derivative of l in (the parameters in the conditional mean) is given by Equation (14), with defined as in (15) and

leading to

A full application of the ML principle would require optimization of (35) also in the elements of the matrix . However, differently from the maximization with respect to the same parameters of a Gaussian log-likelihood, this approach is tedious since the diagonal elements of appear also in the term , complicating the constraints to preserve its positive definiteness.

A possible approach to bypass this issue would be to resort to a reparameterization of based on its Cholesky decomposition, as we did in Section 3 to represent the correlation matrix in the copula-based specifications. Here we adopted a simpler approach, based on a method of moment estimator of the elements. The ensuing estimation algorithm follows the sequence:

iterating until convergence.

Following (Newey and McFadden 1994, ch. 6) once again, the resulting asymptotic variance-covariance matrix of can be expressed as

where

is the t-th addend in (37) and is estimated with the corresponding portion of the variance-covariance matrix of log-residuals (). After some algebra we get

where is defined in (15) and indicates the -th element of .4 Because of (39) and (40), Equation (38) simplifies to

4.3. Semiparametric

As an alternative, we can use the semiparametric approach by Cipollini et al. (2013), where the distribution of the error term is left unspecified. In this case the pseudo-score equation corresponding to the parameter is again given by Equation (14), with defined as in (15) and

leading to the equation

The ensuing estimation algorithm works similarly to the log–Normal case iterating until convergence the following sequence:

The asymptotic variance-covariance matrix of is given by

the form of which looks very similar to in Equation (39).

The (pseudo-)score Equations (37) and (43) look quite similar: beside a simpler expression of the GMM asymptotic variance-covariance matrix of , the essential difference rests in the nature of the martingale difference (MD) ‘driving’ the equation. The GMM relies on minimal model assumptions and does not require logs; as a consequence, it is feasible also in case of zeros in the data. Apart from the need to take logs, the expression of in the log–Normal case is a MD only if has as its mean; if this is not true, we would have a corresponding bias in estimating the coefficient appearing in the conditional mean .

5. Trading Activity and Volatility within a vMEM

Trading activity produces a lot of indicators characterized by both simultaneous and dynamic interdependence. In this application, we concentrate on the joint dynamics of three series stemming from such trading activity, namely volatility (measured as realized kernel volatility, cf. Barndorff-Nielsen et al. (2011), but also Andersen et al. (2006) and references therein), volume of shares and number of shares traded daily. The relationship between volatility and volume as relating to trading activity was documented, for example, in the early contribution by Andersen (1996). Ever since, the evolution of the structure of financial markets, industry innovation, the increasing participation of institutional investors and the adoption of automated trading practices have strengthened such a relationship, and the number of trades clearly reflects an important aspect of trading strategies. For the sake of parsimony, we choose to focus on the realized volatility forecasts as the main product of interest from the multivariate model exploiting the extra information in the other indicators, as well as the contemporaneous correlation in the error terms.

The availability of ultra high frequency data allows us to construct daily series of the variables exploiting the most recent development in the volatility measurement literature.

As a leading example, we consider Johnson & Johnson (JNJ) between 3 January 2007 to 30 June 2013 (1886 observations). Such a stock has desirable properties of liquidity and a limited riskiness represented by a market beta generally smaller than 1. Raw trade data from TAQ are cleaned according to the Brownlees and Gallo (2006) algorithm. Subsequently, we build the daily series of realized kernel volatility, following Barndorff-Nielsen et al. (2011), computing the value at day t as

where is the Parzen kernel

is the j-th high frequency return computed according to (Barndorff-Nielsen et al. 2009, Section 2.2) and is the intradaily return of the j-th bin (equally spaced on 15 min intervals). For volumes (), that is the total number of shares traded during the day, and the number of trades () executed during the day (irrespective of their size), we simply aggregate the data (sum of intradaily volumes and count of the number of trades, respectively).

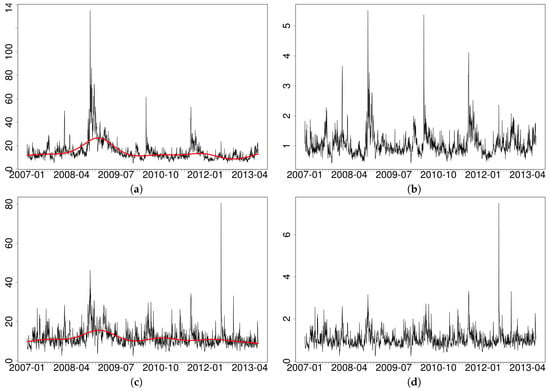

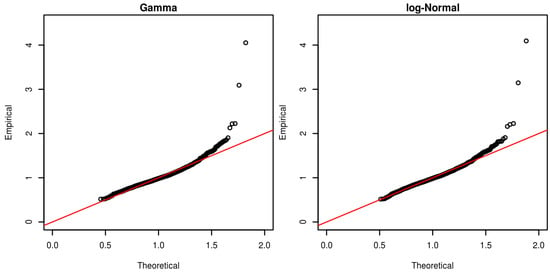

According to Figure 1, the turmoil originating with the subprime mortgage crisis is clearly affecting the profile of the series with an underlying low frequency component. For volatility, the presence of a changing average level was analyzed by Gallo and Otranto (2015) with a number of non-linear MEM’s. Without adopting their approach, in what follows we implement a filter (separately for each indicator) in order to identify short term interactions among series apart from lower frequency movements. The presence of an upward slope in the first portion of the sample is apparent and is reminiscent of the evidence produced by Andersen (1996) for volumes on an earlier period. Remarkably, this upward movement is interrupted after the peak of the crisis in October 2008, with a substantial and progressive reduction of the average level of the variables. To remove this pattern, we adopt a solution similar to Andersen (1996), that is, a flexible function of time which smooths out the series. Extracting low frequency movements in a financial market activity series with a spline is reminiscent of the stream of literature initiated by Engle and Rangel (2008) with a spline-GARCH and carried out in Brownlees and Gallo (2010) within a MEM context.

Figure 1.

Time series of the trading activity indices for JNJ (3 January 2007–30 June 2013). (a,c,e) original data; (b,d,f) data after removing the low frequency component. (a,b) realized kernel volatility (annualized percentage); (c,d) volumes (millions); (e,f) number of trades (thousands). The spike on 13 June 2012 corresponds to an important acquisition and a buy back of some of its common stock by a subsidiary.

In detail, assuming that this component is multiplicative, we remove it in each indicator as follows:

- we take the log of the original series;

- we fit on each log-series a spline based regression with additive errors, using time t (a progressive counter from the first to the last observation in the sample) as an independent variable;5

- the residuals of the previous regression are then exponentiated to get the adjusted series.

When used to produce out-of-sample forecasts of the original quantities, the described approach is applied assuming that the low frequency component remains constant at the last in-sample estimate. This strategy is simple to implement and fairly reliable for forecasting at moderate horizons.

Table 1 shows some similarities across original and adjusted series, with some expected increase in the correlation between volumes and number of trades in the adjusted series (due to a smaller correlation in the corresponding low frequency components). Although still quite large overall, after adjustment, correlations are more spread apart: as mentioned, the value concerning volumes and the number of trades, above , is the highest one; is about , whereas the is still above , all confirming the intuition that the information contained in other variables related to trading activity relevant.

Table 1.

Correlations for JNJ (3 January 2007–30 June 2013). realized kernel volatility; volume; number of trades.

5.1. Modeling Results

In the application, we consider a vMEM on adjusted data, where the conditional expectation has the form (cf. Equation (3))

In order to appreciate the contribution of the different model variables, we consider alternative specifications for the coefficient matrices and ( and are kept diagonal in all specifications), and for the error term.

As of the former, we consider specifications with both and diagonal (labeled D); full and diagonal (labeled A); diagonal and full (labeled B); both and full (labeled ). For the joint distribution of the errors, we adopt a Student–T, a Normal and an Independent copula (T, N and I as respective labels), in all cases with Gamma distributed marginals. As a comparison, the specification is also estimated by ML with log–Normal (AB-LN) and with GMM in the semiparametric AB-S. The estimated specifications are summarized in Table 2. When coupled with the conditional means in the table, the specifications with the Independent copula can be estimated equation–by–equation.

Table 2.

Estimated specifications of the vMEM defined by (1) and (44), with error distribution specified, alternatively, by (8), or (34), or (5). Notation: I for Independent copula with Gamma marginals; N for Normal copula with Gamma marginals; T for Student-T copula with Gamma marginals; for log–Normal; S for semiparametric.

Estimation results are reported in Table 3, limiting ourselves to the equation for the realized volatility.6 Among copula-based specifications, the Student-T copula turns out to be the favorite version, judging upon a significantly higher log–likelihood function value, and lower information criteria;7 the equation–by–equation approach (Independent copula) is dominated in both respects.8

Table 3.

Estimated coefficients of the realized volatility equation for different model formulations (cf. Table 2) for JNJ (3 January 2007–30 June 2013). Robust t-stats in parentheses. An empty space indicates that the specification did not include the corresponding coefficient. Rows labeled and report the p-values for causality tests (see the text for details). Overall model diagnostics report Log-likelihood values, Akaike and Bayesian Information Criteria, and p-values of a joint Ljung–Box test of no autocorrelation at various lags. Last row reports the estimation time (in seconds).

An estimation of the Diagonal model with the Normal/Student-T copula function (details not reported) shows log-likelihood values of , respectively, (relative to the base value of in the first column), pointing to both a substantial improvement coming from the contemporaneous correlation of the innovations and to the joint significance of the other indicators when the A specification is adopted. The comparison of the A ( full; and diagonal) versus the B ( full; and diagonal) formulations, seems to indicate a dominance of the former, at least judging upon the overall log-likelihood values. It is also interesting to note that model residual autocorrelation is substantially reduced only in the case of richer parameterizations (), where non–diagonality in both and captures possible interdependencies, with a sharp improvement in the LB diagnostics (although only marginally satisfying).

In general, it seems that the more relevant contribution in determining realized volatility comes from the lagged number of trades, but only in the models (some collinearity effect, diminishing individual significance, is to be expected); the relevance of such information is also highlighted by the Granger Causality tests, to check whether lagged model components attributable to either or have joint significance. In detail, using three indices where the first refers to the LHS variable, the second to the conditioning variable, and the third the lag, we test () in the A-based formulations; () in the B-based formulations; () in the -based formulations.

The own volatility coefficients at lag 2 are always significant (with negative signs); surprisingly, the leverage effect related to the sign of the returns is not.

The Normal and the Student-T specifications appear to provide similar point estimates, except for the non-diagonal coefficients: for the latter, once again, the picture may be clouded by collinearity, since a formal log–likelihood test does indicate joint significance.9

The overview of the estimation results is complete by examining the Table 4, where we report the square root of the estimated elements on the main diagonal of for all specifications, showing substantial similarity.

Table 4.

Square root of the estimated elements on the main diagonal of (cf. Equation (5)).

Finally, the correlation coefficients implied by the various specifications are reported in Table 5, showing that a strong correlation among innovations further supports the need for taking simultaneity into account.

Table 5.

Estimated correlation matrices of the copula functions (specifications -N and -T), and of (specifications -LN and -S).

The alternative log–Normal and Semiparametric formulations deliver parameter inferences qualitatively similar to the corresponding versions based on copulas. The AIC and BIC statistics of the log–Normal are better than the copula based formulations (for the semiparametric they are not available); also its Ljung-Box diagnostics are marginally better.

A comparison of the estimation times across specifications10 reveals some differences. Student-T copula based formulations are the slowest; the Normal copula based estimated with the concentrated likelihood approach takes about 1/6 of time; finally, the log–Normal and the Semiparametric are a lot faster (about 1/20 of the Normal based). Differences notwithstanding, the largest times involved are very low on an absolute scale.

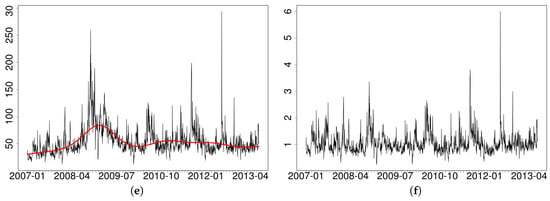

Figure 2 shows a comparison between the estimated residuals against their theoretical counterparts in the case of the Gamma and log–Normal distributions. In both cases, the fit seems to break apart for the tail of the distribution, pointing to the need for some care about the evolution of volatility of volatility (a mixture of distribution hypothesis is contemplated in, say, De Luca and Gallo (2009) in a MEM model).

Figure 2.

QQ-plot between theoretical and empirical residuals for the realized volatility of JNJ. Comparison with Gamma and log–Normal distributions. Estimation period: 3 January 2007– 30 June 2013.

5.2. Forecasting

We left the period 1 July–31 December 2013 (128 observations) for out–of–sample forecasting comparisons. We adopt a Diebold and Mariano (1995) test statistic for superior predictive ability using the error measures

where and denote here the observed and the predicted values, respectively. is the squared error, and can be interpreted as the loss behind an Normally distributed with mean ; similarly, can be interpreted as the loss we can derive considering as Gamma distributed with mean and variance proportional to . Comparisons are performed on both Original and Adjusted (i.e., removing the low frequency component) series.

Table 6 reports the values of the Diebold-Mariano test statistic of different model formulations, against the AB-T specification (which has the lowest values of the information criteria among copula-based specifications, cf. Table 3), considering one-step ahead predictions.

Table 6.

Diebold-Mariano test statistics for unidirectional comparisons, against the AB-T specification, considering 1-step ahead forecasts (out–of–sample period 1 July–31 December 2013). The error measures are defined as (Normal loss) and (Gamma loss), where and denote the observed and the predicted values, respectively (cf. Section 5.2 for the interpretation). Boldface (italic) indicates 5% (10%) significant statistics.

There are no substantial differences in performance between the results on the original and the adjusted series.11 We notice a definite improvement of the specification involving simultaneous innovations over the equation–by–equation specifications (first two rows). The Student–T Copula improves upon the Normal formulation (marginally, line AB-N), but is substantially equivalent to the AB-LN (slightly better) and the AB-S (slightly worse).

6. Conclusions

The Multiplicative Error Model was originally introduced by Engle (2002) as a positive valued product process between a scale factor following a GARCH type dynamics and a unit mean innovation process. In this paper we have presented a general discussion of a vector extension of such a model with a dynamically interdependent formulation for the scale factors where lagged variables and conditional expectations may be both allowed to impact each other’s conditional mean. Engle and Gallo (2006) estimate a vMEM where the innovations are Gamma–distributed with a diagonal variance–covariance matrix (estimated equation–by-equation). The extension to a truly multivariate process requires a complete treatment of the interdependence among the innovation terms. One possibility is to avoid the specification of the distribution and adopt a semiparametric GMM approach as in Cipollini et al. (2013). In this paper we have derived a maximum likelihood estimator by framing the innovation vector as a copula function linking Gamma marginals; a parametric alternative with a multivariate log-Normal distribution is discussed, while we show that the specification using a multivariate Gamma distribution has severe shortcomings.

We illustrate the procedure on three indicators related to market trading activity: realized volatility, volumes, and number of trades. The empirical results are presented in reference to daily data on the Johnson and Johnson (JNJ) stock. The data on the three variables show a (slowly moving) time varying local average which was removed before estimating the parameters. The three components are highly correlated with one another, but interestingly, their removal does not have a substantial impact on the correlation among the adjusted series. The specifications adopted start from a diagonal structure where no dynamic interaction is allowed, and an Independent copula (de facto an equation–by–equation specification). Refinements are obtained by inserting a Normal copula and a Student–T copula (which dramatically improve the estimated log–likelihood function values) and then allowing for the presence of dynamic interdependence in the form of links either between lagged variables, or between lagged conditional means, or both. Although hindered by the presence of some collinearity, the results clearly show a significant improvement for the fit of the equation for realized volatility when volumes and number of trades are considered. This is highlighted by significantly better model log–likelihoods, lower overall information criteria and improved autocorrelation diagnostics. The results of an out–of–sample forecasting exercise confirm the need for a simultaneous approach to model specification and estimation, with a substantial preference for the Student-T copula results within the copula–based formulations. From a interpretive point of view, the results show that the past realized volatility is not enough information by itself to reconstruct the dynamics: its modeling and forecastability are clearly influenced by other indicators of market trading activity, and simultaneous correlations in the innovations must be accounted for.

Under equal models for conditional means, the copula approach is substantially equivalent to other forms of specifications, be they parametric with a multivariate log-Normal error, or semiparametric (with GMM estimation). The advantage of a slightly more expensive (from a computational point of view) procedure lies in gaining flexibility in the tail dependence offered by the Student–T copula, which can be a plus when reconstructing joint conditional distributions, or when reproducing more realistic behavior in simulation is desirable.

Acknowledgments

We thank Marcelo Fernandes, Nikolaus Hautsch, Loriano Mancini, Joseph Noss, Andrew Patton, Kevin Sheppard, David Veredas as well as participants in seminars at CORE, Humboldt Universität zu Berlin, Imperial College, IGIER–Bocconi for helpful comments. The usual disclaimer applies. Financial support from the Italian MIUR under grant PRIN MISURA is gratefully acknowledged.

Author Contributions

The three authors contributed equally to this work.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Expectation Targeting

We show how to obtain the asymptotic distribution of the estimator of the parameters of the vMEM when the constant model is reparameterized, via expectation targeting (Section 3.2.1), by exploiting the assumption of weak-stationarity of the process (Section 2). Under assumptions detailed in what follows, the distribution of can be found by extending the results in Francq et al. (2011) and Horváth et al. (2006) to a multivariate framework and to asymmetric effects.

Appendix A.1. Framework

Let us assume a model defined by (1), (5) and (3) where the ’s are associated with negative returns on the basis of assumptions detailed in Section 2.

Besides mean-stationarity, in order to get asymptotic normality also, we assume the stronger condition that exists (a similar condition on existence of the unconditional squared moment of the conditional variance is assumed in the cited papers).

Appendix A.2. Auxiliary results

In order to simplify the exposition we introduce two quantities employed in the following, namely the zero mean residual

and

Since , and , we can easily check that

We remark that represents also the unconditional average of . By consequence, the sample averages and are such that

Appendix A.3. The Asymptotic Distribution of the Sample Mean

By replacing (A1) and (A2) into Equation (3) and arranging it we get

so that, averaging both sides,

Deriving we get

where is given in (28).

By means of (A3), the asymptotic distribution of follows immediately as

References

- Andersen, Torben G. 1996. Return Volatility and Trading Volume: An Information Flow Interpretation of Stochastic Volatility. The Journal of Finance 51: 169–204. [Google Scholar] [CrossRef]

- Andersen, Torben G., Tim Bollerslev, Peter F. Christoffersen, and Francis X. Diebold. 2006. Volatility and Correlation Forecasting. In Handbook of Economic Forecasting. Edited by Graham Elliott, Clive W.J. Granger and Allan Timmermann. New York: North Holland, pp. 777–878. [Google Scholar]

- Barndorff-Nielsen, Ole E., Peter Reinhard Hansen, Asger Lunde, and Neil Shephard. 2009. Realised kernels in Practice: trades and quotes. Econometrics Journal 12: 1–32. [Google Scholar] [CrossRef]

- Barndorff-Nielsen, Ole E., Peter Reinhard Hansen, Asger Lunde, and Neil Shephard. 2011. Multivariate realised kernels: Consistent positive semi-definite estimators of the covariation of equity prices with noise and non-synchronous trading. Journal of Econometrics 162: 149–69. [Google Scholar] [CrossRef]

- Bodnar, Taras, and Nikolaus Hautsch. 2016. Dynamic conditional correlation multiplicative error processes. Journal of Empirical Finance 36: 41–67. [Google Scholar] [CrossRef]

- Bollerslev, Tim. 1986. Generalized Autoregressive Conditional Heteroskedasticity. Journal of Econometrics 31: 307–27. [Google Scholar] [CrossRef]

- Bouyé, Eric, Valdo Durrleman, Ashkan Nikeghbali, Gaël Riboulet, and Thierry Roncalli. 2000. Copulas for Finance: A Reading Guide and Some Applications. Technical Report. Credit Lyonnais, Paris: Groupe de Recherche Opérationelle. [Google Scholar]

- Brownlees, Christian T., and Giampiero M. Gallo. 2006. Financial Econometric Analysis at Ultra–High Frequency: Data Handling Concerns. Computational Statistics and Data Analysis 51: 2232–45. [Google Scholar] [CrossRef]

- Brownlees, Christian T., and Giampiero M. Gallo. 2010. Comparison of Volatility Measures: A Risk Management Perspective. Journal of Financial Econometrics 8: 29–56. [Google Scholar] [CrossRef]

- Cerrato, Mario, John Crosby, Minjoo Kim, and Yang Zhao. 2015. Modeling Dependence Structure and Forecasting Market Risk with Dynamic Asymmetric Copula. Technical Report. Glasgow, Scotland: Adam Smith Business School, University of Glasgow. [Google Scholar]

- Cherubini, Umberto, Elisa Luciano, and Walter Vecchiato. 2004. Copula Methods in Finance. New York: Wiley. [Google Scholar]

- Chou, Ray Yeutien. 2005. Forecasting Financial Volatilities with Extreme Values: The Conditional Autoregressive Range (CARR) Model. Journal of Money, Credit and Banking 37: 561–82. [Google Scholar] [CrossRef]

- Cipollini, Fabrizio, and Giampiero M. Gallo. 2010. Automated variable selection in vector multiplicative error models. Computational Statistics & Data Analysis 54: 2470–86. [Google Scholar] [CrossRef]

- Cipollini, Fabrizio, Robert F. Engle, and Giampiero M. Gallo. 2013. Semiparametric Vector MEM. Journal of Applied Econometrics 28: 1067–86. [Google Scholar] [CrossRef]

- De Luca, Giovanni, and Giampiero M. Gallo. 2009. Time-varying Mixing Weights in Mixture Autoregressive Conditional Duration Models. Econometric Reviews 28: 102–20. [Google Scholar] [CrossRef]

- Demarta, Stefano, and Alexander J. McNeil. 2005. The t Copula and Related Copulas. International Statistical Review 73: 111–29. [Google Scholar] [CrossRef]

- Diebold, Francis X., and Roberto S. Mariano. 1995. Comparing Predictive Accuracy. Journal of Business & Economic Statistics 13: 253–63. [Google Scholar] [CrossRef]

- Embrechts, Paul, Alexander McNeil, and Daniel Straumann. 2002. Correlation and dependence in risk management: properties and pitfalls. In Risk Management: Value at Risk and Beyond. Edited by M. A. H. Dempster. Cambridge: Cambridge University Press, pp. 176–223. [Google Scholar]

- Engle, Robert F. 2002. New Frontiers for ARCH Models. Journal of Applied Econometrics 17: 425–46. [Google Scholar] [CrossRef]

- Engle, Robert F., and Giampiero M. Gallo. 2006. A Multiple Indicators Model for Volatility Using Intra-Daily Data. Journal of Econometrics 131: 3–27. [Google Scholar] [CrossRef]

- Engle, Robert F., and Jeffrey R. Russell. 1998. Autoregressive Conditional Duration: A New Model for Irregularly Spaced Transaction Data. Econometrica 66: 1127–62. [Google Scholar] [CrossRef]

- Engle, Robert F., and Joe Mezrich. 1995. Grappling with GARCH. Risk 8: 112–17. [Google Scholar]

- Engle, Robert F., and Jose Gonzalo Rangel. 2008. The Spline-GARCH Model for Low Frequency Volatility and Its Global Macroeconomic Causes. Review of Financial Studies 21: 1187–222. [Google Scholar] [CrossRef]

- Frahm, Gabriel, Markus Junker, and Alexander Szimayer. 2003. Elliptical Copulas: Applicability and Limitations. Statistics & Probability Letters 63: 275–86. [Google Scholar] [CrossRef]

- Francq, Christian, Lajos Horvath, and Jean-Michel Zakoïan. 2011. Merits and drawbacks of variance targeting in GARCH models. Journal of Financial Econometrics 9: 619–56. [Google Scholar] [CrossRef]

- Gallo, Giampiero M., and Edoardo Otranto. 2015. Forecasting realized volatility with changing average levels. International Journal of Forecasting 31: 620–34. [Google Scholar] [CrossRef]

- Hamilton, James Douglas. 1994. Time Series Analysis. Princeton: Princeton University Press. [Google Scholar]

- Hautsch, Nikolaus. 2008. Capturing common components in high-frequency financial time series: A multivariate stochastic multiplicative error model. Journal of Economic Dynamics and Control 32: 3978–4015. [Google Scholar] [CrossRef]

- Hautsch, Nikolaus, Peter Malec, and Melanie Schienle. 2013. Capturing the Zero: A New Class of Zero-Augmented Distributions and Multiplicative Error Processes. Journal of Financial Econometrics 12: 89–121. [Google Scholar] [CrossRef]

- Horváth, Lajos, Piotr Kokoszka, and Ricardas Zitikis. 2006. Sample and Implied Volatility in GARCH Models. Journal of Financial Econometrics 4: 617–35. [Google Scholar] [CrossRef]

- Joe, Harry. 1997. Multivariate Models and Dependence Concepts. London: Chapman & Hall. [Google Scholar]

- Johnson, Norman L., Samuel Kotz, and Narayanaswamy Balakrishnan. 2000. Continuous Multivariate Distributions. New York: John Wiley & Sons, vol. 1. [Google Scholar]

- Kostadinov, Krassimir. 2005. Non-parametric Estimation of Elliptical Copulae with Application to Credit Risk. Technical Report. Munich, Germany: Munich University of Technology. [Google Scholar]

- Kristensen, Dennis, and Oliver Linton. 2004. Consistent Standard Errors for Target Variance Approach to GARCH Estimation. Econometric Theory 20: 990–93. [Google Scholar] [CrossRef]

- Lindskog, Filip, Alexander McNeil, and Uwe Schmock. 2003. Kendall’s tau for elliptical distributions. In Credit Risk: Measurement, Evaluation and Management. Edited by Georg Bol, Gholamreza Nakhaeizadeh, Svetlozar T. Rachev, Thomas Ridder and Karl-Heinz Vollmer. Heidelberg: Physica-Verlag. [Google Scholar]

- Manganelli, Simone. 2005. Duration, Volume and Volatility Impact of Trades. Journal of Financial Markets 8: 377–99. [Google Scholar] [CrossRef]

- McNeil, Alexander J., Rüdiger Frey, and Paul Embrechts. 2005. Quantitative Risk Management: Concepts, Techniques, and Tools. Princeton: Princeton University Press. [Google Scholar]

- Nelsen, Roger B. 1999. An introduction to Copulas. Lecture Notes in Statistics. New York: Springer. [Google Scholar]

- Newey, Whitney K., and Daniel McFadden. 1994. Large sample estimation and hypothesis testing. In Handbook of Econometrics. Edited by Robert Engle and Dan McFadden. New York: Elsevier, vol. 4, chapter 36. pp. 2111–45. [Google Scholar]

- Nocedal, Jorge, and Stephen J. Wright. 2006. Numerical Optimization. Springer Series in Operations Research and Financial Engineering; Berlin: Springer. [Google Scholar]

- Noss, Joseph. 2007. The Econometrics of Optimal Execution in an Order-Driven Market. Master’s dissertation, Nuffield College, Oxford University, Oxford, UK. [Google Scholar]

- Okimoto, Tatsuyoshi. 2008. New Evidence of Asymmetric Dependence Structures in International Equity Markets. The Journal of Financial and Quantitative Analysis 43: 787–815. [Google Scholar] [CrossRef]

- Patton, Andrew J. 2006. Modelling asymmetric exchange rate dependence. International Economic Review 47: 527–56. [Google Scholar] [CrossRef]

- Patton, Andrew J. 2013. Copula Methods for Forecasting Multivariate Time Series. In Handbook of Economic Forecasting. Edited by Graham Elliott and Allan Timmermann. New York: Elsevier, vol. 2, Part B. pp. 899–960. [Google Scholar]

- Powell, Michael J.D. 2006. The NEWUOA software for unconstrained optimization without derivatives. In Large-Scale Nonlinear Optimization. Edited by Gianni D. Pillo and Massimo Roma. New York: Springer Verlag, pp. 255–97. [Google Scholar]

- Schmidt, Rafael. 2002. Tail dependence for elliptically contoured distributions. Mathematical Methods of Operations Research 55: 301–27. [Google Scholar] [CrossRef]

- Song, Peter Xue-Kun. 2000. Multivariate Dispersion Models Generated From Gaussian Copula. Scandinavian Journal of Statistics 27: 305–20. [Google Scholar] [CrossRef]

| 1 | In some applications, especially involving returns, their elliptical symmetry may constitute a limit (cf., for example, Patton (2006), Okimoto (2008), Cerrato et al. (2015) and reference therein). Copulas in the Archimedean family (Clayton, Gumbel, Joe-Clayton, etc.) offer a way to bypass such a limitation but suffer from other drawbacks and, in any case, seem to be less relevant for the variables of interest for a vMEM (here, different indicators of trading activity). They will not be pursued in what follows. |

| 2 | This is equivalent to variance targeting in a GARCH context (Engle and Mezrich 1995), where the constant term of the conditional variance model is assumed to be a function of the sample unconditional variance and of the other parameters. In this context, other than a preference for the term expectation targeting since we are modeling a conditional mean, the main argument stays unchanged. |

| 3 | Even when is a matrix, the value of has to satisfy the cubic equation:

|

| 4 | |

| 5 | Alternative methods, such as a moving average of fixed length (centered or uncentered), can be used but in practice they deliver very similar results and will not be discussed in detail here. The spline regression is estimated with the gam() function in the R package mgcv by using default settings. |

| 6 | All models are estimated using Expectation Targeting (Section 3.2.1). The Normal copula based specifications are estimated resorting to the concentrated log-likelihood approach (Section 3.2.2). We omit estimates of the constant term . |

| 7 | The estimated degrees of freedom are (s.e. ) and (s.e. ), respectively, in the A-T and AB-T formulations. We also tried full ML estimation of the AB-N specification getting a value of the log-likelihood equal to , very close to the concentrated log-likelihood approach (Section 3.2.2) used in Table 3. |

| 8 | The stark improvement in the likelihood functions, coming from the explicit consideration of the correlation structure, does not guarantee similar gains in the forecasting ability of the same variables. This is similar to what happens in modeling returns, when the likelihood function of ARMA models is improved by superimposing a GARCH structure on the conditional variances: no substantive change in the fit of the conditional mean and no better predictive ability. |

| 9 | No attempt at pruning the structure of the model following the automated procedure suggested in Cipollini and Gallo (2010) was performed. |

| 10 | Calculations with our routines written in R were performed on an Intel i7-5500U 2.4Ghz processor. We did not perform an extensive comparison of estimation times and we did not optimize performance by removing tracing of intermediate results. Moreover, the copula-based and the alternative specifications are optimized resorting to different algorithms: the first ones, more cumbersome to optimize, are estimated toward a combination of NEWUOA (Powell 2006) and Newton-Raphson; for the second ones we used a dogleg algorithm (Nocedal and Wright 2006, ch. 4). |

| 11 | One-step ahead predictions at time t for the original series are computed multiplying the corresponding forecast of the adjusted indicator by the value of the low frequency component at . |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).