A Hybrid CNN–GRU Deep Learning Model for IoT Network Intrusion Detection

Abstract

1. Introduction

- This article focuses on the use of deep learning techniques to detect intrusions in IoT networks.

- Using two benchmark datasets, Bot-IoT and IoTID20, we develop a hybrid deep learning model that combines a convolutional neural network (CNN) with a gated recurrent unit (GRU), together with regularization and optimization techniques, to increase prediction accuracy while reducing computational complexity.

- We evaluate the proposed model against baseline models and compare it with models reported in the literature.
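The hybrid design described above can be pictured as a forward pass. A 1-D convolution scans each flow's feature vector for local patterns, and a GRU then summarizes the resulting sequence of convolutional feature maps. The sketch below is a minimal, dependency-free illustration under stated assumptions, not the authors' implementation. The function names, layer sizes, random weight initialization, omission of bias terms, and the five-class output (chosen to match IoTID20's five categories) are all hypothetical. Training and the regularization and optimization techniques are left out.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rand_matrix(rows, cols, rng, scale=0.1):
    # Hypothetical small uniform initialization; a trained model would learn these weights.
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def conv1d_relu(x, kernels, width):
    # Slide each filter over the feature vector (stride 1, no padding) and apply ReLU.
    # Returns one row per position, with one channel per filter.
    return [
        [max(0.0, sum(k * v for k, v in zip(kern, x[t:t + width]))) for kern in kernels]
        for t in range(len(x) - width + 1)
    ]


def max_pool(seq, size=2):
    # Non-overlapping max pooling along the sequence axis, channel by channel.
    return [
        [max(channel) for channel in zip(*seq[i:i + size])]
        for i in range(0, len(seq) - size + 1, size)
    ]


class GRUCell:
    """One common GRU formulation; bias terms omitted for brevity."""

    def __init__(self, n_in, n_hidden, rng):
        # Input (W) and recurrent (U) weights for the update (z),
        # reset (r) and candidate (h) computations.
        self.W = {g: rand_matrix(n_hidden, n_in, rng) for g in "zrh"}
        self.U = {g: rand_matrix(n_hidden, n_hidden, rng) for g in "zrh"}

    def step(self, x, h):
        z = [sigmoid(a + b) for a, b in zip(matvec(self.W["z"], x), matvec(self.U["z"], h))]
        r = [sigmoid(a + b) for a, b in zip(matvec(self.W["r"], x), matvec(self.U["r"], h))]
        reset_h = [ri * hi for ri, hi in zip(r, h)]
        cand = [math.tanh(a + b) for a, b in zip(matvec(self.W["h"], x), matvec(self.U["h"], reset_h))]
        # Interpolate between the previous state and the candidate state.
        return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]


def softmax(v):
    m = max(v)
    e = [math.exp(x - m) for x in v]
    s = sum(e)
    return [x / s for x in e]


def cnn_gru_forward(features, n_classes=5, n_filters=4, width=3, n_hidden=8, seed=0):
    rng = random.Random(seed)
    kernels = rand_matrix(n_filters, width, rng, scale=0.5)
    seq = max_pool(conv1d_relu(features, kernels, width))  # local (spatial) patterns
    gru = GRUCell(n_filters, n_hidden, rng)
    h = [0.0] * n_hidden
    for step in seq:  # sequential summary over the pooled feature maps
        h = gru.step(step, h)
    dense = rand_matrix(n_classes, n_hidden, rng)
    return softmax(matvec(dense, h))  # class probabilities


# Hypothetical normalized feature vector for a single flow (20 features).
probs = cnn_gru_forward([i / 20 for i in range(20)])
print([round(p, 3) for p in probs])
```

With 20 input features and a width-3 filter, the convolution yields 18 positions. Pooling reduces these to 9 steps, which the GRU consumes one by one before the dense softmax layer produces class probabilities.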

2. Related Works

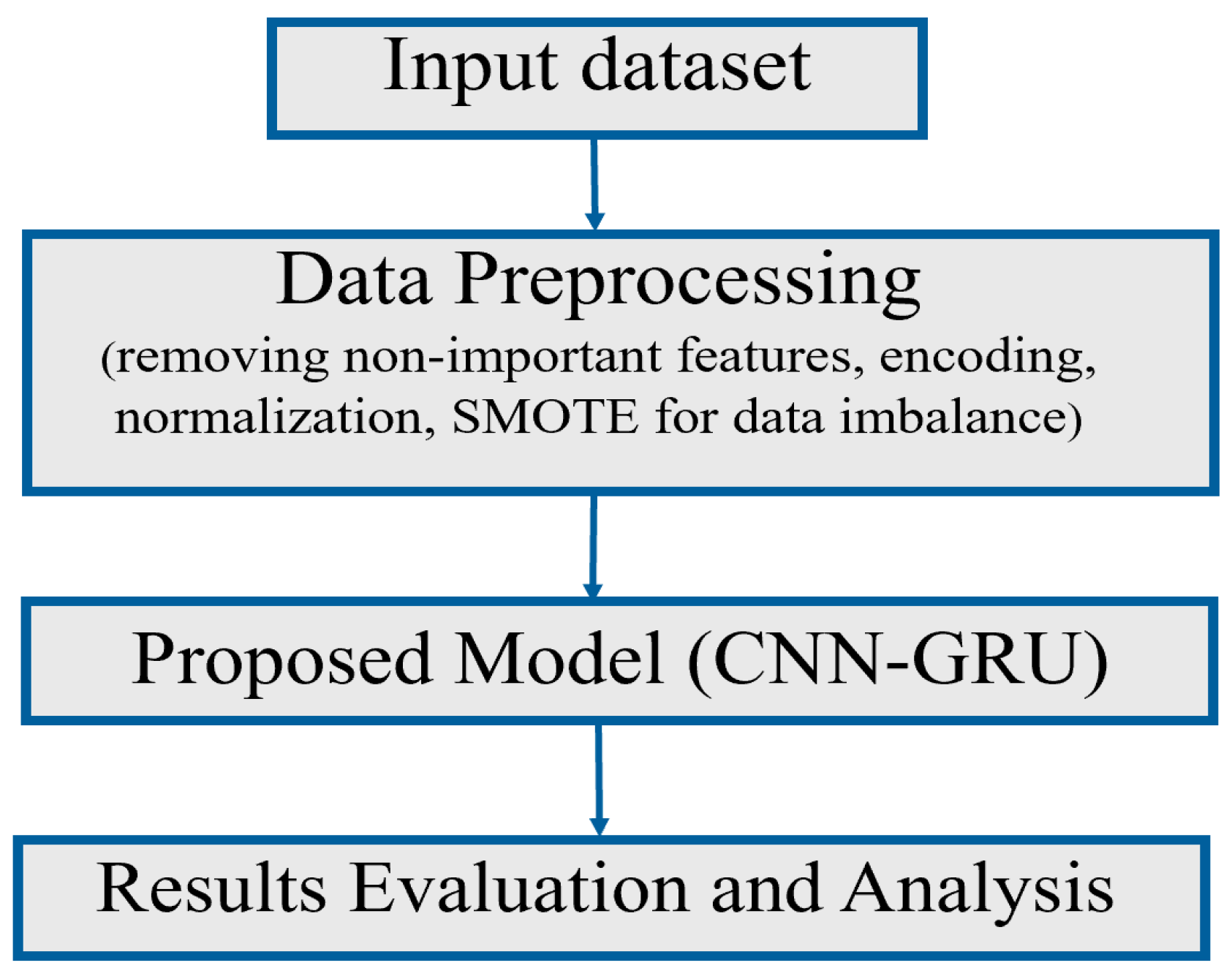

3. Methodology

3.1. Dataset Description

3.2. Data Preprocessing

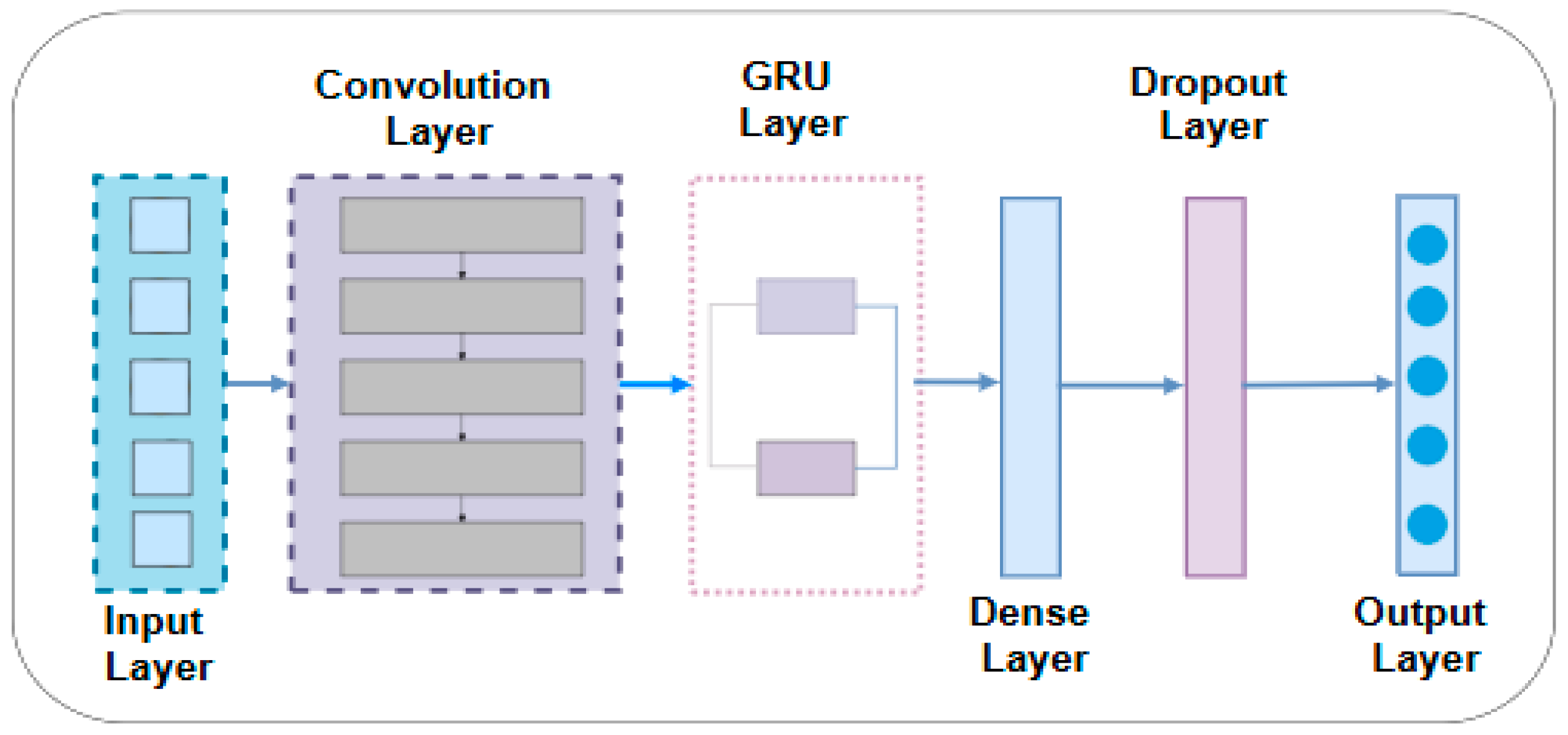

3.3. Proposed CNN-GRU Model

3.4. Experimentation Setup

3.5. Baseline Methods

3.5.1. Feedforward Neural Network (FFNN)

3.5.2. Long Short-Term Memory (LSTM)

3.6. Performance Metrics

4. Experimental Results

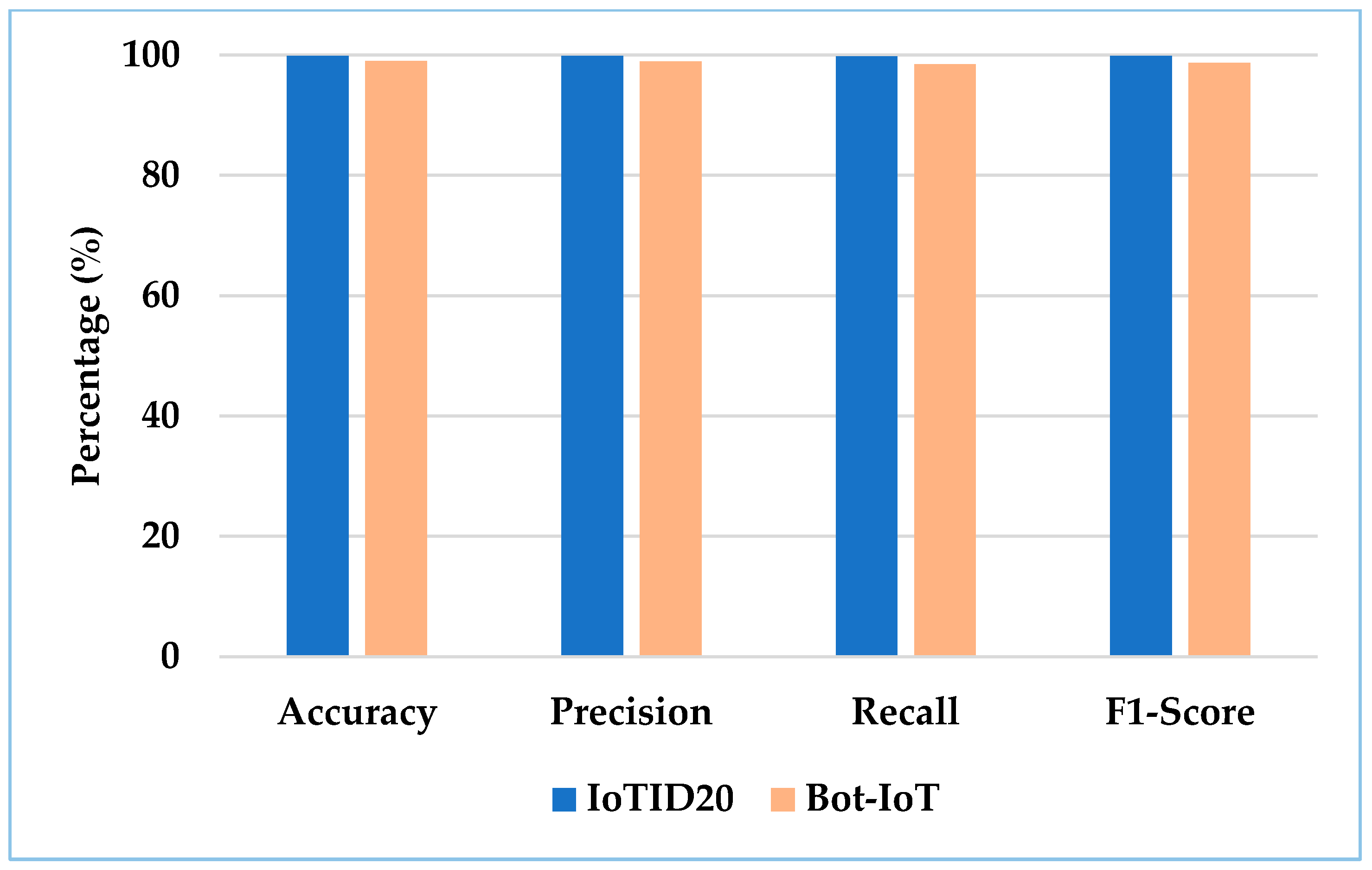

4.1. The Proposed CNN-GRU Results on the Two Datasets

4.2. Performance Comparison of the Proposed Model with Baseline Methods

4.3. Statistical Significance Analysis

5. Discussion and Interpretation of Key Findings

5.1. Comparison with Results from Similar Studies

- Comparison with hybrid models: The proposed model outperformed the hybrid Autoencoder-LSTM-CNN architecture presented in [17], which achieved an accuracy of 99.15% on the CICIoT2023 dataset. While this result is commendable, the higher accuracy of our CNN-GRU model (99.83% on IoTID20) suggests that combining a CNN with a computationally efficient GRU can be effective.

- Comparison with standalone models: The proposed hybrid model consistently outperforms standalone models. For instance, it exceeded the accuracy of a standalone CNN model on the CIC-IoT2017 dataset (98.7%) [18], another standalone CNN model on Bot-IoT and IoT-NI (95.5%) [20], and a standalone LSTM model on the IoT-NI dataset (98.00%) [24]. While the studies in [18,19,20] report excellent results, the advantages of the proposed CNN-GRU model extend beyond a marginal increase in accuracy: the model also performed robustly on both evaluation datasets. Two design choices likely explain this. First, network intrusion data possesses both spatial patterns within packets and temporal dependencies across traffic flows, whereas standalone models such as the CNN and LSTM models in [18,20,24] are primarily limited to capturing only one type of feature. By learning both feature types, the hybrid CNN-GRU architecture provides a more holistic analysis of the traffic. Second, the model uses a GRU rather than the more frequently used LSTM for the temporal component. While the hybrid CNN-LSTM model in [19] performs well, GRUs are known to achieve comparable accuracy with lower computational overhead because of their simpler gating mechanism. This makes the proposed CNN-GRU model not only accurate but also more efficient and better suited to the computational constraints of real-world IoT deployments, a significant practical advantage. Finally, the model's ability to achieve state-of-the-art results on two distinct datasets (IoTID20 and Bot-IoT) indicates that it learns generalizable features of malicious traffic.

- Comparison with advanced architectures: The model also demonstrates strong performance against other approaches. It exceeded the F1-score (95.00%) reported for a Graph Neural Network (GNN) on the ToN-IoT dataset [21]. While GNNs are powerful for modeling network topology, the CNN-GRU approach offers a potent alternative that achieves high detection rates by focusing on the spatio-temporal patterns within the traffic flows themselves, without requiring explicit graph-structure data.
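Parameter counts make the efficiency argument for GRUs in the standalone-model comparison concrete. A standard LSTM layer has four weight blocks: the input, forget, and output gates plus the cell candidate. A GRU has three: the update gate, the reset gate, and the candidate state. For the same input size d and hidden size h, a GRU therefore needs about three quarters of the LSTM's recurrent-layer parameters.

The sketch below assumes one bias vector per block. Some frameworks add extra bias terms: Keras GRUs with `reset_after=True` do, and so does PyTorch, which keeps separate input and recurrent biases. The helper names and layer sizes are hypothetical. Parameter count is only one proxy for computational cost.

```python
def lstm_params(d, h):
    # Four blocks (input, forget, output gates and cell candidate),
    # each with input weights (h x d), recurrent weights (h x h) and one bias (h).
    return 4 * (h * d + h * h + h)


def gru_params(d, h):
    # Three blocks (update gate, reset gate and candidate state), same shapes per block.
    return 3 * (h * d + h * h + h)


# Hypothetical sizes: 64 input channels from the CNN, 128 hidden units.
d, h = 64, 128
print(lstm_params(d, h), gru_params(d, h), gru_params(d, h) / lstm_params(d, h))
# 98816 74112 0.75
```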

5.2. Critical Analysis and Limitations of the Comparison

- Dataset differences: Studies utilize different datasets (e.g., CICIoT2023, CIC-IoT2017, BoT-IoT, ToN-IoT), each with unique characteristics, class distributions, and attack profiles. A model highly tuned to one dataset may not generalize directly to another.

- Data preprocessing and feature selection: The specific features extracted, normalization techniques applied, and handling of class imbalance vary significantly across studies and greatly impact model performance.

- Data volume: Some studies use full datasets, while others use modified or fractional subsets [18,20]. Training on a larger, more diverse volume of data, as in this study, often leads to a more robust and generalizable model. Given these differences, the most significant evidence of the CNN-GRU model's strength is not its superior metrics alone but its consistently high performance across two different datasets (IoTID20 and Bot-IoT), which indicates a degree of robustness and generalizability.
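Class imbalance handling can enter a pipeline through per-class loss weights, as an alternative or complement to resampling methods such as SMOTE. The sketch below computes inverse-frequency ("balanced") weights, w_c = N / (K · n_c), where N is the total number of records, K the number of classes, and n_c the count of class c. It uses the IoTID20 category counts reported in this article's dataset table. It is an illustration only and does not describe the preprocessing used in this study or in the compared works. The function name is hypothetical.

```python
def balanced_class_weights(counts):
    # w_c = N / (K * n_c): rare classes get weights above 1, frequent ones below 1,
    # so every class contributes the same total weight N / K to the loss.
    total = sum(counts.values())
    k = len(counts)
    return {label: total / (k * n) for label, n in counts.items()}


# IoTID20 category counts (from the dataset table in this article).
iotid20 = {"Normal": 40073, "DoS": 59391, "MITM": 35377, "Mirai": 415677, "Scan": 75265}
weights = balanced_class_weights(iotid20)
for label, w in weights.items():
    print(f"{label}: {w:.3f}")
```

Mirai, the dominant IoTID20 class, receives a weight below 1, while Normal and MITM receive weights above 3.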

6. Conclusions and Limitations

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Sayed, M. The Internet of Things (IoT), Applications and Challenges: A Comprehensive Review. J. Innov. Intell. Comput. Emerg. Technol. 2024, 1, 20–27.

- Adefemi Alimi, K.O.; Ouahada, K.; Abu-Mahfouz, A.M.; Rimer, S.; Alimi, O.A. Refined LSTM Based Intrusion Detection for Denial-of-Service Attack in Internet of Things. J. Sens. Actuator Netw. 2022, 11, 32.

- Das, S.; Namasudra, S. Introducing the Internet of Things: Fundamentals, Challenges, and Applications. Adv. Comput. 2025, 137, 1–36.

- Alzahrani, A.I. Exploring AI and Quantum Computing Synergies in Holographic Counterpart Frameworks for IoT Security and Privacy. J. Supercomput. 2025, 81, 1194.

- Sharma, S.B.; Bairwa, A.K. Leveraging AI for Intrusion Detection in IoT Ecosystems: A Comprehensive Study. IEEE Access 2025, 13, 66290–66317.

- Dritsas, E.; Trigka, M. A Survey on Cybersecurity in IoT. Future Internet 2025, 17, 30.

- Yaras, S.; Dener, M. IoT-Based Intrusion Detection System Using New Hybrid Deep Learning Algorithm. Electronics 2024, 13, 1053.

- Zahid, M.; Bharati, T.S. Enhancing Cybersecurity in IoT Systems: A Hybrid Deep Learning Approach for Real-Time Attack Detection. Discov. Internet Things 2025, 5, 73.

- Wei, C.; Xie, G.; Diao, Z. A Lightweight Deep Learning Framework for Botnet Detecting at the IoT Edge. Comput. Secur. 2023, 129, 103195.

- Al-Shurbaji, T.; Anbar, M.; Manickam, S.; Hasbullah, I.H.; ALfriehate, N.; Alabsi, B.A.; Alzighaibi, A.R.; Hashim, H. Deep Learning-Based Intrusion Detection System for Detecting IoT Botnet Attacks: A Review. IEEE Access 2025, 13, 11792–11822.

- Ali, W.; Amin, M.; Alarfaj, F.K.; Al-Otaibi, Y.D.; Anwar, S. AI-Enhanced Differential Privacy Architecture for Securing Consumer Internet of Things (CIoT) Data. IEEE Trans. Consum. Electron. 2025, 71, 5201–5215.

- Shah, Z.; Ullah, I.; Li, H.; Levula, A.; Khurshid, K. Blockchain Based Solutions to Mitigate Distributed Denial of Service (DDoS) Attacks in the Internet of Things (IoT): A Survey. Sensors 2022, 22, 1094.

- Alimi, O.A. Data-Driven Learning Models for Internet of Things Security: Emerging Trends, Applications, Challenges and Future Directions. Technologies 2025, 13, 176.

- Singh, N.J.; Hoque, N.; Singh, K.R.; Bhattacharyya, D.K. Botnet-Based IoT Network Traffic Analysis Using Deep Learning. Secur. Priv. 2024, 7, e355.

- Kamal, H.; Mashaly, M. Robust Intrusion Detection System Using an Improved Hybrid Deep Learning Model for Binary and Multi-Class Classification in IoT Networks. Technologies 2025, 13, 102.

- Albanbay, N.; Tursynbek, Y.; Graffi, K.; Uskenbayeva, R.; Kalpeyeva, Z.; Abilkaiyr, Z.; Ayapov, Y. Federated Learning-Based Intrusion Detection in IoT Networks: Performance Evaluation and Data Scaling Study. J. Sens. Actuator Netw. 2025, 14, 78.

- Susilo, B.; Muis, A.; Sari, R.F. Intelligent Intrusion Detection System Against Various Attacks Based on a Hybrid Deep Learning Algorithm. Sensors 2025, 25, 580.

- Alhasawi, Y.; Alghamdi, S. Federated Learning for Decentralized DDoS Attack Detection in IoT Networks. IEEE Access 2024, 12, 42357–42368.

- Sahu, A.K.; Sharma, S.; Tanveer, M.; Raja, R. Internet of Things Attack Detection Using Hybrid Deep Learning Model. Comput. Commun. 2021, 176, 146–154.

- Saba, T.; Rehman, A.; Sadad, T.; Kolivand, H.; Bahaj, S.A. Anomaly-Based Intrusion Detection System for IoT Networks through Deep Learning Model. Comput. Electr. Eng. 2022, 99, 107810.

- Villegas Ch, W.; Govea, J.; Maldonado Navarro, A.M.; Játiva, P.P. Intrusion Detection in IoT Networks Using Dynamic Graph Modeling and Graph-Based Neural Networks. IEEE Access 2025, 13, 65356–65375.

- Alomari, E.S.; Manickam, S.; Anbar, M. Adaptive Hybrid Deep Learning Model for Real-Time Anomaly Detection in IoT Networks. J. Adv. Res. Des. 2026, 137, 278–289.

- Emeç, M.; Özcanhan, M.H. A Hybrid Deep Learning Approach for Intrusion Detection in IoT Networks. Adv. Electr. Comput. Eng. 2022, 22, 3–12.

- Azumah, S.W.; Elsayed, N.; Adewopo, V.; Zaghloul, Z.S.; Li, C. A Deep LSTM Based Approach for Intrusion Detection IoT Devices Network in Smart Home. In Proceedings of the 2021 IEEE 7th World Forum on Internet of Things (WF-IoT), New Orleans, LA, USA, 20–24 June 2021; pp. 836–841.

- Shirley, J.J.; Priya, M. An Adaptive Intrusion Detection System for Evolving IoT Threats: An Autoencoder-FNN Fusion. IEEE Access 2025, 13, 4201–4217.

- Omarov, B.; Auelbekov, O.; Suliman, A.; Zhaxanova, A. CNN–BiLSTM Hybrid Model for Network Anomaly Detection in Internet of Things. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 436–444.

- Koroniotis, N.; Moustafa, N.; Sitnikova, E.; Slay, J. Towards Developing Network Forensic Mechanism for Botnet Activities in the IoT Based on Machine Learning Techniques. In Mobile Networks and Management, Proceedings of the 9th International Conference, MONAMI 2017, Melbourne, Australia, 13–15 December 2017; Springer: Cham, Switzerland, 2018; pp. 30–44.

- Ullah, I.; Mahmoud, Q.H. A Scheme for Generating a Dataset for Anomalous Activity Detection in IoT Networks. In Advances in Artificial Intelligence; Goutte, C., Zhu, X., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12109, pp. 508–520.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-Sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Adefemi, K.O.; Mutanga, M.B. A Robust Hybrid CNN–LSTM Model for Predicting Student Academic Performance. Digital 2025, 5, 16.

- Ullah, I.; Mahmoud, Q.H. Design and Development of RNN Anomaly Detection Model for IoT Networks. IEEE Access 2022, 10, 62722–62750.

- Halbouni, A.; Gunawan, T.S.; Habaebi, M.H.; Halbouni, M.; Kartiwi, M.; Ahmad, R. CNN-LSTM: Hybrid Deep Neural Network for Network Intrusion Detection System. IEEE Access 2022, 10, 99837–99849.

| Article | Dataset Used | Model | Performance |
|---|---|---|---|
| Albanbay et al. [16] | CICIoT23 | DNN, CNN, BiLSTM | 94.84% |
| Susilo et al. [17] | CICIoT23 | AE, LSTM, CNN | 99.15% |
| Alhasawi et al. [18] | CICIoT17 | CNN | 98.7% |
| Sahu et al. [19] | CICIoT23 | CNN+LSTM | 96% |
| Saba et al. [20] | Bot-IoT and IoT-NI | CNN | 95.55% |
| Villegas Ch et al. [21] | ToN-IoT | GNN | 95% |
| Alomari et al. [22] | DDoSDataset | AE, DNN | 92.8% |
| Emeç et al. [23] | CIC2018 and Bot-IoT | BLSTM-GRU | 98.58% |
| Azumah et al. [24] | IoT-NI | LSTM | 98% |
| Shirley et al. [25] | CICIoT23 | AE and FFNN | 99.55% |
| Omarov et al. [26] | UNSW-NB | CNN-BiLSTM | 96.28% |

| Category | Subcategory | Number of Instances |
|---|---|---|
| Normal | Normal | 105,202 |
| DoS | HTTP | 34,057 |
| DoS | TCP | 19,111,830 |
| DoS | UDP | 37,881,485 |
| DDoS | HTTP | 51,934 |
| DDoS | TCP | 15,975,894 |
| DDoS | UDP | 21,049,846 |
| Scan | OS fingerprinting | 350,093 |
| Scan | Service scanning | 1,481,465 |
| Data theft | Data exfiltration | 5,003 |
| Data theft | Key logging | 1,387 |
| Total | | 96,048,196 |
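The Bot-IoT counts above are heavily skewed toward attack traffic, so accuracy alone can be misleading on this dataset. A short worked check using only the counts in the table shows that normal traffic makes up about 0.11% of the records. A trivial rule that labels every record as an attack would therefore already reach about 99.89% accuracy on a binary normal-versus-attack task over the full dataset. This illustrates the arithmetic only. It assumes nothing about the subset or label granularity used in the experiments.

```python
# Bot-IoT record counts per (category, subcategory), taken from the table above.
bot_iot = {
    ("Normal", "Normal"): 105_202,
    ("DoS", "HTTP"): 34_057,
    ("DoS", "TCP"): 19_111_830,
    ("DoS", "UDP"): 37_881_485,
    ("DDoS", "HTTP"): 51_934,
    ("DDoS", "TCP"): 15_975_894,
    ("DDoS", "UDP"): 21_049_846,
    ("Scan", "OS fingerprinting"): 350_093,
    ("Scan", "Service scanning"): 1_481_465,
    ("Data theft", "Data exfiltration"): 5_003,
    ("Data theft", "Key logging"): 1_387,
}

total = sum(bot_iot.values())
normal_share = bot_iot[("Normal", "Normal")] / total
print(f"total records: {total:,}")  # total records: 96,048,196
print(f"normal share: {100 * normal_share:.2f}%")  # normal share: 0.11%
print(f"'always attack' accuracy: {100 * (1 - normal_share):.2f}%")  # 'always attack' accuracy: 99.89%
```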

| Category | Number of Instances |
|---|---|
| Normal | 40,073 |
| DoS | 59,391 |
| MITM | 35,377 |
| Mirai | 415,677 |
| Scan | 75,265 |

| Dataset | Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | AUC |
|---|---|---|---|---|---|---|
| IoTID20 | FFNN | 90.47 | 90.23 | 90.23 | 90.23 | 0.90 |
| IoTID20 | LSTM | 97.26 | 97.69 | 97.19 | 97.43 | 0.97 |
| IoTID20 | CNN | 98.90 | 98.93 | 98.90 | 98.91 | 0.99 |
| IoTID20 | GRU | 98.80 | 98.80 | 98.80 | 98.80 | 0.99 |
| IoTID20 | CNN-GRU | 99.83 | 99.83 | 99.82 | 99.83 | 1.00 |
| Bot-IoT | FFNN | 89.24 | 89.45 | 89.42 | 89.43 | 0.89 |
| Bot-IoT | LSTM | 96.12 | 96.12 | 97.03 | 97.57 | 0.96 |
| Bot-IoT | CNN | 98.41 | 98.33 | 98.30 | 98.31 | 0.98 |
| Bot-IoT | GRU | 98.16 | 98.18 | 98.09 | 98.13 | 0.98 |
| Bot-IoT | CNN-GRU | 99.01 | 98.94 | 98.53 | 98.73 | 0.99 |
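The F1-score in the results table is the harmonic mean of precision (P) and recall (R), F1 = 2PR / (P + R), so it always lies between the two. The sketch below recomputes F1 for the two CNN-GRU rows from their reported precision and recall. This section does not state whether the metrics are macro- or weighted averages over classes. If they are averaged, an F1 recomputed from averaged P and R can differ from the reported value in the last digit. The helper name is hypothetical.

```python
def f1_score(precision, recall):
    # Harmonic mean of precision and recall (inputs in percent or as fractions).
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) for the CNN-GRU rows of the results table.
rows = {
    "IoTID20": (99.83, 99.82, 99.83),
    "Bot-IoT": (98.94, 98.53, 98.73),
}
for dataset, (p, r, reported) in rows.items():
    print(f"{dataset}: recomputed {f1_score(p, r):.3f} vs. reported {reported:.2f}")
```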

| Dataset | Comparison | t-Statistic | p-Value |
|---|---|---|---|
| IoTID20 | CNN-GRU vs. FFNN | 4.05 | 0.0155 |
| IoTID20 | CNN-GRU vs. LSTM | 4.00 | 0.0161 |
| Bot-IoT | CNN-GRU vs. FFNN | 4.05 | 0.0155 |
| Bot-IoT | CNN-GRU vs. LSTM | 4.05 | 0.0169 |
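The significance table reports t-statistics and p-values for paired comparisons between models. Below is a minimal, dependency-free sketch of a two-sided paired t-test, in which the p-value integrates the Student t density numerically with Simpson's rule. This section does not state how many paired runs lie behind the table. Assuming four degrees of freedom (for example, five paired runs or folds), the function maps t = 4.05 to p ≈ 0.0155 and t = 4.00 to p ≈ 0.0161, matching the first two rows. The function names and the per-fold scores in the usage example are hypothetical.

```python
import math
import statistics


def t_pdf(x, df):
    # Density of Student's t distribution with `df` degrees of freedom.
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=2000):
    # P(|T| >= |t|) = 1 - 2 * integral of the density from 0 to |t| (Simpson's rule).
    a = abs(t)
    if a == 0:
        return 1.0
    step = a / steps  # `steps` must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * step, df)
    return max(0.0, 1.0 - 2.0 * total * step / 3.0)


def paired_t_test(scores_a, scores_b):
    # Paired test on per-run differences (e.g., the same folds evaluated for two models).
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1, two_sided_p(t, n - 1)


# Hypothetical per-fold accuracies for two models over five folds.
model_a = [0.99, 0.98, 0.99, 0.97, 0.99]
model_b = [0.95, 0.96, 0.94, 0.95, 0.97]
t, df, p = paired_t_test(model_a, model_b)
print(f"t = {t:.2f}, df = {df}, p = {p:.4f}")
```

A paired test is used because both models are evaluated on the same runs, so per-run differences remove the variation shared across folds.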

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Adefemi, K.O.; Mutanga, M.B.; Alimi, O.A. A Hybrid CNN–GRU Deep Learning Model for IoT Network Intrusion Detection. J. Sens. Actuator Netw. 2025, 14, 96. https://doi.org/10.3390/jsan14050096

Adefemi KO, Mutanga MB, Alimi OA. A Hybrid CNN–GRU Deep Learning Model for IoT Network Intrusion Detection. Journal of Sensor and Actuator Networks. 2025; 14(5):96. https://doi.org/10.3390/jsan14050096

Chicago/Turabian Style: Adefemi, Kuburat Oyeranti, Murimo Bethel Mutanga, and Oyeniyi Akeem Alimi. 2025. "A Hybrid CNN–GRU Deep Learning Model for IoT Network Intrusion Detection" Journal of Sensor and Actuator Networks 14, no. 5: 96. https://doi.org/10.3390/jsan14050096

APA Style: Adefemi, K. O., Mutanga, M. B., & Alimi, O. A. (2025). A Hybrid CNN–GRU Deep Learning Model for IoT Network Intrusion Detection. Journal of Sensor and Actuator Networks, 14(5), 96. https://doi.org/10.3390/jsan14050096