Impact Evaluation of Sound Dataset Augmentation and Synthetic Generation upon Classification Accuracy

Abstract

1. Introduction

2. State of the Art

2.1. Sound Augmentation Techniques

2.2. Synthetic Sound Generation

2.3. Sound Classification Methods

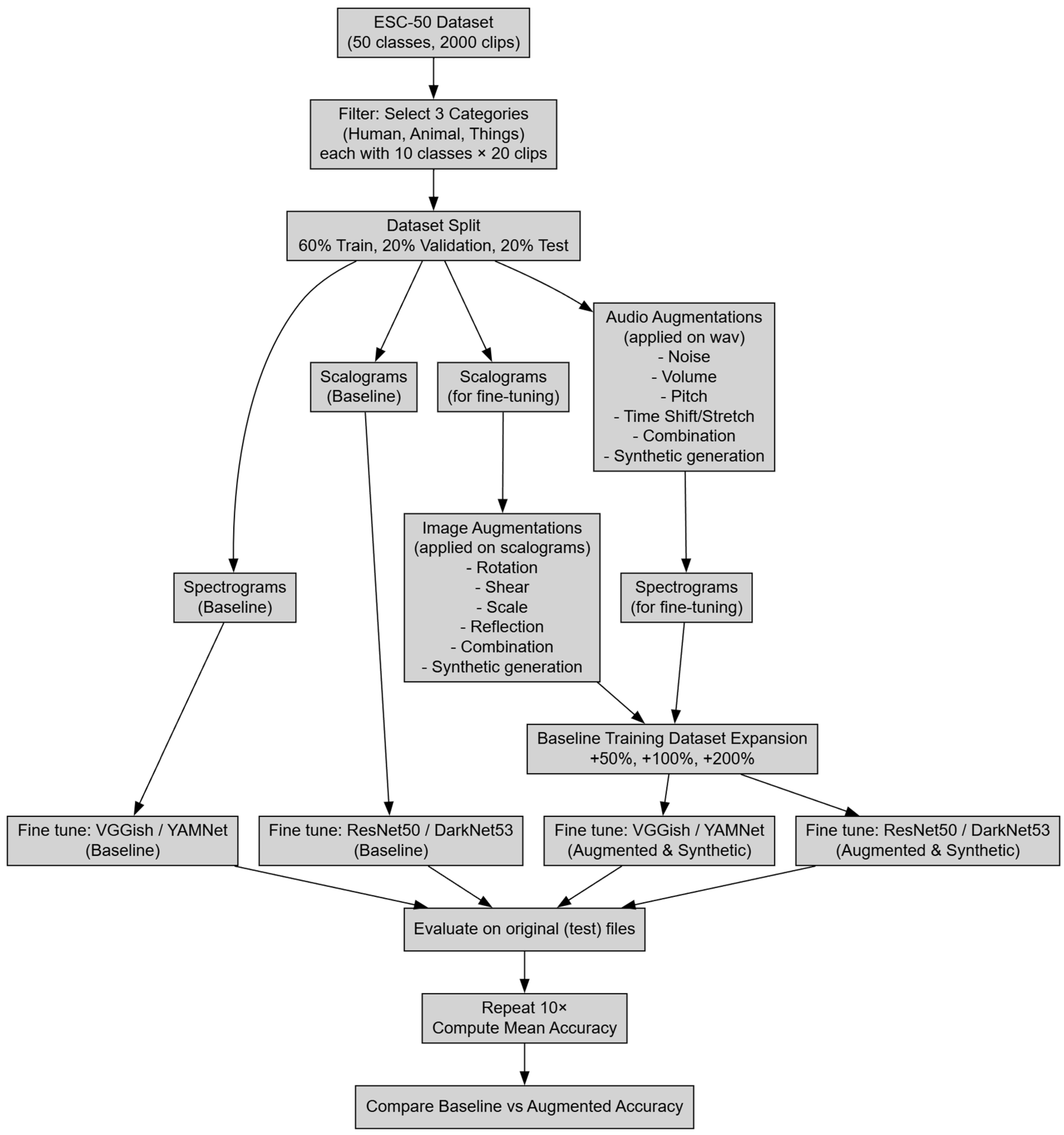

3. Materials and Methods

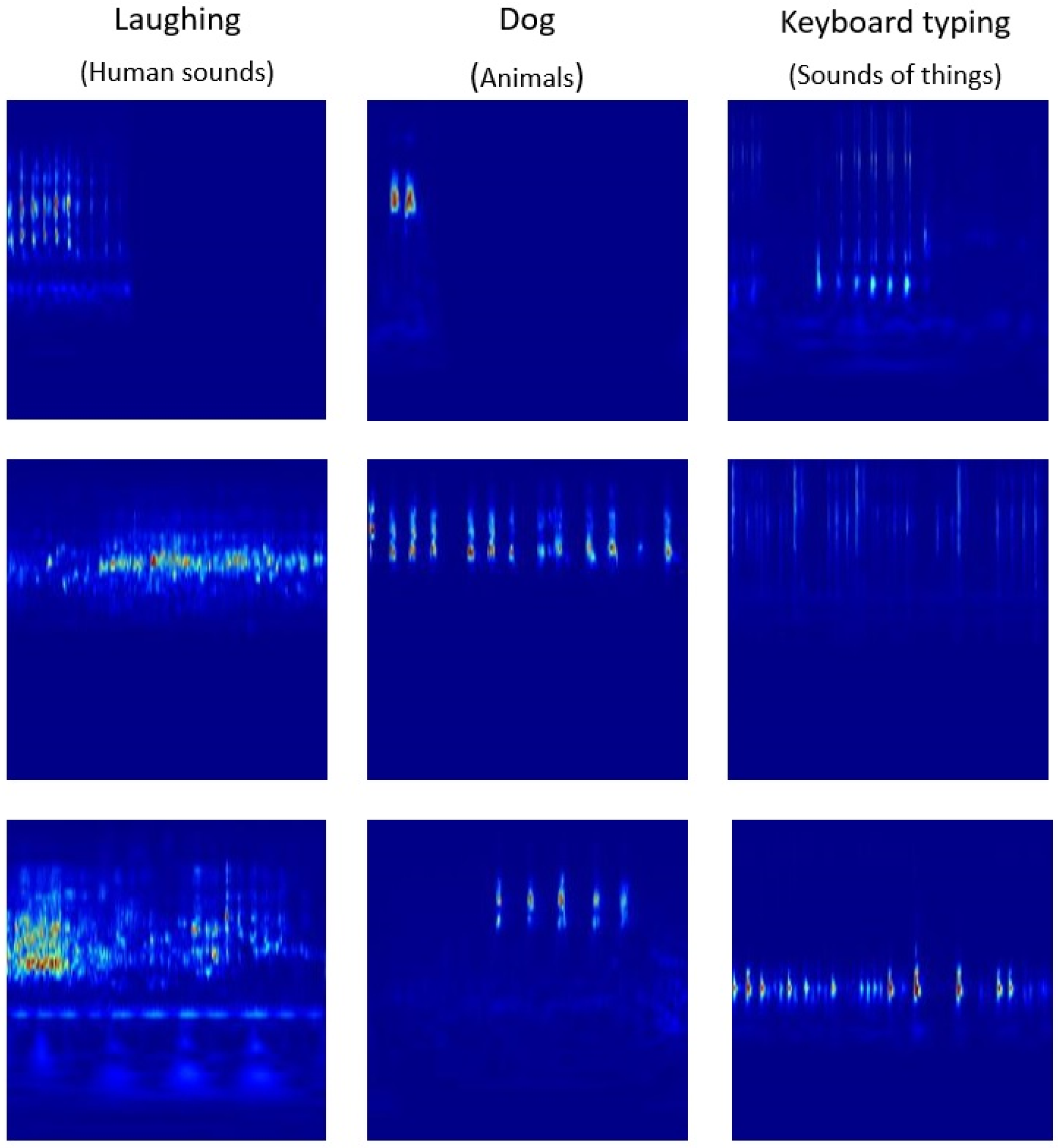

3.1. Selection of Sound Categories and Dataset Configuration

3.2. Augmentation Techniques

3.3. Synthetic Audio Generation

3.4. Methodology

4. Results

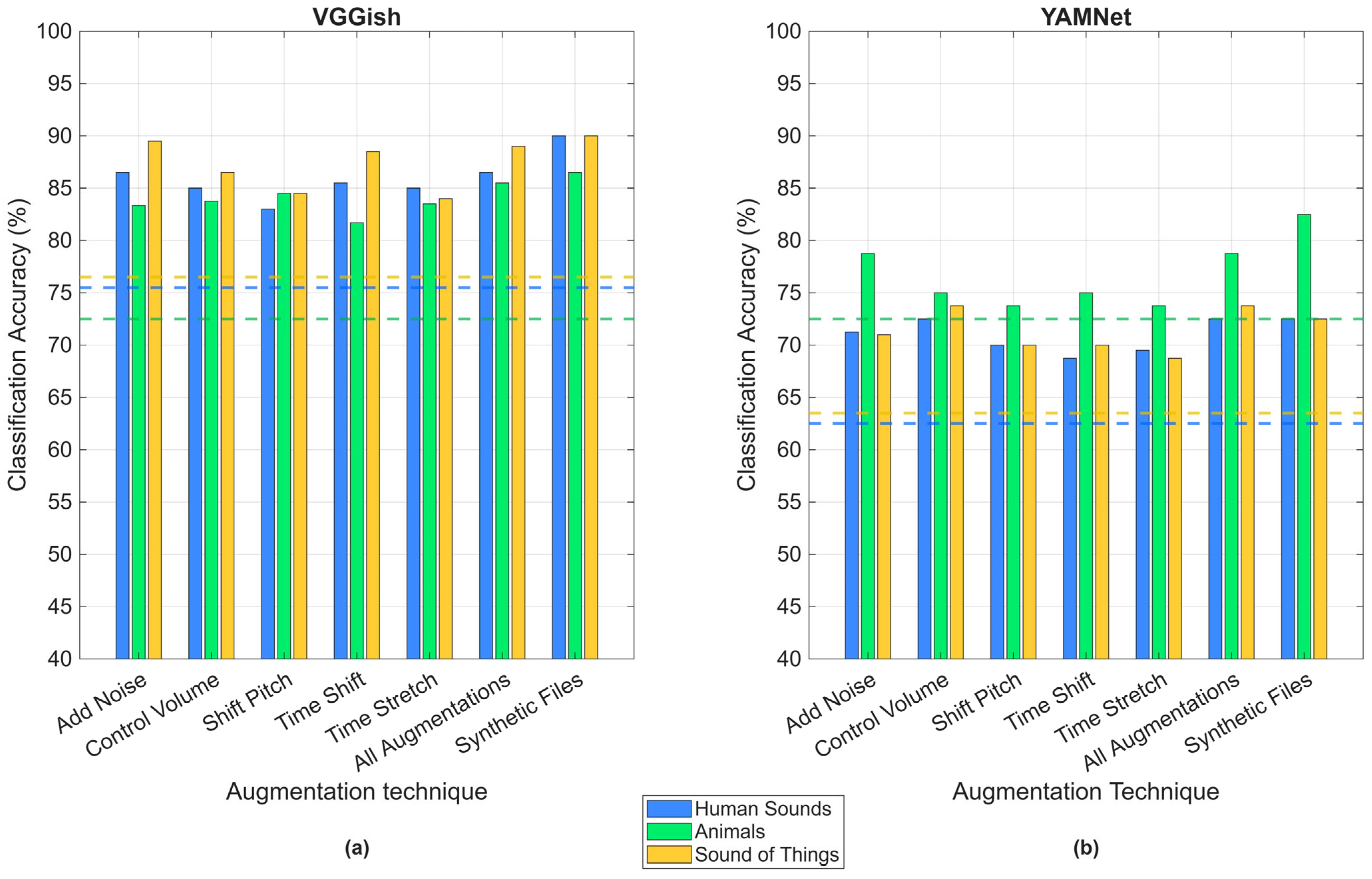

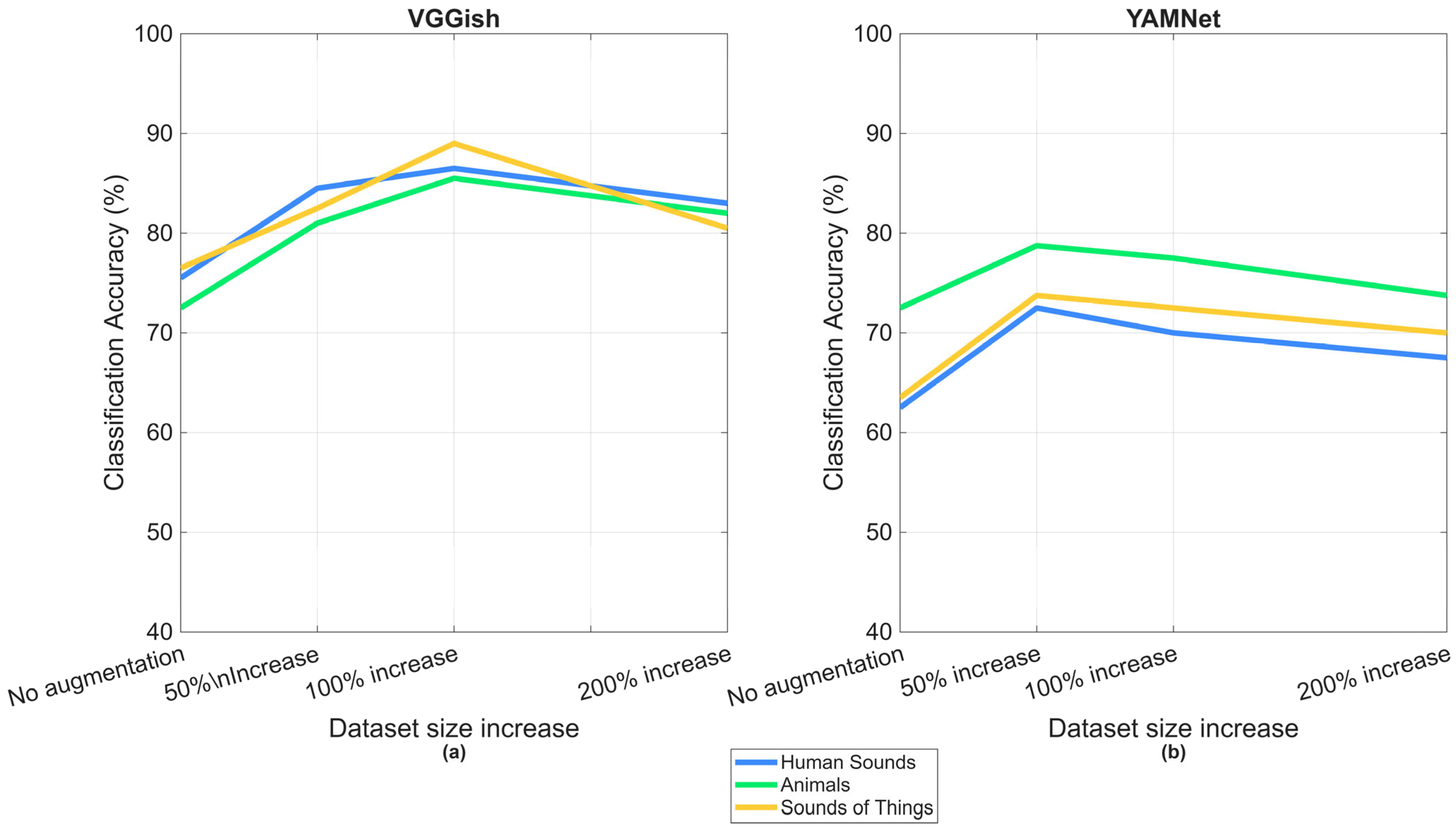

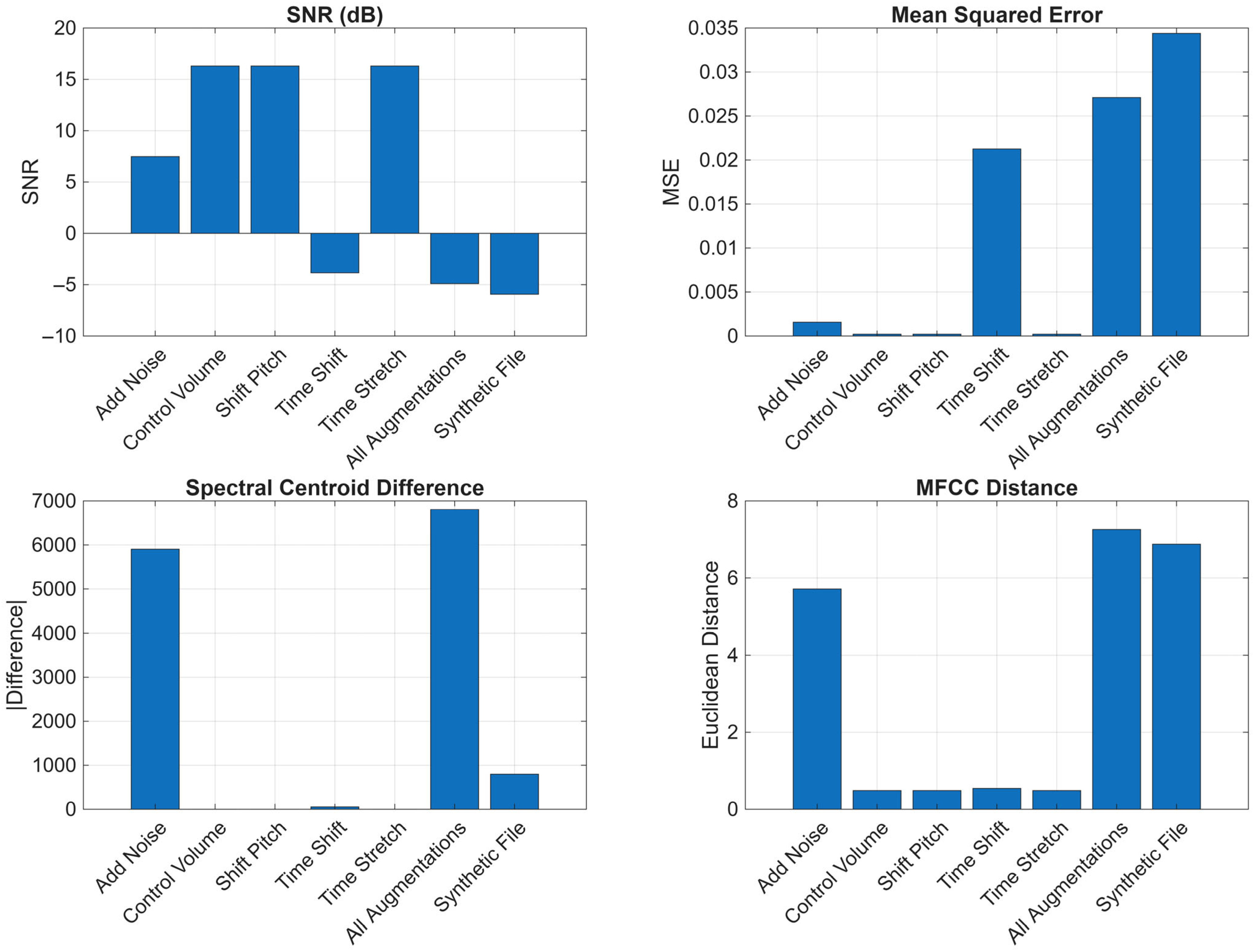

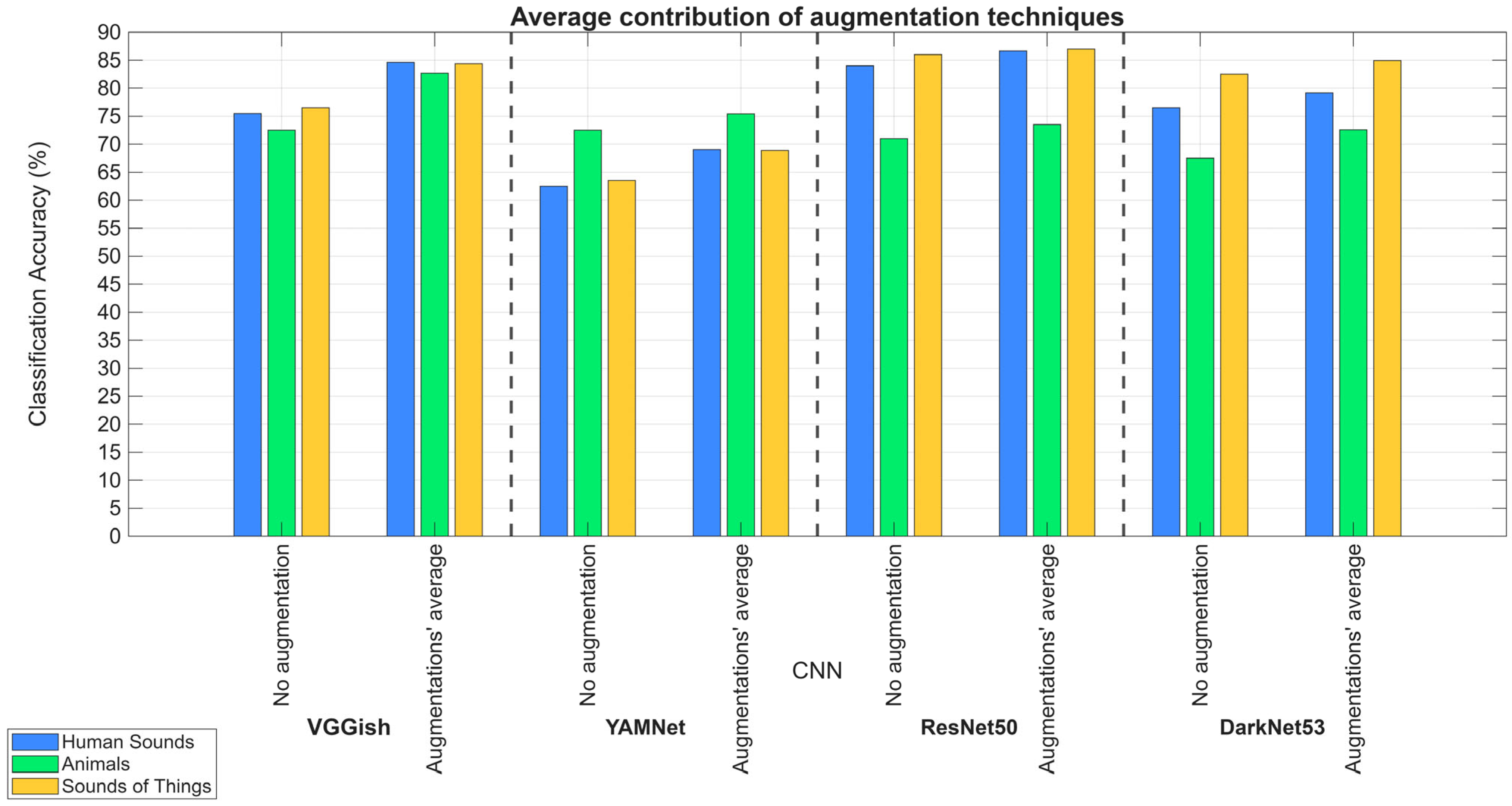

4.1. Sound Augmentation Techniques

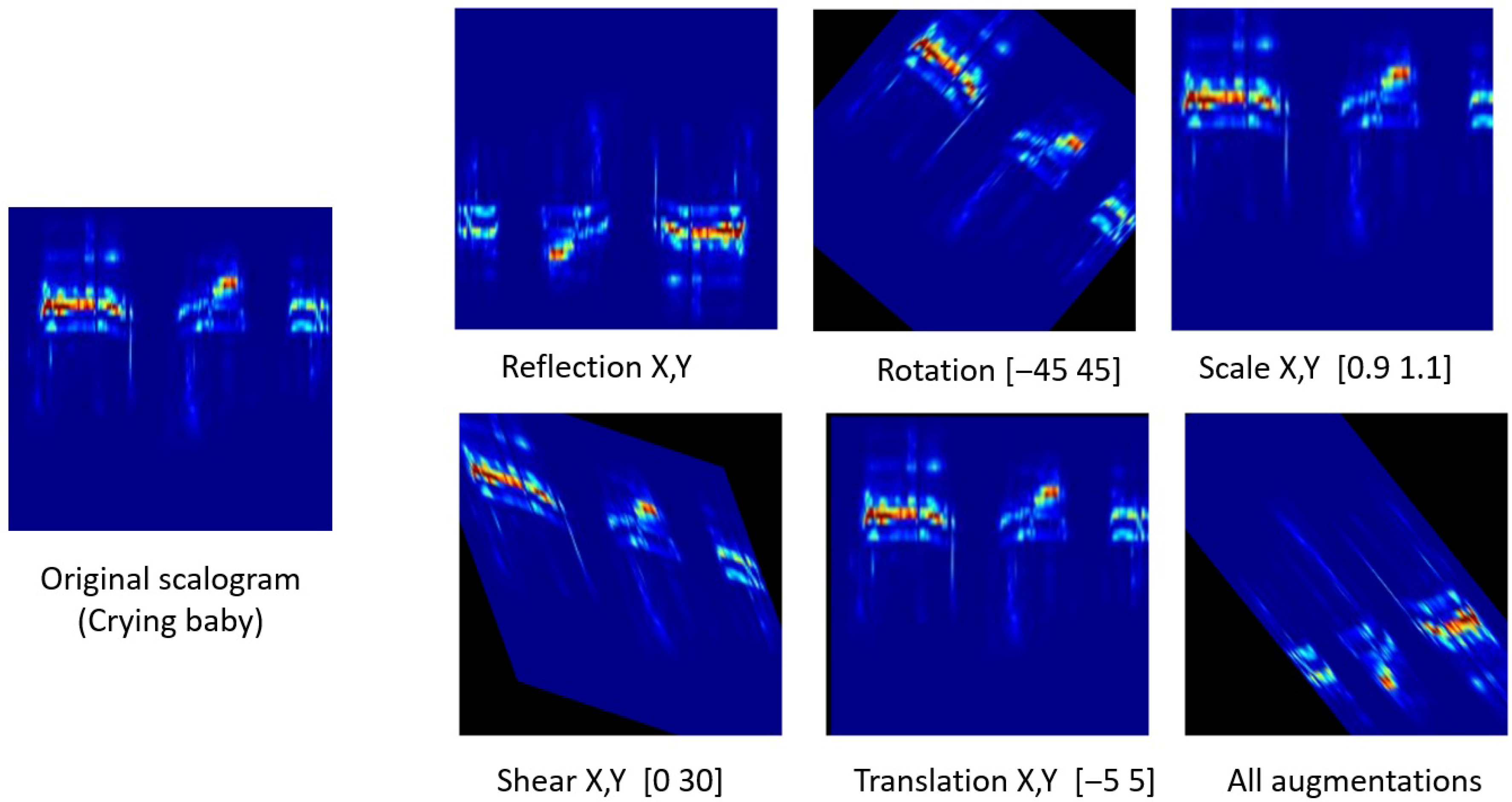

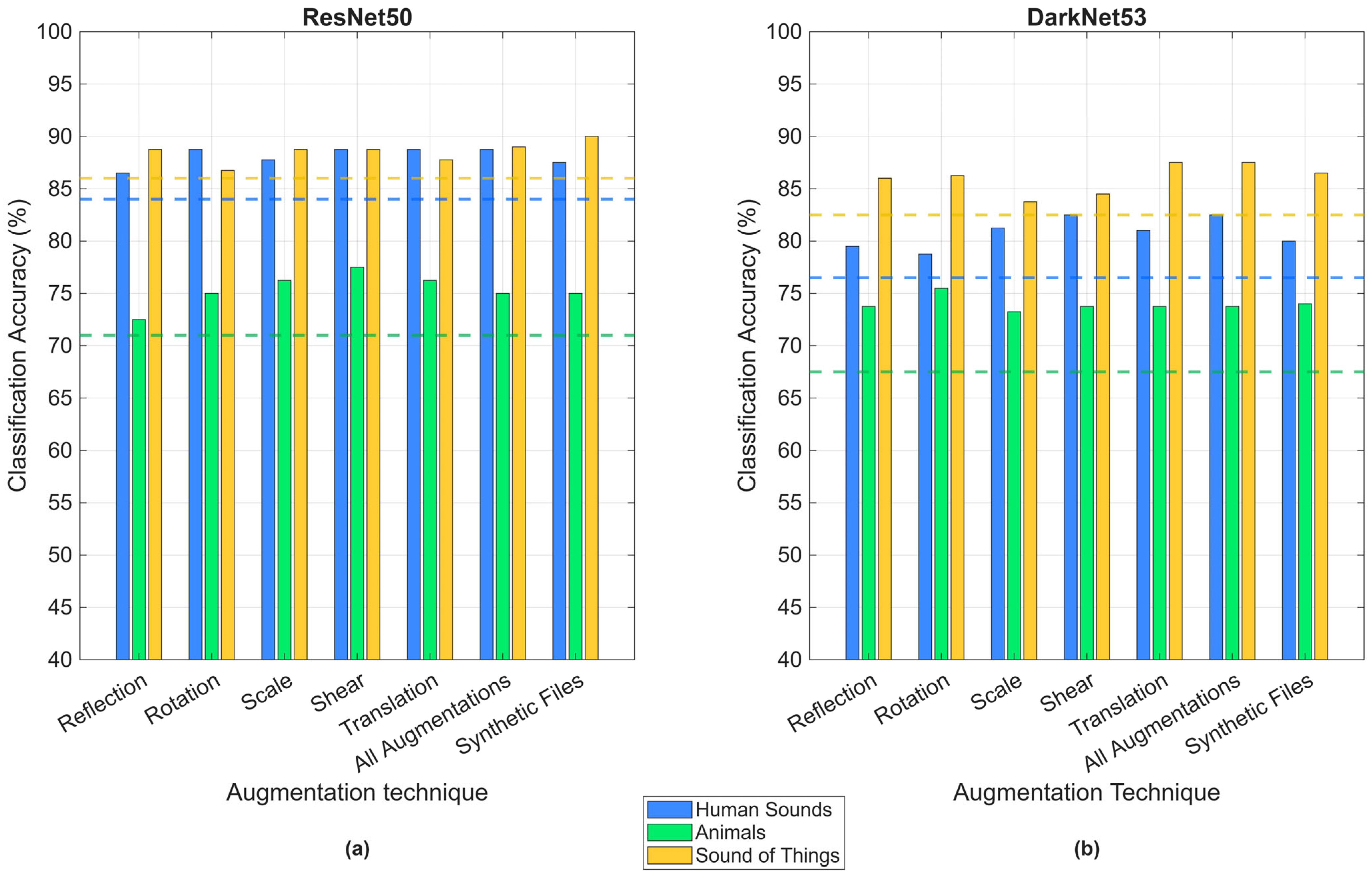

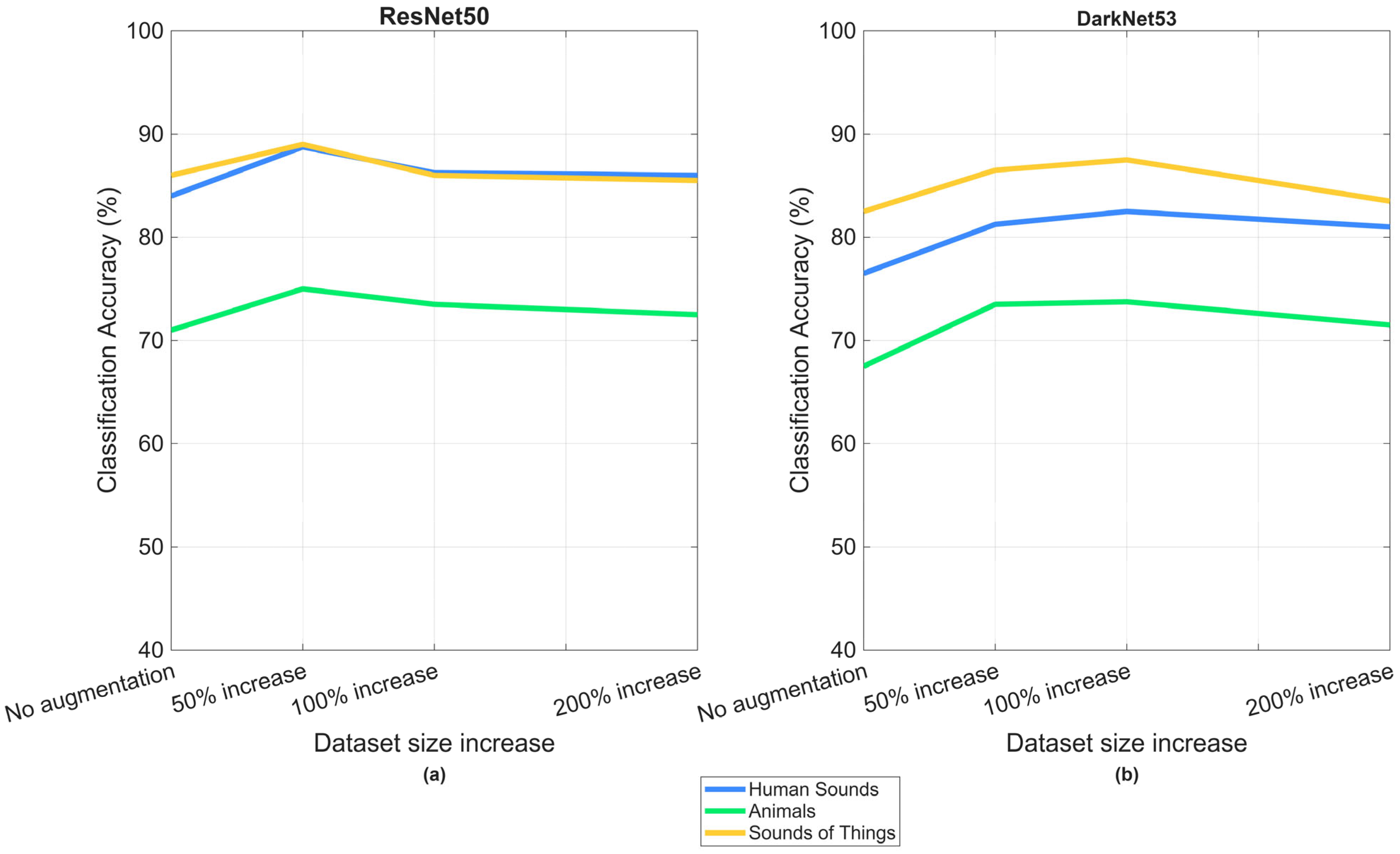

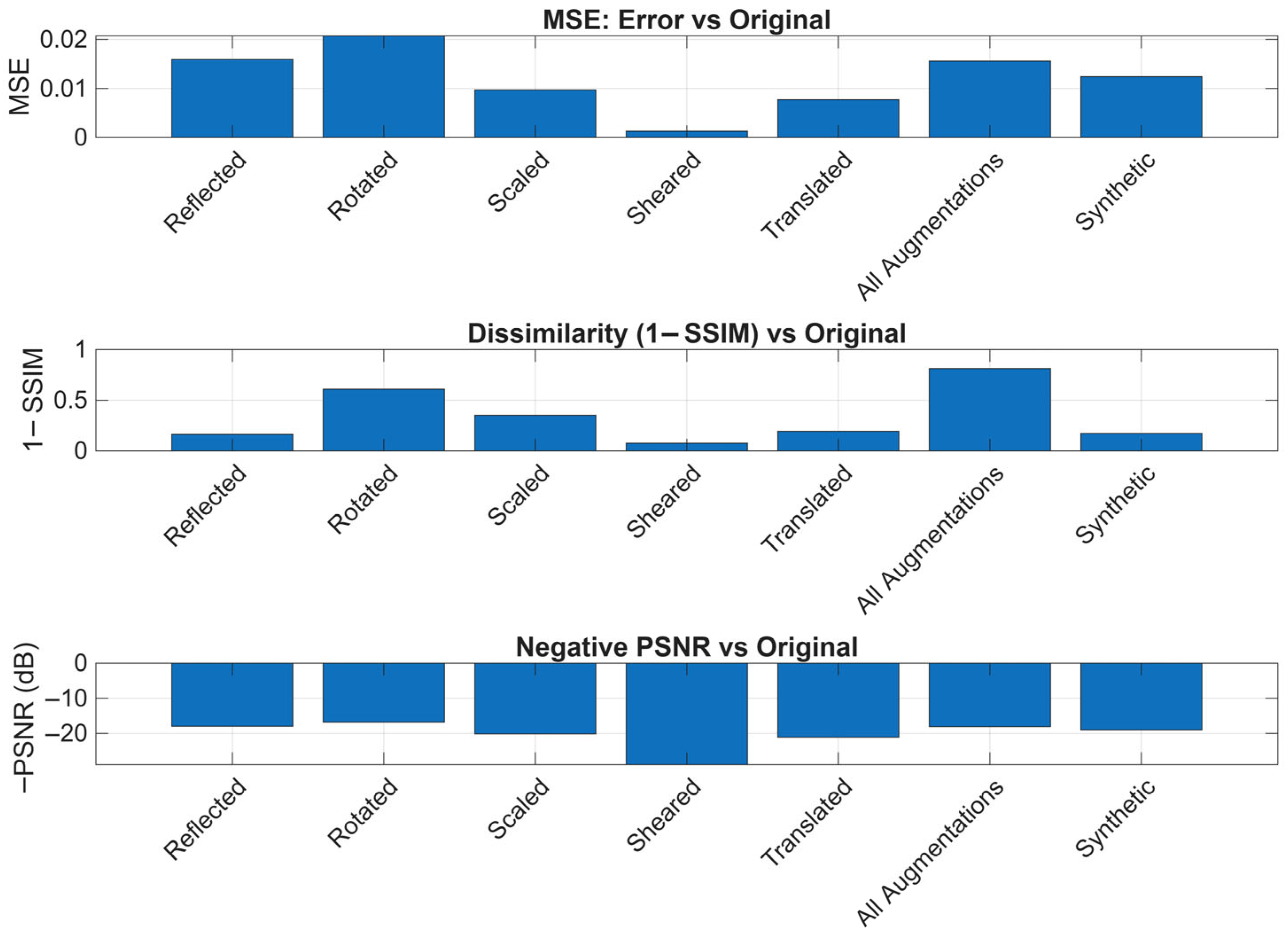

4.2. Scalogram Augmentation Techniques

5. Discussion

5.1. Sound Augmentation

5.2. Image (Scalogram) Augmentation

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AST | Audio Spectrogram Transformer |
| CNN(s) | Convolutional Neural Network(s) |
| CWT | Continuous Wavelet Transformation |
| DCASE | Detection and Classification of Acoustic Scenes and Events |
| GAN(s) | Generative Adversarial Network(s) |
| HITL | Human-In-The-Loop |
| LLM(s) | Large Language Model(s) |
| LPCNet | Linear Prediction Coding Network |
| LSTM | Long Short-Term Memory |
| MFCC | Mel-Frequency Cepstral Coefficients |
| MIR | Music Information Retrieval |
| ML | Machine Learning |
| MSE | Mean Square Error |
| (P)SNR | (Peak) Signal-to-Noise Ratio |
| RNN | Recurrent Neural Network |
| RVQ | Residual Vector Quantization |
| SER | Sound Event Recognition |
| SSIM | Structural Similarity Index Measure |
| STFT | Short-Time Fourier Transform |
| TSM | Time-Scale Modification |
| (VQ-)VAE | (Vector Quantized-) Variational AutoEncoder |

Appendix A

| Sound Augmentations | Human Sounds (VGGish) | Human Sounds (YAMNet) | Animals (VGGish) | Animals (YAMNet) | Sounds of Things (VGGish) | Sounds of Things (YAMNet) |
|---|---|---|---|---|---|---|
| Baseline (with No Augmented Files) | 75.50 | 62.50 | 72.50 | 72.50 | 76.50 | 63.50 |
| Add Noise | | | | | | |
| 50% increase | 81.50 | 71.25 | 82.73 | 78.75 | 85.00 | 71.00 |
| 100% increase | 86.50 | 67.50 | 83.33 | 75.00 | 89.50 | 68.75 |
| 200% increase | 83.50 | 67.50 | 80.70 | 75.00 | 81.60 | 68.55 |
| Control Volume | | | | | | |
| 50% increase | 84.50 | 72.50 | 83.75 | 75.00 | 82.50 | 73.75 |
| 100% increase | 85.00 | 71.25 | 83.75 | 73.75 | 86.50 | 67.50 |
| 200% increase | 84.00 | 65.00 | 78.75 | 73.00 | 83.50 | 66.75 |
| Shift Pitch | | | | | | |
| 50% increase | 82.50 | 70.00 | 80.00 | 73.75 | 82.00 | 70.00 |
| 100% increase | 83.00 | 68.75 | 84.50 | 73.75 | 84.50 | 70.00 |
| 200% increase | 82.00 | 67.50 | 81.70 | 73.50 | 80.50 | 66.25 |
| Time Shift | | | | | | |
| 50% increase | 84.00 | 68.75 | 81.70 | 75.00 | 82.00 | 70.00 |
| 100% increase | 85.50 | 67.25 | 81.70 | 73.75 | 88.50 | 63.75 |
| 200% increase | 84.00 | 65.00 | 80.80 | 73.75 | 84.50 | 65.75 |
| Time Stretch | | | | | | |
| 50% increase | 83.50 | 69.50 | 81.70 | 73.75 | 81.50 | 68.75 |
| 100% increase | 85.00 | 67.75 | 83.50 | 73.50 | 84.00 | 63.75 |
| 200% increase | 81.50 | 67.50 | 82.00 | 73.00 | 81.50 | 62.50 |
| All augmentations | | | | | | |
| 50% increase | 84.50 | 72.50 | 81.00 | 78.75 | 82.50 | 73.75 |
| 100% increase | 86.50 | 70.00 | 85.50 | 77.50 | 89.00 | 72.50 |
| 200% increase | 83.00 | 67.50 | 82.00 | 73.75 | 80.50 | 70.00 |
| Synthetic Sounds | | | | | | |
| 50% increase | 88.33 | 72.50 | 85.50 | 82.50 | 85.50 | 72.50 |
| 100% increase | 90.00 | 70.00 | 86.50 | 80.00 | 90.00 | 71.00 |
| 200% increase | 88.33 | 67.50 | 85.00 | 77.50 | 86.50 | 70.00 |
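
The table above can be read as a gain over the baseline, in percentage points. The following is a minimal sketch of that reading, using only the synthetic-sound rows of the Human Sounds column for VGGish, copied from the table. The helper name `gain_over_baseline` is hypothetical, and treating the values as accuracy percentages is an assumption based on the paper's title.

```python
# Values copied from the Human Sounds / VGGish column of the table above.
BASELINE = 75.50
SYNTHETIC = {"50%": 88.33, "100%": 90.00, "200%": 88.33}


def gain_over_baseline(baseline, results):
    """Return the gain in percentage points for each dataset increase level."""
    return {level: round(acc - baseline, 2) for level, acc in results.items()}


gains = gain_over_baseline(BASELINE, SYNTHETIC)
best_level = max(gains, key=gains.get)  # level with the largest gain

print(gains)
print("Largest gain at:", best_level)
```

For this column the gain peaks at the 100% increase and falls back at 200%, a pattern that also appears in several other columns of the table.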

| Image Augmentations | Human Sounds (ResNet50) | Human Sounds (DarkNet53) | Animals (ResNet50) | Animals (DarkNet53) | Sounds of Things (ResNet50) | Sounds of Things (DarkNet53) |
|---|---|---|---|---|---|---|
| Baseline (with No Augmented Files) | 84.00 | 76.50 | 71.00 | 67.50 | 86.00 | 82.50 |
| Reflection [X, Y] | | | | | | |
| 50% increase | 86.50 | 77.00 | 72.50 | 72.50 | 88.75 | 85.00 |
| 100% increase | 85.75 | 79.50 | 71.25 | 73.75 | 86.50 | 86.00 |
| 200% increase | 83.50 | 75.25 | 68.50 | 71.25 | 85.50 | 85.50 |
| Rotation [−45 45] | | | | | | |
| 50% increase | 88.75 | 77.50 | 75.00 | 73.50 | 86.75 | 84.00 |
| 100% increase | 87.50 | 78.75 | 73.75 | 75.50 | 85.50 | 86.25 |
| 200% increase | 86.25 | 77.00 | 71.50 | 71.00 | 84.00 | 85.50 |
| Scale [0.8 1.2] | | | | | | |
| 50% increase | 87.75 | 77.50 | 76.25 | 75.00 | 88.75 | 83.50 |
| 100% increase | 86.50 | 81.25 | 73.75 | 73.25 | 86.25 | 83.75 |
| 200% increase | 86.25 | 77.50 | 72.50 | 72.50 | 85.75 | 84.00 |
| Shear [−30 30] | | | | | | |
| 50% increase | 88.75 | 77.75 | 77.50 | 70.00 | 88.75 | 83.75 |
| 100% increase | 87.50 | 82.50 | 76.25 | 73.75 | 87.50 | 84.50 |
| 200% increase | 86.25 | 81.00 | 73.75 | 72.00 | 86.00 | 83.75 |
| Translation [−50 50] | | | | | | |
| 50% increase | 88.75 | 78.75 | 76.25 | 72.50 | 87.75 | 83.50 |
| 100% increase | 86.25 | 81.00 | 75.00 | 73.75 | 87.00 | 87.50 |
| 200% increase | 86.00 | 78.75 | 72.50 | 71.25 | 86.25 | 86.25 |
| All augmentations | | | | | | |
| 50% increase | 88.75 | 81.25 | 75.00 | 73.50 | 89.00 | 86.50 |
| 100% increase | 86.25 | 82.50 | 73.50 | 73.75 | 86.00 | 87.50 |
| 200% increase | 86.00 | 81.00 | 72.50 | 71.50 | 85.50 | 83.50 |
| Synthetic Sounds | | | | | | |
| 50% increase | 87.50 | 78.50 | 75.00 | 72.50 | 90.00 | 85.50 |
| 100% increase | 86.25 | 80.00 | 73.50 | 74.00 | 89.50 | 86.50 |
| 200% increase | 81.00 | 78.50 | 68.00 | 67.50 | 85.75 | 81.50 |


| Human Sounds | Animals | Sounds of Things |
|---|---|---|
| Crying baby | Dog | Pouring water |
| Sneezing | Rooster | Toilet flush |
| Clapping | Pig | Door knock |
| Breathing | Cow | Mouse click |
| Coughing | Frog | Keyboard typing |
| Footsteps | Cat | Door, wood cracks |
| Laughing | Hen | Can opening |
| Brushing teeth | Insects (flying) | Vacuum cleaner |
| Snoring | Crow | Clock alarm |
| Drinking/sipping | Chirping birds | Clock tick |

| Augmentation Technique | Analogous Real-World Condition |
|---|---|
| Add Noise | Unexpected environmental interference |
| Control Volume | Differences in recording sensitivity or microphone distance |
| Pitch Shift | Variability in vocalizations or mechanical tones |
| Time Shift | Temporal misalignments in uncontrolled environments |
| Time Stretch | Temporal variability in sound events |
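
To make the first three techniques in the table concrete, the following is a minimal pure-Python sketch of noise addition, volume control, and time shift on a list of samples. It is an illustration under stated assumptions, not the authors' implementation: the function names, the 20 dB signal-to-noise ratio, the gain of 1.5, the circular (wrap-around) shift, and the toy sine input are all hypothetical. Pitch shift and time stretch are left out because they need resampling or a phase vocoder.

```python
import math
import random


def add_noise(signal, snr_db, rng):
    """Add white Gaussian noise at a target signal-to-noise ratio (in dB)."""
    power = sum(x * x for x in signal) / len(signal)
    noise_std = math.sqrt(power / (10 ** (snr_db / 10)))
    return [x + rng.gauss(0.0, noise_std) for x in signal]


def control_volume(signal, gain):
    """Scale the amplitude by a linear gain factor."""
    return [gain * x for x in signal]


def time_shift(signal, shift):
    """Circularly shift the samples by `shift` positions (positive = later)."""
    shift %= len(signal)
    return signal[-shift:] + signal[:-shift] if shift else list(signal)


# Toy input: 1 s of a 440 Hz sine at 8 kHz (hypothetical, not from the paper).
sr = 8000
tone = [math.sin(2 * math.pi * 440 * n / sr) for n in range(sr)]

rng = random.Random(0)  # fixed seed so the example is repeatable
noisy = add_noise(tone, snr_db=20, rng=rng)
louder = control_volume(tone, gain=1.5)
shifted = time_shift(tone, shift=sr // 4)  # delay by a quarter of a second
```

Each function returns a new list of the same length, so augmented clips can be added to the training set alongside the originals.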

| Augmentation Technique | Analogous Real-World Condition |
|---|---|
| Reflection | Mirrored or inverted acoustic structures |
| Rotation | Changes in angular positioning of time-frequency features |
| Scale | Amplitude or frequency scaling differences due to different devices |
| Shear | Distortions from recording processes |
| Translation | Temporal or frequency shifts in the scalograms |
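
The five image augmentations in the table are all affine transforms, so one 3×3 matrix in homogeneous coordinates can express any combination of them. The sketch below samples one such matrix using the ranges listed in Appendix A (rotation [−45, 45], scale [0.8, 1.2], shear [−30, 30], translation [−50, 50], reflection on X and Y). The units (degrees, pixels), the 50% reflection probability, the composition order, and the names `random_affine` and `apply_affine` are assumptions made for illustration; the source does not specify them.

```python
import math
import random


def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def random_affine(rng):
    """Sample one affine transform from the ranges listed in Appendix A."""
    angle = math.radians(rng.uniform(-45, 45))   # rotation, assumed degrees
    scale = rng.uniform(0.8, 1.2)                # isotropic scale factor
    shear = math.radians(rng.uniform(-30, 30))   # horizontal shear, assumed degrees
    tx, ty = rng.uniform(-50, 50), rng.uniform(-50, 50)  # assumed pixels
    flip_x = -1.0 if rng.random() < 0.5 else 1.0  # left-right reflection
    flip_y = -1.0 if rng.random() < 0.5 else 1.0  # up-down reflection

    scale_flip = [[scale * flip_x, 0.0, 0.0],
                  [0.0, scale * flip_y, 0.0],
                  [0.0, 0.0, 1.0]]
    shear_m = [[1.0, math.tan(shear), 0.0],
               [0.0, 1.0, 0.0],
               [0.0, 0.0, 1.0]]
    rotate = [[math.cos(angle), -math.sin(angle), 0.0],
              [math.sin(angle), math.cos(angle), 0.0],
              [0.0, 0.0, 1.0]]
    translate = [[1.0, 0.0, tx],
                 [0.0, 1.0, ty],
                 [0.0, 0.0, 1.0]]
    # Applied right to left: reflect/scale, shear, rotate, then translate.
    return matmul(translate, matmul(rotate, matmul(shear_m, scale_flip)))


def apply_affine(matrix, x, y):
    """Map a pixel coordinate (x, y) through the affine matrix."""
    return (matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
            matrix[1][0] * x + matrix[1][1] * y + matrix[1][2])


m = random_affine(random.Random(0))
origin_moved_to = apply_affine(m, 0.0, 0.0)  # equals the sampled translation
print(origin_moved_to)
```

Warping a scalogram with such a matrix would additionally require resampling the pixel grid, which an image library normally handles.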

| Prompts (Including Background) | Prompts (Without Background) |
|---|---|
| Dog | Dog |
| “A puppy whining softly in its crate.” | “A dog growling protectively.” |
| “Excited barking during a game of fetch.” | “A dog panting after exercise.” |
| “A dog growling protectively at night.” | “A small dog barking at a cat.” |
| Baby crying | Baby crying |
| “A newborn baby softly crying in a hospital room.” | “A baby crying loudly.” |
| “A baby crying loudly in a small apartment.” | “A baby crying intermittently.” |
| “A baby crying while being rocked in a cradle.” | “A baby crying continuously.” |
| Water pouring | Water pouring |
| “Pouring water into a glass in a quiet kitchen.” | “Pouring water into a kettle.” |
| “Water being poured from a kettle into a teacup.” | “Pouring water into a jug.” |
| “Pouring water into a bathtub with echoes in the bathroom.” | “Pouring water into a sink.” |

| CNN | Depth | Parameters (Millions) | Memory Size (MB) | FLOPs (GFLOPs) |
|---|---|---|---|---|
| VGGish | 10 | 62 | 237 | 15.5 |
| YAMNet | 28 | 3.2 | 12 | 0.57 |
| ResNet50 | 50 | 25.6 | 98 | 4.1 |
| DarkNet53 | 53 | 41.6 | 159 | 7.3 |
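
The Memory Size column is consistent with storing every parameter as a 32-bit float and reporting the total in binary megabytes (2^20 bytes). This is an inference from the numbers, not something the table states. A short check, with the parameter counts copied from the table and a hypothetical helper name `memory_mib`:

```python
# Parameter counts (in millions) copied from the table above.
PARAMS_MILLIONS = {"VGGish": 62, "YAMNet": 3.2, "ResNet50": 25.6, "DarkNet53": 41.6}
BYTES_PER_PARAM = 4  # assumption: weights stored as 32-bit floats


def memory_mib(params_millions):
    """Estimate weight storage in binary megabytes (1 MiB = 2**20 bytes)."""
    return params_millions * 1e6 * BYTES_PER_PARAM / 2**20


for name, params in PARAMS_MILLIONS.items():
    print(f"{name}: {memory_mib(params):.0f} MB")
# VGGish: 237 MB
# YAMNet: 12 MB
# ResNet50: 98 MB
# DarkNet53: 159 MB
```

Under this assumption the estimates reproduce the four table values after rounding, which suggests the column counts weights only and excludes activations and optimizer state.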


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tsalera, E.; Papadakis, A.; Pagiatakis, G.; Samarakou, M. Impact Evaluation of Sound Dataset Augmentation and Synthetic Generation upon Classification Accuracy. J. Sens. Actuator Netw. 2025, 14, 91. https://doi.org/10.3390/jsan14050091
