Drone Imaging and Sensors for Situational Awareness in Hazardous Environments: A Systematic Review

Abstract

1. Introduction

- First, this study investigates how advanced imaging and sensor technologies are integrated in drone operations to enhance situational awareness in hazardous environments, that is, environments that pose significant risks to human health, safety, and operations. These include dangerous environments with extreme conditions, restricted zones with controlled access, and high-risk areas prone to natural disasters or industrial accidents. Critical zones and threat-sensitive regions require heightened surveillance because of security concerns, while unsafe environments, such as contaminated zones and hostile territories, may contain hazardous pollutants or present security threats and therefore call for remote sensing to maintain situational awareness.

- Second, by examining the integration of these technologies, this research aims to assess the effectiveness of UAVs in detecting hazards, enhancing real-time decision-making, and ensuring safer operations in complex and challenging conditions.

- Lastly, the review assesses the effectiveness of UAVs in identifying and managing hazardous materials, thereby contributing to enhanced safety measures and improved risk mitigation strategies in hazardous environments.

2. Background of the Research

2.1. UAVs in Disaster and Emergency Management

2.2. UAVs in Military Operations

2.3. UAVs for Hazardous Material Incidents

3. Research Methodology

3.1. Research Method

3.2. Search Criteria

3.2.1. Search Query for Disaster and Emergency Management

3.2.2. Search Query for Military Operations

3.2.3. Search Query for Hazardous Material (HAZMAT) Incidents

3.3. Inclusion and Exclusion Criteria of Sampling

3.4. Review of Relevant Literature

4. Discussion

4.1. Imaging and Sensor Technologies Used in Disaster and Emergency Management

- Imaging and sensor technologies integrated with drones not only play a critical role in managing natural disasters and hazardous situations, but also contribute supplementary functions to a range of high-risk scenarios. These include supporting the delivery of first aid, detecting falls among elderly individuals, enhancing situational awareness for first responders to improve decision-making under duress, and monitoring the storage conditions of dangerous goods to prevent potentially fatal incidents for personnel working in such environments.

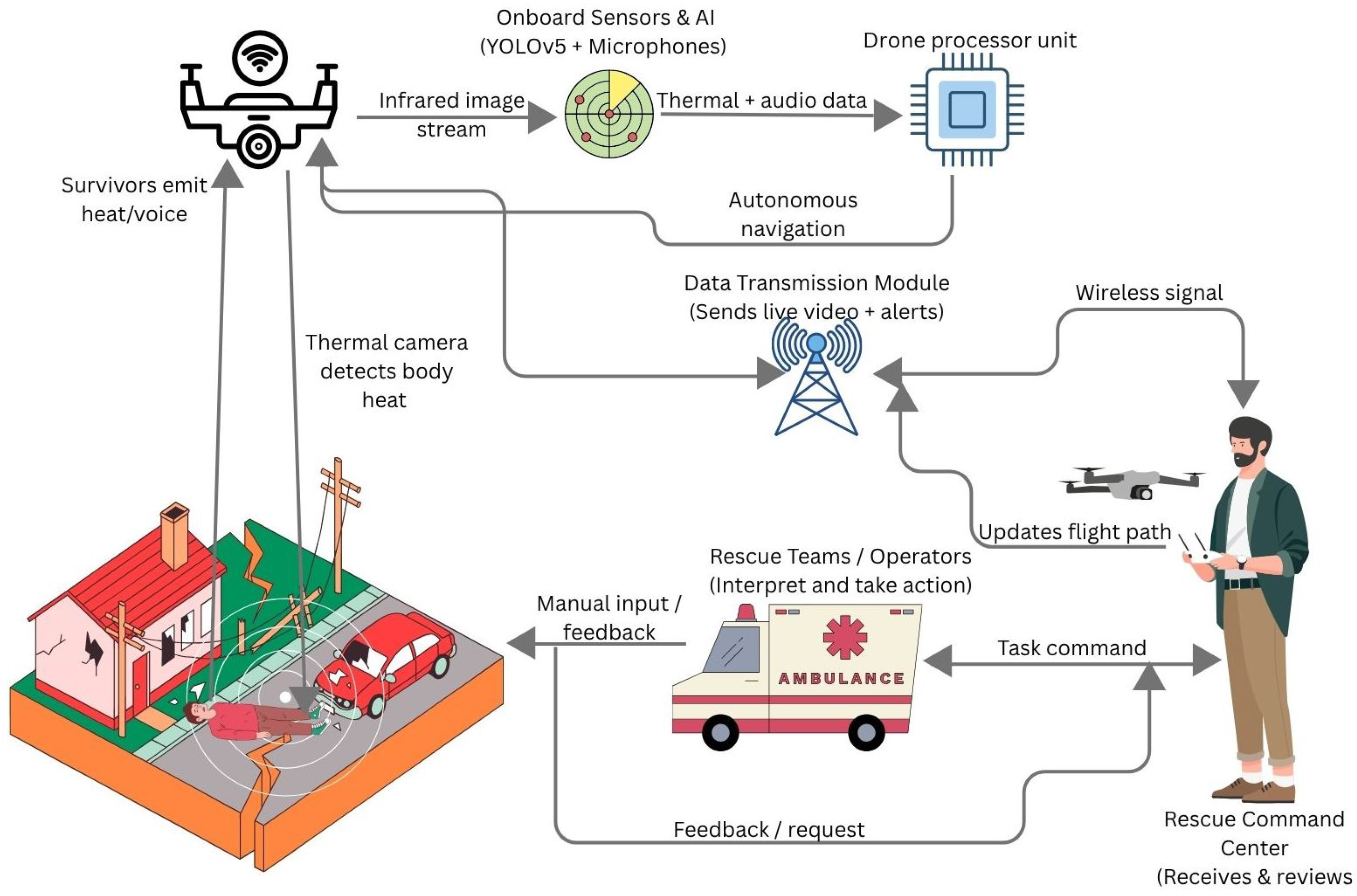

- Most imaging and sensor technologies in drones are not stand-alone systems; they deliver greater benefit when integrated with wireless networks, big data, and AI. This combination enables real-time data processing, which improves the accuracy and effectiveness of disaster response operations.

- Most research in disaster and emergency management aims to support frontline personnel by enhancing situational awareness, reducing unnecessary workload, and facilitating more effective decision-making. These improvements contribute not only to the safety of emergency responders but also to the timely assistance and potential survival of individuals in critical need during emergencies.

- Crowdsensing platforms have attracted growing research interest, mainly due to the increased accessibility of smartphones, wearable devices, and intelligent systems integrated into modern vehicles. These platforms enable the collection of large-scale, real-time data from numerous individuals, offering valuable insights for disaster and emergency management. However, concerns regarding data privacy and user security remain critical and must be carefully addressed in both the design and implementation of such systems.

- A significant proportion of the excluded papers (62 out of 100) focus primarily on data transmission between sensors and on the integration of technologies such as AI, big data, and wireless communication. This trend aligns with the earlier observation that imaging and sensor technologies are no longer stand-alone systems: their integration within intelligent networks enhances both the speed and accuracy of results. Consequently, research interest is shifting toward system-level integration and data processing, rather than solely examining how cameras detect objects or individuals, or how sensors function in isolation.

- The findings indicate that the majority of studies prioritize enhancing situational awareness and decision-making for first responders, highlighting the central role of UAVs in real-time disaster management. A clear distinction emerges between experimental studies, which focus on developing and testing UAV prototypes, and observational or conceptual studies, which emphasize frameworks, systematic reviews, and integration strategies.

- Thermal and infrared imaging technologies remain the most dominant tools across applications, while multispectral, hyperspectral, and LiDAR systems are increasingly adopted to provide high-resolution environmental monitoring and mapping. The integration of multi-sensor platforms, spanning environmental (temperature, humidity, gas, radiation), biomedical (ECG, respiration, blood pressure), and safety-critical (seismic, motion, pressure) domains, demonstrates the expanding scope of UAV-based disaster monitoring.

- Several studies underscore the role of AI, deep learning, and 5G connectivity in improving automation, predictive analytics, and coordination efficiency in emergency response operations. The emergence of extended reality (XR) and augmented reality (AR) applications suggests growing attention to training, decision support, and situational awareness in disaster risk reduction.
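The multi-sensor grouping described above (environmental, biomedical, and safety-critical domains) can be pictured as a small data structure. The following is a minimal sketch only: the `SensorReading` type, the `SENSOR_DOMAINS` mapping keys, and the `group_by_domain` helper are hypothetical names introduced for illustration and are not drawn from any of the reviewed systems. Only the three domain labels and their example sensors come from the text.

```python
from collections import defaultdict
from dataclasses import dataclass

# Domain labels and example sensors follow the review's wording;
# the dictionary keys themselves are illustrative identifiers (assumption).
SENSOR_DOMAINS = {
    "temperature": "environmental",
    "humidity": "environmental",
    "gas": "environmental",
    "radiation": "environmental",
    "ecg": "biomedical",
    "respiration": "biomedical",
    "blood_pressure": "biomedical",
    "seismic": "safety-critical",
    "motion": "safety-critical",
    "pressure": "safety-critical",
}


@dataclass(frozen=True)
class SensorReading:
    """One measurement reported by a UAV payload (hypothetical schema)."""
    sensor: str
    value: float
    unit: str


def group_by_domain(readings):
    """Group readings by monitoring domain; unknown sensors go to 'unclassified'."""
    grouped = defaultdict(list)
    for reading in readings:
        domain = SENSOR_DOMAINS.get(reading.sensor, "unclassified")
        grouped[domain].append(reading)
    return dict(grouped)


if __name__ == "__main__":
    sample = [
        SensorReading("temperature", 41.5, "degC"),
        SensorReading("ecg", 72.0, "bpm"),
        SensorReading("seismic", 0.02, "g"),
    ]
    for domain, items in group_by_domain(sample).items():
        print(domain, [r.sensor for r in items])
```

Grouping by domain in this way is one possible first step before routing readings to domain-specific processing; real platforms would also need timestamps, geolocation, and calibration metadata.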

4.2. Imaging and Sensor Technologies Used in Military Operations

- Some studies were excluded from the military results because the technologies were applied primarily in geological, environmental, and marine surveillance, overlapped with disaster and emergency management studies, or were used for tracking, delivery services, and general surveillance. These off-topic results most likely appeared because the search query included the term 'surveillance', which is used broadly across non-military industries.

- Several further studies were excluded because they focus predominantly on the Internet of Things (IoT), wireless sensor networks, and data extraction and collection processes. While these topics are important, they do not emphasize imaging and sensor technologies, which are the central focus of this paper.

- The studies presented in Table 2 predominantly explore the application of drone technologies integrated with advanced sensor systems to enhance military operations. These include the use of sensors for real-time threat detection, integration with wireless sensor networks for improved communication and data sharing, and monitoring systems for tracking the health and activity of military personnel. Collectively, these technologies play a crucial role in strengthening situational awareness, supporting tactical decision-making processes for soldiers and commanders, and improving the effectiveness of Intelligence, Surveillance, and Reconnaissance (ISR) missions.

- The body of research indicates that imaging and sensor technologies developed for military drones are increasingly applicable to civilian domains. Although drone technology originally emerged from military contexts, contemporary advancements have facilitated significant improvements in various civilian sectors. Consequently, this crossover is evident in the research findings, where the reviewed technologies are shown to be shared and adapted across both military and civilian industries.

- UAVs have become increasingly central to military operations, with research emphasizing improvements in situational awareness, reconnaissance, surveillance, and decision-making support across diverse operational contexts. Experimental studies typically focus on developing and validating UAV prototypes, multi-sensor platforms, and AI-driven systems, whereas observational studies emphasize frameworks, reviews, and integration strategies for operational deployment.

- Imaging technologies commonly employed include thermal and infrared cameras, high-resolution optical cameras, multispectral and hyperspectral cameras, and visible light imaging. Sensor technologies encompass environmental (temperature, pressure, seismic, chemical, biological, and radiation), motion (PIR, acoustic, and radar), biomedical (ECG and wearable), and LiDAR or laser-based systems. Integration of these multi-modal sensors facilitates autonomous or semi-autonomous UAV operations in complex and high-risk environments. AI, machine learning, and data fusion enhance predictive capabilities, automate threat detection, identify anomalies, and improve operational decision-making.

- Key challenges in military UAV applications include operational reliability in GPS-denied or communication-limited environments, complex integration of emerging technologies (e.g., swarm UAVs, 5G connectivity, additive manufacturing of sensors), and cybersecurity concerns such as secure data transmission, operator and UAV monitoring, and mitigation of potential misuse.

- Emerging solutions aim to enhance UAV operational efficiency and effectiveness, including the deployment of edge computing, AI, and data fusion to optimize situational awareness and reduce reliance on centralized command; swarm UAV frameworks and autonomous coordination algorithms to improve coverage, responsiveness, and mission success; and multi-sensor UAV platforms combining optical, infrared, LiDAR, and chemical/biological sensors for comprehensive battlefield intelligence.
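As a concrete illustration of what "data fusion" across multi-modal sensors can mean at its simplest, the sketch below combines independent estimates of the same quantity (for example, a range to a target reported by LiDAR and by radar) using inverse-variance weighting. This is a standard textbook technique chosen here for illustration; the reviewed studies do not necessarily use it, and the function name `fuse_estimates` and the example numbers are assumptions.

```python
def fuse_estimates(estimates):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Each estimate is weighted by 1/variance, so more precise sensors
    contribute more. Returns (fused_value, fused_variance).
    """
    estimates = list(estimates)
    if not estimates:
        raise ValueError("at least one estimate is required")

    weights = []
    for _, variance in estimates:
        if variance <= 0:
            raise ValueError("variances must be positive")
        weights.append(1.0 / variance)

    total_weight = sum(weights)
    fused_value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight  # never larger than the smallest input variance
    return fused_value, fused_variance


if __name__ == "__main__":
    # Hypothetical readings: (range in metres, variance in metres squared).
    lidar = (10.0, 1.0)
    radar = (20.0, 4.0)
    print(fuse_estimates([lidar, radar]))
```

The fused variance is always at most the smallest input variance, which is the usual argument for combining sensors rather than relying on the best single one. The method assumes the sensor errors are independent and unbiased, which may not hold in the field.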

4.3. Imaging and Sensor Technologies Used in HAZMAT

- Although the reviewed studies do not explicitly state drone use in HAZMAT or CBRN scenarios, several show potential for such applications. The research aligns broadly with disaster and emergency management, with cases like hazardous environment monitoring, toxic gas detection, and industrial safety inspections suggesting relevance to HAZMAT. Thus, while not all studies directly target HAZMAT or CBRN, some drone systems exhibit characteristics that make them suitable for high-risk deployments.

- The excluded studies primarily concentrate on environmental monitoring applications that are not directly relevant to HAZMAT or CBRN scenarios. These include areas such as water quality assessment, detection of algal blooms or other forms of water contamination, crop health monitoring, and precision agriculture. While important for sustainability and resource management, these applications fall outside the scope of hazardous material detection and emergency response.

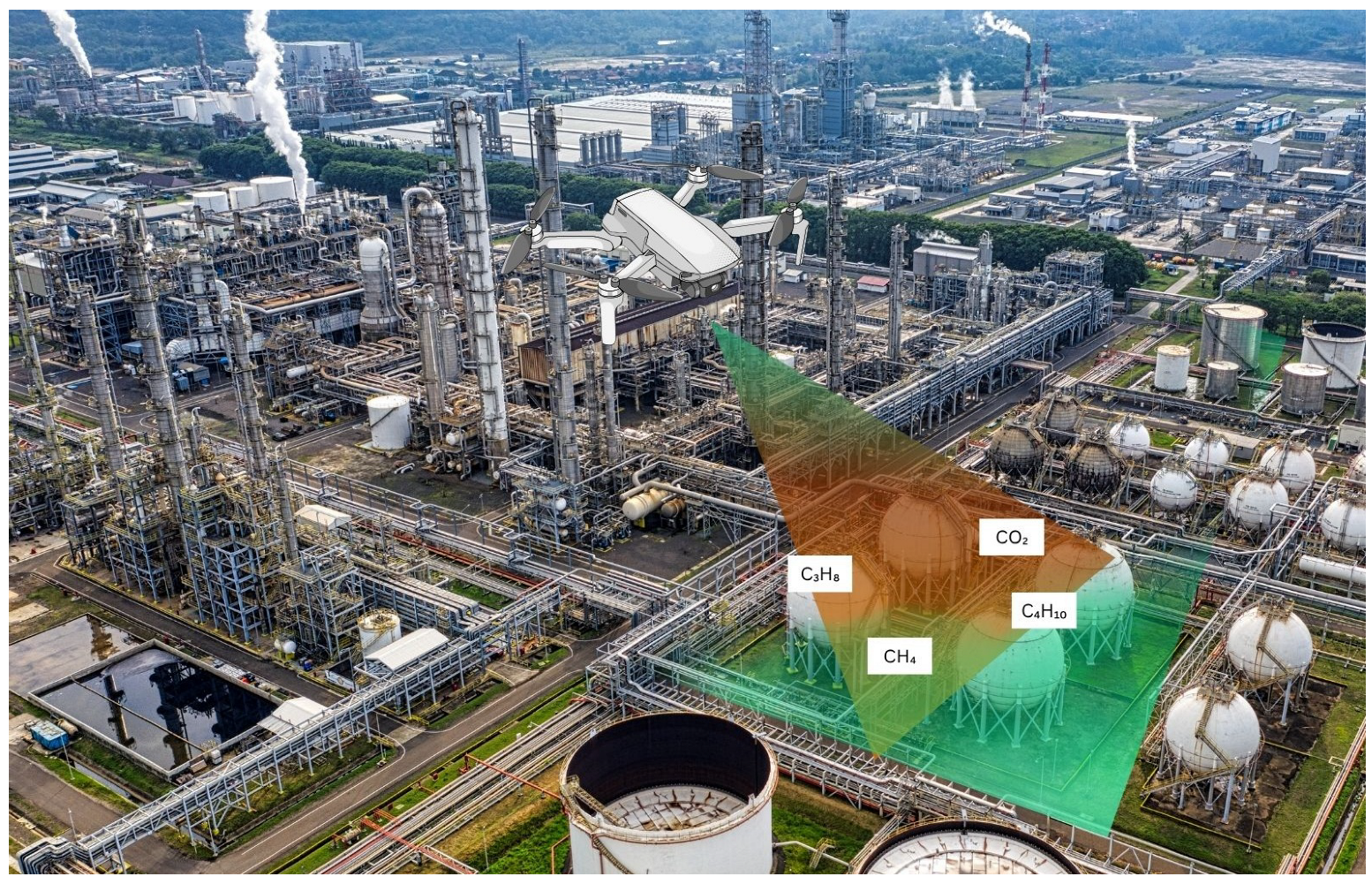

- Interestingly, the majority of the reviewed studies place greater emphasis on sensor technologies than on imaging systems. Most of the included research used metal-oxide (MOX) sensors and electrochemical sensors, highlighting a focus on gas detection and chemical analysis over visual data capture.

- Most studies in the HAZMAT category address scenarios involving chemical spills and the detection of hazardous gases. These works often emphasize real-time monitoring and early warning systems that use sensor-equipped drones to detect toxic substances in industrial environments. Comparatively few studies have explored other types of hazardous materials, such as radiological or biological agents, indicating a research focus on chemical-related threats.

- UAVs are increasingly employed in hazardous materials (HAZMAT) operations to improve real-time situational awareness, emergency response, and industrial safety. Applications span chemical plants, waste management, oil spill response, and environmental pollution monitoring. Both experimental and observational studies highlight UAVs as practical tools to reduce human exposure to high-risk environments while enhancing detection, monitoring, and decision-making processes.

- Sensor technologies utilized in HAZMAT UAV applications are diverse, encompassing gas and smoke sensors, electrochemical sensors, MOX sensors, turbidity sensors, LiDAR, thermocouples, and biomedical or environmental sensors. The integration of multiple sensors allows UAVs to simultaneously monitor complex chemical, biological, radiological, and environmental parameters.

- Key challenges in deploying UAVs for HAZMAT operations include operational reliability in hazardous, dynamic, or toxic environments; accurate real-time detection of multiple hazardous agents under varying environmental conditions (e.g., airflow, temperature fluctuations, chemical interactions); limitations in UAV endurance and power supply for extended monitoring or large-area coverage; difficulties in integrating heterogeneous sensor data for timely situational awareness; and the high costs and technical complexity of implementing robust multi-sensor platforms.

- Emerging strategies to address these challenges include the deployment of multi-sensor UAV platforms that combine thermal, infrared, and hyperspectral imaging with environmental sensors (gas, electrochemical, MOX, LiDAR) to improve hazard detection accuracy; the development of solar-powered or low-power UAVs to enhance endurance and autonomy; and the integration of crowdsensing and wireless sensor networks (WSNs) to enable large-scale environmental monitoring and remote HAZMAT incident assessment.
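The real-time monitoring and early-warning idea that recurs in the HAZMAT studies can be sketched as a rolling-mean threshold check on a gas sensor stream. This is a minimal illustration under stated assumptions, not a method taken from the reviewed papers: the function name `rolling_alarm`, the window length, and the 50 ppm threshold are all hypothetical, and a deployed system would need sensor-specific calibration and compensation for airflow and temperature.

```python
from collections import deque


def rolling_alarm(readings_ppm, window=3, threshold_ppm=50.0):
    """Return the indices at which the rolling mean exceeds the threshold.

    The mean is taken over the last `window` readings, so an isolated
    spike is smoothed out while a sustained rise raises an alarm.
    Window and threshold are illustrative values (assumption).
    """
    if window < 1:
        raise ValueError("window must be at least 1")

    recent = deque(maxlen=window)  # keeps only the newest `window` readings
    alarms = []
    for index, ppm in enumerate(readings_ppm):
        recent.append(ppm)
        if len(recent) == window and sum(recent) / window > threshold_ppm:
            alarms.append(index)
    return alarms


if __name__ == "__main__":
    # Hypothetical concentration trace: one isolated spike, then a sustained rise.
    trace = [10, 12, 90, 11, 13, 60, 70, 80]
    print(rolling_alarm(trace))  # prints [7]
```

In this trace the single 90 ppm spike does not trigger an alarm, whereas the sustained rise at the end does. Averaging trades response time for fewer false alarms, a trade-off that matters given the varying environmental conditions noted above.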

4.4. Systematic Insights into Drone Imaging and Sensor Technologies Across Key Sectors

4.5. Limitations and Challenges of Drone Operations

4.6. AI Technologies for Drones

4.6.1. AI Technical Solutions Commonly Used in Disaster Management and Emergency Response

4.6.2. AI Technical Solutions Commonly Used in UAV-SAR

4.6.3. AI Technical Solutions Commonly Used in Military Operations

4.6.4. AI Technical Solutions Commonly Used in Hazardous Materials Incident Response (HAZMAT/CBRN)

4.7. Convergence and Integration of Technologies

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CBRN | Chemical, Biological, Radiological, and Nuclear |
| CO2 | Carbon Dioxide |
| ECG | Electrocardiogram |
| EO | Electro-Optical |
| GPS | Global Positioning System |
| HAZMAT | Hazardous Materials |
| HSI | Hyperspectral Imaging |
| IMINT | Imagery Intelligence |
| INS | Inertial Navigation System |
| IoT | Internet of Things |
| IR | Infrared |
| ISR | Intelligence, Surveillance, and Reconnaissance |
| LiDAR | Light Detection and Ranging |
| MOX | Metal-Oxide |
| MSI | Multispectral Imaging |
| NBC | Nuclear, Biological, and Chemical |
| NIR | Near-Infrared |
| PIR | Passive Infrared |
| PM | Particulate Matter |
| RADAR | Radio Detection and Ranging |
| RGB | Red, Green, Blue |
| SAR | Search and Rescue |
| VNIR | Visible and Near-Infrared |
| WSN | Wireless Sensor Network |

References

- Mohsan, S.A.H.; Othman, N.Q.H.; Li, Y.; Alsharif, M.H.; Khan, M.A. Unmanned aerial vehicles (UAVs): Practical aspects, applications, open challenges, security issues, and future trends. Intell. Serv. Robot. 2023, 16, 109–137. [Google Scholar] [CrossRef]

- Emimi, M.; Khaleel, M.; Alkrash, A. The current opportunities and challenges in drone technology. Int. J. Electr. Eng. Sustain. 2023, 1, 74–89. [Google Scholar]

- Dokoro, H.A.; Hassan, I.; Yarda, M.Y.; Umar, M. Exploring the Technological Advancement in Drone Technology for Surveillance. Gombe State Polytech. Bajoga, J. Sci. Technol. 2024, 1, 78–85. [Google Scholar]

- Velasco, J.A.H.; Guevara, H.S.D. Development of uavs/drones equipped with thermal sensors for the search of individuals lost under rubble due to earthquake collapses or any eventuality requiring such capabilities. In Proceedings of the 34th International Council of the Aeronautical Sciences (ICAS) Congress, Florence, Italy, 9–13 September 2024. [Google Scholar]

- Yuen, P.W.; Richardson, M. An introduction to hyperspectral imaging and its application for security, surveillance and target acquisition. Imaging Sci. J. 2010, 58, 241–253. [Google Scholar] [CrossRef]

- Seidaliyeva, U.; Ilipbayeva, L.; Utebayeva, D.; Smailov, N.; Matson, E.T. LiDAR Technology for UAV Detection: From Fundamentals and Operational Principles to Advanced Detection and Classification Techniques. Sensors 2025, 25, 2757. [Google Scholar] [CrossRef]

- Balestrieri, E.; Daponte, P.; De Vito, L.; Picariello, F.; Tudosa, I. Sensors and measurements for UAV safety: An overview. Sensors 2021, 21, 8253. [Google Scholar] [CrossRef] [PubMed]

- Balestrieri, E.; Daponte, P.; De Vito, L.; Lamonaca, F. Sensors and measurements for unmanned systems: An overview. Sensors 2021, 21, 1518. [Google Scholar] [CrossRef]

- Kunertova, D. Drones have boots: Learning from Russia’s war in Ukraine. Contemp. Secur. Policy 2023, 44, 576–591. [Google Scholar] [CrossRef]

- Kunertova, D. The war in Ukraine shows the game-changing effect of drones depends on the game. Bull. At. Sci. 2023, 79, 95–102. [Google Scholar] [CrossRef]

- Rejeb, A.; Rejeb, K.; Simske, S.; Treiblmaier, H. Humanitarian drones: A review and research agenda. Internet Things 2021, 16, 100434. [Google Scholar] [CrossRef]

- Maghazei, O.; Netland, T. Drones in manufacturing: Exploring opportunities for research and practice. J. Manuf. Technol. Manag. 2020, 31, 1237–1259. [Google Scholar] [CrossRef]

- Mohsan, S.A.H.; Khan, M.A.; Noor, F.; Ullah, I.; Alsharif, M.H. Towards the unmanned aerial vehicles (UAVs): A comprehensive review. Drones 2022, 6, 147. [Google Scholar] [CrossRef]

- Hildmann, H.; Kovacs, E. Using unmanned aerial vehicles (UAVs) as mobile sensing platforms (MSPs) for disaster response, civil security and public safety. Drones 2019, 3, 59. [Google Scholar] [CrossRef]

- Yang, Z.; Yu, X.; Dedman, S.; Rosso, M.; Zhu, J.; Yang, J.; Xia, Y.; Tian, Y.; Zhang, G.; Wang, J. UAV remote sensing applications in marine monitoring: Knowledge visualization and review. Sci. Total Environ. 2022, 838, 155939. [Google Scholar] [CrossRef] [PubMed]

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned aerial vehicles (UAVs): A survey on civil applications and key research challenges. IEEE Access 2019, 7, 48572–48634. [Google Scholar] [CrossRef]

- Telli, K.; Kraa, O.; Himeur, Y.; Ouamane, A.; Boumehraz, M.; Atalla, S.; Mansoor, W. A comprehensive review of recent research trends on unmanned aerial vehicles (uavs). Systems 2023, 11, 400. [Google Scholar] [CrossRef]

- U.S. Department of Homeland Security. National Incident Management System: Emergency Operations Center How-to Quick Reference Guide; U.S. Department of Homeland Security: Washington, DC, USA, 2022. Available online: https://www.fema.gov (accessed on 22 March 2025).

- Kedia, T.; Ratcliff, J.; O’Connor, M.; Oluic, S.; Rose, M.; Freeman, J.; Rainwater-Lovett, K. Technologies enabling situational awareness during disaster response: A systematic review. Disaster Med. Public Health Prep. 2022, 16, 341–359. [Google Scholar] [CrossRef] [PubMed]

- U.S. Department of Health and Human Services. Department of Health and Human Services All-Hazards Plan. Administration for Strategic Preparedness & Response. 2024. Available online: https://aspr.hhs.gov/legal/Documents/AHP-final-2024-508.pdf (accessed on 20 March 2025).

- Li, T.; Hu, H. Development of the use of unmanned aerial vehicles (UAVs) in emergency rescue in China. Risk Manag. Healthc. Policy 2021, 14, 4293–4299. [Google Scholar] [CrossRef]

- Restas, A. Drone applications for supporting disaster management. World J. Eng. Technol. 2015, 3, 316–321. [Google Scholar] [CrossRef]

- Abuali, T.M.; Ahmed, A.A. Innovative Applications of Swarm Drones in Disaster Management and Rescue Operations. Open Eur. J. Eng. Sci. Res. OEJESR 2025, 1, 23–31. [Google Scholar]

- Eshtaiwi, A.; Ahmed, A.A. Emergency response and disaster management leveraging drones for rapid assessment and relief operations. Afr. J. Adv. Pure Appl. Sci. AJAPAS 2024, 3, 35–50. [Google Scholar]

- Udeanu, G.; Dobrescu, A.; Oltean, M. Unmanned aerial vehicle in military operations. Sci. Res. Educ. Air Force 2016, 18, 199–206. [Google Scholar] [CrossRef]

- Fowler, M. The strategy of drone warfare. J. Strateg. Secur. 2014, 7, 108–119. [Google Scholar] [CrossRef]

- Mahadevan, P. The military utility of drones. CSS Anal. Secur. Policy 2010, 78, 1–3. [Google Scholar]

- Wallace, D.; Costello, J. Eye in the sky: Understanding the mental health of unmanned aerial vehicle operators. J. Mil. Veterans Health 2017, 25, 36–41. [Google Scholar]

- Gargalakos, M. The role of unmanned aerial vehicles in military communications: Application scenarios, current trends, and beyond. J. Def. Model. Simul. 2024, 21, 313–321. [Google Scholar] [CrossRef]

- Jeler, G.E. Military and civilian applications of UAV systems. In Proceedings of the International Scientific Conference Strategies XXI. The Complex and Dynamic Nature of the Security Environment-Volume 1; Carol I National Defence University Publishing House: Bucharest, Romania, 2019; pp. 379–386. [Google Scholar]

- Brust, M.R.; Danoy, G.; Stolfi, D.H.; Bouvry, P. Swarm-based counter UAV defense system. Discov. Internet Things 2021, 1, 1–19. [Google Scholar] [CrossRef]

- Molloy, D.O. Drones in Modern Warfare: Lessons Learnt from the War in Ukraine. Aust. Army Res. Cent. 2024. [CrossRef]

- Kunertova, D. Learning from the Ukrainian Battlefield: Tomorrow’s Drone Warfare, Today’s Innovation Challenge; Technical report; ETH Zurich: Zurich, Switzerland, 2024. [Google Scholar]

- Kostenko, I.; Hurova, A. Connection of Private Remote Sensing Market with Military Contracts–the Case of Ukraine. Adv. Space Law 2024, 13. [Google Scholar] [CrossRef]

- Victor-Luca, I. Multispectral, Hyperspectral imaging and their military applications. Commun. Across Cult. 2024, 29. [Google Scholar]

- Rabajczyk, A.; Zboina, J.; Zielecka, M.; Fellner, R. Monitoring of selected CBRN threats in the air in industrial areas with the use of unmanned aerial vehicles. Atmosphere 2020, 11, 1373. [Google Scholar] [CrossRef]

- Aiello, G.; Hopps, F.; Santisi, D.; Venticinque, M. The employment of unmanned aerial vehicles for analyzing and mitigating disaster risks in industrial sites. IEEE Trans. Eng. Manag. 2020, 67, 519–530. [Google Scholar] [CrossRef]

- Safie, S.; Khairil, R. Regulatory, technical, and safety considerations for UAV-based inspection in chemical process plants: A systematic review of current practice and future directions. Transp. Res. Interdiscip. Perspect. 2025, 30, 101343. [Google Scholar] [CrossRef]

- Tarr, A.A.; Perera, A.G.; Chahl, J.; Chell, C.; Ogunwa, T.; Paynter, K. Drones—Healthcare, humanitarian efforts and recreational use. In Drone Law and Policy; Routledge: Abingdon, UK, 2021; pp. 35–54. [Google Scholar]

- Khanam, F.T.Z.; Chahl, L.A.; Chahl, J.S.; Al-Naji, A.; Perera, A.G.; Wang, D.; Lee, Y.; Ogunwa, T.T.; Teague, S.; Nguyen, T.X.B.; et al. Noncontact sensing of contagion. J. Imaging 2021, 7, 28. [Google Scholar] [CrossRef]

- Mohsan, S.A.H.; Zahra, Q.u.A.; Khan, M.A.; Alsharif, M.H.; Elhaty, I.A.; Jahid, A. Role of drone technology helping in alleviating the COVID-19 pandemic. Micromachines 2022, 13, 1593. [Google Scholar] [CrossRef]

- Solodov, A.; Williams, A.; Al Hanaei, S.; Goddard, B. Analyzing the threat of unmanned aerial vehicles (UAV) to nuclear facilities. Secur. J. 2018, 31, 305–324. [Google Scholar] [CrossRef]

- Ardiny, H.; Beigzadeh, A.; Mahani, H. Applications of unmanned aerial vehicles in radiological monitoring: A review. Nucl. Eng. Des. 2024, 422, 113110. [Google Scholar] [CrossRef]

- Daud, S.M.S.M.; Yusof, M.Y.P.M.; Heo, C.C.; Khoo, L.S.; Singh, M.K.C.; Mahmood, M.S.; Nawawi, H. Applications of drone in disaster management: A scoping review. Sci. Justice 2022, 62, 30–42. [Google Scholar] [CrossRef] [PubMed]

- AL-Dosari, K.; Hunaiti, Z.; Balachandran, W. Systematic review on civilian drones in safety and security applications. Drones 2023, 7, 210. [Google Scholar] [CrossRef]

- Yucesoy, E.; Balcik, B.; Coban, E. The role of drones in disaster response: A literature review of operations research applications. Int. Trans. Oper. Res. 2025, 32, 545–589. [Google Scholar] [CrossRef]

- Harzing, A.W. Publish or Perish. 2007. Available online: https://www.harzing.com/resources/publish-or-perish (accessed on 14 May 2025).

- Mohsin, B.; Steinhäusler, F.; Madl, P.; Kiefel, M. An innovative system to enhance situational awareness in disaster response. J. Homel. Secur. Emerg. Manag. 2016, 13, 301–327. [Google Scholar] [CrossRef]

- Damaševičius, R.; Bacanin, N.; Misra, S. From sensors to safety: Internet of Emergency Services (IoES) for emergency response and disaster management. J. Sens. Actuator Netw. 2023, 12, 41. [Google Scholar] [CrossRef]

- Papyan, N.; Kulhandjian, M.; Kulhandjian, H.; Aslanyan, L. AI-based drone assisted human rescue in disaster environments: Challenges and opportunities. Pattern Recognit. Image Anal. 2024, 34, 169–186. [Google Scholar] [CrossRef]

- Zeng, F.; Pang, C.; Tang, H. Sensors on the internet of things systems for urban disaster management: A systematic literature review. Sensors 2023, 23, 7475. [Google Scholar] [CrossRef]

- Jagatheesaperumal, S.K.; Hassan, M.M.; Hassan, M.R.; Fortino, G. The duo of visual servoing and deep learning-based methods for situation-aware disaster management: A comprehensive review. Cogn. Comput. 2024, 16, 2756–2778. [Google Scholar] [CrossRef]

- Balamurugan, M.; Sathesh, M.; Ramakrishnan, K.; Raja, M.; Kalaiarasi, K. Integrating Remote Sensing Technologies for Real-Time Disaster Management, Mitigation, and Decision Support Systems. In Proceedings of the 2024 International Conference on Recent Advances in Science and Engineering Technology (ICRASET), Mandya, India, 21–22 November 2024; pp. 1–5. [Google Scholar]

- Kanand, T.; Kemper, G.; König, R.; Kemper, H. Wildfire detection and disaster monitoring system using UAS and sensor fusion technologies. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 1671–1675. [Google Scholar] [CrossRef]

- Gunturu, V.R. GIS, remote sensing and drones for disaster risk management. In 5th World Congress on Disaster Management: Volume I; Routledge: Abingdon, UK, 2022; pp. 182–194. [Google Scholar]

- Friedrich, M.; Lieb, T.J.; Temme, A.; Almeida, E.N.; Coelho, A.; Fontes, H. Respondrone-a situation awareness platform for first responders. In Proceedings of the 2022 IEEE/AIAA 41st Digital Avionics Systems Conference (DASC), Portsmouth, VA, USA, 18–22 September 2022; pp. 1–7. [Google Scholar]

- Hussain, M.; Mehboob, K.; Ilyas, S.Z.; Shaheen, S.; Abdulsalam, A. Drones application scenarios in a nuclear or radiological emergency. Kerntechnik 2022, 87, 260–270. [Google Scholar] [CrossRef]

- Fakhrulddin, S.S.; Gharghan, S.K.; Al-Naji, A.; Chahl, J. An advanced first aid system based on an unmanned aerial vehicles and a wireless body area sensor network for elderly persons in outdoor environments. Sensors 2019, 19, 2955. [Google Scholar] [CrossRef] [PubMed]

- He, Y.; Liu, Z.; Guo, Y.; Zhu, Q.; Fang, Y.; Yin, Y.; Wang, Y.; Zhang, B.; Liu, Z. UAV based sensing and imaging technologies for power system detection, monitoring and inspection: A review. Nondestruct. Test. Eval. 2024, 1–68. [Google Scholar] [CrossRef]

- Yang, S. Natural Disaster Impact and Emergency Response System Design Under Drone Vision. 2024. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4710030 (accessed on 20 March 2025). [CrossRef]

- Li, Q.; Xu, Y. Intelligent Early Warning Method Based on Drone Inspection. J. Uncertain Syst. 2021, 15, 137–146. [Google Scholar] [CrossRef]

- Fascista, A. Toward integrated large-scale environmental monitoring using WSN/UAV/Crowdsensing: A review of applications, signal processing, and future perspectives. Sensors 2022, 22, 1824. [Google Scholar] [CrossRef]

- Bruzzone, A.; Longo, F.; Massei, M.; Nicoletti, L.; Agresta, M.; Di Matteo, R.; Maglione, G.L.; Murino, G.; Padovano, A. Disasters and emergency management in chemical and industrial plants: Drones simulation for education and training. In Proceedings of the International Workshop on Modelling and Simulation for Autonomous Systems, Rome, Italy, 15–16 June 2016; pp. 301–308. [Google Scholar]

- Lyu, M.; Zhao, Y.; Huang, C.; Huang, H. Unmanned aerial vehicles for search and rescue: A survey. Remote Sens. 2023, 15, 3266. [Google Scholar] [CrossRef]

- Fang, Z.; Savkin, A.V. Strategies for optimized uav surveillance in various tasks and scenarios: A review. Drones 2024, 8, 193. [Google Scholar] [CrossRef]

- Ray, P.P.; Mukherjee, M.; Shu, L. Internet of things for disaster management: State-of-the-art and prospects. IEEE Access 2017, 5, 18818–18835. [Google Scholar] [CrossRef]

- Aretoulaki, E.; Ponis, S.T.; Plakas, G.; Tzanetou, D.; Kitsantas, A. A proposed drone-enabled platform for holistic disaster management. In Proceedings of the IADIS International Conference Applied Computing, Madeira Island, Portugal, 21–23 October 2023; pp. 239–244. [Google Scholar]

- Mavi, T.; Priya, D.; Grih Dhwaj Singh, R.; Singh, A.; Singh, D.; Upadhyay, P.; Singh, R.; Katyal, A. Enhancing unmanned vehicle navigation safety: Real-time visual mapping with CNNs with optimized Bezier trajectory smoothing. Robot. Intell. Autom. 2025, 45, 28–69. [Google Scholar] [CrossRef]

- Majumdar, S.; Kirkley, S.; Srivastava, M. Optimizing Emergency Response with UAV-Integrated Fire Safety for Real-Time Prediction and Decision-Making: A Performance Evaluation. In Proceedings of the 2024 IEEE Long Island Systems, Applications and Technology Conference (LISAT), Holtsville, NY, USA, 15 November 2024; pp. 1–7. [Google Scholar]

- Grigoriou, E.; Fountoulakis, M.; Kafetzakis, E.; Giannoulakis, I.; Fountoukidis, E.; Karypidis, P.A.; Margounakis, D.; Mikelidou, C.V.; Sennekis, I.; Boustras, G. Towards the RESPOND-A initiative: Next-generation equipment tools and mission-critical strategies for First Responders. In Proceedings of the 2022 IEEE International Conference on Omni-layer Intelligent Systems (COINS), Barcelona, Spain, 1–3 August 2022; pp. 1–5. [Google Scholar]

- Nagaiah, K.; Kalaivani, K.; Palamalai, R.; Suresh, K.; Sethuraman, V.; Karuppiah, V. A Logical Remote Sensing Based Disaster Management and Alert System Using AI-Assisted Internet of Things Technology. Remote Sens. Earth Syst. Sci. 2024, 7, 457–471. [Google Scholar] [CrossRef]

- Terwilliger, B.; Vincenzi, D.; Ison, D.; Witcher, K.; Thirtyacre, D.; Khalid, A. Influencing factors for use of unmanned aerial systems in support of aviation accident and emergency response. J. Autom. Control Eng. 2015, 3, 246. [Google Scholar] [CrossRef][Green Version]

- Yao, J.; Gao, X.; Liu, H. Design of UAV-Based Information Acquisition and Environmental Monitoring System for Dangerous Goods Warehouse. In Proceedings of the International Conference on Traffic and Transportation Studies; Springer: Singapore, 2024; pp. 435–443. [Google Scholar]

- Symeonidis, S.; Samaras, S.; Stentoumis, C.; Plaum, A.; Pacelli, M.; Grivolla, J.; Shekhawat, Y.; Ferri, M.; Diplaris, S.; Vrochidis, S. An extended reality system for situation awareness in flood management and media production planning. Electronics 2023, 12, 2569. [Google Scholar] [CrossRef]

- Gurung, P. Drone-Assisted Imaging and Vehicle Telemetry Integration for Enhanced Smart Mobility Applications. Rev. Internet Things IoT Cyber-Phys. Syst. Appl. 2025, 10, 26–38. [Google Scholar]

- Sheng, H.; Chen, G.; Li, X.; Men, J.; Xu, Q.; Zhou, L.; Zhao, J. A novel unmanned aerial vehicle driven real-time situation awareness for fire accidents in chemical tank farms. J. Loss Prev. Process Ind. 2024, 91, 105357. [Google Scholar] [CrossRef]

- Tao, Y.; Tian, B.; Adhikari, B.R.; Zuo, Q.; Luo, X.; Di, B. A Review of Cutting-Edge Sensor Technologies for Improved Flood Monitoring and Damage Assessment. Sensors 2024, 24, 7090.

- Lauterbach, H.A.; Koch, C.B.; Hess, R.; Eck, D.; Schilling, K.; Nüchter, A. The Eins3D project—Instantaneous UAV-based 3D mapping for Search and Rescue applications. In Proceedings of the 2019 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Würzburg, Germany, 2–4 September 2019; pp. 1–6.

- Kannan, K.; Awati, A.N.; Rao, S.S.; Malagi, V.P. DROPEX: Disaster Rescue Operations and Probing using EXpert drones. In Proceedings of the 2024 8th International Conference on Computational System and Information Technology for Sustainable Solutions (CSITSS), Bengaluru, India, 7–9 November 2024; pp. 1–5.

- Singh, S.S.; Themvinah, A.; Sharma, T.S.; Tarao, D.K.; Shougaijam, B.; Singh, T.C.; Singh, R.B. Real-Time Monitoring of Atmospheric Air Pollutants using Sensor Integrated UAV. In Proceedings of the 2024 International Conference on Electrical Electronics and Computing Technologies (ICEECT), Greater Noida, India, 29–31 August 2024; Volume 1, pp. 1–6.

- Abrahamsen, H.B. A remotely piloted aircraft system in major incident management: Concept and pilot, feasibility study. BMC Emerg. Med. 2015, 15, 12.

- Maladyka, I.; Stas, S.; Pustovit, M.; Dzhulay, O. Application of UAV Video Communication Systems During Investigation of Emergency Situations. Adv. Sci. Technol. 2022, 114, 27–39.

- Dehghan, M.; Khosravian, E. A review of cognitive UAVs: AI-driven situation awareness for enhanced operations. AI Tech Behav. Soc. Sci. 2024, 2, 54–65.

- Chmielewski, M.; Kukiełka, M.; Gutowski, T.; Pieczonka, P. Handheld combat support tools utilising IoT technologies and data fusion algorithms as reconnaissance and surveillance platforms. In Proceedings of the 2019 IEEE 5th World Forum on Internet of Things (WF-IoT), Limerick, Ireland, 15–18 April 2019; pp. 219–224.

- Ehala, J.; Kaugerand, J.; Pahtma, R.; Astapov, S.; Riid, A.; Tomson, T.; Preden, J.S.; Motus, L. Situation awareness via Internet of things and in-network data processing. Int. J. Distrib. Sens. Netw. 2017, 13, 1550147716686578.

- Wichai, P. A Survey in Adaptive Hybrid Wireless Sensor Network for Military Operations. In Proceedings of the IEEE Second Asian Conference on Defence Technology (ACDT), Chiang Mai, Thailand, 21–23 January 2016.

- Hua, Z. From Battlefield to Border: The Evolving Use of Drones in Surveillance Operations. ITEJ Inf. Technol. Eng. J. 2024, 9, 44–52.

- Marut, A.; Wojciechowski, P.; Wojtowicz, K.; Djabin, J.; Kochan, J.; Kurenda, M. Surveillance and protection of critical infrastructure with Unmanned Aerial Vehicles. In Proceedings of the 2024 IEEE International Workshop on Technologies for Defense and Security (TechDefense), Naples, Italy, 11–13 November 2024; pp. 312–317.

- Bouvry, P.; Chaumette, S.; Danoy, G.; Guerrini, G.; Jurquet, G.; Kuwertz, A.; Muller, W.; Rosalie, M.; Sander, J. Using heterogeneous multilevel swarms of UAVs and high-level data fusion to support situation management in surveillance scenarios. In Proceedings of the 2016 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Baden-Baden, Germany, 19–21 September 2016; pp. 424–429.

- Rajora, R.; Rajora, A.; Sharma, B.; Aggarwal, P.; Thapliyal, S. The Impact of the IoT on Military Operations: A Study of Challenges, Applications, and Future Prospects. In Proceedings of the 2024 4th International Conference on Innovative Practices in Technology and Management (ICIPTM), Noida, India, 21–23 February 2024; pp. 1–5.

- Kumsap, C.; Mungkung, V.; Amatacheewa, I.; Thanasomboon, T. Conceptualization of military’s common operation picture for the enhancement of disaster preparedness and response during emergency and communication blackout. Procedia Eng. 2018, 212, 1241–1248.

- Adel, A.; Alani, N.H.; Whiteside, S.T.; Jan, T. Who is Watching Whom? Military and Civilian Drone: Vision Intelligence Investigation and Recommendations. IEEE Access 2024, 12, 177236–177276.

- Amezquita, N.; Gonzalez, S.; Teran, M.; Salazar, C.; Corredor, J.; Corzo, G. Preliminary approach for UAV-based multi-sensor platforms for reconnaissance and surveillance applications. Ingeniería 2023, 28.

- Yang, H.; Yang, J.; Zhang, B.; Wang, C. Visualization analysis of research on unmanned-platform based battlefield situation awareness. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 28–30 June 2021; pp. 334–338.

- Kaniewski, P.; Romanik, J.; Zubel, K.; Golan, E.; R-Moreno, M.D.; Skokowski, P.; Kelner, J.M.; Malon, K.; Maślanka, K.; Guszczyński, E.; et al. Heterogeneous wireless sensor networks enabled situational awareness enhancement for armed forces operating in an urban environment. In Proceedings of the 2023 Communication and Information Technologies (KIT), Vysoke Tatry, Slovakia, 11–13 October 2023; pp. 1–8.

- Chen, J.; Seng, K.P.; Smith, J.; Ang, L.M. Situation awareness in AI-based technologies and multimodal systems: Architectures, challenges and applications. IEEE Access 2024, 12, 88779–88818.

- Rangel, R.K.; Terra, A.C. Development of a Surveillance tool using UAV’s. In Proceedings of the 2018 IEEE Aerospace Conference, Big Sky, MT, USA, 3–10 March 2018; pp. 1–11.

- Bird, D.T.; Ravindra, N.M. Additive manufacturing of sensors for military monitoring applications. Polymers 2021, 13, 1455.

- Kim, J.; Gregory, T.; Freeman, J.; Korpela, C.M. System-of-systems for remote situational awareness: Integrating unattended ground sensor systems with autonomous unmanned aerial system and android team awareness kit. In Proceedings of the 2022 International Conference on Unmanned Aircraft Systems (ICUAS), Dubrovnik, Croatia, 21–24 June 2022; pp. 1418–1423.

- Elia, R.; Theocharides, T. Real-Time and On-Board Anomalous Command Detection in UAV Operations via Simultaneous UAV-Operator Monitoring. In Proceedings of the 2024 International Conference on Unmanned Aircraft Systems (ICUAS), Chania-Crete, Greece, 4–7 June 2024; pp. 248–255.

- Gong, J.; Yan, J.; Kong, D.; Li, D. Introduction to drone detection radar with emphasis on automatic target recognition (ATR) technology. arXiv 2023, arXiv:2307.10326.

- Chang, G.; Fu, W.; Zhao, J.; Li, J.; Miao, H.; Zhang, X.; Dong, P. Overview of research on intelligent swarm munitions. Def. Technol. 2024, in press.

- Kim, J.; Lin, K.; Nogar, S.M.; Larkin, D.; Korpela, C.M. Detecting and localizing objects on an unmanned aerial system (UAS) integrated with a mobile device. In Proceedings of the 2021 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 15–18 June 2021; pp. 738–743.

- Carapau, R.S.; Rodrigues, A.V.; Marques, M.M.; Lobo, V.; Coito, F. Interoperability of Unmanned Systems in Military Maritime Operations: Developing a controller for unmanned aerial systems operating in maritime environments. In Proceedings of the OCEANS 2017-Aberdeen, Aberdeen, UK, 19–22 June 2017; pp. 1–7.

- Kumari, P.; Gosula, H.S.; Lokhande, N. Real-Time Monitoring and Battery Life Enhancement of Surveillance Drones. In Intelligent Methods in Electrical Power Systems; Springer: Berlin/Heidelberg, Germany, 2024; pp. 151–171.

- Vaseashta, A.; Kudaverdyan, S.; Tsaturyan, S.; Bölgen, N. Cyber-physical systems to counter CBRN threats–sensing payload capabilities in aerial platforms for real-time monitoring and analysis. In Nanoscience and Nanotechnology in Security and Protection Against CBRN Threats; Springer: Berlin/Heidelberg, Germany, 2020; pp. 3–20.

- Hassan, C.A.Z.C.; Yaacob, M.S.Z.; Razif, M.R.M.; Zaik, M.A.; Bostaman, N.S. An Industrial Floor Monitoring System Drone with Hazardous Gas and Smoke Detection. Prog. Eng. Appl. Technol. 2024, 5, 1–2.

- Vitale, M.; Barresi, A.; Demichela, M. The Use of Aerial Platforms for Identification of Loss of Containment. Chem. Eng. Trans. 2024, 111, 73–78.

- Nagarajapandian, M.; Gopu, G.; Anitha, T.; Raksha, G.; Sabarisri, S.; Sarmitha, S. Determining the Toxicity of Water After Oilspill using UAV. In Proceedings of the 2024 9th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 16–18 December 2024; pp. 36–40.

- Ramesh, K.B.; Saran, S. Drone-Based Aerial Surveillance and Real-Time Hazardous Gas Leakage Detection System with Power BI Dashboard Integration for Enhanced Environmental Safety. In Proceedings of the 2025 6th International Conference on Mobile Computing and Sustainable Informatics (ICMCSI), Goathgaun, Nepal, 7–8 January 2025; pp. 541–547.

- Gopikumar, S. Evaluation of landfill leachate biodegradability using IOT through geotracking sensor based drone surveying. Environ. Res. 2023, 236, 116883.

- Caragnano, G.; Ciccia, S.; Bertone, F.; Varavallo, G.; Terzo, O.; Capello, D.; Brajon, A. Unmanned aerial vehicle platform based on low-power components and environmental sensors: Technical description and data analysis on real-time monitoring of air pollutants. In Proceedings of the 2020 IEEE 7th International Workshop on Metrology for AeroSpace (MetroAeroSpace), Pisa, Italy, 22–24 June 2020; pp. 550–554.

- Sonkar, S.K.; Kumar, P.; George, R.C.; Philip, D.; Ghosh, A.K. Detection and estimation of natural gas leakage using UAV by machine learning algorithms. IEEE Sens. J. 2022, 22, 8041–8049.

- Menon, G.S.; Ramesh, M.V.; Divya, P. A low cost wireless sensor network for water quality monitoring in natural water bodies. In Proceedings of the 2017 IEEE Global Humanitarian Technology Conference (GHTC), San Jose, CA, USA, 19–22 October 2017; pp. 1–8.

- Ciccia, S.; Bertone, F.; Caragnano, G.; Giordanengo, G.; Scionti, A.; Terzo, O. Unmanned Aerial Vehicle for the Inspection of Environmental Emissions. In Proceedings of the Conference on Complex, Intelligent, and Software Intensive Systems; Springer: Berlin/Heidelberg, Germany, 2019; pp. 869–875.

- Burgués, J.; Esclapez, M.D.; Doñate, S.; Pastor, L.; Marco Colás, S. Aerial mapping of odorous gases in a wastewater treatment plant using a small drone. Remote Sens. 2021, 13, 1757.

- Leal, V.G.; Silva-Neto, H.A.; da Silva, S.G.; Coltro, W.K.T.; Petruci, J.F.d.S. AirQuality lab-on-a-drone: A low-cost 3D-printed analytical IoT platform for vertical monitoring of gaseous H2S. Anal. Chem. 2023, 95, 14350–14356.

- Omar, T.M.; Alshehhi, H.M.; Alnauimi, M.M.; Alblooshi, S.A.; El Moutaouakil, A. Solar-Powered Automated Drone for Industrial Safety and Anomaly Detection. In Proceedings of the 2024 IEEE 18th International Conference on Application of Information and Communication Technologies (AICT), Turin, Italy, 25–27 September 2024; pp. 1–6.

- Visser, H.; Petersen, A.C.; Ligtvoet, W. On the relation between weather-related disaster impacts, vulnerability and climate change. Clim. Change 2014, 125, 461–477.

- Gilli, A.; Gilli, M. The diffusion of drone warfare? Industrial, organizational, and infrastructural constraints. Secur. Stud. 2016, 25, 50–84.

- Alsayed, A.; Yunusa-Kaltungo, A.; Quinn, M.K.; Arvin, F.; Nabawy, M.R. Drone-assisted confined space inspection and stockpile volume estimation. Remote Sens. 2021, 13, 3356.

- Fabris, A.; Kirchgeorg, S.; Mintchev, S. A soft drone with multi-modal mobility for the exploration of confined spaces. In Proceedings of the 2021 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), New York, NY, USA, 25–27 October 2021; pp. 48–54.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

| Study Title | Study Design | Goal | Imaging Technology Used | Sensor Technology Used |
|---|---|---|---|---|
| An innovative system to enhance situational awareness in disaster response [48] | Experimental study | Develop a user-friendly system for responder situational awareness | Infrared camera | Radiation, gas sensors |
| From Sensors to Safety: Internet of Emergency Services (IoES) [49] | Observational study | Examine IoES role in disaster management and real-time coordination | N/A | Environmental, motion, gas sensors |
| AI-Based drone-assisted human rescue [50] | Observational study | Explore UAV-based survivor detection using imaging, acoustics, and signals | Infrared thermal, RGB depth camera | 3D depth, time-of-flight, optical, infrared sensors |
| Sensors on IoT systems for urban disaster management [51] | Observational study | Review IoT sensors for urban disaster response | LWIR, visual range, omnidirectional cameras | Water level/pressure, soil moisture, UV, pressure, temperature, rainfall, ultrasonic, thermal, infrared sensors |
| Visual servoing and deep learning methods for disaster management [52] | Observational study | Improve UAV precision with deep learning and visual servoing | Infrared, CMOS, thermal, multispectral, hyperspectral cameras | LiDAR, hyperspectral sensors |
| Integrating remote sensing for disaster management [53] | Conceptual study | Propose DSS integrating remote sensing and modeling | Thermal, satellite, radar imagery; multispectral fusion | Remote, airborne, thermal infrared, spatiotemporal sensors |
| Wildfire detection using UAS and sensor fusion [54] | Experimental study | Integrate UAS and sensor fusion with 5G for wildfire detection | RGB, thermal cameras | Sensor fusion technology |
| GIS, remote sensing and drones for disaster risk management [55] | Observational study | Explore GIS, remote sensing, UAVs in risk management | Multispectral, SPOT, IRS images; hyperspectral, SAR | Hyperspectral, chemical sensors |
| ResponDrone - A situation awareness platform for first responders [56] | Experimental study | Improve disaster response via UAV real-time data sharing | Electro-optical, infrared cameras | N/A |
| Drones application scenarios in a nuclear or radiological emergency [57] | Observational study | Highlight UAV applications in nuclear/radiological events | Compton, optical cameras; 3D-LiDAR | Gamma-ray, altitude sensors |
| Advanced first aid UAV system [58] | Experimental study | Detect falls and deliver UAV-based first aid | N/A | Heartbeat, ECG, LiDAR, temperature, humidity, BP, ultrasonic sensors |
| UAV sensing for power system inspection [59] | Observational study | Review UAV-based power system monitoring | Infrared thermal, IR thermography, UV imagers | Ultraviolet, acoustic, vibration, thermal, gas, magnetic, piezoelectric sensors |
| Drone vision for disaster impact response [60] | Experimental study | UAV-based emergency response system | Thermal map | Infrared, LiDAR sensors |
| UAV remote sensing applications in marine monitoring: Knowledge visualization and review [15] | Observational study | Review UAV sensing for marine disaster monitoring | Thermal, infrared, multispectral cameras | Multispectral, near/shortwave infrared, hyperspectral, thermal, fluorescence, radar |
| Big data and emergency management [61] | Experimental study | Enhance UAV inspections for real-time crisis detection | RGB, depth cameras | Dual-light, IR, LiDAR, ultrasonic radar |
| Integrated WSN/UAV/crowdsensing monitoring [62] | Observational study | Review integrated monitoring approaches and prospects | Thermal, optical, multispectral, hyperspectral, infrared cameras | Chemical, thermal, biological; temperature, pressure, turbidity, radiation; LiDAR, optical RGB, infrared, UV, hyperspectral |
| Disasters and emergency management in chemical plants [63] | Experimental study | Develop drone-based training for situational awareness in industrial disasters | N/A | Air contamination, biomedical sensors |
| UAVs for search and rescue: A survey [64] | Observational study | Review UAV roles and improvements in SAR operations | Thermal, RGB, depth cameras | Thermal, infrared, optical, PIR, ultrasonic, LiDAR |
| Optimized UAV surveillance strategies [65] | Observational study | Review UAV optimisation strategies for diverse surveillance tasks | Near-infrared, multispectral, hyperspectral | Laser scanning, LiDAR sensors |
| IoT for disaster management: State-of-the-Art and Prospects [66] | Observational study | Survey IoT disaster applications, challenges, and trends | N/A | Temperature, humidity, gas, tilt, pressure, moisture, strain gauge, acoustic sensors |
| A proposed drone-enabled platform for holistic disaster management [67] | Conceptual study | Propose a drone platform for disaster data, logistics, and communication | Electro-optical, infrared cameras | Gas, gamma radiation, chemical sensors |
| Enhancing vehicle navigation safety [68] | Experimental study | UAV safety with real-time pothole detection and trajectory planning | 2D/3D LiDAR | GPS, vision sensors |
| Optimizing emergency response with UAV-integrated fire safety for real-time prediction and decision-making [69] | Experimental study | Evaluate UAV-cloud and ML for real-time fire prediction and response | Thermal cameras, high-resolution infrared imagery | LiDAR, thermal, infrared sensors |
| Towards the RESPOND-A initiative: Next-generation equipment tools and mission-critical strategies for first responders [70] | Experimental study | Develop 5G/AR/IoT/UAV platform to support first responders | Thermal, infrared, AR cameras | Biometric, environmental, personnel location, health sensors |
| A logical remote sensing based disaster management and alert system using AI-assisted IoT technology [71] | Experimental study | Develop a neural network system for early disaster prediction | N/A | Seismic, temperature, humidity, pressure, thermistor, infrared, thermal, LiDAR |
| Factors in UAS use for aviation accidents [72] | Observational study | Identify factors influencing UAS use in aviation emergencies | N/A | Infrared, near-infrared sensors |
| UAV-IoT warehouse monitoring system [73] | Experimental study | Design a UAV-IoT system for real-time monitoring in dangerous goods warehouses | N/A | Temperature, humidity, gas, dust sensors |
| Extended reality for flood management [74] | Experimental study | Develop an XR platform for decision-making in floods and media planning | Multispectral image | Water level, ECG, respiration sensors |
| Drone imaging with vehicle telemetry [75] | Observational study | Enhance smart mobility with UAV imaging and telemetry | Thermal, optical cameras | LiDAR, multispectral sensors |
| UAV-based fire prediction in tank farms [76] | Experimental study | Develop a UAV system for real-time fire prediction and assessment | Infrared thermal camera/imaging | Thermocouple sensor |
| Flood monitoring sensor technologies [77] | Observational study | Review flood sensors and AI integration for monitoring and response | Optical, infrared, multispectral, hyperspectral cameras | Hyperspectral, ultrasonic, radar, infrared sensors |
| Eins3D project for 3D SAR mapping [78] | Experimental study | Develop a UAV system for real-time 3D SAR mapping | Thermal camera/mapping, 3D LiDAR | Attitude, laser, GPS sensors |
| DROPEX autonomous drone swarm [79] | Experimental study | Propose a drone swarm for faster, safer SAR operations | Thermal imaging | Infrared, LiDAR sensors |
| UAV for air pollutant monitoring [80] | Experimental study | Develop a UAV system for real-time air pollution monitoring | N/A | CO2, PM2.5, temperature, humidity sensors |
| Drone tech for surveillance [3] | Observational study | Review UAV surveillance advancements for safety | Thermal, infrared cameras | Multispectral, infrared, LiDAR sensors |
| RPAS feasibility for major incidents [81] | Experimental study | Assess the feasibility of RPAS for incident management | Thermal, infrared cameras | N/A |
| UAV video systems for emergencies [82] | Observational study | Assess UAV video transmission range for emergency response | Multispectral, infrared, thermal cameras | N/A |

| Study Title | Study Design | Goal | Imaging Technology Used | Sensor Technology Used |
|---|---|---|---|---|
| A Review of cognitive UAVs: AI-Driven situation awareness for enhanced operations [83] | Observational study | Review AI’s role in improving UAV situational awareness | Visible, thermal images; RGB video | Environmental sensors |
| Handheld combat support tools utilizing IoT technologies and data fusion algorithms as reconnaissance and surveillance platforms [84] | Experimental study | Develop mobile IoT tools for reconnaissance and decision support | N/A | Infrared motion, electromagnetic radar, fibre optic, microwave sensors |
| Situation awareness via Internet of Things and in-network data processing [85] | Experimental study | Enhance situational awareness via IoT and edge-processed data fusion | N/A | Passive infrared (PIR), sound sensor |
| Survey in adaptive hybrid wireless sensor network for military operations [86] | Observational study | Review adaptive hybrid WSNs for military situational awareness | Thermal imager | Seismic, acoustic, magnetic, electro-optical, radar, RF, PIR sensors |
| From battlefield to border: The evolving use of drones in surveillance operations [87] | Observational study | Analyze UAV surveillance applications, benefits, and challenges | High-resolution cameras | Thermal sensors |
| Surveillance and protection of critical infrastructure with unmanned aerial vehicles [88] | Observational study | Assess UAV and AI use in critical infrastructure security | Thermal, high-resolution cameras | LiDAR |
| Using heterogeneous multilevel swarms of UAVs and high-level data fusion to support situation management in surveillance scenarios [89] | Experimental study | Use UAV swarms and fusion for improved surveillance and detection | Electro-optical, infrared cameras | Long-range radar, infrared sensors |
| The Impact of the IoT on military operations [90] | Observational study | Examine IoT applications, challenges, and prospects in military ops | N/A | Smartwatches, health sensors |
| Conceptualization of the military’s common operation picture [91] | Experimental study | Develop a COP system with geospatial data and unmanned vehicles | N/A | Mine-detection sensor |
| Who is watching whom? Military and civilian drone: Vision intelligence investigation and recommendations [92] | Observational study | Survey UAV cyber threats, vulnerabilities, and countermeasures | Infrared, thermal cameras | Radar, infrared, optical, motion, acoustic sensors |
| Preliminary approach for UAV-based multi-sensor platforms [93] | Experimental study | Design an efficient UAV sensor platform with edge computing | Multispectral, thermal, infrared cameras | Multispectral, thermal, image sensors |
| Visualization analysis of research on unmanned-platform based battlefield situation awareness [94] | Observational study | Analyze battlefield situational awareness research trends | N/A | Photoelectric, infrared, LiDAR sensors |
| Heterogeneous wireless sensor networks for armed forces in urban environments [95] | Conceptual study | Improve urban situational awareness with autonomous WSNs | N/A | Optical, infrared, radar sensors |
| Situation awareness in AI-based technologies and multimodal systems [96] | Observational study | Apply AI and multimodal fusion to improve system awareness | Visible, infrared images | N/A |
| Development of a surveillance tool using UAV’s [97] | Experimental study | Build a UAV-based surveillance tool for urban police | Thermal, NIR, high-resolution cameras | Imaging sensors |
| Additive manufacturing of sensors for military monitoring applications [98] | Experimental study | Advance 3D-printed sensors for troop monitoring | N/A | Strain, chemical, biological sensors |
| System-of-Systems for remote situational awareness [99] | Experimental study | Integrate ground sensors with UAV for real-time awareness | N/A | Stereo, tracking, RGB-D, imaging sensors |
| Real-time anomalous command detection in UAV operations [100] | Experimental study | Detect abnormal UAV commands via operator-UAV monitoring | N/A | Wearable, ECG sensors |
| Introduction to drone detection radar with ATR technology [101] | Experimental study | Improve small drone detection with ATR-enhanced radar | Optical camera | Electro-optical, infrared sensors |
| Overview of research on intelligent swarm munitions [102] | Observational study | Review advances in collaborative swarm munitions | Infrared images | N/A |
| Detecting and localizing objects on a UAS with mobile integration [103] | Experimental study | Develop autonomous UAS for target localization in GPS-denied areas | N/A | LiDAR, vision, acoustic/laser, RGB-D sensors |
| Interoperability of unmanned systems in military maritime operations [104] | Experimental study | Develop interoperable unmanned maritime systems and UAV controller | N/A | Infrared markers, optical sensor |
| Real-time monitoring and battery life enhancement of surveillance drones [105] | Experimental study | Improve drone endurance and real-time processing with edge AI | Microphone | N/A |

| Study Title | Study Design | Goal | Imaging Technology Used | Sensor Technology Used |
|---|---|---|---|---|
| Cyber-physical systems to counter CBRN threats [106] | Observational study | Develop a UAV platform for real-time HAZMAT monitoring | NIR, VNIR hyperspectral imaging | Hyperspectral, LiDAR, EO/IR sensors |
| A novel UAV driven real-time situation awareness for fire accidents [76] | Experimental study | Create a UAV system for real-time fire detection and prediction | Infrared thermal, infrared cameras | Thermocouple sensors |
| Disasters and emergency management in chemical plants [63] | Observational study | Enhance UAV pilot training via 3D simulation for emergencies | N/A | Air contamination, biomedical sensors |
| Industrial floor monitoring system drone with hazardous gas detection [107] | Experimental study | Develop an autonomous drone to detect hazardous gas and smoke | N/A | Gas, smoke sensors |
| Aerial platforms for hydrogen leak detection [108] | Observational study | Detect hydrogen leaks in real-time with UAV systems | Thermal, multispectral, hyperspectral, infrared cameras | Catalytic bed, MOX sensors |
| Toward integrated large-scale environmental monitoring using WSN/UAV/Crowdsensing [62] | Observational study | Advance large-scale monitoring via UAVs, WSNs, crowdsensing | Thermal, multispectral, hyperspectral, optical cameras | Physical, chemical environmental sensors |
| Determining water toxicity after oil spills using UAV [109] | Experimental study | Monitor water toxicity post-oil spill via UAV sensors | N/A | Turbidity sensor |
| Drone-based aerial surveillance and hazardous gas leakage detection [110] | Experimental study | Develop a low-cost UAV system for air quality and gas leak monitoring | N/A | Gas, CO, temperature/humidity sensors |
| Evaluation of landfill leachate biodegradability using IoT drone surveying [111] | Experimental study | Monitor toxic waste and leachate with IoT drones | Thermal cameras | Hyperspectral, electromagnetic, inductive sensors |
| UAV platform with low-power components for air pollutant monitoring [112] | Experimental study | Develop a UAV system for real-time air pollution monitoring | N/A | Electrochemical gas sensors |
| Detection of natural gas leakage using UAV with ML [113] | Experimental study | Detect gas leaks via UAV and machine learning | N/A | Gas sensors, LiDAR |
| Low-cost wireless sensor network for water quality monitoring [114] | Conceptual study | Monitor water quality in real-time using solar-powered IoT sensors | N/A | Hydrogen, turbidity, ammonia sensors |
| UAV for inspection of environmental emissions [115] | Conceptual study | Monitor hazardous emissions in real-time with UAV | N/A | Electrochemical gas sensors |
| Aerial mapping of odorous gases in wastewater treatment plants [116] | Conceptual study | Map hazardous gas emissions with UAVs | N/A | Electrochemical, MOX sensors |
| AirQuality Lab-on-a-Drone for monitoring [117] | Observational study | Monitor gas in real-time using UAV IoT system | N/A | MOX sensors |
| Solar-powered automated drone for industrial safety [118] | Experimental study | Deploy solar-powered UAVs for autonomous inspections in HAZMAT sites | N/A | Ultrasonic sensors |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rattanaamporn, S.; Perera, A.; Nguyen, A.; Ngo, T.B.; Chahl, J. Drone Imaging and Sensors for Situational Awareness in Hazardous Environments: A Systematic Review. J. Sens. Actuator Netw. 2025, 14, 98. https://doi.org/10.3390/jsan14050098