YOLOv7-Plum: Advancing Plum Fruit Detection in Natural Environments with Deep Learning

Abstract

1. Introduction

2. Results

2.1. Impact of Data Enhancement

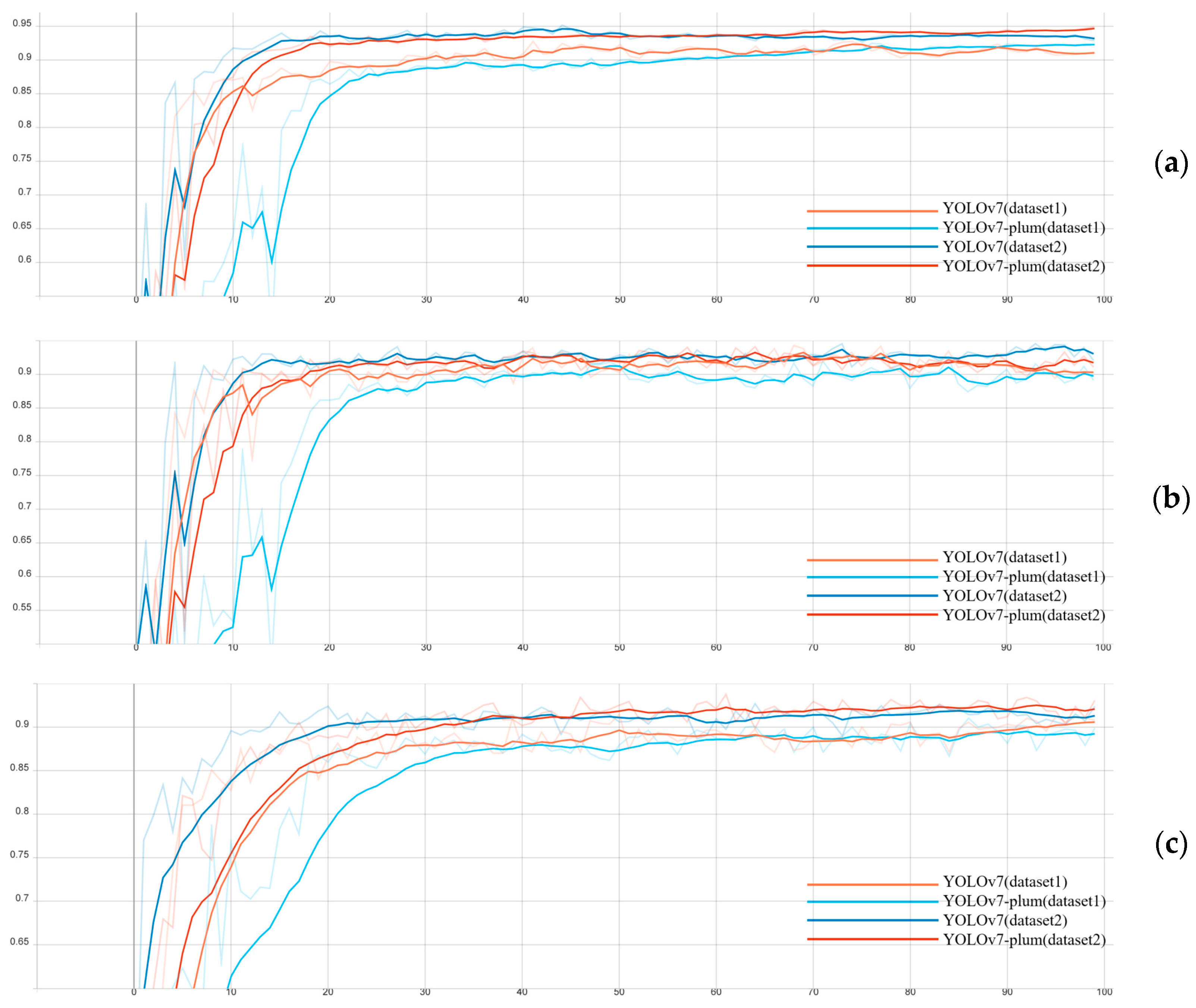

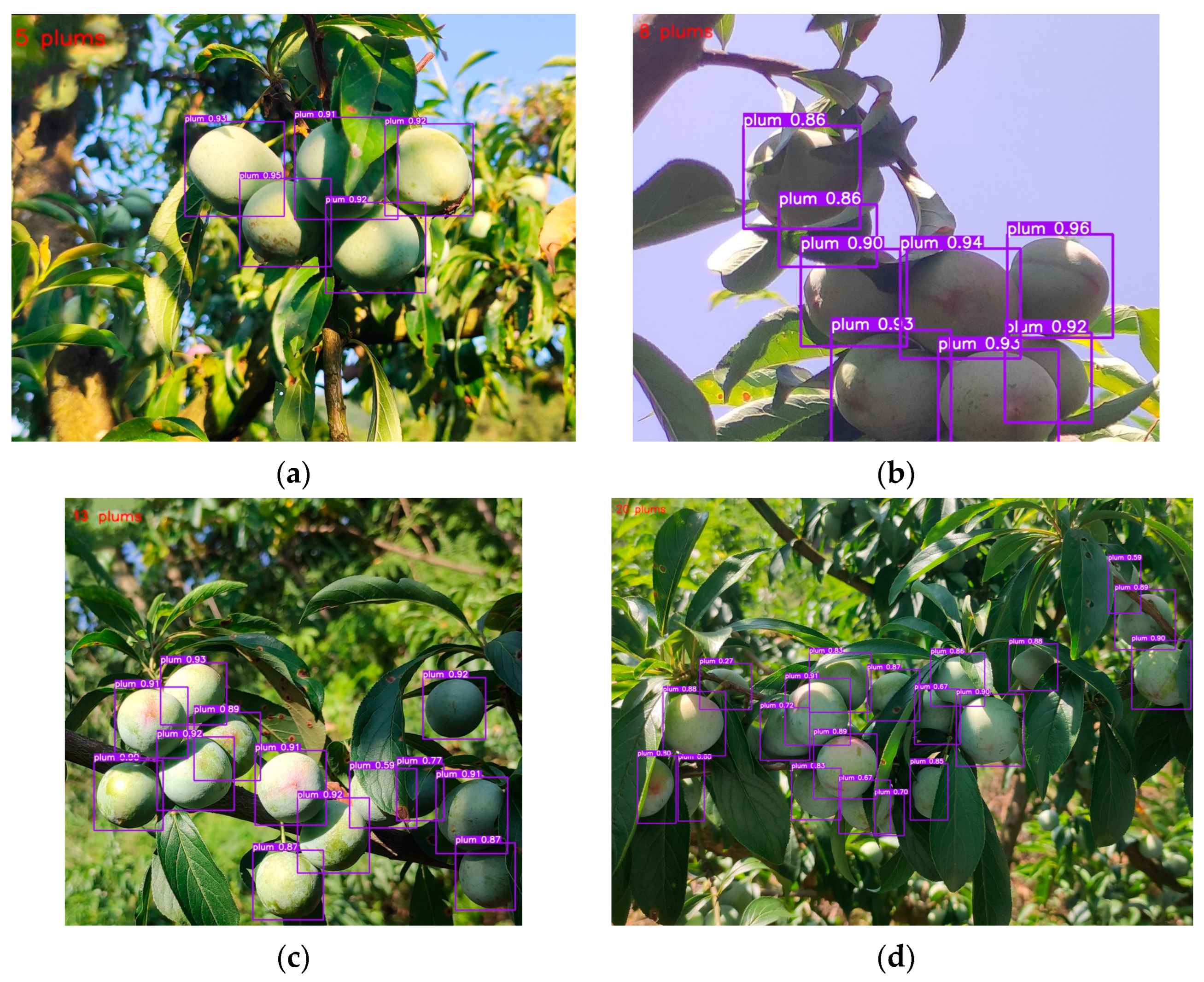

2.2. Comparison with YOLOv7

2.3. Ablation Experiments

2.4. Comparison with Mainstream Networks

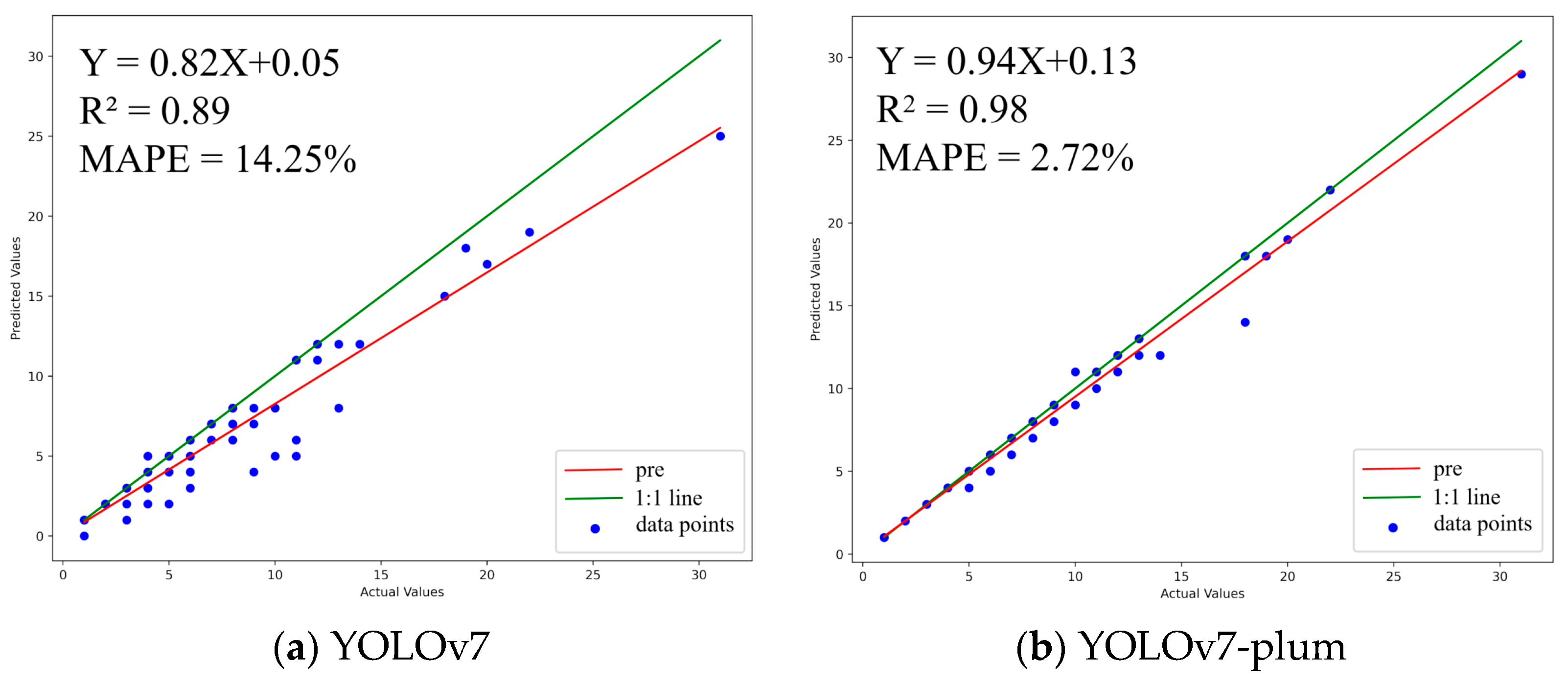

2.5. Statistical Analysis

3. Materials and Methods

3.1. Dataset

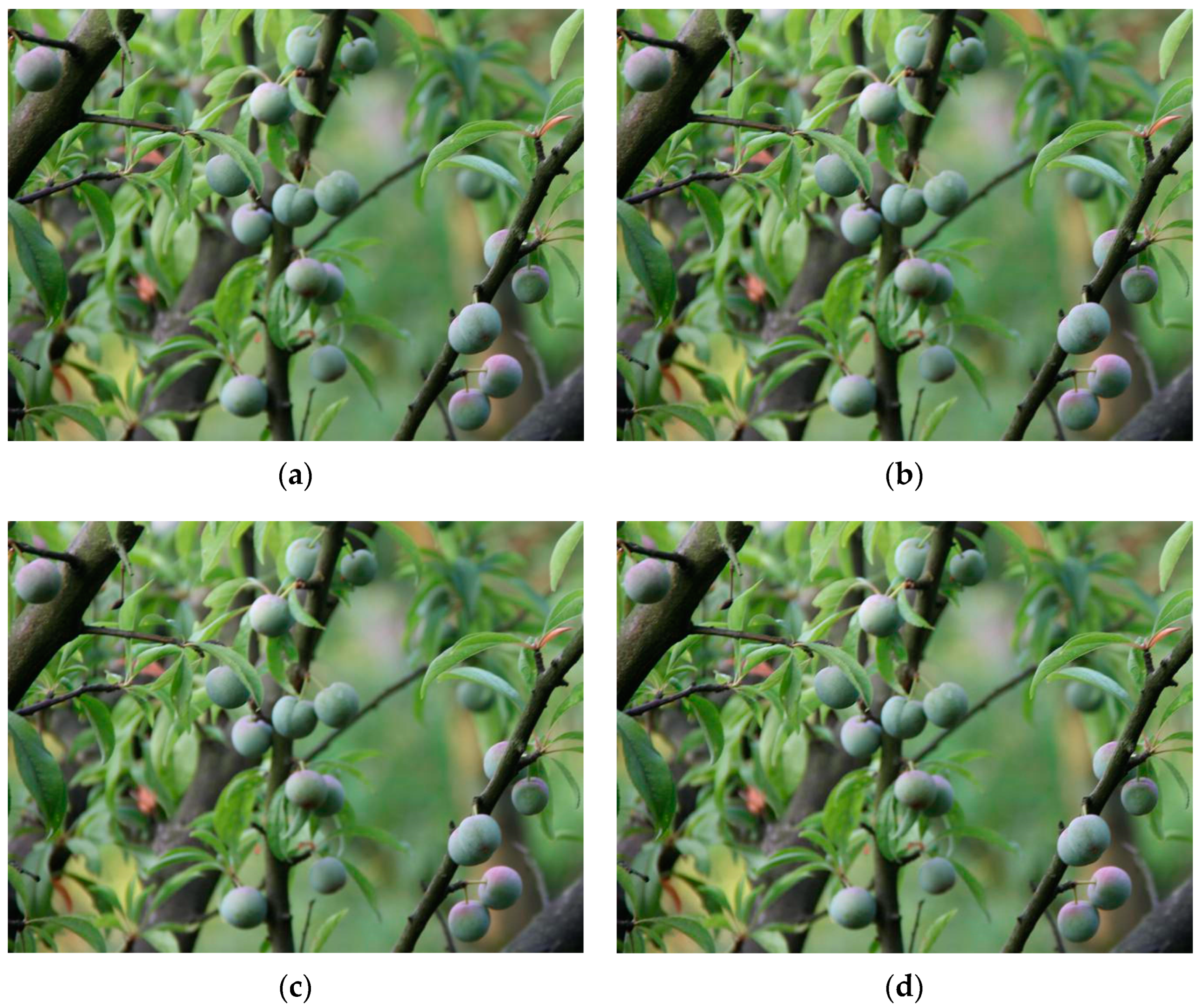

3.1.1. Experiment Field and Data Acquisition

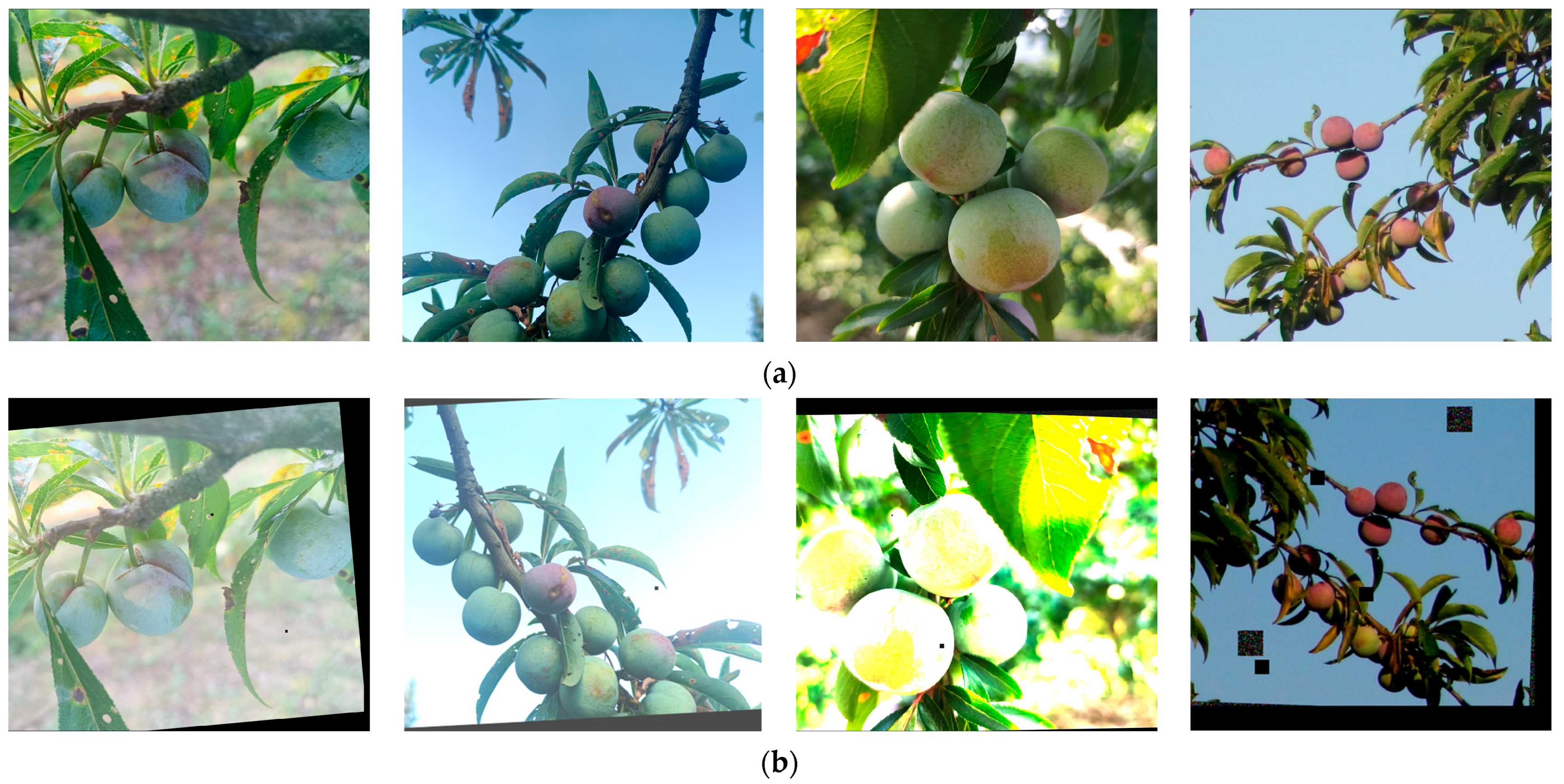

3.1.2. Data Enhancement and Data Annotation

3.2. Experimental Platform

3.3. Deep Learning Network Construction

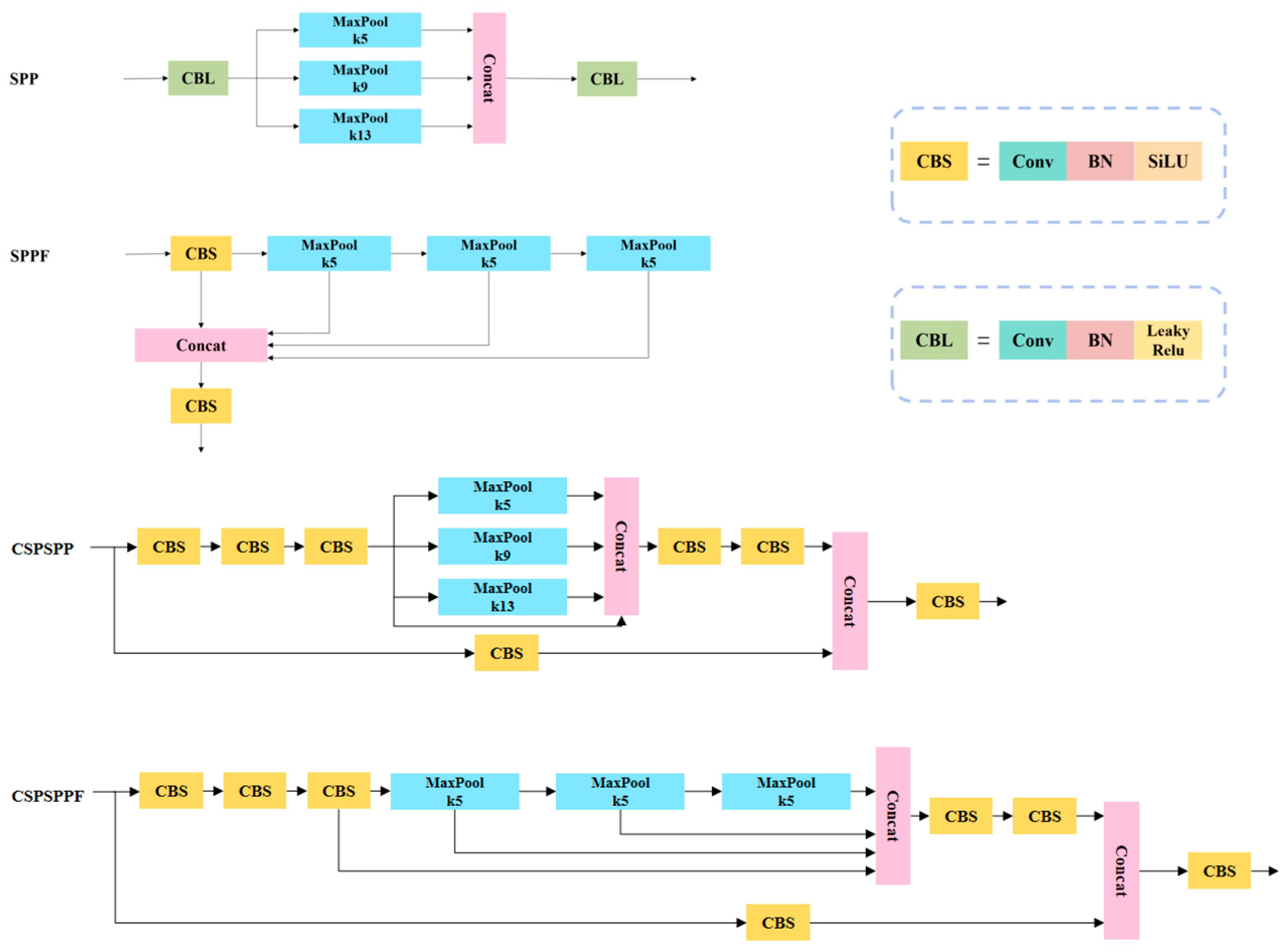

3.3.1. CSPSPPF Module

3.3.2. Bilinear Interpolation

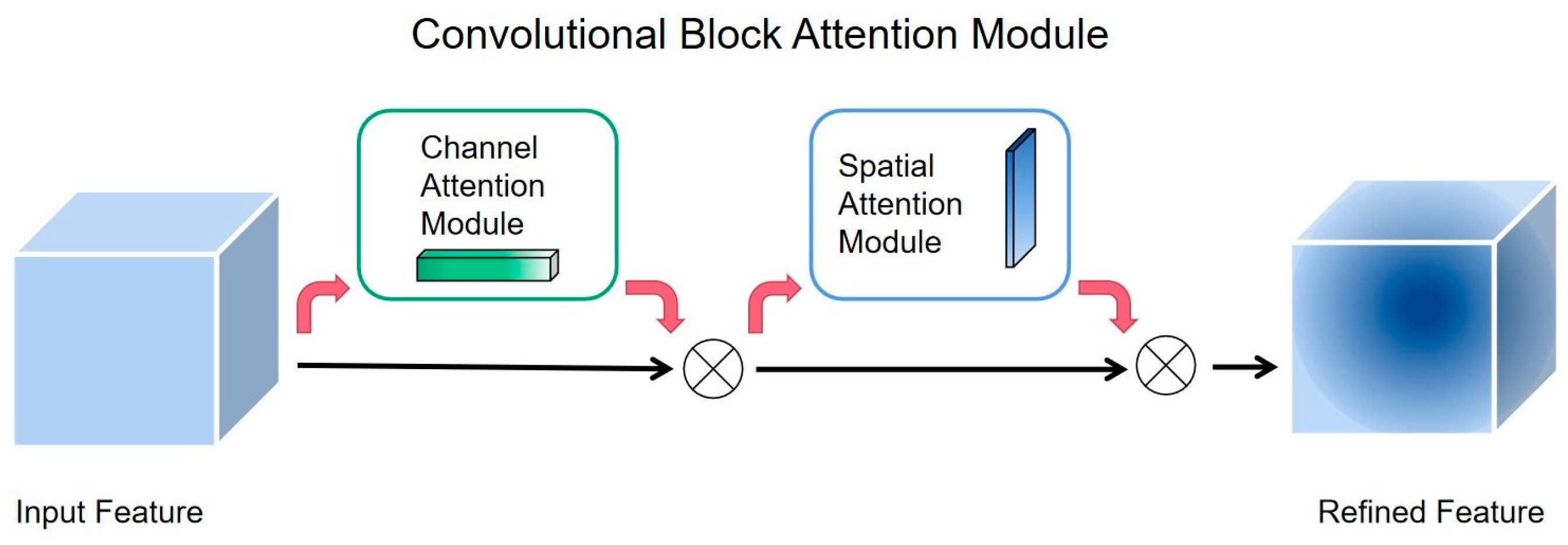

3.3.3. Attention Mechanism

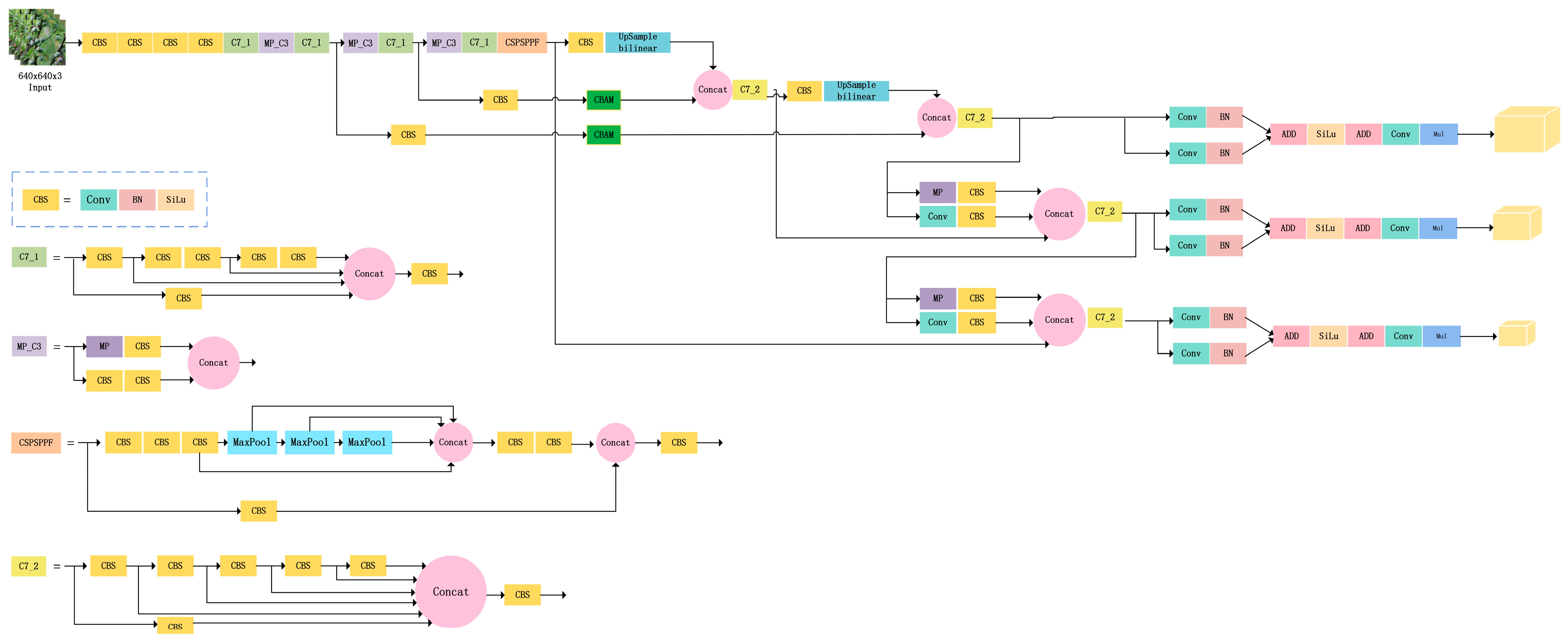

3.3.4. YOLOv7-Plum Deep Learning Network Structure

3.4. Evaluation Indicators

4. Discussion

4.1. Feasibility Analysis

4.2. Contribution to Intelligent Cultivation of the Plum Industry

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Model | Dataset | Precision | Recall | AP | Model Size (MB) |
|---|---|---|---|---|---|
| YOLOv7 | Dataset1 | 0.9041 | 0.9079 | 0.9115 | 71.3 |
| YOLOv7-Plum | Dataset1 | 0.9018 | 0.8989 | 0.9233 | 71.4 |
| YOLOv7 | Dataset2 | 0.9397 | 0.9239 | 0.9288 | 71.3 |
| YOLOv7-Plum | Dataset2 | 0.9342 | 0.9326 | 0.9491 | 71.4 |

| Model | CSPSPPF | CBAM | Bilinear | Precision | Recall | AP |
|---|---|---|---|---|---|---|
| YOLOv7 | × | × | × | 0.9397 | 0.9239 | 0.9288 |
| YOLOv7-S | √ | × | × | 0.9389 | 0.9176 | 0.9313 |
| YOLOv7-C | × | √ | × | 0.9264 | 0.9283 | 0.9396 |
| YOLOv7-B | × | × | √ | 0.9342 | 0.9112 | 0.9345 |
| YOLOv7-SC | √ | √ | × | 0.9116 | 0.9373 | 0.9376 |
| YOLOv7-SB | √ | × | √ | 0.9412 | 0.9087 | 0.9394 |
| YOLOv7-CB | × | √ | √ | 0.9236 | 0.9294 | 0.9413 |
| YOLOv7-SCB | √ | √ | √ | 0.9342 | 0.9326 | 0.9491 |

| Model | Faster R-CNN | YOLOv5 | DETR | YOLOv7 | YOLOv8l | YOLOv7-Plum |
|---|---|---|---|---|---|---|
| AP (%) | 90.83 | 92.68 | 93.94 | 92.88 | 93.90 | 94.91 |
| Model Size (MB) | 102 | 14.4 | 474 | 71.3 | 83.6 | 71.4 |

| Dataset | Percentage | Number of Pictures | Number of Labeled Boxes |
|---|---|---|---|
| Training set | 80% | 976 | 7042 |
| Validation set | 10% | 122 | 834 |
| Test set | 10% | 122 | 869 |
| Total | 100% | 1220 | 8745 |
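The 80/10/10 proportions in the table above can be reproduced with a simple shuffled index split. The following is a minimal sketch, not the authors' procedure: the function name `split_indices`, the fixed seed, and the use of rounding are all assumptions made for illustration.

```python
import random


def split_indices(n, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle indices 0..n-1 and split them into train/val/test lists.

    The test set receives whatever remains after the train and
    validation portions are taken, so the three parts always sum to n.
    """
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # seeded for repeatability (assumption)
    n_train = round(n * train_frac)
    n_val = round(n * val_frac)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


# 1220 is the total number of pictures reported in the table.
train, val, test = split_indices(1220)
print(len(train), len(val), len(test))
```

With 1220 pictures this yields parts of 976, 122, and 122 images, matching the picture counts in the table. The number of labeled boxes per subset depends on which images land where, so it is not reproduced here.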

| Interpolation Algorithm | Original Image | Nearest Neighbor Interpolation | Bilinear Interpolation | Bicubic Interpolation |
|---|---|---|---|---|
| Image Size (pixels) | 664 × 498 | 1325 × 996 | 1325 × 996 | 1325 × 996 |
| Execution time (seconds) | - | 0.008976 | 0.010970 | 0.015955 |
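To make the bilinear column of the table above concrete, here is a minimal pure-Python sketch of bilinear upsampling on a tiny grid. It is illustrative only and is not the implementation used in YOLOv7-Plum. The helper names (`bilinear_sample`, `upsample_bilinear`) are hypothetical, and the corner-aligned coordinate mapping is an assumption; deep learning frameworks offer several mapping conventions.

```python
def bilinear_sample(img, x, y):
    """Sample a 2D list of floats at fractional column x and row y."""
    h, w = len(img), len(img[0])
    x0, y0 = int(x), int(y)
    # Clamp the right/bottom neighbours so edge samples stay in bounds.
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    dx, dy = x - x0, y - y0
    # Interpolate along x on the two bracketing rows, then along y.
    top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
    bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def upsample_bilinear(img, out_h, out_w):
    """Resize img to out_h x out_w, mapping output corners onto input corners."""
    h, w = len(img), len(img[0])
    step_y = (h - 1) / (out_h - 1) if out_h > 1 else 0.0
    step_x = (w - 1) / (out_w - 1) if out_w > 1 else 0.0
    return [
        [bilinear_sample(img, col * step_x, row * step_y) for col in range(out_w)]
        for row in range(out_h)
    ]


grid = [[0.0, 2.0],
        [4.0, 6.0]]
upsampled = upsample_bilinear(grid, 3, 3)
for row in upsampled:
    print(row)
```

Each new value is a distance-weighted average of its four nearest input pixels, which is why bilinear output is smoother than nearest neighbor output while needing fewer neighbours per pixel than bicubic interpolation.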

| Confusion Matrix | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | TP | FN |
| Actual Negative | FP | TN |
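The precision and recall values in the result tables follow from the confusion matrix entries through the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN). A minimal sketch is given below; the function name and the example counts are hypothetical and are not taken from the experiments.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from confusion matrix counts.

    precision = TP / (TP + FP): share of predicted positives that are correct.
    recall    = TP / (TP + FN): share of actual positives that are found.
    A zero denominator yields 0.0 instead of raising an error.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts chosen only to illustrate the arithmetic.
precision, recall = precision_recall(tp=90, fp=10, fn=30)
print(precision, recall)
```

TN does not appear in either formula, which suits object detection, where the number of true negatives (background regions correctly left undetected) is not well defined.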

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tang, R.; Lei, Y.; Luo, B.; Zhang, J.; Mu, J. YOLOv7-Plum: Advancing Plum Fruit Detection in Natural Environments with Deep Learning. Plants 2023, 12, 2883. https://doi.org/10.3390/plants12152883
