Research on an Urban Building Area Extraction Method with High-Resolution PolSAR Imaging Based on Adaptive Neighborhood Selection Neighborhoods for Preserving Embedding

Abstract

1. Introduction

2. PolSAR Image Features

2.1. Backscattering Characteristics

2.2. Texture Features

2.3. Polarization Characteristics

3. ANSNPE Algorithm and Extraction Framework

3.1. ANSNPE Algorithm

The NPE algorithm proceeds as follows:

- Find the k nearest neighbors of each sample Xi and reconstruct Xi as an affine combination of these neighborhood points. To minimize the reconstruction error, the objective function is defined as in Equation (1);

- Calculate the weight matrix W according to the optimized objective function;

- Solve the characteristic equation; the eigenvectors corresponding to the d smallest eigenvalues of the equation form the projection matrix A;

- Obtain the new features of the training image by mapping the training samples with the projection matrix.
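The four NPE steps above can be sketched in NumPy. This is a minimal illustration, not the authors' implementation: it assumes samples are stored as rows of `X`, uses the commonly cited NPE formulation (reconstruction weights that sum to one, followed by the generalized eigenproblem XᵀMXa = λXᵀXa with M = (I − W)ᵀ(I − W)), and adds a small regularization term `reg` for numerical stability. The function name `npe_fit`, the parameter defaults, and the synthetic data are hypothetical.

```python
import numpy as np


def npe_fit(X, k=5, d=2, reg=1e-3):
    """Sketch of NPE. X: (n_samples, n_features). Returns (A, W)."""
    n, m = X.shape

    # Step 1: k nearest neighbors by Euclidean distance (self excluded).
    sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2)
    np.fill_diagonal(sq, np.inf)
    nbrs = np.argsort(sq, axis=1)[:, :k]

    # Step 2: affine reconstruction weights; each row of W sums to 1.
    W = np.zeros((n, n))
    for i in range(n):
        Z = X[nbrs[i]] - X[i]                      # neighbors centered on x_i
        G = Z @ Z.T                                # local Gram matrix (k x k)
        G += reg * (np.trace(G) + 1e-12) * np.eye(k)  # assumed regularization
        w = np.linalg.solve(G, np.ones(k))
        W[i, nbrs[i]] = w / w.sum()

    # Step 3: generalized eigenproblem  X^T M X a = lambda X^T X a,
    # solved by Cholesky whitening so that only NumPy is required.
    I_W = np.eye(n) - W
    M = I_W.T @ I_W
    lhs = X.T @ M @ X
    rhs = X.T @ X + reg * np.eye(m)
    L = np.linalg.cholesky(rhs)
    L_inv = np.linalg.inv(L)
    C = L_inv @ lhs @ L_inv.T
    C = (C + C.T) / 2                              # enforce symmetry
    _, vecs = np.linalg.eigh(C)                    # eigenvalues ascending
    A = L_inv.T @ vecs[:, :d]                      # d smallest eigenvalues
    return A, W


# Step 4: map the (synthetic) training samples with the projection matrix.
rng = np.random.default_rng(0)
X_train = rng.normal(size=(30, 6))
A, W = npe_fit(X_train, k=5, d=2)
Y_train = X_train @ A
print(Y_train.shape)
```

Because the projection is linear, unseen samples can be mapped with the same matrix (`X_new @ A`), which is the property that distinguishes NPE from LLE.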

The ANSNPE algorithm modifies the neighbor selection as follows:

- Set the initial neighbor parameter k, the minimum neighbor point parameter kmin, the maximum neighbor point parameter kmax, and the small event selection probability p. Find the initial k nearest neighbors of each sample Xi (Xi = [xij], j = 1, …, k);

- Select the nearest neighbors adaptively. The mean Euclidean distance Di and the mean manifold distance Dm of the sample point Xi are calculated, and the neighbor parameter ki of sample Xi is obtained from Di and Dm (Equations (2)–(4)). If ki < k, Di is large and the neighbor data of Xi are sparse, so the (1 − p)(k − ki) neighbors with the largest Euclidean distances are eliminated from the neighborhood [53]. If ki > k, Di is small and the data are dense, so the current neighbors of Xi are retained and the (1 − p)(ki − k) remaining points with the smallest Euclidean distances are added to the neighborhood of Xi;

- Obtain the final neighbors of Xi and calculate the weight matrix W according to the optimized objective function;

- Solve the characteristic equation; the eigenvectors corresponding to the d smallest eigenvalues of the equation form the projection matrix A; and

- Obtain the new features of the training image by mapping the training samples with the projection matrix.
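The neighborhood adjustment in the second step can be sketched as follows. This is a hedged illustration under stated assumptions: the adaptive parameter `k_i` is taken as an input, since Equations (2)–(4) are not reproduced here; the number of points removed or added is taken as (1 − p)|k − ki| rounded to the nearest integer; and the final neighborhood size is clamped to [kmin, kmax]. The rounding, the clamping, and the function name `adjust_neighbors` are assumptions, not details confirmed by the text.

```python
def adjust_neighbors(dists, k, k_i, p, k_min, k_max):
    """Return indices of the adjusted neighborhood of one sample.

    dists : Euclidean distances from the sample to every candidate point
            (the sample itself excluded).
    k_i   : adaptive neighbor parameter, assumed to be computed elsewhere.
    """
    # Candidate indices ordered from nearest to farthest.
    order = sorted(range(len(dists)), key=lambda j: dists[j])

    # Number of points to drop or add (rounding is an assumption).
    delta = round((1 - p) * abs(k - k_i))

    if k_i < k:
        size = k - delta      # sparse region: drop the farthest neighbors
    elif k_i > k:
        size = k + delta      # dense region: add the next-nearest points
    else:
        size = k

    # Assumed use of kmin and kmax: clamp the final neighborhood size.
    size = max(k_min, min(k_max, size, len(order)))
    return order[:size]


distances = [0.5, 0.1, 0.9, 0.3, 0.7, 0.2, 0.8, 0.4, 0.6, 1.0]
print(adjust_neighbors(distances, k=6, k_i=4, p=0.0, k_min=2, k_max=9))
print(adjust_neighbors(distances, k=6, k_i=8, p=0.5, k_min=2, k_max=9))
```

Removing the farthest points from the k nearest neighbors is equivalent to keeping a shorter prefix of the distance-sorted list, and adding the nearest remaining points is equivalent to keeping a longer prefix, which is why a single slice suffices.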

3.2. Extraction Framework

4. Experiments and Results

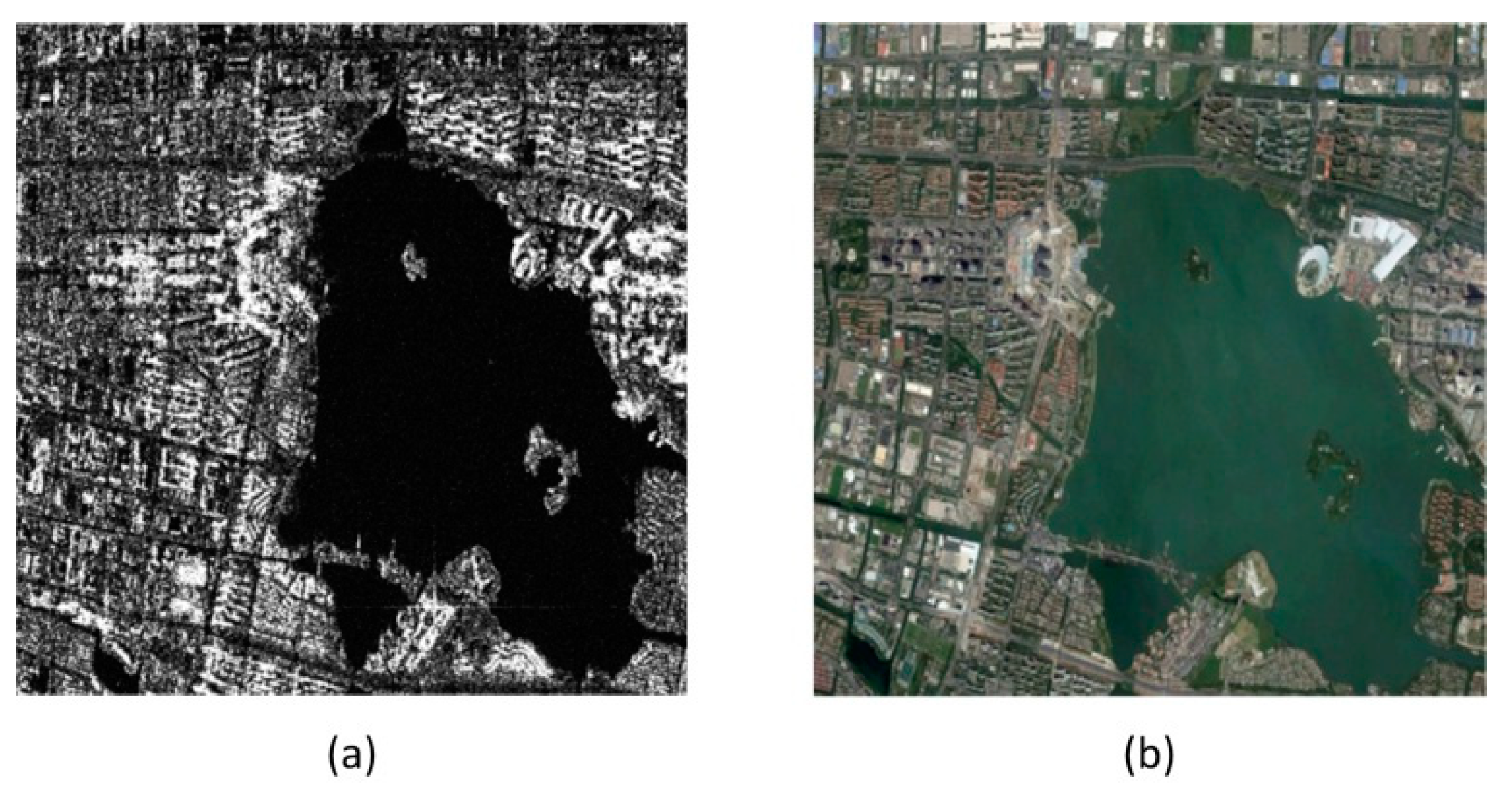

4.1. Data

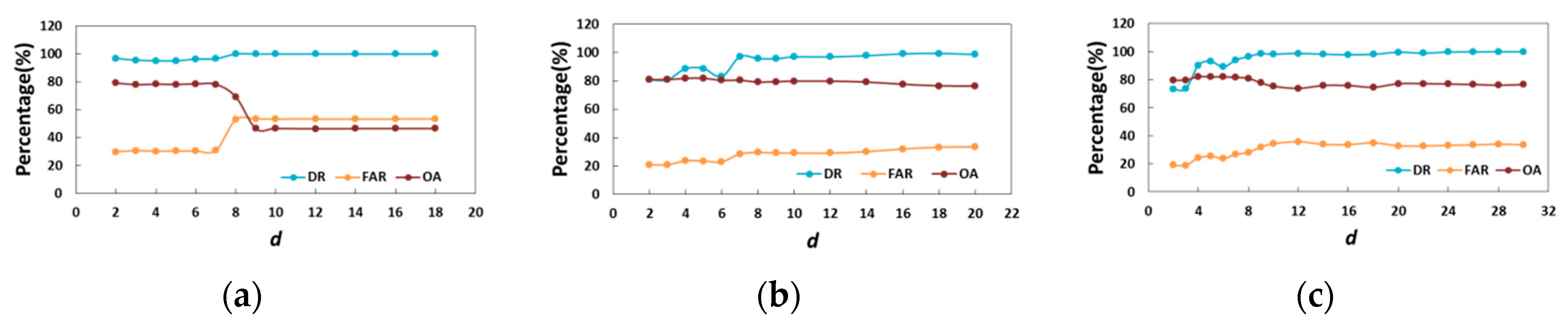

4.2. Discussion of the Parameter d

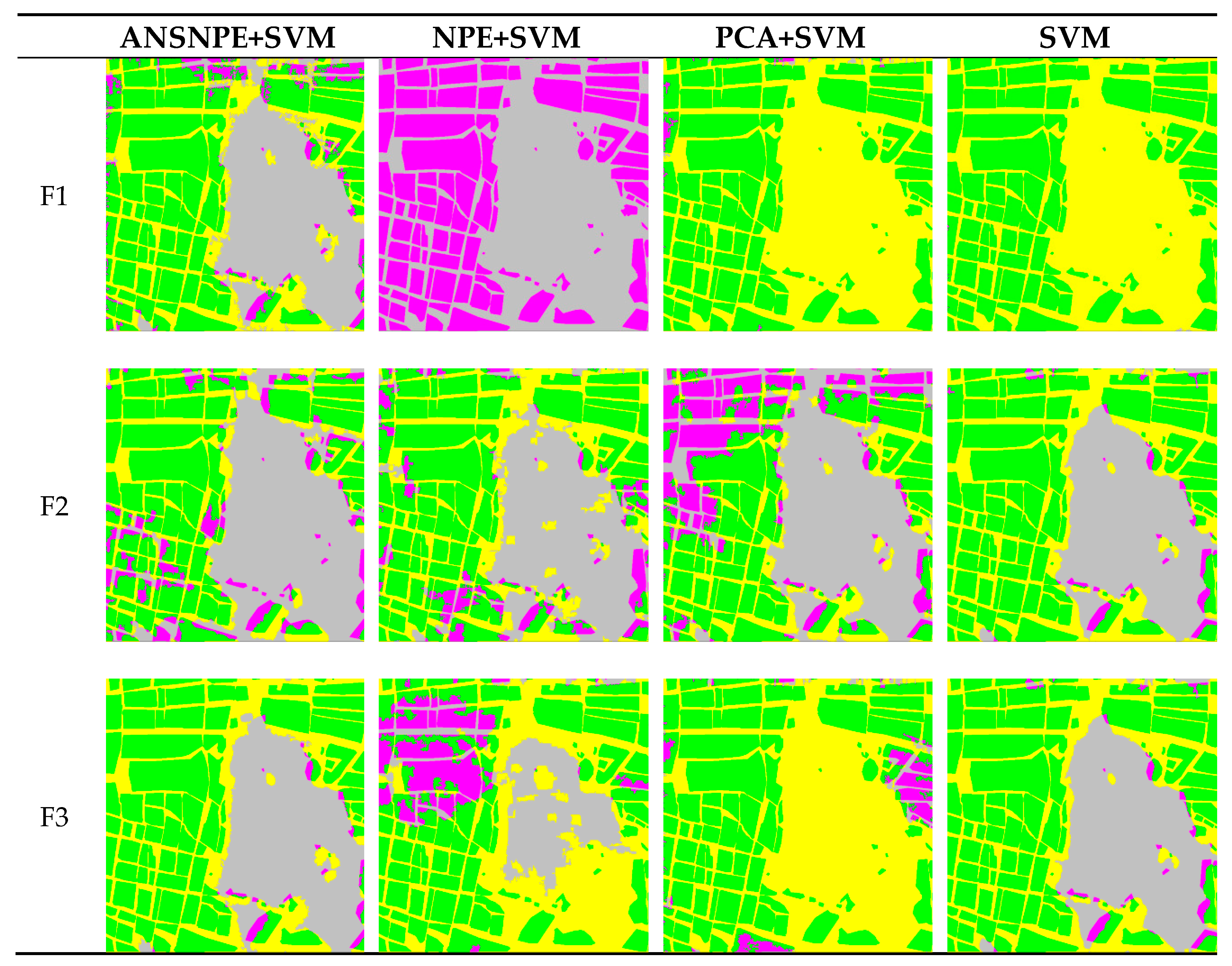

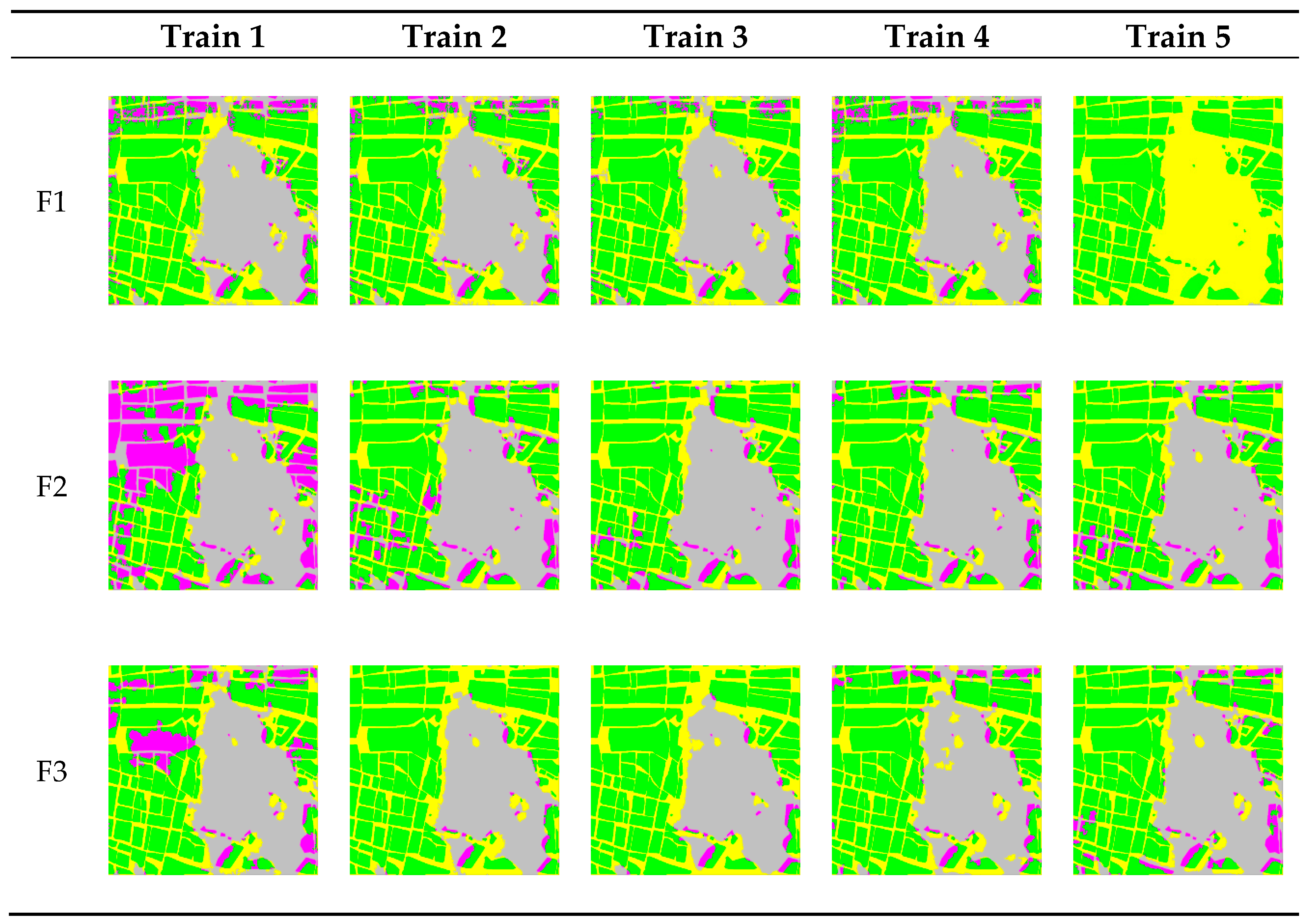

4.3. Experiments of Building Extraction

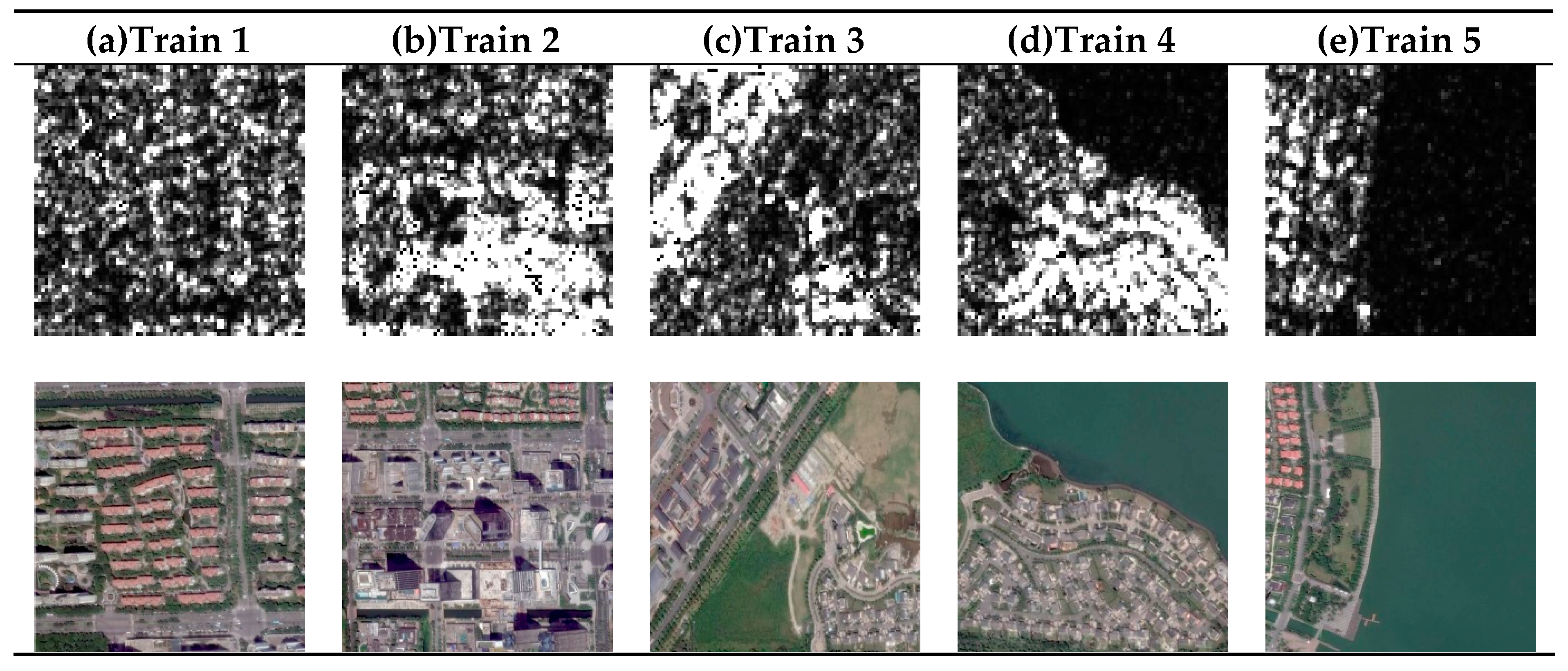

4.4. Applicability Analysis

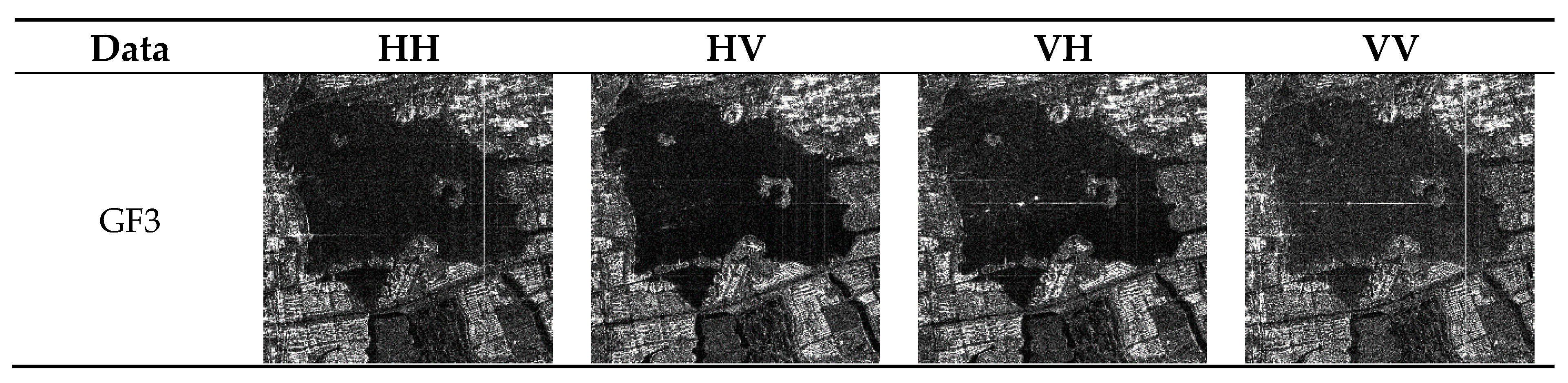

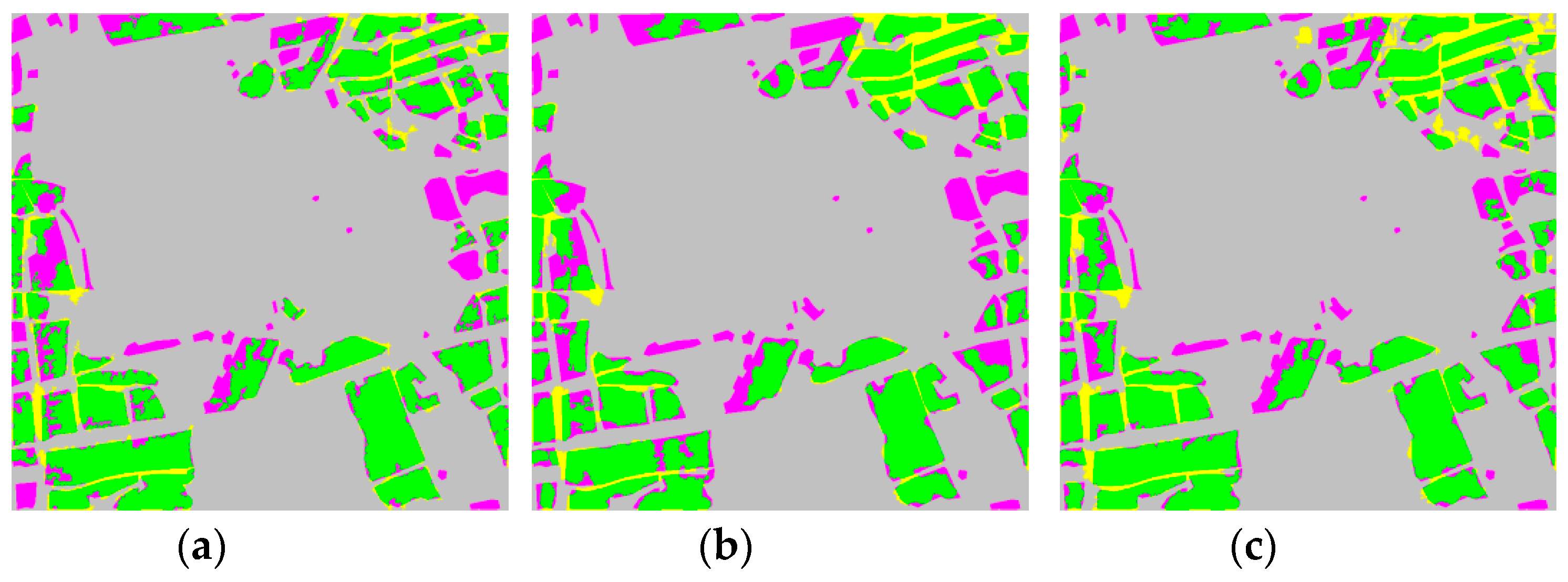

4.5. GF3 Data

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Xu, J. Urban Change Detection from Spaceborne PolSAR Images with Radiometric Corrections. Ph.D. Thesis, Wuhan University, Wuhan, China, 2015.

2. Luo, D. Fusion of High Spatial Resolution Optical and Polarimetric SAR Images for Urban Land Cover Classification. Master’s Thesis, Chongqing Jiaotong University, Chongqing, China, 2015.

3. Tan, Q.L.; Shao, Y. A Study on the Development of New Classification Technology for Radar Remote Sensing Imagery. Remote Sens. Land Resour. 2001, 49, 1–7.

4. Zhao, L.J.; Gao, G.; Kuang, G.Y. Variogram-based Built-up Areas Extraction from High-resolution SAR Images. Signal Process. 2009, 25, 1433–1442.

5. Zhu, J.H.; Guo, H.D.; Fan, X.T.; Zhu, B.Q. The Application of the Wavelet Texture Method to the Classification of Single-Band, Single-Polarized and High-resolution SAR Images. Remote Sens. Land Resour. 2005, 64, 36–39.

6. Zhao, L.J.; Qin, Y.L.; Gao, G.; Kuang, G.Y. Detection of built-up areas from High-Resolution SAR Images Using GLCM textural analysis. J. Remote Sens. 2009, 13, 483–490.

7. Shang, T.T.; Jia, Y.C.; Wen, Y.; Sun, H. Laplacian Eigenmaps-Based Polarimetric Dimensionality Reduction for SAR Image Classification. IEEE Trans. Geosci. Remote Sens. 2012, 50, 170–178.

8. Huang, Q.; Liu, H. Overview of Nonlinear Dimensionality Reduction Methods in Manifold Learning. Appl. Res. Comput. 2007, 24, 19–25.

9. Tenenbaum, J.B.; de Silva, V.; Langford, J.C. A Global Geometric Framework for Nonlinear Dimensionality Reduction. Science 2000, 290, 2319–2323.

10. Roweis, S.T.; Saul, L.K. Nonlinear dimensionality reduction by locally linear embedding. Science 2000, 290, 2323–2326.

11. Belkin, M.; Niyogi, P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 2003, 15, 1373–1396.

12. Zhang, Z.; Zha, H.Y. Principal Manifolds and Nonlinear Dimension Reduction via Local Tangent Space Alignment. SIAM J. Sci. Comput. 2005, 26, 313–338.

13. He, X.; Niyogi, P. Locality Preserving Projections. Neural Inf. Process. Syst. 2004, 16, 153–160.

14. He, X.; Cai, D.; Yan, S.; Zhang, H.J. Neighborhood Preserving Embedding. In Proceedings of the Tenth IEEE International Conference on Computer Vision, Beijing, China, 17–20 October 2005; Volume 2, pp. 1208–1213.

15. Xia, J.S.; Bombrun, L.; Berthoumieu, Y.; Germain, C. Multiple features learning via rotation strategy. In Proceedings of the International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016.

16. Chang, C.S.; Chen, K.C.; Kuo, B.C.; Wang, M.S.; Li, C.H. Semi-supervised local discriminant analysis with nearest neighbors for hyperspectral image classification. In Proceedings of the Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014.

17. Xia, J.S.; Chanussot, J.; Du, P.J.; He, X. Spectral–Spatial Classification for Hyperspectral Data Using Rotation Forests With Local Feature Extraction and Markov Random Fields. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2532–2546.

18. Liao, W.Z.; Pizurica, A.; Philips, W.; Pi, Y. Feature extraction for hyperspectral images based on semi-supervised local discriminant analysis. In Proceedings of the 2011 Joint Urban Remote Sensing Event, Munich, Germany, 11–13 April 2011.

19. Watanabe, K. Coherency Preserving Feature Transformation for Semantic Segmentation. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 1368–1373.

20. Zhang, H.S.; Li, J.; Wang, T.; Lin, H.; Zheng, Z.; Li, Y.; Lu, Y. A manifold learning approach to urban land cover classification with optical and radar data. Landsc. Urban Plan. 2018, 172, 11–24.

21. Lou, J.; Jin, T.; Zhou, Z.M. Feature extraction for landmine detection in UWB SAR via SWD and Isomap. Prog. Electromagn. Res. 2013, 138, 157–171.

22. Li, B.; Gong, J.B.; Tian, J.W. Selection of matching area in SAR scene-matching-aided navigation based on manifold learning. In Proceedings of the Seventh International Symposium on Multispectral Image Processing and Pattern Recognition (MIPPR2011), Guilin, China, 4–6 November 2011.

23. Chen, B.; Cao, Y.F.; Sun, H. Active sample-selecting and manifold learning-based relevance feedback method for synthetic aperture radar image retrieval. IET Radar Sonar Navig. 2011, 5, 118–127.

24. Shi, L.; Zhang, L.F.; Yang, J.; Zhang, L.; Li, P. Supervised Graph Embedding for Polarimetric SAR Image Classification. IEEE Geosci. Remote Sens. Lett. 2013, 10, 216–220.

25. Dong, G.G.; Kuang, G.Y. Target recognition in SAR images via classification on Riemannian manifolds. IEEE Geosci. Remote Sens. Lett. 2015, 12, 199–203.

26. Shi, L.; Zhang, L.F.; Zhao, L.L.; Zhang, L.; Li, P.; Wu, D. Adaptive Laplacian eigenmap-based dimension reduction for ocean target discrimination. IEEE Geosci. Remote Sens. Lett. 2016, 13, 902–906.

27. Cao, H.; Zhang, H.; Wang, C. Supervised locally linear embedding for polarimetric SAR image classification. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 10–15 July 2016; pp. 7561–7564.

28. Yu, M.; Zhang, S.Q.; Dong, G.G. Target recognition in SAR image based on robust locality discriminant projection. IET Radar Sonar Navig. 2018, 12, 1285–1293.

29. Hu, J.L.; Hong, D.F.; Wang, Y.Y. A comparative review of manifold learning techniques for hyperspectral and polarimetric SAR image fusion. Remote Sens. 2019, 11, 681.

30. Wang, H.J.; Han, J.H.; Deng, Y.Y. PolSAR image classification based on Laplacian eigenmaps and superpixels. EURASIP J. Wirel. Commun. Netw. 2017, 2017, 198.

31. Wang, J.; Sun, L.Z. Research on supervised manifold learning for SAR target classification. In Proceedings of the CIMSA 2009—International Conference on Computational Intelligence for Measurement Systems and Applications, Hong Kong, China, 11–13 May 2009.

32. Li, T. Method Research of Recognition of Urban Building Areas from High Resolution SAR Images Based on Manifold Learning. Master’s Thesis, University of Chinese Academy of Sciences, Beijing, China, 2015.

33. Miao, A.M.; Ge, Z.Q.; Song, Z.H.; Shen, F. Nonlocal structure constrained neighborhood preserving embedding model and its application for fault detection. Chemom. Intell. Lab. Syst. 2015, 142, 184–196.

34. Miao, A.M.; Li, P.; Ye, L. Neighborhood preserving regression embedding based data regression and its applications on soft sensor modeling. Chemom. Intell. Lab. Syst. 2015, 147, 86–94.

35. Miao, S.; Wang, J.; Gao, Q.X.; Chen, F.; Wang, Y. Discriminant structure embedding for image recognition. Neurocomputing 2016, 174, 850–857.

36. Chen, L.; Li, Y.J.; Li, H.B. Facial expression recognition based on image Euclidean distance-supervised neighborhood preserving embedding. In Proceedings of the International Symposium on Optoelectronic Technology and Application 2014: Image Processing and Pattern Recognition, Beijing, China, 13–15 May 2014.

37. Zhang, S.H.; Li, W.H. Bearing Condition Recognition and Degradation Assessment under Varying Running Conditions Using NPE and SOM. Math. Probl. Eng. 2014, 781583.

38. Pang, M.; Jiang, J.F.; Lin, C.; Wang, B. Two dimensional discriminant neighborhood preserving embedding in face recognition. In Proceedings of the Sixth International Conference on Graphic and Image Processing, Beijing, China, 24–26 October 2014.

39. Liu, X.M.; Yin, J.W.; Feng, Z.L.; Dong, L.; Wang, L. Orthogonal Neighborhood Preserving Embedding for Face Recognition. In Proceedings of the 2007 IEEE International Conference on Image Processing, San Antonio, TX, USA, 16–19 September 2007; pp. 1522–4880.

40. Wang, N.; Li, X. Face Clustering Using Semi-supervised Neighborhood Preserving Embedding with Pairwise Constraints. In Proceedings of the 2009 4th IEEE Conference on Industrial Electronics and Application, Xi’an, China, 25–27 May 2009; pp. 2156–2318.

41. Tao, X.; Dong, S.F.; Zhao, Q.X.; Han, Z. Kernel Neighborhood Preserving Embedding and its Essence Analysis. In Proceedings of the 2009 WRI Global Congress on Intelligent Systems, Xiamen, China, 19–21 May 2009; pp. 2155–6083.

42. Lai, Z. Sparse local discriminant projections for discriminant knowledge extraction and classification. IET Comput. Vis. 2012, 6, 551–559.

43. Bao, X.; Zhang, L.; Wang, B.J.; Yang, J. A Supervised Neighborhood Preserving Embedding for Face Recognition. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Beijing, China, 6–11 July 2014.

44. Wen, J.H.; Yan, W.D.; Lin, W. Supervised Linear Manifold Learning Feature Extraction for Hyperspectral Image Classification. In Proceedings of the Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014.

45. Yuan, X.F.; Ge, Z.Q.; Ye, L.J.; Song, Z. Supervised neighborhood preserving embedding for feature extraction and its application for soft sensor modeling. J. Chemom. 2016, 30, 430–441.

46. Ran, R.S.; Fang, B.; Wu, X.G. Exponential Neighborhood Preserving Embedding for Face Recognition. IEICE Trans. Inf. Syst. 2018, 101, 1410–1420.

47. Mehta, S.; Zhan, B.S.; Shen, X.J. Weighted Neighborhood Preserving Ensemble Embedding. Electronics 2019, 8, 219.

48. Wang, X.Y.; Cao, Z.J.; Cui, Z.Y.; Liu, N.; Pi, Y. PolSAR image classification based on deep polarimetric feature and contextual information. J. Appl. Remote Sens. 2019, 2019, 13.

49. Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

50. Lee, J.-S.; Pottier, E. Polarimetric Radar Imaging: From Basics to Applications; Publishing House of Electronics Industry: Beijing, China, 2013; pp. 134–168. ISBN 978-7-121-20266-7.

51. Hui, K.H.; Xiao, B.H.; Wang, C.H. Self-Regulation of Neighborhood Parameter for Locally Linear Embedding. Pattern Recognit. Artif. Intell. 2010, 23, 842–846.

52. Huang, L.Z.; Zheng, L.X.; Chen, C.Y.; Min, L. Locally Linear Embedding Algorithm with Adaptive Neighbors. In Proceedings of the International Workshop on Intelligent Systems and Applications, Wuhan, China, 23–24 May 2009; pp. 1–4.

53. Zhang, Y.L.; Zhuang, J.; Wang, N.; Wang, S.A. Fusion of Adaptive Local Linear Embedding and Spectral Clustering Algorithm with Application to Fault Diagnosis. J. Xi’an Jiaotong Univ. 2010, 44, 77–82.

| Feature | Formula |
|---|---|
| Co-polarized HH backscattering coefficient | |
| Cross-polarized HV backscattering coefficient | |
| Cross-polarized VH backscattering coefficient | |
| Co-polarized VV backscattering coefficient | |

| Feature | Formula |
|---|---|
| Mean | |
| Variance | |
| Homogeneity | |
| Entropy | |
| Dissimilarity | |
| Contrast | |
| Correlation | |
| Angular Second Moment | |

| Polarimetric Target Decomposition Method | Feature |
|---|---|
| Freeman & Durden | Ps, Pd, Pv |
| Yamaguchi | Ps, Pd, Pv |
| Cloude | H, α, A |
| Pauli | |
| Krogager | ks, kd, kh |
| Span | $\lvert S_{HH}\rvert^2 + 2\lvert S_{HV}\rvert^2 + \lvert S_{VV}\rvert^2$ |
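The span entry in the table above can be evaluated per pixel from the complex scattering matrix elements. The following is a minimal sketch, assuming a reciprocal target (SHV = SVH) and scalar complex inputs; the function name `span` and the sample values are illustrative only.

```python
def span(s_hh, s_hv, s_vv):
    """Total power |S_HH|^2 + 2|S_HV|^2 + |S_VV|^2 for one pixel."""
    return abs(s_hh) ** 2 + 2 * abs(s_hv) ** 2 + abs(s_vv) ** 2


# Hypothetical scattering matrix elements for a single pixel.
print(span(3 + 4j, 1j, 2))
```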

| Parameters | RADARSAT-2 | GF3 |
|---|---|---|
| Resolution | 6.17 m | 8.00 m |
| Direction | Ascending | Descending |
| Imaging Mode | Fine Quad-Pol | QPSI |
| Incidence Angle (°) | 4.01–4.05 | 29.68–31.42 |
| Time | 2017.07.17 | 2017.01.29 |

| Feature Set | Evaluation | ANSNPE+SVM (%) | NPE+SVM (%) | PCA+SVM (%) | SVM (%) |
|---|---|---|---|---|---|
| F1 | IDR | 74.97 | 3.27 | 75.58 | 95.32 |
| | IOA | 74.03 | 54.71 | 36.45 | 68.35 |
| | DR | 95.23 | 0 | 99.55 | 100 |
| | OA | 78.09 | 53.47 | 46.34 | 46.59 |
| F2 | IDR | 87.79 | 80.32 | 70.43 | 95.55 |
| | IOA | 81.94 | 69.92 | 75.38 | 76.77 |
| | DR | 88.78 | 89.43 | 73.23 | 99.07 |
| | OA | 81.88 | 73.69 | 75.28 | 77.75 |
| F3 | IDR | 94.56 | 70.39 | 55.38 | 95.84 |
| | IOA | 81.42 | 50.04 | 29.74 | 76.77 |
| | DR | 96.42 | 76.56 | 92.02 | 99.17 |
| | OA | 80.89 | 50.94 | 43.64 | 77.76 |

| Feature Set | Evaluation | Train 1 (%) | Train 2 (%) | Train 3 (%) | Train 4 (%) | Train 5 (%) | Average (%) | Standard Deviation |
|---|---|---|---|---|---|---|---|---|
| F1 | IDR | 70.8 | 74.97 | 76.68 | 71.17 | 93.27 | 77.38 | 8.25 |
| | IOA | 72.62 | 74.03 | 74.2 | 73.33 | 68.06 | 72.45 | 2.26 |
| | DR | 91.52 | 95.23 | 96.78 | 92.51 | 99.99 | 95.21 | 3.04 |
| | OA | 77.27 | 78.09 | 77.63 | 77.51 | 46.61 | 71.42 | 12.41 |
| F2 | IDR | 46.83 | 87.79 | 92.31 | 87.22 | 88.92 | 80.61 | 16.98 |
| | IOA | 67.62 | 81.94 | 80.99 | 80.45 | 81.73 | 78.55 | 5.49 |
| | DR | 51.39 | 88.78 | 92.96 | 93.68 | 90.59 | 83.48 | 16.13 |
| | OA | 68.65 | 81.88 | 80.51 | 81.35 | 81.72 | 78.82 | 5.11 |
| F3 | IDR | 75.35 | 94.56 | 99.43 | 92.72 | 90.76 | 90.56 | 8.13 |
| | IOA | 71.69 | 81.42 | 76.52 | 78.01 | 80.46 | 77.62 | 3.44 |
| | DR | 82.66 | 96.42 | 99.57 | 96.16 | 93.49 | 93.66 | 5.83 |
| | OA | 73.69 | 80.89 | 76.53 | 78.66 | 80.47 | 78.05 | 2.67 |
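The Average and Standard Deviation columns of the table above can be checked with the Python standard library. The sketch below uses only the F1 IDR row, copied from the table. For that row, the tabulated 77.38 and 8.25 are reproduced by the mean and the population standard deviation (dividing by N), whereas the sample standard deviation (dividing by N − 1) gives a larger value. Whether the population form was used for every row is an assumption based on this one row.

```python
from statistics import mean, pstdev, stdev

# F1 / IDR values for Train 1 to Train 5, copied from the table.
f1_idr = [70.8, 74.97, 76.68, 71.17, 93.27]

avg = mean(f1_idr)
std_population = pstdev(f1_idr)  # divides by N
std_sample = stdev(f1_idr)       # divides by N - 1

print(round(avg, 2), round(std_population, 2), round(std_sample, 2))
```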

| Evaluation | F1 (%) | F2 (%) | F3 (%) |
|---|---|---|---|
| IDR | 68.95 | 66.56 | 75.29 |
| IOA | 87.53 | 87.5 | 88.18 |
| DR | 69.36 | 65.93 | 74.14 |
| OA | 88.32 | 87.24 | 88.32 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cheng, B.; Cui, S.; Ma, X.; Liang, C. Research on an Urban Building Area Extraction Method with High-Resolution PolSAR Imaging Based on Adaptive Neighborhood Selection Neighborhoods for Preserving Embedding. ISPRS Int. J. Geo-Inf. 2020, 9, 109. https://doi.org/10.3390/ijgi9020109
