2. Materials and Methods

2.1. Testing Approach

The performance testing in this study was conducted using the load testing approach. Load testing is a procedure that measures how a service responds when put under stress, so as to characterise its behaviour and performance under normal and peak load conditions. As defined by Menascé [44], the test is done using software that simulates the service usage in terms of the behaviour and number of users (not only humans). The user behaviour is detailed by specifying which requests are executed and with what frequency, while the number of users is simulated by specifying the number of concurrent users. The results of load testing are metrics that describe the resource usage of the server and the performance of the service.

For the scope of this research, among the numerous tools available for conducting load testing, the authors selected the open source framework Locust [45]. The selection was due to its open license, its Python language, which fits well with istSOS, and its event-based (not thread-bound) architecture, which makes it possible to simulate thousands of users from a single machine. Locust is a scalable and distributed framework developed in Python and available under the MIT license. This framework enables the set-up of a load test under different scenarios, identified by the number of concurrent users and the hatch rate, which indicates the number of users spawned per second. Without entering into the technical details of the tool’s functionality, which can be found in Cannata et al. [46], it is possible to simulate the users’ behaviours by coding different user types, each characterized by its relative access frequency, the set of operations it executes, and the minimum and maximum waiting time between requests.

In each set of operations, it is possible to implement the task to be performed (generally an HTTP request), to associate a relative execution frequency with the operation and to catch responses that are considered an operation failure (e.g., an error message returned with HTTP status code 200). By creating and combining collections of operations and user types, it is possible to define the test in code, which provides a high degree of freedom and facilitates the definition of realistic load tests that actually simulate the desired user behaviour.
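The failure rule mentioned above (an error document returned with HTTP status code 200) can be illustrated without any load-testing library. The following is a minimal sketch, assuming that the service reports errors as an XML document whose root element is named `ExceptionReport`; the function name `is_failed_response` and the sample payloads are hypothetical and are not taken from the test scripts used in this study.

```python
import xml.etree.ElementTree as ET


def is_failed_response(status_code: int, body: str) -> bool:
    """Return True when a response should be counted as a failure.

    Assumption: errors are reported as an XML document whose root
    element is called ExceptionReport, possibly with HTTP status 200.
    """
    if status_code != 200:
        return True  # transport-level or server-side error
    try:
        root = ET.fromstring(body)
    except ET.ParseError:
        return True  # not well-formed XML: treat as a failure
    # Drop the namespace part of a tag such as "{namespace}ExceptionReport".
    local_name = root.tag.rsplit("}", 1)[-1]
    return local_name == "ExceptionReport"


# Hypothetical payloads, for illustration only.
ok_body = "<Capabilities><ServiceIdentification/></Capabilities>"
error_body = (
    '<ExceptionReport xmlns="http://www.opengis.net/ows/1.1">'
    "<Exception exceptionCode='NoApplicableCode'/></ExceptionReport>"
)
```

In a load-testing script, a check of this kind would be applied to every response so that error documents delivered with a successful status code are not counted as successful requests.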

Locust allows collecting information on: number of executed requests, number of failed requests, average content size, number of requests per second and response time statistics (i.e., min, max, ave, median). During the load testing, the dstat tool [47] was used to record hardware statistics of the server. This tool allows all the system resources to be monitored in real time and the date, time and metrics to be written directly to a comma-separated values file. The combination of user and server statistics makes it possible to evaluate the performance and behaviour of the whole system.
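As an illustration of the response time statistics listed above (min, max, ave, median), the following minimal sketch computes them for a list of response times. The function name `summarise_response_times` and the sample values are hypothetical; they are not measurements from this study.

```python
import statistics


def summarise_response_times(times_s):
    """Return min, max, average and median of response times in seconds."""
    if not times_s:
        raise ValueError("at least one response time is required")
    return {
        "min": min(times_s),
        "max": max(times_s),
        "ave": statistics.mean(times_s),
        "median": statistics.median(times_s),
    }


# Hypothetical response times (seconds) for one request type.
sample = [0.2, 0.4, 0.3, 0.98, 0.5]
summary = summarise_response_times(sample)
```

Reporting the median next to the average is useful because a few very slow responses raise the average much more than the median.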

2.2. Testing Environment (System Configuration)

In order to perform the tests, two different machines were used: a physical server hosting the application under test and a physical client generating the load. Virtual environments were deliberately excluded to prevent possible interference and I/O latencies due to the virtualisation layer. The configurations of the two machines are described in Table 1.

On the client side, a Locust environment was installed and each test was implemented to record, for each minute, the average number of requests and the response time for each request type. On the server side, the dstat tool was configured to record, for each minute, the processor (CPU) usage, the memory (RAM) usage and the disk performance (I/O write and read speed). The tests were conducted on istSOS version 2.3. It can be reasonably assumed that the measured response time was not affected by any network bottleneck during the tests, since the 1 GB/sec transport rate of the network used is certainly faster than the service response.

2.3. Experiment Definition and Set-Up

Using the previously described selected technologies and configurations, this research tested three different monitoring networks inspired by real case applications. The tested monitoring systems are different in terms of number of sensors, length of stored time series, number of observed properties per sensor and measurement frequency.

The first real case mimics a regional monitoring system: the OASI air-quality monitoring network of the Canton Ticino [48], where about 20 stations measure three observed properties (PM10, O3 and NO2) every 10 minutes. Data are available for the last 25 years. This monitoring network can be considered a mid-size monitoring network with regard to the number of sensors and the number of archived observations (about 100 million).

The second case replicates a national monitoring system: the SwissMetNet (Landl et al., 2009). It is the automatic monitoring network of MeteoSwiss, which comprises 160 stations measuring seven meteorological parameters (air temperature, precipitation, relative humidity, hours of sunshine, wind speed, global radiation, air pressure) with a resolution of 10 minutes. Digital data are available at this time resolution from the 1980s. This monitoring network can be considered large-size in terms of number of stations and number of measurements (about 1700 million).

The third mimicked case is another regional monitoring system: the Canton Ticino GESPOS monitoring network. It collects observations from the regional springs, wells and boreholes. It counts 5120 sensors that often register a single property (e.g., groundwater head, spring flow or water temperature) measured only a few times. Data are therefore sparse in time, but the observed interval is about 45 years, ranging from the 1970s to the present day. This monitoring network can be considered large-size because of the number of sensors. The tested synthetic monitoring systems, inspired by the three real monitoring networks described above, were configured as described in Table 2, and the database was filled with synthetic data.

During the tests, two different types of user were configured in Locust: one reflecting a data consumer and one reflecting a data producer (see Figure 2). The data producer is typically a sensor that registers once to the system (SOS’s registerSensor request) and then starts to send observations at its sampling frequency (SOS’s insertObservation requests). The data consumer is typically a human who looks at the service capabilities (SOS’s getCapabilities request), then identifies the sensor of interest, looks for its specifications (SOS’s describeSensor request) and finally downloads the data (SOS’s getObservation request).

Each monitoring system of Table 2 was tested under different scenarios with an increasing number of concurrent data consumers: 100, 200, 500, 1000, and 2000. The number of data producers is equal to the number of sensors of the tested monitoring system. In order to assign different relative frequencies to the different SOS requests performed by data consumers, weights derived from previous experiments were used. In particular, the weight values were derived from the results presented in Cannata and Antonovic [33], where the total number of executed SOS requests of each type over a period of two years was extracted from the analysis of server logs of an istSOS service used in the ENORASIS project. The selected relative weights of the requests applied during the tests are reported in Table 3.
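To make the role of relative weights concrete, the following minimal sketch draws request types with a probability proportional to their weight. The weight values in `EXAMPLE_WEIGHTS` are hypothetical placeholders chosen for illustration (the values actually applied are those of Table 3), and the function names are likewise assumptions.

```python
import random

# Hypothetical relative weights; the values used in the tests are in Table 3.
EXAMPLE_WEIGHTS = {
    "getCapabilities": 1,
    "describeSensor": 2,
    "getObservation": 7,
}


def expected_shares(weights):
    """Convert relative weights into the expected share of each request."""
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def pick_request(rng, weights):
    """Draw one request name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


rng = random.Random(42)  # fixed seed so the draw sequence is reproducible
draws = [pick_request(rng, EXAMPLE_WEIGHTS) for _ in range(1000)]
```

With these placeholder weights, about 70% of the simulated requests would be getObservation requests; only the ratios between the weights matter, not their absolute values.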

While getCapabilities and describeSensor do not have complex filter capabilities, the getObservation request allows the selection of a period in time and of the observed properties. During the execution of the tests, we decided to retrieve all the observed properties over a time interval randomly shifted in time within the observed period of the data. The time interval was selected as a fixed period that is proportional to the observation frequency: 1 day for systems A and B and 6 months for system C. During the testing, data producers and data consumers operated simultaneously using the same SOS service.
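The random shifting of a fixed-length time interval can be sketched as follows. This is a minimal sketch under stated assumptions: the observed period, the function names `random_window` and `event_time`, and the ISO 8601 `begin/end` formatting are illustrative choices, not the code used in the tests.

```python
import random
from datetime import datetime, timedelta


def random_window(period_start, period_end, length, rng):
    """Pick a window of fixed length at a random offset inside the period."""
    slack = (period_end - period_start) - length
    if slack < timedelta(0):
        raise ValueError("window is longer than the observed period")
    offset = timedelta(seconds=rng.uniform(0, slack.total_seconds()))
    begin = period_start + offset
    return begin, begin + length


def event_time(begin, end):
    """Format the window as an ISO 8601 'begin/end' interval string."""
    return f"{begin.isoformat(timespec='seconds')}/{end.isoformat(timespec='seconds')}"


# Hypothetical observed period; one-day window as for systems A and B.
rng = random.Random(0)
start, stop = datetime(1995, 1, 1), datetime(2020, 1, 1)
begin, end = random_window(start, stop, timedelta(days=1), rng)
interval = event_time(begin, end)
```

A string of this kind could then be used as the temporal filter of a getObservation request; the exact parameter name depends on the request encoding and is deliberately left out here.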

In addition to the different scenarios tested for the different monitoring systems (A, B and C), the tests were conducted on two different WSGI server applications:

mod_wsgi [49] is the Apache module for running Python application services. It is the environment in which istSOS has been tested and is therefore the server suggested by the istSOS community.

gevent [50] is a coroutine-based networking library that was selected as an alternative due to its promising results. In fact, in Piël [51] a benchmark test comparing several WSGI server applications showed that gevent has low server latencies, system loads, CPU usage, response times and error rates.

While the gevent configuration was limited to setting the number of processes to 12, mod_wsgi was configured with the following Apache MPM (Multi-Processing Module) worker module settings: StartServers = 12; MinSpareThreads = 50; MaxSpareThreads = 300; ThreadLimit = 128; ThreadsPerChild = 100; MaxRequestWorkers = 300; MaxConnectionsPerChild = 0. Interested readers can find details of these parameters in the official Apache MPM worker documentation [52].

Response sizes vary between scenarios, while request sizes are constant within a single test since the number of sensors and the data inserted and requested are fixed within the test execution. Values are listed in Table 4.

2.4. Reference for the Evaluation of the Results

To analyse the performance of a service, which is an important aspect of the so-called Quality of Service (QoS), several metrics have been used in the literature [53,54,55], but two of them are particularly prominent: availability and response time. While availability indicates whether a user has access to a web resource, response time shows how fast this happens. During load testing, these two aspects help to assess how satisfied a user will be with the web service.

To quantitatively evaluate the results of the tests, a reference needs to be identified. To this end, we selected as reference the Quality of Service (QoS) defined by INSPIRE in the requirements for network services. The QoS for INSPIRE is defined in the implementing directive 2007/2/EC (regulation (EC) No 976/2009), which specifies three criteria: performance, capacity and availability. While the service performance should guarantee that data are continuously served within given time limits, capacity requires that at least a defined number of simultaneous users be handled without degrading the performance, and availability requires 99% uptime, excluding planned breaks due to service maintenance. Specific values of limits and concurrent users for performance and capacity are defined in dedicated technical guidance documents for the different service types, for example discovery services like CSW, view services like WMS or download services like WFS. Currently, there seems to be no Technical Guidance (TG) on quality of service for SOS; nevertheless, the “Technical Guidance for implementing download services using the OGC Sensor Observation Service and ISO 19143 Filter Encoding” states that the TG specification for WFS can serve as an orientation for implementing QoS for SOS. The TG for download services sets the performance limit, in a normal situation, to 10 seconds to receive the initial response when retrieving metadata (e.g., describe spatial data set) and 30 seconds when retrieving data (e.g., get spatial data set). After that, the downstream speed must be higher than 0.5 MB/s. The capacity criterion is that 10 requests per second are served within the performance limits.
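The performance criterion can be expressed as the share of responses that arrive within the applicable limit. The sketch below uses the 10-second and 30-second limits quoted above; the function name `share_within_limit` and the sample response times are hypothetical and do not correspond to measured values.

```python
# Initial-response limits (seconds) taken from the text above.
LIMITS_S = {"metadata": 10.0, "data": 30.0}


def share_within_limit(times_s, kind):
    """Return the percentage of response times within the limit for `kind`."""
    if not times_s:
        raise ValueError("at least one response time is required")
    limit = LIMITS_S[kind]
    within = sum(1 for t in times_s if t <= limit)
    return 100.0 * within / len(times_s)


# Hypothetical response times (seconds), not measured values.
metadata_times = [0.5, 2.0, 9.9, 12.0]
data_times = [1.0, 15.0, 29.0, 31.0, 45.0]
metadata_pct = share_within_limit(metadata_times, "metadata")
data_pct = share_within_limit(data_times, "data")
```

Percentages of this kind are what the tables of requests completed within given times report for each combination of request, number of concurrent users and WSGI server.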

3. Results

The presented results of the load testing relate only to the requests that are repeated in time (therefore excluding the registerSensor request, executed only once per sensor at database initialisation). Hereinafter, we refer to the different SOS requests with the following acronyms: GC (getCapabilities), DS (describeSensor), GO (getObservation) and IO (insertObservation).

Test results related to a specific request and number of concurrent users are referred to by the acronym of the request followed by the number of concurrent data consumers (e.g., GC500 for the test of the getCapabilities request with 500 concurrent users). Finally, specific tests conducted with a selected WSGI server are designated with the suffix “m” for mod_wsgi and “g” for gevent, so DS200m refers to the results of the describeSensor request with 200 concurrent data consumers using the mod_wsgi server application.
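The labelling convention can be made explicit with a small parser. This is a minimal sketch: the mapping of GO and IO to getObservation and insertObservation is the one implied by the way the labels are used in the results, and the function name `parse_label` is hypothetical.

```python
import re

REQUESTS = {
    "GC": "getCapabilities",
    "DS": "describeSensor",
    "GO": "getObservation",
    "IO": "insertObservation",
}
SERVERS = {"m": "mod_wsgi", "g": "gevent"}
LABEL = re.compile(r"^(GC|DS|GO|IO)(\d+)([mg])?$")


def parse_label(label):
    """Split a label such as 'DS200m' into request, users and server."""
    match = LABEL.match(label)
    if match is None:
        raise ValueError(f"not a valid test label: {label!r}")
    acronym, users, suffix = match.groups()
    server = SERVERS[suffix] if suffix else None  # no suffix: both servers
    return REQUESTS[acronym], int(users), server
```

For example, `parse_label("DS200m")` yields the describeSensor request, 200 concurrent data consumers and the mod_wsgi server, while a label without suffix such as `GC500` leaves the server unspecified.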

3.1. Case A: Air-Quality Monitoring Network in Ticino

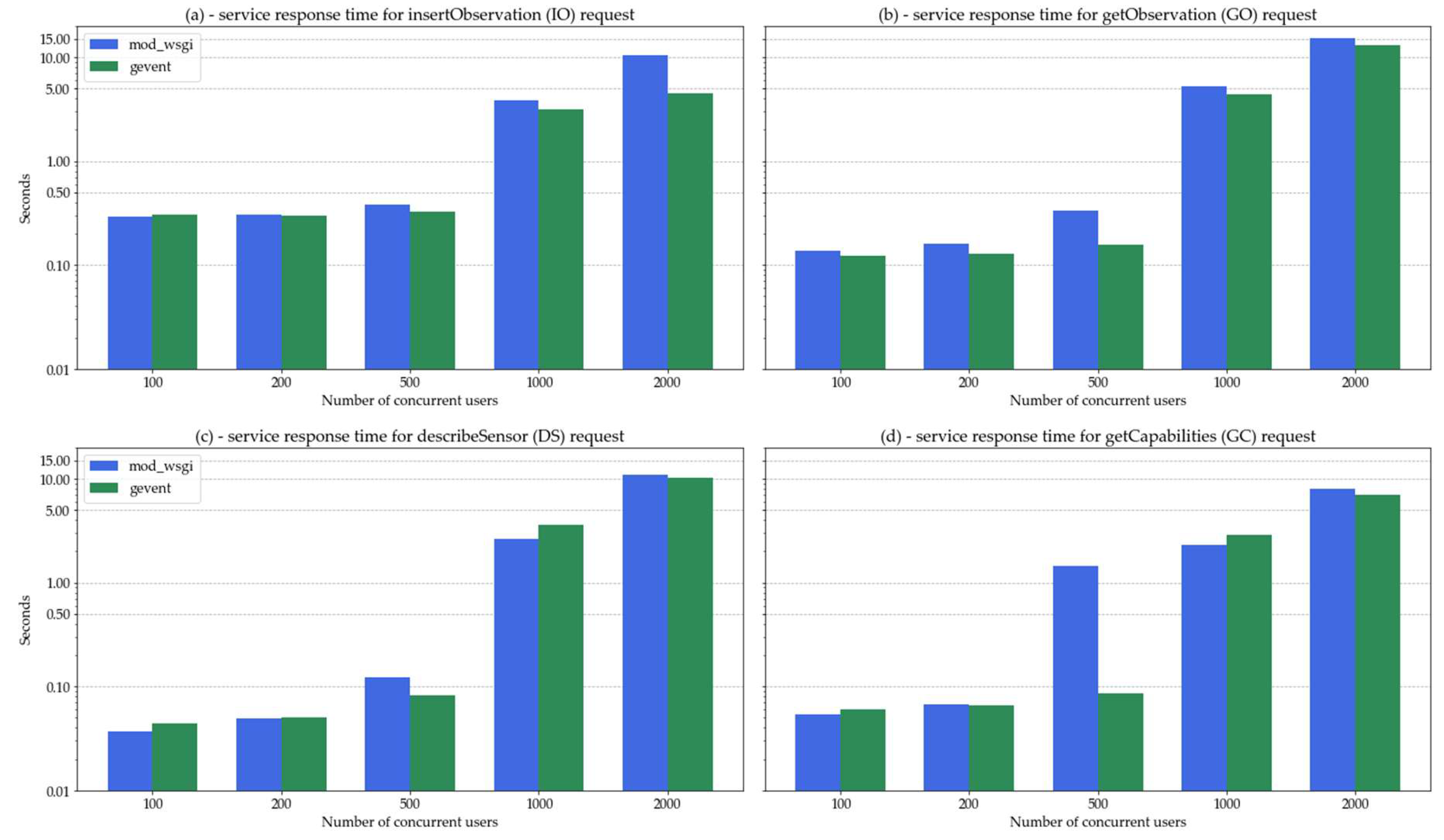

Average response times for the different SOS requests are illustrated in Figure 3 in logarithmic scale. Table 5 reports the service loads of the tests, in terms of requests per second. In general, we can observe two different behaviours across all the requests: fast responses up to 500 concurrent data consumers and exponentially slower responses for 1000 and above. The IO request response time up to 500 users is below 1 second (from a minimum of 0.2 to a maximum of 0.98), while with 2000 users the registered minimum is 0.2 seconds and the maximum is 67.35 seconds using mod_wsgi. GO is the request that shows the worst performance: between 0.03 and 2.4 seconds up to 500 users and a maximum of 129 seconds with 2000 users and mod_wsgi. Table 6 and Table 7 report the percentage of IO and GO requests completed within given times for the different configurations of concurrent users and WSGI server.

GC responses up to 500 users are between 0.05 and 0.98 seconds (0.5 with gevent), while with more concurrent data consumers they reach a maximum of 9.39 seconds (0.3 with gevent). DS responses are between 0.03 and 0.88 seconds up to 500 users and then increase up to 124.46 seconds in the worst case (29.31 with mod_wsgi). Table 8 and Table 9 report the percentage of GC and DS requests completed within given times for the different configurations of concurrent users and WSGI server.

To compare mod_wsgi with gevent, we do not consider the 2000m test, which produced errors that may affect the registered response time. In case A, in terms of relative percentages, gevent outperformed mod_wsgi by 10% on average. In particular, better performance was constantly registered in GO and mostly in IO requests, while a dual behaviour (faster/slower) was detected in GC and DS. The GC500 request registered the largest difference in average response time, with gevent 94 times faster than mod_wsgi.

3.2. Case B: Swiss Meteorological Monitoring Network

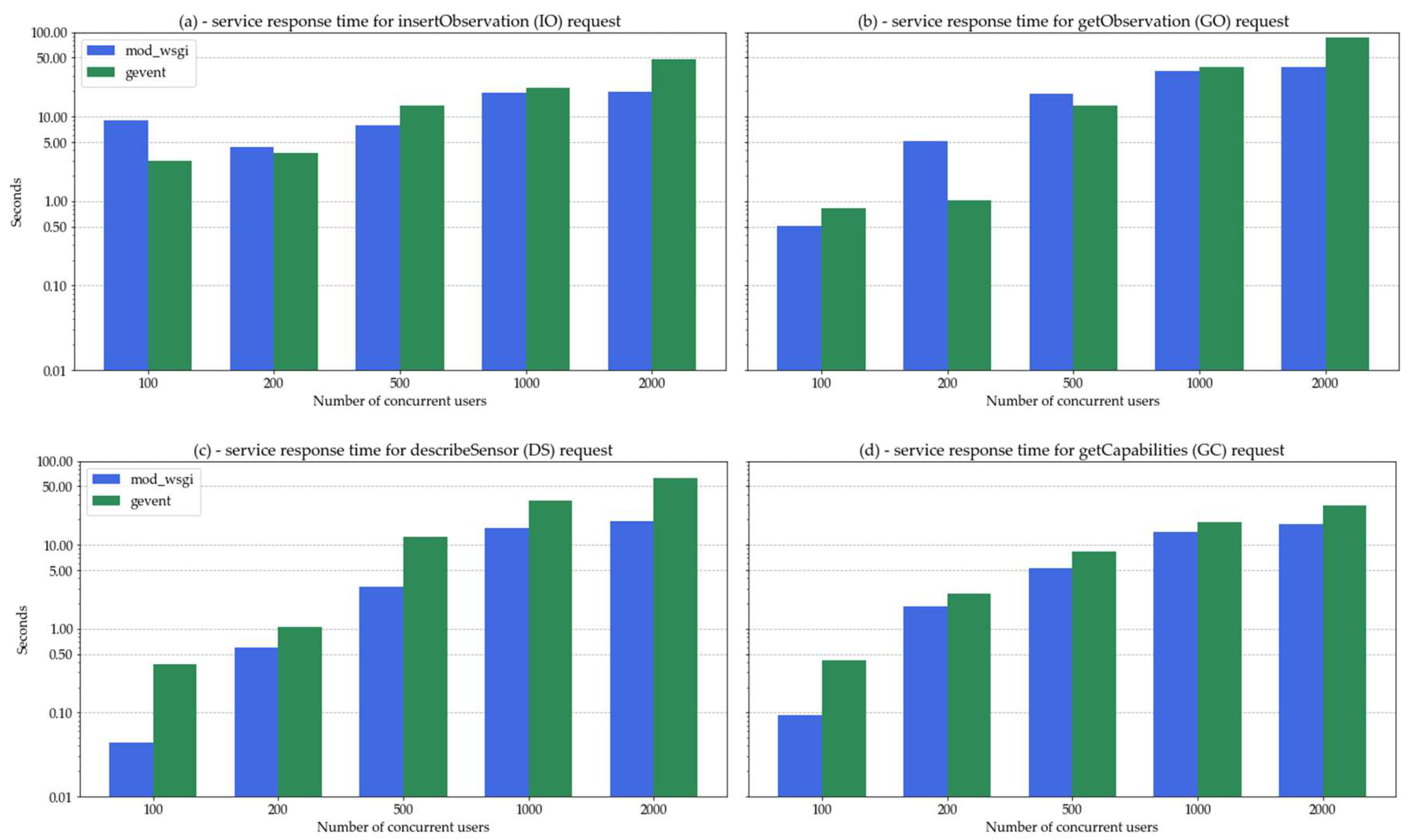

In Figure 4, the average response times for the different requests, numbers of concurrent users and WSGI applications are represented in logarithmic scale. The service loads of the tests, in terms of requests per second, are reported in Table 10.

Similarly to case A, an exponential decrease in performance with growing numbers of concurrent users is detected from 500 concurrent users onwards. The response time to IO requests shows an average of 12 seconds. For IO100 and IO200 the average is about 3 seconds and gevent performed better. GO average response times are slow, and only GO100 and GO200g are within one second. GO2000g shows the worst results, with an average response of 87 seconds and a maximum of 289.71 seconds. Table 11 and Table 12 report the percentage of IO and GO requests completed within given times for the different configurations of concurrent users and WSGI server.

GC requests are executed on average in 7.8 seconds with mod_wsgi and 11.4 seconds with gevent. The slowest recorded response is about 3 minutes, while the slowest average is that of GC2000g, with 29.1 seconds. Average DS responses are below 1 second for 100 and 200 users but quickly get slower with more concurrent users, generally exceeding 10 seconds and reaching up to 1 minute. The worst absolute response time is registered for DS2000g and is about 2.5 minutes. Table 13 and Table 14 report the percentage of GC and DS requests completed within given times for the different configurations of concurrent users and WSGI server.

Similarly to case A, in comparing the two WSGI servers we do not consider the 1000m and 2000m tests, which presented failures that may affect the recorded response time. Comparing the remaining tests, we see that gevent is on average 2.27 times slower than mod_wsgi. This is due to a generally worse performance in all the cases, particularly in the DS cases; only in a few cases (IO100, IO200 and GO500) did gevent register a faster response time.

3.3. Case C: Springs and Wells Monitoring Network in Ticino

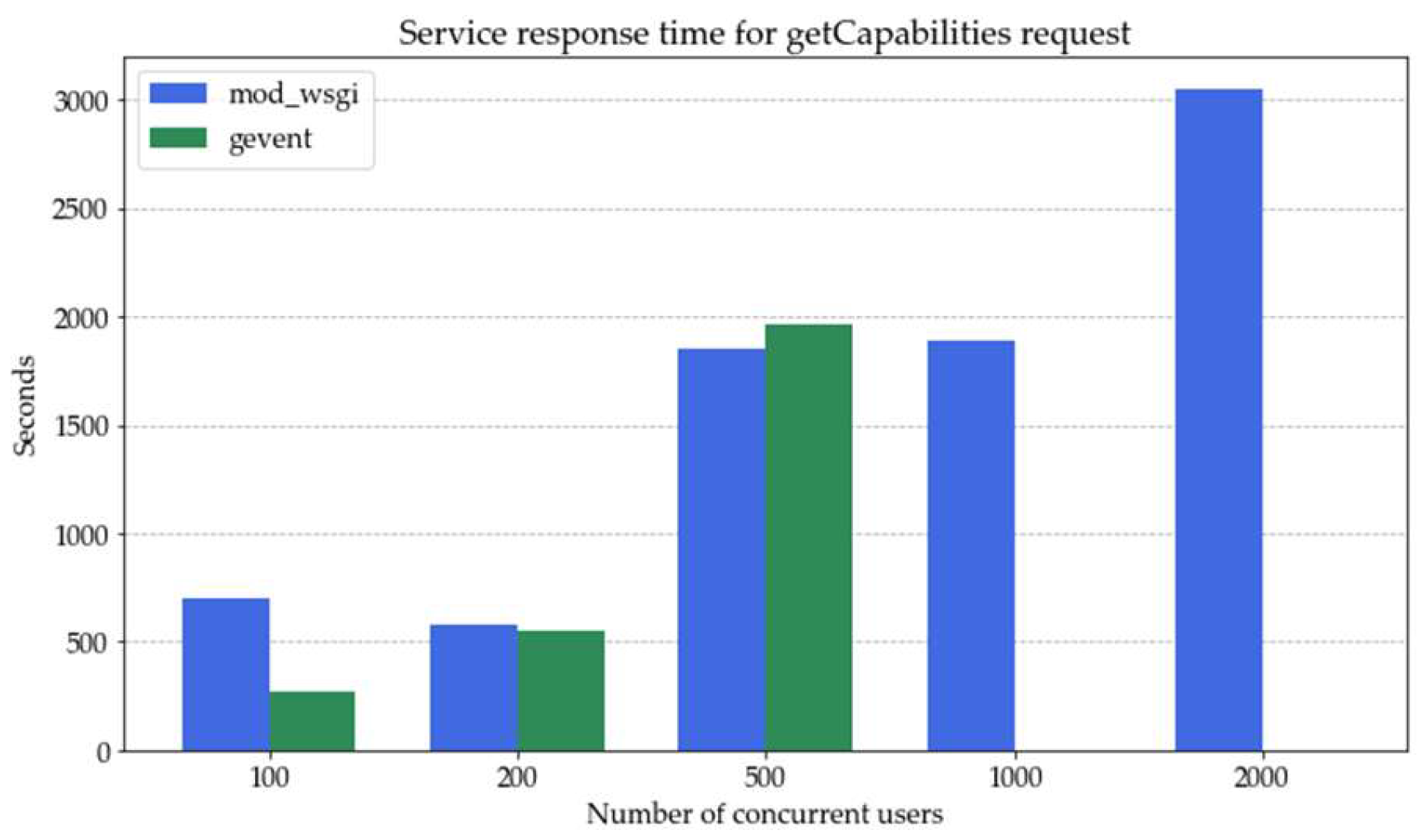

In case C, the insertObservation operation was not included in the test since the data registration frequency is 1 month and thus the frequency of this operation is clearly negligible with respect to the others. As a result, only the data consumer user profile was tested in case C. In Figure 5, the average response times for the different requests, numbers of concurrent users and WSGI applications are represented in logarithmic scale.

Table 15 shows the service loads of the tests, in terms of requests per second and rate of failure. gevent registers a higher rate of failure, but in the cases without errors it responded much faster (83 times on average) than mod_wsgi.

In the pipeline of the data consumer request workflow (see Figure 2), GC is a blocking request. In fact, if no answer is received, the user has no information with which to explore specific sensors or request data. The GC requests produced unacceptably long response times (see Figure 5): close to 10 min on average for 100 and 200 concurrent users, rising to about 26 min with 500 users. With more concurrent users, the time to obtain a response continues to increase with mod_wsgi, while gevent was not able to provide any response in the timeframe of the test (see n.a. values in Table 15).

With both

mod_wsgi and

gevent the percentage of GC response failures for 500 users is about 50% and very close to 100% for 1000 and 2000 users. These percentages of error are invalidating the test results for the DS and GO requests for 500, 1000 and 2000 and thus they are omitted in

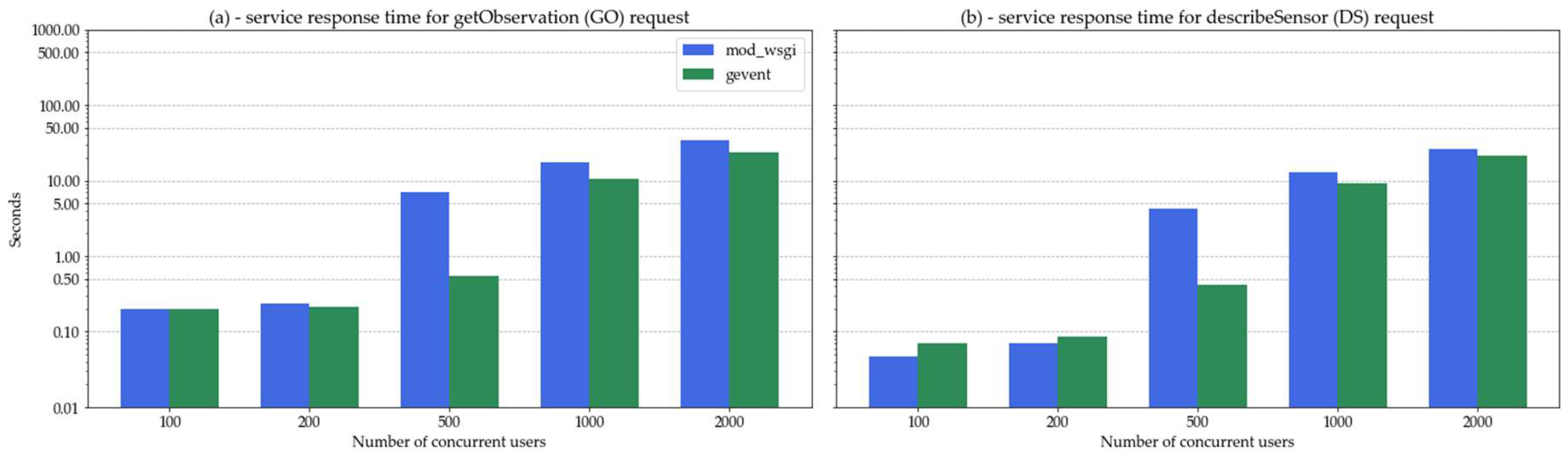

Figure 6.

In both these cases, gevent registered faster responses. For example, it was 20 times faster for DS100 and DS200 (5 sec vs 100 sec) and 2 times faster for GO100 and GO200. In the case of DS500, gevent outperformed mod_wsgi by a factor of 64 (13 vs 887 seconds), and in the case of GO500 by a factor of 30.

Due to the previously discussed bias introduced by the high error rate, this load test was re-executed excluding the GC requests. In this case, the lists of procedures and observed properties were hardcoded in the testing scripts to overcome the lack of information needed to perform DS and GO requests. The results of this further test are reported in Table 16 and Figure 7.

The response errors dropped drastically and the number of executed requests per second increased. On average, not considering tests with failures, gevent registered responses two times faster, a ratio that grew with the number of concurrent users (for example, 10 times faster in the DS and GO 500 tests). Table 17 and Table 18 report the percentage of DS and GO requests completed within given times for the different configurations of concurrent users and WSGI server.

3.4. Hardware Performance

The system performance metrics have been monitored and recorded during the test execution by means of

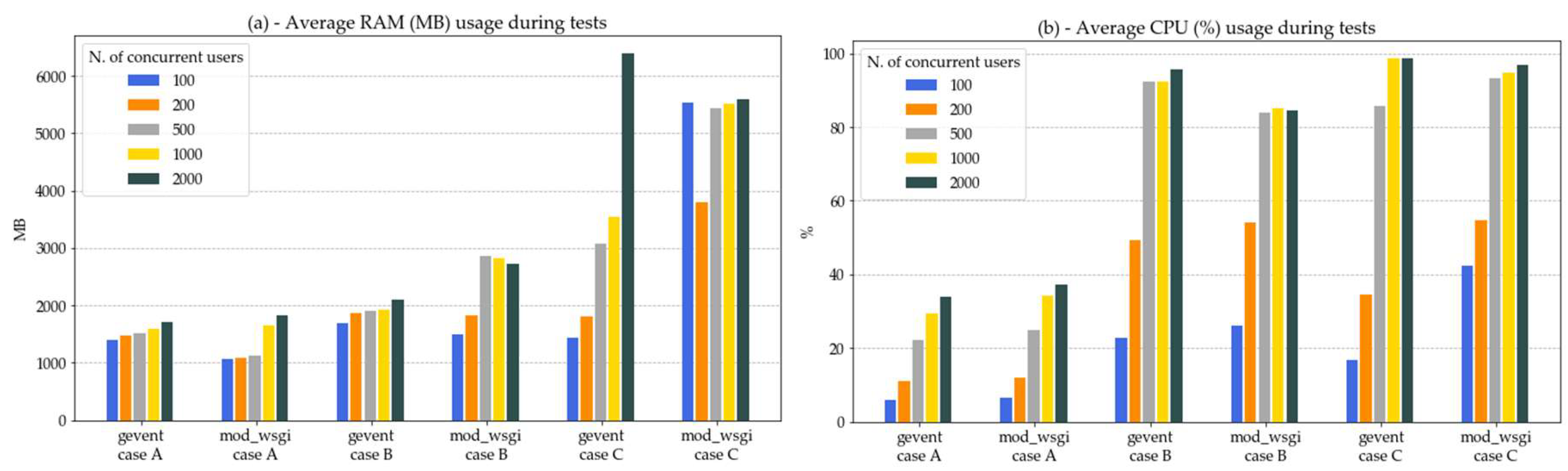

dstat. As illustrated in

Figure 8, only a minimal part of the 32GB available were used. In case A, it was limited to less than 2 GB; in case B less than 3 GB and in case C it reached a maximum of 6.4 GB.

The usage rates of the 6 available processors during the tests are plotted in Figure 8. In case A, usage never exceeded 40%. In case B, in tests with more than 200 users, it gets close to 90%: about 95% for gevent and 85% for mod_wsgi. In case C, processors are almost saturated with more than 500 users.

Across all the tests, the registered disk I/O write speed had a mean of 2,049.32 ± 1,915.18 KB/s and a maximum of 77,744.00 KB/s, while the read speed had a mean of 32,694.31 ± 11,609.67 KB/s and a maximum of 477,288.00 KB/s. All these values are below the maximum SSD speed of 520,000 KB/s. The temporal behaviour of the I/O write speed shows, in all the tests, an almost constant rate interleaved with high peaks corresponding to insertObservation requests. The I/O read speed is always regular. As a representative example of this behaviour, the test on case B with 500 users and gevent is illustrated in Figure 9.

3.5. WSGI Servers Performance

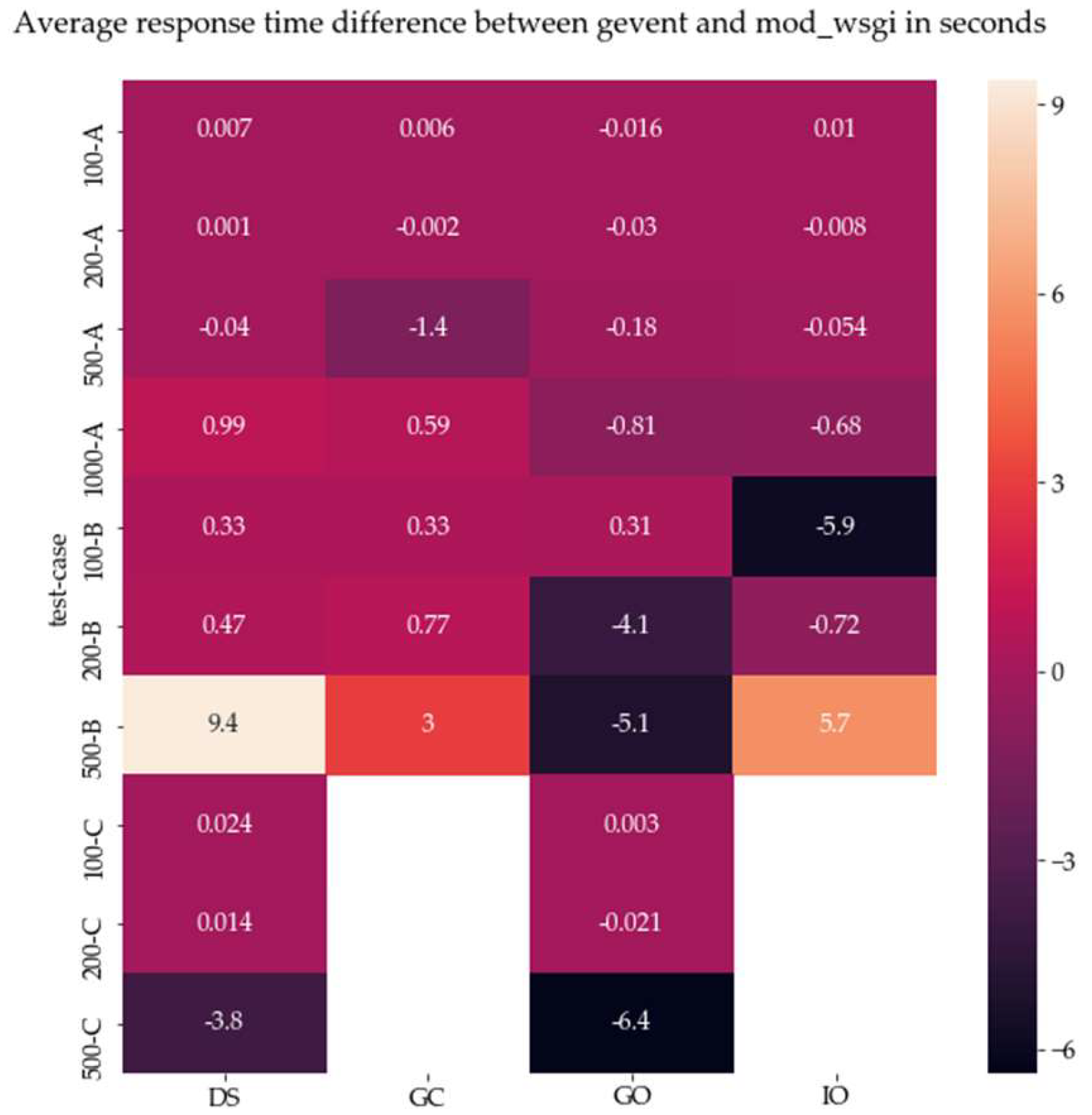

In the experiments, two different WSGI server applications were used, and their responses by test case and request are reported in the previous sections. Figure 10 shows the difference in average response time between the gevent and mod_wsgi WSGI servers registered during the load testing in cases A, B and C with 100, 200, 500 and 1000 users. Tests where failures were registered are not considered, as they may affect the registered performance. In case C, getCapabilities (GC) and insertObservation (IO) were not tested.

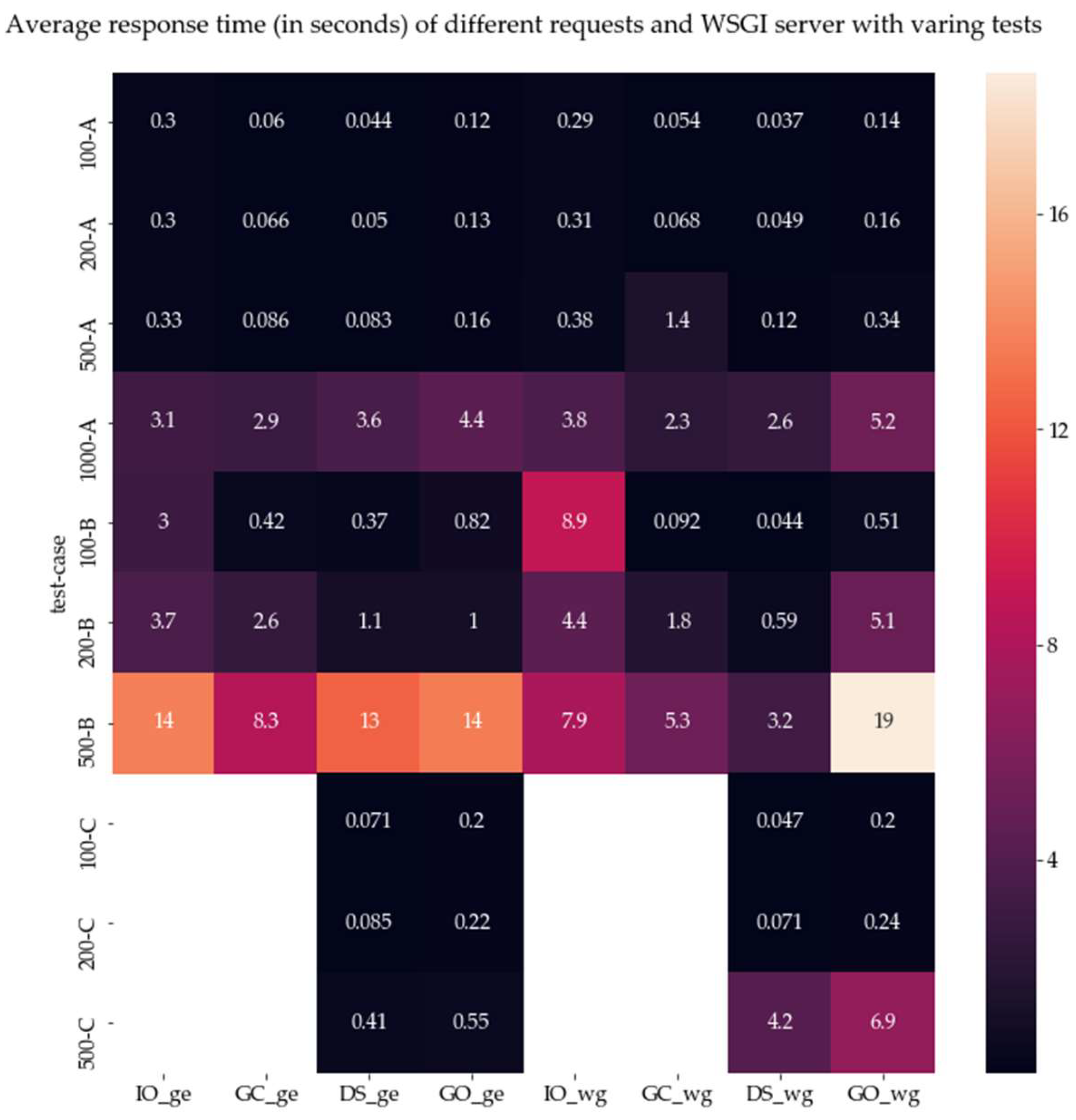

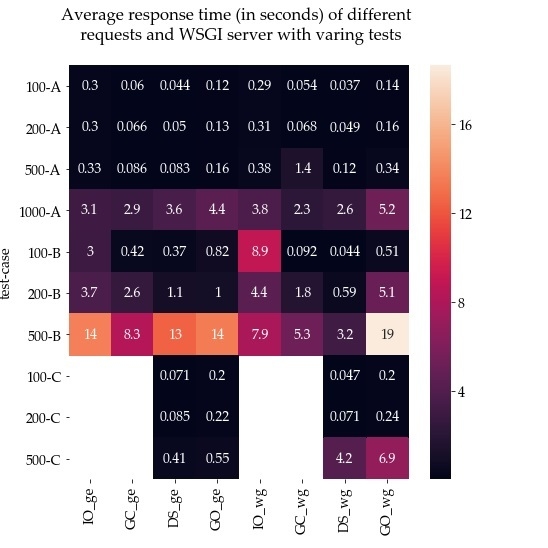

In Figure 11, the average response times for the different tests are illustrated to provide a general overview of all the tests. In this figure, as discussed previously in the paper, the test cases that registered errors are omitted since the errors could have affected the actual response time.

4. Discussion

As described in the methodology section, in this section the results are evaluated with respect to the Quality of Service (QoS) defined by INSPIRE in the requirements for network services and the respective Technical Guidance (TG) documents.

If we compare the SOS’s getCapabilities and describeSensor requests with INSPIRE’s Get Download Service Metadata, we can observe that in case A, the mid-size monitoring system, istSOS on average satisfies the 10-second response time limit for all the concurrency scenarios. If we look at the percentage of requests exceeding this limit, we can clearly see that this performance is guaranteed for 100% of requests only up to 500 concurrent users, for 98% up to 1000 users and for only 50% with 2000 users. In case B, characterized by a large number of observations, conformance with this limit is never guaranteed for 100% of requests if gevent is used. Nevertheless, with the exception of a low percentage of requests (from 1% to 10%), it is satisfied with up to 200 users using gevent and 500 users using mod_wsgi. In case C, characterized by a large number of sensors, in the test that excluded the getCapabilities request due to its high error rate, the performance limit is satisfied with up to 500 users using both WSGI servers: only 1% of the responses in DS500m exceeded the limit, by 12%.

Similarly, if we compare the SOS’s getObservation request to INSPIRE’s Get Spatial Data Set request, we can see that in case A istSOS satisfies the performance limits set by INSPIRE for data retrieval in all load scenarios up to 1000 users. In the GO2000g scenario only 1% of the requests exceeded the limit, while in the GO2000m scenario only 80% of the requests were below 30 seconds. In case B, the service fulfils the INSPIRE performance requirements for the scenarios with 100 and 200 concurrent users regardless of the WSGI server used. With 500 users the limit is mostly satisfied (up to 95% of the requests with gevent and up to 90% with mod_wsgi), while with 1000 and 2000 users it is never fulfilled. In case C with GC excluded, the limit is fulfilled up to 1000 users, with the exception of 1% of the requests in the GO1000m scenario. With 2000 users only gevent partially satisfied the limit, and only for 66% of the requests.

There is no equivalent in INSPIRE services for insertObservation but, being a transactional request that dynamically adds data to the system, we could, as a first approximation, apply the same 30-second limit used for data requests. Under this assumption, the load test results show that istSOS satisfies the INSPIRE performance limits in all the load scenarios of case A, except for 10% of the requests in scenario IO2000m. In case B, this limit is met only up to 500 users, and in the case of IO500g only for up to 90% of the responses. With mod_wsgi and 1000 and 2000 users, only 80% of the responses are below the limit, and with gevent, only with 1000 users was the system able to keep 66% of the response times within the INSPIRE performance limit.

Since the tests were performed over a short period of time, it is not possible to draw final conclusions about the robustness of istSOS, intended as the ability of the service to function correctly even in the presence of errors. However, during the entire testing period we registered no downtime despite the presence of errors. The availability during the test period was therefore 100%, since the service was always up. Nevertheless, the availability of the service as perceived by the user is strongly impacted by the rate of failure. In this regard, if we consider the errors as service unavailability, istSOS showed that, if gevent is used as the WSGI server, 99% availability is guaranteed in both cases A and B, while for case C it is guaranteed with the exception of the getCapabilities request. Results obtained with mod_wsgi are on average of worse quality: they satisfy the availability criterion only up to 1000 simultaneous users in case A and 500 in cases B and C.

The capacity limit set by the TG for INSPIRE download services, which identifies the load that the service should be able to sustain while providing a compliant performance, is 10 requests per second (req/sec). The technical guidelines also allow the server not to respond in an orderly fashion to requests exceeding 50 simultaneous requests; in the current study this condition is ignored since the scope of this work is to test the service under high peak usage conditions. From the performance results, we have seen that case A satisfies the performance criteria up to 1000 users, which means a capacity of 56.38 and 53.98 req/sec with mod_wsgi and gevent, respectively (see Table 5). In case B, the performance limits are met with at most 200 users, which corresponds to 11.89 req/sec for mod_wsgi and 15.42 req/sec for gevent (see Table 10). For case C (excluding GC requests), the performance limits are respected up to 500 users, which registered 24.40 and 37.47 req/sec (see Table 15).
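The comparison with the capacity criterion amounts to dividing each measured throughput by the 10 req/sec limit. The sketch below uses only the values quoted in the paragraph above; the assignment of the two case C values to mod_wsgi and gevent, in that order, is an assumption, and the names `THROUGHPUT` and `capacity_margin` are hypothetical.

```python
CAPACITY_LIMIT = 10.0  # requests per second required by the TG

# Throughput (req/sec) quoted in the text, keyed by (case, WSGI server).
# Assumption: the two case C values are listed as mod_wsgi, then gevent.
THROUGHPUT = {
    ("A", "mod_wsgi"): 56.38,
    ("A", "gevent"): 53.98,
    ("B", "mod_wsgi"): 11.89,
    ("B", "gevent"): 15.42,
    ("C", "mod_wsgi"): 24.40,
    ("C", "gevent"): 37.47,
}


def capacity_margin(req_per_sec, limit=CAPACITY_LIMIT):
    """Return how many times the measured throughput exceeds the limit."""
    return req_per_sec / limit


margins = {key: round(capacity_margin(v), 2) for key, v in THROUGHPUT.items()}
all_compliant = all(m >= 1.0 for m in margins.values())
```

All six values exceed the limit, with the smallest margin (about 1.2 times the limit) in case B with mod_wsgi.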

Looking at the usage of the hardware resources during the test execution, we can see that the configuration of the server was adequate to support the tested cases. In fact, with the exception of CPU usage in a few cases (with 2000 concurrent users), the physical server limits were not reached. It is interesting to see how the disk I/O is particularly stressed when the sensors register new observations (insertObservation requests). In fact, contrary to what might be expected, data retrieval uses less I/O than data insertion. To better understand this apparently strange behaviour, the database log files of case B with 500 concurrent users were analysed as a representative case. The total time of the queries for each specific SOS request was evaluated and is reported in Table 19. From these statistics, we can clearly see that the execution time of an IO request is on average an order of magnitude higher than that of a GO request.

The main reason for the difference is the data integrity checks that the software performs before inserting new data. In fact, at each IO request, before inserting the data, istSOS:

authenticates the sensor through a unique identifier provided at registration;

verifies that the properties observed are exactly those specified at sensor registration;

verifies that the new data have a later observation time than the latest registered observation for the same sensor.

These three operations take on average 98.8% of the total duration of the request’s queries, and the last check is particularly slow because it executes a “SELECT MAX()” type of query over the time series. The queries of a GO request, which has no data integrity checks but needs to compose the XML response in memory, cost on average 13.3% of the queries of an IO request.
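The three checks listed above can be sketched as a single validation function. This is a minimal sketch, not the istSOS implementation: the class `RegisteredSensor`, the function `check_insert` and the sample registry are hypothetical, and the latest observation time is kept in memory here purely for illustration, whereas the text above indicates that istSOS obtains it with a query over the time series.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class RegisteredSensor:
    """Hypothetical in-memory record of a registered sensor."""
    identifier: str
    properties: frozenset
    latest_time: datetime  # time of the newest stored observation


def check_insert(registry, identifier, properties, times):
    """Run the three checks; return None if accepted, else the reason."""
    sensor = registry.get(identifier)
    if sensor is None:
        return "unknown sensor identifier"
    if frozenset(properties) != sensor.properties:
        return "observed properties differ from registration"
    if min(times) <= sensor.latest_time:
        return "observations are not newer than the stored series"
    return None


# Hypothetical registry with a single sensor.
registry = {
    "abc123": RegisteredSensor(
        "abc123", frozenset({"air-temperature"}), datetime(2019, 1, 1, 12, 0)
    )
}
```

The order of the checks matters for cost: the identifier and property comparisons are cheap, while the comparison with the latest stored observation is the step that the text identifies as the slowest.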

Since we recognize that, in general, hardware configuration affects the results of load testing experiments, we tried to minimize this impact by avoiding the use of virtual systems, using a high-speed network and running the requests from a physically different machine. While we cannot state that the hardware did not affect the results, we can safely assume that it does not play a crucial role in these tests. In fact, the recorded hardware usage showed that the system was adequately dimensioned, with RAM that was never fully used and CPU usage that was close to (but below) 100% only in case C with 1000 and 2000 users.

Analysing Figure 10, we can see that in general gevent and mod_wsgi, the two WSGI servers we tested, are equivalent for low concurrency. However, for the getObservation request gevent is faster and presents the largest performance differences with respect to mod_wsgi. On the contrary, in case B (larger number of observations) with 500 concurrent users, mod_wsgi outperformed gevent in all the other requests (DS, GC and IO). A possible explanation for this behaviour is that gevent, which is based on coroutines, is better at handling blocking I/O operations like the GO request, while mod_wsgi, which uses a multi-process and multi-thread approach, executes faster requests like GC, IO and DS without interference from blocking I/O requests.

The three varying parameters of the tests are the number of sensors, the number of stored observations and the number of concurrent users. From the experiments, it is clear that the increase in the number of observations and sensors, from case A (105 million observations and 20 sensors) to case B (1.7 billion observations and 130 sensors), produces slower responses regardless of the WSGI server used. Similarly, the increase in the number of sensors and the reduction in the number of observations, from case A (20 sensors and 105 million observations) to case C (5100 sensors and 122 thousand observations), produced slower response times. This indicates that both the increase in stored historical observations and the increase in deployed sensors negatively affect the istSOS performance. While it is not possible to separate the negative contributions of the two components to performance, it is clear that the number of stored data has a greater negative impact than the number of sensors. In fact, comparing the change from case A to case C with the change from case A to case B, the number of sensors increases 50 times while the number of observations diminishes 500 times, and the performance degrades 5 times.

5. Conclusions

With the presented research, the authors have conducted a quantitative test, under different conditions, of the istSOS software solution, which implements the SOS standard from the OGC. The objective of the test was to understand the capabilities of the software, under an ordinary installation, to meet the requirements of interoperable temporal and real-time GIS posed by the current state of the art in environmental modelling, visualization and Earth observation. To this end, the test analysed the service behaviour under different realistic monitoring networks, numbers of concurrent users and WSGI server types. The synthetic networks used in the test were inspired by existing deployed systems and specifically mimicked a regular monitoring network with 20 sensors and 100 million observations, a monitoring network with more stations and data, composed of 130 sensors and 1.7 billion measurements, and a monitoring network with a large number of sensors (5100) and limited data (1.7 million). Scenarios with 100, 200, 500, 1000 and 2000 concurrent users were tested for each network and for two different WSGI servers (mod_wsgi and gevent). The service metrics monitored and registered for each testing scenario and for the different SOS requests were: served requests per second, response times and errors. Additionally, the server resource consumption in terms of CPU, RAM and I/O was monitored and registered.

The presented results show that istSOS is able to meet the INSPIRE requirements for a download service in terms of quality of service, with some limitations in the case of a high number of concurrent users. This limit is due to the demonstrated degradation of the software performance with spatial scaling (increasing number of deployed sensors) and temporal scaling (increasing length of time series). In particular, in the case of spatial scaling, istSOS produced a large number of errors because of the large size of the getCapabilities response document, which overloaded the service and consequently caused a large number of time-out failures. This study has also demonstrated that gevent produces fewer errors than mod_wsgi and is able to achieve higher throughput (executed requests per second). Specifically, gevent showed better performance in dispatching observations by executing getObservation requests, while mod_wsgi is better at dispatching metadata (getCapabilities and describeSensor requests).

The results also demonstrate that, with a high number of sensors and high concurrency, the getCapabilities request undermines the correct working of istSOS. In fact, its extremely high response time causes timeout errors that block the server from executing other requests.

The study shows that the istSOS strategy of implementing data integrity checks before registering a new observation comes at a high cost. This cost may represent a major obstacle in the case of monitoring systems characterized by high frequency or a high number of sensors and requiring very fast data insertion to satisfy the needs of real-time geospatial applications.

As a general remark, the authors underline the fact that the QoS limits set for INSPIRE’s download service are designed for serving data in general support of the formulation, implementation, monitoring and evaluation of policies and activities that have a direct or indirect impact on the environment. In this regard, this research has demonstrated that istSOS is suitable for most scientific or operational applications where data are accessed by tens of users with peaks of one hundred in emergency cases [14,39]. However, it also demonstrated that its capacity to scale with the increasing size of the monitoring network, either in terms of number of stored observations or of sensors, is limited. The degrading performance makes istSOS, in a standalone installation, not capable of providing a sufficient quality of service for Internet of Things (IoT) applications, where thousands of concurrent users directly accessing the data and thousands of connected sensors are expected.

From the software development perspective, the test has shown the challenges to be addressed by future versions: improving the response speed and the software capacity. The former would require some code optimisation, particularly to speed up the query execution time for data integrity checks. The latter would require the implementation of a strategy to scale istSOS at the application level. This could be addressed in future istSOS versions by exploring a microservices architecture and asynchronous programming.