Evaluating UAV Flight Parameters for High-Accuracy in Road Accident Scene Documentation: A Planimetric Assessment Under Simulated Roadway Conditions

Abstract

1. Introduction

2. Literature Review

2.1. Flight Altitude

2.2. Camera Angle

2.3. Image Overlap

2.4. Summary of Flight Parameters

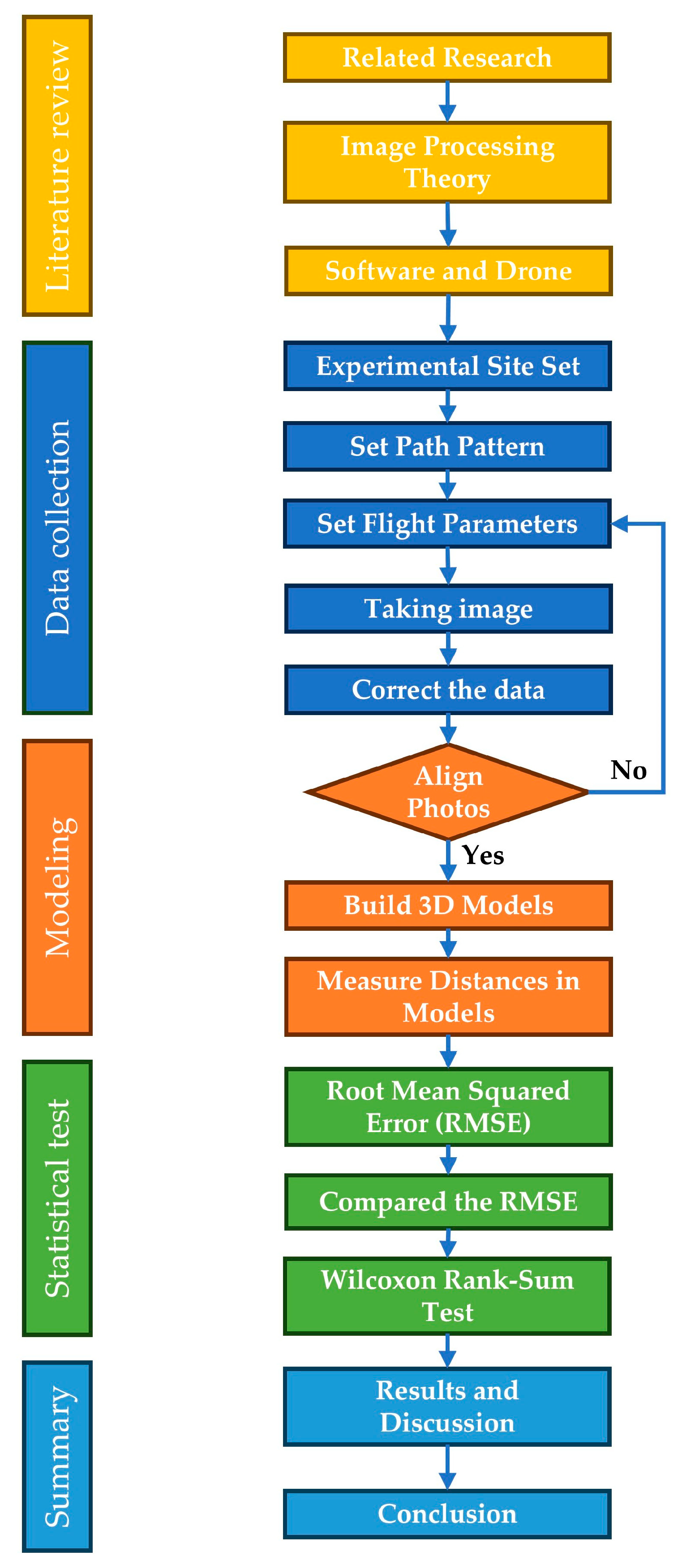

3. Methodology

3.1. Research Design

3.2. UAV Equipment

- Shooting Angle: Course Aligned;

- Capture Mode: Hover and Capture at Point;

- Flight Course Mode: Inside Mode.

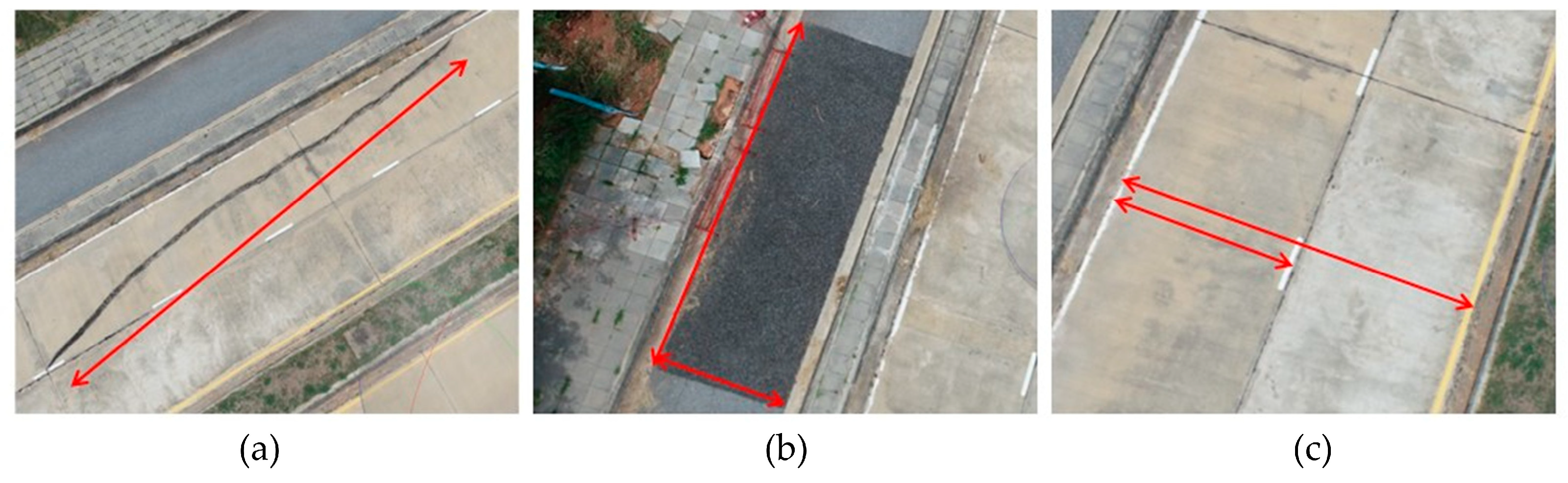

3.3. Study Area and Ground Control

3.4. Data Collection and Image Acquisition

3.5. Three-Dimensional Model Generation and Accuracy Assessment

3.6. Statistical Comparison Using Wilcoxon Rank-Sum Test

4. Results

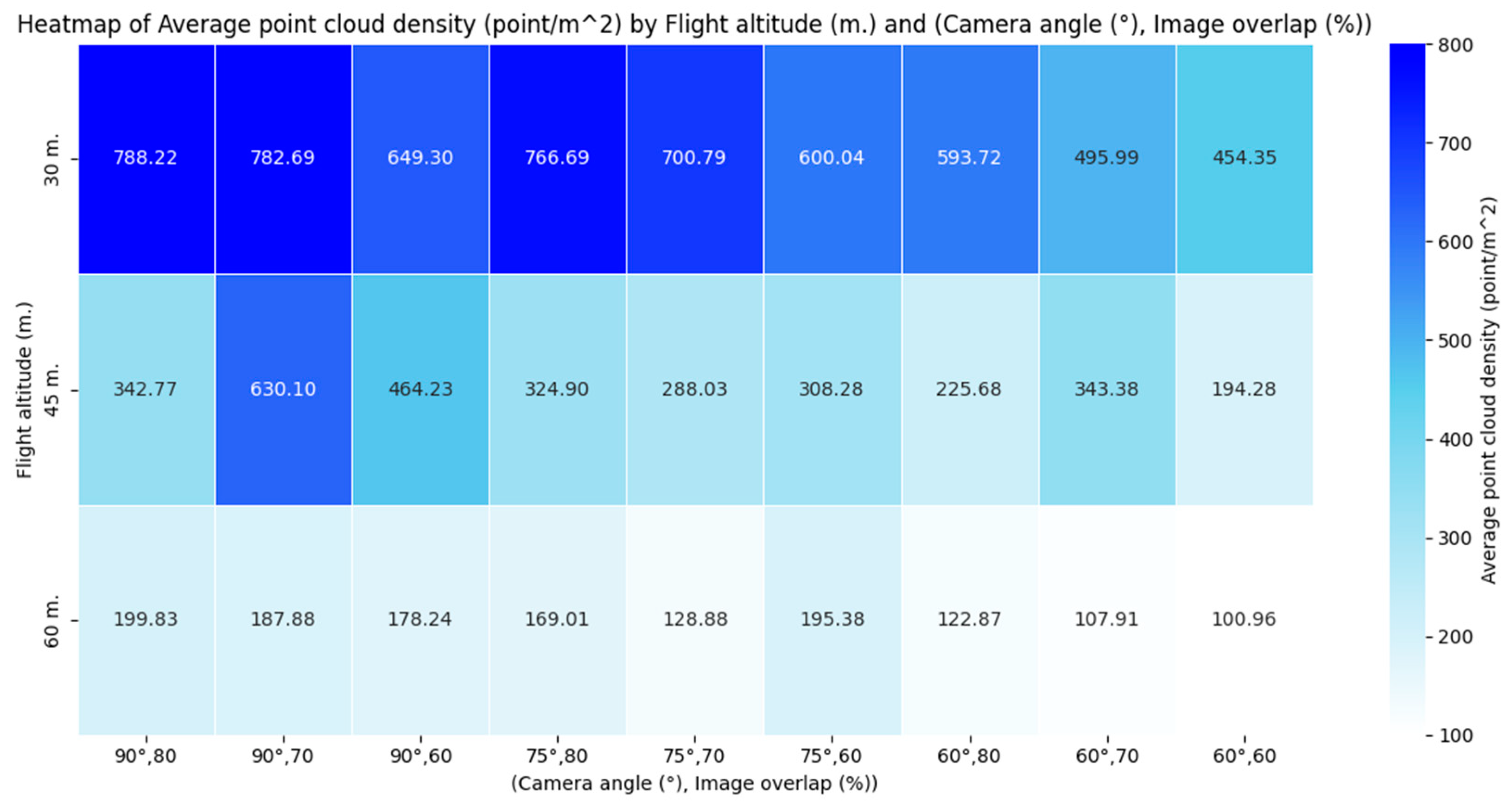

4.1. Overview of Model Generation

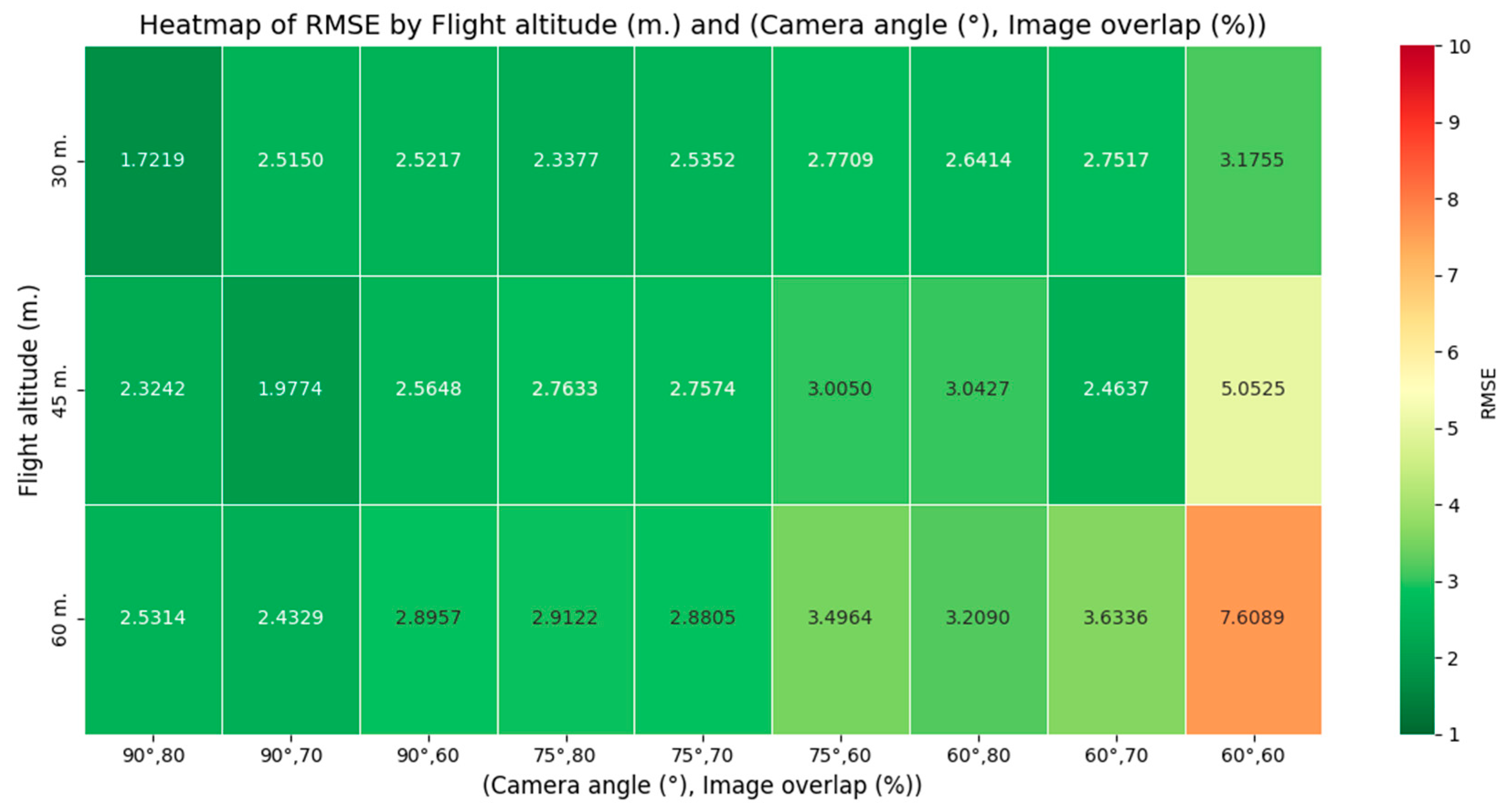

4.2. Results of Flight Altitude

4.3. Results of Camera Angle

4.4. Results of Image Overlap

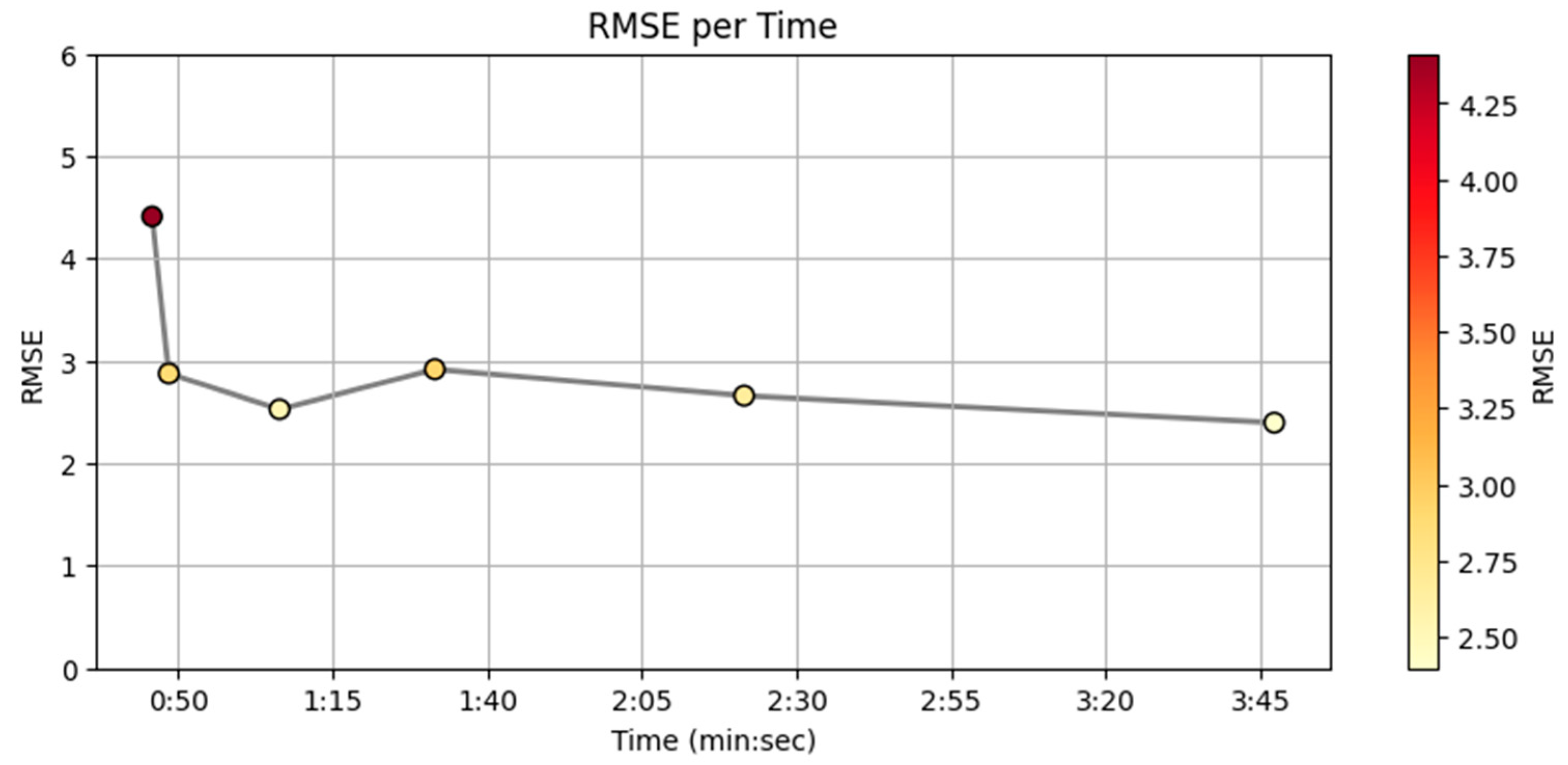

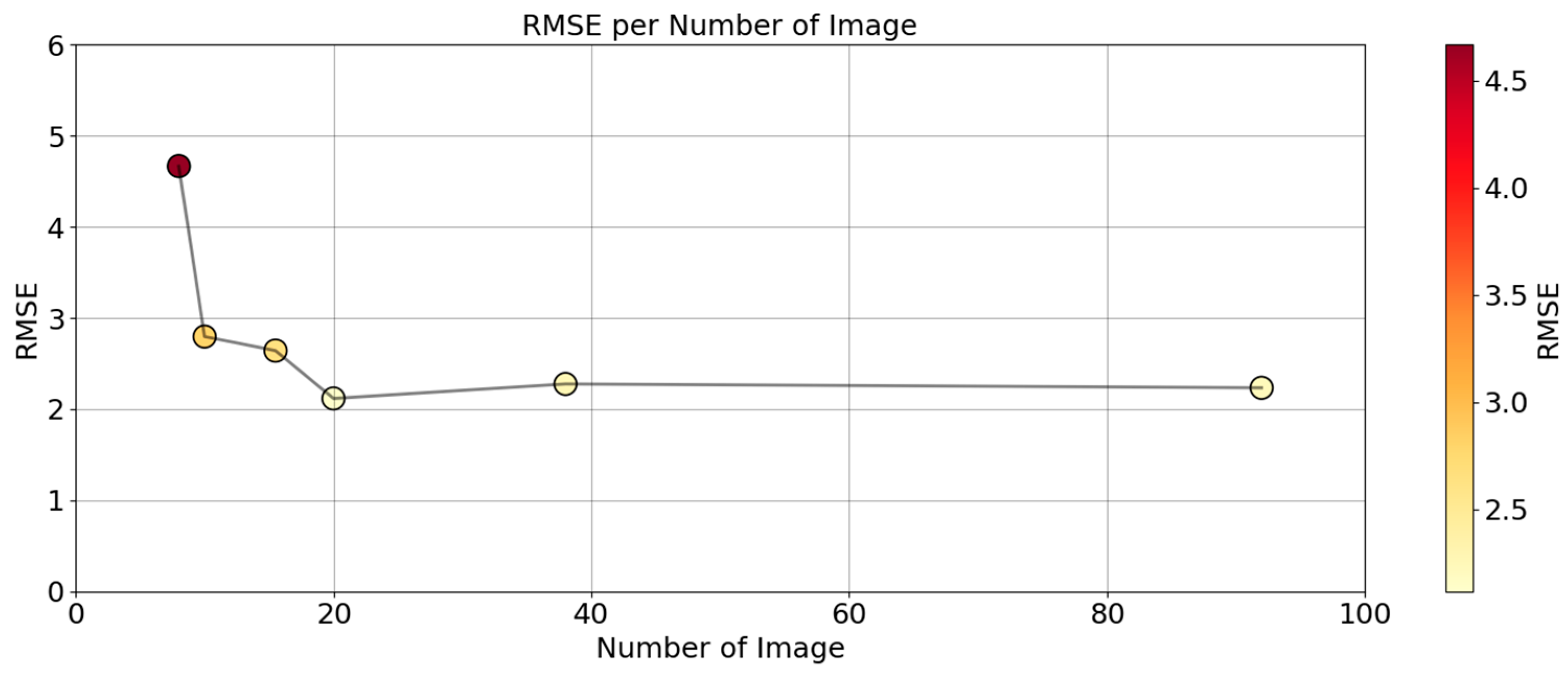

4.5. Relationship Between Number of Photographs, Flight Duration, and RMSE

4.6. Results of Wilcoxon Rank-Sum Test

5. Discussion

5.1. Influence of Flight Altitude

5.2. Influence of Camera Angle

- Reduction in geometric distortion: Including oblique imagery helps reduce the systematic errors known as “doming” or “flattening” that typically arise in nadir-only image blocks. Studies show that combining nadir and oblique images improves reconstruction completeness and model geometry, particularly in areas with vertical structures or complex terrain [37,46];

- Improved detail in vertical features: Off-nadir images (20–35° tilt) improve the accuracy of building façades and roadside vertical elements by approximately 10–20% compared with nadir-only captures. Experiments across more than 150 flight scenarios confirm that hybrid image sets combining nadir and oblique views significantly improve accuracy at the centimeter level [53].
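The sketch below relates the 20–35° tilt range above to the camera angles tested in this study (90°, 75°, and 60°). It converts each angle to an off-nadir tilt and computes the slant range to the ground over flat terrain. It assumes, without confirmation from the text, that the study's angles are gimbal pitch angles measured from the horizontal, so 90° is nadir. The function names are illustrative only.

```python
import math

# Assumption (not stated in the text): the study's camera angles are pitch
# angles measured from the horizontal, so 90° means the camera points straight down.
STUDY_ANGLES_DEG = (90, 75, 60)


def off_nadir_tilt(angle_deg: float) -> float:
    """Convert a pitch angle from the horizontal (90 = nadir) to off-nadir tilt."""
    return 90.0 - angle_deg


def slant_range(altitude_m: float, tilt_deg: float) -> float:
    """Camera-to-ground distance along the optical axis, assuming flat ground."""
    return altitude_m / math.cos(math.radians(tilt_deg))


def in_hybrid_range(tilt_deg: float, low: float = 20.0, high: float = 35.0) -> bool:
    """True if the tilt lies inside the 20–35° off-nadir range cited above."""
    return low <= tilt_deg <= high


if __name__ == "__main__":
    for angle in STUDY_ANGLES_DEG:
        tilt = off_nadir_tilt(angle)
        print(f"{angle} deg -> tilt {tilt:.0f} deg, slant range at 30 m: "
              f"{slant_range(30, tilt):.2f} m, in 20-35 deg range: {in_hybrid_range(tilt)}")
```

Under this assumption, only the 60° setting (a 30° tilt) falls inside the cited range, while 75° corresponds to a 15° tilt. At a 30 m flight altitude, the distance along the optical axis grows from 30.00 m at nadir to about 34.64 m at a 30° tilt.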

5.3. Influence of Image Overlap Percentage

5.4. Influence of Flight Duration and Number of Images on RMSE

5.5. Statistical Confirmation via Wilcoxon Rank-Sum Test

5.6. Practical Implications and Recommendations

6. Conclusions and Recommendations

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Gohari, A.; Ahmad, A.B.; Rahim, R.B.A.; Elamin, N.I.M.; Gismalla, M.S.M.; Oluwatosin, O.O.; Hasan, R.; Ab Latip, A.S.; Lawal, A. Drones for road accident management: A systematic review. IEEE Access 2023, 11, 109247–109256.

2. Su, S.; Liu, W.; Li, K.; Yang, G.; Feng, C.; Ming, J.; Liu, G.; Liu, S.; Yin, Z. Developing an unmanned aerial vehicle-based rapid mapping system for traffic accident investigation. Aust. J. Forensic Sci. 2016, 48, 454–468.

3. Raj, C.V.; Sree, B.N.; Madhavan, R. Vision based accident vehicle identification and scene investigation. In Proceedings of the 2017 IEEE Region 10 Symposium (TENSYMP), Cochin, India, 14–16 July 2017; pp. 1–5.

4. Almeshal, A.M.; Alenezi, M.R.; Alshatti, A.K. Accuracy assessment of small unmanned aerial vehicle for traffic accident photogrammetry in the extreme operating conditions of Kuwait. Information 2020, 11, 442.

5. Vida, G.; Melegh, G.; Süveges, Á.; Wenszky, N.; Török, Á. Analysis of UAV Flight Patterns for Road Accident Site Investigation. Vehicles 2023, 5, 1707–1726.

6. Tan, Y.; Li, Y. UAV photogrammetry-based 3D road distress detection. ISPRS Int. J. Geo-Inf. 2019, 8, 409.

7. Iglesias, L.; De Santos-Berbel, C.; Pascual, V.; Castro, M. Using Small Unmanned Aerial Vehicle in 3D Modeling of Highways with Tree-Covered Roadsides to Estimate Sight Distance. Remote Sens. 2019, 11, 2625.

8. Barroso, A.; Henriques, R.; Cerqueira, Â.; Gomes, P.; Ribeiro Antunes, I.M.H.; Reis, A.P.M.; Valente, T.M. Acid mine drainage and waste dispersion in legacy mining sites: An integrated approach using UAV photogrammetry and geospatial analysis. J. Hazard. Mater. 2025, 495, 138827.

9. Puniach, E.; Gruszczyński, W.; Ćwiąkała, P.; Matwij, W. Application of UAV-based orthomosaics for determination of horizontal displacement caused by underground mining. ISPRS J. Photogramm. Remote Sens. 2021, 174, 282–303.

10. Liu, Y.; Zheng, X.; Ai, G.; Zhang, Y.; Zuo, Y. Generating a high-precision true digital orthophoto map based on UAV images. ISPRS Int. J. Geo-Inf. 2018, 7, 333.

11. Papadopoulou, E.-E.; Vasilakos, C.; Zouros, N.; Soulakellis, N. DEM-based UAV flight planning for 3D mapping of geosites: The case of olympus tectonic window, Lesvos, Greece. ISPRS Int. J. Geo-Inf. 2021, 10, 535.

12. Kerle, N.; Nex, F.; Gerke, M.; Duarte, D.; Vetrivel, A. UAV-based structural damage mapping: A review. ISPRS Int. J. Geo-Inf. 2019, 9, 14.

13. Liu, Y.; Han, K.; Rasdorf, W. Assessment and prediction of impact of flight configuration factors on UAS-based photogrammetric survey accuracy. Remote Sens. 2022, 14, 4119.

14. Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15.

15. Inzerillo, L.; Di Mino, G.; Roberts, R. Image-based 3D reconstruction using traditional and UAV datasets for analysis of road pavement distress. Autom. Constr. 2018, 96, 457–469.

16. Wu, S.; Feng, L.; Zhang, X.; Yin, C.; Quan, L.; Tian, B. Optimizing overlap percentage for enhanced accuracy and efficiency in oblique photogrammetry building 3D modeling. Constr. Build. Mater. 2025, 489, 142382.

17. Santos Santana, L.; Ferraz, G.A.E.S.; Bedin Marin, D.; Dienevam Souza Barbosa, B.; Mendes Dos Santos, L.; Ferreira Ponciano Ferraz, P.; Conti, L.; Camiciottoli, S.; Rossi, G. Influence of flight altitude and control points in the georeferencing of images obtained by unmanned aerial vehicle. Eur. J. Remote Sens. 2021, 54, 59–71.

18. Nagendran, S.K.; Tung, W.Y.; Ismail, M.A.M. Accuracy assessment on low altitude UAV-borne photogrammetry outputs influenced by ground control point at different altitude. IOP Conf. Ser. Earth Environ. Sci. 2018, 169, 012031.

19. Anders, N.; Smith, M.; Suomalainen, J.; Cammeraat, E.; Valente, J.; Keesstra, S. Impact of flight altitude and cover orientation on Digital Surface Model (DSM) accuracy for flood damage assessment in Murcia (Spain) using a fixed-wing UAV. Earth Sci. Inform. 2020, 13, 391–404.

20. Udin, W.; Ahmad, A. Assessment of Photogrammetric Mapping Accuracy Based on Variation Flying Altitude Using Unmanned Aerial Vehicle. IOP Conf. Ser. Earth Environ. Sci. 2014, 18, 012027.

21. Siebert, S.; Teizer, J. Mobile 3D mapping for surveying earthwork projects using an Unmanned Aerial Vehicle (UAV) system. Autom. Constr. 2014, 41, 1–14.

22. Ahmed, S.; El-Shazly, A.; Abed, F.; Ahmed, W. The influence of flight direction and camera orientation on the quality products of UAV-based SfM-photogrammetry. Appl. Sci. 2022, 12, 10492.

23. Chiabrando, F.; Lingua, A.; Maschio, P.; Teppati Losè, L. The influence of flight planning and camera orientation in UAVs photogrammetry. A test in the area of Rocca San Silvestro (LI), TUSCANY. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 163–170.

24. Hastedt, H.; Luhmann, T. Investigations on the quality of the interior orientation and its impact in object space for UAV photogrammetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 321–328.

25. Seifert, E.; Seifert, S.; Vogt, H.; Drew, D.; Van Aardt, J.; Kunneke, A.; Seifert, T. Influence of drone altitude, image overlap, and optical sensor resolution on multi-view reconstruction of forest images. Remote Sens. 2019, 11, 1252.

26. Domingo, D.; Ørka, H.O.; Næsset, E.; Kachamba, D.; Gobakken, T. Effects of UAV image resolution, camera type, and image overlap on accuracy of biomass predictions in a tropical woodland. Remote Sens. 2019, 11, 948.

27. Georgiou, A.; Masters, P.; Johnson, S.; Feetham, L. UAV-assisted real-time evidence detection in outdoor crime scene investigations. J. Forensic Sci. 2022, 67, 1221–1232.

28. Pérez, J.A.; Gonçalves, G.R.; Barragan, J.R.M.; Ortega, P.F.; Palomo, A.A.M.C. Low-cost tools for virtual reconstruction of traffic accident scenarios. Heliyon 2024, 10, e29709.

29. Ruzgienė, B.; Kuklienė, L.; Kuklys, I.; Jankauskienė, D.; Lousada, S. The use of kinematic photogrammetry and LiDAR for reconstruction of a unique object with extreme topography: A case study of Dutchman’s Cap, Baltic seacoast, Lithuania. Front. Remote Sens. 2025, 6, 1397513.

30. Yang, Y.; Lin, Z.; Liu, F. Stable imaging and accuracy issues of low-altitude unmanned aerial vehicle photogrammetry systems. Remote Sens. 2016, 8, 316.

31. Rabiu, L.; Ahmad, A. Unmanned Aerial Vehicle Photogrammetric Products Accuracy Assessment: A Review. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 279–288.

32. Zulkifli, M.H.; Tahar, K.N. The Influence of UAV Altitudes and Flight Techniques in 3D Reconstruction Mapping. Drones 2023, 7, 227.

33. Korumaz, S.A.G.; Yıldız, F. Positional Accuracy Assessment of Digital Orthophoto Based on UAV Images: An Experience on an Archaeological Area. Heritage 2021, 4, 1304–1327.

34. Bazrafkan, A.; Worral, H.; Perdigon, C.; Oduor, P.G.; Bandillo, N.; Flores, P. Evaluating Sensor Fusion and Flight Parameters for Enhanced Plant Height Measurement in Dry Peas. Sensors 2025, 25, 2436.

35. de Lima, R.S.; Lang, M.; Burnside, N.G.; Peciña, M.V.; Arumäe, T.; Laarmann, D.; Ward, R.D.; Vain, A.; Sepp, K. An Evaluation of the Effects of UAS Flight Parameters on Digital Aerial Photogrammetry Processing and Dense-Cloud Production Quality in a Scots Pine Forest. Remote Sens. 2021, 13, 1121.

36. Næsset, E. Effects of different flying altitudes on biophysical stand properties estimated from canopy height and density measured with a small-footprint airborne scanning laser. Remote Sens. Environ. 2004, 91, 243–255.

37. Nesbit, P.R.; Hugenholtz, C.H. Enhancing UAV–SfM 3D Model Accuracy in High-Relief Landscapes by Incorporating Oblique Images. Remote Sens. 2019, 11, 239.

38. Rossi, P.; Mancini, F.; Dubbini, M.; Mazzone, F.; Capra, A. Combining nadir and oblique UAV imagery to reconstruct quarry topography: Methodology and feasibility analysis. Eur. J. Remote Sens. 2017, 50, 211–221.

39. Nikolakopoulos, K.G.; Kyriou, A.; Koukouvelas, I.K. Developing a Guideline of Unmanned Aerial Vehicle’s Acquisition Geometry for Landslide Mapping and Monitoring. Appl. Sci. 2022, 12, 4598.

40. Elhadary, A.; Rabah, M.; Ghanem, E.; Abd El Ghany, R.; Soliman, A. The influence of flight height and overlap on UAV imagery over featureless surfaces and constructing formulas predicting the geometrical accuracy. NRIAG J. Astron. Geophys. 2022, 11, 210–223.

41. Wang, F.; Zou, Y.; del Rey Castillo, E.; Lim, J. Optimal UAV Image Overlap for Photogrammetric 3D Reconstruction of Bridges. IOP Conf. Ser. Earth Environ. Sci. 2022, 1101, 022052.

42. Dhruva, A.; Hartley, R.; Redpath, T.; Estarija, H.; Cajes, D.; Massam, P. Effective UAV Photogrammetry for Forest Management: New Insights on Side Overlap and Flight Parameters. Forests 2024, 15, 2135.

43. Liu, X.; Zou, H.; Niu, W.; Song, Y.; He, W. An Approach of Traffic Accident Scene Reconstruction Using Unmanned Aerial Vehicle Photogrammetry. In Proceedings of the 2019 2nd International Conference on Sensors, Signal and Image Processing, Prague, Czech Republic, 8–10 October 2019; pp. 31–34.

44. Buunk, T.; Vélez, S.; Ariza-Sentís, M.; Valente, J. Comparing Nadir and Oblique Thermal Imagery in UAV-Based 3D Crop Water Stress Index Applications for Precision Viticulture with LiDAR Validation. Sensors 2023, 23, 8625.

45. Jiménez-Jiménez, S.I.; Ojeda-Bustamante, W.; Marcial-Pablo, M.D.J.; Enciso, J. Digital Terrain Models Generated with Low-Cost UAV Photogrammetry: Methodology and Accuracy. ISPRS Int. J. Geo-Inf. 2021, 10, 285.

46. Agüera-Vega, F.; Ferrer, E.; Martínez-Carricondo, P.; Sánchez-Hermosilla, J.; Carvajal-Ramírez, F. Influence of the Inclusion of Off-Nadir Images on UAV-Photogrammetry Projects from Nadir Images and AGL (Above Ground Level) or AMSL (Above Mean Sea Level) Flights. Drones 2024, 8, 662.

47. Róg, M.; Rzonca, A. The impact of photo overlap, the number of control points and the method of camera calibration on the accuracy of 3D model reconstruction. Geomat. Environ. Eng. 2021, 15, 67–87.

48. Zhao, M.; Chen, J.; Song, S.; Li, Y.; Wang, F.; Wang, S.; Liu, D. Proposition of UAV multi-angle nap-of-the-object image acquisition framework based on a quality evaluation system for a 3D real scene model of a high-steep rock slope. Int. J. Appl. Earth Obs. Geoinf. 2023, 125, 103558.

49. Zhang, S.; Zheng, L.; Zhou, H.; Zhao, Q.; Li, J.; Xia, Y.; Zhang, W.; Cheng, X. Fine-scale Antarctic grounded ice cliff 3D calving monitoring based on multi-temporal UAV photogrammetry without ground control. Int. J. Appl. Earth Obs. Geoinf. 2025, 142, 104620.

50. Elkhrachy, I. Accuracy assessment of low-cost unmanned aerial vehicle (UAV) photogrammetry. Alex. Eng. J. 2021, 60, 5579–5590.

51. Chiabrando, F.; Sammartano, G.; Spanò, A. Historical buildings models and their handling via 3D survey: From points clouds to user-oriented HBIM. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 633–640.

52. Wilcoxon, F. Individual Comparisons by Ranking Methods. Biom. Bull. 1945, 1, 80–83.

53. Eisenbeiß, H. UAV Photogrammetry; ETH Zurich: Zurich, Switzerland, 2009.

54. Burdziakowski, P.; Bobkowska, K. UAV Photogrammetry under Poor Lighting Conditions—Accuracy Considerations. Sensors 2021, 21, 3531.

55. Santrač, N.; Benka, P.; Batilović, M.; Zemunac, R.; Antić, S.; Stajić, M.; Antonić, N. Accuracy analysis of UAV photogrammetry using RGB and multispectral sensors. Geod. Vestn. 2023, 67, 459–472.

56. Zaman, A.A.U.; Abdelaty, A. Effects of UAV imagery overlap on photogrammetric data quality for construction applications. Int. J. Constr. Manag. 2025, 1–16.

57. Chodura, N.; Greeff, M.; Woods, J. Evaluation of Flight Parameters in UAV-based 3D Reconstruction for Rooftop Infrastructure Assessment. arXiv 2025, arXiv:2504.02084.

58. Pargieła, K. Optimising UAV Data Acquisition and Processing for Photogrammetry: A Review. Geomat. Environ. Eng. 2023, 17, 29–59.

59. Leem, J.; Mehrishal, S.; Kang, I.-S.; Yoon, D.-H.; Shao, Y.; Song, J.-J.; Jung, J. Optimizing Camera Settings and Unmanned Aerial Vehicle Flight Methods for Imagery-Based 3D Reconstruction: Applications in Outcrop and Underground Rock Faces. Remote Sens. 2025, 17, 1877.

60. Yang, Q.; Li, A.; Liu, Y.; Wang, H.; Leng, Z.; Deng, F. Machine learning-based optimization of photogrammetric JRC accuracy. Sci. Rep. 2024, 14, 26608.

61. Mueller, M.; Dietenberger, S.; Nestler, M.; Hese, S.; Ziemer, J.; Bachmann, F.; Leiber, J.; Dubois, C.; Thiel, C. Novel UAV Flight Designs for Accuracy Optimization of Structure from Motion Data Products. Remote Sens. 2023, 15, 4308.

62. Lee, K.; Lee, W.H. Earthwork Volume Calculation, 3D Model Generation, and Comparative Evaluation Using Vertical and High-Oblique Images Acquired by Unmanned Aerial Vehicles. Aerospace 2022, 9, 606.

| Study | Altitude (m) | Camera Angle (°) | Overlap (%) | RMSE/Accuracy/Point Cloud | Key Findings |
|---|---|---|---|---|---|
| Zulkifli and Tahar [32] | 5, 7, 10 | Nadir | 80–90 | RMSE ≈ 4 cm (best at 5 m, POI) | Lower altitude with the POI technique improved accuracy |
| Seifert et al. [25] | 25–100 | Nadir | Varied | RMSE ≈ 4 cm | High overlap and low altitude yielded the best reconstructions |
| Udin and Ahmad [20] | 40, 60, 80, 100 | Nadir | 60 | RMSE ≈ 0.249–0.296 cm | Lower altitude improved accuracy |
| de Lima et al. [35] | 90–150 | – | 70–90 | 565 points/m² | Lower altitude improved the quality and accuracy of DAP products |
| Santos Santana et al. [17] | 30, 60, 90, 120 | – | – | RMSE < 7 cm (60 m altitude) | 60 m provided a balance between efficiency and accuracy |
| Agüera-Vega et al. [46] | 65, 80 | Nadir; 11.25, 22.5, 33.75, 45 | 90 (F), 70 (S) | Accuracy < 3.5 cm | Off-nadir angles between 20° and 35° yielded the best accuracy and precision |
| Nesbit and Hugenholtz [37] | – | 0–35 (oblique) | 70–90 | Mean accuracy < 3 cm | Increasing the camera tilt angle improved vertical accuracy |
| Rossi et al. [38] | – | Nadir, oblique | – | Centimeter-level accuracy | Integrating nadir and oblique imagery enhanced the geotechnical interpretation of spatially variable conditions |
| Buunk et al. [44] | 30 | Nadir, oblique | 70 | 181,372–215,199 points | Nadir imagery outperformed oblique imagery in the orthomosaic point cloud |
| Nex and Remondino [14] | 100–200 | Nadir, oblique | 60–80 | RMSE 3.7 cm (planimetric) | High overlap and low altitude yielded the best 3D model |
| Jiménez-Jiménez et al. [45] | – | – | 70–90 (F), 60–80 (S) | RMSE 1–7 × GSD | Recommended 70–90% forward and 60–80% side overlap |
| Dhruva et al. [42] | 80, 120 | Nadir | 80–90 | RMSE 0.6 cm (X, Y), 0.04 cm (Z) | Identified 90% (F) and 85% (S) overlap at 120 m altitude as the optimal flight parameters |
| Elhadary et al. [40] | 140, 160, 180, 200 | – | 60, 70, 80 | RMSE < 6 cm | An increase in the image overlap leads to an increase in the RMSE and the point clouds’ geometric accuracy |

F = forward overlap; S = side overlap.
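Most studies in the literature summary above fly with 60–90% overlap. The distance between consecutive exposures scales with (1 − overlap). Raising overlap from 60% to 80% therefore halves the spacing and nearly doubles the number of images per flight line, which bears on the photograph counts and flight durations this study compares. The sketch below illustrates the relationship. The 40 m image footprint and 100 m strip length are hypothetical values, not taken from the study, and the function names are illustrative.

```python
import math


def exposure_spacing(footprint_m: float, overlap: float) -> float:
    """Distance between consecutive exposure centres for a given overlap.

    footprint_m: ground length covered by one image along the flight line.
    overlap: fractional overlap between consecutive images (e.g. 0.8 for 80%).
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)


def exposures_per_strip(strip_length_m: float, footprint_m: float, overlap: float) -> int:
    """Exposures needed so that the centres span the whole strip, both ends included."""
    spacing = exposure_spacing(footprint_m, overlap)
    # Round before ceil so floating-point noise cannot push an exact
    # whole-number ratio (e.g. 5.000000000000001) up to the next integer.
    return math.ceil(round(strip_length_m / spacing, 9)) + 1


if __name__ == "__main__":
    # Hypothetical values: 100 m strip, 40 m image footprint.
    for ov in (0.6, 0.7, 0.8):
        print(f"{ov:.0%} overlap: spacing {exposure_spacing(40, ov):.1f} m, "
              f"{exposures_per_strip(100, 40, ov)} exposures")
```

With these hypothetical values, the strip needs 8 exposures at 60% overlap, 10 at 70%, and 14 at 80%. Side overlap has the same effect on the number of flight lines, so the total image count grows with both.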

| Altitude (m) | Camera Angle (°) | Overlap (%) | Model | L1 | L2 | L3 | L4 | L5 | L6 | L7 | L8 | L9 | L10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| – | – | – | Actual reference | 17.04 | 19.879 | 20.619 | 8.51 | 2.78 | 6.44 | 3.24 | 1.02 | 10.505 | 9.969 |
| 30 | 90 | 80 | Model 01 | 17.028 | 19.876 | 20.659 | 8.527 | 2.787 | 6.451 | 3.266 | 1.022 | 10.508 | 9.961 |
| 30 | 90 | 70 | Model 02 | 17.027 | 19.873 | 20.685 | 8.524 | 2.791 | 6.467 | 3.262 | 1.029 | 10.502 | 9.957 |
| 30 | 90 | 60 | Model 03 | 17.03 | 19.869 | 20.692 | 8.526 | 2.783 | 6.45 | 3.26 | 1.022 | 10.51 | 9.963 |
| 30 | 75 | 80 | Model 04 | 17.029 | 19.873 | 20.683 | 8.521 | 2.781 | 6.466 | 3.259 | 1.02 | 10.512 | 9.971 |
| 30 | 75 | 70 | Model 05 | 17.017 | 19.868 | 20.684 | 8.531 | 2.786 | 6.461 | 3.263 | 1.024 | 10.5 | 9.961 |
| 30 | 75 | 60 | Model 06 | 17.001 | 19.863 | 20.683 | 8.515 | 2.796 | 6.471 | 3.261 | 1.021 | 10.516 | 9.969 |
| 30 | 60 | 80 | Model 07 | 17.01 | 19.852 | 20.683 | 8.511 | 2.777 | 6.462 | 3.261 | 1.026 | 10.521 | 9.964 |
| 30 | 60 | 70 | Model 08 | 17.021 | 19.873 | 20.69 | 8.528 | 2.779 | 6.47 | 3.267 | 1.024 | 10.513 | 9.959 |
| 30 | 60 | 60 | Model 09 | 17.023 | 19.848 | 20.696 | 8.533 | 2.783 | 6.461 | 3.275 | 1.02 | 10.531 | 9.974 |
| 45 | 90 | 80 | Model 10 | 17.023 | 19.858 | 20.672 | 8.538 | 2.767 | 6.45 | 3.262 | 1.029 | 10.519 | 9.962 |
| 45 | 90 | 70 | Model 11 | 17.03 | 19.85 | 20.65 | 8.51 | 2.776 | 6.453 | 3.273 | 1.022 | 10.504 | 9.942 |
| 45 | 90 | 60 | Model 12 | 17.034 | 19.876 | 20.605 | 8.505 | 2.774 | 6.483 | 3.28 | 1.019 | 10.49 | 9.918 |
| 45 | 75 | 80 | Model 13 | 17.03 | 19.901 | 20.687 | 8.525 | 2.793 | 6.452 | 3.274 | 1.03 | 10.53 | 9.966 |
| 45 | 75 | 70 | Model 14 | 17.05 | 19.913 | 20.593 | 8.494 | 2.77 | 6.472 | 3.275 | 1.016 | 10.476 | 9.922 |
| 45 | 75 | 60 | Model 15 | 17.068 | 19.924 | 20.598 | 8.511 | 2.774 | 6.479 | 3.29 | 1.031 | 10.506 | 9.929 |
| 45 | 60 | 80 | Model 16 | 17.01 | 19.867 | 20.691 | 8.526 | 2.785 | 6.475 | 3.278 | 1.02 | 10.513 | 9.965 |
| 45 | 60 | 70 | Model 17 | 17.044 | 19.898 | 20.622 | 8.514 | 2.768 | 6.503 | 3.269 | 1.028 | 10.5 | 9.944 |
| 45 | 60 | 60 | Model 18 | 17.081 | 19.978 | 20.617 | 8.531 | 2.811 | 6.53 | 3.306 | 1.026 | 10.503 | 9.957 |
| 60 | 90 | 80 | Model 19 | 17.02 | 19.888 | 20.677 | 8.53 | 2.785 | 6.469 | 3.27 | 1.039 | 10.511 | 9.969 |
| 60 | 90 | 70 | Model 20 | 17.022 | 19.888 | 20.669 | 8.53 | 2.782 | 6.475 | 3.268 | 1.024 | 10.502 | 9.945 |
| 60 | 90 | 60 | Model 21 | 17.025 | 19.866 | 20.679 | 8.522 | 2.779 | 6.482 | 3.28 | 1.035 | 10.514 | 9.945 |
| 60 | 75 | 80 | Model 22 | 17.036 | 19.871 | 20.67 | 8.518 | 2.779 | 6.49 | 3.28 | 1.037 | 10.54 | 9.98 |
| 60 | 75 | 70 | Model 23 | 17.041 | 19.925 | 20.608 | 8.499 | 2.78 | 6.488 | 3.278 | 1.034 | 10.48 | 9.932 |
| 60 | 75 | 60 | Model 24 | 17.056 | 19.937 | 20.58 | 8.515 | 2.76 | 6.483 | 3.295 | 1.03 | 10.507 | 9.928 |
| 60 | 60 | 80 | Model 25 | 17.001 | 19.87 | 20.677 | 8.543 | 2.779 | 6.491 | 3.28 | 1.014 | 10.507 | 9.968 |
| 60 | 60 | 70 | Model 26 | 17.06 | 19.905 | 20.68 | 8.55 | 2.8 | 6.502 | 3.28 | 1.029 | 10.53 | 9.985 |
| 60 | 60 | 60 | Model 27 | 17.084 | 19.683 | 20.68 | 8.56 | 2.786 | 6.515 | 3.31 | 1.04 | 10.524 | 9.97 |

L1–L10: measured distances between control points (m).
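The measured-distance table supports a simple planimetric check. Subtract each reference distance from the corresponding model distance, then take the root mean square of the ten errors. The sketch below assumes that a per-model RMSE is computed this way over L1–L10 in metres. The text does not state the exact formula, so treat this as an illustration rather than the authors' procedure. The reference and Model 01 values are copied from the table, and the function names are illustrative.

```python
import math
from typing import Sequence

# Reference distances L1–L10 (m), from the "Actual reference" row.
REFERENCE = [17.04, 19.879, 20.619, 8.51, 2.78, 6.44, 3.24, 1.02, 10.505, 9.969]
# Model 01 (30 m altitude, 90° camera angle, 80% overlap), from the same table.
MODEL_01 = [17.028, 19.876, 20.659, 8.527, 2.787, 6.451, 3.266, 1.022, 10.508, 9.961]


def distance_rmse(measured: Sequence[float], reference: Sequence[float]) -> float:
    """Root-mean-square error of measured distances against reference distances."""
    if len(measured) != len(reference) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(m - r) ** 2 for m, r in zip(measured, reference)]
    return math.sqrt(sum(squared) / len(squared))


def max_abs_error(measured: Sequence[float], reference: Sequence[float]) -> float:
    """Largest absolute difference between a measured and a reference distance."""
    return max(abs(m - r) for m, r in zip(measured, reference))


if __name__ == "__main__":
    rmse = distance_rmse(MODEL_01, REFERENCE)
    worst = max_abs_error(MODEL_01, REFERENCE)
    print(f"Model 01 RMSE: {rmse * 100:.2f} cm, largest error: {worst * 100:.1f} cm")
```

Under this assumption, Model 01 has an RMSE of about 1.72 cm, and its largest single error (about 4.0 cm) occurs on L3. Repeating the call for each of the 27 rows gives one RMSE per flight configuration.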

| Factor | Pair | Z-statistic | p-value | Result (α = 0.05) |
|---|---|---|---|---|
| Altitude | 30 m vs. 45 m | −0.662 | 0.508 | No difference |
| Altitude | 30 m vs. 60 m | −2.252 | 0.024 | Difference |
| Altitude | 45 m vs. 60 m | −1.369 | 0.171 | No difference |
| Camera angle | 90° vs. 75° | −2.517 | 0.012 | Difference |
| Camera angle | 90° vs. 60° | −2.958 | 0.003 | Difference |
| Camera angle | 75° vs. 60° | −1.369 | 0.171 | No difference |
| Overlap | 80% vs. 70% | 0.132 | 0.895 | No difference |
| Overlap | 80% vs. 60% | −1.810 | 0.070 | No difference |
| Overlap | 70% vs. 60% | −2.252 | 0.024 | Difference |
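The Z-statistics and p-values in the Wilcoxon table above agree with the usual large-sample normal approximation of the Wilcoxon rank-sum test, in which the two-sided p-value is 2(1 − Φ(|Z|)). The sketch below shows that computation. It is an illustrative implementation under that assumption, not the authors' software. Like SciPy's `scipy.stats.ranksums`, it assigns average ranks to ties but applies no tie correction to the variance. This section does not state which observations were compared (for instance, per-model RMSE values grouped by altitude, angle, or overlap), so no input data are reproduced here.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at sorted position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def rank_sum_z(x: Sequence[float], y: Sequence[float]) -> float:
    """Wilcoxon rank-sum Z statistic (normal approximation, no tie correction)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])  # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2
    var_w = n1 * n2 * (n1 + n2 + 1) / 12
    return (w - mean_w) / math.sqrt(var_w)


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard normal Z: 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2))
```

For example, `two_sided_p(-2.252)` returns about 0.024, matching the 30 m vs. 60 m and 70% vs. 60% rows, and `rank_sum_z([1, 2, 3], [4, 5, 6])` returns about −1.964 for two completely separated samples of three.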

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Phojaem, T.; Dangbut, A.; Wisutwattanasak, P.; Janhuaton, T.; Champahom, T.; Ratanavaraha, V.; Jomnonkwao, S. Evaluating UAV Flight Parameters for High-Accuracy in Road Accident Scene Documentation: A Planimetric Assessment Under Simulated Roadway Conditions. ISPRS Int. J. Geo-Inf. 2025, 14, 357. https://doi.org/10.3390/ijgi14090357
