1. Introduction

Taiwan lies between the tropical and subtropical zones and has long been a major cultural crossroads in East Asia. This strategic position has attracted diverse ethnic groups, each developing unique cultural identities over time. Among the earliest inhabitants of Taiwan are Indigenous peoples. However, with the prolonged dominance of external cultures and a historical lack of written documentation, many Indigenous traditions and much environmental knowledge have gradually eroded or disappeared. In response, there has been growing societal recognition, within Indigenous communities and broader Taiwanese society, of the urgent need to preserve their culture and environments [1]. Cultural background shapes students’ perceptions, motivations, and engagement in learning. Hence, addressing disparities in science education requires careful consideration of cultural contexts, particularly for students in Indigenous communities.

Among Taiwan’s Indigenous groups, the Amis (Amis language: Amis or Pangcah) constitute the largest population, primarily residing along the eastern coast and in the central mountains. Traditional Amis cultural practices, including music, dance, clothing, rituals, and clan structures, are rich in meaning and deeply connected to their living environments. Although Taiwanese education policies have endeavored to preserve Indigenous languages and cultural heritage, disparities in instructional design persist, particularly due to geographic and resource constraints. As technology advances, there is growing emphasis on integrating scientific concepts with Indigenous knowledge systems to bridge regional and cultural disparities in learning outcomes.

The Jingpu Tribe is located on the plains at the mouth of the Xiuguluan River, offering expansive views of Taiwan’s eastern coastline. The river mouth’s rich ecology has enabled the Amis people to preserve their traditional fishing and hunting practices. Known as the Sun Tribe for being the first to greet the sunrise, Jingpu, the southernmost settlement in Hualien County, is a significant prehistoric cultural site in Taiwan. The Jingpu Cultural Site in Fengbin has been preserved for over 1500 years, safeguarding the heritage of the Amis ancestors. For the Amis people, the Sun carries profound cultural significance, reflecting their deep connection with natural environments.

In Taiwan’s elementary science curriculum, astronomy is closely connected to everyday life, covering topics such as the Earth, Sun, stars, Moon, and seasonal changes [2]. Through astronomical observations, students can develop reflective thinking and metacognitive skills by exploring the relative motion of celestial bodies and drawing conclusions through scientific inquiry [3]. The Sun provides vital light and energy that shape daily life. Seasonal changes, driven by Earth’s tilt and orbit, influence the Sun’s path and the duration of daylight. In the Northern Hemisphere, the Sun’s path shifts northward at the summer solstice, runs along an east–west line during the equinoxes, and shifts southward at the winter solstice. Traditional observation methods are constrained by weather, time, and equipment, which reduces study opportunities and student motivation.

With the rise of the metaverse, immersive technologies such as virtual reality (VR) and augmented reality (AR) have become increasingly prominent in education. VR creates a fully immersive 3D environment accessed through head-mounted displays, offering users a sense of presence, while AR overlays digital information onto the physical world via devices like smartphones or tablets. In this study, a low-cost VR solution was developed by combining smartphones with Google Cardboard headsets to present 360-degree panoramic images. This approach aims to enhance students’ learning motivation and create a stronger sense of presence compared to traditional instruction.

Conventional tools such as slideshows and textbooks often fail to immerse learners, especially when teaching abstract scientific concepts. These limitations can increase cognitive load, thereby hindering comprehension. In contrast, virtual environments viewed through mobile devices enable learners to control their viewpoints, creating a more interactive and immersive experience. Such tools reduce cognitive load by making learning more intuitive and engaging [4]. For instance, Araiza-Alba et al. used VR360 videos to teach children water safety skills through interactive scenarios [5], while Ou et al. employed VR simulations of tree frog habitats to reduce practical barriers and enhance learning outcomes in environmental education [6].

Current classroom practices in rural and Indigenous regions continue to rely on textbooks, projected media, and pre-recorded videos. By incorporating VR content, educators can offer more interactive and effective learning environments, especially compared to passive, paper-based materials [7]. This study aims to integrate Amis Indigenous culture with local environments by combining VR360 and drone technology to create an accessible digital learning tool. Using a smartphone with low-cost, portable Cardboard goggles, learners can engage in an immersive experience. Interactive feedback and autonomous exploration further enhance learner motivation and engagement.

A drone, or Unmanned Aerial Vehicle (UAV), is an aircraft that operates without an onboard pilot. It can be controlled remotely by an operator or autonomously and is widely used in civilian, commercial, and military applications [8]. Modern drones are typically equipped with high-resolution cameras, multiple sensors, and GPS, enabling applications such as aerial surveillance, terrain mapping, agricultural spraying, and material delivery, thereby enhancing operational efficiency and reducing labor costs [9].

Drones generally operate in two modes: (1) Remote control, where an operator uses radio signals or network systems to manage the drone’s flight path and tasks in real time; and (2) Autonomous flight, in which the drone follows pre-programmed routes and can leverage Artificial Intelligence (AI) to make real-time adjustments, such as avoiding obstacles or adapting flight strategies to environmental changes, offering greater flexibility and autonomy in task execution [10].

With advances in flight control, GPS, and communication technologies, drones are now widely used in disaster rescue, environmental monitoring, film production, and logistics. Modern drones transmit aerial data to support search and rescue, long-term environmental monitoring, and efficient material delivery [11]. In the military domain, drones were initially deployed for reconnaissance and surveillance. Their lightweight, flexible design enables undetected operations deep within enemy territory, delivering real-time battlefield intelligence to support precise tactical decisions [12]. Because of their high mobility, low cost, and autonomous capabilities, drones have become an essential technology across a wide range of contemporary applications.

This study integrated VR360 and drone technology into a VR learning system to teach Amis cultural heritage and local environments within the elementary science curriculum. The system also supports solar observation at the Tropic of Cancer Landmark near the Jingpu Tribe, allowing learners to measure the Sun’s azimuth and altitude angles in real time. Using smartphones paired with portable Cardboard goggles, students can interact with immersive content that enhances motivation and engagement [13]. By combining scientific knowledge with Amis cultural traditions, the VR learning system facilitates solar observation while overcoming the limitations of traditional instruction.

This study aims to reduce educational disparities in remote areas by examining the effectiveness of a VR learning system that integrates Amis Indigenous culture and local environments with elementary science curricula. Based on prior research and the study’s objectives, the following research questions are proposed:

- (1) What is the effectiveness of the VR system on students’ learning achievement?

- (2) How does the VR system influence students’ learning motivation?

- (3) What impact does the VR learning system have on students’ cognitive load?

- (4) To what extent are students satisfied with the VR learning system?

2. Literature Review

To establish the theoretical foundation of this study, the literature was reviewed across six domains: (1) VR as an immersive and interactive medium for simulating real-world contexts and supporting conceptual understanding; (2) drone technology as a tool for connecting classroom learning with authentic experiences in science, environmental studies, and cultural exploration; (3) solar trajectory observation as a means to promote hands-on learning and deepen students’ understanding of Earth’s rotation, axial tilt, and the cause of seasonal change; (4) situated learning, which emphasizes authentic tasks, collaboration, and scaffolding to foster meaningful knowledge construction; (5) learning motivation, focusing on the roles of interest, enjoyment, and self-determination in sustaining student engagement; and (6) cognitive load theory, which informs instructional design to minimize extraneous demands and optimize cognitive processing. Collectively, these perspectives guided the design of a VR learning system that integrates cultural and environmental contexts within a robust pedagogical framework.

2.1. Virtual Reality

Virtual reality is an interactive environment created through 3D computer graphics and immersive technologies, bridging the physical and digital worlds. Through computers or mobile devices, users can interact with virtual objects and experience highly realistic simulated environments [14]. VR also provides strong sensory stimulation and feedback, allowing users to immerse themselves in vivid interactions with the synthesized environment [15]. The earliest VR device, the head-mounted display (HMD), originated in the 1960s and was later promoted by the U.S. Department of Defense and NASA in 1989, which accelerated the commercialization of VR. Over the years, VR technology has matured and diversified in form and definition. Today, VR has been applied widely in many disciplines, such as firefighting, medicine, and the military, providing learners with safe environments to practice skills required in dangerous real-world situations.

VR hardware can be classified into several types based on its presentation format. Desktop VR provides relatively low immersion but is cost-effective and easy to set up, relying on interaction through a keyboard, mouse, joystick, or touchscreen. Simulation VR, among the earliest systems, integrates hardware with authentic or virtual environments to replicate real-world operations, such as medical training [16]. Projection VR employs large screens and surround sound to deliver a panoramic experience, with learners wearing 3D glasses to enhance authenticity [17]. Immersive VR offers the highest level of presence, requiring head-mounted displays (HMDs) or data gloves to stimulate multiple senses and generate perceptions that closely approximate reality [18].

VR has been widely implemented in science education. For example, Chen et al. [19] developed a desktop VR system simulating Earth’s motion to help students understand astronomical concepts. Allison et al. [20] used HMDs to enable learners to explore gorilla habitats and observe social dominance interactions. Tarng et al. [21] applied VR to simulate light-speed space travel, aiding comprehension of physical concepts such as time dilation and length contraction in learning special relativity. Sun et al. [22] created a desktop VR model of the solar-lunar system to address misconceptions about astronomical phenomena, including day-night cycles, seasons, lunar phases, and solar eclipses.

Applications of Cardboard VR in science education have also been reported. For instance, Ou et al. [23] combined Cardboard VR with 360-degree panoramic views to promote inquiry-based learning in ecological conservation. Their findings demonstrated that immersive experiences not only enhanced learning performance but also fostered motivation, creativity, and critical thinking. These studies highlight VR’s potential to enhance science education by providing interactive and learner-centered experiences that actively engage students and foster deeper conceptual understanding.

2.2. Educational Applications of Drones

Over the past decade, the use of drones in education has grown rapidly, with schools, universities, and training centers adopting them as innovative tools to foster hands-on learning, student engagement, and interdisciplinary connections. Commonly known as unmanned aerial vehicles (UAVs), drones are aircraft that operate without an onboard pilot, controlled remotely or through automated flight systems. Equipped with high-resolution cameras, sensors, and GPS, modern drones perform tasks such as aerial photography, terrain mapping, environmental monitoring, agricultural spraying, and logistics. Their efficiency, versatility, and cost-effectiveness make drones valuable across civilian, industrial, military, disaster response, and research applications. These features also establish drones as a powerful educational tool, enabling students to engage with authentic, real-world technology applications across interdisciplinary contexts.

Drones contribute to the study of history, culture, and the arts. Aerial imagery can document archaeological sites, cultural landscapes, and Indigenous territories, supporting heritage preservation and cultural education. Students in social studies courses can use drone-generated maps to analyze land use, settlement patterns, and cultural geography, while visual arts and media students benefit from the novel perspectives that drones provide for storytelling, film production, and photography. In vocational and professional training, drones are increasingly applied in construction management, surveying, logistics, public safety, and disaster management, helping students acquire skills aligned with industry demands and workforce expectations.

Despite their potential, educational drones are limited by high costs, the need for specialized training, and legal restrictions such as licensing and no-fly zones (NFZ) [24]. These barriers have led to virtual drone systems, which offer safe, cost-effective, and scalable alternatives. Delivered via simulation or VR, virtual drones allow students to practice navigation, mission planning, and aerial mapping without financial, safety, or regulatory constraints. Research shows that they enhance operational skills, situational awareness, and learning outcomes [25,26,27]. Combined with VR360 systems, they provide immersive learning that integrates ecological, cultural, and technological perspectives.

This study adopts an approach that integrates virtual drones with VR360 explorations of Indigenous communities and natural landscapes. Specifically, it focuses on the Amis people, Taiwan’s largest Indigenous group, whose cultural practices and ecological knowledge offer rich resources for science education. Amis society has distinctive rituals, such as the Harvest Festival, which serve as social structures and educational processes [28]. Indigenous science, transmitted orally across generations, embodies the accumulated wisdom of interactions with the natural environment [29]. Incorporating this cultural knowledge into science education helps bridge the gap between formal curricula and students’ lived experiences, making learning more relevant and meaningful [30].

Previous studies indicate that embedding Indigenous perspectives in science education enhances student understanding, motivation, and performance [31]. This study uses virtual drones and VR360 to explore Amis landscapes and local environments, integrating technological innovation with cultural heritage and science education. Students can navigate virtual aerial missions over tribal territories, observe environmental features, and analyze ecological species while engaging with the cultural narratives embedded in the landscape. This approach builds technical skills in drone operation and geospatial analysis, while fostering appreciation for Indigenous heritage and environmental stewardship. The safe, accessible virtual platform also overcomes the cost, training, and regulatory barriers that often limit the use of physical drones in schools.

Drones—both physical and virtual—can serve as powerful educational tools that transcend disciplinary boundaries, supporting STEM learning, environmental and cultural education, vocational training, and creative expression. Virtual drones facilitate access to drone technology, making it feasible for schools with limited resources or strict aviation regulations. This study combines virtual drones with VR360 representations of Amis culture and local environments to enhance scientific understanding and cultural awareness. The approach equips learners with 21st-century skills—problem-solving, collaboration, and technological literacy—while promoting educational equity by integrating Indigenous perspectives into formal science education.

2.3. Solar Trajectory Observation

Astronomy naturally inspires curiosity among elementary school students, yet its complex spatial concepts often present challenges for learners. Due to limited class time, teachers often assign astronomical observations as homework without sufficient guidance, which may lead students to complete tasks superficially and gradually lose interest. To resolve this issue, educators can adopt hands-on, inquiry-based strategies supported by teaching aids such as celestial models, images, videos, and computer simulations to convey accurate astronomical concepts. Experiential learning allows students to “learn by doing,” enhancing observational skills, experimental techniques, and active engagement with both teachers and the natural environment.

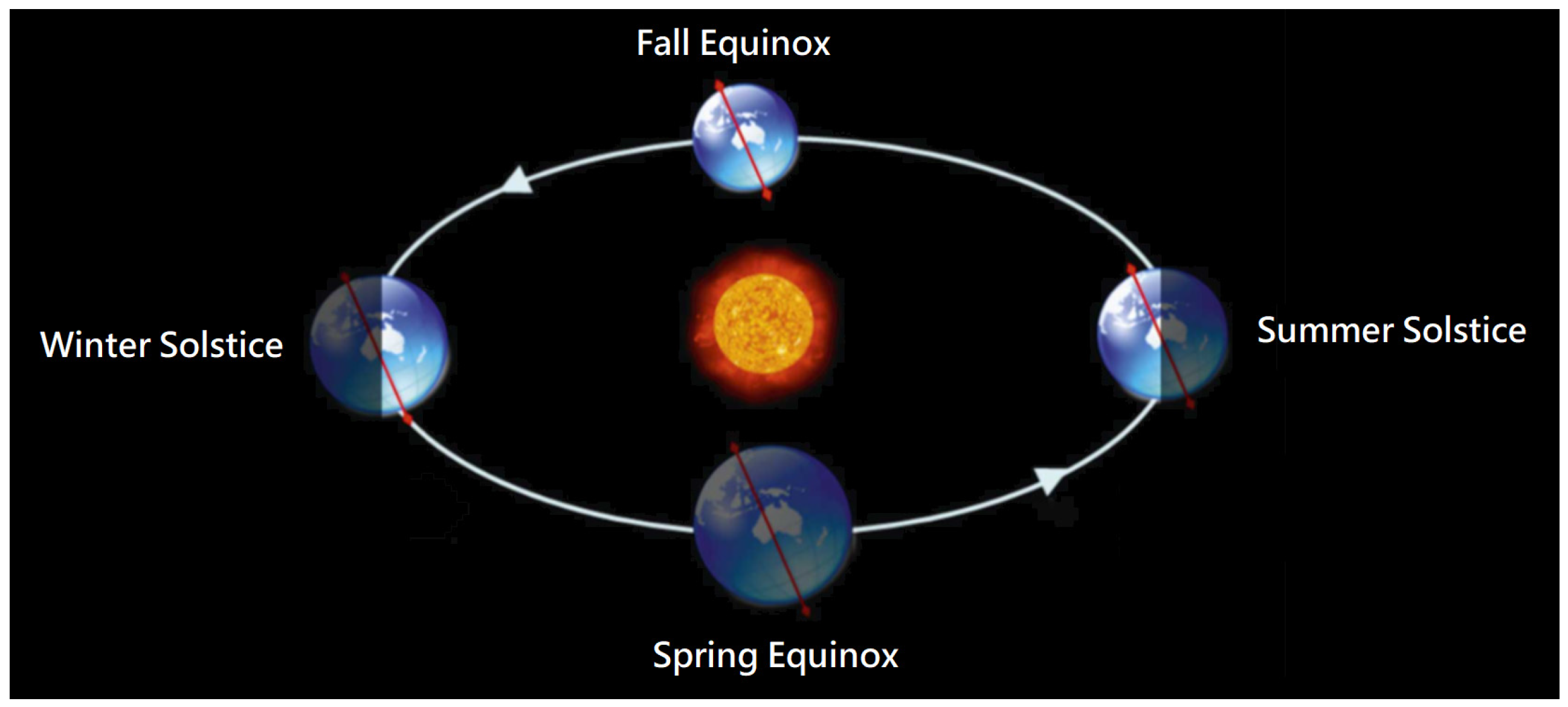

In elementary astronomy education, a common misconception is that winter is colder because the Sun is farther from Earth, and summer is hotter because it is closer. In fact, measurements show the opposite: during the Northern Hemisphere’s winter, Earth is slightly nearer to the Sun, while in summer it is slightly farther away. The primary cause of the seasons is Earth’s revolution around the Sun, together with the 23.5° tilt of its rotational axis. This tilt changes the location of direct sunlight on Earth’s surface throughout the year. At the summer solstice, sunlight falls directly on the Tropic of Cancer; during the spring and autumn equinoxes, it strikes the equator; and at the winter solstice, it reaches the Tropic of Capricorn (Figure 1).
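As a rough illustration of how the axial tilt moves the latitude of direct sunlight through the year, the sketch below uses a common first-order cosine approximation of the solar declination. The function name, the 365-day year, and the 10-day offset to the December solstice are assumptions made for this illustration and are not part of the study's system.

```python
import math


def solar_declination_deg(day_of_year: int, tilt_deg: float = 23.5) -> float:
    """Approximate latitude of direct sunlight (degrees, north positive)."""
    # Assumption: circular orbit and a 365-day year. The December solstice
    # falls about 10 days before 1 January, hence the +10 offset.
    return -tilt_deg * math.cos(2 * math.pi * (day_of_year + 10) / 365)


if __name__ == "__main__":
    # Approximate day numbers for the four seasonal markers.
    markers = [("March equinox", 80), ("June solstice", 172),
               ("September equinox", 266), ("December solstice", 355)]
    for label, day in markers:
        print(f"{label:>18}: {solar_declination_deg(day):+6.1f} deg")
```

Under this approximation the declination is close to +23.5° at the June solstice (Tropic of Cancer), close to −23.5° at the December solstice (Tropic of Capricorn), and within about one degree of 0° (the equator) at the equinoxes.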

Earth’s rotation influences the apparent position of the Sun in the sky, which changes both throughout the day and across seasons. During the summer solstice in Taiwan, the Sun appears nearly overhead at noon, causing a person standing outdoors to cast almost no shadow. In contrast, around late December at noon, the Sun is about 45° south of overhead, and a person’s shadow is nearly as long as their height. The Sun’s apparent path also varies with latitude. At higher northern latitudes during the summer solstice, the Sun’s arc shifts farther north, and within the Arctic Circle, it remains above the horizon for 24 h, creating the phenomenon known as the “White Night.”
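The noon figures quoted above can be checked with the standard relation between latitude, solar declination, and the Sun's altitude at local solar noon. The following is a minimal sketch; the function names and the choice of 23.5°N as a representative latitude for the Tropic of Cancer in Taiwan are assumptions made for illustration.

```python
import math


def noon_altitude_deg(latitude_deg: float, declination_deg: float) -> float:
    """Altitude of the Sun above the horizon at local solar noon."""
    return 90.0 - abs(latitude_deg - declination_deg)


def shadow_to_height_ratio(altitude_deg: float) -> float:
    """Shadow length divided by the height of a vertical object."""
    return 1.0 / math.tan(math.radians(altitude_deg))


if __name__ == "__main__":
    latitude = 23.5  # assumed latitude on the Tropic of Cancer
    for label, declination in [("summer solstice", 23.5),
                               ("winter solstice", -23.5)]:
        altitude = noon_altitude_deg(latitude, declination)
        ratio = shadow_to_height_ratio(altitude)
        print(f"{label}: altitude {altitude:.1f} deg, shadow/height {ratio:.2f}")
```

At the summer solstice the noon altitude is 90° and the shadow ratio is effectively zero; at the winter solstice the altitude is 43° and the ratio is about 1.07, which matches the description of a shadow roughly as long as the person is tall.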

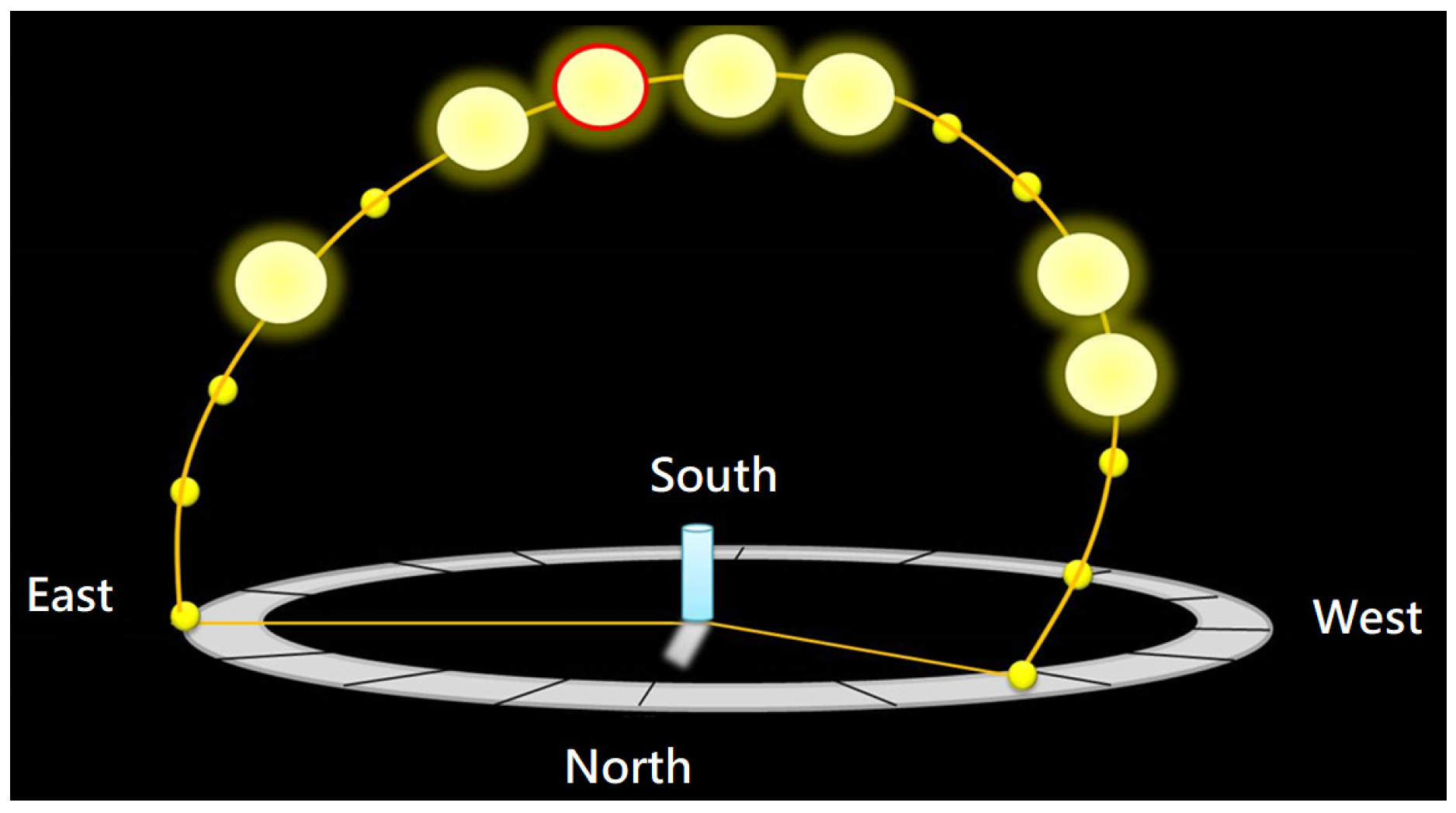

Because the Sun is dazzlingly bright and lacks clear reference points in the sky, ancient observers used vertical sticks to measure shadows, allowing them to track the Sun’s position and determine the four seasons and the 24 solar terms. In modern science education, solar observation activities often begin with flashlight models to demonstrate that shadows always appear on the side away from the light source. Students then record the direction of a stick’s shadow to infer the Sun’s position, which is 180° opposite the shadow, and measure its length and angle with a protractor to calculate solar altitude. Plotting these observations on a hemispherical model helps them identify the regular patterns of the Sun’s apparent motion across the sky (Figure 2).
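The shadow-stick procedure described above reduces to two small calculations, sketched here under stated assumptions: the stick is vertical, the ground is level, and the shadow direction is recorded as a compass bearing in degrees clockwise from north. The function name `sun_from_shadow` is hypothetical.

```python
import math


def sun_from_shadow(stick_height: float, shadow_length: float,
                    shadow_bearing_deg: float) -> tuple[float, float]:
    """Return the Sun's (altitude, azimuth) in degrees from one shadow reading."""
    # Altitude: angle of the right triangle formed by the stick and its shadow.
    altitude = math.degrees(math.atan2(stick_height, shadow_length))
    # Azimuth: the Sun lies directly opposite the direction of the shadow.
    azimuth = (shadow_bearing_deg + 180.0) % 360.0
    return altitude, azimuth


if __name__ == "__main__":
    # A 1 m stick casting a 1 m shadow that points due north.
    altitude, azimuth = sun_from_shadow(1.0, 1.0, 0.0)
    print(f"altitude {altitude:.1f} deg, azimuth {azimuth:.1f} deg")
```

Using `atan2` rather than a plain division keeps the calculation valid when the shadow length is zero, in which case the Sun is directly overhead.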

Earth’s rotation causes day and night and creates the Sun’s apparent daily motion. Along the horizontal (east–west) axis, the Sun moves rapidly due to rotation, while vertical (north–south) changes related to the seasons occur more gradually. In classroom activities, students record the Sun’s position on a hemispherical model to determine azimuth and altitude angles, helping them recognize patterns of solar motion. Diagrams or animations of Earth’s orbit are often used to link the Sun’s position with seasonal temperature variations. In Taiwan, elementary astronomy lessons are scheduled in September or October, but typhoons, seasonal rains, and urban structures often impede observations. High summer temperatures further restrict outdoor activities. Ideally, students would track solar paths throughout all four seasons, yet practical constraints make this challenging. These limitations highlight the need for innovative teaching strategies and tools that provide accurate learning while sustaining student engagement in astronomy.
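When outdoor observation is not possible, the azimuth and altitude angles that students would plot on the hemispherical model can be estimated from textbook spherical astronomy. The sketch below is an illustration under stated assumptions (time given as local solar time, no atmospheric refraction, azimuth measured clockwise from north); it is not the simulation model used in this study, and the function name is hypothetical.

```python
import math


def sun_position(latitude_deg: float, declination_deg: float,
                 solar_hour: float) -> tuple[float, float]:
    """Return (altitude, azimuth) in degrees for a given local solar time."""
    lat = math.radians(latitude_deg)
    dec = math.radians(declination_deg)
    # Hour angle: 15 degrees per hour, zero at solar noon, negative before noon.
    hour_angle = math.radians(15.0 * (solar_hour - 12.0))
    # Components of the Sun's direction in the local east/north/up frame.
    east = -math.cos(dec) * math.sin(hour_angle)
    north = (math.sin(dec) * math.cos(lat)
             - math.cos(dec) * math.cos(hour_angle) * math.sin(lat))
    up = (math.sin(dec) * math.sin(lat)
          + math.cos(dec) * math.cos(hour_angle) * math.cos(lat))
    # Clamp to guard against rounding slightly outside [-1, 1].
    altitude = math.degrees(math.asin(max(-1.0, min(1.0, up))))
    azimuth = math.degrees(math.atan2(east, north)) % 360.0
    return altitude, azimuth


if __name__ == "__main__":
    # Winter solstice at an assumed latitude of 23.5 degrees north.
    for hour in (9, 12, 15):
        altitude, azimuth = sun_position(23.5, -23.5, hour)
        print(f"{hour:02d}:00  altitude {altitude:5.1f}  azimuth {azimuth:5.1f}")
```

The azimuth changes quickly over the day while the noon altitude changes slowly over the seasons, which mirrors the distinction drawn above between the rapid east–west motion and the gradual north–south shift.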

2.4. Situated Learning

Brown et al. [32] proposed situated learning theory, suggesting that educators design curricula using cognitive principles to help learners engage with cultural practices. In this framework, knowledge acts as a cognitive tool internalized through guided participation, collaborative problem-solving, and exploration in authentic contexts [33], thereby enhancing learning effectiveness. It transforms learners from passive recipients into active constructors of knowledge. By emphasizing authentic environments, guided practice, and expert mentorship, situated learning supports knowledge construction, critical reflection, and the development of autonomous learning skills [34,35].

Young identified four core components of instructional design in situated learning environments [36]. First, learners engage with authentic exemplars while evaluating information from multiple perspectives [37]. Second, educators provide context-appropriate scaffolding in real-world settings to support complex cognitive tasks [38,39]. Third, teachers continuously assess progress, monitor information processing, and design contextualized activities to enhance skills [40]. Fourth, the effectiveness and relevance of situated learning interventions must be systematically evaluated and adapted to individual needs. Empirical research in science education emphasizes its practical efficacy. For example, Bursztyn et al. [41] implemented a situated learning approach augmented with virtual field simulations in geology education, which improved learner motivation, increased engagement, and lowered instructional costs.

Situated learning theory emphasizes authentic contexts, guided practice, and scaffolding to transform learners into active constructors of knowledge. Empirical evidence shows VR-supported situated learning enhances motivation, engagement, and cost-effectiveness in science education. Applying these principles, this study integrates VR360 and drone technology to develop a VR learning system that facilitates immersive, interactive learning, promotes cultural appreciation, and advances scientific understanding through autonomous, inquiry-based experiences.

2.5. Cognitive Load

Cognitive load theory describes the mental effort involved in learning [42]. Sweller emphasized that the limited capacity of working memory can impede learning when information processing is excessive [43]. Effective instructional design should therefore minimize irrelevant mental effort and promote the efficient transfer of information into long-term memory, for example, by segmenting complex content, using visual aids, and providing guided practice that gradually increases learner autonomy.

Cognitive load is typically divided into three categories. Intrinsic cognitive load is determined by the complexity of the material itself and varies with task difficulty [44]. Extraneous cognitive load arises from poor instructional design or irrelevant tasks, adding unnecessary mental effort that does not contribute to schema construction. Germane cognitive load refers to the mental resources devoted to schema building, which, although increasing cognitive demand, supports meaningful learning. Cognitive load can be assessed through multiple approaches. Subjective measures rely on learners’ self-reports, often using Likert-type scales. Physiological measures capture changes in learners’ biometric indicators, such as EEG signals [45], during tasks. Performance-based measures evaluate learners’ task outcomes to estimate the cognitive effort involved.

Recent research has examined cognitive load in technology-enhanced learning, particularly in virtual reality (VR) and augmented reality (AR) environments. Wu et al. [46] investigated learners’ cognitive load using portable Cardboard VR in science education. Huang et al. [47] combined AR and board games to explore programming instruction and its effects on cognitive load. Similarly, Armougum et al. simulated train station environments through VR storytelling tasks, while Andersen et al. analyzed cognitive load in VR training systems [48]. Together, these studies suggest that VR- and AR-based instruction generally does not impose excessive cognitive load on learners, emphasizing its potential to support engaging and effective learning experiences.

2.6. Learning Motivation

Motivation is crucial for shaping student engagement, learning outcomes, and long-term achievement. In the context of modern educational technologies, such as VR, AR, and drones, understanding the theoretical foundations of motivation is essential in leveraging these tools to enhance learning. This review examines key literature on motivation and its implications for applying VR and drones in education.

Stipek [49] emphasized that motivation is a dynamic construct influenced by instructional practices. She proposed that students’ motivation to engage with learning activities depends on their perceptions of ability, material relevance, and feedback received. In the case of VR and drones, these technologies provide opportunities for students to engage with learning content interactively, which can increase perceived relevance and challenge to foster motivation. For instance, the immersive environments created by VR technology make learning more engaging, encouraging deeper exploration and fostering a sense of competence, which is a key element in motivation.

Maehr and Meyer [50] further argued that motivation is context-dependent and influenced by both individual and social factors. Student motivation is shaped by their educational environment. VR and drones, offering personalized and authentic learning experiences, address diverse needs and integrate cultural and scientific knowledge, thereby fostering intrinsic motivation driven by curiosity and personal interest.

Ryan and Deci [51] introduced self-determination theory (SDT), which distinguishes between intrinsic and extrinsic motivation. According to SDT, fostering autonomy, competence, and relatedness is essential for enhancing intrinsic motivation. In virtual environments, students can explore content independently, supporting their motivation. For instance, interacting with VR simulations or controlling drones for scientific exploration can give students a sense of autonomy, which further drives their motivation.

In summary, the above literature demonstrates that motivation is essential for learning, particularly in technology-enhanced environments. By fostering autonomy, competence, and engagement through innovative tools such as VR and drones, educators can create motivating experiences that enhance learning outcomes.

3. Materials and Methods

This study integrates VR360 and drone technology to develop a VR learning system intended to improve learning effectiveness in Amis culture and science education. A quasi-experimental design was employed, with data collected through achievement tests and questionnaires. This section is organized into seven parts:

Section 3.1 introduces the learning content of the VR system;

Section 3.2 describes the scene models and virtual drone design;

Section 3.3 presents the VR learning system;

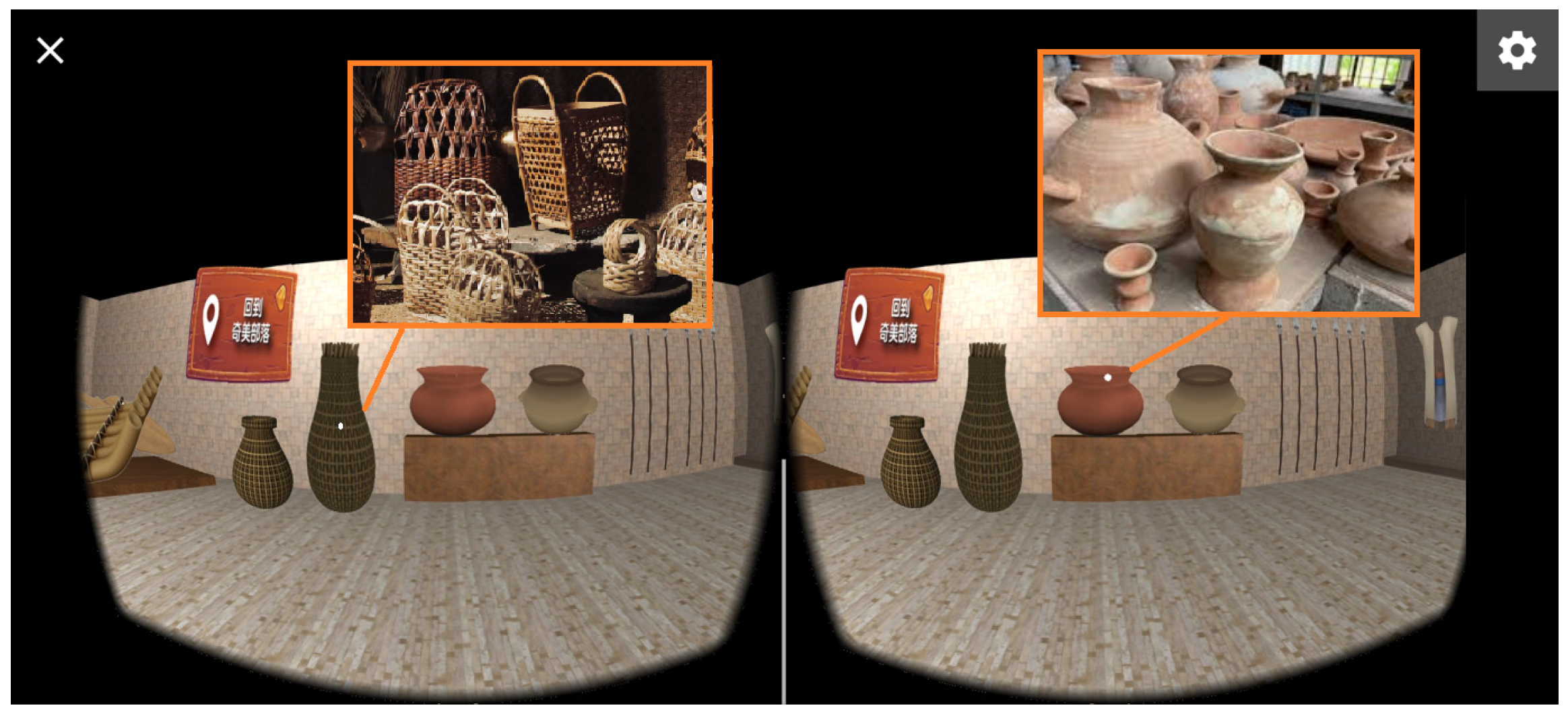

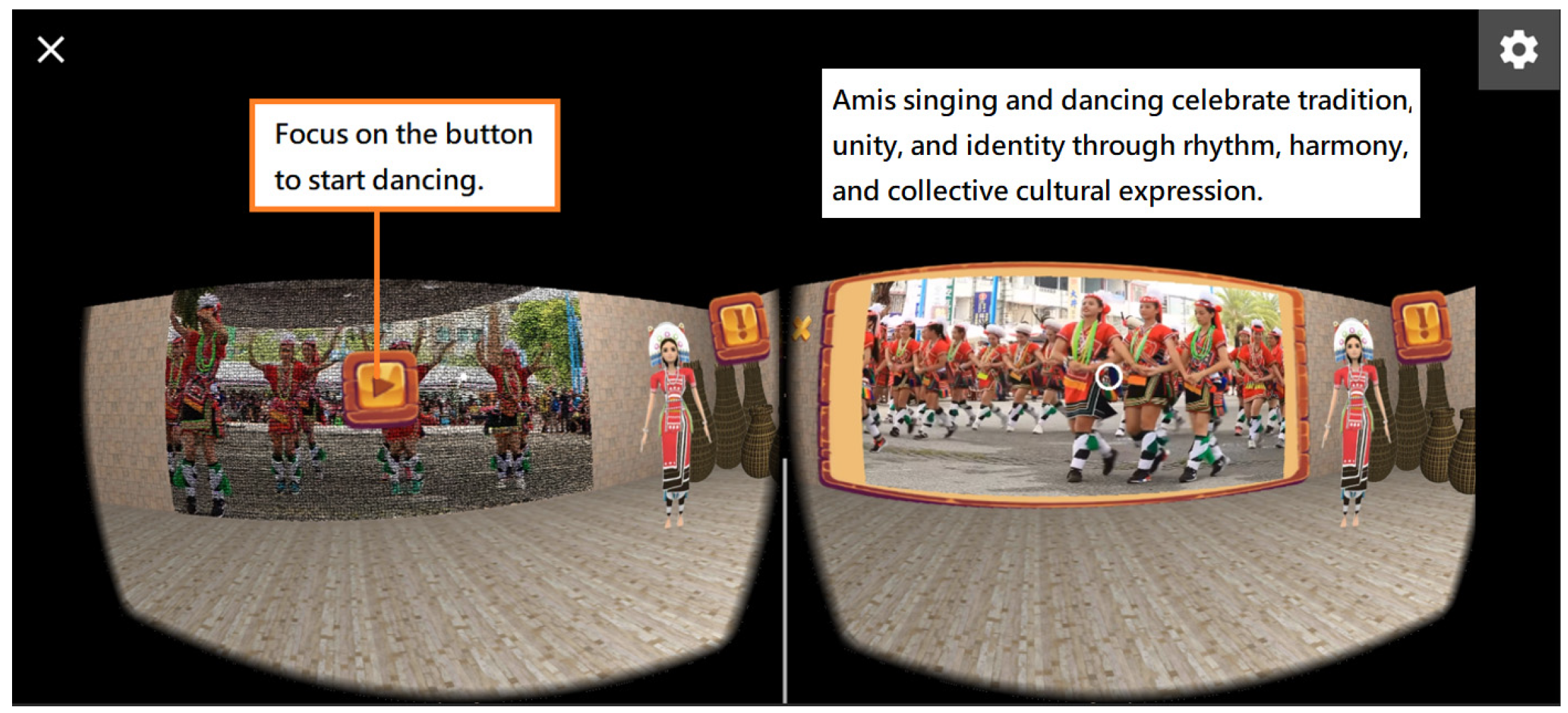

Section 3.4 illustrates virtual scene exploration with examples;

Section 3.5 details the Sun path simulation model;

Section 3.6 outlines the experimental design; and

Section 3.7 presents the research instruments.

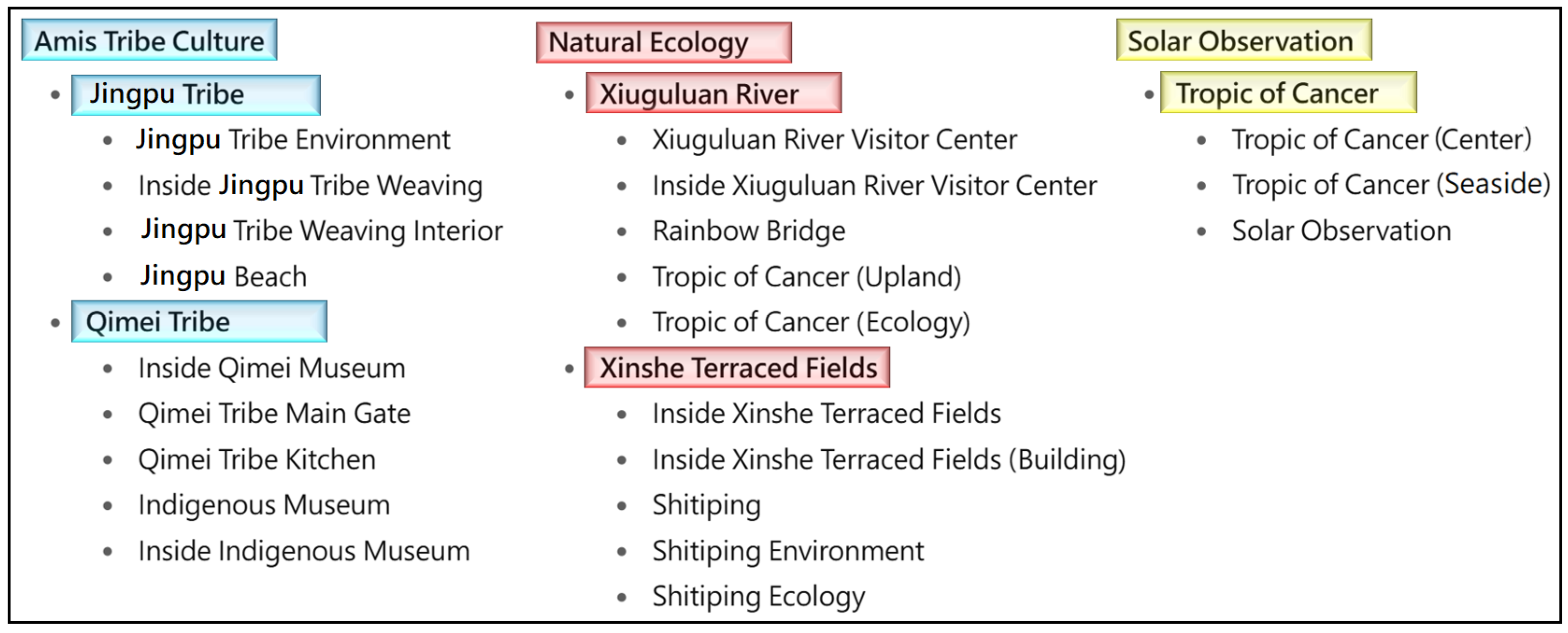

3.1. Learning Content

In this study, the learning content was designed based on Indigenous cultural and environmental resources of the Amis communities in Hualien, including the Kiwit and Jingpu tribes, the Tropic of Cancer Landmark, XinShe terraced fields, and the Xiuguluan River (Figure 3). To support exploratory learning, the VR system incorporated multiple observation points, each with interactive tasks integrating cultural, environmental, and astronomical knowledge (Table 1). The content was organized into three dimensions:

- Amis Indigenous culture: traditional customs, festivals, and artifacts

- Local environments: Xiuguluan River and XinShe terraced fields

- Solar observation: acquiring astronomical concepts through recording the Sun’s path

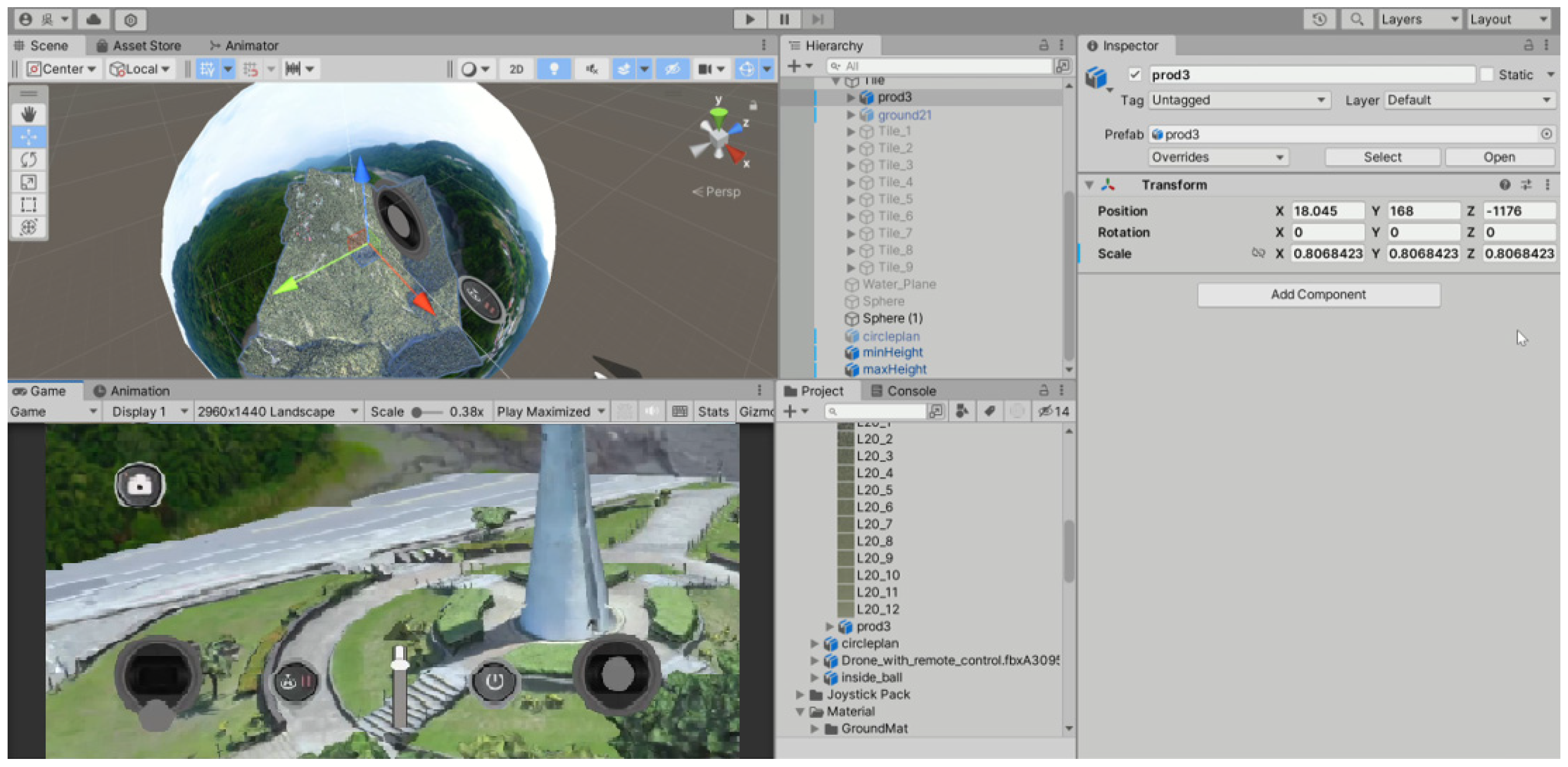

3.2. Scene Models and Virtual Drone Design

This section outlines the construction of physical scene models, the development of the virtual drone, and a description of its operation within the VR learning system.

3.2.1. Using a Physical Drone for Constructing Scene Models

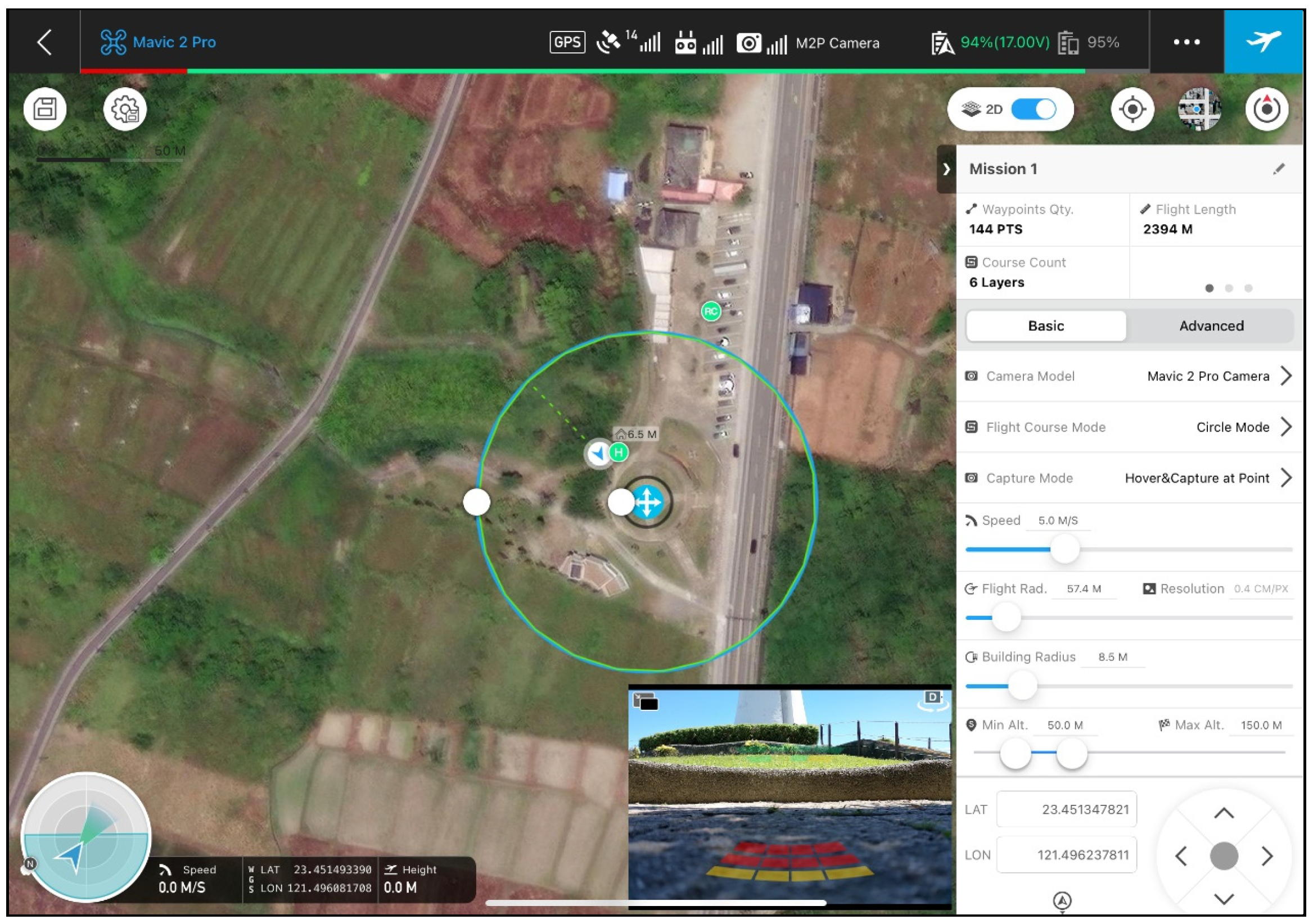

In this study, aerial imagery of the Amis Tribe region was captured using a DJI Mavic 2 Pro drone (Da-Jiang Innovations, Shenzhen, China) with a high-resolution camera (Figure 4). Its flight paths were planned in DJI GS Pro 2.0, and the obtained images were processed with Bentley iTwin Capture Modeler 1.8 for 3D virtual scene creation. The survey area spanned approximately 1000 m × 1000 m, with flight altitudes between 100 and 120 m above ground. To ensure comprehensive coverage of the whole observation region and key landmarks, a multi-angle circular flight strategy combining top-down and oblique imagery was employed. Adjacent images had 70–80% overlap, enhancing photogrammetry accuracy and the realism of reconstructed virtual scenes. In areas with significant terrain variation, multiple passes from different angles were required to capture the full complexity of the landscape, accurately reproducing the Amis Tribe and local environmental features.
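To give a sense of what the stated altitudes and overlap imply for image spacing, the following sketch applies the usual pinhole-camera relations for top-down (nadir) photographs. The camera values in the example (13.2 mm × 8.8 mm sensor, 10.26 mm focal length, 5472-pixel image width) are nominal figures assumed for illustration and should be checked against the camera's datasheet; the function names are hypothetical, and the calculation does not cover the oblique images in the circular flight strategy.

```python
def image_footprint_m(altitude_m: float, sensor_mm: float,
                      focal_mm: float) -> float:
    """Ground distance covered by one sensor dimension in a nadir photo."""
    return altitude_m * sensor_mm / focal_mm


def ground_sampling_distance_m(altitude_m: float, sensor_width_mm: float,
                               focal_mm: float, image_width_px: int) -> float:
    """Ground distance represented by a single pixel."""
    footprint = image_footprint_m(altitude_m, sensor_width_mm, focal_mm)
    return footprint / image_width_px


def photo_spacing_m(footprint_m: float, overlap: float) -> float:
    """Distance between exposures that yields the requested overlap (0 to 1)."""
    return footprint_m * (1.0 - overlap)


if __name__ == "__main__":
    # Assumed camera parameters, for illustration only.
    sensor_w, sensor_h, focal, width_px = 13.2, 8.8, 10.26, 5472
    for altitude in (100.0, 120.0):
        along_track = image_footprint_m(altitude, sensor_h, focal)
        gsd_cm = 100.0 * ground_sampling_distance_m(altitude, sensor_w,
                                                    focal, width_px)
        for overlap in (0.70, 0.80):
            spacing = photo_spacing_m(along_track, overlap)
            print(f"{altitude:.0f} m, {overlap:.0%} overlap: "
                  f"GSD {gsd_cm:.2f} cm/px, exposure every {spacing:.1f} m")
```

Higher overlap shortens the distance between exposures and therefore increases the number of images needed to cover the same area, which is the trade-off behind the 70–80% range.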

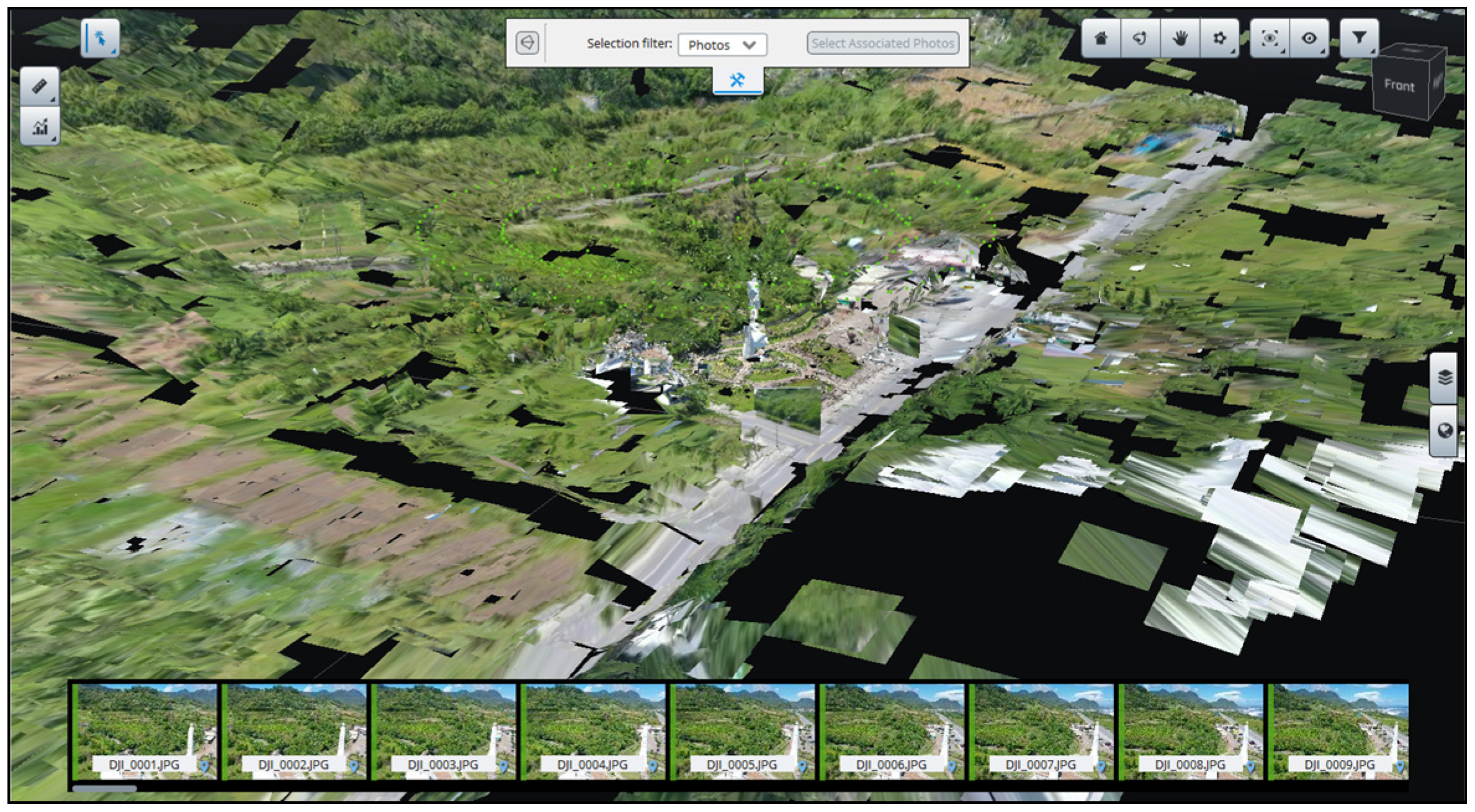

This study employed an image-based Structure-from-Motion (SfM) algorithm combined with Multi-View Stereo (MVS) to construct a high-resolution 3D model of the Tropic of Cancer Landmark and neighboring regions. The SfM–MVS approach has been widely used for 3D modeling [52,53], as it can automatically estimate camera poses and reconstruct both sparse and dense 3D structures from multiple overlapping images without requiring a specific sequence, ultimately generating measurable point clouds and mesh models. The main steps of this method are described as follows:

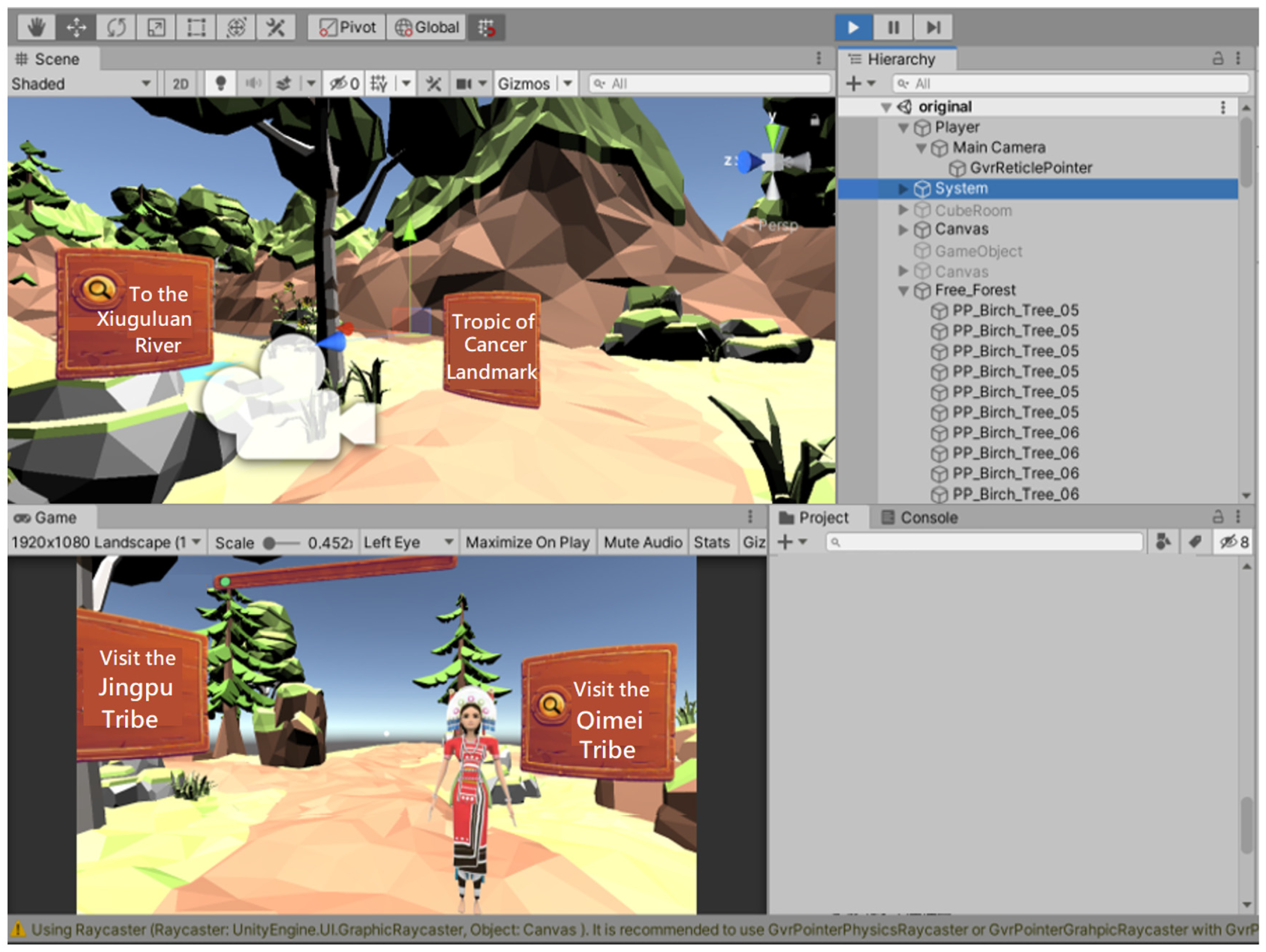
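One geometric idea underlying SfM is that a point seen in two overlapping images can be located in 3D once the camera positions and viewing directions are known. The sketch below illustrates this with simple midpoint triangulation of two viewing rays. It is a generic teaching example under stated assumptions (known camera centers and ray directions, a hypothetical function name) and does not represent the internal pipeline of iTwin Capture Modeler.

```python
def _dot(u, v):
    """Dot product of two 3D vectors given as sequences."""
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(origin1, dir1, origin2, dir2):
    """Midpoint of the shortest segment between two viewing rays."""
    w = [p - q for p, q in zip(origin1, origin2)]
    a, b, c = _dot(dir1, dir1), _dot(dir1, dir2), _dot(dir2, dir2)
    d, e = _dot(dir1, w), _dot(dir2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; no stable intersection")
    # Parameters of the closest points along each ray.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [o + s * k for o, k in zip(origin1, dir1)]
    p2 = [o + t * k for o, k in zip(origin2, dir2)]
    return [(u + v) / 2.0 for u, v in zip(p1, p2)]


if __name__ == "__main__":
    # Two camera centers 1 unit apart, both looking at the same ground point.
    target = (0.5, 0.2, 5.0)
    cam1, cam2 = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
    ray1 = [t - c for t, c in zip(target, cam1)]
    ray2 = [t - c for t, c in zip(target, cam2)]
    print(triangulate_midpoint(cam1, ray1, cam2, ray2))
```

With noisy image measurements the two rays no longer meet exactly, which is one reason high image overlap and multiple viewing angles improve reconstruction accuracy.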

3.2.2. Creating Virtual Drone and Controller Models

This study employed Blender 4.0 to create detailed 3D models of a virtual drone and its controller. The drone model was designed to resemble commercially available civilian drones, incorporating key components such as the fuselage, camera lens, propellers, and landing gear. Symmetrical elements were generated using Blender’s mirror function to optimize development efficiency. The controller was modeled with geometric primitives, such as cubes and cylinders, to represent buttons, joysticks, and shell recesses.

Texture maps for both the drone and controller were created using Adobe Photoshop 23.0 and applied within Blender to enhance visual realism (Figure 8). The completed 3D models were exported in the FBX format and imported into Unity Game Engine 2022.3.8f1, which supports cross-platform development for Windows, macOS, and multiplayer network applications. In Unity, C# programming was used to implement the user interface and operational functions of the virtual drone. Upon completion, the system could be compiled into an APK file for deployment on Android devices, enabling interactive, immersive experiences for drone operation and landscape observation.

The virtual drone and controller models were imported into the Unity game engine and integrated with the 3D terrain model, serving as interactive assets within the user interface and learning environment. This modeling process balanced realism, efficiency, and usability, providing a foundation for immersive educational applications such as drone operation training and observation of local environments (Figure 9). At the development stage, the virtual drone was designed and tested on a desktop computer, integrated with the virtual scenes and user interface for refinement, and subsequently exported to Android smartphones, enabling students to experience immersive interaction through Google Cardboard headsets within the VR360 learning system.

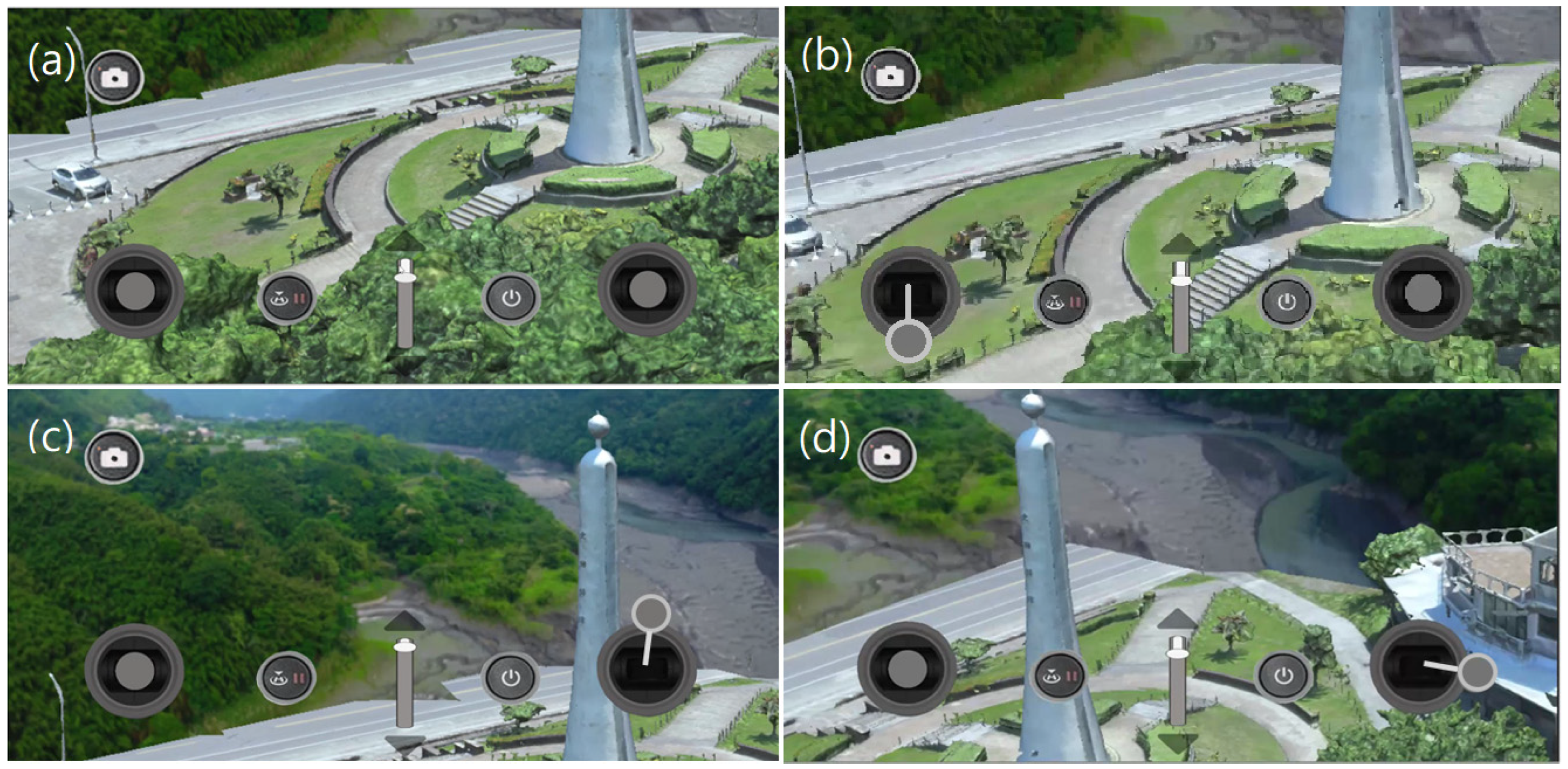

3.2.3. Virtual Drone Operation

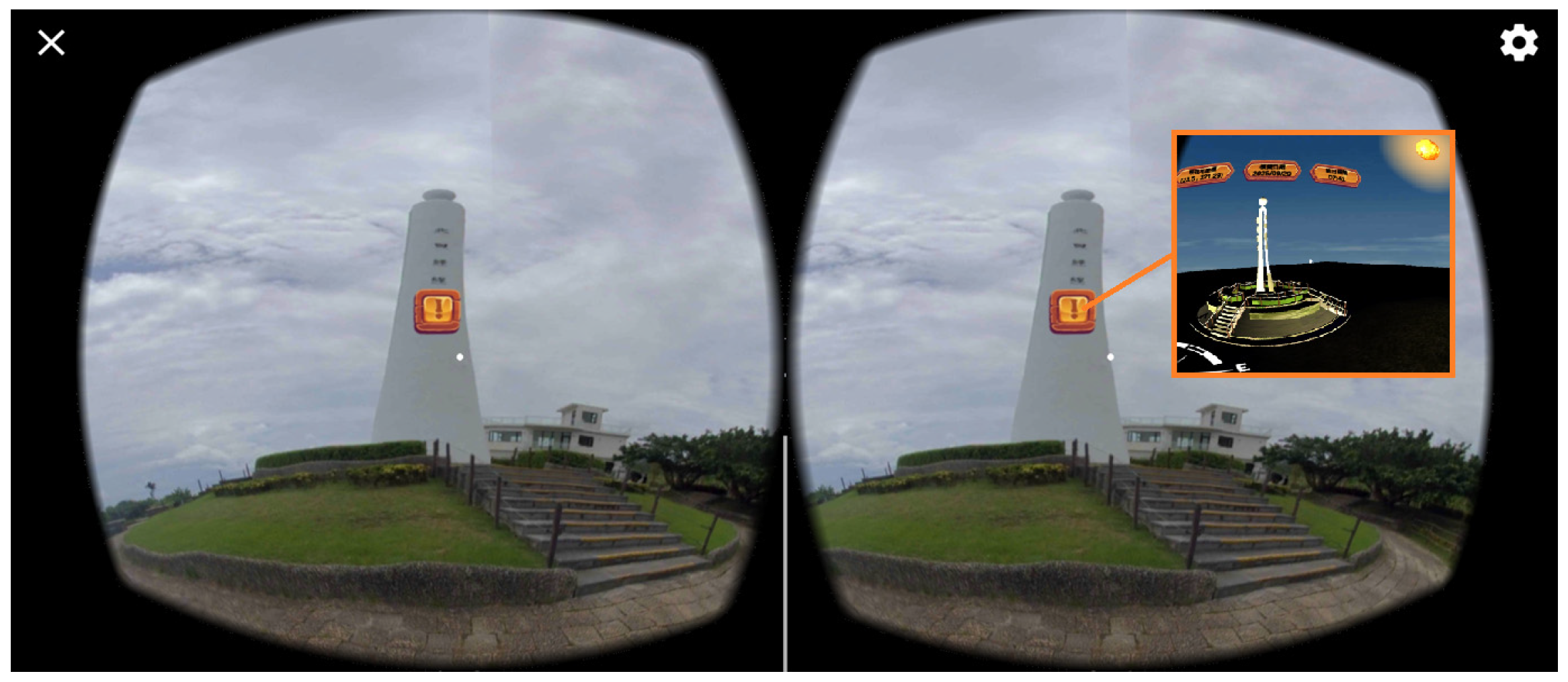

Users can freely control the virtual drone to explore and observe the landscape, for example, the Tropic of Cancer Landmark. When the drone exceeds the preset altitude limit, the VR system automatically switches to a first-person perspective, displaying the flight path over the reconstructed 3D landscape model. This design not only provides an intuitive and immersive operational experience but also enhances the realism of landscape visualization, improving learners’ spatial perception, situational awareness, and overall learning outcomes. For example, Figure 10a shows the drone hovering in mid-air, along with the shadow of the landmark. Figure 10b demonstrates that moving the left joystick downward causes the drone to retreat. Figure 10c shows that moving the right joystick upward raises the drone, revealing the top of the landmark. Figure 10d illustrates that moving the right joystick to the right rotates the drone clockwise, providing a comprehensive view of a house on the ground and surrounding features.
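The joystick behavior described above can be summarized as a small state update. The following Python sketch is illustrative only and is not the C# code used in the system: the speed constants, the altitude limit value, and the return to a third-person view below the limit are all assumptions, and the names are hypothetical.

```python
import math
from dataclasses import dataclass

# Hypothetical tuning values; the study does not report them.
MOVE_SPEED = 5.0        # horizontal speed, m/s
CLIMB_SPEED = 3.0       # vertical speed, m/s
YAW_RATE = 45.0         # rotation speed, degrees/s
ALTITUDE_LIMIT = 50.0   # preset altitude limit, m


@dataclass
class DroneState:
    x: float = 0.0             # east position, m
    y: float = 0.0             # north position, m
    altitude: float = 0.0      # height above ground, m
    heading_deg: float = 0.0   # clockwise from north
    first_person: bool = False


def step(state: DroneState, left_y: float, right_x: float,
         right_y: float, dt: float) -> DroneState:
    """Advance the drone by dt seconds; joystick axes are in [-1, 1].

    left_y  : up = advance, down = retreat
    right_y : up = ascend, down = descend
    right_x : right = rotate clockwise, left = rotate counterclockwise
    """
    heading = math.radians(state.heading_deg)
    forward = left_y * MOVE_SPEED * dt
    state.x += forward * math.sin(heading)
    state.y += forward * math.cos(heading)
    state.altitude = max(0.0, state.altitude + right_y * CLIMB_SPEED * dt)
    state.heading_deg = (state.heading_deg + right_x * YAW_RATE * dt) % 360.0
    # Switch to a first-person view once the altitude limit is exceeded
    # (reverting below the limit is an assumption of this sketch).
    state.first_person = state.altitude > ALTITUDE_LIMIT
    return state


if __name__ == "__main__":
    drone = DroneState(altitude=49.0)
    step(drone, left_y=0.0, right_x=0.0, right_y=1.0, dt=1.0)
    print(drone)
```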

3.3. VR Learning System

The VR learning system was developed for execution on smartphones using Cardboard headsets to explore Amis culture and local environments. From a VR360 panoramic perspective, learners can view real-world landscapes and interact with objects in the virtual space. By triggering actions such as rotation, observation, and information browsing, learners can engage with content to gain knowledge about Amis culture, local environments, and astronomical concepts such as the Sun and four seasons.

In the design process, panoramic images were captured using a 360-degree camera, while photographs and videos of local environments were taken with mobile devices. These resources were processed and integrated into the Unity game engine, where additional objects, including ecological images, texts, videos, 3D models, and UI/UX components, were embedded. Interactive feedback was provided via the Google VR SDK for Unity v1.200.0. The system was then exported to Android smartphones for use with Cardboard headsets, creating a lightweight and portable VR learning platform (Figure 11).

The VR system for exploring Amis culture and local environments was developed in a Microsoft Windows 10 environment using Visual Studio 2019, with programming and integration in C#. The system employed the Google VR SDK for Unity v1.200.0 (Figure 12), enabling trigger events and interactive functions for virtual objects. Figure 13 illustrates the system architecture, including the main themes and learning content. The virtual scene was created with Cinema 4D (C4D), images with Adobe Photoshop 23.0 and Illustrator 28.0, and videos edited using Adobe Premiere CS6 6.0.5. The development process involved:

Designing learning content focused on Amis culture and science education

Preparing photos, videos, and textual materials to support knowledge points

Developing virtual scenes and user interfaces within the VR learning system

Exporting the VR learning system and installing it on smartphones

3.4. System Operation

Prior to instruction, participants received a 15-min training session to ensure familiarity with the system operation. During the learning process, instructional prompts guided learners across five thematic modules, while worksheets were employed to record observations from each site. Interactive icons embedded within virtual scenes provided explanations of plants, animals, cultural features, or navigation cues (Figure 14). Task completion was tracked through status icons displayed in the interface, and the worksheets functioned as a structured review activity.

The VR learning system was designed based on the principles of situated learning. After entering the system, learners first accessed the main interface and then an exploration hall displaying the available observation sites and knowledge points (Figure 15). To complete the instructional sequence, learners were required to visit all sites, after which the system unlocked the assessment activities.
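The completion rule described above (status icons per site, with assessment unlocked only after every site has been visited) amounts to simple progress tracking. The sketch below shows one way to express it; it is not the system's C# implementation, the class and method names are hypothetical, and the third site name is a placeholder rather than an actual site from the study.

```python
class ExplorationHall:
    """Tracks which observation sites a learner has visited."""

    def __init__(self, site_names):
        self._sites = tuple(dict.fromkeys(site_names))  # keep order, drop duplicates
        if not self._sites:
            raise ValueError("at least one site is required")
        self._visited = set()

    def visit(self, site: str) -> None:
        if site not in self._sites:
            raise KeyError(f"unknown site: {site}")
        self._visited.add(site)

    def status(self) -> dict:
        """Map each site to True (visited) or False, as a status icon would show."""
        return {site: site in self._visited for site in self._sites}

    @property
    def assessment_unlocked(self) -> bool:
        return len(self._visited) == len(self._sites)


if __name__ == "__main__":
    hall = ExplorationHall([
        "Xiuguluan River",
        "Jingpu Tropic of Cancer Landmark",
        "Site 3 (placeholder)",
    ])
    hall.visit("Xiuguluan River")
    print(hall.status(), hall.assessment_unlocked)
```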

To enhance the authenticity of the situated learning experience, multiple interaction triggers were integrated into the system (Figure 16). Within each virtual scene, learners could freely navigate natural environments, access explanatory media, and complete observation tasks. This design seamlessly combined cultural and ecological knowledge with interactive VR experiences, thereby fostering engagement and supporting deeper learning. Moreover, the situated learning framework ensured that learners interacted with content in contextually meaningful ways, directly aligning with the study’s objectives of enhancing motivation, reducing cognitive load, and improving learning achievement.

The Xiuguluan River (Figure 17) originates from Xiuguluan Mountain in Taiwan’s Central Mountain Range and is the largest river in eastern Taiwan. It is also the only river that cuts through the Coastal Mountain Range. The section from Ruisui to Dagangkou stretches about 24 km, passing through more than twenty rapids and shoals, with majestic gorges and unique rock formations along the way, making it a popular destination for white-water rafting. It is one of Taiwan’s few unpolluted rivers, home to over 30 species of freshwater fish, among which the Formosan sucker (Acrossocheilus paradoxus), a protected species, is the most valuable. The lower valley preserves patches of tropical rainforest, where Formosan macaques are commonly seen, and more than 100 bird species have been recorded, reflecting the river’s rich biodiversity.

The Jingpu Tropic of Cancer Landmark (Figure 18), located in Fengbin Township, Hualien, is a significant geographical and cultural site marking the latitude of 23.5° N, where the Tropic of Cancer crosses Taiwan. Symbolizing the boundary between subtropical and tropical climate zones, the landmark provides educational value in both astronomy and geography. Designed as a sleek white tower, it highlights seasonal variations in solar altitude and serves as an ideal venue for observing the Sun’s trajectory, particularly during the summer solstice. The site attracts visitors for scientific exploration, cultural tourism, and its scenic coastal views. In the virtual scene, learners can observe the Tropic of Cancer Landmark rising prominently from the ground. By focusing on the designated information point, they can access a Sun path simulation model to explore variations in solar trajectories and the causes of the four seasons.

3.5. Sun Path Simulation Model

This study developed a Sun path simulation model to examine the solar trajectory within the virtual scene of the Jingpu Tropic of Cancer Landmark. The model integrates a virtual Sun synchronized with local time, a shadow-casting sundial for calculating solar azimuth and altitude angles, and a hemispherical sky model for recording the solar trajectory [56]. Based on the local position, date, and time, the model displays the Sun according to its azimuth and altitude angles, together with the corresponding shadow of the sundial. Learners can also calculate the solar altitude angle as the arctangent of the sundial’s height divided by its shadow length. They can compute the Sun’s position by measuring the sundial’s shadow length and azimuth, and record the position, current date, and time on the hemispherical model. By adjusting observation times, students can explore how the Sun’s trajectory varies across the seasons (Figure 19).
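The geometry behind the sundial measurement can be sketched in a few lines. Because the tangent of the solar altitude equals the sundial's height divided by its shadow length, the altitude follows from an arctangent. The sketch below also includes the standard spherical-astronomy relations for the Sun's altitude and azimuth given latitude, solar declination, and hour angle (15 degrees per hour from solar noon, negative in the morning). It is an illustration under these textbook formulas, not the model's actual implementation, and all function names are hypothetical.

```python
import math


def altitude_from_shadow(gnomon_height: float, shadow_length: float) -> float:
    """Solar altitude in degrees from a vertical gnomon and its shadow length."""
    return math.degrees(math.atan2(gnomon_height, shadow_length))


def shadow_length(gnomon_height: float, altitude_deg: float) -> float:
    """Shadow length cast by a vertical gnomon for a given solar altitude."""
    if altitude_deg <= 0.0:
        raise ValueError("the Sun must be above the horizon")
    return gnomon_height / math.tan(math.radians(altitude_deg))


def solar_position(latitude_deg: float, declination_deg: float,
                   hour_angle_deg: float) -> tuple[float, float]:
    """Return (altitude, azimuth) in degrees; azimuth is clockwise from north."""
    lat = math.radians(latitude_deg)
    dec = math.radians(declination_deg)
    ha = math.radians(hour_angle_deg)
    # Components of the Sun's direction in the local east/north/up frame.
    east = -math.cos(dec) * math.sin(ha)
    north = math.cos(lat) * math.sin(dec) - math.sin(lat) * math.cos(dec) * math.cos(ha)
    up = math.sin(lat) * math.sin(dec) + math.cos(lat) * math.cos(dec) * math.cos(ha)
    altitude = math.degrees(math.asin(max(-1.0, min(1.0, up))))
    azimuth = math.degrees(math.atan2(east, north)) % 360.0
    return altitude, azimuth


if __name__ == "__main__":
    # Three hours before solar noon at 23.5 N on an equinox (declination 0).
    alt, az = solar_position(23.5, 0.0, -45.0)
    print(f"altitude {alt:.1f} deg, azimuth {az:.1f} deg, "
          f"1 m gnomon shadow {shadow_length(1.0, alt):.2f} m")
```

The azimuth is undefined when the Sun is exactly at the zenith, so the value returned in that case should not be interpreted.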

The Sun path observation model was developed on a Windows 10 platform, using Java Development Kit (JDK) 21, Android Software Development Kit (SDK) 35.0, Eclipse IDE 4.34, and the Android Development Tools (ADT) 23.0.7 plug-in for Eclipse as the Android development environment. The Unity game engine was the primary development tool, with C# programming for system functionality. The ADT Bundle and Android SDK Tools 26.1.1 were installed to access mobile device sensors and display functionalities, supplementing Unity’s built-in capabilities. The model can provide timely and location-specific instructional content on the solar trajectory and transform recorded data into organized and meaningful knowledge. For example, it illustrates the relationship between the Sun–Earth relative position and changes in solar azimuth and altitude, integrating observational data with spatial cognition to build accurate concepts of the solar trajectory.

Teachers can guide students to explore the relationship between the Sun and the causes of the four seasons by explaining that Earth completes one revolution around the Sun in a year and that its axis is tilted at 23.5°. This tilt causes the position of direct sunlight on Earth’s surface to shift with the seasons. At the summer solstice, sunlight falls directly on the Tropic of Cancer; at the spring and autumn equinoxes, it strikes the equator; and at the winter solstice, it falls on the Tropic of Capricorn. Students can also learn from this model that Earth’s rotation produces the Sun’s apparent daily motion and is responsible for the alternation of day and night.
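The seasonal shift of direct sunlight translates into a simple rule for the Sun's altitude at solar noon: 90° minus the angular distance between the observer's latitude and the latitude receiving direct sunlight. The following sketch applies this rule to an observer on the Tropic of Cancer, using the rounded 23.5° tilt from the text; the function and dictionary names are hypothetical.

```python
AXIAL_TILT_DEG = 23.5  # rounded value used in the text

# Latitude receiving direct (overhead) sunlight at each seasonal marker.
SUBSOLAR_LATITUDE_DEG = {
    "spring equinox": 0.0,
    "summer solstice": AXIAL_TILT_DEG,    # Tropic of Cancer
    "autumn equinox": 0.0,
    "winter solstice": -AXIAL_TILT_DEG,   # Tropic of Capricorn
}


def noon_altitude_deg(observer_latitude_deg: float,
                      subsolar_latitude_deg: float) -> float:
    """Solar altitude at local solar noon (valid while the result is >= 0)."""
    return 90.0 - abs(observer_latitude_deg - subsolar_latitude_deg)


if __name__ == "__main__":
    observer = 23.5  # an observer on the Tropic of Cancer
    for season, subsolar in SUBSOLAR_LATITUDE_DEG.items():
        print(f"{season}: {noon_altitude_deg(observer, subsolar):.1f} deg")
```

For this observer the noon Sun stands overhead (90°) at the summer solstice, at 66.5° at the equinoxes, and at 43° at the winter solstice, which is the variation learners can trace on the hemispherical model.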

3.6. Experimental Design

This study adopted a quasi-experimental design to compare the learning effectiveness of the VR learning system with that of traditional teaching methods in the context of cultural and science education. Participants were assigned to either the experimental or control group. The experimental group received instruction using the VR learning system, whereas the control group was taught with PowerPoint slides and instructional videos. All instructional materials were designed by the researchers and delivered by the same teacher to ensure consistency in content and instructional style. Prior to instruction, both groups completed a pre-test to assess prior knowledge. The instructional process was divided into three sequential phases: preparation, instruction, and summary. During the preparation phase, both groups were introduced to solar-related knowledge through teacher explanations to stimulate learning motivation.

During the instructional phase, the two groups followed different approaches: the experimental group engaged in exploratory learning with the VR system and used worksheets to record observations, whereas the control group learned with PowerPoint slides and worksheets to reinforce understanding through observation and note-taking. After instruction, both groups completed a post-test to assess learning effectiveness. At the end of the experiment, all participants filled out learning motivation and cognitive load scales, and the experimental group additionally completed a system satisfaction questionnaire to evaluate their user experience with the VR learning system. The collected data were then analyzed to derive findings and draw research conclusions (Figure 20).

The VR group engaged in exploratory learning by navigating immersive 360° scenes and virtual drone operation to explore local cultural and ecological sites. They identified natural and Indigenous features, observed solar movements using the Sun path simulation model, calculated solar orientation and altitude angles, and recorded observations on worksheets. These tasks emphasized interactive exploration, spatial reasoning, and inquiry-based reflection. In contrast, the control group completed similar content-based tasks using PowerPoint slides and instructional videos without interactive components. Their activities focused on passive observation and note-taking rather than active manipulation, spatial analysis, or experiential engagement within a virtual environment.

3.7. Research Instruments

This study employed four research instruments to evaluate the effectiveness of different instructional approaches: an achievement test, a learning motivation scale, a cognitive load scale, and a system satisfaction questionnaire. The design rationale, item structure, and analytical methods for each instrument are described below.

3.7.1. Achievement Test

The achievement test was developed based on instructional content related to Amis culture and the local environment, including the solar observation activity. It comprised 16 multiple-choice questions, each with four options. To ensure both reliability and validity, the test items were reviewed by two science teachers with professional expertise. The test was administered before and after the instruction to allow pre–post comparison. For statistical analysis, this study adopted a one-way analysis of covariance (ANCOVA), using pre-test scores as the covariate, post-test scores as the dependent variable, and instructional methods as the independent variable, to examine whether different instructional approaches significantly affected students’ learning outcomes.
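The logic of a one-way ANCOVA with a single covariate can be sketched without a statistics package. The F statistic compares the residual error of a model that ignores group membership with that of a model using a common regression slope within groups. The example below is a minimal illustration, not the SPSS procedure used in this study: it assumes homogeneous regression slopes, omits the p-value, and uses made-up toy scores rather than study data.

```python
def _sums(x, y):
    """Return (Sxx, Sxy, Syy): sums of squares and cross-products about the means."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    syy = sum((b - my) ** 2 for b in y)
    return sxx, sxy, syy


def ancova_f(groups):
    """One-way ANCOVA F test for the group effect, adjusting for one covariate.

    groups: list of (pre_scores, post_scores) pairs, one pair per group.
    Returns (F, df_between, df_error).
    """
    k = len(groups)
    n = sum(len(pre) for pre, _ in groups)
    # Pooled within-group sums give the full model with a common slope.
    sxx_w, sxy_w, syy_w = (sum(v) for v in zip(*(_sums(pre, post) for pre, post in groups)))
    # Total sums (ignoring groups) give the reduced model.
    all_pre = [v for pre, _ in groups for v in pre]
    all_post = [v for _, post in groups for v in post]
    sxx_t, sxy_t, syy_t = _sums(all_pre, all_post)
    sse_full = syy_w - sxy_w ** 2 / sxx_w
    sse_reduced = syy_t - sxy_t ** 2 / sxx_t
    df_between, df_error = k - 1, n - k - 1
    f_value = ((sse_reduced - sse_full) / df_between) / (sse_full / df_error)
    return f_value, df_between, df_error


if __name__ == "__main__":
    # Toy scores for illustration only (not data from this study).
    control = ([5, 6, 7, 8], [6, 7, 9, 9])
    experimental = ([5, 6, 7, 8], [9, 10, 11, 13])
    f_value, df1, df2 = ancova_f([control, experimental])
    print(f"F({df1}, {df2}) = {f_value:.2f}")
```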

3.7.2. Learning Motivation Scale

To assess students’ motivation under different instructional modes, this study employed the learning motivation scale developed by Hwang, Yang, and Wang [57]. The scale used a five-point Likert format (1 = strongly disagree to 5 = strongly agree), with higher scores indicating greater motivation. It measured multiple dimensions, including learning interest, preference for challenge, and willingness to sustain effort. Data were analyzed using SPSS Statistics 25, calculating group means and conducting independent-samples t-tests to examine significant differences between groups.
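For readers less familiar with the statistics involved, the sketch below computes an independent-samples t statistic (equal variances assumed) and Cohen's d, the effect size reported in the Discussion. It is a minimal illustration using Python's standard library rather than SPSS, it omits the p-value, and the ratings are made-up toy values, not data from this study.

```python
import math
from statistics import mean, variance


def pooled_sd(a, b) -> float:
    """Pooled standard deviation of two independent samples."""
    na, nb = len(a), len(b)
    return math.sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))


def cohens_d(a, b) -> float:
    """Standardized mean difference between two groups."""
    return (mean(a) - mean(b)) / pooled_sd(a, b)


def student_t(a, b) -> tuple[float, int]:
    """Independent-samples t statistic and degrees of freedom (equal variances)."""
    se = pooled_sd(a, b) * math.sqrt(1 / len(a) + 1 / len(b))
    return (mean(a) - mean(b)) / se, len(a) + len(b) - 2


if __name__ == "__main__":
    # Toy Likert-scale means for illustration only (not data from this study).
    experimental = [4, 5, 4, 5]
    control = [3, 4, 3, 4]
    t_value, df = student_t(experimental, control)
    print(f"t({df}) = {t_value:.2f}, d = {cohens_d(experimental, control):.2f}")
```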

3.7.3. Cognitive Load Scale

Cognitive load was assessed using the scale originally developed by Thomashow [58] and later revised by Hwang, Yang, and Wang [57]. The scale included eight items rated on a five-point Likert scale (1 = strongly disagree to 5 = strongly agree) and comprised two dimensions: mental load, assessing the difficulty of the learning content or task, and mental effort, evaluating the cognitive resources invested, with lower scores indicating lower cognitive load. Data were analyzed using SPSS Statistics 25 by calculating mean scores and performing independent-samples t-tests to determine whether the instructional methods produced significant differences in cognitive load.

3.7.4. System Satisfaction Questionnaire

The system satisfaction questionnaire was administered exclusively to the experimental group to assess their perceptions and user experiences with the VR learning system. Based on the Technology Acceptance Model (TAM) proposed by Davis [59], the questionnaire used a five-point Likert scale (1 = strongly disagree to 5 = strongly agree), with higher scores indicating greater acceptance and satisfaction. Descriptive statistics, including means and standard deviations, were calculated to evaluate perceived usefulness and ease of use, providing insights for future system improvements.

5. Discussion

This study integrates VR360 and drone technology to develop a VR learning system for elementary science education on Amis culture and local environments. Learning achievement results confirmed the effectiveness of VR-based instruction, with the experimental group showing substantially greater post-test gains. The integration of immersive exploration with structured worksheets likely facilitated deeper processing by enabling embodied interaction with abstract concepts, thereby enhancing understanding through sensorimotor grounding and constructivist learning processes.

The VR learning system significantly enhanced student motivation compared with traditional slide-based instruction (M = 4.24 vs. 3.49, Cohen’s d = 1.16), particularly by stimulating intrinsic interest and willingness to engage with challenging tasks. This effect suggests that the VR system reduces perceived difficulty through multimodal feedback by minimizing abstract barriers, thereby reinforcing the competence dimension of Self-Determination Theory. In contrast, improvements in extrinsic motivation were less pronounced, indicating that the VR system primarily strengthens learners’ intrinsic drive rather than externally driven expectations. This distinction is important for fostering sustained engagement and effective knowledge transfer.

Contrary to common concerns about cognitive overload, the experimental group reported reduced mental effort, supporting Cognitive Load Theory when multimedia principles are effectively applied. The VR system appears to alleviate extraneous load through coherent presentation and reduce intrinsic load for spatial concepts via 3D visualization, thereby enabling deeper processing. This finding reinforces the perspective that immersive environments can reduce cognitive burden by providing contextualized and intuitive representations. Minor challenges related to time constraints and device familiarity were noted, reflecting typical patterns of technology adoption. However, these issues were outweighed by sustained cognitive benefits, emphasizing the importance of adequate orientation and practice prior to full classroom implementation.

Learners reported high satisfaction with the VR learning system (M = 4.31), particularly emphasizing its usefulness (M = 4.40) and ease of use (M = 4.23), thereby validating the Technology Acceptance Model and supporting its sustainable adoption. Although some students required additional guidance in mastering the VR device, as reflected in lower ratings for acquisition speed (M = 3.96), the overall positive feedback demonstrates the feasibility of integrating VR and drone technology into elementary science education and emphasizes the importance of providing adequate training guidelines.

The findings suggest that the VR system enhances learning through multiple mechanisms, including increased intrinsic motivation, effective cognitive load management, and embodied engagement with content. These results offer evidence-based guidance for instructional design by emphasizing established learning principles rather than relying solely on technological novelty. However, this study has several limitations: its short duration precludes assessment of long-term retention, the small sample size restricts generalizability, and individual differences were not examined.

6. Conclusions and Future Work

This study integrated VR360 and drone technology to develop a VR system for teaching Amis culture and local environments within elementary natural science curricula. The results revealed that, when combined with task-based worksheets and observational activities, the VR system significantly enhanced students’ learning motivation, reduced cognitive load, and improved learning achievement compared with slide-based instruction. Learners also reported high satisfaction, indicating that the system enhanced their understanding of cultural and astronomical concepts while fostering curiosity and engagement. Nonetheless, some usability challenges related to device familiarity were identified, suggesting the need for further training or design refinements.

Future research could proceed in several directions. One promising approach is to explore the system’s cultural scalability by adapting it for other Indigenous communities in Taiwan and beyond, thereby evaluating its effectiveness across diverse cultural and educational contexts. Another primary direction is to enrich the system with advanced content formats, including interactive 3D celestial models, dynamic simulations, and augmented-reality overlays, to enhance immersion and provide more authentic, engaging learning experiences. Longitudinal studies are also needed to assess knowledge retention, learning transfer, and the sustained impact of VR on motivation and engagement. Furthermore, investigating individual differences—such as prior knowledge, spatial ability, and learning styles—is essential for refining the system to support differentiated instruction and maximize its effectiveness for diverse learners.

In addition, comparative studies could examine the system’s effectiveness relative to other immersive technologies, such as augmented reality, mixed reality, or desktop VR, to clarify its unique strengths and limitations. From a practical perspective, research on classroom management strategies, teacher training models, and large-scale deployment is also needed to support real-world adoption. Finally, given the affordability and potential of Cardboard VR and virtual drones in education, the VR system could be applied to informal learning, cultural preservation, tourism, and professional training, thereby extending its impact beyond the classroom.

In Taiwan, Indigenous students represent a small population in elementary schools, limiting participant recruitment and the broader applicability of findings. Subsequent studies could include multiple schools across regions, increasing participant numbers and diversity, to enhance representativeness and validate the VR learning system’s effectiveness across broader Indigenous and non-Indigenous student populations.

Future work should explore the sustained effects of VR instruction across diverse populations, evaluate cost-effectiveness, and investigate improvements in scalability and usability to realize its educational potential and support sustainable classroom implementation. In addition, the research could actively involve Amis community members in co-designing VR content, providing feedback, and guiding cultural interpretation to ensure authentic representation and avoid one-sided perspectives.