Automatic Production of Deep Learning Benchmark Dataset for Affine-Invariant Feature Matching

Abstract

1. Introduction

- An effective algorithm for the production of a deep learning dataset for affine-invariant feature matching.

- The most extensive deep learning benchmark dataset to date for affine-invariant feature matching.

- A distribution evaluation model that considers both global and local image contents to accurately evaluate the spatial distribution quality of matching points.

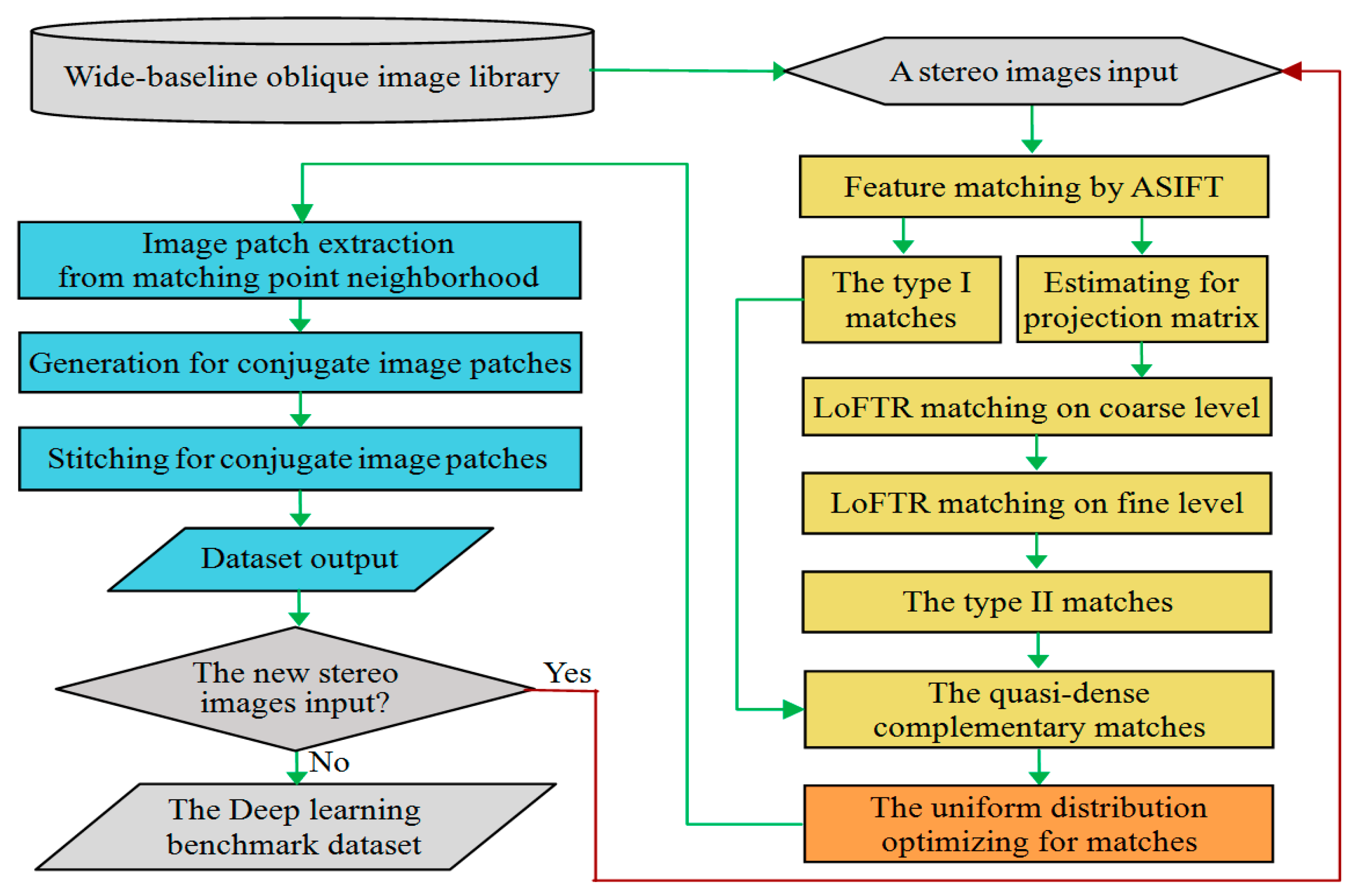

2. Data and Methods

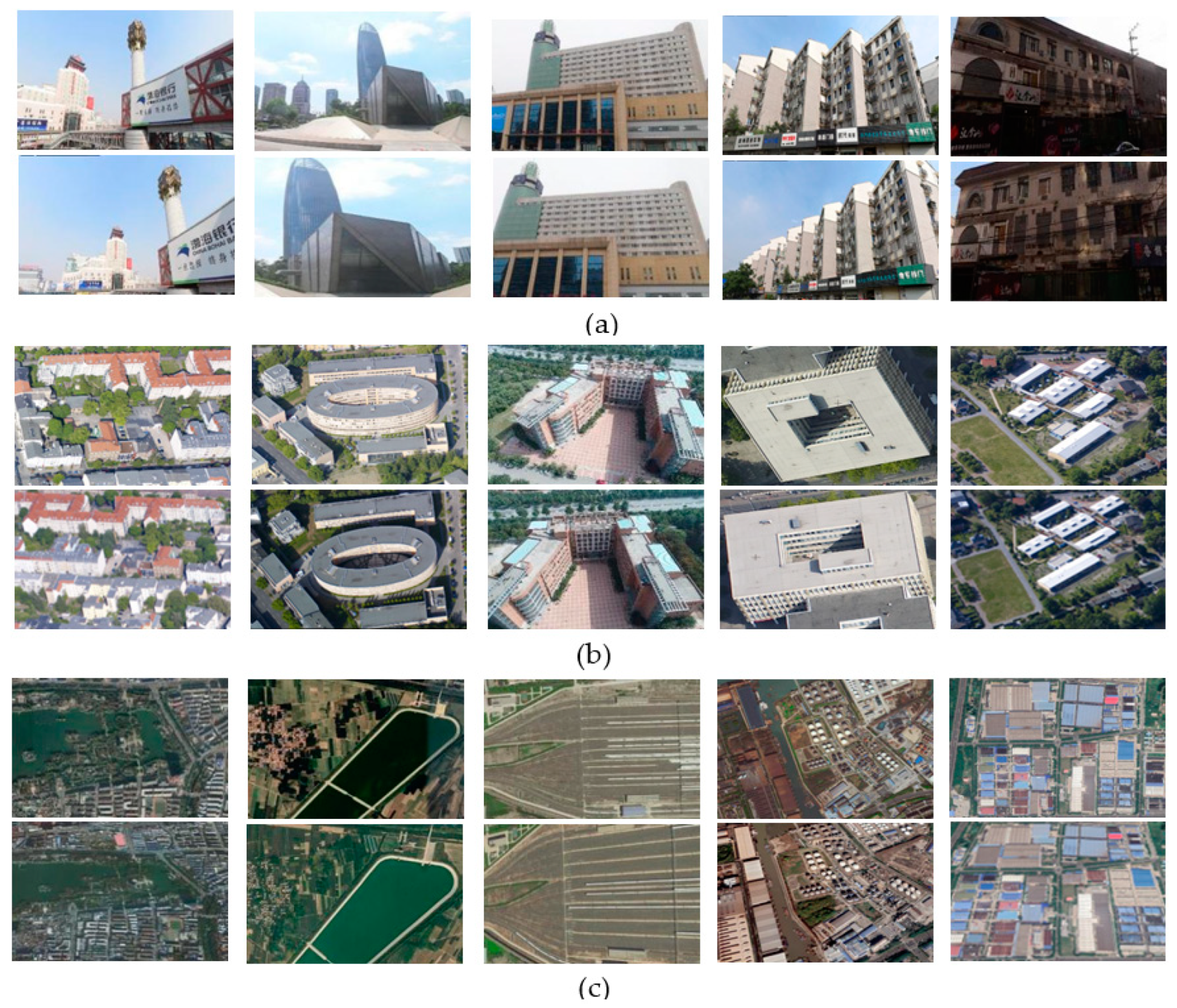

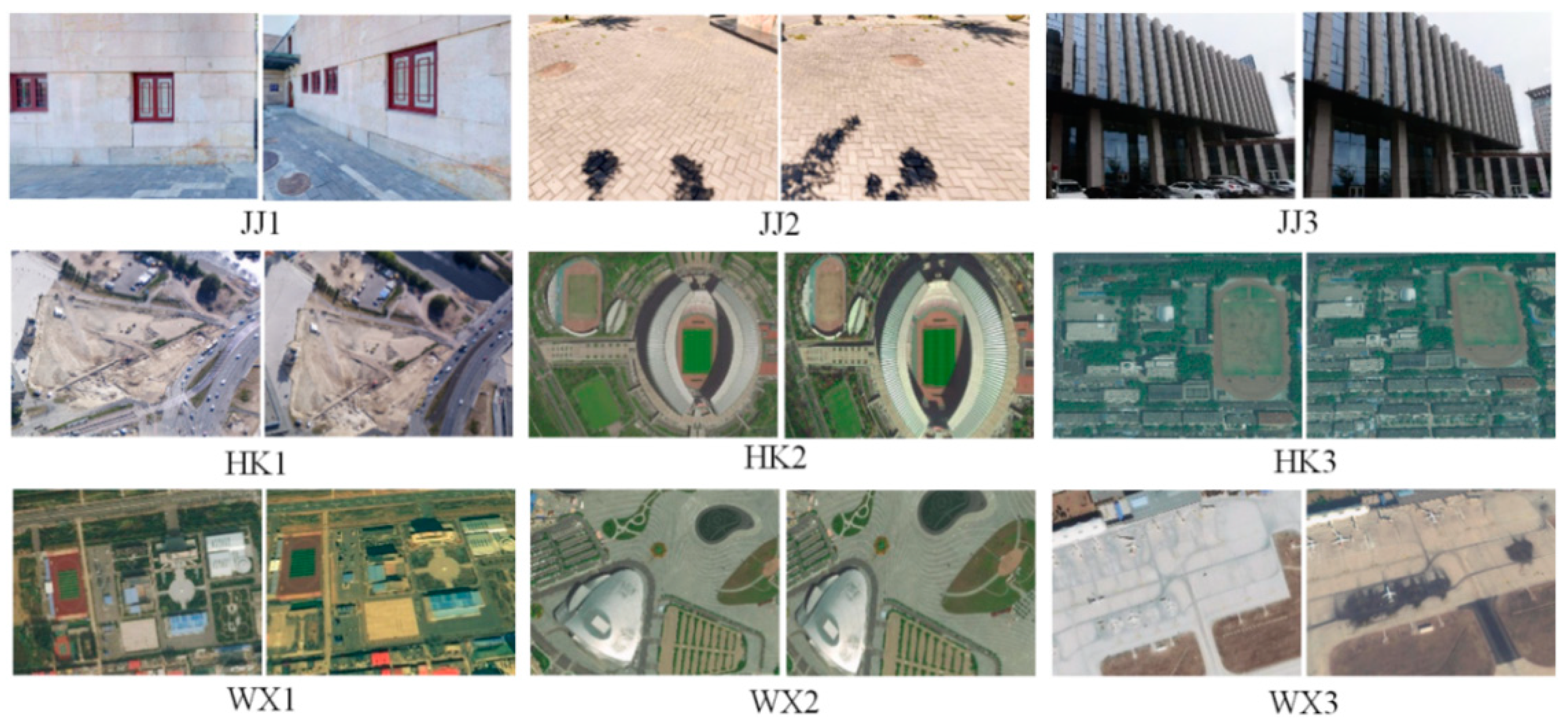

2.1. Wide-Baseline Oblique Image Library

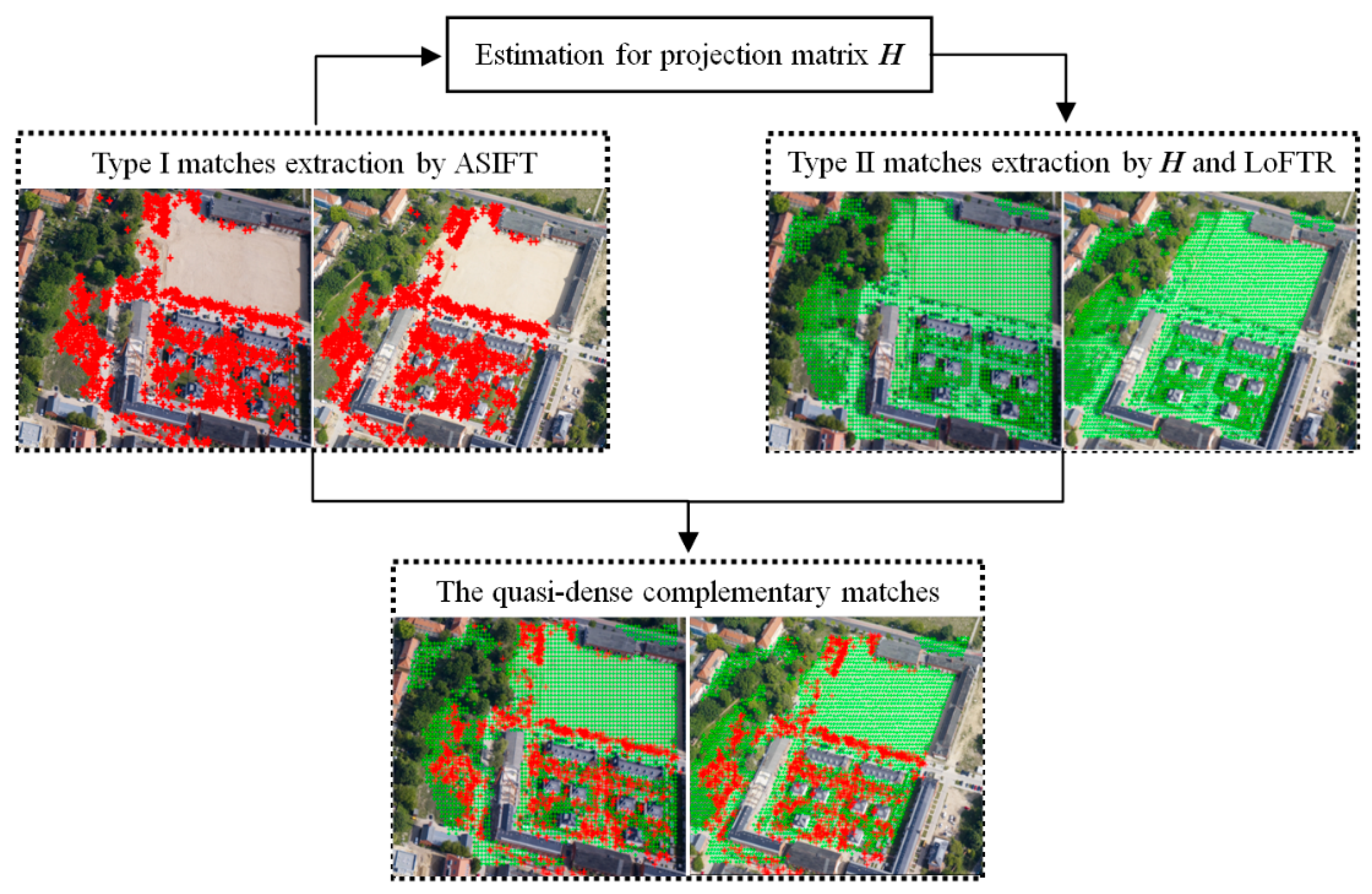

2.2. Extraction of Quasi-Dense Conjugate Points Using Complementary Features

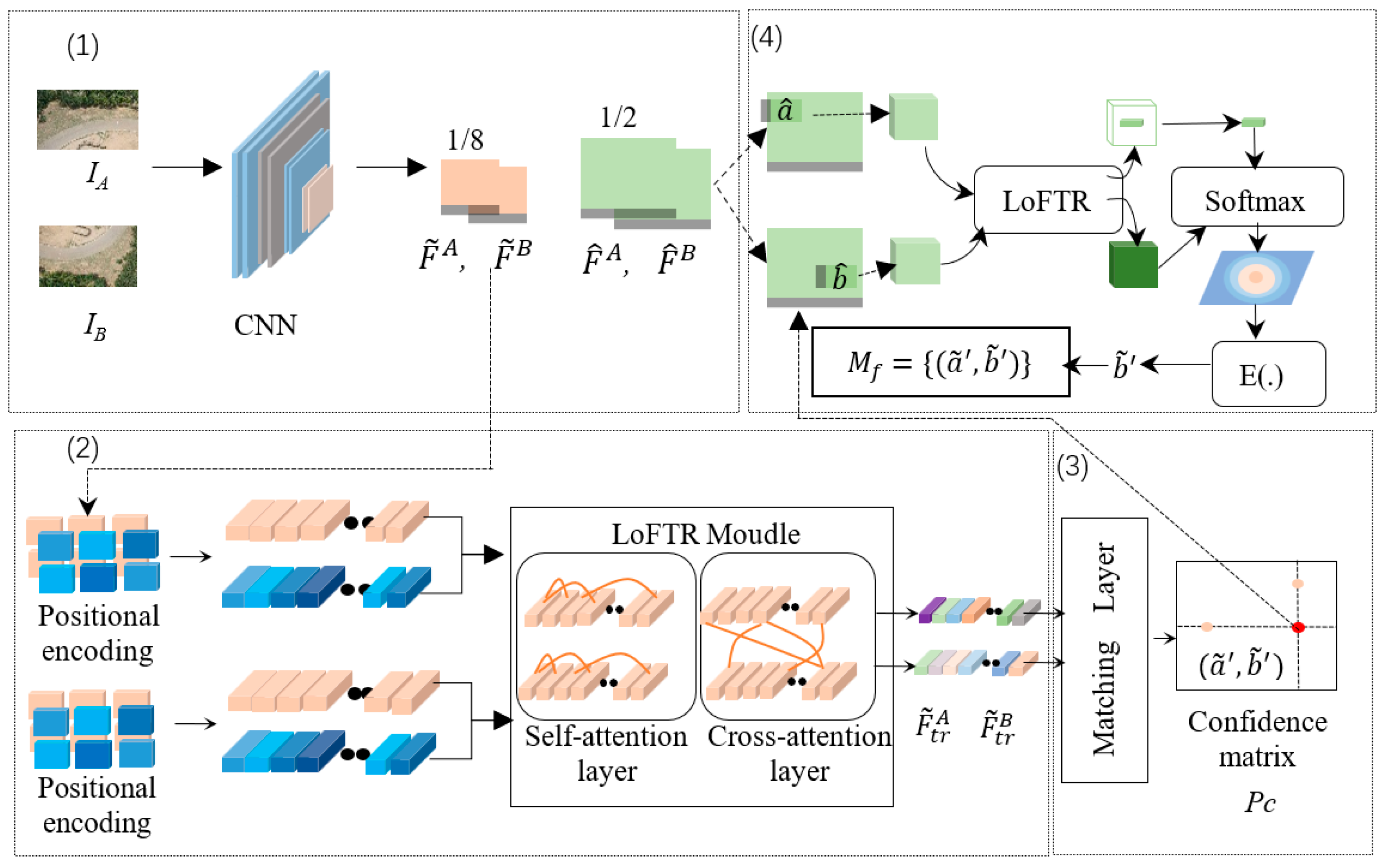

- (1) One image pair, IA and IB, was input into the CNN for feature extraction, which produced coarse (1/8) and fine (1/2) feature maps for each of the two images.

- (2) The two coarse feature maps were flattened to one-dimensional vectors, and positional encodings were produced for them. The vectors with positional encoding were then processed by the LoFTR module, which had two self-attention layers and two cross-attention layers. Finally, two texture- and feature-enhanced maps with high discrimination were output.

- (3) The differentiable matching layer was used to match the two high-discrimination feature maps and obtain a confidence matrix Pc. The matches in Pc were then selected according to the confidence threshold (0.2 in our experiment) and the mutual nearest neighbor criterion, which yielded the coarse-level matching prediction Mc.

- (4) For each coarse-level matching prediction (i, j) in Mc, local corresponding windows with a size of 5 × 5 pixels were captured around the two matched positions on the fine-level feature maps. All coarse matches were refined in this way within the fine-level local windows, and the final sub-pixel matching prediction Mf was output.
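The selection rule in step (3) can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `mutual_nearest_matches`, the nested-list representation of the confidence matrix Pc, and the strict "greater than" comparison against the threshold are assumptions made for the example.

```python
from typing import List, Tuple


def mutual_nearest_matches(
    conf: List[List[float]], threshold: float = 0.2
) -> List[Tuple[int, int]]:
    """Select coarse matches from a confidence matrix.

    A pair (i, j) is kept when conf[i][j] is the largest value in row i,
    the largest value in column j (mutual nearest neighbor), and exceeds
    the confidence threshold. The strict comparison is an assumption.
    """
    if not conf or not conf[0]:
        return []
    n_rows, n_cols = len(conf), len(conf[0])

    # Best column for every row, and best row for every column.
    row_best = [max(range(n_cols), key=lambda j: conf[i][j]) for i in range(n_rows)]
    col_best = [max(range(n_rows), key=lambda i: conf[i][j]) for j in range(n_cols)]

    matches = []
    for i, j in enumerate(row_best):
        if col_best[j] == i and conf[i][j] > threshold:
            matches.append((i, j))
    return matches


# Hypothetical 3 x 3 confidence matrix used only for illustration.
example_conf = [
    [0.70, 0.10, 0.05],
    [0.15, 0.12, 0.60],
    [0.05, 0.18, 0.10],
]
print(mutual_nearest_matches(example_conf))
```

In the example matrix, the pair (2, 1) is a mutual nearest neighbor but is rejected because its confidence of 0.18 falls below the 0.2 threshold, so only two coarse matches survive.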

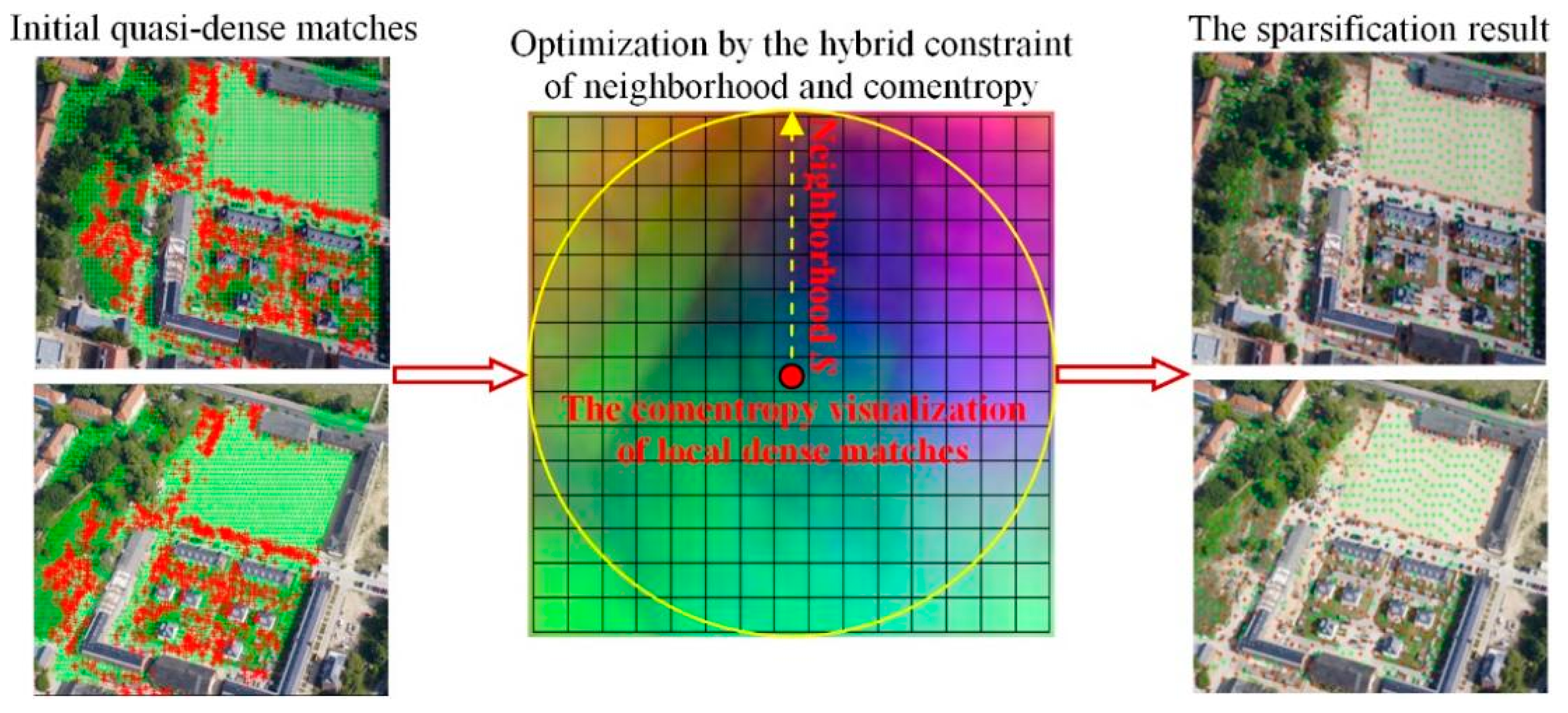

2.3. Generation of Uniform Matching Points

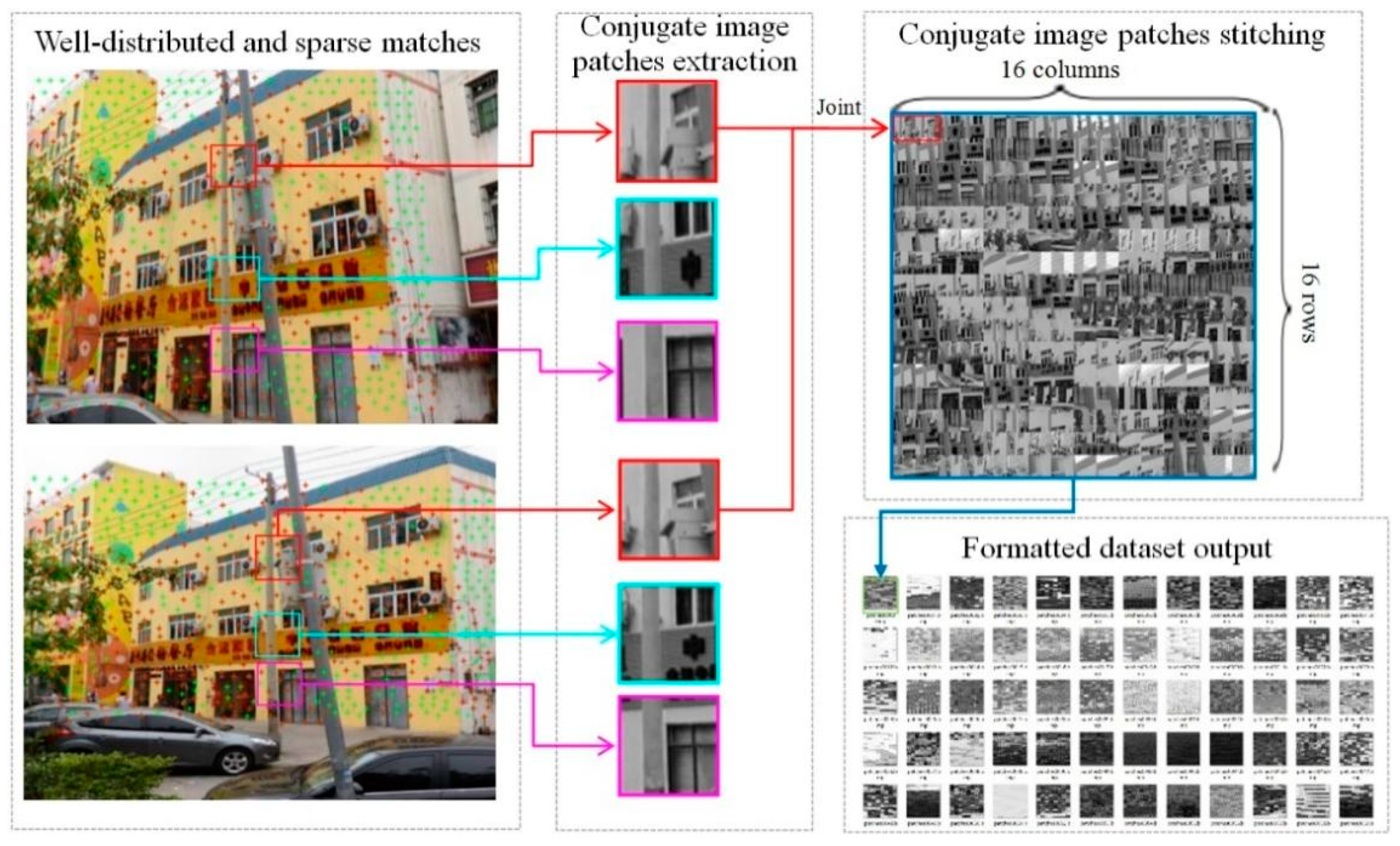

2.4. Automatic Production of Dataset

3. Results and Discussion

3.1. Model Training

3.2. Test Methods

3.3. Evaluation Metrics

- (1) Number of correct matching points. Fifteen pairs of uniformly distributed corresponding points were manually selected from stereo image pairs, the fundamental matrix F0 was estimated from them by least-squares adjustment, and this was regarded as the ground truth [26,27,28]. The known fundamental matrix F0 was then used to calculate the error of each pair of corresponding points according to Equation (4), and a threshold (set to 2.0) was imposed on the error. If the error was less than the threshold, the pair of points was regarded as a correct pair of matching points and was included in the count of correct matching points.

- (2) Match correct rate α. This was defined as the ratio of the number of correct matching points to k, where k denotes the total number of matching points.

- (3) Matching root-mean-square error (pixel). This was calculated according to Equation (5).

- (4) Matching spatial distribution quality. Zhu et al. generated a Delaunay triangulation [29] from the matching points and then evaluated the quality of the spatial distribution of the matching points according to the area and shape of each triangle, as shown in Equation (6).
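The first three metrics can be sketched in a few lines of Python. The error equations are not reproduced in this text, so the sketch rests on two stated assumptions: the error of a match is taken to be the distance from the point in the second image to the epipolar line induced by F0, and the root-mean-square error is computed over all matches passed in. The function names and the sample fundamental matrix are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def epipolar_error(F: Sequence[Sequence[float]], p: Point, q: Point) -> float:
    """Distance (pixels) from q in image B to the epipolar line F @ p of p in image A.

    This point-to-line distance is an assumed form of the matching error.
    """
    x, y = p
    u, v = q
    # Epipolar line coefficients (a, b, c) = F @ [x, y, 1].
    a = F[0][0] * x + F[0][1] * y + F[0][2]
    b = F[1][0] * x + F[1][1] * y + F[1][2]
    c = F[2][0] * x + F[2][1] * y + F[2][2]
    norm = math.hypot(a, b)
    if norm == 0.0:
        return math.inf  # degenerate line, treated as an incorrect match
    return abs(a * u + b * v + c) / norm


def evaluate_matches(
    F: Sequence[Sequence[float]],
    matches: List[Tuple[Point, Point]],
    threshold: float = 2.0,
) -> Tuple[int, float, float]:
    """Return (number of correct matches, match correct rate in %, RMSE in pixels)."""
    if not matches:
        return 0, 0.0, 0.0
    errors = [epipolar_error(F, p, q) for p, q in matches]
    n_correct = sum(1 for e in errors if e < threshold)
    rate = 100.0 * n_correct / len(errors)
    # Assumption: the RMSE is taken over every supplied match.
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return n_correct, rate, rmse


# Hypothetical fundamental matrix of a rectified pair (pure horizontal shift),
# for which the error reduces to the row difference |y - v|.
F0 = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
sample = [((10.0, 20.0), (15.0, 20.0)), ((30.0, 40.0), (33.0, 41.0))]
print(evaluate_matches(F0, sample))
```

A symmetric variant that averages the distances measured in both images would be an equally plausible reading; the one-sided form is used here only to keep the sketch short.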

3.4. Results and Analysis

- (1)

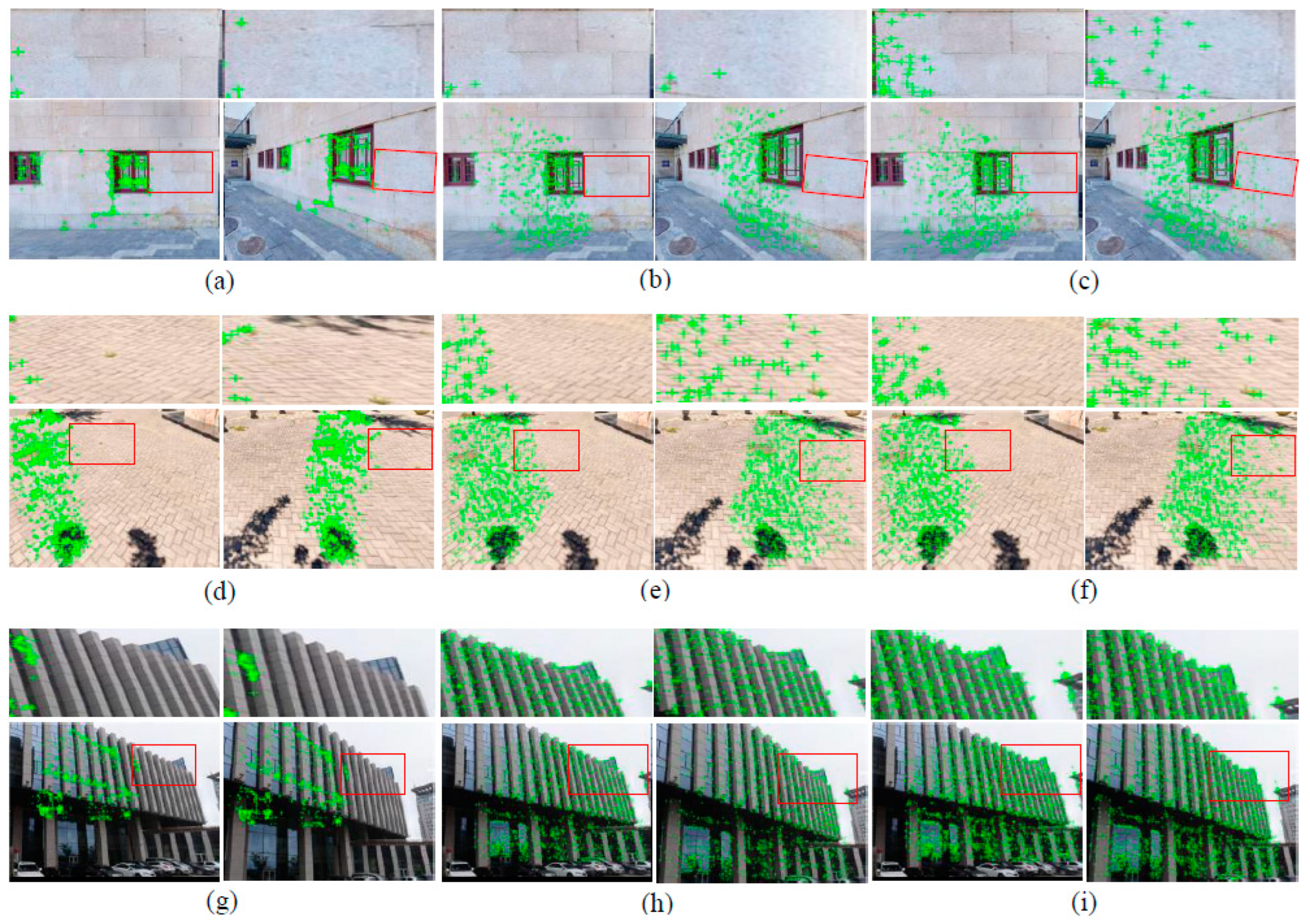

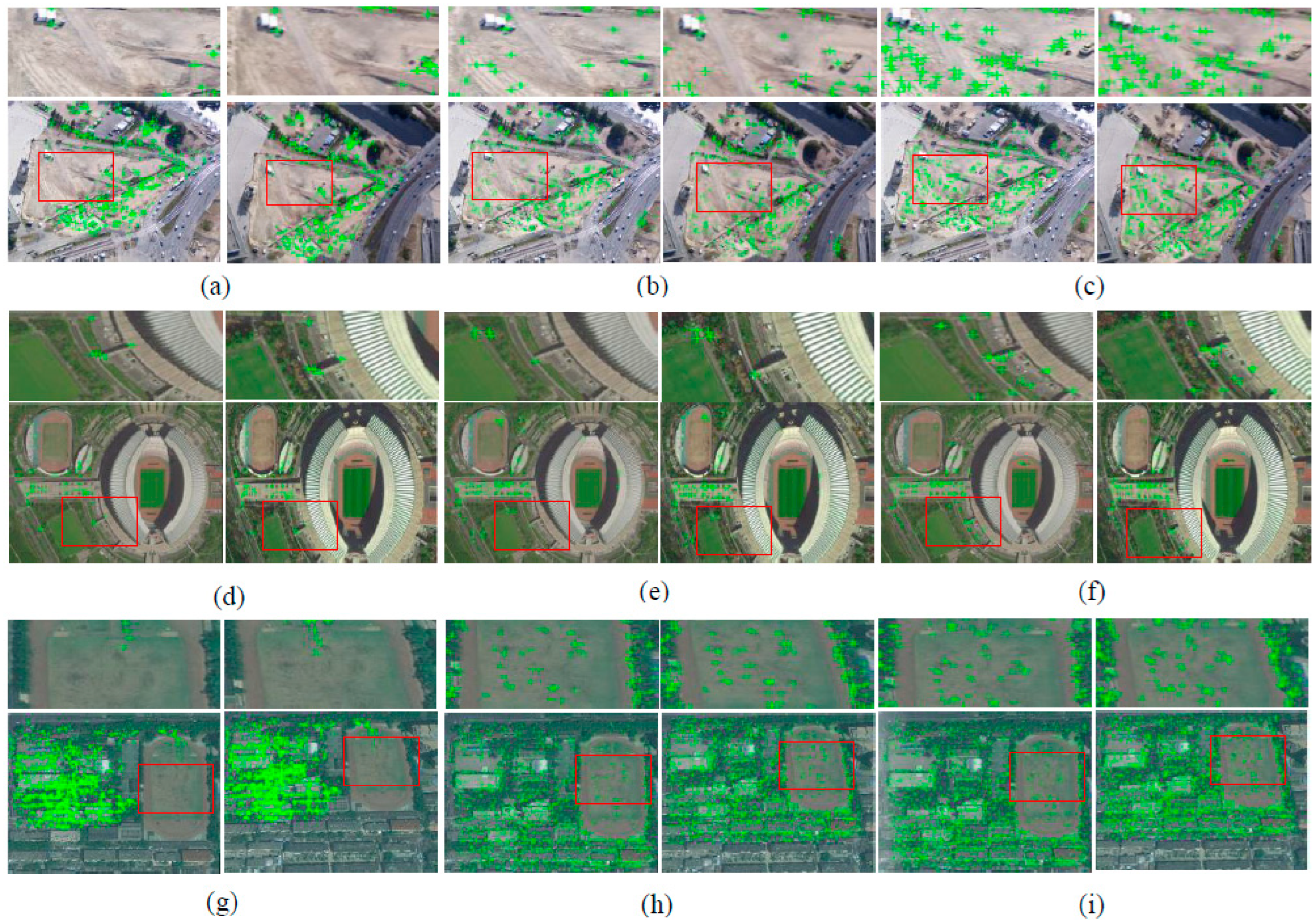

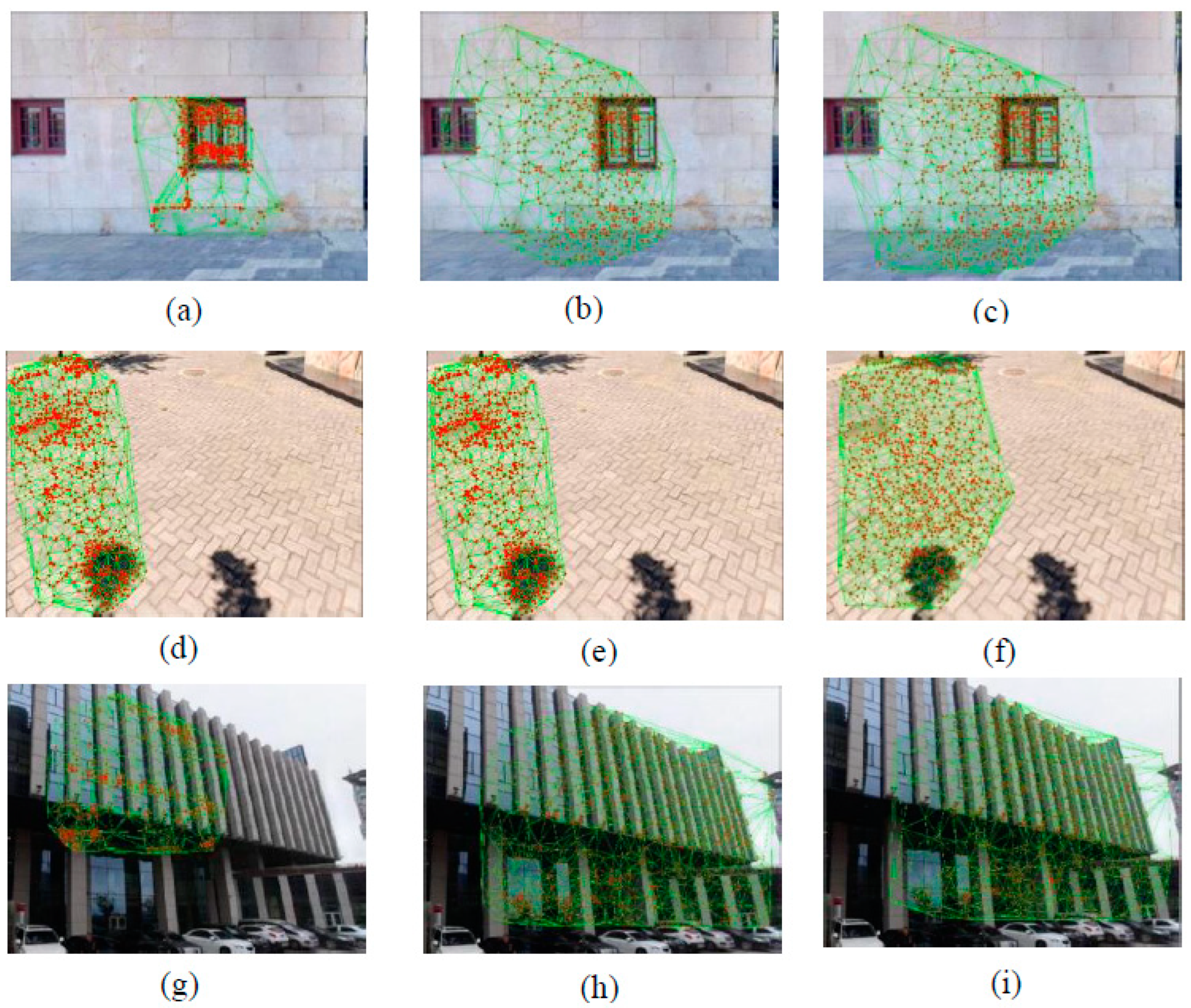

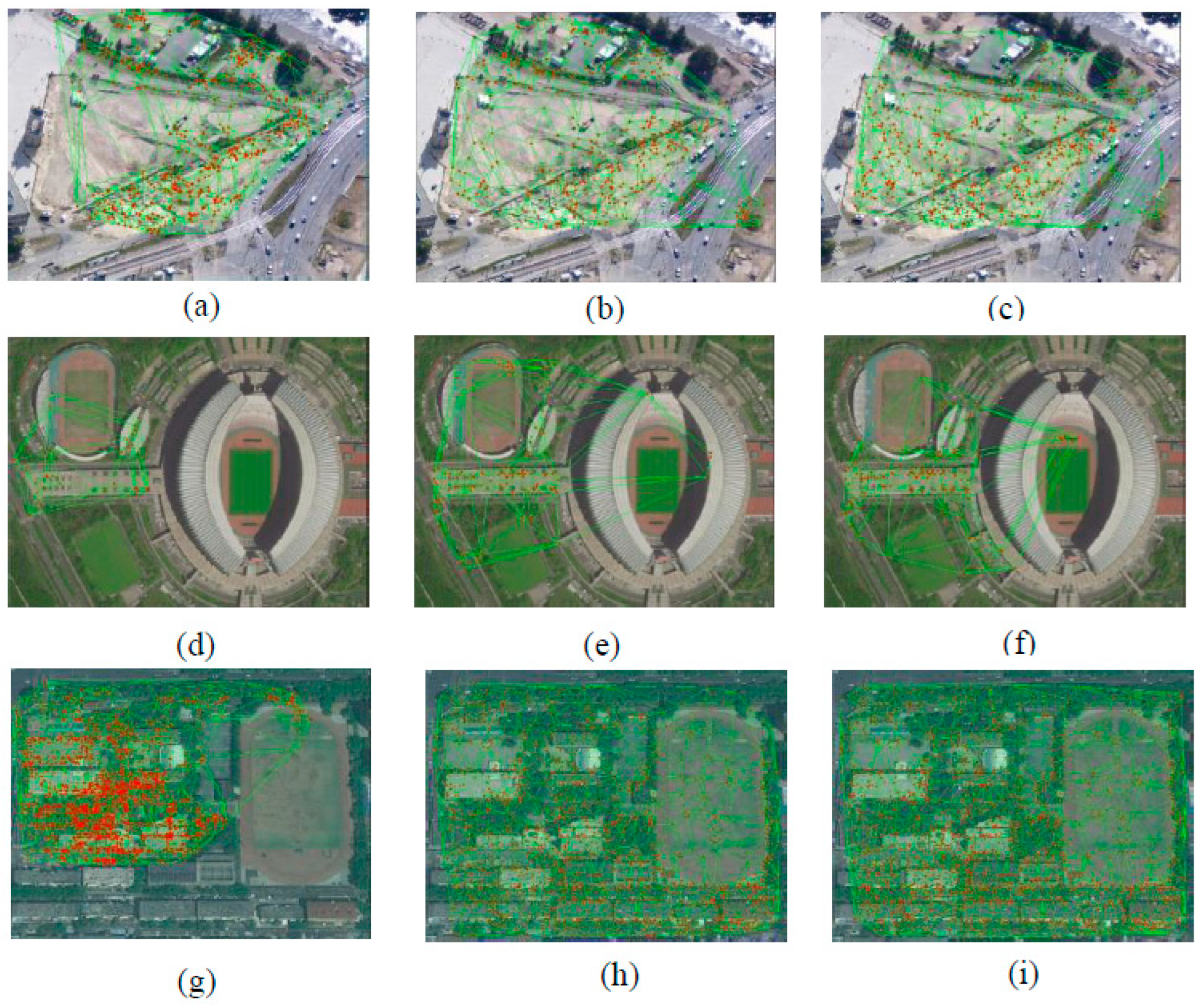

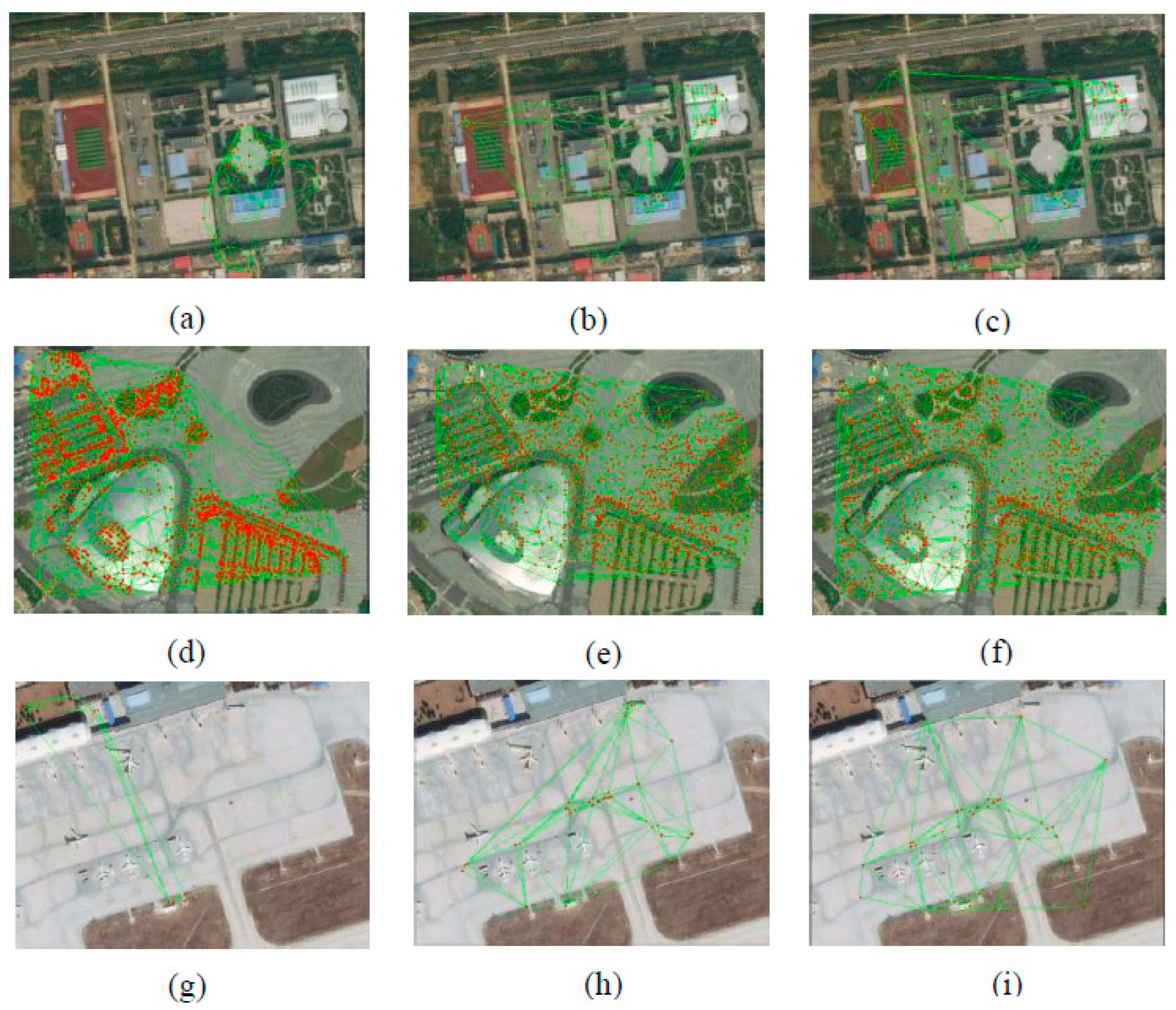

- The SJRS-AffNet method had advantages, with respect to the number of correct matching points and the match correct rate. There was a significant increase in the number of matching points in JJ1–2 in Figure 8; HK1 and HK3 in Figure 9; and WX1–2 in Figure 10. Figure 8, Figure 9 and Figure 10 show that SJRS-AffNet could obtain more matching point pairs for most test data. Table 1 also reveals that SJRS-AffNet was oriented toward wide-baseline weak-texture stereo images and could obtain more matching point pairs in most cases and achieve a higher match correct rate. Compared with Brown-AffNet, SJRS-AffNet had significant advantages because the SJRS dataset had a greater quantity and breadth than the Brown dataset. In particular, the images in the SJRS dataset came from different platforms (aerial, satellite, and ground-based close-range platforms), whereas the images in the Brown dataset were only ground-based close-range images, mainly of artificial statues and natural landscapes. Therefore, the trained SJRS-AffNet was more generalizable than Brown-AffNet.

- (2) Table 1 also shows that SJRS-AffNet had advantages with respect to the match correct rate when tested on ground-based close-range image data. The reason is that relatively few types of scene texture, such as walls, bare ground, and green space, occur in ground-based close-range images. Therefore, the model trained on this dataset can achieve a higher match correct rate on ground-based close-range images. In contrast, the aerial and satellite photography platforms have larger fields of view, so more types of texture appear in the images in HK1–3 and WX1–3. To improve the match correct rate for such images in the future, it will be necessary to further increase the types and the quantity of textures covered by the SJRS dataset.

- (3) SJRS-AffNet had obvious advantages with respect to matching spatial distribution quality. Table 1 shows that SJRS-AffNet can achieve high matching spatial distribution quality for most aerial, satellite, and ground-based close-range images. In Figure 11, especially for JJ1–2, the distribution area of the Delaunay triangulation network was significantly improved; in Figure 12, only the distribution area of the HK1 Delaunay triangulation network was improved significantly; in Figure 13, the distribution areas of the WX1–3 triangulation networks were all significantly improved. This result is consistent with the visual global and local matching results shown in Figures 8–13. This shows the effectiveness of the matching spatial distribution quality model that considers both global and local image contents and verifies the superiority of SJRS.

- (4) Figures 8–13 and Table 1 reveal that no method can adapt to image data from all types of platforms and to images of all terrain textures. The size of the training dataset directly affected the image matching performance of the deep learning model. Although SJRS-AffNet could achieve better matching results for most of the test data, it was not as good as the ASIFT algorithm for individual test images and evaluation metrics. Figure 10 and Figure 13 show that, given the WX3 stereo pair, none of the three methods tested could obtain a large number of matches. The reason is that there are many obvious ground feature differences between the corresponding regions of WX3. Because of the low similarity between the corresponding areas, many corresponding features were eliminated as false matches.

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wierzbicki, D.; Nienaltowski, M. Accuracy analysis of a 3D model of excavation, created from images acquired with an action camera from low altitudes. ISPRS Int. J. Geo-Inf. 2019, 8, 83.

2. Yao, G.B.; Yilmaz, A.; Meng, F.; Zhang, L. Review of wide-baseline stereo image matching based on deep learning. Remote Sens. 2021, 13, 3247.

3. Lin, C.; Heipke, C. Deep learning feature representation for image matching under large viewpoint and viewing direction change. ISPRS J. Photogramm. Remote Sens. 2022, 190, 94–112.

4. Haesevoets, S.; Kuijpers, B.; Revesz, P.Z. Affine-invariant triangulation of spatio-temporal data with an application to image retrieval. ISPRS Int. J. Geo-Inf. 2017, 6, 100.

5. Ma, J.; Sun, Q.; Zhou, Z.; Wen, B.; Li, S. A multi-scale residential areas matching method considering spatial neighborhood features. ISPRS Int. J. Geo-Inf. 2022, 11, 331.

6. Kızılkaya, S.; Alganci, U.; Sertel, E. VHRShips: An extensive benchmark dataset for scalable deep learning-based ship detection applications. ISPRS Int. J. Geo-Inf. 2022, 11, 445.

7. Brown, M.; Hua, G.; Winder, S. Discriminative learning of local image descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 43–57.

8. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

9. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

10. Yang, H.; Zhang, S.; Wang, L. Robust and precise registration of oblique images based on scale-invariant feature transformation algorithm. IEEE Geosci. Remote Sens. Lett. 2012, 9, 783–787.

11. Zhang, Q.; Wang, Y.; Wang, L. Registration of images with affine geometric distortion based on maximally stable extremal regions and phase congruency. Image Vis. Comput. 2015, 36, 23–39.

12. Xiao, X.W.; Guo, B.X.; Li, D.R.; Zhao, X.A. Quick and affine invariance matching method for oblique images. Acta Geod. Cartogr. Sin. 2015, 44, 414–442.

13. Xiao, X.W.; Li, D.R.; Guo, B.X.; Jiang, W.T. A robust and rapid viewpoint-invariant matching method for oblique images. Geomat. Inf. Sci. Wuhan Univ. 2016, 41, 1151–1159.

14. Jiang, S.; Xu, Z.H.; Zhang, F.; Liao, R.C.; Jiang, W.S. Solution for efficient SfM reconstruction of oblique UAV images. Geomat. Inf. Sci. Wuhan Univ. 2019, 44, 1153–1161.

15. Morel, J.-M.; Yu, G. ASIFT: A new framework for fully affine invariant image comparison. SIAM J. Imaging Sci. 2009, 2, 438–469.

16. Yao, G.B.; Yilmaz, A.; Zhang, L.; Meng, F.; Ai, H.B.; Jin, F.X. Matching large baseline oblique stereo images using an end-to-end convolutional neural network. Remote Sens. 2021, 13, 274.

17. Liu, J.; Ji, S.P. Deep learning based dense matching for aerial remote sensing images. Acta Geod. Cartogr. Sin. 2019, 48, 1141–1150.

18. Tian, Y.R.; Fan, B.; Wu, F.C. L2-Net: Deep learning of discriminative patch descriptor in Euclidean space. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 661–669.

19. Mishchuk, A.; Mishkin, D.; Radenovic, F. Working hard to know your neighbor’s margins: Local descriptor learning loss. Adv. Neural Inf. Process. Syst. 2017, 1, 4826–4837.

20. Mishkin, D.; Radenovic, F.; Matas, J. Repeatability is not enough: Learning affine regions via discriminability. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 287–304.

21. Sarlin, P.-E.; DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperGlue: Learning feature matching with graph neural networks. In Proceedings of the IEEE 2020 Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

22. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

23. Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-free local feature matching with transformers. arXiv 2021, arXiv:2104.00680.

24. Balntas, V.; Lenc, K.; Vedaldi, A.; Mikolajczyk, K. HPatches: A benchmark and evaluation of handcrafted and learned local descriptors. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3852–3861.

25. Li, Z.; Snavely, N. MegaDepth: Learning single-view depth prediction from internet photos. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

26. Yao, G.B.; Deng, K.Z.; Zhang, L. An automated registration method with high accuracy for oblique stereo images based on complementary affine invariant features. Acta Geod. Cartogr. Sin. 2013, 42, 869–876.

27. Li, X.; Yang, Y.H.; Yang, B.; Yin, F.A. Multi-source remote sensing image matching method using directional phase feature. Geomat. Inf. Sci. Wuhan Univ. 2020, 45, 488–494.

28. Yuan, X.X.; Yuan, W.; Chen, S.Y. An automatic detection method of mismatching points in remote sensing images based on graph theory. Geomat. Inf. Sci. Wuhan Univ. 2018, 43, 1854–1860.

29. Zhu, Q.; Wu, B.; Xu, Z.X. Seed point selection method for triangle constrained image matching propagation. IEEE Geosci. Remote Sens. Lett. 2006, 3, 207–211.

| Test Data | ASIFT: Correct Matches (Pair) | ASIFT: α (%) | ASIFT: RMSE (Pixel) | ASIFT: Distribution Quality | Brown-AffNet: Correct Matches (Pair) | Brown-AffNet: α (%) | Brown-AffNet: RMSE (Pixel) | Brown-AffNet: Distribution Quality | SJRS-AffNet: Correct Matches (Pair) | SJRS-AffNet: α (%) | SJRS-AffNet: RMSE (Pixel) | SJRS-AffNet: Distribution Quality |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| JJ1 | 582 | 51.5 | 1.08 | 186.00 | 639 | 65.2 | 0.88 | 51.76 | 793 | 81.1 | 0.86 | 50.6 |
| JJ2 | 1394 | 42.7 | 0.82 | 132.79 | 1094 | 76.1 | 0.98 | 85.27 | 1160 | 80.5 | 0.89 | 90.13 |
| JJ3 | 735 | 22.2 | 0.66 | 109.62 | 1711 | 43.4 | 0.78 | 77.00 | 2922 | 71.1 | 0.65 | 72.06 |
| HK1 | 649 | 27.2 | 0.68 | 51.77 | 357 | 27.6 | 1.35 | 28.74 | 418 | 28.9 | 1.02 | 27.61 |
| HK2 | 97 | 86.6 | 0.56 | 32.72 | 116 | 33.6 | 3.60 | 22.78 | 123 | 31.3 | 0.97 | 38.63 |
| HK3 | 4541 | 55.3 | 0.87 | 125.53 | 1684 | 52.2 | 0.61 | 50.25 | 2051 | 62.8 | 0.65 | 47.86 |
| WX1 | 59 | 70.2 | 1.29 | 64.26 | 49 | 21.1 | 1.17 | 20.18 | 70 | 33.1 | 1.35 | 22.68 |
| WX2 | 4664 | 84.2 | 0.94 | 120.36 | 1318 | 62.5 | 0.90 | 57.57 | 1438 | 65.7 | 1.11 | 48.48 |
| WX3 | 11 | 73.3 | 0.31 | 19.61 | 38 | 34.8 | 1.39 | 16.19 | 39 | 27.0 | 1.14 | 10.74 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yao, G.; Zhang, J.; Gong, J.; Jin, F. Automatic Production of Deep Learning Benchmark Dataset for Affine-Invariant Feature Matching. ISPRS Int. J. Geo-Inf. 2023, 12, 33. https://doi.org/10.3390/ijgi12020033
