1. Introduction

As the material carrier of human survival and development, land resources are fixed in location, non-renewable, and unevenly distributed [1]. With rapid population and socio-economic growth, the remaining disposable land resources are decreasing day by day, so the overall and rational planning of land resources has important social value. In urban areas, most of the land is covered by buildings and roads, and accurate segmentation of buildings and roads supports macro-level urban planning. The automatic segmentation of buildings and roads in remote sensing images is therefore highly necessary.

Over the past decades, many researchers have proposed effective remote sensing image segmentation methods based on feature engineering. For example, Yuan et al. [2] used local spectral histograms to compute the spectral and texture features of an image; each local spectral histogram was modeled as a linear combination of several representative features, and segmentation was achieved by estimating the combination weights. Li et al. [3] improved two key steps of segmentation, label extraction and pixel labeling, which effectively and efficiently improved the accuracy of edge segmentation in high-resolution images. Fan et al. [4] proposed a remote sensing image segmentation method based on prior information, which used a single-point iterative weighted fuzzy c-means clustering algorithm to reduce the impact of the data distribution and of the random initialization of cluster centers on clustering quality. These feature engineering methods can segment remote sensing images effectively. However, they suffer from poor noise resistance, slow segmentation, and manually designed parameters, and are therefore not suited to the automatic segmentation of large quantities of data.

In recent years, convolutional neural networks (CNNs) have achieved great success in many fields, such as health care [5,6], marketing [7], power management [8], civil engineering [9], distributed databases [10], and cyber security [11]. Semantic segmentation in computer vision is no exception: CNNs offer strong noise resistance, can segment large amounts of data automatically, and have achieved excellent segmentation performance. The Fully Convolutional Network (FCN) proposed by Long et al. [12] was the first to use a fully convolutional network for image semantic segmentation, laying the foundation for subsequent methods. Ronneberger et al. [13] proposed the U-shaped network (U-Net). Building on the FCN framework, U-Net improved feature fusion by fusing features from different levels so that features are reused. Fusing feature maps from different levels gives the network multi-level semantic information and improves segmentation accuracy, although at a somewhat higher computational cost than FCN. Zhao et al. [14] proposed the Pyramid Scene Parsing Network (PSPNet), which uses a pyramid pooling structure to aggregate context information from different regions and mine global context. DeepLabv3+, proposed by Chen et al. [15], uses atrous convolution to build multi-scale pyramid features, capturing multi-scale context and obtaining a larger receptive field without adding parameters.
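To make the receptive-field argument concrete, the short sketch below computes the span of a dilated (atrous) kernel. It uses the standard relation that a k × k kernel with dilation rate r covers k + (k - 1)(r - 1) pixels per side while keeping only k × k weights; the rates in the loop are illustrative and are not taken from DeepLabv3+ or from this paper.

```python
def effective_kernel_size(k: int, rate: int) -> int:
    """Side length covered by a k x k atrous kernel with the given dilation rate.

    Inserting (rate - 1) gaps between adjacent taps stretches the kernel to
    k + (k - 1) * (rate - 1) pixels per side, while it still has k * k weights.
    """
    return k + (k - 1) * (rate - 1)


# Illustrative rates for a 3 x 3 kernel: the span grows, the weight count stays 9.
for rate in (1, 6, 12, 18):
    print(rate, effective_kernel_size(3, rate), 3 * 3)
```

For a 3 × 3 kernel, rates 6, 12, and 18 cover 13, 25, and 37 pixels per side, which is why stacking several rates gives multi-scale context cheaply.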

Liu et al. [16] proposed a multi-channel deep convolutional neural network that addresses the loss of spatial and scale features of segmented objects in some remote sensing images, but it is prone to errors under shadow occlusion. To handle the very high resolution and complex features of remote sensing images, Qi et al. [17] proposed a segmentation model based on multi-scale convolution and attention mechanisms. However, their attention mechanism captures only a local receptive field. It is therefore necessary to use self-attention, whose global receptive field allows important information in remote sensing images to be captured and used effectively. Cao et al. [18] proposed a self-attention-based deep feature fusion method, which fuses deep features of complex objects in remote sensing scene images and emphasizes their weights. Sinha et al. [19] used a guided self-attention mechanism to capture the contextual dependencies of pixels in an image; an additional loss emphasizes the feature correlation between different modules, guiding the attention mechanism to ignore irrelevant information and focus on more discriminative image regions. These self-attention methods [18,19] have achieved initial success on remote sensing images, but much remains to be explored, such as using self-attention to transfer hidden layer features.

In summary, these convolutional semantic segmentation networks [12,13,14,15,16,17,18,19] have made significant contributions to semantic segmentation in computer vision. Compared with feature engineering methods [2,3,4], they are robust to noise and enable end-to-end, large-scale automatic segmentation. FCN [12] and U-Net [13] enhance features by fusing features from different levels and reusing feature maps. Because these networks lack scene understanding of the segmentation targets, PSPNet [14] builds a pyramid pooling layer that pools features at different sizes, concatenates and fuses them, and then analyzes the fused features to obtain scene understanding. Because segmentation targets appear at different scales, DeepLabv3+ [15] uses atrous convolutions with different atrous rates to achieve multi-scale fusion.

These convolutional neural network models [12,13,14,15] each address a different problem in segmentation: reusing feature maps and fusing features from different levels, using a pyramid pooling layer for scene understanding of the segmentation target, and fusing multi-scale features through atrous convolutions with different rates. However, during feature fusion almost all of them simply concatenate feature maps along the channel dimension, and the feature information of the hidden layers (the channel dimension of the feature map) is not independently exploited. Ignoring the relative importance of hidden layer features leaves pixel classification without category information about context pixels, which leads to problems such as large-area misclassification of buildings and disconnected roads. In addition, these methods [12,13,14,15] have high complexity, slow inference, and high training costs.

To solve these problems, this paper proposes a Non-Local Feature Search Network (NFSNet), which improves the segmentation accuracy of buildings and roads in remote sensing images and supports accurate urban planning through high-precision building and road extraction. Our work makes three contributions: (1) A Self-Attention Feature Transfer (SAFT) module is constructed with self-attention to explore the feature information of the hidden layers. It produces a feature map containing the category information of each pixel and the category semantic information of its context pixels, which mitigates large-area misclassification of buildings and disconnected roads. (2) A Global Feature Refinement (GFR) module is constructed to integrate the hidden layer feature information extracted by the SAFT module with the backbone network. The GFR module guides the backbone feature map to obtain hidden layer feature information in the spatial dimension and enhances its semantic information, which helps restore the feature map more precisely during upsampling and improves segmentation accuracy. (3) Experiments on a remote sensing image semantic segmentation dataset yield a mean intersection over union (mIoU) of 70.54%, outperforming the compared models. In addition, NFSNet has the fewest parameters and the lowest complexity among all compared models, saving training time and cost.
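Since the results are reported as mean intersection over union, a minimal sketch of how mIoU is commonly computed from a confusion matrix may help. The function name, the label encoding (0 = background, 1 = building, 2 = road), and the choice to skip classes absent from both maps are assumptions for illustration, not the paper's evaluation code; benchmark protocols usually accumulate the confusion matrix over the whole test set before dividing.

```python
import numpy as np


def mean_iou(y_true, y_pred, num_classes):
    """Mean intersection over union computed from a confusion matrix."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    # conf[i, j] counts pixels whose ground truth is i and prediction is j.
    conf = np.bincount(num_classes * y_true + y_pred, minlength=num_classes ** 2)
    conf = conf.reshape(num_classes, num_classes)
    tp = np.diag(conf).astype(float)
    # Union = predicted-as-class + truly-class - intersection.
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp
    valid = union > 0  # assumption: ignore classes absent from both maps
    return float(np.mean(tp[valid] / union[valid]))


# Hypothetical 2 x 4 label maps: 0 = background, 1 = building, 2 = road.
gt = [[0, 0, 1, 1], [2, 2, 1, 1]]
pred = [[0, 1, 1, 1], [2, 2, 1, 0]]
print(mean_iou(gt, pred, num_classes=3))
```

Here the per-class IoUs are 1/3, 3/5, and 1, so the mean is about 0.644.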

2. Methodology

In the process of feature fusion, the existing semantic segmentation methods generally used splicing method to fuse the feature map in the channel dimension. The semantic information of the hidden layers (the channel dimension of the feature map) were not been developed separately. Due to the high resolution of remote sensing images and the high complexity of the target, the pixel failed to capture the category of context pixels in the semantic segmentation of remote sensing images, resulting in the misjudgment of large area of building and road disconnection. Secondly, the existing semantic segmentation algorithm models [

12,

13,

14,

15] had high complexity and high reasoning time cost. In order to solve these two problems, this paper proposes a Non-Local Feature Search Network (NFSNet) for building and road segmentation in remote sensing images. The overall framework of the NFSNet is shown in

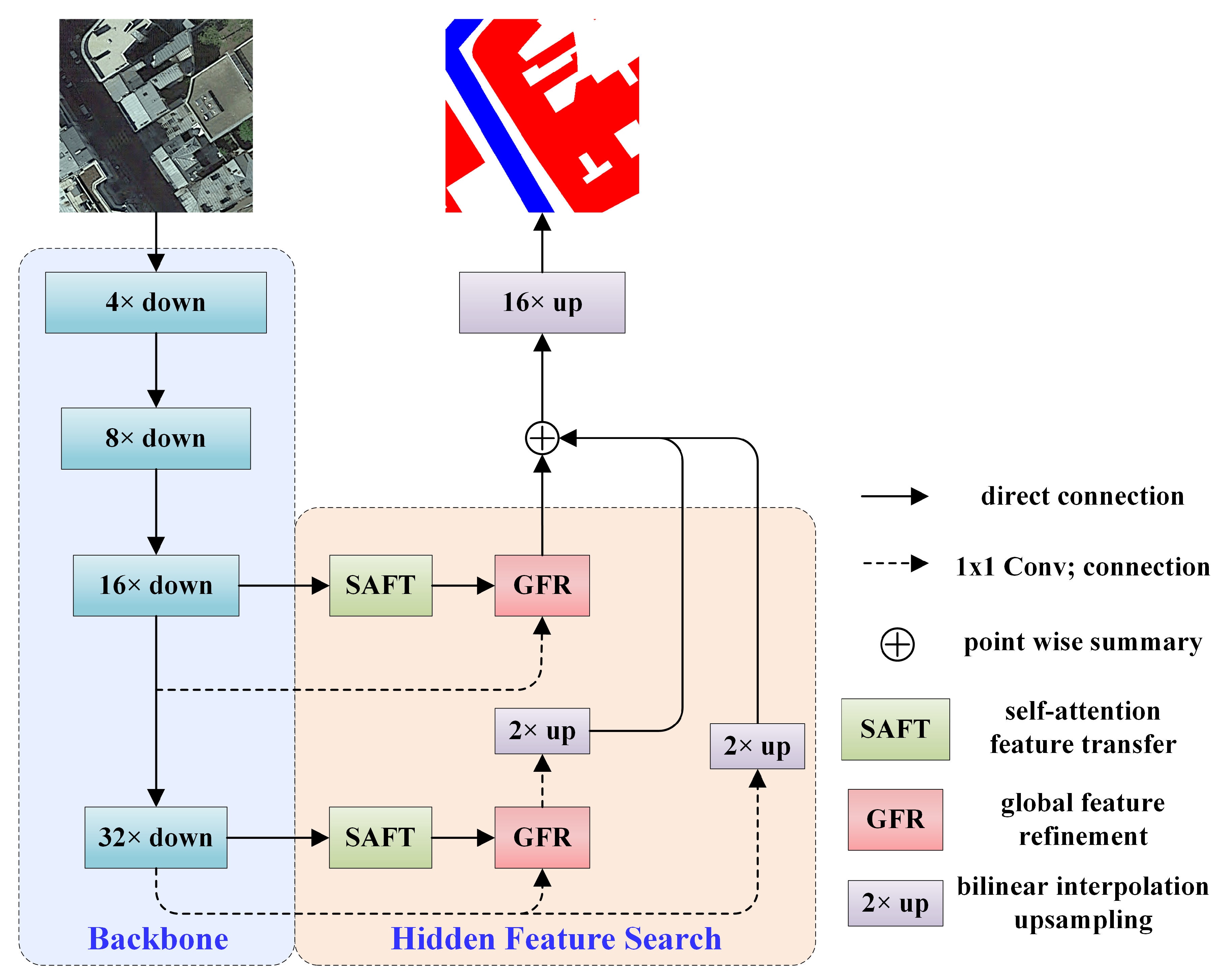

Figure 1. The NFSNet proposed in this work is an end-to-end training model, and the overall framework is divided into encoding network and decoding network. ResNet [

20] is used as the backbone network for feature extraction in the encoding network, the decoding network constructs Self-Attention Feature Transfer (SAFT) module and Global Feature Refinement (GFR) module. The decoding network is the hidden feature search part in

Figure 1. The SAFT module explores the feature associations between hidden layers through its self-attention query. The semantic information of the hidden layer is transferred to the original feature map, and a feature map containing the category information of each pixel itself and its context pixels are obtained. So as to improve the problem of large-area misclassification of building and road disconnection in the segmentation process, the GFR module effectively integrates the backbone network feature map and the hidden layer semantic information extracted by SAFT. The GFR module makes global average pooling of features extracted by SAFT, instructs backbone network feature map to obtain semantic information of hidden layer in spatial dimension, and improves segmentation accuracy. Finally, after the feature fusion of the encoding network and the decoding network, the bilinear interpolation 16 times upsampling is directly used to obtain the segmentation output result.
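The final 16× bilinear upsampling can be sketched in NumPy as follows. The half-pixel sampling convention (the behaviour of `align_corners=False` in common frameworks), the two output classes, and the 512 × 512 input size are assumptions; the paper does not specify them.

```python
import numpy as np


def bilinear_upsample(x, scale):
    """Bilinearly upsample a (C, H, W) array by an integer factor.

    Assumes half-pixel sampling (align_corners=False style); the alignment
    used by NFSNet is not stated, so this is an illustrative choice.
    """
    c, h, w = x.shape

    def coords(n_in, n_out):
        # Map each output pixel centre back to a clamped source coordinate.
        src = (np.arange(n_out) + 0.5) / scale - 0.5
        src = np.clip(src, 0, n_in - 1)
        i0 = np.floor(src).astype(int)
        i1 = np.minimum(i0 + 1, n_in - 1)
        return i0, i1, src - i0

    y0, y1, wy = coords(h, h * scale)
    x0, x1, wx = coords(w, w * scale)
    # Interpolate along rows first, then along columns.
    rows = x[:, y0, :] * (1 - wy)[None, :, None] + x[:, y1, :] * wy[None, :, None]
    return rows[:, :, x0] * (1 - wx)[None, None, :] + rows[:, :, x1] * wx[None, None, :]


# Hypothetical 2-class logits at 1/16 of a 512 x 512 input.
logits = np.random.default_rng(0).standard_normal((2, 32, 32))
print(bilinear_upsample(logits, 16).shape)  # (2, 512, 512)
```

Because bilinear weights sum to 1, a constant map stays constant, and a two-pixel ramp [0, 1] upsampled by 2 becomes [0, 0.25, 0.75, 1].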

2.1. Encoding Network

In this paper, a CNN is used as the backbone network for feature extraction. Many excellent CNNs have emerged in recent years, such as VGG [21], GoogLeNet [22], and ResNet [20]. After weighing the number of parameters against accuracy, this work chooses ResNet as the backbone. ResNet introduced residual skip connections to mitigate the degradation that occurs as network depth increases, and it comes in variants with 18, 34, 50, 101, and 152 layers for different application scenarios. Because NFSNet is designed as a lightweight network, the shallowest variant, ResNet-18, is selected. ResNet-18 downsamples the input stage by stage to obtain feature maps with rich semantic information; the last feature map is 1/32 the size of the input image. The 1/16 and 1/32 feature maps of ResNet-18 (hereinafter referred to as CNN) contain rich semantic information at different scales; they are the outputs of the encoding network and are passed to the decoding network for semantic decoding.
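For orientation, the sketch below tabulates the output channels and cumulative strides of the four residual stages of a standard ResNet-18 and derives the shapes of the 1/16 and 1/32 maps. The stage names follow torchvision's `layer1` to `layer4` convention, and the 512 × 512 input is a hypothetical example; the paper does not state its input size here.

```python
# Output channels and cumulative stride of the four residual stages of a
# standard ResNet-18 (the stem applies a stride-2 conv and a stride-2 max-pool).
RESNET18_STAGES = {
    "layer1": (64, 4),
    "layer2": (128, 8),
    "layer3": (256, 16),   # 1/16 map
    "layer4": (512, 32),   # 1/32 map
}


def stage_shapes(height, width):
    """Return (channels, H, W) for each stage; assumes sizes divisible by 32."""
    if height % 32 or width % 32:
        raise ValueError("input size must be a multiple of 32 for exact shapes")
    return {name: (ch, height // s, width // s)
            for name, (ch, s) in RESNET18_STAGES.items()}


shapes = stage_shapes(512, 512)  # hypothetical input size
print(shapes["layer3"], shapes["layer4"])  # (256, 32, 32) (512, 16, 16)
```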

2.2. Decoding Network

The decoding network decodes the encoded information and restores the semantic feature information of the feature map. Its inputs are the 1/16 and 1/32 feature maps produced by the backbone of the encoding network. The decoding network consists mainly of the SAFT and GFR modules. The SAFT module uses self-attention to mine the associations between hidden layers and transfers hidden layer feature information to the original feature map, yielding a feature map that contains the category information of each pixel and of its context pixels. This feature map, enriched with hidden layer semantics, alleviates building misclassification and road disconnection. The GFR module refines the semantic information extracted by SAFT and integrates it with the backbone feature map, helping the backbone feature map acquire hidden layer semantic information in the spatial dimension and improving segmentation accuracy.

2.2.1. Self-Attention Feature Transfer Module

The self-attention mechanism of the Transformer was proposed by Vaswani et al. [23], where it is used to extract information in the encoder and decoder for natural language processing. Given an input text, self-attention models the relationship between each word and its context to obtain the importance of each word [24]. Inspired by this idea, we embed self-attention into the hidden layers of a convolutional neural network. Self-attention captures the association between each hidden layer (channel) and the other hidden layers, so that hidden layer feature information can be transferred to the original feature map. With feature maps that contain hidden layer semantic information, pixel classification can take into account the categories of both the current pixel and its context pixels, which effectively reduces pixel misclassification and avoids large-area building misclassification and road disconnection.

The self-attention feature transfer module proposed in this paper is shown in Figure 2. First, the query, key, and value matrices are obtained through three 1 × 1 convolutions followed by the mapping functions $\varphi_q$, $\varphi_k$, and $\varphi_v$; second, the query and key matrices are multiplied and softmax is applied along the first dimension; finally, a depthwise separable convolution enhances the features. The input of this module is the 1/32 (or 1/16) feature map produced by the backbone. The feature map $X$ (CNN in Figure 2) has dimension $C \times H \times W$. Because the number of channels $C$ (512 for the 1/32 map, or 256 for the 1/16 map, in ResNet-18) is large, passing the features through the module would be computationally expensive. To reduce the computational burden, a 1 × 1 convolution reduces the feature dimensionality to $C/2$ channels. Each of the three branches applies a 1 × 1 convolution followed by batch normalization (BN) [25] and ReLU activation [26], producing $X_q$, $X_k$, and $X_v$, each of dimension $\frac{C}{2} \times H \times W$. The calculation is shown in Equation (1):

$$X_i = \delta\left(\mathrm{BN}\left(f_{1\times1}^{i}(X)\right)\right), \quad i \in \{q, k, v\} \tag{1}$$

where $f_{1\times1}^{i}$ is the 1 × 1 convolution of branch $i$, $\mathrm{BN}$ is batch normalization, and $\delta$ is the ReLU activation function.
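A NumPy sketch of one input branch of Equation (1) follows. A 1 × 1 convolution is a per-pixel linear map over channels, so it reduces to an `einsum`. Batch normalization is simplified to per-channel statistics of a single image with scale 1 and shift 0 (an assumption; trained models use running statistics at inference), and the random weights and 16 × 16 spatial size are placeholders.

```python
import numpy as np


def conv1x1(x, weight, bias):
    """1 x 1 convolution on a (C_in, H, W) map: a per-pixel linear map of channels."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]


def batch_norm(x, gamma, beta, eps=1e-5):
    """Simplified BN: normalise each channel over its spatial positions (one image)."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return gamma[:, None, None] * (x - mean) / np.sqrt(var + eps) + beta[:, None, None]


def branch(x, weight, bias, gamma, beta):
    """One SAFT input branch: ReLU(BN(conv1x1(x)))."""
    return np.maximum(batch_norm(conv1x1(x, weight, bias), gamma, beta), 0.0)


rng = np.random.default_rng(0)
c, h, w = 512, 16, 16              # 1/32 map of a hypothetical 512 x 512 input
x = rng.standard_normal((c, h, w))
c_red = c // 2                     # channel reduction to C/2
params = [(rng.standard_normal((c_red, c)) * 0.05, np.zeros(c_red),
           np.ones(c_red), np.zeros(c_red)) for _ in range(3)]
x_q, x_k, x_v = (branch(x, *p) for p in params)
print(x_q.shape)                   # (256, 16, 16)
```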

Next, the attention between channels is computed to mine the semantic information between them and thereby capture the category information of each pixel and its context pixels. Three mapping functions $\varphi_q$, $\varphi_k$, and $\varphi_v$ map $X_q$, $X_k$, and $X_v$ to the channel query matrix $Q$, key matrix $K$, and value matrix $V$, respectively. The feature mapping arranges the tensors so that they can be multiplied as matrices, and matrix multiplication transfers the extracted hidden layer feature information to the original feature map [27].

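The mapping function $\varphi_q$ uses the flattening function $R$ to flatten the last two dimensions of $X_q$, producing $Q \in \mathbb{R}^{\frac{C}{2} \times HW}$, as shown in Equation (2):

$$Q = \varphi_q(X_q) = R(X_q) \tag{2}$$

where $R$ reshapes a $\frac{C}{2} \times H \times W$ tensor into a $\frac{C}{2} \times HW$ matrix. The mapping function $\varphi_k$ is similar to $\varphi_q$: the last two dimensions of $X_k$ are first flattened into $\frac{C}{2} \times HW$ by $R$, and the result is then transposed by the function $T$ to obtain $K \in \mathbb{R}^{HW \times \frac{C}{2}}$. The transpose makes the dimensions compatible when $Q$ and $K$ are multiplied. See Equation (3):

$$K = \varphi_k(X_k) = T\left(R(X_k)\right) \tag{3}$$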

The channel value matrix $V$ is obtained from $X_v$ by the mapping function $\varphi_v$ in the same way as the channel query matrix $Q$; following Equation (2), this gives Equation (4):

$$V = \varphi_v(X_v) = R(X_v) \in \mathbb{R}^{\frac{C}{2} \times HW} \tag{4}$$

With the query matrix $Q$, key matrix $K$, and value matrix $V$ obtained, the query matrix queries the feature information between channels through the key matrix. Multiplying $Q$ by $K$ gives a feature matrix of dimension $\frac{C}{2} \times \frac{C}{2}$. Softmax is then applied along the first dimension of this matrix to produce normalized scores for each channel, giving the channel attention matrix $A$. The calculation is shown in Equation (5):

$$A = \mathrm{softmax}_1(Q \times K) \in \mathbb{R}^{\frac{C}{2} \times \frac{C}{2}} \tag{5}$$

where × is matrix multiplication and $\mathrm{softmax}_1$ is softmax computed along the first dimension.
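The flattening, transposition, and first-dimension softmax of Equations (2), (3), and (5) can be sketched as below. The function names are hypothetical; the softmax is taken along axis 0, matching the text, so each column of the resulting channel-by-channel matrix sums to 1.

```python
import numpy as np


def softmax(z, axis):
    """Numerically stable softmax along one axis."""
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max to avoid overflow
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def channel_affinity(x_q, x_k):
    """Flatten, transpose, multiply, and normalise over the first dimension."""
    c, h, w = x_q.shape
    q = x_q.reshape(c, h * w)          # Eq. (2): (C/2, HW)
    k = x_k.reshape(c, h * w).T        # Eq. (3): (HW, C/2)
    return softmax(q @ k, axis=0)      # Eq. (5): (C/2, C/2)


rng = np.random.default_rng(0)
x_q = rng.standard_normal((256, 16, 16))
x_k = rng.standard_normal((256, 16, 16))
attn = channel_affinity(x_q, x_k)
print(attn.shape)  # (256, 256)
```

Because the attention is computed between channels rather than between pixels, its size depends only on the channel count, not on H × W.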

The attention matrix $A$ distinguishes the importance of each channel. Multiplying $A$ by the value matrix $V$ yields the feature matrix $A \times V \in \mathbb{R}^{\frac{C}{2} \times HW}$. The mapping function $\varphi_o$ then uses $R^{-1}$, the inverse of the flattening function, to split the second dimension of this matrix into two dimensions, mapping the two-dimensional matrix back to a three-dimensional feature map $X_a \in \mathbb{R}^{\frac{C}{2} \times H \times W}$. The calculation is shown in Equation (6):

$$X_a = \varphi_o(A \times V) = R^{-1}(A \times V) \tag{6}$$

where × is matrix multiplication and $R^{-1}$ reshapes a $\frac{C}{2} \times HW$ matrix back into a $\frac{C}{2} \times H \times W$ tensor.

The feature map $X_a$ encodes the attention between channels, which captures the categories of context pixels and searches the features of the hidden layers. The hidden layer feature information is thereby transferred to the original feature map, yielding a feature map that contains the category information of each pixel and its context pixels. This mitigates large-area misclassification of buildings and disconnected roads during segmentation.
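The value aggregation of Equations (4) and (6) is sketched below with a hypothetical `transfer` function. We assume the product A × V (channel attention matrix times flattened value matrix), the order whose result has the (C/2) × HW shape that the final reshape into (C/2) × H × W requires.

```python
import numpy as np


def transfer(attn, x_v):
    """Weight the flattened value map by channel attention, then unflatten.

    attn: (C', C') channel attention matrix; x_v: (C', H, W) value map.
    Assumes the product order attn @ V.
    """
    c, h, w = x_v.shape
    v = x_v.reshape(c, h * w)     # Eq. (4): flatten to (C', HW)
    out = attn @ v                # each output channel mixes all value channels
    return out.reshape(c, h, w)   # Eq. (6): split HW back into H and W


rng = np.random.default_rng(0)
x_v = rng.random((4, 3, 5))
print(transfer(np.eye(4), x_v).shape)  # (4, 3, 5)
```

With an identity attention matrix the value map passes through unchanged; any other matrix redistributes information across channels while leaving the spatial layout intact.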

Finally, the feature map $X_a$ obtained by the hidden layer feature search is enhanced to extract its effective information. For computational efficiency, a depthwise separable convolution is used for feature enhancement, which achieves it without introducing many additional parameters. The number of groups of the depthwise separable convolution is set to the number of channels [28], and it is followed by batch normalization. The forward propagation is shown in Equation (7):

$$S = \mathrm{BN}\left(\mathrm{DSConv}(X_a)\right) \tag{7}$$

where $\mathrm{DSConv}$ is the depthwise separable convolution, $\mathrm{BN}$ is batch normalization, and $S$ is the output of the SAFT module.
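Equation (7) can be sketched with a depthwise convolution (a convolution whose number of groups equals the number of channels, so each channel is filtered by its own kernel) followed by batch normalization. The 3 × 3 kernel size, zero "same" padding, and single-image batch normalization without affine parameters are assumptions for illustration.

```python
import numpy as np


def depthwise_conv(x, kernels):
    """Depthwise convolution (groups = channels) with zero 'same' padding.

    x: (C, H, W); kernels: (C, k, k) with odd k, one filter per channel.
    Computed as cross-correlation, as deep-learning frameworks do.
    """
    c, h, w = x.shape
    k = kernels.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros_like(x, dtype=float)
    for i in range(k):
        for j in range(k):
            # Shifted view of every channel, scaled by that channel's own tap.
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out


def batch_norm(x, eps=1e-5):
    """Simplified BN: per-channel normalisation over spatial positions."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def saft_output(x_a, kernels):
    """S = BN(depthwise_conv(X_a))."""
    return batch_norm(depthwise_conv(x_a, kernels))


rng = np.random.default_rng(0)
x_a = rng.standard_normal((256, 16, 16))
s = saft_output(x_a, rng.standard_normal((256, 3, 3)))  # assumed 3 x 3 kernels
print(s.shape)  # (256, 16, 16)
```

With 256 channels and 3 × 3 kernels, the depthwise filter has 256 × 9 = 2,304 weights, compared with 256 × 256 × 9 = 589,824 for a standard 3 × 3 convolution, which is why the enhancement step adds little cost.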

2.2.2. Global Feature Refinement Module

After the SAFT module explores the hidden layer feature information, this work builds the GFR module to fuse that information with the backbone feature map. The GFR module guides the backbone feature map to acquire the rich semantic information of the hidden layers, and a feature map with rich semantic information helps restore details during upsampling. The proposed GFR module is shown in Figure 3. It integrates the backbone feature map with the hidden layer feature map extracted by the SAFT module. Similar to SENet [29], the SAFT feature map is globally average pooled to obtain the hidden layer feature information in the spatial dimension. Element-wise multiplication with the backbone feature map then guides the backbone feature map to acquire the hidden layer semantic information in the spatial dimension [30,31,32]. Finally, the backbone feature map and the SAFT feature map are merged to improve segmentation accuracy.

GFR can fuse feature maps of different scales. As shown in Figure 1, GFR fuses the 1/32 (or 1/16) backbone feature map with the SAFT feature map; feature maps of different scales provide semantic information from different receptive fields. The SAFT module reduces the number of channels to 1/2 of its input, and its input is the backbone feature map. Therefore, before the GFR module integrates the SAFT and backbone feature maps, their channel numbers must be made equal [33]. This work reduces the channel dimension of the backbone feature map $X$ (CNN in Figure 3), of size $C \times H \times W$, to $C/2$ with a 1 × 1 convolution, matching the channel dimension of the SAFT feature map. The SAFT output $S$ is globally average pooled, mapping it from its original dimension $\frac{C}{2} \times H \times W$ to $\frac{C}{2} \times 1 \times 1$, which summarizes the spatial information of each SAFT channel. The channel-reduced backbone feature map is multiplied channel-wise by this descriptor, guiding the backbone feature map to acquire spatial semantic information in the channel dimension [34]. Finally, the backbone feature map carrying the hidden layer spatial semantic information, the channel-reduced backbone feature map, and the SAFT feature map are fused. This retains both the original backbone feature information and the hidden layer feature information extracted by the SAFT module, while adding the backbone feature map that carries the hidden layer spatial semantics. Through the GFR module, different types of feature maps can be fused [35], which further improves segmentation accuracy. The GFR computation is shown in Equation (8):

$$Y = f_{1\times1}(X) \cdot \mathrm{GAP}(S) + f_{1\times1}(X) + S \tag{8}$$

where $\mathrm{GAP}$ is global average pooling applied to each channel, $f_{1\times1}$ is the 1 × 1 convolution, · is element-wise multiplication (broadcast over the spatial dimensions), + is element-wise addition, $S$ is the output of the SAFT module, and $Y$ is the output of the GFR module.
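A NumPy sketch of the GFR fusion follows, assuming the form Y = f1×1(X) · GAP(S) + f1×1(X) + S, with the 1 × 1 convolution written as a bias-free channel projection and no activation after pooling. The function name `gfr` and the weight layout are hypothetical.

```python
import numpy as np


def gfr(x, s, w_reduce):
    """Sketch of Y = f1x1(X) * GAP(S) + f1x1(X) + S.

    x: backbone map (C, H, W); s: SAFT output (C/2, H, W);
    w_reduce: (C/2, C) weights of the channel-reducing 1 x 1 convolution.
    """
    x_red = np.einsum("oc,chw->ohw", w_reduce, x)  # f1x1(X): C -> C/2 channels
    gap = s.mean(axis=(1, 2), keepdims=True)       # GAP(S): (C/2, 1, 1)
    guided = x_red * gap                           # channel weights broadcast over H, W
    return guided + x_red + s


rng = np.random.default_rng(0)
x = rng.standard_normal((512, 16, 16))             # hypothetical 1/32 backbone map
s = rng.standard_normal((256, 16, 16))             # hypothetical SAFT output
w = rng.standard_normal((256, 512)) * 0.05
print(gfr(x, s, w).shape)  # (256, 16, 16)
```

If the SAFT output is all zeros, the guidance term vanishes and Y reduces to the channel-reduced backbone map, which makes the role of each summand easy to check.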

4. Conclusions

In this paper, NFSNet is proposed for building and road segmentation in high-resolution remote sensing images. Compared with existing semantic segmentation networks, NFSNet has the following advantages: (1) The SAFT module searches the importance relationships between hidden layer channels and obtains the correlations between channels. It transfers the hidden layer semantic information to the original feature map, which then contains the category semantic information of each pixel and its context pixels, mitigating large-area misclassification of buildings and disconnected roads. (2) The GFR module effectively fuses the hidden layer feature information extracted by the SAFT module with the backbone feature map, so that the backbone acquires hidden layer feature information in the spatial dimension; this enriches the features used for upsampling and improves segmentation accuracy. (3) Among the compared models, NFSNet has the lowest complexity while achieving the highest accuracy.

However, building and road segmentation with NFSNet still has limitations: (1) the accuracy of building and road edge segmentation can be improved; (2) segmentation accuracy decreases when remote sensing images contain substantial noise. We will continue to optimize NFSNet to improve edge segmentation accuracy for buildings and roads and to overcome the accuracy loss caused by heavy noise. In addition, the proposed modules can be easily transplanted to other models, and we will test them on more benchmark networks to extend them to richer application scenarios.