Robotics: Five Senses plus One—An Overview

Abstract

1. Introduction

2. Literature Review

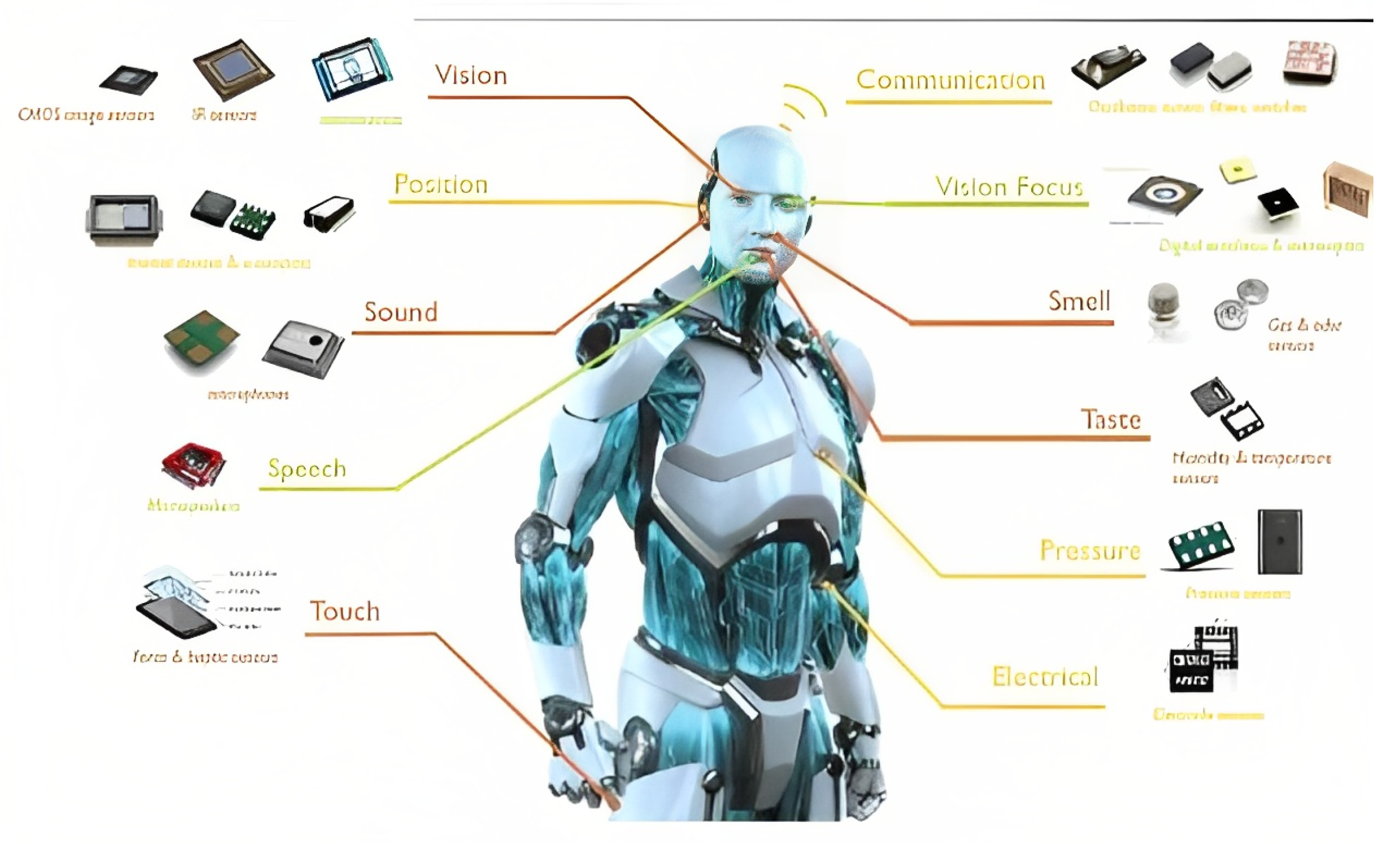

3. Vision

3.1. Components of a Robot Vision System

- Lighting: Proper lighting is vital to the success of robotic vision systems. Poor lighting can cause an image to be undetectable to a robot, resulting in inefficient operation and loss of information.

- Lenses: The lens of a vision system directs the light in order to capture an image.

- Image sensor: Image sensors are responsible for converting the light that is captured by the lens into a digital image for the robot to later analyze.

- Vision processing: Vision processing extracts data from an image, which the robot then analyzes to determine its best course of action.

- Communications: Communications connect and coordinate all robotic vision components so that they can exchange data quickly and reliably.

- Sensing and digitizing: This process yields a visual image of sufficient contrast that is typically digitized and stored in the computer’s memory.

- Image processing and analysis: The digitized image is subjected to image processing and analysis for data reduction and interpretation of the image. This function may be further subdivided into:

- Preprocessing: It deals with techniques such as noise reduction and detail enhancement.

- Segmentation: It partitions an image into objects of interest.

- Description: It computes various features, such as size, shape, etc., suitable for differentiating one object from another.

- Recognition: It defines the object.

- Interpretation: It assigns meaning to recognized objects in the scene.

- Application: The current applications of robot vision include inspection, part identification, location, and orientation [23].
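The image processing and analysis stages listed above can be illustrated with a minimal sketch. It assumes a grayscale image stored as a list of rows, a 3×3 mean filter for preprocessing, a fixed brightness threshold for segmentation, and a toy area rule for recognition. All function names and parameter values here are hypothetical and chosen for illustration only; they are not taken from the cited systems.

```python
from collections import deque

def preprocess(img):
    """Noise reduction with a 3x3 mean filter; border pixels are copied unchanged."""
    h, w = len(img), len(img[0])
    out = [list(row) for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = [img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = sum(window) / 9.0
    return out

def segment(img, threshold):
    """Segmentation: binary mask that is True where a pixel exceeds the threshold."""
    return [[value > threshold for value in row] for row in img]

def describe(mask):
    """Description: area and bounding box of each 4-connected region in the mask."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(y, x)])
            pixels = []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            regions.append({"area": len(pixels),
                            "bbox": (min(ys), min(xs), max(ys), max(xs))})
    return regions

def recognize(region, min_area=4):
    """Recognition (toy rule, an assumption): large regions are parts, small ones noise."""
    return "part" if region["area"] >= min_area else "noise"
```

A full pass would chain the stages, for example `[recognize(r) for r in describe(segment(preprocess(img), 128))]`. Interpretation would then attach meaning to each recognized label in the context of the task.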

3.2. Types of Vision Sensors Used in Robotics

- Orthographic projection-type sensors: Orthographic projection-type robotic vision sensors have a rectangular field of view, which is the most common type. They are ideal for short-range infrared sensors and laser range finders.

- Perspective projection-type sensors: The field of view of robotic vision sensors that use perspective projection has a trapezoidal shape. They are ideal for sensors that are used in cameras [18].
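The difference between the two projection models can be sketched in a few lines. The example assumes a point given in sensor coordinates `(x, y, z)` with `z` as the depth along the optical axis, and a hypothetical `focal_length` parameter. Under orthographic projection the image coordinates do not depend on depth, so the visible region stays rectangular; under perspective projection they scale with `1/z`, so the visible region widens with distance.

```python
def orthographic(point):
    """Orthographic projection: drop the depth coordinate."""
    x, y, _z = point
    return (x, y)

def perspective(point, focal_length):
    """Pinhole perspective projection: scale x and y by focal_length / depth."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the sensor (z > 0)")
    return (focal_length * x / z, focal_length * y / z)
```

For instance, moving a point from depth 4 to depth 8 leaves its orthographic image unchanged but halves its perspective image coordinates.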

3.3. The Most Popular Trends and Challenges for Vision Sensing in Robotics

- Multi-sensor perception: Multi-sensor perception was identified as a popular trend in vision sensing in robotics in 2022. This field involves combining data from multiple sensors, such as cameras, LIDAR, and RADAR, to improve the accuracy and robustness of perception in robotics [24].

- Explainable artificial intelligence (XAI): Explainable artificial intelligence (XAI) has emerged as a popular trend in vision sensing in robotics, where robots can explain their perceptions and decision-making processes to humans in a transparent and understandable manner [25].

- Human–robot collaboration and interaction: Human–robot collaboration/interaction continues to be a popular trend in vision sensing in robotics, with a focus on improving the ability of robots to perceive and respond to human gestures, expressions, and speech [29].

- Robustness to lighting conditions: One of the key challenges in robotic vision is developing systems that work effectively under varying lighting conditions. Possible solutions include developing algorithms that adapt to different lighting conditions, using high dynamic range (HDR) cameras, and incorporating machine learning techniques that learn to compensate for lighting changes [30].

4. Hearing Sense

4.1. Components of Robotic Hearing Systems

- Microphones or other sound sensors: These are the devices that detect sound waves and convert them into electrical signals. There are many different types of microphones and sound sensors that can be used, including those that use diaphragms, piezoelectric crystals, or lasers to detect vibrations.

- Amplifiers: These are electronic devices that are used to amplify the electrical signals that are generated by microphones or sound sensors. They can help to improve the sensitivity and accuracy of hearing sensors.

- Analog-to-digital converters (ADCs): These are devices that are used to convert the analog electrical signals from the microphones or sound sensors into digital data that can be processed by the robot’s computer system.

- Computer system: This is the central processing unit of the robot, which is responsible for controlling the various functions and sensors of the robot. The computer system is used to process digital data from ADCs and interpret and understand spoken commands or other sounds in the environment.

- Algorithms and software: These are the instructions and programs that are used by the computer system to analyze and interpret digital data from microphones or sound sensors. The algorithms and software may be designed to recognize specific words or sounds or to understand and respond to more complex spoken commands.

4.2. Functionality of the Hearing System

- Sound waves enter the microphone or sensor.

- The microphone or sensor converts the sound waves into electrical signals.

- The electrical signals are sent to the robot’s computer system.

- The computer system processes the signals and converts them into digital data.

- The digital data are analyzed using algorithms and software designed to understand and interpret spoken language or other sounds.

- Based on the analysis, the robot can take appropriate actions or respond to the sounds it has heard.
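The steps above can be sketched as a small signal chain. This is a simplified illustration under stated assumptions: the microphone output is simulated as a sine tone normalized to the range [-1, 1], the ADC is modeled as a 16-bit quantizer, the analysis is reduced to a level measurement in dB relative to full scale, and the response is a single threshold decision. The function names and the threshold of -30 dBFS are hypothetical.

```python
import math

def sample_tone(freq_hz, amplitude, sample_rate, n_samples):
    """Simulated microphone output: a sine tone with values in [-1, 1]."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]

def quantize(samples, bits=16):
    """Simulated ADC: map [-1, 1] to signed integer codes, clipping out-of-range input."""
    max_code = 2 ** (bits - 1) - 1
    return [max(-max_code - 1, min(max_code, round(s * max_code))) for s in samples]

def rms_dbfs(codes, bits=16):
    """Analysis: RMS level of the digital data in dB relative to full scale."""
    full_scale = 2 ** (bits - 1) - 1
    rms = math.sqrt(sum(c * c for c in codes) / len(codes))
    if rms == 0:
        return float("-inf")
    return 20 * math.log10(rms / full_scale)

def respond(level_dbfs, threshold_dbfs=-30.0):
    """Action: react only to sounds louder than the (assumed) threshold."""
    return "react" if level_dbfs > threshold_dbfs else "ignore"
```

A real system would replace the level measurement with speech or sound recognition, but the flow from sound wave to electrical signal to digital data to action is the same.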

4.3. Types of Hearing Sensors Used in Robotics

- Sound sensor: This is a simple, easy-to-use, and low-cost device used to detect sound waves in the air. It can measure the intensity of sound and convert it into an electrical signal that can be read through a microcontroller [35].

- Pressure microphone: This is a microphone in which only one side of the diaphragm is exposed to the sound as the back is a closed chamber. The diaphragm responds solely to pressure, which has no direction. Therefore pressure microphones are omnidirectional.

- High amplitude pressure microphone: Designed for very high amplitude measurements. It is used in small closed couplers, confined spaces, or flush-mounted applications.

- Probe microphone: Used for measurements in difficult or inaccessible situations, such as high temperatures or conditions of airflow. Its right-angled design makes it well-suited for measurements in exhaust systems, machinery, and scanning surfaces, such as loudspeakers and cabinets.

- Acoustic pressure sensor: Consists of a stack of one or more acoustically responsive elements enclosed in a housing. The external acoustic pressure to be sensed is transmitted to the stack through a diaphragm, which is located in an end cap that closes the top portion of the housing.

- Piezoelectric sensor: Uses a crystal to detect vibrations, including those caused by sound waves. Piezoelectric sensors are often used in robots because they are relatively small and can be easily integrated into the robot’s design [35].

- Laser Doppler vibrometer: Uses lasers to detect vibrations, including those caused by sound waves. These vibrometers are highly sensitive and can detect very small vibrations. However, they are also more expensive and complex compared to some other types of hearing sensors [36].

- Infrared sensor: Uses infrared light to detect objects and measure distances. Infrared sensors are often used in robots to help them navigate and avoid obstacles, but they can also be used to detect certain types of sounds by detecting the vibrations that they cause in the environment [37].

- Ultrasonic sensor: Uses high-frequency sound waves to detect objects and measure distances. Ultrasonic sensors are often used in robots to help them navigate and avoid obstacles, but they can also be used to detect and interpret certain types of sounds [38].
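Distance measurement with high-frequency sound waves is usually based on time of flight: the sensor emits a pulse, times the echo, and halves the round-trip path. The sketch below assumes dry air and uses the common linear approximation for the speed of sound as a function of temperature; the function names are hypothetical.

```python
def speed_of_sound(temp_c=20.0):
    """Approximate speed of sound in dry air (m/s) at a temperature in degrees Celsius."""
    return 331.3 + 0.606 * temp_c

def echo_distance(round_trip_s, temp_c=20.0):
    """Distance to an obstacle (m) from the round-trip echo time (s)."""
    # The pulse travels to the obstacle and back, so only half the path counts.
    return speed_of_sound(temp_c) * round_trip_s / 2.0
```

At 20 °C, an echo that returns after 10 ms corresponds to an obstacle roughly 1.7 m away.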

4.4. The Most Popular Trends and Challenges in the Field of Robotic Hearing

- Speech recognition and synthesis: These continue to be popular trends in the field of robotic hearing, with a focus on improving the ability of robots to understand and produce human speech [41].

- Auditory scene analysis: This has emerged as a popular trend in the field of robotic hearing, where robots can analyze complex sound scenes and identify individual sound sources [42].

5. Tactile Sense

5.1. Components of Robotic Tactile Sensing

- Sensing: The robot uses sensors to detect physical sensations, such as pressure, temperature, and force. These sensors may be mounted on the surface of the robot’s skin or limbs, and they may be connected to the control system through wires or wireless signals.

- Data collection: The sensor data are collected by the control system and stored in a buffer or memory [22].

- Data processing: The control system processes the sensor data using algorithms that interpret the data and provide the robot with a sense of touch. This may involve filtering the data to remove noise or errors and applying algorithms to extract information about the shape, size, and texture of objects in the environment.

- Decision-making: The control system uses the processed sensor data to make decisions about how to interact with the environment and how to move the robot’s body. This may involve adjusting the robot’s grip on an object, avoiding collisions, or navigating around obstacles.

- Actuation: The control system sends commands to the robot’s actuators, which are responsible for moving the robot’s body. The actuators may be motors, servos, or other types of mechanical devices, and they use the commands from the control system to move the robot’s limbs and other body parts [47].
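The five components above form a sense, process, decide, and act loop. The following sketch illustrates one iteration of such a loop for a gripper that tries to hold a target contact force. It assumes a moving-average filter for data processing and a proportional rule for decision-making; the class name, gain, and window size are hypothetical choices, not values from the cited work.

```python
from collections import deque

class GripController:
    """Minimal tactile control loop: buffer, filter, decide, command."""

    def __init__(self, target_force, gain=0.5, window=3):
        self.target_force = target_force
        self.gain = gain
        self.buffer = deque(maxlen=window)  # data collection: most recent readings

    def step(self, raw_force):
        """Process one force reading and return an actuation command."""
        self.buffer.append(raw_force)                    # data collection
        filtered = sum(self.buffer) / len(self.buffer)   # data processing (noise filter)
        error = self.target_force - filtered             # decision-making
        return self.gain * error                         # actuation: change in grip effort
```

A positive command tightens the grip and a negative command loosens it, so the measured force is driven toward the target over successive steps.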

5.2. Functionality of a Robotic Tactile System

5.3. Types of Tactile Sensors Used in Robotics

- Pressure sensors: These sensors detect the amount of pressure applied to a surface. They can be used to measure the weight of an object or to detect when an object comes into contact with the robot.

- Temperature sensors: These sensors are used to measure the temperature of an object or surface. They can be used to detect changes in temperature or to monitor the temperature of an object over time [49].

- Force sensors: These sensors are used to measure the force or strength of an object or surface. They can be used to detect the amount of force being applied to the robot or to measure the strength of an object [50].

- Strain sensors: These sensors are used to measure the deformation of an object or surface. They can be used to detect changes in an object’s shape or size or measure the amount of strain being applied to the robot [51].

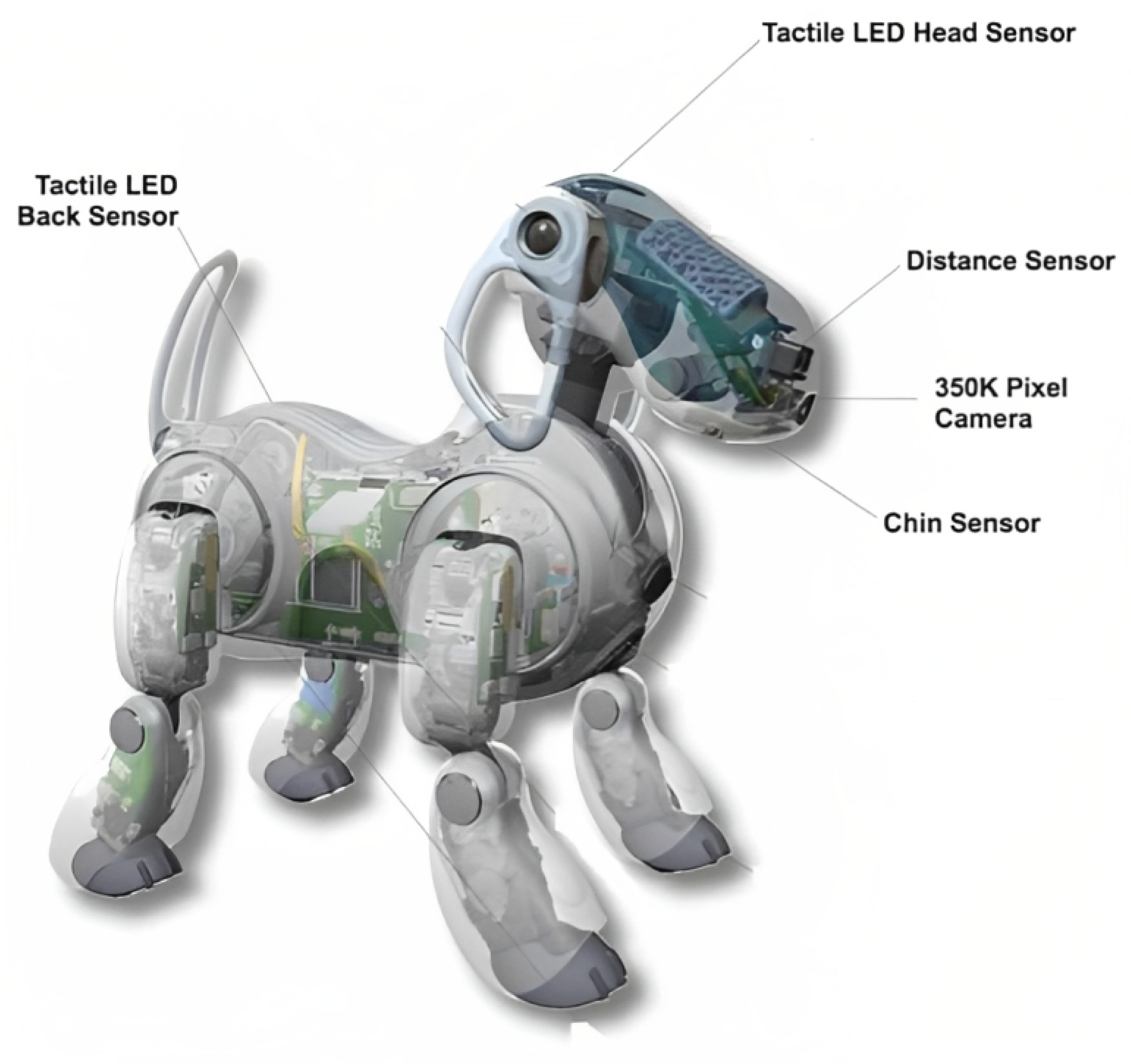

- Tactile array sensors: These sensors are made up of a large number of individual sensors that are arranged in a grid or matrix. They can be used to detect the texture, shape, and size of an object or to detect the movement of an object across the surface of the sensor [52]. An example application of this type of tactile sensor is shown in Figure 2.
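As an illustration of how a tactile array yields shape and motion information, the sketch below summarizes one frame of grid readings. It assumes each cell reports a non-negative pressure value and computes the number of cells in contact, the total pressure, and the pressure-weighted center of contact. The function name and the threshold are hypothetical.

```python
def contact_summary(grid, threshold=0.0):
    """Summarize one tactile frame given as rows of pressure readings."""
    cells = 0
    total = 0.0
    sum_row = 0.0
    sum_col = 0.0
    for r, row in enumerate(grid):
        for c, pressure in enumerate(row):
            if pressure > threshold:
                cells += 1
                total += pressure
                sum_row += r * pressure
                sum_col += c * pressure
    if cells == 0:
        return None  # nothing is touching the sensor
    return {"cells": cells, "total": total,
            "center": (sum_row / total, sum_col / total)}
```

Comparing the `center` value across consecutive frames gives a simple estimate of how an object moves across the sensor surface.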

5.4. The Most Popular Trends and Challenges in Robotic Tactile Sensing

- Soft robotics: Soft robots are robots that are made from flexible materials, such as silicone or rubber, and are designed to be able to deform and adapt to their environment. Soft robots have the potential to be more dexterous and capable of delicate manipulation; tactile sensing is a critical component of their ability to interact with the world around them [53].

- Artificial skin: Researchers are developing artificial skin for robots that can detect and interpret tactile information such as pressure, temperature, and texture, which can help robots interact more effectively with their environments and with humans [55]. For example, reference [54] proposed using tactile sensing to improve a robot’s ability to manipulate cloth.

- Grasping and manipulation: Tactile sensing is essential for robots to be able to grasp and manipulate objects, especially those that are delicate or irregularly shaped. Researchers are working on developing new algorithms and sensors to improve the ability of robots to sense and manipulate objects, even in complex environments [56].

- Prosthetics and rehabilitation: Tactile sensing is also important in the development of prosthetics and rehabilitation devices. By incorporating tactile sensors into these devices, it is possible to provide users with a more natural and intuitive experience, which can help improve their quality of life [57].

- Perception and learning: Tactile sensing can be used to help robots learn about their environment and develop more sophisticated perception capabilities. By using tactile feedback, robots can better understand the physical properties of objects and surfaces, which can help them make more informed decisions and improve their ability to interact with the world around them [58].

6. Electrical Nose

6.1. Components of an Electrical Nose

- Sensors: These are the components that detect the chemical compounds present in the sample being analyzed [63].

- Data acquisition system: This component is responsible for collecting and storing the data from the sensors [64].

- Data analysis system: This component is responsible for analyzing the data from the sensors and determining the specific chemicals present in the sample [65].

- Display or output device: This component is used to present the results of the analysis to the user [66].

- Sample introduction system: This component is responsible for introducing the sample to be analyzed into the electronic nose [67].

- Power supply: This component provides the electrical power required to operate the electronic nose [66].

- Housing or enclosure: This component encloses and protects the other components of the electronic nose [66].
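The data analysis component can be illustrated with a very small pattern-matching sketch. It assumes each sample is a vector of responses from the sensor array and that one reference vector per odor is available; the sample is normalized to remove the effect of concentration and assigned to the nearest reference. This nearest-reference rule is a stand-in for whatever method a given electronic nose actually uses, and the labels and numbers in the usage note are invented for illustration.

```python
import math

def normalize(response):
    """Scale a response vector to unit length so that only its pattern matters."""
    norm = math.sqrt(sum(v * v for v in response))
    return [v / norm for v in response] if norm else list(response)

def classify(response, references):
    """Return the label of the reference pattern closest to the sample."""
    sample = normalize(response)
    best_label = None
    best_distance = float("inf")
    for label, reference in references.items():
        distance = math.dist(sample, normalize(reference))
        if distance < best_distance:
            best_label, best_distance = label, distance
    return best_label
```

With hypothetical references `{"ethanol": [0.9, 0.2, 0.1], "ammonia": [0.1, 0.8, 0.3]}`, the sample `[1.8, 0.5, 0.2]` is assigned to `"ethanol"` because its normalized pattern is closest to that reference.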

6.2. Functionality of Electrical Nose

6.3. Types of Electrical Nose Sensors

- Metal oxide semiconductor (MOS) sensors: These sensors are based on the principle of detecting changes in electrical conductivity in response to chemical exposure. They are often used in electronic noses because they are relatively inexpensive and have a fast response time.

- Quartz crystal microbalance (QCM) sensors: These sensors are based on the principle of detecting changes in the resonant frequency of a quartz crystal in response to chemical exposure. They are highly sensitive and can detect very small amounts of chemicals.

- Surface acoustic wave (SAW) sensors: These sensors are based on the principle of detecting changes in the velocity of an acoustic wave propagating on the surface of a piezoelectric material in response to chemical exposure. They are highly sensitive and can detect very small amounts of chemicals.

- Gas sensors: Devices that detect the presence and concentration of gases in the air or environment. They work by converting the interaction between gas molecules and the sensing material into an electrical signal. There are different types of gas sensors, such as electrochemical, optical, and semiconductor sensors, each with specific principles and applications. These sensors are used in a variety of industries and applications, including air quality monitoring, industrial safety, environmental monitoring, and medical diagnosis [70].

- Mass spectrometry sensors: These sensors use mass spectrometry to identify and quantify individual chemical compounds. They are highly sensitive and can identify a wide range of chemicals, but they are also relatively expensive and require a long analysis time.
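For QCM sensors, the link between adsorbed mass and resonant frequency is commonly modeled with the Sauerbrey relation, which is introduced here as an illustration and is not taken from the source. It assumes a thin, rigid, evenly distributed film on an AT-cut quartz crystal. The constants are the standard density and shear modulus of quartz in CGS units; the function name is hypothetical.

```python
import math

QUARTZ_DENSITY = 2.648           # g/cm^3
QUARTZ_SHEAR_MODULUS = 2.947e11  # g/(cm*s^2)

def sauerbrey_shift(f0_hz, delta_mass_g, area_cm2):
    """Frequency shift (Hz) caused by a mass change on the crystal surface.

    Valid only under the Sauerbrey assumptions (thin, rigid, uniform film).
    """
    stiffness = math.sqrt(QUARTZ_DENSITY * QUARTZ_SHEAR_MODULUS)
    return -2.0 * f0_hz ** 2 * delta_mass_g / (area_cm2 * stiffness)
```

Under these assumptions, a 5 MHz crystal loses about 56.6 Hz for each microgram deposited per square centimeter, which is why QCM sensors can resolve very small amounts of adsorbed chemicals.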

6.4. The Most Popular Trends and Challenges for Robotic Electrical Noses

- Miniaturization: One of the biggest challenges in the development of electrical noses for robotics is the need for miniaturization; for example, the e-nose reported in [71] has an overall size of 164 × 143 × 65 mm. In order for robots to be able to use an electrical nose, the sensor array must be small and lightweight enough to be integrated into the robot’s design. Researchers are working on developing new materials and fabrication techniques to create smaller and more efficient electrical noses [72].

- Sensitivity and selectivity: Achieving high sensitivity and selectivity poses significant challenges in the development of electrical noses for robotics. The sensor array must be able to detect a wide range of different odors and distinguish between them, even in complex environments. Researchers are working on developing new sensor materials and signal-processing algorithms to improve the sensitivity and selectivity of electrical noses [73].

- Real-time response: In many applications, such as environmental monitoring or industrial process control, it is important for the electrical nose to provide a real-time response. This requires fast data acquisition and processing, as well as a robust control system to interpret and respond to the sensor data [74].

- Machine learning: With the growing volume of data generated by electrical noses, machine learning algorithms are playing an increasingly vital role in the development of robotic applications. Researchers are working on developing new machine learning techniques to improve the accuracy and reliability of electrical noses in detecting and identifying different odors [75].

- Integration with other sensors: In order to provide a comprehensive understanding of the environment, it is often necessary to integrate electrical noses with other sensors, such as cameras or microphones. This requires the development of new algorithms and control systems to integrate and interpret data from multiple sources [76].

7. Electronic Tongue

7.1. Components of an Electronic Tongue

- Conductive polymers: These are special polymers that are highly conductive and can be used to detect changes in conductivity, which can be indicative of certain flavors or chemical properties [80].

- Ion-selective electrodes: These are electrodes that are selectively sensitive to particular types of ions, such as sodium or potassium. They can be used to detect changes in the concentration of these ions, which can be indicative of certain flavors or chemical properties [81].

- Optical fibers: These are fibers made of special materials that can transmit light over long distances. They can be used to detect changes in the refractive index or other optical properties of a substance, which can be indicative of certain flavors or chemical properties [82].

- Piezoelectric materials: These are materials that produce an electrical charge when subjected to mechanical stress or strain. They can be used to detect changes in the mechanical properties of a substance, which can be indicative of certain flavors or chemical properties [83].

- Surface acoustic wave devices: These are devices that use sound waves to detect changes in the properties of a substance. They can be used to detect changes in the viscosity, density, or other properties of a substance, which can be indicative of certain flavors or chemical properties [84].
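For the ion-selective electrodes in the list above, the relationship between electrode potential and ion activity is commonly described by the Nernst equation. The sketch below is an illustration under the assumption of ideal Nernstian behavior; the reference potential `e0_volts` is a hypothetical calibration value that a real electrode would obtain from standard solutions.

```python
import math

GAS_CONSTANT = 8.314462618   # J/(mol*K)
FARADAY = 96485.33212        # C/mol

def nernst_slope(temp_c=25.0, charge=1):
    """Ideal electrode slope in volts per tenfold change in ion activity."""
    return GAS_CONSTANT * (temp_c + 273.15) * math.log(10) / (charge * FARADAY)

def activity_from_potential(e_volts, e0_volts, temp_c=25.0, charge=1):
    """Ion activity inferred from a measured potential (ideal behavior assumed)."""
    return 10 ** ((e_volts - e0_volts) / nernst_slope(temp_c, charge))
```

At 25 °C the slope for a singly charged ion such as sodium or potassium is about 59.16 mV per decade, so a potential change of that size indicates a tenfold change in activity.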

7.2. Functionality of Electronic Tongue

7.3. Types of Electronic Tongue Sensors

- pH sensors: These sensors are used to measure the acidity or basicity of a substance, and are often used in food and beverage production to monitor the pH of products.

- Conductivity sensors: These sensors measure the ability of a substance to conduct electricity, and can be used to detect the presence of certain ions or compounds in a sample.

- Temperature sensors: These sensors measure the temperature of a substance and can be used to monitor the temperature of food or other products during processing.

- Spectroscopy sensors: These sensors use light or other electromagnetic radiation to analyze the chemical composition of a substance, and can be used to detect specific compounds or elements.

- Chromatography sensors: These sensors use techniques such as gas chromatography or liquid chromatography to separate and analyze the components of a substance; they can be used to identify specific compounds or measure the concentrations of different substances.

- Electrochemical sensors: These sensors use electrical currents to detect the presence of certain ions or compounds in a sample, and can be used to detect the presence of contaminants or other substances of interest.

7.4. The Most Popular Trends and Challenges for Robotic Electronic Tongues

- Miniaturization: Similar to electrical noses, one of the biggest challenges in the development of electronic tongues for robotics is the need for miniaturization. The sensor array must be small and lightweight enough to be integrated into the robot’s design [72].

- Sensitivity and selectivity: As with electrical noses, achieving high sensitivity and selectivity is an important challenge for electronic tongues. The sensor array must be able to detect and distinguish between different taste properties in a wide range of environments and conditions [73].

- Real-time response: Similar to electrical noses, real-time response is important in many applications for electronic tongues, such as food and beverage quality control. This requires fast data acquisition and processing, as well as a robust control system to interpret and respond to the sensor data [74].

- Machine learning: Machine learning algorithms are becoming increasingly important in the development of robotics applications, including electronic tongues. Researchers are working on developing new machine learning techniques to improve the accuracy and reliability of electronic tongues in detecting and identifying different taste properties [75].

- Integration with other sensors: To provide a comprehensive understanding of food and beverage products, it is often necessary to integrate electronic tongues with other sensors, such as color sensors or pH sensors. This requires the development of new algorithms and control systems to integrate and interpret data from multiple sources [76].

8. Sixth Sense

8.1. The Difference between the Sixth Sense and the Other Five Senses in Robots

8.2. Components of a Sixth Sense System

- Sensors: These would include visual sensors (cameras), auditory sensors (microphones), tactile sensors, gustatory sensors (for detecting taste), and olfactory sensors.

- Manipulators: These would include arms, hands, or other devices that allow the robot to interact with its environment, such as picking up objects or manipulating tools.

- Processor: This would be the central "brain" of the robot, responsible for processing the data from the sensors, executing commands, and controlling the manipulators.

- Power supply: This would provide the electrical power required to operate the robot.

- Housing or enclosure: This would enclose and protect the other components of the robot.

- Machine learning system: This would allow the robot to learn and adapt to new situations and environments, using techniques such as artificial neural networks and other machine learning algorithms.

8.3. Functionality of a Sixth Sense System

- Navigate its environment using visual and/or other sensors to avoid obstacles and locate objects or destinations.

- Identify and classify objects and other beings using visual and/or other sensors, and possibly use machine learning algorithms to improve its ability to recognize and classify new objects and beings.

- Interact with objects and other beings using its manipulators, and possibly using force sensors and other sensors to gauge the appropriate amount of force to apply.

- Communicate with other beings using various modalities such as speech, gestures, and facial expressions.

- Learn and adapt to new situations and environments using machine learning algorithms and other techniques to improve its performance over time.

8.4. Types of Sixth Sense Sensors

- Temperature sensors: These sensors can detect changes in temperature and could be used to enable a robot to sense and respond to changes in its environment [92].

- Pressure sensors: These sensors can detect changes in pressure and could be used to enable a robot to sense and respond to changes in its environment, such as changes in the amount of force being applied to it [93].

- Humidity sensors: These sensors can detect changes in humidity and could be used to enable a robot to sense and respond to changes in its environment [92].

- Cameras: These sensors can capture images and video, and could be used to enable a robot to perceive and understand its environment in a more sophisticated way [94].

- Microphones: These sensors can detect and record sound waves, and could be used to enable a robot to perceive and understand its environment through hearing [94].

- LiDAR sensors: These sensors use lasers to measure distance and can be used to enable a robot to build up a detailed 3D map of its environment [95].
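As an illustration of how LiDAR range readings become map points, the sketch below converts a single planar scan from polar to Cartesian coordinates in the sensor frame. It assumes evenly spaced beam angles and treats non-finite or out-of-range readings as missing; the parameter names are hypothetical. Building a 3D map would additionally require an elevation angle per beam and the pose of the sensor.

```python
import math

def scan_to_points(ranges, angle_min, angle_increment, max_range=float("inf")):
    """Convert a planar scan (meters, radians) into (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(ranges):
        # Skip beams with no valid return.
        if not math.isfinite(r) or r <= 0 or r > max_range:
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points
```

Accumulating such points over many scans, each transformed by the robot’s estimated pose, is one simple way to build up a map of the environment.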

8.5. Sixth Sense Techniques

- Using sensors to detect and interpret physical phenomena that are not directly visible to the robot, such as temperature, pressure, or humidity. For example, a robot might have a Sixth Sense for temperature that allows it to detect changes in the ambient temperature and adjust its behavior accordingly [96].

- Using machine learning algorithms to process and interpret complex visual or auditory information in real-time. For example, a robot might be equipped with cameras and machine learning algorithms that allow it to recognize and classify objects in its environment, or to understand and respond to spoken commands [97].

- Using neural networks or other artificial intelligence techniques to enable the robot to make decisions and take actions based on its environment and its goals. For example, a robot might be programmed to navigate through a crowded environment by using its Sixth Sense to avoid obstacles and find the optimal path [98].
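The obstacle-avoidance example in the last item can be made concrete with a classical planner. The sketch below uses breadth-first search on an occupancy grid, which is a simple substitute for the neural network or AI techniques mentioned above and finds a path with the fewest steps. It assumes a grid of 0 (free) and 1 (obstacle) cells, four-connected moves, and a free start cell; the function name is hypothetical.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None if unreachable."""
    height, width = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < height and 0 <= nc < width
                    and grid[nr][nc] == 0 and (nr, nc) not in parents):
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

In practice the grid would be filled from sensor data, and the path would be recomputed as new obstacles are detected.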

8.6. The Most Popular Trends and Challenges for a Robot’s Sixth Sense

- Multi-sensor fusion: One of the main challenges in developing Sixth Sense capabilities for robots is the need to integrate data from multiple sensors and sources. This involves developing sophisticated algorithms for data fusion and interpretation that can combine information from a wide range of sensors, such as cameras, microphones, pressure sensors, and temperature sensors [99].

- Machine learning: Machine learning and artificial intelligence (AI) are key technologies that can help robots develop a Sixth Sense. By analyzing and interpreting data from multiple sensors, robots can learn to recognize patterns and make predictions about their environment. This can help robots navigate complex environments, detect and avoid obstacles, and interact more intelligently with their surroundings [100].

- Haptic feedback: Haptic feedback, which involves providing robots with the ability to feel and respond to physical stimuli, is a key part of developing a Sixth Sense for robots. This involves developing sensors and actuators that can provide feedback to robots about their environment, such as changes in pressure or temperature [101].

- Augmented reality (AR): Augmented reality technology can be used to enhance a robot’s perception of the world by providing additional visual or auditory information. This can help robots recognize and interact with objects more effectively, even in complex and changing environments [102].

- Human–robot interaction: Developing a Sixth Sense for robots also requires the ability to interact with humans in a natural and intuitive way. This involves developing sensors and algorithms that can recognize human gestures and expressions, as well as natural language processing capabilities that enable robots to understand and respond to human speech [103].
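One of the simplest forms of the multi-sensor fusion described in this list is inverse-variance weighting, shown here as a minimal sketch. It assumes that each sensor reports an independent estimate of the same quantity together with the variance of its noise; more reliable sensors then receive larger weights. The function name is hypothetical, and practical systems usually extend this idea with Kalman filtering or learned models.

```python
def fuse(estimates):
    """Fuse (value, variance) pairs into a single (value, variance) estimate."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    # The fused variance is never larger than the smallest input variance.
    return value, 1.0 / total
```

For example, fusing a camera-based range of 10 m and a LiDAR range of 12 m with equal variances of 1 m² gives 11 m with a variance of 0.5 m², which is lower than that of either sensor alone.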

9. Summary

10. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wang, J.; Gao, Q.; Pan, M.; Fang, Y. Device-Free Wireless Sensing: Challenges, Opportunities, and Applications. IEEE Netw. 2018, 32, 132–137. [Google Scholar] [CrossRef]

- Zhu, Z.; Hu, H. Robot Learning from Demonstration in Robotic Assembly: A Survey. Robotics 2018, 7, 17. [Google Scholar] [CrossRef]

- Yousef, H.; Boukallel, M.; Althoefer, K. Tactile sensing for dexterous in-hand manipulation in robotics—A review. Sens. Actuators A Phys. 2011, 167, 171–187. [Google Scholar] [CrossRef]

- Liakos, K.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674. [Google Scholar] [CrossRef]

- Ishida, H.; Wada, Y.; Matsukura, H. Chemical Sensing in Robotic Applications: A Review. IEEE Sens. J. 2012, 12, 3163–3173. [Google Scholar] [CrossRef]

- Andrea, C.; Navarro-Alarcon, D. Sensor-Based Control for Collaborative Robots: Fundamentals, Challenges, and Opportunities. Front. Neurorobot. 2021, 113, 576846. [Google Scholar] [CrossRef]

- Coggins, T.N. More work for Roomba? Domestic robots, housework and the production of privacy. Prometheus 2022, 38, 98–112. [Google Scholar] [CrossRef]

- Blanes, C.; Ortiz, C.; Mellado, M.; Beltrán, P. Assessment of eggplant firmness with accelerometers on a pneumatic robot gripper. Comput. Electron. Agric. 2015, 113, 44–50. [Google Scholar] [CrossRef]

- Russell, R.A. Survey of Robotic Applications for Odor-Sensing Technology. Int. J. Robot. Res. 2001, 20, 144–162. [Google Scholar] [CrossRef]

- Deshmukh, A. Survey Paper on Stereo-Vision Based Object Finding Robot. Int. J. Res. Appl. Sci. Eng. Technol. 2017, 5, 2100–2103. [Google Scholar] [CrossRef]

- Deuerlein, C.; Langer, M.; Seßner, J.; Heß, P.; Franke, J. Human-robot-interaction using cloud-based speech recognition systems. Procedia Cirp 2021, 97, 130–135. [Google Scholar] [CrossRef]

- Alameda-Pineda, X.; Horaud, R. Vision-guided robot hearing. Int. J. Robot. Res. 2014, 34, 437–456. [Google Scholar] [CrossRef]

- Tan, J.; Xu, J. Applications of electronic nose (e-nose) and electronic tongue (e-tongue) in food quality-related properties determination: A review. Artif. Intell. Agric. 2020, 4, 104–115. [Google Scholar] [CrossRef]

- Chanda, P.; Mukherjee, P.K.; Modak, S.; Nath, A. Gesture controlled robot using Arduino and android. Int. J. 2016, 6, 227–234. [Google Scholar]

- Chen, G.; Dong, W.; Sheng, X.; Zhu, X.; Ding, H. An Active Sense and Avoid System for Flying Robots in Dynamic Environments. IEEE/ASME Trans. Mechatron. 2021, 26, 668–678. [Google Scholar] [CrossRef]

- Cong, Y.; Gu, C.; Zhang, T.; Gao, Y. Underwater robot sensing technology: A survey. Fundam. Res. 2021, 1, 337–345. [Google Scholar] [CrossRef]

- De Jong, M.; Zhang, K.; Roth, A.M.; Rhodes, T.; Schmucker, R.; Zhou, C.; Ferreira, S.; Cartucho, J.; Veloso, M. Towards a robust interactive and learning social robot. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 883–891. [Google Scholar]

- Chakraborty, E. What Is Robotic Vision?|5+ Important Applications. Lambda Geeks. Available online: https://lambdageeks.com/robotic-vision-important-features/ (accessed on 4 February 2023).

- YouTube. Robotic Vision System AEE Robotics Part 9. 2021. Available online: https://www.youtube.com/watch?v=7csTyRjKAeE (accessed on 4 February 2023).

- Tao, S.; Cao, J. Research on Machine Vision System Design Based on Deep Learning Neural Network. Wirel. Commun. Mob. Comput. 2022, 2022, 4808652. [Google Scholar] [CrossRef]

- LTCC, PCB and Reticle Inspection Solutions—Stratus Vision AOI. (n.d.). Stratus Vision AOI. Available online: https://stratusvision.com/ (accessed on 4 February 2023).

- Pan, L.; Yang, S.X. An Electronic Nose Network System for Online Monitoring of Livestock Farm Odors. IEEE/ASME Trans. Mechatron. 2009, 14, 371–376. [Google Scholar] [CrossRef]

- Understanding What Is a Robot Vision System|Techman Robot. Techman Robot. 2021. Available online: https://www.tm-robot.com/en/robot-vision-system/ (accessed on 4 February 2023).

- Yeong, D.J.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors 2021, 21, 2140. [Google Scholar] [CrossRef]

- Ahmed, I.; Jeon, G.; Piccialli, F. From Artificial Intelligence to Explainable Artificial Intelligence in Industry 4.0: A Survey on What, How, and Where. IEEE Trans. Ind. Inform. 2022, 18, 5031–5042. [Google Scholar] [CrossRef]

- Nikravan, M.; Kashani, M.H. A review on trust management in fog/edge computing: Techniques, trends, and challenges. J. Netw. Comput. Appl. 2022, 204, 103402. [Google Scholar] [CrossRef]

- Huang, P.; Zeng, L.; Chen, X.; Huang, L.; Zhou, Z.; Yu, S. Edge Robotics: Edge-Computing-Accelerated Multirobot Simultaneous Localization and Mapping. IEEE Internet Things J. 2022, 9, 14087–14102. [Google Scholar] [CrossRef]

- Wang, S.-T.; Li, I.-H.; Wang, W.-Y. Human Action Recognition of Autonomous Mobile Robot Using Edge-AI. IEEE Sens. J. 2023, 23, 1671–1682. [Google Scholar] [CrossRef]

- Matarese, M.; Rea, F.; Sciutti, A. Perception is Only Real When Shared: A Mathematical Model for Collaborative Shared Perception in Human-Robot Interaction. Front. Robot. AI 2022, 9, 733954. [Google Scholar] [CrossRef] [PubMed]

- Billah, M.A.; Faruque, I.A. Robustness in bio-inspired visually guided multi-agent flight and the gain modulation hypothesis. Int. J. Robust Nonlinear Control. 2022, 33, 1316–1334. [Google Scholar] [CrossRef]

- Attanayake, A.M.N.C.; Hansamali, W.G.R.U.; Hirshan, R.; Haleem, M.A.L.A.; Hinas, M.N.A. Amigo (A Social Robot): Development of a robot hearing system. In Proceedings of the IET 28th Annual Technical Conference, Virtual, 28 August 2021. [Google Scholar]

- ElGibreen, H.; Al Ali, G.; AlMegren, R.; AlEid, R.; AlQahtani, S. Telepresence Robot System for People with Speech or Mobility Disabilities. Sensors 2022, 22, 8746. [Google Scholar] [CrossRef]

- Karimian, P. Audio Communication for Multi-Robot Systems. Master’s Thesis, Simon Fraser University, Burnaby, BC, Canada, 2007. [Google Scholar]

- Robotics 101: Sensors That Allow Robots to See, Hear, Touch, and Move|Possibility|Teledyne Imaging. Available online: https://possibility.teledyneimaging.com/robotics-101-sensors-that-allow-robots-to-see-hear-touch-and-move/ (accessed on 4 February 2023).

- Shimada, K. Morphological Fabrication of Equilibrium and Auditory Sensors through Electrolytic Polymerization on Hybrid Fluid Rubber (HF Rubber) for Smart Materials of Robotics. Sensors 2022, 22, 5447. [Google Scholar] [CrossRef]

- Darwish, A.; Halkon, B.; Oberst, S. Non-Contact Vibro-Acoustic Object Recognition Using Laser Doppler Vibrometry and Convolutional Neural Networks. Sensors 2022, 22, 9360. [Google Scholar] [CrossRef]

- Alkhatib, A.A.; Elbes, M.W.; Abu Maria, E.M. Improving accuracy of wireless sensor networks localisation based on communication ranging. IET Commun. 2020, 14, 3184–3193. [Google Scholar] [CrossRef]

- Masoud, M.; Jaradat, Y.; Manasrah, A.; Jannoud, I. Sensors of smart devices in the internet of everything (IoE) era: Big opportunities and massive doubts. J. Sens. 2019, 2019, 6514520. [Google Scholar] [CrossRef]

- Senocak, A.; Ryu, H.; Kim, J.; Kweon, I.S. Learning sound localization better from semantically similar samples. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 4863–4867. [Google Scholar]

- Qiu, Y.; Li, B.; Huang, J.; Jiang, Y.; Wang, B.; Huang, Z. An Analytical Method for 3-D Sound Source Localization Based on a Five-Element Microphone Array. IEEE Trans. Instrum. Meas. 2022, 71, 7504314. [Google Scholar] [CrossRef]

- Kumar, T.; Mahrishi, M.; Meena, G. A comprehensive review of recent automatic speech summarization and keyword identification techniques. In Artificial Intelligence in Industrial Applications: Approaches to Solve the Intrinsic Industrial Optimization Problems; Springer: Berlin/Heidelberg, Germany, 2022; pp. 111–126. [Google Scholar]

- Nakadai, K.; Okuno, H.G. Robot Audition and Computational Auditory Scene Analysis. Adv. Intell. Syst. 2020, 2, 2000050. [Google Scholar] [CrossRef]

- Bruck, J.N.; Walmsley, S.F.; Janik, V.M. Cross-modal perception of identity by sound and taste in bottlenose dolphins. Sci. Adv. 2022, 8, eabm7684. [Google Scholar] [CrossRef]

- Zhou, W.; Yue, Y.; Fang, M.; Qian, X.; Yang, R.; Yu, L. BCINet: Bilateral cross-modal interaction network for indoor scene understanding in RGB-D images. Inf. Fusion 2023, 94, 32–42. [Google Scholar] [CrossRef]

- Dahiya, R.S.; Metta, G.; Valle, M.; Sandini, G. Tactile Sensing—From Humans to Humanoids. IEEE Trans. Robot. 2009, 26, 1–20. [Google Scholar] [CrossRef]

- Thai, M.T.; Phan, P.T.; Hoang, T.T.; Wong, S.; Lovell, N.H.; Do, T.N. Advanced intelligent systems for surgical robotics. Adv. Intell. Syst. 2020, 2, 1900138. [Google Scholar] [CrossRef]

- Liu, Y.; Aleksandrov, M.; Hu, Z.; Meng, Y.; Zhang, L.; Zlatanova, S.; Ai, H.; Tao, P. Accurate light field depth estimation under occlusion. Pattern Recognit. 2023, 138, 109415. [Google Scholar] [CrossRef]

- Lepora, N.F. Soft Biomimetic Optical Tactile Sensing With the TacTip: A Review. IEEE Sens. J. 2021, 21, 21131–21143. [Google Scholar] [CrossRef]

- Li, S.; Zhang, Y.; Wang, Y.; Xia, K.; Yin, Z.; Wang, H.; Zhang, M.; Liang, X.; Lu, H.; Zhu, M.; et al. Physical sensors for skin-inspired electronics. InfoMat 2020, 2, 184–211. [Google Scholar] [CrossRef]

- Templeman, J.O.; Sheil, B.B.; Sun, T. Multi-axis force sensors: A state-of-the-art review. Sens. Actuators A Phys. 2020, 304, 111772. [Google Scholar] [CrossRef]

- Seyedin, S.; Zhang, P.; Naebe, M.; Qin, S.; Chen, J.; Wang, X.; Razal, J.M. Textile strain sensors: A review of the fabrication technologies, performance evaluation and applications. Mater. Horiz. 2019, 6, 219–249. [Google Scholar] [CrossRef]

- Scimeca, L.; Hughes, J.; Maiolino, P.; Iida, F. Model-Free Soft-Structure Reconstruction for Proprioception Using Tactile Arrays. IEEE Robot. Autom. Lett. 2019, 4, 2479–2484. [Google Scholar] [CrossRef]

- Whitesides, G.M. Soft Robotics. Angew. Chem. Int. Ed. 2018, 57, 4258–4273. [Google Scholar] [CrossRef] [PubMed]

- Tirumala, S.; Weng, T.; Seita, D.; Kroemer, O.; Temel, Z.; Held, D. Learning to Singulate Layers of Cloth using Tactile Feedback. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 7773–7780. [Google Scholar]

- Pang, Y.; Xu, X.; Chen, S.; Fang, Y.; Shi, X.; Deng, Y.; Wang, Z.-L.; Cao, C. Skin-Inspired Textile-Based Tactile Sensors Enable Multifunctional Sensing of Wearables and Soft Robots. SSRN Electron. J. 2022, 96, 107137. [Google Scholar] [CrossRef]

- Babin, V.; Gosselin, C. Mechanisms for robotic grasping and manipulation. Annu. Rev. Control Robot. Auton. Syst. 2021, 4, 573–593. [Google Scholar] [CrossRef]

- Liu, H.; Guo, D.; Sun, F.; Yang, W.; Furber, S.; Sun, T. Embodied tactile perception and learning. Brain Sci. Adv. 2020, 6, 132–158. [Google Scholar] [CrossRef]

- Beckerle, P.; Salvietti, G.; Unal, R.; Prattichizzo, D.; Rossi, S.; Castellini, C.; Hirche, S.; Endo, S.; Amor, H.B.; Ciocarlie, M.; et al. A human–robot interaction perspective on assistive and rehabilitation robotics. Front. Neurorobot. 2017, 11, 24. [Google Scholar] [CrossRef]

- Bianco, A. The Sony AIBO—The World’s First Robotic Dog. Available online: https://sabukaru.online/articles/the-sony-aibo-the-worlds-first-robotic-dog (accessed on 13 April 2023).

- Göpel, W. Chemical imaging: I. Concepts and visions for electronic and bioelectronic noses. Sens. Actuators B Chem. 1998, 52, 125–142. [Google Scholar] [CrossRef]

- Fitzgerald, J.E.; Bui, E.T.H.; Simon, N.M.; Fenniri, H. Artificial Nose Technology: Status and Prospects in Diagnostics. Trends Biotechnol. 2017, 35, 33–42. [Google Scholar] [CrossRef]

- Kim, S.; Chen, J.; Cheng, T.; Gindulyte, A.; He, J.; He, S.; Li, Q.; Shoemaker, B.A.; Thiessen, P.A.; Yu, B.; et al. PubChem in 2021: New data content and improved web interfaces. Nucleic Acids Res. 2021, 49, D1388–D1395. [Google Scholar] [CrossRef]

- James, D.; Scott, S.M.; Ali, Z.; O’Hare, W.T. Chemical Sensors for Electronic Nose Systems. Microchim. Acta 2005, 149, 1–17. [Google Scholar] [CrossRef]

- Chueh, H.-T.; Hatfield, J.V. A real-time data acquisition system for a hand-held electronic nose (H2EN). Sens. Actuators B Chem. 2002, 83, 262–269. [Google Scholar] [CrossRef]

- Pan, L.; Yang, S.X. A new intelligent electronic nose system for measuring and analysing livestock and poultry farm odours. Environ. Monit. Assess. 2007, 135, 399–408. [Google Scholar] [CrossRef] [PubMed]

- Ampuero, S.; Bosset, J.O. The electronic nose applied to dairy products: A review. Sens. Actuators B Chem. 2003, 94, 1–12. [Google Scholar] [CrossRef]

- Simpkins, A. Robotic Tactile Sensing: Technologies and System (Dahiya, R.S. and Valle, M.; 2013) (On the Shelf). IEEE Robot. Autom. Mag. 2013, 20, 107. [Google Scholar] [CrossRef]

- Shepherd, G.M. Smell images and the flavour system in the human brain. Nature 2006, 444, 316–321. [Google Scholar] [CrossRef]

- Nagle, H.T.; Gutierrez-Osuna, R.; Schiffman, S.S. The how and why of electronic noses. IEEE Spectrum 1998, 35, 22–31. [Google Scholar] [CrossRef]

- Tladi, B.C.; Kroon, R.E.; Swart, H.C.; Motaung, D.E. A holistic review on the recent trends, advances, and challenges for high-precision room temperature liquefied petroleum gas sensors. Anal. Chim. Acta 2023, 1253, 341033. [Google Scholar] [CrossRef]

- Sun, Z.H.; Liu, K.X.; Xu, X.H.; Meng, Q.H. Odor evaluation of vehicle interior materials based on portable E-nose. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; pp. 2998–3003. [Google Scholar]

- Viejo, C.G.; Fuentes, S.; Godbole, A.; Widdicombe, B.; Unnithan, R.R. Development of a low-cost e-nose to assess aroma profiles: An artificial intelligence application to assess beer quality. Sens. Actuators B Chem. 2020, 308, 127688. [Google Scholar] [CrossRef]

- Szulczyński, B.; Wasilewski, T.; Wojnowski, W.; Majchrzak, T.; Dymerski, T.; Namieśnik, J.; Gębicki, J. Different ways to apply a measurement instrument of E-nose type to evaluate ambient air quality with respect to odour nuisance in a vicinity of municipal processing plants. Sensors 2017, 17, 2671. [Google Scholar] [CrossRef]

- Trivino, R.; Gaibor, D.; Mediavilla, J.; Guarnan, A.V. Challenges to embed an electronic nose on a mobile robot. In Proceedings of the 2016 IEEE ANDESCON, Arequipa, Peru, 19–21 October 2016; pp. 1–4. [Google Scholar]

- Ye, Z.; Liu, Y.; Li, Q. Recent progress in smart electronic nose technologies enabled with machine learning methods. Sensors 2021, 21, 7620. [Google Scholar] [CrossRef] [PubMed]

- Chiu, S.W.; Tang, K.T. Towards a chemiresistive sensor-integrated electronic nose: A review. Sensors 2013, 13, 14214–14247. [Google Scholar] [CrossRef] [PubMed]

- Seesaard, T.; Wongchoosuk, C. Recent Progress in Electronic Noses for Fermented Foods and Beverages Applications. Fermentation 2022, 8, 302. [Google Scholar] [CrossRef]

- Rodríguez-Méndez, M.L.; Apetrei, C.; de Saja, J.A. Evaluation of the polyphenolic content of extra virgin olive oils using an array of voltammetric sensors. Electrochim. Acta 2008, 53, 5867–5872. [Google Scholar] [CrossRef]

- Ribeiro, C.M.G.; Strunkis, C.D.M.; Campos, P.V.S.; Salles, M.O. Electronic nose and tongue materials for Sensing. In Reference Module in Biomedical Sciences; Elsevier: Amsterdam, The Netherlands, 2021. [Google Scholar]

- Sierra-Padilla, A.; García-Guzmán, J.J.; López-Iglesias, D.; Palacios-Santander, J.M.; Cubillana-Aguilera, L. E-Tongues/noses based on conducting polymers and composite materials: Expanding the possibilities in complex analytical sensing. Sensors 2021, 21, 4976. [Google Scholar] [CrossRef]

- Yan, R.; Qiu, S.; Tong, L.; Qian, Y. Review of progresses on clinical applications of ion selective electrodes for electrolytic ion tests: From conventional ISEs to graphene-based ISEs. Chem. Speciat. Bioavailab. 2016, 28, 72–77. [Google Scholar] [CrossRef]

- Floris, I.; Sales, S.; Calderón, P.A.; Adam, J.M. Measurement uncertainty of multicore optical fiber sensors used to sense curvature and bending direction. Measurement 2019, 132, 35–46. [Google Scholar] [CrossRef]

- Kiran, E.; Kaur, K.; Aggarwal, P. Artificial senses and their fusion as a booming technique in food quality assessment—A review. Qual. Assur. Saf. Crop. Foods 2022, 14, 9–18. [Google Scholar]

- Zhou, B. Construction and simulation of online English reading model in wireless surface acoustic wave sensor environment optimized by particle swarm optimization. Discret. Dyn. Nat. Soc. 2022, 2022, 1633781. [Google Scholar] [CrossRef]

- Mohamed, Z.; Shareef, H. An Adjustable Machine Learning Gradient Boosting-Based Controller for Pv Applications. SSRN Electron. J. 2022. [Google Scholar] [CrossRef]

- Shimada, K. Artificial Tongue Embedded with Conceptual Receptor for Rubber Gustatory Sensor by Electrolytic Polymerization Technique with Utilizing Hybrid Fluid (HF). Sensors 2022, 22, 6979. [Google Scholar] [CrossRef] [PubMed]

- Cominelli, L.; Carbonaro, N.; Mazzei, D.; Garofalo, R.; Tognetti, A.; De Rossi, D. A Multimodal Perception Framework for Users Emotional State Assessment in Social Robotics. Future Internet 2017, 9, 42. [Google Scholar] [CrossRef]

- Grall, C.; Finn, E.S. Leveraging the power of media to drive cognition: A media-informed approach to naturalistic neuroscience. Soc. Cogn. Affect. Neurosci. 2022, 17, 598–608. [Google Scholar] [CrossRef]

- Bonci, A.; Cen Cheng, P.D.; Indri, M.; Nabissi, G.; Sibona, F. Human-robot perception in industrial environments: A survey. Sensors 2021, 21, 1571. [Google Scholar] [CrossRef] [PubMed]

- Laut, C.L.; Leasure, C.S.; Pi, H.; Carlin, S.M.; Chu, M.L.; Hillebrand, G.H.; Lin, H.K.; Yi, X.I.; Stauff, D.L.; Skaar, E.P. DnaJ and ClpX Are Required for HitRS and HssRS Two-Component System Signaling in Bacillus anthracis. Infect. Immun. 2022, 90, e00560-21. [Google Scholar] [CrossRef]

- Bari, R.; Gupta, A.K.; Mathur, P. An Overview of the Emerging Technology: Sixth Sense Technology: A Review. In Proceedings of the Second International Conference on Information Management and Machine Intelligence: ICIMMI 2020, Jaipur, India, 24–25 July 2020; Springer: Singapore, 2021; pp. 245–254. [Google Scholar]

- Wikelski, M.; Ponsford, M. Collective behaviour is what gives animals their “Sixth Sense”. New Sci. 2022, 254, 43–45. [Google Scholar] [CrossRef]

- Xu, G.; Wan, Q.; Deng, W.; Guo, T.; Cheng, J. Smart-Sleeve: A Wearable Textile Pressure Sensor Array for Human Activity Recognition. Sensors 2022, 22, 1702. [Google Scholar] [CrossRef]

- Hui, T.K.L.; Sherratt, R.S. Towards disappearing user interfaces for ubiquitous computing: Human enhancement from Sixth Sense to super senses. J. Ambient. Intell. Humaniz. Comput. 2017, 8, 449–465. [Google Scholar] [CrossRef]

- Li, N.; Ho, C.P.; Xue, J.; Lim, L.W.; Chen, G.; Fu, Y.H.; Lee, L.Y.T. A Progress Review on Solid-State LiDAR and Nanophotonics-Based LiDAR Sensors. Laser Photonics Rev. 2022, 16, 2100511. [Google Scholar] [CrossRef]

- Randall, N.; Bennett, C.C.; Šabanović, S.; Nagata, S.; Eldridge, L.; Collins, S.; Piatt, J.A. More than just friends: In-home use and design recommendations for sensing socially assistive robots (SARs) by older adults with depression. Paladyn J. Behav. Robot. 2019, 10, 237–255. [Google Scholar] [CrossRef]

- Shih, B.; Shah, D.; Li, J.; Thuruthel, T.G.; Park, Y.-L.; Iida, F.; Bao, Z.; Kramer-Bottiglio, R.; Tolley, M.T. Electronic skins and machine learning for intelligent soft robots. Sci. Robot. 2020, 5, eaaz9239. [Google Scholar] [CrossRef]

- Anagnostis, A.; Benos, L.; Tsaopoulos, D.; Tagarakis, A.; Tsolakis, N.; Bochtis, D. Human Activity Recognition through Recurrent Neural Networks for Human–Robot Interaction in Agriculture. Appl. Sci. 2021, 11, 2188. [Google Scholar] [CrossRef]

- Tsanousa, A.; Bektsis, E.; Kyriakopoulos, C.; González, A.G.; Leturiondo, U.; Gialampoukidis, I.; Karakostas, A.; Vrochidis, S.; Kompatsiaris, I. A review of multisensor data fusion solutions in smart manufacturing: Systems and trends. Sensors 2022, 22, 1734. [Google Scholar] [CrossRef] [PubMed]

- Kumar S.N., N.; Zahid, M.; Khan, S.M. Sixth Sense Robot For The Collection of Basic Land Survey Data. Int. Res. J. Eng. Technol. 2021, 8, 4484–4489. [Google Scholar]

- Saracino, A.; Deguet, A.; Staderini, F.; Boushaki, M.N.; Cianchi, F.; Menciassi, A.; Sinibaldi, E. Haptic feedback in the da Vinci Research Kit (dVRK): A user study based on grasping, palpation, and incision tasks. Int. J. Med. Robot. Comput. Assist. Surg. 2019, 15, e1999. [Google Scholar] [CrossRef]

- García, A.; Solanes, J.E.; Muñoz, A.; Gracia, L.; Tornero, J. Augmented Reality-Based Interface for Bimanual Robot Teleoperation. Appl. Sci. 2022, 12, 4379. [Google Scholar] [CrossRef]

- Akalin, N.; Kristoffersson, A.; Loutfi, A. Evaluating the sense of safety and security in human–robot interaction with older people. In Social Robots: Technological, Societal and Ethical Aspects of Human-Robot Interaction; Springer: Berlin/Heidelberg, Germany, 2019; pp. 237–264. [Google Scholar]

| Robotic Sensing | Components of Sensing System | Functionality | Sensor Types | Applications |
|---|---|---|---|---|
| Vision | | | | |
| Hearing Sense | | | | |
| Tactile Sense | | | | |
| Electronic Nose | | | | |
| Electronic Tongue | | | | |
| Sixth Sense | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Albustanji, R.N.; Elmanaseer, S.; Alkhatib, A.A.A. Robotics: Five Senses plus One—An Overview. Robotics 2023, 12, 68. https://doi.org/10.3390/robotics12030068
