Introducing Machine Learning in Teaching Quantum Mechanics

Abstract

1. Introduction

2. Quantum Mechanical Systems

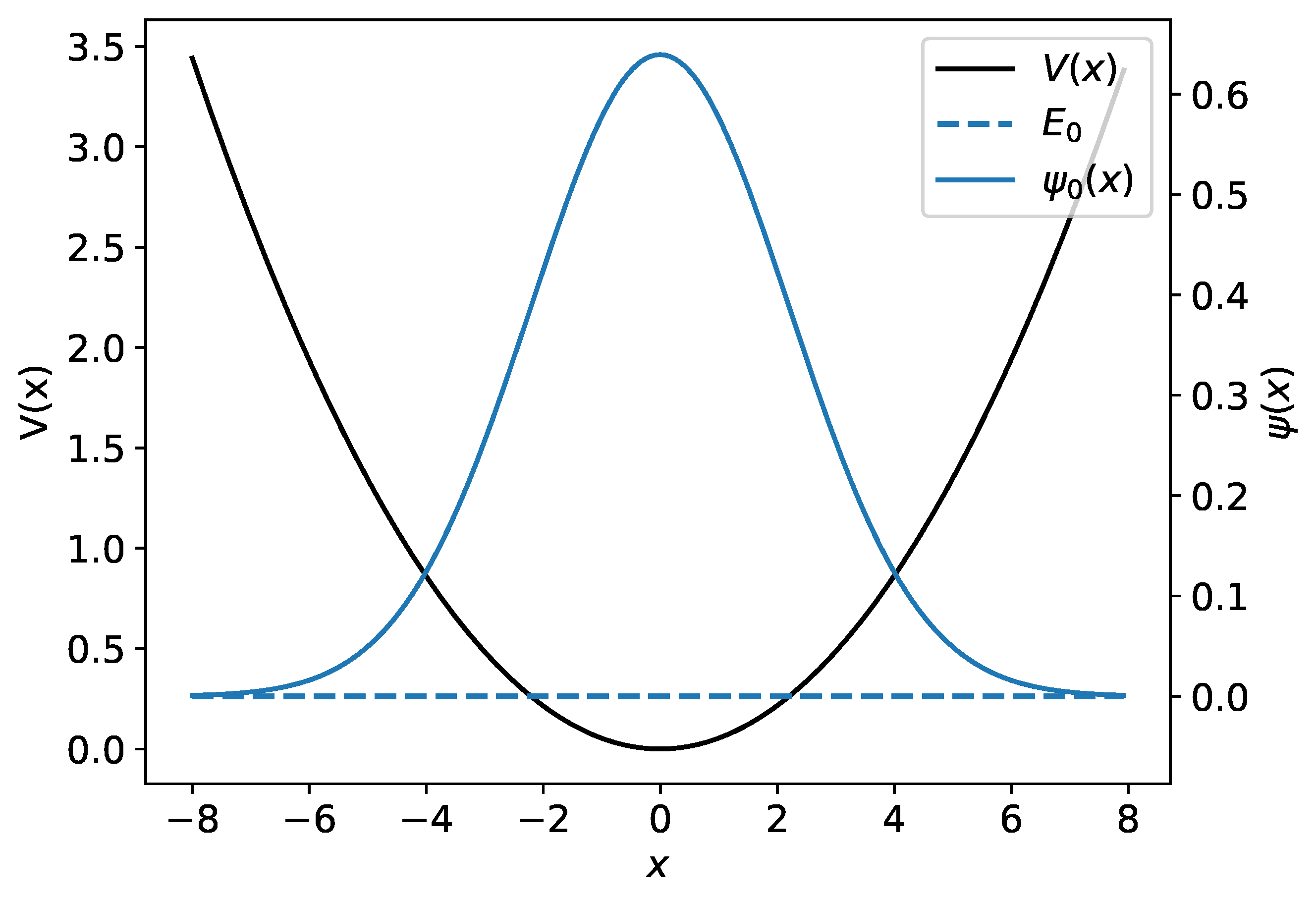

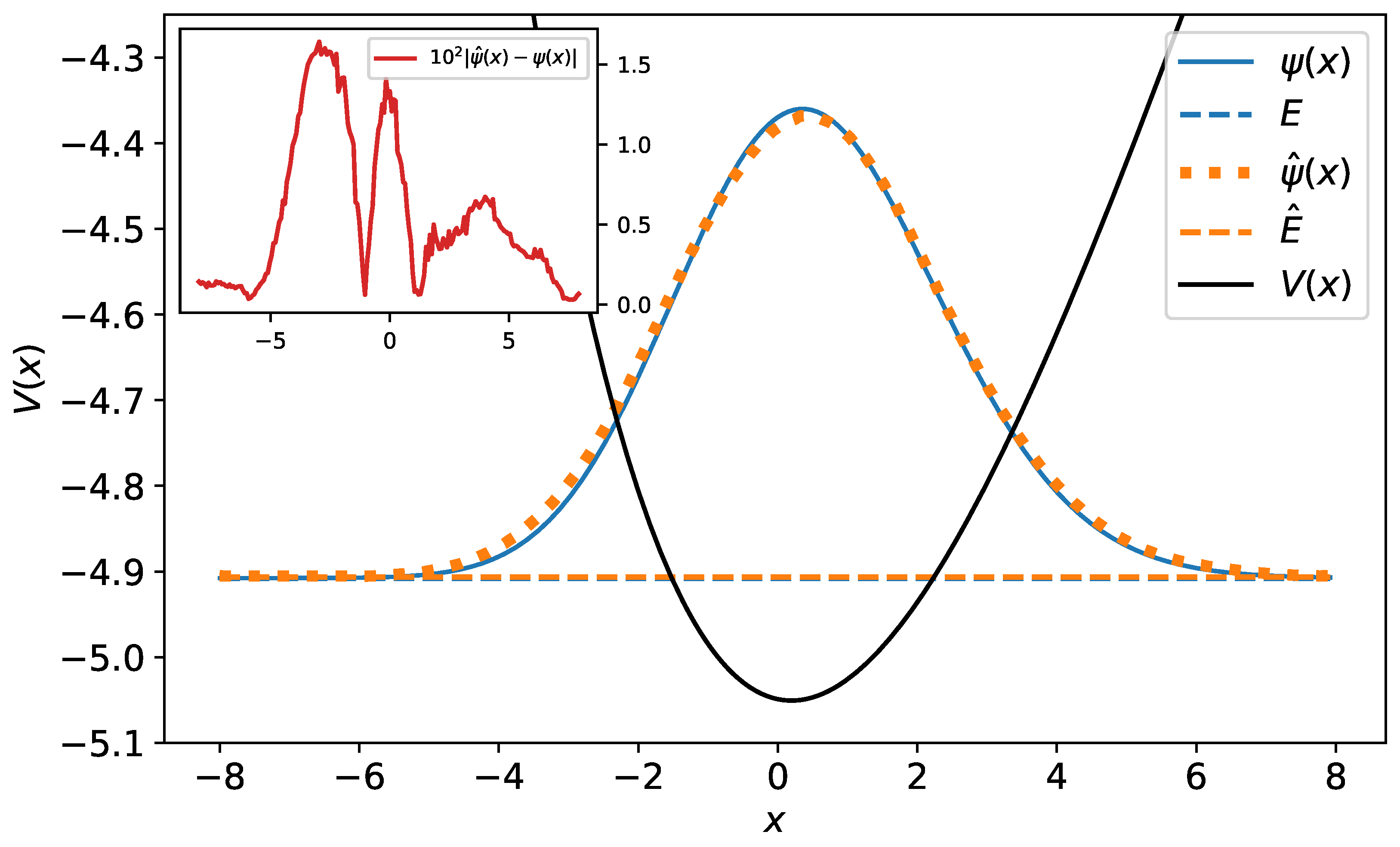

2.1. The Harmonic Oscillator

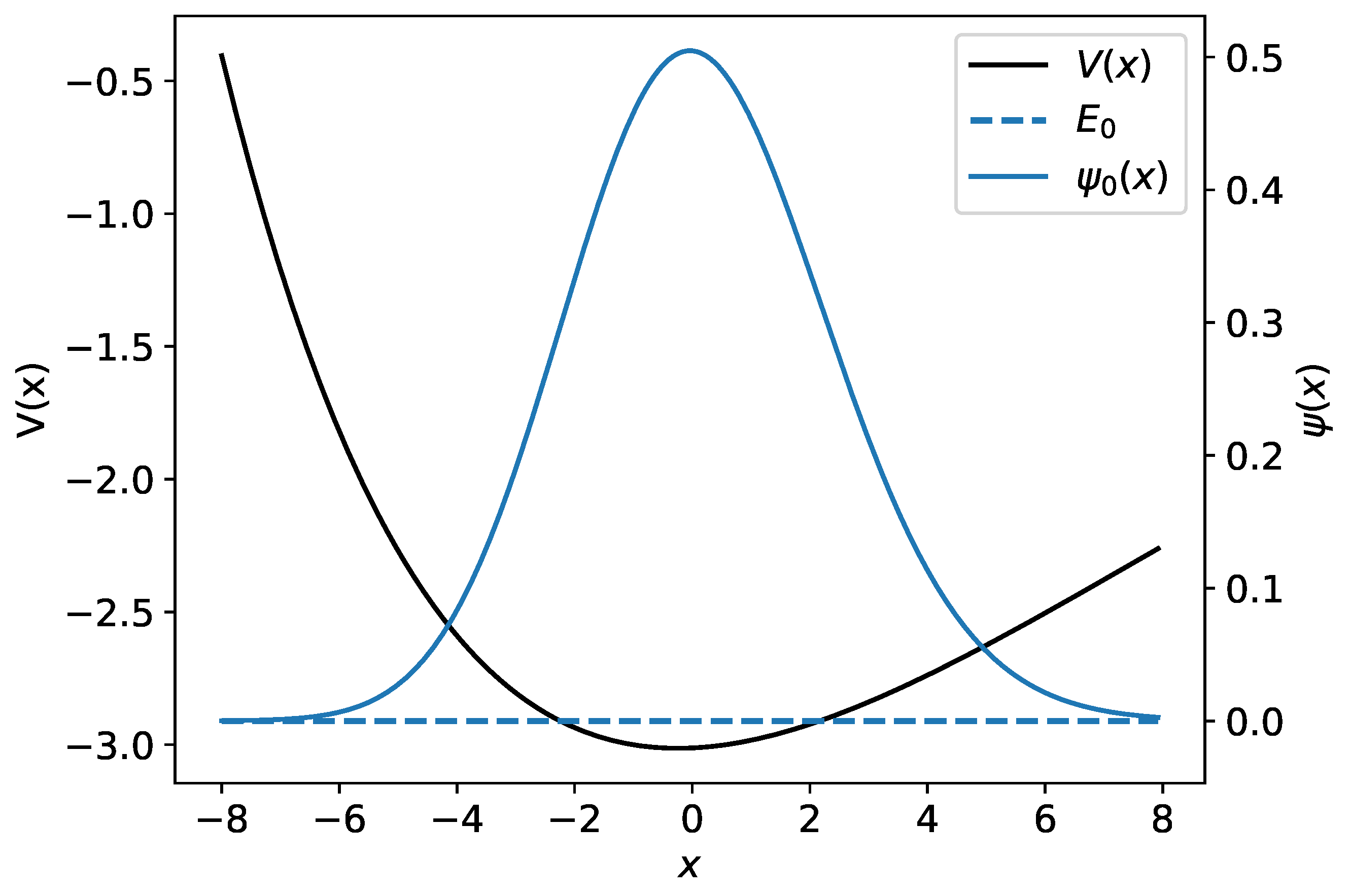

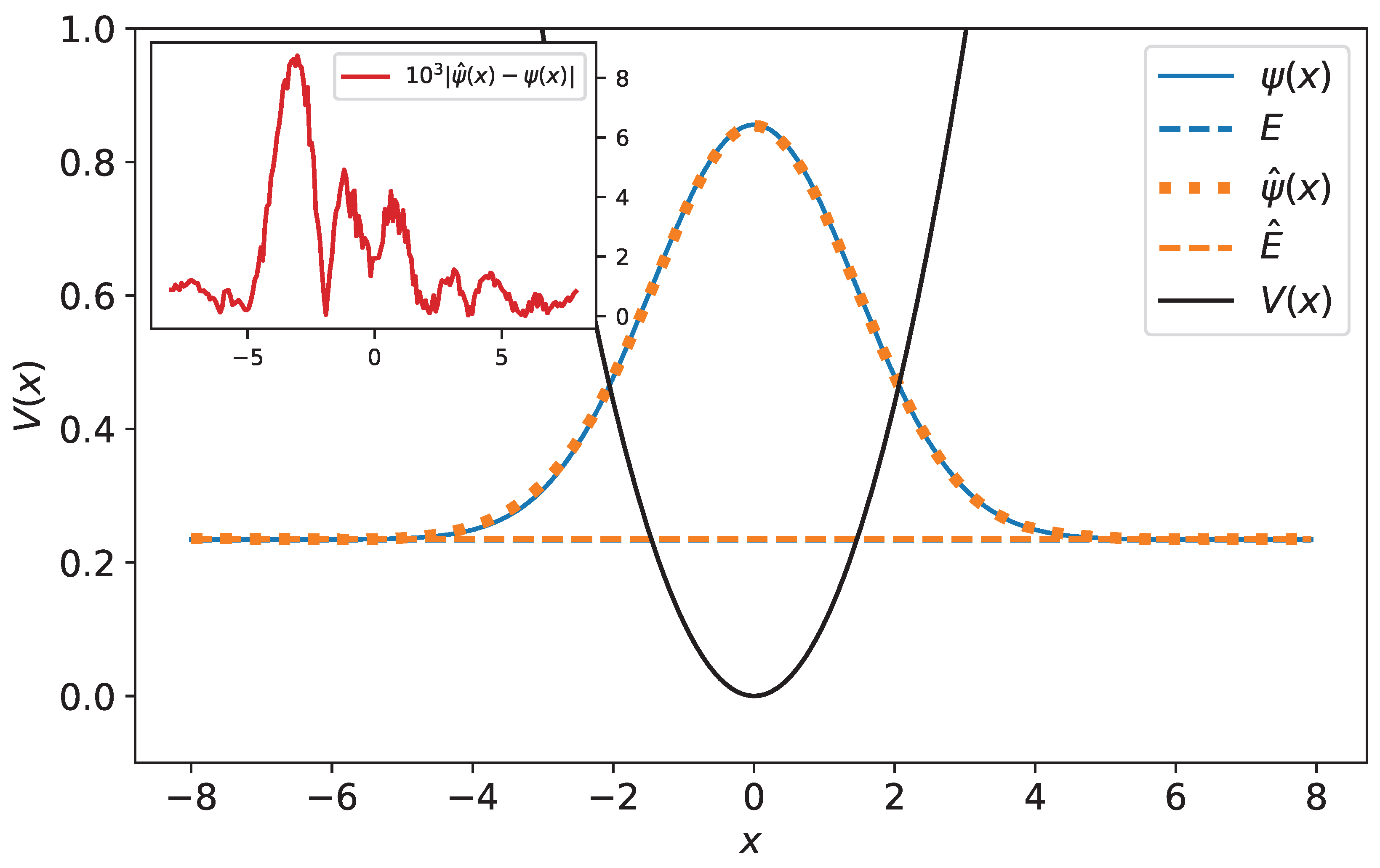

2.2. The Morse Potential

2.3. Polynomial Potentials

2.4. Numerical Solutions to the Schrödinger Equation

- The truncation dimension N controls the approximation error. The expansion coefficients decay quickly enough that, beyond some term N, the error can be neglected. However, the truncation needed for a given accuracy depends on the energy of the state, so N must be chosen with care.

- The coefficients and have to be obtained by integration, which can be performed numerically or, in some cases, analytically.
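The two points above can be illustrated with a minimal sketch. It assumes units ħ = m = 1 and a particle-in-a-box sine basis on a finite interval [a, b], which is our choice for illustration and not necessarily the basis used in this work. The kinetic-energy matrix elements are analytic, the potential matrix elements are integrated numerically, and the lowest eigenvalues are printed for increasing truncation N. The function name `sine_basis_eigenvalues` is hypothetical.

```python
import numpy as np

def sine_basis_eigenvalues(V, a, b, N, n_quad=400):
    """Eigenvalues of H = -1/2 d^2/dx^2 + V(x) (units hbar = m = 1) in the
    particle-in-a-box basis phi_n(x) = sqrt(2/L) sin(n*pi*(x - a)/L), n = 1..N."""
    L = b - a
    n = np.arange(1, N + 1)
    # Kinetic-energy matrix elements are known analytically: diagonal, (n*pi/L)^2 / 2.
    T = np.diag(0.5 * (n * np.pi / L) ** 2)
    # Potential matrix elements <phi_m|V|phi_n> are integrated numerically
    # with Gauss-Legendre quadrature mapped from [-1, 1] to [a, b].
    t, w = np.polynomial.legendre.leggauss(n_quad)
    x = 0.5 * L * t + 0.5 * (a + b)
    w = 0.5 * L * w
    phi = np.sqrt(2.0 / L) * np.sin(np.outer(n, x - a) * np.pi / L)  # shape (N, n_quad)
    Vmat = (phi * (w * V(x))) @ phi.T
    return np.linalg.eigvalsh(T + Vmat)

def harmonic(x):
    # Exact levels for this potential: 0.5, 1.5, 2.5, ...
    return 0.5 * x**2

for N in (5, 10, 20, 40):
    print(N, sine_basis_eigenvalues(harmonic, -8.0, 8.0, N)[:3])
```

Because the basis sets for different N are nested, each eigenvalue can only move down towards its converged value as N grows (up to quadrature error), and the higher levels typically need a larger N than the ground state, which is the energy dependence of the truncation noted above.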

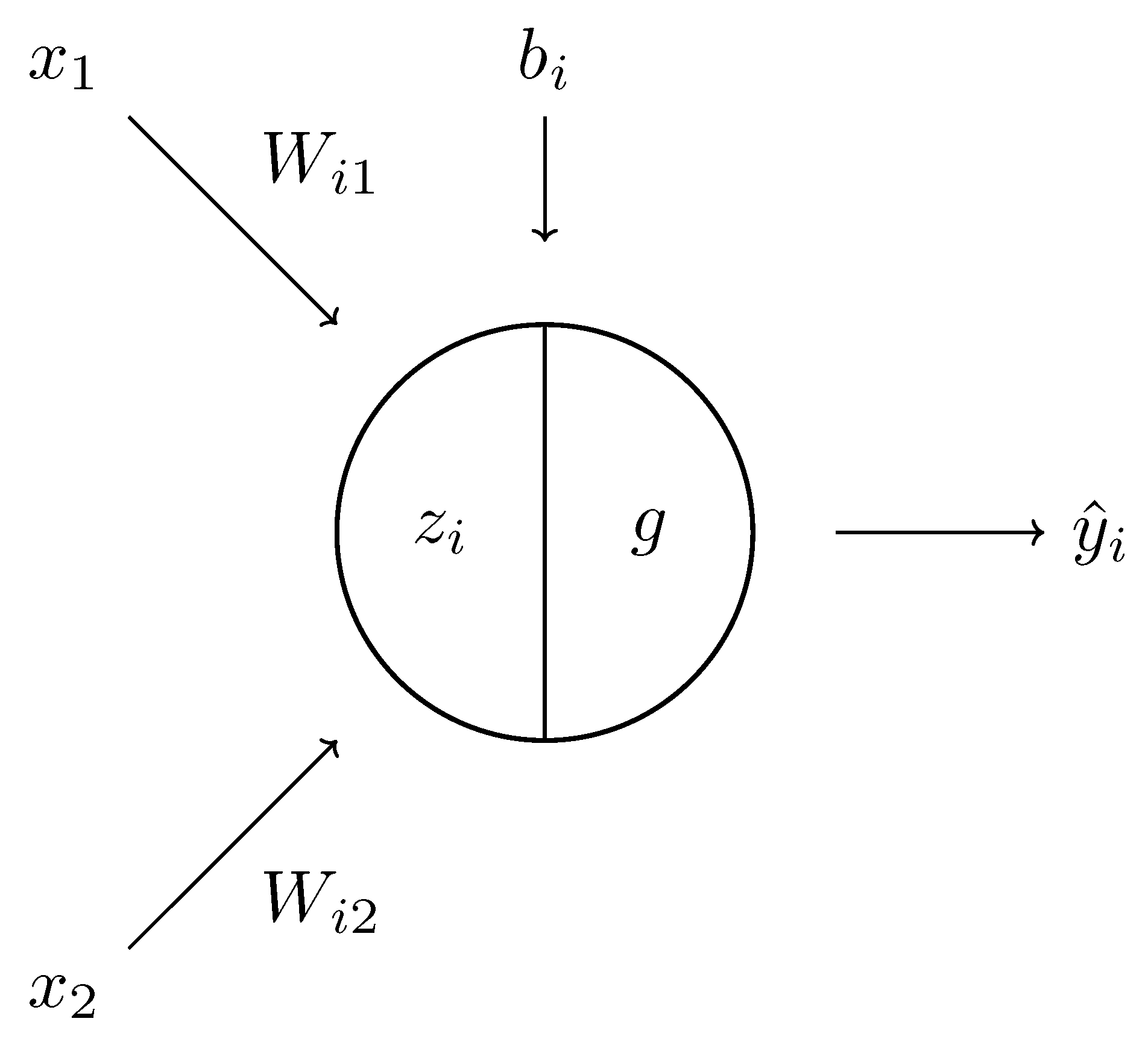

3. Neural Network Models

- How can one evaluate the correctness of the predictions of a neural network?

- Given a measure of correctness, how can one iteratively improve the weights so that the network gives better results?
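Both questions can be seen at work on the simplest possible "network", a single linear layer. In the minimal NumPy sketch below, the mean squared error answers the first question and a gradient-descent update answers the second. The synthetic data, learning rate and number of steps are illustrative assumptions, and plain gradient descent is used instead of the Adam optimiser employed for the actual training.

```python
import numpy as np

# Synthetic, noise-free data from a known linear rule (an illustrative assumption).
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 3))
true_w = np.array([1.5, -2.0, 0.5])
y = X @ true_w + 0.3

def mse(pred, target):
    """Question 1: how wrong are the predictions? Mean squared error."""
    return np.mean((pred - target) ** 2)

# Question 2: improve the weights iteratively by stepping against the gradient of the loss.
w, b = np.zeros(3), 0.0
learning_rate = 0.1
for step in range(2000):
    err = X @ w + b - y
    grad_w = 2.0 * X.T @ err / len(y)   # dL/dw for L = mean(err^2)
    grad_b = 2.0 * np.mean(err)         # dL/db
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print("learned weights:", w, "bias:", b, "final loss:", mse(X @ w + b, y))
```

A neural network follows the same loop; the only difference is that the gradients of the loss with respect to all layer weights are obtained by backpropagation.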

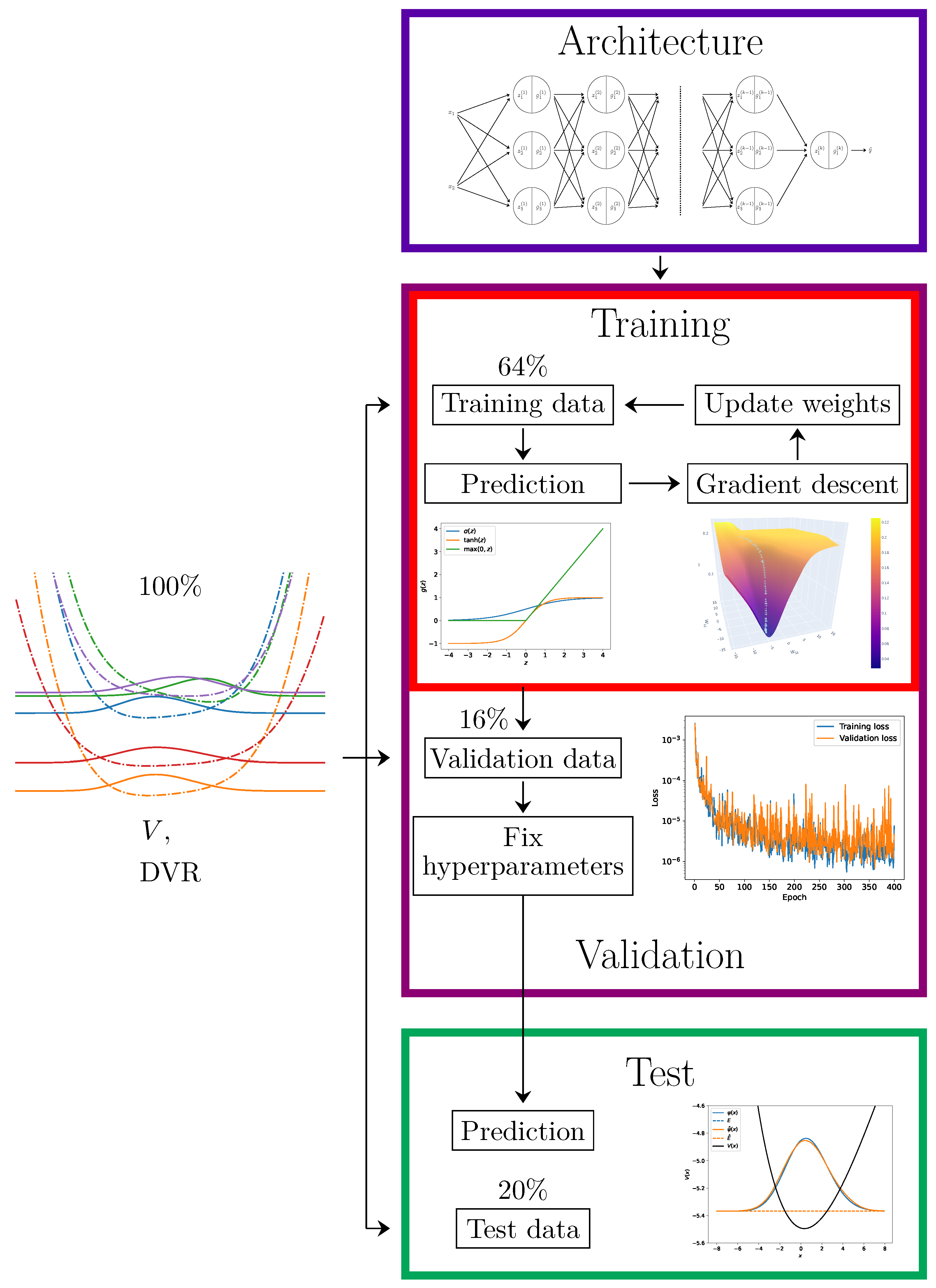

4. Machine Learning Training and Prediction

- Training data—the largest part of the initial data, which is used to train the machine learning models;

- Validation data—the part of the initial data which is used to determine the hyperparameters of the model;

- Test data—the part of the initial data (∼20%) which is used to determine the quality of the model and check whether it is under- or overfitted.
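The three-way split can be sketched as a single shuffle followed by two cuts. In this minimal NumPy version the validation fraction (20%) and the random seed are assumptions, the data arrays are random placeholders, and `split_dataset` is a hypothetical helper name; scikit-learn's `train_test_split`, applied twice, is a common alternative.

```python
import numpy as np

def split_dataset(X, Y, val_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle the samples once and cut them into training, validation and test subsets."""
    n = len(X)
    idx = np.random.default_rng(seed).permutation(n)
    n_test = int(round(test_frac * n))
    n_val = int(round(val_frac * n))
    test_idx = idx[:n_test]
    val_idx = idx[n_test:n_test + n_val]
    train_idx = idx[n_test + n_val:]   # the remainder, i.e. the largest part
    return (X[train_idx], Y[train_idx]), (X[val_idx], Y[val_idx]), (X[test_idx], Y[test_idx])

# Placeholder data: 1000 inputs and targets sampled on 200 grid points.
X = np.random.default_rng(1).normal(size=(1000, 200))
Y = np.random.default_rng(2).normal(size=(1000, 200))
train, val, test = split_dataset(X, Y)
print(len(train[0]), len(val[0]), len(test[0]))   # 600 200 200
```

Shuffling before cutting matters: if the samples were generated in some systematic order, contiguous slices would give subsets with different statistics.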

5. Neural Networks for Solving the Schrödinger Equation

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Integration by Quadrature
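As an illustration of integration by quadrature, here is a minimal NumPy sketch of one common rule, Gauss–Legendre quadrature. Which rule the appendix uses is not assumed here, and `gauss_legendre` is a hypothetical helper name; the nodes and weights come from `numpy.polynomial.legendre.leggauss`.

```python
import numpy as np

def gauss_legendre(f, a, b, n):
    """Approximate the integral of f over [a, b] with an n-point Gauss-Legendre rule."""
    t, w = np.polynomial.legendre.leggauss(n)   # nodes and weights on [-1, 1]
    x = 0.5 * (b - a) * t + 0.5 * (a + b)       # affine map of the nodes to [a, b]
    return 0.5 * (b - a) * np.sum(w * f(x))

# An n-point rule integrates polynomials up to degree 2n - 1 exactly:
print(gauss_legendre(lambda x: x**7, 0.0, 1.0, 4))   # ≈ 0.125
# Smooth integrands converge very quickly with n (exact value: 2):
for n in (2, 4, 8):
    print(n, abs(gauss_legendre(np.sin, 0.0, np.pi, n) - 2.0))
```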

Appendix B. DVR

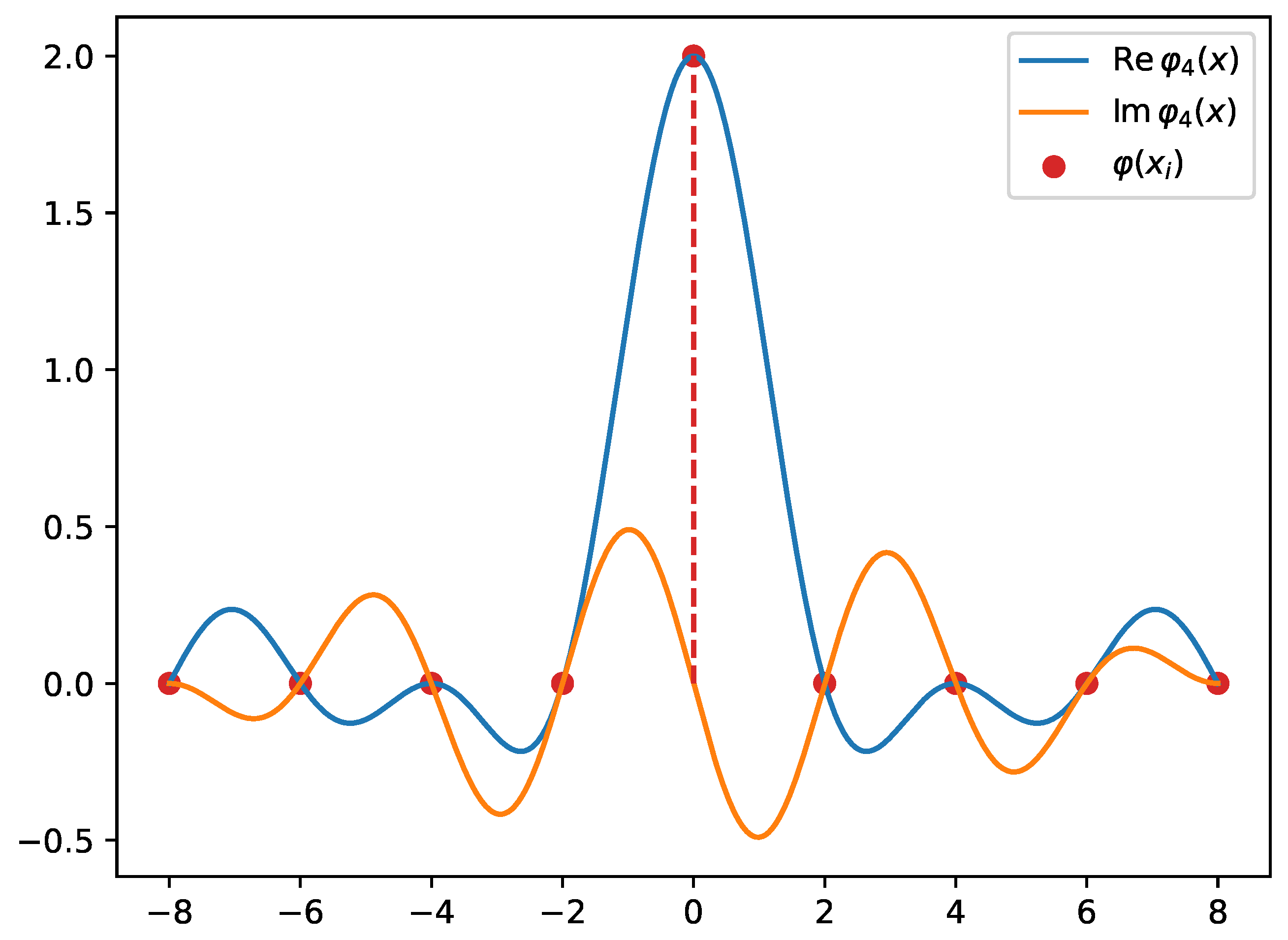

Appendix B.1. Plane-Wave DVR

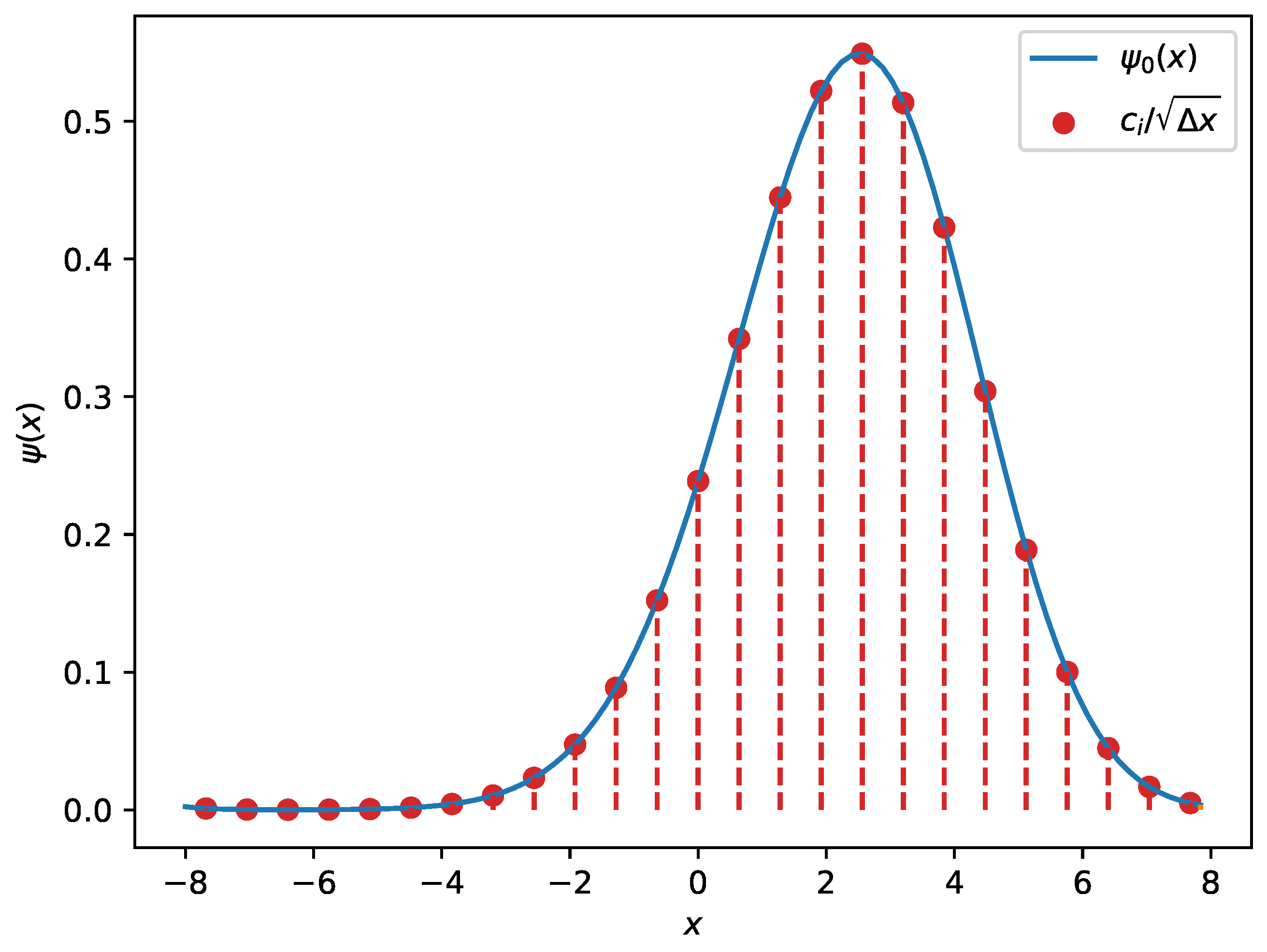
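For orientation, a minimal sketch of one standard way to build a plane-wave (Fourier-grid) DVR, assuming units ħ = m = 1 and a periodic interval [a, b): the kinetic-energy matrix is obtained by transforming each grid point to momentum space with the FFT, multiplying by k²/2 and transforming back, while the potential is simply diagonal on the grid. The function name `plane_wave_dvr` is hypothetical, and the formulas of this appendix (for example, an analytic sinc-type kinetic matrix) may differ in detail.

```python
import numpy as np

def plane_wave_dvr(V, a, b, N):
    """Fourier-grid (plane-wave) DVR for H = -1/2 d^2/dx^2 + V(x), hbar = m = 1,
    on N equally spaced points of the periodic interval [a, b)."""
    dx = (b - a) / N
    x = a + dx * np.arange(N)
    k = 2.0 * np.pi * np.fft.fftfreq(N, d=dx)   # plane-wave momenta on this grid
    # Kinetic matrix: FFT each unit vector, multiply by k^2/2, inverse FFT (column j = T e_j).
    T = np.fft.ifft(0.5 * k[:, None] ** 2 * np.fft.fft(np.eye(N), axis=0), axis=0).real
    H = T + np.diag(V(x))                       # the potential is diagonal in a DVR
    return x, np.linalg.eigvalsh(H)

x, E = plane_wave_dvr(lambda x: 0.5 * x**2, -10.0, 10.0, 128)
print(E[:3])   # close to the exact values 0.5, 1.5, 2.5
```

The interval must be wide enough for the wave functions to vanish at its ends, since the plane-wave basis makes the problem periodic.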

Appendix B.2. Orthogonal Polynomial DVR
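A minimal sketch of an orthogonal-polynomial DVR built on Hermite polynomials, assuming units ħ = m = ω = 1 and the harmonic-oscillator eigenfunctions as the underlying basis (other polynomial families follow the same pattern). Diagonalising the position operator in the truncated basis yields the Gauss–Hermite nodes as grid points and the transformation matrix U; the kinetic energy is transformed with U and the potential is taken as diagonal on the grid. The name `hermite_dvr` is hypothetical.

```python
import numpy as np

def hermite_dvr(V, N):
    """Gauss-Hermite DVR for H = -1/2 d^2/dx^2 + V(x) (hbar = m = omega = 1),
    built from the first N harmonic-oscillator eigenfunctions (N >= 3)."""
    n = np.arange(N)
    # Position operator in the truncated oscillator basis: <n|x|n+1> = sqrt((n+1)/2).
    off1 = np.sqrt(n[1:] / 2.0)
    X = np.diag(off1, 1) + np.diag(off1, -1)
    # Kinetic energy p^2/2 in the same basis: diagonal (2n+1)/4, <n|T|n+2> = -sqrt((n+1)(n+2))/4.
    off2 = -np.sqrt((n[:-2] + 1.0) * (n[:-2] + 2.0)) / 4.0
    T = np.diag((2.0 * n + 1.0) / 4.0) + np.diag(off2, 2) + np.diag(off2, -2)
    # Eigenvalues of X are the DVR grid points (Gauss-Hermite nodes);
    # the eigenvectors U transform from the oscillator basis to the grid.
    x, U = np.linalg.eigh(X)
    T_dvr = U.T @ T @ U
    H = T_dvr + np.diag(V(x))   # potential approximated as diagonal on the grid
    return x, np.linalg.eigvalsh(H)

# Morse potential with hypothetical parameters chosen so that omega_0 = alpha*sqrt(2D) = 1.
D, alpha = 8.0, 0.25
x, E = hermite_dvr(lambda x: D * (1.0 - np.exp(-alpha * x)) ** 2, 60)
print(E[:2])   # compare with the analytic Morse levels 0.4921875 and 1.4296875
```

The analytic values quoted in the comment follow from E_n = ω₀(n + ½) − ω₀²(n + ½)²/(4D) with ω₀ = 1 and D = 8.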


| Step of Machine Learning Process | Detailed Summary |

|---|---|

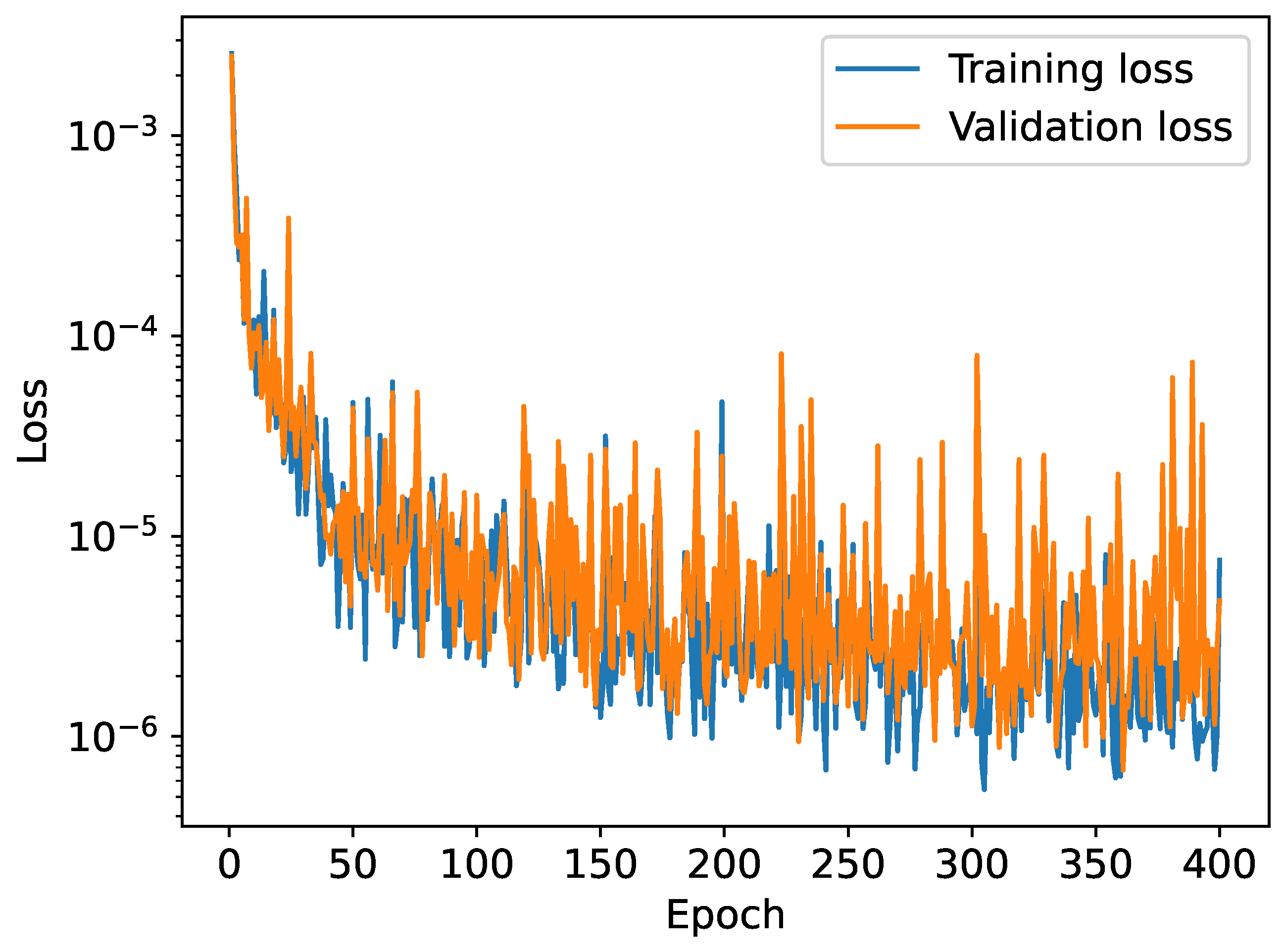

| Training and validation datasets | Polynomial–wave function pairs evaluated on grid points; polynomial degree: 5; number of grid points: 200; training data size: 3350; validation data size: 1650 |

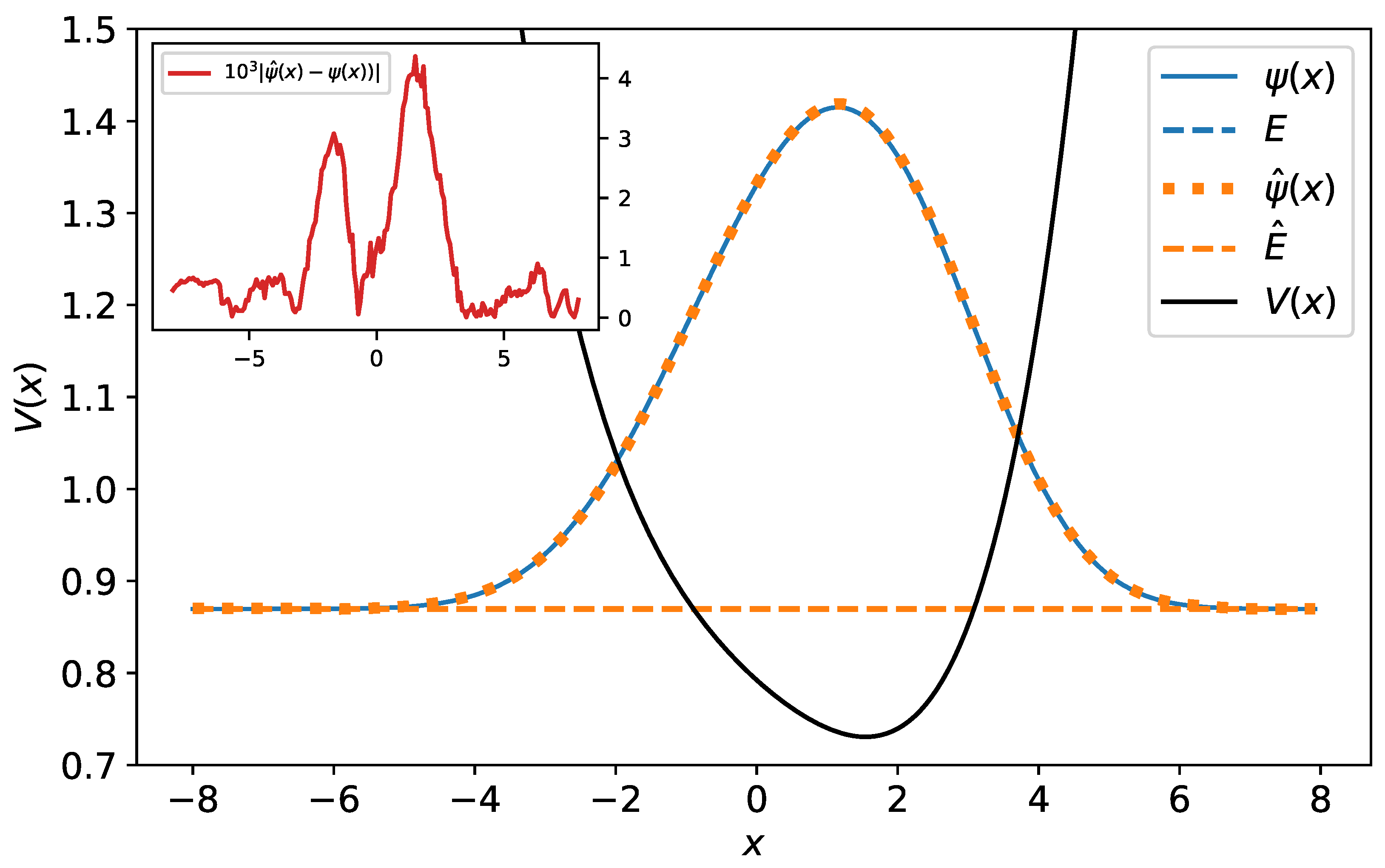

| Test datasets | Function–wave function pairs evaluated on grid points; polynomial subset size: 10; harmonic oscillator subset size: 10; Morse subset size: 10 |

| Neural network | Five layers; input size: 200; output size: 200; hidden layer output sizes: 256, 256, 128, 128. activation functions: ReLU, ReLU, ReLU, ReLu, linear; dropout: after hidden layers |

| Loss function | Mean square error |

| Training | Learning rate: 0.0005; early stopping; gradient descent algorithm: Adam; batch size: 64 |
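The network in the table can be written down in a few lines. The NumPy sketch below covers only the inference-mode forward pass, with layer widths 200 → 256 → 256 → 128 → 128 → 200, ReLU on the hidden layers and a linear output. Dropout is inactive at prediction time and its rate is not given here, the He-style random initialisation is an assumption, and all function names are hypothetical. Training with Adam (learning rate 0.0005, batch size 64, early stopping) on the mean squared error would in practice be done with a framework such as TensorFlow.

```python
import numpy as np

# Layer widths from the table: input 200 -> 256 -> 256 -> 128 -> 128 -> output 200.
LAYER_SIZES = [200, 256, 256, 128, 128, 200]

def init_params(sizes, seed=0):
    rng = np.random.default_rng(seed)
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        # He-style initialisation (an assumption; well suited to ReLU layers).
        W = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
        b = np.zeros(n_out)
        params.append((W, b))
    return params

def forward(params, x):
    """Inference-mode forward pass (dropout is inactive at prediction time)."""
    h = x
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:   # ReLU on the four hidden layers, linear output layer
            h = np.maximum(h, 0.0)
    return h

def n_parameters(params):
    return sum(W.size + b.size for W, b in params)

params = init_params(LAYER_SIZES)
batch = np.zeros((64, 200))          # one batch of 64 potentials sampled on 200 grid points
print(forward(params, batch).shape)  # (64, 200)
print(n_parameters(params))          # 192456
```

Counting the parameters this way shows that the five dense layers hold about 1.9 × 10⁵ weights and biases, compared with 3350 training samples of 200 values each.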

| Dataset | Potentials | Loss (in units of 10⁻⁶) |
|---|---|---|
| Training | Polynomial potentials | 2.60 |
| Validation | Polynomial potentials | 6.07 |
| Test | Polynomial potentials | 3.49 |
| Test | Harmonic oscillator | 4.05 |
| Test | Morse potential | 35.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pawelkiewicz, M.K.; Gatti, F.; Clouteau, D.; Kokoouline, V.; Ayouz, M.A. Introducing Machine Learning in Teaching Quantum Mechanics. Atoms 2025, 13, 66. https://doi.org/10.3390/atoms13070066


Chicago/Turabian style: Pawelkiewicz, M. K., Filippo Gatti, Didier Clouteau, Viatcheslav Kokoouline, and Mehdi Adrien Ayouz. 2025. "Introducing Machine Learning in Teaching Quantum Mechanics" Atoms 13, no. 7: 66. https://doi.org/10.3390/atoms13070066
