Abstract

In general, a convolutional neural network (CNN) consists of one or more convolutional layers, pooling layers, and fully connected layers. Most designers select CNN parameters by trial and error. In this study, an AlexNet network with optimized parameters is proposed for face image recognition. A Taguchi method is used to select the preliminary factors, and experiments are performed according to an orthogonal array design; the method then identifies the factors that significantly affect performance. Experimental results show that the proposed Taguchi-based AlexNet network obtains average gender recognition accuracies of 87.056% and 98.72% on the CIA and MORPH databases, respectively. These average accuracies are 1.576 and 3.47 percentage points higher than those of the original AlexNet network on the CIA and MORPH databases, respectively.

1. Introduction

In recent years, face recognition has been widely used in various fields. The face is a complex and important biometric feature that carries a great deal of information, such as gender, age, and expression [1,2,3,4]. Automatic gender and age analysis has enabled many business applications, such as demographic data collection and product sales analysis [5]. Using large-scale data on customers' gender and age, an intelligent facial recognition system can help sellers clearly understand which customer groups purchase a given product. Therefore, this study focuses on gender classification.

Machine learning has been successfully applied to gender recognition. Saatci and Town [4] proposed a method that identifies the gender and expression of facial images through an active appearance model (AAM). Features extracted with the trained AAM are used to construct support vector machine (SVM) classifiers, which are arranged in a cascade structure to optimize overall recognition performance. Mäkinen and Raisamo [5] arithmetically combined the outputs of gender classifiers, which improved classification accuracy. Singh et al. [6] used local binary pattern (LBP) and histogram of oriented gradients (HOG) feature extraction with SVM classifiers to obtain more efficient gender classification from face images. Eidinger et al. [7] proposed a dropout-support vector machine approach for face attribute estimation to avoid overfitting; the approach was inspired by the dropout technique popular in deep belief networks. They also proposed a robust face alignment technique that explicitly considers the uncertainty of the facial feature detector. Although these methods achieve gender recognition successfully, the user must determine the features of gender images in advance. Therefore, this study adopts a convolutional neural network (CNN), which learns features directly from the images, to overcome this problem.

A CNN is a type of deep neural network commonly used to recognize and detect visual images [8,9,10,11]. CNNs have been widely used in various fields, such as object detection [12], face recognition [13,14], and speech recognition [15]. For gender classification, Duan et al. [8] proposed a hybrid of a CNN and an extreme learning machine (ELM) that exploits the synergy of the two classifiers. Levi and Hassner [14] used deep convolutional neural networks to learn representations and obtained a significant performance increase in gender classification. Ozbulak et al. [16] explored the transferability of existing CNN models for age and gender classification: a generic AlexNet-like architecture and the domain-specific VGG-Face CNN model were fine-tuned on the Adience data set to classify age and gender in an unconstrained environment. To obtain better gender classification performance, users usually adjust the structural parameters of a CNN by trial and error during training.

Many researchers [17,18,19] have used various methods to optimize the structural parameters. Real et al. [17] used a genetic algorithm (GA) with a deoxyribonucleic acid (DNA)-based coding scheme to determine CNN architecture parameters. Ma et al. [18] proposed an autonomous and continuous learning (ACL) algorithm that automatically generates a deep convolutional neural network (DCNN) architecture for each given vision task. Zoph and Le [19] proposed neural architecture search (NAS) with reinforcement learning: a recurrent network generates neural network architectures and is trained through reinforcement learning to maximize the expected accuracy of the generated architectures on the validation set. NAS used a total of 800 graphics processing units (GPUs); its search space is very large, and the search is very time-consuming.

Competition is becoming increasingly fierce in manufacturing sectors, and Taguchi methods are commonly used to optimize product quality. Taguchi methods [20,21,22] were proposed by Dr. Genichi Taguchi between the 1950s and the early 1960s. Taguchi quality engineering and Taguchi experimental design are now well-known robust design methods. A Taguchi method selects an appropriate orthogonal array based on the number of design parameters and the number of levels; an appropriately selected array minimizes the number of tests. The goal is to find the optimal product quality characteristics and to maintain stable product quality. With Taguchi methods, the average loss of the product during the manufacturing process can be minimized.

To reduce the number of experiments and optimize the parameters of a CNN architecture for gender image recognition, this study proposes a Taguchi-based convolutional neural network. Five well-known CNN architectures, LeNet [23], AlexNet [24], GoogLeNet [25], VggNet [26], and ResNet [27], are commonly used by researchers. This study focuses on the popular AlexNet architecture, which is larger than LeNet and smaller than GoogLeNet and ResNet. Transfer learning [28] is suitable for large neural networks trained on small data sets. When the data set is small, training a large neural network with a very large number of parameters from scratch is impractical, because such a model requires a large amount of data and overfitting is unavoidable. Because this study does not use a large neural network, transfer learning is not used. Recently, researchers applied transfer learning when training the GoogLeNet, SqueezeNet, and ResNet50 architectures [29]; these architectures are relatively complex, so transfer learning is needed to avoid overfitting. In this study, AlexNet without transfer learning also achieves high accuracy. To avoid determining the architectural parameters of AlexNet by trial and error, we use a Taguchi method with an orthogonal array chosen according to the numbers of design parameters and levels. In our experiments, the Taguchi-based AlexNet network recognized the genders of faces in the Computational Intelligence Application (CIA) data set and the MORPH facial recognition database. The major contributions of this study are as follows:

- (1) To avoid determining the parameters of the network architecture by trial and error, a Taguchi-based AlexNet network is proposed.

- (2) To reduce the time needed to find the optimal network parameters, an orthogonal array is used. Without the orthogonal array, 486 experiments would be needed; with it, only 18 are needed in this study.

- (3) Experimental results show that the average accuracy rates of the proposed Taguchi-based AlexNet network are 1.576 and 3.47 percentage points higher than those of the original AlexNet network on the CIA and MORPH databases, respectively.

- (4) Although this study uses the Taguchi method only to optimize the structural parameters of AlexNet, the proposed method can also be applied to other networks to determine their structure and potentially obtain better average accuracy.

2. The Taguchi-Based AlexNet Network

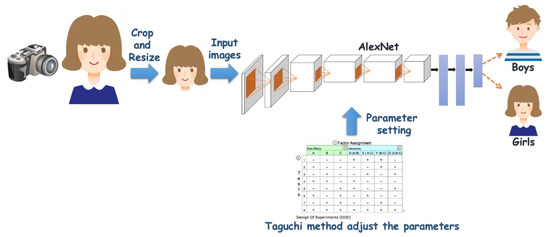

This section presents a Taguchi-based CNN for classifying gender from an image data set. Figure 1 shows the flowchart of the proposed Taguchi-based AlexNet [24] architecture.

Figure 1.

Flowchart of the proposed Taguchi-based AlexNet architecture.

2.1. Convolutional Neural Networks

Many major breakthroughs in deep learning have involved CNNs. CNNs are a main driving force in the current development of deep neural networks; on some image recognition benchmarks, they recognize images more accurately than humans. A CNN is a feed-forward neural network whose neurons respond to pixels within local regions of the image, which makes CNNs well suited to large-scale image processing. LeCun et al. [23] demonstrated that CNNs have powerful image classification capabilities. CNNs mainly consist of three types of layers: convolutional layers, pooling layers, and fully connected layers, of which the convolutional and pooling layers are the most important. A convolutional layer extracts features by convolving image regions with multiple filters; as the number of layers increases, a CNN can parse the features of its input image more accurately. A pooling layer reduces the size of the convolution output map. If these two layers are designed properly, the number of parameters and the computational complexity of a CNN can be reduced.

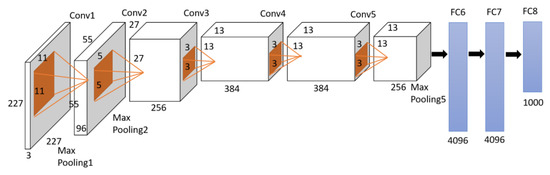

AlexNet is a classic model for image classification. As illustrated in Figure 2, the AlexNet architecture has five convolutional layers (Conv), three pooling layers (Pool), and three fully connected layers (each labeled FC with a number in Figure 2). Table 1 lists the parameters of each layer.

Figure 2.

Architecture of AlexNet.

Table 1.

Parameters for each layer of AlexNet.

- Convolution layer

The convolution layer uses specific feature detectors, called convolution kernels, to perform the convolution operation on the input image. During training, the input image size is 227 × 227 × 3. The first convolution layer uses 96 convolution kernels of size 11 × 11 × 3 to generate new feature maps. The input of the second layer is the 27 × 27 × 96 output of the first layer. To facilitate subsequent processing, each feature map is padded with 2 pixels on its top, bottom, left, and right edges. The kernel size of the second layer is 5 × 5 and its stride is 1 pixel; its output size is computed with the same formula as for the first layer. The input of the third layer is a set of 13 × 13 × 128 feature maps output by the second layer, each padded with 1 pixel on all four edges. The input of the fourth layer is a set of 13 × 13 × 192 feature maps output by the third layer, processed in the same way as in the third layer. The input of the fifth layer is a set of 13 × 13 × 192 feature maps output by the fourth layer, again padded with 1 pixel on all four edges.

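The kernel size (KS), stride (S), and padding (P) of a convolution layer determine the relationship between its input and output sizes. Specifically, for a square input of width $W$, the output width $O$ of a convolution layer is

$$O = \left\lfloor \frac{W - KS + 2P}{S} \right\rfloor + 1$$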
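As a quick check of the standard output-size relation O = floor((W - KS + 2P) / S) + 1, the Python sketch below evaluates it for a few AlexNet layers. The conv-1 stride of 4 and the 3 × 3 kernels of conv-3 to conv-5 are assumptions taken from the original AlexNet design [24], not from this section, and the function name `conv_output_size` is illustrative.

```python
def conv_output_size(w: int, ks: int, s: int, p: int) -> int:
    """Spatial output size of a convolution layer on a square input.

    w: input width/height, ks: kernel size (KS), s: stride (S), p: padding (P).
    Integer division floors the result, as most frameworks do.
    """
    if w - ks + 2 * p < 0:
        raise ValueError("kernel is larger than the padded input")
    return (w - ks + 2 * p) // s + 1


# Assumed AlexNet settings from [24]; only the 5 x 5 / stride 1 / padding 2
# values of conv-2 are stated explicitly in the text above.
print(conv_output_size(227, 11, 4, 0))  # conv-1: 55
print(conv_output_size(27, 5, 1, 2))    # conv-2: 27
print(conv_output_size(13, 3, 1, 1))    # conv-3 to conv-5: 13
```

Padding of (KS - 1) / 2 with stride 1, as in conv-2 to conv-5, keeps the spatial size unchanged, which is why those layers stay at 27 or 13.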

- Pooling layer

This study mainly uses max pooling, which selects the maximum value within each pooling window. Because only the maximum is kept, translating the image by a few pixels usually changes the max-pooling output little, if at all. Max pooling also suppresses noise effectively. Max pooling layers follow only the first, second, and fifth convolution layers.
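A minimal pure-Python sketch of max pooling on a single feature map is shown below. The 3 × 3 window with stride 2 used in the second example is an assumption taken from the original AlexNet [24] (it reproduces the 55 to 27 reduction); the function name `max_pool2d` is illustrative and no padding is applied.

```python
from typing import List


def max_pool2d(x: List[List[float]], k: int, s: int) -> List[List[float]]:
    """Max pooling of one 2-D feature map with a k x k window and stride s (no padding)."""
    out_h = (len(x) - k) // s + 1
    out_w = (len(x[0]) - k) // s + 1
    return [
        [max(x[i * s + di][j * s + dj] for di in range(k) for dj in range(k))
         for j in range(out_w)]
        for i in range(out_h)
    ]


fmap = [[1, 3, 2, 4],
        [5, 6, 1, 2],
        [7, 2, 9, 0],
        [1, 8, 3, 4]]
print(max_pool2d(fmap, k=2, s=2))  # [[6, 4], [8, 9]]

# Overlapping 3 x 3 / stride 2 pooling (assumed AlexNet setting): 55 x 55 -> 27 x 27.
pooled = max_pool2d([[0.0] * 55 for _ in range(55)], k=3, s=2)
print(len(pooled), len(pooled[0]))  # 27 27
```

Each output keeps only the largest value in its window, so moving that value to another position inside the same window leaves the output unchanged.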

- Fully connected layer

AlexNet has three fully connected layers. The first fully connected layer contains 4096 convolution kernels, each of size 6 × 6 × 256. Because the kernel size is exactly the same as the size of the feature map to be processed, each kernel coefficient is multiplied by exactly one feature-map value, in one-to-one correspondence, and each kernel yields a single output. Therefore, the output after convolution is 4096 × 1 × 1; that is, there are 4096 neurons. The second fully connected layer also has 4096 neurons. Because there are two categories in this study, the third fully connected layer has 2 neurons.
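The equivalence between a fully connected layer and a convolution whose kernel matches the feature-map size can be checked directly. The sketch below uses a small 2 × 2 × 3 random map as a stand-in for the 6 × 6 × 256 map, compares the single convolution output with a plain dot product of the flattened tensors, and counts the first fully connected layer's weights and biases. All names here (`same_size_conv`, `flatten`, `fc_params`) are illustrative.

```python
import math
import random


def same_size_conv(fmap, kernel):
    """Valid cross-correlation of an H x W x C map with an H x W x C kernel.

    With kernel and map the same size there is exactly one output position,
    and each kernel coefficient multiplies exactly one map value.
    """
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    return sum(fmap[i][j][k] * kernel[i][j][k]
               for i in range(h) for j in range(w) for k in range(c))


def flatten(t):
    """Flatten an H x W x C nested list in row, column, channel order."""
    return [v for row in t for px in row for v in px]


def fc_params(h, w, c, n_out):
    """Weights plus biases for n_out kernels of size h x w x c."""
    return h * w * c * n_out + n_out


rng = random.Random(0)


def rand3d(h=2, w=2, c=3):
    return [[[rng.random() for _ in range(c)] for _ in range(w)] for _ in range(h)]


fmap, kernel = rand3d(), rand3d()
conv_out = same_size_conv(fmap, kernel)
dense_out = sum(a * b for a, b in zip(flatten(fmap), flatten(kernel)))
print(math.isclose(conv_out, dense_out))  # True
print(fc_params(6, 6, 256, 4096))         # 37752832 (first fully connected layer)
```

The parameter count shows why the first fully connected layer dominates AlexNet's size: over 37 million weights and biases for a single layer.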

2.2. Proposed Taguchi Method

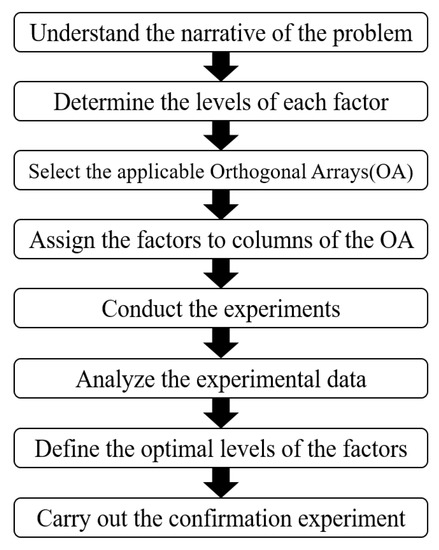

The Taguchi method is a low-cost, high-efficiency quality engineering method that emphasizes improving product quality through design rather than through inspection. In this study, the parameters of the Taguchi-based AlexNet are the object of optimization, and the stability of the AlexNet design is examined. First, the most important factors are screened from numerous candidates to determine the conditions for optimal performance, and the screened factors are used as the design parameters of the Taguchi method. The quality characteristic of the objective function must be defined, and the factors and levels that affect it must be identified. Orthogonal arrays (abbreviated as OA in Figure 3) are used to determine the number of experiments and the factor allocation of each experiment, so that information on the main effects comparable to that of a full factorial design can be obtained from only a few experiments. Finally, the key factor levels are determined, and it is verified that the resulting Taguchi-based AlexNet parameters are optimal. The experiments consider cost-effectiveness in finding the best combination of factor levels. A flowchart of the Taguchi method is depicted in Figure 3, and the detailed process is as follows:

Figure 3.

Flow chart of the Taguchi method.

- Step 1: Understand the narrative of the problem

Understand the tasks that need to be completed. To simplify the experiments while achieving good accuracy, this study adopts the Taguchi method to adjust the parameters of the convolutional neural network.

- Step 2: Determine the levels of each factor

This study uses the Taguchi method to determine the structural parameters of the AlexNet network, which has five convolutional layers. Previous studies suggest that the convolutional layers of LeNet or AlexNet have the greatest impact on performance [30,31]. Accordingly, we use the parameters of the first and fifth convolution layers of AlexNet as the structural parameters: the kernel size, stride, and padding of each of these layers, giving six factors in total. The padding of the first convolutional layer has two levels, and each of the other five factors has three levels.

- Step 3: Select the applicable Orthogonal Arrays (OA)

Before the OA is selected, the numbers of factors and levels must be considered, and a suitable OA is chosen accordingly. This study has one two-level factor and five three-level factors, so the L18 OA is selected; it can accommodate these six factors.

- Step 4: Assign the factors to columns of the OA

The purpose of using an OA is to obtain the desired result with a very small number of experiments, for example when the parameter design considers only the main effects and ignores interactions between factors. After the factors and levels are assigned to the OA, the experiments are conducted according to the level combinations configured in the OA to obtain the optimal level combination. Because each of the six selected factors has three or two levels, an L18 orthogonal array is used. The factors KS of conv-1, S of conv-1, P of conv-1, KS of conv-5, S of conv-5, and P of conv-5 are arranged in rows 1, 2, 3, 4, 5, and 6, respectively, as shown in Table 2.
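To make the L18 array concrete, the Python sketch below lists the standard L18(2^1 × 3^7) array as it appears in common Taguchi tables (column 1 has two levels, columns 2 to 8 have three) and checks its defining property: for every pair of columns, each level combination occurs equally often. The column assignment and level coding used in Table 2 are not reproduced here, and the names `L18` and `is_orthogonal` are illustrative.

```python
from collections import Counter
from itertools import combinations

# Standard L18(2^1 x 3^7) orthogonal array; each row is one experiment.
L18 = [
    [1, 1, 1, 1, 1, 1, 1, 1],
    [1, 1, 2, 2, 2, 2, 2, 2],
    [1, 1, 3, 3, 3, 3, 3, 3],
    [1, 2, 1, 1, 2, 2, 3, 3],
    [1, 2, 2, 2, 3, 3, 1, 1],
    [1, 2, 3, 3, 1, 1, 2, 2],
    [1, 3, 1, 2, 1, 3, 2, 3],
    [1, 3, 2, 3, 2, 1, 3, 1],
    [1, 3, 3, 1, 3, 2, 1, 2],
    [2, 1, 1, 3, 3, 2, 2, 1],
    [2, 1, 2, 1, 1, 3, 3, 2],
    [2, 1, 3, 2, 2, 1, 1, 3],
    [2, 2, 1, 2, 3, 1, 3, 2],
    [2, 2, 2, 3, 1, 2, 1, 3],
    [2, 2, 3, 1, 2, 3, 2, 1],
    [2, 3, 1, 3, 2, 3, 1, 2],
    [2, 3, 2, 1, 3, 1, 2, 3],
    [2, 3, 3, 2, 1, 2, 3, 1],
]


def is_orthogonal(oa):
    """True if every pair of columns contains each level combination equally often."""
    for a, b in combinations(range(len(oa[0])), 2):
        counts = Counter((row[a], row[b]) for row in oa)
        n_a = len({row[a] for row in oa})
        n_b = len({row[b] for row in oa})
        if len(counts) != n_a * n_b or len(set(counts.values())) != 1:
            return False
    return True


print(len(L18), is_orthogonal(L18))  # 18 True
```

This pairwise balance is what allows each factor's main effect to be estimated from 18 runs instead of a full factorial.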

Table 2.

L18 orthogonal array.

- Step 5: Conduct the experiments

Conduct the experiments according to the OA.
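The loop below sketches how an OA drives the experiments: each row's 1-based level indices are mapped to actual factor values, and each setting is evaluated a fixed number of times. The small L4(2^3) array, the level values, and `toy_accuracy` are hypothetical stand-ins; in this study the evaluator would train AlexNet with the given conv-1/conv-5 settings and return test accuracy.

```python
from typing import Callable, List, Sequence


def run_oa_experiments(
    oa: Sequence[Sequence[int]],
    factor_levels: Sequence[Sequence[float]],
    evaluate: Callable[[List[float]], float],
    repeats: int = 5,
) -> List[List[float]]:
    """Run `repeats` evaluations for every OA row.

    Each OA entry is a 1-based level index; factor_levels[f][lvl - 1] gives
    the actual setting of factor f at that level.
    """
    results = []
    for row in oa:
        settings = [factor_levels[f][lvl - 1] for f, lvl in enumerate(row)]
        results.append([evaluate(settings) for _ in range(repeats)])
    return results


# Hypothetical demo: an L4(2^3) array and made-up (KS, S, P) levels.
L4 = [[1, 1, 1], [1, 2, 2], [2, 1, 2], [2, 2, 1]]
levels = [[11, 7], [4, 2], [0, 1]]


def toy_accuracy(settings: List[float]) -> float:
    """Deterministic stand-in for training and testing a network."""
    ks, s, p = settings
    return 0.85 + 0.001 * ks - 0.005 * s + 0.002 * p


results = run_oa_experiments(L4, levels, toy_accuracy)
print(len(results), len(results[0]))  # 4 5
```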

- Step 6: Analyze the experimental data

The experiments are run according to the factor-level plan of the Taguchi design, and the observed values are recorded. Each experiment is repeated five times. The signal-to-noise ratio (S/N ratio) is then calculated, and the experimental data are analyzed.

- Step 7: Determine the optimal levels of the factors

The optimal level of each factor is determined from the results of Step 6.

- Step 8: Carry out the confirmation experiment

Finally, a confirmation experiment is carried out by training AlexNet with the optimal parameter combination.

3. Experimental Results

This study uses two data sets: the CIA data set and the MORPH database. In each trial, 70% of the data set was randomly selected for training and the remaining 30% for testing. All experiments were performed on Ubuntu 16.04 with a 3.30 GHz Intel® Xeon® E3-1225 v5 (4C4T) processor and an Nvidia GTX 1080 8GB GPU (MSI, New Taipei City, Taiwan).
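A random 70/30 split can be sketched as below. How the five rounds reported later are formed is not specified in the text; this sketch assumes each round draws a fresh random split with a different seed, and `random_split` is an illustrative name.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def random_split(items: Sequence[T], train_frac: float = 0.7,
                 seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of `items` and split it into training and test subsets."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_train = round(train_frac * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


# Five rounds over the 1880 CIA image indices, one seed per round (assumption).
for round_idx in range(5):
    train, test = random_split(range(1880), seed=round_idx)
    print(round_idx, len(train), len(test))  # each round: 1316 train, 564 test
```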

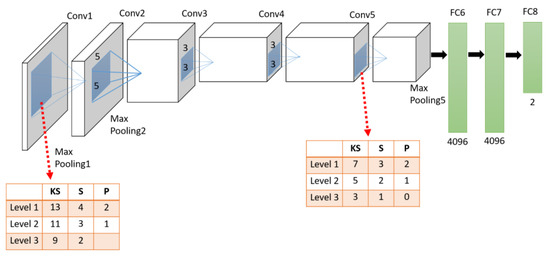

3.1. AlexNet

The AlexNet architecture consists of eight layers: the first to fifth layers are convolutional layers that perform convolution and pooling, and the sixth to eighth layers are fully connected layers. In this experiment, the structural parameters of the first and fifth convolutional layers are optimized; Figure 4 shows the structural parameter optimization diagram of AlexNet. Because the main purpose of the convolutional layers is to capture image features, their parameters affect the quality of the feature maps and the recognition accuracy. Therefore, this paper uses the Taguchi method to optimize the structural parameters of the convolutional layers so as to obtain better features. The fully connected layers are the same as in the original AlexNet.
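Changing the conv-1 and conv-5 parameters changes the feature-map sizes downstream, including the length of the vector entering the first fully connected layer. The sketch below propagates the spatial size through the convolution and pooling stack for a given (KS, S, P) choice. Apart from the parameters stated in this paper, the settings (conv-1 stride 4, 3 × 3 conv-3 to conv-5 kernels, 3 × 3 stride-2 pooling, 256 conv-5 maps) are assumptions from the original AlexNet [24], and the alternative conv-5 setting in the example is hypothetical, not one of the levels in Table 3.

```python
from typing import Tuple


def out_size(w: int, ks: int, s: int, p: int = 0) -> int:
    """Output width of a convolution or pooling layer; rejects empty outputs."""
    size = (w - ks + 2 * p) // s + 1
    if size < 1:
        raise ValueError("configuration shrinks the feature map to nothing")
    return size


def alexnet_fc6_input(conv1: Tuple[int, int, int] = (11, 4, 0),
                      conv5: Tuple[int, int, int] = (3, 1, 1),
                      image: int = 227) -> Tuple[int, int]:
    """Return (final spatial size, FC-6 input length) for given conv-1/conv-5 (KS, S, P)."""
    w = out_size(image, *conv1)  # conv-1
    w = out_size(w, 3, 2)        # max pool 3 x 3, stride 2
    w = out_size(w, 5, 1, 2)     # conv-2
    w = out_size(w, 3, 2)        # max pool
    w = out_size(w, 3, 1, 1)     # conv-3
    w = out_size(w, 3, 1, 1)     # conv-4
    w = out_size(w, *conv5)      # conv-5 (256 feature maps)
    w = out_size(w, 3, 2)        # max pool
    return w, w * w * 256


print(alexnet_fc6_input())                  # (6, 9216): original AlexNet
print(alexnet_fc6_input(conv5=(3, 2, 0)))   # (2, 1024): hypothetical conv-5 setting
```

In a sketch like this, the input length of the first fully connected layer must follow the chosen settings, while its 4096 outputs stay as in the original AlexNet.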

Figure 4.

Diagram of structural parameter optimization in the AlexNet.

3.2. Computational Intelligence Application (CIA) Data Set

The CIA data set, a small face database collected by our laboratory, is used to evaluate the proposed Taguchi-based AlexNet network. It contains 1880 face images of male and female subjects aged 6 to 80 years, most of whom are East Asian, as illustrated in Figure 5.

Figure 5.

Computational Intelligence Application (CIA) data set.

3.2.1. Determine the Levels of Each Factor

In this experiment, only the convolution kernel parameters of the first and fifth convolutional layers are considered. The selected factors are the kernel size (KS), stride (S), and padding (P) of the convolution kernels in these two layers, giving six factors in total. The factor levels, set based on expert recommendations and actual experiments, are listed in Table 3.

Table 3.

Control factors and levels.

3.2.2. Factor Allocation of Taguchi Experiments

This experiment uses the six previously selected factors: five with three levels and one with two levels. The total number of degrees of freedom is 12, so the L18 array is used. The factors KS of conv-1, S of conv-1, P of conv-1, KS of conv-5, S of conv-5, and P of conv-5 are assigned to columns 2, 3, 4, 5, 6, and 7, as shown in Table 4. A full factorial design of these factors and levels would require 486 experiments; with the Taguchi design, only 18 are needed.
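The run counts used in this study follow from simple arithmetic: a full factorial multiplies the numbers of levels, while a main-effects design needs (levels - 1) degrees of freedom per factor plus one for the overall mean. The factor labels in the sketch below are illustrative shorthand for the six factors.

```python
from math import prod

# One two-level factor (P of conv-1) and five three-level factors.
levels = {"KS1": 3, "S1": 3, "P1": 2, "KS5": 3, "S5": 3, "P5": 3}

full_factorial_runs = prod(levels.values())
# (levels - 1) degrees of freedom per main effect, plus 1 for the overall mean.
dof = sum(n - 1 for n in levels.values()) + 1

print(full_factorial_runs)  # 486
print(dof)                  # 12
print(dof <= 18)            # True: the 18 runs of L18 cover all main effects
```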

Table 4.

L18 orthogonal array.

3.2.3. Accuracy and S/N Ratio Results

Because accuracy should be as high as possible, the quality characteristic in this study is larger-the-better (LTB). Each experiment is evaluated with five rounds of cross-validation, and the S/N ratio of the resulting accuracy values is calculated; the accuracies and S/N ratios are listed in Table 5. Interactions between factors are not considered in this study. The LTB S/N ratio is calculated as follows:

$$\mathrm{S/N} = -10 \log_{10}\left(\frac{1}{t}\sum_{i=1}^{t}\frac{1}{y_i^{2}}\right)$$

where $t$ is the number of repeated experiments and $y_i$ is the accuracy of the $i$th repeated experiment. A larger S/N ratio indicates accuracy that is both higher and less variable, that is, higher quality stability.
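The LTB S/N ratio, S/N = -10 log10((1/t) Σ 1/y_i²), is straightforward to compute. The sketch below (function name illustrative) also shows two properties that matter when reading Table 5: for the same mean accuracy, more spread lowers the S/N ratio, and expressing accuracy in percent rather than as a fraction shifts every S/N ratio by exactly +40 dB without changing any ranking. The text does not state which scale was used.

```python
import math
from typing import Sequence


def sn_larger_the_better(y: Sequence[float]) -> float:
    """Taguchi larger-the-better S/N ratio in dB: -10 * log10(mean(1 / y_i^2))."""
    if not y or any(v <= 0 for v in y):
        raise ValueError("LTB S/N needs a non-empty list of positive responses")
    return -10.0 * math.log10(sum(1.0 / v ** 2 for v in y) / len(y))


steady = [0.86, 0.88, 0.90, 0.92, 0.94]  # mean 0.90, small spread
spread = [0.80, 0.85, 0.90, 0.95, 1.00]  # mean 0.90, larger spread
print(sn_larger_the_better(steady) > sn_larger_the_better(spread))  # True
print(sn_larger_the_better([90.0] * 5) - sn_larger_the_better([0.9] * 5))  # about 40.0
```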

Table 5.

Accuracy and S/N ratio of AlexNet in CIA data set.

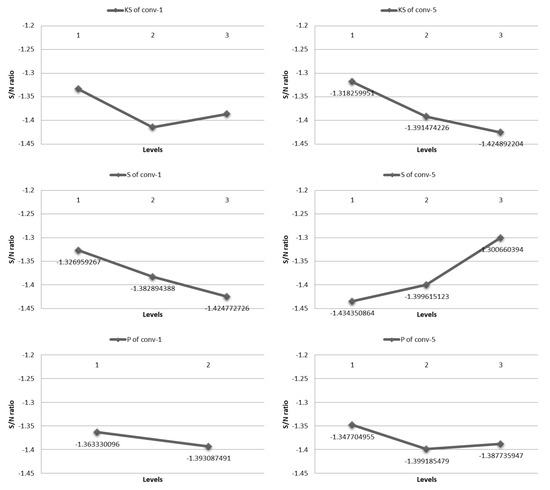

3.2.4. Quality Characteristics and Optimal Parameters

The average S/N ratio of each factor and level is listed in Table 6; a higher S/N ratio represents higher quality stability. The optimal parameter combination is shown in the last row of Table 6: levels 1, 1, 1, 1, 3, and 1 for KS of conv-1, S of conv-1, P of conv-1, KS of conv-5, S of conv-5, and P of conv-5, respectively. The S/N ratio effect of each factor is depicted in Figure 6. The three most significant factors are S of conv-5, KS of conv-5, and S of conv-1.
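A main-effects response table of the kind summarized in Table 6 can be sketched as follows: average the S/N ratios of all runs at each level of each factor, pick the level with the highest average, and rank factors by the range (delta) of their level averages. Ranking by delta is the common Taguchi convention and is assumed here rather than confirmed by the text. The L4 array and S/N values are made up for illustration.

```python
from statistics import mean
from typing import Dict, List, Sequence, Tuple


def response_table(oa: Sequence[Sequence[int]],
                   sn: Sequence[float]) -> List[Dict[int, float]]:
    """Average S/N ratio for each level of each OA column (main effects only)."""
    table = []
    for col in range(len(oa[0])):
        by_level: Dict[int, List[float]] = {}
        for row, value in zip(oa, sn):
            by_level.setdefault(row[col], []).append(value)
        table.append({lvl: mean(vals) for lvl, vals in sorted(by_level.items())})
    return table


def best_levels_and_ranking(
        table: List[Dict[int, float]]) -> Tuple[List[int], List[float], List[int]]:
    """Best level per factor, each factor's delta, and factor indices ranked by delta."""
    best = [max(levels, key=levels.get) for levels in table]
    delta = [max(levels.values()) - min(levels.values()) for levels in table]
    ranking = sorted(range(len(table)), key=lambda c: delta[c], reverse=True)
    return best, delta, ranking


# Hypothetical example: an L4(2^3) array with made-up S/N values in dB.
L4 = [[1, 1, 1], [1, 2, 2], [2, 1, 2], [2, 2, 1]]
sn = [-1.0, -1.3, -0.7, -0.9]
best, delta, ranking = best_levels_and_ranking(response_table(L4, sn))
print(best)     # [2, 1, 1]
print(ranking)  # [0, 1, 2]
```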

Table 6.

Average S/N ratio of each factor and level in CIA data set.

Figure 6.

Effects of all factors in the CIA data set.

3.2.5. Optimal Combination of Experimental Parameters

Based on these results, the final experiment is performed with the optimal parameter combination. Table 7 lists the accuracy of each of the five cross-validation runs and the average accuracy of the proposed Taguchi-based AlexNet network with the optimal combination. For comparison, the original AlexNet network is also tested on the CIA data set, and its accuracy is listed in Table 7. The average accuracies of the proposed Taguchi-based AlexNet network and the original AlexNet network are 87.056% and 85.48%, respectively, showing that the proposed network achieves better average accuracy than the original AlexNet network.

Table 7.

Accuracy of the optimal parameter combination in CIA data set.

3.3. MORPH Facial Recognition Database

The MORPH database is a large database annotated with age, gender, and ethnicity; this study focuses on gender classification. It contains about 55,000 facial images, including about 46,660 images of males and 8493 images of females. As in the CIA experiment, only the convolution kernel parameters of the first and fifth convolutional layers are considered: the KS, stride (S), and padding (P) of the convolution kernels in these two layers, giving six factors. The levels and allocation of each factor in the Taguchi experiment are therefore the same as in the CIA experiment.

3.3.1. Results of Accuracy and S/N Ratio

The experimental accuracy values are calculated with five rounds of cross-validation, and the S/N ratios are listed in Table 8. This study also does not consider the interaction between these factors.

Table 8.

Accuracy and S/N ratio of AlexNet in MORPH database.

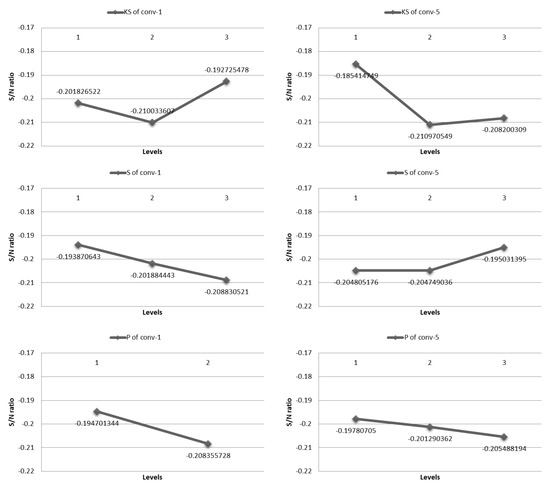

3.3.2. Quality Characteristics and Optimal Parameters

The average S/N ratio of each factor and level is listed in Table 9. The optimal parameter combination is shown in the last row of Table 9: levels 3, 1, 1, 1, 3, and 1 for KS of conv-1, S of conv-1, P of conv-1, KS of conv-5, S of conv-5, and P of conv-5, respectively. The S/N ratio effect of each factor is depicted in Figure 7. The three most significant factors are KS of conv-5, KS of conv-1, and S of conv-1.

Table 9.

Average S/N ratio of each factor and level in MORPH database.

Figure 7.

Effects of all factors in the MORPH database.

3.3.3. Optimal Combination of Experimental Parameters

This experiment is performed with the optimal parameter combination. Table 10 lists the accuracy of the optimal parameter combination and that of the original AlexNet network. The average accuracies of the proposed Taguchi-based AlexNet network and the original AlexNet network are 98.72% and 95.25%, respectively, which again shows that the proposed network achieves better average accuracy than the original AlexNet network. In addition, Table 11 compares the accuracy and training time of various methods on the MORPH database; in this comparison, the proposed method achieves higher accuracy than the other methods with a shorter training time.

Table 10.

Accuracy of the optimal parameter combination in the MORPH database.

Table 11.

Comparison results of accuracy and training time using various methods in the MORPH database.

4. Conclusions

In general, adjusting CNN parameters is time-consuming. In this study, a Taguchi-based AlexNet network is proposed for optimizing the parameters of the AlexNet architecture. To avoid determining the network parameters of AlexNet by trial and error, a Taguchi method with an orthogonal array based on the numbers of design parameters and levels is used. Without the orthogonal array, 486 experiments would be needed; with it, only 18 are needed in this study. According to the average S/N ratio of each factor and level on the CIA data set, S of conv-5 and KS of conv-5 are significant factors. Experimental results show that the average accuracy rates of the proposed Taguchi-based AlexNet network are 1.576 and 3.47 percentage points higher than those of the original AlexNet network on the CIA and MORPH databases, respectively.

In future work, we will implement the proposed Taguchi-based AlexNet network in practical hardware applications on embedded systems, using the extensible open computing platform NVIDIA DRIVE™ AGX, which provides high-performance, energy-efficient computing for artificial intelligence. With this hardware, we aim to apply various AI-based systems with functional-safety requirements, including this gender classification system, in practice. In addition, because the experimental results show that the Taguchi-based AlexNet network achieves higher average accuracy than the original AlexNet network on both the CIA and MORPH databases, the method will be extended to LeNet, GoogLeNet, and ResNet in the future.

Author Contributions

Conceptualization, C.-J.L.; methodology, C.-J.L. and Y.-C.L.; software, Y.-C.L. and H.-Y.L.; data curation, Y.-C.L.; writing—original draft preparation, C.-J.L., Y.-C.L. and H.-Y.L.; funding acquisition, C.-J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology of the Republic of China, grant number MOST 109-2221-E-167-027.

Conflicts of Interest

The authors declare no conflict of interests regarding the publication of this paper.

References

- Hjelmås, E.; Low, B.K. Face detection: A survey. Comput. Vis. Image Underst. 2001, 83, 236–274.

- Yang, M.H.; Kriegman, D.J.; Ahuja, N. Detecting faces in images: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 34–58.

- Zhao, W.; Chellappa, R.; Phillips, P.J.; Rosenfeld, A. Face recognition: A literature survey. ACM Comput. Surv. 2003, 35, 399–458.

- Saatci, Y.; Town, C. Cascaded classification of gender and facial expression using active appearance models. In Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition (FGR06), Southampton, UK, 10–12 April 2006; pp. 393–398.

- Mäkinen, E.; Raisamo, R. An experimental comparison of gender classification methods. Pattern Recognit. Lett. 2008, 29, 1544–1556.

- Singh, V.; Shokeen, V.; Singh, B. Comparison of feature extraction algorithms for gender classification from face images. Int. J. Eng. Res. Technol. 2010, 2, 61034767.

- Eidinger, E.; Enbar, R.; Hassner, T. Age and Gender Estimation of Unfiltered Faces. IEEE Trans. Inf. Forensics Secur. 2014, 9, 2170–2179.

- Duan, M.; Li, K.; Yang, C.; Li, K. A hybrid deep learning CNN–ELM for age and gender classification. Pattern Recognit. Lett. 2018, 275, 448–461.

- Kumar, A.; Kim, J.; Lyndon, D.; Fulham, M.; Feng, D. An Ensemble of Fine-Tuned Convolutional Neural Networks for Medical Image Classification. IEEE J. Biomed. Health Inform. 2017, 21, 31–40.

- Ševo, I.; Avramović, A. Convolutional Neural Network Based Automatic Object Detection on Aerial Images. IEEE Geosci. Remote Sens. Lett. 2016, 13, 740–744.

- Ponce, J.M.; Aquino, A.; Andújar, J.M. Olive-Fruit Variety Classification by Means of Image Processing and Convolutional Neural Networks. IEEE Access 2019, 7, 147629–147641.

- Ding, C.; Tao, D. Trunk-Branch Ensemble Convolutional Neural Networks for Video-Based Face Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1002–1014.

- Rasti, P.; Uiboupin, T.; Escalera, S.; Anbarjafari, G. Convolutional Neural Network Super Resolution for Face Recognition in Surveillance Monitoring. In Proceedings of the 2016 International Conference on Articulated Motion and Deformable Objects, Palma de Mallorca, Spain, 13–15 July 2016; pp. 175–184.

- Levi, G.; Hassner, T. Age and gender classification using convolutional neural networks. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 34–42.

- Hou, J.; Wang, S.; Lai, Y.; Tsao, Y.; Chang, H.; Wang, H. Audio-Visual Speech Enhancement Using Multimodal Deep Convolutional Neural Networks. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 2, 117–128.

- Ozbulak, G.; Aytar, Y.; Ekenel, H.K. How transferable are CNN-based features for age and gender classification. In Proceedings of the 2016 International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 21–23 September 2016.

- Real, E.; Moore, S.; Selle, A.; Saxena, S.; Suematsu, Y.L.; Tan, J.; Le, Q.V.; Kurakin, A. Large-scale evolution of image classifiers. In Proceedings of the ICML’17: 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 2902–2911.

- Ma, B.; Li, X.; Xia, Y.; Zhang, Y. Autonomous deep learning: A genetic DCNN designer for image classification. Neurocomputing 2020, 379, 152–161.

- Zoph, B.; Le, Q.V. Neural Architecture Search with Reinforcement Learning. arXiv 2016, arXiv:1611.01578.

- Yang, W.H.; Tarng, Y.S. Design optimization of cutting parameters for turning operations based on the Taguchi method. J. Mater. Process. Tech. 1998, 84, 122–129.

- Ballantyne, K.N.; van Oorschot, R.A.; Mitchell, R.J. Reduce optimisation time and effort: Taguchi experimental design methods. Forensic Sci. Int. Genet. Suppl. Ser. 2008, 1, 7–8.

- Harlapur, M.D.; Mallapur, D.G.; Rajendra, K. AIP Conference Proceeding; AIP Publishing: New York, NY, USA, 2018; Volume 1943.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. Neural Inf. Process. Syst. 2012, 60, 84–90.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Simonyan, K.; Zisserman, A. Very deep convolutional neural network based image classification using small training sample size. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 730–734.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.

- Islam, M.M.; Tasnim, N.; Baek, J.-H. Human Gender Classification Using Transfer Learning via Pareto Frontier CNN Networks. Inventions 2020, 5, 16.

- Lin, C.J.; Lin, C.H.; Jeng, S.Y. Using feature fusion and parameter optimization of dual-input convolutional neural network for face gender recognition. Appl. Sci. 2020, 10, 3166.

- Lin, C.J.; Jeng, S.Y.; Chen, M.K. Using 2D CNN with Taguchi parametric optimization for lung cancer recognition from CT images. Appl. Sci. 2020, 10, 2591.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).