BlocklyAR: A Visual Programming Interface for Creating Augmented Reality Experiences

Abstract

1. Introduction

2. Motivation and Research Aim

- Helps young learners and enthusiasts express their programming ideas without memorizing syntax;

- Enables learners to evaluate their coding promptly;

- Allows learners to generate an AR application with minimal effort;

- Supports users’ ability to share newly created AR applications with others;

- Shows the applicability of BlocklyAR by recreating an existing AR application through a visual programming interface;

- Evaluates the BlocklyAR tool using the technology acceptance model with the following hypotheses:

3. Related Work

4. Methods

4.1. System Design

- Task 1 (T1). It enables users to program an AR application visually.

- Task 2 (T2). It supports multiple content types, including text, sound, primitive models, and external 3D models (e.g., GLTF, OBJ).

- Task 3 (T3). It allows young learners to generate both AR content and animations associated with it.

- Task 4 (T4). It enables users to experience their coding scheme on the visual interface.

- Task 5 (T5). It allows users to share AR applications they have developed with others.

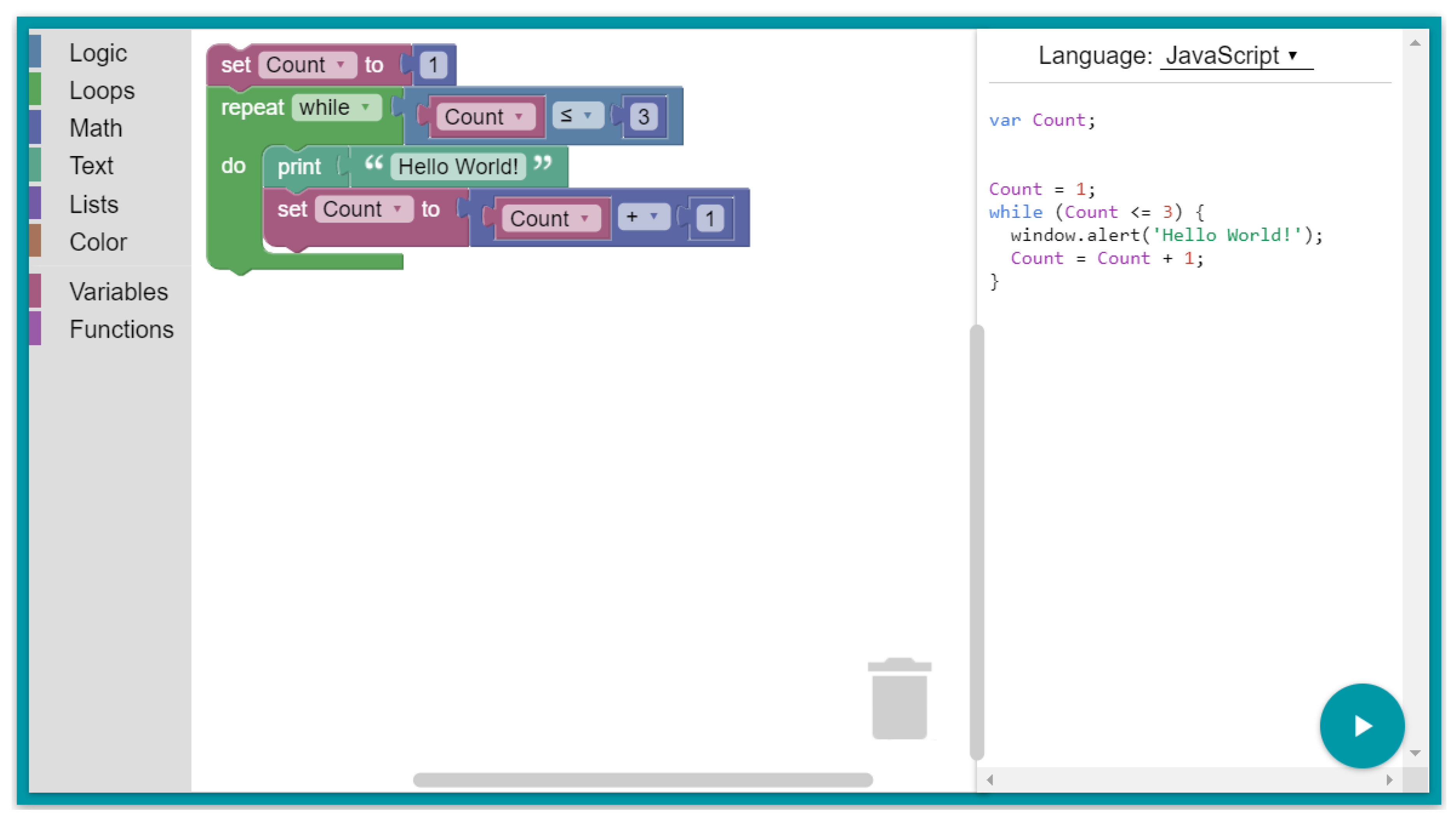

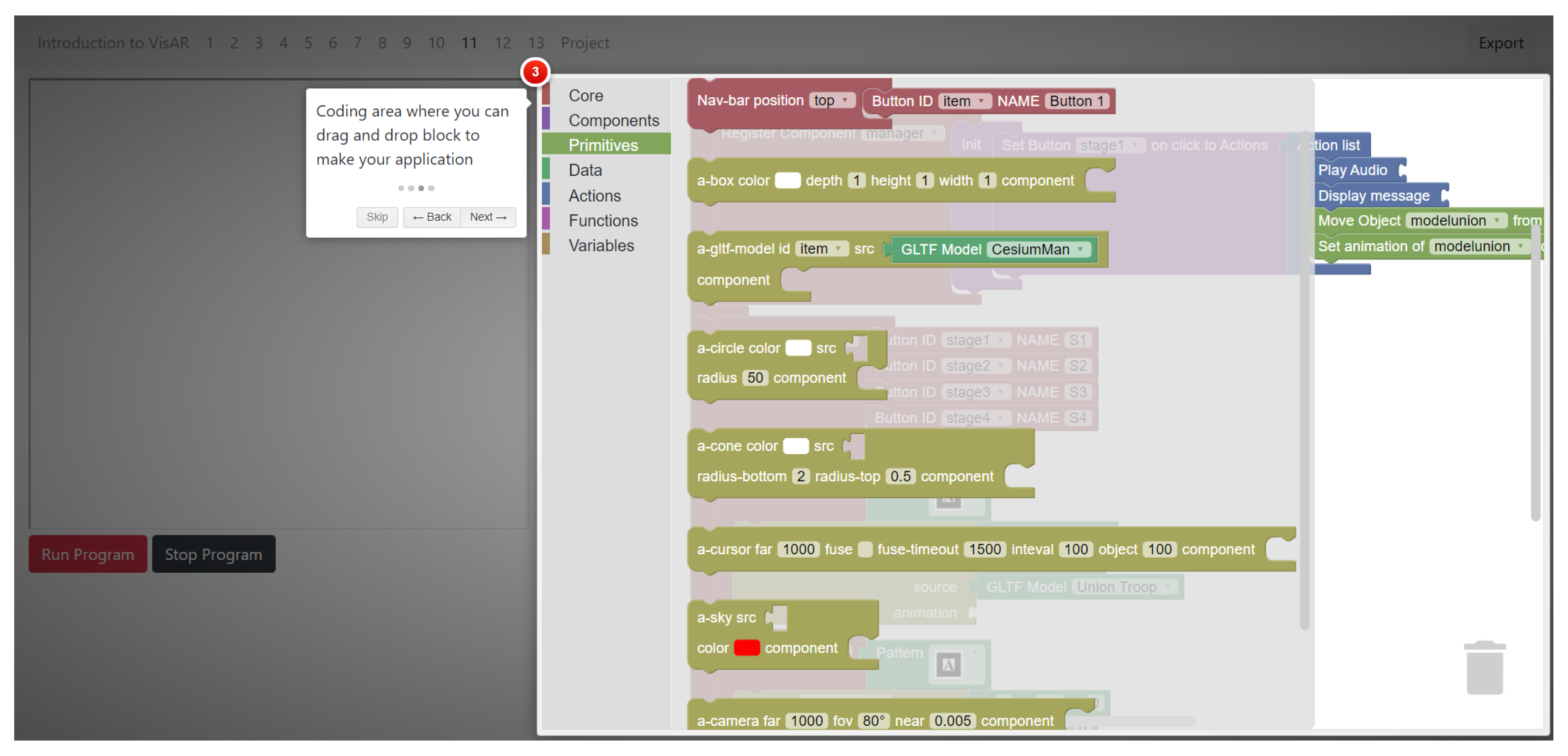

4.1.1. The Coding Editor

- Core: There are four block types in this category: AR scene, marker, entity, and script. The AR scene block is translated into the HTML tag "<a-scene arjs></a-scene>". Similarly, the marker and entity blocks are translated as "<a-marker>...</a-marker>" and "<a-entity>...</a-entity>", respectively. The Script block is a special cue that allows creators to inject arbitrary JavaScript into the AR app. A script tag is often inserted at the end of the HTML document body; in A-Frame, however, components must be declared first, so the Script block must be placed before the AR scene block. The Script block is translated as "<script>...</script>". The three dots ("...") in the translated tags indicate that other entities can be nested within the tags.

- Components: Currently, A-Frame provides 42 components for users to work with. As mentioned earlier, most components are used for navigation and interactions in the VR environment (hand control, look control, keyboard control or Oculus/HTC Vive control, etc.). Thus, we only keep 10 general components, as follows: position, rotation, scale, camera, look-at, background, geometry, material, visible, and light. The shape of the component blocks is shown in Figure 3; the default value for each block is adapted from the A-Frame components. Each block and its translation are described in detail as follows:

- Position: This component places an entity at a certain location in 3D space. It takes a coordinate value as three space-delimited numbers (e.g., "0 1 2" corresponds to the x, y, and z positions, respectively). In the AR app, the position of an object is relative to its marker. The position block is translated as "position = value", where value is the input from the Vector3 block (described in the Data category). If the position component is not explicitly attached to an entity, the entity is assumed to be located at the origin (position = "0 0 0") of the 3D space or at the center of the marker.

- Rotation: This component sets the orientation of an entity in degrees. It takes the pitch (x), yaw (y), and roll (z) as degrees of rotation. The rotation block is translated as "rotation = value", where value is the input from the Vector3 block.

- Scale: The scale component defines a transformation of an entity, such as shrinking, stretching, or skewing, along the x, y, and z axes. The scale block is translated as "scale = value", where value is the input from the Vector3 block.

- Camera: The camera component identifies from which perspective the user views the scene. Properties of the camera component include active (whether this camera is active), far (camera frustum far clipping plane), fov (field of view), near (camera frustum near clipping plane), spectator (used to render a third-person view), and zoom (zoom factor of the camera). The translated code for this component is "camera = 'active: input_value; far: input_value; fov: input_value; near: input_value; spectator: input_value; zoom: input_value'", where each input_value is defined by users in the block.

- Look-at: This component defines the orientation behavior of an entity over time. It updates the rotation component in such a way that the entity always faces toward another entity or position. The translated code for this component is "look-at = 'value'", where value can be either a Vector3 block (in the Data category) or an identifier of an object (in the Variables section).

- Background: The background component defines a basic color background for a scene. It takes two inputs: color and transparency. The background block is translated as "background = 'color: input_value; transparent: boolean'", where input_value and boolean are set by users.

- Geometry: This is a universal component to define the shape of an entity. The input of this component can be either data defined by users or a primitive shape (described in the Primitives category). The translated code from the geometry block is "geometry = 'buffer: boolean; primitive: input_value; skipCache: boolean'".

- Material: The material component defines the appearance of an entity, such as color, wireframe, transparency, or visibility. The translated code from the material block is "material = 'color: input_value; wireframe: boolean; transparent: boolean; visible: boolean'".

- Visible: This component determines whether to render an object in the scene or not. The translated code from the visible block is "visible = boolean". If the boolean value is false, the object is not drawn and vice versa.

- Light: This component defines the entity as a source of light. Its properties include the type of light (ambient, directional, hemisphere, point, spot), the color of the light, and the intensity (light strength). We translate the light block into the component as "light = 'type: input_value; color: input_value; intensity: input_value'".

- Primitives: BlocklyAR supports 27 visual cues that can be translated into primitives available in A-Frame. In addition, our tool helps to generate HTML tags such as div or button. The mechanism for generating code for the primitives is similar to that of the entity block. Because of the large number of primitives, we do not describe all of them in this section; instead, we describe the blocks that are often used in our AR application.

- Box: This primitive geometry enables the system to draw a box in the scene. It is defined by color, height, width, and depth. The box is included in BlocklyAR for testing purposes. The block is translated into the A-Frame tag "<a-box color='input_value' depth='input_value' width='input_value' height='input_value'>...</a-box>". The ellipsis means that other boxes or entities can be nested in this box.

- gltf: This is one of the most important blocks that make BlocklyAR unique (Task 2). Its translation enables the system to render a 3D object and animations in GLTF format [29]. We use these animations to make the characters in the scene perform behaviors. The available animations are extracted in the animation lists block (described in the Data category). The translation of this block is defined as "<a-gltf-model id='input_variable' src='input_value'></a-gltf-model>", where input_variable is retrieved from a block in the Variables category and input_value comes from the Data category.

- Data: As its name implies, this block allows users to define the data type, default value, and constraint of a given block. Blocks in this category are usually used as inputs for the component and primitive blocks. We define seven block types dedicated for the AR application.

- Vector3: This block defines a coordinate value as three space-delimited numbers. The translation of this block is "x y z", corresponding to the x, y, and z axes in 3D space. The output of this block is used to set the position, rotation, and scale of an object.

- Animation lists: This block defines all available animations of GLTF models (Task 3). Its output gives instruction to the system for playing a particular animation of a 3D object. The translation for this block is "animation_type" where animation_type can be idle, walking, running, etc.

- Audio lists: Similar to the animation lists block, the audio lists block determines the set of available audio files in the system (Task 2). Its output provides the audio location as "audio_path".

- Preset: The preset block defines the type for the marker block. It is translated as "preset = 'dropdown_value'", where dropdown_value is either hiro or kanji, two popular markers for testing AR applications.

- Pattern: Users can use custom markers for their AR application. This block determines the marker and the location of its pattern and is translated as "pattern = 'pattern_path'", where pattern_path is the location of the pattern file.

- GLTF: This block specifies the location of the GLTF model (Task 2). It is used as a source for the gltf block in the Primitives category.

- Text: This block defines the text content to be displayed or used by other blocks. Its output is "text_content" (Task 2).
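The component and Data blocks above all reduce to attribute strings. As a minimal sketch (not BlocklyAR's actual generator; the helper names `vector3`, `componentValue`, and `attribute` are hypothetical), the Vector3 output and the "property: value; ..." form used by multi-property components such as camera, light, and material could be produced as follows:

```javascript
// Hypothetical helpers for illustration; names are not taken from the BlocklyAR source.

// Vector3 block: three finite numbers -> "x y z".
function vector3(x, y, z) {
  for (const n of [x, y, z]) {
    if (typeof n !== 'number' || !Number.isFinite(n)) {
      throw new TypeError('Vector3 expects three finite numbers');
    }
  }
  return `${x} ${y} ${z}`;
}

// Multi-property component value:
// { type: 'point', intensity: 0.8 } -> "type: point; intensity: 0.8"
function componentValue(props) {
  return Object.entries(props)
    .filter(([, value]) => value !== undefined) // skip inputs the user left empty
    .map(([key, value]) => `${key}: ${value}`)
    .join('; ');
}

// Render one component as an HTML attribute on an entity tag.
function attribute(name, value) {
  const text = value !== null && typeof value === 'object'
    ? componentValue(value)
    : String(value);
  return `${name}="${text}"`;
}

const position = attribute('position', vector3(0, 1, 2));
const light = attribute('light', { type: 'point', color: '#fff', intensity: 0.8 });
const visible = attribute('visible', false);

console.log(position); // position="0 1 2"
console.log(light);    // light="type: point; color: #fff; intensity: 0.8"
console.log(visible);  // visible="false"
```

Single-value components such as visible pass through unchanged, while object inputs are flattened into the semicolon-separated form.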

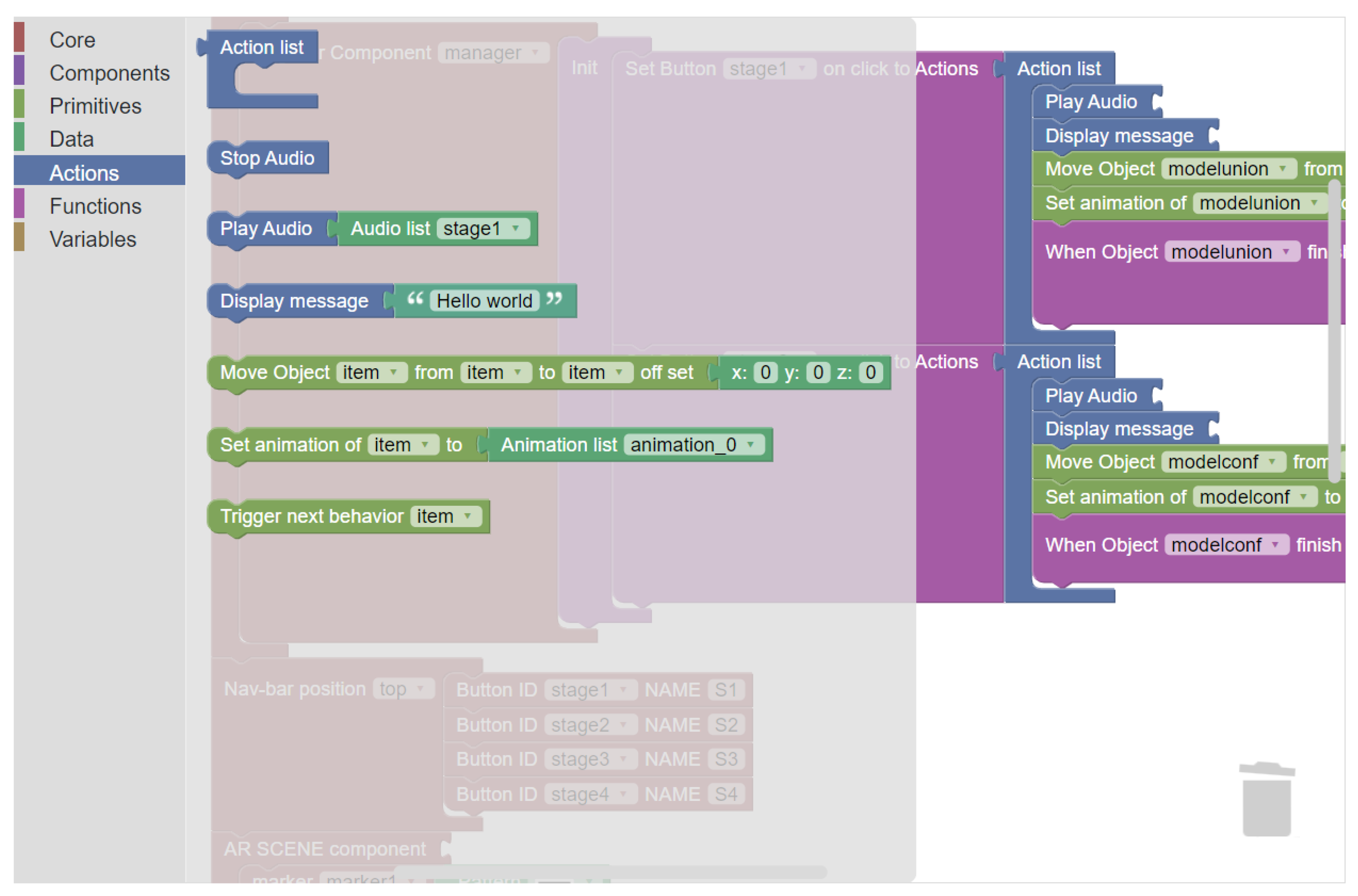
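To illustrate how nested blocks might become nested tags, the following sketch walks a small block tree and emits A-Frame markup, placing Script blocks ahead of the scene as the Core category requires. The tree shape (`tag`, `attrs`, `children`, `text`), the function names, and the model path are assumptions made for this example, and attribute values are not escaped.

```javascript
// Hypothetical block-tree-to-markup generator; not the BlocklyAR implementation.

// Render one node and, recursively, the nodes nested inside it.
function renderNode(node, depth = 0) {
  const pad = '  '.repeat(depth);
  const attrs = Object.entries(node.attrs || {})
    // `true` renders a bare attribute such as `arjs`; anything else renders name="value".
    .map(([name, value]) => (value === true ? ` ${name}` : ` ${name}="${value}"`))
    .join('');
  if (node.text !== undefined) {
    // Script block: the body is arbitrary JavaScript supplied by the creator.
    return `${pad}<${node.tag}${attrs}>${node.text}</${node.tag}>`;
  }
  const children = (node.children || []).map((child) => renderNode(child, depth + 1));
  if (children.length === 0) {
    return `${pad}<${node.tag}${attrs}></${node.tag}>`;
  }
  return [`${pad}<${node.tag}${attrs}>`, ...children, `${pad}</${node.tag}>`].join('\n');
}

// Script blocks are emitted first so components are declared before the scene.
function renderWorkspace(blocks) {
  const scripts = blocks.filter((block) => block.tag === 'script');
  const others = blocks.filter((block) => block.tag !== 'script');
  return [...scripts, ...others].map((block) => renderNode(block)).join('\n');
}

const workspace = [
  {
    tag: 'a-scene',
    attrs: { arjs: true },
    children: [
      {
        tag: 'a-marker',
        attrs: { preset: 'hiro' },
        children: [
          {
            tag: 'a-gltf-model',
            // The id and path below are made-up example values.
            attrs: { id: 'soldier', src: 'models/soldier.gltf', position: '0 0 0' },
          },
        ],
      },
      { tag: 'a-entity', attrs: { camera: true } },
    ],
  },
  { tag: 'script', text: "console.log('components registered');" },
];

const html = renderWorkspace(workspace);
console.log(html);
```

Even though the script node is listed last in `workspace`, it appears first in the generated markup, which mirrors the ordering constraint described for the Script block.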

- Actions: This category consists of blocks that define typical atomic events in the AR application. The shape of each of the blocks is illustrated in Figure 4.

- Action list: This block defines an empty action container that allows a series of events to be performed within it. The translation of this block is an anonymous JavaScript function, "()=>{...}", where "..." represents the atomic events.

- Stop audio: This block enables users to stop playing all audio in the AR app. It traverses the HTML DOM (Document Object Model) elements, finds the audio objects, and triggers the pause event. The block is translated into the JavaScript command "$('audio').trigger('pause')".

- Play audio: This block is translated into executable code that triggers a particular audio object. It is transcribed as "$('#input_value').trigger('play')", where #input_value is the identifier of the audio object.

- Display message: The display message block defines a message to be displayed on the screen (Task 2). It takes the text block as the input. The literal translation of this block is "$('body').appendChild('input_value')".

- Move object: This is one of the most important blocks in BlocklyAR since it allows a particular 3D model to move between markers (Task 3). The block identifies which 3D object moves, its starting location, and its destination. Because the translation spans a large number of lines, we do not present it here; readers can find it in our GitHub repository.

- Set animation: When the set animation block is used, it prompts the system to play a specified animation of a particular 3D object (Task 3). The translated code for this block is "$('#object_identifier').attr('animation-mixer', 'clip: animation_type')".

- Trigger next action: This block defines a transition between different stages of an application or simulates an event. In particular, it takes an HTML element as input and triggers the "click" event. The translation is "$('#object_identifier').trigger('click')".
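The action translations listed above are short jQuery statements, so a generator can be sketched as a set of string templates. This is only an illustration under assumed names (`actions`, `actionList`, and the identifiers `march` and `union` are hypothetical); it builds the code text and never calls jQuery itself.

```javascript
// Hypothetical generators for the Actions category; names are illustrative only.

// Quote a value as a single-quoted JavaScript string literal.
const quote = (text) =>
  `'${String(text).replace(/\\/g, '\\\\').replace(/'/g, "\\'")}'`;

const actions = {
  // Stop audio: pause every audio element on the page.
  stopAudio: () => `$('audio').trigger('pause');`,
  // Play audio: trigger play on one audio element, selected by id.
  playAudio: (audioId) => `$(${quote('#' + audioId)}).trigger('play');`,
  // Set animation: select the clip through the animation-mixer attribute.
  setAnimation: (objectId, clip) =>
    `$(${quote('#' + objectId)}).attr('animation-mixer', ${quote('clip: ' + clip)});`,
  // Trigger next action: simulate a click on the target element.
  triggerNext: (objectId) => `$(${quote('#' + objectId)}).trigger('click');`,
};

// Action list block: wrap the atomic statements in an anonymous arrow function.
function actionList(statements) {
  const body = statements.map((statement) => `  ${statement}`).join('\n');
  return `() => {\n${body}\n}`;
}

const stageOne = actionList([
  actions.stopAudio(),
  actions.playAudio('march'),
  actions.setAnimation('union', 'walking'),
]);

console.log(stageOne);
// () => {
//   $('audio').trigger('pause');
//   $('#march').trigger('play');
//   $('#union').attr('animation-mixer', 'clip: walking');
// }
```

Keeping each action as a plain string makes the container trivial: the action list only has to indent and wrap whatever statements are snapped into it.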

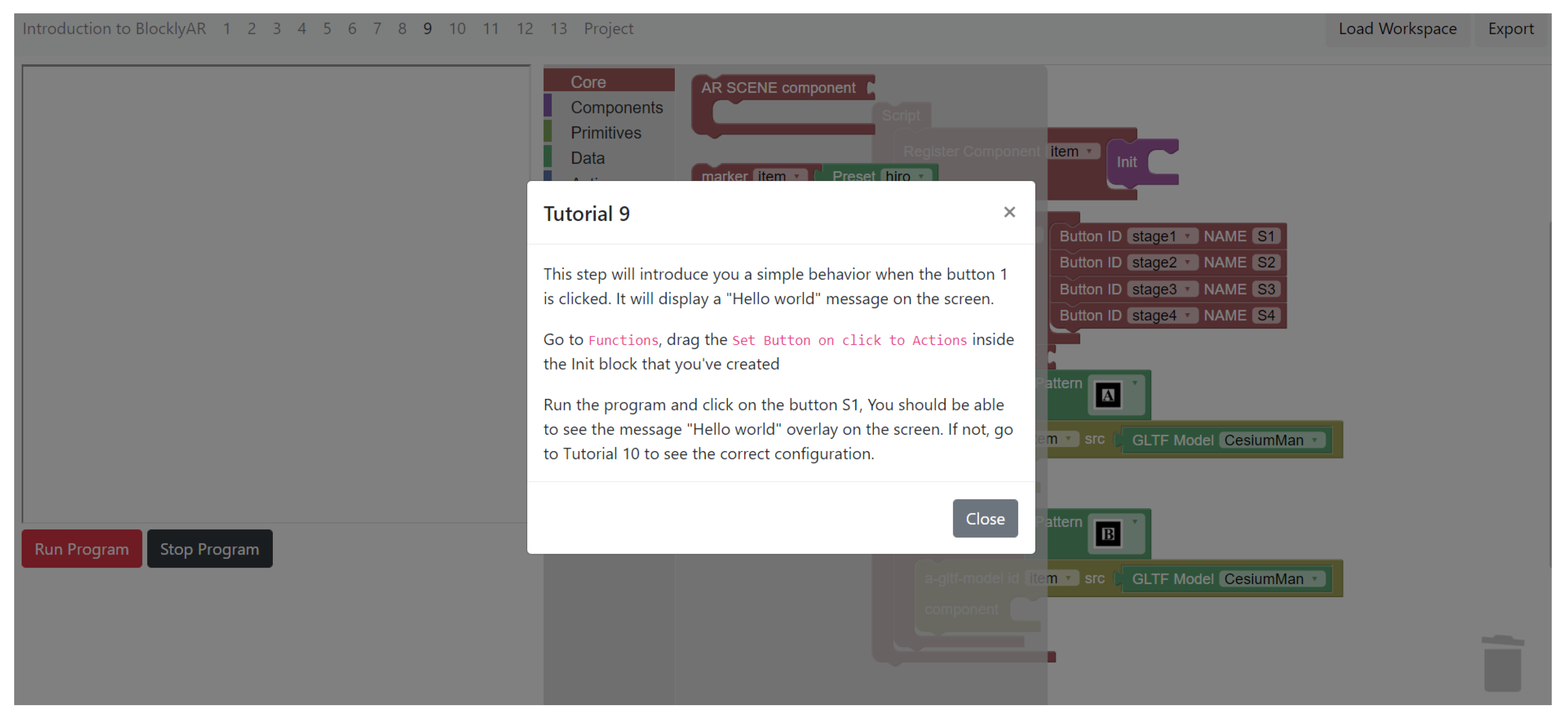

- Functions: This category contains blocks that define the sequence of events or an object’s behaviors over time. The arrangement of the blocks determines the order in which the generated code executes.

- Set actions to an object: This block enables users to create a series of actions for a particular object. These actions are taken from the Action list block. The translation is "$('#object_identifier').on('click', action_list)".

- Next actions: This block is used when users want to perform other actions after a given object finishes running its actions. The next actions block is translated as "$('#object_identifier').on('end', action_list)", where action_list contains the next instructions (i.e., the Action list block).

- Wait: This block defines a delay before the next execution. Its translation is expressed as "setTimeout(function(){actions}, second)", where actions are taken from the action blocks and second is the delay time.

- Variables: This category enables users to define variables in the AR application. Since BlocklyAR allows the addition of any arbitrary 3D objects in the scene, each object needs to have a unique identifier. The variable block defines the variable name to be used in other blocks. The variable block is provided by the Blockly library.
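The three function blocks wrap action lists in event handlers or timers. A hedged sketch of such generators is shown below; the helper names are hypothetical, and it assumes that the Wait block's delay is entered in seconds, which must be converted because `setTimeout` expects milliseconds.

```javascript
// Hypothetical generators for the Functions category; names are illustrative only.

// Build a jQuery id selector from a variable-block identifier.
const selector = (id) => `$('#${id}')`;

// Set actions to an object: run the action list when the object is clicked.
const setActions = (objectId, actionList) =>
  `${selector(objectId)}.on('click', ${actionList});`;

// Next actions: run the action list when the object emits "end".
const nextActions = (objectId, actionList) =>
  `${selector(objectId)}.on('end', ${actionList});`;

// Wait: delay the given statements. Assumption: the block value is in seconds,
// so it is multiplied by 1000 for setTimeout.
const wait = (seconds, statements) =>
  `setTimeout(function () { ${statements} }, ${seconds * 1000});`;

const program = [
  setActions('union', `() => { $('#union').attr('animation-mixer', 'clip: walking'); }`),
  nextActions('union', `() => { ${wait(6, `$('#stage2').trigger('click');`)} }`),
].join('\n');

console.log(program);
// $('#union').on('click', () => { $('#union').attr('animation-mixer', 'clip: walking'); });
// $('#union').on('end', () => { setTimeout(function () { $('#stage2').trigger('click'); }, 6000); });
```

Because each generator returns plain text, blocks can be nested freely: the output of `wait` is simply placed inside the action list passed to `nextActions`.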

4.1.2. The Visual Augmented Reality Component

4.1.3. The Supporting Panel Component

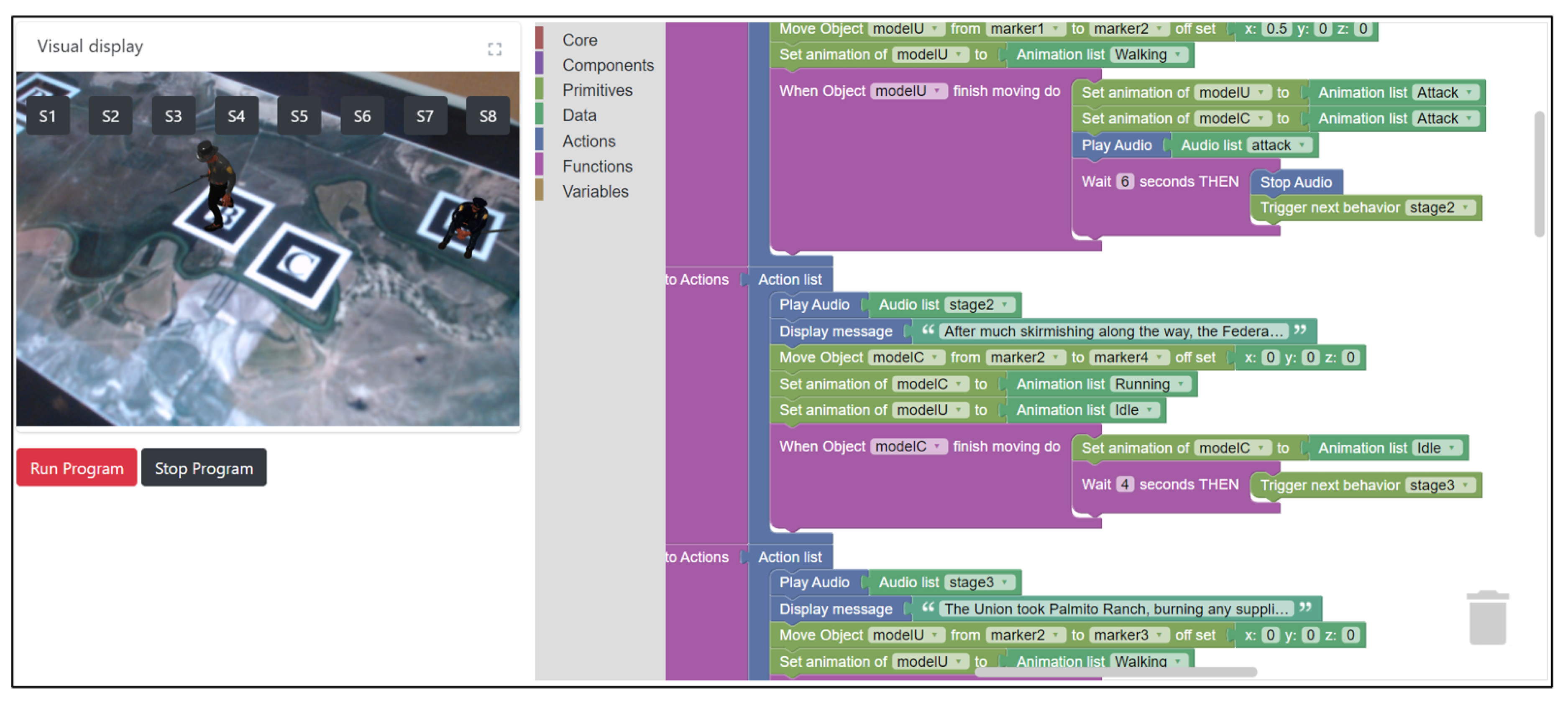

4.2. Use Case

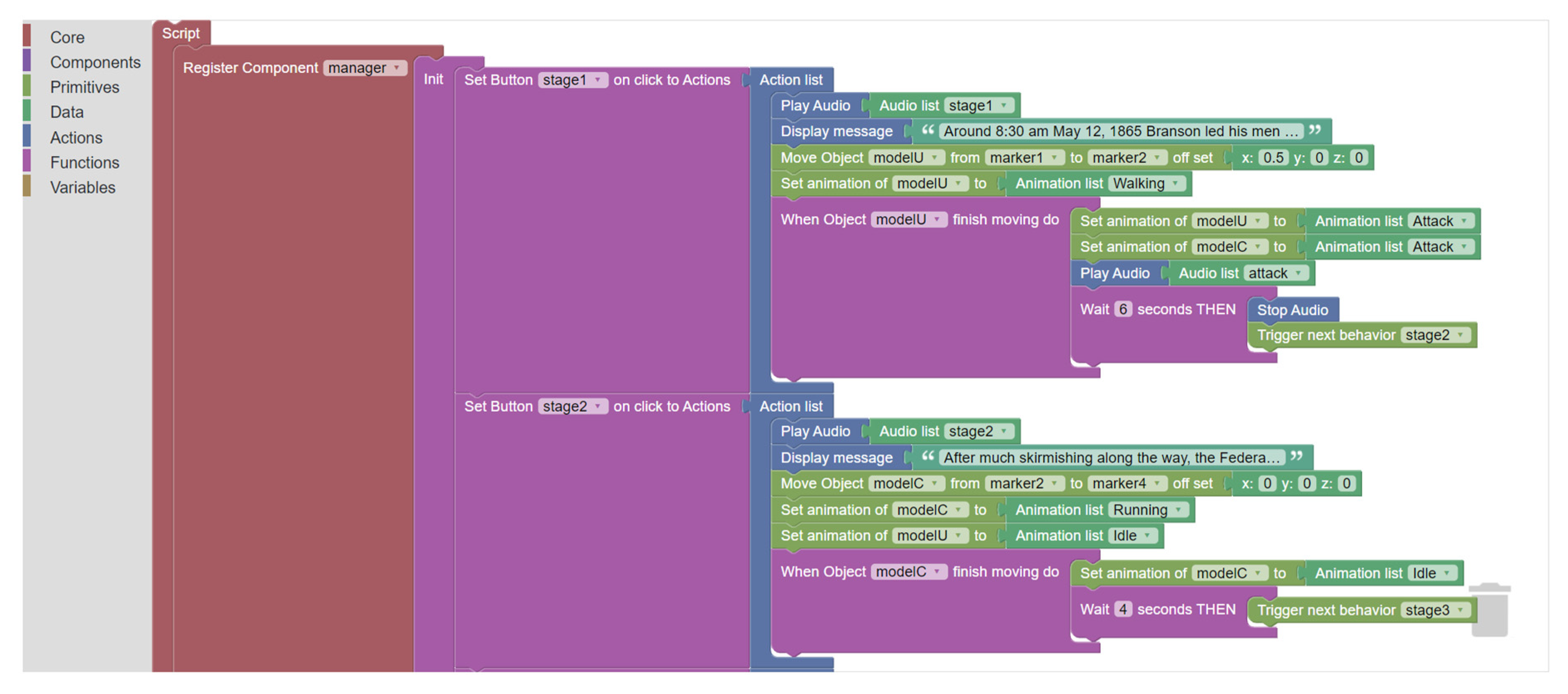

- Stage 1 (S1): The Union began moving from White Ranch toward Palmito to attack the Confederacy: play audio, display message, move the Union 3D models from one marker to another, and set the animation of the Union models to "walking". When the Union finishes moving, set the animation of the Union and the Confederacy to "attack", play the audio "attack", wait 6 s, and then transition to the next stage (S2).

- Stage 2 (S2): The Confederacy scattered to different locations: play audio, display message, move the Confederate 3D models from one marker to another, set the animation of the Union to "idle", and set the animation of the Confederacy to "running". When the Confederacy finishes moving, set the animation of the Union to "idle", wait 6 s, and then transition to the next stage (S3).

- Stage 3 (S3): The Union burned supplies and remained at the site to feed themselves and their horses: play audio, display message, move the Union from one marker to another, and set the animation of the Confederacy to "walking". When the Union finishes moving, set the animation of the Union to "resting", wait 6 s, and then transition to the next stage (S4).

- Stage 4 (S4): The Confederacy arrived with reinforcements and forced the Union back to White Ranch: play audio, display message, move the 3D models from one marker to another, and set the animation of all models to "walking". When the 3D models finish moving, set the animation of all models to "attack". Wait 6 s, then move the Union from one marker to another, set the animation of the Union to "running", and set the animation of the Confederacy to "idle". When the Union finishes moving, set the animation of the Union to "idle", wait 6 s, and then transition to the next stage (S5).
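Each stage is therefore a chain: a click starts the actions, the "end" event starts the follow-up actions, and a timed wait triggers the next stage. The sketch below replays Stage 1 with a tiny stand-in object instead of jQuery and A-Frame; `FakeObject`, the log messages, and the synchronous scheduler are assumptions introduced so the example runs without a browser.

```javascript
// Minimal stand-in for a scene object with on/trigger; not jQuery, not BlocklyAR code.
class FakeObject {
  constructor(id) {
    this.id = id;
    this.handlers = {};
  }
  on(event, handler) {
    (this.handlers[event] = this.handlers[event] || []).push(handler);
    return this;
  }
  trigger(event) {
    (this.handlers[event] || []).forEach((handler) => handler());
    return this;
  }
}

// Wait block: delay in seconds, converted to milliseconds for the scheduler.
function wait(seconds, actions, schedule = setTimeout) {
  return schedule(actions, seconds * 1000);
}

const log = [];
// Synchronous scheduler so the sketch is deterministic; a real app would use setTimeout.
const immediate = (fn, ms) => {
  log.push(`wait ${ms} ms`);
  fn();
};

const union = new FakeObject('union');
const stage2 = new FakeObject('stage2');

// Set actions to an object: what happens when Stage 1 is started.
union.on('click', () => {
  log.push('play audio, display message, move Union, animation: walking');
  union.trigger('end'); // stands in for the movement finishing
});

// Next actions: what happens once the Union has finished moving.
union.on('end', () => {
  log.push('animation: attack, play audio "attack"');
  wait(6, () => stage2.trigger('click'), immediate);
});

stage2.on('click', () => log.push('stage 2 started'));

union.trigger('click');
console.log(log);
```

The later stages follow the same pattern with different objects, animations, and audio, so a full scenario is a sequence of such chains linked by the final trigger of each stage.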

4.3. Evaluation

4.4. Research Model

4.5. Data Collection and Analysis

5. Results

5.1. Qualitative Analysis

5.2. Quantitative Analysis

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Azuma, R.T. A survey of augmented reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385.

2. Bruno, F.; Barbieri, L.; Marino, E.; Muzzupappa, M.; D’Oriano, L.; Colacino, B. An augmented reality tool to detect and annotate design variations in an Industry 4.0 approach. Int. J. Adv. Manuf. Technol. 2019, 105, 875–887.

3. Jung, K.; Nguyen, V.T.; Yoo, S.C.; Kim, S.; Park, S.; Currie, M. PalmitoAR: The Last Battle of the US Civil War Reenacted Using Augmented Reality. Int. J. Geo-Inf. 2020, 9, 75.

4. Norouzi, N.; Bruder, G.; Belna, B.; Mutter, S.; Turgut, D.; Welch, G. A systematic review of the convergence of augmented reality, intelligent virtual agents, and the internet of things. In Artificial Intelligence in IoT; Springer: Berlin/Heidelberg, Germany, 2019; pp. 1–24.

5. Azuma, R.; Baillot, Y.; Behringer, R.; Feiner, S.; Julier, S.; MacIntyre, B. Recent advances in augmented reality. IEEE Comput. Graph. Appl. 2001, 21, 34–47.

6. Nguyen, V.T.; Hite, R.; Dang, T. Web-Based Virtual Reality Development in Classroom: From Learner’s Perspectives. In Proceedings of the 2018 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), Taichung, Taiwan, 10–12 December 2018; pp. 11–18.

7. Linowes, J.; Babilinski, K. Augmented Reality for Developers: Build Practical Augmented Reality Applications with Unity, ARCore, ARKit, and Vuforia; Packt Publishing Ltd.: Birmingham, UK, 2017.

8. Kato, H. ARToolKit: Library for Vision-Based augmented reality. IEICE PRMU 2002, 6, 2.

9. Nguyen, V.T.; Jung, K.; Dang, T. Creating Virtual Reality and Augmented Reality Development in Classroom: Is it a Hype? In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), San Diego, CA, USA, 9–11 December 2019; pp. 212–2125.

10. Danchilla, B. Three.js framework. In Beginning WebGL for HTML5; Springer: Berlin/Heidelberg, Germany, 2012; pp. 173–203.

11. Mozilla. A Web Framework for Building Virtual Reality Experiences. 2019. Available online: https://aframe.io (accessed on 23 January 2020).

12. WebAssembly. World Wide Web Consortium. 2020. Available online: https://webassembly.org/ (accessed on 5 April 2020).

13. Weintrop, D. Block-based programming in computer science education. Commun. ACM 2019, 62, 22–25.

14. Resnick, M.; Maloney, J.; Monroy-Hernández, A.; Rusk, N.; Eastmond, E.; Brennan, K.; Millner, A.; Rosenbaum, E.; Silver, J.; Silverman, B.; et al. Scratch: Programming for all. Commun. ACM 2009, 52, 60–67.

15. Radu, I.; MacIntyre, B. Augmented-reality scratch: A children’s authoring environment for augmented-reality experiences. In Proceedings of the 8th International Conference on Interaction Design and Children, Como, Italy, 3–5 June 2009; pp. 210–213.

16. CoSpaces. Make AR & VR in the Classroom. 2020. Available online: https://cospaces.io/edu/ (accessed on 5 April 2020).

17. Laine, T.H. Mobile educational augmented reality games: A systematic literature review and two case studies. Computers 2018, 7, 19.

18. Wu, H.K.; Lee, S.W.Y.; Chang, H.Y.; Liang, J.C. Current status, opportunities and challenges of augmented reality in education. Comput. Educ. 2013, 62, 41–49.

19. Klopfer, E.; Squire, K. Environmental Detectives—The development of an augmented reality platform for environmental simulations. Educ. Technol. Res. Dev. 2008, 56, 203–228.

20. Google Inc. Blockly: A JavaScript Library for Building Visual Programming Editors. 2020. Available online: https://developers.google.com/blockly (accessed on 5 April 2020).

21. Mota, J.M.; Ruiz-Rube, I.; Dodero, J.M.; Arnedillo-Sánchez, I. Augmented reality mobile app development for all. Comput. Electr. Eng. 2018, 65, 250–260.

22. Clarke, N.I. Through the Screen and into the World: Augmented Reality Components with MIT App Inventor. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2019.

23. Anonymous. BlocklyAR: A Visual Programming Interface for Creating Augmented Reality Experience. 2020. Available online: https://youtu.be/lSsQd8GTcQ8 (accessed on 1 June 2020).

24. Etienne, J. Creating Augmented Reality with AR.js and A-Frame. 2019. Available online: https://aframe.io/blog/arjs (accessed on 23 January 2020).

25. Nguyen, V.T.; Hite, R.; Dang, T. Learners’ Technological Acceptance of VR Content Development: A Sequential 3-Part Use Case Study of Diverse Post-Secondary Students. Int. J. Semant. Comput. 2019, 13, 343–366.

26. Nguyen, V.T.; Zhang, Y.; Jung, K.; Xing, W.; Dang, T. VRASP: A Virtual Reality Environment for Learning Answer Set Programming. In Proceedings of the International Symposium on Practical Aspects of Declarative Languages, New Orleans, LA, USA, 20–21 January 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 82–91.

27. Jung, K.; Nguyen, V.T.; Diana, P.; Seung-Chul, Y. Meet the Virtual Jeju Dol Harubang—The Mixed VR/AR Application for Cultural Immersion in Korea’s Main Heritage. Int. J. Geo-Inf. 2020, 9, 367.

28. Munzner, T. Visualization Analysis and Design; CRC Press: Boca Raton, FL, USA, 2014.

29. Khronos. GL Transmission Format. Available online: https://www.khronos.org/gltf/ (accessed on 5 April 2020).

30. Nguyen, V.T.; Jung, K.; Yoo, S.; Kim, S.; Park, S.; Currie, M. Civil War Battlefield Experience: Historical Event Simulation using Augmented Reality Technology. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), San Diego, CA, USA, 9–11 December 2019; pp. 294–2943.

31. Davis, F.D. A Technology Acceptance Model for Empirically Testing New End-User Information Systems: Theory and Results. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1985.

32. Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two theoretical models. Manag. Sci. 1989, 35, 982–1003.

33. Dishaw, M.T.; Strong, D.M. Extending the technology acceptance model with task–technology fit constructs. Inf. Manag. 1999, 36, 9–21.

34. Li, Y.M.; Yeh, Y.S. Increasing trust in mobile commerce through design aesthetics. Comput. Hum. Behav. 2010, 26, 673–684.

35. Verhagen, T.; Feldberg, F.; van den Hooff, B.; Meents, S.; Merikivi, J. Understanding users’ motivations to engage in virtual worlds: A multipurpose model and empirical testing. Comput. Hum. Behav. 2012, 28, 484–495.

36. Anonymous. Battle of Palmito Ranch Augmented Reality Demo Version 3. 2019. Available online: https://youtu.be/PH9rLrZxQhk (accessed on 1 June 2020).

37. Becker, D. Acceptance of mobile mental health treatment applications. Procedia Comput. Sci. 2016, 98, 220–227.

38. Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

39. Hwang, H.; Takane, Y. Generalized Structured Component Analysis: A Component-Based Approach to Structural Equation Modeling; Chapman and Hall/CRC: Boca Raton, FL, USA, 2014.

40. Hwang, H.; Takane, Y.; Jung, K. Generalized structured component analysis with uniqueness terms for accommodating measurement error. Front. Psychol. 2017, 8, 2137.

41. Jung, K.; Panko, P.; Lee, J.; Hwang, H. A comparative study on the performance of GSCA and CSA in parameter recovery for structural equation models with ordinal observed variables. Front. Psychol. 2018, 9, 2461.

42. Jung, K.; Lee, J.; Gupta, V.; Cho, G. Comparison of Bootstrap Confidence Interval Methods for GSCA Using a Monte Carlo Simulation. Front. Psychol. 2019, 10, 2215.

43. Hwang, H.; Jung, K.; Kim, S. WEB GESCA. 2019. Available online: http://sem-gesca.com/webgesca (accessed on 24 June 2020).

44. Shelstad, W.J.; Chaparro, B.S.; Keebler, J.R. Assessing the User Experience of Video Games: Relationships Between Three Scales. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Washington, DC, USA, 28 October–2 November 2019; SAGE Publications: Los Angeles, CA, USA, 2019; Volume 63, pp. 1488–1492.

45. Beshai, S. Examining the Efficacy of an Online Program to Cultivate Mindfulness and Self-Compassion Skills (Mind-OP): Randomized Controlled Trial on Amazon’s Mechanical Turk. PsyArXiv 2020.

46. Tsai, J.; Huang, M.; Wilkinson, S.T.; Edelen, C. Effects of video psychoeducation on perceptions and knowledge about electroconvulsive therapy. Psychiatry Res. 2020, 286, 112844.

47. Paine, A.M.; Allen, L.A.; Thompson, J.S.; McIlvennan, C.K.; Jenkins, A.; Hammes, A.; Kroehl, M.; Matlock, D.D. Anchoring in destination-therapy left ventricular assist device decision making: A Mechanical Turk survey. J. Card. Fail. 2016, 22, 908–912.

48. Rauschnabel, P.A.; He, J.; Ro, Y.K. Antecedents to the adoption of augmented reality smart glasses: A closer look at privacy risks. J. Bus. Res. 2018, 92, 374–384.

49. Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Q. 2012, 36, 157–178.

50. Williams, M.D.; Rana, N.P.; Dwivedi, Y.K. The unified theory of acceptance and use of technology (UTAUT): A literature review. J. Enterp. Inf. Manag. 2015, 28, 443–488.

51. Nguyen, V.T.; Dang, T. Setting up Virtual Reality and Augmented Reality Learning Environment in Unity. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Nantes, France, 9–13 October 2017; pp. 315–320.

| Construct | Source |
|---|---|
| Perceived task–technology fit | [37] |
| (TTF1) The application is adequate for AR-based visual programming education. | |
| (TTF2) The application is compatible with the task of controlling virtual objects. | |
| (TTF3) The application fits the task (i.e., AR-based visual programming education) well. | |
| (TTF4) The application is sufficient for an AR-based visual programming learning toolkit. | |
| Perceived visual design | [35] |
| (VD1) The visual design of BlocklyAR is appealing. | |
| (VD2) The size of 3D virtual objects is adequate. | |
| (VD3) The layout structure is appropriate. | |
| Perceived usefulness | [38] |
| (PU1) Using BlocklyAR would improve my knowledge in AR-based visual programming skills. | |
| (PU2) Using BlocklyAR, I would accomplish tasks (e.g., AR-based visual programming) more quickly. | |
| (PU3) Using BlocklyAR would increase my interest in AR-based visual programming. | |
| (PU4) Using BlocklyAR would enhance my effectiveness on the task (i.e., AR-based visual programming). | |
| (PU5) Using BlocklyAR would make it easier to do my task (i.e., AR-based visual programming). | |
| Perceived ease of use | [38] |
| (PEU1) Learning to use the AR-based visual programming toolkit would be easy for me. | |
| (PEU2) I would find it easy to get the AR-based visual programming toolkit to do what I want it to do. | |
| (PEU3) My interaction with the AR-based visual programming toolkit would be clear and understandable. | |
| (PEU4) I would find the AR-based visual programming toolkit to be flexible to interact with. | |
| (PEU5) It would be easy for me to become skillful at using the AR-based visual programming toolkit. | |
| (PEU6) I would find the AR-based visual programming toolkit easy to use. | |
| Intention to use | [38] |
| (BI1) I intend to use the AR-based visual programming toolkit in the near future. | |
| (BI2) I intend to check the availability of the AR-based visual programming toolkit in the near future. | |

| Variable | Category | Number | Percentage (%) |
|---|---|---|---|
| Gender | Male | 53 | 80.30 |
| | Female | 12 | 18.18 |
| | Prefer not to say | 1 | 1.52 |
| English as a First Language | Yes | 39 | 59.09 |
| | No | 27 | 40.91 |
| Ethnic heritage | Asian | 39 | 59.09 |
| | Hispanic/Latino | 3 | 4.55 |
| | Caucasian/White | 18 | 27.27 |
| | American Indian/Alaska Native | 5 | 7.58 |
| | African American/Black | 1 | 1.52 |
| Total | | 66 | 100 |

| Construct | Item | Mean | SD |
|---|---|---|---|
| Perceived Task Technology Fit | TTF1 | 4.409 | 0.607 |
| | TTF2 | 4.364 | 0.694 |
| | TTF3 | 4.439 | 0.659 |
| | TTF4 | 4.091 | 0.799 |
| Perceived Visual Design | VD1 | 4.030 | 0.928 |
| | VD2 | 3.985 | 0.813 |
| | VD3 | 4.212 | 0.755 |
| Perceived Usefulness | PU1 | 4.333 | 0.771 |
| | PU2 | 4.197 | 0.863 |
| | PU3 | 4.258 | 0.933 |
| | PU4 | 4.303 | 0.744 |
| | PU5 | 4.333 | 0.771 |
| Perceived Ease of Use | PEU1 | 3.970 | 0.859 |
| | PEU2 | 3.864 | 0.892 |
| | PEU3 | 4.136 | 0.802 |
| | PEU4 | 4.030 | 0.859 |
| | PEU5 | 4.015 | 0.868 |
| | PEU6 | 4.061 | 0.959 |
| Intention to Use | BI1 | 3.636 | 1.145 |
| | BI2 | 3.864 | 1.162 |

| Construct | Number of items | Dillon-Goldstein’s rho | Average Variance Extracted |
|---|---|---|---|
| Perceived task–technology fit (TTF) | 4 | 0.809 | 0.519 |
| Perceived visual design (VD) | 3 | 0.780 | 0.543 |
| Perceived usefulness (PU) | 5 | 0.853 | 0.540 |
| Perceived ease of use (PEU) | 6 | 0.901 | 0.605 |
| Intention to use (BI) | 2 | 0.929 | 0.868 |

| Item | Estimate | SE | 95% CI lower bound | 95% CI upper bound |
|---|---|---|---|---|
| TTF1 | 0.809 | 0.046 | 0.712 | 0.892 |
| TTF2 | 0.544 | 0.099 | 0.270 | 0.680 |
| TTF3 | 0.787 | 0.086 | 0.586 | 0.892 |
| TTF4 | 0.711 | 0.103 | 0.428 | 0.844 |
| VD1 | 0.677 | 0.129 | 0.293 | 0.852 |
| VD2 | 0.787 | 0.097 | 0.572 | 0.887 |
| VD3 | 0.743 | 0.142 | 0.326 | 0.857 |
| PU1 | 0.762 | 0.068 | 0.612 | 0.871 |
| PU2 | 0.802 | 0.053 | 0.698 | 0.904 |
| PU3 | 0.732 | 0.062 | 0.574 | 0.829 |
| PU4 | 0.724 | 0.056 | 0.592 | 0.816 |
| PU5 | 0.644 | 0.087 | 0.465 | 0.810 |
| PEU1 | 0.765 | 0.054 | 0.648 | 0.862 |
| PEU2 | 0.770 | 0.067 | 0.598 | 0.872 |
| PEU3 | 0.762 | 0.081 | 0.570 | 0.866 |
| PEU4 | 0.726 | 0.050 | 0.631 | 0.817 |
| PEU5 | 0.809 | 0.045 | 0.709 | 0.884 |
| PEU6 | 0.829 | 0.037 | 0.755 | 0.888 |
| BI1 | 0.930 | 0.025 | 0.877 | 0.971 |
| BI2 | 0.933 | 0.026 | 0.870 | 0.971 |
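The reliability figures reported per construct (Dillon-Goldstein’s rho and average variance extracted) can be related to the loading estimates in the table above. The sketch below uses the standard textbook formulas and assumes the estimates are standardized loadings; it is a plausibility check written for this illustration, not the authors' GSCA procedure, and the function names are hypothetical.

```javascript
// Standard formulas, assuming standardized loadings; not the authors' analysis code.

// Average variance extracted: mean of the squared loadings.
function averageVarianceExtracted(loadings) {
  const sumOfSquares = loadings.reduce((sum, loading) => sum + loading * loading, 0);
  return sumOfSquares / loadings.length;
}

// Dillon-Goldstein's rho: (sum of loadings)^2 divided by itself plus the summed error variances.
function dillonGoldsteinRho(loadings) {
  const sum = loadings.reduce((total, loading) => total + loading, 0);
  const errorVariance = loadings.reduce((total, loading) => total + (1 - loading * loading), 0);
  return (sum * sum) / (sum * sum + errorVariance);
}

// Loading estimates copied from the table above.
const ttf = [0.809, 0.544, 0.787, 0.711];
const bi = [0.930, 0.933];

console.log(averageVarianceExtracted(ttf).toFixed(3)); // 0.519
console.log(dillonGoldsteinRho(ttf).toFixed(3));       // 0.809
console.log(averageVarianceExtracted(bi).toFixed(3));  // 0.868
console.log(dillonGoldsteinRho(bi).toFixed(3));        // 0.929
```

Under these assumptions, the computed values for TTF and BI agree with the reported rho and AVE to three decimal places.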

| Path | Estimate | SE | 95% CI lower bound | 95% CI upper bound |
|---|---|---|---|---|
| VD → TTF | 0.439 * | 0.124 | 0.175 | 0.686 |
| VD → PU | 0.127 | 0.208 | −0.278 | 0.464 |
| TTF → PEU | 0.434 * | 0.118 | 0.184 | 0.678 |
| PEU → PU | 0.212 | 0.161 | −0.066 | 0.554 |
| PU → BI | 0.319 * | 0.136 | 0.026 | 0.574 |
| PEU → BI | 0.417 * | 0.134 | 0.132 | 0.662 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Nguyen, V.T.; Jung, K.; Dang, T. BlocklyAR: A Visual Programming Interface for Creating Augmented Reality Experiences. Electronics 2020, 9, 1205. https://doi.org/10.3390/electronics9081205
