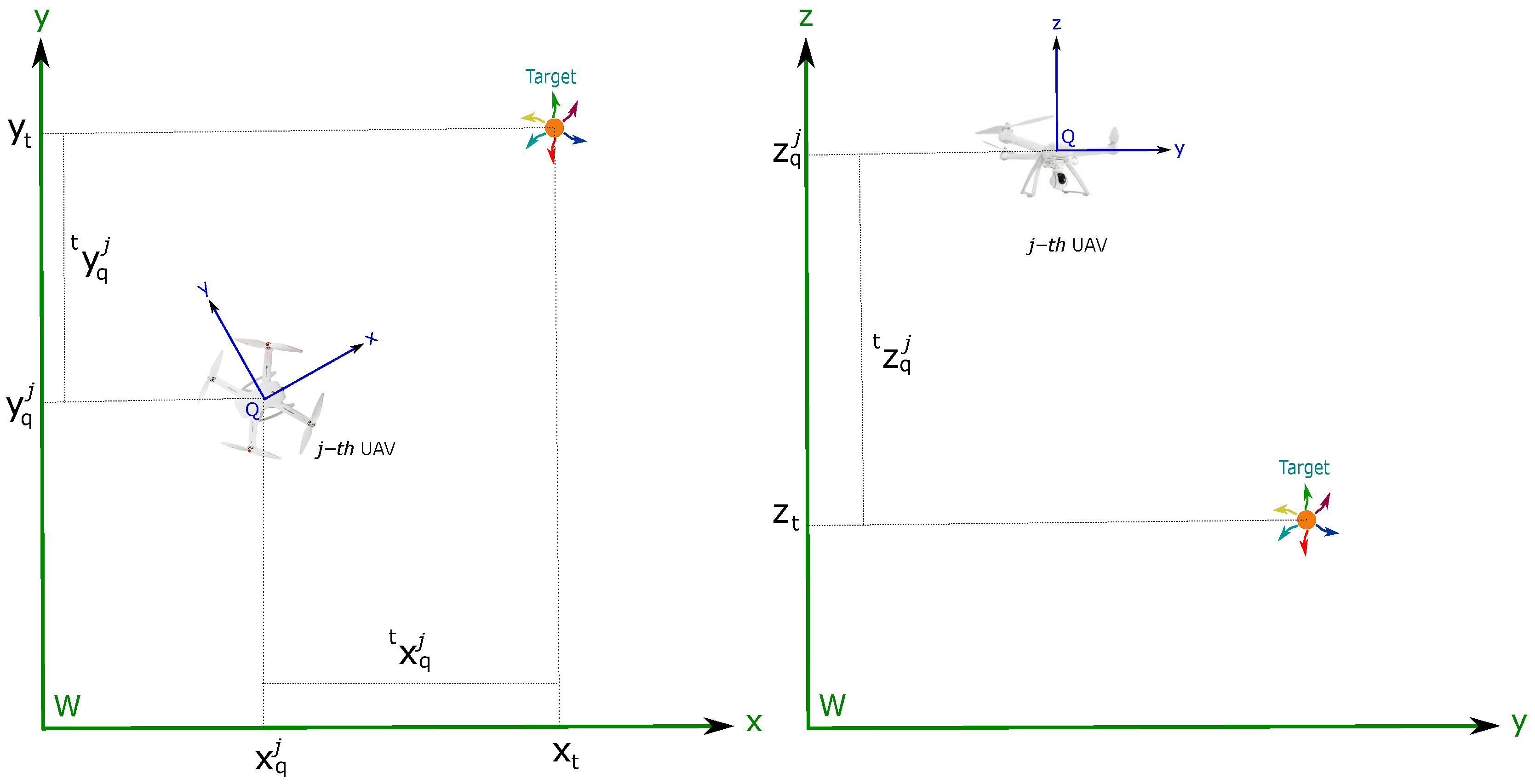

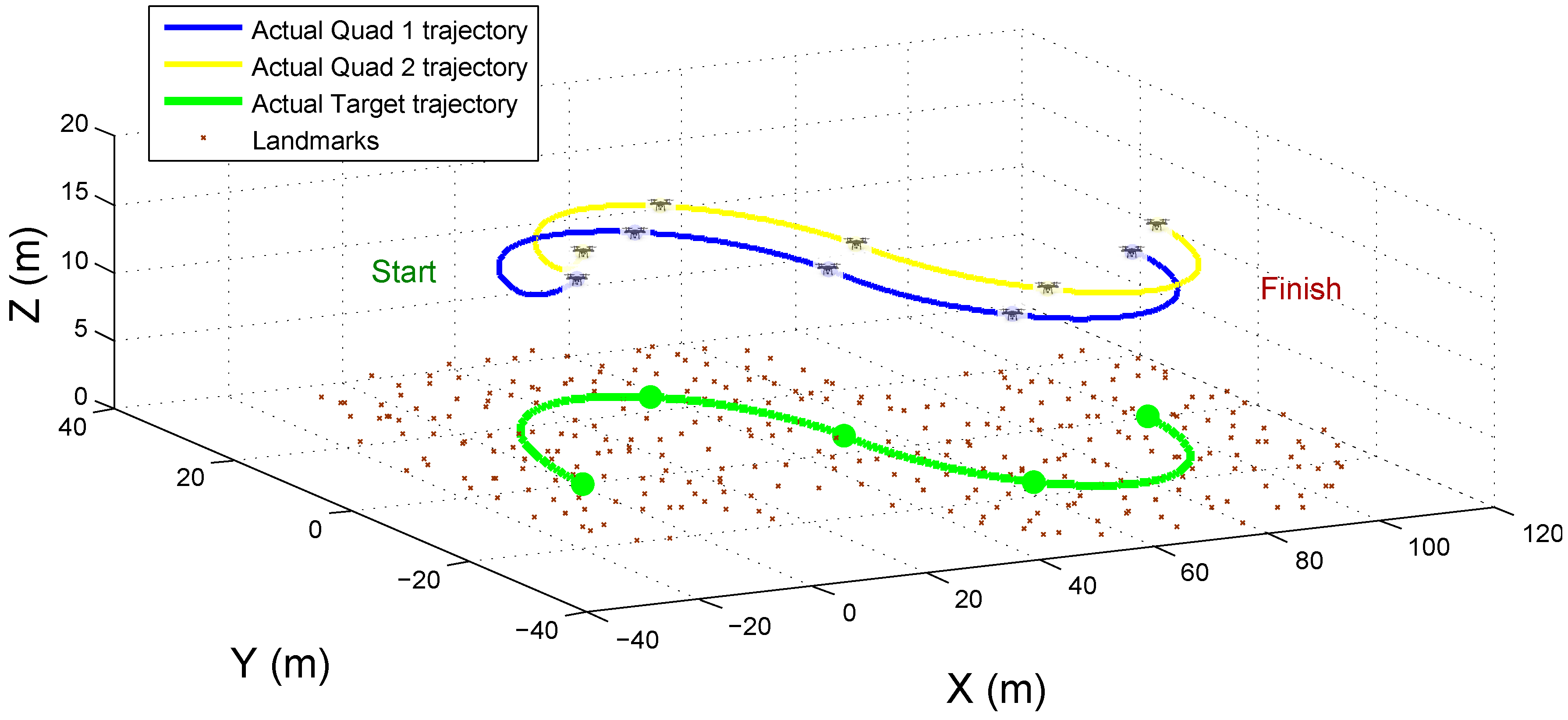

6.1. Simulation Setup

In this section, the proposed cooperative visual-SLAM system for UAV-based target tracking is validated through computer simulations, using a Matlab implementation of the proposed scheme. A simulation environment was developed for this purpose. The environment is composed of landmarks randomly distributed over the ground (see Figure 6). To run the tests, two quadcopters (Quad 1 and Quad 2), equipped with the set of sensors required by the proposed method, are simulated. It is assumed that the environment contains enough landmarks for at least a subset of them to be observed in common by the cameras of both UAVs.

The sensor measurements are emulated at a frequency of 10 Hz. To emulate the system uncertainty, the following Gaussian noise is added to the measurements: Gaussian noise with pixels is added to the measurements given by the cameras, and Gaussian noise with cm is added to the measurements of the altitude differential between the two quadcopters obtained by the altimeters. It is important to note that the noise used to emulate the monocular measurements is larger than the typical noise of real monocular measurements. In this way, the errors in camera orientation caused by the gimbal are accounted for, in addition to the imperfection of the sensor.
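The noise model described above can be sketched as follows. This is a minimal illustration in Python rather than the authors' Matlab code. The function names are hypothetical, and the standard deviations `sigma_px` and `sigma_alt_cm` are left as parameters; the numbers passed in the usage lines are placeholders, not the values used in the simulations.

```python
import random

SAMPLE_RATE_HZ = 10  # measurement frequency stated in the text


def sample_times(duration_s, rate_hz=SAMPLE_RATE_HZ):
    """Time stamps (in seconds) at which the sensors are sampled."""
    return [k / rate_hz for k in range(int(duration_s * rate_hz) + 1)]


def emulate_measurements(true_pixels, true_alt_diff_cm, sigma_px, sigma_alt_cm, rng):
    """Add zero-mean Gaussian noise to ideal camera and altimeter readings.

    true_pixels      -- list of ideal (u, v) image projections, in pixels
    true_alt_diff_cm -- ideal altitude differential between the two quadcopters
    sigma_px         -- assumed standard deviation of the camera noise (pixels)
    sigma_alt_cm     -- assumed standard deviation of the altimeter noise (cm)
    rng              -- a random.Random instance, so that runs are repeatable
    """
    noisy_pixels = [
        (u + rng.gauss(0.0, sigma_px), v + rng.gauss(0.0, sigma_px))
        for (u, v) in true_pixels
    ]
    noisy_alt_diff_cm = true_alt_diff_cm + rng.gauss(0.0, sigma_alt_cm)
    return noisy_pixels, noisy_alt_diff_cm


# Usage with placeholder noise levels (hypothetical values).
rng = random.Random(0)
noisy_pixels, noisy_alt = emulate_measurements(
    [(320.0, 240.0)], 150.0, sigma_px=1.0, sigma_alt_cm=1.0, rng=rng
)
```

Passing the random generator explicitly keeps the emulated runs repeatable, which is convenient when several estimation methods are compared on the same noisy data.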

In simulations, the target was moved along a predefined trajectory (see Figure 6).

The parameter values used for the quadcopters and the cameras are shown in Table 2. The quadcopters' parameters are similar to those presented in [66]. The camera parameters are similar to those presented in [17].

Figure 6 shows the trajectories of the three elements composing the flight formation. To highlight them, the position of the elements of the formation is indicated at different instants of time.

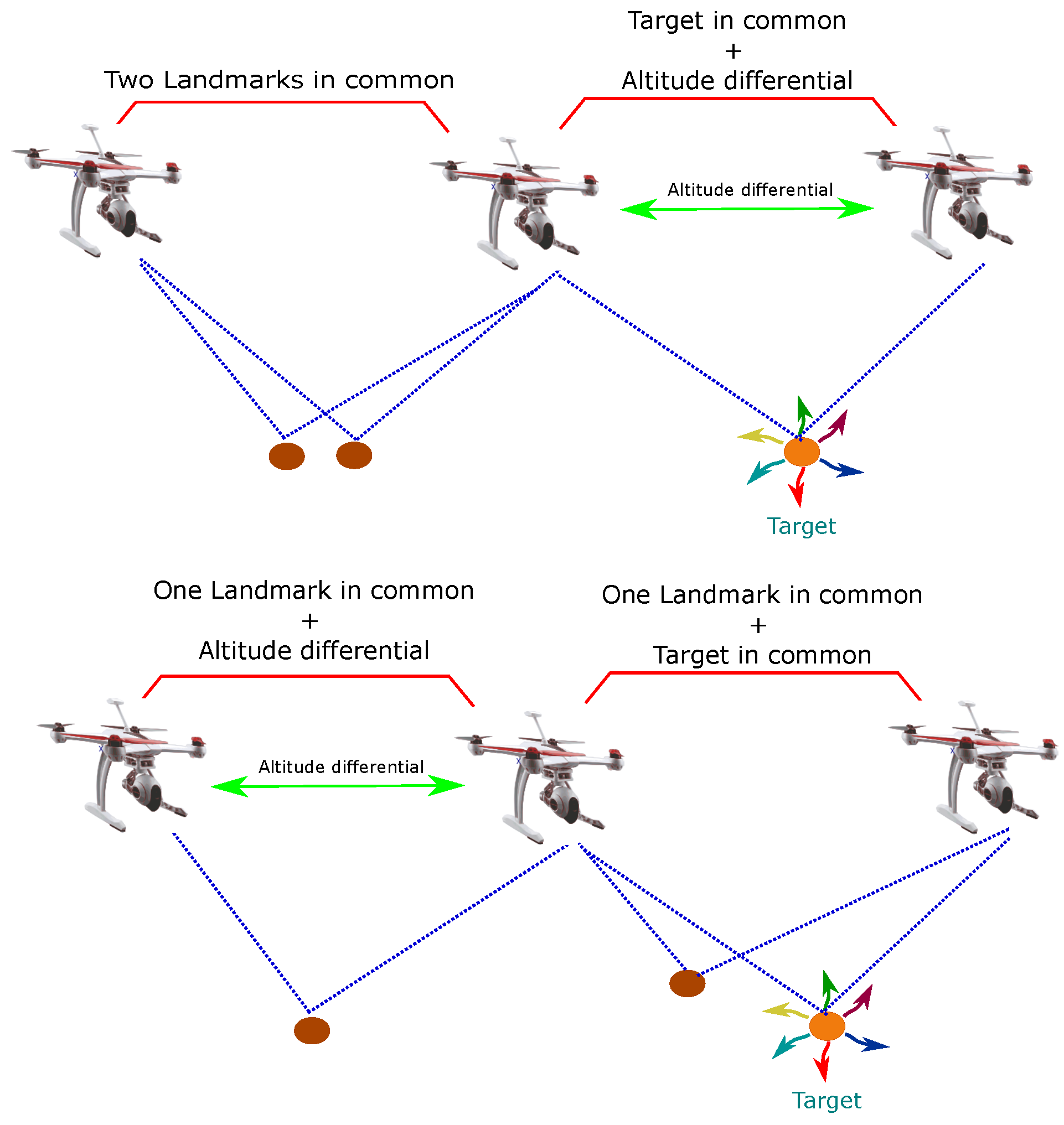

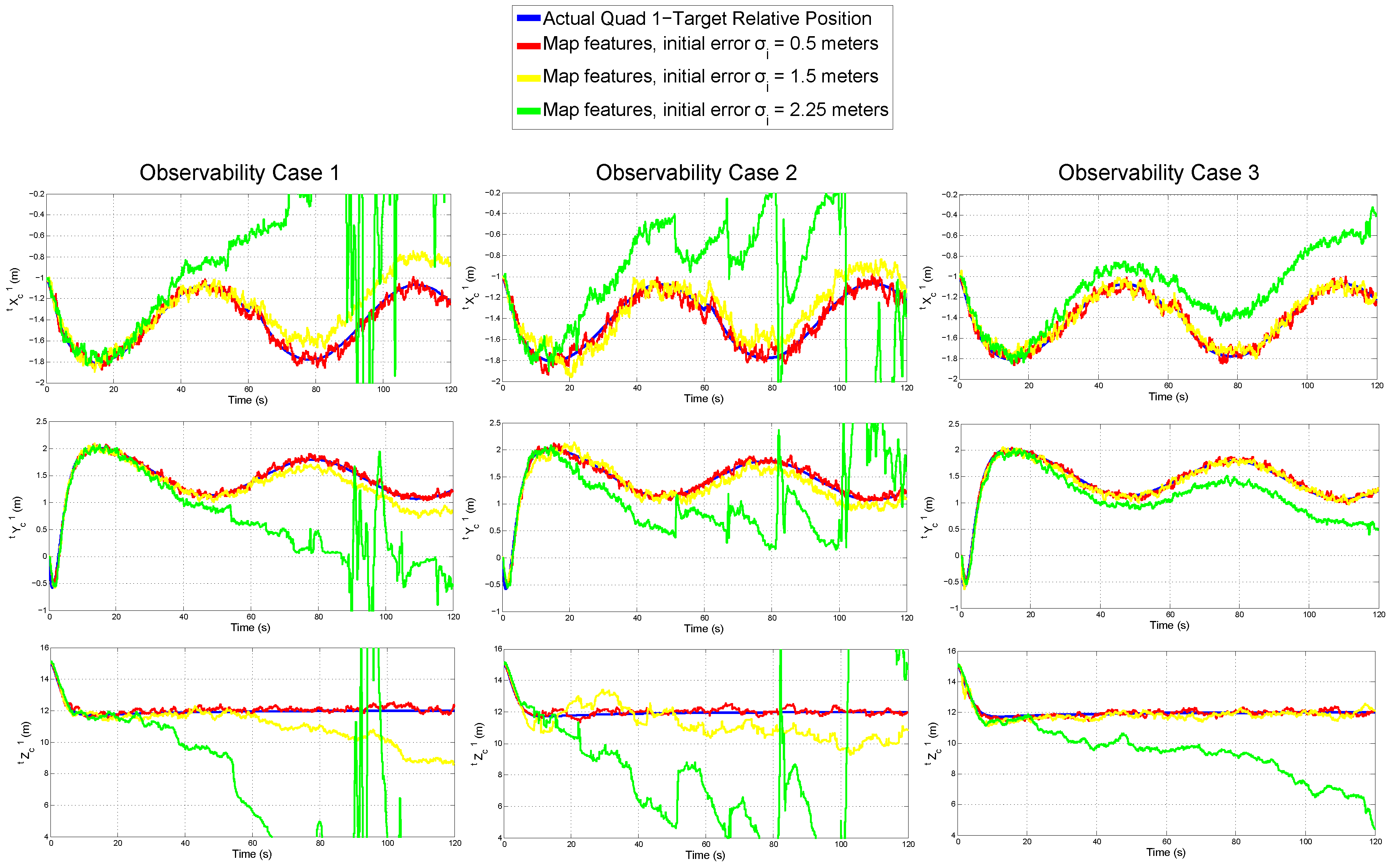

6.2. Convergence and Stability Tests

In a series of tests, the convergence and stability of the proposed cooperative visual-SLAM system, operating in open loop, were evaluated under the three different observability conditions described in Section 3.2. In this case, it is assumed that a control system is able to keep the aerial robots flying in formation with respect to the target.

Two different kinds of tests were performed:

- (a) The first test evaluates the robustness of the cooperative visual-SLAM system with respect to errors in the initialization of map features. In this case, the initial conditions of , , , and are assumed to be known exactly, but each i-th map feature is forced to be initialized into the system state with a given position error. Three different conditions of initial error are considered: meters.

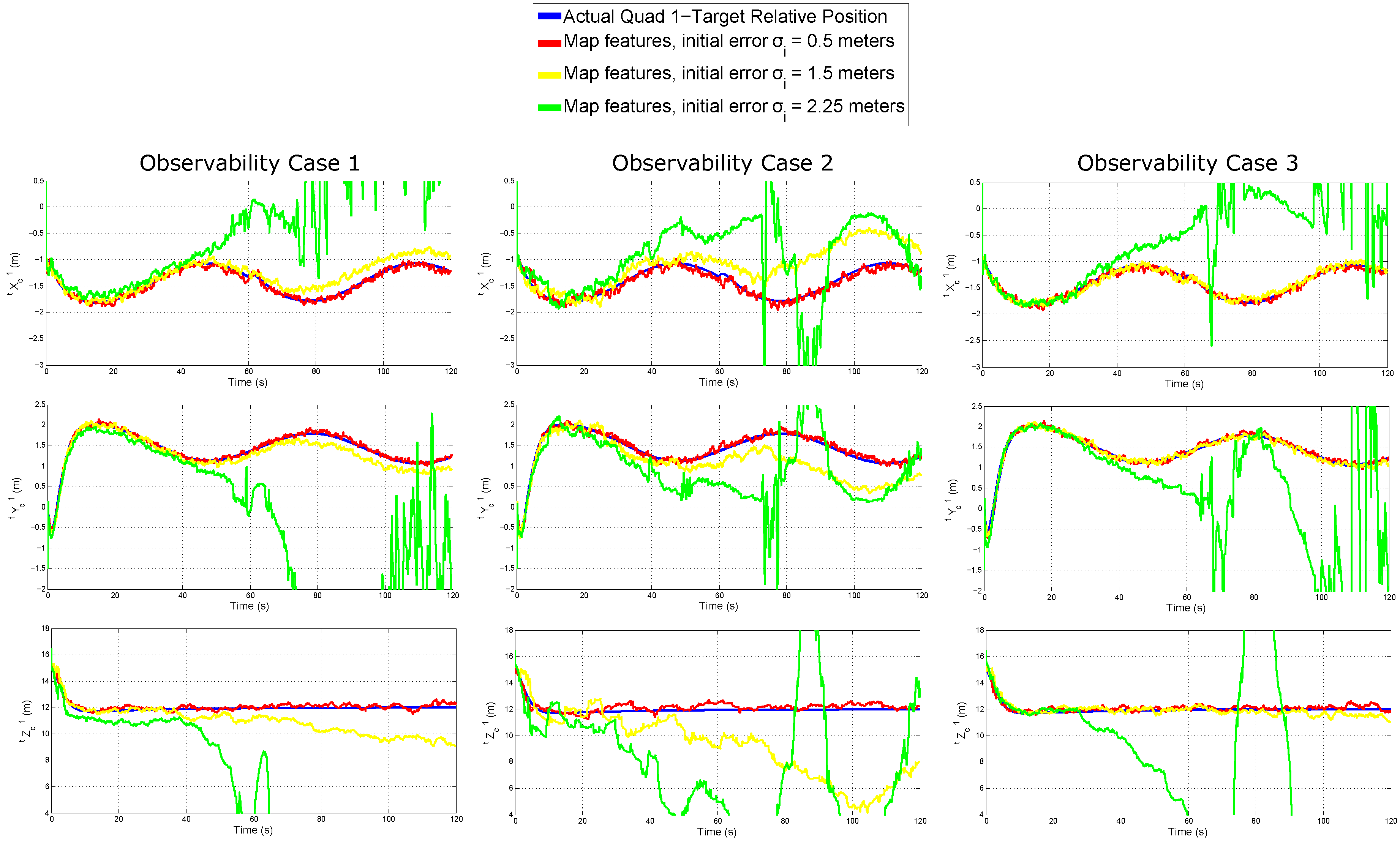

- (b) For the second test, in addition to the initial errors of the map features, the initial conditions of , , , , and are not known exactly and have a considerable error with respect to the real values. In this case, the robustness of the proposed estimation system is evaluated as a function of the errors in the initial conditions of all the state variables.

Figure 7 and Figure 8 show, respectively, the results for tests (a) and (b). The estimated relative position of Quad 1 with respect to the target is plotted for each reference axis (rows of plots), and each column of plots shows the results obtained for one observability case. For the sake of clarity, only the estimated values for Quad 1 are presented; the results for Quad 2 are quite similar.

In both figures, it can be observed that both the observability property and the initial conditions play a preponderant role in the convergence and stability of the EKF-SLAM. In several applications, at least the initial position of the UAVs is assumed to be known. However, in SLAM, the position of the map features must be estimated online. The above outcome confirms the importance of using good feature initialization techniques in visual-SLAM. As expected, the performance of the EKF-SLAM is considerably better when the system is observable. Note that, for observability case 3, and despite a noticeable error drift, the filter still exhibits a certain degree of stability for the worst case of feature initialization when the initial conditions of the UAVs are known.

6.3. Comparative Study

Using the same simulation setup, a comparative study was carried out to evaluate the performance of the proposed system for estimating the relative position of the UAVs with respect to the moving target.

Table 3 summarizes the characteristics of five UAV-based target-tracking methods used in this comparative study.

Some remarks about the methods used in the comparison follow. Method (1) represents a purely standard monocular-SLAM approach. Feature initialization is based on [67]. Because the metric scale cannot be retrieved using only monocular vision, it is assumed that the positions of the landmarks seen in the first frame are perfectly known. Method (2) is similar to the proposed method, but the system is parametrized in a robot-centric way instead of a target-centric way. Method (3) represents a standard visual-stereo tracking approach. In this case, the moving target position is directly obtained by a stereo system with a baseline of 15 cm. Method (4) is based on the approach presented in [68]. In this case, the UAVs are equipped with GPS, and the moving target position is estimated through the pseudo-stereo vision system composed of the monocular cameras of each UAV. Gaussian noise with cm is added to GPS measurements. Method (5) estimates the target position using monocular and range measurements to the target. In this case, some cooperative-target scheme is assumed for obtaining the range measurements. Technology for obtaining this kind of range measurement is presented in [69]. Gaussian noise with cm is added to the range measurements. Note that methods (3), (4), and (5) are not SLAM methods. Methods (3) and (5) provide only the relative estimate of the target position with respect to the UAV. Method (4), due to the GPS, also provides global position estimates of the target and the UAVs.

For the SLAM methods, and to carry out a more realistic evaluation, the data association problem was also taken into account. To emulate the failures of the visual data association process,

of the total number of visual correspondences are forced to be outliers in a random manner.

Table 4 shows the number of outliers introduced into the simulation due to the data association problem. Outliers for the visual data association in each camera as well as outliers for the cooperative visual data association are considered.
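The outlier emulation described above can be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the authors' implementation: the function name `inject_outliers` is hypothetical, the fraction of corrupted correspondences is left as a parameter, and replacing an outlier with a uniformly random image point is only one possible way to emulate a wrong match.

```python
import random


def inject_outliers(pixels, outlier_fraction, width, height, rng):
    """Randomly turn a fraction of visual correspondences into outliers.

    pixels           -- list of matched (u, v) measurements, in pixels
    outlier_fraction -- fraction of matches to corrupt, in [0, 1]
    width, height    -- image size in pixels (hypothetical camera resolution)
    rng              -- a random.Random instance, so that runs are repeatable

    Returns the corrupted list and the set of indices that were corrupted.
    """
    n_outliers = int(round(outlier_fraction * len(pixels)))
    outlier_idx = set(rng.sample(range(len(pixels)), n_outliers))
    corrupted = [
        (rng.uniform(0, width), rng.uniform(0, height)) if i in outlier_idx else p
        for i, p in enumerate(pixels)
    ]
    return corrupted, outlier_idx


# Usage with placeholder values (hypothetical fraction and resolution).
rng = random.Random(0)
matches = [(float(i), float(2 * i)) for i in range(50)]
noisy_matches, bad = inject_outliers(matches, 0.1, 640, 480, rng)
```

Returning the corrupted indices makes it straightforward to count the injected outliers per camera, in the spirit of the totals reported in Table 4.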

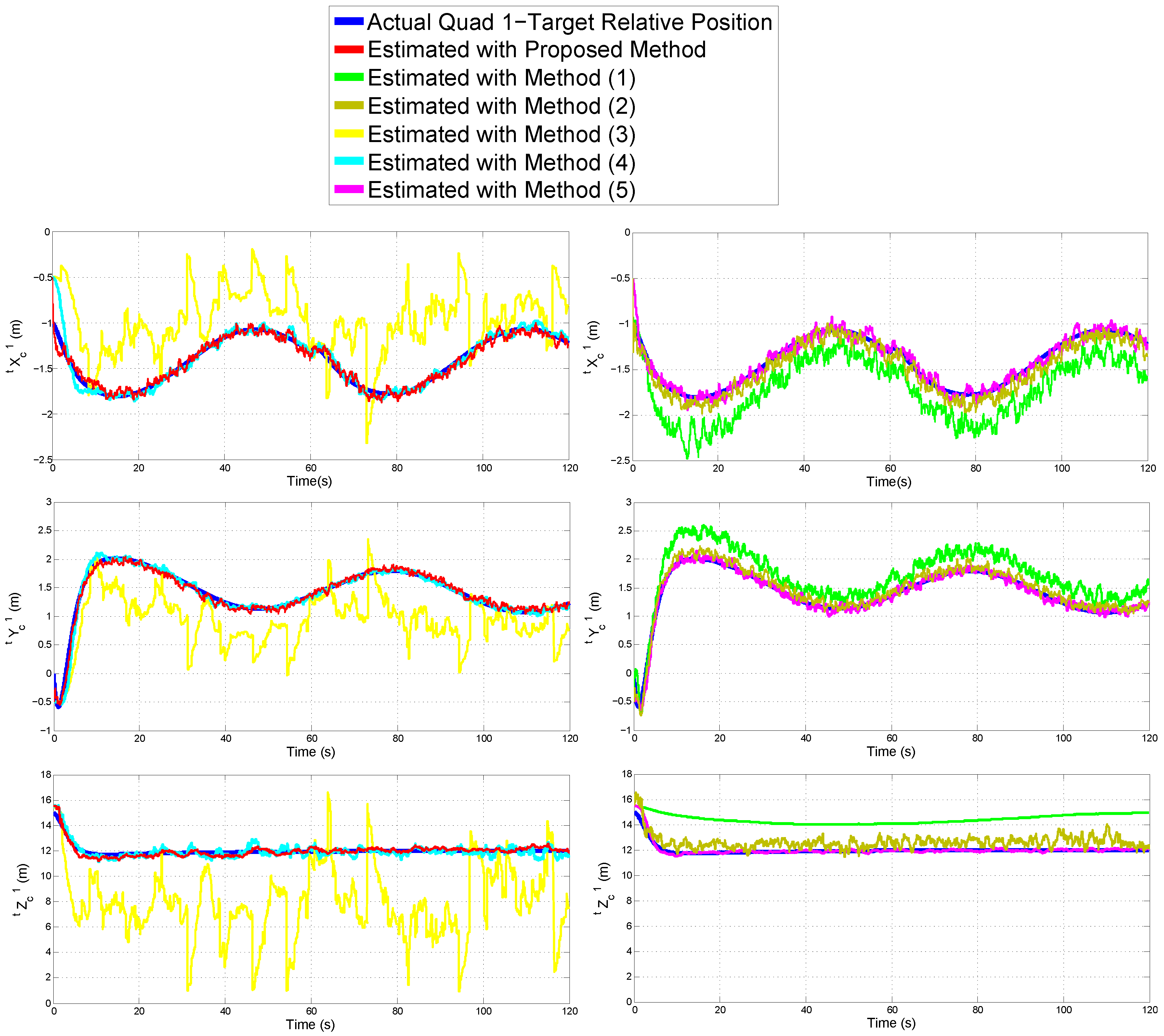

Figure 9 shows the results obtained from each method for estimating the relative position of Quad 1 with respect to the target. For the sake of clarity, the results are shown in two columns of plots. Each row of plots represents a reference axis.

Table 5 summarizes the Mean Squared Error (MSE) for the estimated relative position of Quad 1 with respect to the target in the three axes.

Regarding the performance of the SLAM methods for estimating the feature map, Table 6 shows the total (sum of all) Mean Squared Errors for the estimated position of the landmarks, while Table 7 shows the total Mean Squared Errors for the initial estimated position of the landmarks.
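The error metrics reported in these tables can be sketched as follows. This is a hedged illustration: the function names are hypothetical, and the "total" landmark error is read here as the sum, over all landmarks, of the time-averaged squared distance between each landmark estimate and its true position. That is one possible reading of the text, not a confirmed definition.

```python
def mse_per_axis(estimates, truths):
    """Mean squared error on each axis (x, y, z) over a sequence of samples.

    estimates, truths -- equal-length lists of (x, y, z) tuples
    """
    n = len(estimates)
    return tuple(
        sum((e[axis] - t[axis]) ** 2 for e, t in zip(estimates, truths)) / n
        for axis in range(3)
    )


def total_landmark_mse(estimated_tracks, true_positions):
    """Sum over landmarks of the mean squared position error of each landmark.

    estimated_tracks -- dict: landmark id -> list of (x, y, z) estimates over time
    true_positions   -- dict: landmark id -> true (x, y, z) position (static)
    """
    total = 0.0
    for lm_id, track in estimated_tracks.items():
        truth = true_positions[lm_id]
        squared = [sum((e[a] - truth[a]) ** 2 for a in range(3)) for e in track]
        total += sum(squared) / len(squared)
    return total


# Usage on toy data (values chosen only for illustration).
per_axis = mse_per_axis([(1, 2, 3), (3, 2, 1)], [(0, 2, 3), (2, 2, 3)])
```

For the toy data above, the squared errors are 1 and 1 on the x axis, 0 and 0 on the y axis, and 0 and 4 on the z axis, so the per-axis means are 1, 0 and 2.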

According to the above results, the relative position of the UAV with respect to the moving target was recovered fairly well with the following methods: the proposed Target-Centric Multi-UAV SLAM approach, (2) Robot-Centric Multi-UAV SLAM, (4) Multi-UAV Pseudo-stereo, and (5) Single-UAV monocular-range.

It is important to note that method (4) relies on GPS and is therefore not suitable for GPS-denied environments. Method (5) relies on range measurements to the target, which are often difficult to obtain or require some cooperative-target scheme that is not feasible in many kinds of applications. Regarding the other approaches, method (1) has no direct three-dimensional information about the target (only monocular measurements), and thus its estimates are poor. In the case of method (3), it is well known that, with a fixed stereo system, the quality of the measurements degrades considerably as the distance to the target increases.
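The degradation of fixed-baseline stereo with distance can be illustrated with the textbook first-order pinhole model, in which depth is Z = f·b/d (focal length f in pixels, baseline b, disparity d), so a disparity error δd produces a depth error of roughly Z²/(f·b)·δd. The sketch below applies that formula; it is not the authors' stereo simulation. The 15 cm baseline comes from the text, whereas the focal length and disparity error are hypothetical placeholder values.

```python
def stereo_depth_error(depth_m, focal_px, baseline_m, disparity_err_px):
    """First-order depth uncertainty of an ideal rectified stereo pair.

    Derived from Z = f * b / d, so |dZ| ~= Z**2 / (f * b) * |dd|.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


BASELINE_M = 0.15  # stereo baseline of method (3), from the text
FOCAL_PX = 500.0   # hypothetical focal length, in pixels
DISP_ERR_PX = 1.0  # hypothetical disparity error, in pixels

# Depth error at increasing distances to the target (metres).
errors = {z: stereo_depth_error(z, FOCAL_PX, BASELINE_M, DISP_ERR_PX)
          for z in (5.0, 10.0, 20.0)}
```

Under this model the error grows with the square of the distance, so doubling the distance to the target quadruples the depth uncertainty for the same baseline.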

Thus far, the above results suggest that both the proposed approach and method (2) (both SLAM systems) perform well in challenging conditions: (i) GPS-denied environments, (ii) a non-cooperative target, and (iii) a long distance to the target. The only difference between these two methods is the parametrization of the system (target-centric vs. robot-centric). To investigate whether one parametrization has an advantage over the other, another test was carried out.

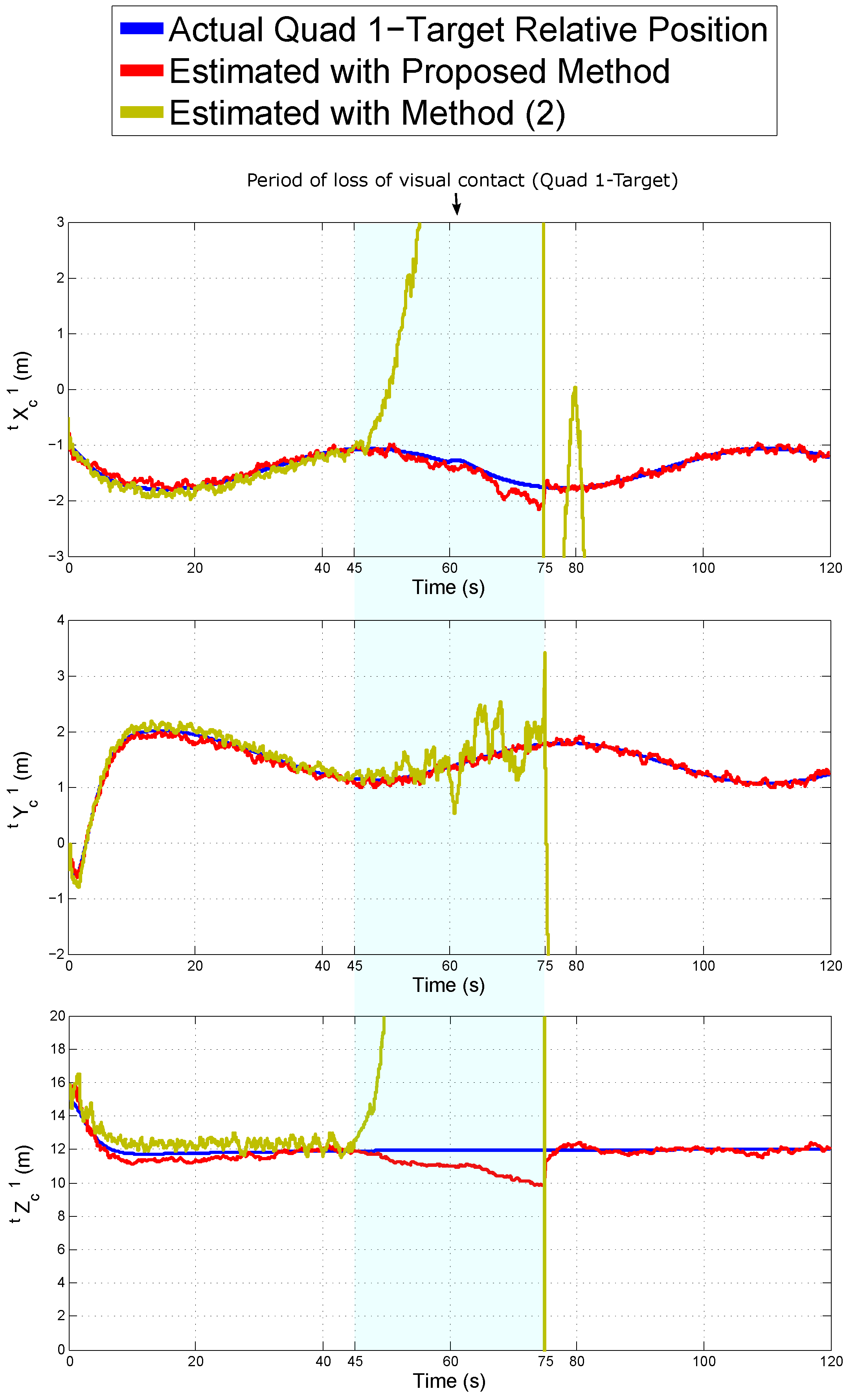

In this case, using the same simulation setup, the robustness of the methods was tested against the loss of visual contact with the moving target by one UAV for a period of time.

Figure 10 shows the estimated relative positions of Quad 1 with respect to the target obtained with both systems. Note that, from second 45 to second 75 of the simulation, Quad 1 takes no monocular measurements of the target. Only the estimated values for Quad 1 are presented, but the results for Quad 2 are very similar.

Given the above results, it can be seen that, unlike method (2), the proposed system is robust to the loss of visual contact with the target by one of the Quads. This is a clear advantage of the proposed target-centric parameterization.
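The visual-contact-loss scenario can be emulated by gating the target measurements of one UAV, as in the following sketch. The function names are hypothetical, and treating the loss period as the half-open interval from 45 s (inclusive) to 75 s (exclusive) is an assumption made here for illustration. During a gated step, a filter would skip the target measurement update for that UAV and rely on prediction and the remaining measurements.

```python
RATE_HZ = 10  # measurement frequency used in the simulations


def target_visible(t, loss_windows):
    """True if the target is visible at time t (seconds).

    loss_windows -- list of (start_s, end_s) periods without visual contact,
                    each treated as the half-open interval [start_s, end_s)
    """
    return not any(start <= t < end for (start, end) in loss_windows)


def visibility_schedule(duration_s, loss_windows, rate_hz=RATE_HZ):
    """List of (time, visible) pairs for every measurement step."""
    schedule = []
    for k in range(int(duration_s * rate_hz)):
        t = k / rate_hz
        schedule.append((t, target_visible(t, loss_windows)))
    return schedule


# Quad 1 loses sight of the target from second 45 to second 75.
quad1_schedule = visibility_schedule(100, [(45.0, 75.0)])
skipped_updates = sum(1 for _, visible in quad1_schedule if not visible)
```

With a 10 Hz measurement rate, this 30 s window corresponds to 300 skipped target updates for Quad 1.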

6.4. Control Simulations’ Results

A set of simulations was also carried out to test the whole proposed system in closed loop, with estimation and control operating together. In this case, to maintain a stable flight formation with respect to the moving target, the estimates obtained from the proposed visual-based estimation system are used as feedback to the control scheme described in Section 5.

In this test, the values of vectors that define the flight formation are:

For Quad 1: .

For Quad 2: .

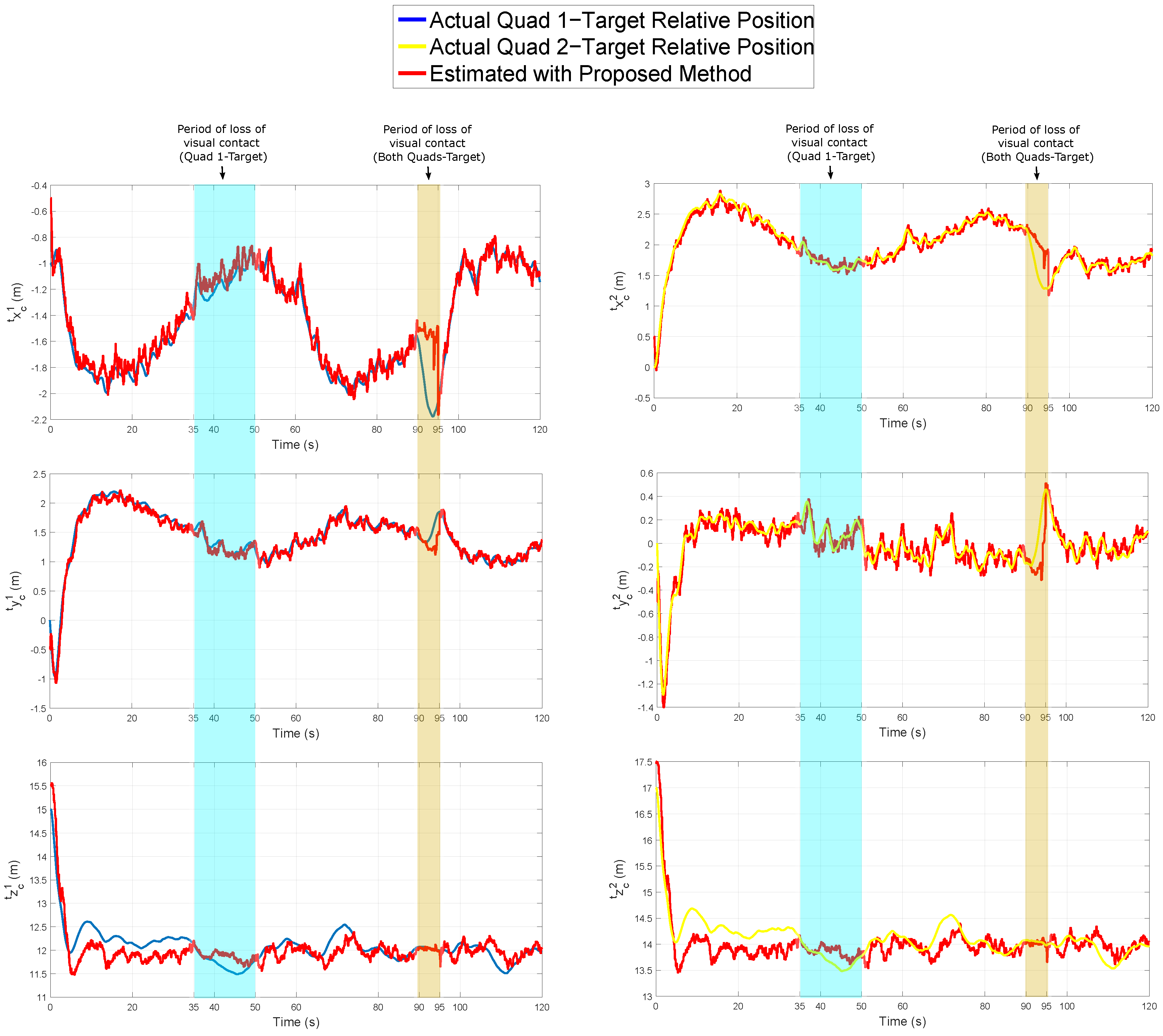

In addition, in this test, a period during which Quad 1 loses visual contact with the target is simulated (seconds 35 to 50), as well as a period during which both Quads lose visual contact with the target (seconds 90 to 95).

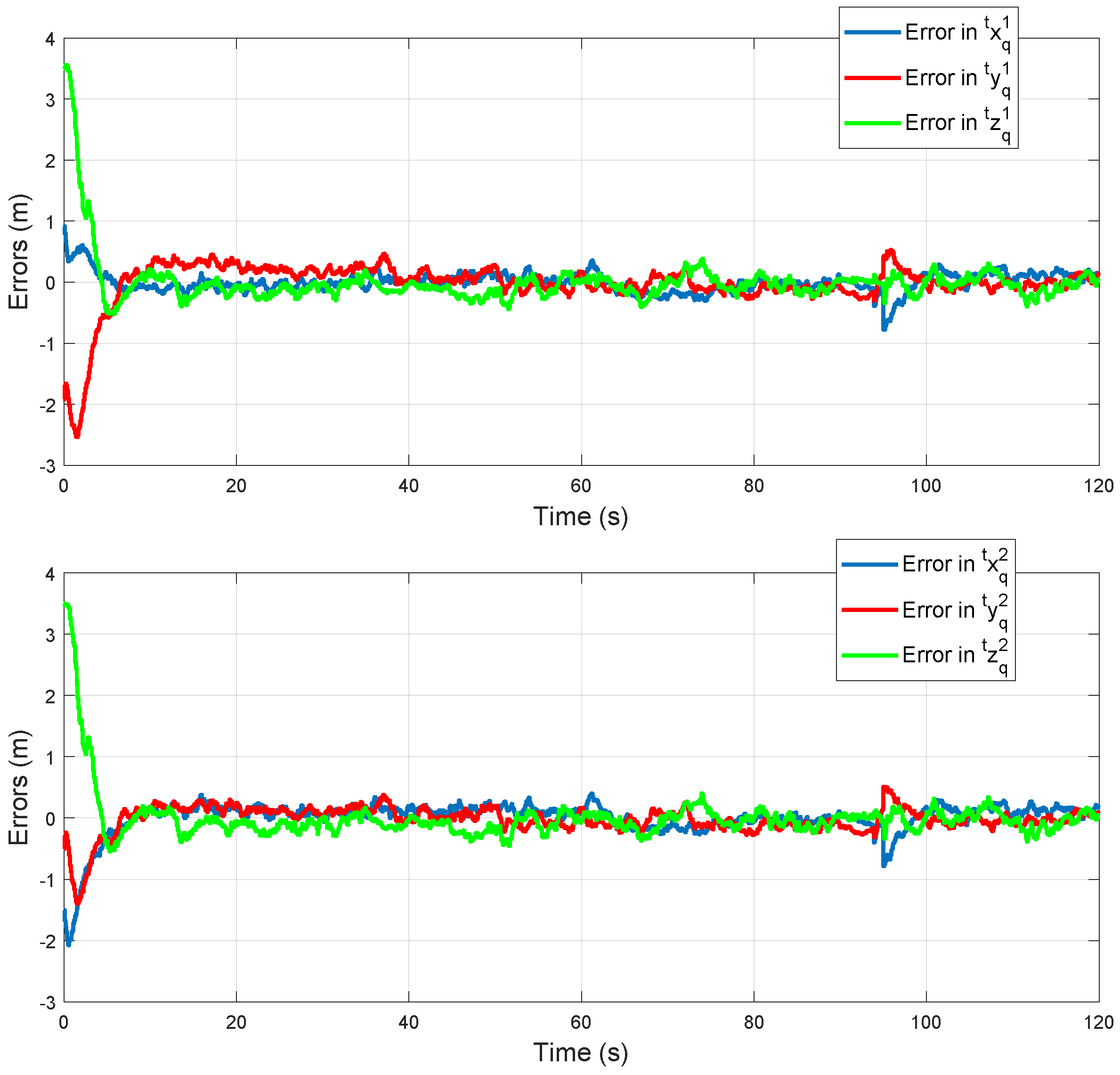

Figure 11 shows the evolution of the error with respect to the desired values. In all cases, note that the errors remain bounded after an initial transient period.

Figure 12 shows the real and estimated relative position of Quad 1 (left column) and Quad 2 (right column) with respect to the target.

Table 8 summarizes the Mean Squared Error (MSE), in each axis, for the estimated relative position of Quad 1 and Quad 2 with respect to the target.

According to the above results, the control system was able to maintain a stable flight formation with respect to the target along the entire trajectory, using the proposed visual-based estimation system as feedback.