Sequence-To-Sequence Neural Networks Inference on Embedded Processors Using Dynamic Beam Search

Abstract

1. Introduction

- We propose a new policy, based on Shannon entropy, for setting the BW at runtime depending on the input, and compare it with the previously proposed solution based on the standard deviation of the top-k scores.

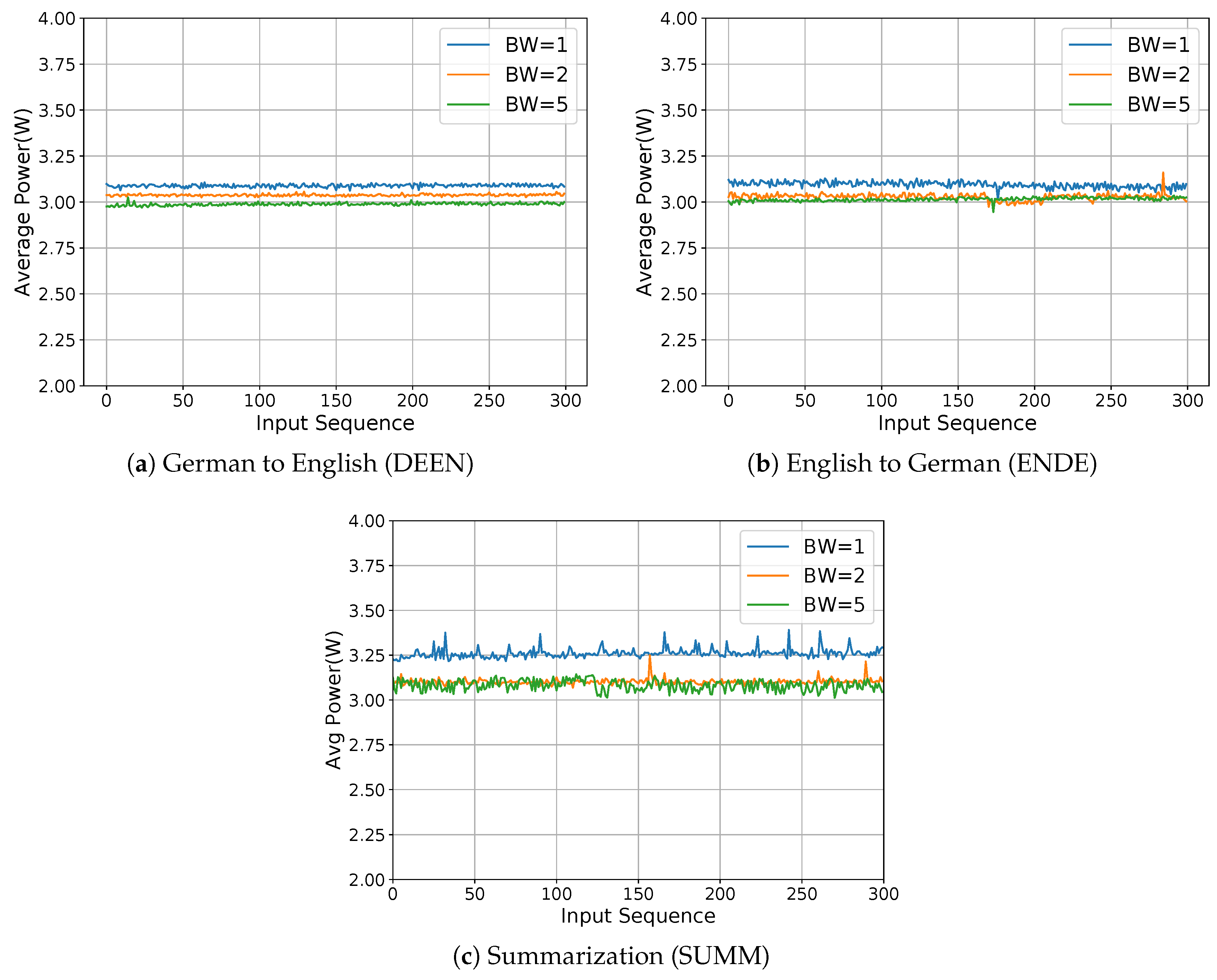

- We test the proposed solution on three state-of-the-art sequence-to-sequence networks, with different internal architectures and targeting different applications (translation and summarization).

- We measure the actual inference time and energy consumption obtained with our approach on a real ARM-based embedded device.
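The two input-dependent metrics named in the first contribution can be sketched as follows. This is a minimal illustration, not the authors' implementation. It assumes the following:

- At each decoding step the decoder emits a 1-D vector of raw logits over the vocabulary.
- Entropy is measured in nats over the full softmax distribution.
- The "scores" are the log-probabilities, and the standard deviation is taken over the k largest of them.

The function names `log_softmax`, `shannon_entropy` and `topk_std` are hypothetical.

```python
import numpy as np


def log_softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable log-softmax of a 1-D logit vector."""
    shifted = logits - np.max(logits)
    return shifted - np.log(np.sum(np.exp(shifted)))


def shannon_entropy(logits: np.ndarray) -> float:
    """Shannon entropy (in nats) of the next-token distribution softmax(logits)."""
    log_p = log_softmax(logits)
    p = np.exp(log_p)
    return float(-np.sum(p * log_p))


def topk_std(logits: np.ndarray, k: int) -> float:
    """Population standard deviation of the k largest log-probabilities.

    ASSUMPTION: "scores" are taken to be log-probabilities.
    """
    log_p = log_softmax(logits)
    top_k = np.sort(log_p)[-k:]  # np.sort is ascending, so the last k are the largest
    return float(np.std(top_k))


if __name__ == "__main__":
    uniform = np.zeros(8)  # maximally uncertain decoder
    peaked = np.array([6.0, 1.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0])  # confident decoder
    for name, logits in (("uniform", uniform), ("peaked", peaked)):
        print(name, shannon_entropy(logits), topk_std(logits, k=4))
```

A peaked distribution yields low entropy and a large spread among the top-k scores. Both signals indicate a confident decoder, which is presumably the case where a narrow beam suffices. Note that entropy touches every vocabulary entry, whereas the top-k statistic only needs k values once they have been selected.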

2. Background and Related Works

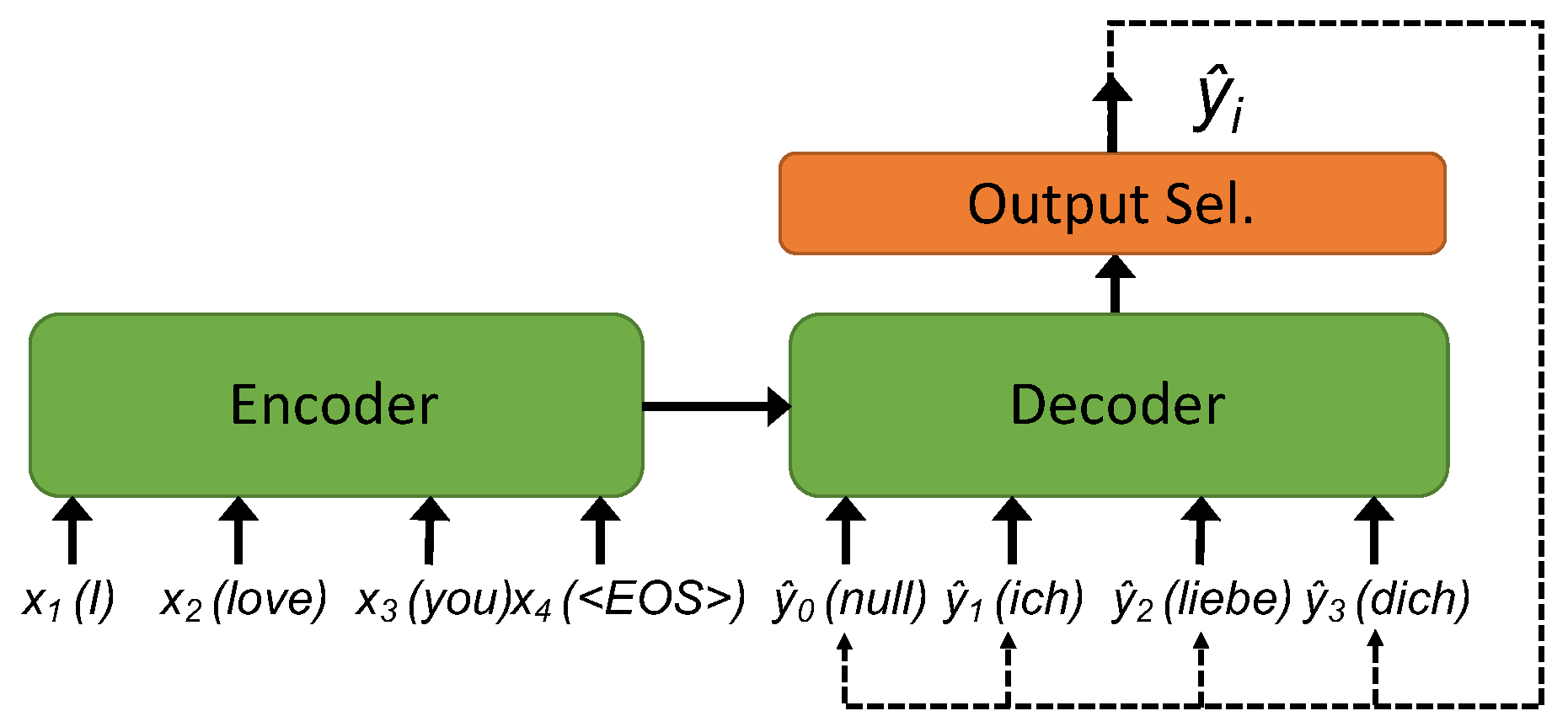

2.1. Encoder-Decoder Sequence-To-Sequence Neural Networks

2.2. Related Works

2.2.1. Custom Hardware Designs

2.2.2. Static Optimizations

2.2.3. Dynamic Optimizations

3. Proposed Method

3.1. Motivation

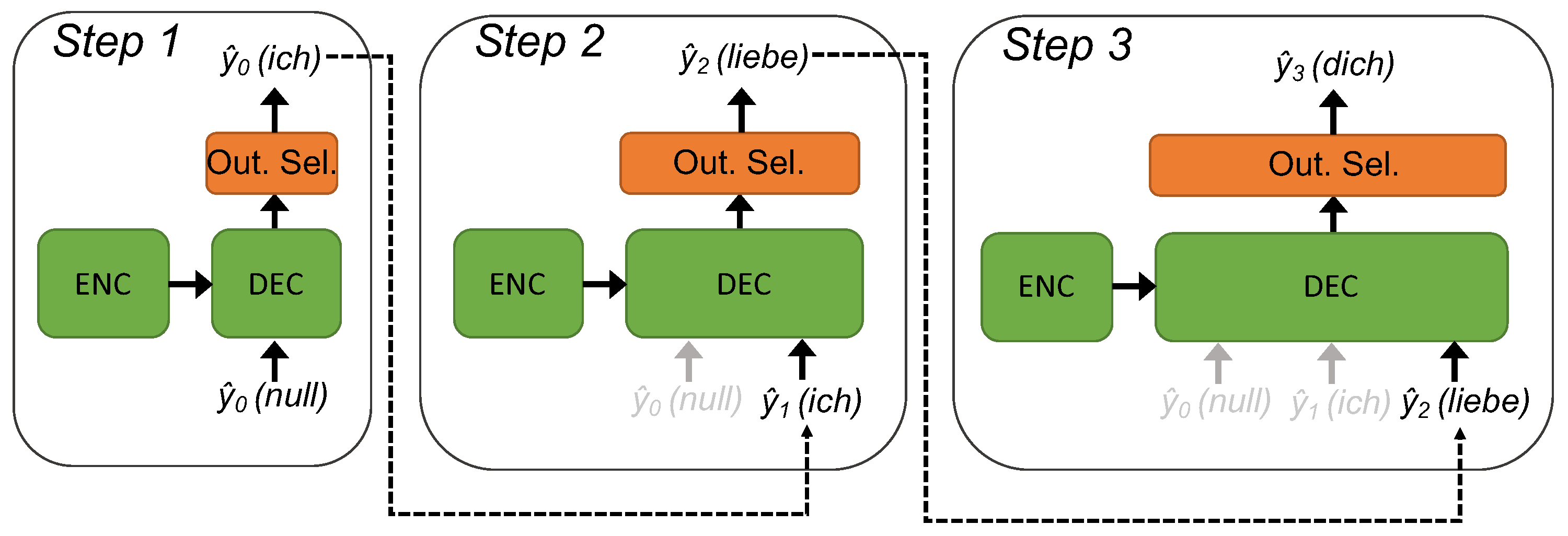

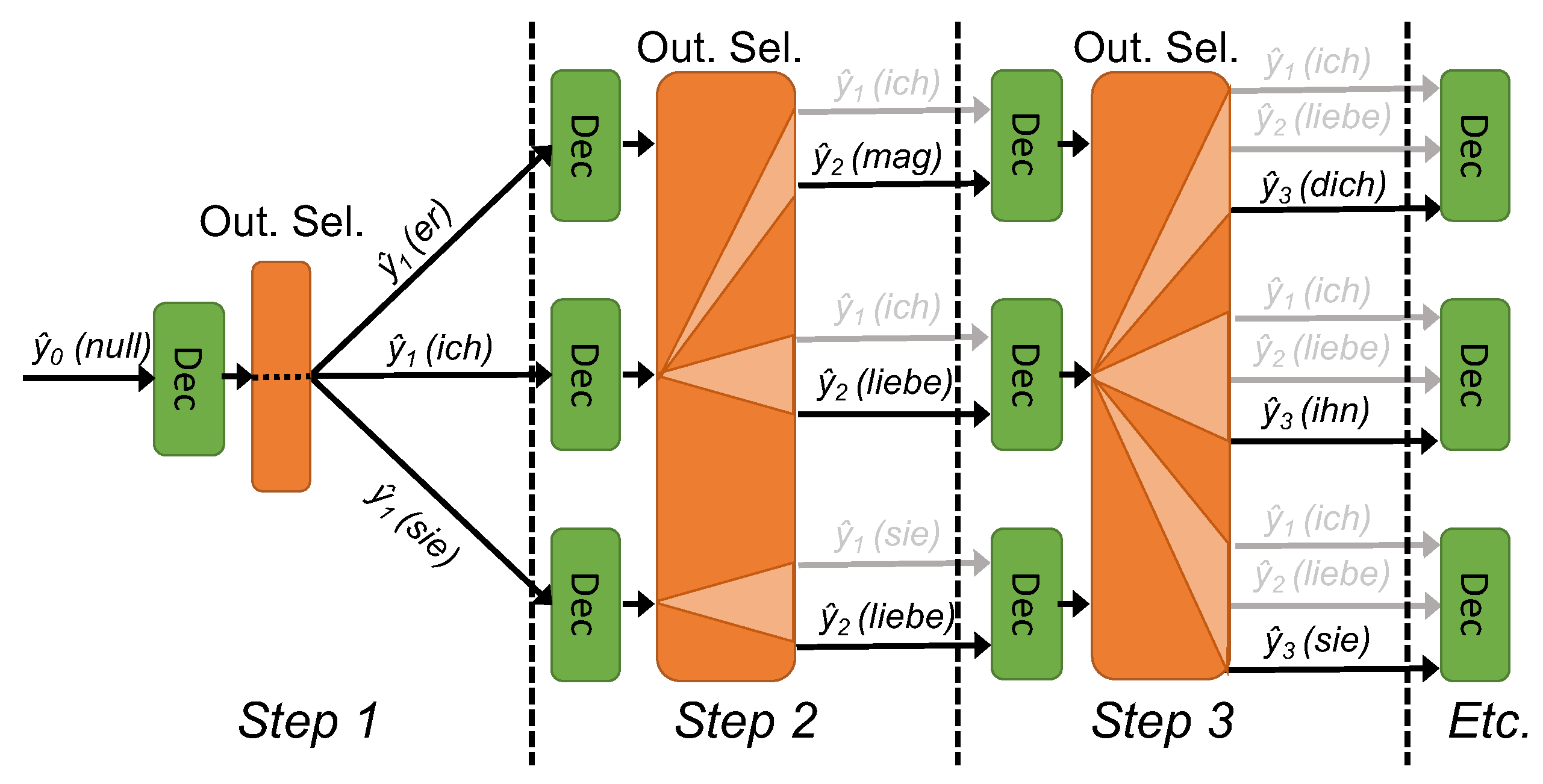

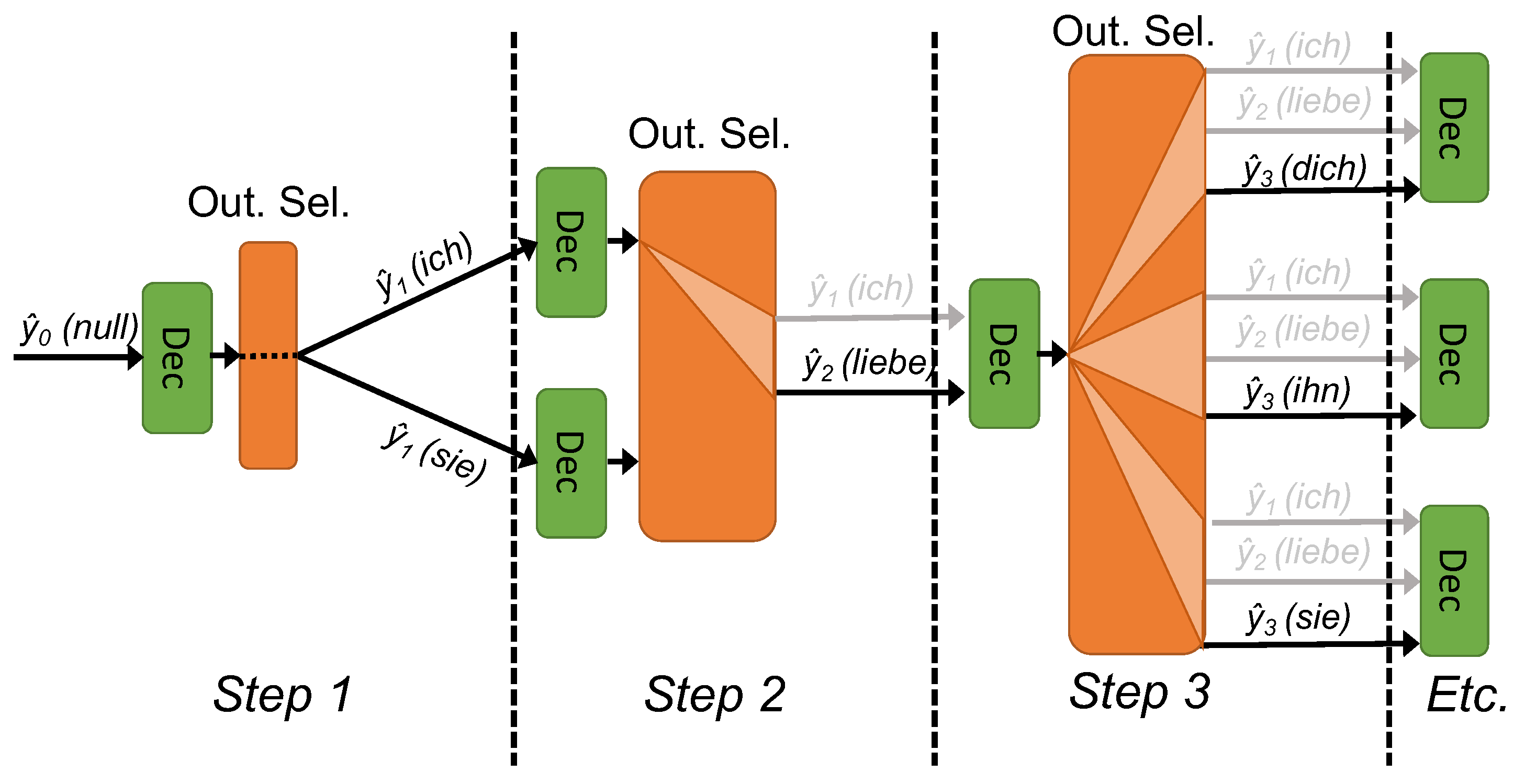

3.2. Dynamic Beam Search

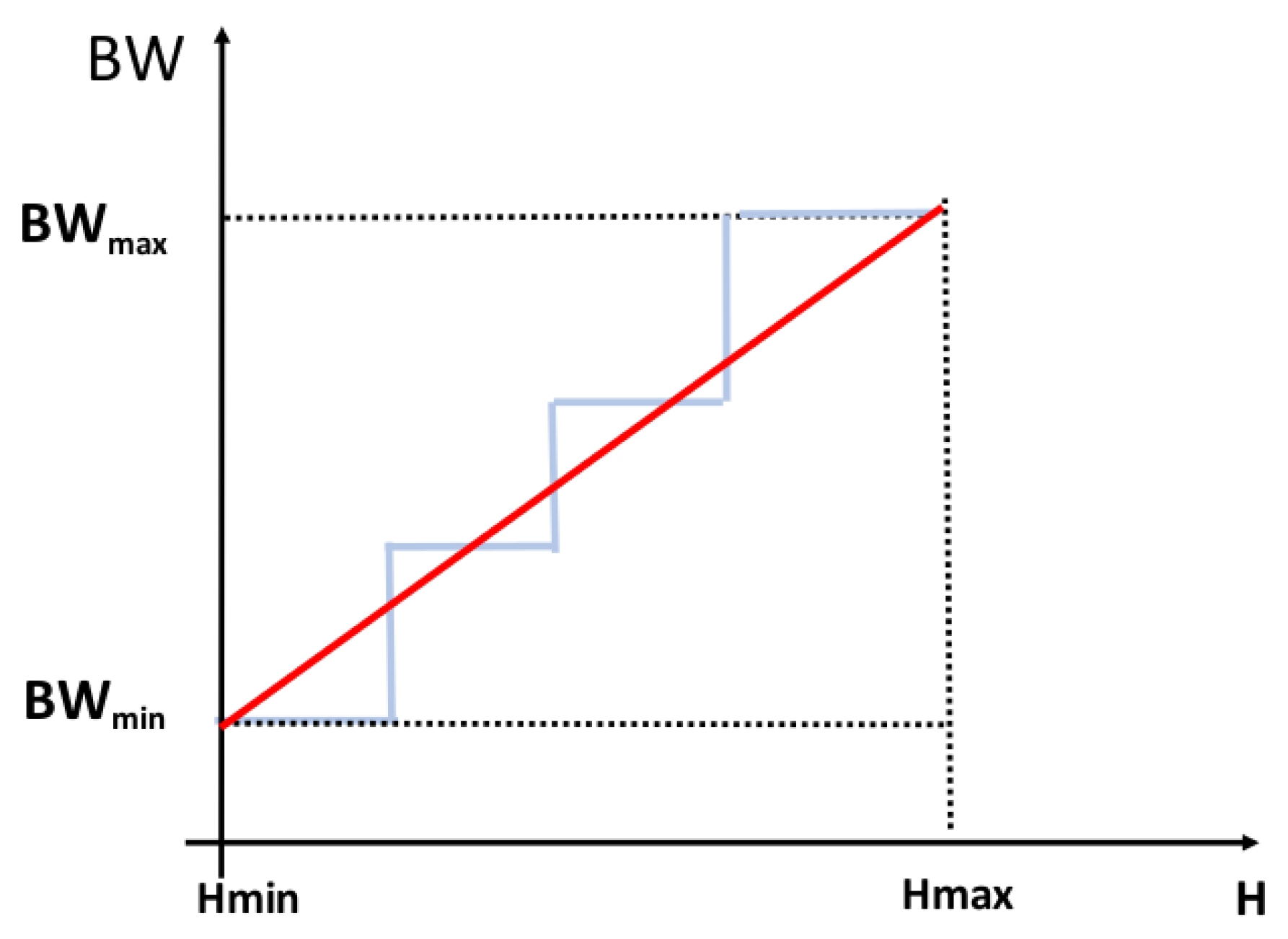

3.2.1. Entropy Mapping

3.2.2. Standard Deviation Mapping

3.3. Implementation Details

4. Results

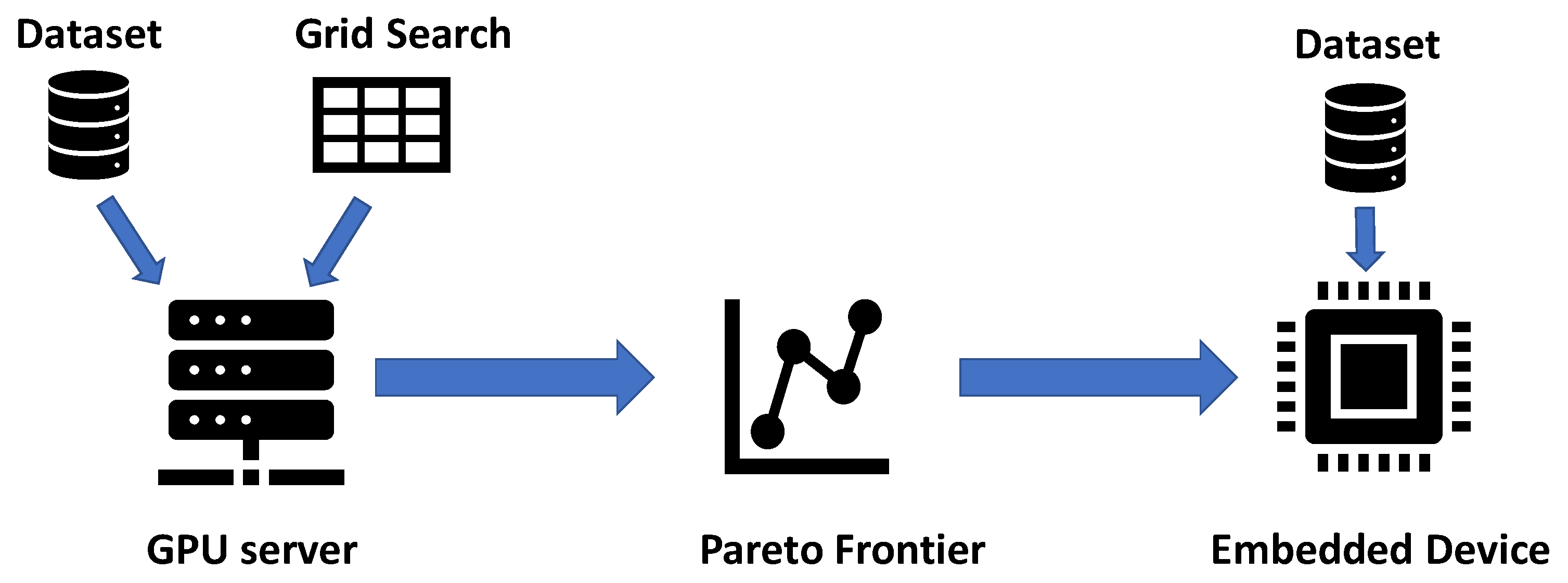

4.1. Setup

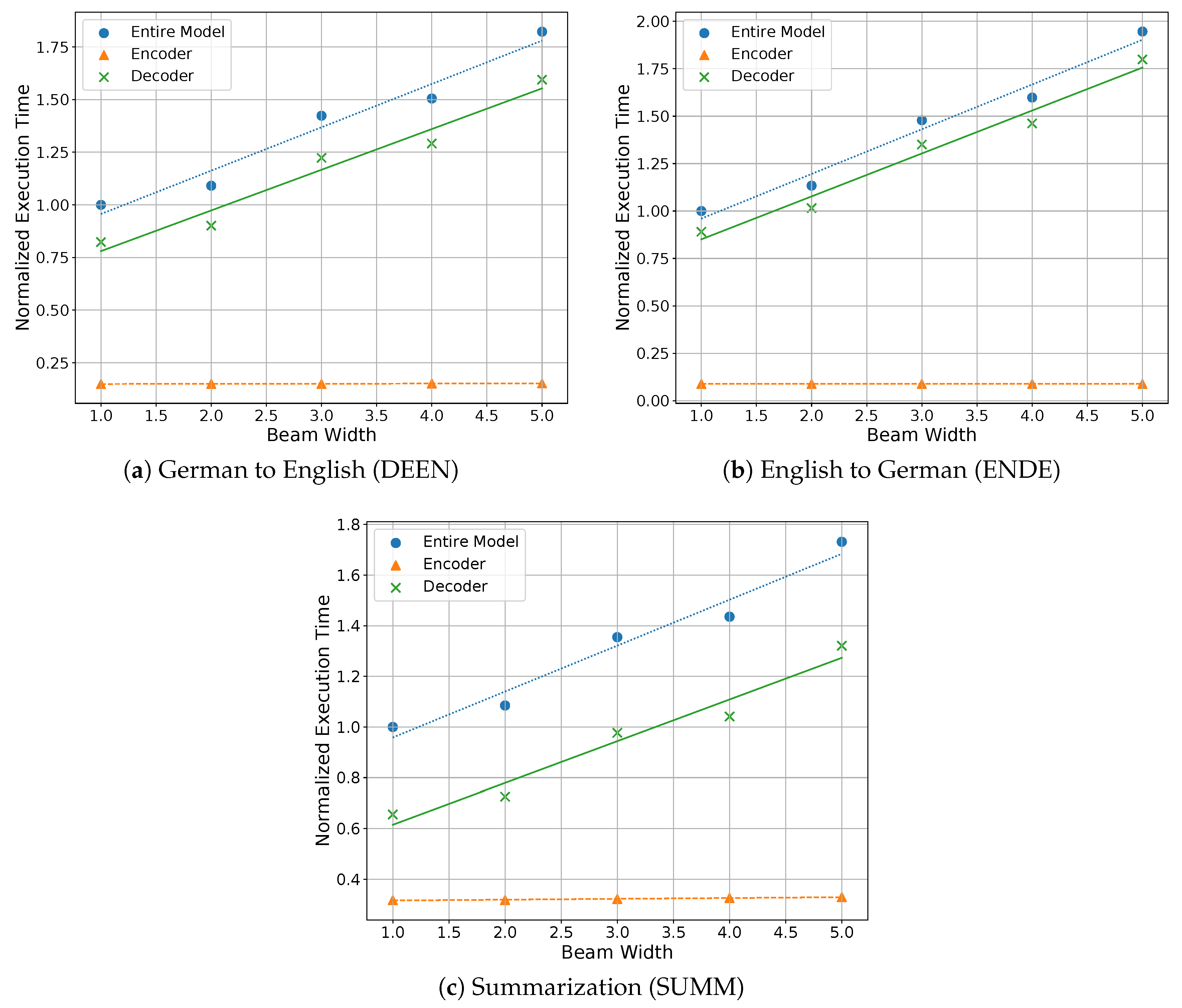

4.2. Design Space Exploration Results

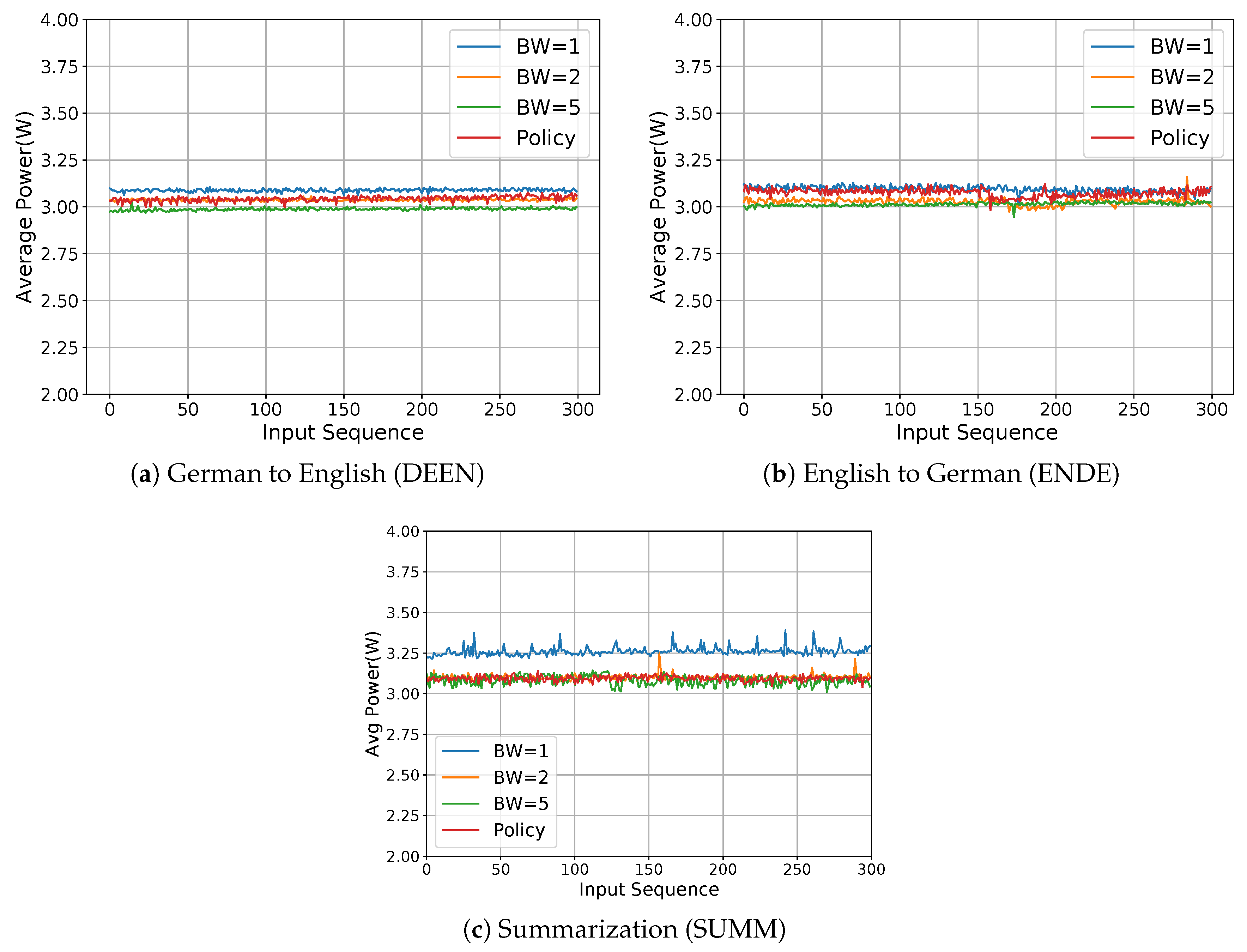

4.3. Mapping Policies Overheads

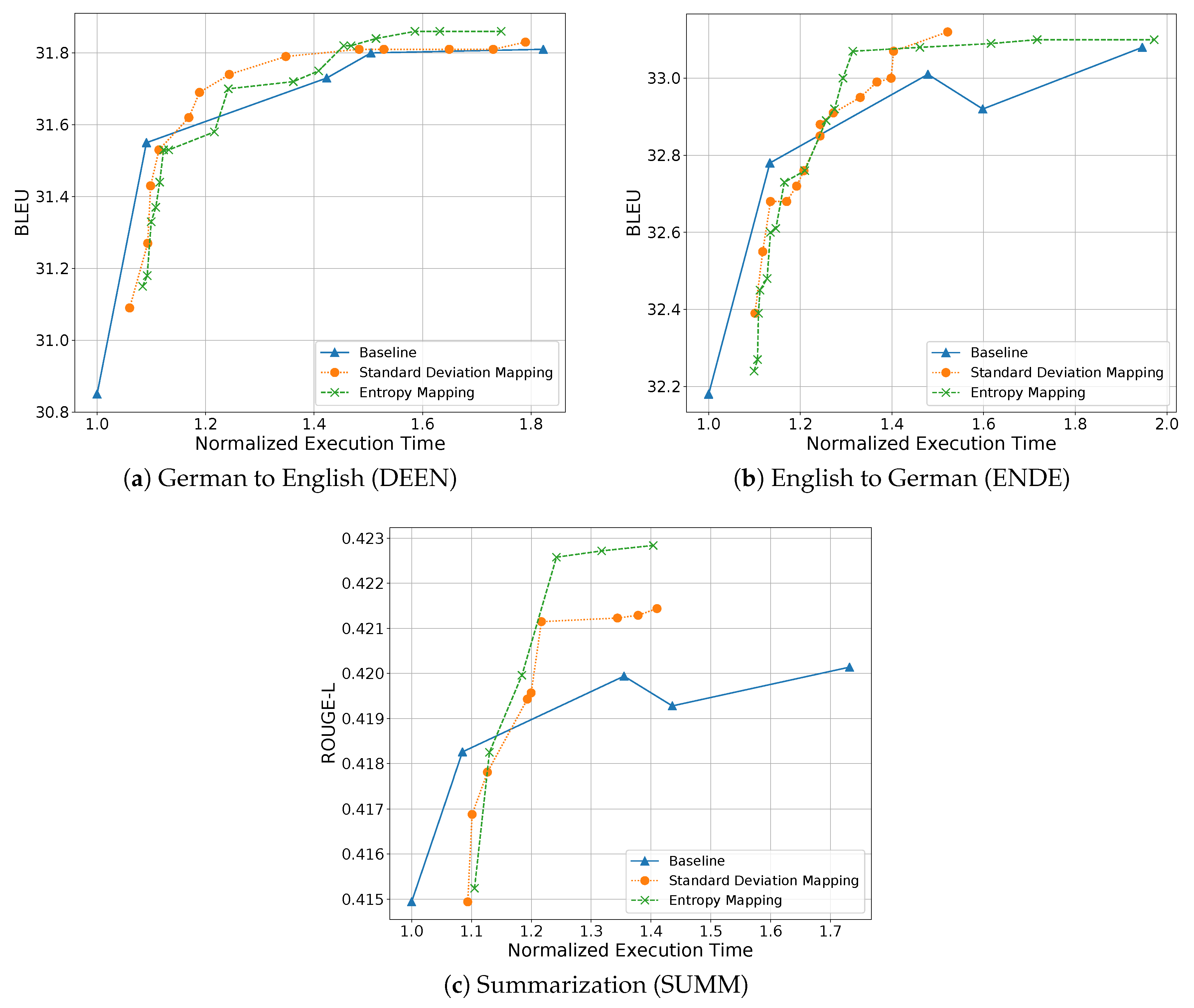

4.4. Accuracy Versus Execution Time

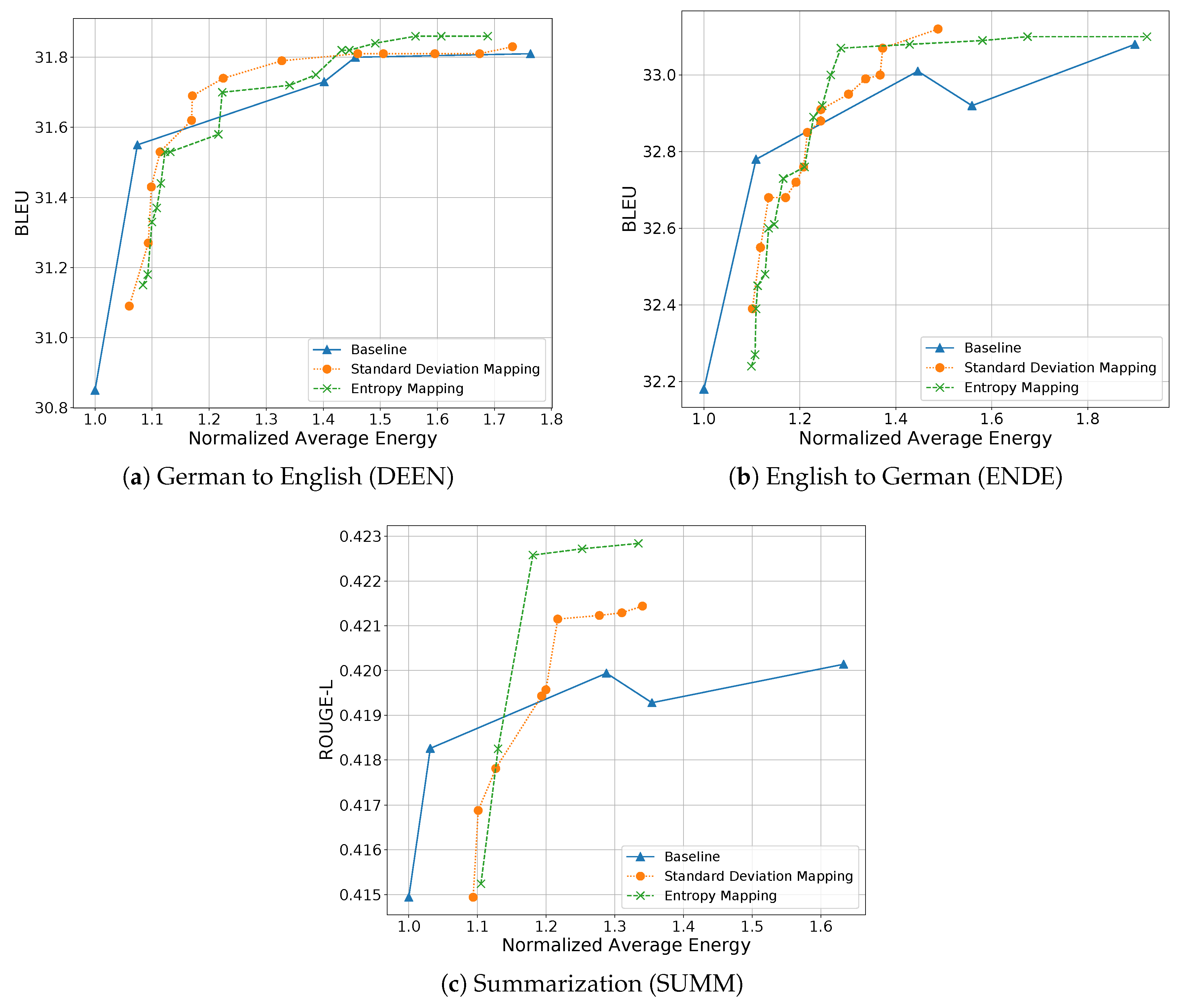

4.5. Accuracy Versus Energy

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| NMT | neural machine translation |
| IoT | Internet of Things |
| NN | neural network |
| Enc | encoder |
| Dec | decoder |
| RNN | recurrent neural network |
| GRU | gated recurrent unit |
| LSTM | long short-term memory |
| TCN | temporal convolutional network |
| EOS | end of sentence |
| BW | beam width |
| FPGA | field-programmable gate array |
| ASIC | application-specific integrated circuit |
| CNN | convolutional neural network |
| MPU | microprocessor unit |
| MCU | microcontroller unit |
| SIMD | single-instruction multiple-data |
| GPU | graphics processing unit |
| CPU | central processing unit |
| BLEU | bilingual evaluation understudy |
| ROUGE | recall-oriented understudy for gisting evaluation |
| DSE | design space exploration |
| DEEN | German-to-English translation network |
| ENDE | English-to-German translation network |
| SUMM | English summarization network |


| Model | Total DSE Time [h] | Total Points Tested | Pareto Points Selected | Reduction (tested/selected) |
|---|---|---|---|---|
| DEEN | 20 | 200 | 17 | 12x |
| ENDE | 60 | 200 | 17 | 12x |
| SUMM | 7 | 200 | 13 | 15x |
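The table above reports how many Pareto points were retained out of the 200 configurations tested in the DSE. A minimal Pareto-filtering sketch is shown below. It assumes each configuration is summarized by two objectives: an accuracy score (BLEU or ROUGE, higher is better) and an execution time (lower is better). The exact objectives and filtering procedure used in the DSE are not given in this excerpt. The names `dominates` and `pareto_front` are hypothetical, and the sample data are invented for illustration.

```python
from typing import List, Tuple

# Each configuration is summarised as (accuracy, execution_time):
# higher accuracy is better, lower execution time is better.
Point = Tuple[float, float]


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b on both objectives and strictly better on at least one."""
    no_worse = a[0] >= b[0] and a[1] <= b[1]
    strictly_better = a[0] > b[0] or a[1] < b[1]
    return no_worse and strictly_better


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the non-dominated points sorted by execution time.

    Quadratic in len(points), which is acceptable for a few hundred configurations.
    """
    front = [p for p in points if not any(dominates(q, p) for q in points)]
    return sorted(front, key=lambda p: p[1])


if __name__ == "__main__":
    # Hypothetical (BLEU, seconds) pairs, not results from the paper.
    configs = [(30.0, 1.0), (28.0, 0.5), (29.0, 1.2), (31.0, 2.0), (27.0, 0.6)]
    print(pareto_front(configs))  # prints [(28.0, 0.5), (30.0, 1.0), (31.0, 2.0)]
```

The quadratic scan is chosen for clarity. With only 200 points per model its cost is negligible compared with the hours spent evaluating each configuration.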

| Model | Policy | Min. [s] | Max. [s] | Median [s] |
|---|---|---|---|---|
| DEEN | Entropy | 0.0054 | 0.0276 | 0.0165 |
| DEEN | Std. Dev. | 0.0010 | 0.01458 | 0.0053 |
| ENDE | Entropy | 0.0068 | 0.0345 | 0.0209 |
| ENDE | Std. Dev. | 0.0011 | 0.0180 | 0.0068 |
| SUMM | Entropy | 0.0106 | 0.0557 | 0.0326 |
| SUMM | Std. Dev. | 0.0018 | 0.0302 | 0.0112 |

| Model | BW_min | BW_max | Coeff. | Intercept |
|---|---|---|---|---|
| DEEN | 1 | 2 | 1.1 | 0.5 |
| ENDE | 1 | 2 | 4 | 0.5 |
| SUMM | 1 | 4 | 0.3 | 0.3 |
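The last table lists, for each model, a beam-width range and a coefficient/intercept pair. One plausible way to use such parameters is a clamped linear mapping from the uncertainty metric to an integer beam width. The sketch below assumes exactly that form: BW = floor(c * m + b + 0.5), clamped to [BW_min, BW_max]. This excerpt does not confirm that form. The sketch also assumes the metric m grows with decoder uncertainty, as entropy does. The function name `map_beam_width` is hypothetical.

```python
import math


def map_beam_width(metric: float, coeff: float, intercept: float,
                   bw_min: int, bw_max: int) -> int:
    """Hypothetical clamped linear mapping from an uncertainty metric to a beam width.

    ASSUMPTION: BW = round_half_up(coeff * metric + intercept), clamped to
    [bw_min, bw_max]. The functional form is illustrative only.
    """
    raw = coeff * metric + intercept
    bw = math.floor(raw + 0.5)  # round half up (Python's round() uses banker's rounding)
    return max(bw_min, min(bw_max, bw))


if __name__ == "__main__":
    # Parameters from the DEEN row of the table, used here only as an example.
    for m in (0.0, 0.5, 1.0, 3.0):
        print(m, map_beam_width(m, coeff=1.1, intercept=0.5, bw_min=1, bw_max=2))
```

For instance, with the DEEN row (c = 1.1, b = 0.5, BW between 1 and 2), a metric value of 0 gives BW = 1 and a value of 1 gives BW = 2. Clamping guarantees that the beam never exceeds the budget chosen during the DSE, whatever the input.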

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jahier Pagliari, D.; Daghero, F.; Poncino, M. Sequence-To-Sequence Neural Networks Inference on Embedded Processors Using Dynamic Beam Search. Electronics 2020, 9, 337. https://doi.org/10.3390/electronics9020337
