Automated and Intelligent System for Monitoring Swimming Pool Safety Based on the IoT and Transfer Learning

Abstract

1. Introduction

- We introduce a novel intelligent system for off-time monitoring of swimming pools based on the IoT and transfer learning.

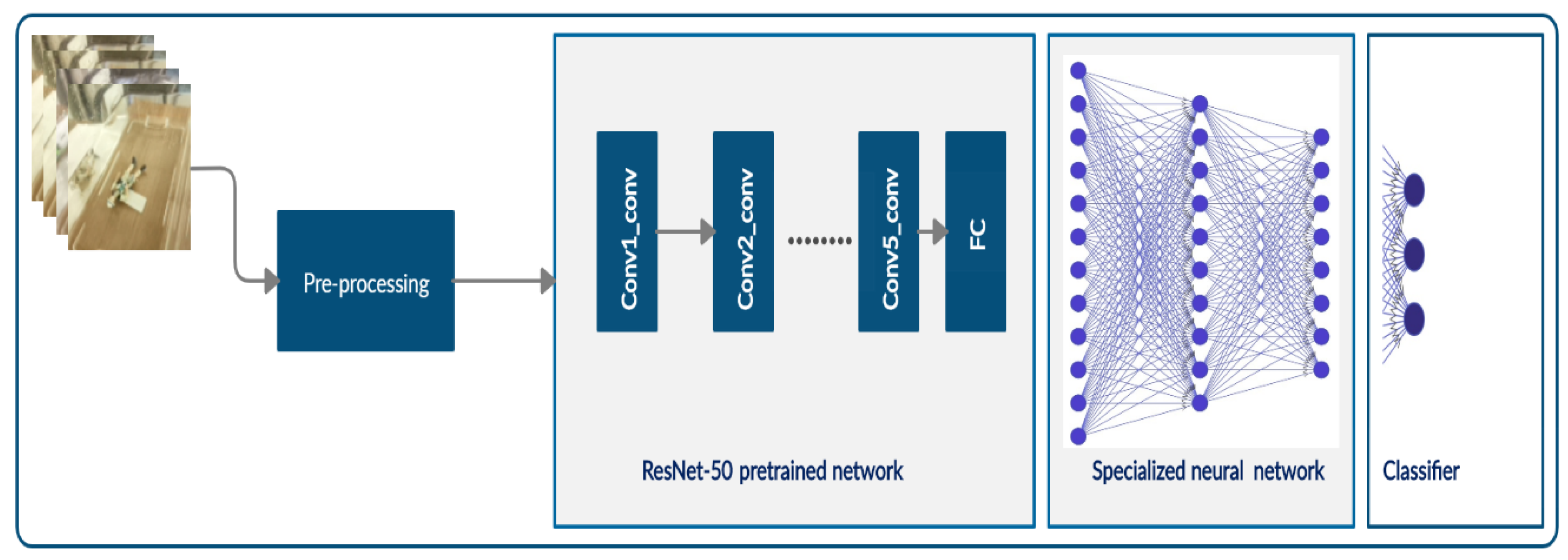

- We propose a specialized neural network based on the ResNet50 (residual network with 50 layers) architecture that uses a single image to detect drowning humans and distinguish them from other objects.

- The method achieves an accuracy of 99% on a collected dataset and outperforms existing transfer learning algorithms, such as the DCNN (deep convolution neural network), Xception (Extreme Inception), VGG16 (Visual Geometry Group), and VGG19.

- The efficiency and robustness of the proposed system are evaluated through several experimental analyses.

2. Related Work

3. Background

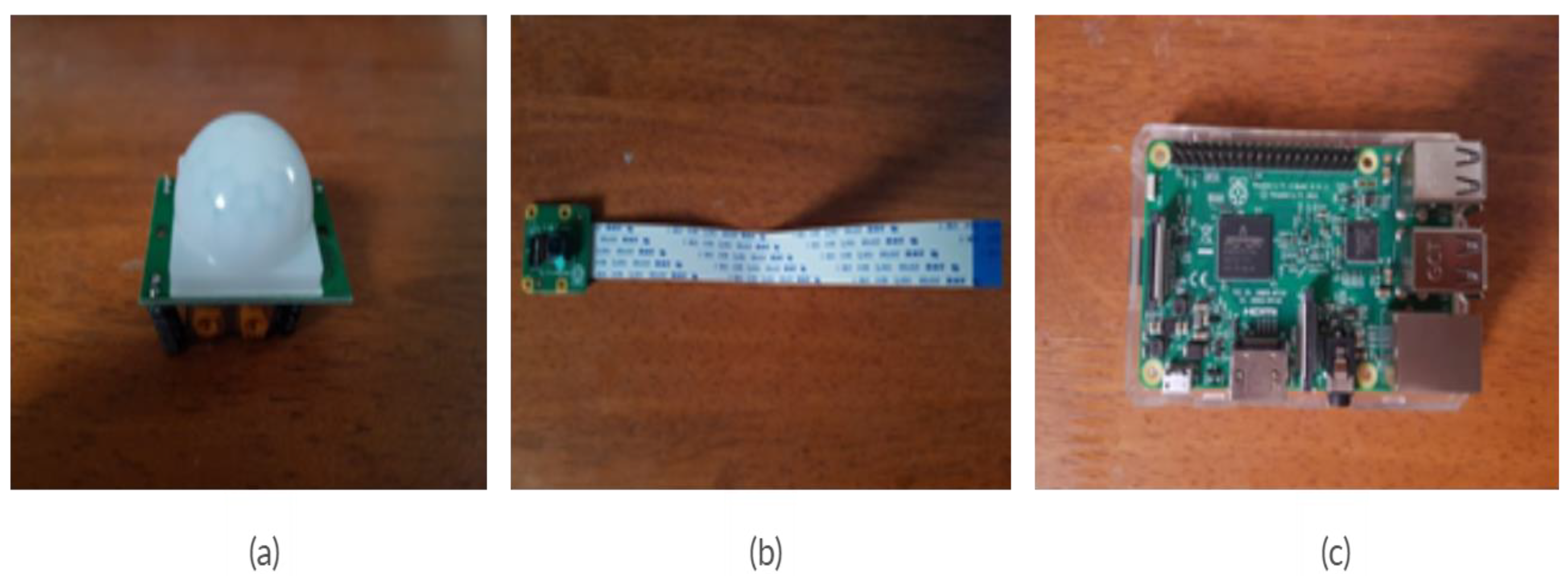

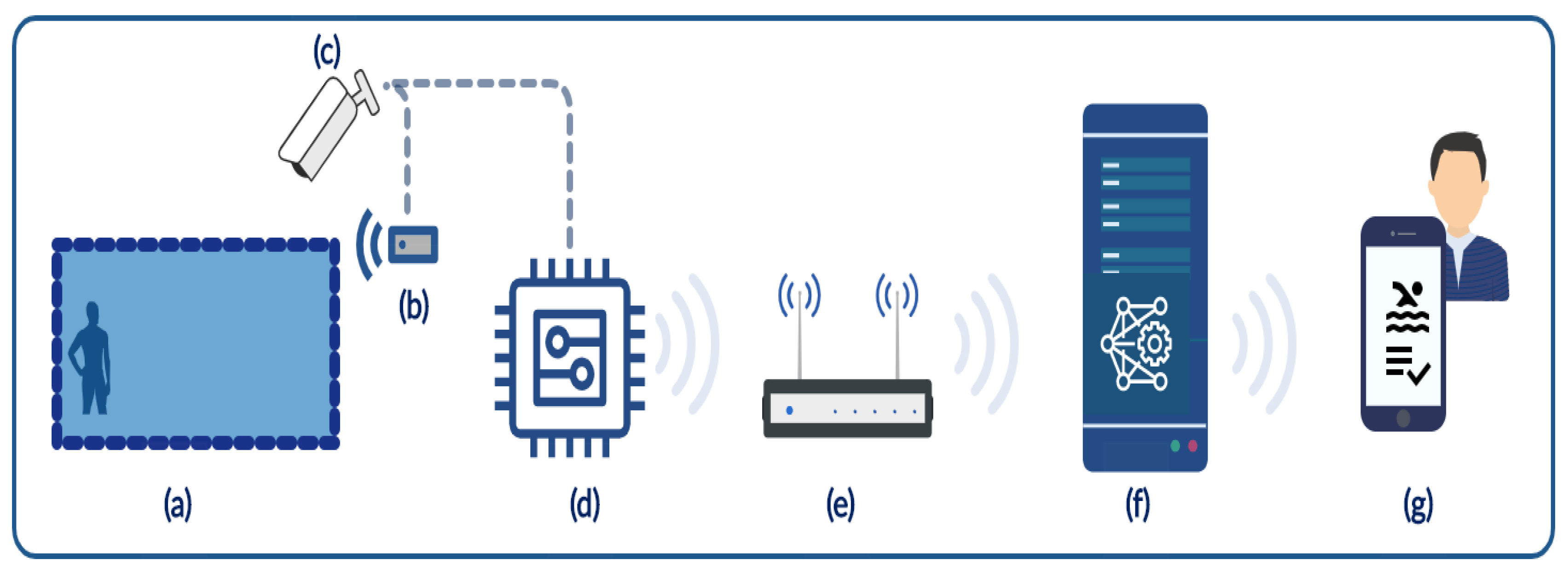

3.1. Hardware

3.1.1. Sensing Unit

3.1.2. Control Unit

3.1.3. Communications

3.2. Transfer Learning

- (1)

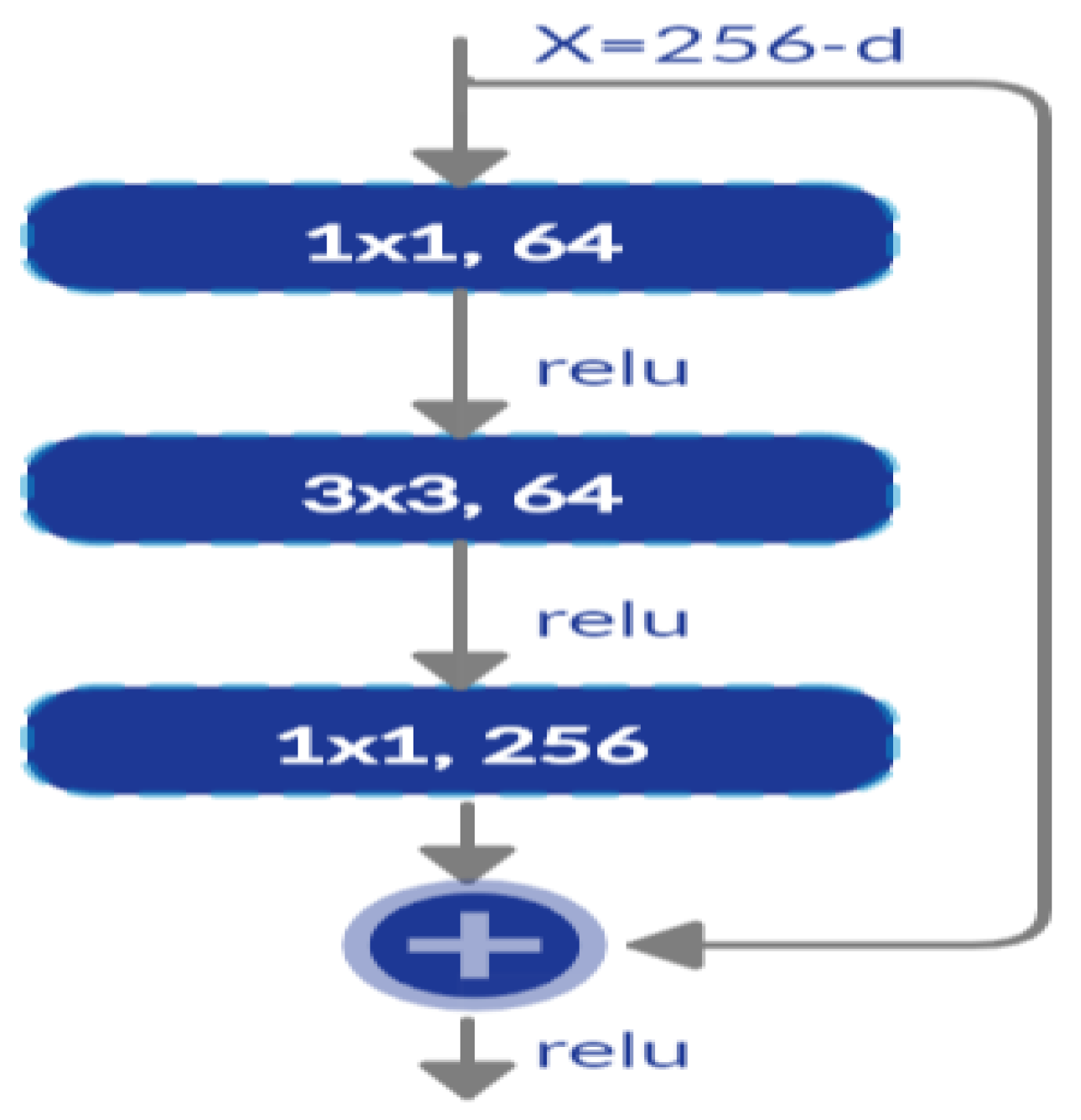

- ResNet:

- (2)

- VGGNet [26]:

- (3)

- Xception [26]:

4. Proposed Methodology

- Proposed Specialized ResNet50

5. Discussion and Experimental Results

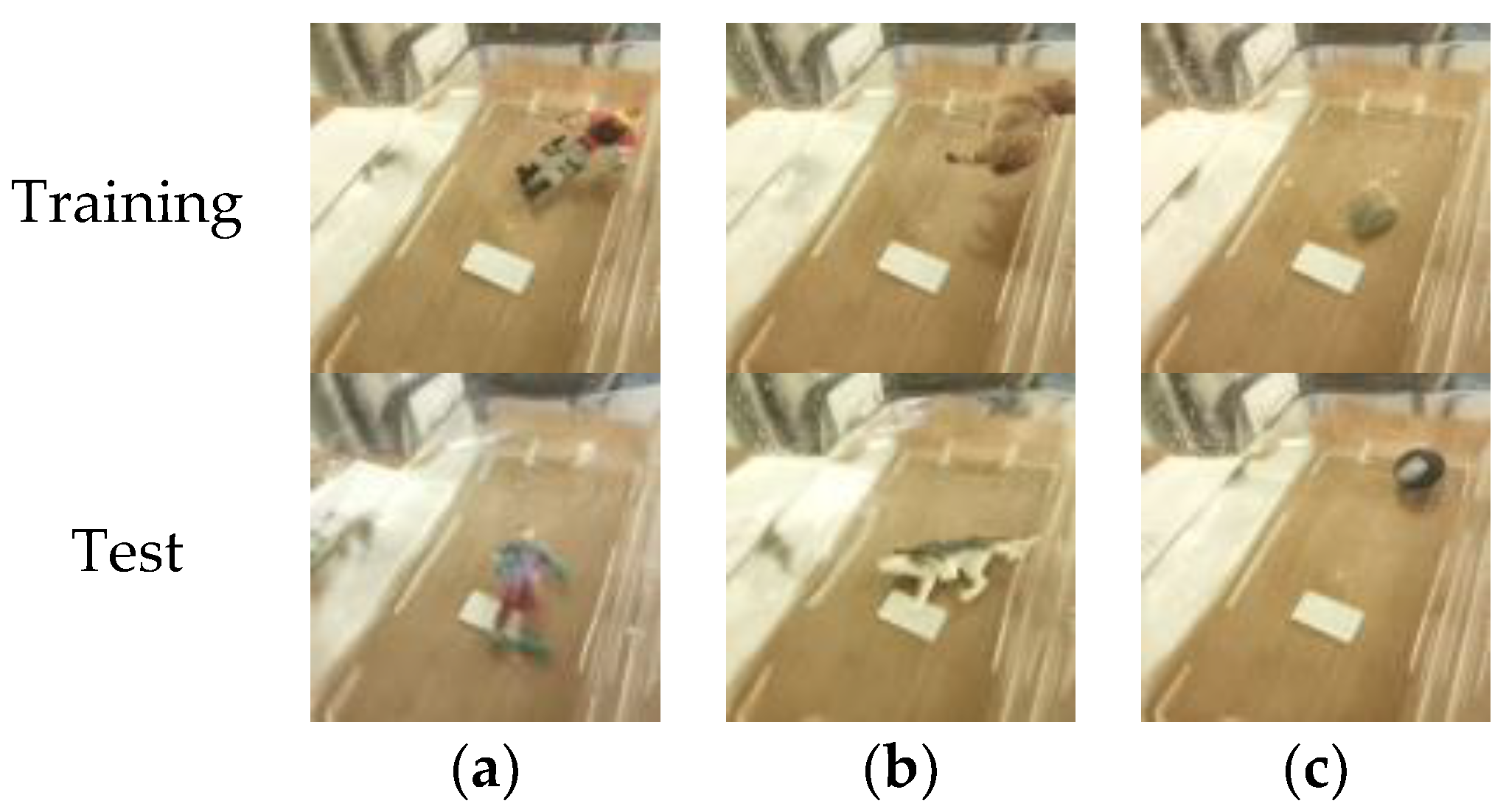

5.1. Dataset

5.2. Metrics

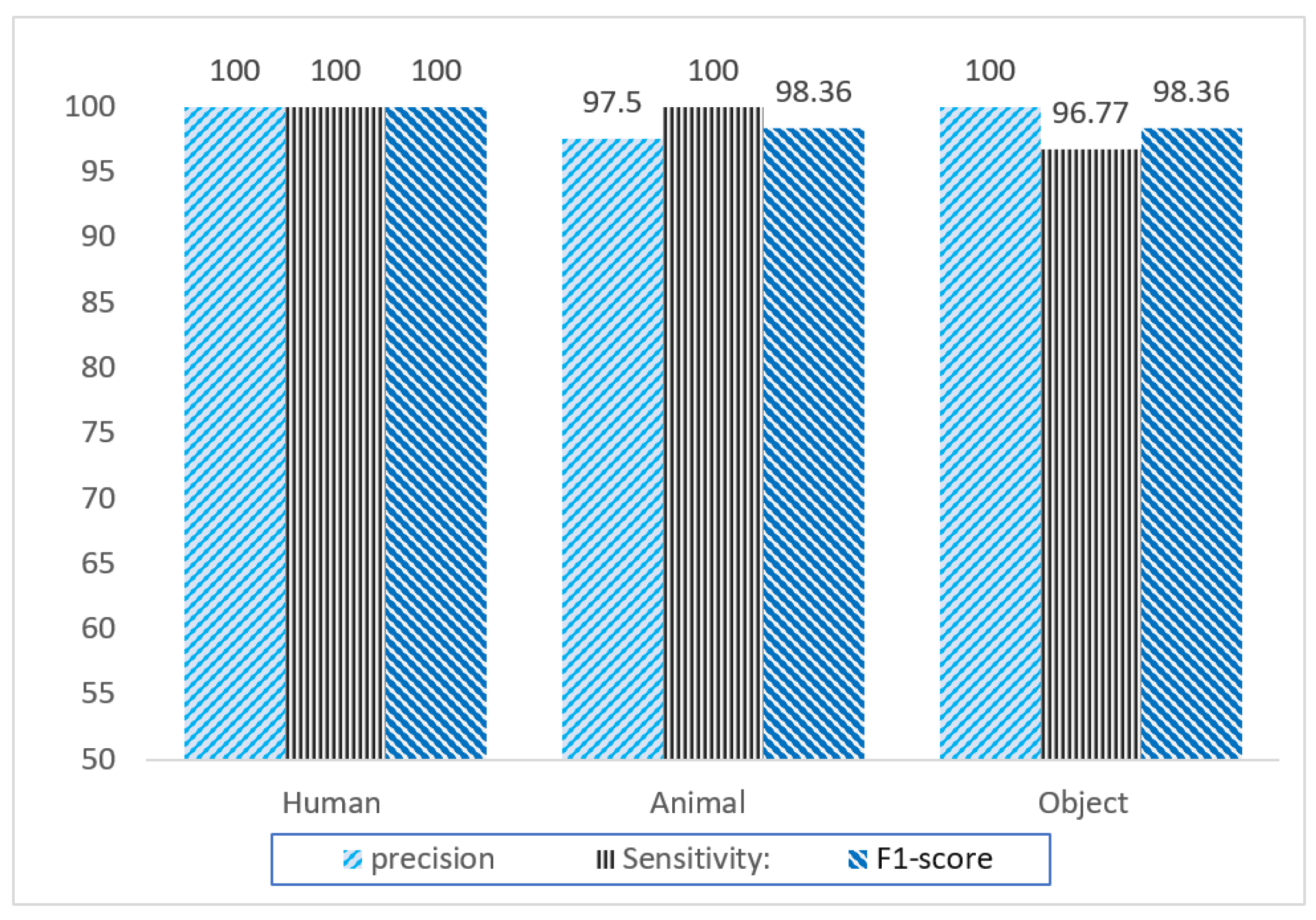

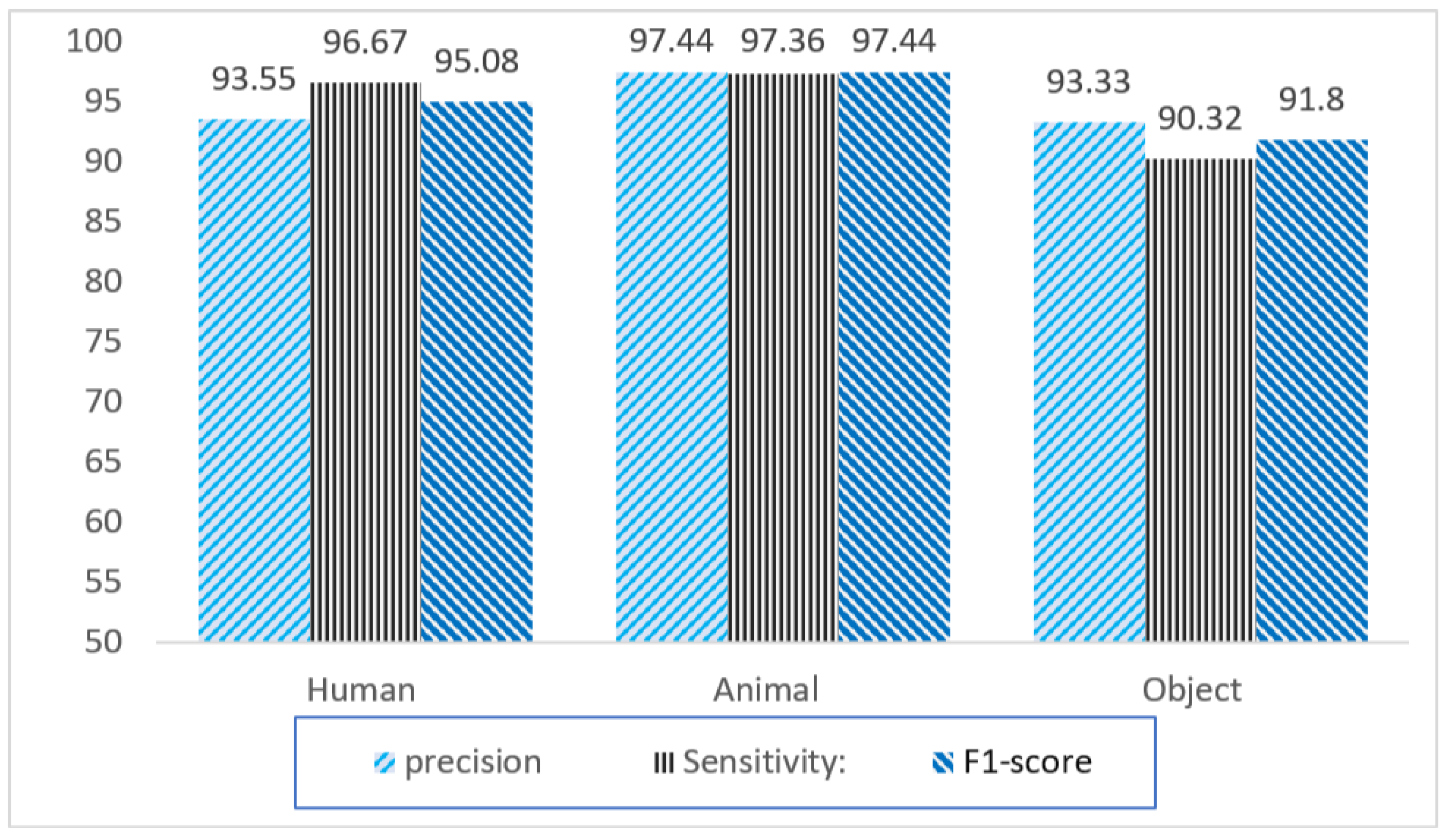

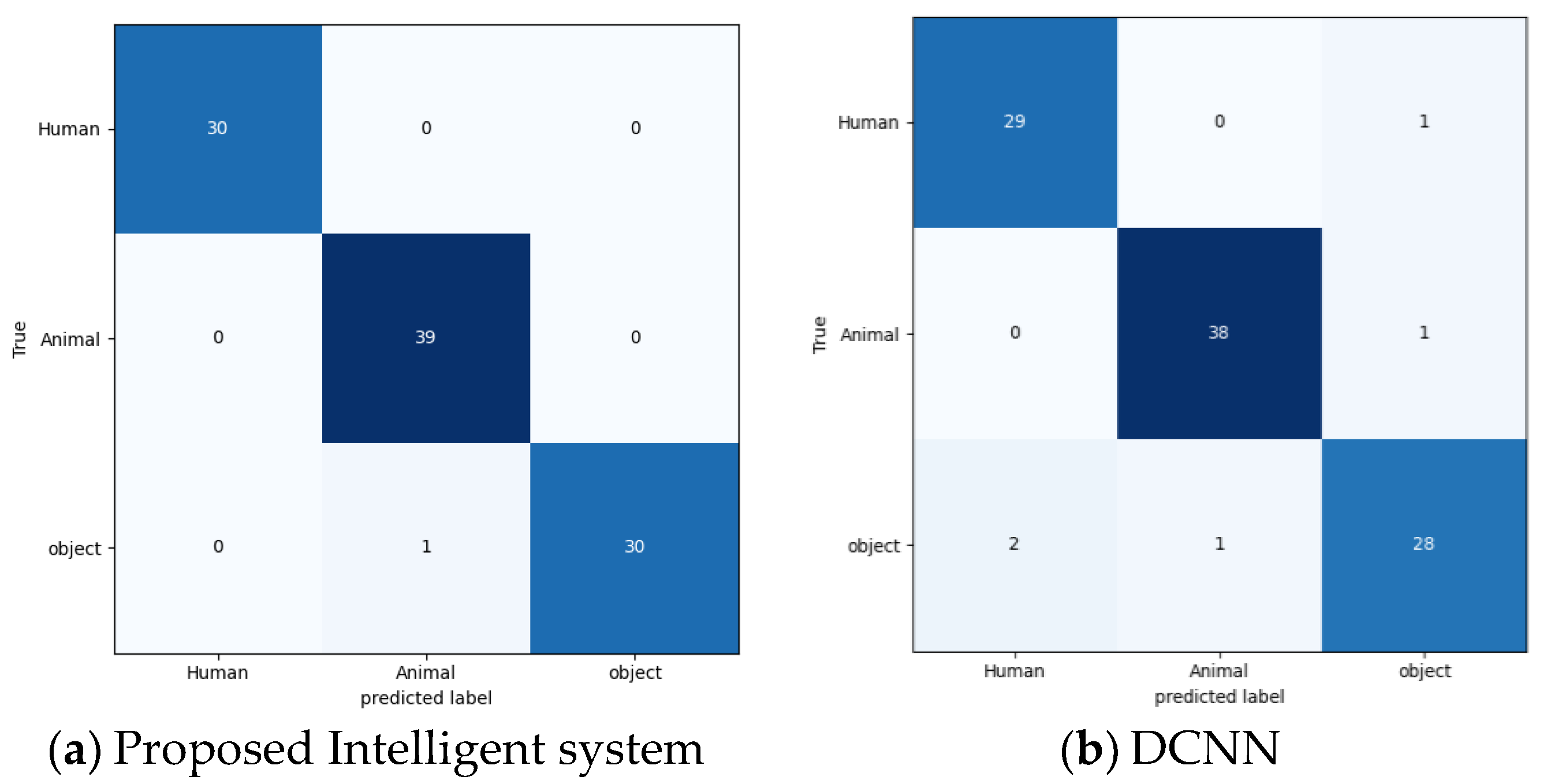

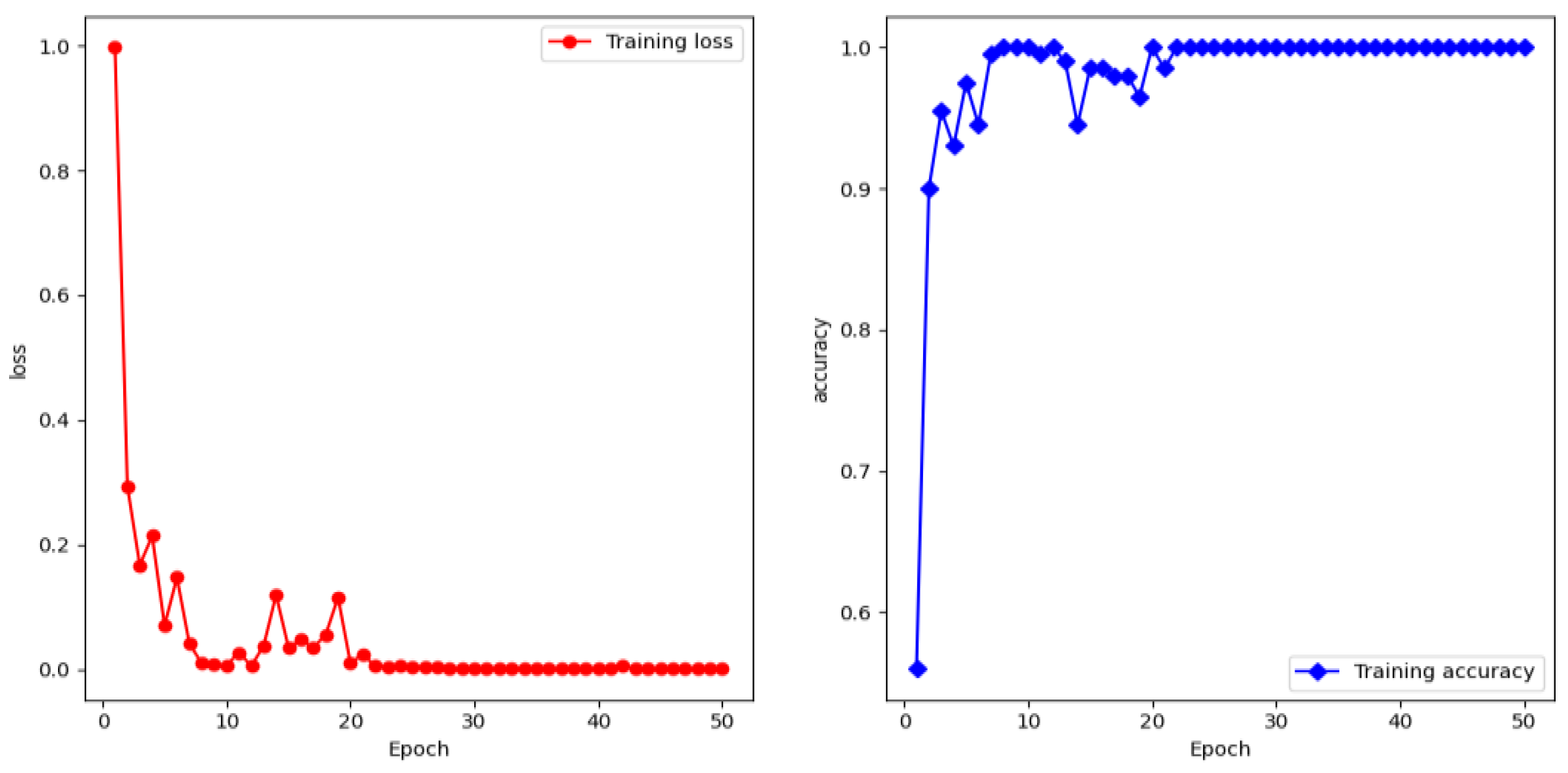

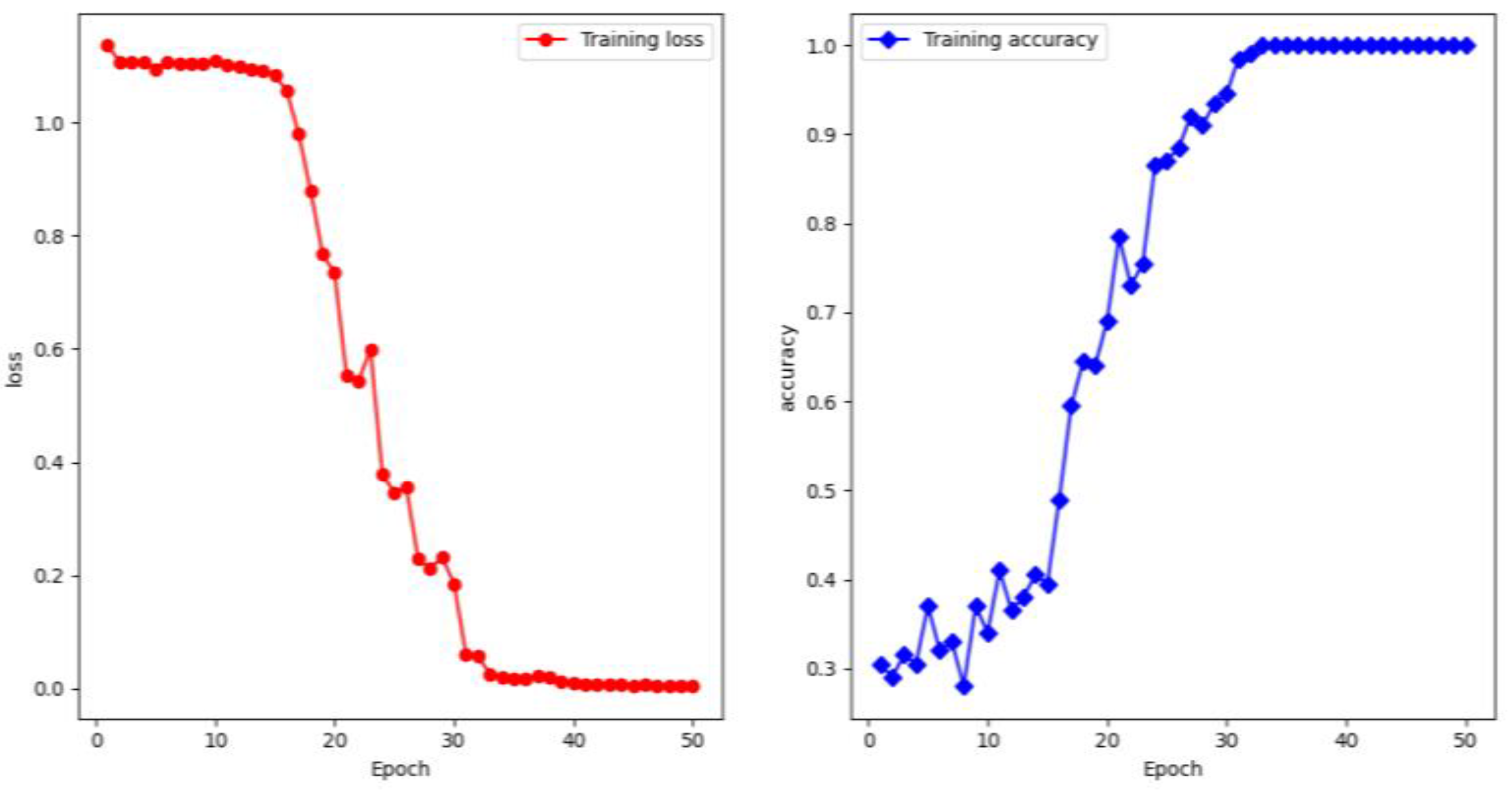

5.3. Performance Evaluation

5.4. Discussion

6. Conclusions and Future Work

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IoT | Internet of Things |
| ML | machine learning |
| TL | transfer learning |
| DNN | deep neural network |
| DCNN | deep convolution neural network |
| ResNet | residual network |
| VGG | Visual Geometry Group |
| Xception | Extreme Inception |
| ReLU | rectified linear unit |
| Tanh | hyperbolic tangent |
| SGD | stochastic gradient descent |

References

- Yaïci, W.; Krishnamurthy, K.; Entchev, E.; Longo, M. Survey of Internet of Things (IoT) Infrastructures for Building Energy Systems. In Proceedings of the 2020 Global Internet of Things Summit (GIoTS), Dublin, Ireland, 3 June 2020.

- Cisco. Cisco Annual Internet Report (2018–2023) White Paper. 2020. Available online: https://www.cisco.com/c/en/us/solutions/collateral/executive-perspectives/annual-internet-report/white-paper-c11-741490.html (accessed on 3 August 2020).

- Yavari, A.; Georgakopoulos, D.; Stoddart, P.R.; Shafiei, M. Internet of Things-based Hydrocarbon Sensing for Real-time Environmental Monitoring. In Proceedings of the 2019 IEEE 5th World Forum on Internet of Things (WF-IoT), Limerick, Ireland, 15–18 April 2019.

- Khattab, A.; Youssry, N. Machine Learning for IoT Systems. In Internet of Things (IoT); Springer: Cham, Switzerland, 2020; pp. 105–127.

- Cui, L.; Yang, S.; Chen, F.; Ming, Z. A survey on application of machine learning for Internet of Things. Int. J. Mach. Learn. Cybern. 2018, 9, 1399–1417.

- Kazeem, O.O.; Akintade, O.O.; Kehinde, L.O. Comparative Study of Communication Interfaces for Sensors and Actuators in the Cloud of Internet of Things. Int. J. Internet Things 2017, 6, 9–13.

- Liu, H.; Wen, B.; Frej, M.B.H. A Novel Method for Recognition, Localization, and Alarming to Prevent Swimmers from Drowning. In Proceedings of the 2019 IEEE Cloud Summit, Washington, DC, USA, 8–10 August 2019.

- Borrero, J.D.; Zabalo, A. An Autonomous Wireless Device for Real-Time Monitoring of Water Needs. Sensors 2020, 20, 2078.

- Simões, G.; Dionísio, C.; Glória, A.; Sebastião, P.; Souto, N. Smart System for Monitoring and Control of Swimming Pools. In Proceedings of the 2019 IEEE 5th World Forum on Internet of Things (WF-IoT), Limerick, Ireland, 15–18 April 2019.

- Zhang, C.; Li, X.; Lei, F. A novel camera-based drowning detection algorithm. In Proceedings of the Chinese Conference on Image and Graphics Technologies, Beijing, China, 19–20 April 2015.

- Alshbatat, A.I.N.; Alhameli, S.; Almazrouei, S.; Alhameli, S.; Almarar, W. Automated Vision-based Surveillance System to Detect Drowning Incidents in Swimming Pools. In Proceedings of the 2020 Advances in Science and Engineering Technology International Conferences (ASET), Dubai, UAE, 4 February–9 April 2020.

- Prakash, B.D. Near-drowning Early Prediction Technique Using Novel Equations (NEPTUNE) for Swimming Pools. arXiv 2018, arXiv:1805.02530.

- Hayat, M.A.; Yang, G.; Iqbal, A.; Saleem, A.; Hussain, A.; Mateen, M. The Swimmers Motion Detection Using Improved VIBE Algorithm. In Proceedings of the 2019 International Conference on Robotics and Automation in Industry (ICRAI), Rawalpindi, Pakistan, 21–22 October 2019.

- Wong, W.K.; Hui, J.H.; Loo, C.K.; Lim, W.S. Off-time swimming pool surveillance using thermal imaging system. In Proceedings of the 2011 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Lumpur, Malaysia, 16–18 November 2011.

- Fei, L.; Xueli, W.; Dongsheng, C. Drowning detection based on background subtraction. In Proceedings of the 2009 International Conference on Embedded Software and Systems, Zhejiang, China, 25–27 May 2009.

- Carballo-Fazanes, A.; Bierens, J.J. The visible behaviour of drowning persons: A pilot observational study using analytic software and a nominal group technique. Int. J. Environ. Res. Public Health 2020, 17, 6930.

- Claesson, A.; Schierbeck, S.; Hollenberg, J.; Forsberg, S.; Nordberg, P.; Ringh, M.; Olausson, M.; Jansson, A.; Nord, A. The use of drones and a machine-learning model for recognition of simulated drowning victims—A feasibility study. Resuscitation 2020, 156, 196–201.

- Sethi, P.; Sarangi, S.R. Internet of things: Architectures, protocols, and applications. Int. J. Electr. Comput. Eng. 2017, 2017.

- Shafi, U.; Mumtaz, R.; García-Nieto, J.; Ali Hassan, S. Precision agriculture techniques and practices: From considerations to applications. Sensors 2019, 19, 3796.

- Glória, A.; Dionísio, C.; Simões, G.; Cardoso, J.; Sebastião, P. Water Management for Sustainable Irrigation Systems Using Internet-of-Things. Sensors 2020, 20, 1402.

- Kandaswamy, C.; Silva, L.M.; Alexandre, L.A.; Santos, J.M. Deep transfer learning ensemble for classification. In Proceedings of the International Work-Conference on Artificial Neural Networks, Palma de Mallorca, Spain, 10–12 June 2015.

- Deniz, E.; Şengür, A.; Kadiroğlu, Z.; Guo, Y.; Bajaj, V.; Budak, Ü. Transfer learning based histopathologic image classification for breast cancer detection. Health Inf. Sci. Syst. 2018, 6, 18.

- Al-Dhamari, A.; Sudirman, R.; Mahmood, N.H. Transfer Deep Learning Along with Binary Support Vector Machine for Abnormal Behavior Detection. IEEE Access 2020, 8, 61085–61095.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Theckedath, D.; Sedamkar, R. Detecting Affect States Using VGG16, ResNet50 and SE-ResNet50 Networks. SN Comput. Sci. 2020, 1, 1–7.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

| Hardware | Software and Technology |
|---|---|
| Motion detection sensor | Transfer learning |
| Camera sensor | Deep learning |
| Wi-Fi device | TensorFlow tool and Keras library |
| Server station | Visual Studio 2017 |
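
The components listed above suggest a simple event-driven flow: the motion sensor triggers the camera, the image is sent to the server, the classifier labels it, and an alert is raised when a human is recognized. The following is a minimal sketch of one such pass, not the system's actual implementation. The function name `monitor_once`, the `Detection` type, the `"human"` label, and the 0.5 confidence threshold are all hypothetical, and the hardware is represented by plain callables so the logic can be run without devices.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Detection:
    """Classifier output for one image (hypothetical structure)."""
    label: str
    confidence: float


def monitor_once(
    motion_detected: Callable[[], bool],
    capture_image: Callable[[], bytes],
    classify: Callable[[bytes], Detection],
    send_alert: Callable[[Detection], None],
    alert_labels: Sequence[str] = ("human",),  # assumed label name
    threshold: float = 0.5,                    # assumed cut-off
) -> bool:
    """Run one monitoring pass and return True if an alert was sent."""
    # The camera is only used when the motion sensor fires.
    if not motion_detected():
        return False
    image = capture_image()
    result = classify(image)
    # Alert only for labels of interest with sufficient confidence.
    if result.label in alert_labels and result.confidence >= threshold:
        send_alert(result)
        return True
    return False


if __name__ == "__main__":
    alerts = []
    fired = monitor_once(
        motion_detected=lambda: True,
        capture_image=lambda: b"fake-image-bytes",
        classify=lambda img: Detection("human", 0.97),
        send_alert=alerts.append,
    )
    print(fired, alerts)
```

Passing the sensor, camera, classifier, and alert channel as callables keeps the decision logic separate from the hardware, which makes it straightforward to test with stubs as in the usage example.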

| Communication Protocol | Data Rate | Range (m) | Power |
|---|---|---|---|
| 6LoWPAN | 0.3–50 kb/s | 2000–5000 | Low |
| ZigBee 2 | 25 kb/s | 10–100 | Low |
| Wi-Fi | 1–54 Mb/s | 1–100 | Medium |
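
To illustrate how the figures in this table can drive a protocol choice, the sketch below encodes the upper bounds of each row and filters by a required data rate and range. The `Protocol` type and the `candidates` function are hypothetical helpers, and the example requirement of 1000 kb/s for image transfer is an assumption, not a figure from the paper.

```python
from typing import List, NamedTuple


class Protocol(NamedTuple):
    name: str
    max_rate_kbps: float  # upper bound of the data-rate column, in kb/s
    max_range_m: float    # upper bound of the range column, in metres
    power: str


# Upper bounds taken from the table above (1 Mb/s = 1000 kb/s).
PROTOCOLS = [
    Protocol("6LoWPAN", 50, 5000, "Low"),
    Protocol("ZigBee", 25, 100, "Low"),
    Protocol("Wi-Fi", 54_000, 100, "Medium"),
]


def candidates(min_rate_kbps: float, min_range_m: float) -> List[str]:
    """Return the protocols whose best-case rate and range meet the need."""
    return [
        p.name
        for p in PROTOCOLS
        if p.max_rate_kbps >= min_rate_kbps and p.max_range_m >= min_range_m
    ]


if __name__ == "__main__":
    # Assumed requirement: camera images need about 1000 kb/s over 20 m.
    print(candidates(1000, 20))
    # Assumed requirement: low-rate sensor readings over 1000 m.
    print(candidates(10, 1000))
```

Under these assumed requirements only Wi-Fi satisfies the image-transfer case, while the long-range, low-rate case is met only by 6LoWPAN.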

| Specific Parameter | Value/Type |
|---|---|
| Layers | 53 |
| Pretrained Model | ImageNet |
| Input shape | 100, 100, 3 |
| Epochs | 50 |
| Optimizer | SGD (stochastic gradient descent) |
| Loss Function | Categorical cross entropy |
| Activation Function | ReLU (rectified linear unit) |
| Pooling | Global average pooling |
| include_top | False |
| Extra Layer | 3 fully connected layers |
| Activation Function (Last Layer) | Softmax |
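
The parameters above map fairly directly onto the Keras applications API. The sketch below shows one way such a model could be assembled; it is an illustration under stated assumptions rather than the author's code. The number of classes (2), the widths of the hidden layers (256 and 128), the reading of "3 fully connected layers" as two ReLU layers plus the softmax output, and the default SGD learning rate are all assumptions, since the table does not specify them.

```python
# Values from the parameter table, plus clearly marked assumptions.
CONFIG = {
    "input_shape": (100, 100, 3),
    "epochs": 50,
    "pooling": "avg",                # global average pooling
    "include_top": False,
    "loss": "categorical_crossentropy",
    "hidden_units": (256, 128),      # assumption: widths are not given
    "num_classes": 2,                # assumption: class count is not given
}


def build_specialized_resnet50(weights="imagenet"):
    """Build a ResNet50 backbone with three fully connected layers on top.

    Pass weights=None to skip downloading the ImageNet weights.
    """
    import tensorflow as tf  # imported lazily so CONFIG is usable without it

    base = tf.keras.applications.ResNet50(
        include_top=CONFIG["include_top"],
        weights=weights,
        input_shape=CONFIG["input_shape"],
        pooling=CONFIG["pooling"],
    )
    x = base.output
    # Two hidden fully connected layers with ReLU activation (assumed sizes).
    for units in CONFIG["hidden_units"]:
        x = tf.keras.layers.Dense(units, activation="relu")(x)
    # Third fully connected layer: softmax over the classes.
    outputs = tf.keras.layers.Dense(
        CONFIG["num_classes"], activation="softmax"
    )(x)

    model = tf.keras.Model(inputs=base.input, outputs=outputs)
    model.compile(optimizer="sgd", loss=CONFIG["loss"], metrics=["accuracy"])
    return model


if __name__ == "__main__":
    model = build_specialized_resnet50(weights=None)
    model.summary()
```

Training would then follow the usual pattern, for example `model.fit(images, one_hot_labels, epochs=CONFIG["epochs"])`, where `images` and `one_hot_labels` are placeholders for the collected dataset. Whether the backbone layers were frozen during training is not stated in the table, so the sketch leaves them trainable.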

| Network | Accuracy (%) |
|---|---|
| DCNN | 95.00 |
| ResNet50 | 97.00 |
| VGG16 | 98.00 |
| VGG19 | 97.00 |
| Xception | 98.00 |
| Specialized Model | 99.00 |

| Network | Precision (%) | Specificity (%) | Sensitivity (%) | F1-score (%) |
|---|---|---|---|---|
| DCNN | 93.55 | 96.67 | 97.06 | 95.08 |
| ResNet50 | 96.77 | 98.53 | 100 | 98.36 |
| Specialized Model | 100 | 100 | 100 | 100 |
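
Assuming the four metrics in this table follow their standard confusion-matrix definitions, they can be computed as in the sketch below. The function name `binary_metrics` is hypothetical, and the example counts (30 true positives, 1 false positive, 67 true negatives, 0 false negatives) are not reported in the paper; they were chosen only because they reproduce the ResNet50 row.

```python
def _ratio(numerator: float, denominator: float) -> float:
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Return precision, specificity, sensitivity, and F1 as percentages."""
    precision = _ratio(tp, tp + fp)      # correct share of predicted positives
    sensitivity = _ratio(tp, tp + fn)    # recall: share of positives found
    specificity = _ratio(tn, tn + fp)    # share of negatives correctly rejected
    f1 = _ratio(2 * precision * sensitivity, precision + sensitivity)
    return {
        "precision": round(100 * precision, 2),
        "specificity": round(100 * specificity, 2),
        "sensitivity": round(100 * sensitivity, 2),
        "f1": round(100 * f1, 2),
    }


if __name__ == "__main__":
    # Hypothetical counts that reproduce the ResNet50 row of the table.
    print(binary_metrics(tp=30, fp=1, tn=67, fn=0))
    # {'precision': 96.77, 'specificity': 98.53, 'sensitivity': 100.0, 'f1': 98.36}
```

Because F1 is the harmonic mean of precision and sensitivity, it reaches 100 only when both are 100, as in the Specialized Model row.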

| Method | Sensing Method | Mode of Action | System Complexity | Cost Efficiency | Reliability |
|---|---|---|---|---|---|
| Camera-Based Drowning Detection Algorithm [10] | Video sequences | Detection and classification | Moderate | Moderate | High |
| Automated Vision-Based Surveillance System [11] | Video sequences | Detection, rescue, and alarm | High | High | Moderate |
| Motion Detection Using Improved VIBE Algorithm [13] | Video sequences | Detection | Moderate | Moderate | Moderate |
| NEPTUNE [12] | Video sequences of 1 to 5 s | Detection and alarm | High | Moderate | Moderate |
| Ours | Motion-detector sensor, image processing | Detection, recognition, and alert | Low | Moderate | High |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alotaibi, A. Automated and Intelligent System for Monitoring Swimming Pool Safety Based on the IoT and Transfer Learning. Electronics 2020, 9, 2082. https://doi.org/10.3390/electronics9122082
