Stochastic Optimization Methods for Parametric Level Set Reconstructions in 2D through-the-Wall Radar Imaging

Abstract

1. Introduction

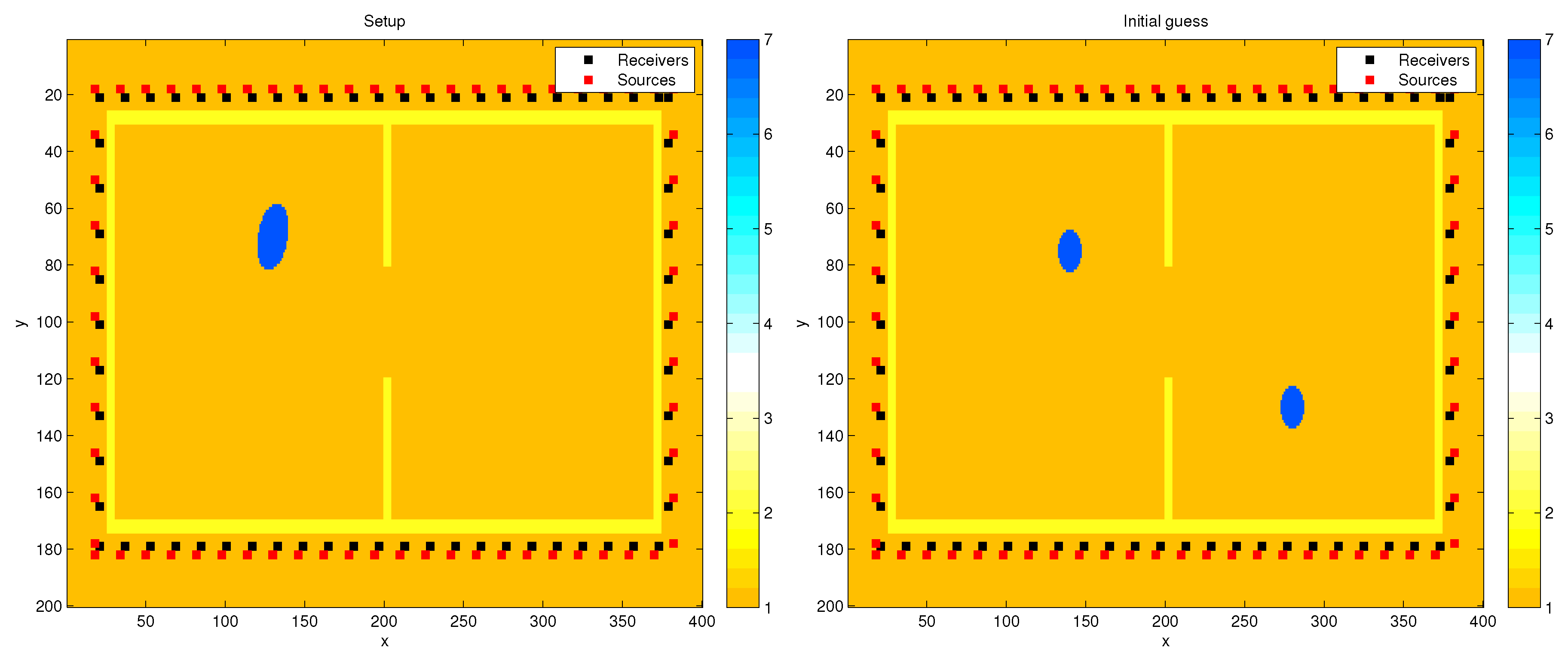

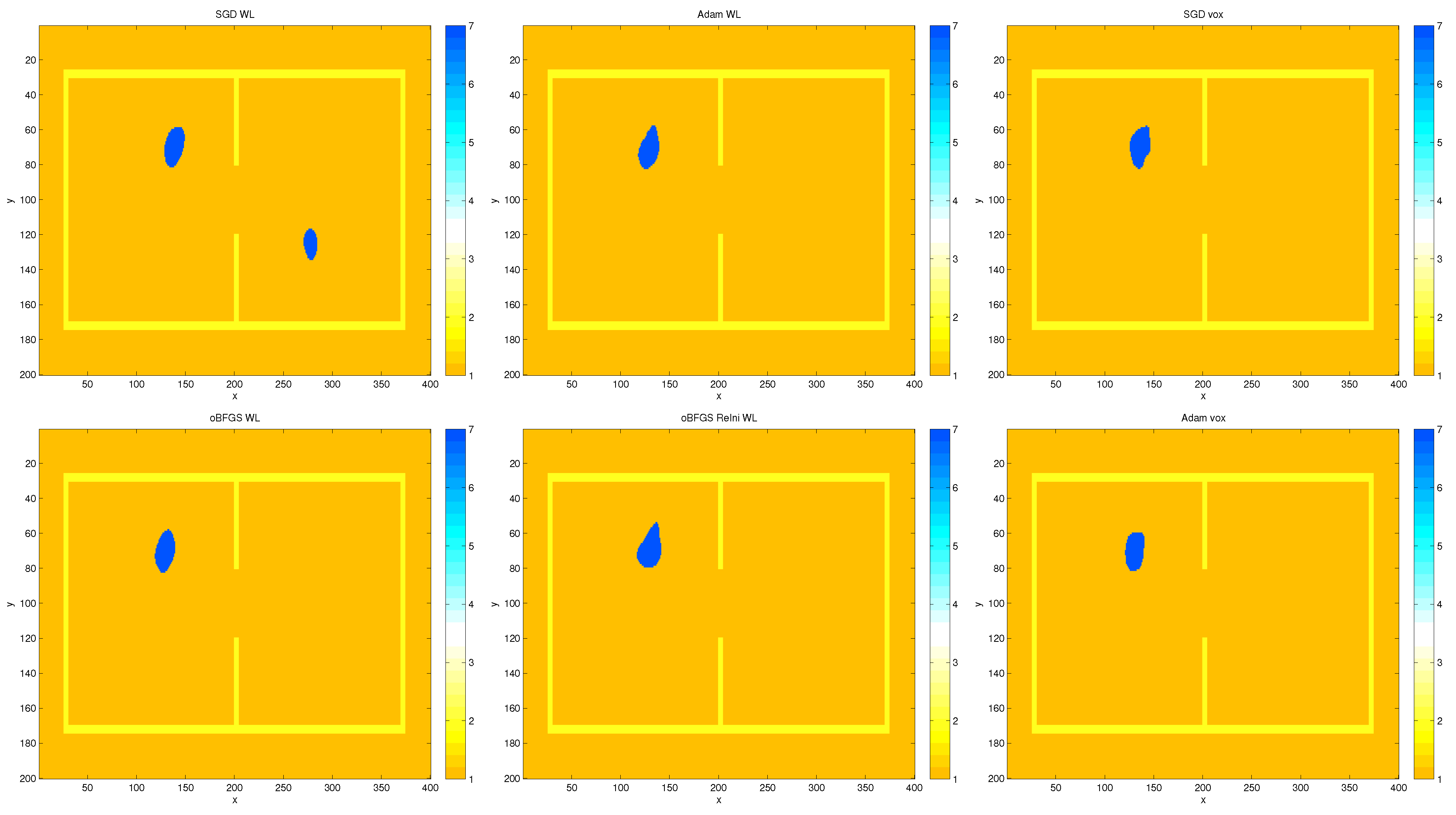

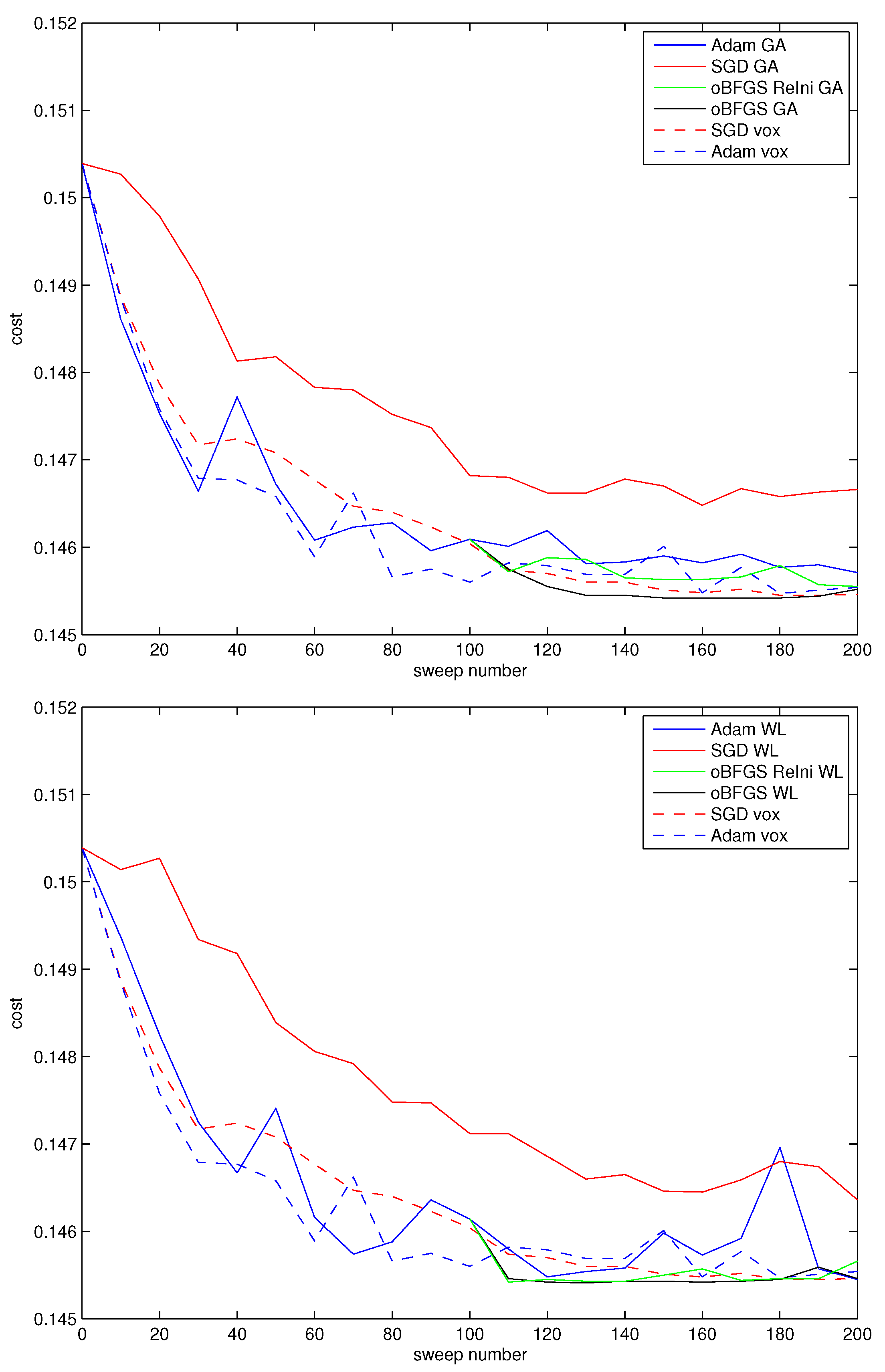

2. Materials and Methods

2.1. The Shape-Based Inverse Problem

2.1.1. The Forward Problem

2.1.2. Level Set Representation of Shapes

2.1.3. Calculation of Descent Directions

2.1.4. Specific Choice of Basis Functions

2.1.5. Shape Evolution Schemes

2.2. Stochastic Optimization Algorithms

2.2.1. A Line Search Scheme for Stochastic Shape Optimization

| Algorithm 1: Shape-based line search scheme. |

| Given the coefficient vector at iteration t and the new update direction |

| Compute the level set function associated with the current coefficient vector (Equation (15)) |

| Compute the permittivity profile associated with this level set function (Equation (8)) |

| Initialize the line search parameter , where |

| Define the parameters such that and |

| Define the interval |

| while () do |

| % trial coefficient vector |

| Compute the corresponding level set function (Equation (15)) |

| Compute the corresponding permittivity profile (Equation (8)) |

| Count the number of pixels where |

| if () then |

| end if |

| if () then |

| end if |

| end while |

| return |
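The idea behind Algorithm 1 can be illustrated with a minimal Python sketch under stated assumptions. The loop is taken to adjust the line search parameter until the number of pixels whose permittivity changes lies in a prescribed range, and the adjustment is done here by bisection, which presumes that the count does not decrease as the parameter grows. The names `basis`, `eps_in`, `eps_bg`, `p_min`, `p_max`, `tau_lo` and `tau_hi`, the linear expansion `phi = basis @ alpha`, the sign convention of the threshold and the bisection rule are all illustrative choices, not taken from the algorithm listing.

```python
import numpy as np

def permittivity(alpha, basis, eps_in=4.0, eps_bg=1.0):
    # Assumed model: level set phi = basis @ alpha, object where phi <= 0.
    phi = basis @ alpha
    return np.where(phi <= 0.0, eps_in, eps_bg)

def count_changed(alpha, direction, tau, basis):
    # Number of pixels whose permittivity differs between current and trial shape.
    eps_old = permittivity(alpha, basis)
    eps_trial = permittivity(alpha + tau * direction, basis)
    return int(np.count_nonzero(eps_trial != eps_old))

def shape_line_search(alpha, direction, basis, p_min, p_max,
                      tau_lo=0.0, tau_hi=1.0, max_iter=50):
    # Bisect tau until the pixel-change count falls inside [p_min, p_max].
    tau = 0.5 * (tau_lo + tau_hi)
    for _ in range(max_iter):
        changed = count_changed(alpha, direction, tau, basis)
        if changed < p_min:      # shape barely moves: try a larger step
            tau_lo = tau
        elif changed > p_max:    # shape moves too much: try a smaller step
            tau_hi = tau
        else:
            break
        tau = 0.5 * (tau_lo + tau_hi)
    return tau

# Toy example (hypothetical): 100 pixels, one coefficient per pixel,
# shape initially empty, update direction pushing every pixel inward.
basis = np.eye(100)
alpha = np.linspace(0.01, 1.0, 100)
direction = -np.ones(100)
tau = shape_line_search(alpha, direction, basis, p_min=5, p_max=10)
changed = count_changed(alpha, direction, tau, basis)
```

Controlling the number of changed pixels, rather than the decrease of a noisy mini-batch cost, keeps the shape evolution steady even when the stochastic gradient estimate varies strongly between iterations.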

2.2.2. A Stochastic Gradient Descent Algorithm

2.2.3. The Adam Algorithm

| Algorithm 2: SGD algorithm. |

| Initialize the parameter vector |

| Initialize the level set function |

| while not converged do |

| Randomly extract a data subset from the dataset |

| Compute the gradient of the cost with respect to the coefficient vector |

| Update the coefficient vector: |

| where the line search parameter is chosen according to the line search Algorithm 1 |

| end while |

| Compute the final level set and the corresponding permittivity profile |
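The structure of Algorithm 2 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: a toy linear least-squares cost stands in for the radar data misfit, and a fixed `step` replaces the shape-based line search of Algorithm 1. The names `A`, `d`, `batch`, `iters` and `seed` are hypothetical.

```python
import numpy as np

def sgd(A, d, alpha0, step=0.05, batch=8, iters=2000, seed=0):
    # Plain stochastic gradient descent on 0.5 * mean((A @ alpha - d)**2).
    rng = np.random.default_rng(seed)
    alpha = np.array(alpha0, dtype=float)
    for _ in range(iters):
        # Randomly extract a data subset from the dataset.
        idx = rng.choice(len(d), size=batch, replace=False)
        # Gradient of the cost restricted to that subset.
        residual = A[idx] @ alpha - d[idx]
        grad = A[idx].T @ residual / batch
        # Update the coefficient vector along the negative gradient.
        alpha -= step * grad
    return alpha

# Toy data (hypothetical): noise-free measurements of a known coefficient vector.
rng = np.random.default_rng(1)
A = rng.normal(size=(64, 4))
alpha_true = np.array([1.0, -2.0, 0.5, 3.0])
d = A @ alpha_true
alpha_hat = sgd(A, d, np.zeros(4))
```

Because the toy data are noise-free, every mini-batch gradient vanishes at the true coefficients, so the iterates settle there; with noisy data a fixed step would leave a residual fluctuation.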

| Algorithm 3: Adam algorithm. |

| Initialize the parameter vector |

| Initialize the level set function |

| Define the exponential decay rates for the moment estimates: |

| Initialize the first moment vector |

| Initialize the second moment vector |

| while not converged do |

| Randomly extract a data subset from the dataset |

| Compute the gradient of the cost with respect to the coefficient vector |

| Update the first moment vector |

| Update the second moment vector using the elementwise square of the gradient |

| Rescale the first moment vector |

| Rescale the second moment vector |

| Update the coefficient vector: , where |

| and the line search parameter is chosen according to the line search Algorithm 1 |

| end while |

| Compute the final level set and the corresponding permittivity profile |
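The moment updates of Algorithm 3 can be sketched in Python using the standard Adam recursions of Kingma and Ba. This is a hedged illustration only: the default values of `beta1`, `beta2` and `eps` are the commonly used ones and are not taken from the listing, a fixed `step` replaces the line search of Algorithm 1, and the least-squares cost is a toy stand-in for the radar data misfit.

```python
import numpy as np

def adam_step(alpha, grad, m, v, t, step=0.01,
              beta1=0.9, beta2=0.999, eps=1e-8):
    # Update the first and second moment vectors (exponential moving averages).
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad**2   # elementwise square of the gradient
    # Rescale both moments to remove the bias from the zero initialization.
    m_hat = m / (1.0 - beta1**t)
    v_hat = v / (1.0 - beta2**t)
    # Update the coefficient vector along the rescaled direction.
    alpha = alpha - step * m_hat / (np.sqrt(v_hat) + eps)
    return alpha, m, v

# First step from zero moments: each component moves by about `step`
# against the sign of its gradient.
g0 = np.array([2.0, -0.5])
alpha1, m1, v1 = adam_step(np.zeros(2), g0, np.zeros(2), np.zeros(2), t=1)

# Toy stochastic loop (hypothetical data).
rng = np.random.default_rng(0)
A = rng.normal(size=(64, 4))
alpha_true = np.array([1.0, -2.0, 0.5, 3.0])
d = A @ alpha_true
alpha, m, v = np.zeros(4), np.zeros(4), np.zeros(4)
for t in range(1, 3001):
    idx = rng.choice(64, size=8, replace=False)
    grad = A[idx].T @ (A[idx] @ alpha - d[idx]) / 8
    alpha, m, v = adam_step(alpha, grad, m, v, t)
```

The division by the square root of the second moment normalizes each coefficient's update, which is why the first step has nearly the same magnitude in every component regardless of the gradient scale.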

2.2.4. The Online BFGS Algorithm

| Algorithm 4: oBFGS algorithm. |

| Initialize the parameter vector |

| Initialize the level set function |

| Initialize the parameters: , , |

| Initialize the inverse Hessian approximation of the cost: |

| while not converged do |

| Randomly extract a data subset from the dataset |

| Update the coefficient vector: , where |

| with the line search parameter chosen according to the line search Algorithm 1 |

| if (t=0) then |

| end if |

| end while |

| Compute the final level set and the corresponding permittivity profile |
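One iteration of an online BFGS scheme can be sketched in Python, following the update as formulated by Schraudolph, Yu and Günter for oBFGS. This is an illustration under assumptions, not the authors' code: the parameter names `eta`, `c` and `lam`, the initial scaling `1e-3`, and the toy quadratic cost are hypothetical, and a fixed `eta` replaces the line search of Algorithm 1.

```python
import numpy as np

def batch_grad(alpha, A, d):
    # Gradient of the toy cost 0.5 * mean((A @ alpha - d)**2) on one data subset.
    return A.T @ (A @ alpha - d) / len(d)

def obfgs_update(B, s, y, c=1.0, first=False):
    # BFGS update of the inverse Hessian approximation B.
    sy = float(s @ y)
    if first:
        # On the first iteration, rescale the initial guess.
        B = (sy / float(y @ y)) * np.eye(len(s))
    rho = 1.0 / sy
    V = np.eye(len(s)) - rho * np.outer(s, y)
    return V @ B @ V.T + c * rho * np.outer(s, s)

def obfgs_step(alpha, B, A, d, eta=0.1, c=1.0, lam=0.0, first=False):
    g = batch_grad(alpha, A, d)
    s = -(eta / c) * (B @ g)            # quasi-Newton step
    alpha_new = alpha + s
    # Gradient difference evaluated on the SAME data subset.
    y = batch_grad(alpha_new, A, d) - g + lam * s
    return alpha_new, obfgs_update(B, s, y, c, first), s, y

# One step on hypothetical toy data.
rng = np.random.default_rng(0)
A = rng.normal(size=(16, 4))
d = A @ np.array([1.0, -2.0, 0.5, 3.0])
alpha1, B1, s0, y0 = obfgs_step(np.zeros(4), 1e-3 * np.eye(4), A, d, first=True)
```

Evaluating both gradients on the same data subset keeps the curvature estimate `y` free of sampling noise between batches; with `c = 1` the updated matrix satisfies the secant condition `B1 @ y0 == s0` up to rounding.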

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Incorvaia, G.; Dorn, O. Stochastic Optimization Methods for Parametric Level Set Reconstructions in 2D through-the-Wall Radar Imaging. Electronics 2020, 9, 2055. https://doi.org/10.3390/electronics9122055
