Bouncer: A Resource-Aware Admission Control Scheme for Cloud Services

Abstract

1. Introduction

2. Related Work

3. Background and Challenges

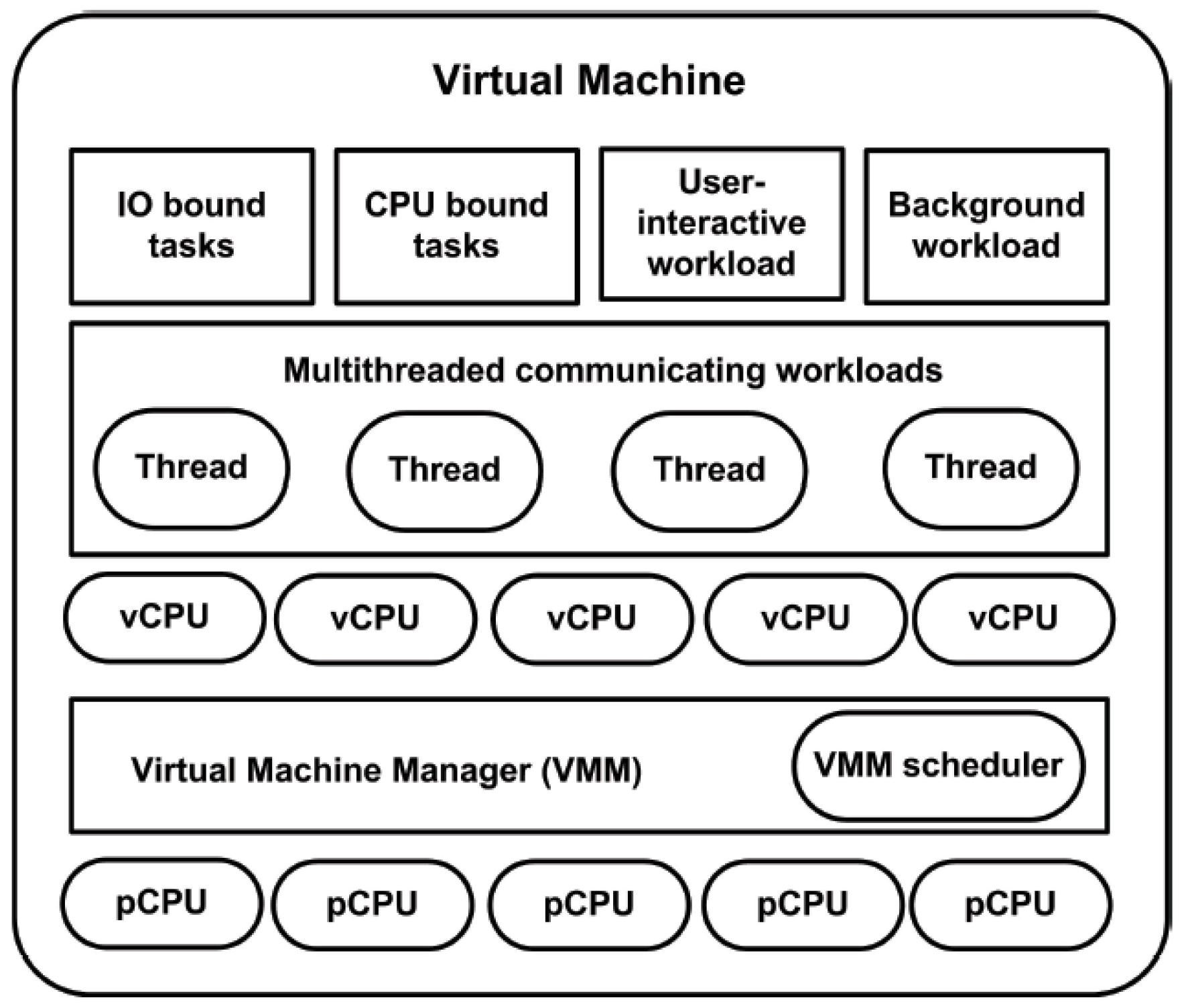

3.1. Workload Admission in Virtualized Environments

- Allowing the efficient use of resources.

- Initiating support for upcoming requests.

- Assigning requests their respective resource requirements.

- Mapping various user requests with different QoS parameters to VMs.

3.2. Admission Control Challenges in System Virtualization

- Resource contention: Virtualized services and environments must continually verify that enough resources are available to satisfy a VM-level reservation (without interrupting VM kernel operations) as well as the VM-level reservations of the other VMs running on that host.

- Resource pre-emption: To maximize profits, service providers are keen to accept as many services as possible. This creates a need to determine how many requests a resource provider can accept while keeping QoS violations to a minimum. Providing these services requires efficient mechanisms, including pre-emption-aware schemes.

- Oversubscription: While oversubscription can leverage underutilized capacity in the cloud, it can also lead to overload. Workload-admission control avoids oversubscription effects by minimizing congestion, packet loss, and possible degradation of the user service experience.

- Overhead and overbooking: VM contention issues on shared computing resources in data centers bring noticeable performance overheads and can affect the VM performance for tenants. Efficient resource consolidation strategies can address these issues by using various overhead mitigation techniques; a number of these are presented in [37].
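The resource-contention and oversubscription items above can be illustrated with a small reservation check. This is a minimal sketch under stated assumptions, not the paper's implementation: the `Host` class, its fields, and the `oversubscription_ratio` parameter are hypothetical names introduced here, and only a single CPU dimension is modelled.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Hypothetical host model: capacity plus the reservations already granted."""
    cpu_capacity: float                                # total CPU units on the host
    reservations: list = field(default_factory=list)  # CPU reserved per running VM

    def reserved(self) -> float:
        """Sum of all CPU reservations currently held on this host."""
        return sum(self.reservations)


def can_admit(host: Host, requested_cpu: float,
              oversubscription_ratio: float = 1.0) -> bool:
    """Return True if the new reservation fits next to the existing ones.

    A ratio above 1.0 lets the host promise more than it physically has,
    which uses idle capacity but risks overload (an assumed tuning knob).
    """
    limit = host.cpu_capacity * oversubscription_ratio
    return host.reserved() + requested_cpu <= limit


def admit(host: Host, requested_cpu: float,
          oversubscription_ratio: float = 1.0) -> bool:
    """Record the reservation only when the contention check passes."""
    if can_admit(host, requested_cpu, oversubscription_ratio):
        host.reservations.append(requested_cpu)
        return True
    return False
```

With an 8-unit host, a 6-unit reservation is accepted and a further 4-unit request is refused at a ratio of 1.0; the same request passes at a ratio of 1.5, which is the trade-off the oversubscription item describes.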

4. System Design

4.1. Design Considerations

- Prioritization of services: The prioritization of services minimizes or eliminates the need for a detailed and well-rehearsed plan. In order to enable the prioritization of services, Bouncer enforces prioritization levels at the workload-admission control and scheduling phases. This classification does not violate QoS rules and is also in line with the optimization requirements of the system.

- Capacity Awareness: To avoid overloading, Bouncer uses service profiling through SDN-based control (implemented during evaluation). This allows a system to define and extend its process capacity to avoid any service degradation.

- Coordination of functions: Admission-control systems should be based on the controlled time-sharing principle implemented on VMs [41]. The fundamental reason is that time-sharing, if controlled properly, allows an elastic response to a wide variety of disturbances affecting workload performance. In Bouncer, we therefore assume that the time requested by services is accurate.
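The idea of enforcing prioritization levels at admission time can be sketched with a priority queue. The class below is illustrative only: `PriorityAdmissionQueue`, its method names, and the convention that a lower number means a higher priority are assumptions of this sketch and are not taken from Bouncer.

```python
import heapq
import itertools


class PriorityAdmissionQueue:
    """Hypothetical queue that releases requests by priority, FIFO within a level."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker that preserves arrival order

    def submit(self, name: str, priority: int) -> None:
        # Lower number = higher priority (an assumption of this sketch).
        heapq.heappush(self._heap, (priority, next(self._counter), name))

    def admit_next(self, free_slots: int) -> list:
        """Admit up to free_slots requests, highest priority first."""
        admitted = []
        while self._heap and len(admitted) < free_slots:
            _, _, name = heapq.heappop(self._heap)
            admitted.append(name)
        return admitted
```

The `free_slots` argument stands in for the capacity-awareness step: the caller passes however many requests the system can currently absorb, and lower-priority requests simply stay queued.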

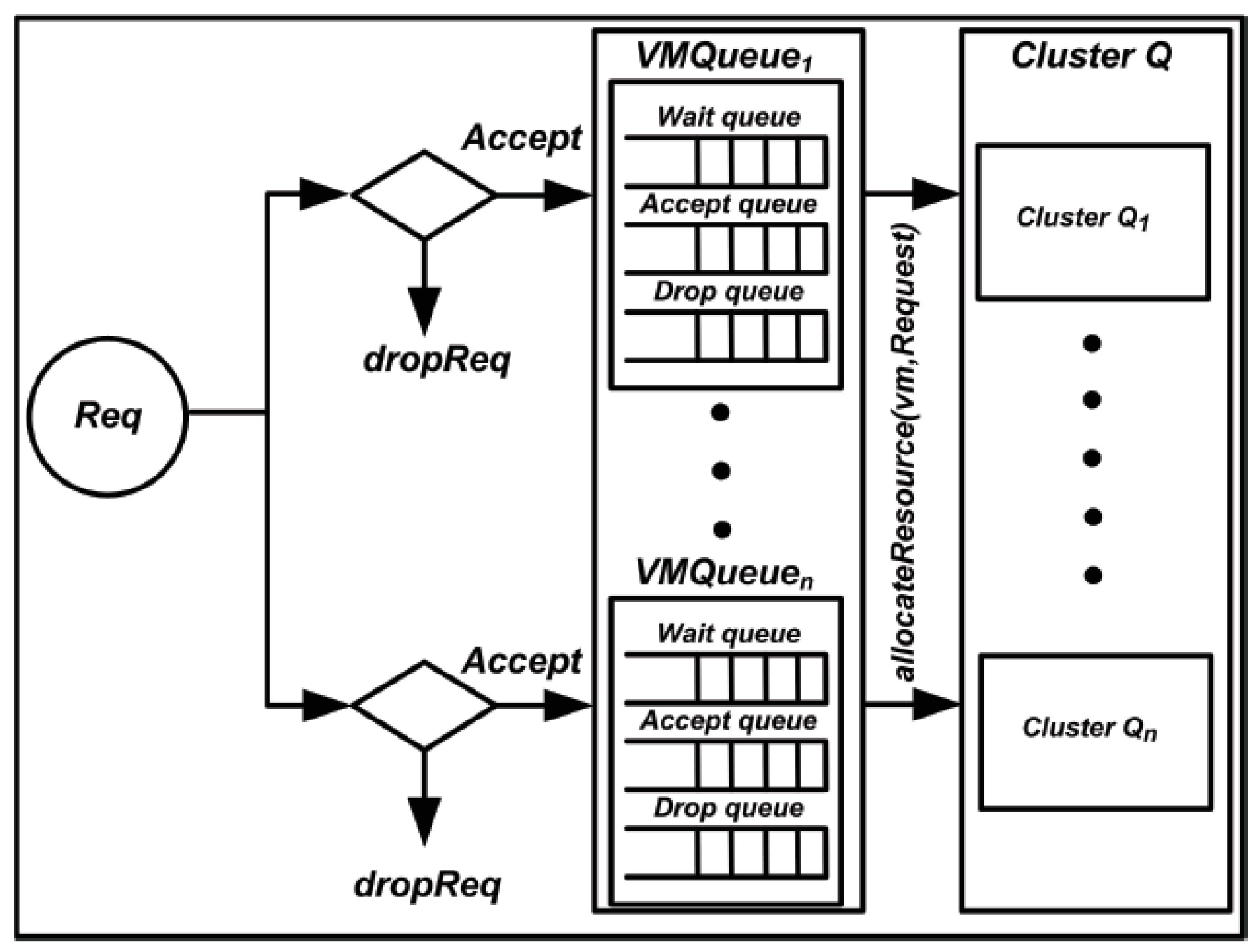

4.2. Workload Distribution and Overload Avoidance Policy for Workload-Admission Control

- By mapping the requests to their resource usage using Equation (1), which provides a clear description of all the services and the resources they occupy.

- By analyzing Rt for individual requests and ensuring, using Equation (2), that the results do not exceed Wthreshold, which helps to minimize the deadline violations of the requests.
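The two steps above can be sketched in code. Equations (1) and (2) are not reproduced in this text, so the sketch does not implement them; it only shows the general shape of a per-service usage map and a response-time check against a threshold. All function names and the tuple layout are hypothetical.

```python
from collections import defaultdict


def map_resource_usage(requests):
    """Aggregate usage per service from (service, cpu, memory) tuples."""
    usage = defaultdict(lambda: {"cpu": 0.0, "memory": 0.0})
    for service, cpu, memory in requests:
        usage[service]["cpu"] += cpu
        usage[service]["memory"] += memory
    return dict(usage)


def within_threshold(response_time, w_threshold):
    """True when a request's response time Rt does not exceed Wthreshold."""
    return response_time <= w_threshold


def split_by_threshold(response_times, w_threshold):
    """Partition request ids into those meeting and those exceeding the threshold."""
    ok, late = [], []
    for req_id, rt in response_times.items():
        (ok if within_threshold(rt, w_threshold) else late).append(req_id)
    return ok, late
```

Requests that land in the `late` list are the candidates for deadline violations, so an admission controller would defer or reject them rather than forward them.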

| | Algorithm 1. Workload distribution and overload avoidance policy. |
|---|---|
| 1: | Input: reqQueue: the queue of requesting services; vmQueue: the list of available VMs; reqResource: the resources required to execute a VM request; vmResource: the cumulative available VM resources; waitQueue: the waiting queue of applications that could not be satisfied. |
| 2: | Output: Capacity-aware admission control and forwarding decision |
| 3: | /* We implement 3 major conditions to be satisfied before an application can be allocated to a VM. The wait and drop queues hold the pending and rejected applications */ |
| 4: | while reqQueue != NULL do |
| 5: | request ← DeQueue(reqQueue); |
| 6: | if vmQueue == NULL then |
| 7: | dropReq(request); |
| 8: | continue; |
| 9: | end if |
| 10: | while vmQueue != NULL do |
| 11: | vm ← getVM(vmQueue); |
| 12: | /* We consider the required resources of a VM as a composite of CPU, memory, and storage resources. The VM must ensure that it has enough resources to handle the resource demands */ |
| 13: | if vmResource[vm] ≥ reqResource[request] then |
| 14: | allocateResource(vm, request); |
| 15: | DeQueue(vmQueue, vm); |
| 16: | /* Traverse the VM queue and consider the next resource demand */ |
| 17: | break; |
| 18: | end if |
| 19: | vmQueue ← vmQueue.next; |
| 20: | /* If none of the available VMs can satisfy the service requirements, append the request to the waiting queue */ |
| 21: | if vmQueue == NULL then |
| 22: | EnQueue(waitQueue, request); |
| 23: | end if |
| 24: | end while |
| 25: | end while |
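One way to read Algorithm 1 is as a first-fit scan over the available VMs. The sketch below follows that reading; it is an interpretation, not the authors' code. The `Resources` class, the `admit_requests` function, and the choice to return the three outcomes as a tuple are assumptions made here, and the linked-list traversal of the listing is replaced by a scan over a dictionary.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Resources:
    """Composite demand or capacity: CPU, memory, and storage."""
    cpu: float
    memory: float
    storage: float

    def covers(self, need: "Resources") -> bool:
        """True when every dimension of `need` fits within this capacity."""
        return (self.cpu >= need.cpu and self.memory >= need.memory
                and self.storage >= need.storage)


def admit_requests(req_queue, vm_pool):
    """Process requests in arrival order.

    req_queue: deque of (request_id, Resources)
    vm_pool:   dict mapping vm_id -> free Resources
    Returns (allocations, wait_queue, dropped).
    """
    allocations, wait_queue, dropped = {}, deque(), []
    available = dict(vm_pool)  # work on a copy so the caller's pool is untouched
    while req_queue:
        request_id, need = req_queue.popleft()
        if not available:
            # No VM left at all: reject the request (steps 6 to 8).
            dropped.append(request_id)
            continue
        # First VM whose free resources cover the demand (steps 10 to 13).
        chosen = next((vm for vm, free in available.items() if free.covers(need)), None)
        if chosen is None:
            # VMs exist but none is large enough: park the request (steps 21 to 22).
            wait_queue.append(request_id)
        else:
            allocations[request_id] = chosen
            del available[chosen]  # the VM leaves the queue once allocated (step 15)
    return allocations, wait_queue, dropped
```

As in the listing, a VM is removed from the pool once it has been allocated, so each VM serves at most one request per pass; requests arriving after the pool is empty are dropped rather than queued.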

5. Performance Evaluation and Results

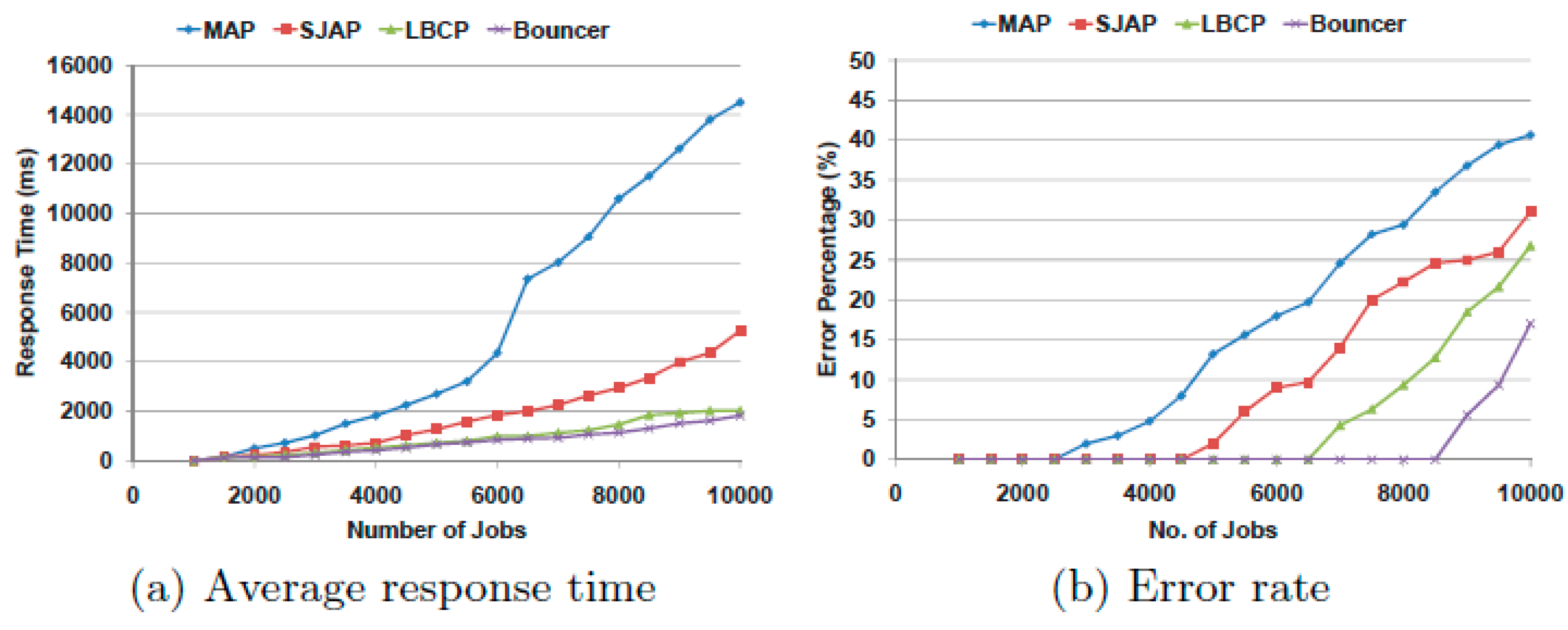

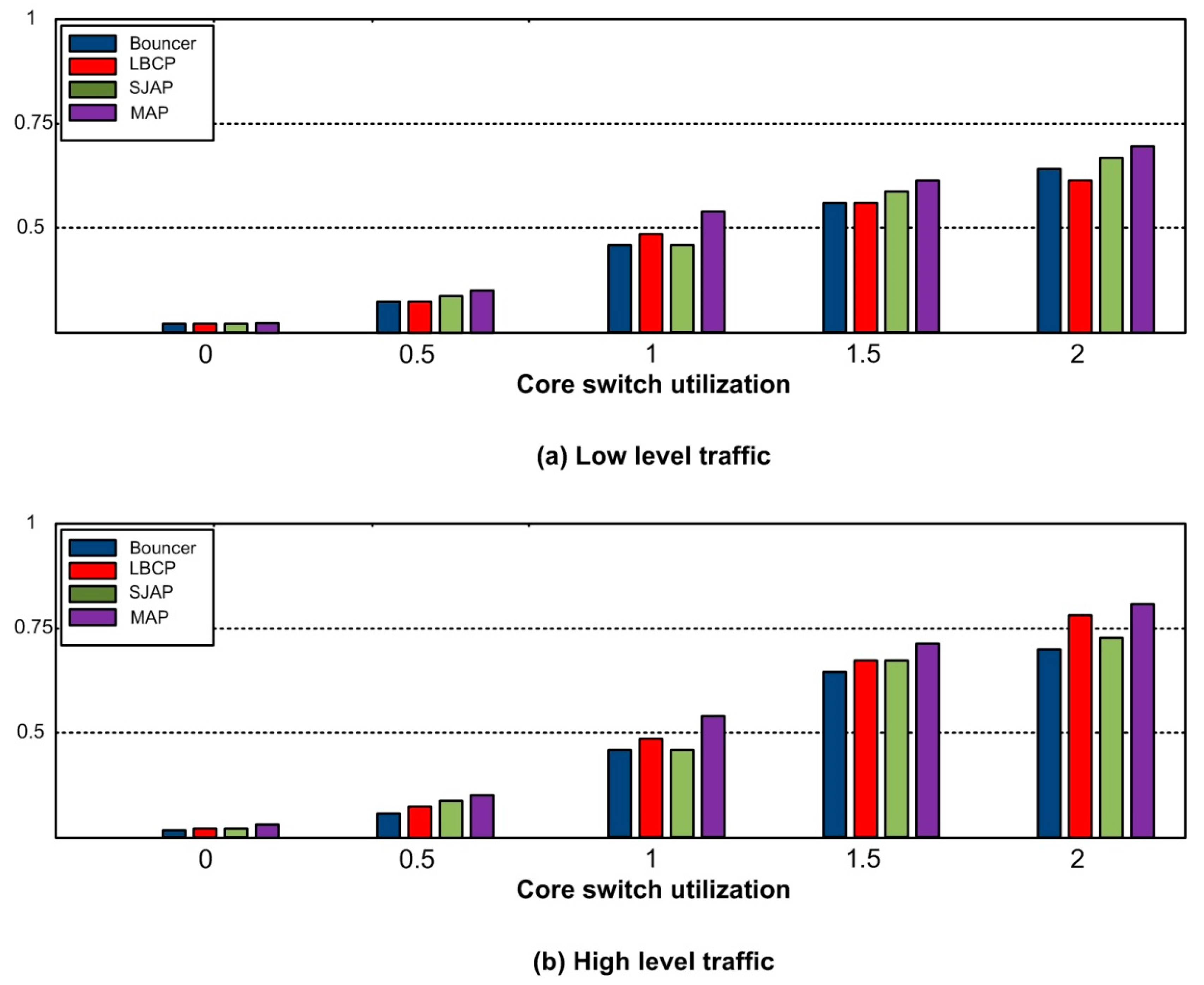

5.1. Baseline Strategy

- Maximal Admittance Policy (MAP): This strategy admits all incoming requests without filtering them. Its objective is to maximize the resource admittance rate in a system. The acceptance queue for this strategy is theoretically unbounded, but in our experiments we limited it to 2500 requesting jobs in the job queue. This policy is similar to the High Availability Admission Control Setting and Policy [44], often used in conventional SLAs.

- Smart Job Admission Policy (SJAP): In SJAP, we consider a scenario where the request time Rt for a job Qi is measured on the basis of the waiting processes Qinprocess. To be selected for admission to the SJAP queue, a request must therefore satisfy the condition Rt > Qinprocess. The policy is similar to [45], except that it does not perform slicing for function isolation.

- Load Balancing and Control Policy (LBCP): LBCP identifies incoming requests based on their impact on system resources by performing isolation on individual requests. The policy is a limited version of the load-balancing policy presented in [46].
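The admission conditions of the first two baselines are simple enough to write as predicates. This is a minimal sketch: the function and constant names are hypothetical, the 2500-job cap and the Rt > Qinprocess condition come from the descriptions above, and LBCP is omitted because the text does not specify its rule in enough detail.

```python
MAP_QUEUE_LIMIT = 2500  # experimental cap on the job queue stated in the text


def map_admit(queue_length: int, limit: int = MAP_QUEUE_LIMIT) -> bool:
    """Maximal Admittance Policy: accept every request while the queue has room."""
    return queue_length < limit


def sjap_admit(request_time: float, in_process_wait: float) -> bool:
    """Smart Job Admission Policy: accept when Rt > Qinprocess, as stated above."""
    return request_time > in_process_wait
```

MAP never inspects the request itself, whereas SJAP compares each request against the current in-process load, which is the distinction the baseline comparison relies on.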

5.2. Characteristics of the Proposed Methodology

5.3. Testbed and Topology

5.4. Results and Discussion

6. Conclusion and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Xu, M.; Buyya, R. Brownout approach for adaptive management of resources and applications in cloud computing systems: A taxonomy and future directions. ACM Comput. Surv. (CSUR) 2019, 52, 8.

- Zahavi, E.; Shpiner, A.; Rottenstreich, O.; Kolodny, A.; Keslassy, I. Links as a Service (LaaS): Guaranteed tenant isolation in the shared cloud. IEEE J. Sel. Areas Commun. 2019, 37, 1072–1084.

- Wang, S.; Zhao, Y.; Xu, J.; Yuan, J.; Hsu, C.H. Edge server placement in mobile edge computing. J. Parallel Distrib. Comput. 2019, 127, 160–168.

- Lu, Q.; Xu, X.; Liu, Y.; Weber, I.; Zhu, L.; Zhang, W. uBaaS: A unified blockchain as a service platform. Future Gener. Comput. Syst. 2019, 101, 564–575.

- Abbasi, A.A.; Abbasi, A.; Shamshirband, S.; Chronopoulous, A.T.; Persico, V.; Pescapè, A. Software-Defined Cloud Computing: A Systematic Review on Latest Trends and Developments. IEEE Access 2019, 7, 93294–93314.

- Leontiou, N.; Dechouniotis, D.; Denazis, S.; Papavassiliou, S. A hierarchical control framework of load balancing and resource allocation of cloud computing services. Comput. Electr. Eng. 2018, 67, 235–251.

- Sikora, T.D.; Magoulas, G.D. Neural adaptive admission control framework: SLA-driven action termination for real-time application service management. Enterp. Inf. Syst. 2019, 1–41.

- Ari, A.A.A.; Damakoa, I.; Titouna, C.; Labraoui, N.; Gueroui, A. Efficient and Scalable ACO-Based Task Scheduling for Green Cloud Computing Environment. In Proceedings of the 2017 IEEE International Conference on Smart Cloud (SmartCloud), New York, NY, USA, 3–5 November 2017; pp. 66–71.

- Nayak, B.; Padhi, S.K.; Pattnaik, P.K. Static Task Scheduling Heuristic Approach in Cloud Computing Environment. In Information Systems. Design and Intelligent Applications; Springer: Singapore, 2019; pp. 473–480.

- Jlassi, S.; Mammar, A.; Abbassi, I.; Graiet, M. Towards correct cloud resource allocation in FOSS applications. Future Gener. Comput. Syst. 2019, 91, 392–406.

- Zhou, H.; Ouyang, X.; Ren, Z.; Su, J.; de Laat, C.; Zhao, Z. A Blockchain Based Witness Model for Trustworthy Cloud Service Level Agreement Enforcement. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 1567–1575.

- Jin, H.; Abbasi, A.A.; Wu, S. Pathfinder: Application-aware distributed path computation in clouds. Int. J. Parallel Program. 2017, 45, 1273–1284.

- Abbasi, A.; Jin, H. v-Mapper: An Application-Aware Resource Consolidation Scheme for Cloud Data Centers. Future Internet 2018, 10, 90.

- Shen, D.; Junzhou, L.; Dong, F.; Jin, J.; Zhang, J.; Shen, J. Facilitating Application-aware Bandwidth Allocation in the Cloud with One-step-ahead Traffic Information. IEEE Trans. Serv. Comput. 2019.

- Wei, X.; Tang, C.; Fan, J.; Subramaniam, S. Joint Optimization of Energy Consumption and Delay in Cloud-to-Thing Continuum. IEEE Internet Things J. 2019, 6, 2325–2337.

- Sun, H.; Yu, H.; Fan, G.; Chen, L. Energy and time efficient task offloading and resource allocation on the generic IoT-fog-cloud architecture. Peer Peer Netw. Appl. 2019, 1–16.

- Liu, C.F.; Bennis, M.; Debbah, M.; Poor, H.V. Dynamic task offloading and resource allocation for ultra-reliable low-latency edge computing. IEEE Trans. Commun. 2019, 67, 4132–4150.

- Mou, D.; Li, W.; Li, J. A network revenue management model with capacity allocation and overbooking. Soft Comput. 2019, 1–10.

- Chen, M.; Li, W.; Fortino, G.; Hao, Y.; Hu, L.; Humar, I. A Dynamic Service Migration Mechanism in Edge Cognitive Computing. ACM Trans. Internet Technol. (TOIT) 2019, 19, 30.

- Calzarossa, M.C.; Della Vedova, M.L.; Tessera, D. A methodological framework for cloud resource provisioning and scheduling of data parallel applications under uncertainty. Future Gener. Comput. Syst. 2019, 93, 212–223.

- Zhang, Y.; Zhang, L.; Du, L.; Liu, C.; Chen, Y. Distributed energy-efficient target tracking algorithm based on event-triggered strategy for sensor networks. IET Control. Theory Appl. 2019, 13, 1564–1570.

- Gavvala, S.K.; Jatoth, C.; Gangadharan, G.R.; Buyya, R. QoS-aware cloud service composition using eagle strategy. Future Gener. Comput. Syst. 2019, 90, 273–290.

- Abbasi, A.A.; Jin, H.; Wu, S. A software-Defined Cloud Resource Management Framework. In Asia-Pacific Services Computing Conference; Springer: Cham, Switzerland, 2015; pp. 61–75.

- Zhang, J.; Peng, C.; Xie, X.; Yue, D. Output Feedback Stabilization of Networked Control Systems Under a Stochastic Scheduling Protocol. IEEE Trans. Cybern. 2019.

- Tan, Z.; Qu, H.; Zhao, J.; Ren, G.; Wang, W. Low-complexity networking based on joint energy efficiency in ultradense mmWave backhaul networks. Trans. Emerg. Telecommun. Technol. 2019, 30, e3508.

- Harishankar, M.; Pilaka, S.; Sharma, P.; Srinivasan, N.; Joe-Wong, C.; Tague, P. Procuring Spontaneous Session-Level Resource Guarantees for Real-Time Applications: An Auction Approach. IEEE J. Sel. Areas Commun. 2019, 37, 1534–1548.

- Bega, D.; Gramaglia, M.; Banchs, A.; Sciancalepore, V.; Costa-Perez, X. A machine learning approach to 5G infrastructure market optimization. IEEE Trans. Mobile Comput. 2019.

- Momenzadeh, Z.; Safi-Esfahani, F. Workflow scheduling applying adaptable and dynamic fragmentation (WSADF) based on runtime conditions in cloud computing. Future Gener. Comput. Syst. 2019, 90, 327–346.

- Gürsu, H.M.; Vilgelm, M.; Alba, A.M.; Berioli, M.; Kellerer, W. Admission Control Based Traffic-Agnostic Delay-Constrained Random Access (AC/DC-RA) for M2M Communication. IEEE Trans. Wirel. Commun. 2019, 18, 2858–2871.

- Al-qaness, M.A.A.; Elaziz, A.A.; Kim, S.; Ewees, A.A.; Abbasi, A.A.; Alhaj, Y.A.; Hawbani, A. Channel State Information from Pure Communication to Sense and Track Human Motion: A Survey. Sensors 2019, 19, 3329.

- Nam, J.; Jo, H.; Kim, Y.; Porras, P.; Yegneswaran, V.; Shin, S. Operator-Defined Reconfigurable Network OS for Software-Defined Networks. IEEE/ACM Trans. Netw. 2019, 27, 1206–1219.

- Avgeris, M.; Dechouniotis, D.; Athanasopoulos, N.; Papavassiliou, S. Adaptive resource allocation for computation offloading: A control-theoretic approach. ACM Trans. Internet Technol. (TOIT) 2019, 19, 23.

- Chen, G.; Zhang, Y.; Shi, Y.; Zeng, Q. Joint resource allocation and admission control mechanism in software defined mobile networks. China Commun. 2019, 16, 33–45.

- Taleb, T.; Afolabi, I.; Samdanis, K.; Yousaf, F.Z. On Multi-domain Network Slicing Orchestration Architecture and Federated Resource Control. IEEE Netw. 2019.

- Abeni, L.; Biondi, A.; Bini, E. Hierarchical scheduling of real-time tasks over Linux-based virtual machines. J. Syst. Softw. 2019, 149, 234–249.

- Kyung, Y.; Park, J. Prioritized admission control with load distribution over multiple controllers for scalable SDN-based mobile networks. Wirel. Netw. 2019, 25, 2963–2976.

- Bhushan, K.; Gupta, B.B. Network flow analysis for detection and mitigation of Fraudulent Resource Consumption (FRC) attacks in multimedia cloud computing. Multimed. Tools Appl. 2019, 78, 4267–4298.

- Qin, Y.; Guo, D.; Luo, L.; Cheng, G.; Ding, Z. Design and optimization of VLC based small-world data centers. Front. Comput. Sci. 2019, 13, 1034–1047.

- Wang, S.; Li, X.; Qian, Z.; Yuan, J. Distancer: A Host-Based Distributed Adaptive Load Balancer for Datacenter Traffic. In International Conference on Algorithms and Architectures for Parallel Processing; Springer: Cham, Switzerland, 2008; pp. 567–581.

- Shen, H.; Chen, L. Resource demand misalignment: An important factor to consider for reducing resource over-provisioning in cloud datacenters. IEEE/ACM Trans. Netw. 2018, 26, 1207–1221.

- Caballero, P.; Banchs, A.; de Veciana, G.; Costa-Pérez, X.; Azcorra, A. Network slicing for guaranteed rate services: Admission control and resource allocation games. IEEE Trans. Wirel. Commun. 2018, 17, 6419–6432.

- Martini, B.; Gharbaoui, M.; Adami, D.; Castoldi, P.; Giordano, S. Experimenting SDN and Cloud Orchestration in Virtualized Testing Facilities: Performance Results and Comparison. IEEE Trans. Netw. Serv. Manag. 2019.

- Massaro, A.; de Pellegrini, F.; Maggi, L. Optimal Trunk-Reservation by Policy Learning. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 127–135.

- Rothenberg, C.E.; Roos, A. A review of policy-based resource and admission control functions in evolving access and next generation networks. J. Netw. Syst. Manag. 2008, 16, 14–45.

- Han, B.; Sciancalepore, V.; Feng, D.; Costa-Perez, X.; Schotten, H.D. A Utility-Driven Multi-Queue Admission Control Solution for Network Slicing. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 55–63.

- Lin, Y.-D.; Wang, C.C.; Lu, Y.; Lai, Y.; Yang, H.-S. Two-tier dynamic load balancing in SDN-enabled Wi-Fi networks. Wirel. Netw. 2018, 24, 2811–2823.

- Gao, K.; Xu, C.; Qin, J.; Zhong, L.; Muntean, G.M. A Stochastic Optimal Scheduler for Multipath TCP in Software Defined Wireless Network. In Proceedings of the ICC 2019-2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6.

- Ferdouse, L.; Anpalagan, A.; Erkucuk, S. Joint Communication and Computing Resource Allocation in 5G Cloud Radio Access Networks. IEEE Trans. Veh. Technol. 2019.

- Zhang, W.; Sun, H.; Zhao, D.; Xu, L.; Liu, X.; Zhou, J.; Ning, H.; Guo, Y.; Yang, S. A Streaming Cloud Platform for Real-Time Video Processing on Embedded Devices. IEEE Trans. Cloud Comput. 2019.

- Bhimani, J.; Mi, N.; Leeser, M.; Yang, Z. New Performance Modeling Methods for Parallel Data Processing Applications. ACM Trans. Model. Comput. Simul. (TOMACS) 2019, 29, 15.

- Kiss, T.; DesLauriers, J.; Gesmier, G.; Terstyanszky, G.; Pierantoni, G.; Oun, O.A.; Taylor, S.J.; Anagnostou, A.; Kovacs, J. A cloud-agnostic queuing system to support the implementation of deadline-based application execution policies. Future Gener. Comput. Syst. 2019, 101, 99–111.

| Variable | Description |
|---|---|
| r,t | Row vectors |
| T | Total number of available compute nodes |
| Tc | Cumulative tasks |
| Rc | Resource utilization |
| Pt | Number of permissible tasks |
| Rt (c) | Total response time |
| Wt (Qinprogress) | Waiting time of in-process requests of cluster Q |
| Wt (Qwaiting) | Waiting time of waiting requests of cluster Q |
| Wt (Qthreshold) | Threshold value for waiting time of cluster Q |

| CPU Utilization | 10% | 20% | 30% | 40% | 50% |
|---|---|---|---|---|---|
| Bouncer | 2.93 | 3.45 | 3.90 | 4.22 | 4.53 |
| Load Balancing and Control Policy (LBCP) | 2.68 | 3.12 | 3.87 | 4.14 | 4.36 |
| Smart Job Admission Policy (SJAP) | 3.16 | 3.58 | 3.98 | 4.29 | 4.83 |
| Maximal Admittance Policy (MAP) | 3.46 | 3.83 | 4.32 | 4.65 | 5.23 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Abbasi, A.A.; Al-qaness, M.A.A.; Elaziz, M.A.; Khalil, H.A.; Kim, S. Bouncer: A Resource-Aware Admission Control Scheme for Cloud Services. Electronics 2019, 8, 928. https://doi.org/10.3390/electronics8090928
