Smartphone-Based Context Flow Recognition for Outdoor Parking System with Machine Learning Approaches

Abstract

1. Introduction

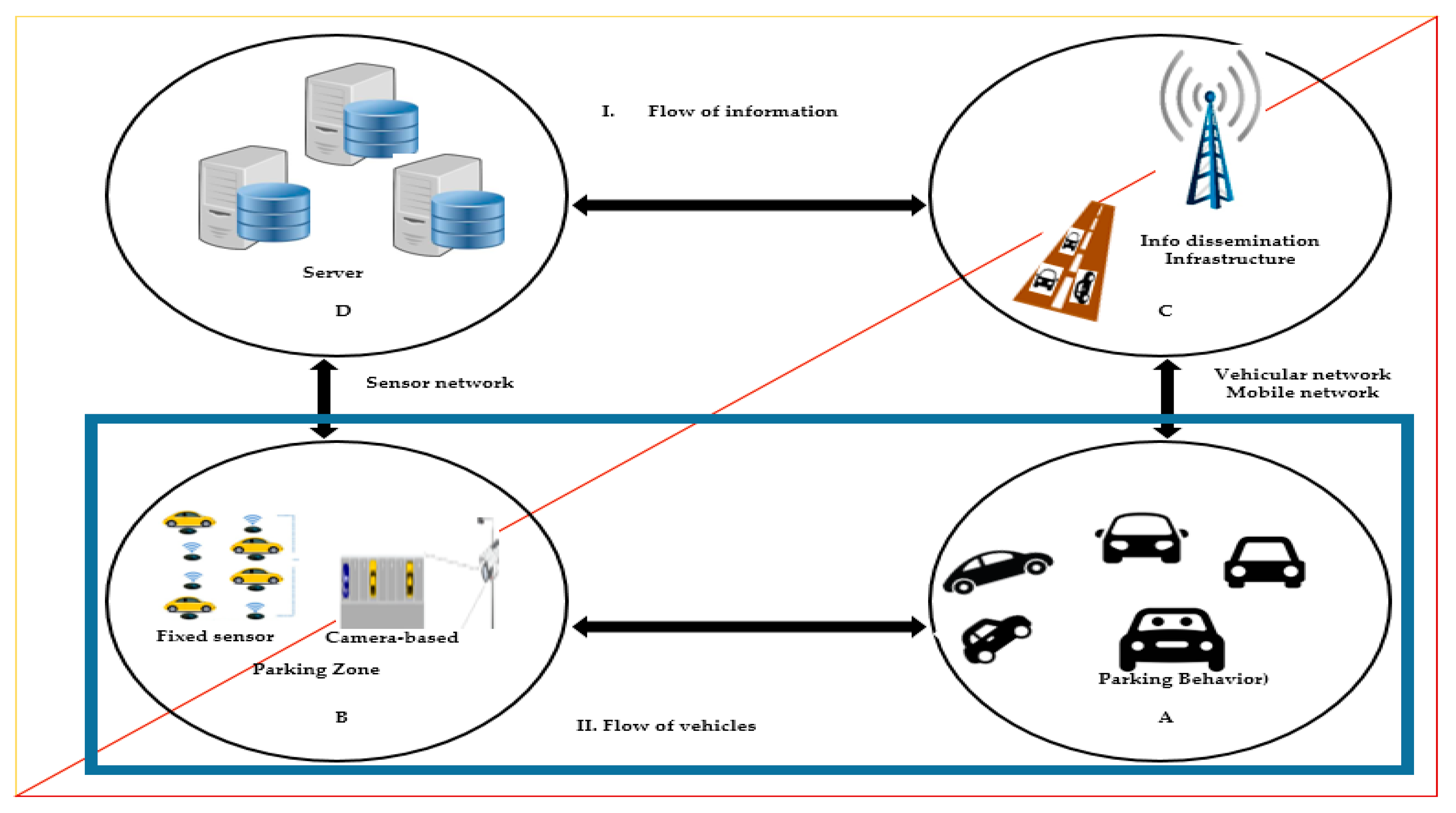

2. Literature Review

2.1. Vision-Based Parking Systems

2.2. Sensor-Based Parking Systems

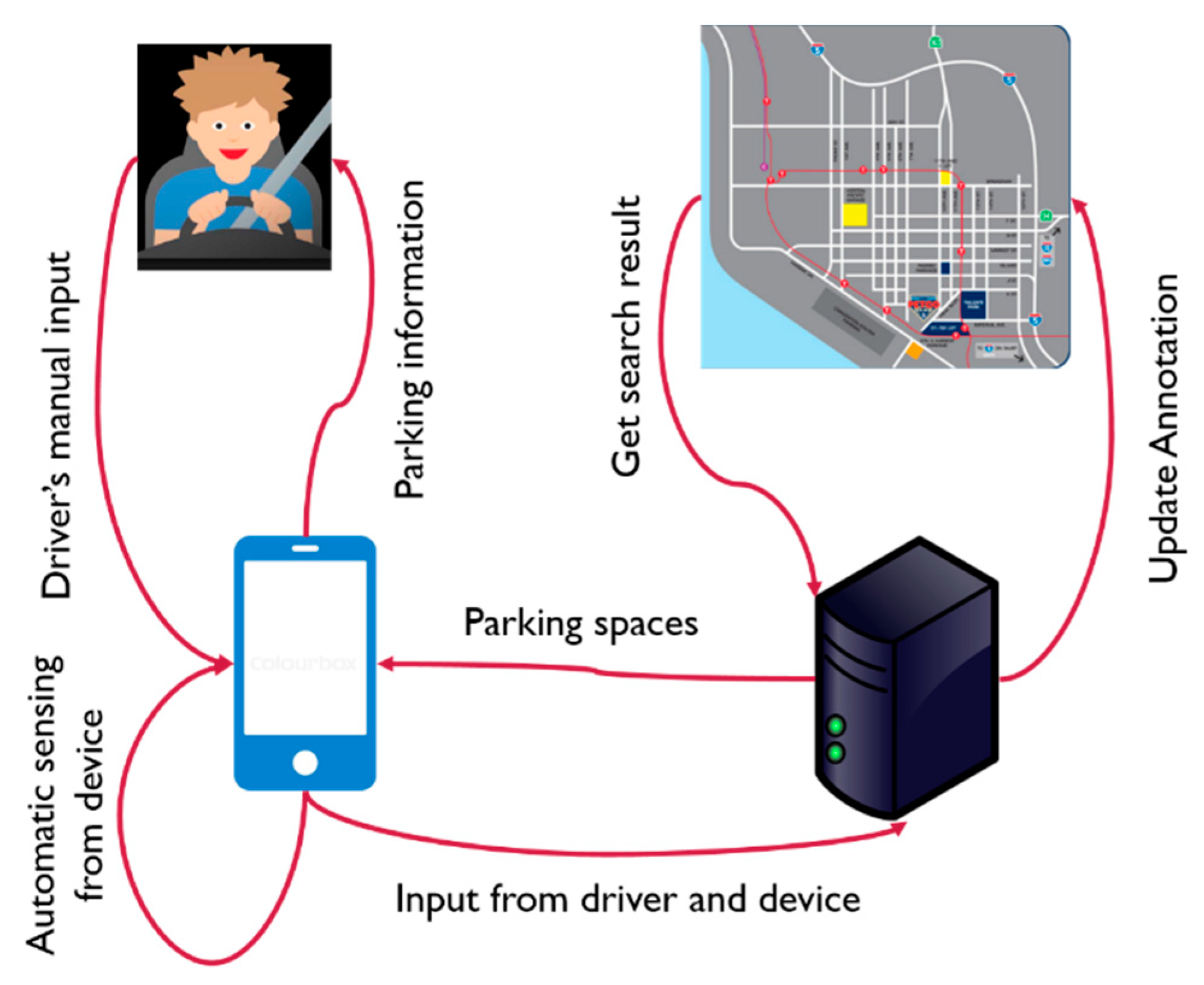

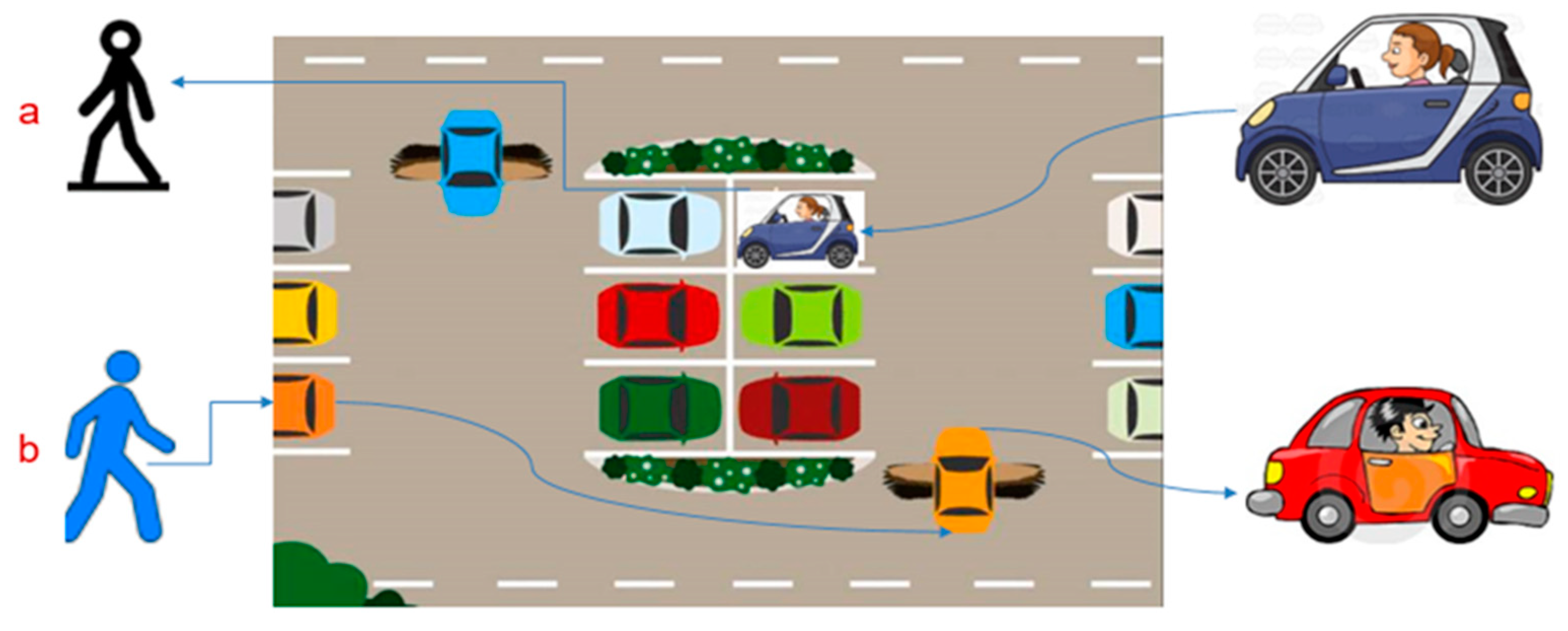

2.3. Smartphone-Based Parking Systems

2.4. Summary of Existing Works

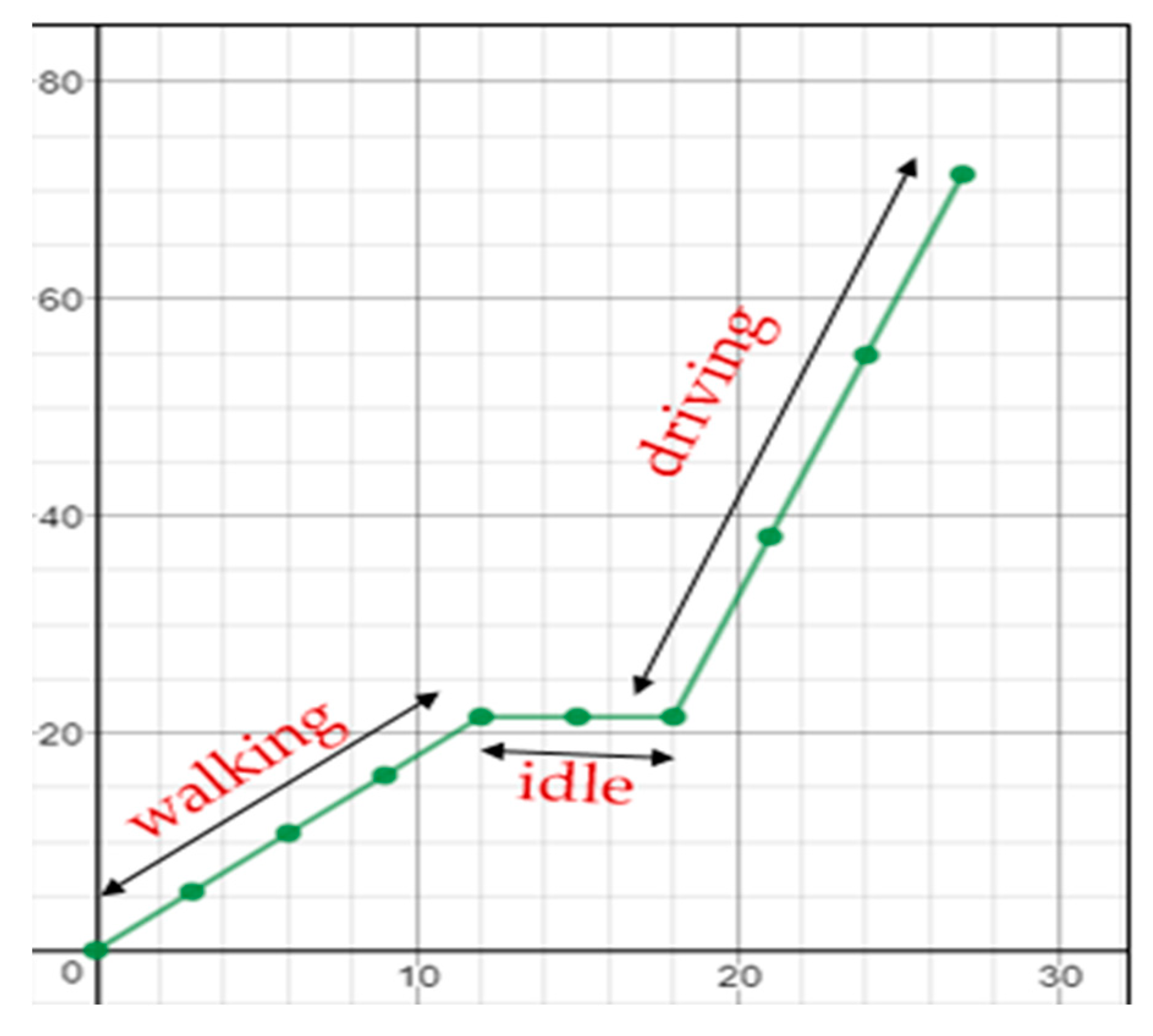

3. Theoretical Idea of the Proposed Approach

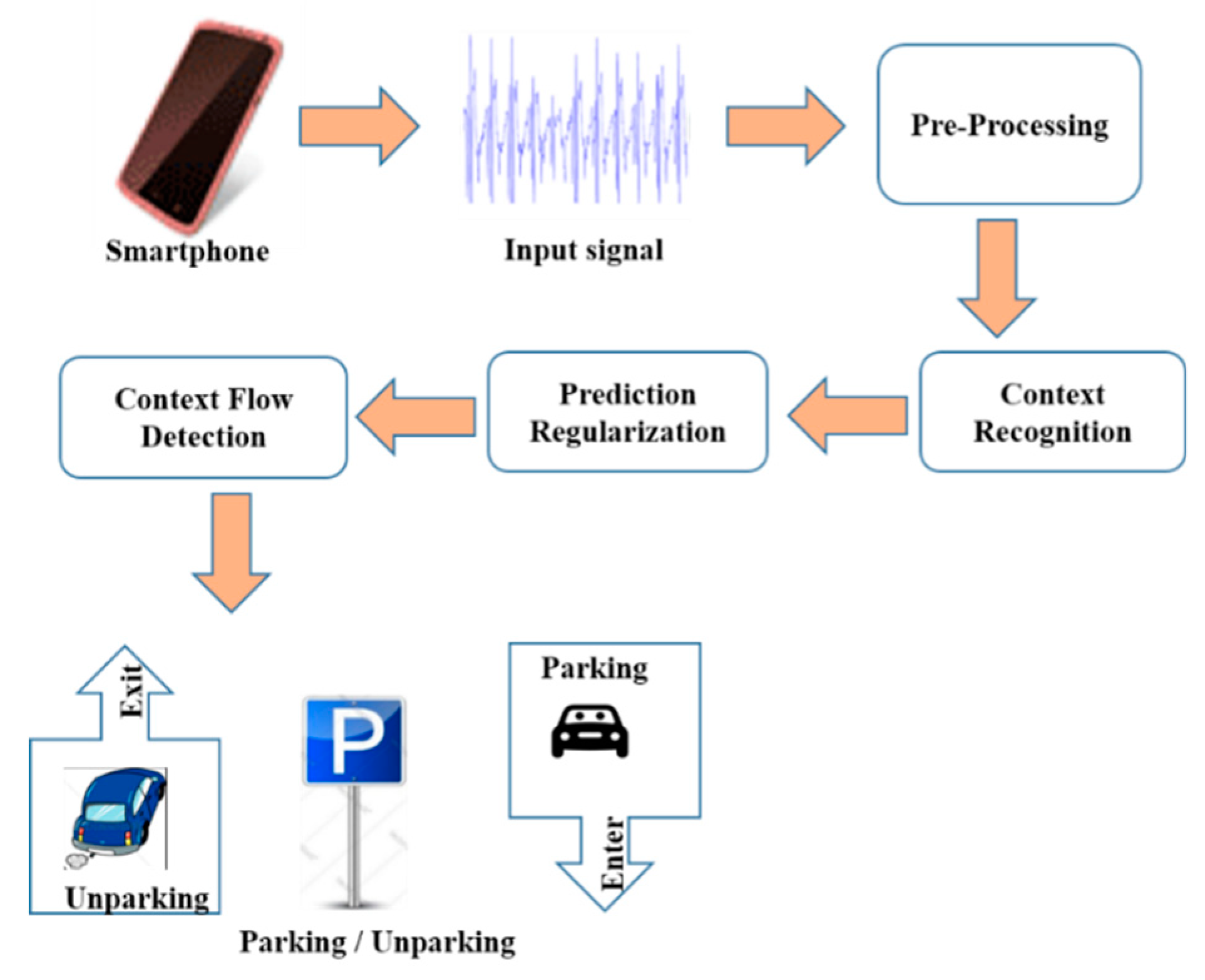

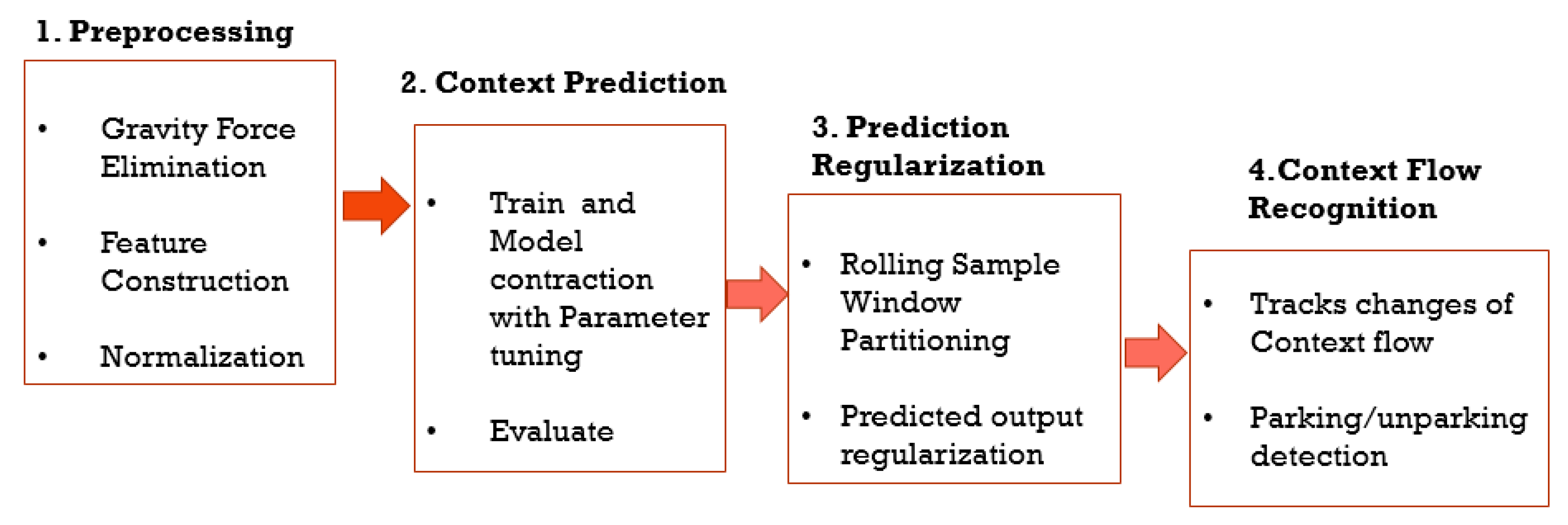

4. Proposed Method

4.1. Pre-Processing

4.1.1. Gravity Force Elimination

4.1.2. Feature Construction

4.1.3. Normalization

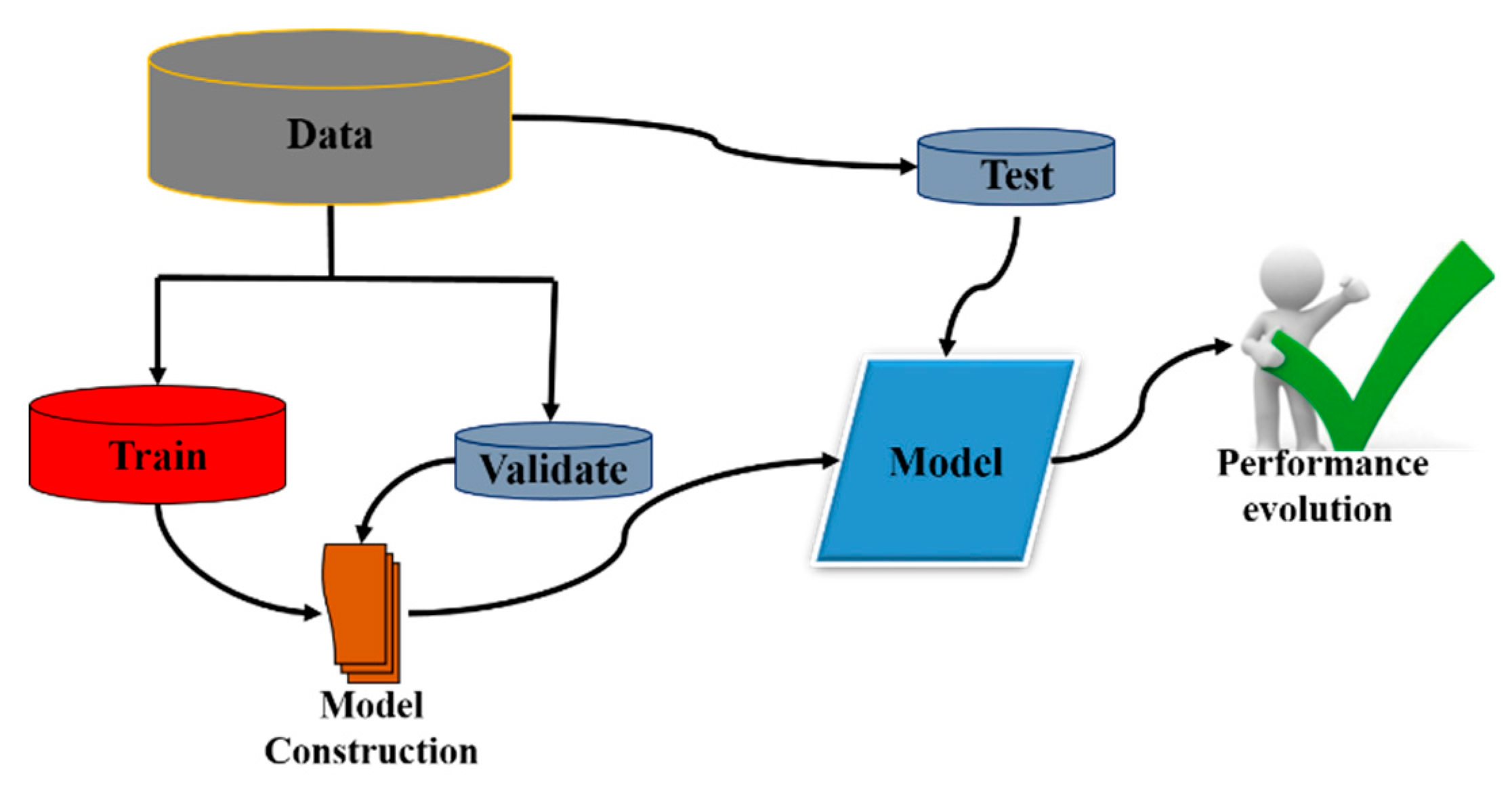

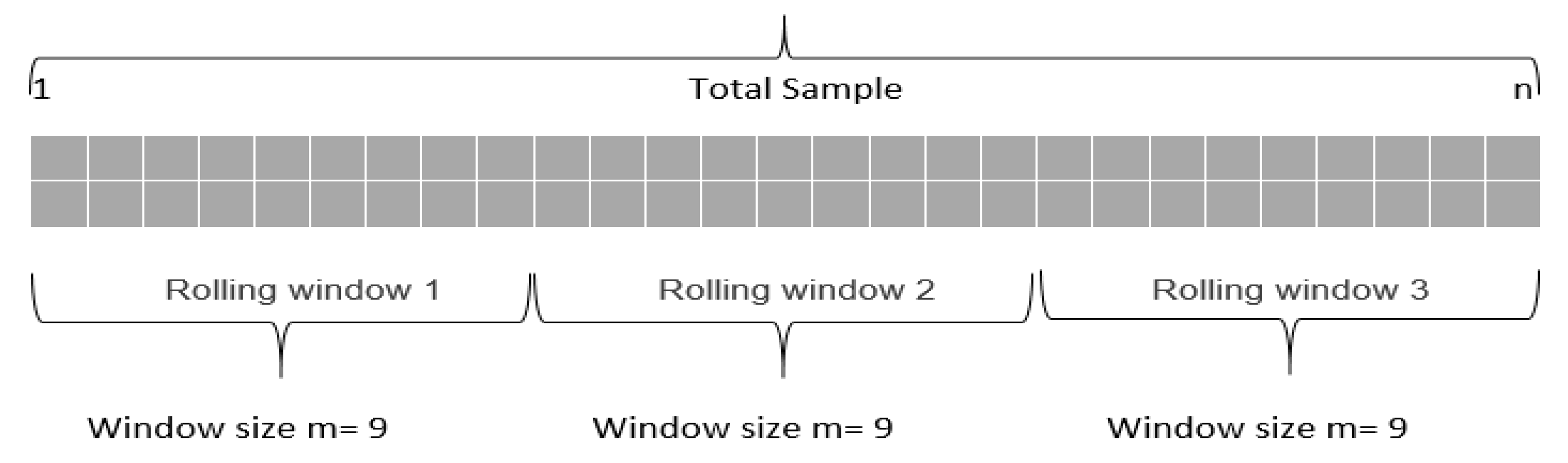

4.2. Context Prediction (CP)

4.3. Prediction Regularization (PR)

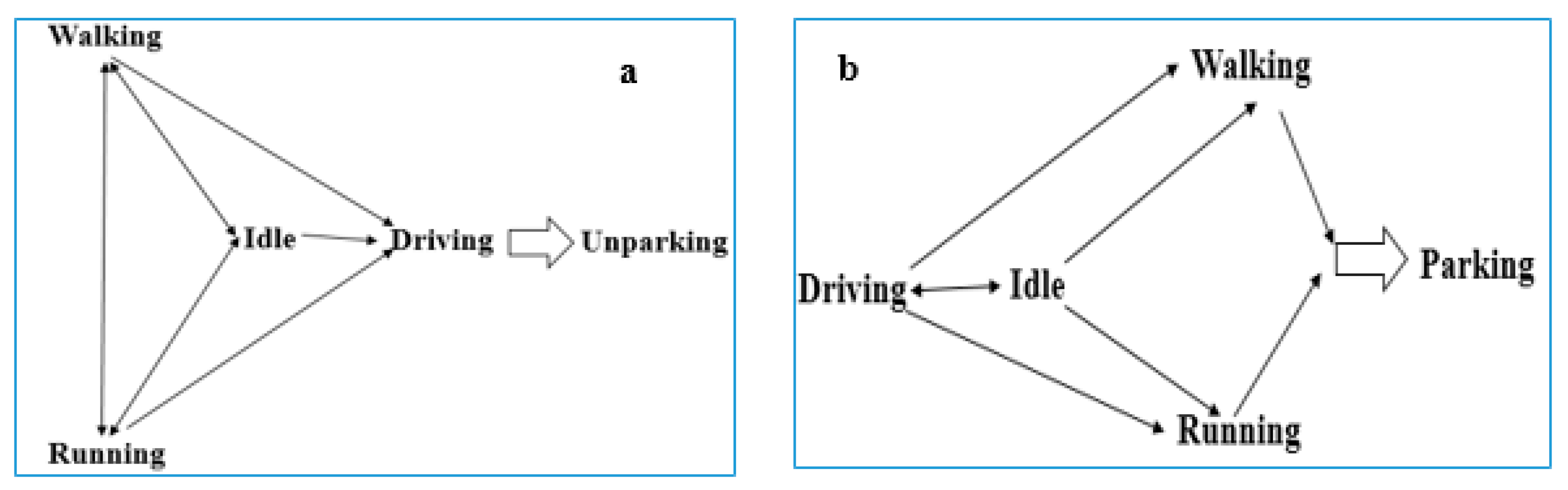

4.4. Context Flow Recognition

5. Results and Discussions

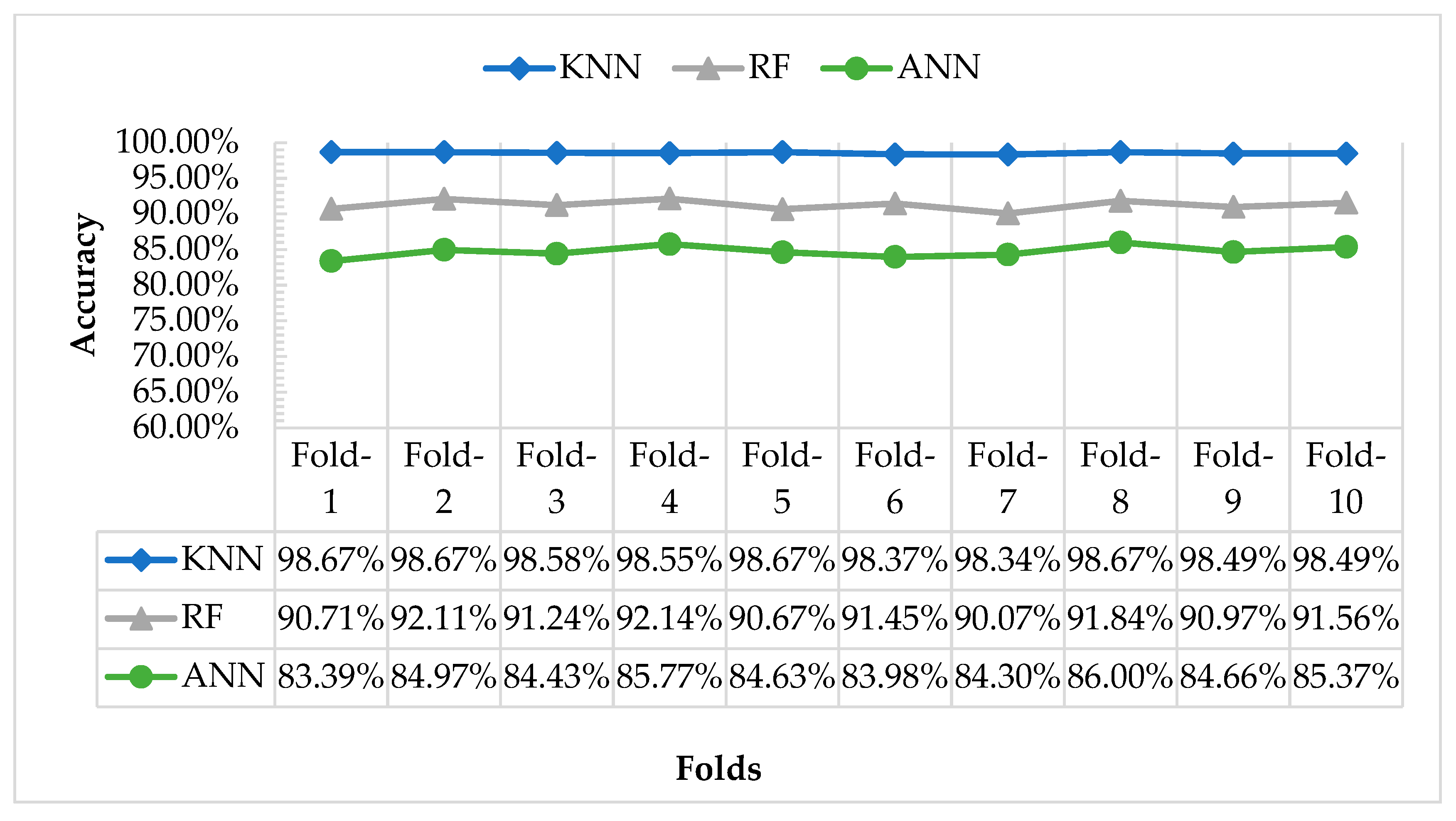

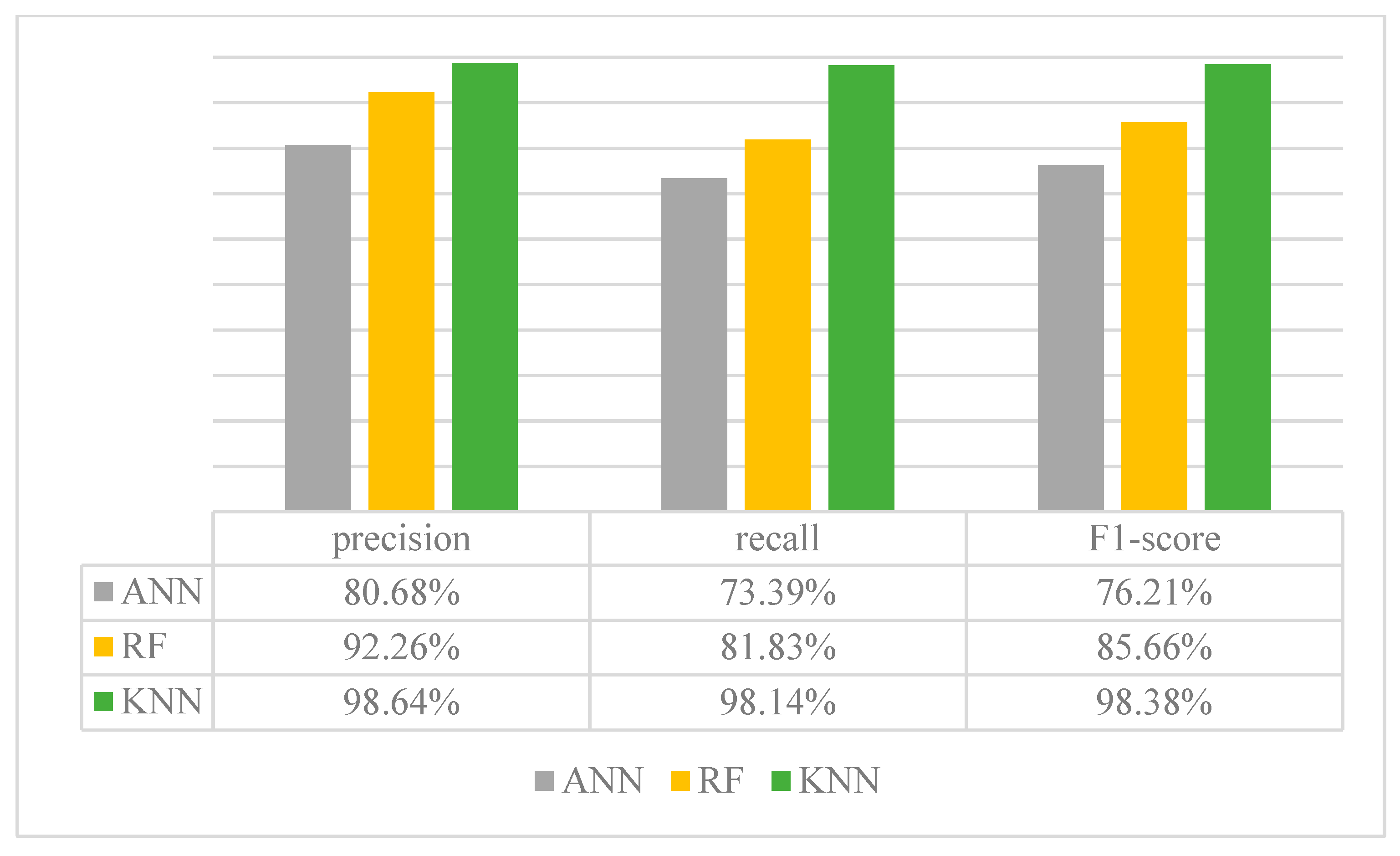

5.1. Experiment on Context Recognition

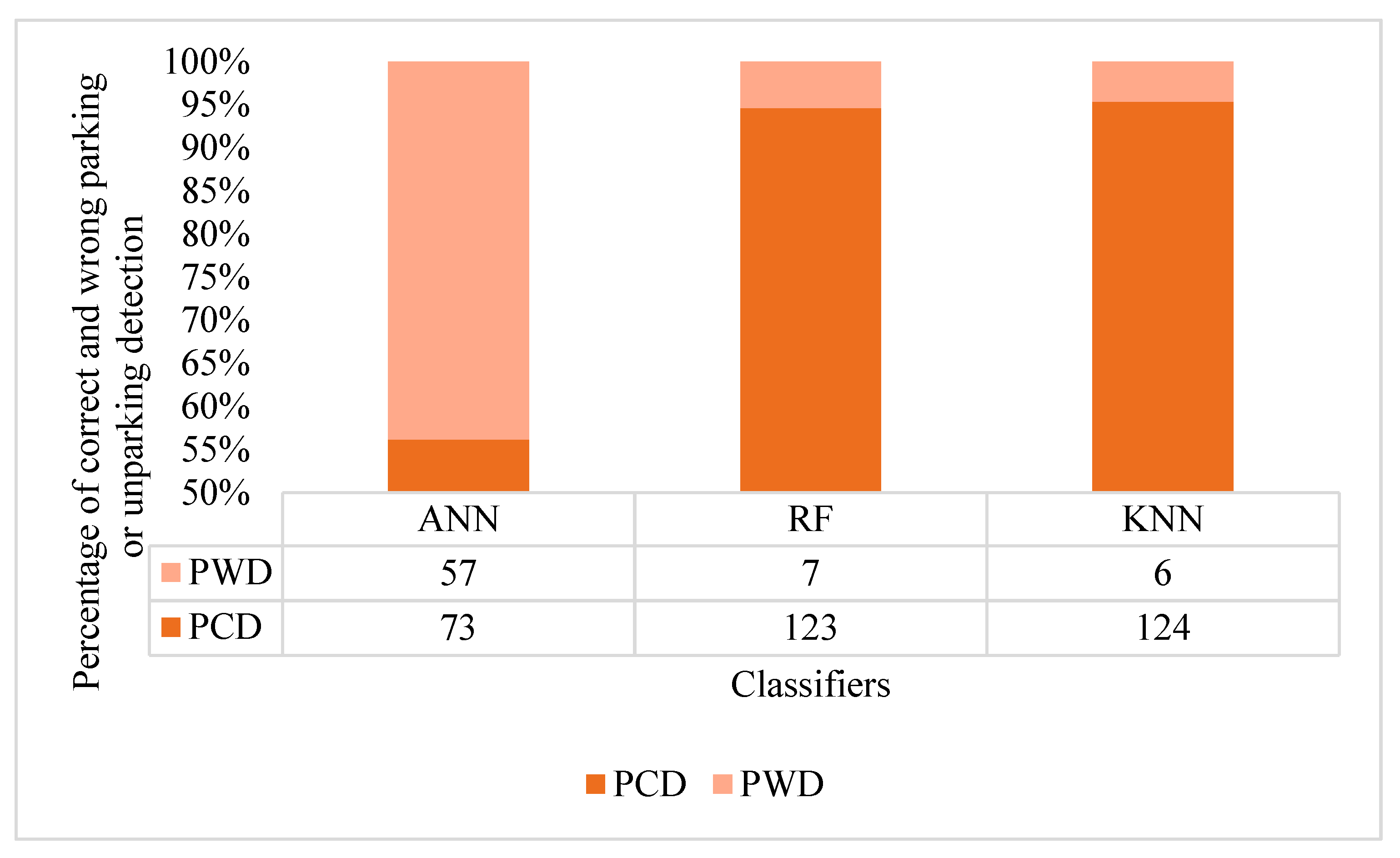

5.2. Experiment on Context Flow Recognition

- Parking: The output of context flow recognition (CFR) matches the parking flow listed in Figure 4.

- Unparking: The output of CFR matches the unparking flow listed in Figure 4.

- Unknown: The output of CFR matches neither of the two flows above, meaning the action is unknown to CFR.
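
The three categories above can be sketched as a simple lookup in Python. This is a minimal sketch, not the authors' implementation: the reference flows `PARKING_FLOW` and `UNPARKING_FLOW` and the function name `categorize_flow` are assumptions made for illustration, since the actual parking and unparking lists are defined in Figure 4, which is not reproduced here.

```python
from typing import Sequence

# ASSUMPTION: illustrative reference flows. The real parking and unparking
# lists are the ones given in Figure 4 of the paper.
PARKING_FLOW = ("drive", "idle", "walk")
UNPARKING_FLOW = ("walk", "idle", "drive")


def categorize_flow(flow: Sequence[str]) -> str:
    """Map a recognized context flow to 'parking', 'unparking', or 'unknown'."""
    normalized = tuple(step.lower() for step in flow)
    if normalized == PARKING_FLOW:
        return "parking"
    if normalized == UNPARKING_FLOW:
        return "unparking"
    # Anything that matches neither reference flow is unknown to CFR.
    return "unknown"
```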

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Artificial Neural Network (ANN) | Random Forest (RF) | K-Nearest Neighbor (KNN) |
|---|---|---|
| activation = 'relu' | min_samples_split = 8 | n_neighbors = 15 |
| solver = 'adam' | max_leaf_nodes = None | - |
| alpha = 0.001 | n_estimators = 24 | - |
| hidden_layer_sizes = (20, 8) | max_depth = 71 | - |
| random_state = 1 | min_samples_leaf = 6 | - |
| - | min_weight_fraction_leaf = 0.0 | - |
| - | random_state = 50 | - |
| - | criterion = 'gini' | - |
| - | max_features = 'auto' | - |

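| Sample | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Actual | w | w | w | w | w | w | w | w | w | d | d | d | d | d | d | d | d | d |
| Predicted | w | d | w | w | w | d | w | w | w | d | d | d | w | d | d | d | d | w |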
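
The agreement between the actual and predicted rows above can be checked with a short Python sketch. The labels are treated as opaque symbols (presumably `w` for walk and `d` for drive, which is an assumption), and the helper name `agreement` is illustrative rather than taken from the paper.

```python
from typing import List, Sequence, Tuple

# Label sequences copied from the table above, one character per sample.
actual = list("wwwwwwwwwddddddddd")
predicted = list("wdwwwdwwwdddwddddw")


def agreement(truth: Sequence[str], guess: Sequence[str]) -> Tuple[int, List[int]]:
    """Return the number of matching samples and the 1-based mismatch positions."""
    if len(truth) != len(guess):
        raise ValueError("sequences must have the same length")
    mismatches = [i + 1 for i, (t, g) in enumerate(zip(truth, guess)) if t != g]
    return len(truth) - len(mismatches), mismatches


correct, wrong_positions = agreement(actual, predicted)
accuracy = correct / len(actual)  # fraction of samples predicted correctly
```

For this table, 14 of the 18 predictions match, and the isolated errors fall at samples 2, 6, 13, and 18.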

| - | W(1) | W(2) | W(3) | W(4) | W(5) | W(6) | W(7) |
|---|---|---|---|---|---|---|---|
| m consecutive outputs in each window w | drive | walk | drive | walk | idle | walk | idle |
| | walk | drive | drive | walk | walk | walk | walk |
| | drive | drive | drive | idle | idle | walk | walk |
| | drive | drive | walk | idle | idle | walk | walk |
| | drive | idle | drive | idle | idle | walk | run |
| | idle | idle | run | idle | idle | walk | walk |
| | drive | drive | drive | idle | idle | walk | walk |
| | drive | run | drive | idle | idle | idle | walk |
| | drive | drive | drive | idle | idle | walk | walk |
| mode(o) | drive | drive | drive | idle | idle | walk | walk |
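
The `mode(o)` row of the table above can be reproduced with a short Python sketch: each window's regularized label is the most frequent of its m consecutive outputs. The names `rows` and `regularize` are illustrative, and breaking ties in favor of the label seen first is an assumption, since the table contains no ties.

```python
from collections import Counter
from typing import List, Sequence

# Raw classifier outputs copied from the table above: one row per output,
# one column per window W(1)..W(7).
rows = [
    ["drive", "walk", "drive", "walk", "idle", "walk", "idle"],
    ["walk", "drive", "drive", "walk", "walk", "walk", "walk"],
    ["drive", "drive", "drive", "idle", "idle", "walk", "walk"],
    ["drive", "drive", "walk", "idle", "idle", "walk", "walk"],
    ["drive", "idle", "drive", "idle", "idle", "walk", "run"],
    ["idle", "idle", "run", "idle", "idle", "walk", "walk"],
    ["drive", "drive", "drive", "idle", "idle", "walk", "walk"],
    ["drive", "run", "drive", "idle", "idle", "idle", "walk"],
    ["drive", "drive", "drive", "idle", "idle", "walk", "walk"],
]


def regularize(window: Sequence[str]) -> str:
    """Return the most frequent label in one window (first seen wins ties)."""
    return Counter(window).most_common(1)[0][0]


# Transpose rows into windows, then take the mode of each window.
windows: List[tuple] = list(zip(*rows))
regularized = [regularize(window) for window in windows]
```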

| Activities from Each Rolling Window | Drive (current) | Drive (next) | Drive | Idle | Idle | Walk | Walk |
|---|---|---|---|---|---|---|---|
| Iteration 1 | Drive (current) | Drive (next) | Idle | Idle | Walk | Walk | |
| Iteration 2 | Drive (current) | Idle (next) | Idle | Walk | Walk | | |
| Iteration 3 | Drive | Idle (current) | Idle (next) | Walk | Walk | | |
| Iteration 4 | Drive | Idle (current) | Walk (next) | Walk | | | |
| Iteration 5 | Drive | Idle | Walk (current) | Walk (next) | | | |
| Iteration 6 | Drive | Idle | Walk (current) | Walk (next) | | | |
| Iteration 7 | Drive | Idle | Walk | | | | |
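
The iterations in the table above amount to collapsing consecutive repeated activities into a single entry. A minimal Python sketch of that current/next comparison follows; the function name `collapse_flow` is illustrative, and the exact bookkeeping of iterations in the paper may differ from this loop.

```python
from typing import List, Sequence


def collapse_flow(activities: Sequence[str]) -> List[str]:
    """Drop consecutive duplicates by comparing a 'current' item with the 'next' one."""
    flow = list(activities)
    current = 0
    while current + 1 < len(flow):
        if flow[current] == flow[current + 1]:
            # Same activity twice in a row: discard the 'next' entry.
            del flow[current + 1]
        else:
            # Activity changed: advance 'current' to the new activity.
            current += 1
    return flow


# Regularized activities from the rolling windows, as in the table's first row.
flow = collapse_flow(["drive", "drive", "drive", "idle", "idle", "walk", "walk"])
```

Applied to the first row of the table, the sketch yields the flow drive, idle, walk, matching the final iteration.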

| Phone’s Position | Number of Correctly Identified Exp (KNN) | Number of Correctly Identified Exp (ANN) | Number of Correctly Identified Exp (RF) |
|---|---|---|---|
| Fixed | 58 | 29 | 55 |
| Random | 56 | 34 | 58 |
| Overall | 114 | 63 | 113 |

| - | ANN | RF | KNN |
|---|---|---|---|
| Unknown | 10 | 10 | 10 |
| Detected | 0 | 0 | 0 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hossen, M.I.; Michael, G.K.O.; Connie, T.; Lau, S.H.; Hossain, F. Smartphone-Based Context Flow Recognition for Outdoor Parking System with Machine Learning Approaches. Electronics 2019, 8, 784. https://doi.org/10.3390/electronics8070784